Abstract

This paper proposes a Chebyshev spectral collocation method for solving a certain type of stochastic delay differential equations. The scheme is constructed by applying the differentiation matrix \(D_{N}\) to approximate the differential operator \(\frac{d}{dt}\). \(D_{N}\) is obtained by differentiating the interpolation polynomial \(P_{N}(t)\), which interpolates at the Chebyshev-Gauss-Lobatto points associated with Chebyshev polynomials of the first kind. Numerical experiments are reported to show the accuracy and effectiveness of the method.

1 Introduction

Deterministic differential models require that the parameters involved be completely known, though in the original problem, one often has insufficient information on parameter values. These may fluctuate due to some external or internal ‘noise’, which is random. Thus, it is necessary to move from deterministic problems to stochastic problems. Stochastic differential equations (SDEs) play a prominent role in a range of application areas, such as biology, chemistry, epidemiology, mechanics, microelectronics, economics and so on [1, 2]. For SDEs, roughly speaking, there are two major types of numerical methods, explicit numerical methods [3, 4] and implicit numerical methods [5, 6]. One can refer to [7] for an overview of the numerical solution of SDEs.

Many natural processes involve time delays; that is, the future state of the system depends on part of its past history. Stochastic delay differential equations (SDDEs), which generalize both deterministic delay differential equations (DDEs) and stochastic ordinary differential equations (SODEs), are better suited to modelling such systems. To give the reader some insight into the application of SDDEs, we briefly introduce the cell population growth model, which is given as follows:

Assume that these biological systems operate in a noisy environment whose overall noise rate is distributed like white noise \(\beta dw(t)\). The constant τ denotes the average cell-division time. Then the population \(x(t)\) is a random process, whose growth can be described by (1.1). SDDEs appear frequently as stochastic models in applied research, which has led to growing interest in their study. For additional examples one can refer to applications in neural control mechanisms: neurological diseases [8], human postural sway [9] and pupil light reflex [10]. Since explicit solutions are rarely available for SDDEs, numerical approximations [11, 12] become increasingly important in many applications. Effective numerical methods are the key ingredient for making such computations viable and deserve further investigation. In the present work we make efforts in this direction and propose a new efficient scheme.

In the paper, we attempt to construct a Chebyshev spectral collocation method to solve SDDEs of the form

where \(0< T<\infty\), τ is a positive fixed delay, and \(f:\mathbb{R}\times\mathbb{R}\rightarrow\mathbb{R}\) and \(g:\mathbb{R}\rightarrow \mathbb{R}\) are assumed to be continuous. \(w(t)\) is a one-dimensional standard Wiener process defined on the complete probability space \((\Omega,\mathcal{F}, P)\) with a filtration \(\{\mathcal{F}_{t}\}_{t\geq0}\) satisfying the usual conditions (that is, it is increasing and right continuous while \(\mathcal{F}_{0}\) contains all P-null sets). \(\Psi(t)\) is an \(\mathcal{F}_{0}\)-measurable \(C([-\tau,0], \mathbb{R})\)-valued random process such that \(\mathbb{E}\|\Psi\|^{2}<\infty\), where \(C([-\tau,0], \mathbb{R})\) is the Banach space of all continuous functions from \([-\tau ,0]\) to \(\mathbb{R}\) equipped with the supremum norm.
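Written out with the objects just defined, an SDDE of this type takes the following form. This is a sketch of the layout only, using \(f\), \(g\), \(\Psi\), τ and T exactly as described above; the arrangement of the original display may differ.

\[
\begin{cases}
dx(t) = f\bigl(x(t), x(t-\tau)\bigr)\,dt + g\bigl(x(t)\bigr)\,dw(t), & t\in[0,T],\\
x(t) = \Psi(t), & t\in[-\tau,0].
\end{cases}
\]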

Our approach is derived by constructing the interpolating polynomial of degree N based on a spectral collocation method and applying the differentiation matrix to approximate the differential operator arising in SDDEs. The interpolating polynomial of degree N is constructed by applying the Chebyshev-Gauss-Lobatto (C-G-L) points as interpolation points and the Lagrange polynomial as a trial function. To the best of our knowledge, they have not been utilized in solving SDDEs. Finally, we would like to mention that the idea of the spectral collocation was previously employed in [13, 14] to construct methods for SODEs and DDEs. The authors in [13] propose a spectral collocation method for SODEs. Inspired by the idea, we construct the Chebyshev spectral collocation method for SDDEs.

This paper is organized as follows. In the next section, some fundamental knowledge is reviewed and the derivation of the Chebyshev spectral collocation for solving SDDEs is introduced. Section 3 is devoted to reporting some numerical experiments to confirm the accuracy and effectiveness of the method. At the end of the article, conclusions are made briefly.

2 Construction of the Chebyshev spectral collocation method

2.1 The Lamperti-type transformation

In this section, we introduce the Lamperti-type transformation [15], which can guarantee that the diffusion term of (1.2) is a constant. For equation (1.2), assume

where u is an arbitrary value in the state space of X. For \(0\leq t\leq T\), applying the Itô formula yields

Let

Hence, equation (2.2) can be rewritten simply as

Using the Lamperti transform, one can transform equation (1.2) into (2.4). Therefore, here and hereafter, we only consider the SDDEs of the form
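For orientation, the standard Lamperti computation can be sketched as follows. The sketch assumes that \(g\) is continuously differentiable and does not vanish (assumptions made here for illustration), and its notation, in particular \(F\) and σ, may differ from that of the numbered displays (2.1)-(2.5). Set

\[
y(t) = F\bigl(x(t)\bigr), \qquad F(x) = \int_{u}^{x}\frac{dz}{g(z)} .
\]

Since \(F'=1/g\) and \(F''=-g'/g^{2}\), the Itô formula gives

\[
dy(t) = \Bigl(\frac{f\bigl(x(t),x(t-\tau)\bigr)}{g\bigl(x(t)\bigr)} - \frac{1}{2}\,g'\bigl(x(t)\bigr)\Bigr)dt + dw(t).
\]

Substituting \(x(t)=F^{-1}(y(t))\) and \(x(t-\tau)=F^{-1}(y(t-\tau))\) turns the drift into a function of \(y(t)\) and \(y(t-\tau)\) only, while the diffusion coefficient becomes 1. A model problem with constant diffusion coefficient σ then reads

\[
dx(t) = f\bigl(x(t),x(t-\tau)\bigr)\,dt + \sigma\,dw(t), \qquad t\in[0,T].
\]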

2.2 Review on Chebyshev interpolation polynomials

Chebyshev polynomials are a well-known family of orthogonal polynomials on the interval \([-1,1]\) with very good properties for the approximation of functions. They therefore appear frequently in several fields of mathematics, physics and engineering. In this subsection, we recall the Chebyshev interpolation polynomial for a given function \(x(t)\in C^{k}(-1,1)\), where \(C^{k}(-1,1)\) is the space of all functions whose derivatives up to order k are continuous on the interval \((-1,1)\). More details can be found in [16].

Let \(T_{k}(t)=\cos(k\arccos(t))\) be the Chebyshev polynomial of the first kind of degree k and choose \(N+1\) Chebyshev-Gauss-Lobatto (C-G-L) nodes such that

Define the Lagrange basis functions as follows:

where \(\omega(t)\) is given by

It is noted that for \(k,j=0,1,\ldots, N\) the Lagrange interpolating basis functions have the Kronecker property

To sum up, the Chebyshev interpolation polynomial for a function \(x(t)\) can be given by

where \(x_{k}:=x(t_{k})\), \(k=0,1,\ldots,N\). By calculating the derivative of (2.8) and substituting C-G-L nodes \(t_{j}\), \(j=0,1,\ldots, N\), into it, one can get

Define the differentiation matrix by
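For reference, the standard objects behind this construction can be written out as follows. This is a sketch under two assumptions: the nodes are ordered increasingly, so that \(t_{0}=-1\) matches the initial point used in Section 2.3, and \(\omega(t)\) is taken in product form, which is one common choice and may differ from the normalization used in the paper.

\[
t_{k} = -\cos\Bigl(\frac{k\pi}{N}\Bigr),\quad k=0,1,\ldots,N,
\qquad
l_{k}(t) = \frac{\omega(t)}{(t-t_{k})\,\omega'(t_{k})},
\qquad
\omega(t) = \prod_{j=0}^{N}(t-t_{j}),
\]

\[
l_{k}(t_{j}) = \delta_{kj},
\qquad
P_{N}(t) = \sum_{k=0}^{N} x_{k}\,l_{k}(t),
\qquad
P_{N}'(t_{j}) = \sum_{k=0}^{N} x_{k}\,l_{k}'(t_{j}),
\qquad
D_{N} = \bigl(l_{k}'(t_{j})\bigr)_{j,k=0}^{N}.
\]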

Remark 2.1

The differentiation matrix \(D_{N}\) depends only on the C-G-L nodes and not on the problem itself. Therefore, it can be computed before the problem is specified.
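As an illustration of how \(D_{N}\) can be precomputed from the nodes alone, here is a minimal Python sketch. It assumes NumPy, nodes ordered increasingly as \(t_{k}=-\cos(k\pi/N)\), and the well-known closed-form off-diagonal entries, with the diagonal fixed by requiring zero row sums. The function names `cgl_nodes` and `diff_matrix` are illustrative and not taken from the paper.

```python
import numpy as np

def cgl_nodes(N):
    """Chebyshev-Gauss-Lobatto nodes in increasing order: t_0 = -1, t_N = 1."""
    return -np.cos(np.pi * np.arange(N + 1) / N)

def diff_matrix(N):
    """Return (t, D) with D[j, k] = l_k'(t_j) on the N+1 C-G-L nodes."""
    t = cgl_nodes(N)
    c = np.ones(N + 1)
    c[0] = c[N] = 2.0                               # end-point weights
    c = c * (-1.0) ** np.arange(N + 1)              # alternating signs
    gap = t[:, None] - t[None, :] + np.eye(N + 1)   # unit diagonal avoids 0/0
    D = np.outer(c, 1.0 / c) / gap                  # off-diagonal entries
    D -= np.diag(D.sum(axis=1))                     # diagonal from zero row sums
    return t, D

if __name__ == "__main__":
    t, D = diff_matrix(8)
    # Differentiating the samples of t^3 should reproduce 3 t^2 at the nodes.
    print(np.max(np.abs(D @ t**3 - 3 * t**2)))
```

For polynomials of degree at most N the product `D @ x` reproduces the derivative at the nodes up to rounding, so the same matrix can be reused for every problem posed on these nodes.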

Remark 2.2

Differentiation matrices are derived from the spectral collocation method for solving differential equations of boundary value type; more details can be found in [16, 17].

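Remark 2.3 ([13])

The differentiation matrix \(D_{N}\) itself is singular: the interpolant of constant data is a constant, whose derivative vanishes, so \(D_{N}\) maps every constant vector to zero.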

2.3 Chebyshev spectral collocation method for DDEs

Spectral methods are one of the three main techniques for the numerical solution of partial differential equations. The other two are finite difference methods (FDMs) and finite element methods (FEMs). Spectral methods that use Chebyshev polynomials as basis functions have been applied by many authors to differential equations with smooth coefficients on simple domains [16–18]. For such problems, they can often achieve ten digits of accuracy where FDMs and FEMs would get two or three. An interested reader can refer to references [19, 20]. Spectral methods were later developed to solve neutral differential equations [14] and special DDEs [18]. In this subsection, we introduce the spectral collocation method for DDEs.

Consider the DDEs

Setting \(t=\frac{T}{2}(1+s)\) and \(x(t)=x (\frac{T}{2}(1+s) ):=y(s)\), one can transform equation (2.11) into the following form:

where \(y(s)=x(\frac{T}{2}(1+s))=\phi(\frac{T}{2}(1+s)):=\varphi(s)\), \(s\in[-\frac{2\tau}{T}-1,-1]\). Using the transformation, we shift the interval of the solution of DDEs (2.11) from \([0,T]\) into \([-1,1]\). Therefore, in the following we focus mainly on the DDEs as follows:
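The effect of this rescaling can be checked with the chain rule. As a sketch, assume that (2.11) has the form \(x'(t)=f(x(t),x(t-\tau))\) on \([0,T]\) with \(x=\phi\) on \([-\tau,0]\). Then

\[
y'(s) = \frac{T}{2}\,x'(t) = \frac{T}{2}\,f\Bigl(y(s),\,y\bigl(s-\tfrac{2\tau}{T}\bigr)\Bigr), \qquad s\in[-1,1],
\]

because \(t-\tau=\frac{T}{2}\bigl(1+(s-\frac{2\tau}{T})\bigr)\). In the variable s the delay τ becomes \(\frac{2\tau}{T}\), which matches the history interval \([-\frac{2\tau}{T}-1,-1]\) stated above.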

Approximating the function \(x(t)\) on the left-hand side of (2.13) by the interpolation polynomial \(P_{N}(t)\) defined in (2.8) yields

Substituting the C-G-L points \(t_{j}\), \(j=0,1,\ldots, N\), defined in (2.6) into the above equality and replacing the differential operator \(\frac{d}{dt}\) with the differentiation matrix \(D_{N}\), one can obtain

Denoting by

one can obtain the discrete approximative equations for DDEs (2.13)

We emphasize that all elements of the vector \(X=(x_{0},x_{1},\ldots, x_{N})^{T}\) are unknown except the first element \(x_{0}\), which is given by the condition \(x(t)=\varphi(t)\), \(t\in[-\tau-1,-1]\). Here and hereafter, we assume that \(x_{0}=0\). If this is not the case, we can apply the transformation \(y(t)=x(t)-x_{0}\) to make the initial value vanish. Next, we explain the elements of the vector F. On the one hand, if \(t_{k}-\tau\leq-1\), the delayed value is known exactly, \(x(t_{k}-\tau)=\varphi(t_{k}-\tau)\), from the initial condition \(x(t)=\varphi(t)\), \(t\in[-\tau-1,-1]\). On the other hand, if \(t_{k}-\tau> -1\), we apply the Chebyshev interpolation polynomial \(P_{N}(t_{k}-\tau)\) to approximate \(x(t_{k}-\tau)\). Therefore, the vector is given by

For convenience, we denote by \(G(X,AX+d)\) the \((N+1)\)-dimensional vector with components \(G_{i}=f(x_{i},\varphi(t_{i}-\tau))\) for \(i=0,1,\ldots, m\) and \(G_{i}=f(x_{i},\sum_{k=0}^{N}x_{k}l_{k}(t_{i}-\tau))\) for \(i=m+1,\ldots, N\), where m is the largest index with \(t_{m}-\tau\leq-1\). Therefore, the vector F can be represented as \(F=G(X,AX+d)\).
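The case distinction above can be sketched in code. The snippet assumes that A holds the Lagrange basis values \(l_{k}(t_{i}-\tau)\) in the rows with \(t_{i}-\tau>-1\) and zeros elsewhere, and that d holds \(\varphi(t_{i}-\tau)\) in the remaining rows, so that \(AX+d\) collects the delayed values at all nodes. This reading of A and d, the increasing node ordering, and the names `cgl_nodes`, `lagrange_row` and `delay_operator` are assumptions made for illustration.

```python
import numpy as np

def cgl_nodes(N):
    """Chebyshev-Gauss-Lobatto nodes in increasing order (assumed ordering)."""
    return -np.cos(np.pi * np.arange(N + 1) / N)

def lagrange_row(t, s):
    """Values l_k(s), k = 0..N, of the Lagrange basis on the nodes t."""
    n = len(t)
    row = np.ones(n)
    for k in range(n):
        for j in range(n):
            if j != k:
                row[k] *= (s - t[j]) / (t[k] - t[j])
    return row

def delay_operator(t, tau, phi):
    """Return (A, d) such that A @ X + d approximates x(t_i - tau) at each node."""
    n = len(t)
    A = np.zeros((n, n))
    d = np.zeros(n)
    for i in range(n):
        s = t[i] - tau
        if s <= -1.0:
            d[i] = phi(s)               # delayed argument lies in the history
        else:
            A[i] = lagrange_row(t, s)   # interpolate the unknown solution
    return A, d

if __name__ == "__main__":
    t = cgl_nodes(6)
    A, d = delay_operator(t, 0.5, lambda s: s**2)
    # For x(t) = t^2 with matching history, A @ X + d should equal (t - tau)^2.
    print(np.max(np.abs(A @ t**2 + d - (t - 0.5) ** 2)))
```

The direct product formula for the basis values keeps the sketch short; a barycentric evaluation would be the usual choice for large N.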

Remark 2.4

Note that \(x_{0}\) is fixed at zero in the approximative equation (2.14). This implies that the first column of \(D_{N}\) has no effect (since multiplied by zero) and the first row has no effect either (since ignored). Therefore, by removing the first row and first column of \(D_{N}\), we can get a new matrix denoted by

Remark 2.3 shows that the differentiation matrix \(D_{N}\) itself is singular. However, \(\tilde{D}_{N}\) is invertible.
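Both statements can be checked numerically. The following is a sketch, assuming NumPy and increasingly ordered nodes \(t_{k}=-\cos(k\pi/N)\); the helper name `diff_matrix` is illustrative. The full matrix annihilates constants, while the reduced matrix can be used to integrate \(x'(t)=1\) with \(x(-1)=0\), whose solution is \(x(t)=t+1\).

```python
import numpy as np

def diff_matrix(N):
    """Return (t, D): C-G-L nodes in increasing order and D[j, k] = l_k'(t_j)."""
    t = -np.cos(np.pi * np.arange(N + 1) / N)
    c = np.ones(N + 1)
    c[0] = c[N] = 2.0
    c = c * (-1.0) ** np.arange(N + 1)
    gap = t[:, None] - t[None, :] + np.eye(N + 1)
    D = np.outer(c, 1.0 / c) / gap
    D -= np.diag(D.sum(axis=1))
    return t, D

N = 8
t, D = diff_matrix(N)
ones = np.ones(N + 1)

# Constants lie in the null space of D_N, so D_N is singular.
print("max |D_N 1| =", np.max(np.abs(D @ ones)))

# Reduced matrix: drop the first row and the first column.
D_tilde = D[1:, 1:]

# Solve x' = 1 with x(-1) = 0 at the remaining nodes; the exact solution is t + 1.
x_tilde = np.linalg.solve(D_tilde, np.ones(N))
print("max error vs t + 1:", np.max(np.abs(x_tilde - (t[1:] + 1.0))))
```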

Remark 2.5

By removing the first row and the first column of A and the first element of the vectors X, d respectively, one can get

Therefore,

where \(\tilde{D}_{N}\) is defined by (2.18) and \(\tilde{A}\), \(\tilde{X}\), \(\tilde{d}\) are defined by (2.19), and \(g(\tilde{X},\tilde{A} \tilde{X}+\tilde{d})\) is an N-dimensional vector with components \(g_{i}=f(x_{i},\varphi(t_{i}-\tau))\) for \(i=1,\ldots, m\) and \(g_{i}=f(x_{i},\sum_{k=0}^{N}x_{k}l_{k}(t_{i}-\tau))\) for \(i=m+1,\ldots, N\). The solution vector \(\tilde{X}\) can be obtained by solving the discrete system (2.20).
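To make the reduced system concrete, here is a minimal sketch for the linear delay equation \(x'(t)=ax(t)+bx(t-1)\) on \([0,1]\) with history \(\varphi(t)=1+t\) on \([-1,0]\), a test problem chosen here for illustration. Several choices are assumptions of the sketch rather than the paper's implementation: the interval is mapped to \([-1,1]\) by \(t=\frac{1}{2}(1+s)\), the nonzero initial value is moved to the right-hand side instead of being shifted to zero, and the names `diff_matrix` and `solve_linear_dde` are hypothetical. On \([0,1]\) every delayed argument falls into the history, so \(x(t-1)=t\) and the method of steps gives the exact solution \(x(t)=e^{at}(1+b/a^{2})-bt/a-b/a^{2}\).

```python
import numpy as np

def diff_matrix(N):
    """Return (s, D): C-G-L nodes in increasing order and D[j, k] = l_k'(s_j)."""
    s = -np.cos(np.pi * np.arange(N + 1) / N)
    c = np.ones(N + 1)
    c[0] = c[N] = 2.0
    c = c * (-1.0) ** np.arange(N + 1)
    gap = s[:, None] - s[None, :] + np.eye(N + 1)
    D = np.outer(c, 1.0 / c) / gap
    D -= np.diag(D.sum(axis=1))
    return s, D

def solve_linear_dde(a, b, N, T=1.0):
    """Collocation solution of x' = a x(t) + b x(t-1), x = 1 + t on [-1, 0].

    Valid for T <= 1, where x(t - 1) is given entirely by the history.
    """
    s, D = diff_matrix(N)
    t = 0.5 * T * (1.0 + s)                 # physical times in [0, T]
    x0 = 1.0                                # phi(0)
    delayed = t[1:]                         # phi(t - 1) = 1 + (t - 1) = t
    lhs = D[1:, 1:] - 0.5 * T * a * np.eye(N)
    rhs = 0.5 * T * b * delayed - D[1:, 0] * x0   # known first column moved right
    x = np.linalg.solve(lhs, rhs)
    return t, np.concatenate(([x0], x))

if __name__ == "__main__":
    a, b = -0.9, 1.0
    t, x = solve_linear_dde(a, b, 16)
    exact = np.exp(a * t) * (1 + b / a**2) - b * t / a - b / a**2
    print("max nodal error:", np.max(np.abs(x - exact)))
```

Because the test equation is linear, the discrete system is linear as well and a single call to `np.linalg.solve` suffices; a nonlinear \(f\) would require an iterative solver such as Newton's method.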

2.4 The Chebyshev spectral collocation method for SDDEs

In this subsection, we first give the theorem to guarantee the existence and uniqueness of the exact solution of SDDE (1.2).

Theorem 2.6

Assume that there exist positive constants \(L_{f,i}\), \(i=1,2\), and \(K_{f}\) such that both the functions f and g satisfy a uniform Lipschitz condition and a linear growth bound of the following form, for all \(\zeta_{1}, \zeta_{2}, \eta_{1},\eta_{2},\zeta,\eta\in \mathbb{R}\) and \(t\in[0,T]\):

and likewise for g with constants \(L_{g}\) and \(K_{g}\). Then there exists a path-wise unique strong solution to (1.2).
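Conditions of this type are usually written as follows. This is a sketch of the standard form for \(f\) with the constants named in the theorem; the exact form used in the paper may differ, for example in whether the growth bound is stated for \(|f|\) or \(|f|^{2}\).

\[
|f(\zeta_{1},\eta_{1})-f(\zeta_{2},\eta_{2})| \leq L_{f,1}|\zeta_{1}-\zeta_{2}| + L_{f,2}|\eta_{1}-\eta_{2}|,
\qquad
|f(\zeta,\eta)|^{2} \leq K_{f}\bigl(1+|\zeta|^{2}+|\eta|^{2}\bigr).
\]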

Consider the SDDEs

Assume that the coefficients in (1.2) satisfy a uniform Lipschitz condition and a linear growth bound mentioned in Theorem 2.6. Therefore, (1.2) has a path-wise unique strong solution. Equation (2.21) is transformed from (1.2) by using the Lamperti-type transformation. Hence, there exists a path-wise unique strong solution to (2.21). Following the same lines as mentioned in Section 2.3, it is easy to obtain the approximative equations for SDDE (2.21) as follows:

where the N-dimensional vector \(g(\tilde{X},\tilde{A}\tilde{X}+\tilde {d})\) has the components \(g_{i}=f(x_{i},\varphi(t_{i}-\tau))\) for \(i=1,\ldots , m\) and \(g_{i}=f(x_{i},\sum_{k=0}^{N}x_{k}l_{k}(t_{i}-\tau))\) for \(i=m+1,\ldots , N\). Since \(\tilde{D}_{N}\) is invertible, this yields

Remark 2.7

For the derivative of a function \(x(t)\) at the C-G-L nodes, \(D_{N}x\) is highly accurate only if \(x(t)\) is smooth enough. The standard Wiener process \(w(t)\), however, is nowhere differentiable, so \(D_{N}w\) behaves very badly. If the diffusion coefficient of the SDDE is a constant, we can avoid \(D_{N}w\) as above (see [13]).
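The idea of avoiding \(D_{N}w\) can be sketched for the additive-noise equation \(dx(t)=(ax(t)+bx(t-1))\,dt+\beta\,dw(t)\) on \([0,1]\) with history \(\varphi(t)=1+t\). The sketch assumes that the inverted scheme has the form \(\tilde{X}=\tilde{D}_{N}^{-1}(\text{drift})+\beta\tilde{W}\), with \(\tilde{W}\) the Wiener path sampled at the collocation times; this reading, the handling of the nonzero initial value, and the names `diff_matrix` and `solve_additive_sdde` are assumptions for illustration, not the paper's implementation.

```python
import numpy as np

def diff_matrix(N):
    """Return (s, D): C-G-L nodes in increasing order and D[j, k] = l_k'(s_j)."""
    s = -np.cos(np.pi * np.arange(N + 1) / N)
    c = np.ones(N + 1)
    c[0] = c[N] = 2.0
    c = c * (-1.0) ** np.arange(N + 1)
    gap = s[:, None] - s[None, :] + np.eye(N + 1)
    D = np.outer(c, 1.0 / c) / gap
    D -= np.diag(D.sum(axis=1))
    return s, D

def solve_additive_sdde(a, b, beta, N, rng, T=1.0):
    """One sample path of the collocation solution on [0, T], T <= 1."""
    s, D = diff_matrix(N)
    t = 0.5 * T * (1.0 + s)                     # physical collocation times
    x0 = 1.0                                    # phi(0)
    # Wiener path at the non-equidistant times t_1..t_N, with w(0) = 0.
    w = np.cumsum(rng.normal(0.0, np.sqrt(np.diff(t))))
    delayed = t[1:]                             # phi(t - 1) = t on [0, 1]
    D_inv = np.linalg.inv(D[1:, 1:])            # reduced matrix is invertible
    known = D_inv @ (0.5 * T * b * delayed - D[1:, 0] * x0)
    # X = D_inv (T/2) a X + known + beta W: the noise enters without D_N w.
    lhs = np.eye(N) - 0.5 * T * a * D_inv
    x = np.linalg.solve(lhs, known + beta * w)
    return t, np.concatenate(([x0], x)), w

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    t, x, w = solve_additive_sdde(-0.9, 0.1, 0.1, 16, rng)
    print("x(T) for this sample path:", x[-1])
```

With `beta = 0` the routine reduces to the deterministic collocation scheme, which gives a simple consistency check against the method-of-steps solution.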

3 Numerical experiments

The theoretical discussion of numerical processes is intended to provide an insight into the performance of numerical methods in practice. Therefore, in this section, some numerical experiments are reported to test the accuracy and the effectiveness of the spectral collocation method.

Example 1

Consider the SDDE

as a test equation for our method. In the case of additive noise (\(\beta_{2}=\beta_{3}=0\)), an explicit solution on the first interval \([0,\tau]\) has been calculated by the method of steps (see, for example, [23]). Using \(\varphi(t)=1+t\) for \(t\in[-1,0]\) as an initial function, the solution on \(t\in[0,1]\) is given by

In our experiments, the mean-square error \(\mathbb{E}(|x(T)-\bar{X}_{N}|^{2})\) (note that \(\bar{X}_{N}\) is the last element of the vector \(\tilde {X}_{N}\) calculated by (2.23)) at the final time T is estimated in the following way. A set of 20 blocks, each containing 100 outcomes (\(\omega_{i,j}\), \(1\leq i\leq20\), \(1\leq j\leq100\)), is simulated, and for each block the estimator

is formed. In Table 1 ϵ denotes the mean of this estimator, which is estimated in the usual way: \(\epsilon=\frac {1}{20}\sum_{i=1}^{20}\epsilon_{i}\).
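The blocked estimator can be sketched as follows, assuming that each block estimator is the sample mean \(\epsilon_{i}=\frac{1}{100}\sum_{j=1}^{100}|x(T,\omega_{i,j})-\bar{X}_{N}(\omega_{i,j})|^{2}\) (our reading of the description above). The function name `block_mean_square_error` and its arguments are illustrative.

```python
import numpy as np

def block_mean_square_error(exact, approx, n_blocks=20, block_size=100):
    """Return (eps, eps_i): overall mean and per-block mean-square errors.

    exact, approx: terminal values x(T) and the numerical approximation for
    n_blocks * block_size sample paths, ordered block by block.
    """
    exact = np.asarray(exact, dtype=float)
    approx = np.asarray(approx, dtype=float)
    sq_err = np.abs(exact - approx) ** 2
    if sq_err.size != n_blocks * block_size:
        raise ValueError("expected n_blocks * block_size samples")
    eps_i = sq_err.reshape(n_blocks, block_size).mean(axis=1)
    return eps_i.mean(), eps_i

if __name__ == "__main__":
    rng = np.random.default_rng(1)
    exact = rng.normal(size=2000)
    approx = exact + 0.01 * rng.normal(size=2000)   # synthetic data for the demo
    eps, eps_i = block_mean_square_error(exact, approx)
    print("estimated mean-square error:", eps)
```

Keeping the per-block values `eps_i` makes it possible to estimate the spread of the estimator across blocks as well as its mean.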

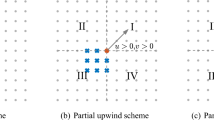

Note that, for the Chebyshev spectral method, we collocate at \(N+1\) points on the interval, so there are N subintervals. Unlike in the Euler-Maruyama method [11] for solving SDDEs, the subintervals are not equidistant: in the interior of \([-1,1]\), the distance between successive Chebyshev points near x is approximately \(\frac{\pi\sqrt{1-x^{2}}}{N}\). Figure 1 [13] shows the difference between the two methods.

We apply the spectral collocation method to solve (3.1) with two sets of coefficients, I: \(a=-0.9\), \(b=0.1\), \(\beta_{1}=0.1\) and II: \(a=-2\), \(b=0.1\), \(\beta_{1}=1\). The numerical results are shown in Table 1, where N denotes the number of the C-G-L nodes. The approximation errors reported in Table 1 indicate that the Chebyshev spectral collocation method is accurate and effective for SDDEs.

Example 2

Consider (3.1) as a DDE, i.e., \(\beta _{1}=\beta_{2}=\beta_{3}=0\), which reads

We apply the spectral collocation method to (3.3) with two groups of parameters, I: \(a=-0.9\), \(b=1\) and II: \(a=-2\), \(b=0.1\). Tables 2 and 3 list, for (3.3) with the parameters I and II respectively, the approximation errors of the spectral collocation method for different N, where N denotes the number of the C-G-L nodes. The results show spectral accuracy and convergence when the spectral collocation method is applied to (3.3).

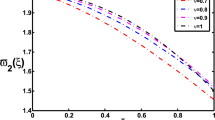

To visualize how the approximation error of the proposed method behaves for different N, we plot the decay of the errors under the two sets of coefficients in Figures 2 and 3. As one may expect, the errors decay very quickly as the number of interpolation points increases.

Figure 2 The error decay for equation (3.3) in the case of \(a=-0.9\), \(b=1\).

Figure 3 The error decay for equation (3.3) in the case of \(a=-2\), \(b=0.1\).

4 Conclusions

In this paper, a Chebyshev spectral collocation method is proposed to solve a certain type of SDDEs. The most important step in constructing the scheme is the Lamperti-type transformation, which allows one to shift non-linearities from the diffusion coefficient into the drift coefficient. Then, based on the spectral collocation method, we construct the Nth degree interpolating polynomials to approximate the solutions of the SDDEs. The numerical results confirm that the scheme is effective and easy to implement. However, a convergence analysis of the method is not given here because of its complexity; it is left for future work.

References

[1] Bodo, BA, Thompson, ME, Unny, TE: A review on stochastic differential equations for application in hydrology. Stoch. Hydrol. Hydraul. 1(2), 81-100 (1987)

[2] Wilkinson, DJ: Stochastic modelling for quantitative description of heterogeneous biological systems. Nat. Rev. Genet. 10, 122-133 (2009)

[3] Maruyama, G: Continuous Markov processes and stochastic equations. Rend. Circ. Mat. Palermo 4(1), 48-90 (1955)

[4] Milstein, GN: Approximate integration of stochastic differential equations. Theory Probab. Appl. 19(3), 557-562 (1975)

[5] Kloeden, PE, Platen, E, Schurz, H: The numerical solution of nonlinear stochastic dynamical systems: a brief introduction. Int. J. Bifurc. Chaos 1(2), 277-286 (1991)

[6] Milstein, GN, Platen, E, Schurz, H: Balanced implicit methods for stiff stochastic systems. SIAM J. Numer. Anal. 35(3), 1010-1019 (1998)

[7] Kloeden, PE, Platen, E: Numerical Solution of Stochastic Differential Equations. Springer, Berlin (1992)

[8] Beuter, A, Bélair, J, Labrie, C: Feedback and delays in neurological diseases: a modelling study using dynamical systems. Bull. Math. Biol. 55(3), 525-541 (1993)

[9] Eurich, CW, Milton, JG: Noise-induced transitions in human postural sway. Phys. Rev. E 54, 6681-6684 (1996)

[10] Mackey, MC, Longtin, A, Milton, JG, Bos, JE: Noise and critical behaviour of the pupil light reflex at oscillation onset. Phys. Rev. A 41, 6992-7005 (1990)

[11] Buckwar, E: Introduction to the numerical analysis of stochastic delay differential equations. J. Comput. Appl. Math. 125, 297-307 (2000)

[12] Baker, CTH, Buckwar, E: Numerical analysis of explicit one-step method for stochastic delay differential equations. LMS J. Comput. Math. 3, 315-335 (2000)

[13] Huang, C, Zhang, Z: The spectral collocation method for stochastic differential equations. Discrete Contin. Dyn. Syst., Ser. B 18(3), 667-679 (2013)

[14] Wang, W, Li, D: Convergence of spectral method of linear variable coefficient neutral differential equation with variable delays. Math. Numer. Sin. 34(1), 68-80 (2012)

[15] Iacus, SM: Simulation and Inference for Stochastic Differential Equations. Springer, Berlin (2007)

[16] Elbarbary, EME, El-Kady, M: Chebyshev finite difference approximation for the boundary value problems. Appl. Math. Comput. 139(2-3), 513-523 (2003)

[17] Ibrahim, MAK, Temsah, RS: Spectral methods for some singularly perturbed problems with initial and boundary layers. Int. J. Comput. Math. 25(1), 33-48 (1988)

[18] Ali, I: A spectral method for pantograph-type delay differential equations and its convergence analysis. Appl. Comput. Math. 27(2-3), 254-265 (2009)

[19] Canuto, C, Hussaini, MY, Quarteroni, A, Zang, TA: Spectral Methods Fundamentals in Single Domains. Springer, Berlin (2006)

[20] Shen, J, Tang, T: Spectral and High-Order Methods with Applications. Science Press, Beijing (2006)

[21] Mizel, VJ, Trutzer, V: Stochastic hereditary equations: existence and asymptotic stability. J. Integral Equ. 7, 1-72 (1984)

[22] Mao, X: Stochastic Differential Equations and Their Applications. Ellis Horwood, Chichester (1997)

[23] Driver, RD: Ordinary and Delay Differential Equations. Springer, New York (1977)

Acknowledgements

The authors would like to thank the anonymous referees for their valuable and insightful comments which have improved the paper. This work is supported by the National Natural Science Foundation of China (No. 11171352, No. 11301550), the New Teachers’ Specialized Research Fund for the Doctoral Program from the Ministry of Education of China (No. 20120162120096) and Mathematics and Interdisciplinary Sciences Project, Central South University.

Additional information

Competing interests

The authors declare that they have no competing interests.

Authors’ contributions

Both authors contributed equally to the writing of this paper. Both authors read and approved the final manuscript.

Rights and permissions

Open Access This is an Open Access article distributed under the terms of the Creative Commons Attribution License (http://creativecommons.org/licenses/by/4.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly credited.

About this article

Cite this article

Yin, Z., Gan, S. Chebyshev spectral collocation method for stochastic delay differential equations. Adv Differ Equ 2015, 113 (2015). https://doi.org/10.1186/s13662-015-0447-1

DOI: https://doi.org/10.1186/s13662-015-0447-1