Abstract

We compute numerical solutions of Bratu’s boundary value problem (BVP) in a Banach space setting. To do this, we embed a Green’s function into a new two-step iteration scheme. Under suitable assumptions, we show that this scheme converges to the sought solution of the one-dimensional nonlinear Bratu BVP. Furthermore, we show that the scheme is weakly \(w^{2}\)-stable in this setting. We perform numerical computations and compare our findings with other iterative schemes from the literature. The numerical results indicate that the new approach is highly accurate and stable with respect to different sets of parameters.

1 Introduction

In 1914, Bratu [1] proposed the following important BVP:

$$ x^{\prime \prime}(t)+\lambda e^{x(t)}=0,\quad 0< t< 1, $$(1.1)

with the boundary conditions (BCs):

$$ x(0)=x(1)=0. $$(1.2)

Bratu’s problem (1.1)–(1.2) has attracted the attention of many researchers due to its applications in many areas of applied science. In [2], Ascher et al. obtained the exact solution of Bratu’s problem (1.1)–(1.2) as follows:

$$ x(t)=-2\ln \biggl[\frac{\cosh ((t-\frac{1}{2})\frac{\theta}{2})}{\cosh (\frac{\theta}{4})} \biggr], $$(1.3)

where the number θ in (1.3) solves \(\theta =\sqrt{2\lambda}\cosh (\frac{\theta}{4})\). Suppose \(\lambda _{c}\) is a number that satisfies the following equation:

Then we remark as follows.

Remark 1.1

- (i) Bratu’s problem admits no solution when \(\lambda >\lambda _{c}\).

- (ii) Bratu’s problem admits exactly one solution when \(\lambda =\lambda _{c}\).

- (iii) Bratu’s problem admits two solutions when \(\lambda <\lambda _{c}\).
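The trichotomy in Remark 1.1 can be checked numerically. The following is a minimal sketch that assumes the standard closed form \(x(t)=-2\ln [\cosh ((t-\frac{1}{2})\frac{\theta}{2})/\cosh (\frac{\theta}{4})]\) with \(\theta =\sqrt{2\lambda}\cosh (\frac{\theta}{4})\), and that locates \(\lambda _{c}\) as the value at which this equation for θ has a double root, that is, where \((\theta /4)\tanh (\theta /4)=1\). The function names and the bisection helper are illustrative and are not taken from the paper.

```python
import math


def bisect_root(f, lo, hi, tol=1e-14, max_iter=200):
    """Plain bisection; assumes f(lo) and f(hi) have opposite signs."""
    f_lo = f(lo)
    for _ in range(max_iter):
        mid = 0.5 * (lo + hi)
        f_mid = f(mid)
        if (f_lo < 0) == (f_mid < 0):
            lo, f_lo = mid, f_mid   # root lies in the upper half
        else:
            hi = mid                # root lies in the lower half
        if hi - lo < tol:
            break
    return 0.5 * (lo + hi)


def critical_lambda():
    """Turning point of theta = sqrt(2*lam)*cosh(theta/4): u*tanh(u) = 1, u = theta/4."""
    u = bisect_root(lambda v: v * math.tanh(v) - 1.0, 1.0, 2.0)
    theta_c = 4.0 * u
    lam_c = theta_c ** 2 / (2.0 * math.cosh(u) ** 2)
    return lam_c, theta_c


def bratu_thetas(lam):
    """Roots theta of theta = sqrt(2*lam)*cosh(theta/4); empty list above lam_c."""
    lam_c, theta_c = critical_lambda()
    if lam > lam_c:
        return []
    f = lambda th: th - math.sqrt(2.0 * lam) * math.cosh(th / 4.0)
    # The upper bracket 60 is an illustrative choice; it fails only for tiny lam.
    return [bisect_root(f, 0.0, theta_c), bisect_root(f, theta_c, 60.0)]


def bratu_exact(t, lam, theta):
    """Closed-form solution for a given root theta (lam is kept for readability)."""
    return -2.0 * math.log(
        math.cosh((t - 0.5) * theta / 2.0) / math.cosh(theta / 4.0)
    )


lam_c, theta_c = critical_lambda()
thetas = bratu_thetas(1.0)                 # two roots, hence two solutions
x_mid = bratu_exact(0.5, 1.0, thetas[0])   # lower-branch value at t = 1/2
```

For \(\lambda =1\) the two roots correspond to the two solutions in item (iii); the smaller root gives the solution usually used as the benchmark in numerical comparisons.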

Bratu’s BVP (1.1)–(1.2) has many important applications in various fields of applied science (see, e.g., [3–6]). The exact solution of this problem is due to Ascher et al. [2]. Using this exact solution as a benchmark, various researchers have investigated different numerical techniques and compared their findings against it [7–9]. However, these techniques do not guarantee the existence of a solution, and the methods used converge slowly and require a complicated set of parameters. Our alternative approach is to study existence and iterative approximation for Bratu’s BVP (1.1)–(1.2) by combining fixed-point theory with Green’s functions. We first show that the sought solution of (1.1)–(1.2) can be characterized as a fixed point of a continuous operator. We then show that this operator has a unique fixed point, which solves our problem. Moreover, we use this operator in the well-known Ishikawa [10] iterative scheme to obtain convergence. A stability result and a comparative numerical experiment are provided that validate the results and illustrate the high accuracy of the proposed approach.

Real-world problems are often described by differential equations. However, finding analytical solutions of such problems is very difficult (or even impossible in some cases), and hence one needs approximate solutions. In such cases, fixed-point theory provides an alternative approach, since the sought solution can be expressed as a fixed point of a certain linear or nonlinear operator T, whose domain is normally a suitable Banach space. Hence, once the existence of a fixed point of the operator is established, the existence of a solution of the problem under consideration follows immediately. The famous Banach Contraction Principle (BCP) [11] states that if X is a Banach space and \(T:X\rightarrow X\) is a contraction, that is, there exists \(\nu \in (0,1)\) such that \(\Vert Tx-Ty\Vert \leq \nu \Vert x-y\Vert \) for all \(x,y\in X\), then T admits a unique fixed point \(x^{\ast}\in X\), which solves the operator equation \(x=Tx\). Once the existence of a fixed point is established, one asks for an iterative scheme to approximate it. The proof of the BCP suggests the iterative scheme of Picard [12], \(x_{k+1}=Tx_{k}\), for approximating the unique fixed point of T. It is known that when T is nonexpansive, that is, \(\Vert Tx-Ty\Vert \leq \Vert x-y\Vert \) for all \(x,y\in X\), then T has a fixed point under some restrictions (see, e.g., [13–15]), but the Picard iteration of T may fail to converge to a fixed point of T. The following is an example of a nonexpansive mapping for which the Picard iteration does not converge.

Example 1

Let \(X=[-1,1]\) and define the selfmap T on X by \(Tx=-x\) for all \(x\in X\). Then T is nonexpansive on X and has the unique fixed point 0, but for any \(x_{0}\neq 0\) the Picard iterates \(x_{k}=(-1)^{k}x_{0}\) oscillate between \(x_{0}\) and \(-x_{0}\) and therefore do not converge.
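The behaviour in Example 1 is easy to reproduce. The sketch below contrasts the Picard iteration with the classical Mann iteration \(x_{k+1}=(1-\alpha )x_{k}+\alpha Tx_{k}\) for \(Tx=-x\); the constant step \(\alpha =0.3\) and the helper names are illustrative choices, not values used in the paper.

```python
def picard(T, x0, n):
    """Return [x_0, ..., x_n] for the Picard iteration x_{k+1} = T(x_k)."""
    xs = [x0]
    for _ in range(n):
        xs.append(T(xs[-1]))
    return xs


def mann(T, x0, n, alpha=0.3):
    """Return [x_0, ..., x_n] for the Mann iteration with a constant step alpha."""
    xs = [x0]
    for _ in range(n):
        x = xs[-1]
        xs.append((1.0 - alpha) * x + alpha * T(x))
    return xs


def T(x):
    # Nonexpansive map of Example 1; its only fixed point is 0.
    return -x


picard_iterates = picard(T, 1.0, 6)   # alternates between 1 and -1
mann_iterates = mann(T, 1.0, 50)      # x_{k+1} = (1 - 2*alpha) * x_k, tends to 0
```

With \(\alpha =0.3\) each Mann step multiplies the iterate by \(1-2\alpha =0.4\), so the averaged sequence contracts to the fixed point even though T itself is only nonexpansive.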

On the other hand, the speed of convergence of Picard iteration is slow. Thus to overcome these difficulties, Mann [16] and Ishikawa [10] generalized the Picard iterative scheme and proved the convergence for nonexpansive mappings. In this paper, we consider the following iterative scheme, which is independent of both the Mann [16] and Ishikawa [10] iterative schemes:

where \(\alpha _{k},\beta _{k}\in (0,1)\).

Recently, some authors suggested novel approaches to different classes of BVPs by embedding a Green’s function into the Picard, Mann, and Ishikawa iterative schemes. They called these modified schemes the Picard–Green’s, Mann–Green’s, and Ishikawa–Green’s iterative schemes, respectively. In particular, Kafri and Khuri [17] suggested Picard–Green’s and Mann–Green’s iterative schemes for solving Bratu’s problem (1.1)–(1.2). They compared the speed of convergence of these schemes with some classical methods and observed that the new schemes perform considerably better than the corresponding classical techniques. Motivated by Kafri and Khuri [17], we first embed a Green’s function into the scheme (1.4) and prove its strong convergence for Bratu’s BVP (1.1)–(1.2). Similar results can be proved for the Picard–Green’s and Mann–Green’s iterative schemes. We then show that our new iterative scheme is weakly \(w^{2}\)-stable. Some numerical experiments supporting the main results are given. These experiments also suggest a high numerical accuracy of our new iterative scheme.

2 Description of the iterative method

We now provide a Green’s function associated with a general class of second-order BVPs. We then embed this Green’s function into the iterative scheme (1.4) to obtain the desired new iterative scheme. This section is divided into the following subsections.

2.1 Solution of BVPs by Green’s functions

We first consider the following second-order BVP:

and assume its BCs as follows:

where \(0\leq t\leq 1\). We now decompose equation (2.1) into linear and nonlinear terms, \(\mathcal{L}[x]=x^{\prime \prime}+y(t)x^{\prime}+z(t)x\) and \(\mathcal{N}[x]=q(t,x,x^{\prime},x^{\prime \prime})\), respectively; our aim is to construct a Green’s function for \(\mathcal{L}\).

Now we assume that Problem (2.1)–(2.2) admits a general solution of the following form:

where \(x_{h}\) denotes a solution of the homogeneous equation \(\mathcal{L}[x]=0\) and \(x_{p}\) is any particular solution of \(\mathcal{L}[x]= q(t,x,x^{\prime},x^{\prime \prime})\). According to [18, p. 568], the particular solution \(x_{p}\) can be expressed as follows:

The notation \(\mathcal{G}(t,s)\) in (2.4) denotes the Green’s function associated with \(\mathcal{L}[x]=0\). If δ denotes the Dirac delta function, then from [18, p. 572], we have

Also, using the definition of \(\mathcal{L}\) and keeping (2.1) in mind, we have

Subsequently, from (2.5) and (2.6), we have \(\mathcal{L}[\mathcal{G}(t,s)]=\mathcal{G}^{\prime \prime}(t,s)+y(t) \mathcal{G}^{\prime}(t,s)+z(t)\mathcal{G}(t,s)=\delta (t-s)\). Notice that the Green’s function \(\mathcal{G}(t,s)\) is defined as the solution of the differential equation

where the new BCs are given as:

Hence it follows that for the given problem (2.1)–(2.2), \(x_{p}\) must obey the homogeneous BCs and \(x_{h}\) must satisfy the nonhomogeneous BCs \(\mathcal{B}_{1}[x] = \beta \) and \(\mathcal{B}_{2}[x] = \gamma \).

We now show that the proposed solution satisfies the BCs as well as the differential equation, provided that certain assumptions are imposed on q. Applying the operator \(\mathcal{L}\) to (2.3) and keeping (2.4) and (2.7) in mind, we have

If q is a function of t only, or if it satisfies the following:

then one can see that (2.1) is satisfied, since:

Note that for our Problem (1.1)–(1.2), the homogeneous part is \(x_{h} = 0\), since \(x^{\prime \prime}=0\) with the BCs (1.2) has only the trivial solution. It follows that Condition (2.10) is satisfied, and so (2.11) holds.

Now, considering (2.8), one has

and \(\mathcal{B}_{2}[x_{h}+x_{p}]=\gamma \).

Next we list some basic axioms of Green’s functions, which can be found in [18].

- (a1.) If \(x_{1}\) and \(x_{2}\) denote two linearly independent solutions of \(\mathcal{L}[x]=0\), then the associated Green’s function can be written in the following form:

$$\begin{aligned} \mathcal{G}(t,s)= \begin{cases} c_{1}x_{1}+c_{2}x_{2}& \text{when } a< t< s, \\ d_{1}x_{1}+d_{2}x_{2}& \text{when } s< t< b, \end{cases} \end{aligned}$$(2.13)

- (a2.) \(\mathcal{G}(t, s)\) is continuous at \(t = s\), that is,

$$\begin{aligned} \lim_{t\rightarrow s^{+}}\mathcal{G}(t,s)=\lim_{t\rightarrow s^{-}}\mathcal{G}(t,s), \end{aligned}$$(2.14)

$$\begin{aligned} c_{1}x_{1}(s)+c_{2}x_{2}(s)=d_{1}x_{1}(s)+d_{2}x_{2}(s). \end{aligned}$$(2.15)

- (a3.) \(\mathcal{G}^{\prime}(t, s)\) admits a unit jump discontinuity at \(t=s\).

We now express the jump condition in terms of \(x_{1}\) and \(x_{2}\). To do this, we first compute the value of the jump by integrating (2.7) from \(s^{-}\) to \(s^{+}\):

It follows that

Since \(\mathcal{G}\) is continuous and \(\mathcal{G}^{\prime}\) has only a jump discontinuity, the following equations hold:

Hence, from (2.16) and (2.17), we have

Evaluating the integrals in (2.18) gives the value of the required jump:

where \(\mathcal{H}\) is the Heaviside function; more details can be found in [18]. From the jump condition, one can write:

where the constants \(c_{i},d_{i}\) (\(i=1,2\)) can be found by solving (2.15), (2.20), and the BCs given in (2.8).
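Determining the four constants amounts to solving a small linear system. The sketch below illustrates this under two stated assumptions: the homogeneous BCs are of Dirichlet type, \(\mathcal{G}(a,s)=\mathcal{G}(b,s)=0\), and the jump is normalized as \(\mathcal{G}^{\prime}(s^{+},s)-\mathcal{G}^{\prime}(s^{-},s)=1\). The helper names and the Gaussian elimination routine are illustrative and do not come from the paper.

```python
def solve_linear(A, b):
    """Gaussian elimination with partial pivoting for a small dense system."""
    n = len(b)
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        if abs(M[pivot][col]) < 1e-14:
            raise ValueError("singular system")
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        acc = M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))
        x[r] = acc / M[r][r]
    return x


def green_coefficients(x1, x2, dx1, dx2, a, b, s):
    """Return (c1, c2, d1, d2) from the BCs, continuity at s, and a unit jump at s."""
    A = [
        [x1(a), x2(a), 0.0, 0.0],            # G(a, s) = 0 (assumed Dirichlet BC)
        [0.0, 0.0, x1(b), x2(b)],            # G(b, s) = 0 (assumed Dirichlet BC)
        [x1(s), x2(s), -x1(s), -x2(s)],      # continuity at t = s, cf. (2.15)
        [-dx1(s), -dx2(s), dx1(s), dx2(s)],  # assumed unit jump of dG/dt at t = s
    ]
    return solve_linear(A, [0.0, 0.0, 0.0, 1.0])


def green(t, s, x1, x2, coeffs):
    """Evaluate the piecewise form (2.13) with the computed constants."""
    c1, c2, d1, d2 = coeffs
    if t <= s:
        return c1 * x1(t) + c2 * x2(t)
    return d1 * x1(t) + d2 * x2(t)


# Demo for L[x] = x'' on [0, 1], whose independent solutions are 1 and t.
one = lambda t: 1.0
ident = lambda t: t
zero = lambda t: 0.0
s0 = 0.3
coeffs = green_coefficients(one, ident, zero, one, 0.0, 1.0, s0)
```

For this demo the constants come out as \(c_{1}=0\), \(c_{2}=s-1\), \(d_{1}=-s\), \(d_{2}=s\), so that \(\mathcal{G}(t,s)=t(s-1)\) for \(t\leq s\) and \(\mathcal{G}(t,s)=s(t-1)\) for \(t\geq s\) under the assumed normalization; a different sign convention for the jump flips the sign of the kernel.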

2.2 Green’s functions and novel fixed-point scheme

Now we derive our desired new iterative scheme. To do this, we embed a Green’s function into an integral operator and then apply this operator on the new iterative scheme (1.4). For this purpose, we set

Now if we add and subtract \(q (s, x,x^{\prime},x^{\prime \prime})\) within the integrand, then one has

If q is either a function of t only or satisfies (2.10), then the last integral in (2.22) coincides with that in (2.4) and can therefore be replaced by \(x_{p}\). Since \(x = x_{h} + x_{p}\), (2.22) becomes:

Applying the new fixed-point iterative scheme (1.4) to the latter integral operator and simplifying, we obtain our new Green’s iterative scheme as follows:

It follows that

Finally, we determine the starting iterate \(x_{0}\). To do this, we use a property of the Green’s function in (2.8) to get

where \(i=1,2\). It follows that the starting iterate \(x_{0}\) should be selected so that it solves \(\mathcal{L}[x]=0\) with the BCs \(\mathcal{B}_{1}[x] = \beta \) and \(\mathcal{B}_{2}[x] = \gamma \).

The analysis used to construct the iterative scheme (2.25) is restricted to the case when q is either a function of t only or satisfies (2.10). However, if q is a function of x, then \(x_{p}\) satisfies the following:

so

This means that \(\int _{a}^{b}\mathcal{G}(t,s)q(s,x,x^{\prime},x^{\prime \prime})\,ds\) in (2.22) cannot be replaced by \(x_{p}\). Thus, we must define \(K[x]\) in (2.21) as follows:

Proceeding as for (2.21), but now adding and subtracting the term \(q(s,x_{p},x^{\prime}_{p})\) within the integral in (2.29), one has

Using the operator T in (2.30), we now apply our new fixed-point scheme (1.4).

3 Convergence result

We now prove our main convergence result. We use the proposed scheme (2.25), under some mild conditions, to approximate the solution of Bratu’s problem (1.1)–(1.2). For this purpose, we first construct the Green’s function for the term \(x^{\prime \prime}\) in (1.1). Two linearly independent solutions of \(x^{\prime \prime}=0\) are \(x_{1}(t)=1\) and \(x_{2}(t)=t\). Hence, using (2.13), the Green’s function takes the following form:

where \(c_{i}\) and \(d_{i}\) (\(i=1,2\)) are unknowns to be determined using the basic axioms of Green’s functions. Using the homogeneous BCs, we get

Moreover, the continuity of \(\mathcal{G}\) and the unit jump of \(\mathcal{G}^{\prime}\) at \(t=s\) give the following equations:

Solving (3.2) and (3.3), (3.1) becomes:

Using the Green’s function above, we define the operator \(T_{\mathcal{G}}:C[0,1]\rightarrow C[0,1]\) by

Hence, iterative scheme (2.25) becomes:

where \(\alpha _{k},\beta _{k}\in (0,1)\).

The iterative scheme (3.5) is the desired new iterative scheme. The main convergence result of the paper is now ready to be established.
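To make the operator concrete, the following sketch discretizes it on a uniform grid. It rests on assumptions that should be checked against the paper's displays: the kernel is taken as \(\mathcal{G}(t,s)=t(s-1)\) for \(t\leq s\) and \(\mathcal{G}(t,s)=s(t-1)\) for \(t\geq s\) (unit-jump normalization), and for functions satisfying the BCs (1.2) the operator is assumed to reduce to \(T_{\mathcal{G}}x(t)=-\lambda \int _{0}^{1}\mathcal{G}(t,s)e^{x(s)}\,ds\). The Lipschitz bound \(\lambda e^{M}\sup_{t}\int _{0}^{1}|\mathcal{G}(t,s)|\,ds\) used in the code comes from the mean value theorem and is an assumption of this sketch; grid size, test functions, and names are illustrative.

```python
import math


def green(t, s):
    """Assumed kernel for x'' with zero Dirichlet BCs and a unit jump in dG/dt."""
    return t * (s - 1.0) if t <= s else s * (t - 1.0)


def trapezoid(values, h):
    """Composite trapezoid rule on a uniform grid with spacing h."""
    return h * (sum(values) - 0.5 * (values[0] + values[-1]))


def apply_T(x, grid, lam):
    """Approximate (T x)(t) = -lam * int_0^1 G(t, s) * exp(x(s)) ds on the grid."""
    h = grid[1] - grid[0]
    ex = [math.exp(v) for v in x]
    return [
        -lam * trapezoid([green(t, s) * e for s, e in zip(grid, ex)], h)
        for t in grid
    ]


n = 100
grid = [i / n for i in range(n + 1)]
h = 1.0 / n

# sup_t int_0^1 |G(t, s)| ds = max_t t(1 - t)/2 = 1/8, attained at t = 1/2.
kernel_sup = max(trapezoid([abs(green(t, s)) for s in grid], h) for t in grid)

# Empirical Lipschitz check for lam = 1 on two sample functions.
lam = 1.0
x = [0.0] * (n + 1)
y = [0.1 * math.sin(math.pi * t) for t in grid]
Tx, Ty = apply_T(x, grid, lam), apply_T(y, grid, lam)
dist_in = max(abs(a - b) for a, b in zip(x, y))
dist_out = max(abs(a - b) for a, b in zip(Tx, Ty))
bound = lam * math.exp(max(max(x), max(y))) * kernel_sup
```

The factor 1/8 computed here is the same 1/8 that appears in the denominator of the contraction condition in Theorem 3.1.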

Theorem 3.1

Consider the Banach space \(X=C[0,1]\) with the supremum norm. Let \(T_{\mathcal{G}}:X\rightarrow X\) be the operator defined in (3.4) and \(\{x_{k}\}\) be the sequence produced by (3.5). Assume that the following conditions hold:

- (i) \(\frac{\lambda ^{2}L_{c}}{8}<1\), where \(L_{c}=\max_{t\in [0,1]}|e^{x(t)}|\).

- (ii) Either \(\sum \alpha _{k}=\infty \) or \(\sum \beta _{k}=\infty \).

Then \(\{x_{k}\}\) converges to the unique fixed point of \(T_{\mathcal{G}}\) and hence to the unique sought solution of Problem (1.1)–(1.2).

Proof

Put \(\nu =\frac{\lambda ^{2}L_{c}}{8}\). It follows from condition (i) that \(T_{\mathcal{G}}\) is a ν-contraction. By the BCP, \(T_{\mathcal{G}}\) has a unique fixed point \(x^{\ast}\) in \(X=C[0,1]\), which is the unique solution of Problem (1.1)–(1.2).

Now, using condition (ii), we prove that the sequence generated by our new iterative scheme converges strongly to \(x^{\ast}\). First we consider the case \(\sum \alpha _{k}=\infty \). For this, we have

Now using \(\Vert y_{k} - x^{\ast}\Vert \leq [1-\beta _{k}(1-\nu )]\Vert x_{k} -x^{\ast}\Vert \) and noting that \(1-\beta _{k}(1-\nu )\leq 1\), we have

Accordingly, we get

Inductively, we obtain

It is well known from classical analysis that \(1-x\leq e^{-x}\) for all \(x\in [0,1]\). Taking this fact into account together with (3.6), we get

because \(\sum_{k=0}^{\infty}\alpha_{k}=\infty \) and ν lies in \((0,1)\). Hence it follows from (3.6) and (3.7) that

Accordingly, \(\{x_{k}\}\) converges to the unique fixed point \(x^{\ast}\) of \(T_{\mathcal{G}}\), which is the unique solution of Problem (1.1)–(1.2). The case \(\sum \beta _{k}=\infty \) is similar and is hence omitted. □
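The key step of the proof can be illustrated numerically. The sketch below assumes that the inductive estimate has the usual product form \(\Vert x_{k+1}-x^{\ast}\Vert \leq \prod_{j=0}^{k}[1-\alpha _{j}(1-\nu )]\Vert x_{0}-x^{\ast}\Vert \), and checks that this product is dominated by \(\exp (-(1-\nu )\sum_{j=0}^{k}\alpha _{j})\), which tends to zero when \(\sum \alpha _{k}=\infty \). The values \(\nu =0.5\) and \(\alpha _{k}=1/(k+2)\) are illustrative choices only.

```python
import math


def contraction_factors(alphas, nu):
    """Return pairs (running product, exponential bound) for each index k."""
    prod, partial_sum = 1.0, 0.0
    out = []
    for a in alphas:
        prod *= 1.0 - a * (1.0 - nu)   # factor 1 - alpha_k (1 - nu)
        partial_sum += a               # partial sum of the alpha_k
        out.append((prod, math.exp(-(1.0 - nu) * partial_sum)))
    return out


nu = 0.5
alphas = [1.0 / (k + 2) for k in range(10000)]   # divergent series, each term in (0, 1)
pairs = contraction_factors(alphas, nu)
final_product, final_bound = pairs[-1]
```

Because the harmonic-type series diverges only logarithmically, the product decays slowly here; a constant step such as \(\alpha _{k}=0.9\) gives geometric decay instead.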

4 Weak \(w^{2}\)-stability result

In some cases, a numerical scheme may not be stable when it is applied to a certain operator in order to find the sought solution of a given problem (see, e.g., [19–21]). A numerical iterative scheme is said to be stable if the errors arising between successive iteration steps do not disturb the convergence of the scheme towards the sought solution. The concept of stability for an iterative scheme has its roots in the work of Urabe [22]. Later, Harder and Hicks [23] introduced the formal notion of stability. We recall some of their definitions and concepts, which will be used in the sequel.

Definition 4.1

[23] Suppose T is a selfmap on a Banach space X and \(\{x_{k}\}\) is a sequence of iterates of T generated by

where \(x_{0}\) denotes a starting value and h is a function. If \(\{x_{k}\}\) converges to a point \(x^{\ast}\in F_{T}\), where \(F_{T}\) denotes the set of fixed points of T, then \(\{x_{k}\}\) is called stable if for every other sequence \(\{u_{k}\}\) in X, one has the following

Definition 4.2

[24] Two sequences \(\{u_{k}\}\) and \(\{x_{k}\}\) in a Banach space are said to be equivalent if the following property holds

In [25], Timis suggested a more natural notion of stability, which he called weak \(w^{2}\)-stability. He used equivalent sequences in place of the arbitrary sequences of Definition 4.1.

Definition 4.3

[25] Suppose T is a selfmap on a Banach space X and \(\{x_{k}\}\) is a sequence of iterates of T generated by (4.1). If \(\{x_{k}\}\) converges to a point \(x^{\ast}\in F_{T}\), then \(\{x_{k}\}\) is called weakly \(w^{2}\)-stable if for every equivalent sequence \(\{u_{k}\}\subseteq X\) of \(\{x_{k}\}\) one has the following

Finally, we are in a position to establish the weak \(w^{2}\)-stability of our proposed scheme (3.5).

Theorem 4.4

Let X, \(T_{\mathcal{G}}\) and \(\{x_{k}\}\) be as in Theorem 3.1. Then \(\{x_{k}\}\) is weakly \(w^{2}\)-stable with respect to \(T_{\mathcal{G}}\).

Proof

To complete the proof, we consider any equivalent sequence \(\{u_{k}\}\) of \(\{x_{k}\}\), that is, \(\{u_{k}\}\) satisfies \(\lim_{k\rightarrow \infty}\Vert u_{k}-x_{k}\Vert =0\). Put

where \(v_{k}=(1-\beta _{k})u_{k}+\beta _{k}T_{\mathcal{G}}u_{k}\).

Assume that \(\lim_{k\rightarrow \infty}\epsilon _{k}=0\). We need to prove that \(\lim_{k\rightarrow \infty}\Vert u_{k}-x^{\ast}\Vert =0\). For this, we have

Hence

Consequently, we obtain

By assumption, \(\lim_{k\rightarrow \infty}\epsilon _{k}=0\); moreover, \(\lim_{k\rightarrow \infty}\Vert u_{k}-x_{k}\Vert =0\) since \(\{u_{k}\}\) is equivalent to \(\{x_{k}\}\), and \(\lim_{k\rightarrow \infty}\Vert x_{k}-x^{\ast}\Vert =0\) since \(\{x_{k}\}\) converges to \(x^{\ast}\). Accordingly, from (4.2), \(\lim_{k\rightarrow \infty}\Vert u_{k}-x^{\ast}\Vert =0\). This means that \(\{x_{k}\}\) generated by the iterative scheme (3.5) is weakly \(w^{2}\)-stable with respect to \(T_{\mathcal{G}}\). □
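The notion of weak \(w^{2}\)-stability can be illustrated on a toy problem. The sketch below does not implement scheme (3.5): it uses a classical Ishikawa-type two-step map as a stand-in for \(h(T,\cdot )\), the scalar contraction \(T(x)=0.5\cos x\) in place of \(T_{\mathcal{G}}\), and assumes \(\epsilon _{k}=|u_{k+1}-h(T,u_{k})|\). The perturbation \(1/(k+1)\) and all names are illustrative.

```python
import math


def T(x):
    # Scalar contraction with Lipschitz constant at most 0.5 (illustrative choice).
    return 0.5 * math.cos(x)


def step(x, alpha=0.9, beta=0.9):
    """One Ishikawa-type step, used here as a stand-in for h(T, x)."""
    y = (1.0 - beta) * x + beta * T(x)
    return (1.0 - alpha) * x + alpha * T(y)


n = 200
xs = [1.0]
for _ in range(n):
    xs.append(step(xs[-1]))

# Reference fixed point: refine the last iterate with plain Picard steps.
x_star = xs[-1]
for _ in range(200):
    x_star = T(x_star)

# An equivalent sequence: |u_k - x_k| = 1/(k+1) tends to 0.
us = [x + 1.0 / (k + 1) for k, x in enumerate(xs)]

# Assumed residuals eps_k = |u_{k+1} - h(T, u_k)| and distances to the fixed point.
eps = [abs(us[k + 1] - step(us[k])) for k in range(n)]
dist = [abs(u - x_star) for u in us]
```

Both `eps` and `dist` shrink as k grows, which is the behaviour the definition asks for: an equivalent sequence with vanishing residuals is carried to the same fixed point.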

5 Numerical computations

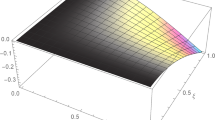

We now choose different values of λ and apply the Mann–Green’s, the Ishikawa–Green’s, and our new iterative scheme to Bratu’s problem (1.1)–(1.2). First, we take \(\alpha _{k}=\beta _{k}=0.9\) and \(x_{0}=0\), which satisfies the equation \(x^{\prime \prime}=0\) and the BCs (1.2). The results are displayed in Tables 1–2. We use \(\Vert x_{k}-x^{\ast}\Vert <10^{-6}\) as our stopping criterion, where \(x^{\ast}\) is the sought solution. These results confirm the convergence of all the schemes towards the sought solution. Moreover, our new scheme converges faster than the other two schemes. Graphical convergence is shown in Figs. 1–4.
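A small experiment in the spirit of this setup can be sketched as follows. It covers only the Mann–Green’s and Ishikawa–Green’s baselines in their classical forms and does not implement scheme (3.5). Several choices are assumptions of the sketch rather than the paper's setup: the kernel \(\mathcal{G}(t,s)=t(s-1)\) for \(t\leq s\) and \(s(t-1)\) for \(t\geq s\), the reduced operator \(T_{\mathcal{G}}x(t)=-\lambda \int _{0}^{1}\mathcal{G}(t,s)e^{x(s)}\,ds\), trapezoid quadrature on 101 nodes, and a stopping rule based on successive differences instead of the distance to \(x^{\ast}\).

```python
import math


def green(t, s):
    """Assumed kernel for x'' with zero Dirichlet BCs and a unit jump in dG/dt."""
    return t * (s - 1.0) if t <= s else s * (t - 1.0)


def apply_T(x, grid, lam):
    """Trapezoid approximation of (T x)(t) = -lam * int_0^1 G(t, s) e^{x(s)} ds."""
    h = grid[1] - grid[0]
    w = [lam * h * math.exp(v) for v in x]
    w[0] *= 0.5
    w[-1] *= 0.5
    return [-sum(green(t, s) * wi for s, wi in zip(grid, w)) for t in grid]


def exact_solution(grid, lam):
    """Lower-branch closed form; the bracket [0, 4.7] is adequate for lam <= 3.5."""
    f = lambda th: th - math.sqrt(2.0 * lam) * math.cosh(th / 4.0)
    lo, hi = 0.0, 4.7
    for _ in range(100):            # bisection for the smaller root theta
        mid = 0.5 * (lo + hi)
        if f(mid) < 0.0:
            lo = mid
        else:
            hi = mid
    theta = 0.5 * (lo + hi)
    c = math.cosh(theta / 4.0)
    return [-2.0 * math.log(math.cosh((t - 0.5) * theta / 2.0) / c) for t in grid]


def blend(weight, a, b):
    """Convex combination (1 - weight) * a + weight * b, componentwise."""
    return [(1.0 - weight) * ai + weight * bi for ai, bi in zip(a, b)]


def run(scheme, lam, alpha=0.9, beta=0.9, n=100, tol=1e-8, max_iter=200):
    """Iterate from x_0 = 0; return (iterations used, sup-norm error vs closed form)."""
    grid = [i / n for i in range(n + 1)]
    x = [0.0] * (n + 1)
    for k in range(1, max_iter + 1):
        if scheme == "mann":
            new = blend(alpha, x, apply_T(x, grid, lam))
        elif scheme == "ishikawa":
            y = blend(beta, x, apply_T(x, grid, lam))
            new = blend(alpha, x, apply_T(y, grid, lam))
        else:
            raise ValueError(scheme)
        change = max(abs(a - b) for a, b in zip(new, x))
        x = new
        if change < tol:            # successive-difference stopping rule
            break
    error = max(abs(a - b) for a, b in zip(x, exact_solution(grid, lam)))
    return k, error


mann_iters, mann_err = run("mann", lam=1.0)
ishi_iters, ishi_err = run("ishikawa", lam=1.0)
```

The reported error mixes iteration error and quadrature error, so it levels off at the accuracy of the trapezoid rule; refining the grid lowers that floor at quadratic cost per operator application.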

Finally, in Table 3 we compare our new approach with other numerical methods from the literature: the DM scheme [7], LDM scheme [26], B-spline scheme [27], LGSM [28], Spline approach [29], DTM scheme [30], GVM scheme [31], and CW scheme [32]. In all cases, our new approach is highly accurate.

Now we list the following observations.

- From Tables 1–2, we see that the absolute error of our new iterative scheme is much smaller than that of the Mann–Green’s and Ishikawa–Green’s iterative schemes. This means that our new iterative scheme approaches the solution faster.

- From Figs. 1–4, we see that for small values of the parameters \(\alpha _{k}\) and \(\beta _{k}\), the rates of convergence of the Mann–Green’s and Ishikawa–Green’s iterative schemes are almost the same. However, our new iterative scheme approaches the solution quickly for all values of \(\alpha _{k}\) and \(\beta _{k}\).

6 Conclusion

We constructed a new, highly accurate numerical scheme based on Green’s functions for the numerical solution of Bratu’s BVP in a Banach space framework. We established a convergence result under suitable conditions on the mapping and the parameters of the scheme. We proved that the new scheme is weakly \(w^{2}\)-stable in this setting. We provided numerical simulations to support our claims and results. The simulations show that the new scheme is highly accurate and can be used effectively for all values of the parameters involved in the scheme.

References

1. Bratu, G.: Sur les equation integrals non-lineaires. Bull. Soc. Math. Fr. 42, 113–142 (1914)

2. Ascher, U.M., Mattheij, R.M.M., Russell, R.D.: Numerical Solution of Boundary Value Problems for Ordinary Differential Equations. SIAM, Philadelphia (1995)

3. Chandrasekhar, S.: An Introduction to the Study of Stellar Structure. Dover, New York (1957)

4. Jacobsen, J., Schmitt, K.: The Liouville–Bratu–Gelfand problem for radial operators. J. Differ. Equ. 184(1), 283–298 (2002)

5. He, J.H., Kong, H.Y., Chen, R.X., Hu, M.S., Chen, Q.L.: Variational iteration method for Bratu-like equation arising in electrospinning. Carbohydr. Polym. 105, 229–230 (2014)

6. Wan, Y.Q., Guo, Q., Pan, N.: Thermo-electro-hydrodynamic model for electrospinning process. Int. J. Nonlinear Sci. Numer. Simul. 5(1), 5–8 (2004)

7. Deeba, E., Khuri, S.A., Xie, S.: An algorithm for solving boundary value problems. J. Comput. Phys. 159(2), 125–138 (2000)

8. Boyd, J.P.: Chebyshev polynomial expansions for simultaneous approximation of two branches of a function with application to the one-dimensional Bratu equation. Appl. Math. Comput. 143, 189–200 (2003)

9. Aregbesola, Y.A.S.: Numerical solution of Bratu problem using the method of weighted residual. Electron. J. South. Afr. Math. Sci. Assoc. 3(1), 1–7 (2003)

10. Ishikawa, S.: Fixed points by a new iteration method. Proc. Am. Math. Soc. 44, 147–150 (1974)

11. Banach, S.: Sur les operations dans les ensembles abstraits et leur application aux equations integrales. Fundam. Math. 3, 133–181 (1922)

12. Picard, E.M.: Memorie sur la theorie des equations aux derivees partielles et la methode des approximations successives. J. Math. Pures Appl. 6, 145–210 (1890)

13. Browder, F.E.: Nonexpansive nonlinear operators in a Banach space. Proc. Natl. Acad. Sci. USA 54, 1041–1044 (1965)

14. Gohde, D.: Zum Prinzip der Kontraktiven Abbildung. Math. Nachr. 30, 251–258 (1965)

15. Kirk, W.A.: A fixed point theorem for mappings which do not increase distance. Am. Math. Mon. 72, 1004–1006 (1965)

16. Mann, W.R.: Mean value methods in iteration. Proc. Am. Math. Soc. 4, 506–510 (1953)

17. Kafri, H.Q., Khuri, S.A.: Bratu’s problem: a novel approach using fixed point iterations and Green’s functions. Comput. Phys. Commun. 198, 97–104 (2016)

18. Bayin, S.: Mathematical Methods in Science and Engineering. Wiley, New York (2006)

19. Osilike, M.O.: Stability of the Mann and Ishikawa iteration procedures for ϕ-strong pseudocontractions and nonlinear equations of the ϕ-strongly accretive type. J. Math. Anal. Appl. 227, 319–334 (1998)

20. Sahin, A.: Some new results of M-iteration process in hyperbolic spaces. Carpath. J. Math. 35, 221–232 (2019)

21. Sahin, A.: Some results of the Picard–Krasnoselskii hybrid iterative process. Filomat 33, 359–365 (2019)

22. Urabe, M.: Convergence of numerical iteration in solution of equations. J. Sci. Hiroshima Univ. A 19, 479–489 (1956)

23. Harder, A.M., Hicks, T.L.: Stability results for fixed point iteration procedures. Math. Jpn. 33, 693–706 (1988)

24. Cardinali, T., Rubbioni, P.: A generalization of the Caristi fixed point theorem in metric spaces. Fixed Point Theory 11, 3–10 (2010)

25. Timis, I.: On the weak stability of Picard iteration for some contractive type mappings. An. Univ. Craiova, Math. Comput. Sci. Ser. 37, 106–114 (2010)

26. Khuri, S.A.: A new approach to Bratu’s problem. Appl. Math. Comput. 147(1), 131–136 (2004)

27. Anagnostopoulos, A.N.A., Caglar, H., Caglar, N., Ozer, M., Valarstos, A.: B-spline method for solving Bratu’s problem. Int. J. Comput. Math. 87(8), 1885–1891 (2010)

28. Abbasbandy, S., Hashemi, M.S., Liu, C.: The Lie-group shooting method for solving the Bratu equation. Commun. Nonlinear Sci. Numer. Simul. 16(11), 4238–4249 (2011)

29. Jalilian, R.: Non-polynomial spline method for solving Bratu’s problem. Comput. Phys. Commun. 181(11), 1868–1872 (2010)

30. Erturk, V.S., Hassan, I.H.A.H.: Applying differential transformation method to the one-dimensional planar Bratu problem. Int. J. Contemp. Math. Sci. 2, 1493–1504 (2007)

31. Liu, X., Zhou, Y., Wang, X., Wang, J.: A wavelet method for solving a class of nonlinear boundary value problems. Commun. Nonlinear Sci. Numer. Simul. 18, 1939–1948 (2013)

32. Nasab, A.K., Atabakan, Z.P., Klman, A.: An efficient approach for solving nonlinear Troesch’s and Bratu’s problems by wavelet analysis method. Math. Probl. Eng. 2013, Article ID 825817 (2013)

Acknowledgements

J.A., M.A., K.U., and Z.M. thank the anonymous reviewer for useful suggestions that improved the first submitted version of the paper.

Funding

The authors would like to thank the support provided by Innovation and improvement project of academic team of Hebei University of Architecture Mathematics and Applied Mathematics (No. TD202006), Nature Science Foundation of Hebei Province (No. A2023404001).

Author information

Contributions

J.A., M.A., K.U., and Z.M. contributed equally to this research article.

Ethics declarations

Competing interests

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Ahmad, J., Arshad, M., Ullah, K. et al. Numerical solution of Bratu’s boundary value problem based on Green’s function and a novel iterative scheme. Bound Value Probl 2023, 102 (2023). https://doi.org/10.1186/s13661-023-01791-6

DOI: https://doi.org/10.1186/s13661-023-01791-6