Abstract

In this article, we propose a viscosity extragradient algorithm combined with an inertial extrapolation step for approximating a common solution of a pseudomonotone equilibrium problem and a fixed point problem for a nonexpansive mapping in the setting of a Hadamard manifold. We prove that the sequence generated by our iterative method converges to a solution of these problems under some mild conditions. Finally, we outline some implications of our results and present several numerical examples showing the implementability of our algorithm. The results of this article extend and complement many related results in linear spaces.

1 Introduction

The Equilibrium Problem (EP) is well known because it has wide applications in spatial price equilibrium models, computer and electric networks, market behavior, and economic and financial network models. For instance, the spatial price equilibrium models arising from EPs have provided a framework for analyzing competitive systems over space and time and have stimulated the development of new methodologies and opened up prospects for their applications in the energy sector, mineral economics, finance, and agriculture (see, for example, [1]). It is well known that many interesting and challenging problems in nonlinear analysis, such as complementarity, fixed point, Nash equilibrium, optimization, saddle point, and variational inequality problems, can be reformulated as an EP (see [2]). The EP for a bifunction \(g:C\times C \to \mathbb{R}\) satisfying the condition \(g(x,x)=0\) for every \(x\in C\) is defined as follows: find \(x^{*}\in C\) such that

$$ g(x^{*},y)\geq 0, \quad \forall y\in C, \tag{1.1} $$

where C is a non-empty subset of a topological space X. We denote by EP(g) the solution set of (1.1). Several iterative methods have been designed to approximate the solution of EP (1.1) when the bifunction g is monotone (see, for example, [3–5] and references therein). One of the crucial methods for solving the EP when g is pseudomonotone is the extragradient method (EM), which involves solving two strongly convex optimization problems at each iteration. In 1976, Korpelevich [6] and Antipin [7] employed the EM for solving saddle point problems. Later, in 2008, Quoc et al. [8] extended this idea to solve the pseudomonotone EP. Since then, many authors have employed the EM and other methods to solve EPs of pseudomonotone type in Hilbert and Banach spaces (see, for example, [9–11]). It is important to consider the EP in a more general space, such as a Riemannian manifold. Extending optimization methods from Euclidean spaces to Riemannian manifolds has some remarkable advantages. From the Riemannian point of view, it is possible to convert nonconvex problems into convex ones by endowing the space with an appropriate Riemannian metric (see, for example, [12–14]). Moreover, a constrained problem can be viewed as an unconstrained one thanks to the Riemannian geometry. Recently, some results in Hilbert spaces have been generalized to more general settings, such as Riemannian manifolds, to solve nonconvex problems (see, for example, [15–18]). Most methods extended from linear settings, such as Hilbert spaces, to Riemannian manifolds require the manifold to have nonpositive sectional curvature. This is an essential property shared by a large class of Riemannian manifolds, and it is strong enough to imply strong topological restrictions and rigidity phenomena. Algorithms for solving the EP on Hadamard manifolds have received great attention (see, for example, [19–21]). Lately, Cruz Neto et al. [22] extended the work of Van Nguyen et al. [23] and obtained an extragradient method for solving the equilibrium problem on a complete, simply connected Riemannian manifold of nonpositive sectional curvature. They employed the following algorithm:

where \(0<\lambda _{n}<\beta <\min \{\alpha ^{-1}_{1}, \alpha ^{-1}_{2}\}\) and \(\alpha _{1}\), \(\alpha _{2}\) are the Lipschitz-type constants of the bifunction g. It is well known that Lipschitz-type constants are difficult to estimate, especially in complex nonlinear problems, and they are generally unknown. In 2020, Junfeng et al. [24] introduced a new extragradient-like method for the EP on Hadamard manifolds. Their algorithm works without prior knowledge of the Lipschitz-type constants.

Let C be a non-empty, closed, and convex subset of a complete Riemannian manifold M, and let \(T : C \to C \) be a mapping. The fixed point set of T is denoted by

$$ F(T)=\{x\in C: Tx=x\}, $$

and the mapping T is said to be

(i) a contraction if there exists \(\alpha \in [0,1)\) such that

$$ d(Tx,Ty)\leq \alpha d(x,y), \quad \forall x,y \in C, $$

(ii) nonexpansive if

$$ d(Tx,Ty)\leq d(x,y), \quad \forall x,y \in C. $$
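As a quick numerical illustration of definitions (i) and (ii), the following minimal sketch works on \(M=\mathbb{R}\) with \(d(x,y)=|x-y|\), which is a Hadamard manifold, and estimates the ratio \(d(Tx,Ty)/d(x,y)\) over sample pairs. The helper `lipschitz_ratio`, the sample grid, and the three test maps are illustrative assumptions rather than objects from the text, and a sampled ratio can only suggest, not prove, that a map is a contraction or nonexpansive.

```python
import math
from itertools import product


def lipschitz_ratio(T, points):
    """Largest observed |T(x) - T(y)| / |x - y| over distinct sample pairs."""
    worst = 0.0
    for x, y in product(points, repeat=2):
        if x != y:
            worst = max(worst, abs(T(x) - T(y)) / abs(x - y))
    return worst


def halve(x):
    """A contraction with constant alpha = 0.5."""
    return 0.5 * x


def reflect(x):
    """An isometry: nonexpansive, but not a contraction."""
    return -x


# Sample grid on C = [-2, 2] (an illustrative choice).
grid = [-2.0 + 0.1 * k for k in range(41)]

ratio_halve = lipschitz_ratio(halve, grid)
ratio_reflect = lipschitz_ratio(reflect, grid)
ratio_sin = lipschitz_ratio(math.sin, grid)  # sin is nonexpansive on R
```

A ratio strictly below 1 on the samples is consistent with a contraction, while a ratio equal to 1 (as for `reflect`) is consistent with a nonexpansive map that is not a contraction.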

Several researchers have used different methods to approximate fixed points of nonexpansive mappings. Approximation methods have received much attention in fixed point theory because they are important tools of nonlinear science. Moreover, viscosity-type algorithms have been reported to converge faster than Halpern-type algorithms (see, for example, [16, 17, 25–33]). In 2000, Moudafi [25] introduced the viscosity approximation method for nonexpansive mappings and obtained strong convergence results for both implicit and explicit schemes in Hilbert spaces. In 2004, Xu [26] extended Moudafi’s results [25] to Banach spaces. The concept of viscosity approximation was recently extended to more general spaces, such as Riemannian manifolds. In 2016, Jeong [34] established some results using generalized viscosity approximation methods for mixed equilibrium problems and fixed point problems. Motivated by the work of Duan and He [27], Chugh and Kumari [35] extended it to the framework of Riemannian manifolds as follows.

Theorem 1.1

Let C be a closed convex subset of a Hadamard manifold M, and let \(T:C\to C\) be a nonexpansive mapping such that \(F(T)\neq \emptyset \). Let \(\psi _{n}:C\to C\) be a \(\rho _{n}\)-contraction with \(0\leq \rho _{l}=\liminf_{n\to \infty}\rho _{n} \leq \limsup_{n\to \infty}\rho _{n}=\rho _{u}\leq 1\). Suppose that \(\{\psi _{n}(x)\}\) is uniformly convergent for any \(x\in A\), where A is any bounded subset of C, and that the sequence \(\{\lambda _{n}\}\subset (0,1)\) satisfies the following conditions:

(i) \(\lim_{n\to \infty}\lambda _{n}=0\), \(\sum_{n=1}^{\infty}\lambda _{n}=\infty \),

(ii) \(\sum_{n=1}^{\infty}|\lambda _{n+1}-\lambda _{n}|< \infty \) and

(iii) \(\lim_{n\to \infty} \frac{\lambda _{n}-\lambda _{n-1}}{\lambda _{n}}=0\).

Then, the sequence \(\{x_{n}\}\) generated by the algorithm

An inertial term is a useful tool for improving the performance of an iterative sequence in reaching the desired solution. Inertial techniques are used to accelerate the iterative sequence towards the required solution by speeding up the convergence rate of the iterative scheme. Several analyses have shown that inertial effects improve the performance of an algorithm in terms of the number of iterations and the execution time. Owing to these two advantages, inertial terms have attracted increasing attention in solving different problems. An algorithm with an inertial term was first introduced by Polyak [36], who proposed inertial extrapolation for solving a smooth convex minimization problem (MP). Since then, authors have introduced algorithms with inertial terms in different spaces (see [37, 38]). Khamahawong et al. [39] introduced an inertial Mann algorithm for approximating a fixed point of a nonexpansive mapping on a Hadamard manifold. Under suitable assumptions, they showed that their method can also be applied to inclusion and equilibrium problems. They defined their algorithm as follows.

Let M be a Hadamard manifold, and let \(F:M\to M\) be a mapping. Choose \(x_{0},x_{1}\in M\). Define a sequence \(\{x_{n}\}\) by the following iterative scheme:

where \(\{\lambda _{n}\}\subset [0,\infty )\) and \(\{\gamma _{n}\}\subset (0,1)\) satisfy the following conditions:

\((C_{1})\) \(0\leq \lambda _{n}<\lambda <1\) for all \(n\geq 1\),

\((C_{2})\) \(\sum_{n=1}^{\infty}\lambda _{n}d^{2}(x_{n},x_{n-1})< \infty \),

\((C_{3})\) \(0<\gamma _{1}\leq \gamma _{n}\leq \gamma _{2}\), \(n\geq 1\),

\((C_{4})\) \(\sum_{n=1}^{\infty}\gamma _{n}<\infty \).
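To make the idea of an inertial Mann iteration concrete, here is a minimal Euclidean sketch on \(\mathbb{R}^{2}\). Because the displayed scheme is not reproduced in the text above, the update rule used here (extrapolate, then average with \(F\)) is an assumed standard form, and the map `rotate90`, the function `inertial_mann`, and the constant parameters `lam` and `gamma` are hypothetical choices for illustration. On a general Hadamard manifold the affine combinations would be replaced by exponential maps and geodesics.

```python
import math


def rotate90(v):
    """A nonexpansive map on R^2 (rotation by 90 degrees); its only fixed point is the origin."""
    return (-v[1], v[0])


def inertial_mann(F, x0, x1, lam=0.3, gamma=0.5, iters=200):
    """Assumed Euclidean form of an inertial Mann step:
    w_n     = x_n + lam * (x_n - x_{n-1})        (inertial extrapolation)
    x_{n+1} = (1 - gamma) * w_n + gamma * F(w_n) (Mann averaging)
    """
    prev, cur = x0, x1
    for _ in range(iters):
        w = tuple(c + lam * (c - p) for c, p in zip(cur, prev))
        Fw = F(w)
        nxt = tuple((1 - gamma) * wi + gamma * fi for wi, fi in zip(w, Fw))
        prev, cur = cur, nxt
    return cur


x_final = inertial_mann(rotate90, (1.0, 0.0), (0.0, 1.0))
residual = math.hypot(*x_final)  # distance from the fixed point (0, 0)
```

With these constant parameters the iterates contract towards the origin, so `residual` becomes very small after a few hundred iterations.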

They proved that the sequence generated by their algorithm converges strongly to an element of the solution set. Motivated and inspired by the works [22, 35, 38–42], we study a viscosity extragradient method with a modified inertial term for solving equilibrium and fixed point problems on Hadamard manifolds. The advantages of our results over existing ones are the following:

(i) Our algorithm includes an inertial term, which is intended to accelerate convergence compared with the corresponding non-inertial scheme.

(ii) Our results are obtained in the more general setting of Hadamard manifolds, in contrast to the results in Hilbert and Banach spaces (see, for example, [43, 44]).

The remaining sections of the paper are organized as follows. We first give some basic concepts and useful tools in Sect. 2. In Sect. 3, we present our proposed method and its convergence analysis. In Sect. 4, we apply our results to variational inequality problems. In Sect. 5, we report numerical experiments that demonstrate the effectiveness of our approach, and we conclude in Sect. 6.

2 Preliminaries

In this section, we present some basic concepts, definitions, and preliminary results that will be useful in what follows.

Suppose that M is a simply connected n-dimensional manifold. The tangent space of M at x is denoted by \(T_{x}M\), which is a vector space of the same dimension as M. The tangent bundle of M is given by \(TM=\bigcup_{x\in M}T_{x}M\). A smooth family of inner products \(\langle \cdot , \cdot \rangle _{x} :T_{x}M\times T_{x}M\to \mathbb{R}\), \(x\in M\), is called a Riemannian metric on M. We denote by \(\Vert\cdot\Vert_{x}\) the norm induced by the inner product \(\langle \cdot , \cdot\rangle _{x}\) on \(T_{x}M\). The length of a piecewise smooth curve \(c:[a,b]\to M\) joining x to r, that is, with \(c(a)=x\) and \(c(b)=r\), is defined by \(L(c)=\int _{a}^{b}\Vert c'(t)\Vert \,dt\). The Riemannian distance \(d(x,r)\) is defined as the infimum of the lengths of all such curves joining x to r, and it induces the original topology on M. A geodesic in M joining x to r is said to be a minimal geodesic if its length is equal to \(d(x,r)\). A geodesic triangle \(\Delta (x_{1},x_{2},x_{3})\) of a Riemannian manifold is a set consisting of three points \(x_{1}\), \(x_{2}\), \(x_{3}\) and three minimal geodesics \(\gamma _{i}\) joining \(x_{i}\) to \(x_{i+1}\), with \(i=1,2,3\) (mod 3). A Riemannian manifold is said to be complete if, for any \(x\in M\), all geodesics emanating from x are defined for all \(t\in (-\infty ,\infty )\). If M is a complete Riemannian manifold, then any pair of points in M can be joined by a minimal geodesic (Hopf–Rinow theorem [45]). Moreover, \((M,d)\) is a complete metric space, and every closed bounded subset is compact. The exponential map \(\exp _{x}:T_{x}M\to M\) at \(x\in M\) is defined by \(\exp _{x} v=\gamma _{v}(1,x)\) for each \(v\in T_{x}M\), where \(\gamma (\cdot)=\gamma _{v}(\cdot,x)\) is the geodesic starting at x with velocity v. Then, \(\exp _{x} tv =\gamma _{v}(t,x)\) for each real number t, and \(\exp _{x}0=\gamma _{v}(0,x)=x\). Note that the mapping \(\exp _{x}\) is differentiable on \(T_{x}M\) for each \(x\in M\). When M is a Hadamard manifold (see Definition 2.1), the exponential map \(\exp _{x}\) has an inverse \(\exp ^{-1}_{x}:M\to T_{x}M\) for every \(x\in M\), and for any \(x, y\in M\), we have \(d(x,y)=\Vert \exp ^{-1}_{y}x\Vert =\Vert \exp ^{-1}_{x}y\Vert \) (see [46] for more details).
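The objects above can be made concrete on a simple one-dimensional example. The following sketch assumes the manifold \(M=(0,\infty )\) with the metric \(\langle u,v\rangle _{x}=uv/x^{2}\), a standard example that is isometric to the real line via the logarithm and hence a Hadamard manifold; this example is not taken from the text, and the function names `exp_map`, `log_map`, `norm_at`, and `dist` are illustrative.

```python
import math

# Illustrative Hadamard manifold: M = (0, inf) with metric <u, v>_x = u * v / x**2.


def norm_at(x, v):
    """Norm of the tangent vector v at the point x."""
    return abs(v) / x


def exp_map(x, v):
    """exp_x(v): value at t = 1 of the geodesic t -> x * exp(t * v / x)."""
    return x * math.exp(v / x)


def log_map(x, y):
    """exp_x^{-1}(y): initial velocity of the geodesic from x to y."""
    return x * math.log(y / x)


def dist(x, y):
    """Riemannian distance d(x, y) = |log(y / x)|."""
    return abs(math.log(y / x))


x, y = 2.0, 5.0
v = log_map(x, y)
endpoint = exp_map(x, v)        # recovers y
midpoint = exp_map(x, 0.5 * v)  # geodesic midpoint, the geometric mean of x and y
```

In this model the identity \(d(x,y)=\Vert \exp ^{-1}_{x}y\Vert =\Vert \exp ^{-1}_{y}x\Vert \) can be checked directly by comparing `dist(x, y)` with `norm_at(x, log_map(x, y))` and `norm_at(y, log_map(y, x))`.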

Definition 2.1

A complete, simply connected Riemannian manifold of nonpositive sectional curvature is called a Hadamard manifold.

Lemma 2.2

[47] Let \(k,p\in \mathbb{R}^{n}\) and \(\lambda \in [0,1]\). Then, the following hold:

(i) \(\Vert \lambda k+(1-\lambda )p\Vert ^{2}=\lambda \Vert k\Vert ^{2}+(1-\lambda )\Vert p\Vert ^{2}- \lambda (1-\lambda )\Vert k-p\Vert ^{2}\),

(ii) \(\Vert k\pm p\Vert ^{2}=\Vert k\Vert ^{2}\pm 2\langle k,p\rangle +\Vert p\Vert ^{2}\),

(iii) \(\Vert k+p\Vert ^{2}\leq \Vert k\Vert ^{2}+2|\langle p,k+p\rangle |\).
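The three relations of Lemma 2.2 are easy to sanity-check numerically. The sketch below evaluates both sides for one randomly drawn pair of vectors in \(\mathbb{R}^{3}\); the dimension, the seed, and the value of \(\lambda \) are arbitrary illustrative choices, and a single sample is of course a check rather than a proof.

```python
import random


def dot(a, b):
    """Euclidean inner product."""
    return sum(ai * bi for ai, bi in zip(a, b))


def sq_norm(a):
    """Squared Euclidean norm."""
    return dot(a, a)


random.seed(0)
k = [random.uniform(-1.0, 1.0) for _ in range(3)]
p = [random.uniform(-1.0, 1.0) for _ in range(3)]
lam = 0.3

k_plus_p = [ki + pi for ki, pi in zip(k, p)]
k_minus_p = [ki - pi for ki, pi in zip(k, p)]
combo = [lam * ki + (1 - lam) * pi for ki, pi in zip(k, p)]

# (i): identity for the squared norm of a convex combination
lhs_i = sq_norm(combo)
rhs_i = lam * sq_norm(k) + (1 - lam) * sq_norm(p) - lam * (1 - lam) * sq_norm(k_minus_p)

# (ii): expansion of the squared norm of a sum (the "+" case)
lhs_ii = sq_norm(k_plus_p)
rhs_ii = sq_norm(k) + 2 * dot(k, p) + sq_norm(p)

# (iii): upper bound for the squared norm of a sum
rhs_iii = sq_norm(k) + 2 * abs(dot(p, k_plus_p))
```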

Lemma 2.3

Let ρ be a proper, convex, and lower semi-continuous function on a Hadamard manifold M, and let \(z, t \in M\) and \(\lambda >0\). If \(t=\operatorname{prox}_{\lambda \rho}(z)\), then, for all \(y\in M\),

Proposition 2.4

[48] Assume that M is a Hadamard manifold, and let \(d:M\times M\to \mathbb{R}\) be the distance function. Then, d is a convex function with respect to the product Riemannian metric; that is, given any pair of geodesics \(\gamma _{1}:[0,1]\to M\) and \(\gamma _{2}: [0,1] \to M\), the following inequality holds for all \(t\in [0,1]\):

In particular, for each \(y\in M\), the function \(d(\cdot,y):M\to \mathbb{R}\) is convex.

Definition 2.5

[49] Let C be a non-empty, closed, and convex subset of M. A bifunction \(f:M\times M \to \mathbb{R}\) is said to be

(i) monotone on C if \(f(x,y)+f(y,x) \leq 0\) for all \(x,y\in C\);

(ii) pseudomonotone on C if

$$ f(x,y)\geq 0 \quad \implies \quad f(y,x)\leq 0, \quad \forall x,y\in C; $$

(iii) Lipschitz-type continuous on C if there exist constants \(c_{1}>0\) and \(c_{2}>0\) such that

$$ f(x,y)+f(y,z)\geq f(x,z)-c_{1}d^{2}(x,y)-c_{2}d^{2}(y,z), \quad \forall x,y,z \in C. $$
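The gap between monotonicity and pseudomonotonicity can be seen on a small example. The sketch below takes \(M=\mathbb{R}\), \(C=[0,10]\), and the bifunction \(f(x,y)=G(x)(y-x)\) with \(G(x)=1/(1+x)\); this operator, the grid, and the helper names are assumptions chosen for illustration and do not appear in the text. Since \(G>0\) is decreasing, f is pseudomonotone on C but not monotone, and a grid check reflects this.

```python
def G(x):
    """Illustrative operator: positive and decreasing on [0, 10]."""
    return 1.0 / (1.0 + x)


def f(x, y):
    """Bifunction f(x, y) = G(x) * (y - x) on the real line."""
    return G(x) * (y - x)


# Sample grid on C = [0, 10].
grid = [0.5 * k for k in range(21)]


def is_monotone(bif, pts, tol=1e-12):
    """Check f(x, y) + f(y, x) <= 0 on all sample pairs."""
    return all(bif(x, y) + bif(y, x) <= tol for x in pts for y in pts)


def is_pseudomonotone(bif, pts, tol=1e-12):
    """Check that f(x, y) >= 0 implies f(y, x) <= 0 on all sample pairs."""
    return all(bif(y, x) <= tol for x in pts for y in pts if bif(x, y) >= 0)


monotone_on_grid = is_monotone(f, grid)
pseudomonotone_on_grid = is_pseudomonotone(f, grid)
```

A grid check of this kind can refute a property (one violating pair suffices) but can only support, not establish, that it holds on all of C.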

Lemma 2.6

[48] Let \(x\in M\), \(\{ x_{k}\} \subset M\) and \(x_{k}\to x\), then for all \(y\in M \)

Proposition 2.7

[48] For any point \(x\in M\), \(P_{N}x\) is a singleton, and the following inequality holds for all \(r\in N\):

where \(N\subset M \).

Proposition 2.8

[50] (Comparison theorem for triangles) Let \(\Delta (x_{1},x_{2},x_{3})\) be a geodesic triangle. For each \(i=1,2,3 \ (\operatorname{mod} 3)\), denote by \(\gamma _{i} :[0,l_{i}]\to M\) the geodesic joining \(x_{i}\) to \(x_{i+1}\), and set \(l_{i}=L(\gamma _{i})\) and \(\alpha _{i}=\angle (\gamma _{i}'(0),-\gamma _{i-1}'(l_{i-1}))\), the angle between the vectors \(\gamma '_{i}(0)\) and \(-\gamma '_{i-1}(l_{i-1})\). Then

In terms of the distance and the exponential map, (2.1) can be expressed as

Since

Let \(x_{i+2}=x_{i}\), then in association with (2.3), we get

Proposition 2.9

[48] Let K be a non-empty convex subset of a Hadamard manifold M and \(g:K\to \mathbb{R}\) be a convex subdifferentiable and lower semi-continuous function on K. Then, p is a solution to the following convex problem

if and only if \(0\in \partial g(p)+N_{K}(p)\).

Proposition 2.10

[48] Let \(p\in M\). The exponential mapping \(\exp _{p}:T_{p}M\to M\) is a diffeomorphism, and for any two points \(p,q \in M\), there exists a unique normalized geodesic joining p to q, which can be expressed by the formula

A geodesic triangle \(\Delta (p_{1},p_{2},p_{3})\) of a Riemannian manifold M is a set consisting of three points \(p_{1}\), \(p_{2}\), and \(p_{3}\) and three minimizing geodesics joining these points.

Proposition 2.11

[48] Let \(\Delta (p_{1},p_{2},p_{3})\) be a geodesic triangle in M. Then,

and

Furthermore, if α is the angle at \(p_{1}\), then we have

The connection between geodesic triangle in Riemannian manifolds and triangles in \(\mathbb{R}^{2}\) has been established in [51].

Lemma 2.12

[51] Let \(\Delta (p_{1},p_{2},p_{3})\) be a geodesic triangle in M. Then, there exists a comparison triangle \(\Delta (\bar{p_{1}},\bar{p_{2}},\bar{p_{3}})\) for \(\Delta (p_{1},p_{2},p_{3})\) such that \(d(p_{i},p_{i+1})=\Vert \bar{p_{i}}-\bar{p_{i+1}} \Vert \), with the indices taken modulo 3; it is unique up to an isometry of \(\mathbb{R}^{2}\).
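A comparison triangle depends only on the three side lengths, so it can be built explicitly. The following sketch places \(\bar{p_{1}}\) at the origin and \(\bar{p_{2}}\) on the positive horizontal axis and locates \(\bar{p_{3}}\) with the law of cosines; the function name `comparison_triangle` and this particular placement are illustrative assumptions (any other placement differs by an isometry of \(\mathbb{R}^{2}\)).

```python
import math


def comparison_triangle(d12, d23, d31):
    """Return points p1, p2, p3 in R^2 with |p1 p2| = d12, |p2 p3| = d23, |p3 p1| = d31.

    The side lengths are assumed positive and to satisfy the triangle inequality.
    """
    p1 = (0.0, 0.0)
    p2 = (d12, 0.0)
    # Law of cosines for the angle at p1.
    cos_a = (d12 ** 2 + d31 ** 2 - d23 ** 2) / (2.0 * d12 * d31)
    cos_a = max(-1.0, min(1.0, cos_a))  # guard against rounding
    p3 = (d31 * cos_a, d31 * math.sqrt(1.0 - cos_a ** 2))
    return p1, p2, p3


# Side lengths of a geodesic triangle would be supplied here; 3, 4, 5 is a toy input.
q1, q2, q3 = comparison_triangle(3.0, 4.0, 5.0)
```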

Lemma 2.13

[51] Let \(\Delta (p_{1},p_{2},p_{3})\) be a geodesic triangle and \(\Delta (\bar{p_{1}},\bar{p_{2}},\bar{p_{3}})\) be a comparison triangle.

(i) Let \(\alpha _{1}\), \(\alpha _{2}\), \(\alpha _{3}\) be the angles of \(\Delta (p_{1},p_{2},p_{3})\), and let \(\bar{\alpha _{1}}\), \(\bar{\alpha _{2}}\), \(\bar{\alpha _{3}}\) be the corresponding angles of \(\Delta (\bar{p_{1}},\bar{p_{2}},\bar{p_{3}})\). Then,

$$ \alpha _{1}\leq \bar{\alpha _{1}},\qquad \alpha _{2}\leq \bar{\alpha _{2}}\quad \textit{and}\quad \alpha _{3}\leq \bar{\alpha _{3}}. $$

(ii) Let q be a point on the geodesic joining \(p_{1} \) to \(p_{2}\) and q̄ its comparison point in the interval \([\bar{p_{1}},\bar{p_{2}}]\). If \(d(p_{1},q)=\Vert \bar{p_{1}}-\bar{q}\Vert \) and \(d(p_{2},q)=\Vert\bar{p_{2}}-\bar{q}\Vert\), then

$$ d(p_{3},q)\leq \Vert \bar{p_{3}}-\bar{q} \Vert . $$

Proposition 2.14

[51] Let \(x\in M\). Then \(\exp _{x}:T_{x}M\to M\) is a diffeomorphism. For any two points \(x,r\in M\), there exists a unique normalized geodesic joining x to r, which is, in fact, a minimal geodesic. This result shows that M has the same topology and differential structure as \(\mathbb{R}^{n}\). Thus, Hadamard manifolds and Euclidean spaces have some similar geometrical properties.

Definition 2.15

[51] A subset \(K\subset M\) is said to be convex if for any \(p,q\in K\), the geodesic connecting p and q is in K.

Proposition 2.16

Let M be a Hadamard manifold and \(x\in M\). The map \(\psi _{x}(y)=d^{2}(x,y)\) satisfies the following:

(i) \(\psi _{x}\) is convex. Indeed, for any geodesic \(\gamma :[0,1]\to M\), the following inequality holds for all \(t\in [0,1]\):

(ii) \(\psi _{x}\) is smooth. Moreover, \(\partial \psi _{x}(y)=-2\exp ^{-1}_{y}x\).

Lemma 2.17

[45] Let \(\{a_{n}\}\) be a sequence of nonnegative real numbers such that

If \(\limsup_{k\to \infty} b_{n_{k}}\leq 0\) for every subsequence \(\{a_{n_{k}}\}\) of \(\{a_{n}\}\) satisfying the condition

then \(\lim_{n\to \infty}a_{n}=0\).

3 Main results

Let C be a non-empty, closed, and convex subset of a Hadamard manifold M, and let \(S:C\to C\) be a nonexpansive mapping. Assume that the solution set \(\Omega =F(S) \cap EP(f,C) \) is non-empty. Let \(\phi : C\to C\) be a ρ-contraction, and let the bifunction f satisfy the following conditions:

\((D_{1})\) f is pseudomonotone on C;

\((D_{2})\) f satisfies the Lipschitz-type condition on C;

\((D_{3})\) \(f(x,\cdot)\) is convex and subdifferentiable on C for each fixed \(x\in C\);

\((D_{4})\) \(f(\cdot,y)\) is upper semi-continuous for all \(y\in C\).

Moreover, we assume that the sequences \(\{\beta _{n}\}\), \(\{\alpha _{n}\}\subset (0,1)\) satisfy

\((C_{1})\) \(0<\liminf_{n\to \infty}\beta _{n}\leq \limsup_{n \to \infty}\beta _{n}<1\),

\((C_{2})\) \(\lim_{n\to \infty}\alpha _{n}=0 \text{ and } \sum_{n=1}^{\infty}\alpha _{n}=\infty \),

\((C_{3})\) \(\lim_{n\to \infty} \frac{\epsilon _{n}}{\alpha _{n}}=0\).

Algorithm 3.1

Initialization: Choose \(x_{0}, x_{1}\in C\) and a parameter λ satisfying \(0< \lambda <\min\{\frac{1}{2\theta _{1}},\frac{1}{2\theta _{2}}\}\), where \(\theta _{1}\) and \(\theta _{2}\) are positive constants.

Iterative steps: Given \(x_{n-1}\), \(x_{n}\), choose \(\mu _{n} \in [0,\bar{\mu _{n}}]\), where

Step 1: Compute

If \(u_{n}=w_{n}\), then stop. Otherwise, go to the next step.

Step 2: Compute

Step 3: Compute

Step 4: Compute

Set \(n:=n+ 1\) and return to Step 1.

To prove our main results, we first prove the following Lemma.

Lemma 3.2

For any \(t\in C\) and \(\lambda >0\), the following hold:

(i) \(\lambda [f(w_{n},t)-f(w_{n},u_{n})]\geq \langle \exp ^{-1}_{u_{n}}w_{n} ,\exp ^{-1}_{u_{n}}t\rangle \),

(ii) \(\lambda [f(u_{n},t)-f(u_{n},y_{n})]\geq \langle \exp ^{-1}_{y_{n}}w_{n} ,\exp ^{-1}_{y_{n}}t\rangle \).

Proof

From the definition of \(u_{n}\) in Algorithm 3.1 and Proposition 2.9, we have

Hence, there is \(\bar{z}\in N_{C}(u_{n})\) and \(z\in \partial _{2}f(w_{n},u_{n})\) such that

Thus, for any \(t\in C\),

Now, as \(\bar{z}\in N_{C}(u_{n})\), we have \(\langle \bar{z},\exp ^{-1}_{u_{n}}t\rangle \leq 0\) for any \(t\in C\). Thus, we have

Furthermore, from the fact that \(z\in \partial _{2} f(w_{n},u_{n})\) and the definition of subdifferential, we obtain

Multiplying both sides of inequality (3.3) by \(\lambda >0\) and using (3.2), we get

Similarly, we can prove (ii) using the same idea as in part (i). □

Lemma 3.3

Suppose that \(\{y_{n}\}\), \(\{w_{n}\}\), \(\{u_{n}\}\) are generated by Algorithm 3.1, and let \(p\in \Omega \) and \(\lambda >0\). Then,

Proof

From the fact that \(y_{n}\in T_{m} \) and by definition of \(T_{m}\), it follows that

for some \(z_{n}\in \partial _{2} f(w_{n},u_{n})\). Hence,

Moreover, since \(z_{n}\in \partial _{2} f(w_{n},u_{n})\), the definition of the subdifferential gives

Setting \(y=y_{n}\) in (3.6), we deduce

It follows from the last inequality and from (3.5) that

Moreover, by Proposition 2.9 and the definition of \(y_{n}\) in Algorithm 3.1, we have

Thus, there exist \(z\in \partial _{2}f(u_{n}, y_{n})\) and \(\bar {z}\in N_{T_{m}}(y_{n})\) such that

Note that \(\bar{z} \in N_{T_{m}}(y_{n})\), and the definition of the normal cone implies that

for any \(y\in T_{m}\). Hence, from (3.10), it follows that

Equivalently,

Now, using the definition of subdifferential for \(z\in \partial _{2}f(u_{n},y_{n})\), we have

This, together with (3.13), yields

Furthermore, letting \(y=p\) in relation (3.14), we obtain

Now, as \(p\in \Omega \), \(f(p,u_{n})\geq 0\). So, by pseudomonotonicity of f, \(f(u_{n},p)\leq 0\), and since \(\lambda >0\), we have

Applying the Lipschitz-type continuity of f, we have

Multiplying both sides of (3.17) by \(\lambda >0\) and merging (3.8) and (3.16), respectively, we obtain

Thus, applying Proposition 2.8, we obtain

Thus, we have

which implies that

□

Lemma 3.4

Let \(\{x_{n}\}\) be a sequence generated by Algorithm 3.1. Then, \(\{x_{n}\}\), \(\{y_{n}\}\), \(\{u_{n}\}\), and \(\{t_{n}\}\) are bounded.

Proof

Let \(p\in \Omega \), and consider the geodesic triangles \(\Delta (w_{n},x_{n},p )\) and \(\Delta (x_{n},x_{n-1},p)\) with their comparison triangles \(\Delta (w_{n}',x_{n}',p')\) and \(\Delta (x_{n}',x_{n-1}',p')\), respectively, in \(\mathbb{R}^{2}\). We have \(d(w_{n},p)=\Vert w_{n}'-p'\Vert \), \(d(x_{n},p)=\Vert x_{n}'-p'\Vert \) and \(d(x_{n},x_{n-1})=\Vert x_{n}'-x_{n-1}'\Vert \). Since \(w_{n}=\exp _{x_{n}}\mu _{n}\exp ^{-1}_{x_{n}}x_{n-1}\), the comparison point of \(w_{n}\) is \(w_{n}'=x_{n}'+\mu _{n}(x_{n-1}'-x_{n}')\). Thus, we have

Since \(\frac{\mu _{n}}{\alpha _{n}}\Vert x_{n}'-x_{n-1}'\Vert = \frac{\mu _{n}}{\alpha _{n}}d(x_{n},x_{n-1})\to 0\) as \(n\to \infty \), there exists a constant \(M_{1}>0\) such that \(\frac{\mu _{n}}{\alpha _{n}}d(x_{n},x_{n-1})\leq M_{1}\, \forall n\geq 1\). Thus, we obtain

It is obvious from simple computation that

Besides, since \(t_{n}=\gamma ^{1}_{n}(1-\beta _{n})\), where \(\gamma ^{1}_{n}:[0,1]\to M\) is the geodesic joining \(y_{n}\) to \(S(y_{n})\), the convexity of the Riemannian distance gives

Furthermore, since \(x_{n+1}=\gamma ^{2}_{n}(1-\alpha _{n})\), where \(\gamma ^{2}_{n}:[0,1]\to M\) is the geodesic joining \(\phi (x_{n})\) to \(t_{n}\), it follows from the convexity of the Riemannian distance, 3.1, (3.19), and (3.21) that

Using mathematical induction, we get

Thus, the sequence \(\{x_{n}\}\) is bounded. Consequently, the sequences \(\{w_{n}\}\), \(\{u_{n}\}\), \(\{t_{n}\}\) and \(\{\phi (x_{n})\}\) are also bounded. □

Lemma 3.5

Let \(\{x_{n_{k}}\}\) be a subsequence of the sequence generated by Algorithm 3.1. Then, the following conclusions hold:

(i) \(\lim_{k\to \infty}d(u_{n_{k}},w_{n_{k}})=0\),

(ii) \(\lim_{k\to \infty}d(y_{n_{k}},u_{n_{k}})=0\),

(iii) \(\lim_{k\to \infty}d(y_{n_{k}},S(y_{n_{k}}))=0\),

(iv) \(\lim_{k\to \infty}d(w_{n_{k}},x_{n_{k}})=0\),

(v) \(\lim_{k\to \infty}d(t_{n_{k}},y_{n_{k}})=0\).

Proof

Let \(p\in \Omega \) satisfy \(p= P_{\Omega }\phi (p)\). Observe that this fixed point equation has a unique solution by the Boyd–Wong fixed point theorem. Now, fix \(n\geq 1\), and let \(q=\phi (x_{n})\), \(r=t_{n}\) and \(s=\phi (p)\). We consider the following geodesic triangles with their respective comparison triangles in \(\mathbb{R}^{2}\): \(\Delta (q,r,s)\) and \(\Delta (q',r',s')\); \(\Delta (s,r,q)\) and \(\Delta (s',r',q')\); \(\Delta (s,r,p)\) and \(\Delta (s',r',p')\). By Lemma 2.12, we get

Now, using the definition in Algorithm 3.1, we get

The comparison point of \(x_{n+1}\) in \(\mathbb{R}^{2}\) is \(x'_{n+1}=\alpha _{n}q'+(1-\alpha _{n})r'\). Let a and \(a'\) denote the angles at p and \(p'\) in the triangles \(\Delta (s,x_{n+1},p)\) and \(\Delta (s',x'_{n+1},p')\), respectively. Then, by Lemma 2.13, we obtain \(a\leq a'\) and hence \(\cos a'\leq \cos a\). By applying Lemma 2.2, we have

Now, since \(d(x_{n+1},p)d(\phi (p),p)\cos a=\langle \exp ^{-1}_{p}\phi (p),\exp ^{-1}_{p}x_{n+1} \rangle \), we can rewrite (3.26) as

However, from Proposition 2.16, we obtain

By substituting Lemma 3.3 and (3.22) into (3.28), we get

By substituting (3.29) into (3.28), we have

where

Equation (3.29) can be rewritten as

where

Thus, it is not difficult to see from (3.32) that if we let \(h_{n}=\alpha _{n}(1-\rho )\), then the sequence \(\{a_{n}\}\) satisfies

where

Next, we claim that \(\limsup_{k\to \infty} H_{n_{k}}\leq 0\). Suppose that there exists a subsequence \(\{a_{n_{k}}\}\) of \(\{a_{n}\}\) that satisfies \(\liminf_{k\to \infty}(a_{n_{k}+1}-a_{n_{k}})\geq 0\). Now, from (3.29), we have

Thus, using \((C_{1})\) and the fact that \((1-2\lambda \alpha _{i})>0\), for \(i=1,2\), we obtain

Also, from (3.35), we have that \(\lim_{k\to \infty}d(y_{n_{k}},w_{n_{k}})=0\). Furthermore, from (3.20) and \((C_{2})\), we have that

Using step 2 of Algorithm 3.1 and (3.34), we get

From step 4 of Algorithm 3.1 and \((C_{2})\), we get

Finally, from (3.33), (3.36), and (3.37), we get

□

Theorem 3.6

Suppose that \((D_{1})\)–\((D_{4})\) hold and \(EP(f,C)\neq \emptyset \). Then, the sequence \(\{x_{n}\}\) generated by Algorithm 3.1 converges to an element of Ω. Let \(\{x_{n_{k_{i}}}\}\) be a subsequence of \(\{x_{n_{k}}\}\) that converges to \(x^{*}\in C\).

Proof

Let \(x^{*}\) be a limit point of the sequence \(\{x_{n_{k}}\}\). Then, there exists a subsequence \(\{x_{n_{k_{i}}}\}\) that converges to \(x^{*}\). Let \(t\in M\) be an arbitrary element. From Lemma 3.2 (ii), we obtain

Using Lipschitz-type continuity of f, we have

When we let \(t=y_{n_{k_{i}}}\) in Lemma 3.2 (i), we obtain the following

Thus, we obtain the following from (3.41) and (3.40)

Merging (3.42) and (3.39), we obtain

Thus, from the boundedness of \(\{x_{n_{k}}\}\), we obtain

Thus, \(x^{*}\in EP(f,C)\). Furthermore, using (3.35), we obtain that \(x^{*}\in F(S)\). Hence, we conclude that \(x^{*}\in \Omega \). □

Next, we show that \(\{x_{n}\}\) converges to \(x^{*}\in \Omega \). To this end, we claim that \(\limsup_{k\to \infty} H_{n_{k}}\leq 0\). To prove this, we only need to show that

Since \(\{x_{n_{k}}\}\) is bounded, there exists a subsequence \(\{x_{n_{k_{i}}}\}\) of \(\{x_{n_{k}}\}\) that converges to \(x^{*}\) such that

Hence, by substituting (3.46) into (3.33) and applying Lemma 2.17, we conclude that \(\{x_{n}\}\) converges to \(x^{*} \in \Omega \). Thus, we complete the proof.

If we change the mapping S in Algorithm 3.1 to a contraction mapping, we obtain the following.

Corollary 3.7

Let C be a non-empty, closed, and convex subset of a Hadamard manifold M and \(S:C\to C\) be a contraction mapping. Let \(\Omega =F(S) \cap EP(f,C) \) be the solution set. Let \(\phi : C\to C\) be a ρ-contraction, and let the bifunction f satisfy conditions \((D_{1})\)–\((D_{4})\).

Algorithm 3.8

Initialization: Choose \(x_{0}, x_{1}\in C\) and a parameter λ satisfying \(0< \lambda <\min\{\frac{1}{2\theta _{1}},\frac{1}{2\theta _{2}}\}\).

Iterative steps: Given \(x_{n-1}\), \(x_{n}\), choose \(\mu _{n} \in [0,\bar{\mu _{n}}]\), where

Step 1: Compute

If \(u_{n}=w_{n}\), then stop. Otherwise, go to the next step.

Step 2: Compute

Step 3: Compute

Step 4: Compute

Set \(n:= n+ 1\) and return to Step 1.

Then, the sequence \(\{x_{n}\}\) generated by Algorithm 3.8 converges strongly to an element \(p\in \Omega \).

4 Applications

4.1 An application to solving variational inequality problems

Suppose

where \(G:C\to M\) is a mapping. Subsequently, the equilibrium problem aligns with the subsequent variational inequality (VIP) (see [52]):

Now, the set of solutions of (4.2) is denoted by \(VIP(G,C)\). The mapping \(G:C\to M\) is said to be pseudomonotone if

Let us suppose that the mapping G fulfills the following conditions:

(V1) G is pseudomonotone on C with \(VIP(G,C)\neq \emptyset \);

(V2) G is L-Lipschitz continuous, that is,

$$ \Vert P_{y,x}Gx-Gy \Vert \leq L\, d(x,y),\quad x,y\in C; \tag{4.4} $$

(V3) \(\lim_{n\to \infty}\langle Gx_{n},\exp ^{-1}_{x_{n}}y \rangle \leq \langle Gp,\exp ^{-1}_{p}y\rangle \) for every \(y\in C\) and every \(\{x_{n}\}\subset C\) such that \(x_{n}\to p\).

By replacing the proximal step \(\arg \min \{f(x,y)+\frac{1}{2\lambda}d^{2}(x,y) : y\in C\}\) in Algorithm 3.1 with \(P_{C}(\exp _{x}(-\lambda G(x)))\), where \(P_{C}\) is the metric projection of M onto C, we obtain the following method for approximating a point in \(VIP(G,C)\).

In this context, we can establish the following convergence theorem for approximating a solution of the VIP (4.2).

Theorem 4.1

Let \(g:C\to C\) be a contraction and \(G:C\to M\) be a pseudomonotone operator satisfying conditions (V1)–(V3). If \(0< k=\sup \{\frac{\psi d(x_{n},q)}{d(x_{n},q)}:x_{n}\neq q,n\geq 0,q \in VIP(G,C)\}<1\), then the sequence \(\{x_{n}\}\) generated by Algorithm 4.2 converges to an element \(p\in VIP(G,C)\) which satisfies \(p=P_{VIP(G,C)}g(p)\).

Algorithm 4.2

Initialization: Choose \(x_{0}, x_{1}\in C\) and a parameter λ satisfying \(0< \lambda <\min\{\frac{1}{2\theta _{1}},\frac{1}{2\theta _{2}}\}\).

Iterative steps: Given \(x_{n-1}\), \(x_{n}\), choose \(\mu _{n} \in [0,\bar{\mu _{n}}]\), where

Step 1: Compute

If \(u_{n}=w_{n}\), then stop. Otherwise, go to the next step.

Step 2: Compute

Step 3: Compute

Step 4: Calculate

Set \(n= n+1\) and return to step 1.
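The structure of this method can be sketched in the Euclidean case \(M=\mathbb{R}^{2}\), where \(\exp _{x}v=x+v\) and geodesics are line segments. Since the displayed formulas of Steps 1 to 4 are not reproduced above, every update in the sketch is an assumption reconstructed from the surrounding text: the inertial point follows the formula for \(w_{n}\) quoted in the proof of Lemma 3.4, both extragradient steps project onto C (the half-space \(T_{m}\) used in Section 3 is replaced by C for simplicity), and the bound \(\bar{\mu _{n}}=\min \{\mu ,\epsilon _{n}/d(x_{n},x_{n-1})\}\) is a common choice that is assumed here. The operator `G`, the mappings `S` and `phi`, and the box C are hypothetical test data whose common solution is the origin.

```python
import math


def project_box(x, lo=-10.0, hi=10.0):
    """Metric projection onto the box C = [lo, hi]^n."""
    return [min(max(xi, lo), hi) for xi in x]


def G(x):
    """Hypothetical monotone (hence pseudomonotone) operator with solution 0."""
    return list(x)


def S(x):
    """Hypothetical nonexpansive mapping with F(S) = {0}."""
    return [-xi for xi in x]


def phi(x):
    """Hypothetical contraction with constant 0.5."""
    return [0.5 * xi for xi in x]


def inertial_viscosity_extragradient(x0, x1, lam=0.01, mu=0.8, iters=200):
    prev, cur = list(x0), list(x1)
    for n in range(1, iters + 1):
        alpha = 1.0 / (n + 1)
        eps = 1.0 / (n + 1) ** 2
        beta = 2.0 * n / (5.0 * n + 8.0)
        gap = math.dist(cur, prev)
        mu_n = mu if gap == 0.0 else min(mu, eps / gap)  # assumed inertial bound
        # Inertial point, with the sign convention quoted in the proof of Lemma 3.4.
        w = [c + mu_n * (p - c) for c, p in zip(cur, prev)]
        # Two projection steps replacing the two proximal subproblems.
        u = project_box([wi - lam * gi for wi, gi in zip(w, G(w))])
        y = project_box([wi - lam * gi for wi, gi in zip(w, G(u))])
        # Point at parameter 1 - beta on the segment from y to S(y).
        t = [beta * yi + (1.0 - beta) * si for yi, si in zip(y, S(y))]
        # Viscosity step: point at parameter 1 - alpha on the segment from phi(x_n) to t.
        nxt = [alpha * pi + (1.0 - alpha) * ti for pi, ti in zip(phi(cur), t)]
        prev, cur = cur, nxt
    return cur


x_approx = inertial_viscosity_extragradient([-1.0, 4.0], [2.0, 5.0])
error = math.hypot(*x_approx)  # distance to the known solution (0, 0)
```

Setting `mu=0.0` removes the inertial term, which gives a non-inertial variant for comparison on the same test data.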

5 Numerical example

In this section, we provide a numerical example to show the performance of our iterative scheme and compare it with results existing in the literature. We use MATLAB programming for our numerical experiment.

Example 5.1

Let \(M = \mathbb{R}^{2}\), regarded as a Hadamard manifold with the Euclidean metric, and let \(G:C\to M\) be defined by

where \(C=\{x\in \mathbb{R}^{2}:-10\leq x_{i}\leq 10, i=1,2\}\) and \(x=(x_{1},x_{2})\). A Monte Carlo check indicates that G is pseudomonotone on C (see [54]). Define \(f(x,y) = \langle G(x), \exp _{x}^{-1}y \rangle \) for all \(x, y \in C\). It is easy to see that f satisfies conditions (D1)–(D4). To implement our algorithm, we choose \(\alpha _{n} = \frac{1}{n+1}\), \(\epsilon _{n} = \frac{1}{(n+1)^{2}}\), \(\beta _{n} = \frac{2n}{5n+8}\), \(\mu = 0.8\) and \(\lambda = 0.01\). We use \(\|x_{n+1} - x_{n}\| < 10^{-6}\) as the stopping criterion. We use the following starting points in our implementation:

Case I: \(x_{0} = [-1,4]\) and \(x_{1} = [2,5]\)

Case II: \(x_{0} = [8,9]\) and \(x_{1} = [-3,-6]\)

Case III: \(x_{0} = [1/4, 1/8]\) and \(x_{1} =[0,4]\)

Case IV: \(x_{0} = [6,1]\) and \(x_{1} = [-1,-5]\).
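The parameter sequences chosen above can be checked against conditions \((C_{1})\)–\((C_{3})\) of Section 3 with a few lines of code. The sketch below only evaluates the sequences at increasing indices; the function names and sample indices are illustrative, and the numerical evidence complements the elementary limits \(\epsilon _{n}/\alpha _{n}=1/(n+1)\to 0\) and \(\beta _{n}\to 2/5\).

```python
def alpha(n):
    return 1.0 / (n + 1)


def eps(n):
    return 1.0 / (n + 1) ** 2


def beta(n):
    return 2.0 * n / (5.0 * n + 8.0)


ns = [10 ** k for k in range(1, 7)]

# (C3): eps_n / alpha_n = 1 / (n + 1) tends to 0.
ratios = [eps(n) / alpha(n) for n in ns]

# (C1): beta_n tends to 2/5, which lies strictly between 0 and 1.
betas = [beta(n) for n in ns]

# (C2): alpha_n tends to 0 while its partial sums grow without bound (like log n).
partial_sum = sum(alpha(n) for n in range(1, 100001))
```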

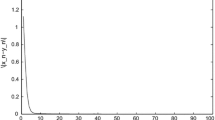

We compare Algorithm 3.1 with its non-inertial version, obtained by setting \(\mu _{n} = 0\) in the algorithm. The numerical results are shown in Table 1 and Fig. 1.

Figure 1: Example 5.1. Top left: Case I; top right: Case II; bottom left: Case III; bottom right: Case IV.

Example 5.2

Consider the Nash equilibrium model introduced in [55]. Let \(f:C \times C \to \mathbb{R}\) be a bifunction given by

where \(C=\{x=(x_{1},x_{2},\ldots , x_{m}):1\leq x_{i}\leq 100, i=1,2,\dots , m\}\). Let \(x,y\in C\), and let \(t=(t_{1},t_{2},\dots , t_{m})\in \mathbb{R}^{m}\) be chosen randomly. Besides, K and R are matrices of order \(m\times m\) such that R is symmetric positive semidefinite and \(R-K\) is negative semidefinite. It was shown in [55] that f is pseudomonotone and satisfies conditions (D1)–(D4) with Lipschitz-type constants \(c_{1}=c_{2}=\frac{1}{2}\Vert R-K\Vert \). We choose the following parameters: \(\alpha _{n}=\frac{1}{2n+14}\), \(\beta _{n}=\frac{4n}{9n+4}\), \(\epsilon _{n}=\frac{1}{n^{1.7}}\), \(\mu _{1}=0.5\) and \(\lambda =10^{-2}\). Furthermore, we use \(\Vert x_{n+1}-x_{n}\Vert ^{2}<10^{-6}\) as the stopping criterion. The starting points \(x_{0}\) and \(x_{1}\) are generated randomly in \(\mathbb{R}^{m}\), and we consider the following values of m:

The numerical results are shown in Table 2 and Fig. 2.

Fig. 2 Example 5.2. Top left: Case I; top right: Case II; bottom left: Case III; bottom right: Case IV
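One simple way to generate test matrices satisfying the conditions of Example 5.2 is sketched below. The construction \(R = AA^{T}\), \(K = R + BB^{T}\) with random A and B is an assumption made for illustration (the text does not say how K and R were generated), and the spectral norm is assumed for \(\Vert R-K\Vert \). The function names are hypothetical.

```python
import numpy as np


def make_nash_matrices(m, seed=0):
    """Return (K, R) with R symmetric positive semidefinite and
    R - K negative semidefinite.

    Assumed construction: R = A A^T and K = R + B B^T, so R - K = -B B^T.
    """
    rng = np.random.default_rng(seed)
    A = rng.standard_normal((m, m))
    B = rng.standard_normal((m, m))
    R = A @ A.T          # symmetric positive semidefinite
    K = R + B @ B.T      # makes R - K = -B B^T negative semidefinite
    return K, R


def lipschitz_constant(K, R):
    """c_1 = c_2 = 0.5 * ||R - K||, using the spectral norm (an assumption)."""
    return 0.5 * np.linalg.norm(R - K, 2)


if __name__ == "__main__":
    K, R = make_nash_matrices(5)
    print("min eigenvalue of R:    ", np.linalg.eigvalsh(R).min())
    print("max eigenvalue of R - K:", np.linalg.eigvalsh(R - K).max())
    print("c_1 = c_2 =", lipschitz_constant(K, R))
```

Since the constants \(c_{1}=c_{2}\) depend only on \(R-K\), they can be computed once per test instance before the iteration starts.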

6 Conclusion

In this paper, we proposed a viscosity extragradient algorithm with a modified inertial method for solving equilibrium and fixed point problems on Hadamard manifolds. We obtained a strong convergence result using the viscosity technique and the inertial method, under suitable conditions on the parameters that generate the sequence of approximations. Moreover, we provided numerical examples to demonstrate the convergence behavior of the proposed algorithm.

Data Availability

No datasets were generated or analysed during the current study.

References

Nagurney, A.: The application of variational inequality theory to the study of spatial equilibrium and disequilibrium. In: Readings in Econometric Theory and Practice, Contri. Econom. Anal, vol. 209, pp. 327–355. North-Holland, Amsterdam (1992)

Dadashi, V., Iyiola, O.S., Shehu, Y.: The subgradient extragradient method for pseudomonotone equilibrium problems. Optimization 69, 901–923 (2020)

Flåm, S.D., Antipin, A.S.: Equilibrium programming using proximal like algorithms. Math. Program. 78, 29–41 (1997)

Godwin, E.C., Abass, H.A., Izuchukwu, C., Mewomo, O.T.: On split equality equilibrium, monotone variational inclusion and fixed point problems in Banach spaces. Asian-Eur. J. Math. 15, 22501–22539 (2022)

Abass, H.A., Oyewole, O.K., Mebawondu, O.K., Aremu, K.O., Narain, O.K.: On split feasibility problem for finite families of equilibrium and fixed point problems. Demonstr. Math. 55, 658–675 (2020)

Korpelevich, G.M.: The extragradient method for finding saddle points and other problems. Ekon. Math. Met. 12, 747–756 (1976)

Antipin, A.S.: On a method for convex programs using a symmetrical modification of the Lagrange function. Ekon. Math. Met. 12, 1164–1173 (1976)

Quoc, T.D., Muu, L.D., Nguyen, V.H.: Extragradient algorithms extended to equilibrium problems. Optimization 57, 749–776 (2008)

Jolaoso, L.O., Okeke, S.Y.: Extragradient algorithm for solving pseudomonotone equilibrium problems with distance in reflexive Banach spaces. Netw. Spat. Econ. 21, 873–903 (2021)

Eskandani, G.Z., Raeisi, M., Rassias, T.M.: A hybrid extragradient method for solving pseudomonotone equilibrium problems using Bregman distance. Fixed Point Theory Appl. 20, 132 (2018)

Vuong, P.T., Strodiot, J.J., Nguyen, V.H.: Extragradient methods and linesearch algorithms for solving Ky Fan inequalities and fixed point problems. J. Optim. Theory Appl. 155, 605–627 (2012)

Cruz Neto, J.X., Ferreira, O.P., Lucambio Pérez, L.R.: Contributions to the study of monotone vector fields. Acta Math. Hung. 94, 307–320 (2002)

Ferreira, O.P., Lucambio Pérez, L.R., Németh, S.Z.: Singularities of monotone vector fields and an extragradient type algorithm. J. Glob. Optim. 31, 133–151 (2005)

Rapcsák, T.: Smooth Nonlinear Optimization in \(\mathbb{R}^{n}\). Nonconvex Optimization and Its Applications. Kluwer Academic Publishers, Dordrecht (1997)

Ferreira, O.P., Oliveira, P.R.: Proximal point algorithm on Riemannian manifolds. Optimization 51, 257–270 (2002)

Ndlovu, P.V., Jolaoso, L.O., Aphane, M., Khan, S.H.: Approximating a common solution of monotone inclusion problems and fixed point of quasi-pseudocontractive mappings in CAT (0) spaces. Axioms 11(10), 545 (2022)

Ndlovu, P.V., Jolaoso, L.O., Aphane, M.: Strong convergence theorem for finding a common solution of convex minimization and fixed point problems in CAT (0) spaces. Abstr. Appl. Anal. 2022 (2022). Hindawi

Khatibzadeh, H., Ranjbar, S.: A variational inequality in complete CAT(0) spaces. J. Fixed Point Theory Appl. 17, 557–574 (2015)

Colao, V., López, G., Marino, G., Martín-Márquez, V.: Equilibrium problems in Hadamard manifolds. J. Math. Anal. Appl. 388(1), 61–77 (2012)

Salahuddin, S.: The existence of solution for equilibrium problems in Hadamard manifolds. Trans. A. Razmadze Math. Inst. 171(3), 381–388 (2017)

Li, X.B., Zhou, L.W., Huang, N.J.: Gap functions and descent methods for equilibrium problems on Hadamard manifolds. J. Nonlinear Convex Anal. 17(4), 807–826 (2016)

Cruz Neto, J.X., Santos, P.S., Soares, P.A.: An extragradient method for equilibrium problems on Hadamard manifolds. Optim. Lett. 10(6), 1327–1336 (2016)

Van Nguyen, T.T., Strodiot, J.J., Nguyen, V.H.: The interior proximal extragradient method for solving equilibrium problems. J. Glob. Optim. 44(2), 175–192 (2009)

Chen, J., Liu, S.: Extragradient-like method for pseudomonotone equilibrium problems on Hadamard manifolds. J. Inequal. Appl., 1–15 (2020)

Moudafi, A.: Viscosity approximation methods for fixed point problems. J. Math. Anal. Appl. 241, 46–55 (2000)

Xu, H.K.: Viscosity approximation methods for nonexpansive mappings. J. Math. Anal. Appl. 298, 279–291 (2004)

Duan, P., He, S.: Generalized viscosity approximation methods for nonexpansive mappings. Fixed Point Theory Appl. 68, 1–11 (2014)

Ceng, L.C., Petrusel, A., Qin, X., Yao, J.C.: On inertial subgradient extragradient rule for monotone bilevel equilibrium problems. Fixed Point Theory 24, 101–126 (2023)

Ceng, L.C., Zhu, L.J., Yao, Z.S.: Mann-type inertial subgradient extragradient methods for bilevel equilibrium problems. Sci. Bull. “Politeh.” Univ. Buchar., Ser. A, Appl. Math. Phys. 84, 19–32 (2022)

Ceng, L.C., Petrusel, A., Qin, X., Yao, J.C.: Pseudomonotone variational inequalities and fixed points. Fixed Point Theory 22, 543–558 (2021)

Ceng, L.C., Petrusel, A., Qin, X., Yao, J.C.: A modified inertial subgradient extragradient method for solving pseudomonotone variational inequalities and common fixed point problems. Fixed Point Theory 21, 93–108 (2020)

Ceng, L.C., Petrusel, A., Qin, X., Yao, J.C.: Two inertial subgradient extragradient algorithms for variational inequalities with fixed-point constraints. Optimization 70, 1337–1358 (2021)

Ceng, L.C., Shang, M.J.: Hybrid inertial subgradient extragradient methods for variational inequalities and fixed point problems involving asymptotically nonexpansive mappings. Optimization 70, 715–740 (2021)

Jeong, J.U.: Generalized viscosity approximation methods for mixed equilibrium problems and fixed point problems. Appl. Math. Comput. 20(283), 168–180 (2016)

Chugh, R., Kumari, M.: Generalized viscosity approximation method for nonexpansive mapping in Hadamard manifolds. J. Math. Comput. Sci. 6, 1012–1023 (2016)

Polyak, B.T.: Some methods of speeding up the convergence of iteration methods. USSR Comput. Math. Math. Phys. 4, 1–17 (1964)

Shehu, Y., Cholamjiak, P.: Iterative method with inertial for variational inequalities in Hilbert spaces. Calcolo 56, 1–21 (2019)

Khan, A.R., Izuchukwu, C., Aphane, M., Ugwunnadi, G.C.: Modified inertial algorithm for solving mixed equilibrium problems in Hadamard spaces. Numer. Algebra Control Optim., 859–877 (2022)

Khammahawong, K., Chaipunya, P., Kumam, P.: An inertial Mann algorithm for nonexpansive mappings on Hadamard manifolds. AIMS Math. 8(1), 2093–2116 (2023)

Oyewole, O.K., Reich, S.: An inertial subgradient extragradient method for approximating solutions to equilibrium problems in Hadamard manifolds. Axioms 12, 256 (2023)

Shehu, Y., Dong, Q., Liu, L., Yao, J.: Alternated inertial subgradient extragradient method for equilibrium problems. Top 31, 1–30 (2023)

Shehu, Y., Izuchukwu, C., Yao, J.: Strongly convergent inertial extragradient type methods for equilibrium problems. Appl. Anal. 102(8), 2160–2188 (2023)

Zhang, S.S.: Generalized mixed equilibrium problem in Banach spaces. Appl. Math. Mech. 30(9), 1105–1112 (2009)

Takahashi, S., Takahashi, W.: Viscosity approximation methods for equilibrium problems and fixed point problems in Hilbert spaces. J. Math. Anal. Appl. 331(1), 506–515 (2007)

Ekeland, I.: The Hopf-Rinow theorem in infinite dimension. J. Differ. Geom. 13(2), 287–301 (1978)

Sakai, T.: Riemannian Geometry. American Mathematical Soc. (1996)

Takahashi, W.: Introduction to Nonlinear and Convex Analysis. Yokohama Publishers, Yokohama (2009)

Sakai, T.: Riemannian Geometry. Translations of Mathematical Monographs, vol. 149. Am. Math. Soc., Providence (1996)

Li, C., López, G., Martín-Márquez, V.: Monotone vector fields and the proximal point algorithm on Hadamard manifolds. J. Lond. Math. Soc. 79, 663–683 (2009)

Khammahawong, K., Kumam, P., Chaipunya, P., Yao, J., Wen, C., Jirakitpuwapat, W.: An extragradient algorithm for strongly pseudomonotone equilibrium problems on Hadamard manifolds. Thai J. Math. 18, 350–371 (2020)

Udriste, C.: Convex Functions and Optimization Methods on Riemannian Manifolds. Mathematics and Its Applications, vol. 297, pp. 348. Kluwer Academic, Dordrecht (1994)

Stampacchia, G.: Formes bilinéaires coercitives sur les ensembles convexes. C. R. Acad. Sci. Paris 258, 4413–4416 (1964)

Upadhyay, B., Treanţă, S., Mishra, P.: On variational principle for nonsmooth multiobjective optimization problems on Hadamard manifolds. Optimization 71, 1–19 (2022)

Hu, X., Wang, J.: Solving pseudo-monotone variational inequalities and pseudo-convex optimization problems using the projection neural network. IEEE Trans. Neural Netw. 17, 1487–1499 (2006)

Khammahawong, K., Kumam, P., Chaipunya, P., Yao, J., Wen, C., Jirakitpuwapat, W.: An extragradient algorithm for strongly pseudomonotone equilibrium problems on Hadamard manifolds. J. Math. Anal. 18, 350–371 (2020)

Acknowledgements

The authors thank the Department of Mathematics and Applied Mathematics at the Sefako Makgatho Health Sciences University for making its facilities available for this research.

Funding

The first author is supported by a postdoctoral research grant from the Sefako Makgatho Health Sciences University, South Africa.

Contributions

All authors contributed equally to the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Ndlovu, P.V., Jolaoso, L.O., Aphane, M. et al. Viscosity extragradient with modified inertial method for solving equilibrium problems and fixed point problem in Hadamard manifold. J Inequal Appl 2024, 21 (2024). https://doi.org/10.1186/s13660-024-03099-0

DOI: https://doi.org/10.1186/s13660-024-03099-0