Abstract

In this paper, we introduce a new inertial self-adaptive projection method for finding a common element of the solution set of a pseudomonotone variational inequality problem and the fixed point set of a strictly pseudocontractive mapping in real Hilbert spaces. The self-adaptive technique ensures the convergence of the algorithm without any prior estimate of the Lipschitz constant. With the aid of Moudafi’s viscosity approximation method, we prove a strong convergence result for the sequence generated by our algorithm under some mild conditions. We also provide numerical examples that illustrate the accuracy and efficiency of the algorithm in comparison with other recent methods in the literature.


1 Introduction

Let H be a real Hilbert space with inner product \(\langle \cdot , \cdot \rangle \) and norm \(\|\cdot \|\). Let C be a nonempty, closed and convex subset of H and \(A:H\to H\) be a single-valued operator. The variational inequality problem (shortly, VIP) is formulated as

$$ \text{find } x \in C \text{ such that}\quad \langle Ax, y - x \rangle \geq 0\quad \forall y \in C. $$(1.1)

We denote the solution set of problem (1.1) by \(VI(C,A)\). The VIP is a fundamental problem in nonlinear analysis. It serves as a useful mathematical model which unifies many important concepts in applied mathematics, such as optimization, equilibrium problems, Nash equilibrium problems, complementarity problems, fixed point problems and systems of nonlinear equations; see for instance [19–21, 31, 33]. Moreover, its solutions have been an important part of optimization theory. For these reasons, several researchers have focused on studying iterative methods for approximating the solutions of the VIP (1.1). Two important approaches for solving the VIP are regularization and projection methods. In this paper, we focus on the projection method. The simplest known projection method is the Goldstein gradient projection method [14], which involves a single projection onto the feasible set C per iteration as follows:

where \(\lambda \in (0,\frac{2\eta }{L^{2}} )\), η and L are the strong monotonicity constant and the Lipschitz constant of A, respectively, and \(P_{C}\) is the orthogonal projection onto C. It is well known that the gradient projection method converges weakly to a solution of the VIP if the operator A is strongly monotone and L-Lipschitz continuous. When A is merely monotone, the gradient projection method may fail to converge to a solution of the VIP. Korpelevich [28] introduced the following extragradient method (EGM) for solving the VIP when A is monotone and L-Lipschitz continuous:

Moreover, the sequence \(\{x_{n}\}\) generated by (1.2) converges weakly to a solution of the VIP if the stepsize condition \(\lambda \in (0, \frac{1}{L} )\) is satisfied. It should be noted that in the EGM, one needs to calculate two projections onto the feasible set C in each iteration. If the set C is not simple, then the EGM becomes difficult and costly to implement. To address this situation, Censor et al. [6, 7] introduced the following subgradient extragradient method (SEGM), which involves a projection onto a constructible half-space \(T_{n}\):

The authors also proved that the sequence \(\{x_{n}\}\) generated by the SEGM converges weakly to a solution of the VIP (1.1) if the stepsize condition \(\lambda \in (0, \frac{1}{L} )\) is satisfied. Several modifications of the EGM and SEGM have been introduced by many authors; see for instance [12, 22, 24–26, 41–43]. He [17] modified the EGM and introduced a projection and contraction method (PCM), which requires only a single projection per iteration as follows:

where \(\gamma \in (0,2)\), \(\lambda \in (0, \frac{1}{L})\) and

He proved that the sequence \(\{x_{n}\}\) generated by the PCM converges weakly to a solution of VIP.
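The contrast between the gradient projection method and the EGM can be seen on a small example. The following Python sketch assumes the standard forms of the two iterations, namely \(x_{n+1} = P_{C}(x_{n} - \lambda Ax_{n})\) for the gradient projection method and \(y_{n} = P_{C}(x_{n} - \lambda Ax_{n})\), \(x_{n+1} = P_{C}(x_{n} - \lambda Ay_{n})\) for the EGM. The rotation operator, the unit ball C, the stepsize and the starting point are illustrative choices and are not taken from the paper. The operator is monotone and 1-Lipschitz but not strongly monotone, and the only solution of the VIP is the origin.

```python
import math

def A(x):
    # Rotation operator on R^2: monotone and 1-Lipschitz, not strongly monotone.
    return (x[1], -x[0])

def project_ball(x, radius=1.0):
    # Metric projection onto the closed ball of the given radius (assumed feasible set).
    norm = math.hypot(x[0], x[1])
    if norm <= radius:
        return x
    return (radius * x[0] / norm, radius * x[1] / norm)

def gradient_projection(x, lam, iters):
    # One projection per iteration: x <- P_C(x - lam * A(x)).
    for _ in range(iters):
        ax = A(x)
        x = project_ball((x[0] - lam * ax[0], x[1] - lam * ax[1]))
    return x

def extragradient(x, lam, iters):
    # Two projections per iteration: a predictor y, then a corrector using A(y).
    for _ in range(iters):
        ax = A(x)
        y = project_ball((x[0] - lam * ax[0], x[1] - lam * ax[1]))
        ay = A(y)
        x = project_ball((x[0] - lam * ay[0], x[1] - lam * ay[1]))
    return x

x_gp = gradient_projection((1.0, 0.0), 0.5, 200)
x_eg = extragradient((1.0, 0.0), 0.5, 200)
print("gradient projection distance to solution:", math.hypot(*x_gp))
print("extragradient distance to solution:", math.hypot(*x_eg))
```

In this run the gradient projection iterates stay on the boundary of the ball, while the extragradient iterates approach the origin, which is consistent with the behaviour described above for monotone operators.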

On the other hand, the inertial-type algorithm, which is a two-step iteration process, was introduced by Polyak [38] as a means of accelerating the convergence of iterative algorithms. Recently, many inertial-type algorithms have been introduced; these include the inertial proximal method [1, 37], the inertial forward–backward method [29], the inertial split equilibrium method [23], the inertial proximal ADMM [9] and the fast iterative shrinkage thresholding algorithm (FISTA) [5, 8].

In order to accelerate the convergence of the PCM, Dong et al. [11] introduced the following inertial PCM and proved its weak convergence to a solution \(\bar{x} \in VI(C,A) \cap F(T)\), where \(F(T)=\{x\in H: Tx =x\}\) is the set of fixed points of a nonexpansive mapping T:

where \(\gamma \in (0,2)\), \(\lambda \in (0, \frac{1}{L} )\), \(\{\alpha _{n}\}\) is a non-decreasing sequence with \(\alpha _{1} = 0\), \(0 \leq \alpha _{n}\leq \alpha <1\) and \(\sigma , \delta >0\) are constants such that

It is important to mention that in solving optimization problems, strong convergence algorithms are more desirable than the weak convergence counterparts (see [3, 15]). Tian and Jiang [45] recently introduced the following hybrid-inertial PCM: \(x_{0}, x_{1} \in H\),

The authors proved that the sequence generated by (1.6) converges strongly to a solution of the VIP under the stepsize condition \(0 < a \leq \lambda _{n} \leq b < \frac{1}{L}\). Moreover, other authors have introduced further strongly convergent inertial PCMs with certain stepsize conditions in real Hilbert spaces; see e.g. [10, 18, 26, 27, 39, 40, 44]. Note that the stepsize conditions in the above methods restrict their applicability, since they require a prior estimate of the Lipschitz constant L. In practice, the Lipschitz constant is very difficult to estimate, and even when an estimate is available, the resulting stepsize is often too small, which slows down the convergence of the methods. Moreover, Algorithm (1.6) involves computing two subsets \(C_{n}\) and \(Q_{n}\), and the projection of \(x_{1}\) onto their intersection \(C_{n} \cap Q_{n}\), which can be computationally expensive. Hence, it becomes necessary to propose an efficient iterative method which does not depend on the Lipschitz constant and converges strongly to a solution of the VIP.

In this paper, we introduce a new self-adaptive inertial projection and contraction method for finding a common element of the solution set of pseudomonotone variational inequalities and the set of fixed points of strictly pseudocontractive mappings in real Hilbert spaces. Our algorithm is designed such that its convergence does not require a prior estimate of the Lipschitz constant, and we prove a strong convergence result using the viscosity approximation method [36]. We also provide some numerical experiments to illustrate the efficiency and accuracy of our proposed method by comparing it with other methods in the literature.

2 Preliminaries

Let H be a real Hilbert space and let C be a nonempty, closed and convex subset of H. We use \(x_{n} \to x\) (resp. \(x_{n} \rightharpoonup x\)) to denote that the sequence \(\{x_{n}\}\) converges strongly (resp. weakly) to a point x as \(n \to \infty \).

For each \(x \in H\), there exists a unique nearest point in C, denoted by \(P_{C}x\), satisfying

$$ \Vert x - P_{C}x \Vert \leq \Vert x - y \Vert \quad \forall y \in C. $$

\(P_{C}\) is called the metric projection from H onto C, and it is characterized by the following properties (see, e.g. [13]):

(i) For each \(x \in H\) and \(z \in C\),

$$ z = P_{C}x \quad \Rightarrow\quad \langle x - z, z - y \rangle \geq 0,\quad \forall y \in C. $$(2.1)

(ii) For any \(x,y \in H\),

$$ \langle P_{C}x - P_{C}y, x-y \rangle \geq \Vert P_{C}x - P_{C}y \Vert ^{2}. $$

(iii) For any \(x \in H\) and \(y \in C\),

$$ \Vert P_{C}x - y \Vert ^{2} \leq \Vert x-y \Vert ^{2} - \Vert x - P_{C}x \Vert ^{2}. $$(2.2)
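Properties (i)–(iii) can be checked numerically on a simple feasible set. The sketch below is only an illustration: it assumes C is the box \([0,1]^{3}\), for which the metric projection is componentwise clipping, and it tests the three inequalities on random points. The helper names, the sample size and the tolerance are hypothetical choices.

```python
import random

def project_box(x, lo=0.0, hi=1.0):
    """Metric projection onto the box C = [lo, hi]^n (componentwise clipping)."""
    return [min(max(xi, lo), hi) for xi in x]

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def sub(u, v):
    return [a - b for a, b in zip(u, v)]

def check_projection_properties(trials=1000, dim=3, seed=0, tol=1e-9):
    """Return True if (2.1), property (ii) and (2.2) hold on all random samples."""
    rng = random.Random(seed)
    for _ in range(trials):
        x = [rng.uniform(-3.0, 3.0) for _ in range(dim)]
        u = [rng.uniform(-3.0, 3.0) for _ in range(dim)]
        y = [rng.uniform(0.0, 1.0) for _ in range(dim)]  # a point of C
        px, pu = project_box(x), project_box(u)
        # (2.1): <x - P_C x, P_C x - y> >= 0 for y in C
        if dot(sub(x, px), sub(px, y)) < -tol:
            return False
        # (ii): <P_C x - P_C u, x - u> >= ||P_C x - P_C u||^2
        d = sub(px, pu)
        if dot(d, sub(x, u)) < dot(d, d) - tol:
            return False
        # (2.2): ||P_C x - y||^2 <= ||x - y||^2 - ||x - P_C x||^2
        lhs = dot(sub(px, y), sub(px, y))
        rhs = dot(sub(x, y), sub(x, y)) - dot(sub(x, px), sub(x, px))
        if lhs > rhs + tol:
            return False
    return True

print(check_projection_properties())
```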

Definition 2.1

A mapping \(A:H \rightarrow H\) is called

(i) η-strongly monotone if there exists a constant \(\eta >0\) such that

$$ \langle Ax -Ay, x-y \rangle \geq \eta \Vert x-y \Vert ^{2} \quad \forall x,y \in H, $$

(ii) α-inverse strongly monotone if there exists a constant \(\alpha >0\) such that

$$ \langle Ax -Ay, x - y\rangle \geq \alpha \Vert Ax - Ay \Vert ^{2}\quad \forall x,y \in H, $$

(iii) monotone if

$$ \langle Ax - Ay, x- y\rangle \geq 0\quad \forall x,y \in H, $$

(iv) pseudomonotone if, for all \(x,y \in H\),

$$ \langle Ax, y -x \rangle \geq 0\quad \Rightarrow\quad \langle Ay, y -x \rangle \geq 0, $$

(v) L-Lipschitz continuous if there exists a constant \(L >0\) such that

$$ \Vert Ax- Ay \Vert \leq L \Vert x-y \Vert \quad \forall x,y \in H. $$

If A is η-strongly monotone and L-Lipschitz continuous, then A is \(\frac{\eta }{L^{2}}\)-inverse strongly monotone. Also, we note that every monotone operator is pseudomonotone but the converse is not true; see, for instance [25, 26].
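To see that pseudomonotonicity is strictly weaker than monotonicity, consider the scalar operator \(A(x) = \frac{1}{1+x^{2}}\) on \(\mathbb{R}\). This example is an illustration chosen here and is not taken from the cited references. Since \(A(x) > 0\), the condition \(A(x)(y-x) \geq 0\) forces \(y \geq x\), and hence \(A(y)(y-x) \geq 0\); on the other hand, \((A(1) - A(2))(1-2) = -0.3 < 0\). The following Python sketch checks both definitions on a finite grid.

```python
def A(x):
    # Scalar operator that is positive everywhere and decreasing for x > 0.
    return 1.0 / (1.0 + x * x)

def is_monotone_on(points):
    # Definition (iii): (A(x) - A(y)) * (x - y) >= 0 for all pairs.
    return all((A(x) - A(y)) * (x - y) >= 0 for x in points for y in points)

def is_pseudomonotone_on(points):
    # Definition (iv): A(x) * (y - x) >= 0 implies A(y) * (y - x) >= 0.
    return all(
        A(y) * (y - x) >= 0
        for x in points
        for y in points
        if A(x) * (y - x) >= 0
    )

grid = [i / 10 for i in range(-50, 51)]
print("monotone on grid:", is_monotone_on(grid))
print("pseudomonotone on grid:", is_pseudomonotone_on(grid))
```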

Let \(T:H \to H\) be a nonlinear mapping. A point \(x \in H\) is called a fixed point of T if \(Tx = x\). The set of fixed points of T is denoted by \(F(T)\). The mapping \(T:H \to H\) is said to be

(i) a contraction, if there exists \(\alpha \in [0,1)\) such that

$$ \Vert Tx - Ty \Vert \leq \alpha \Vert x-y \Vert \quad \forall x,y \in H. $$

If \(\alpha =1\), then T is called a nonexpansive mapping,

(ii) a κ-strictly pseudocontraction, if there exists \(\kappa \in [0,1)\) such that

$$ \Vert Tx-Ty \Vert ^{2} \leq \Vert x-y \Vert ^{2} + \kappa \bigl\Vert (I-T)x - (I-T)y \bigr\Vert ^{2}\quad \forall x,y \in H. $$

Remark 2.2

([2])

If T is κ-strictly pseudocontractive, then T has the following important properties:

(a) T satisfies a Lipschitz condition with Lipschitz constant \(L = \frac{1+\kappa }{1-\kappa }\).

(b) \(F(T)\) is closed and convex.

(c) \(I-T\) is demiclosed at 0, that is, if \(\{x_{n}\}\) is a sequence in H such that \(x_{n} \rightharpoonup \bar{x}\) and \((I-T)x_{n} \to 0\), then \(\bar{x} \in F(T)\).

Lemma 2.3

([47])

Let H be a real Hilbert space and \(T:H \to H\) be a κ-strictly pseudocontractive mapping with \(\kappa \in [0,1)\). Let \(T_{\alpha }= \alpha I + (1-\alpha )T\) where \(\alpha \in [\kappa ,1)\), then

(i) \(F(T_{\alpha }) = F(T)\),

(ii) \(T_{\alpha }\) is nonexpansive.
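The definition of a κ-strict pseudocontraction and Lemma 2.3 can be illustrated with the mapping \(Tx = -\frac{3}{2}x\) used later in Example 4.1, for which \(\kappa = \frac{1}{5}\). The Python sketch below tests the defining inequality and the nonexpansiveness of \(T_{\alpha } = \alpha I + (1-\alpha )T\) on random points; the sampling scheme, dimension and tolerance are assumptions made for illustration. For this T one has \(T_{\alpha }x = (2.5\alpha - 1.5)x\), so \(T_{\alpha }\) is nonexpansive exactly when \(\alpha \geq \frac{1}{5}\).

```python
import random

def T(x):
    # The mapping Tx = -(3/2) x from Example 4.1.
    return [-1.5 * xi for xi in x]

def sq_norm(u):
    return sum(a * a for a in u)

def sub(u, v):
    return [a - b for a, b in zip(u, v)]

def random_pairs(trials, dim, seed):
    rng = random.Random(seed)
    for _ in range(trials):
        x = [rng.uniform(-5.0, 5.0) for _ in range(dim)]
        y = [rng.uniform(-5.0, 5.0) for _ in range(dim)]
        yield x, y

def is_strict_pseudocontraction(kappa, trials=500, dim=4, seed=1, tol=1e-9):
    # ||Tx - Ty||^2 <= ||x - y||^2 + kappa * ||(I - T)x - (I - T)y||^2
    for x, y in random_pairs(trials, dim, seed):
        lhs = sq_norm(sub(T(x), T(y)))
        residual = sub(sub(x, T(x)), sub(y, T(y)))
        if lhs > sq_norm(sub(x, y)) + kappa * sq_norm(residual) + tol:
            return False
    return True

def averaged_is_nonexpansive(alpha, trials=500, dim=4, seed=2, tol=1e-9):
    # T_alpha = alpha * I + (1 - alpha) * T, as in Lemma 2.3.
    def T_alpha(x):
        return [alpha * xi + (1.0 - alpha) * ti for xi, ti in zip(x, T(x))]
    for x, y in random_pairs(trials, dim, seed):
        if sq_norm(sub(T_alpha(x), T_alpha(y))) > sq_norm(sub(x, y)) + tol:
            return False
    return True

print(is_strict_pseudocontraction(0.2), is_strict_pseudocontraction(0.1))
print(averaged_is_nonexpansive(0.2), averaged_is_nonexpansive(0.1))
```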

Lemma 2.4

For all \(x,y,z \in H\), it is well known that

(i) \(\|x+y\|^{2} = \|x\|^{2} + 2\langle x,y \rangle + \|y\|^{2}\),

(ii) \(\|x+y\|^{2} \leq \|x\|^{2} + 2\langle y,x+y\rangle \),

(iii) \(\|tx + (1-t) y\|^{2} = t\|x\|^{2} + (1-t)\|y\|^{2} - t(1-t)\|x-y\|^{2}\), \(\forall t\in [0,1]\).

Lemma 2.5

(see [35])

Consider the Minty variational inequality problem (MVIP) which is defined as finding a point \(x^{\dagger }\in C\) such that

$$ \langle Ay, y - x^{\dagger } \rangle \geq 0\quad \forall y \in C. $$(2.3)

We denote by \(M(C,A)\) the solution set of (2.3). If a mapping \(h:[0,1] \rightarrow H\) defined as \(h(t) = A(tx + (1-t)y)\) is continuous for all \(x,y \in C\) (i.e., A is hemicontinuous), then \(M(C,A) \subset VI(C,A)\). Moreover, if A is pseudomonotone, then \(VI(C,A)\) is closed, convex and \(VI(C,A) = M(C,A)\).

Lemma 2.6

([30])

Let \(\{\alpha _{n}\}\) and \(\{\gamma _{n}\}\) be sequences of nonnegative real numbers such that

$$ \alpha _{n+1} \leq (1-\delta _{n})\alpha _{n} + \beta _{n} + \gamma _{n},\quad n \geq 1, $$

where \(\{\delta _{n}\}\) is a sequence in \((0,1)\) and \(\{\beta _{n}\}\) is a real sequence. Assume that \(\sum_{n=0}^{\infty }\gamma _{n} < \infty \). Then the following results hold:

(i) If \(\beta _{n} \leq \delta _{n} M\) for some \(M \geq 0\), then \(\{\alpha _{n}\}\) is a bounded sequence.

(ii) If \(\sum_{n=0}^{\infty }\delta _{n} = \infty \) and \(\limsup_{n\rightarrow \infty }\frac{\beta _{n}}{\delta _{n}} \leq 0\), then \(\lim_{n\rightarrow \infty }\alpha _{n} =0\).
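Part (ii) of the lemma can be illustrated numerically. The sketch below assumes the recursion has the usual form \(\alpha _{n+1} \leq (1-\delta _{n})\alpha _{n} + \beta _{n} + \gamma _{n}\) and runs it with equality for the hypothetical choices \(\delta _{n} = \frac{1}{n+2}\), \(\beta _{n} = \frac{\delta _{n}}{n+1}\) and \(\gamma _{n} = \frac{1}{(n+1)^{2}}\). These satisfy \(\sum \delta _{n} = \infty \), \(\frac{\beta _{n}}{\delta _{n}} \to 0\) and \(\sum \gamma _{n} < \infty \), so the lemma predicts \(\alpha _{n} \to 0\).

```python
def run_recursion(steps, a0=10.0):
    """Iterate a_{n+1} = (1 - delta_n) a_n + beta_n + gamma_n with illustrative sequences."""
    a = a0
    for n in range(steps):
        delta = 1.0 / (n + 2)        # in (0, 1), and the series of delta_n diverges
        beta = delta / (n + 1)       # beta_n / delta_n = 1 / (n + 1) -> 0
        gamma = 1.0 / (n + 1) ** 2   # summable
        a = (1.0 - delta) * a + beta + gamma
    return a

for steps in (10, 1000, 100000):
    print(steps, run_recursion(steps))
```

The decay in this example is slow (roughly of order \(\frac{\log n}{n}\)), which reflects the fact that the lemma only guarantees convergence, not a rate.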

Lemma 2.7

([32])

Let \(\{a_{n}\}\) be a sequence of real numbers such that there exists a subsequence \(\{a_{n_{i}}\}\) of \(\{a_{n}\}\) with \(a_{n_{i}} < a_{n_{i}+1}\) for all \(i \in \mathbb{N}\). Consider the sequence of integers \(\{m_{k}\}\) defined by

Then \(\{m_{k}\}\) is a non-decreasing sequence verifying \(\lim_{k \rightarrow \infty }m_{k} = \infty \), and for all \(k \in \mathbb{N}\), the following estimates hold:

3 Main results

In this section, we introduce a new inertial projection and contraction method with a self-adaptive technique for solving the VIP (1.1). The following conditions are assumed throughout the paper.

Assumption 3.1

A. The feasible set C is a nonempty, closed and convex subset of a real Hilbert space H,

B. the associated operator \(A:H \to H\) is L-Lipschitz continuous, pseudomonotone and weakly sequentially continuous on bounded subsets of H, i.e., for each sequence \(\{x_{n}\}\), \(x_{n} \rightharpoonup x\) implies that \(Ax_{n} \rightharpoonup Ax\),

C. \(T:H \to H\) is a κ-strictly pseudocontractive mapping,

D. the solution set \(Sol:=VI(C,A)\cap F(T)\) is nonempty,

E. the function \(f: H \to H\) is a contraction with contractive coefficient \(\rho \in (0,1)\),

F. the control sequences \(\{\theta _{n}\}\), \(\{\alpha _{n}\}\), \(\{\beta _{n}\}\) and \(\{\delta _{n}\}\) satisfy

- \(\{\alpha _{n}\} \subset (0,1)\), \(\lim_{n \rightarrow \infty }\alpha _{n} = 0\) and \(\sum_{n=0}^{\infty }\alpha _{n} = \infty \),

- \(\{\beta _{n}\} \subset (a,1-\alpha _{n}) \) for some \(a >0\),

- \(\{\theta _{n}\}\subset [0,\theta )\) for some \(\theta >0\) such that \(\lim_{n \rightarrow \infty }\frac{\theta _{n}}{\alpha _{n}}\|x_{n} - x_{n-1}\| = 0\),

- \(\{\delta _{n}\} \subset (0,1)\) and \(\liminf_{n\to \infty }(\delta _{n} - \kappa )>0\).

Remark 3.2

The inertial parameter \(\theta _{n}\) and \(\alpha _{n}\) can be chosen as follows:

We now present our Algorithm as follows.

Before proving the convergence of Algorithm 3.3, we provide some key lemmas which will be used in the sequel.

Lemma 3.4

The stepsize rule defined by (3.1) is well defined and

Proof

Since A is L-Lipschitz continuous, we have

This is equivalent to

Hence, (3.1) holds for all \(\lambda _{n} \leq \sigma \). If \(\lambda _{n} = \sigma \), then the result follows. On the other hand, if \(\lambda _{n} < \sigma \), then, by the search rule (3.1), \(\frac{\lambda _{n}}{l}\) must violate the inequality (3.1), i.e.,

Combining this with the fact that A is Lipschitz continuous, we have \(\lambda _{n} > \frac{\mu l}{L}\). Hence \(\min \{ \sigma ,\frac{\mu l}{L} \} \leq \lambda _{n} \leq \sigma \). This completes the proof. □
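The proof above suggests that the rule (3.1) is an Armijo-type backtracking search: starting from σ, the trial stepsize is multiplied by \(l \in (0,1)\) until \(\lambda \|Aw_{n} - Ay_{n}\| \leq \mu \|w_{n} - y_{n}\|\) holds with \(y_{n} = P_{C}(w_{n} - \lambda Aw_{n})\). The following Python sketch implements that reading. The exact form of the rule, the test operator `A`, the box constraint and the helper names are assumptions made for illustration, while the values \(\sigma = 2\), \(l = 0.5\) and \(\mu = 0.1\) are those used in Example 4.1.

```python
import math

def A(x):
    # Hypothetical test operator A(x) = Mx with M = [[2, 1], [-1, 2]].
    # Since M^T M = 5 I, its Lipschitz constant is sqrt(5).
    return (2.0 * x[0] + x[1], -x[0] + 2.0 * x[1])

def project_box(x, lo=-1.0, hi=1.0):
    # Metric projection onto the assumed feasible set C = [lo, hi]^2.
    return tuple(min(max(xi, lo), hi) for xi in x)

def norm(x):
    return math.sqrt(sum(xi * xi for xi in x))

def backtracking_stepsize(w, sigma=2.0, l=0.5, mu=0.1):
    """Return the largest lam in {sigma, sigma*l, sigma*l^2, ...} such that
    lam * ||A(w) - A(y)|| <= mu * ||w - y||, where y = P_C(w - lam * A(w))."""
    aw = A(w)
    lam = sigma
    while True:
        y = project_box(tuple(wi - lam * ai for wi, ai in zip(w, aw)))
        ay = A(y)
        lhs = lam * norm(tuple(a - b for a, b in zip(aw, ay)))
        rhs = mu * norm(tuple(a - b for a, b in zip(w, y)))
        if lhs <= rhs:
            return lam, y
        lam *= l  # shrink the trial stepsize and try again

L = math.sqrt(5.0)
lam, y = backtracking_stepsize((0.7, -0.3))
print("accepted stepsize:", lam, "lower bound:", min(2.0, 0.1 * 0.5 / L))
```

The search terminates because the test is automatically satisfied once \(\lambda \leq \frac{\mu }{L}\); no estimate of L is needed to run it, and L appears here only to compare the accepted stepsize with the bound of Lemma 3.4.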

Lemma 3.5

The sequence \(\{x_{n}\}\) generated by Algorithm 3.3 is bounded. In addition

Proof

Let \(x^{*} \in VI(C,A)\). Then

By the definition of \(y_{n}\) and using the variational characterization of the \(P_{C}\), i.e., (2.1), we have

Also since \(x^{*} \in VI(C,A)\) and A is pseudomonotone,

Combining (3.6) and (3.7), we have

Therefore, it follows from (3.5) that

However, from the definition of \(z_{n}\) and \(\xi _{n}\), we have

Combining (3.8) and (3.9), we get

Since \(\gamma \in (0,2)\), we have

Moreover,

Since \(\frac{\theta _{n}}{\alpha _{n}} \|x_{n} -x_{n-1}\| \to 0\), there exists a constant \(M>0\) such that

thus

Therefore, it follows from (ii) of Lemma 2.3 that

By induction, we see that \(\{\|x_{n} - x^{*}\|\}\) is bounded. Consequently, \(\{x_{n}\}\) is bounded. Furthermore,

Also from (3.1), we have

It therefore follows from (3.11) and (3.12) that

This completes the proof. □

Lemma 3.6

Let \(x^{*} \in Sol\). Then the sequence \(\{x_{n}\}\) generated by Algorithm 3.3 satisfies the following inequality:

where \(s_{n} = \|x_{n} - x^{*}\|^{2}\), \(a_{n} = \frac{2\alpha _{n}(1-\rho )}{1-\alpha _{n}\rho }\), \(b_{n} = \frac{\langle f(x^{*}) - x^{*}, x_{n+1} -x^{*} \rangle }{1-\rho }\), \(c_{n} = \frac{\alpha _{n}^{2}}{1-\alpha _{n}\rho } \|x_{n} -x^{*}\|^{2} + \frac{\theta _{n}}{1-\alpha _{n}\rho }\|x_{n}- x_{n-1}\|M_{2}\) for some \(M_{2}>0\).

Proof

From Lemma 2.4(i), we have

where \(M_{2} = \sup_{n\geq 1}\{2\|x_{n} -x^{*}\| + \theta _{n}\|x_{n} -x_{n-1}\| \}\).

Moreover, from Lemma 2.4(iii), we get

Also, using Lemma 2.4(ii) and (3.14), we have

Hence

This completes the proof. □

Now we present our strong convergence theorem.

Theorem 3.7

Let \(\{x_{n}\}\) be the sequence generated by Algorithm 3.3 and suppose Assumption 3.1 is satisfied. Then \(\{x_{n}\}\) converges strongly to a point \(\bar{x} \in Sol\), where \(\bar{x} = P_{Sol}f(\bar{x})\).

Proof

Let \(x^{*} \in Sol\) and denote \(\|x_{n}-x^{*}\|^{2}\) by \(\Gamma _{n}\) for all \(n \geq 1\). We consider the following two possible cases.

CASE A: Suppose there exists \(n_{0} \in \mathbb{N}\) such that \(\{\Gamma _{n}\}\) is non-increasing for \(n \geq n_{0}\). Since \(\{\Gamma _{n}\}\) is bounded, \(\Gamma _{n}\) converges and thus \(\Gamma _{n} - { \Gamma _{n+1} } \to 0\) as \(n \to \infty \).

First we show that

From (3.13) and (3.15), we have

Since \(\{\beta _{n}\}\subset (a, 1-\alpha _{n})\) and \(\{\alpha _{n}\}\subset (0,1)\), we have

Using the fact that \(\alpha _{n} \to 0\) and \(\frac{\theta _{n}}{\alpha _{n}}\|x_{n} - x_{n-1}\| \to 0\) as \(n\to \infty \), we obtain

hence

Also from (3.4), (3.9) and the definition of \(z_{n}\), we obtain

Therefore from (3.16), we get

Again from (3.14) and (3.15), we have

Then

Taking the limit of the above inequality and using the fact that \(\liminf_{n\to \infty } (\delta _{n} - \kappa ) >0\), we have

Clearly

This implies that

Also

On the other hand, it is obvious that

hence

Next, we show that \(\omega _{w}(\{x_{n}\}) \subset Sol\), where \(\omega _{w}(\{x_{n}\})\) is the set of weak accumulation points of \(\{x_{n}\}\). Let \(\{x_{n_{k}}\}\) be a subsequence of \(\{x_{n}\}\) such that \(x_{n_{k}} \rightharpoonup p\) as \(k \to \infty \). We need to show that \(p \in Sol\). Since \(\|w_{n_{k}} - x_{n_{k}}\| \to 0\) and \(\|z_{n_{k}} - x_{n_{k}}\| \to 0\), we have \(w_{n_{k}} \rightharpoonup p\) and \(z_{n_{k}} \rightharpoonup p\), respectively. From the variational characterization of \(P_{C}\) (i.e., (2.1)), we obtain

Hence

This implies that

Fix \(y \in C\) and let \(k \to \infty \) in (3.20). Since \(\|w_{n_{k}} - y_{n_{k}}\|\to 0\) and \(\liminf_{k\to \infty }\lambda _{n_{k}}>0\), we have

Now let \(\{\epsilon _{k}\}\) be a sequence of decreasing nonnegative numbers such that \(\epsilon _{k} \to 0\) as \(k \to \infty \). For each \(\epsilon _{k}\), we denote by N the smallest positive integer such that

where the existence of N follows from (3.21). This means that

for some \(t_{n_{k}} \in H\) satisfying \(1 = \langle Aw_{n_{k}}, t_{n_{k}} \rangle \) (since \(Aw_{n_{k}} \neq 0\)). Since A is pseudomonotone, we have

Hence

Since \(\epsilon _{k} \to 0\) and A is continuous, the right-hand side of (3.22) tends to zero and thus we obtain

Hence

Thus from Lemma 2.5, we obtain \(p \in VI(C,A)\). Moreover, since \(\|z_{n_{k}}-Tz_{n_{k}}\|\to 0\), it follows from Remark 2.2(c) that \(p \in F(T)\). Therefore \(p \in Sol:= VI(C,A)\cap F(T)\).

Now we show that \(\{x_{n}\}\) converges strongly to \(\bar{x} = P_{Sol}f(\bar{x})\). To do this, it suffices to show that

Choose a subsequence \(\{x_{n_{k}}\}\) of \(\{x_{n}\}\) such that

Since \(\|x_{n_{k}+1} -x_{n_{k}}\| \rightarrow 0\) and \(x_{n_{k}} \rightharpoonup p\), we have from (2.1) that

Hence, putting \(x^{*} = \bar{x}\) in Lemma 3.6 and using Lemma 2.6(ii) and (3.24), we deduce that \(\|x_{n} - \bar{x}\| \rightarrow 0\) as \(n \rightarrow \infty \). This implies that \(\{x_{n}\}\) converges strongly to \(\bar{x} = P_{Sol}f(\bar{x})\).

CASE B: Suppose \(\{\Gamma _{n}\}\) is not eventually decreasing. Hence, we can find a subsequence \(\{\Gamma _{n_{k}}\}\) of \(\{\Gamma _{n}\}\) such that \(\Gamma _{n_{k}} \leq \Gamma _{n_{k}+1}\), for all \(k \geq 1\). Then we can define a subsequence \(\{\Gamma _{{\tau (n)}+1}\}\) as in Lemma 2.7 so that

Moreover, \(\{\tau (n)\}\) is a non-decreasing sequence such that \(\tau (n) \to \infty \) as \(n \to \infty \) and \(\Gamma _{\tau (n)} \leq \Gamma _{{\tau (n)}+1}\) for all \(n \geq n_{0}\). Let \(x^{*} \in Sol\), then from Lemma 3.6, we have

for some \(M >0\). Following a similar process to CASE A, we have

Since \(\{x_{\tau (n)}\}\) is bounded, there exists a subsequence of \(\{x_{\tau (n)}\}\) still denoted by \(\{x_{\tau (n)}\}\) which converges weakly to \(\bar{x} \in C\) and

Furthermore, since \(\|x_{\tau (n)} -x^{*}\|^{2} \leq \|x_{{\tau (n)}+1} -x^{*}\|^{2}\), from (3.25), we have

Hence

Therefore from (3.26), we have

As a consequence, we obtain, for all \(n \geq n_{0}\),

hence \(\lim_{n \rightarrow \infty }\|x_{n} -x^{*}\| =0\). This implies that \(\{x_{n}\}\) converges to \(x^{*}\). This completes the proof. □

Remark 3.8

(a) We emphasize here that the assumption that A is pseudomonotone is more general than the monotone condition used by [11, 17, 41, 44] for PCM.

(b) Also, the convergence result is proved without any prior condition on the stepsize. This improves the results of [10, 11, 44, 45] and many other results in this direction.

(c) The strong convergence result proved in this paper is more desirable in optimization theory than the weak convergence counterparts; see [3].

4 Numerical experiments

In this section, we test the numerical efficiency of the proposed Algorithm 3.3 by solving some variational inequality problems. We compare Algorithm 3.3 with other inertial projection and contraction methods proposed in [10, 11, 44]. Our interest is to investigate how the line search process improves the numerical efficiency of Algorithm 3.3. It should be noted that the methods proposed in [10, 11, 44] require a prior estimate of the Lipschitz constant of the cost operator. Moreover, the methods in [11, 44] converge for monotone variational inequalities and thus may not be applicable to pseudomonotone variational inequalities. All numerical computations are carried out using a Lenovo PC with the following specification: Intel(R)core i7-600, CPU 2.48GHz, RAM 8.0GB, MATLAB version 9.5 (R2019b).

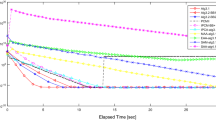

Example 4.1

We consider the variational inequality problem given in [16], which is an HP-hard model in finite dimensional space. The cost operator \(A:\mathbb{R}^{m} \to \mathbb{R}^{m}\) is defined by \(A(x) = Mx + q\), with \(M = BB^{T}+S+D\), where \(B,S,D \in \mathbb{R}^{m \times m}\) are randomly generated matrices such that S is skew symmetric, D is a positive definite diagonal matrix and \(q=0\). In this case, the operator A is monotone and Lipschitz continuous with \(L = \max (eig(BB^{T}+S+D))\). The feasible set is described by the linear constraints \(Qx \leq b\) for some \(Q \in \mathbb{R}^{k\times m}\) and a random vector \(b \in \mathbb{R}^{k}\) with nonnegative entries. We also define the mapping \(T:\mathbb{R}^{m} \to \mathbb{R}^{m}\) by \(Tx = (\frac{-3x_{1}}{2},\frac{-3x_{2}}{2},\dots , \frac{-3x_{m}}{2} )\), which is \(\frac{1}{5}\)-strictly pseudocontractive with \(F(T) = \{0\}\). It is easy to see that \(Sol = \{0\}\). We compare the performance of Algorithm 3.3 with Algorithm 1.5 of [11], Algorithm 3.1 of Cholamjiak et al. [10] and Algorithm 1 of Thong et al. [44], which are also versions of the projection and contraction method. We use \(\|x_{n+1}-x_{n}\| < 10^{-5}\) as the stopping criterion for all the algorithms. We choose the following parameters for Algorithm 3.3: \(\theta _{n} =\frac{1}{5n+2}\), \(\alpha _{n} = \frac{1}{\sqrt{5n+2}}\), \(\beta _{n} = \frac{1}{2}-\frac{1}{\sqrt{5n+2}}\), \(\delta _{n} = \frac{1}{5}+\frac{2n}{5n+2}\), \(\gamma =0.85\), \(l = 0.5\), \(\sigma =2\), \(\mu = 0.1\). The projection onto C is computed using the fmincon solver in the MATLAB Optimization Toolbox. Since the other algorithms require a prior estimate of the Lipschitz constant, we choose the following parameters for them:

- for Algorithm 1.5 of Dong et al. [11], we take \(\alpha _{n} = \frac{1}{5n+2}\), \(\lambda = \frac{1}{2L}\), \(\gamma = 0.85\), and \(\tau _{n} = \frac{1}{2}\),

- for Algorithm 3.1 in Cholamjiak et al. [10], we take \(\alpha _{n} = \frac{1}{5n+2}\), \(\lambda = \frac{1}{2L}\), \(\gamma = 0.85\), \(\theta _{n} = \frac{1}{2} - \frac{1}{(5n+2)^{0.5}}\) and \(\beta _{n} = \frac{1}{(5n+2)^{0.5}}\),

- for Algorithm 1 in Thong et al. [44], we take \(\alpha _{n} = \frac{1}{5n+2}\), \(\lambda = \frac{1}{2L}\), \(\gamma = 0.85\), \(\beta _{n} =\frac{1}{(5n+2)^{0.5}}\) and \(f(x) = \frac{x}{2}\).
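The structure of the cost operator in this example explains why it is monotone: for \(z = x - y\), the skew-symmetric part contributes \(z^{T}Sz = 0\), so \(\langle Mx - My, x-y \rangle = \|B^{T}z\|^{2} + z^{T}Dz \geq 0\). The following Python sketch builds one such matrix and checks this inequality on random pairs. The distributions used to generate B, S and D, the dimension and the helper names are assumptions, since the text only states that the matrices are randomly generated.

```python
import random

def build_operator(m, seed=0):
    """Build M = B B^T + S + D with S skew-symmetric and D positive diagonal."""
    rng = random.Random(seed)
    B = [[rng.uniform(-1.0, 1.0) for _ in range(m)] for _ in range(m)]
    R = [[rng.uniform(-1.0, 1.0) for _ in range(m)] for _ in range(m)]
    S = [[R[i][j] - R[j][i] for j in range(m)] for i in range(m)]  # S^T = -S
    d = [rng.uniform(1.0, 2.0) for _ in range(m)]                  # diagonal of D
    return [
        [
            sum(B[i][k] * B[j][k] for k in range(m)) + S[i][j] + (d[i] if i == j else 0.0)
            for j in range(m)
        ]
        for i in range(m)
    ]

def apply(M, x):
    return [sum(mij * xj for mij, xj in zip(row, x)) for row in M]

def monotonicity_gap(M, x, y):
    # <Mx - My, x - y>, which should be nonnegative for a monotone operator.
    z = [a - b for a, b in zip(x, y)]
    return sum(a * b for a, b in zip(apply(M, z), z))

m = 5
M = build_operator(m)
rng = random.Random(42)
min_gap = min(
    monotonicity_gap(
        M,
        [rng.uniform(-10.0, 10.0) for _ in range(m)],
        [rng.uniform(-10.0, 10.0) for _ in range(m)],
    )
    for _ in range(200)
)
print("smallest observed monotonicity gap:", min_gap)
```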

The numerical results are presented in Table 1 and Fig. 1.

Figure 1. Example 4.1. Top left: Case I; top right: Case II; bottom left: Case III; bottom right: Case IV

From the numerical results, it is clear that our Algorithm 3.3 solves the HP-hard problem with a smaller number of iterations and CPU-time (second). This shows the advantage of using a line search process for selecting the stepsize in Algorithm 3.3 rather than a fixed stepsize which depends on the estimate of the Lipschitz constant as used in [10, 11, 44].

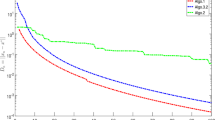

Example 4.2

In this example, we consider a variational inequality problem in an infinite dimensional space where A is a pseudomonotone and Lipschitz continuous operator but not monotone. We only compare our Algorithm 3.3 with Algorithm 3.1 of Cholamjiak et al. [10] which is strongly convergent and also solves the pseudomonotone variational inequality problem.

Let \(H=L_{2}([0,1])\) endowed with inner product \(\langle x,y \rangle = \int _{0}^{1}x(t)y(t)\,dt\) for all \(x,y \in L_{2}([0,1])\) and norm \(\|x\| = (\int _{0}^{1}|x(t)|^{2}\,dt )^{\frac{1}{2}}\) for all \(x \in L_{2}([0,1])\). Let

where \(y= 3t^{2}+9\) and \(a =1\). Then we can define the projection \(P_{C}\) as

Define the operator \(B:C \rightarrow \mathbb{R}\) by \(B(u) = \frac{1}{1+\|u\|^{2}}\) and \(F: L^{2}([0,1]) \rightarrow L^{2}([0,1])\) as the Volterra integral operator defined by \(F(u)(t) = \int _{0}^{t} u (s)\,ds\) for all \(u \in L^{2}([0,1])\) and \(t\in [0,1]\). F is bounded, linear and monotone with \(L = \frac{\pi }{2}\) (cf. Exercise 20.12 in [4]). Let \(A: L^{2}([0,1])\rightarrow L^{2}([0,1])\) be defined by

Suppose \(\langle Au, v-u \rangle \geq 0\) for all \(u,v \in C\), then \(\langle Fu, v-u \rangle \geq 0\). Hence

Thus, A is pseudomonotone. To see that A is not monotone, choose \(v =1\) and \(u =2\), then

Now consider the VIP in which the underlying operator A is as defined above. Let \(T:L^{2}([0,1]) \to L^{2}([0,1])\) be defined by \(Tx(t) = x(t)\) which is 0-strictly pseudocontractive. Clearly, \(Sol = \{0\}\). We choose the following parameters for Algorithm 3.3: \(\alpha _{n} = \frac{1}{n+4}\), \(\theta _{n} =\alpha _{n}^{2}\), \(\beta _{n} = \frac{n+1}{n+4}\), \(\delta _{n} = \frac{2n}{4n+1}\), \(l = 0.28\), \(\mu = 0.57\), \(\sigma = 2\), \(\gamma =1\). We take \(\beta _{n} =\frac{1}{n+4}\), \(\alpha _{n} = \alpha _{n}^{2}\), \(\lambda = \frac{1}{2\pi }\), \(\gamma = 1\) and \(f(x) = x\) in Algorithm 3.1 of Cholamjiak et al. [10]. Using \(\|x_{n+1} -x_{n}\|<10^{-5}\) as stopping criterion, we plot the graphs of \(\|x_{n+1}-x_{n}\|\) against number of iterations with the following initial points:

- Case I: \(x_{0} = \frac{\exp (2t)}{9}\), \(x_{1}= \frac{\exp (3t)}{7}\),

- Case II: \(x_{0} = \sin (2t)\), \(x_{1}= \cos (5t)\),

- Case III: \(x_{0} =\exp (2t)\), \(x_{1} = \sin (7t)\),

- Case IV: \(x_{0} = t^{2} +3t-1\), \(x_{1} = (2t+1)^{2}\).

The numerical results can be found in Table 2 and Fig. 2. The numerical results also show that Algorithm 3.3 performs better in terms of number of iterations and CPU time taken for computation than Algorithm 3.1 of [10]. This also signifies the advantage of using dynamic stepsize rather than a fixed stepsize which depends on an estimate of the Lipschitz constant.
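The failure of monotonicity claimed in this example can be checked by direct computation. The sketch below assumes that the operator has the form \(A(u) = B(u)F(u) = \frac{1}{1+\|u\|^{2}}\int _{0}^{t}u(s)\,ds\), which is consistent with the definitions of B and F above, and it discretizes \([0,1]\) with the trapezoidal rule; the grid size and helper names are illustrative. For the constant functions \(u = 2\) and \(v = 1\), one gets \(Au(t) = \frac{2t}{5}\) and \(Av(t) = \frac{t}{2}\), so \(\langle Av - Au, v-u \rangle = -\int _{0}^{1}\frac{t}{10}\,dt = -\frac{1}{20}\).

```python
def inner(x, y, h):
    # Trapezoidal approximation of the L2([0,1]) inner product on a uniform grid.
    total = 0.5 * (x[0] * y[0] + x[-1] * y[-1])
    total += sum(a * b for a, b in zip(x[1:-1], y[1:-1]))
    return h * total

def volterra(u, h):
    # F(u)(t) = integral of u from 0 to t, by the cumulative trapezoidal rule.
    out = [0.0]
    for i in range(1, len(u)):
        out.append(out[-1] + 0.5 * h * (u[i - 1] + u[i]))
    return out

def A(u, h):
    # Assumed form of the operator: A(u) = F(u) / (1 + ||u||^2).
    scale = 1.0 / (1.0 + inner(u, u, h))
    return [scale * f for f in volterra(u, h)]

n = 1000
h = 1.0 / n
u = [2.0] * (n + 1)  # the constant function u = 2
v = [1.0] * (n + 1)  # the constant function v = 1
Au, Av = A(u, h), A(v, h)
gap = inner([a - b for a, b in zip(Av, Au)], [a - b for a, b in zip(v, u)], h)
print("<Av - Au, v - u> =", gap)
```

A negative value of `gap` confirms that A is not monotone for this pair of functions.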

Figure 2. Example 4.2. Top left: Case I; top right: Case II; bottom left: Case III; bottom right: Case IV

5 Conclusion

In this paper, we introduced a new self-adaptive inertial projection and contraction method for approximating solutions of variational inequalities which are also fixed points of a strictly pseudocontractive mapping in real Hilbert spaces. A strong convergence result is proved without a prior estimate of the Lipschitz constant of the cost operator for the variational inequality problem. This is very important in the case where the Lipschitz constant cannot be estimated or is very difficult to estimate. Furthermore, we provided some numerical examples to show the accuracy and efficiency of the proposed method. This result improves and extends the corresponding results of [11, 17, 26, 41, 42, 44, 45] and other important results in the literature.

Availability of data and materials

Not applicable.

References

Alvarez, F., Attouch, H.: An inertial proximal method for monotone operators via discretization of a nonlinear oscillator with damping. Set-Valued Anal. 9(1–2), 3–11 (2001)

Anh, P.N., Phuong, N.X.: Fixed point methods for pseudomonotone variational inequalities involving strict pseudocontractions. Optimization 64, 1841–1854 (2015)

Bauschke, H.H., Combettes, P.L.: A weak-to-strong convergence principle for Fejér-monotone methods in Hilbert spaces. Math. Oper. Res. 26(2), 248–264 (2001)

Bauschke, H.H., Combettes, P.L.: Convex Analysis and Monotone Operator Theory in Hilbert Spaces. CMS Books in Mathematics. Springer, New York (2011)

Beck, A., Teboulle, M.: A fast iterative shrinkage-thresholding algorithm for linear inverse problems. SIAM J. Imaging Sci. 2(1), 183–202 (2009)

Censor, Y., Gibali, A., Reich, S.: The subgradient extragradient method for solving variational inequalities in Hilbert spaces. J. Optim. Theory Appl. 148, 318–335 (2011)

Censor, Y., Gibali, A., Reich, S.: Strong convergence of subgradient extragradient methods for the variational inequality problem in Hilbert space. Optim. Methods Softw. 26, 827–845 (2011)

Chambolle, A., Dossal, C.H.: On the convergence of the iterates of the “fast shrinkage/thresholding algorithm”. J. Optim. Theory Appl. 166(3), 968–982 (2015)

Chan, R.H., Ma, S., Jang, J.F.: Inertial proximal ADMM for linearly constrained separable convex optimization. SIAM J. Imaging Sci. 8(4), 2239–2267 (2015)

Cholamjiak, P., Thong, D.V., Cho, Y.J.: A novel inertial projection and contraction method for solving pseudomonotone variational inequality problems. Acta Appl. Math. (2020). https://doi.org/10.1007/s10440-019-00297-7

Dong, Q.L., Cho, Y.J., Zhong, L.L., Rassias, T.M.: Inertial projection and contraction algorithms for variational inequalities. J. Glob. Optim. 70, 687–704 (2018)

Dong, Q.L., Jiang, D., Cholamjiak, P., Shehu, Y.: A strong convergence result involving an inertial forward–backward algorithm for monotone inclusions. J. Fixed Point Theory Appl. 19(4), 3097–3118 (2017)

Goebel, K., Reich, S.: Uniform Convexity, Hyperbolic Geometry, and Nonexpansive Mappings. Dekker, New York (1984)

Goldstein, A.A.: Convex programming in Hilbert space. Bull. Am. Math. Soc. 70, 709–710 (1964)

Güler, O.: On the convergence of the proximal point algorithms for convex minimization. SIAM J. Control Optim. 29, 403–419 (1991)

Harker, P.T., Pang, J.S.: A damped-Newton method for the linear complementarity problem. In: Allgower, E.L., Georg, K. (eds.) Computational Solution of Nonlinear Systems of Equations. AMS Lectures on Applied Mathematics, vol. 26, pp. 265–284 (1990)

He, B.S.: A class of projection and contraction methods for monotone variational inequalities. Appl. Math. Optim. 35, 69–76 (1997)

Hieu, D.V., Cholamjiak, P.: Modified extragradient method with Bregman distance for variational inequalities. Appl. Anal. (2020). https://doi.org/10.1080/00036811.2020.1757078

Iiduka, H.: A new iterative algorithm for the variational inequality problem over the fixed point set of a firmly nonexpansive mapping. Optimization 59, 873–885 (2010)

Iiduka, H., Yamada, I.: A use of conjugate gradient direction for the convex optimization problem over the fixed point set of a nonexpansive mapping. SIAM J. Optim. 19, 1881–1893 (2009)

Iiduka, H., Yamada, I.: A subgradient-type method for the equilibrium problem over the fixed point set and its applications. Optimization 58, 251–261 (2009)

Jolaoso, L.O., Alakoya, T.O., Taiwo, A., Mewomo, O.T.: An inertial extragradient method via viscosity approximation approach for solving equilibrium problem in Hilbert spaces. Optimization (2020). https://doi.org/10.1080/02331934.2020.1716752

Jolaoso, L.O., Oyewole, K.O., Okeke, C.C., Mewomo, O.T.: A unified algorithm for solving split generalized mixed equilibrium problem and fixed point of nonspreading mapping in Hilbert space. Demonstr. Math. 51(1), 211–232 (2018)

Jolaoso, L.O., Taiwo, A., Alakoya, T.O., Mewomo, O.T.: A self adaptive inertial subgradient extragradient algorithm for variational inequality and common fixed point of multivalued mappings in Hilbert spaces. Demonstr. Math. 52, 183–203 (2019)

Jolaoso, L.O., Taiwo, A., Alakoya, T.O., Mewomo, O.T.: A strong convergence theorem for solving pseudo-monotone variational inequalities using projection methods in a reflexive Banach space. J. Optim. Theory Appl. 185(3), 744–766 (2020). https://doi.org/10.1007/s10957-020-01672-3

Jolaoso, L.O., Taiwo, A., Alakoya, T.O., Mewomo, O.T.: A unified algorithm for solving variational inequality and fixed point problems with application to the split equality problem. Comput. Appl. Math. 39, 38 (2020). https://doi.org/10.1007/s40314-019-1014-2

Kesornprom, S., Cholamjiak, P.: Proximal type algorithms involving linesearch and inertial technique for split variational inclusion problem in Hilbert spaces with applications. Optimization 68, 2365–2391 (2019)

Korpelevich, G.M.: The extragradient method for finding saddle points and other problems. Èkon. Mat. Metody 12, 747–756 (1976) (In Russian)

Lorenz, D., Pock, T.: An inertial forward–backward algorithm for monotone inclusions. J. Math. Imaging Vis. 51(2), 311–325 (2015)

Maingé, P.E.: Approximation methods for common fixed points of nonexpansive mappings in Hilbert spaces. J. Math. Anal. Appl. 325, 469–479 (2007)

Maingé, P.E.: A hybrid extragradient-viscosity method for monotone operators and fixed point problems. SIAM J. Control Optim. 47, 1499–1515 (2008)

Maingé, P.E.: Strong convergence of projected subgradient methods for nonsmooth and nonstrictly convex minimization. Set-Valued Anal. 16, 899–912 (2008)

Maingé, P.E.: Projected subgradient techniques and viscosity methods for optimization with variational inequality constraints. Eur. J. Oper. Res. 205, 501–506 (2010)

Marino, G., Xu, H.K.: Weak and strong convergence theorems for strict pseudo-contraction in Hilbert spaces. J. Math. Anal. Appl. 329, 336–346 (2007)

Mashreghi, J., Nasri, M.: Forcing strong convergence of Korpelevich’s method in Banach spaces with its applications in game theory. Nonlinear Anal. 72, 2086–2099 (2010)

Moudafi, A.: Viscosity approximation method for fixed-points problems. J. Math. Anal. Appl. 241(1), 46–55 (2000)

Moudafi, A., Oliny, M.: Convergence of a splitting inertial proximal method for monotone operators. J. Comput. Appl. Math. 155(2), 447–454 (2003)

Polyak, B.T.: Some methods of speeding up the convergence of iteration methods. USSR Comput. Math. Math. Phys. 4(5), 1–17 (1964)

Shehu, Y., Cholamjiak, P.: Iterative method with inertial for variational inequalities in Hilbert spaces. Calcolo 56, 4 (2019). https://doi.org/10.1007/s10092-018-0300-5

Thong, D.V., Cholamjiak, P.: Strong convergence of a forward–backward splitting method with a new step size for solving monotone inclusions. Comput. Appl. Math. 38, 94 (2019). https://doi.org/10.1007/s40314-019-0855-z

Thong, D.V., Hieu, D.V.: Inertial subgradient extragradient algorithms with line-search process for solving variational inequality problems and fixed point problems. Numer. Algorithms 80, 1283–1307 (2018)

Thong, D.V., Hieu, D.V.: Modified subgradient extragradient method for variational inequality problems. Numer. Algorithms 79, 597–601 (2018)

Thong, D.V., Hieu, D.V.: Modified subgradient extragradient algorithms for variational inequalities problems and fixed point algorithms. Optimization 67(1), 83–102 (2018)

Thong, D.V., Vinh, V.N., Cho, Y.J.: New strong convergence theorem of the inertial projection and contraction method for variational inequality problems. Numer. Algorithms 84, 285–305 (2020)

Tian, M., Jiang, B.N.: Inertial hybrid algorithm for variational inequality problems in Hilbert spaces. J. Inequal. Appl. 2020, 12 (2020)

Zegeye, H., Shahzad, N.: Convergence theorems of Mann’s type iteration method for generalized asymptotically nonexpansive mappings. Comput. Math. Appl. 62, 4007–4014 (2011)

Zhou, H.: Convergence theorems of fixed points for k-strict pseudo-contractions in Hilbert spaces. Nonlinear Anal. 69(2), 456–462 (2008)

Acknowledgements

The authors thank the Department of Mathematics and Applied Mathematics at the Sefako Makgatho Health Sciences University for making its facilities available for this research. The authors also thank the anonymous reviewers for their valuable suggestions and comments, which greatly improved the paper.

Funding

The first author is supported by a postdoctoral research grant from the Sefako Makgatho Health Sciences University, South Africa.

Author information

Contributions

All authors worked equally on the results and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Jolaoso, L.O., Aphane, M. Strong convergence inertial projection and contraction method with self adaptive stepsize for pseudomonotone variational inequalities and fixed point problems. J Inequal Appl 2020, 261 (2020). https://doi.org/10.1186/s13660-020-02536-0

DOI: https://doi.org/10.1186/s13660-020-02536-0