Abstract

In this paper, we propose a low-rank matrix approximation algorithm for solving the Toeplitz matrix completion (TMC) problem. The approximation matrix was obtained by the mean projection operator on the set of feasible Toeplitz matrices for every iteration step. Thus, the sequence of the feasible Toeplitz matrices generated by iteration is of Toeplitz structure throughout the process, which reduces the computational time of the singular value decomposition (SVD) and approximates well the solution. On the theoretical side, we provide a convergence analysis to show that the matrix sequences of iterates converge. On the practical side, we report the numerical results to show that the new algorithm is more effective than the other algorithms for the TMC problem.

Similar content being viewed by others

1 Introduction

The problem of completing a low-rank matrix, which is to recover a matrix from the partial entries, has became more and more popular. The problem arises in many areas of engineering and applied science such as machine learning [1, 2], control [20], image inpainting [4], and so on. And the problem is mathematically written as follows:

where the objective function \(\operatorname{rank}(X)\) denotes the rank of X, Ω is a subset of indices for the q known entries, \(D\in\mathbb{R}^{m\times n}\) is the unknown matrix to be reconstructed and the one that has available q sampled entries, \(\mathcal {P}_{\varOmega}(X)\) is the sampling projector which acquires only the entries indexed by Ω.

When the rank of X is known in advance or can be estimated [17, 18], the matrix completion problem (1.1) is equivalent to the following non-convex optimization problem on the manifold:

where \(\mathfrak{X}_{r} \) represents the smooth r-dimensional manifold set of rank r matrix.

It is clear that problem (1.2) is a smooth Riemannian optimization since the objective function is also smooth.

Recently, there have been some algorithms, mentioned in what follows, to solve the problem (1.1) or (1.2). The orthogonal rank-one matrix pursuit (OR1MP) [33] was presented based on the orthogonal matching pursuit for wavelet decomposition [22], only the top singular value and the corresponding singular vector as well as a least square problem on Ω were computed, but the precision was poor. It is well known that a manifold of rank r matrix can be factorized into a bi-linear form: \(X=GH^{T}\) with \(G\in\mathbb{R}^{m\times r}\), \(H\in\mathbb{R}^{n\times r}\), so some algorithms have been proposed to solve the problem (1.1) or (1.2), for example, Vandereycken [27] proposed the geometric conjugate gradient method and Newton method on Riemannian manifold; Mishra [21], Boumal and Absil [5] presented the Riemannian geometry method, i.e., the gradient descent scheme and trust-region scheme; Tanner and Wei [26] took advantage of the different descent directions for exact linear search respectively; the alternating direction methods were presented by Jain [15]; Keshavan [16] and Wen [38] presented gradient descent method and nonlinear successive over-relaxation algorithm respectively. Based on the shortest distance scheme in [32] and the alternating steepest descent algorithm in [26], the inner and outer iteration was presented in [37] and was proved to be feasible, but it depended on the inner iterative methods. The optimal low-rank approximate algorithm in [32] was showed to be effective, since the algorithm was simple and iterated repeatedly on the manifold of rank r matrix.

On the current practice, the sample matrix D often has a special structure such as the Hankel or Toeplitz structure and so on. The Hankel or Toeplitz matrix also plays important roles in many areas of engineering and applied science, especially in signal and image processing [3, 14]. There are a lot algorithms which are effective for the low-rank Hankel or Toeplitz matrix completion problem. For example, the nuclear norm minimization for the low-rank Hankel matrix reconstruction problem under the random Gaussian sampling model was investigated in [7]. The common drawback of this otherwise very appealing convex optimization approach is the high computational complexity of solving the equivalent semi-definite programming (SDP). Cai et al. [6] developed a fast non-convex algorithm for a low-rank Hankel matrix completion by minimizing the distance between a low-rank matrix and a Hankel matrix with partial known anti-diagonals, and an accelerated variant has been developed by using Nesterov’s memory technique. [9] came up with an iterative hard thresholding (IHT) and fast IHT (FIHT) algorithms for efficient reconstruction of spectrally sparse signals via low-rank Hankel matrix completion. By utilizing the low-rank structure of the Hankel matrix corresponding to a spectrally sparse signal x, [8] introduced a computationally efficient algorithm for the spectral compressed sensing problem. Chen and Chi [10] studied nuclear norm minimization for the low-rank Hankel matrix completion problem, the method proposed in [10] utilized convex relaxation and was theoretically guaranteed to work. Fazel et al. [11] proposed also the reconstruction of the low-rank Hankel matrices via nuclear norm minimization for system identification realization. Sebert et al. considered the Toeplitz block matrices as sensing matrices whose elements are drawn from the same distributions in [23]. Shaw et al. [24] presented algorithms for least-squares approximation of the Toeplitz and Hankel matrices from noise corrupted or ill-composed matrices, which may not have correct structural or rank properties. Wang et al. [29, 30] proposed a mean value algorithm to force the Toeplitz structure and a structure-preserving algorithm for the Toeplitz matrix recovery. Wen et al. [34–36] gave some algorithms for the TMC problem. Further, Ying et al. [40] exploited the low-rank tensor structure of the signal when developing recovery problems for the multidimensional spectrally sparse signal recovery problems. For details, one can refer to the above mentioned algorithms and references given therein.

An \(n\times n\) Toeplitz matrix \(T\in\mathbb{R}^{n\times n}\) is of the following form:

It is clear that the entries of T are constant along each diagonal. Based on the Lanczos method [13] and the FFT technique [28], an algorithm for the SVD of a Hankel matrix [19, 39] has been presented; its complexity is \(O(n^{2}\log n)\) but that of the general SVD algorithm is \(O(n^{3})\). It is significant to study the TMC problem because most of the popular algorithms need to compute the SVD. The fast SVD (denoted by lansvd) algorithm with \(O(n^{2}\log n)\) complexity has been applied to some recent algorithms for solving the TMC problem as inspired by [24]. That is also the interest of this study.

Then it motivates us to switch the iteration matrix into the Toeplitz matrix for solving the TMC problem. To exploit the structure well, in this paper, we modify the general matrix completion algorithm to the TMC problem by using mean projection approximation. The proposed algorithm ensures that the sequence of iteration matrices remains the Toeplitz structure so that the Lanczos SVD algorithm can always be used. It not only reduces the computational cost, but also improves the accuracy.

The rest of the paper is organized as follows. We present a new algorithm after giving an outline of the OLRMA [32], MOR1MP, and MEOR1MP [12] algorithms for solving the TMC problem in Sect. 2. Section 3 establishes the convergence results for the new algorithm given in Sect. 2. We compare our algorithm with the OLRMA, MOR1MP, and MEOR1MP algorithms through numerical experiments in Sect. 4. Finally, we conclude the paper with a short discussion in Sect. 5.

Before continuing, we provide here a brief summary of the necessary notations used throughout the paper. \(\mathbb{R}\) is the set of real numbers, \(\mathbb{R}^{m\times n}\) denotes the set of \(m\times n\) real matrices. Likewise, \(\mathbb {T}^{n\times n}\) denotes the set of real \(n\times n\) Toeplitz matrices. \(\|X\|_{F}\) is the F-norm of a matrix X. \(\langle X,Y\rangle =\operatorname{trace}(X^{T}Y)\) represents the standard inner product between two matrices (\(\|X\|_{F}^{2}=\langle X,X\rangle\)), \(X^{T}\) stands for the transpose of X. \(\varOmega\subset\{1,2,\ldots,m\}\times\{ 1,2,\ldots,n\}\) is a subset of indices of the observed entries of the matrix \(X\in\mathbb{R}^{m\times n}\), and Ω̄ represents the indices of the missing entries. The singular value decomposition (SVD) of a matrix \(A \in\mathbb{R} ^{m\times n}\) with rank r is

where \(U \in\mathbb{R} ^{m\times r}\) and \(V \in\mathbb{R} ^{n\times r}\) are column orthogonal matrices, \(\sigma_{1} \geq\sigma_{2}\geq \cdots\geq\sigma_{r}>0\). \(I_{n}=(e_{1},e_{2},\ldots,e_{n})\in\mathbb {R}^{n\times n}\) denotes the \(n\times n\) identity matrix and \(S_{n}=(e_{2},e_{3},\ldots,e_{n},\mathbf{0})\in\mathbb{R}^{n\times n}\) is called the shift matrix where 0 is a zero-vector. It is clear that

with “O” standing for a zero-matrix with the corresponding size. Thus, a Toeplitz matrix \(T\in\mathbb{T} ^{n\times n}\), shown in (1.3), can be rewritten as a linear combination of these shift matrices, that is,

2 Description of algorithms

For the goal of completing comparison subsequently, we briefly review and introduce some algorithms for solving the TMC problem firstly. Here, the TMC problem is considered as follows:

where \(D\in\mathbb{T}^{n\times n}\) is the underlying Toeplitz matrix to be reconstructed, \(\varOmega\subset\{-n+1,\ldots, n-1\}\) is the indices of the observed diagonals of D, Ω̄ is the complementary set of Ω. For \(X\in\mathbb{T}^{n\times n}\), the \(\operatorname{diag}(X,l)\) denotes the vector formed by lth diagonal of X, \(l\in\{-n+1,\ldots, n-1\}\), \(\mathcal{P}_{\varOmega}\) is the orthogonal projector on Ω, satisfying

Likewise, \(\operatorname{diag}(\mathcal{P}_{\bar{\varOmega}}(X),l)\) can be defined as follows:

Based on the OR1MP algorithm in [33], we came up with three schemes for solving the TMC problem in the following. For convenience, \([U_{k},\varSigma_{k},V_{k}]=\operatorname{lansvd}(Y_{k},r)\) denotes the SVD of the matrix \(Y_{k}\) to find the top-r singular pairs by using the Lanczos method.

Algorithm 2.1

([12] (The MOR1MP algorithm for the TMC problem))

Given a sampled set Ω and the sampled entries \(\mathcal {P}_{\varOmega}(D)=Y_{\varOmega}\), \(\varepsilon=10^{-3}\), maxiter. Given also the initial Toeplitz matrix \(X_{0}=0\), \(k:=1\).

- Step 1.

Compute the SVD of \(R_{k}\), \(R_{k}=Y_{\varOmega}-X_{k-1}\), \([U_{k},\sim,V_{k}]=\operatorname{lansvd}(R_{k},1,L)\), set \(M_{k}=U_{k} V_{k}^{T}\), compute \(a_{l}=\operatorname{average}(\operatorname{diag}(M_{k}),l), l\in\{ -n+1,\ldots,n-1\}\), set \(M_{k}=\sum_{l=1}^{n-1}t_{-l}S_{n}^{l}+\sum_{l=0}^{n-1}t_{l}(S_{n}^{T})^{l}\).

- Step 2.

Compute the weight vector \(\theta^{k}\) by using the closed form least squares solution \(\theta^{k}=(\bar{M}_{k}^{T}\bar {M}_{k})^{-1}\bar {M}_{k}^{T}\dot{y}\).

- Step 3.

Set \(X_{k}=\sum_{i=1}^{k}\theta_{i}^{k} \mathcal {P}_{\varOmega}(M_{i})\).

- Step 4.

Compute \(\widehat{Y}_{k}=\sum_{i=1}^{k}\theta_{i}^{k}M_{i}\).

- Step 5.

If \(k\geq \text{maxiter}\) or \(\|R_{k}\|_{F}/\|Y_{\varOmega}\|_{F}\leq \varepsilon\), stop; otherwise set \(k:=k+1\); go to Step 1.

Algorithm 2.2

([12] (The MEOR1MP algorithm for the TMC problem))

Given a sampled set Ω and the sampled entries \(\mathcal {P}_{\varOmega}(D)=Y_{\varOmega}\), \(\varepsilon=10^{-3}\), maxiter. Given also the initial Toeplitz matrix \(X_{0}^{*}=0\), \(k:=1\).

- Step 1.

Compute the SVD of \(R_{k}\), \(R_{k}=Y_{\varOmega}-X_{k-1}^{*}\); \([U_{k},\sim,V_{k}]=\operatorname{lansvd}(R_{k},1,L)\), set \(M_{k}=U_{k} V_{k}^{T}\).

- Step 2.

Compute the optimal weights \(\alpha^{k}\) for \(X_{k-1}^{*}\) and \(M_{k}\) by solving \(\min_{\alpha}\|\alpha_{1}X_{k-1}^{*}+\alpha_{2}\mathcal{P}_{\varOmega}(M_{k})-Y_{\varOmega}\|^{2}\).

- Step 3.

Compute \(X_{k}=\alpha_{1}^{k}X_{k-1}^{*}+\alpha_{2}^{k}\mathcal {P}_{\varOmega}(M_{k})\); \(\theta_{k}^{k}=\alpha_{2}^{k}\) and \(\theta_{i}^{k}=\theta _{i}^{k-1}\alpha_{1}^{k}\) for \(i< k\), set \(a_{l}=\operatorname{average}(\operatorname{diag}(X_{k}),l), l\in\{-n+1,\ldots,n-1\}\), set \(X_{k}^{*}=\sum_{l=1}^{n-1}t_{-l}S_{n}^{l}+\sum_{l=0}^{n-1}t_{l}(S_{n}^{T})^{l}\).

- Step 4.

Compute \(\widehat{Y}_{k}=\sum_{i=1}^{k}\theta _{i}^{k}M_{i}\), set \(a_{l}=\operatorname{average}(\operatorname{diag}(\widehat{Y}_{k}),l), l\in\{-n+1,\ldots,n-1\}\), set \(\widehat{Y}_{k}^{*}=\sum_{l=1}^{n-1}t_{-l}S_{n}^{l}+\sum_{l=0}^{n-1}t_{l}(S_{n}^{T})^{l}\).

- Step 5.

If \(k\geq\text{maxiter}\) or \(\|R_{k}\|_{F}\leq\varepsilon\), stop; otherwise set \(k:=k+1\); go to Step 1.

Remark

The main difference between the proposed algorithms and OR1MP is that the rank-one matrix \(M_{k}\) was switched into the Toeplitz matrix by the mean value operator before solving the least square problem, which guarantees that \(X_{k}\) and \(\widehat{Y}_{k}\) are all Toeplitz matrices. So the computing time of the SVD is decreased due to using of the FFT technique. Then, in the iteration process, all the matrices are of the Toeplitz structure, which ensures lower computational cost even though the process of the mean value increases the computing time.

Algorithm 2.3

([29] (Optimal low-rank matrix approximation (OLRMA) algorithm for solving problem (1.1) or (1.2)))

Given an initial Toeplitz matrix \(\mathcal{P}_{\varOmega}(D)\), \(Y_{1}=\mathcal{P}_{\varOmega}(D)\), tolerance parameter ε, \(0< c<1\), \(X_{0}=0\), \(r=1\), \(k:=1\).

- Step 1.

\([U_{k},\varSigma_{k},V_{k}]=\operatorname{lansvd}(Y_{k},r)\).

- Step 2.

Set \(X_{k}=U_{k}\varSigma_{k}V_{k}^{T}\).

- Step 3.

If \(\|\mathcal{P}_{\varOmega}(D)-\mathcal{P}_{\varOmega}(X_{k})\|_{F}\leq c\|\mathcal{P}_{\varOmega}(D)-\mathcal{P}_{\varOmega}(X_{k-1})\|_{F}\), \(r=r\); otherwise \(r:=r+1\).

- Step 4.

Set \(Y_{k+1}=\mathcal{P}_{\varOmega}(D)+\mathcal {P}_{\bar {\varOmega}}(X_{k})\).

- Step 5.

If \(\|\mathcal{P}_{\varOmega}(D)-\mathcal{P}_{\varOmega}(X_{k})\|_{F}/\|\mathcal{P}_{\varOmega}(D)\|_{F}\leq\varepsilon\), stop; otherwise set \(k:=k+1\); go to Step 1.

And we introduce a modified algorithm by using the mean projection technique. The operator was defined as follows.

Definition 2.1

(Mean projection operator)

For any matrix \(A=(a_{ij})\in\mathbb{R}^{n\times n}\), the mean projection operator \(\mathcal{M}\) is defined as follows:

where \(\tilde{a}_{\alpha}=\frac{\sum_{j-i=\alpha}a_{ij}}{n-|\alpha|}\), \(\alpha=-n+1,-n+2,\ldots, n-1\).

It is clear that \(\mathcal{M}(A)\) is a Toeplitz matrix derived from the matrix A. Namely, any \(A\in\mathbb{R}^{n\times n}\) can be changed into the Toeplitz structure via the mean projection operator \(\mathcal{M}(\cdot )\), which is the best Toeplitz approximation under F-norm (see Theorem 3.1 in Sect. 3).

Next, we are to switch the approximation matrix \(X_{k}\) in Algorithm 2.3 into the Toeplitz one by mean projection matrix for solving the TMC problem, and the new algorithm was presented as follows.

Algorithm 2.4

(Modified low-rank matrix approximation (MOLRMA) algorithm for solving TMC problem (2.1))

Given an initial Toeplitz matrix \(\mathcal{P}_{\varOmega}(D)\), \(Y_{1}=\mathcal{P}_{\varOmega}(D)\), tolerance parameter ε, \(0< c<1\), \(X_{0}=O\), \(r=1\), \(k:=1\).

- Step 1.

\([U_{k},\varSigma_{k},V_{k}]=\operatorname{lansvd}(Y_{k},r)\).

- Step 2.

Set \(X_{k}=U_{k}\varSigma_{k}V_{k}^{T}\).

- Step 3.

If \(\|\mathcal{P}_{\varOmega}(D)-\mathcal{P}_{\varOmega}(X_{k})\|_{F}\leq c\|\mathcal{P}_{\varOmega}(D)-\mathcal{P}_{\varOmega}(X_{k-1})\|_{F}\), \(r=r\); otherwise \(r:=r+1\).

- Step 4.

Compute the mean projection matrix \(\bar{X}_{k}=\mathcal {M}(X_{k})\) by (2.2).

- Step 5.

Set \(Y_{k+1}=\mathcal{P}_{\varOmega}(D)+\mathcal {P}_{\bar {\varOmega}}(X_{k})\).

- Step 6.

If \(\|\mathcal{P}_{\varOmega}(D)-\mathcal{P}_{\varOmega}(X_{k})\|_{F}/\|\mathcal{P}_{\varOmega}(D)\|_{F}\leq\varepsilon\), stop; otherwise set \(k:=k+1\); go to Step 1.

Remark

We can see that both the iteration sequences \(\{Y_{k}\}\) obtained by Algorithms 2.3–2.4 are close to the underlying matrix. If the unknown matrix to be reconstructed D is of the Toeplitz structure, however, it is clear that the iteration sequence \(\{Y_{k}\}\) with the Toeplitz structure in Algorithm 2.4 better approximates to the Toeplitz matrix. Further, the iteration in Algorithm 2.4 ensures lower computational cost even though the process of mean projection increases the computing time since the Lanczos SVD method can be used.

3 Convergence analysis

In this section, based on the structure of a Toeplitz matrix and some reasonable conditions, the convergence analysis of the new algorithm is given. Firstly, the distance between the feasible Toeplitz matrix and its projection onto the r-dimensional manifold is provided. The one-to-one correspondence between a matrix and its projection enables us to devise a notation of distance between a matrix and an r-dimensional manifold as follows.

Definition 3.1

For a matrix \(Y\in\mathbb{R}^{m\times n} \),

is called distance between a matrix Y and an r-dimensional manifold \(\mathfrak{X}_{r}\). That is essentially the distance between matrix Y and its projection onto the r-dimensional manifold \(\mathfrak{X}_{r}\).

We trivially introduce the distance between a feasible matrix and its projection onto the r-dimensional manifold.

For \(\mathcal{P}_{\varOmega}(Y)=\mathcal{P}_{\varOmega}(D)\), we call \(d(Y,r)=\min_{\dim(X)=r}\|Y-X\|_{F}^{2}\) a distance between a feasible matrix Y and an r-dimensional manifold \(\mathfrak{X}_{r}\).

Evidently,

by introducing

Theorem 3.1

([25])

For any matrix\(Y\in\mathbb{R}^{n\times n}\), the mean projection matrix\(\mathcal{M}(Y)\)presented in (2.2) is the solution of the following optimization problem:

Lemma 3.2

([31])

Let\(Y\in\mathbb{R}^{n\times n}\), \(X\in\mathbb{T}^{n\times n}\). Then the mean projection matrix\(\mathcal{M}(Y)\in\mathbb{T}^{n\times n}\), as defined in (2.2), obeys

Lemma 3.3

Let\(\{Y_{k}\}\)and\(\{X_{k}\}\)be the sequences generated by Algorithm 2.4. Then

Proof

It is known that

According to Lemma 3.2 and \(\mathcal{P}_{\bar{\varOmega }}(Y_{k+1})=\mathcal{M}(\mathcal{P}_{\bar{\varOmega}}(X_{k}))\) for the Toeplitz matrix sequence \({Y_{k}}\), we have

and then (3.6) holds true. □

Lemma 3.4

([32])

The \(d(Y,r)\) satisfies

Theorem 3.5

Let\(\{Y_{k}\}\)and\(\{X_{k}\}\)be the matrix sequences generated by Algorithm 2.4. Then they obey

Proof

According to the fact \(\|Y_{k+1}-X_{k+1}\|_{F}^{2}\leq\|Y_{k+1}-X_{k}\|_{F}^{2}\) and Lemma 3.3, (3.8) holds true. □

Lemma 3.6

Suppose that the low-rankris known in problem (2.1), if\(\operatorname{rank}(X_{k})=r\), \(k\geq k_{0}\) (\(k_{0}\)is a positive integer) and there exists a parameter\(0<\theta<1\)such that\(\|Y_{k-1}-Y_{k}\|_{F}\geq\theta\| Y_{k-1}-X_{k-1}\| _{F}\). Then there exists a positive constant\(0< c<1\)such that

Proof

According to Theorem 3.5 and the assumptions of Lemma 3.6, we have

Let \(c=\sqrt{1-\theta^{2}}\). Then

and then (3.9) holds. □

Theorem 3.7

Let\(\{Y_{k}\}\)be the matrix sequence generated by Algorithm 2.4. And suppose that the low-rankris known in problem (2.1), if\(\operatorname{rank}(X_{k})=r\), \(k\geq k_{0}\) (\(k_{0}\)is a positive integer) and there exists\(0<\theta<1\)such that\(\| Y_{k-1}-Y_{k}\|_{F}^{2}\geq\theta\|Y_{k-1}-X_{k-1}\|_{F}^{2}\). Then there existsc (\(0< c <1\)) to satisfy

where\(\sum_{i=1}^{r}m_{i}=k-r\), \(0< c<1\).

Proof

Suppose \(r_{k}=r(Y_{k})=r(Y_{k-1})+1\). From the definition of \(d(Y,r)\), we have

and

(3.11) can be reformulated as by Lemma 3.2:

Hence,

Thus, we obtain the following inequality from Lemma 3.4:

Suppose that (3.9) holds for \(k-m_{k}\) and \(r-1\). Then, from Algorithm 2.4 and Lemma 3.6, we have

From Lemma 3.4, the following inequality holds true:

Thus, (3.10) holds for k and r. □

4 Numerical experiments

This section provides the comparison of the new algorithm with OLRMA, MOR1MP, and MEOR1MP algorithms through numerical experiments. And all of the experiments are performed on a double-precision PC with MATLAB (R2016) and a central processor of 2.40 GHz [Intel(R) Core(TM)i745 CPU], 8GB Microsoft Windows 7 operating system.

In the experiments, we suppose that \(p=q/(2n-1)\) is an observation ratio, where q denotes the number of the observed entries and set \(p=0.1, 0.3, 0.5\), respectively. The true matrix is denoted by D, Ŷ stands for the output matrices. Here, we report the running time in seconds (denoted by CPU (s)), the iteration number (denoted by IT), and the error of the reconstruction matrix is \(\|\hat{Y}-D\|_{F}/\| D\|_{F}\). We generate the Toeplitz matrices or general matrices of rank r by sampling uniformly at random, which is the same as the method in [12], and hence the true matrix D is indeed of low-rank.

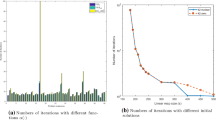

Brief comparison results of four algorithms are provided in Table 1. The changes of the CPU time of four algorithms with respect to the different matrix size are shown in Fig. 1, and Fig. 2 shows the convergence behavior of the relative error in MOLRMA runs. Table 1 describes the comparison between the OLRMA, MOLRMA, MOR1MP, and MEOR1MP algorithms in iteration number, CPU time, and error when the rank of the completion matrix is not known and \(\| \mathcal{P}_{\varOmega}(D)-\mathcal{P}_{\varOmega}(X_{k})\|_{F}/\|\mathcal {P}_{\varOmega}(D)\|_{F}\leq\varepsilon=10^{-4}\).

From Table 1 and Figs. 1–2, we can see that the MOLRMA algorithm performs better than the OLRMA algorithm in computing time, iteration number, and error. Compared with the MEOR1MP algorithm, the new algorithm is better than the MEOR1MP algorithm in error, computing time, and iteration number when p=0.3 and 0.5.

5 Conclusion

In this paper, we have presented a new algorithm for solving the TMC problem based on the mean projection matrix operator. The approximation matrices are of the Toeplitz structure so that the fast SVD is used in the whole iterative process. Meanwhile, the MOLRMA algorithm has a much better performance in precision than the OLRMA, MOR1MP, and MEOR1MP algorithms, and it is also better in computing time and iteration number when the sampling ratio is large. It is exciting that the iteration number of the new algorithm is less than the rank of the objective matrix. In addition, the iterative error of the MOLRMA algorithm is inversely related to the sampling ratio, which has little relation to the rank of the completion matrix.

References

Amit, Y., Fink, M., Srebro, N., Ullman, S.: Uncovering shared structures in multiclass classification. In: Processings of the 24th International Conference on Machine Learning, pp. 17–24. ACM, New York (2007)

Argyriou, A., Evgeniou, T., Pontil, M.: Multi-task feature learning. Adv. Neural Inf. Process. Syst. 19, 41–48 (2007)

Bajwa, W.U., Haupt, J.D., Raz, G.M., Wright, S.J., Nowak, R.D.: Toeplitz-structures compressed sensing matrices. In: IEEE/SP 14th Workshop on Statistical Signal Processing, SSP’07, pp. 294–298 (2007)

Bertalmio, M., Sapiro, G., Caselles, V., Ballester, C.: Image inpainting. Comput. Graph. 34, 417–424 (2000)

Boumal, N., Absil, P.A.: RTRMC: a Riemannian trust-region method for low-rank matrix completion. Adv. Neural Inf. Process. Syst. 24, 406–414 (2011)

Cai, J.F., Liu, S., Xu, W.: A fast algorithm for reconstruction of spectrally sparse signals in super-resolution. In: Wavelets and Sparsity XVI. Proceedings of SPIE, vol. 9597. SPIE, Bellingham (2015)

Cai, J.F., Qu, X., Xu, W., Ye, G.B.: Robust recovery of complex exponential signals from random Gaussian projections via low rank Hankel matrix reconstruction. Appl. Comput. Harmon. Anal. 41(2), 470–490 (2016)

Cai, J.F., Wang, T., Wei, K.: Spectral compressed sensing via projected gradient descent. SIAM J. Optim. 28(3), 2625–2653 (2018)

Cai, J.F., Wang, T., Wei, K.: Fast and provable algorithms for spectrally sparse signal reconstruction via low-rank Hankel matrix completion. Appl. Comput. Harmon. Anal. 46(1), 94–121 (2019)

Chen, Y., Chi, Y.: Robust spectral compressed sensing via structured matrix completion. IEEE Trans. Inf. Theory 60(10), 6576–6601 (2014)

Fazel, M., Pong, T.K., Sun, D., Tseng, P.: Hankel matrix rank minimization with applications to system identification and realization. SIAM J. Matrix Anal. Appl. 34, 946–977 (2013)

Fu, Y.R., Wang, C.L.: A modified orthogonal rank-one matrix pursuit for Toeplitz matrix completion. Far East J. Appl. Math. 95, 411–428 (2016)

Golub, G.H., VanLoan, C.F.: Matrix Computations, 4th edn. Johns Hopkins University Press, Baltimore (2013)

Gutiérrez-Gutiérrez, J., Crespo, P.M., Böttcher, A.: Functions of banded Hermitian block Toeplitz matrices in signal processing. Linear Algebra Appl. 422, 788–807 (2007)

Jain, P., Netrapalli, P., Sanghavi, S.: Low-rank matrix completion using alternating minimization. In: Proceedings of the Forty-Fifth Annual ACM Symposium on Theory of Computing, pp. 665–674 (2013)

Keshavan, R.H., Montanari, A., Oh, S.: Matrix completion from a few entries. Technical report, Stanford University (2009)

Liu, Z., Vandenberghe, L.: Interior-point method for nuclear norm approximation with application to system identification. SIAM J. Matrix Anal. Appl. 31, 1235–1256 (2009)

Lu, Z., Zhang, Y., Li, X.: Penalty decomposition methods for rank minimization. Optim. Methods Softw. 30(3), 531–558 (2015)

Luk, F.T., Qiao, S.Z.: A fast singular value algorithm for Hankel matrices. In: Olshevsky, V. (ed.) Fast Algorithms for Structured Matrices: Theory and Applications. Contemporary Mathematics, vol. 323. Am. Math. Soc., Providence (2003)

Mesbahi, M., Papavassilopoulos, G.P.: On the rank minimization problem over a positive semidefinite linear matrix inequality. IEEE Trans. Autom. Control 42, 239–243 (1997)

Mishra, B., Apuroop, K.A., Sepulchre, R.: A Riemannian geometry for low-rank matrix completion (2012). arXiv:1211.1550

Pati, Y.C., Rezaiifar, R., Rezaiifar, Y.C.P.R., Krishnaprasad, P.S.: Orthogonal matching pursuit: recursive function approximation with applications to wavelet decomposition. In: Proceedings of the 27th Annual Asilomar Conference on Signals, Systems, and Computers, pp. 40–44 (1993)

Sebert, F., Zou, Y.M., Ying, L.: Toeplitz block matrices in compressed sensing and their applications in imaging. In: International Conference on Information Technology and Applications in Biomedicine, pp. 47–50 (2008). https://doi.org/10.1109/ITAB.2008.4570587

Shaw, A.K., Pokala, S., Kumaresan, R.: Toeplitz and Hankel approximation using structured approach. In: Proceedings of the 1998 IEEE International Conference on Acoustics, Speech and Signal Processing, pp. 12–15 (1998)

Suffridge, T.J., Hayden, T.L.: Approximation by a Hermitian positive semidefinite Toeplitz matrix. SIAM J. Matrix Anal. Appl. 14(3), 721–734 (1993)

Tanner, J., Wei, K.: Low rank matrix completion by alternating steepest descent methods. Appl. Comput. Harmon. Anal. 40, 417–429 (2016)

Vandereycken, B.: Low rank matrix completion by Riemannian optimization. SIAM J. Optim. 23, 1214–1236 (2013)

VanLoan, C.F.: Computational Frameworks for the Fast Fourier Transform. SIAM, Philadelphia (1992)

Wang, C.L., Li, C.: A mean value algorithm for Toeplitz matrix completion. Appl. Math. Lett. 41, 35–40 (2015)

Wang, C.L., Li, C.: A structure-preserving algorithm for Toeplitz matrix completion. Sci. Sin., Math. 46, 1191–1206 (2016) (in Chinese)

Wang, C.L., Li, C., Wang, J.: A modified augmented Lagrange multiplier algorithm for Toeplitz matrix completion. Adv. Comput. Math. 42, 1209–1224 (2016)

Wang, C.L., Li, C., Wang, J.: Optimal low-rank matrix approximation algorithms for matrix completion. Appl. Math. Comput. (under revision)

Wang, Z., Lai, M.J., Lu, Z.S., Fan, W., Davulcu, H., Ye, J.-P.: Orthogonal rank-one matrix pursuit for low rank matrix completion. SIAM J. Sci. Comput. 37, A488–A514 (2015)

Wen, R.P., Li, S.Z.: An ℓ-step modified augmented Lagrange multiplier algorithm for completing a Toeplitz matrix. Math. Appl. 32(4), 887–899 (2019)

Wen, R.P., Li, S.Z., Duan, Y.H.: Toeplitz matrix completion via smoothing augmented Lagrange multiplier algorithm. Appl. Math. Comput. 355, 299–310 (2019)

Wen, R.P., Li, S.Z., Zhou, F.: A semi-smoothing augmented Lagrange multiplier algorithm for low-rank Toeplitz matrix completion. J. Inequal. Appl. 2019, Article ID 83 (2019)

Wen, R.P., Liu, L.X.: The two-stage iteration algorithms based on the shortest distance for low-rank completion. Appl. Math. Comput. 314, 133–141 (2017)

Wen, Z.W., Yin, W.T., Zhang, Y.: Solving a low-rank factorization model for matrix completion by a non-linear successive over-relaxation algorithm. Math. Program. Comput. 4, 333–361 (2012)

Xu, W., Qiao, S.Z.: A fast SVD algorithm for square Hankel matrices. Linear Algebra Appl. 428, 550–563 (2008)

Ying, J., Lu, H., Wei, Q., Cai, J.F., Guo, D., Wu, J., Chen, Z., Qu, X.: Hankel matrix nuclear norm regularized tensor completion for n-dimensional exponential signal. IEEE Trans. Signal Process. 65, 3702–3717 (2017)

Acknowledgements

The authors are very much indebted to the editor and anonymous referees for their helpful comments and suggestions. And the authors are so thankful for the support from the NSF of Shanxi Province (201901D211423), the CSREP in Shanxi (No. 2019KJ035), and the SYYJSKC-1803.

Availability of data and materials

Not applicable.

Funding

It is not applicable.

Author information

Authors and Affiliations

Contributions

All authors contributed equally to the writing of this paper. All authors read and approved the final manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Wen, R., Fu, Y. Toeplitz matrix completion via a low-rank approximation algorithm. J Inequal Appl 2020, 71 (2020). https://doi.org/10.1186/s13660-020-02340-w

Received:

Accepted:

Published:

DOI: https://doi.org/10.1186/s13660-020-02340-w