Abstract

Background

The Measurement Tool to Assess systematic Reviews (AMSTAR) 2 is a critical appraisal tool for systematic reviews (SRs) and meta-analyses (MAs) of interventions. We aimed to perform the first AMSTAR 2-based quality assessment of heart failure-related SRs and MAs.

Methods

Eleven high-impact journals were searched for articles published from 2009 to 2019. The included studies were assessed on 16 domains, seven of which are deemed critical. On the basis of performance in these differently weighted domains, each study received an overall quality rating of “high,” “moderate,” “low,” or “critically low.”

Results

Eighty-one heart failure-related SRs with MAs were included. Overall, 79 studies were rated as “critically low quality” and two as “low quality.” These ratings were driven by low compliance with the following critical domains: a priori protocol (compliance rate, 5%), complete list of exclusions with justification (5%), risk of bias assessment (69%), meta-analysis methodology (78%), and investigation of publication bias (60%).

Conclusions

The low ratings for these presumably high-quality heart failure-related SRs and MAs call into question the discrimination capacity of AMSTAR 2. Beyond identifying specific areas of insufficiency, these findings indicate a need to justify or modify AMSTAR 2’s rating rules.


Introduction

Since the 1970s, with the emergence of the science of research synthesis, systematic reviews (SRs) and meta-analyses (MAs) have gained prominence as sources of evidence-based information for decision making. These publications are now widely used in clinical and policy decisions, making it imperative to verify their quality. A high-quality study follows standard protocols and reports relevant details at every step so that readers can understand its results. Various guidelines have been proposed for SRs and MAs, including the Cochrane Handbook, Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA), Quality of Reporting of Meta-analyses (QUOROM), and Meta-analyses Of Observational Studies in Epidemiology (MOOSE) [1,2,3,4]. Appraisal tools such as the Measurement Tool to Assess systematic Reviews (AMSTAR); AMSTAR 2; revised AMSTAR (R-AMSTAR); Scottish Intercollegiate Guidelines Network (SIGN) checklist; Grading of Recommendations, Assessment, Development and Evaluations (GRADE) framework; and Risk of Bias Assessment Tool for Systematic Reviews (ROBIS) have also been developed to evaluate these studies [5,6,7,8,9,10].

AMSTAR was developed in 2007 on the basis of the Cochrane Handbook for Systematic Reviews of Interventions [1, 5]. It focuses primarily on sound methodology to ensure reliable results and serves as a brief checklist of items needed for high-quality reviews. After a decade of extensive use, an updated version, AMSTAR 2, based on users’ experience and feedback, was published in 2017 [6]. AMSTAR 2 is a domain-based rating system with seven critical domains and nine non-critical domains. Instead of generating a total score, AMSTAR 2 evaluates overall quality based on performance in critical and non-critical domains, which carry different weights in the rating rules. Whereas AMSTAR appraises only SRs of randomized controlled trials (RCTs), AMSTAR 2 also appraises SRs of non-randomized studies of interventions (NRSIs), with specific items covering risk of bias (RoB) assessment and meta-analysis methodology for NRSIs. AMSTAR 2 also tightens the appraisal rules for each domain by retaining only “yes” or “no” responses and removing the “not applicable” and “cannot answer” responses. Since 2017, approximately 100 publications have used AMSTAR 2 to evaluate SRs in different areas [11,12,13,14,15,16,17]. However, reports using this tool in the field of heart failure (HF) have been limited. Therefore, we identified HF-related SRs and MAs published in high-impact journals over the last 10 years and assessed their quality using AMSTAR 2.

Methods

Data source and study selection

A systematic search was conducted in August 2019 by two independent reviewers (Lin Li and Abdul Wahab Arif) to identify all HF-related SRs and MAs published between January 2009 and July 2019 in 11 major medical and cardiovascular journals: Annals of Internal Medicine (Annals IM), Circulation, Circulation: Heart Failure, European Heart Journal (EHJ), European Journal of Heart Failure (EJHF), Journal of the American College of Cardiology (JACC), JACC: Heart Failure (JACC HF), Journal of the American Medical Association (JAMA), JAMA Internal Medicine (JAMA-IM), Lancet, and the New England Journal of Medicine (NEJM). These journals were selected for their high impact factors and their focus on publishing high-quality SRs and MAs related to HF.

We performed manual searches for the terms “meta” and “review” in the titles and abstracts on the journals’ websites and excluded papers that did not include MAs and those unrelated to HF. Because AMSTAR 2 was designed for the SRs and MAs of healthcare interventions, we further excluded papers focused on epidemiology, diagnosis, prognosis, and etiology. Moreover, AMSTAR 2 was not intended to deal with MAs of individual patient data or network MAs, so such papers were also excluded.

Data extraction and quality assessment by AMSTAR 2

Two authors (Lin Li and Abdul Wahab Arif) independently reviewed the full texts and Online supplemental material of each included study. We extracted information, including PubMed ID (PMID), publication year, journal, authors, type of study (RCT- or NRSI-based), Cochrane or non-Cochrane SR, and number of citations.

Two authors (Lin Li and Iriagbonse Asemota) independently used the AMSTAR 2 online checklist to evaluate each included study [18]. Disagreements were resolved by a third reviewer, Lifeng Lin, while referring to AMSTAR 2’s original paper, supplements, and the relevant chapters in the Cochrane Handbook [1, 6]. The seven critical domains and nine non-critical domains are listed in Table 1. We recorded the answers to all 16 domains. All answers were categorized as “yes” or “no” in AMSTAR 2. “Partial yes” was allowed in some domains to identify partial adherence to standard protocols. If the answer was “no” to a specific domain, this domain was labeled as “weak.”

Subsequently, we rated the studies as high-, moderate-, low-, or critically low-quality. The rules of the overall rating based on these 16 domains are listed below [6].

High

No or one non-critical weakness: The systematic review provides an accurate and comprehensive summary of the results of the available studies that address the question of interest.

Moderate

More than one non-critical weakness*: The systematic review has more than one weakness but no critical flaws. This may provide an accurate summary of the results of the available studies included in the review.

Low

One critical flaw with or without non-critical weaknesses: The review has a critical flaw and may not provide an accurate and comprehensive summary of the available studies that address the question of interest.

Critically low

More than one critical flaw with or without non-critical weaknesses: The review has more than one critical flaw and should not be relied on to provide an accurate and comprehensive summary of the available studies.

*Multiple non-critical weaknesses may diminish confidence in the review, and it may be appropriate to move the overall appraisal down from moderate to low confidence.
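The rating rules above are mechanical enough to sketch in code. The Python below is a minimal illustration, not the official AMSTAR 2 checklist logic: it assumes answers are recorded per item number as "yes", "partial yes", or "no", treats only "no" as a weakness (as described in the Methods), and takes the critical set to be items 2, 4, 7, 9, 11, 13, and 15, the seven critical items listed in the AMSTAR 2 paper [6]. The function name `overall_rating` is hypothetical, and the discretionary downgrade in the footnote is not modeled. Because the critical set is a parameter, the sketch can also show how reclassifying items such as Q2 and Q7 would change a rating.

```python
from typing import Dict, FrozenSet

# Critical items as listed in the AMSTAR 2 paper: protocol (2), literature
# search (4), justified exclusions (7), RoB assessment (9), meta-analysis
# methods (11), RoB when interpreting results (13), publication bias (15).
AMSTAR2_CRITICAL: FrozenSet[int] = frozenset({2, 4, 7, 9, 11, 13, 15})


def overall_rating(answers: Dict[int, str],
                   critical: FrozenSet[int] = AMSTAR2_CRITICAL) -> str:
    """Apply the overall rating rules to per-item answers (items 1-16).

    Only a "no" counts as a weakness; "partial yes" counts as adherent.
    The optional downgrade for many non-critical weaknesses is not modeled.
    """
    weak = {item for item, answer in answers.items() if answer.strip().lower() == "no"}
    critical_flaws = len(weak & critical)
    noncritical_weaknesses = len(weak - critical)

    if critical_flaws > 1:
        return "critically low"
    if critical_flaws == 1:
        return "low"
    if noncritical_weaknesses > 1:
        return "moderate"
    return "high"  # no or one non-critical weakness


# Example: every item "yes" except the protocol (Q2) and exclusion-list (Q7) items.
answers = {item: "yes" for item in range(1, 17)}
answers[2] = answers[7] = "no"
print(overall_rating(answers))                                     # critically low
print(overall_rating(answers, critical=AMSTAR2_CRITICAL - {2, 7}))  # moderate
```

With every other item answered "yes", failing Q2 and Q7 alone yields "critically low" under the standard rules, whereas treating those two items as non-critical would yield "moderate".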

We explored the inter-rater reliability (IRR) by using Cohen’s kappa coefficient for each domain. A value < 0 indicates no agreement; 0–0.20, slight agreement; 0.21–0.40, fair agreement; 0.41–0.60, moderate agreement; 0.61–0.80, substantial agreement; and 0.81–1, almost perfect agreement [19].
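For readers unfamiliar with the statistic, the sketch below computes unweighted Cohen’s kappa for two raters and maps it onto the agreement bands quoted above. It is an illustrative implementation, not the software used in this study (which the text does not name); the helper names `cohens_kappa` and `interpret_kappa` are hypothetical, and the example ratings are invented.

```python
from collections import Counter
from typing import Sequence


def cohens_kappa(rater_a: Sequence[str], rater_b: Sequence[str]) -> float:
    """Unweighted Cohen's kappa for two raters labeling the same items."""
    if len(rater_a) != len(rater_b) or len(rater_a) == 0:
        raise ValueError("both raters must label the same non-empty set of items")
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    count_a, count_b = Counter(rater_a), Counter(rater_b)
    # Chance agreement: sum over categories of the product of marginal proportions.
    expected = sum(count_a[c] * count_b[c] for c in count_a) / (n * n)
    if expected == 1.0:
        # Both raters used one identical category; kappa is undefined,
        # so 1.0 is returned here by convention.
        return 1.0
    return (observed - expected) / (1 - expected)


def interpret_kappa(kappa: float) -> str:
    """Map kappa onto the agreement bands quoted in the Methods."""
    if kappa < 0:
        return "no agreement"
    for upper, label in ((0.20, "slight"), (0.40, "fair"),
                         (0.60, "moderate"), (0.80, "substantial")):
        if kappa <= upper:
            return label
    return "almost perfect"


# Invented answers from two reviewers for one domain across six reviews.
reviewer_1 = ["yes", "yes", "no", "no", "yes", "no"]
reviewer_2 = ["yes", "no", "no", "no", "yes", "no"]
k = cohens_kappa(reviewer_1, reviewer_2)
print(round(k, 3), interpret_kappa(k))  # 0.667 substantial
```

Here the reviewers agree on 5 of 6 items (observed agreement 0.83), but half of that agreement is expected by chance, so kappa is 0.67 rather than 0.83.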

Results

Literature search and study characteristics

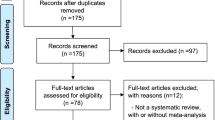

We included 81 SRs and MAs on HF-related interventions from eight journals (Fig. 1). No articles from NEJM, Lancet, or JAMA met the inclusion and exclusion criteria described above. Of the 81 articles, 56 were based on RCTs, 13 on NRSIs, and 12 on both RCTs and NRSIs. Nine were Cochrane systematic reviews and 72 were non-Cochrane reviews. As of July 2019, the average number of citations was 104 (Online Supplement 1).

Results for the 16 domains

The results for each domain are presented as percentages of “yes or partial yes” and “no” answers, as detailed in Table 1.

For Q2, the answer was “yes” for only 4 (5%) studies, all of which were either registered in PROSPERO or had published pre-established protocols. Of the other 77 studies, 43 did not report following any guideline or protocol, and the remaining 34 followed guidelines such as those of the Centre for Reviews and Dissemination (CRD), PRISMA, the Cochrane Handbook, QUOROM, and MOOSE [1,2,3,4, 20].

The answer for Q9 was “no” in 25 (31%) studies because the RoB assessment was not satisfactory. For RCT-based studies, most “no” answers reflected the absence of a reported RoB assessment tool; the most commonly used appropriate tools were the Cochrane instrument and the Jadad scale [21, 22]. Similarly, for NRSI-based studies, most “no” answers reflected the absence of a reported tool; the most commonly used tools were the Risk Of Bias In Non-randomized Studies of Interventions (ROBINS-I), Strengthening the Reporting of Observational Studies in Epidemiology (STROBE), Newcastle-Ottawa Scale (NOS), and United States Preventive Services Task Force (USPSTF) checklists [23,24,25,26]. For studies based on both RCTs and NRSIs, the insufficiency arose because the tool used covered RCTs but not NRSIs, or vice versa. The appropriate tools reported in these studies that cover both RCTs and NRSIs were the Cochrane instrument and the Downs and Black checklist [1, 27]. The answer for Q12 was “no” in 59 (73%) studies. This is another RoB-related domain, which examines how results vary with the inclusion or exclusion of studies at high RoB [6]. Among the 22 studies with “yes” answers to Q12, 13 included only studies at low RoB, six used sensitivity analyses, two used subgroup analyses, and one used meta-regression.
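Q12 asks whether a review examined how its results change with and without high-RoB studies. One common way to do this is a sensitivity analysis that re-pools the estimate after excluding high-RoB studies. The sketch below assumes fixed-effect inverse-variance pooling of log ratio estimates purely for brevity (a random-effects model is often preferable); the `Study` type, the helper names, and the three studies are hypothetical.

```python
import math
from typing import List, NamedTuple, Tuple


class Study(NamedTuple):
    log_effect: float  # e.g., log risk ratio (hypothetical field)
    se: float          # standard error of log_effect
    high_rob: bool     # True if judged at high risk of bias


def pool_fixed(studies: List[Study]) -> float:
    """Fixed-effect inverse-variance pooled log effect."""
    weights = [1 / s.se ** 2 for s in studies]
    return sum(w * s.log_effect for w, s in zip(weights, studies)) / sum(weights)


def rob_sensitivity(studies: List[Study]) -> Tuple[float, float]:
    """Pooled ratio using all studies vs. low-RoB studies only."""
    low_rob = [s for s in studies if not s.high_rob]
    if not low_rob:
        raise ValueError("no low-RoB studies left to pool")
    return math.exp(pool_fixed(studies)), math.exp(pool_fixed(low_rob))


# Hypothetical data: the high-RoB study reports a much larger benefit.
studies = [
    Study(log_effect=-0.4, se=0.2, high_rob=False),
    Study(log_effect=-0.2, se=0.2, high_rob=False),
    Study(log_effect=-1.0, se=0.2, high_rob=True),
]
all_ratio, low_ratio = rob_sensitivity(studies)
print(round(all_ratio, 3), round(low_ratio, 3))  # 0.587 0.741
```

In this invented example the pooled ratio moves from about 0.59 to about 0.74 once the high-RoB study is excluded, the kind of shift a review would be expected to report and discuss under Q12.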

The answer for Q11 was “no” in 18 (22%) studies. One of the 56 RCT-based MAs failed Q11 because it did not investigate heterogeneity. Seven of the 13 NRSI-based MAs failed Q11 because they pooled raw data instead of reporting confounder-adjusted estimates. Among the 12 studies based on both RCTs and NRSIs, 10 failed Q11 because they did not clarify the source of the NRSI data or did not report summary estimates separately for RCTs and NRSIs. The answer for Q14 was “no” in 12 (15%) studies because heterogeneity was not satisfactorily explained. Of the remaining 69 studies, 19 reported no significant heterogeneity, 17 used meta-regression, nine used subgroup analyses, eight used sensitivity analyses, and 16 explained the heterogeneity narratively.
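Two of the points above, pooling RCTs and NRSIs separately and quantifying heterogeneity, can be illustrated together. The sketch assumes each input is already a confounder-adjusted log ratio estimate with its standard error, pools each design separately with fixed-effect inverse-variance weights (a simplification), and reports Cochran’s Q and the I² statistic. The helper names and data are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# (design label, confounder-adjusted log effect, standard error)
Estimate = Tuple[str, float, float]


def fixed_effect_with_i2(effects: List[float], ses: List[float]) -> Tuple[float, float, float]:
    """Return (pooled log effect, Cochran's Q, I^2 in percent)."""
    weights = [1 / se ** 2 for se in ses]
    pooled = sum(w * y for w, y in zip(weights, effects)) / sum(weights)
    q = sum(w * (y - pooled) ** 2 for w, y in zip(weights, effects))
    df = len(effects) - 1
    # I^2 = (Q - df) / Q, truncated at zero; defined as 0 when Q is 0.
    i2 = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0
    return pooled, q, i2


def pool_by_design(estimates: List[Estimate]) -> Dict[str, Tuple[float, float, float]]:
    """Pool each study design separately instead of mixing RCTs and NRSIs."""
    groups: Dict[str, Tuple[List[float], List[float]]] = defaultdict(lambda: ([], []))
    for design, log_effect, se in estimates:
        groups[design][0].append(log_effect)
        groups[design][1].append(se)
    return {design: fixed_effect_with_i2(ys, ses) for design, (ys, ses) in groups.items()}


# Hypothetical adjusted estimates.
results = pool_by_design([
    ("RCT", -0.2, 0.1), ("RCT", -0.2, 0.1),
    ("NRSI", 0.0, 0.1), ("NRSI", -0.4, 0.1),
])
for design, (pooled, q, i2) in results.items():
    print(design, round(pooled, 2), round(q, 2), round(i2, 1))
# RCT -0.2 0.0 0.0
# NRSI -0.2 8.0 87.5
```

Both designs give the same pooled estimate here, yet the NRSI subgroup shows substantial heterogeneity (I² of 87.5%) that a single combined estimate would obscure and that Q14 would expect the review to explain.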

Cohen’s kappa varied among the 16 domains, but most values fell in the range of acceptable agreement. Fair, moderate, substantial, and almost perfect agreement were recorded for three (Q1, Q12, and Q13), three (Q8, Q9, and Q14), four (Q3, Q5, Q10, and Q11), and six (Q2, Q4, Q6, Q7, Q15, and Q16) domains, respectively (Online Supplement 2).

Overall ratings

Although AMSTAR 2 stresses that it is not intended to generate an overall score, we completed the assessment process and, following the rules above, rated 2 of the 81 studies as low quality and 79 as critically low quality.

Discussion

Based on our literature review covering 2009 to 2019, this is, to our knowledge, the first study to apply AMSTAR 2 to highly cited HF-related SRs and MAs. AMSTAR 2’s 16 domains evaluate each step in the conduct of SRs and MAs. AMSTAR 2 has previously been used in various fields, including psychiatry, surgery, pediatrics, endocrinology, rheumatology, and cardiovascular disease, and many publications have reported substantial proportions of SRs of low to critically low quality [28,29,30,31,32,33]. Prior literature has called this a “floor effect” arising from the tool’s lack of discrimination capacity, raising questions about its high standard and practical value [34]. In this study, we observed similar findings for high-impact SRs and MAs related to HF. This raises a dilemma: either the AMSTAR 2 standard is unreasonable, or these high-impact SRs and MAs are of low quality. This question can be broken down further into whether AMSTAR 2’s 16 domains and the Cochrane guidelines underpinning them are impractical, whether AMSTAR 2’s overall rating rules are unreasonable, whether these SRs and MAs had actual defects, and whether there was under-reporting that should be addressed by formal reporting requirements to close the information gap and help readers understand these studies.

Guidelines and evaluation systems for SRs and MAs are a rapidly evolving field, with new or updated guidance released every few years [1,2,3,4,5,6,7, 10, 20, 35,36,37,38,39]. AMSTAR 2 was published in 2017, when most SRs were following the Cochrane, QUOROM, PRISMA, and MOOSE guidelines. As of 2020, fewer than 200 publications indexed in PubMed had used AMSTAR 2. In our review, none of the 81 studies used AMSTAR 2 as a guide. Therefore, some non-compliance can be attributed to the fact that these studies were not designed or reported according to AMSTAR 2 from the outset. Additionally, the strict “yes or no” rule in AMSTAR 2 precludes responses such as “not applicable,” “cannot answer,” or “not reported,” forcing evaluators to choose “no” for studies that may simply have under-reported and to categorize the corresponding domains as “weak.” This probably explains why quality assessments by AMSTAR 2 generally appear worse than those by AMSTAR in previous reviews [15].

The 95% “no” rate for the critical domains Q2 and Q7 played a major role in the overall low ratings of these 81 studies, since failing even one critical domain results in an overall rating of low quality. Both domains have been included since the original AMSTAR in 2007. The Cochrane Handbook states that “All Cochrane reviews must have a written protocol, specifying in advance the scope and methods to be used by the review, to assist in planning and reduce the risk of bias in the review process.” [1] However, none of the nine Cochrane SRs included here reported a pre-established protocol. The PRISMA checklist states, “If registered, provide the name of the registry (such as PROSPERO) and registration number,” suggesting that registration is not compulsory. Unsurprisingly, none of the 19 studies using the PRISMA checklist reported pre-established protocols, and the same was true for studies following MOOSE and QUOROM. Pre-registration or publication of a pre-established protocol is evidently not yet common practice for SRs, although more studies have adopted this step in recent years [40,41,42,43]. Q7 is based on the same content as Chapter 4 of the Cochrane Handbook, with the further instruction that “the list of excluded studies should be as brief as possible. It should not list all of the reports that were identified by an extensive search.” [1] However, a complete list of exclusions is not mandated by the PROSPERO guidelines [44], PRISMA, or QUOROM. Although exclusion is an essential step in study selection for SRs and MAs, the various guidelines have not reached a consensus on reporting a complete list of exclusions, and such lists can be difficult to publish [45]. Thus, the designation of Q2 and Q7 as critical domains, and the decision to assign an overall poor rating to studies failing them, need to be justified.

Almost half of SRs are now based on NRSIs, and an increasing number of NRSIs draw on large databases that provide a better picture of real-world practice. Although these studies can yield more precise estimates, they are also more easily confounded [46]. Thus, one of the major advances of AMSTAR 2 over AMSTAR is the inclusion of separate RoB assessment and data-pooling criteria for NRSIs. The 31% rate of “no” answers for Q9 resulted from unreported or inappropriately used RoB tools. Choosing the right RoB tool for RCTs and NRSIs remains a major challenge for SR authors because of the large number of tools available [47], and the lack of homogeneity among tools also makes comparisons difficult. The RoB assessment tool recommended by AMSTAR 2 for RCTs is the Cochrane Collaboration tool, and that for NRSIs is ROBINS-I [1, 23]. In our study, several different tools were accepted for the Q9 assessment. However, the same RCT or NRSI rated as having a low RoB by one tool may be rated as having an elevated RoB by another, more comprehensive tool. This discrepancy affected the RoB-related domains Q12 and Q13 and could ultimately lead to different overall quality ratings, raising questions about AMSTAR 2’s reliability and consistency. The non-critical domain Q12 is also addressed in the Cochrane Handbook and the PROSPERO registration guidance; however, other checklists do not agree on whether the influence of RoB should be analyzed [1,2,3,4, 44]. Q11 further distinguishes between RCTs and NRSIs in terms of data-pooling methodology. The rationale for pooling fully adjusted estimates from NRSIs is that confounder-adjusted data may yield very different results from raw data. Deficiencies in heterogeneity investigations, another finding for Q11, are detailed in a recent review of HF-related MAs [48]. Nevertheless, the multiple domains assessing RoB and NRSIs reflect their importance in SRs and MAs. Future researchers should better understand the differences in RoB between RCTs and NRSIs, choose RoB tools wisely, address the influence of RoB objectively, and avoid pooling raw data from NRSIs in meta-analyses.

The final underperforming critical domain was Q15, which covers the investigation of publication bias. Funnel plots are the best-known and most commonly used method to assess publication bias or small-study effects, but at least 10 studies are needed to reliably detect funnel plot asymmetry [1, 49]. Most of the SRs that received a “no” for Q15 included fewer than 10 studies and reported no graphical or statistical test for publication bias. How AMSTAR 2 should rate Q15 when too few studies are available for such tests may need to be addressed in future work.
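Egger’s regression test is one widely used statistical counterpart to visual funnel-plot inspection: the standardized effect (effect divided by its standard error) is regressed on precision (the reciprocal of the standard error), and an intercept far from zero suggests asymmetry. The sketch below is a minimal pure-Python version that declines to run with fewer than 10 studies, mirroring the threshold cited above. It returns the intercept and its t statistic (to be compared against a t distribution with n - 2 degrees of freedom, for example via `scipy.stats.t.sf`) but omits the p-value to avoid external dependencies. The function name and the constructed data are hypothetical.

```python
import math
from typing import List, Optional, Tuple

MIN_STUDIES = 10  # threshold cited in the text for funnel-plot asymmetry tests


def egger_test(effects: List[float], ses: List[float]) -> Optional[Tuple[float, float]]:
    """Egger's regression test for funnel-plot asymmetry.

    Regresses the standardized effect (effect / se) on precision (1 / se) by
    ordinary least squares and returns (intercept, t statistic of intercept).
    Returns None when fewer than MIN_STUDIES studies are available.
    """
    n = len(effects)
    if n != len(ses):
        raise ValueError("effects and ses must have the same length")
    if n < MIN_STUDIES:
        return None
    x = [1 / se for se in ses]                   # precision
    y = [e / se for e, se in zip(effects, ses)]  # standardized effect
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("all studies have the same precision; slope is undefined")
    slope = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y)) / sxx
    intercept = mean_y - slope * mean_x
    residual_ss = sum((yi - intercept - slope * xi) ** 2 for xi, yi in zip(x, y))
    se_intercept = math.sqrt(residual_ss / (n - 2) * (1 / n + mean_x ** 2 / sxx))
    if se_intercept == 0:
        raise ValueError("perfect fit; t statistic is undefined")
    return intercept, intercept / se_intercept


# Constructed (not real) data: intercept 2, slope 0.5, plus zero-mean noise
# that is uncorrelated with precision, so OLS recovers the intercept exactly.
precision = list(range(1, 11))
noise = [1, -1, -1, 1, 0, 0, 0, 0, 0, 0]
ses = [1 / p for p in precision]
effects = [(2 + 0.5 * p + e) / p for p, e in zip(precision, noise)]

print(egger_test(effects[:5], ses[:5]))  # None: fewer than 10 studies
intercept, t_stat = egger_test(effects, ses)
print(round(intercept, 3), round(t_stat, 2))  # 2.0 4.14
```

Returning `None` below the threshold, rather than a misleading statistic, reflects the point above: with few studies the test has little power, so a review cannot meaningfully satisfy Q15 through it.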

In summary, AMSTAR 2 is an appraisal tool based on the Cochrane Handbook that focuses on the conduct of SRs and MAs of healthcare interventions. Our evaluation of highly cited HF-related SRs and MAs using AMSTAR 2 identified areas of insufficiency and highlighted scope for improvement in future studies, including an a priori protocol or pre-registration, a full list of exclusions with justifications, appropriate RoB assessments, and caution when combining NRSI data. These findings reflect the core values of AMSTAR 2 and the Cochrane guidelines in avoiding bias. However, compared with the most commonly used guidelines mentioned above, AMSTAR 2 is relatively new and advanced. Thus, consensus among the various guidance documents and assessment tools is essential before it can be considered the standard. This study does not conclude that older SRs should be labeled “low quality” by a newer tool. Moreover, no tool is perfect: AMSTAR 2 does not justify designating certain domains as critical and others as non-critical, nor does it explain the rationale behind the rules used to categorize studies as “high” versus “low” quality. Considering these aspects, applying AMSTAR 2 to these high-impact SRs yielded findings of interest that may facilitate the validation of AMSTAR 2 and provide feedback for its future development.

Limitations

We thoroughly searched all published materials related to these 81 studies, but we did not contact the review authors to clarify “cannot answer” domains or “not reported” content. Had we done so, some answers might have changed because of potential under-reporting in some domains, particularly Q7 and Q10. We also did not reclassify any non-critical domains as critical, or vice versa, for individual studies, as AMSTAR 2 allows. Moreover, we did not include MAs that summarize a known literature base or SRs without MAs; such reviews may call into question the critical status of Q4, Q7, Q11, and Q15.

References

Higgins JPT, Thomas J, Chandler J, Cumpston M, Li T, Page MJ, Welch VA, editors. Cochrane Handbook for Systematic Reviews of Interventions version 6.1 (updated September 2020). Cochrane; 2020. Available from www.training.cochrane.org/handbook.

Moher D, Liberati A, Tetzlaff J, Altman DG, PRISMA Group. Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. Ann Intern Med. 2009;151(4):264–9. https://doi.org/10.7326/0003-4819-151-4-200908180-00135.

Moher D, Cook DJ, Eastwood S, Olkin I, Rennie D, Stroup DF. Improving the quality of reports of meta-analyses of randomised controlled trials: the QUOROM statement. Quality of Reporting of Meta-analyses. Lancet. 1999;354(9193):1896–900. https://doi.org/10.1016/s0140-6736(99)04149-5.

Stroup DF, Berlin JA, Morton SC, Olkin I, Williamson GD, Rennie D, et al. Meta-analysis of observational studies in epidemiology: a proposal for reporting. Meta-analysis Of Observational Studies in Epidemiology (MOOSE) group. JAMA. 2000;283(15):2008–12. https://doi.org/10.1001/jama.283.15.2008.

Shea BJ, Grimshaw JM, Wells GA, Boers M, Andersson N, Hamel C, et al. Development of AMSTAR: a measurement tool to assess the methodological quality of systematic reviews. BMC Med Res Methodol. 2007;7:10. https://doi.org/10.1186/1471-2288-7-10.

Shea BJ, Reeves BC, Wells G, Thuku M, Hamel C, Moran J, et al. AMSTAR 2: a critical appraisal tool for systematic reviews that include randomised or non-randomised studies of healthcare interventions, or both. BMJ. 2017;358:j4008. https://doi.org/10.1136/bmj.j4008.

Kung J, Chiappelli F, Cajulis OO, Avezova R, Kossan G, Chew L, Maida CA. From systematic reviews to clinical recommendations for evidence-based health care: validation of revised assessment of multiple systematic reviews (R-AMSTAR) for grading of clinical relevance. Open Dent J. 2010;4:84–91. https://doi.org/10.2174/1874210601004020084.

Whiting P, Savović J, Higgins JPT, Caldwell DM, Reeves BC, Shea B, Davies P, Kleijnen J, Churchill R, et al. ROBIS: a new tool to assess risk of bias in systematic reviews was developed. J Clin Epidemiol. 2016;69:225–34. https://doi.org/10.1016/j.jclinepi.2015.06.005.

Guyatt GH, Oxman AD, Vist GE, Kunz R, Falck-Ytter Y, Alonso-Coello P, et al. GRADE: an emerging consensus on rating quality of evidence and strength of recommendations. BMJ. 2008;336(7650):924–6. https://doi.org/10.1136/bmj.39489.470347.AD.

Scottish Intercollegiate Guidelines Network (SIGN). Methodology Checklist 1: Systematic Reviews and Meta-analyses. Available from: https://www.sign.ac.uk/what-we-do/methodology/checklists/.

Maylynn Ding LS. Jae Hung Jung, Philipp Dahm, Low Methodological Quality of Systematic Reviews Published in the Urological Literature (2016-2018). Urology. 2020;138:5–10. https://doi.org/10.1016/j.urology.2020.01.004.

Shao SC, Kuo LT, Chien RN, Hung MJ, Lai EC. SGLT2 inhibitors in patients with type 2 diabetes with non-alcoholic fatty liver disease: an umbrella review of systematic reviews. BMJ Open Diabetes Res Care. 2020;8(2):e001956. https://doi.org/10.1136/bmjdrc-2020-001956.

Sanabria A, Kowalski LP, Nixon I, Angelos P, Shaha A, Owen RP, Suarez C, Rinaldo A, Ferlito A, International Head and Neck Scientific Group. Methodological quality of systematic reviews of intraoperative neuromonitoring in thyroidectomy: a systematic review. JAMA Otolaryngol Head Neck Surg. 2019;145(6):563–73. https://doi.org/10.1001/jamaoto.2019.0092.

Arienti C, Lazzarini SG, Pollock A, Negrini S. Rehabilitation interventions for improving balance following stroke: an overview of systematic reviews. PLoS One. 2019;14(7):e0219781. https://doi.org/10.1371/journal.pone.0219781.

Hye-Ryeon Kim C-HC, Jo E. A Methodological Quality Assessment of Meta-Analysis Studies in Dance Therapy Using AMSTAR and AMSTAR 2. Healthcare. 2020;8(4):446. https://doi.org/10.3390/healthcare8040446.

Matthias K, Rissling O, Pieper D, Morche J, Nocon M, Jacobs A, Wegewitz U, et al. The methodological quality of systematic reviews on the treatment of adult major depression needs improvement according to AMSTAR 2: a cross-sectional study. Heliyon. 2020;6(9):e04776. https://doi.org/10.1016/j.heliyon.2020.e04776.

Leclercq V, Beaudart C, Tirelli E, Bruyère O. Psychometric measurements of AMSTAR 2 in a sample of meta-analyses indexed in PsycINFO. J Clin Epidemiol. 2020;119:144–5. https://doi.org/10.1016/j.jclinepi.2019.10.005.

AMSTAR online checklist. Available from: https://amstar.ca/Amstar_Checklist.php.

McHugh ML. Interrater reliability: the kappa statistic. Biochem Med (Zagreb). 2012;22(3):276–82.

Tacconelli E. Systematic reviews: CRD’s guidance for undertaking reviews in health care. Lancet Infect Dis. 2010;10(4):226. https://doi.org/10.1016/S1473-3099(10)70065-7.

Jadad AR, Moore RA, Carroll D, Jenkinson C, Reynolds DJ, Gavaghan DJ, et al. Assessing the quality of reports of randomized clinical trials: is blinding necessary? Controlled Clin Trials. 1996;17(1):1–12. https://doi.org/10.1016/0197-2456(95)00134-4.

Sterne JAC, Savović J, Page MJ, Elbers RG, Blencowe NS, Boutron I, et al. RoB 2: a revised tool for assessing risk of bias in randomised trials. BMJ. 2019;366:l4898. https://doi.org/10.1136/bmj.l4898.

Sterne JAC, Hernán MA, Reeves BC, Savović J, Berkman ND, Viswanathan M, Henry D, et al. ROBINS-I: a tool for assessing risk of bias in non-randomised studies of interventions. BMJ. 2016;355:i4919. https://doi.org/10.1136/bmj.i4919.

Vandenbroucke JP, von Elm E, Altman DG, Gøtzsche PC, Mulrow CD, Pocock SJ, Poole C, Schlesselman JJ, Egger M, STROBE Initiative. Strengthening the Reporting of Observational Studies in Epidemiology (STROBE): explanation and elaboration. Int J Surg. 2014;12(12):1500–24. https://doi.org/10.1016/j.ijsu.2014.07.014.

Lo CK-L, Mertz D, Loeb M. Newcastle-Ottawa Scale: comparing reviewers’ to authors’ assessments. BMC Med Res Methodol. 2014;14:45. https://doi.org/10.1186/1471-2288-14-45.

Harris RP, Helfand M, Woolf SH, Lohr KN, Mulrow CD, Teutsch SM, et al. Current Methods of the U.S. Preventive Services Task Force: A Review of the Process. Am J Prev Med. 2001;20(3 Suppl):21–35. https://doi.org/10.1016/s0749-3797(01)00261-6.

Downs SH, Black N. The feasibility of creating a checklist for the assessment of the methodological quality both of randomised and non-randomised studies of health care interventions. J Epidemiol Community Health. 1998;52(6):377–84. https://doi.org/10.1136/jech.52.6.377.

Almeida MO, Yamato TP, do Carma Silva Parreira P, Costa LOP, Kamper S, Saragiotto BT. Overall confidence in the result of systematic reviews on exercise therapy for chronic low back pain: a cross-sectional analysis using the Assessing the Methodological Quality of Systematic Reviews (AMSTAR) 2 tool. Braz J Phys Ther. 2020;24(2):103–17. https://doi.org/10.1016/j.bjpt.2019.04.004.

Rios P, Cardoso R, Morra D, Nincic V, Goodarzi Z, Farah B, Harricharan S, et al. Comparative effectiveness and safety of pharmacological and non-pharmacological interventions for insomnia: an overview of reviews. Syst Rev. 2019;8(1):281. https://doi.org/10.1186/s13643-019-1163-9.

Perry R, Leach V, Penfold C, Davies P. An overview of systematic reviews of complementary and alternative therapies for infantile colic. Syst Rev. 2019;8(1):271. https://doi.org/10.1186/s13643-019-1191-5.

Shan-Shan Lin C-XL, Zhang J-H, Wang X-L, Mao J-Y. Efficacy and safety of oral chinese patent medicine combined with conventional therapy for heart failure: an overview of systematic reviews. Evid Based Complement Altern Med. 2020;2020:8620186. https://doi.org/10.1155/2020/8620186.

Mendoza JFW, Latorraca CDOC, de Ávila Oliveira R, Pachito DV, Martimbianco ALC, Pacheco RL, et al. Methodological quality and redundancy of systematic reviews that compare endarterectomy versus stenting for carotid stenosis. BMJ Evid Based Med. 2019;26(1):14–8. https://doi.org/10.1136/bmjebm-2018-111151.

Xiao Zhu XS. Xiaoqiang Hou, Yanan Luo, Xianyun Fu, Meiqun Cao, Zhitao Feng, Total glucosides of paeony for the treatment of rheumatoid arthritis: a methodological and reporting quality evaluation of systematic reviews and meta-analyses. Int Immunopharmacol. 2020;88:106920. https://doi.org/10.1016/j.intimp.2020.106920.

Lorenz RC, Matthias K, Pieper D, Wegewitz U, Morche J, Nocon M, et al. AMSTAR 2 overall confidence rating: lacking discriminating capacity or requirement of high methodological quality? J Clin Epidemiol. 2020;119:142–4. https://doi.org/10.1016/j.jclinepi.2019.10.006.

Sacks HS, Berrier J, Reitman D, Ancona-Berk VA, Chalmers TC. Meta-analyses of randomized controlled trials. N Engl J Med. 1987;316(8):450–5. https://doi.org/10.1056/NEJM198702193160806.

Oxman AD, Guyatt GH. Validation of an index of the quality of review articles. J Clin Epidemiol. 1991;44(11):1271–8. https://doi.org/10.1016/0895-4356(91)90160-b.

Oxman AD, Cook DJ, Guyatt GH. Users’ guides to the medical literature. VI. How to use an overview. Evidence-Based Medicine Working Group. JAMA. 1994;272(17):1367–71. https://doi.org/10.1001/jama.272.17.1367.

Aromataris E, Fernandez R, Godfrey CM, Holly C, Khalil H, Tungpunkom P. Summarizing systematic reviews: methodological development, conduct and reporting of an umbrella review approach. Int J Evid Based Healthc. 2015;13(3):132–40. https://doi.org/10.1097/XEB.0000000000000055.

PRISMA 2020. J Clin Epidemiol. 2021;134:A5–A6. https://doi.org/10.1016/j.jclinepi.2021.04.008.

Page MJ, Shamseer L, Tricco AC. Registration of systematic reviews in PROSPERO: 30,000 records and counting. Syst Rev. 2018;7(1):32. https://doi.org/10.1186/s13643-018-0699-4.

Rafael Badenes BH, Citerio G, Robba C, Aguilar G, Alonso-Arroyo A, Taccone FS, et al. Hyperosmolar therapy for acute brain injury: study protocol for an umbrella review of meta-analyses and an evidence mapping. BMJ Open. 2020;10(2):e033913. https://doi.org/10.1136/bmjopen-2019-033913.

Martin Taylor-Rowan SN, Patel A, Burton JK, Quinn TJ. Informant-based screen tools for diagnosis of dementia, an overview of systematic reviews of test accuracy studies protocol. Syst Rev. 2020;9(1):271. https://doi.org/10.1186/s13643-020-01530-3.

Araujo ZTS, Mendonça KMPP, Souza BMM, Santos TZM, Chaves GSS, Andriolo BNG, et al. Pulmonary rehabilitation for people with chronic obstructive pulmonary diesease: A protocol for an overview of Cochrane reviews. Medicine (Baltimore). 2019;98(38):e17129. https://doi.org/10.1097/MD.0000000000017129.

Guidance notes for registering a systematic review protocol with PROSPERO. 2016; Available from: www.crd.york.ac.uk/prospero.

Faggion CM Jr. Critical appraisal of AMSTAR: challenges, limitations, and potential solutions from the perspective of an assessor. BMC Med Res Methodol. 2015;15:63. https://doi.org/10.1186/s12874-015-0062-6.

Egger M, Schneider M, Davey Smith G. Spurious precision? Meta-analysis of observational studies. BMJ. 1998;316(7125):140–4. https://doi.org/10.1136/bmj.316.7125.140.

Sanderson S, Tatt ID, Higgins JPT. Tools for assessing quality and susceptibility to bias in observational studies in epidemiology: a systematic review and annotated bibliography. Int J Epidemiol. 2007;36(3):666–76. https://doi.org/10.1093/ije/dym018.

Khan MS, Li L, Yasmin F, Khan SU, Bajaj NS, Pandey A, et al. Assessment of heterogeneity in heart failure-related meta-analyses. Circulation: Heart Failure. 2020;13(11). https://doi.org/10.1161/CIRCHEARTFAILURE.120.007070.

Mueller KF, Meerpohl JJ, Briel M, Antes G, von Elm E, Lang B, et al. Methods for detecting, quantifying, and adjusting for dissemination bias in meta-analysis are described. J Clin Epidemiol. 2016;80:25–33. https://doi.org/10.1016/j.jclinepi.2016.04.015.

Funding

None.

Author information


Contributions

The author(s) read and approved the final manuscript.


Ethics declarations

Ethics approval and consent to participate

No consent needed for this paper.

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Additional file 1: Table 1.

Characteristics of 81 systematic reviews and meta-analyses. Supplement Table 2. Inter-rater reliability analysis.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Li, L., Asemota, I., Liu, B. et al. AMSTAR 2 appraisal of systematic reviews and meta-analyses in the field of heart failure from high-impact journals. Syst Rev 11, 147 (2022). https://doi.org/10.1186/s13643-022-02029-9


DOI: https://doi.org/10.1186/s13643-022-02029-9