Abstract

Network coding is an emerging technique known to improve network performance in many respects. In Vehicular Ad-hoc Networks (VANET), bandwidth is considered one of the most important network resources. In this paper, we propose a network coding technique to improve bandwidth utilization for non-safety applications in VANET. In a scenario where two sources broadcast data into the same area at the same time, the relay uses network coding to decrease the number of rebroadcasting events and the bandwidth consumption. However, a fundamental problem for the relay, when it receives a packet, is whether to wait for a coding opportunity and save bandwidth or to send the packet directly and reduce the delay. To address this tradeoff, we introduce two versions of our protocol, namely buffer size control scheme (BSCS) and time control scheme (TCS); with both versions, we aim to control the delay experienced by a packet at each hop while achieving better bandwidth utilization.

Up to 38 % improvement in bandwidth utilization has been recorded, and both schemes have shown considerable control over the imposed delay.


1 Introduction

A Vehicular Ad-hoc Network (VANET) is a mobile ad-hoc technology that turns the vehicles on the roads into network devices. With VANET, the vehicles on the road are able to form a distributed network and thus communicate and exchange messages. A wide range of applications can benefit from such technology; safety applications, which concern road safety measures and aim to save road users’ lives, are the most appealing class of applications and the main driver of the development of this technology. However, in order to accelerate this development and increase market penetration, commercial applications are required. A wide range of comfort and entertainment applications has been proposed in the literature, including point-of-interest notification, automatic access control/parking access, and local electronic commerce applications [1]. One major difference between safety applications and commercial applications is their time requirements: while safety applications have very strict time constraints, the constraints are relatively relaxed for commercial applications. However, the nature of commercial applications makes them more bandwidth-consuming. For example, point-of-interest notification applications, which inform users about the presence of locally based services and/or points of interest, may lead to severe network congestion, particularly given their communication mode, which requires periodic and permanent message broadcasting [1]. Furthermore, multiple sources may exist in the same area. For example, consider the situation shown in Fig. 1: both business providers (road side units) RSU1 and RSU2 present a point of interest, where RSU1 is a restaurant advertising its services, including new offers and its menu, while RSU2 is a gas station advertising its opening hours and prices.
Both points of interest target prospective customers, namely the road users in the neighborhood. However, as shown in Fig. 1, the two business providers actually have a common area of interest; that is, there is a section of the road, one or more hops long, that both business providers intend to cover. In this case, we can achieve better bandwidth utilization, reduce network collisions, and reduce the number of rebroadcasting events by using techniques such as network coding.

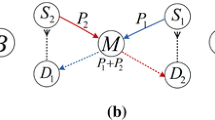

Network coding is a technique known to utilize the bandwidth efficiently by exploiting the broadcast nature of the wireless medium. It reduces the number of retransmissions by allowing the relay not only to forward packets but also to perform logic operations on them. A typical network coding operation is shown in Fig. 2. Suppose node X needs to send packet P0 to node Z, and node Z is sending packet P1 to node X, with node Y relaying the data of both. In traditional routing, node Y relays each packet in a separate transmission. Using network coding, node Y instead combines both packets by performing a bitwise Exclusive Or (XOR) operation on them and sends the result in a single transmission. Both node X and node Z receive the encoded packet, and each can decode it by XOR-ing it with the packet it sent itself. In this way, up to 50 % of the consumed bandwidth can be saved (see Section 3.1).
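The XOR encoding and decoding of Fig. 2 can be illustrated with a short Python sketch. It assumes both packets have the same length (a real scheme would also need padding and a header recording the original lengths), and the packet contents are made up for illustration.

```python
def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Bitwise XOR of two packets, zero-padding the shorter one."""
    n = max(len(a), len(b))
    a, b = a.ljust(n, b"\x00"), b.ljust(n, b"\x00")
    return bytes(x ^ y for x, y in zip(a, b))


# Node X sends P0 towards Z; node Z sends P1 towards X (equal lengths).
p0 = b"menu: soup 3 EUR"
p1 = b"fuel: 1.59/litre"

# Relay Y broadcasts one coded packet instead of two native ones.
coded = xor_bytes(p0, p1)

# Each endpoint XORs the coded packet with the packet it sent itself.
decoded_at_x = xor_bytes(coded, p0)  # X recovers P1
decoded_at_z = xor_bytes(coded, p1)  # Z recovers P0

print(decoded_at_x == p1, decoded_at_z == p0)
```

Decoding works because XOR is its own inverse: (P0 ⊕ P1) ⊕ P0 = P1.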

So, for a situation such as the one described by Fig. 1, instead of traditional broadcasting, where each packet from RSU1 or RSU2 is rebroadcast separately at the intermediate relay, we propose using network coding to combine and encode the packets from the two sources before rebroadcasting them in a single broadcasting event.

However, the randomness and asymmetric nature of the traffic raise further issues when using network coding in wireless networks. Because packet arrivals are stochastic, packets from the different sources will not be available at the same time; thus, the relay can either hold a packet while waiting for a packet from the other source to encode it with, or send the packet directly and reduce the delay. Long waiting times induce additional delays, which are not acceptable for many applications. On the other hand, in distributed networks such as VANET, it is desirable to reduce the number of retransmissions in order to reduce bandwidth consumption. So, when the relay receives a packet, the main question is whether to rebroadcast it directly and decrease the latency, or to wait for a packet from the other source and combine the two to save bandwidth.

Designing a transmission policy that trades off packet delay against bandwidth consumption is known in the literature as opportunistic network coding (ONC) [2]. In ONC, a scheme that combines traditional routing with network coding is usually used to address this tradeoff.

In this work, we consider non-safety multimedia data dissemination in VANET, in a situation where two sources are located at opposite ends of the same road with an N-hop area of interest between them. First, in order to improve the throughput and decrease network congestion in such a scenario, we propose that the relay use network coding to encode the data of both sources instead of traditional routing. Then, in order to control the imposed delay, we introduce two versions of an ONC scheme, namely buffer size control scheme (BSCS) and time control scheme (TCS), to help the relay decide whether to hold or to rebroadcast a received packet.

This paper is organized as follows: Section 2 reviews the literature on network coding in VANET; Section 3 describes the network scenario and the two versions of our protocol; Section 4 describes the simulation environment and setup and presents and discusses the results of our protocols. Section 5 concludes the paper.

2 The literature review

Many works in the literature propose methods to reduce energy and/or bandwidth consumption in distributed networks [3–10], and different methods have been used to achieve this goal. For example, to save bandwidth, the authors of [11] propose a genetic algorithm to optimize the routes in a multi-constrained QoS multicast routing scenario. For delay control and bandwidth efficiency, [12] proposes a joint forensics-scheduling scheme, which allocates the available network resources based on the affordable forensics overhead and the expected quality of service, and then schedules the transmissions to meet the application’s delay constraints. A fuzzy prediction method has been proposed in [13] to avoid resource shortages in the route selection process. Network coding, first proposed in [14] for noiseless wired networks, allows linear/nonlinear coding of multiple information flows at intermediate nodes, and it greatly outperforms traditional routing-based methods in terms of achievable data rates [15]. In the VANET literature, in addition to content distribution protocols [16–19], other protocols use network coding to conserve bandwidth and/or power as well as to enhance the robustness and reliability of the network. The authors of [20] use network coding to propose a repetition-based MAC protocol for vehicular ad-hoc networks, which can be used for safety applications and beacon-message exchange. Assuming an erasure channel, nodes in [20] share their knowledge of successful transmissions with each other; then, when a node has a transmission opportunity, it can XOR some of its already-received messages and send the result. The protocol is argued to minimize the safety message-loss probability. However, unlike our work, [20] considers only short beacon messages.

On the other hand, the Delay-based Opportunistic Network Coding protocol (DONC) [21] aims to reduce the twofold effect of packet loss in delay-based broadcasting protocols in VANET. DONC is based on the fact that packet losses have a direct effect on the number of successfully received packets and an indirect effect through missed implicit acknowledgements. By combining network coding with delay-based broadcasting, DONC achieves better reliability and loss recovery than traditional delay-based broadcasting protocols. However, DONC is designed for short safety alert messages and does not consider multimedia data.

The authors of NCCARQ-MAC [22] propose a protocol that coordinates channel access among a set of relays capable of using network coding. In NCCARQ-MAC, upon erroneous packet reception at the destination, the relay encodes the retransmission request from the destination with a newly arrived packet from the source and sends them out in one broadcasting event. Since the source has a copy of the new packet while the destination has a copy of the retransmission request, both will be able to decode the encoded packet. NCCARQ-MAC aims to decrease the number of rebroadcasts and to improve network throughput. However, it considers only a single source-destination unicast communication mode.

3 Network scenario and protocol description

The aim of our proposal is to achieve better bandwidth utilization and to reduce network congestion in the multimedia data dissemination scenario. To do so, network coding is used to reduce the number of retransmissions. Our proposal serves a situation similar to Fig. 1, where two sources located at opposite ends of the same road share an N-hop area of interest. Both sources aim to disseminate non-safety data among the vehicles in the shared section of the road between them.

Here, instead of having the relay broadcast the packets from the different sources separately, our proposal uses network coding to combine two packets from different sources into one encoded packet before rebroadcasting it in the network, and thus reduces bandwidth consumption; we further investigate how to control the delay this imposes at the relay. The relay is assumed to be able to forward packets and to perform a bitwise XOR operation on received packets before rebroadcasting them. It is also assumed that the relay knows the average data rates at which the sources send.

3.1 Working example

To illustrate how our proposal will work in such scenario, let us consider the following example.

Assume we have two sources: source1, a restaurant advertising its services, including new offers and its menu, and source2, a gas station advertising its opening hours and prices.

The two sources are located N hops from each other on the same road, and each source wants to disseminate its data over a certain area, namely the shared stretch of road between them. Thus, the packets will need to be relayed at intermediate nodes to cover the whole area (see Fig. 1).

For a simple illustration, let us assume that the area of interest extends two hops from each source. In the traditional broadcasting scenario, to disseminate one packet from source1 to the end of the area of interest, the packet is broadcast by source1 and then relayed by an intermediate node, which makes two broadcasting events; the same happens for a packet disseminated by source2. Thus, disseminating one packet from source1 and one packet from source2 costs four broadcasting events. Now consider the same scenario using network coding: after source1 and source2 broadcast their packets, the relay encodes the two packets into one packet and relays it. The same scenario therefore costs three broadcasting events with network coding rather than four with traditional broadcasting.

Decoding is possible because the vehicles located one hop from source1 already have a copy of the packet disseminated by source1, and the vehicles located one hop from source2 have a copy of the packet disseminated by source2; both groups of vehicles can therefore decode the received encoded packet.

More generally, to find precisely how much bandwidth network coding can save, assume that A packets are to be disseminated from source1 and B packets from source2. In the conventional broadcasting case, the relay needs to relay A + B packets; if each packet consumes \( \frac{1}{T} \) of the bandwidth, a total of \( \frac{A + B}{T} \) of the bandwidth is consumed. With network coding, each packet from source1 is combined with a packet from source2; therefore, only \( \max(A, B) \) packets are rebroadcast, consuming \( \frac{\max(A, B)}{T} \) of the bandwidth. The bandwidth consumed relative to conventional broadcasting is thus \( \frac{\max(A, B)}{A + B} \), and the improvement (the fraction of bandwidth saved) is \( 1 - \frac{\max(A, B)}{A + B} = \frac{\min(A, B)}{A + B} \). If both sources send the same number of packets (i.e., A = B), up to 50 % of the consumed bandwidth can be saved. Figure 3 shows the percentage of throughput improvement versus the ratio between the numbers of packets from the two sources (i.e., B/A).
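The bandwidth-saving expression can be checked numerically. The following sketch (the function name `nc_bandwidth_saving` is hypothetical) counts relay transmissions with and without coding, assuming every packet of the smaller flow finds a partner from the larger flow.

```python
def nc_bandwidth_saving(a: int, b: int) -> float:
    """Fraction of relay transmissions saved by pairwise XOR coding.

    Traditional relaying sends a + b packets; with coding, each packet of
    the smaller flow is paired with one of the larger flow, so the relay
    sends max(a, b) packets.
    """
    if a + b == 0:
        return 0.0
    return 1 - max(a, b) / (a + b)  # equals min(a, b) / (a + b)


for ratio in (1, 1.5, 2.5):
    a = 100
    b = round(a * ratio)
    print(f"B/A = {ratio}: saving = {nc_bandwidth_saving(a, b):.1%}")
```

For B/A = r ≥ 1 the saving reduces to 1/(1 + r), so the 50 % maximum is reached only for symmetric flows.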

The intermediate nodes acting as relays could be either stationary nodes, such as RSUs attached to light poles along the road, or mobile nodes, in which case any vehicle within the radio range of the source could act as a relay. We consider both cases in this paper.

In the next section, in order to control the imposed delay at the relay, we propose two approaches for the relay when it receives a packet so as to decide whether to rebroadcast it and decrease the delay or to hold it, waiting for a coding opportunity to enhance the throughput.

3.2 The behavior of the relay

In order for the relay to decide whether it should hold the newly received packet and wait for a coding opportunity or send it out immediately to reduce the delay, we assume the following:

The relay maintains a queuing buffer to hold the data received from the slower source; if both sources send at the same rate, the relay chooses one of them at random to buffer.

When the relay receives a packet from the faster source (assumed here to be source1), it checks the queue: if the queue is non-empty, the head of the queue is encoded with the newly arrived packet from source1, and the resulting packet is sent out; if the queue is empty, the packet is sent immediately.

If, however, the received packet is from the slower source (i.e., source2), the relay has to decide whether to wait for a coding opportunity or send the packet immediately, according to one of the following schemes:

3.2.1 Buffer size control scheme (BSCS)

This scheme aims to control the imposed delay by controlling the size of the buffer.

In general, as the utilization of the queue increases, the average delay per packet increases. In this scheme, therefore, the relay decides whether to queue a packet based on the number of packets in the queue when the packet arrives. In other words, the probability p of queuing a packet is proportional to the size of the queue when the packet arrives. Thus:

However, doing so might cause a newly arrived packet to be relayed while older packets are still waiting in the queue for a coding opportunity. This would cause packets to be disseminated out of order and might cause the queued packets to be disseminated too late for the application to use them.

Instead, the relay queues the new packet with probability p, calculated as above, and with probability 1 − p it queues the new packet and releases the head of the queue. Figure 4 shows the steps the relay follows when receiving a new packet.
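The relay rules above (coding with the head of the queue for source1 packets, and the BSCS decision for source2 packets) can be combined into a small Python sketch. The class and method names are hypothetical. Since the expression for p is given by the equation referenced earlier in this subsection, the sketch takes p as an argument rather than computing it; treating the released head as a native (uncoded) transmission is an assumption of the sketch.

```python
import random
from collections import deque


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Bitwise XOR of two packets, zero-padding the shorter one."""
    n = max(len(a), len(b))
    a, b = a.ljust(n, b"\x00"), b.ljust(n, b"\x00")
    return bytes(x ^ y for x, y in zip(a, b))


class BscsRelay:
    """Hypothetical BSCS relay state: a FIFO of slow-source packets."""

    def __init__(self, seed: int = 0) -> None:
        self.queue: deque = deque()  # buffered packets from source2
        self.sent: list = []         # log of (kind, payload) broadcasts
        self.rng = random.Random(seed)

    def on_fast_packet(self, pkt: bytes) -> None:
        """Source1 packet: code it with the queue head if there is one."""
        if self.queue:
            self.sent.append(("coded", xor_bytes(self.queue.popleft(), pkt)))
        else:
            self.sent.append(("native", pkt))  # nothing to code with

    def on_slow_packet(self, pkt: bytes, p: float) -> None:
        """Source2 packet: always queued; with probability 1 - p the head
        of the queue is released too, which keeps FIFO order."""
        self.queue.append(pkt)
        if self.rng.random() >= p:  # true with probability 1 - p
            # Assumption: no source1 packet is at hand, so the head goes out uncoded.
            self.sent.append(("native", self.queue.popleft()))


relay = BscsRelay()
relay.on_slow_packet(b"fuel:1.59", p=1.0)  # p = 1: just buffer it
relay.on_fast_packet(b"menu:soup")          # coded with the buffered packet
relay.on_slow_packet(b"fuel:1.61", p=0.0)  # p = 0 and empty queue: sent at once
print([kind for kind, _ in relay.sent])
```

Appending before releasing is what prevents the reordering problem described above: whenever a packet leaves without coding, it is always the oldest one.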

In the next subsection, we calculate the expected delay of such behavior.

BSCS delay analysis

Assume that the packets from source1 and source2 in Fig. 1 arrive according to Poisson processes with rates λ1 and λ2, respectively, and that the queue follows an M/M/1 model.

Then the arrival rate at the queue, λq, is

where P(0) is the probability that the queue is empty. The first term represents the case in which the packet is queued and the head of the queue is released instead.

Since every packet arriving from source1 causes a packet to be released from the queue, the departure rate µq of the queue is

where the second term accounts for departures caused by the arrival of new packets at the queue.

P(0) of the M/M/1 queue can be expressed as follows:

Since the probability of queuing a packet is proportional to the size of the queue, it can be expressed as follows:

Solving (4) and (5) jointly, P(0) and p can be expressed as given in the Appendix.

The average queuing delay Dq can be expressed as follows:

The average delay per packet from source2 (i.e., the slower source), Ddir, is

where \( \frac{\lambda_{\mathrm{q}}}{\lambda_2} \) represents the probability that a packet from source2 is queued.

3.2.2 Time control scheme (TCS)

Although the time constraints of non-safety applications are not tight, long delays are still undesirable. Thus, in this scheme, the relay queues all packets that come from the slow source but sets a delay limit for each packet: a packet will not be buffered for more than a certain time Tmax. When the relay receives a packet from the slow source, it queues the packet directly and starts a timer; if the packet is still in the queue after Tmax, it is sent immediately without encoding. Figure 5 shows the steps followed by the relay in TCS.
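To get a feel for the TCS behavior, the following toy Monte Carlo sketch models only the relay queue: Poisson arrivals from both sources, FIFO buffering of source2 packets with a Tmax timer, and coding whenever a source1 packet finds a waiting partner. It ignores the MAC layer, collisions, and mobility, so it is not a substitute for the ns-3 evaluation in Section 4; all names are hypothetical.

```python
import random
from collections import deque


def simulate_tcs(lam1: float, lam2: float, t_max: float,
                 horizon: float, seed: int = 0) -> tuple[float, float]:
    """Toy model of the TCS relay queue.

    Returns (mean buffering delay of source2 packets,
             fraction of source2 packets that were sent coded).
    """
    rng = random.Random(seed)

    def arrivals(rate: float) -> list[float]:
        # Poisson process: exponentially distributed inter-arrival times.
        t, out = 0.0, []
        while True:
            t += rng.expovariate(rate)
            if t > horizon:
                return out
            out.append(t)

    events = sorted([(t, 1) for t in arrivals(lam1)] +
                    [(t, 2) for t in arrivals(lam2)])
    queue = deque()  # arrival times of buffered source2 packets
    delays = []
    coded = 0

    def expire(now: float) -> None:
        # FIFO with equal timers: expired packets are always at the head.
        # Each one is sent uncoded after waiting exactly t_max.
        while queue and queue[0] + t_max <= now:
            queue.popleft()
            delays.append(t_max)

    for t, src in events:
        expire(t)
        if src == 2:
            queue.append(t)                     # buffer and start its timer
        elif queue:                             # source1 finds a partner
            delays.append(t - queue.popleft())
            coded += 1
        # else: the source1 packet goes out natively; no source2 delay

    expire(float("inf"))                        # fire the remaining timers
    if not delays:
        return 0.0, 0.0
    return sum(delays) / len(delays), coded / len(delays)


mean_delay, coded_share = simulate_tcs(lam1=1.5, lam2=1.0, t_max=0.3,
                                       horizon=10_000.0)
print(f"mean delay {mean_delay:.3f} s, coded share {coded_share:.2%}")
```

By construction no source2 packet waits longer than Tmax, so the mean buffering delay is bounded by Tmax; varying `t_max` shows how a longer window buys more coding opportunities at the cost of delay.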

TCS delay analysis

Under the same assumptions as the previous scheme, since the relay queues all packets from the slow source, the arrival rate at the queue is λ2, while the departure rate remains λ1. However, since no packet stays in the queue longer than Tmax, the average queuing delay can be expressed as follows:

3.2.3 Pure network coding scheme

For the purpose of comparison, in this section, we describe a system that uses pure network coding (PNC) without any enhancements.

In this case, all packets from source2 are immediately buffered in the queue, while packets from source1 are sent directly, either encoded if the queue is non-empty or as native packets if the queue is empty.

In this case, p = 1, and (2), (3), and (6) can be rewritten as:
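Under PNC the queue receives every source2 packet (rate λ2) and is emptied by source1 arrivals (rate λ1); because Poisson arrivals are memoryless, standard M/M/1 results apply. The sketch below uses the textbook formulas P(0) = 1 − λ2/λ1 and mean sojourn time 1/(λ1 − λ2). That these coincide with the paper's rewritten equations is an assumption, since those equations are not reproduced here.

```python
import math


def mm1_pnc(lam1: float, lam2: float) -> tuple[float, float]:
    """(P(0), mean time a source2 packet spends in the queue) for PNC,
    modeled as M/M/1 with arrival rate lam2 and service rate lam1."""
    if lam2 >= lam1:
        return 0.0, math.inf  # unstable: the queue grows without bound
    rho = lam2 / lam1         # queue utilization
    return 1 - rho, 1 / (lam1 - lam2)


for ratio in (1.0, 1.5, 2.5):
    p0, delay = mm1_pnc(ratio * 1.0, 1.0)  # lam2 = 1 packet/s as in Section 4
    print(f"lam1/lam2 = {ratio}: P(0) = {p0:.3f}, mean delay = {delay:.3f} s")
```

With λ2 = 1 packet/s, the ratio λ1/λ2 = 1.5 gives a mean delay of 2 s, the same value reported for pure NC in Section 4.2, and the delay becomes unbounded as λ1 approaches λ2.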

3.3 Stationary relay scenario

Our first scenario is when all the relays over the area of interest are stationary RSUs installed on light poles. In this case, adjacent RSUs are spaced one radio range apart.

3.4 Mobile relay scenario

Here, the vehicles between the two sources in the area of interest cooperate with each other to disseminate the data of both sources. The main challenge for our protocol is then how to select the vehicle that acts as the relay. Many relay selection mechanisms have been introduced in the literature; however, most of them may select different relays for different packets [23].

In our case, to increase coding opportunities, the main challenge is to have the same node act as the relay throughout the transmission period.

However, certain classes of clustering and/or broadcasting algorithms are suitable for implementing our protocol. In those classes, the cluster head is always responsible for all rebroadcasting operations. The methods used in [23–26] are examples of such behavior.

We adopt the relay selection method described in [26]. In [26], the road area is divided into virtual segments or grids, and the relay role is assigned to one vehicle in each segment; when the current relay leaves the segment, it hands over all its buffered data to a new relay. In other words, the same relay continues to relay packets until it leaves the segment and hands its data to the new relay. For more information about the relay selection method, readers are referred to [26].

In our case, however, a segment spans both directions of the road, that is, the two directions of the road share one relay. Based on the vehicles’ velocities and their distances to the end of the segment, the vehicle that will spend the most time in the segment is selected as the relay for that segment.
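The relay-selection rule for a segment can be sketched as follows: for each vehicle inside the segment, estimate the time until it reaches the segment boundary in its direction of travel, and choose the vehicle with the largest value. The `Vehicle` type, the use of signed speeds for the two directions, and the treatment of stopped vehicles are illustrative assumptions, not part of [26].

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Vehicle:
    vid: str
    x: float  # position along the road (m)
    v: float  # signed speed (m/s): positive in one direction, negative in the other


def time_in_segment(veh: Vehicle, seg_start: float, seg_end: float) -> float:
    """Estimated time (s) until the vehicle leaves [seg_start, seg_end)."""
    if veh.v > 0:
        return (seg_end - veh.x) / veh.v
    if veh.v < 0:
        return (veh.x - seg_start) / -veh.v
    return float("inf")  # assumption: a stopped vehicle never leaves


def select_relay(vehicles: list[Vehicle], seg_start: float,
                 seg_end: float) -> Optional[Vehicle]:
    """Pick the vehicle in the segment (either direction) that stays longest."""
    inside = [w for w in vehicles if seg_start <= w.x < seg_end]
    if not inside:
        return None
    return max(inside, key=lambda w: time_in_segment(w, seg_start, seg_end))


# A 250 m segment; speeds of 10-15 m/s correspond to 36-54 km/h.
cars = [Vehicle("a", 50.0, 15.0), Vehicle("b", 200.0, -10.0),
        Vehicle("c", 100.0, 12.0), Vehicle("d", 300.0, 10.0)]
chosen = select_relay(cars, 0.0, 250.0)
print(chosen.vid if chosen else None)
```

Here vehicle "b" is chosen: travelling in the opposite direction, it needs 20 s to reach the start of the segment, longer than "a" (about 13.3 s) or "c" (12.5 s), while "d" lies outside the segment.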

4 Simulation setup and numerical results

To simulate a scenario such as the one described in Section 3 and Fig. 1, we implemented the protocol in the ns-3 network simulator (version 3.19) and experimented using the IEEE 802.11p (WAVE) MAC standard [27]. Table 1 shows the simulation parameter settings for our protocol. The two sources generate packets randomly following a Poisson process, i.e., with exponentially distributed intervals between generated packets. We set λ2 to 1 packet/s, while λ1 is varied throughout the simulation. Vehicle speeds were set between 36 and 54 km/h. When the simulation starts, 250 vehicles are distributed over a 4000 m road area in both road directions. The simulation then runs for 20 s before the sources start sending their data. Tmax is set to 0.3 s for TCS, while the segment size in the mobile relay scheme is set to 250 m. For the stationary relay scenario, three relays were placed 500 m apart.

4.1 Performance metrics

Our protocols are designed to achieve better bandwidth utilization while controlling the imposed delay. Hence, the following performance metrics have been chosen to reflect their performance:

The hop delay: the average time a packet from the buffered traffic spends at each hop.

Percentage of improvement in the throughput: the fraction of bandwidth that has been saved, calculated as follows:

Packet delivery ratio: the percentage of packets that are successfully received, calculated as follows:

The number of undecoded packets: the number of received encoded packets that a node is unable to decode.

The figures below show the observed behavior of our two protocols in comparison with pure network coding for both the stationary relay and the mobile relay scenarios.

4.2 The hop delay

Figure 6 shows the average hop delay for the packets sent by source2. Figure 6a represents the stationary relay scenario. In the symmetric flows case (i.e., λ1/λ2 = 1), pure network coding leads to unbounded delay, while both BSCS and TCS show considerable control over the imposed delay. For both pure network coding and BSCS, as the ratio between the two traffic rates (i.e., λ1/λ2) increases, the experienced delay decreases: when λ1/λ2 = 1.5, the delay was 1 s for BSCS and 2 s for pure NC, while when λ1/λ2 = 2.5, the delay was 0.75 s for both schemes. This is expected, since λ1 acts as the departure rate of the queue, and as the departure rate of the queue increases, the average delay in the queue decreases. It is worth mentioning that BSCS outperforms pure network coding mainly when the two traffic flows are symmetric or the ratio between them is low. TCS is a good choice when the application requires a bounded delay; in our scenario, the delay stays almost constant at the value of Tmax (i.e., 0.3 s).

In the mobile relay case, the dynamic nature of the relay affected the stability of the network, especially in the pure network coding scenario, where the network behavior was difficult to predict; the BSCS and TCS schemes, however, performed better than pure network coding.

4.3 The throughput improvement

Figure 7 shows the improvement in network throughput. Although pure network coding achieves almost 50 % throughput improvement when λ1 = λ2, this performance comes at the cost of unbounded delay (see Fig. 6a). For BSCS with a stationary relay, a 38 % improvement in bandwidth usage was recorded when λ1 = λ2. This is a good result, considering that BSCS also decreases the imposed delay.

As the ratio between λ1 and λ2 increases, however, the performance of pure network coding and BSCS becomes more similar, and increasing the ratio λ1/λ2 decreases the throughput improvement.

In TCS, the throughput improvement remains constant regardless of the ratio between λ1 and λ2, which reflects the fixed coding opportunity that the protocol gives each packet regardless of the data rate.

4.4 Delivery ratio

The stability of the network with stationary relays leads to a higher packet delivery ratio for both protocols, as shown in Fig. 8, while the mobility and frequent change of relays lead to a lower packet delivery ratio, as shown in the same figure.

4.5 Un-decodable packets

Figure 9 shows the average number of undecodable packets received by a vehicle. By undecodable packets, we mean encoded packets that the vehicle receives but cannot decode because it does not have any of the packets included in the encoded packet. This happens mainly because of vehicle movement: for example, if the relay has held a packet for too long and finally releases it as part of an encoded packet, some vehicles that have just entered the radio range of this relay will not be able to decode it. This is one of the advantages of TCS, which records few undecodable packets for both stationary and mobile relays. In addition, when λ1 is high enough, packets do not wait long in the queue, and thus the number of undecodable packets decreases.

Figure 10 shows the effect of Tmax on the percentage of bandwidth improvement in TCS. As Tmax grows, more throughput improvement is recorded. This can help adjust the delay according to the application's requirements.

Finally, the dynamic nature of the relay in the mobile scenario degrades the performance of the protocols: the instability of the network causes more packet loss and collisions, which result in a low delivery ratio and long queuing times. The number of undecodable packets also increases in the mobile relay scenario as a result of collisions and the hidden terminal effect.

5 Conclusions

In this paper, we consider VANET non-safety applications, where two sources are disseminating the data in a common area of interest.

To reduce network congestion, we propose using the same relay to rebroadcast the packets of both sources, and, for better bandwidth utilization, we propose that the relay use network coding when broadcasting the packets. By doing so, we record up to 38 % improvement in the throughput.

However, in order to control the imposed delay, two versions of our protocol have been described. Both BSCS and TCS have been tested under stationary and mobile relay scenarios and showed a noticeable decrease in the imposed delay compared with the pure network coding scenario.

In future work, we plan to upgrade our mobile relay selection protocol in order to improve the performance of the protocols in fully dynamic environments.

References

G Karagiannis, O Altintas, E Ekici, G Heijenk, B Jarupan, K Lin, T Weil, Vehicular networking: a survey and tutorial on requirements, architectures, challenges, standards and solutions. IEEE Communications Surveys & Tutorials 13(4), 584–616 (2011, Fourth Quarter). doi:10.1109/SURV.2011.061411.00019

Y-P Hsu, N Abedini, S Ramasamy, N Gautam, A Sprintson, S Shakkottai, Opportunities for network coding: to wait or not to wait, in Proc. 2011 IEEE International Symposium on Information Theory, 2011, pp. 791–795

Y Zeng, K Xiang, D Li, AV Vasilakos, Directional routing and scheduling for green vehicular delay tolerant networks. Wireless networks 19(2), 161–173 (2013)

A Cianfrani, V Eramo, M Listanti, M Polverini, AV Vasilakos, An OSPF-integrated routing strategy for QoS-aware energy saving in IP backbone networks. IEEE Transactions on Network and Service Management 9(3), 254–267 (2012). doi:10.1109/TNSM.2012.031512.110165

L Xiang, J Luo, A Vasilakos, Compressed data aggregation for energy efficient wireless sensor networks, in 2011 8th Annual IEEE Communications Society Conference on Sensor, Mesh and Ad Hoc Communications and Networks (SECON), 2011, pp. 46–54. doi:10.1109/SAHCN.2011.5984932

J Liu, J Wan, Q Wang, P Deng, K Zhou, Y Qiao, A survey on position-based routing for vehicular ad hoc networks. Telecommunication Systems (Springer), 2015. doi:10.1007/s11235-015-9979-7

P Li, S Guo, S Yu, AV Vasilakos, CodePipe: an opportunistic feeding and routing protocol for reliable multicast with pipelined network coding, in Proceedings IEEE INFOCOM 2012, 2012, pp. 100–108

PBF Duarte, ZM Fadlullah, AV Vasilakos, N Kato, On the partially overlapped channel assignment on wireless mesh network backbone: a game theoretic approach. IEEE Journal on Selected Areas in Communications 30(1), 119–127 (2012). doi:10.1109/JSAC.2012.120111

Y Yao, Q Cao, AV Vasilakos, EDAL: an energy-efficient, delay-aware, and lifetime-balancing data collection protocol for wireless sensor networks, in IEEE 10th International Conference on Mobile Ad-Hoc and Sensor Systems (MASS), 2013, pp. 182–190. doi:10.1109/MASS.2013.44

PP Demestichas, VAG Stavroulaki, LM Papadopoulou, AV Vasilakos, ME Theologou, Service configuration and traffic distribution in composite radio environments. IEEE Transactions on Systems, Man, and Cybernetics, Part C: Applications and Reviews, 34(1), 69–81 (2004). doi:10.1109/TSMCC.2003.818500

Y-S Yen, H-C Chao, R-S Chang, A Vasilakos, Flooding-limited and multi-constrained QoS multicast routing based on the geneticalgorithm forMANETs. Math. Comput. Modelling 53(11–12), 2238–2250 (2011)

L Zhou, HC Chao, AV Vasilakos, Joint forensics-scheduling strategy for delay-sensitive multimedia applications over heterogeneous networks. IEEE J. Selected Areas in Comm 29(7), 1358–1367 (2011)

A Vasilakos, C Ricudis, K Anagnostakis, W Pedrycz, A Pitsillides, Evolutionary-fuzzy prediction for strategic QoS routing in broadband networks, in Proceedings of the 1998 IEEE International Conference on Fuzzy Systems (IEEE World Congress on Computational Intelligence), vol. 2, 1998, pp. 1488–1493. doi:10.1109/FUZZY.1998.686339

R Ahlswede, N Cai, S-YR Li, RW Yeung, Network information flow. IEEE Transactions on Information Theory 46(4), 1204–1216 (2000)

M Dai, P Wang, S Zhang, B Chen, H Wang, X Lin, C Sun, Survey on cooperative strategies for wireless relay channels. Trans Emerging Tel Tech 25, 926–942 (2014). doi:10.1002/ett.2789

L Zhou, Y Zhang, K Song, W Jing, AV Vasilakos, Distributed media services in P2P-based vehicular networks. IEEE Transactions on Vehicular Technology 60(2), 692–703 (2011). doi:10.1109/TVT.2010.2102782

U Lee, J Park, J Yeh, G Pau, M Gerla, CodeTorrent: content distribution using network coding in VANET, in Proceedings of the 1st International Workshop on Decentralized Resource Sharing in Mobile Computing and Networking, 2006, pp. 1–5

M Li, Z Yang, W Lou, CodeOn: cooperative popular content distribution for vehicular networks using symbol level network coding. IEEE J. Sel. Areas Commun. 29(1), 223–235 (2011)

Z Yang, M Li, W Lou, CodePlay: live multimedia streaming in VANETs using symbol-level network coding, in Proceedings of the 18th IEEE International Conference on Network Protocols (IEEE Computer Society, 2010), pp. 223–232

B Hassanabadi, L Zhang, S Valaee, Index coded repetition-based MAC in vehicular ad-hoc networks, in 6th IEEE Consumer Communications and Networking Conference (CCNC 2009), 2009

FH Mirani, A Busson, C Adjih, DONC: delay-based opportunistic network coding protocol, in 12th Annual Mediterranean Ad Hoc Networking Workshop (MED-HOC-NET), 2013, pp. 34–41. doi:10.1109/MedHocNet.2013.6767407

A Antonopoulos, C Skianis, C Verikoukis, Network coding-based cooperative ARQ scheme for VANETs. Journal of Network and Computer Applications 36(3), 1001–1007 (2013)

SAM Ahmed, SHS Ariffin, N Fisal, Survey on broadcasting in VANET. Research Journal of Applied Sciences, Engineering and Technology 7(18) (2014)

S-H Cha, K-W Lee, H-S Cho, Grid-based predictive geographical routing for inter-vehicle communication in urban areas. International Journal of Distributed Sensor Networks 2012 (2012)

Y Luo, W Zhang, Y Hu, A new cluster based routing protocol for VANET, in IEEE Wireless Communications and Trusted Computing, 2010

S Hamato, S Ariffin, N Fisal, Contention free time efficient broadcasting protocol for safety applications in VANETs. International Review on Computers and Software (IRECOS) 9(11), 1923–1931 (2014). doi:10.15866/irecos.v9i11.4551

SAM Ahmed, SHS Ariffin, N Fisal, Overview of wireless access in vehicular environment (WAVE) protocols and standards. Indian Journal of Science and Technology (2013)

Acknowledgements

The authors would like to thank all those who contributed toward making this research successful. The authors wish to express their gratitude to the Ministry of Higher Education (MOHE), Malaysia Research Management Center (RMC) for the sponsorship, and Telematic Research Group (TRG), Universiti Teknologi Malaysia for the financial support and advice for this project. (Vot number Q.J130000.2623.08J19).

Additional information

Competing interests

The authors declare that they have no competing interests.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

Ahmed, S.A.M., Ariffin, S.H.S. & Fisal, N. Network coding techniques for VANET advertising applications. J Wireless Com Network 2015, 200 (2015). https://doi.org/10.1186/s13638-015-0421-3

DOI: https://doi.org/10.1186/s13638-015-0421-3