Abstract

Statistical signal processing applications usually require the estimation of some parameters of interest given a set of observed data. These estimates are typically obtained either by solving a multivariate optimization problem, as in the maximum likelihood (ML) or maximum a posteriori (MAP) estimators, or by performing a multidimensional integration, as in the minimum mean squared error (MMSE) estimators. Unfortunately, analytical expressions for these estimators cannot be found in most real-world applications, and the Monte Carlo (MC) methodology is one feasible approach. MC methods proceed by drawing random samples, either from the desired distribution or from a simpler one, and using them to compute consistent estimators. The most important families of MC algorithms are the Markov chain MC (MCMC) and importance sampling (IS). On the one hand, MCMC methods draw candidate samples from a proposal density and build an ergodic Markov chain, whose stationary distribution is the desired one, by accepting or rejecting each candidate as the new state of the chain. On the other hand, IS techniques draw samples from a simple proposal density and then assign them suitable weights that measure their quality in some appropriate way. In this paper, we perform a thorough review of MC methods for the estimation of static parameters in signal processing applications. A historical note on the development of MC schemes is also provided, followed by the basic MC method and a brief description of the rejection sampling (RS) algorithm, as well as three sections describing many of the most relevant MCMC and IS algorithms, and their combined use. Finally, five numerical examples (including the estimation of the parameters of a chaotic system, a localization problem in wireless sensor networks and a spectral analysis application) are provided in order to demonstrate the performance of the described approaches.


1 Introduction

1.1 Motivation: parameter estimation in statistical signal processing applications

Statistical inference deals with the estimation of a set of unknowns given a collection of observed data contaminated by noise and possibly by some other types of distortions and interferences [1]. In many signal processing applications, this typically amounts to inferring some static parameters of interest from the noisy observations [2–4]Footnote 1. For instance, in denoising applications, the aim is to reconstruct the original signal (e.g., an audio recording or an image) from the noisy observations [5]. An extended version of this problem occurs in blind deconvolution, where a noisy filtered signal is available and the goal is to recover both the unknown filter and the input [6]Footnote 2. Finally, as a third application, target localization/tracking in wireless sensor networks requires estimating/tracking the location of the target (possibly jointly with some parameters of the system, such as the noise variance, the propagation constant or even the position of the sensors) from measurements recorded by the sensors [9, 10].

In the Bayesian framework, all the aforementioned problems are addressed by formulating a prior distribution, which should gather all the available information about the parameters of interest external to the data, and assuming an input-output model (the likelihood), which incorporates our knowledge (or lack thereof) about the way in which the observed data relate to the unknown parameters [11]. Then, Bayes' theorem allows us to obtain the posterior distribution, which takes into account both the effect of the prior information and the observed data in an optimal way. Finally, the desired Bayesian point estimators are obtained by minimizing a pre-defined cost function that can typically be expressed either as some integral measure with respect to (w.r.t.) the posterior or as some optimization problem. For instance, the well-known minimum mean squared error (MMSE) estimator corresponds to the conditional mean of the parameters of interest given the data (i.e., the expected value of the posterior distribution), whereas the maximum a posteriori (MAP) estimator corresponds to the value of the parameters where the posterior attains its highest peakFootnote 3. Note that a similar procedure is also followed by frequentist methods (i.e., in the end they also attempt to minimize some cost function which is either expressed as some integral measure or formulated as an optimization problem), even though they are completely different from a conceptual point of view. Indeed, the frequentist maximum likelihood (ML) estimator simply corresponds to the Bayesian MAP estimator with a uniform prior. Hence, although we focus on Bayesian approaches in the sequel, all the techniques mentioned here are also applicable in a frequentist context.
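To make the difference between the MMSE and MAP estimators concrete, the following minimal sketch evaluates a skewed one-dimensional posterior on a grid and computes both point estimates. The unnormalized Gamma-shaped posterior \(\theta^{k-1} e^{-\theta}\) with k = 3 is an illustrative assumption, not a model taken from this paper; for it, the posterior mean (MMSE) is k = 3 and the mode (MAP) is k − 1 = 2, so the two estimators clearly disagree.

```python
import numpy as np

# Illustrative skewed posterior (assumption): unnormalized Gamma(shape=k, scale=1) density.
k = 3.0
theta = np.linspace(1e-6, 40.0, 200_001)   # grid covering essentially all the posterior mass
dtheta = theta[1] - theta[0]
post_unnorm = theta ** (k - 1) * np.exp(-theta)

# Normalize numerically (Riemann sum) to obtain the posterior PDF on the grid.
post = post_unnorm / (post_unnorm.sum() * dtheta)

# MMSE estimator: posterior mean, i.e., the integral of theta times the posterior.
theta_mmse = np.sum(theta * post) * dtheta
# MAP estimator: location of the highest peak of the posterior.
theta_map = theta[np.argmax(post)]

print(f"MMSE = {theta_mmse:.3f}, MAP = {theta_map:.3f}")  # approximately 3 and 2
```

Grid evaluation is only viable here because the parameter is one-dimensional; the rest of the paper deals with the many cases where it is not.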

Unfortunately, obtaining closed-form expressions for any of these estimators is usually impossible in real-world problems. This issue can be circumvented by using approximate estimators (e.g., heuristic estimators in the frequentist context or variational Bayesian approximations) or by restricting the class of models considered (e.g., in the case of Bayesian inference by using only conjugate priors). However, with the increase in computational power and the extensive development of Monte Carlo methods, Bayesian inference has been freed from the use of a restricted class of models and much more complicated problems can now be tackled in a realistic way. In the following section, we briefly review the history of Monte Carlo methods, pointing out the key developments and some of the most relevant algorithms that will be described in detail throughout the paper. Note that, apart from MC methods, there are several alternative techniques for approximating integrals in statistical inference problems [12]: asymptotic methods, multiple quadrature approaches, and subregion adaptive integration. However, these schemes become impractical in high-dimensional problems, and MC algorithms are then the only feasible approach in many practical applications. Another related topic which is not covered here due to space constraints is variational Bayesian inference. However, the interested reader can check some of the existing tutorials (and references therein) for an overview of these methods [13, 14].

1.2 Framework: Monte Carlo methods

The so-called Monte Carlo (MC) methods encompass a large class of stochastic simulation techniques that can be used to solve many optimization and inference problems in science and engineering. Essentially, MC methods proceed by obtaining a large pool of potential values of the desired parameters and replacing the integrals by sample averages. In practice, these parameter values can be obtained either by physically replicating the desired experiment or by characterizing it probabilistically and generating a set of random realizations.

The origin of MC methods can be traced back to Buffon’s experiments to compute an empirical value for the St. Petersburg game,Footnote 4 and the formulation of his famous experiment (nowadays commonly known as Buffon’s needle) to calculate the value of π [16, 17]Footnote 5. Buffon’s needle experiment became quite well known after it was mentioned by Laplace in 1812 [18], and several scientists attempted to replicate his experiment during the last quarter of the nineteenth century [19–22]Footnote 6. Meanwhile, other statisticians were experimenting with different mechanisms to generate random numbers (e.g., using cards, a roulette wheel or dice) to verify empirically, through some kind of primitive stochastic simulation, their complicated statistical procedures [26]. Another example of simulation in statistical computations occurred at the beginning of the twentieth century, when William Gosset (“Student”) published his famous papers, in which he investigated the distribution of the t-statistic and the correlation coefficient [27, 28]Footnote 7. Finally, Leonard H. C. Tippett devised a way to systematically draw random numbers for his experiments on extreme value distributions and published a list of random digits that was used by subsequent researchers [31, 32]. However, all these approaches occurred before the advent of computers and aimed only at solving some particular problem at hand, not at providing some general simulation method (except for Galton’s approach [33], which provided a generic way to draw normal random variables (RVs) for all types of applications, but failed to gain widespread acceptance).

In spite of all these early attempts to perform stochastic simulation (a.k.a. statistical sampling), the formulation of the MC method as we know it today did not happen until the construction of the first computers in the 1940sFootnote 8. Stanislaw Ulam, a Polish mathematician working at Los Alamos National Laboratory, devised the MC method while convalescing from an illness in 1946 [36, 38]. He was playing solitaire and trying to calculate the probability of success (a difficult combinatorial problem) when he realized that an easier way to accomplish that task (at least in an approximate way) would be to play a certain number of hands and compute the empirical success probability. On his return to Los Alamos, he learnt of the new computers that were being built from his close friend John von Neumann, a consultant both at Los Alamos and the Ballistics Research Laboratory (where the first computer, the ENIAC, was being developed), and discussed the possibility of developing a computer-based implementation of his idea to solve difficult problems in statistical physics. Von Neumann immediately recognized the relevance of Ulam’s idea and sketched an approach to solve neutron diffusion/multiplication problems through computer-based statistical sampling in a 1947 letter to Robert Richtmyer (head of the Theoretical Division at Los Alamos) [38]. The method was then successfully tested on nine neutron transport problems using ENIAC, and Nicholas Metropolis coined the name “Monte Carlo”, inspired by an uncle of Stan Ulam who borrowed money from relatives because “he just had to go to Monte Carlo” [36, 37]. The seminal paper on MC was then published in 1949 [39], more powerful computers were developed (like the MANIAC in Los Alamos [35]), and many physicists started using computer-based MC methods to obtain approximate solutions to their problems [40].
Since MC methods require an extensive supply of random numbers, the development of the essential random number generators also started during those years. For instance, von Neumann described the rejection sampling (RS) method in a 1947 letter to Ulam [38] (although it was not published until 1951 [41]) and Lehmer introduced linear congruential random number generators in 1951 [42].
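Lehmer's idea leads to the family of linear congruential generators, \(x_{n+1} = (a x_{n} + c) \bmod m\). The sketch below uses the multiplicative "minimal standard" constants a = 16807, c = 0, m = 2^31 − 1 popularized by Park and Miller; these are an illustrative choice and not necessarily the constants Lehmer used in 1951. Generators of this kind are shown only to illustrate the principle; modern MC code should rely on better-tested generators.

```python
def lcg(seed, n, a=16807, c=0, m=2**31 - 1):
    """Return n pseudo-random integers from x_{k+1} = (a * x_k + c) mod m."""
    x = seed
    out = []
    for _ in range(n):
        x = (a * x + c) % m
        out.append(x)
    return out

# Dividing by the modulus maps the integers to numbers in (0, 1),
# which can then feed an MC method (e.g., as uniform random numbers).
uniforms = [x / (2**31 - 1) for x in lcg(1, 5)]

print(lcg(1, 3))  # [16807, 282475249, 1622650073]
```

With c = 0 and a prime modulus, a nonzero seed never produces a zero state, so the resulting uniforms stay strictly inside (0, 1).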

The next milestone in statistical sampling was the development of the Metropolis-Hastings (MH) algorithm. The MH algorithm was initially devised by Nicholas Metropolis et al. in 1953 as a general method to speed up the computation of the properties of substances composed of interacting individual molecules [43]. The idea is rather simple: random uniformly distributed moves of particles around their current position were proposed; if the global energy of the system decreased, these moves were always accepted; otherwise, they were accepted only with some non-null probability that depended on the energy increase (the larger the increase, the less likely the move was to be accepted). Rejected moves were also used to compute the desired averages (i.e., the current state was counted again). Metropolis et al. proved that the method was ergodic and that samples were drawn from the desired distribution. This approach can be seen as a Markov chain with an RS step at its core to ensure that the chain has the desired invariant probability density function (PDF), and thus Markov chain Monte Carlo (MCMC) methods were born. A symmetric proposal density was considered in [43]. In 1970, Hastings showed that non-symmetric proposal densities could also be used [44], thus allowing for much more flexibility in the method, and proposed a generic acceptance probability that guaranteed the ergodicity of the chain. In the meantime, a different acceptance probability rule had been proposed by Barker in 1965 [45], and it remained to be seen which rule was better. This issue was settled in 1973 by Peskun (a Ph.D. student of Hastings), who proved that the Hastings acceptance rule was optimal [46]. The MH algorithm was extensively used by the physics community from the beginning, but few statisticians or engineers were aware of it until the 1990s [47].
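The original Metropolis scheme described above (a symmetric uniform move around the current state, acceptance with probability min(1, target ratio), and repetition of the current state after a rejection) can be sketched as follows. The standard Gaussian target, the step size, and the burn-in length are illustrative assumptions; in the physics setting the target would be proportional to exp(−energy/temperature), so an energy decrease corresponds to a target increase. Working with log-densities avoids numerical underflow.

```python
import numpy as np

def log_target(theta):
    # Illustrative unnormalized target (assumption): standard Gaussian, log pi = -theta^2 / 2
    return -0.5 * theta ** 2

def random_walk_metropolis(log_pi, theta0, n_iter, step, rng):
    chain = np.empty(n_iter)
    theta, log_p = theta0, log_pi(theta0)
    n_accept = 0
    for t in range(n_iter):
        # Symmetric proposal: uniformly distributed move around the current state
        prop = theta + rng.uniform(-step, step)
        log_p_prop = log_pi(prop)
        # Accept with probability min(1, pi(prop) / pi(theta)); always accepted if pi increases
        if np.log(rng.uniform()) < log_p_prop - log_p:
            theta, log_p = prop, log_p_prop
            n_accept += 1
        # A rejected move repeats the current state, which still enters the averages
        chain[t] = theta
    return chain, n_accept / n_iter

rng = np.random.default_rng(0)
chain, acc_rate = random_walk_metropolis(log_target, 0.0, 50_000, step=2.0, rng=rng)
burn_in = 5_000  # discard the initial transient of the chain
print(f"mean ~ {chain[burn_in:].mean():.2f}, var ~ {chain[burn_in:].var():.2f}, "
      f"acceptance = {acc_rate:.2f}")
```

Because the proposal is symmetric, the proposal densities cancel in the acceptance ratio; Hastings' generalization adds the proposal ratio for non-symmetric proposals.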

Another crucial event in the history of MC methods was the introduction, by Stuart Geman and Donald Geman in 1984, of a novel MCMC algorithm, the Gibbs sampler, for the Bayesian restoration of images [48]. The Gibbs sampler became very popular soon afterwards, thanks to the classical 1990 paper of Gelfand and Smith [49], who gave examples of how the Gibbs sampler could be applied in Bayesian inference. Andrew Gelman showed in 1992 that the Gibbs sampler was a particular case of the MH algorithm [50], thus causing a renewed interest in the MH algorithm by statisticians. Then, Tierney wrote an influential paper on the history and theory of the MH algorithm in 1994 [51], where he showed how it could be used to deal with non-standard distributions in Bayesian inference. Simple explanations of the Gibbs sampler and the MH algorithm also appeared in the 1990s [52, 53], and those two methods started being applied to all sorts of problems during the following years: medicine [54], econometrics [55], biostatistics [56], phylogenetic inference [57], etc. Indeed, the MH algorithm has become so popular since its re-discovery in the early 1990s that it was named one of the top 10 algorithms of the 20th century by the IEEE Computing in Science & Engineering Magazine [58].

The first signal processing applications of MCMC followed soon after Geman and Geman’s publication of the Gibbs sampler (indeed, their original application involved a signal processing problem: the denoising of images). In the 1990s, both the MH algorithm and the Gibbs sampler were applied to several signal processing problems: blind identification, deconvolution, and equalization [59–63]; denoising and restoration of missing samples in digital audio recordings [5, 64–66]; reconstruction of the images obtained in computed tomography [67, 68]; parameter estimation of time-varying autoregressive (AR) models [69, 70]; etc. Then, Fitzgerald published the first tutorial on MCMC methods for signal processing applications in 2001 [71], and the first special issue on MC methods for statistical signal processing (edited by Petar Djuric and Simon Godsill) appeared in 2002 [72]. During these years, tutorial papers on the related areas of signal processing for wireless communications and machine learning also appeared [73, 74], as well as another review paper on MC methods for statistical signal processing [75].

The second large family of Monte Carlo methods is the so-called importance sampling (IS) and its adaptive versions (AIS). Unlike MCMC techniques, where candidate samples can be either accepted or discarded, IS methods employ all the generated candidates, assigning them a weight according to their “quality”. IS was first used in statistical physics in order to estimate the probability that nuclear particles penetrate shields [76]. During the following decades, IS was extensively used as a variance reduction technique (especially for rare event simulation) in a large variety of applications: operations research [77], simulation of stochastic processes [78], other problems in statistical physics [79, 80], digital communications [81, 82], computer reliability [83], inventory systems [84], etc. In the 1970s and 1980s, several authors also applied the IS principle in Bayesian inference problems when direct sampling from the posterior distribution was either impossible or impractical [85–87]. The limitations of the IS approach were also recognized at this time: the performance of IS-based estimators critically depends on the choice of the proposal, with good proposals leading to a substantial decrease in variance and bad proposals resulting in a very poor performance (with a potentially infinite variance from a theoretical point of view). In order to solve these issues, the multiple IS (MIS) approach and alternative weighting schemes (like the so-called deterministic mixture (DM)) were proposed in the 1990s [88–91]. During these years, sequential importance sampling (SIS) methods (a.k.a. particle filters) were also developed as an alternative to the Kalman filter for the estimation of dynamic parameters [92, 93]. These methods are also based on the IS methodology, with weights that are sequentially updated as new observations become available.
See the companion tutorial in this special issue for a detailed review of sequential Monte Carlo (SMC) methods, which essentially correspond to SIS with resampling [93].
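The IS principle described above can be sketched as follows: every candidate drawn from the proposal is kept and weighted by the ratio between the (possibly unnormalized) target and the proposal, and the weights are then self-normalized so that the unknown normalizing constant cancels out. The Gaussian target, the wider Gaussian proposal, and the sample size are illustrative assumptions. The last estimate uses the common approximation \(1/\sum_m \bar{w}_m^2\) of the effective sample size, which is only a rough quality indicator.

```python
import numpy as np

rng = np.random.default_rng(1)

def log_target(theta):
    # Illustrative unnormalized target (assumption): N(2, 0.5^2) with its constant dropped
    return -0.5 * ((theta - 2.0) / 0.5) ** 2

# Proposal q = N(0, 3^2): easy to sample and wider than the target, so the weights stay bounded
mu_q, sigma_q = 0.0, 3.0
M = 100_000
samples = rng.normal(mu_q, sigma_q, size=M)
log_q = -0.5 * ((samples - mu_q) / sigma_q) ** 2 - np.log(sigma_q * np.sqrt(2 * np.pi))

# Unnormalized importance weights w = pi(theta) / q(theta), computed in the log domain
log_w = log_target(samples) - log_q
w = np.exp(log_w - log_w.max())      # subtract the maximum for numerical stability
w_bar = w / w.sum()                  # self-normalized weights

mean_est = np.sum(w_bar * samples)   # estimate of E[theta], whose exact value is 2
ess = 1.0 / np.sum(w_bar ** 2)       # rough effective sample size
print(f"E[theta] ~ {mean_est:.3f}, ESS ~ {ess:.0f} of {M}")
```

Choosing a proposal narrower than the target would make the weight ratio unbounded in the tails, which is the kind of bad proposal that leads to the very large (or infinite) variance mentioned above.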

However, IS techniques did not become widely known to all computational statistics, machine learning and statistical signal processing practitioners until the 2000s. In 2001, Iba published a cross-disciplinary survey in which he grouped several algorithms where “a set of ‘walkers’ or ‘particles’ is used as a representation of a high-dimensional vector” under the generic name of population Monte Carlo algorithms [94]. Soon afterwards, Cappé et al. published their influential population Monte Carlo (PMC) paper [95], where they borrowed the name coined by Iba for their proposed AIS framework. In short, [95] showed that previously drawn samples can be used to adapt the proposal in order to reduce the variance of the desired estimators. The original PMC algorithm considered a set of Gaussian proposals with different variances and means selected from the previous population through a multinomial resampling step, where particles were selected with a probability proportional to their IS weights. This classical or standard PMC algorithm is numerically unstable and performs poorly in many practical applications, but it opened the door to other improved PMC algorithms, like the mixture PMC (M-PMC) [96] or the recent deterministic mixture PMC (DM-PMC) [97]. Furthermore, the success of PMC-based approaches renewed the interest in IS techniques for the estimation of static parameters, encouraging authors to develop other AIS methods, like the adaptive multiple importance sampling (AMIS) [98] or the adaptive population importance sampling (APIS) [99] algorithms.
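A minimal sketch of the standard PMC iteration described above, assuming a one-dimensional target and N Gaussian proposals whose variances are fixed and differ across the population, with the means of the next iteration obtained by multinomial resampling of the current samples with probabilities proportional to their IS weights. The Gaussian target, the set of scales, the population size, and the number of iterations are illustrative choices; combining the weighted samples of all iterations into one self-normalized estimate is one common option, not necessarily the exact estimator of [95].

```python
import numpy as np

rng = np.random.default_rng(2)

def log_target(theta):
    # Illustrative unnormalized target (assumption): N(2, 1) with its constant dropped
    return -0.5 * (theta - 2.0) ** 2

N, T = 200, 30                                       # population size and number of iterations
sigmas = rng.choice([0.5, 1.0, 2.0, 5.0], size=N)    # different (fixed) proposal scales
means = rng.uniform(-10.0, 10.0, size=N)             # initial proposal means

all_samples, all_logw = [], []
for t in range(T):
    # 1) Draw one sample from each Gaussian proposal N(means[i], sigmas[i]^2)
    theta = rng.normal(means, sigmas)
    log_q = -0.5 * ((theta - means) / sigmas) ** 2 - np.log(sigmas * np.sqrt(2 * np.pi))
    # 2) Standard IS weights: target over the proposal that generated each sample
    log_w = log_target(theta) - log_q
    w_bar = np.exp(log_w - log_w.max())
    w_bar /= w_bar.sum()
    all_samples.append(theta)
    all_logw.append(log_w)
    # 3) Multinomial resampling: new means chosen with probability proportional to the weights
    means = theta[rng.choice(N, size=N, p=w_bar)]

# Combine the weighted samples of all iterations into a single self-normalized estimate
samples = np.concatenate(all_samples)
log_w = np.concatenate(all_logw)
w = np.exp(log_w - log_w.max())
mean_est = np.sum(w * samples) / np.sum(w)              # exact value: 2
second_moment = np.sum(w * samples ** 2) / np.sum(w)    # exact value: 2^2 + 1 = 5
print(f"E[theta] ~ {mean_est:.2f}, E[theta^2] ~ {second_moment:.2f}")
```

The resampling step is what makes the scheme adaptive, but it is also a source of the instability noted above: on harder (e.g., multimodal) targets the population can collapse onto a few locations.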

Finally, let us remark that many important advances have occurred in the field of Monte Carlo methods during the last 20 years: adaptive MCMC techniques that increase the acceptance rate and decrease the correlation among samples, gradient-based MCMC methods which improve the performance in high-dimensional parameter spaces, multiple candidate MCMC algorithms for a higher efficiency in sample generation, generalized sampling and weighting schemes in MIS algorithms for a reduced variance of the desired estimators, the combination of MCMC and AIS techniques in order to exploit their complementary strong points and minimize their drawbacks, etc. A detailed description of all these methods is provided in this survey. Other important topics that are also briefly covered here are the following: the pseudo-marginal MCMC framework [100], approximate Bayesian computation (ABC) [101, 102], the application of Monte Carlo algorithms in a big data context [103], noisy MCMC methods, and approximated likelihood algorithms.

1.3 Related articles, books, and software packages

The literature on MC methods is rather vast, with many technical reports, journal papers, conference articles, books, and book chapters that cover different aspects of the many existing MC algorithms. In this section, we provide a brief summary (which intends to be illustrative rather than exhaustive) of the articles and books that provide a tutorial introduction or an overview of several aspects of MC methods and closely related topics. At the end of the section we also describe some of the most relevant software packages which are freely available to implement several important MC algorithms. Note that these articles, books, and/or software packages often concentrate on some particular class of MC algorithms, and the user has to select the most appropriate family of methods and software for the specific problem. In particular, note that different MCMC methods have different convergence properties, and therefore we encourage users to be careful and select the most reliable algorithm for their problem.

On the one hand, many excellent books are entirely devoted to the general theory and practice of MC methods [104–109]. However, none of these books is specifically written with signal processing practitioners in mind and they are 5–14 years old, thus not covering several important recently developed algorithms. On the other hand, several books are also devoted to specific classes of MC methods. For instance, [110] and [111] focus on particle filters for tracking applications and random set models respectively, [112] details several different state-space processors (including those based on particle filters), [113] is entirely devoted to the theoretical and practical aspects of SMC methods, and [114] covers Bayesian filtering and smoothing techniques from Kalman to particle filters. Finally, several books address the related topic of random variable generation [115–119], which is an essential issue for MC algorithms, and some of these books also contain one or more chapters on MC methods (e.g., Chapter 7 of [118, 119]).

There are also many other journal papers and conference articles that provide tutorial descriptions of MC methods, but they are either more than 10 years old, differ in scope from the present paper, or cover only some specific class of MC algorithms. The first tutorial on MC methods for signal processing practitioners (as far as we know), covering classical MC techniques (e.g., the MH algorithm, the Gibbs sampler, and reversible jump MCMC) for parameter estimation and model selection, appeared in 2001 [71]. Similar tutorials for wireless communications [73], including also SIS and SMC schemes, and machine learning [74], where simulated annealing and the MC-EM algorithm are described, shortly followed. Then, another tutorial on MC methods for signal processing was published in 2004 and focused on recent advances in MCMC algorithms and particle filters [120]. More recently, Green et al. published a tutorial on Bayesian computation that partially overlaps with the current survey (e.g., it includes MALA, the HMC algorithm, and particle MCMC) [121]. A survey specifically focused on different Multiple Try MCMC methods can be found in [122], whereas Robert et al. [123] have recently published on arXiv another overview of algorithms to accelerate MCMC that briefly discusses several methods included in this paper (like MTM, HMC, or adaptive MCMC). Several surveys that concentrate exclusively on importance sampling methods have also been published recently [124–126].

Finally, note that many toolboxes and specific software implementations (in Matlab, Python, R, and other programming languages) of the different algorithms described in this survey are freely available online. Due to their importance, let us mention three of the main existing environments for MC computation: BUGS, JAGS, and StanFootnote 9. On the one hand, BUGS (Bayesian inference Using Gibbs Sampling) is a software package that allows the user to specify a statistical model by simply stating the relationships between related variables [127–129]. The software includes an “expert system” that determines the appropriate MCMC scheme (based on the Gibbs sampler) for analysing the specified model. On the other hand, JAGS (Just Another Gibbs Sampler) is a program for the analysis of Bayesian hierarchical models using MCMC simulation [130]. It provides a cross-platform engine for the BUGS language, allowing users to write their own functions, distributions, and samplers. Finally, Stan is a flexible probabilistic programming language that allows users to specify their statistical models and then perform Bayesian inference using MCMC methods (NUTS and HMC), approximate Bayesian inference via variational inference, or (penalized) ML estimation [131, 132]. Stan has Python and R interfaces, as well as wrapper packages for Matlab, Julia, Stata, and Mathematica.

1.4 Acronyms, notation, and organization

Table 1 provides a list of the acronyms used throughout the paper, whereas Table 2 summarizes the main notation. Moreover, the following rules will be followed regarding the notation:

- Vectors and matrices will be denoted in boldface (e.g., y and C), with \(\text{vec}\{\mathbf{y}_{1}, \ldots, \mathbf{y}_{L}\}\) denoting the vectorization operation, i.e., the stacking of a set of vectors \((\mathbf{y}_{1}, \ldots, \mathbf{y}_{L})\) of dimension \(D_{\mathbf{y}} \times 1\) in order to construct a single vector \(\mathbf {y} \in \mathbb {R}^{LD_{\mathbf {y}}}\). Capital boldface symbols are used for matrices, whereas lowercase boldface symbols are used for vectors.

- The notation \(\boldsymbol{\theta}_{\neg i}\) will be used to denote a vector with the ith component removed, i.e., \(\boldsymbol {\theta }_{\neg i} = [\theta _{1}, \ldots, \theta _{i-1}, \theta _{i+1}, \ldots, \theta _{D_{\theta }}]^{\top }\).

- Capital letters will be used to denote random variables (e.g., X), while lowercase letters are used for their realizations (e.g., x).

- When required, properly normalized PDFs will be indicated by using a bar (e.g., \(\bar {\pi }\) and \(\bar {q}\)), whereas their non-negative unnormalized versions will be indicated by the same letter without the bar (e.g., π and q).

- The notation \(x \sim p(X)\) indicates that a realization x of the random variable X is drawn from the PDF p.

- We use an argument-wise notation for the different normalized and unnormalized densities used throughout the text. For instance, \(\pi(\boldsymbol{\theta})\) denotes the \(D_{\theta}\)-dimensional target, whereas \(\pi(\theta_{d}|\boldsymbol{\theta}_{\neg d})\) denotes the one-dimensional full conditional density of the dth parameter.

- The notation \(\mathbb {E}_{p}({g})\) will be used to denote the mathematical expectation of the function g w.r.t. the PDF p.

Table 1 List of acronyms used

Table 2 Summary of the main notation used throughout the paper

Regarding the structure of the paper, let us remark that we concentrate on the use of MCMC methods for the estimation of static parameters, although the extension of some of these techniques to a dynamical setting will be occasionally discussed. This choice is motivated by two facts: the need to keep the length of the tutorial within reasonable bounds and the existence of two recent review papers on AIS methods [126, 133]. However, two sections detailing the different IS and AIS techniques, as well as the use of IS-within-MCMC, have also been included for the sake of completeness. Regarding the selection of the methods covered, we have tried to include the most relevant MC algorithms from the different families, following a chronological order from the classical (and usually simpler) MC methods to the more recent and sophisticated ones. Finally, note that the main focus of the paper is describing the different MC algorithms in a unified way by using a consistent notation which is amenable to signal processing practitioners. However, some theoretical aspects are also briefly discussed, as well as the main advantages and limitations of each algorithm.

The rest of the paper is organized as follows. First of all, the mathematical background is provided in Section 2. The Bayesian framework for statistical inference and the basic MC algorithm are briefly reviewed here (Section 2.1), together with RS, which lies at the heart of MCMC methods (Section 2.2). Then, Section 3 describes in detail many of the most relevant MCMC algorithms for signal processing applications: the MH algorithm, the Gibbs sampler, and their combined use (Section 3.1); adaptive MCMC methods (Section 3.2); gradient-based algorithms (Section 3.3); and other advanced MCMC schemes (Section 3.4). A short discussion on MCMC convergence diagnostics (Section 3.5) is also included here. This is followed by Section 4, where IS techniques are described: standard IS vs. multiple IS (Section 4.1); adaptive IS (Section 4.2); group IS (Section 4.7); and sequential IS (Section 4.8). Some convergence results on IS and AIS (Section 4.3) are also included here, as well as a short discussion on the variance of the IS estimator and the choice of the optimal proposal (Section 4.4), a note on the estimation of the effective sample size (Section 4.5), and a description of proper weighting schemes (Section 4.6). This is followed by the description of different schemes for the use of IS-within-MCMC in Section 5: multiple try approaches for static (Section 5.1) and dynamic parameters (Section 5.2); pseudo-marginal MCMC methods (Section 5.3); noisy MCMC algorithms (Section 5.4); and approximated likelihood methods (Section 5.4.2). Finally, the performance of many of the described methods is demonstrated through several numerical simulations in Section 6: two simple examples for MCMC and IS methods (Sections 6.1 and 6.2); the estimation of the parameters of a chaotic system (Section 6.3); a localization problem in wireless sensor networks (Section 6.4); and a spectral estimation application (Section 6.5).
A discussion of the reviewed methods concludes the paper in Section 7.

2 Mathematical formulation

2.1 Bayesian inference and the Monte Carlo method

Let us assume that we have a dataset, \(\mathbf {y}=\text {vec}\{\mathbf {y}_{1}, \ldots, \mathbf {y}_{L}\} \in \mathbb {R}^{LD_{\mathrm {y}}}\) with \(\mathbf {y}_{i} \in \mathbb {R}^{D_{\mathrm {y}}}\), which depends on some static parameter vector, \(\boldsymbol {\theta }=[\theta _{1}, \ldots, \theta _{D_{\theta }}]^{\top } \in \Theta \subseteq \mathbb {R}^{D_{\theta }}\). From a Bayesian point of view, all the information required to solve any task related to θ (e.g., inference or optimization problems) is contained in the posterior or target PDF, \(\bar {\pi }(\boldsymbol {\theta }|\mathbf {y})\). Using Bayes' rule, this posterior can be expressed as

\[ \bar{\pi}(\boldsymbol{\theta}|\mathbf{y}) = \frac{\ell(\mathbf{y}|\boldsymbol{\theta})\, p_{0}(\boldsymbol{\theta})}{Z(\mathbf{y})} = \frac{\pi(\boldsymbol{\theta}|\mathbf{y})}{Z(\mathbf{y})}, \tag{1} \]
where ℓ(y|θ) is the likelihood, which depends on the statistical input-output model assumed; p0(θ) is the prior PDF, which summarizes all the information available about θ external to the observation of the data; Z(y) is the marginal likelihood (a.k.a. model evidence or partition function in some contexts), a normalizing term which does not depend on θ; and π(θ|y) is the target function, a non-negative function (i.e., π(θ|y)≥0 for all \(\boldsymbol {\theta } \in \Theta \subseteq \mathbb {R}^{D_{\theta }}\) and \(\mathbf {y} \in \mathbb {R}^{LD_{\mathrm {y}}}\)) such that \(\int _{\Theta }{\pi (\boldsymbol {\theta }|\mathbf {y})\ d\boldsymbol {\theta }} = Z(\mathbf {y})\) with Z(y)≠1 in general.

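Now, let us assume that we want to compute the following integral,

\[ I = \mathbb{E}_{\bar{\pi}}\big(g(\boldsymbol{\theta})\big) = \int_{\Theta} g(\boldsymbol{\theta})\, \bar{\pi}(\boldsymbol{\theta}|\mathbf{y})\, d\boldsymbol{\theta}, \tag{2} \]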
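where g(θ) can be any integrable function w.r.t. \(\bar {\pi }(\boldsymbol {\theta }|\mathbf {y})\). For instance, when g(θ)=θ this integral becomes the well-known minimum mean squared error (MMSE) estimator of the parameter θ [2–4],

\[ \widehat{\boldsymbol{\theta}}_{\text{MMSE}} = \mathbb{E}_{\bar{\pi}}(\boldsymbol{\theta}) = \int_{\Theta} \boldsymbol{\theta}\, \bar{\pi}(\boldsymbol{\theta}|\mathbf{y})\, d\boldsymbol{\theta}, \tag{3} \]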
which is widely used in many statistical signal processing applications and corresponds to the conditional expectation of θ w.r.t. the posterior PDF.

Unfortunately, obtaining an analytical solution for these integrals is infeasible in many practical problems of interest. In these cases, an approximate solution of (2) can be obtained through the Monte Carlo (MC) method shown in Algorithm 1. Essentially, the MC method simply consists of obtaining a set of independent and identically distributed (IID) samples of the parameter vector to be inferred and using them to approximate the desired integral by means of an unweighted sample average. These M samples, \(\boldsymbol{\theta}^{(m)}\), can be obtained either by sampling directly from the target PDF (i.e., the posterior \(\bar {\pi }(\boldsymbol {\theta }|\mathbf {y})\)), as shown in Algorithm 1, or by replicating the physical procedure where the desired parameters are involved. Note that the subindex M in \(\widehat {I}_{M}\) denotes the number of samples involved in the estimation.
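A minimal sketch of the basic MC estimator described above, \(\widehat{I}_{M} = \frac{1}{M}\sum_{m=1}^{M} g(\boldsymbol{\theta}^{(m)})\), assuming that direct sampling from the posterior is possible. Purely for illustration, the posterior is taken to be a Gaussian \(\mathcal{N}(1, 2^2)\) and g(θ) = θ², so that the exact value I = 1² + 2² = 5 is available for comparison; the function and variable names are hypothetical.

```python
import numpy as np

def mc_estimate(g, sampler, M, rng):
    """Basic MC: draw M IID samples from the target and average g over them."""
    theta = sampler(M, rng)          # theta^(1), ..., theta^(M) drawn IID from the posterior
    return np.mean(g(theta))         # unweighted sample average

# Illustrative posterior N(1, 2^2) from which direct sampling is possible (assumption)
mu, sigma = 1.0, 2.0
sampler = lambda M, rng: rng.normal(mu, sigma, size=M)
g = lambda theta: theta ** 2         # exact integral: mu^2 + sigma^2 = 5

rng = np.random.default_rng(3)
for M in (100, 10_000, 1_000_000):
    print(M, mc_estimate(g, sampler, M, rng))
```

As M grows, the printed estimates approach 5, in line with the consistency and CLT results discussed next.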

The MC estimate of I provided by Eq. (4) is unbiased, i.e., \(\mathbb {E}_{\bar {\pi }}({\widehat {I}_{M}}) = I\). Moreover, by the strong law of large numbers, \(\widehat {I}_{M} \to I\) almost surely (a.s.) as M→∞ [104]. Furthermore, if g(θ) is square integrable w.r.t. \(\bar {\pi }(\boldsymbol {\theta }|\mathbf {y})\), then we can use the central limit theorem (CLT) to state the following result [104]:

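$$ \frac{\widehat{I}_{M} - I}{\sqrt{V_{M}}} \overset{d}{\to} \boldsymbol{\mathcal{N}}(0,1) \quad \text{as } M \to \infty, $$(5)

where \(\overset {d}{\to }\) denotes convergence in distribution, and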

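$$ V_{M} = \frac{1}{M} \int_{\Theta} \left(g(\boldsymbol{\theta}) - I\right)^{2} \bar{\pi}(\boldsymbol{\theta}|\mathbf{y})\ d\boldsymbol{\theta}. $$(6)

Informally, (5) states that \(\widehat {I}_{M}\) is approximately distributed as \(\boldsymbol {\mathcal {N}}(I,V_{M})\) for large M, so the error of the MC estimate decreases as \(O(1/\sqrt{M})\).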
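The CLT is what makes MC error bars possible: replacing V_M (the variance appearing in the CLT) by a plug-in estimate, the sample variance of g(θ(m)) divided by M, yields an approximate 95% interval. The sketch below checks the empirical coverage of such intervals on the same hypothetical N(2, 0.5²) target used above; `mc_with_error` is an illustrative helper.

```python
import numpy as np

def mc_with_error(g_values):
    """MC estimate of I and a plug-in estimate of the CLT variance V_M."""
    M = g_values.size
    I_hat = g_values.mean()
    V_hat = g_values.var(ddof=1) / M      # sample variance of g(theta) divided by M
    return I_hat, V_hat

# Hypothetical check: target N(2, 0.5^2), g(theta) = theta, so I = 2.
rng = np.random.default_rng(1)
true_I, M, n_runs, covered = 2.0, 500, 2000, 0
for _ in range(n_runs):
    I_hat, V_hat = mc_with_error(rng.normal(2.0, 0.5, size=M))
    covered += int(abs(I_hat - true_I) <= 1.96 * np.sqrt(V_hat))  # 95% CLT interval
coverage = covered / n_runs
print(coverage)  # should be close to 0.95
```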

Unfortunately, Algorithm 1 cannot be applied in many practical problems, because we cannot draw samples directly from \(\bar {\pi }(\boldsymbol {\theta }|\mathbf {y})\). In these cases, if we can perform point-wise evaluations of the target function, π(θ|y)=ℓ(y|θ)p0(θ), we can apply other types of Monte Carlo algorithms: rejection sampling (RS) schemes, Markov chain Monte Carlo (MCMC) techniques, and importance sampling (IS) methods. The last two large classes of algorithms, MCMC and IS, are the core of this paper and will be described in detail in the rest of this work. Before that, the following section briefly recalls the basics of the RS approach, which is one of the key ingredients of MCMC methods.

2.2 Rejection sampling (RS)

The RS method is a classical Monte Carlo technique for universal sampling that can be used to generate samples from virtually any target density \(\bar {\pi }(\boldsymbol {\theta })\) by drawing from a simpler proposal density \(\bar {q}(\boldsymbol {\theta })\)Footnote 10. Each candidate sample is either accepted or rejected by applying an adequate test to the ratio of the two PDFs, and it can be easily proved that the accepted samples are distributed exactly according to the target density [115]. The RS algorithm was originally proposed by John von Neumann in a 1947 letter to Stan Ulam [38], but it was not published until 1951 [41]. In its original formulation, von Neumann considered only a uniform proposal PDF, but the algorithm was later generalized to allow drawing samples from any proposal density from which sampling is straightforward. In the standard RS algorithm [41, 115], we first draw a sample from the proposal PDF, \(\boldsymbol {\theta }^{\prime } \sim \bar {q}(\boldsymbol {\theta })\), and then accept it with probability

$$ p_{A}(\boldsymbol{\theta}^{\prime}) = \frac{\pi(\boldsymbol{\theta}^{\prime})}{Cq(\boldsymbol{\theta}^{\prime})} \le 1, $$(7)

where C is a constant such that Cq(θ) is an envelope function for π(θ), i.e., Cq(θ)≥π(θ) for all θ∈Θ. We can summarize this procedure in an equivalent way: at the tth iteration, draw a sample \(\boldsymbol {\theta }^{(t)} \sim \bar {q}(\boldsymbol {\theta })\) and \(u \sim \boldsymbol {\mathcal {U}}([0,1])\); if \(u \leq \frac {\pi (\boldsymbol {\theta }^{(t)})}{Cq(\boldsymbol {\theta }^{(t)})}\), accept θ(t); otherwise, reject it. Stop when the desired number of samples has been drawn from \(\bar {\pi }(\boldsymbol {\theta })\). Algorithm 2 summarizes the generation of M samples from the target PDF using the standard RS algorithm.
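A minimal sketch of the standard RS loop, assuming a hypothetical unnormalized Beta(2,5) target π(θ)=θ(1−θ)⁴ on [0,1] and von Neumann's uniform proposal (q(θ)=1). Since max π = 0.2·0.8⁴ = 0.08192, the constant C=0.082 gives a valid envelope. For this toy target Z_π=1/30 and Z_q=1, so the acceptance rate should be close to Z_π/(CZ_q) ≈ 0.41.

```python
import numpy as np

def target(theta):
    """Hypothetical unnormalized target: Beta(2, 5) kernel theta (1 - theta)^4 on [0, 1]."""
    return theta * (1.0 - theta) ** 4

C = 0.082   # envelope constant: max target = 0.2 * 0.8**4 = 0.08192 <= C * q(theta), q = 1

def rejection_sampler(M, rng):
    """Standard RS with a uniform proposal (von Neumann's original choice)."""
    samples, n_iter = [], 0
    while len(samples) < M:
        theta = rng.uniform()                 # theta ~ q_bar = U([0, 1))
        u = rng.uniform()                     # u ~ U([0, 1))
        n_iter += 1
        if u <= target(theta) / (C * 1.0):    # accept w.p. pi(theta) / (C q(theta))
            samples.append(theta)
    return np.array(samples), n_iter

rng = np.random.default_rng(2)
samples, n_iter = rejection_sampler(20_000, rng)
print(samples.mean(), 20_000 / n_iter)  # mean near 2/7; acceptance near (1/30) / 0.082
```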

The RS algorithm is a simple MC method for approximating the integral in Eq. (2) that can be universally applied as long as the upper bound C can be found. However, it has several important drawbacks that hinder its practical application:

1. For complicated targets, finding a bound C such that Cq(θ)≥π(θ) for all θ∈Θ can be difficult, especially for high-dimensional parameter spaces.

2. Even if this bound can be found, the RS algorithm can be very inefficient if the ratio \(\frac {\pi (\boldsymbol {\theta })}{Cq(\boldsymbol {\theta })}\) is small for a large portion of the parameter space. Indeed, the acceptance probability of the RS algorithm is given by

$$ P_{A} = \int_{\Theta}{\frac{\pi(\boldsymbol{\theta})}{Cq(\boldsymbol{\theta})} \bar{q}(\boldsymbol{\theta})\ d\boldsymbol{\theta}} = \frac{Z_{\pi}}{CZ_{q}}, $$(8)

where \(Z_{\pi } = \int _{\Theta }{\pi (\boldsymbol {\theta })\ d\boldsymbol {\theta }}\) and \(Z_{q} = \int _{\Theta }{q(\boldsymbol {\theta })\ d\boldsymbol {\theta }}\). Depending on the target and the proposal selected, PA can be very low (this happens when CZq≫Zπ), thus rendering the RS algorithm useless in practice. For this reason, many RS approaches have been specifically designed for drawing efficiently from a specific target distribution [134, 135]. For example, efficient random number generators based on RS schemes can be found for the Gamma, Beta, and Nakagami distributions [136–140].

3. The number of iterations required to generate M samples, T, is a random variable with expected value \(\mathbb {E}(T) = \frac {M}{P_{A}}\), with PA given by (8). Hence, the exact time required to generate M valid samples cannot be set a priori, and this can be a serious problem in many applications.
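To make drawbacks 1 to 3 concrete, the sketch below uses a hypothetical Gaussian example where everything is available in closed form: target π(θ)=exp(−‖θ‖²/2) and proposal q(θ)=exp(−‖θ‖²/(2σ²)) in D dimensions with σ>1, so that C=1 is a valid envelope. Then Z_π/(CZ_q)=σ^(−D), i.e., the acceptance probability of Eq. (8) decays exponentially with the dimension and E(T)=M/P_A explodes. The helper `rs_acceptance_gaussian` is illustrative.

```python
import numpy as np

def rs_acceptance_gaussian(D, sigma, n_trials, rng):
    """Empirical RS acceptance rate for pi(theta) = exp(-|theta|^2 / 2) with the
    proposal q(theta) = exp(-|theta|^2 / (2 sigma^2)), sigma > 1, and C = 1."""
    theta = rng.normal(0.0, sigma, size=(n_trials, D))          # theta ~ q_bar
    ratio = np.exp(-0.5 * np.sum(theta**2, axis=1) * (1.0 - 1.0 / sigma**2))
    return float(np.mean(rng.uniform(size=n_trials) <= ratio))  # u <= pi / (C q)

sigma, M = 1.5, 1000
rng = np.random.default_rng(3)
for D in (1, 2, 5, 10, 20):
    P_A = sigma ** (-D)          # Eq. (8): Z_pi / (C Z_q) = (1 / sigma)^D here
    emp = rs_acceptance_gaussian(D, sigma, 200_000, rng)
    print(f"D={D:2d}  P_A={P_A:.2e}  empirical={emp:.2e}  E(T)={M / P_A:.3g}")
```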

One way to tackle some of these difficulties is to construct the proposal q(θ) adaptively, using one of the so-called adaptive RS (ARS) methods. The ARS algorithm was originally proposed by Gilks and Wild in 1992 [141], and several generalized ARS algorithms have been proposed since then [142–150]. However, the need to have Cq(θ)≥π(θ) for all θ∈Θ and the difficulty of constructing the adaptive proposals in high-dimensional parameter spaces limit the applicability of those generalized ARS algorithms [119, 151], rendering MCMC and IS approaches more efficient in general, and thus preferable for practical applications. For further information see Chapters 3 and 4 in [119].

3 Markov chain Monte Carlo (MCMC)

According to Definition 7.1 of [104], an MCMC method is any method producing an ergodic Markov chain whose stationary density is the desired target PDF, \(\bar {\pi }({\boldsymbol {\theta }})\). In the following, we detail some of the most relevant MCMC algorithms, starting from the basic building blocks (the MH algorithm and the Gibbs sampler) in Section 3.1, and ending up with several advanced adaptive (Section 3.2), gradient-based (Section 3.3), and other advanced MCMC schemes (Section 3.4). Note that we focus on describing the different algorithms rather than on their theoretical properties, although a brief discussion on the validity of the MH algorithm (due to its importance as the basis of most MCMC algorithms) is provided in Section 3.1.2.

3.1 MCMC basic building blocks: Metropolis-Hastings and Gibbs samplers

3.1.1 Metropolis-Hastings (MH) algorithm

As mentioned in the introduction, the MH algorithm was initially devised by Nicholas Metropolis et al. in 1953 as a general method to speed up the computation of the properties of substances composed of interacting individual molecules [43]. In a two-dimensional square with N particles, studying these properties requires computing 2N-dimensional integrals, an impossible task (both analytically and numerically) for the large values of N required in practice. A naive MC simulation would consist of drawing particle configurations uniformly at random and assigning them weights proportional to \(\exp (-E/K\boldsymbol {\mathcal {T}})\), where E is the energy of the configuration, K≈1.38×10−23 J/K is Boltzmann’s constant and \(\boldsymbol {\boldsymbol {\mathcal {T}}}\) is the absolute temperature (in kelvins)Footnote 11. Instead, Metropolis et al. devised the following modified MC scheme:Footnote 12

1. Place the N particles in an initial arbitrary configuration (e.g., in a regular lattice) within the square.

2. At the tth iteration (t=1,…,T) and for i=1,…,N:Footnote 13

(a) Propose a move for the ith particle, located at \(\boldsymbol {\theta }_{i}^{(t-1)}\), according to the following rule:

$$ \boldsymbol{\theta}_{i}^{\prime} = \boldsymbol{\theta}_{i}^{(t-1)} + \kappa \boldsymbol{\xi}^{(t)}, $$(9)

where κ is a user-defined parameter that represents the maximum allowed displacement per iteration, while \(\boldsymbol {\xi }^{(t)} \sim \boldsymbol {\mathcal {U}}([-1,1) \times [-1,1))\).

(b) Compute the change in energy caused by the move, \(\Delta E_{i}^{(t)}\). If the energy is not increased (i.e., \(\Delta E_{i}^{(t)} \le 0\)), then accept the move, setting \(\boldsymbol {\theta }_{i}^{(t)} = \boldsymbol {\theta }_{i}^{\prime }\). Otherwise (i.e., if the energy is increased and thus \(\Delta E_{i}^{(t)} > 0\)), accept the move with probability \(\exp (-\Delta E_{i}^{(t)}/K\boldsymbol {\mathcal {T}})\), thus setting \(\boldsymbol {\theta }_{i}^{(t)} = \boldsymbol {\theta }_{i}^{\prime }\), or reject it with probability \(1-\exp (-\Delta E_{i}^{(t)}/K\boldsymbol {\mathcal {T}})\), thus letting \(\boldsymbol {\theta }_{i}^{(t)} = \boldsymbol {\theta }_{i}^{(t-1)}\).

3. All the different configurations, \(\boldsymbol {\theta }_{1:N}^{(t)}\) for t=1,…,T, are used to compute the desired averages.
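The scheme above can be sketched as follows. This is a minimal illustration under stated assumptions, not the original 1953 code: the energy is a hypothetical harmonic trap with non-interacting particles (so each coordinate should be N(0, K𝒯) at equilibrium), the bounding square is dropped, K𝒯 is set to 1, and each move is evaluated against the current configuration (particles already moved in the current sweep keep their new positions).

```python
import numpy as np

def metropolis_1953(energy, theta0, kappa, KT, T, rng):
    """Sweep over the N particles T times; each move theta_i + kappa * xi,
    xi ~ U([-1,1) x [-1,1)), is accepted with probability min(1, exp(-dE / KT))."""
    theta = np.array(theta0, dtype=float)        # (N, 2) particle positions
    E_cur = energy(theta)
    configs = np.empty((T,) + theta.shape)
    for t in range(T):
        for i in range(theta.shape[0]):
            prop = theta.copy()
            prop[i] += kappa * rng.uniform(-1.0, 1.0, size=2)     # Eq. (9)
            E_new = energy(prop)
            dE = E_new - E_cur
            if dE <= 0 or rng.uniform() < np.exp(-dE / KT):       # downhill always, uphill sometimes
                theta, E_cur = prop, E_new
        configs[t] = theta                       # configuration after the t-th sweep
    return configs

def harmonic_energy(theta):
    """Hypothetical energy: non-interacting particles in a harmonic trap."""
    return 0.5 * np.sum(theta**2)

rng = np.random.default_rng(4)
lattice = np.column_stack([np.linspace(-1.0, 1.0, 5), np.zeros(5)])   # regular start
configs = metropolis_1953(harmonic_energy, lattice, kappa=2.0, KT=1.0, T=4000, rng=rng)
coords = configs[500:].ravel()                   # drop burn-in, pool all coordinates
print(coords.mean(), coords.var())               # approximately 0 and KT = 1
```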

Intuitively, the MH algorithm can be seen as a generalized rejection sampler whose proposal depends on the result of the previous iteration (i.e., on θ(t−1)). Furthermore, the acceptance probability also depends on θ(t−1), and the value of θ(t−1) is re-used whenever a candidate sample θ′ is rejected. This has an undesired effect, since the drawn samples are no longer independent as in the RS algorithm, but it allows us to work with proposal densities that may lie below the target. This is possible because the underlying Markov chain has the desired target as its limiting invariant distribution (e.g., see [104] for a rigorous proof). Another useful perspective is to view the method as a thinning of a random walk in precisely the right way to ensure convergence to the correct target. Loosely speaking, the chain is thinned by discarding those candidates which correspond to moves from the current state that happen too often, and this is done with the right probability to ensure that the invariant distribution of the Markov chain is exactly the desired target. See the excellent tutorial (yet rigorous) exposition of the MH algorithm provided by Chib and Greenberg for further information about this issue [53].

In Metropolis’ algorithm, the proposal for the tth iteration and the ith particle is \(\bar {q}(\boldsymbol {\theta }_{i}|\boldsymbol {\theta }_{1:N}^{(t-1)}) = \bar {q}(\boldsymbol {\theta }_{i}|\boldsymbol {\theta }_{i}^{(t-1)}) = \boldsymbol {\mathcal {U}}([\theta _{i,1}^{(t-1)}-\kappa,\theta _{i,1}^{(t-1)}+\kappa) \times [\theta _{i,2}^{(t-1)}-\kappa,\theta _{i,2}^{(t-1)}+\kappa))\), whereas the target is \(\bar {\pi }(\boldsymbol {\theta }) \propto \exp (-E(\boldsymbol {\theta })/K\boldsymbol {\mathcal {T}})\). The acceptance probability is then given by

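$$ \alpha\left(\boldsymbol{\theta}_{i}^{\prime},\boldsymbol{\theta}_{1:N}^{(t-1)}\right) = \min\left[1, \exp\left(-\Delta E_{i}^{(t)}/K\boldsymbol{\mathcal{T}}\right)\right], $$(10)

with \(\Delta E_{i}^{(t)} = E\left (\boldsymbol {\theta }_{1}^{(t-1)}, \ldots, \boldsymbol {\theta }_{i-1}^{(t-1)}, \boldsymbol {\theta }_{i}^{\prime }, \boldsymbol {\theta }_{i+1}^{(t-1)}, \ldots, \boldsymbol {\theta }_{N}^{(t-1)}\right) - E\left (\boldsymbol {\theta }_{1}^{(t-1)}, \ldots, \boldsymbol {\theta }_{N}^{(t-1)}\right)\). This acceptance probability guarantees the ergodicity of the chain and the convergence of the algorithm to the desired target PDF [43], but it is not the only valid acceptance rule. Indeed, in 1965 Barker proposed an alternative acceptance probability for the computation of radial distribution functions in plasmas [45]:

$$ \alpha_{B}\left(\boldsymbol{\theta}_{i}^{\prime},\boldsymbol{\theta}_{1:N}^{(t-1)}\right) = \frac{1}{1 + \exp\left(\Delta E_{i}^{(t)}/K\boldsymbol{\mathcal{T}}\right)}. $$(11)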

Soon afterwards, Hastings generalized these two acceptance probabilities, allowing for non-symmetric proposals (unlike the proposals considered by Metropolis and Barker, which were both symmetric) [44]. Using our notation, where the parameters to be estimated are denoted as θ, the two acceptance rules (αM and αB denote the generalizations of Metropolis’ and Barker’s acceptance rules, respectively) become:

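$$ \alpha_{M}(\boldsymbol{\theta}^{\prime},\boldsymbol{\theta}^{(t-1)}) = \min\left[1,\frac{\pi(\boldsymbol{\theta}^{\prime})\, q(\boldsymbol{\theta}^{(t-1)}|\boldsymbol{\theta}^{\prime})}{\pi(\boldsymbol{\theta}^{(t-1)})\, q(\boldsymbol{\theta}^{\prime}|\boldsymbol{\theta}^{(t-1)})}\right], $$(12a)

$$ \alpha_{B}(\boldsymbol{\theta}^{\prime},\boldsymbol{\theta}^{(t-1)}) = \frac{\pi(\boldsymbol{\theta}^{\prime})\, q(\boldsymbol{\theta}^{(t-1)}|\boldsymbol{\theta}^{\prime})}{\pi(\boldsymbol{\theta}^{\prime})\, q(\boldsymbol{\theta}^{(t-1)}|\boldsymbol{\theta}^{\prime}) + \pi(\boldsymbol{\theta}^{(t-1)})\, q(\boldsymbol{\theta}^{\prime}|\boldsymbol{\theta}^{(t-1)})}. $$(12b)

Finally, in 1973 Peskun proved that the acceptance rule of Eq. (12a) was optimal, in the sense of minimizing the asymptotic variance of the resulting estimators [46], and this settled the structure of the algorithm used nowadays [152].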
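Both rules can be written as functions of the Hastings ratio r = π(θ′)q(θ(t−1)|θ′)/(π(θ(t−1))q(θ′|θ(t−1))): the Metropolis-Hastings rule is min(1, r) and Barker's rule is r/(1+r). The small sketch below (our own illustration) checks numerically that both satisfy α(θ′,θ)/α(θ,θ′) = r, which is what detailed balance requires, that Barker's rule reduces to the energy form of Metropolis et al.'s setting when r = exp(−ΔE/K𝒯) with K𝒯=1, and that min(1, r) ≥ r/(1+r), the off-diagonal dominance behind Peskun's result.

```python
import numpy as np

def alpha_metropolis(r):
    """Eq. (12a) as a function of the Hastings ratio r."""
    return np.minimum(1.0, r)

def alpha_barker(r):
    """Eq. (12b) as a function of the same ratio: r / (1 + r)."""
    return r / (1.0 + r)

r = np.logspace(-3, 3, 13)      # forward-move ratios; the reverse move has ratio 1 / r
for alpha in (alpha_metropolis, alpha_barker):
    # Both rules satisfy alpha(th', th) / alpha(th, th') = r (detailed balance requirement).
    assert np.allclose(alpha(r) / alpha(1.0 / r), r)

# Peskun: the Metropolis-Hastings rule never accepts less often than Barker's rule.
print(np.all(alpha_metropolis(r) >= alpha_barker(r)))   # True
```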

The MH algorithm with the acceptance rule of Eq. (12a) is summarized in Algorithm 3. The burn-in period (Tb) is the number of initial samples discarded from the empirical average of Eq. (14), which is used to compute the desired estimator, so that only samples drawn after the chain has approximately converged to its stationary distribution are used. This period can be estimated automatically (e.g., see Section 3.5 for a brief discussion on this issue and [153] for a comparative review of different techniques to assess the convergence of a Markov chain and thus determine the burn-in period) or set to some pre-defined value, and it is required by all MCMC algorithms.
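A minimal sketch in the spirit of Algorithm 3 (not a transcription of it), working with log-densities for numerical stability. As a hypothetical example it targets an unnormalized Gamma(3,1) density, log π(θ)=2 log θ − θ, using an independent Gamma(2, scale 2) proposal. Because that proposal is not symmetric, the q-ratio in Eq. (12a) is needed; its tails are heavier than the target's, so π/q stays bounded. The function and argument names are illustrative.

```python
import numpy as np

def metropolis_hastings(log_pi, sample_q, log_q, theta0, T, T_b, rng):
    """Generic MH with the acceptance rule of Eq. (12a), in log form.
    sample_q(prev, rng) draws theta' ~ q(. | prev); log_q(a, b) = log q(a | b) + const."""
    theta, lp = theta0, log_pi(theta0)
    chain, n_acc = np.empty(T), 0
    for t in range(T):
        prop = sample_q(theta, rng)
        lp_prop = log_pi(prop)
        log_ratio = lp_prop + log_q(theta, prop) - lp - log_q(prop, theta)
        if np.log(rng.uniform()) < log_ratio:      # accept with probability min(1, ratio)
            theta, lp = prop, lp_prop
            n_acc += 1
        chain[t] = theta                            # a rejection repeats the current state
    return chain[T_b:], n_acc / T                   # drop the burn-in period

# Hypothetical example: unnormalized Gamma(3, 1) target and independent Gamma(2, scale=2) proposal.
log_pi = lambda th: 2.0 * np.log(th) - th if th > 0 else -np.inf
sample_q = lambda prev, rng: rng.gamma(shape=2.0, scale=2.0)
log_q = lambda a, b: np.log(a) - a / 2.0            # ignores b: independent proposal

rng = np.random.default_rng(5)
chain, acc_rate = metropolis_hastings(log_pi, sample_q, log_q, 1.0, 50_000, 1_000, rng)
print(chain.mean(), chain.var(), acc_rate)          # Gamma(3, 1) has mean 3 and variance 3
```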

One of the main advantages of the MH algorithm is that it is a very generic method that admits almost any proposal and target PDF. However, although the algorithm is valid regardless of the shape and parameters of the proposal PDF (see Section 3.1.2 for a brief review of the specific conditions for the validity of the MH algorithm), the speed of convergence and the quality of the resulting estimators depend substantially on the quality of this proposal. Many choices are possible, but here we will only consider the two most widely used ones (see [53] for a brief discussion on five different families of proposals):

- Independent MH: The proposal is fixed and does not depend on the current state of the chain, i.e., \(\bar {q}(\boldsymbol {\theta }|\boldsymbol {\theta }^{(t-1)}) = \bar {q}(\boldsymbol {\theta })\). For instance, a widely used choice in this case is a multi-variate Gaussian PDF with fixed mean vector and covariance matrix: \(\bar {q}(\boldsymbol {\theta }) = \boldsymbol {\mathcal {N}}(\boldsymbol {\theta }|\boldsymbol {\mu },\mathbf {C})\). An independent proposal can be considered a global proposal, since it can generate candidate samples in the whole state space regardless of the current state of the chain. This type of proposal fosters the exploration of the state space, but its performance can be poor for complicated target PDFs (especially for high-dimensional state spaces, where it can be difficult to find a good parameterization).

- Random walk MH: The proposal is centered on the current state of the chain, i.e., the proposed candidate at the tth iteration can be expressed as θ′=θ(t−1)+𝜗′, where 𝜗′∼p(𝜗|0,C𝜗) and p(𝜗|μ,C𝜗) is an arbitrary PDF specified using a location parameter μ and a scale parameter C𝜗. For instance, using a Gaussian PDF for 𝜗 we have \(\boldsymbol {{\vartheta }^{\prime }} \sim \boldsymbol {\mathcal {N}}(\boldsymbol {\vartheta }|\mathbf {0},\mathbf {C}_{\vartheta })\), which implies that \(\boldsymbol {{\theta }^{\prime }} \sim \bar {q}(\boldsymbol {\theta }|\boldsymbol {\theta }^{(t-1)}) = \boldsymbol {\mathcal {N}}(\boldsymbol {\theta }|\boldsymbol {\theta }^{(t-1)},\mathbf {C}_{\vartheta })\). If the PDF of 𝜗 is symmetric (i.e., p(𝜗|0,C𝜗)=p(−𝜗|0,C𝜗), so that \(\bar {q}(\boldsymbol {\theta }^{\prime }|\boldsymbol {\theta }^{(t-1)}) = \bar {q}(\boldsymbol {\theta }^{(t-1)}|\boldsymbol {\theta }^{\prime })\)), then the acceptance rule becomes:

$$ \alpha(\boldsymbol{{\theta}^{\prime}},\boldsymbol{\theta}^{(t-1)}) = \min\left[1,\frac{\pi(\boldsymbol{{\theta}^{\prime}})}{\pi(\boldsymbol{\theta}^{(t-1)})}\right]. $$(15)

This is the type of proposal used by Metropolis et al. (with a uniform distribution for 𝜗) in [43], which led them to the simplified acceptance probability shown in Eq. (10). A random walk proposal can be seen as a local proposal, since it is centered on the current state of the chain. Hence, the random walk MH algorithm encourages a more local exploration around the current state.
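A minimal random walk MH sketch with a Gaussian increment, for which the proposal is symmetric and the simplified rule of Eq. (15) applies (no q-ratio is evaluated). The target, a zero-mean 2-D Gaussian with correlation 0.8, the starting point and the step covariance C𝜗 = I are hypothetical choices made only for illustration.

```python
import numpy as np

def rw_metropolis(log_pi, theta0, C_v, T, rng):
    """Random walk MH: theta' = theta + v with v ~ N(0, C_v); the proposal is
    symmetric, so the acceptance probability is min(1, pi(theta') / pi(theta))."""
    L = np.linalg.cholesky(C_v)
    theta = np.asarray(theta0, dtype=float)
    lp = log_pi(theta)
    chain, n_acc = np.empty((T, theta.size)), 0
    for t in range(T):
        prop = theta + L @ rng.standard_normal(theta.size)
        lp_prop = log_pi(prop)
        if np.log(rng.uniform()) < lp_prop - lp:    # Eq. (15) in log form
            theta, lp = prop, lp_prop
            n_acc += 1
        chain[t] = theta
    return chain, n_acc / T

# Hypothetical target: zero-mean 2-D Gaussian with correlation 0.8 (unnormalized).
Sigma = np.array([[1.0, 0.8], [0.8, 1.0]])
Sigma_inv = np.linalg.inv(Sigma)
log_pi = lambda th: -0.5 * th @ Sigma_inv @ th

rng = np.random.default_rng(6)
chain, acc_rate = rw_metropolis(log_pi, [3.0, -3.0], np.eye(2), 60_000, rng)
post = chain[2_000:]                                # discard burn-in
print(acc_rate, post.mean(axis=0), np.cov(post.T))
```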

A critical issue for the good performance of the MH algorithm is the acceptance rate (AR), which depends on the variance of the proposal PDF and should be neither too high nor too low. On the one hand, a high variance typically leads to a low AR, so the MH algorithm gets stuck because most candidate samples are rejected. On the other hand, a low variance can easily lead to a high AR, as only local moves around previously accepted samples are proposed, but it can result in the MH algorithm failing to explore the target. The seminal work of Roberts, Gelman, and Gilks proved, for the random walk MH algorithm and in a simplified setting, that the proposal’s variance should be tuned in such a way that the average acceptance rate is roughly 1/4 [154]. In [155], the same authors delved deeper into this issue, showing that the optimal acceptance rate is approximately 44% for Dθ=1 and declines to 23% when Dθ→∞. These results can be extended to different settings and other methods based on the MH algorithm, like MH-within-Gibbs or Hamiltonian MC (see Sections 3.1.4 and 3.3.2, respectively), and have led to the practical rule of thumb of choosing the variance of the proposal in order to ensure an acceptance rate between 25 and 40%. However, let us remark that several authors have proved that the optimal AR can be substantially different for other settings/methods. For instance, Bédard and Rosenthal have recently warned that the asymptotically optimal AR can be significantly different from the well-known 0.234 AR when the target’s components are not independent [156]. Indeed, in [157, 158] Bédard showed that 0.234 is the upper limit for the AR in the simplified model considered, but much lower ARs can actually be optimal.
Other authors have also found that higher acceptance rates can be optimal for other algorithms that make use of gradient information, like the simplified Langevin algorithm (SLA) or the Metropolis-adjusted Langevin algorithm (MALA) (see Section 3.3.1) [159, 160].
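The trade-off between proposal scale and AR is easy to see in one dimension. For the hypothetical case of a N(0,1) target and a Gaussian random walk with standard deviation σ, the stationary AR has the closed form (2/π)·arctan(2/σ) (our own derivation for this toy case, not a result from the cited works); σ≈2.4 gives an AR of about 0.44, in line with the 44% figure quoted above for Dθ=1. The sketch compares this formula with simulated chains.

```python
import numpy as np

def rw_acceptance_rate(sigma, T, rng):
    """Empirical acceptance rate of RW-MH on a N(0, 1) target with N(0, sigma^2) steps."""
    theta, n_acc = 0.0, 0
    for _ in range(T):
        prop = theta + sigma * rng.standard_normal()
        if np.log(rng.uniform()) < 0.5 * (theta**2 - prop**2):   # log pi(prop) - log pi(theta)
            theta = prop
            n_acc += 1
    return n_acc / T

rng = np.random.default_rng(7)
for sigma in (0.1, 0.5, 1.0, 2.4, 5.0, 10.0):
    exact = 2.0 / np.pi * np.arctan(2.0 / sigma)     # closed form for this toy 1-D case
    emp = rw_acceptance_rate(sigma, 20_000, rng)
    print(f"sigma={sigma:5.1f}  empirical={emp:.3f}  exact={exact:.3f}")
```

Small steps are almost always accepted but move slowly, while large steps are mostly rejected; both extremes mix poorly.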

Finally, let us remark that the local and global proposals, used by the random walk and independent MH algorithms, respectively, can be combined. For instance, [161] proposes using the following small world proposal:

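$$ \bar{q}(\boldsymbol{\theta}|\boldsymbol{\theta}^{(t-1)}) = (1-p)\, q_{L}(\boldsymbol{\theta}|\boldsymbol{\theta}^{(t-1)}) + p\, q_{G}(\boldsymbol{\theta}), $$(16)

where \(q_{L}(\boldsymbol {\theta }|\boldsymbol {\theta }^{(t-1)})\) is a local proposal centered around the current state of the chain, qG(θ) is a global proposal that allows for “wild” moves far away from the current state, and p is a small probability. Using this proposal leads to an MH algorithm with improved performance, especially for complicated heterogeneous spaces and multi-modal distributions, and can turn slowly mixing chains into rapidly mixing ones [161, 162].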
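A minimal sketch of MH with a small world (mixture) proposal, assuming Gaussian local and global components. Because the mixture is not symmetric, the full Hastings ratio with the mixture density is used. The bimodal target (equal-weight N(−5,1) and N(5,1)) and the settings p=0.2, s_local=1, q_G=N(0,10²) are hypothetical. Between the modes the target drops by a factor of roughly e^(−12.5), so purely local moves would rarely cross, whereas the occasional global moves let the chain visit both modes.

```python
import numpy as np

def log_normal_pdf(x, mu, s):
    return -0.5 * ((x - mu) / s) ** 2 - np.log(s) - 0.5 * np.log(2.0 * np.pi)

def small_world_mh(log_pi, theta0, s_local, mu_g, s_global, p, T, rng):
    """MH with the mixture proposal (1 - p) N(. | theta_prev, s_local^2) + p N(. | mu_g, s_global^2).
    The mixture is not symmetric, so the full Hastings ratio is used (requires 0 < p < 1)."""
    def log_q(a, b):                                    # log q(a | b) of the mixture
        return np.logaddexp(np.log1p(-p) + log_normal_pdf(a, b, s_local),
                            np.log(p) + log_normal_pdf(a, mu_g, s_global))
    theta, lp = theta0, log_pi(theta0)
    chain = np.empty(T)
    for t in range(T):
        if rng.uniform() < p:
            prop = rng.normal(mu_g, s_global)           # occasional "wild" global move
        else:
            prop = rng.normal(theta, s_local)           # usual local move
        lp_prop = log_pi(prop)
        log_ratio = lp_prop + log_q(theta, prop) - lp - log_q(prop, theta)
        if np.log(rng.uniform()) < log_ratio:
            theta, lp = prop, lp_prop
        chain[t] = theta
    return chain

# Hypothetical bimodal target: equal-weight mixture of N(-5, 1) and N(5, 1).
log_pi = lambda th: np.logaddexp(-0.5 * (th + 5.0) ** 2, -0.5 * (th - 5.0) ** 2)
rng = np.random.default_rng(8)
chain = small_world_mh(log_pi, -5.0, 1.0, 0.0, 10.0, 0.2, 60_000, rng)
frac_right = np.mean(chain[5_000:] > 0)
print(frac_right)   # close to 0.5: both modes are visited
```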

3.1.2 Validity of the Metropolis-Hastings algorithm

Let us now take a look at the conditions under which the MH algorithm (Alg. 3) produces samples from the desired target PDF. In order to analyze its output, let us first notice that the states θ(1),θ(2),… form a Markov chain with a certain transition density K(θ(t)|θ(t−1)). The key trick of the MH algorithm is that it has been constructed in such a way that the stationary PDF of the Markov chain is the target PDF:

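$$ \bar{\pi}(\boldsymbol{\theta}^{\prime}) = \int_{\Theta} K(\boldsymbol{\theta}^{\prime}|\boldsymbol{\theta})\, \bar{\pi}(\boldsymbol{\theta})\ d\boldsymbol{\theta}. $$(17)

One way to ensure the above is to impose the detailed balance condition, which demands that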

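$$ K(\boldsymbol{\theta}^{\prime}|\boldsymbol{\theta})\, \bar{\pi}(\boldsymbol{\theta}) = K(\boldsymbol{\theta}|\boldsymbol{\theta}^{\prime})\, \bar{\pi}(\boldsymbol{\theta}^{\prime}). $$(18)

Integrating both sides over θ and recalling \( \int _{\Theta } K(\boldsymbol {\theta }|\boldsymbol {{\theta }^{\prime }}) d\boldsymbol {\theta } = 1\) now gives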

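$$ \int_{\Theta} K(\boldsymbol{\theta}^{\prime}|\boldsymbol{\theta})\, \bar{\pi}(\boldsymbol{\theta})\ d\boldsymbol{\theta} = \bar{\pi}(\boldsymbol{\theta}^{\prime}) \int_{\Theta} K(\boldsymbol{\theta}|\boldsymbol{\theta}^{\prime})\ d\boldsymbol{\theta} = \bar{\pi}(\boldsymbol{\theta}^{\prime}), $$(19)

which shows that \(\bar {\pi }(\boldsymbol {\theta })\) is the stationary PDF of the Markov chain. Furthermore, this condition also ensures that the Markov chain is reversible [104–106, 152]. The transition PDF of the MH algorithm consists of two parts: the PDF of the accepted samples and the PDF of the rejected samples. It can thus be written in the following form: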

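$$ K(\boldsymbol{\theta}^{\prime}|\boldsymbol{\theta}) = \alpha(\boldsymbol{\theta}^{\prime},\boldsymbol{\theta})\, \bar{q}(\boldsymbol{\theta}^{\prime}|\boldsymbol{\theta}) + \left[1 - \int_{\Theta} \alpha(\boldsymbol{\theta}^{\prime\prime},\boldsymbol{\theta})\, \bar{q}(\boldsymbol{\theta}^{\prime\prime}|\boldsymbol{\theta})\ d\boldsymbol{\theta}^{\prime\prime}\right] \delta(\boldsymbol{\theta}^{\prime}-\boldsymbol{\theta}), $$(20)

where α is the acceptance probability of Eq. (12a) and δ(·) denotes Dirac’s delta. By direct computation, it can be easily verified that the detailed balance condition is satisfied (see also Theorem 7.2 of [104]).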
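On a finite state space the MH transition kernel becomes a matrix, and detailed balance and stationarity can be checked directly. The sketch below (our own illustration) builds K[i, j] = P(next = j | current = i) for a hypothetical 5-state target and a randomly generated non-symmetric proposal matrix. Accepted moves contribute q·α off the diagonal and all rejected mass stays on the diagonal, mirroring the two parts of the transition PDF. It then verifies both properties for the Metropolis-Hastings and Barker rules.

```python
import numpy as np

def mh_kernel(pi, Q, rule="metropolis"):
    """Transition matrix of MH on a finite space: K[i, j] = P(next = j | current = i).
    pi: unnormalized target; Q[i, j] = q(j | i), a row-stochastic proposal matrix."""
    n = pi.size
    K = np.zeros((n, n))
    for i in range(n):
        for j in range(n):
            if i != j and Q[i, j] > 0:
                r = (pi[j] * Q[j, i]) / (pi[i] * Q[i, j])   # Hastings ratio
                alpha = min(1.0, r) if rule == "metropolis" else r / (1.0 + r)
                K[i, j] = Q[i, j] * alpha                    # accepted moves
        K[i, i] = 1.0 - K[i].sum()                           # rejected moves stay at i
    return K

rng = np.random.default_rng(9)
pi = np.array([1.0, 2.0, 3.0, 4.0, 5.0])           # hypothetical unnormalized target
Q = rng.dirichlet(np.ones(5), size=5)              # hypothetical non-symmetric proposal
p = pi / pi.sum()
for rule in ("metropolis", "barker"):
    K = mh_kernel(pi, Q, rule)
    flow = p[:, None] * K                          # flow[i, j] = pi(i) K(j | i)
    print(rule, np.allclose(flow, flow.T), np.allclose(p @ K, p))   # detailed balance, stationarity
```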

In addition to having the correct stationary PDF, we also need to ensure that the Markov chain is ergodic. Ergodicity ensures that the Markov chain converges to its stationary distribution regardless of the initial state, so that we can estimate expectations of the state distributions by computing time averages. A sufficient condition for ergodicity is that the Markov chain is also an aperiodic \(\bar {\pi }\)-irreducible Harris chain, which can be ensured by the following conditions (see Equations 7.4 and 7.5 and Lemma 7.6 in [104]):Footnote 14

1. The stationary distribution and the proposal PDF satisfy \(P[ \bar {\pi }(\boldsymbol {\theta }) q(\boldsymbol {{\theta }^{\prime }}|\boldsymbol {\theta }) \le \bar {\pi }(\boldsymbol {{\theta }^{\prime }}) q(\boldsymbol {\theta }|\boldsymbol {{\theta }^{\prime }}) ] < 1\), i.e., candidates are rejected with non-zero probability, which makes the chain aperiodic.

2. The proposal PDF is strictly positive everywhere in the parameter space, i.e., q(θ′|θ)>0 for all θ′,θ∈Θ.

Provided that the detailed balance condition and the aforementioned properties are satisfied, Corollaries 7.5 and 7.7 in [104] ensure the following ergodicity properties for the MH Markov chain:

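$$ \lim_{T\to\infty} \frac{1}{T}\sum_{t=1}^{T} g(\boldsymbol{\theta}^{(t)}) = \int_{\Theta} g(\boldsymbol{\theta})\, \bar{\pi}(\boldsymbol{\theta})\ d\boldsymbol{\theta} \quad \text{a.s.}, $$(21a)

$$ \lim_{n\to\infty} \left\| \int_{\Theta} K^{n}(\cdot|\boldsymbol{\theta})\, \bar{\pi}_{0}(\boldsymbol{\theta})\ d\boldsymbol{\theta} - \bar{\pi} \right\|_{TV} = 0, $$(21b)

where g is an arbitrary L1 function, ∥·∥TV is the total variation norm, Kn denotes the n-step transition kernel, and \(\bar {\pi }_{0}\) is an arbitrary initial PDF. Eq. (21a) guarantees that the sample average converges to the true value of the integral, whereas (21b) ensures that the chain’s PDF converges to the target PDF regardless of the initial density.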

The aforementioned conditions ensure that the chain converges to the target distribution and that time averages can be used to approximate expectations. However, the convergence of the algorithm can still be arbitrarily slow. In order to guarantee that the chain does not get stuck in some region of the parameter space for long periods of time, we need MCMC algorithms that are geometrically ergodic. An MCMC algorithm is geometrically ergodic if

$$ \left\| \int_{\Theta} K^{n}(\cdot|\boldsymbol{\theta})\, \bar{\pi}_{0}(\boldsymbol{\theta})\ d\boldsymbol{\theta} - \bar{\pi} \right\|_{TV} \le C_{\bar{\pi}_{0}}\, \rho^{n}, $$(22)

for some \(C_{\bar {\pi }_{0}}\) and 0<ρ<1 giving the convergence rate. There are two main reasons why geometric ergodicity is essential. On the one hand, geometric ergodicity guarantees (under mild moment conditions on g) a central limit theorem for the ergodic averages, which enables error bounds to be developed. On the other hand, without geometric ergodicity, algorithms are more-or-less guaranteed to give rise to sample paths with “heavy-tailed excursions” far away from the center of the distribution, thus leading to instability and inaccuracy of the subsequent parameter estimation procedures. See [163] and [164] for a more detailed discussion on geometric ergodicity in the one-dimensional and multi-dimensional cases, respectively.
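For a finite, reversible chain the geometric-ergodicity bound can be made fully explicit. The sketch below assumes a hypothetical 6-state target and a symmetric "ring" proposal with the Metropolis rule. It tracks the total variation distance between the chain's distribution after n steps (started from a point mass at x₀) and the target, and checks it against a standard spectral bound for reversible chains, TV ≤ ½·sqrt((1−π(x₀))/π(x₀))·ρⁿ, where ρ is the second-largest eigenvalue modulus of K.

```python
import numpy as np

# Hypothetical 6-state target and a symmetric ring proposal (move to i-1 or i+1 w.p. 1/2).
pi = np.array([1.0, 3.0, 6.0, 6.0, 3.0, 1.0])
pi = pi / pi.sum()
n = pi.size
K = np.zeros((n, n))
for i in range(n):
    for j in ((i - 1) % n, (i + 1) % n):
        K[i, j] = 0.5 * min(1.0, pi[j] / pi[i])      # symmetric proposal, Metropolis rule
    K[i, i] = 1.0 - K[i].sum()                       # rejections keep the chain at i

# K is reversible, so D^{1/2} K D^{-1/2} is symmetric and has the same real eigenvalues.
d = np.sqrt(pi)
lam = np.linalg.eigvalsh(d[:, None] * K / d[None, :])   # ascending; lam[-1] is 1
rho = max(abs(lam[0]), abs(lam[-2]))                    # second-largest eigenvalue modulus

x0 = 0                                                  # initial PDF: point mass at state x0
C = 0.5 * np.sqrt((1.0 - pi[x0]) / pi[x0])              # constant of the spectral bound
dist = np.zeros(n)
dist[x0] = 1.0
tv = []
for step in range(40):
    tv.append(0.5 * np.abs(dist - pi).sum())            # TV distance after `step` steps
    dist = dist @ K
print(rho, tv[0], tv[10], tv[39])
```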

3.1.3 Gibbs sampler

The Gibbs sampler was introduced by Stuart Geman and Donald Geman in 1984 in order to sample from the Markov Random Field (MRF) induced by the Gibbs distribution [48]. The application considered was the Bayesian restoration of images degraded by blurring, nonlinear deformations, and multiplicative or additive noiseFootnote 15. In order to deal with these distortions, Geman and Geman proposed a stochastic relaxation algorithm that relied on iteratively making local random changes in the image based on current values of the pixels. A simulated annealing approach, which gradually lowers the system’s “temperature” [165], was used to avoid local maxima. More precisely, using our notation, the Gibbs sampler proposed in [48] was the following:

1. Select an arbitrary configuration of the pixels, \(\boldsymbol {\theta }^{(0)} = \left [\theta _{1}^{(0)}, \ldots, \theta _{D_{\theta }}^{(0)}\right ]^{\top }\).

2. Select the sequence of pixels (n1,n2,…) that will be visited for replacement. The sequence used in [48] corresponded to a raster scan of the image (i.e., repeatedly visiting all the sites in some “natural” fixed order), but this sequence does not necessarily have to be periodic.

3. At the tth “epoch” (t=1,2,3,…), update the ntth pixel by drawing a sample from the conditional PDF of \(\theta _{n_{t}}\) given the current value of the remaining pixels, \(\theta _{n_{t}}^{(t)} \sim \bar {\pi }(\theta _{n_{t}}|\boldsymbol {\theta }_{\neg n_{t}}^{(t-1)})\) with \(\boldsymbol {\theta }_{\neg n_{t}}^{(t-1)} = [\theta _{1}^{(t-1)}, \ldots, \theta _{n_{t}-1}^{(t-1)}, \theta _{n_{t}+1}^{(t-1)}, \ldots, \theta _{D_{\theta }}^{(t-1)}]^{\top }\).

4. Repeat step 3 until a pre-specified termination condition (e.g., a fixed number of iterations T) is fulfilled.

This approach can be easily generalized and adapted to many practical problems. Algorithm 4 provides a generic version of the Gibbs sampler with an arbitrary selection of the indices to be sampled. As already mentioned in the introduction, Gelman showed that the Gibbs sampler is a particular case of the MH algorithm [50]. This can be easily seen by considering the MH algorithm (Algorithm 3) with a proposal at the tth iteration given by \(q(\boldsymbol {\theta }|\boldsymbol {\theta }^{(t-1)}) = \pi \left (\theta _{d_{t}}|\boldsymbol {\theta }_{\neg d_{t}}^{(t-1)}\right) \delta \left (\boldsymbol {\theta }_{\neg d_{t}}-\boldsymbol {\theta }_{\neg d_{t}}^{(t-1)}\right)\), where δ(·) denotes Dirac’s delta. Then, \(\boldsymbol {{\theta }^{\prime }} = \left [\theta _{1}^{(t-1)}, \ldots, \theta _{d_{t}-1}^{(t-1)}, \theta _{d_{t}}^{\prime }, \theta _{d_{t}+1}^{(t-1)}, \ldots, \theta _{D_{\theta }}^{(t-1)}\right ]\) with \(\theta _{d_{t}}^{\prime } \sim \pi \left (\theta _{d_{t}}|\boldsymbol {\theta }_{\neg d_{t}}^{(t-1)}\right)\), just like in the tth iteration of the Gibbs sampler of Algorithm 4. Now, we just need to prove that θ′ is always accepted, as it happens in the Gibbs sampler. Noting that \(\pi (\boldsymbol {\theta }) = \pi (\theta _{d_{t}}|\boldsymbol {\theta }_{\neg d_{t}}) \pi (\boldsymbol {\theta }_{\neg d_{t}})\) by the chain rule of probability, the ratio inside the acceptance probability (αt) of the MH algorithm becomes:

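$$ \frac{\pi(\boldsymbol{\theta}^{\prime})\, q(\boldsymbol{\theta}^{(t-1)}|\boldsymbol{\theta}^{\prime})}{\pi(\boldsymbol{\theta}^{(t-1)})\, q(\boldsymbol{\theta}^{\prime}|\boldsymbol{\theta}^{(t-1)})} = \frac{\pi(\theta_{d_{t}}^{\prime}|\boldsymbol{\theta}_{\neg d_{t}}^{(t-1)})\, \pi(\boldsymbol{\theta}_{\neg d_{t}}^{(t-1)})\, \pi(\theta_{d_{t}}^{(t-1)}|\boldsymbol{\theta}_{\neg d_{t}}^{(t-1)})}{\pi(\theta_{d_{t}}^{(t-1)}|\boldsymbol{\theta}_{\neg d_{t}}^{(t-1)})\, \pi(\boldsymbol{\theta}_{\neg d_{t}}^{(t-1)})\, \pi(\theta_{d_{t}}^{\prime}|\boldsymbol{\theta}_{\neg d_{t}}^{(t-1)})} = 1, $$(23)

where we have used that \(\boldsymbol {\theta }_{\neg d_{t}}^{\prime } = \boldsymbol {\theta }_{\neg d_{t}}^{(t-1)}\). Hence, the proposed sample (drawn from the dtth full conditional PDF) is always accepted and only the dtth coordinate is updated at the tth iteration, just like in the Gibbs sampler.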
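A minimal Gibbs sampler sketch for a hypothetical zero-mean, unit-variance bivariate Gaussian target with correlation ρ, whose full conditionals are θ_d | θ_other ∼ N(ρ·θ_other, 1−ρ²). Each draw comes exactly from a full conditional, so no accept/reject step is needed, in line with the argument above. For simplicity the two coordinates are visited in a fixed order within each iteration.

```python
import numpy as np

def gibbs_bivariate_gaussian(rho, T, rng, theta0=(0.0, 0.0)):
    """Gibbs sampler for N(0, [[1, rho], [rho, 1]]) visiting d = 1, 2 in each iteration.
    Full conditionals: theta_d | theta_other ~ N(rho * theta_other, 1 - rho^2)."""
    theta = np.array(theta0, dtype=float)
    sd = np.sqrt(1.0 - rho**2)
    chain = np.empty((T, 2))
    for t in range(T):
        for d in (0, 1):
            theta[d] = rng.normal(rho * theta[1 - d], sd)   # exact draw: always accepted
        chain[t] = theta
    return chain

rng = np.random.default_rng(10)
chain = gibbs_bivariate_gaussian(0.9, 30_000, rng, theta0=(5.0, -5.0))[1_000:]
print(chain.mean(axis=0), np.corrcoef(chain.T)[0, 1])   # approximately [0, 0] and 0.9
```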

Note that we still have to specify how to select the coordinates to be sampled. In general it may be difficult to determine the best type of scan for a Gibbs sampler, as shown by Roberts and Rosenthal in [166], and many alternative approaches can be devised. However, the three most widely used schemes are the following [104]:

- Systematic scan: The parameters are updated according to some pre-specified “canonical” order. Without loss of generality, let us consider that this order is simply \(\theta _{1}, \theta _{2}, \ldots, \theta _{D_{\theta }}\). Then, we have the following sequence of coordinates to be updated: \(d_{1}=1, d_{2}=2, \ldots, d_{D_{\theta }}=D_{\theta }, d_{D_{\theta }+1}=1, d_{D_{\theta }+2}=2, \ldots, d_{2D_{\theta }}=D_{\theta }, d_{2D_{\theta }+1}=1, \ldots \) This can be expressed more compactly as \(d_{t} = ((t-1))_{D_{\theta }} + 1\), where \(((t))_{D_{\theta }}\) denotes the modulo operation: \(((t))_{D_{\theta }} = m \iff t = k D_{\theta } + m\) for some \(k, m \in \mathbb {Z}\) with m∈{0,1,…,Dθ−1} and −∞<k<∞. In this particular case, the Gibbs sampler in Algorithm 4 can be expressed using a double FOR loop, with the inner loop running sequentially over the different parameters, as shown in Algorithm 5. In this systematic scan Gibbs sampler, which is probably the most widely used version of the algorithm in signal processing applications, one iteration of the Gibbs sampler corresponds to one step of the outer loop. Note that the total number of samples drawn from the full conditional PDFs in Algorithm 5 is TDθ, whereas in Algorithm 4 only T samples were drawn. Finally, note that the Markov chain induced by the systematic scan Gibbs sampler is non-reversible [104].

- Symmetric scan: The coordinates are also explored following a pre-specified deterministic order [104]: first in ascending order and then in descending order, and this scheme is repeated periodically, i.e., \(d_{1}=1, d_{2}=2, \ldots, d_{D_{\theta }}=D_{\theta }, d_{D_{\theta }+1}=D_{\theta }-1, d_{D_{\theta }+2}=D_{\theta }-2, \ldots, d_{2D_{\theta }-1}=1, d_{2D_{\theta }}=1, d_{2D_{\theta }+1}=2, \ldots \) Using the modulo notation, \(d_{t} = \min \{((t-1))_{2D_{\theta }-1},((-t))_{2D_{\theta }-1}\} + 1\)Footnote 16. Unlike the systematic scan, the symmetric scan leads to a reversible Markov chain and can also result in an improved performance. The symmetric Gibbs sampler can also be expressed using a double FOR loop, as shown in Algorithm 6, with one iteration of the Gibbs sampler corresponding to one step of the outer loop. Now, the total number of samples drawn from the full conditional PDFs is T(2Dθ−1).

- Random scan: This method was proposed originally by Liu et al. [167]. In this case, the parameter to be updated is selected randomly at each iteration, typically following a uniform distribution, i.e., \(d_{t} \sim \boldsymbol {\mathcal {U}}(\{1, 2, \ldots, D_{\theta }\})\). This scheme also produces a reversible Markov chain and can lead to an improved performance w.r.t. the symmetric scan Gibbs samplerFootnote 17.
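The three index sequences can be generated as below (1-based coordinate indices, as in the text). The closed form used for the symmetric scan, min{((t−1))_{2Dθ−1}, ((−t))_{2Dθ−1}} + 1, is our own way of reproducing the listed sequence 1, 2, …, Dθ, Dθ−1, …, 1, which has period 2Dθ−1. Python's `%` already returns the non-negative remainder used in the modulo definition.

```python
import numpy as np

def systematic_scan(t, D):
    """d_t = ((t - 1))_D + 1."""
    return (t - 1) % D + 1

def symmetric_scan(t, D):
    """1, 2, ..., D, D-1, ..., 1, then repeat (period 2D - 1)."""
    period = 2 * D - 1
    return min((t - 1) % period, (-t) % period) + 1

def random_scan(D, rng):
    """d_t drawn uniformly from {1, ..., D}."""
    return int(rng.integers(1, D + 1))

D = 3
print([systematic_scan(t, D) for t in range(1, 10)])   # [1, 2, 3, 1, 2, 3, 1, 2, 3]
print([symmetric_scan(t, D) for t in range(1, 12)])    # [1, 2, 3, 2, 1, 1, 2, 3, 2, 1, 1]
```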

Note that only the samples corresponding to the outer loops in Algorithms 5 and 6 (i.e., \(\boldsymbol {\theta }^{(t)} = [\theta _{1}^{(t)}, \ldots, \theta _{D_{\theta }}^{(t)}]^{\top }\)) are typically used to compute the approximate estimator of Eq. (14). This entails an inefficient use of the generated samples w.r.t. the generic Gibbs sampler of Algorithm 4, which uses all the drawn samples to compute the approximate estimator of Eq. (14). However, “nothing prevents the use of all the simulations [samples] in integral approximations”, as stated by Robert and Casella [104]. Indeed, it has been shown very recently that using all the intermediate samples, both in the Gibbs and MH-within-Gibbs (see Section 3.1.4) samplers, can result in a substantial improvement in performance in some cases [168].

Regarding the convergence of the Gibbs sampler, [48, 169] provide regularity conditions under which the Gibbs sampler is ergodic and the distribution of θ(t) converges to the target distribution as t→∞, whereas [52] provides a simple convergence proof. In short, the convergence of the Gibbs sampler essentially requires that all the coordinates keep being updated as the algorithm proceeds, implying that every coordinate is visited infinitely often as t→∞.

Finally, note that there is no need to sample each of the Dθ parameters individually. Indeed, if a certain subset of parameters can be easily sampled jointly given the rest, then we can group them together inside the loop of Algorithm 4 (and also in Algorithms 5 and 6). Let us assume that the Dθ parameters in \(\boldsymbol {\theta } = [\theta _{1}, \ldots, \theta _{D_{\theta }}]^{\top }\) can be grouped into Ng disjoint groups in such a way that \(\boldsymbol {\vartheta } = [\boldsymbol {\vartheta }_{1}, \ldots, \boldsymbol {\vartheta }_{N_{g}}]^{\top }\) contains all the parameters to be inferred. Then, Algorithm 4 can be applied on 𝜗 instead of θ, drawing \(\boldsymbol {\vartheta }_{d_{t}}^{(t)} \sim \bar {\pi }(\boldsymbol {\vartheta }_{d_{t}}|\boldsymbol {\vartheta }_{\neg d_{t}}^{(t-1)})\). This algorithm is known as the group or block Gibbs sampler. Alternatively, if a subset of parameters can be easily sampled given the rest, we can remove them from the loop of the Gibbs sampler. Without loss of generality, let us assume that we keep the first \(\widetilde {D}_{\theta }\) parameters and leave the remaining parameters outside of the iterations of the Gibbs sampler, i.e., we only draw samples from the reduced set of parameters \(\widetilde {\boldsymbol {\theta }} = [\theta _{1}, \ldots, \theta _{\widetilde {D}_{\theta }}]^{\top }\). Then, Algorithm 4 can be applied on \(\widetilde {\boldsymbol {\theta }}\) instead of θ, drawing \(\theta _{d_{t}}^{(t)} \sim \bar {\pi }(\theta _{d_{t}}|\widetilde {\boldsymbol {\theta }}_{\neg d_{t}}^{(t-1)})\) with \(d_{t} \in \{1, \ldots, \widetilde {D}_{\theta }\}\). When the chain has converged, then we can easily sample from the remaining parameters given the samples from the first \(\widetilde {D}_{\theta }\) parameters obtained using the Gibbs sampler. This algorithm is known as the collapsed Gibbs sampler.
Although the addition of auxiliary variables can speed up the convergence of the Gibbs sampler in some cases (e.g., see the data augmentation algorithm in Section 3.1.5), in general grouping or collapsing down variables leads to improved convergence and decreased sample autocovariances, as shown by Liu in [170]. However, let us remark that Liu’s proof is highly restrictive and in some cases the uncollapsed sampler can actually converge faster than the collapsed one (e.g., see the counterexample in Appendix A of Terenin et al. [171]). Finally, note also that finding the optimal variables to group or collapse in order to achieve the optimal performance depends on the problem and can be a very difficult task.
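The benefit of grouping can be illustrated on a hypothetical Gaussian target N(0, Σ) in which θ₁ and θ₂ are almost collinear (correlation 0.99). The sketch below runs the same Gibbs code with single-site blocks {θ₁}, {θ₂}, {θ₃} and with the blocks {θ₁, θ₂}, {θ₃}, drawing each block from its exact Gaussian full conditional, and compares the lag-1 autocorrelation of θ₁. Blocking helps here because the strongly dependent pair is updated jointly. As noted above, this is not a general guarantee.

```python
import numpy as np

def make_block_updates(Sigma, blocks):
    """Precompute the Gaussian full conditional of each block A given the rest B:
    mean = Sigma_AB Sigma_BB^{-1} theta_B, cov = Sigma_AA - Sigma_AB Sigma_BB^{-1} Sigma_BA."""
    D = Sigma.shape[0]
    updates = []
    for A in blocks:
        B = [k for k in range(D) if k not in A]
        S_AB = Sigma[np.ix_(A, B)]
        gain = S_AB @ np.linalg.inv(Sigma[np.ix_(B, B)])
        cond_cov = Sigma[np.ix_(A, A)] - gain @ S_AB.T
        updates.append((A, B, gain, np.linalg.cholesky(cond_cov)))
    return updates

def gaussian_gibbs(Sigma, blocks, T, rng):
    """Systematic-scan (block) Gibbs sampler for a zero-mean Gaussian target N(0, Sigma)."""
    updates = make_block_updates(Sigma, blocks)
    theta = np.zeros(Sigma.shape[0])
    chain = np.empty((T, theta.size))
    for t in range(T):
        for A, B, gain, L in updates:
            theta[A] = gain @ theta[B] + L @ rng.standard_normal(len(A))
        chain[t] = theta
    return chain

def lag1_autocorr(x):
    x = x - x.mean()
    return float(np.dot(x[:-1], x[1:]) / np.dot(x, x))

# Hypothetical target: theta_1 and theta_2 are almost collinear (correlation 0.99).
Sigma = np.array([[1.0, 0.99, 0.5], [0.99, 1.0, 0.5], [0.5, 0.5, 1.0]])
rng = np.random.default_rng(12)
single = gaussian_gibbs(Sigma, [[0], [1], [2]], 20_000, rng)
blocked = gaussian_gibbs(Sigma, [[0, 1], [2]], 20_000, rng)
print(lag1_autocorr(single[:, 0]), lag1_autocorr(blocked[:, 0]))   # high vs. much lower
```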

The Gibbs sampler is a fundamental algorithm for parameter estimation in many signal processing and machine learning problems. Indeed, it may be the only choice for some models, because it is well-defined even on discrete state spaces where gradients are not available and good Metropolis-Hastings proposals are difficult to construct. Therefore, it has been extensively used in practical applications either as a stand-alone method or combined with the MH algorithm as described in the following section.

3.1.4 MH-within-Gibbs

The Gibbs sampler requires sampling from the full univariate conditional PDFs. Unfortunately, although this is usually a much easier task than sampling from the multi-variate posterior PDF, in many real-world applications these conditional PDFs have non-standard forms and we cannot sample directly from them. Initially, some authors tackled this problem by using the RS algorithm (e.g., see [172]), and the adaptive RS (ARS) algorithm was specifically designed for this task [141]. However, as already mentioned, both the RS and ARS algorithms require finding a bounding constant \(C\) such that \(C q(\boldsymbol{\theta}) \geq \pi(\boldsymbol{\theta})\), a task that may be difficult for complicated targets and that leads to very inefficient sampling if \(C\) is large. In this section, we briefly discuss a widely used technique developed to address this problem, the MH-within-Gibbs algorithm (often also called the component-wise MH method), as well as two related methods: the griddy Gibbs sampler and the fast universal self-tuned sampler (FUSS).

In order to sample from non-standard full conditional PDFs, Ritter and Tanner proposed the so-called griddy Gibbs sampler [173, 174]. Their basic idea was to use a set of evaluations of the desired full conditional PDF to build a piecewise approximation from which sampling is straightforward. The \(t\)th iteration of the griddy Gibbs sampler for the \(d\)th coordinate (\(1 \leq d \leq D_{\theta}\)) proceeds as follows:

1. Evaluate the target at some pre-specified set of parameters, \(\boldsymbol {\mathcal {S}}_{d}^{(t)}=\{\theta _{d,1}^{(t)}, \ldots, \theta _{d,K}^{(t)}\}\), obtaining \(P_{d,1}^{(t)}=\pi \left (\theta _{d,1}^{(t)}|\boldsymbol {\theta }_{\neg d}^{(t-1)}\right), \ldots, P_{d,K}^{(t)} = \pi \left (\theta _{d,K}^{(t)}|\boldsymbol {\theta }_{\neg d}^{(t-1)}\right)\).

2. Construct an approximate inverse cumulative distribution function (CDF) of the target, \(\hat {\Pi }^{-1}\left (\theta _{d}|\boldsymbol {\theta }_{\neg d}^{(t-1)},\boldsymbol {\mathcal {S}}_{d}^{(t)}\right)\), using \(P_{d,1}^{(t)}, \ldots, P_{d,K}^{(t)}\) and a piecewise constant (PWC) or piecewise linear (PWL) approximation.

3. Draw \(u \sim \boldsymbol {\mathcal {U}}([0,1))\) and apply the inverse method [119] to obtain a sample drawn approximately from the target as \(\theta _{d}^{(t)} = \hat {\Pi }^{-1}(u|\boldsymbol {\theta }_{\neg d}^{(t-1)},\boldsymbol {\mathcal {S}}_{d}^{(t)})\).
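The three steps above can be sketched for one coordinate as follows. This is a minimal illustration under our own assumptions: a fixed, user-supplied grid shared by all coordinates (no adaptive grid or grid grower), a PWC density whose height on each cell is the average of the target at the cell endpoints (so that the approximate CDF is piecewise linear and trivially invertible), a correlated Gaussian example target, and the hypothetical names `griddy_draw` and `griddy_gibbs`.

```python
import bisect
import math
import random


def griddy_draw(target, grid, rng):
    """One griddy-Gibbs draw for a single coordinate (hypothetical helper).

    target: unnormalized full conditional, x -> pi(x | theta_{-d})
    grid:   sorted list of K support points (the set S_d)
    """
    # Step 1: evaluate the target on the grid
    P = [target(g) for g in grid]
    # Step 2: PWC density on each cell [g_k, g_{k+1}] with height (P_k + P_{k+1}) / 2;
    # the cumulative cell masses define a piecewise-linear approximate CDF
    cdf = [0.0]
    for k in range(len(grid) - 1):
        cdf.append(cdf[-1] + 0.5 * (P[k] + P[k + 1]) * (grid[k + 1] - grid[k]))
    # Step 3: inverse method on the approximate CDF
    u = rng.random() * cdf[-1]
    k = min(bisect.bisect_right(cdf, u), len(cdf) - 1) - 1  # cdf[k] <= u < cdf[k + 1]
    width = cdf[k + 1] - cdf[k]
    frac = (u - cdf[k]) / width if width > 0 else 0.5
    return grid[k] + frac * (grid[k + 1] - grid[k])


def griddy_gibbs(log_joint, theta0, grid, n_iter, seed=0):
    """Systematic-scan griddy Gibbs sampler using the same fixed grid for every coordinate."""
    rng = random.Random(seed)
    theta = list(theta0)
    chain = []
    for _ in range(n_iter):
        for d in range(len(theta)):
            def cond(x, d=d):
                # Full conditional of coordinate d, up to a multiplicative constant
                return math.exp(log_joint(theta[:d] + [x] + theta[d + 1:]))
            theta[d] = griddy_draw(cond, grid, rng)
        chain.append(tuple(theta))
    return chain


def log_gauss2(th, rho=0.5):
    """Assumed example target: zero-mean bivariate Gaussian, unit variances, correlation rho."""
    return -(th[0] ** 2 - 2 * rho * th[0] * th[1] + th[1] ** 2) / (2 * (1 - rho ** 2))


grid = [-6.0 + 0.1 * i for i in range(121)]
chain = griddy_gibbs(log_gauss2, [0.0, 0.0], grid, 4000)
```

The grid must cover the effective support of every full conditional; draws are only approximately distributed according to the target.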

The griddy Gibbs sampler can be easily implemented for univariate full conditional PDFs, and its performance can be improved by using an adaptive grid and allowing the grid to grow if necessary (using the so-called grid grower), as described in [173, 174]. However, the samples obtained are only approximately distributed according to the target, and building an effective approximation of the inverse CDF in the multi-variate case (e.g., for its use within the block Gibbs sampler) is a challenging task. The first issue can be addressed by using the Gibbs stopper [174], where an IS weight is assigned to each drawn sample in order to correct for the mismatch between the piecewise approximation and the exact target PDF, but the second one is much more difficult to solve.

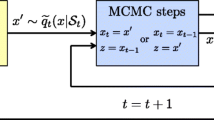

In order to sample from virtually any full conditional PDF, the MH algorithm can be used within the Gibbs sampler. This results in a hybrid sampler [104], where an internal Monte Carlo method (the MH algorithm) is used within another external Monte Carlo technique (the Gibbs sampler). Geweke and Tanizaki appear to have been the first to suggest using the MH algorithm within the Gibbs sampler in order to provide a general solution to nonlinear and/or non-Gaussian state space modeling in a Bayesian framework [175, 176]. The MH-within-Gibbs sampler is detailed in Algorithm 7. Note that \(T_{MH}\) iterations of the internal MH algorithm are performed per iteration of the external Gibbs sampler, and only the last sample drawn from the MH algorithm is typically used for the integral approximation in Eq. (14). Furthermore, \(T_{MH}=1\) is usually chosen for the sake of efficiency, but several authors have shown that this is often not the best alternative from the point of view of reducing the variance of the desired estimators for a given computational budget [177]. Note also that the internal MH algorithm should be used to sample only those parameters that cannot be sampled directly (Algorithm 7 assumes that all the parameters require it), and that it can also be easily applied within the block and collapsed Gibbs samplers. Finally, note that Neal and Roberts have shown that the optimal scaling rate for the MH algorithm (which leads to an average acceptance rate of 0.234) also holds for the MH-within-Gibbs sampler regardless of the dimensionality of the update rule [178].
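A minimal sketch of MH-within-Gibbs follows. It is our illustration rather than a transcription of Algorithm 7, and it assumes a systematic scan, a Gaussian random-walk proposal with a common scale `step` for every coordinate, and a hypothetical banana-shaped target whose full conditional for θ1 has no standard form. The argument `T_MH` mirrors \(T_{MH}\) in the text.

```python
import math
import random


def mh_within_gibbs(log_target, theta0, n_iter, step=1.0, T_MH=1, seed=0):
    """Systematic-scan MH-within-Gibbs with Gaussian random-walk proposals.

    log_target: log of the unnormalized joint target pi(theta)
    T_MH:       internal MH iterations per coordinate and Gibbs iteration;
                only the last internal state is stored in the chain.
    """
    rng = random.Random(seed)
    theta = list(theta0)
    logp = log_target(theta)
    chain, accepted, proposed = [], 0, 0
    for _ in range(n_iter):
        for d in range(len(theta)):
            for _mh in range(T_MH):
                cand = theta[:]
                cand[d] = theta[d] + step * rng.gauss(0.0, 1.0)
                logp_cand = log_target(cand)
                proposed += 1
                # theta_{-d} is unchanged, so the ratio of joint targets equals the
                # ratio of full conditionals; the random-walk proposal is symmetric
                if rng.random() < math.exp(min(0.0, logp_cand - logp)):
                    theta, logp = cand, logp_cand
                    accepted += 1
        chain.append(tuple(theta))
    return chain, accepted / proposed


def log_banana(th):
    """Hypothetical target: theta_1 ~ N(0, 1) and theta_2 | theta_1 ~ N(theta_1^2, 1).
    The full conditional of theta_1 given theta_2 is non-standard."""
    return -0.5 * th[0] ** 2 - 0.5 * (th[1] - th[0] ** 2) ** 2


chain, acc_rate = mh_within_gibbs(log_banana, [0.0, 0.0], 30000, step=1.0)
```

For this target E[θ1] = 0 and E[θ2] = 1, which gives a simple sanity check on the output; in practice `step` would be tuned separately for each coordinate.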

Noting that the piecewise approximation built by the griddy Gibbs sampler could be used to construct very good proposals for the MH-within-Gibbs sampler, Martino et al. recently proposed the fast universal self-tuned sampler (FUSS) within Gibbs algorithm [179]. Essentially, the idea is to start with a very dense grid that roughly covers the whole effective support of the corresponding full conditional PDF and then to apply a pruning strategy in order to obtain a sparse grid that contains most of the probability mass of the conditional PDF. The steps performed by the FUSS algorithm, at the \(t\)th step of the Gibbs sampler for the \(d\)th parameter, are the following:

1. Initialization: Choose a large set of support points, \(\widetilde {\boldsymbol {\mathcal {S}}}_{d}^{(t)}=\{\theta _{d,1}^{(t)}, \ldots, \theta _{d,L}^{(t)}\}\), that densely cover the whole effective support of the target.

2. Pruning: Remove support points according to a pre-specified and efficient criterion, attaining a final sparse set of support points, \(\boldsymbol {\mathcal {S}}_{d}^{(t)}=\{\theta _{d,1}^{(t)}, \ldots, \theta _{d,K}^{(t)}\}\) with \(K \ll L\).

3. Construction: Build a proposal function \(q(\theta _{d}|\boldsymbol {\theta }_{\neg d}^{(t-1)},\boldsymbol {\mathcal {S}}_{d}^{(t)})\) using some appropriate pre-defined mechanism, typically a PWC or PWL approach.

4. MH steps: Perform \(T_{MH}\) steps of the internal MH algorithm, as in Algorithm 7, using \(q(\theta _{d}|\boldsymbol {\theta }_{\neg d}^{(t-1)},\boldsymbol {\mathcal {S}}_{d}^{(t)})\) as the proposal PDF.

Since the FUSS algorithm builds a proposal tailored to the target, the acceptance rate of the internal MH algorithm is usually very high and the correlation among the drawn samples very small. This leads to estimators with a reduced variance, especially for very peaky full conditional PDFs, where other Monte Carlo methods fail (see [179] for further details). Finally, note that it is again possible to employ all the \(T_{MH}\) samples generated by the internal MH algorithm in the final estimators, as shown in [168].
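The four FUSS steps can be sketched for a single univariate full conditional as follows. Several ingredients are our own assumptions rather than the choices made in [179]: the interval `[lo, hi]` assumed to contain the effective support, the grid sizes `L` and `K`, the greedy pruning rule (drop the interior point whose removal least changes the trapezoidal area), the PWC proposal, and the bimodal example target. Because the proposal does not depend on the current state, step 4 reduces to an independent MH sampler.

```python
import bisect
import math
import random


def fuss_sampler(target, lo, hi, L=400, K=40, T_MH=1, seed=0):
    """FUSS-style sampler for one univariate full conditional (illustrative sketch).

    target: unnormalized density, assumed positive on [lo, hi] and negligible outside
    """
    rng = random.Random(seed)
    # 1. Initialization: dense uniform grid of L support points on [lo, hi]
    pts = [(x, target(x)) for x in (lo + (hi - lo) * i / (L - 1) for i in range(L))]
    # 2. Pruning (hypothetical rule): repeatedly drop the interior point whose
    #    removal changes the trapezoidal area the least, until K points remain
    while len(pts) > K:
        errs = []
        for i in range(1, len(pts) - 1):
            (x0, p0), (x1, p1), (x2, p2) = pts[i - 1], pts[i], pts[i + 1]
            with_pt = 0.5 * (p0 + p1) * (x1 - x0) + 0.5 * (p1 + p2) * (x2 - x1)
            without = 0.5 * (p0 + p2) * (x2 - x0)
            errs.append((abs(with_pt - without), i))
        del pts[min(errs)[1]]
    # 3. Construction: PWC proposal, height on each cell = mean of endpoint values
    xs = [x for x, _ in pts]
    heights = [0.5 * (pts[k][1] + pts[k + 1][1]) for k in range(K - 1)]
    cdf = [0.0]
    for k in range(K - 1):
        cdf.append(cdf[-1] + heights[k] * (xs[k + 1] - xs[k]))

    def q_sample():
        u = rng.random() * cdf[-1]
        k = min(bisect.bisect_right(cdf, u), K - 1) - 1
        return xs[k] + (u - cdf[k]) / (cdf[k + 1] - cdf[k]) * (xs[k + 1] - xs[k])

    def q_pdf(x):
        k = min(max(bisect.bisect_right(xs, x) - 1, 0), K - 2)
        return heights[k] / cdf[-1]

    # 4. MH steps: independent MH using q as the proposal PDF
    x = q_sample()
    samples, accepted = [], 0
    for _ in range(T_MH):
        cand = q_sample()
        ratio = (target(cand) * q_pdf(x)) / (target(x) * q_pdf(cand))
        if rng.random() < ratio:
            x, accepted = cand, accepted + 1
        samples.append(x)
    return samples, accepted / T_MH


def mixture(x):
    """Hypothetical bimodal full conditional: 0.3 N(-2, 0.5^2) + 0.7 N(3, 0.5^2), unnormalized."""
    return 0.3 * math.exp(-(x + 2) ** 2 / 0.5) + 0.7 * math.exp(-(x - 3) ** 2 / 0.5)


samples, acc_rate = fuss_sampler(mixture, -6.0, 8.0, T_MH=5000)
```

Within the Gibbs sampler, steps 1 to 3 would be repeated for each coordinate at each iteration, since the full conditional changes with \(\boldsymbol{\theta}_{\neg d}^{(t-1)}\); a large `T_MH` is used here only to check the output, whose mean should be close to 1.5.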

3.1.5 Other classical MCMC techniques

In this section, we describe other classical approaches for sampling from non-standard multi-variate densities: data augmentation, slice sampling, the hit-and-run algorithm, and adaptive direction sampling. We also discuss briefly the issue of thinning or subsampling the Markov chain, which is often used in signal processing applications to reduce the computational cost and the correlation among the generated samples.

Data augmentation (DA)

The data augmentation method was originally devised by Tanner and Wong in order to compute posterior distributions for Bayesian inference [180]. The basic idea of data augmentation (DA) is the same one that underlies the well-known and widely used expectation-maximization (E-M) algorithm [181]: in many practical problems, augmenting the observed dataset (y) with a set of latent data (z) leads to an easier analysis of the problem. In the Bayesian inference case, the DA algorithm is based on the assumption that π(θ|y,z) is straightforward to analyze, whereas π(θ|y) is intractable. Another important assumption regards the generation of the latent data (z): they should be easy to draw given the parameters and the observed data. Under these two assumptions, drawing samples from the desired target can be easily accomplished following the iterative approach shown in Algorithm 8. Note that the DA procedure shown in Algorithm 8 is equivalent to the application of the Gibbs sampler of Algorithm 4 on the augmented parameter vector \(\boldsymbol{\theta}_{a} = [\boldsymbol{\theta}, \mathbf{z}_{1}, \ldots, \mathbf{z}_{K}]\) [170] (Footnote 18). Note also that data augmentation is the opposite of integrating out parameters from a model in closed form, as done in the collapsed Gibbs sampler described in Section 3.1.3. Finally, let us remark that, just as with the collapsed Gibbs sampler (cf. the previously mentioned discussion of Liu et al. in Section 3.1.3), DA can either increase or reduce the mixing efficiency.
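To make the two DA steps concrete, here is a minimal sketch with a single imputation per iteration (K = 1), which is exactly a two-block Gibbs sampler on [θ, z]. The model is our choice of illustration, the classic genetic linkage example from the EM literature: counts y = (125, 18, 20, 34) in four cells with probabilities (1/2 + θ/4, (1 − θ)/4, (1 − θ)/4, θ/4), with an assumed uniform prior on θ. Splitting the first cell into two latent cells makes π(θ|y, z) a Beta density, while z given θ and y is binomial, so both assumptions above hold.

```python
import random


def da_linkage(y=(125, 18, 20, 34), n_iter=5000, seed=0):
    """Data augmentation with one imputation per iteration for the genetic linkage model.

    Cell probabilities: (1/2 + theta/4, (1 - theta)/4, (1 - theta)/4, theta/4).
    The first cell is split into two latent cells with probabilities 1/2 and theta/4;
    z counts the observations in the theta/4 part. Uniform prior on theta in (0, 1).
    """
    rng = random.Random(seed)
    theta = 0.5
    chain = []
    for _ in range(n_iter):
        # Imputation step: z | theta, y ~ Binomial(y1, (theta/4) / (1/2 + theta/4))
        p = theta / (2.0 + theta)
        z = sum(rng.random() < p for _ in range(y[0]))
        # Posterior step: theta | y, z ~ Beta(z + y4 + 1, y2 + y3 + 1)
        theta = rng.betavariate(z + y[3] + 1, y[1] + y[2] + 1)
        chain.append(theta)
    return chain


chain = da_linkage()
burn_in = 500
posterior_mean = sum(chain[burn_in:]) / len(chain[burn_in:])
```

The binomial draw is written as a sum of Bernoulli trials so that the sketch only needs the standard library.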

Slice sampling

Several Monte Carlo techniques, like direct methods (e.g., the inverse-of-density method) [119], the rejection sampler (see Section 2.2), and some MCMC algorithms (e.g., the so-called slice sampler) rely on a simple result, known as the fundamental theorem of simulation.

Theorem 1

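Drawing samples from a random variable θ with density \(\bar {\pi }({{\boldsymbol {\theta }}})\propto \pi ({{\boldsymbol {\theta }}})\) is equivalent to sampling uniformly on the region defined by

\[\boldsymbol{\mathcal{A}}_{\pi} = \left\{(\boldsymbol{\theta}, z) \in \mathbb{R}^{D_{\theta}+1} : 0 \leq z \leq \pi(\boldsymbol{\theta})\right\}.\]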

Namely, considering a realization (θ′,z′), if it is distributed uniformly on \(\boldsymbol {\mathcal {A}}_{\pi }\), then θ′ is a sample from \(\bar {\pi }({{\boldsymbol {\theta }}})\) [104, 119].

Therefore, if we are able to draw a vector (θ′,z′) uniformly on \(\boldsymbol {\mathcal {A}}_{\pi }\) (i.e., the area below the unnormalized target function π(θ)), then the coordinate θ′ is marginally distributed according to \(\bar {\pi }({{\boldsymbol {\theta }}})\). The variable z plays the role of an auxiliary variable which is introduced in order to ease the sampling procedure, just like the latent data in the data augmentation algorithm.
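Theorem 1 can be checked numerically in a few lines. The sketch below rests on our own assumptions (an unnormalized Gaussian-shaped target, a bounding box [a, b] × [0, M] with π(θ) ≤ M on [a, b], and negligible target mass outside [a, b]): pairs drawn uniformly on the box and kept only when z ≤ π(θ) are uniform on \(\boldsymbol{\mathcal{A}}_{\pi}\), so their θ-coordinates should follow \(\bar{\pi}(\theta)\). This is precisely the rejection sampler with a uniform proposal, one of the techniques mentioned above that rely on this theorem.

```python
import math
import random


def sample_via_theorem1(pi, a, b, M, n, seed=0):
    """Draw (theta, z) uniformly on the box [a, b] x [0, M] and keep only the pairs in
    A_pi = {(theta, z): 0 <= z <= pi(theta)}. Assumes pi(theta) <= M on [a, b] and
    negligible target mass outside [a, b]."""
    rng = random.Random(seed)
    kept = []
    while len(kept) < n:
        theta, z = rng.uniform(a, b), rng.uniform(0.0, M)
        if z <= pi(theta):        # accepted pairs are uniform on A_pi
            kept.append(theta)    # so theta is marginally distributed as pi-bar
    return kept


def unnormalized_gauss(theta):
    """Assumed example target: pi(theta) = exp(-theta^2 / 2), so pi-bar is N(0, 1)."""
    return math.exp(-0.5 * theta ** 2)


draws = sample_via_theorem1(unnormalized_gauss, -8.0, 8.0, 1.0, 20000)
```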

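The slice sampler is precisely a Gibbs sampling method that can be applied for drawing samples uniformly from \(\boldsymbol {\mathcal {A}}_{\pi }\). Let us define the set

\[\boldsymbol{\mathcal{O}}(z) = \left\{\boldsymbol{\theta} \in \mathbb{R}^{D_{\theta}} : \pi(\boldsymbol{\theta}) \geq z\right\},\]

i.e., the slice of the domain where the unnormalized target is at least \(z\). The two full conditionals of the uniform distribution on \(\boldsymbol{\mathcal{A}}_{\pi}\) are then \(z|\boldsymbol{\theta} \sim \boldsymbol{\mathcal{U}}([0, \pi(\boldsymbol{\theta})])\) and \(\boldsymbol{\theta}|z \sim \boldsymbol{\mathcal{U}}(\boldsymbol{\mathcal{O}}(z))\).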

The slice sampler is given in Algorithm 9.

The slice sampling algorithm generates a Markov chain over \(\boldsymbol {\mathcal {A}}_{\pi }\), producing samples uniformly distributed in \(\boldsymbol {\mathcal {A}}_{\pi }\) after the burn-in period. However, performing step 2b is often virtually impossible (even for unidimensional target PDFs), since it requires the inversion of π(θ) in order to determine the set \(\boldsymbol {\mathcal {O}}(z)\). The difficulty of this inversion is due to the fact that π(θ) is usually a non-monotonic function, implying that the set \(\boldsymbol {\mathcal {O}}(z)\) is typically formed by the union of disjoint sets which are difficult to determine. Fortunately, several practical procedures have been suggested for this purpose. See [182] for further information on this issue.
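One such practical procedure for the univariate case is the stepping-out and shrinkage scheme proposed by Neal, sketched below under our own assumptions (initial interval width `w`, step budget `max_steps`, and a hypothetical Gamma-shaped target). It never computes \(\boldsymbol{\mathcal{O}}(z)\) explicitly: it brackets the slice with a randomly positioned interval, expands it, and then shrinks it towards the current point on each rejection, which still leaves the uniform distribution on \(\boldsymbol{\mathcal{A}}_{\pi}\) invariant. For multimodal targets the interval may not cover all the disjoint pieces of the slice at once, but the chain remains valid.

```python
import math
import random


def slice_sampler(log_pi, x0, n, w=1.0, max_steps=50, seed=0):
    """Univariate slice sampler. The slice O(z) = {x : pi(x) >= z} is never computed
    explicitly: it is bracketed by stepping out and then sampled by shrinkage."""
    rng = random.Random(seed)
    x = x0
    samples = []
    for _ in range(n):
        # z | x ~ U(0, pi(x)), stored on the log scale
        log_z = log_pi(x) + math.log(1.0 - rng.random())
        # Stepping out: randomly placed interval of width w, extended by steps of w
        left = x - w * rng.random()
        right = left + w
        j = int(max_steps * rng.random())
        k = max_steps - 1 - j
        while j > 0 and log_pi(left) >= log_z:
            left -= w
            j -= 1
        while k > 0 and log_pi(right) >= log_z:
            right += w
            k -= 1
        # Shrinkage: draw uniformly on [left, right]; shrink towards x on rejection
        while True:
            x_new = left + (right - left) * rng.random()
            if log_pi(x_new) >= log_z:
                x = x_new
                break
            if x_new < x:
                left = x_new
            else:
                right = x_new
        samples.append(x)
    return samples


def log_gamma3(x):
    """Hypothetical target: unnormalized Gamma(shape=3, rate=1) density, zero for x <= 0."""
    return 2.0 * math.log(x) - x if x > 0 else -math.inf


draws = slice_sampler(log_gamma3, 1.0, 20000)
```

Working with log π avoids underflow, and the current point always belongs to its own slice, so the shrinkage loop terminates.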

Hit-and-run

Another important class of methods that can be used both for global optimization and Bayesian inference consists of the so-called hit-and-run algorithms, a collection of efficient sampling techniques that use random walks to explore the parameter space. Sampling through random walks was independently proposed by Boneh and Golan [183] and Smith [184, 185], and this class of methods was later renamed as hit-and-run algorithms [186]. The generic hit-and-run algorithm is shown in Algorithm 10. The basic idea is to determine a random direction in the \(D_{\theta}\)-dimensional parameter space using the proposal q(θ) and then to select a random point along that direction with a probability proportional to the target PDF evaluated along the chosen direction.

Different hit-and-run algorithms are obtained depending on the proposal function q(θ). For instance, the original hypersphere directions (HD) hit-and-run algorithm considered directions drawn uniformly on the unit hypersphere and a uniform target on some bounded region \(\Theta \subset \mathbb {R}^{D_{\theta }}\) [183–185], whereas the coordinate directions (CD) hit-and-run randomly chooses one of the \(D_{\theta}\) coordinate directions of the parameter space [187]. Regarding the connections with other methods, the hit-and-run algorithm has similarities both with the MH algorithm and the Gibbs sampler. On the one hand, the hit-and-run algorithm resembles the random walk MH algorithm, but the generated samples are always accepted, since they are drawn from the target restricted to the chosen direction (Footnote 19). On the other hand, the CD hit-and-run algorithm is equivalent to the random scan Gibbs sampler. However, note that the generic hit-and-run algorithm is more flexible than the Gibbs sampler, since it can choose any arbitrary direction, not only one of the directions corresponding to the different parameters.
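The HD variant for a uniform target on a bounded region is easy to sketch, because the target restricted to the chosen line is uniform on the chord where the line crosses the region. The sketch below assumes that the region is the unit ball in \(\mathbb{R}^{D_{\theta}}\) (our choice, so that the chord has a closed form); the function name is hypothetical.

```python
import math
import random


def hd_hit_and_run_ball(dim, n, seed=0):
    """Hypersphere-directions hit-and-run for the uniform target on the unit ball in R^dim."""
    rng = random.Random(seed)
    x = [0.0] * dim
    samples = []
    for _ in range(n):
        # Random direction, uniformly distributed on the unit hypersphere
        d = [rng.gauss(0.0, 1.0) for _ in range(dim)]
        norm = math.sqrt(sum(di * di for di in d))
        d = [di / norm for di in d]
        # Chord of the line x + s*d inside the ball: ||x + s*d||^2 <= 1
        # <=> s^2 + 2*b*s + c <= 0 with b = <x, d> and c = ||x||^2 - 1 <= 0
        b = sum(xi * di for xi, di in zip(x, d))
        c = sum(xi * xi for xi in x) - 1.0
        half = math.sqrt(max(b * b - c, 0.0))
        # The uniform target restricted to the line is uniform on the chord
        s = rng.uniform(-b - half, -b + half)
        x = [xi + s * di for xi, di in zip(x, d)]
        samples.append(x)
    return samples


draws = hd_hit_and_run_ball(3, 20000)
```

Every move is accepted, and for the uniform distribution on the unit ball in three dimensions the mean of the squared norm should be close to 3/5.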

Adaptive direction sampling (ADS)

A third important family of methods that attempt to improve the convergence speed of the Gibbs sampler is adaptive direction sampling (ADS) [189, 190]. The basic idea of ADS is to maintain a set of support points that is constantly updated, with the current support set being used to determine the sampling direction. The general adaptive direction sampler is shown in Algorithm 11.

The procedure shown in Algorithm 11 is very general, and many different algorithms can be obtained by considering different choices for \(\boldsymbol{\vartheta}^{(t)}\) and \(\lambda_{t}\) [189]:

-

Snooker algorithm: An important special case of the general ADS algorithm, obtained by setting \(\boldsymbol {\vartheta }^{(t)} = \boldsymbol {\theta }_{a}^{(t)} \sim \boldsymbol {\mathcal {U}}(\boldsymbol {\mathcal {S}}^{(t-1)} \setminus \{\boldsymbol {\theta }_{c}^{(t)}\})\) and \(\lambda_{t}=-1\). In this specific algorithm, \(\boldsymbol {\theta }_{a}^{(t)}\) sets the direction along which \(\boldsymbol {\theta }_{c}^{(t)}\) is moved in order to obtain the new sample.

-