Abstract

Objectives

Our aim was to develop a structured reporting concept (structured oncology report, SOR) for general follow-up assessment of cancer patients in clinical routine. Furthermore, we analysed the report quality of SOR compared to conventional reports (CR) as assessed by referring oncologists.

Methods

SOR was designed to provide standardised layout, tabulated tumour burden documentation and standardised conclusion using uniform terminology. A software application for reporting was programmed to ensure consistency of layout and vocabulary and to facilitate utilisation of SOR. Report quality was analysed for 25 SOR and 25 CR retrospectively by 6 medical oncologists using a 7-point scale (score 1 representing the best score) for 6 questionnaire items addressing different elements of report quality and overall satisfaction. A score of ≤ 3 was defined as a positive rating.

Results

In the first year after full implementation, 7471 imaging examinations were reported using SOR. The proportion of SOR in relation to all oncology reports increased from 49 to 95% within a few months. Report quality scores were better for SOR for each questionnaire item (p < 0.001 each). Averaged over all questionnaire items, scores were 1.98 ± 1.22 for SOR and 3.05 ± 1.93 for CR (p < 0.001). The overall satisfaction score was 2.15 ± 1.32 for SOR and 3.39 ± 2.08 for CR (p < 0.001). The proportion of positive ratings was higher for SOR (89% versus 67%; p < 0.001).

Conclusions

Department-wide structured reporting for follow-up imaging performed for assessment of anticancer treatment efficacy is feasible using a dedicated software application. Satisfaction of referring oncologists with report quality is superior for structured reports.

Key points

- Supported by dedicated software, high-volume utilisation of profoundly structured radiology reports is feasible for general follow-up imaging in cancer patients.
- Report quality is rated better for structured reports than for conventional reports by oncologists.

Introduction

For patients with solid cancers, the results of imaging examinations are of crucial importance for primary diagnosis and for treatment guidance during the further course of disease. Imaging findings heavily influence the therapeutic decisions and treatment strategies of referring clinicians in both the curative and the advanced tumour situation. Results are commonly communicated in written radiology reports. Traditionally, free-form narrative reports have been the norm in the radiologic community [1]. Because relevant information may be incomplete or hard to comprehend in such reports, the need for improved and structured reporting has been voiced repeatedly, and not only during the last decade [2].

For primary diagnosis and local staging of several tumour entities, disease-specific reporting templates have been proposed by medical societies, e.g. for rectal cancer [1, 3] or pancreatic cancer [1, 4]. Regarding content and presence of key descriptors, superiority of structured reports over conventional reports has been demonstrated for both of these diseases [5,6,7,8] as well as for several other malignancies such as prostate cancer [9] and hepatocellular carcinoma [10, 11]. Thus, the advantages of structured reporting for primary diagnosis and initial local staging are well recognised.

However, the vast majority of workload in radiology departments associated with comprehensive cancer centres consists of follow-up imaging of cancer patients with advanced disease to determine efficacy of cancer treatment. For clinical trials, the Response Evaluation Criteria In Solid Tumours (RECIST) were initially introduced in 2000 for standardisation of response assessment [12]. For clinical routine assessment of tumour patients, there is to date no common proposal to harmonise layout, content and terminology using structured reporting.

We here report the conceptual design, clinical implementation and practical utilisation of a structured oncology report (SOR) dedicated to follow-up of patients with metastatic cancer at a radiology department of a high-volume university hospital. Furthermore, we present the results of an analysis regarding the reporting quality of SOR compared to conventional reports (CR) as assessed by referring oncologists.

Materials and methods

Concept of structured oncology reporting

The concept of structured oncology reporting was designed jointly by an interdisciplinary expert panel of radiologists (T.F.W., O.S., T.M., H.U.K.) and oncologists (G.M.H., C.S., D.J., A.K.B.) to provide a specific framework for imaging examinations performed for follow-up of cancer patients with solid tumours. Conceptualisation was based on personal experience and considered available evidence concerning report content preferences [13, 14] and guidelines for tumour response assessment [15]. Aside from standardised content, the concept includes in-house programming and utilisation of a browser-based software application for generating SOR. Figure 1 shows a schematic illustration of the layout of a SOR. SOR rests on three main principles that address important criteria of report quality:

Standardised layout

The SOR has a consistent organisation and is separated into specific sections. After a section dedicated to assessment of general information concerning imaging and clinical data, the descriptive part of the report contains separate sections for oncological and non-oncological findings, respectively. The content of the section for oncological findings is divided further into subsections for imaging findings regarding the location of the primary tumour and the presence of metastases at different anatomical sites. The conclusion of the report is divided into subsections for oncological impression and non-oncological impression. The oncological impression provides standardised content using uniform terminology (see below).

Tabulated documentation of tumour burden

In the SOR, tumour measurements are documented in tables. Reference lesions are selected and measured following the rules provided by RECIST 1.1 [15]. The sum of the diameters of the reference lesions serves as the primary quantitative measure used for response assessment.

Standardised conclusion using uniform terminology

The content of the oncological conclusion is standardised concerning structure and vocabulary used for tumour response categorisation.

- Clinical tumour response assessment using a uniform terminology for response categories in due consideration of short-term and long-term imaging (Table 1). Assignment of clinical response categories is intended to consider quantitative measures of tumour burden following rules similar to RECIST 1.1 as well as subjective impressions of tumour burden development. The clinical response category is supplemented by a free-text summary of relevant oncological findings.
- Formal tumour response assessment strictly adhering to RECIST 1.1 in due consideration of baseline and nadir imaging, if applicable.
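The role of baseline and nadir imaging in the formal assessment can be illustrated with a minimal Python sketch of the RECIST 1.1 target-lesion thresholds [15]. It is not taken from the SOR software; the function name `recist_target_response` and its parameters are hypothetical. The sketch is deliberately simplified: it only looks at the sum of diameters of the reference lesions and ignores non-target lesions, new lesions and the short-axis rule for lymph nodes, all of which a full RECIST 1.1 assessment has to consider.

```python
def recist_target_response(baseline_sum_mm: int, nadir_sum_mm: int, current_sum_mm: int) -> str:
    """Categorise target-lesion response from sums of diameters (simplified sketch).

    Assumptions of this sketch: integer millimetre sums; non-target lesions,
    new lesions and the nodal short-axis rule are not considered.
    """
    if current_sum_mm == 0:
        return "CR"  # complete response: all target lesions have disappeared

    # Progressive disease is judged against the nadir (smallest sum on study):
    # at least 20% relative increase and at least 5 mm absolute increase.
    increase = current_sum_mm - nadir_sum_mm
    if 100 * increase >= 20 * nadir_sum_mm and increase >= 5:
        return "PD"

    # Partial response is judged against baseline: at least 30% decrease.
    decrease = baseline_sum_mm - current_sum_mm
    if baseline_sum_mm > 0 and 100 * decrease >= 30 * baseline_sum_mm:
        return "PR"

    return "SD"  # stable disease: neither PR nor PD criteria are met


# Hypothetical sums in millimetres: baseline 100, nadir 50, current 62.
print(recist_target_response(100, 50, 62))
```

In the usage line the current sum is still well below baseline, but it has grown by 12 mm (24%) over the nadir, so the progression rule takes precedence over the partial response rule. This is why the formal assessment needs the nadir examination in addition to the baseline examination.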

Software application for structured reporting

A software application was programmed to ensure consistency of layout and vocabulary of SOR and to facilitate utilisation in clinical practice. The web browser-based application provides report templates using HyperText Markup Language (HTML) forms. HTML form elements are processed with JavaScript to generate the final report text, which is copied and pasted into the radiology information system (RIS) after completion. Different types of input elements, such as text fields, checkboxes and drop-down lists, are used to ensure uniform terminology on the one hand and to provide space for narrative description of findings on the other. The application can be accessed for review using the following internet link: http://www.targetedreporting.com/sor/.

The template for SOR is designed in such a way that the abovementioned principles of the reporting concept are supported and adherence to reporting formalities is facilitated.

Standardised layout

The HTML form sections handling the descriptive parts of oncological and non-oncological findings are set up to largely match widespread anatomy-based reporting habits, in which the report is traditionally generated from head to toe. That is, for a given anatomic region, oncological and non-oncological findings are entered in the same form block. As data are entered into specific input elements, the content is processed and rearranged so that it appears at the correct position in the final report text output. Pre-formulated phrases are provided as selectable input options to accelerate reporting of additional findings.
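The rearrangement from anatomy-based input to section-based output can be sketched as follows. The application described here uses HTML forms processed with JavaScript; the Python below is only an illustrative sketch of the idea, and the data structure `form_blocks`, the function `build_findings`, the section titles and the example findings are all hypothetical.

```python
from typing import Dict, List

# Hypothetical form input: one block per anatomical region, entered head to toe.
form_blocks: List[Dict[str, str]] = [
    {"region": "Chest", "oncological": "No pulmonary metastases.", "non_oncological": "Mild emphysema."},
    {"region": "Liver", "oncological": "Two metastases in segment VII.", "non_oncological": ""},
]


def build_findings(blocks: List[Dict[str, str]]) -> str:
    """Regroup region-wise input into oncological and non-oncological sections."""
    sections: Dict[str, List[str]] = {"oncological": [], "non_oncological": []}
    for block in blocks:
        for key in sections:
            text = block.get(key, "").strip()
            if text:  # empty inputs do not produce a line in the report
                sections[key].append(f"{block['region']}: {text}")
    lines = ["Oncological findings"] + sections["oncological"]
    lines += ["", "Non-oncological findings"] + sections["non_oncological"]
    return "\n".join(lines)


report = build_findings(form_blocks)
print(report)
```

The reporting radiologist keeps working region by region, while the output groups all oncological statements before all non-oncological statements, which is the separation required by the standardised layout.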

Tabulated documentation of tumour burden

Input form elements for integers are used for entering reference lesion diameters. The sum of the diameters of the reference lesions and the absolute and relative change of the sum of the diameters in comparison to the prior imaging examination are calculated automatically.
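The automatic calculation amounts to simple arithmetic on the entered diameters. A minimal sketch in Python, assuming integer diameters in millimetres, is given below; the names `BurdenSummary` and `summarise_burden` as well as the example values are hypothetical and not taken from the application.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class BurdenSummary:
    current_sum_mm: int
    prior_sum_mm: int
    absolute_change_mm: int
    relative_change_pct: Optional[float]  # None if the prior sum is zero


def summarise_burden(current_mm: Sequence[int], prior_mm: Sequence[int]) -> BurdenSummary:
    """Sum reference lesion diameters and compare with the prior examination."""
    current_sum = sum(current_mm)
    prior_sum = sum(prior_mm)
    absolute = current_sum - prior_sum
    # A relative change is undefined when the prior sum is zero.
    relative = None if prior_sum == 0 else 100.0 * absolute / prior_sum
    return BurdenSummary(current_sum, prior_sum, absolute, relative)


# Hypothetical diameters in millimetres for three reference lesions.
summary = summarise_burden(current_mm=[18, 12, 25], prior_mm=[20, 15, 30])
print(summary)
```

In this example the sum of diameters falls from 65 mm to 55 mm, an absolute change of -10 mm and a relative change of roughly -15%.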

Standardised conclusion

The oncological conclusion provides means to ensure that formalities of structure and content including the uniform response terminology are complied with.

Implementation of standardised oncology reports

The main elements of SOR were implemented gradually into clinical routine. First, tabulated documentation of reference lesions was integrated into CR. Second, the uniform terminology for response assessment was added. Third, the standardised layout and the whole concept of SOR were introduced into clinical practice by providing the software application. Starting with selected key users, utilisation of SOR was increased successively over a period of three months (training period). After the training period, the whole imaging department was instructed to use SOR for reporting of computed tomography (CT) and magnetic resonance imaging (MRI) examinations performed for follow-up in cancer patients (implementation period). An internal white paper was issued to serve as reporting guideline and reference in daily practice.

To assess the implementation of SOR into clinical routine, utilisation figures for the training period and the first year of the implementation period were extracted from the radiology information system.

Analysis of reporting quality

Study design

Reporting quality as assessed by medical oncologists was analysed in a retrospective study design comparing SOR with CR.

Report selection for assessing reporting quality

Metastatic colorectal cancer (mCRC) was chosen to serve as a pars pro toto of advanced solid tumour diseases, because mCRC is one of the most frequent tumour diseases in both men and women and shows a rather uniform pattern of tumour spread, which facilitates interindividual comparison. Twenty-five CR and 25 SOR of CT scans of chest, abdomen and pelvis performed for follow-up of mCRC patients were extracted from the radiology information system. The 25 CR were obtained from 25 consecutive mCRC patients who were examined prior to implementation of SOR at our institution. The 25 SOR were obtained from another 25 mCRC patients who were examined after implementation of SOR. The number of reports was exploratory, because data allowing proper sample size calculation on the basis of a comparable type of structured reporting were not available.

Layout of conventional reports

CR created prior to introduction of SOR had a non-harmonised format but typically included a section for anatomy-based description of findings and a section for the conclusion. Presentation of tumour measurements was done at the discretion of the radiologist either in-line within the text or using a table format.

Rating of reports

CR and SOR of all patients were rated individually by six randomly assigned independent physicians (observers) from the internal department of medical oncology. These included three assistant physicians in medical oncology (with 1 year, 3 years and 4 years of professional experience) and three board-approved medical oncologists (with 12 years, 13 years and 15 years of professional experience). The de-identified reports were presented to the observers in a randomised manner.

Reports were rated using a questionnaire that contained six items addressing different elements of reporting quality (Table 2). Items 1 and 2 addressed the clarity of the presentation of oncological and non-oncological findings. Items 3 and 4 addressed the clarity of the presentation of tumour measurements and tumour response. Item 5 addressed the completeness of the report regarding answering the medical question. Item 6 addressed the overall satisfaction of the observer with the report.

For rating of the questionnaire items, a 7-point scale was used with a score of 1 representing the best score. A score lower than or equal to 3 was defined as a positive rating.
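The dichotomisation into positive and non-positive ratings can be written down in a few lines. The following Python sketch is illustrative only; `POSITIVE_THRESHOLD`, `positive_proportion` and the example scores are hypothetical names and values, not study data.

```python
from typing import Iterable

POSITIVE_THRESHOLD = 3  # scores of 1 to 3 count as positive on the 7-point scale


def positive_proportion(scores: Iterable[int]) -> float:
    """Return the fraction of ratings with a score of 3 or lower."""
    values = list(scores)
    if not values:
        raise ValueError("at least one score is required")
    if any(score < 1 or score > 7 for score in values):
        raise ValueError("scores must lie on the 7-point scale (1 to 7)")
    positives = sum(1 for score in values if score <= POSITIVE_THRESHOLD)
    return positives / len(values)


# Hypothetical ratings of one report by six observers.
example_scores = [1, 2, 2, 3, 4, 6]
print(positive_proportion(example_scores))
```

Here four of the six hypothetical ratings are positive, giving a proportion of two thirds.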

Ethics approval

The analysis of reporting quality was approved by the ethics committee of the University of Heidelberg with a waiver of informed consent for patients whose reports were used (S-082/2018). The observers selected for rating the reports consented in written form to study participation and use of their personal data.

Statistics

Scores were compared between SOR and CR using the Mann-Whitney U test. Differences in the proportion of positive ratings (score lower than or equal to 3 compared with score greater than 3) between board-approved medical oncologists and residents as well as between SOR and CR were assessed using the chi-squared test. Interrater agreement was determined using the agreement coefficient 2 (AC2) according to Gwet [16]. In contrast to Cohen’s Kappa, Gwet’s coefficients have been specifically developed for analysis of agreement between more than two raters and are less vulnerable to the interrater agreement paradoxes described by Cicchetti and Feinstein [17]. Levels of agreement were defined using the classification of Landis and Koch [18]. A p value below 0.05 was considered to indicate statistical significance. Statistical calculations were performed using Excel for Mac version 16 (Microsoft Corporation, Redmond, USA) and SPSS version 25 (IBM, Armonk, USA).
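For readers who want to retrace the comparison of proportions, the chi-squared test for a 2 × 2 table can be sketched in plain Python. The calculations in this study were performed in SPSS and Excel; the function `chi_squared_2x2` and the counts in the usage line are hypothetical and serve illustration only. The sketch computes the Pearson statistic without continuity correction, which is an assumption, since the text does not state whether a correction was applied.

```python
import math
from typing import Tuple


def chi_squared_2x2(a: int, b: int, c: int, d: int) -> Tuple[float, float]:
    """Pearson chi-squared test (no continuity correction) for the table [[a, b], [c, d]].

    Rows could be report types (SOR, CR) and columns rating outcomes
    (positive, not positive). Returns the statistic and the p value.
    """
    n = a + b + c + d
    row1, row2 = a + b, c + d
    col1, col2 = a + c, b + d
    if 0 in (row1, row2, col1, col2):
        raise ValueError("every row and column needs at least one observation")
    statistic = n * (a * d - b * c) ** 2 / (row1 * row2 * col1 * col2)
    # With one degree of freedom, the upper tail probability is erfc(sqrt(x / 2)).
    p_value = math.erfc(math.sqrt(statistic / 2))
    return statistic, p_value


# Hypothetical counts: 80 of 100 ratings positive versus 60 of 100.
statistic, p_value = chi_squared_2x2(80, 20, 60, 40)
print(f"chi-squared = {statistic:.2f}, p = {p_value:.4f}")
```

The closed form for the p value holds only for one degree of freedom, which is the case for any 2 × 2 table; larger tables would need the general chi-squared distribution.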

Results

Implementation of SOR into clinical practice

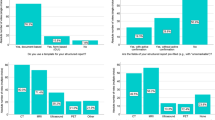

In total, 7471 imaging examinations were reported with SOR after department-wide roll out of SOR in the first 12 months of the implementation period. Figure 2 shows the absolute number of imaging examinations that were reported with SOR on a per-month basis for the training period and the first 12 months of the implementation period. The proportion of SOR in relation to all reports created for one division of the department of medical oncology increased from 49% for the training period to 95% for the first 12 months of the implementation period (Fig. 3a). Of note, these numbers also include examinations of cancer patients that were not performed in the context of oncological follow-up. The proportion of SOR in relation to all reports (oncological and non-oncological reports) created for examinations at CT and MRI scanners used for body imaging was 29% during the first 12 months of the implementation period (Fig. 3b). Multi-region examinations such as CT of chest, abdomen and pelvis are counted as one examination.

Fig. 3 Percentage of structured oncology reports used for oncological follow-up imaging. a The proportion of SOR to all reports generated for a representative division of the department for medical oncology. b The proportion of SOR to all reports generated for scans at the CT and MRI scanners used for body imaging. Month 0 indicates time point of department-wide roll out of structured oncology reports. SOR, structured oncology report; CR, conventional report

Analysis of reporting quality

The scores for items 1 and 2 were significantly better for SOR regarding both oncological findings (item 1; 1.96 ± 1.16 versus 3.02 ± 1.82; p < 0.001) and non-oncological findings (item 2; 2.33 ± 1.41 versus 2.88 ± 1.75; p = 0.018). The proportion of positive ratings (score ≤ 3) was higher for SOR concerning clarity of oncological findings (90% versus 69%; p < 0.001) as well as clarity of non-oncological findings (85% versus 68%; p < 0.001).

The scores for items 3 and 4 were significantly better for SOR regarding both presentation of tumour measurements (item 3; 2.03 ± 1.22 versus 3.39 ± 2.05; p < 0.001) and definition of tumour response categories (item 4; 1.73 ± 1.03 versus 2.89 ± 2.00; p < 0.001). The proportion of positive ratings (score ≤ 3) was higher for SOR concerning presentation of tumour measurements (89% versus 61%; p < 0.001) as well as definition of tumour response categories (92% versus 69%; p < 0.001).

The score for item 5 was significantly better for SOR regarding the sufficiency of the reports for answering the medical question (1.70 ± 1.02 versus 2.74 ± 1.82; p < 0.001). The proportion of positive ratings (score ≤ 3) was higher for SOR (94% versus 72%; p < 0.001).

Overall satisfaction scores were significantly better for SOR (item 6; 2.15 ± 1.32 versus 3.39 ± 2.08; p < 0.001). The proportion of positive ratings (score ≤ 3) was higher for SOR (86% versus 63%).

Averaged over all questionnaire items, scores were significantly better for SOR (1.98 ± 1.22 versus 3.05 ± 1.93; p < 0.001). The proportion of positive ratings (score ≤ 3) was higher for SOR (89% versus 67%; p < 0.001). The proportion of items that were rated positively by all six readers was higher for SOR (53% versus 9%; p < 0.001). Interrater agreement was excellent for SOR (AC2 = 0.812) and moderate for CR (AC2 = 0.561).

The proportion of positive ratings of SOR tended to be higher for board-approved medical oncologists than for assistant physicians (91% versus 87%; p = 0.05). The proportion of positive ratings of conventional reports was higher for assistant physicians than for board-approved medical oncologists (76% versus 58%; p < 0.001).

Figure 4 shows heat maps of the distribution of scores assigned to each item. Figure 5 illustrates the proportions of positive ratings for all items including all readers.

Discussion

We have demonstrated the feasibility of department-wide implementation of an elaborate structured reporting concept for clinical response assessment in patients with advanced solid tumour diseases. SOR was designed primarily for oncological follow-up of metastatic disease and aims to provide a comprehensive diagnostic means to support the oncologist in making the most appropriate treatment decision in due consideration of imaging and clinical course. As the available reporting items resemble the structure of the TNM classification, SOR may also be used for initial staging of newly diagnosed cancers. However, tumour-specific reporting templates are considered more appropriate for initial staging of most cancers in order to reliably address the relevant questions. Within a few months after introduction into clinical practice, SOR replaced CR and now represents the backbone of oncological imaging in our high-volume cancer centre. Establishing SOR went hand in hand with the introduction of an information technology (IT) solution programmed in-house for report creation using web browser forms. Analysis of referring physicians’ satisfaction shows superiority of SOR over CR regarding different elements of report quality. This applies to both senior medical oncologists and assistant physicians in training.

The European Society of Radiology has published several practice guidelines addressing quality standards of radiology reports [19,20,21,22]. However, there is ongoing discussion on how to define structured reporting [23]. With reference to Weiss and Bolos [24], the European Society of Radiology identifies three levels of complexity of structured reporting [22, 24]: The first level comprises a structured format with headings and subheadings. The second level is a consistent organisation ensuring that all relevant aspects of an imaging study are considered. The third level uses a standardised language to improve communication and reusability of radiology reports. Reasons for using structured reporting include improvement of reporting quality as well as datafication and accessibility of radiology reports for scientific purposes [22]. A distinction between standardised reporting and structured reporting has been suggested [23]: Standardised reporting is proposed to represent a means of streamlining the medical content of a radiological report. Structured reporting is proposed to include particular IT to arrange the radiology report [23]. An IT-based reporting tool is thought necessary to support the reporting radiologist by ordering the report into a certain layout (level 1) and by providing predefined medical content (level 2) [23]. Considering this, our reporting concept is supposed to meet the criteria of top-level structured reporting as a specific computer application is being used to provide report templates, to arrange the content and to assist in using standardised language for clinical response assessment. Aside from a uniform terminology in the conclusion, description of findings in the sections for oncological and non-oncological observations is based deliberately on narrative free-text in order to provide the reporting radiologist with sufficient degrees of freedom to portray the individual abnormalities. 
Available data suggest that the combination of free-text and predefined phrasing options is superior to conventional reporting and beneficial for interdisciplinary communication [25]. Bearing this in mind, specification of an objective tumour response category in the conclusion is complemented by a summarising description of oncological findings that is used to also convey a subjective impression of tumour load and gives room to indicate recommendations for further clinical decision-making.

A survey on expectations regarding the radiology report as seen by radiologists and referring clinicians showed need for improving reporting habits [19]. Referring physicians generally prefer structured reporting with an itemised layout over conventional reports [13], although studies with contrary results are available as well [26]. Concerning oncological imaging, most oncologists feel that conventional reports are not sufficient for assessing tumour burden in oncological patients [27] and presentation of tumour measurement data in a tabulated form is preferred [14]. For decision-making in patients with malignant lymphoma, structured reports have proven superior to conventional reports [28]. In line with these findings, our study shows that presentation of tumour measurements, definition of tumour response and satisfaction with answering the medical question were rated better for structured reports than for conventional reports.

In terms of practical implementation, the successful integration of department-wide structured reporting programmes using a step-wise approach and an interdisciplinary agreement has been described before [29, 30]. Olthof et al. have demonstrated that interdisciplinary workflow optimisation including clarification of imaging request forms, subspecialisation of radiologists and structured reporting improves the quality of radiology reports in oncological patients [31]. Gormly reported on experiences with an oncological reporting concept that includes reporting templates with a layout comparable to SOR [32]. However, scepticism towards structured reporting is prevalent among radiologists [33]. Concerns raised include the fear of interference with the natural process of image interpretation, non-feasibility for complex cases and cumbersome utilisation [33]. Our data on implementation of SOR in clinical practice show, however, that compliance with such structured reporting concepts and with structured reporting tools separate from the RIS can be high even in settings with high reporting workload.

Web browser-based reporting tools can be used to generate structured reports. Several authors have used a commercial online reporting solution that is primarily designed to generate structured reports containing semantic sentences using predefined text phrases from itemised point-and-click data entry [5, 9, 28, 34,35,36]. Pinto dos Santos et al. have developed an open-source reporting platform that is compliant with IHE Management of Radiology Report Templates profile and stores report information in an additional database aside from RIS to facilitate data analysis [37].

We used a different software application programmed in-house in order to create a reporting template for oncological follow-up imaging that best fits local clinical and radiological demands and represents the locally approved reporting concept in detail. SOR are compiled within the software application and are exported to the RIS as plain text via copy and paste. SOR are stored in the RIS only and not in an additional SOR database. Scientific analyses of information contained in SOR can be performed using language processing techniques after extracting reports from the RIS. In our opinion, scientific analyses of a separate SOR database would require data consistency with the RIS, in which the report finally has to be cleared. Data consistency, however, is difficult to establish considering (1) an obligatory RIS-based multistep reporting process including primary reporting by an assistant radiologist and approval by a board-approved radiologist and (2) the necessity of a technological means to ensure that the reports in the SOR database and in the RIS database are identical. On the other hand, the lack of a separate SOR database impedes inclusion of data from previous reports into the current report to ease reporting, e.g. inclusion of reference lesion measurements in long-term follow-up.

Limitations

The implementation of our reporting concept has limitations. The influence of SOR implementation on reporting times was not determined. The experiences of radiologists in generating the reports and objective parameters of report turnaround times were not investigated and should be investigated prospectively. For quantitative assessment of long-term follow-up, tumour measurements recorded in reports of previous examinations have to be consulted, because SOR includes only the most recent prior tumour measurements. The objective impact of SOR on clinical decision-making was not assessed. In the analysis of satisfaction with reports, only reports from patients with metastatic colorectal cancer were investigated as a pars pro toto. The number of reports analysed for referring physicians’ satisfaction was small.

Conclusion

Department-wide structured reporting for general follow-up imaging studies performed in metastatic cancer patients for assessment of anticancer treatment efficacy is feasible using a dedicated IT reporting tool. Satisfaction of referring oncologists with report quality is superior for structured reports compared with conventional reports.

Availability of data and materials

The datasets used and analysed during the current study are available from the corresponding author on reasonable request.

Abbreviations

- CR: Conventional report
- CT: Computed tomography
- HTML: HyperText Markup Language
- IT: Information technology
- mCRC: Metastatic colorectal cancer
- MRI: Magnetic resonance imaging
- RECIST: Response Evaluation Criteria In Solid Tumours
- RIS: Radiology information system
- SOR: Structured oncology report

References

Pinto Dos Santos D, Hempel JM, Mildenberger P, Klöckner R, Persigehl T (2018) Structured reporting in clinical routine. Rofo 191:33–39. https://doi.org/10.1055/a-0636-3851

Dunnick NR, Langlotz CP (2008) The radiology report of the future: a summary of the 2007 Intersociety Conference. J Am Coll Radiol 5:626–629

Beets-Tan RGH, Lambregts DMJ, Maas M et al (2018) Magnetic resonance imaging for clinical management of rectal cancer: updated recommendations from the 2016 European Society of Gastrointestinal and Abdominal Radiology (ESGAR) consensus meeting. Eur Radiol 28:1465–1475. https://doi.org/10.1007/s00330-017-5026-2

Al-Hawary MM, Francis IR, Chari ST et al (2014) Pancreatic ductal adenocarcinoma radiology reporting template: consensus statement of the Society of Abdominal Radiology and the American Pancreatic Association. Radiology 270:248–260. https://doi.org/10.1148/radiol.13131184

Nörenberg D, Sommer WH, Thasler W et al (2017) Structured reporting of rectal magnetic resonance imaging in suspected primary rectal cancer. Invest Radiol 52:232–239. https://doi.org/10.1097/RLI.0000000000000336

Sahni VA, Silveira PC, Sainani NI, Khorasani R (2015) Impact of a structured report template on the quality of MRI reports for rectal cancer staging. AJR Am J Roentgenol 205:584–588. https://doi.org/10.2214/AJR.14.14053

Brown PJ, Rossington H, Taylor J et al (2019) Standardised reports with a template format are superior to free text reports: the case for rectal cancer reporting in clinical practice. Eur Radiol 29:5121–5128. https://doi.org/10.1007/s00330-019-06028-8

Brook OR, Brook A, Vollmer CM, Kent TS, Sanchez N, Pedrosa I (2015) Structured reporting of multiphasic CT for pancreatic cancer: potential effect on staging and surgical planning. Radiology 274:464–472. https://doi.org/10.1148/radiol.14140206

Wetterauer C, Winkel DJ, Federer-Gsponer JR et al (2019) Structured reporting of prostate magnetic resonance imaging has the potential to improve interdisciplinary communication. PLoS One 14:e0212444. https://doi.org/10.1371/journal.pone.0212444

Poullos PD, Tseng JJ, Melcher ML et al (2018) Structured reporting of multiphasic CT for hepatocellular carcinoma: effect on staging and suitability for transplant. AJR Am J Roentgenol 210:766–774. https://doi.org/10.2214/AJR.17.18725

Flusberg M, Ganeles J, Ekinci T et al (2017) Impact of a structured report template on the quality of CT and MRI reports for hepatocellular carcinoma diagnosis. J Am Coll Radiol. https://doi.org/10.1016/j.jacr.2017.02.050

Therasse P, Arbuck SG, Eisenhauer EA et al (2000) New guidelines to evaluate the response to treatment in solid tumors. European Organization for Research and Treatment of Cancer, National Cancer Institute of the United States, National Cancer Institute of Canada. J Natl Cancer Inst 92:205–216. https://doi.org/10.1093/jnci/92.3.205

Naik SS, Hanbidge A, Wilson SR (2001) Radiology reports: examining radiologist and clinician preferences regarding style and content. AJR Am J Roentgenol 176:591–598. https://doi.org/10.2214/ajr.176.3.1760591

Travis AR, Sevenster M, Ganesh R, Peters JF, Chang PJ (2014) Preferences for structured reporting of measurement data: an institutional survey of medical oncologists, oncology registrars, and radiologists. Acad Radiol 21:785–796. https://doi.org/10.1016/j.acra.2014.02.008

Eisenhauer EA, Therasse P, Bogaerts J et al (2009) New response evaluation criteria in solid tumours: revised RECIST guideline (version 1.1). Eur J Cancer 45:228–247. https://doi.org/10.1016/j.ejca.2008.10.026

Gwet KL (2016) Testing the difference of correlated agreement coefficients for statistical significance. Educ Psychol Meas 76:609–637. https://doi.org/10.1177/0013164415596420

Cicchetti DV, Feinstein AR (1990) High agreement but low kappa: II. Resolving the paradoxes. J Clin Epidemiol 43:551–558. https://doi.org/10.1016/0895-4356(90)90159-m

Landis JR, Koch GG (1977) The measurement of observer agreement for categorical data. Biometrics 33:159–174

European Society of Radiology (ESR) (2011) Good practice for radiological reporting. Guidelines from the European Society of Radiology (ESR). Insights Imaging 2:93–96. https://doi.org/10.1007/s13244-011-0066-7

European Society of Radiology (ESR) (2013) ESR communication guidelines for radiologists. Insights Imaging 4:143–146. https://doi.org/10.1007/s13244-013-0218-z

European Society of Radiology (ESR) (2012) ESR guidelines for the communication of urgent and unexpected findings. Insights Imaging 3:1–3. https://doi.org/10.1007/s13244-011-0135-y

European Society of Radiology (ESR) (2018) ESR paper on structured reporting in radiology. Insights Imaging 9:1–7. https://doi.org/10.1007/s13244-017-0588-8

Nobel JM, Kok EM, Robben SGF (2020) Redefining the structure of structured reporting in radiology. Insights Imaging 11:10–15. https://doi.org/10.1186/s13244-019-0831-6

Weiss DL, Bolos PR (2009) Reporting and Dictation. In: Branstetter B (ed) Practical imaging informatics. Springer Science & Business Media, pp 147–162

Schwartz LH, Panicek DM, Berk AR, Li Y, Hricak H (2011) Improving communication of diagnostic radiology findings through structured reporting. Radiology 260:174–181. https://doi.org/10.1148/radiol.11101913

Johnson AJ, Chen MY, Zapadka ME, Lyders EM, Littenberg B (2010) Radiology report clarity: a cohort study of structured reporting compared with conventional dictation. J Am Coll Radiol 7:501–506. https://doi.org/10.1016/j.jacr.2010.02.008

Folio LR, Nelson CJ, Benjamin M, Ran A, Engelhard G, Bluemke DA (2015) Quantitative radiology reporting in oncology: survey of oncologists and radiologists. AJR Am J Roentgenol 205:W233–W243. https://doi.org/10.2214/AJR.14.14054

Schoeppe F, Sommer WH, Nörenberg D et al (2018) Structured reporting adds clinical value in primary CT staging of diffuse large B-cell lymphoma. Eur Radiol 28:3702–3709. https://doi.org/10.1007/s00330-018-5340-3

Larson DB, Towbin AJ, Pryor RM, Donnelly LF (2013) Improving consistency in radiology reporting through the use of department-wide standardized structured reporting. Radiology 267:240–250. https://doi.org/10.1148/radiol.12121502

Goldberg-Stein S, Walter WR, Amis ES Jr, Scheinfeld MH (2016) Implementing a structured reporting initiative using a collaborative multi-step approach. Curr Probl Diagn Radiol 46:295–299. https://doi.org/10.1067/j.cpradiol.2016.12.004

Olthof AW, Borstlap J, Roeloffzen WW, van Ooijen PMA (2018) Improvement of radiology reporting in a clinical cancer network: impact of an optimised multidisciplinary workflow. Eur Radiol 28:1–7. https://doi.org/10.1007/s00330-018-5427-x

Gormly KL (2009) Standardised tumour, node and metastasis reporting of oncology CT scans. J Med Imaging Radiat Oncol 53:345–352. https://doi.org/10.1111/j.1754-9485.2009.02090.x

Weiss DL, Langlotz CP (2008) Structured reporting: patient care enhancement or productivity nightmare? Radiology 249:739–747. https://doi.org/10.1148/radiol.2493080988

Gassenmaier S, Armbruster M, Haasters F et al (2017) Structured reporting of MRI of the shoulder - improvement of report quality? Eur Radiol 27:4110–4119. https://doi.org/10.1007/s00330-017-4778-z

Schöppe F, Sommer WH, Schmidutz F et al (2018) Structured reporting of x-rays for atraumatic shoulder pain: advantages over free text? BMC Med Imaging 18:20. https://doi.org/10.1186/s12880-018-0262-8

Sabel BO, Plum JL, Czihal M et al (2018) Structured reporting of CT angiography runoff examinations of the lower extremities. Eur J Vasc Endovasc Surg 55:679–687. https://doi.org/10.1016/j.ejvs.2018.01.026

Dos Santos DP, Klos G, Kloeckner R, Oberle R, Dueber C, Mildenberger P (2016) Development of an IHE MRRT-compliant open-source web-based reporting platform. Eur Radiol. https://doi.org/10.1007/s00330-016-4344-0

Funding

Open access funding provided by Projekt DEAL.

Author information

Contributions

TFW: Design of the work; acquisition, analysis and interpretation of data; creation of software; and drafting the work. MS: Acquisition, analysis and interpretation of data and drafting the work. FCH: Analysis and interpretation of data and drafting the work. OS: Design of the work and substantial revision of the work. GMH: Design of the work and substantial revision of the work. CS: Design of the work and substantial revision of the work. TM: Design of the work and substantial revision of the work. DJ: Design of the work and substantial revision of the work. HUK: Design of the work and substantial revision of the work. AKB: Design of the work, interpretation of data, creation of software and drafting the work. The authors read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

The study was approved by the ethics committee of the University of Heidelberg with a waiver of informed consent for patients (S-082/2018). The observers selected for rating the reports consented in written form to study participation and use of their personal data.

Consent for publication

Not applicable.

Competing interests

There are no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Weber, T.F., Spurny, M., Hasse, F.C. et al. Improving radiologic communication in oncology: a single-centre experience with structured reporting for cancer patients. Insights Imaging 11, 106 (2020). https://doi.org/10.1186/s13244-020-00907-1

DOI: https://doi.org/10.1186/s13244-020-00907-1