Abstract

Background

Children with autism spectrum conditions (ASC) have emotion recognition deficits when tested in different expression modalities (face, voice, body). However, these findings usually focus on basic emotions, using one or two expression modalities. In addition, cultural similarities and differences in emotion recognition patterns in children with ASC have not been explored before. The current study examined the similarities and differences in the recognition of basic and complex emotions by children with ASC and typically developing (TD) controls across three cultures: Israel, Britain, and Sweden.

Methods

Fifty-five children with high-functioning ASC, aged 5–9, were compared to 58 TD children. At each site, groups were matched on age, sex, and IQ. Children were tested using four tasks examining recognition of basic and complex emotions from voice recordings, videos of facial and bodily expressions, and emotional video scenarios including all modalities in context.

Results

Compared to their TD peers, children with ASC showed emotion recognition deficits for both basic and complex emotions in all three modalities and in their integration in context. Across the entire sample, complex emotions were harder to recognize than basic emotions. Cross-cultural agreement was found for all major findings, with minor deviations on the face and body tasks.

Conclusions

Our findings highlight the multimodal nature of emotion recognition (ER) deficits in ASC, which exist for basic as well as complex emotions and are relatively stable cross-culturally. Cross-cultural research has the potential to reveal both universal autism-specific deficits and the role that specific cultures play in the way empathy operates in different countries.


Background

Autism spectrum conditions (ASC) are neurodevelopmental conditions characterized by social communication and interaction difficulties, circumscribed interests, and a preference for sameness and repetition. Individuals with ASC experience difficulties processing and interpreting socio-emotional cues [1–3]. These emotion recognition (ER) difficulties are considered part of the social communication difficulties characteristic of ASC [4] and predict adaptive socialization skills [5]. The recognition of others’ emotions and mental states relies on the integration of emotional cues from various channels. These include facial expressions, vocal intonation, and body language, as well as contextual information [6].

Due to its centrality in emotional expression, the majority of ER research has focused on facial expression. In typical development (TD), the skills necessary to recognize and discriminate between emotional expressions are present from infancy [7, 8]. Throughout development, facial ER improves, with children gradually becoming “emotion experts” [9]. In contrast, children and adults with ASC show reduced attention to faces and facial expressions [10–13]. Altered attention, specifically to the eye region of the face [14, 15], has a significant effect on facial affect recognition in individuals with ASC [16]. In addition, children with ASC process faces in a piecemeal fashion rather than holistically [17–19] (see [20], for a different perspective). Consequently, children with ASC show reduced “face expertise” [11].

Some studies of facial ER have reported deficits in participants with ASC [21–24]. Other studies, however, have found facial emotion recognition skills in children with ASC to be equal to those of TD peers [25–28]. A recent review of facial expression recognition in ASC proposed that these discrepancies depend on participant demographics, task selection, and stimulus type [29]. Despite the extensive research on emotion recognition from facial expressions in ASC, most studies have used still rather than dynamic stimuli [29, 30], allowed participants unlimited exposure to stimuli [31–33], or limited their research to a narrow set of basic emotions [34, 35].

Another important channel of emotional information is prosody, which includes vocal intonation, stress, and rhythm [36]. Prosody plays an important role in linguistic functions as well as in emotional expression and comprehension [37, 38]. Whereas considerable research has documented the deficits children with ASC have in language production and comprehension, relatively few studies have examined the abilities of these children to recognize emotions through prosody [38, 39]. Studies on ER from prosody in individuals with ASC have yielded mixed results. Some studies have reported that adults or children with ASC experienced difficulties in using emotional prosody to identify the emotions of others [22, 36, 38, 40–43]. In contrast, several studies have reported that the emotional prosody perception in individuals with ASC is similar to that of TD controls [37, 44–47]. These mixed results could be due to methodological reasons [45], such as the use of cross-modal (e.g., face-voice) paradigms [48, 49] or the use of speech stimuli in which ER could rely on linguistic, rather than prosodic cues [3, 50].

A third channel of relevant emotional cues is body language, which includes gestures and postural changes. TD infants as young as 4 to 6 months show distinct attention to human biological motion [51]. At 2 years of age, children already rely on body language cues to predict the behavior of people they are watching [52], understand the goals of actions they observe [53], and anticipate people’s intentions [54]. At 3 years of age, children can verbally interpret biological motion, an ability that peaks by about 5 years of age [55]. Finally, children as young as 4 years successfully use body language to recognize social interactions, an ability that peaks by the age of 7 to 8 years [56].

In ASC, studies demonstrate difficulties discriminating biological from non-biological motion [57–59] and no preference for biological motion over object motion [60, 61]. Studies of emotional body language comprehension in ASC are scarce. Existing studies have reported that individuals with ASC, compared to TD controls, have difficulty recognizing emotional, but not non-emotional, motion from point-light displays [62, 63]. Children with ASC can distinguish social from non-social scenes based solely on whole-body movements presented in point-light and stick-figure displays [56], but fail to interpret the nature of the actions presented, especially in the case of social scenes [56, 64–66]. Hence, the body motion recognition deficit in ASC appears to be restricted to percepts requiring mentalization and emotional interpretation [67–69].

Most studies that examined the ability of people with ASC to understand body language have either used stimuli such as point-light displays [67–69] or stick figures [56] or limited their investigation to a narrow set of basic emotions [24, 70]. It is therefore important to investigate whether this documented deficit exists in full body video stimuli, demonstrating a wider range of emotions, including complex ones.

In addition to the unimodal emotion-processing deficits described above, individuals with ASC show problems integrating information across multiple modalities [71]. Many of the atypical perceptual experiences reported by people with ASC may stem from an inability to efficiently filter, process, and integrate information from different modalities, when presented simultaneously [72].

Most studies that examine multimodal processing focus on the integration of auditory and visual social stimuli linked to communication, such as speech and its corresponding lip movements [73, 74]. The results of most of these studies indicate that the ability to integrate audiovisual social stimuli is impaired in individuals with ASC [75–78]. On the other hand, there is little research on the integration of information from facial expressions and body language or from multiple modalities in context. Multimodal integration ER studies reveal that presenting emotional cues in different channels does not necessarily help, and may even hamper the ability of individuals with ASC to recognize emotions and mental states [79, 80]. Previous studies of multimodal ER in ASC have shown deficits in adults [81] and in children [82]. However, the stimuli presented in these studies included verbal content, in addition to the visual and auditory emotional cues. Since individuals with ASC rely on verbal content as a compensatory mechanism [25], an examination of multimodal ER in children with ASC, in which stimuli have no verbal content to rely on, is desirable. In the absence of a linguistic component, such stimuli could also be used for cross-cultural comparison of ER in ASC.

As described above, studies showing no ER differences between TD individuals and individuals with ASC focus mostly, if not solely, on basic emotions [24, 34, 35, 70]. The six emotions referred to as “basic” (happiness, sadness, fear, anger, surprise, and disgust) are suggested to be cross-culturally expressed and recognized [83] and are to some extent neurologically distinct [84]. Unlike basic emotions, complex emotions are considered more context and culture dependent [85, 86]. They involve attributing a cognitive state as well as an emotion and may be belief- rather than situation-based [87]. Studies of basic ER in children with ASC have yielded mixed results, with some reporting a basic ER deficit in this group [21, 49, 88] and others reporting no difficulties in recognizing basic emotions [25, 26, 28]. In contrast, studies investigating the recognition of complex emotions and other mental states by children with ASC, compared to TD children, have shown more conclusive deficits [3, 89]. However, no study so far has directly compared basic and complex ER in children with ASC and their TD peers.

In terms of cross-cultural ER differences, meta-analyses have documented evidence for an in-group advantage, in that emotional expressions are more accurately recognized by individuals within the same culture, versus other cultures [90, 91]. However, individuals with ASC have been found to have poorer understanding of emotional cues (as shown above), as well as lower sensitivity to social cues [10] and lower social conformity [92]. Since individuals with ASC are less socio-emotionally sensitive within their own cultures, it is possible that cross-cultural differences in ER, if found, would be smaller in the ASC groups than in the TD groups.

The current study aimed to compare the recognition of the six basic and 12 complex emotions by children with ASC and TD controls. We examined ER unimodally through faces, voices, and body language, and multimodally through integrative scenarios with no verbal content, and tested basic and complex ER cross-culturally in three countries: Israel, Britain, and Sweden. We assessed differences between 5- to 9-year-old children with autism in the average IQ range and TD controls comparable in age, sex, and IQ. We predicted that (a) the ASC group would have lower scores on the different ER tasks, compared to controls; (b) basic emotions would be recognized more easily than complex emotions; (c) the group differences would be greater for complex ER than for basic ER; (d) cultural differences between the three sites would be greater for complex ER than for basic ER; and (e) cultural differences on ER would be greater in the TD groups than in the ASC groups.

Methods

Instruments

Intelligence

Two subtests from the Wechsler Intelligence Scales, Vocabulary and Block Design, were used, representing verbal and performance IQ. In Britain and Sweden, subtests were taken from the locally standardized versions of the Wechsler Abbreviated Scale of Intelligence, second edition (WASI-2) [93]. In Israel, they were taken from the fourth edition of the Wechsler Intelligence Scale for Children [94] or the third edition of the Wechsler Preschool and Primary Scale of Intelligence (WPPSI-3) [95], depending on the child’s age.

Autistic traits

The school-age form (4 to 18 years) of the Social Responsiveness Scale, second edition (SRS-2) [96], was used to assess the severity of autistic traits. The SRS-2 applies a dimensional concept of autism and measures social awareness, social communication, social motivation, social cognition, and inflexible behaviors. It includes 65 items, each scored on a four-point Likert scale from 0 (“not true”) to 3 (“almost always true”), yielding a maximum raw score of 195. It has demonstrated good to excellent reliability and validity [96] and good intercultural validity [97].
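The raw-score arithmetic behind the 0–195 range can be sketched as follows. This is a minimal illustration, assuming item responses are already coded 0–3 and that any reverse-keyed items were recoded beforehand; the function name is hypothetical, and normed T-scores come from the SRS-2 manual, not from this code.

```python
from typing import Sequence

N_ITEMS = 65   # number of SRS-2 items, as stated in the text
MAX_ITEM = 3   # items are scored 0 ("not true") to 3 ("almost always true")


def srs2_raw_total(items: Sequence[int]) -> int:
    """Sum SRS-2 item scores into a raw total between 0 and 195.

    Assumes reverse-keyed items were already recoded so that higher values
    always indicate more autistic traits (keying is not reproduced here).
    """
    if len(items) != N_ITEMS:
        raise ValueError(f"expected {N_ITEMS} item scores, got {len(items)}")
    for i, score in enumerate(items, start=1):
        if not 0 <= score <= MAX_ITEM:
            raise ValueError(f"item {i}: score {score} outside 0-{MAX_ITEM}")
    return sum(items)


print(srs2_raw_total([MAX_ITEM] * N_ITEMS))  # maximum: 65 x 3 = 195
```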

Facial affect recognition

The Frankfurt Test and Training of Facial Affect Recognition (FEFA-2) [98, 99] is a computerized test, examining facial ER of the six basic emotions (and neutral). The normed FEFA-2 test module comprises a series of 50 items for faces, showing good to excellent internal consistency and stability. The FEFA-2 was used in this study as a convergent validator for the test battery.

Emotion recognition battery

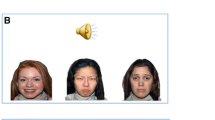

Emotion recognition was tested using four tasks, comprising facial expression videos, decontextualized vocal utterances, body language videos, and integrative video clips, presenting all three modalities in context. The battery is based on stimuli from several sources: The face task comprised 5-s long video clips from Mindreading (www.jkp.com/mindreading) [100]. The voice and body tasks comprised 1- to 3-s long audio clips and 4- to 24-s long video clips from The EU-Emotion Stimulus Set [101, 102]. The integrative task used 3- to 19-s long sampled scenes from old television series, following Golan et al. [82].

The tasks assessed ER for the six basic emotions (happy, sad, afraid, angry, disgusted, surprised) and for 12 complex emotions (interested, bored, excited, worried, disappointed, frustrated, proud, ashamed, kind, unfriendly, joking, hurt). Clips representing each emotion in all modalities were sampled from the above sources. Audio clips used neutral utterances (e.g., “what’s all this?”) spoken with the appropriate emotional prosody and recorded in the native language at each of the three sites [101, 102]. In the body language clips, faces were masked to prevent reliance on facial cues. In the integrative clips, the soundtrack was muffled so that only paralinguistic information was available. Stimuli for the tasks were selected from an extended cross-cultural ER survey in which each stimulus was validated by 60 adults (20 from each site), who identified each stimulus by choosing the correct emotion label out of six options [101, 102]. Stimuli were considered valid if recognized correctly by at least 50% of the judges (p < .00001, binomial test). For each selected stimulus, the six-label scale was then reduced to four emotion labels, the target emotion label and three foils, to make the task more suitable for children. One of the foils was always of the opposite valence to the target emotion (e.g., afraid as a foil for a proud target), and the other two were of the same valence as the target (e.g., excited and interested as foils for a proud target). Label order was counterbalanced across items.
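The binomial criterion for the adult validation survey can be illustrated with an exact one-sided test against chance. This sketch assumes that the 60 judges were pooled, that chance performance is 1/6 (six labels), and that the test was one-sided; the text does not state these details, and `binom_upper_tail` is a hypothetical helper rather than a library function.

```python
from math import comb


def binom_upper_tail(k: int, n: int, p: float) -> float:
    """Exact one-sided binomial p-value, P(X >= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


# Assumed setup: 60 pooled judges, six labels (chance = 1/6), and a
# retention criterion of at least 50% correct (30 of 60 judges).
N_JUDGES = 60
CHANCE = 1 / 6
K_MIN = N_JUDGES // 2

p_value = binom_upper_tail(K_MIN, N_JUDGES, CHANCE)
print(f"P(X >= {K_MIN}) = {p_value:.1e}")  # far below .00001
```

With four labels (chance = .25), the same helper gives P(X ≥ 6) ≈ .0197 for 10 children, consistent with the p < .02 stated for the Swedish item-analysis criterion.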

Next, item analysis was conducted with 20 TD children in Israel, 20 in Britain, and 10 in Sweden, aged 5 to 9 (half boys and half girls). Items were approved separately for each site if the target answer was selected by at least 50% of the children in Israel and in Britain (p < .01, binomial test) and by at least 60% in Sweden (p < .02, binomial test), and if none of the foils was selected by more than 40% of the children.
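The site-specific approval rule can be written as a small predicate. This is a hypothetical sketch: the function name, the response-count format, and the example counts are illustrative, while the thresholds (50% in Israel and Britain, 60% in Sweden, at most 40% for any foil) are taken from the text above.

```python
from typing import Mapping

# Minimum proportion choosing the target label, per site (from the text)
MIN_TARGET_RATE = {"Israel": 0.50, "Britain": 0.50, "Sweden": 0.60}
MAX_FOIL_RATE = 0.40  # no foil may be chosen by more than 40% of children


def item_approved(counts: Mapping[str, int], target: str, site: str) -> bool:
    """Return True if an item passes the site's item-analysis criteria.

    `counts` maps each of the four answer labels to the number of children
    who chose it (a hypothetical data layout).
    """
    n = sum(counts.values())
    if n == 0:
        raise ValueError("no responses recorded")
    target_ok = counts.get(target, 0) / n >= MIN_TARGET_RATE[site]
    foils_ok = all(
        c / n <= MAX_FOIL_RATE for label, c in counts.items() if label != target
    )
    return target_ok and foils_ok


# Hypothetical pilot counts for a "proud" item
print(item_approved({"proud": 6, "excited": 2, "interested": 1, "afraid": 1}, "proud", "Sweden"))  # True
print(item_approved({"proud": 10, "excited": 9, "interested": 1, "afraid": 0}, "proud", "Israel"))  # False: a foil drew 45%
```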

For the face task, 77.5% of the items met these criteria in Israel (with an average recognition rate of M = 74.0%, s.d. = 12.1%), 86.5% met them in Britain (recognition rate: M = 76.0%, s.d. = 12.4%), and 82.1% met them in Sweden (recognition rate: M = 80.0%, s.d. = 12.7%). For the voice task, 78.8% of the items met the criteria in Israel (recognition rate: M = 77.3%, s.d. = 13.6%), 91.7% in Britain (recognition rate: M = 75.8%, s.d. = 14.6%), and 89.47% met them in Sweden (recognition rate: M = 80.3%, s.d. = 10.7%). For the body task, 75.0% of the items met the criteria in Israel (recognition rate: M = 76.7%, s.d. = 9.3%), 90.3% in Britain (recognition rate: M = 81.3%, s.d. = 12.6%), and 85.3% met them in Sweden (recognition rate: M = 86.6%, s.d. = 9.9%). For the integrative task, 88.6% of the items met the criteria in Israel (recognition rate: M = 77.5%, s.d. = 12.2%), 95.5% in Britain (recognition rate: M = 81.34%, s.d. = 14.0%), and 87.5% met them in Sweden (recognition rate: M = 83.1%, s.d. = 13.7%).

Items that did not meet the inclusion criteria on the first item analysis round had their stimulus clip replaced, and the item analysis process was repeated until criteria were met. This resulted in slightly different stimuli being used in the face, body, and integrative tasks on the different sites (four items were different on the face task, one on the body task and one on the integrative task).

Following these steps, four ER tasks were created (face, voice, body, and integrative). In the face, voice, and integrative tasks, each emotion was represented by two clips, for a total of 36 items (score range 0–36) per task. The body language task included only 24 items (score range 0–24), two per emotion, covering the six basic emotions and six of the complex emotions (proud, worried, excited, disappointed, frustrated, bored). The other complex emotions were not represented in this task, as they required more than one individual to convey the emotion (e.g., unfriendly). Figure 1 presents screenshots of the three visual tasks.
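The resulting item structure, and the score ranges it implies, can be checked with a short script. The emotion lists come from the text; `task_items` and the (task, emotion, clip) tuple layout are hypothetical conveniences for illustration.

```python
BASIC = ["happy", "sad", "afraid", "angry", "disgusted", "surprised"]
COMPLEX = ["interested", "bored", "excited", "worried", "disappointed", "frustrated",
           "proud", "ashamed", "kind", "unfriendly", "joking", "hurt"]
# Complex emotions kept in the body task (the others need more than one actor)
BODY_COMPLEX = ["proud", "worried", "excited", "disappointed", "frustrated", "bored"]
CLIPS_PER_EMOTION = 2


def task_items(task: str) -> list[tuple[str, str, int]]:
    """List (task, emotion, clip number) for every item in one task."""
    emotions = BASIC + (BODY_COMPLEX if task == "body" else COMPLEX)
    return [(task, emo, clip)
            for emo in emotions
            for clip in range(1, CLIPS_PER_EMOTION + 1)]


for task in ["face", "voice", "body", "integrative"]:
    items = task_items(task)
    n_basic = sum(emo in BASIC for _, emo, _ in items)
    # Columns: task, total items (= maximum score), basic items, complex items
    print(task, len(items), n_basic, len(items) - n_basic)
```

Running it lists 36 items (12 basic, 24 complex) for the face, voice, and integrative tasks and 24 items (12 basic, 12 complex) for the body task.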

The ER battery was validated through correlation analysis between the four ER task scores and participants’ age, verbal and non-verbal abilities, level of autistic symptoms on the SRS-2, and FEFA-2 scores, as a convergent validator. Task intercorrelations were also computed. Bonferroni’s correction for multiple correlations was used. The correlations are presented in Table 1.
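A minimal sketch of the two computations named here, a Pearson correlation and Bonferroni adjustment of a family of p-values, is given below in plain Python. The helper names and the example data are hypothetical, and the text does not state how many correlations formed the correction family; in practice the per-correlation p-values would come from a statistics package (e.g., `scipy.stats.pearsonr`).

```python
from math import sqrt
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson product-moment correlation between two equal-length samples."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two samples of equal length >= 2")
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def bonferroni(p_values: Sequence[float]) -> list[float]:
    """Bonferroni-adjusted p-values: multiply each by the number of tests, cap at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


ages = [5.1, 5.8, 6.4, 7.0, 7.7, 8.3, 8.9]   # hypothetical ages
scores = [14, 15, 19, 18, 22, 25, 27]        # hypothetical task scores
print(f"r = {pearson_r(ages, scores):.2f}")
print(bonferroni([0.001, 0.02, 0.4]))
```

Multiplying each p-value by the number of tests m is equivalent to comparing the unadjusted p-values with α/m.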

As shown in the table, tasks were moderately intercorrelated (ranging between r = .61 and .75) and, in addition, had moderate positive correlations with participants’ age (ranging between r = .44 and .51) and verbal ability (ranging between r = .35 and .52). Non-verbal ability was only correlated with the face task scores (r = .26, p < .01). All tasks were negatively correlated with participants’ level of autistic symptoms (ranging between r = −.29 and −.50) and positively correlated with the FEFA-2 (range: r = .46–.54).

Participants

The study was approved by the Psychology Research Ethics Committee at Cambridge University, the Institutional Review Board at Bar-Ilan University, and the Regional Board of Ethical Vetting Stockholm. Participants’ assent and parents’ informed consent were obtained before inclusion in the study. One hundred thirteen children, aged 5–9 years, took part in the study. The Israeli sample comprised 20 children in the ASC group and 22 in the TD group. The British sample comprised 16 children with ASC and 18 with TD. In Sweden, 19 children with ASC were compared to 18 children with TD. Participants with ASC had all been clinically diagnosed according to DSM-IV-TR or ICD-10 criteria [103, 104], and each diagnosis was corroborated by meeting the ASC cutoff on the second edition of the Autism Diagnostic Observation Schedule (ADOS-2) [105]. Participants with ASC were recruited from volunteer databases, local clinics for children with ASC, special education classes and kindergartens, internet forums, and support organizations for individuals with ASC.

Participants in the TD groups were recruited from local primary schools and kindergartens. Parents reported that their children had no psychiatric diagnoses or special educational needs and that no family member had been diagnosed with ASC. To screen for ASC in the TD group, parents completed the Childhood Autism Spectrum Test (CAST) [106]; none of the TD participants scored above the cutoff of 15. Within each country, groups were comparable on age, gender, and standard scores on the Vocabulary and Block Design subtests of the Wechsler scales [93–95]. In addition, the ASC groups were comparable to one another on SRS-2 scores across countries, as were the TD groups. The groups’ background data appear in Table 2.

Procedure

The research team met each child one to three times for assessment. In Israel, meetings took place at the children’s homes. In Britain, some meetings were held at children’s homes and some at the Autism Research Centre in Cambridge. In Sweden, meetings took place at the clinical research department KIND. All participants were tested individually. Parents completed the SRS-2. The Wechsler subtests, the four computerized ER tasks, the FEFA-2, and the ADOS-2 (children with ASC only) were administered to the children.

The ER tasks were administered in counterbalanced order. Each task was preceded by two practice items. The experimenter read aloud the instructions and the questions for all items to avoid confounds due to reading difficulties. Answer options were read aloud in a neutral intonation, and the children were asked whether they were familiar with all of them. If a child was not familiar with a word, it was defined and demonstrated using a definition handout (e.g., unfriendly: to be not nice to someone. John was unfriendly to Paul. He told him he didn’t want to play with him). There was no time limit for answering each item, but participants could watch or listen to each clip only once. Completing the whole ER battery took 1.5–2.5 h, including breaks.
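The text states only that task order was counterbalanced. One common way to do this for four tasks is a balanced (Williams) Latin square, sketched below under that assumption; the scheme, the function name, and cycling participants through the rows are illustrative, not the study's documented procedure.

```python
def balanced_latin_square(n: int) -> list[list[int]]:
    """Williams balanced Latin square for an even number of conditions.

    Each condition appears once in every position, and each condition
    immediately precedes every other condition exactly once.
    """
    if n % 2:
        raise ValueError("this simple construction needs an even n")
    # First row interleaves from both ends: 0, 1, n-1, 2, n-2, ...
    first = [0]
    lo, hi = 1, n - 1
    while len(first) < n:
        first.append(lo)
        lo += 1
        if len(first) < n:
            first.append(hi)
            hi -= 1
    # Each later row shifts every entry by one (mod n)
    return [[(c + r) % n for c in first] for r in range(n)]


TASKS = ["face", "voice", "body", "integrative"]
orders = balanced_latin_square(len(TASKS))
for participant in range(8):  # hypothetical participant indices
    order = orders[participant % len(orders)]
    print(participant, [TASKS[i] for i in order])
```

With four tasks, the first row is face, voice, integrative, body, and every task appears in every serial position equally often across each block of four participants.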

Statistical analysis

Because each task included different numbers of basic and complex emotions, we computed, for each participant, the proportion of correctly recognized basic and complex emotions in each task. Average basic and complex proportion scores for the groups in the three countries appear in Table 3. After confirming that the assumptions of MANCOVA were met, a repeated-measures multivariate analysis of covariance (MANCOVA) was computed, with the accuracy proportion scores of the four tasks (face, voice, body, and integrative) as dependent variables, complexity (basic, complex) as the within-subject factor, and group (ASC, TD) and country (Israel, Britain, Sweden) as between-subject factors. Since participants in the three countries differed in age and verbal ability, these two variables were entered as covariates. Pairwise comparisons with Bonferroni’s correction were used to follow up interaction effects.
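Proportion scoring matters because raw totals weight complex emotions more heavily in the face, voice, and integrative tasks (24 complex versus 12 basic items). A minimal sketch, assuming each trial is recorded as a hypothetical (target emotion, answered correctly) pair; the function name and data layout are illustrative.

```python
from typing import Iterable, Tuple

BASIC = frozenset({"happy", "sad", "afraid", "angry", "disgusted", "surprised"})


def proportion_scores(trials: Iterable[Tuple[str, bool]]) -> Tuple[float, float]:
    """Return (basic, complex) accuracy proportions for one participant on one task."""
    counts = {"basic": [0, 0], "complex": [0, 0]}  # [correct, total] per complexity level
    for emotion, correct in trials:
        key = "basic" if emotion in BASIC else "complex"
        counts[key][0] += int(correct)
        counts[key][1] += 1
    # Dict order is preserved, so the result is (basic, complex)
    return tuple(c / t if t else float("nan") for c, t in counts.values())


# Hypothetical face-task record: 9/12 basic and 12/24 complex items correct
trials = ([("happy", True)] * 9 + [("sad", False)] * 3
          + [("proud", True)] * 12 + [("bored", False)] * 12)
print(proportion_scores(trials))  # (0.75, 0.5)
```

In this hypothetical example, the raw score of 21/36 hides the contrast that the proportions (.75 vs .50) make visible.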

Results

Overall analysis

The MANCOVA yielded significant overall main effects of group (F[4,102] = 14.70, p < .001, η² = .37) and complexity (F[4,102] = 10.49, p < .001, η² = .29), but not of country (F[8,204] = 1.73, n.s.). Both age (F[4,102] = 11.82, p < .001, η² = .32) and verbal ability (F[4,102] = 8.11, p < .001, η² = .24) had significant overall effects as covariates. None of the three two-way interactions was significant: group by country (F[8,204] = 1.41, n.s.), group by complexity (F[4,102] = 1.56, n.s.), and country by complexity (F[8,204] = 1.90, n.s.). However, the three-way group by country by complexity interaction was significant (F[8,204] = 2.29, p < .05, η² = .08). In addition, complexity interacted significantly with age (F[4,102] = 5.93, p < .001, η² = .19).
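The reported effect sizes can be recovered from the F ratios and their degrees of freedom. This sketch assumes partial η² and, for the multivariate tests, Wilks' lambda with Rao's F approximation, for which partial η² = 1 − Λ^(1/t) = F·df1 / (F·df1 + df2). The text does not name the multivariate statistic, but with N = 113, six group-by-country cells, and two covariates (error df = 113 − 6 − 2 = 105), this assumption reproduces the reported F[4,102] and F[8,204] degrees of freedom. Function names are hypothetical.

```python
from math import sqrt


def wilks_rao_df(p: int, df_h: int, df_e: int) -> tuple[int, float]:
    """Degrees of freedom of Rao's F approximation to Wilks' lambda.

    p: number of dependent variables; df_h: hypothesis df; df_e: error df.
    """
    denom = p**2 + df_h**2 - 5
    t = sqrt((p**2 * df_h**2 - 4) / denom) if denom > 0 else 1.0
    w = df_e + df_h - (p + df_h + 1) / 2
    df1 = p * df_h
    df2 = w * t - df1 / 2 + 1
    return df1, df2


def partial_eta_squared(f: float, df1: float, df2: float) -> float:
    """Partial eta squared implied by an F ratio and its degrees of freedom."""
    return f * df1 / (f * df1 + df2)


N, CELLS, COVARIATES, N_DVS = 113, 6, 2, 4
df_error = N - CELLS - COVARIATES              # 105
print(wilks_rao_df(N_DVS, 1, df_error))        # (4, 102.0): 1-df effects
print(wilks_rao_df(N_DVS, 2, df_error))        # (8, 204.0): 2-df effects

reported = {
    # effect: (F, df1, df2, reported eta squared)
    "group": (14.70, 4, 102, 0.37),
    "complexity": (10.49, 4, 102, 0.29),
    "age (covariate)": (11.82, 4, 102, 0.32),
    "verbal ability (covariate)": (8.11, 4, 102, 0.24),
    "group x country x complexity": (2.29, 8, 204, 0.08),
    "age x complexity": (5.93, 4, 102, 0.19),
}
for effect, (f, df1, df2, eta_rep) in reported.items():
    eta = partial_eta_squared(f, df1, df2)
    print(f"{effect:30s} recomputed {eta:.2f}, reported {eta_rep:.2f}")
```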

Analysis per ER task

Effects of the analysis per ER task are detailed in Table 4. The group and complexity main effects found in the overall analysis were also significant for every task: the TD group performed better than the ASC group over and above complexity and country, and basic emotions were recognized significantly better than complex emotions over and above group and country. Age had a significant effect on all ER tasks, and verbal ability on all tasks but the face task. Similarly, the age by complexity interaction was significant for all ER tasks except the face task. The three-way group by country by complexity interaction was significant only for the face task, suggesting that this task is the source of the interaction found in the overall analysis.

For the face task, pairwise comparisons revealed that the TD group performed significantly better than the ASC group in Israel and in Britain for both basic (Israel: mean difference = .15, s.e. = .04, p < .001; Britain: mean difference = .17, s.e. = .05, p < .001) and complex (Israel: mean difference = .11, s.e. = .04, p < .01; Britain: mean difference = .13, s.e. = .04, p < .01) emotions. In Sweden, however, group differences were found for complex emotions (mean difference = .13, s.e. = .04, p < .01) but not for basic emotions (mean difference = .02, s.e. = .04, n.s.). Figure 2 illustrates these effects in the three countries.

An examination of the age by complexity interaction in the different ER tasks revealed that the interaction was significant for all tasks except the face task. To examine the interaction further, bivariate correlations of age with the basic and complex ER scores of each task were computed with Bonferroni’s correction, and the correlations of age with basic and with complex ER were compared within each task. The results, detailed in Table 5, show that, except for the face task, age was more strongly correlated with complex ER than with basic ER in all tasks.
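The text does not name the test used to compare the two age correlations within a task. Because both correlations share one variable (age) and come from the same children, a test for dependent, overlapping correlations is needed; the sketch below assumes Williams' t, a standard test for this case, and the correlation values in the usage line are hypothetical, not those in Table 5.

```python
from math import sqrt


def williams_t(r_jk: float, r_jh: float, r_kh: float, n: int) -> tuple[float, int]:
    """Williams' t for H0: rho_jk == rho_jh (two correlations sharing variable j).

    Here j would be age, and k and h the basic and complex ER scores of one
    task. Returns (t, df) with df = n - 3.
    """
    # Determinant of the 3 x 3 correlation matrix of j, k, h
    det_r = 1 - r_jk**2 - r_jh**2 - r_kh**2 + 2 * r_jk * r_jh * r_kh
    r_bar = (r_jk + r_jh) / 2
    denom = 2 * (n - 1) / (n - 3) * det_r + r_bar**2 * (1 - r_kh) ** 3
    t = (r_jk - r_jh) * sqrt((n - 1) * (1 + r_kh) / denom)
    return t, n - 3


t, df = williams_t(r_jk=0.55, r_jh=0.35, r_kh=0.60, n=113)  # hypothetical correlations
print(f"t({df}) = {t:.2f}")
```

A two-sided p-value then follows from the t distribution with n − 3 degrees of freedom, for example via `scipy.stats.t.sf(abs(t), df) * 2`.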

Additional findings

In addition to the effects found in the overall analysis, some interaction effects emerged for specific tasks. They are reported here because they bear on specific hypotheses, even though they did not hold across tasks. A group by complexity interaction was found only for the voice task (F[1,105] = 4.43, p < .05, η² = .04). Pairwise comparisons revealed that participants with ASC (M = .61, s.e. = .02) scored lower than TD participants (M = .71, s.e. = .02) on complex emotions (mean difference = .10, s.e. = .03, p < .001) but not on basic emotions (ASC: M = .68, s.e. = .02; TD: M = .71, s.e. = .02; mean difference = .03, s.e. = .03, n.s.). In addition, a country by complexity interaction was significant only for the body task (F[2,105] = 3.74, p < .05, η² = .07). Pairwise comparisons revealed that in Sweden, body task scores were higher for basic emotions (M = .75, s.e. = .03) than for complex emotions (M = .64, s.e. = .03; mean difference = .11, s.e. = .04, p < .01). No significant difference between basic and complex body task scores was found in Israel (mean difference = .04, s.e. = .03, n.s.) or in Britain (mean difference = .04, s.e. = .04, n.s.).

Discussion

The current study examined whether children with ASC and typically developing children differ in the recognition of basic and complex emotions across three countries: Israel, Britain, and Sweden. Emotion recognition (ER) was tested using dynamic facial expressions, body language, vocal expressions, and integrative scenarios. Children with ASC showed ER deficits in all three modalities and in their integration in context, for both basic and complex emotions. Cross-cultural agreement was found for all major findings, with minor deviations reported below. Our findings highlight the multimodal nature of ER deficits in ASC, which exist for basic and complex emotions and are relatively stable cross-culturally.

As predicted, the ASC group had more difficulty than the TD participants in recognizing emotions from facial expressions, body language, vocal expressions, and integrative scenarios, even after controlling for age and verbal IQ. These significant group differences replicate previous findings of difficulties among individuals with ASC in emotion and mental state recognition from visual, auditory, and contextual stimuli [22, 24, 40, 43, 56, 66, 76–78]. These findings provide further support for ER and mentalizing deficits in children with ASC, which are evident cross-culturally.

As predicted, basic emotions were recognized more easily than complex emotions in all modalities, regardless of diagnosis. However, contrary to our hypothesis, group differences for complex ER were not greater than those for basic ER. Apart from a complexity by group interaction in the voice task, our findings showed that ER deficits in 5- to 9-year-olds with ASC exist over and above complexity level. These findings match some reports of basic ER deficits in high-functioning children with ASC [23, 24, 35, 41–43, 70] and reports of complex ER deficits in this group [3, 40, 82]. The basic ER deficit we found in the ASC group, which was not found in some other studies (e.g., [28, 46]), may reflect the age group tested. Whereas older children with ASC may develop compensatory mechanisms that allow them to recognize basic emotions [25], our examination reveals a comprehensive ER deficit in 5- to 9-year-old children with ASC.

Another finding of developmental importance was the age by complexity interaction. This interaction, found over and above group, reflected moderate correlations between age and complex ER across all modalities, suggesting that 5- to 9-year-olds are still developing the ability to recognize complex emotions, which comprise mental as well as situational aspects. Age correlated more weakly with basic ER than with complex ER in the voice, body, and integrative tasks, but not in the face task, suggesting that basic ER skills have matured for at least some of the children in the tested age group. Interestingly, our findings show that facial ER of basic and complex emotions alike continues to develop in this age group. In view of the centrality of facial expressions in ER, these findings provide an interesting example of the continuing development of facial expertise [9].

It is important to note that all of our visual stimuli were video clips rather than still pictures. It is possible that processing dynamic stimuli, which are more ecological in nature, is more challenging for children with ASC than processing still images. Specific deficits in processing dynamic stimuli [107] may explain why our paradigm, which used only dynamic stimuli in the visual channel, demonstrated ER deficits for basic as well as complex emotions in the visual tasks, but only complex ER deficits in the auditory task.

A striking finding in our study was the lack of major cultural differences. Our hypotheses that complex ER would show greater cultural variability than basic ER, and that TD children would show greater cultural variability than children with ASC, were not supported. These findings contrast with previous arguments for greater cultural diversity in complex ER [85, 86]. It is possible that such cultural differences would be more salient in older age groups, once culture-specific nuances have been more fully acquired.

However, some cultural differences were found on the face and body language tasks. For facial ER, children with ASC in Sweden performed more poorly than TD controls only on complex emotions, not on basic emotions, whereas the British and Israeli ASC groups showed facial ER deficits for both basic and complex emotions. In addition, a significant interaction between country and complexity was found in the body language task: regardless of diagnosis, participants in Sweden had more difficulty recognizing complex than basic emotions from body language, a difference not found in Israel or Britain. It should be noted that the body task stimuli were filmed in Britain, with actors of various ethnicities. Whereas the basic, more automatic and cross-cultural emotions were easily recognized in Sweden, as they were in Britain and Israel, the subtler manifestations of complex emotions in body language may have been more challenging for children in Sweden. An examination of body language emotions performed by Scandinavian (compared with non-Scandinavian) actors should be conducted to support or refute this potential in-group advantage [108].

A few limitations of the study should be noted. The current study examined cultural differences in ER as part of a cross-cultural comparison of individuals with and without ASC. Whereas the sample size is sufficiently large for the main question of the study, larger TD samples within each country may be needed to appropriately examine cultural differences in ER in the general population. The examination of cultural differences in ER (and of ASC–TD differences) across modalities should also be extended to additional, non-Western cultures, such as African or Eastern cultures [90], as these may reveal greater cross-cultural differences than those reported here.

In the current study, we were unable to examine gender differences in ER because of the relatively small number of females in the ASC groups. Gender differences in ER were found among adults with ASC in some studies [35, 40] but not in others [109]. A replication of the current study with larger samples could examine whether such gender differences also exist in children with ASC.

Another limitation lies in some methodological variability across the sites, specifically the administration of assessments at home vs. in the lab. This variability resulted from participants' difficulties attending the lab in Israel and in Britain (due to distance and to parents' needs), so the clinical teams traveled to children's homes. Despite attempts to maintain a standardized testing environment (e.g., a standardized protocol and individual assessment in a quiet room), the different assessment locations may have affected the results of this study. However, since the ASC and TD groups within each country were tested under similar conditions, it is unlikely that the different testing conditions affected the group differences. Nonetheless, we recommend that future studies adopt a more standardized testing setting where possible.

Conclusions

Our findings demonstrate a supra-modal emotion recognition deficit in children with ASC, cross-culturally, for basic and complex emotions alike. Although the ER skills of children with ASC improve with age, as do those of their TD peers, this overall ER deficit persists and calls for interventions [110, 111] that might narrow the developmental ER gap between children with ASC and their TD peers at the earliest possible stage.

Abbreviations

- ASC: Autism spectrum conditions
- ER: Emotion recognition
- TD: Typical development

References

Kuusikko S, Haapsamo H, Jansson-Verkasalo E, Hurtig T, Mattila M-L, Ebeling H, Jussila K, Bölte S, Moilanen I. Emotion recognition in children and adolescents with autism spectrum disorders. J Autism Dev Disord. 2009;39:938–45.

Uljarevic M, Hamilton A. Recognition of emotions in autism: a formal meta-analysis. J Autism Dev Disord. 2013;43:1517–26.

Golan O, Sinai-Gavrilov Y, Baron-Cohen S. The Cambridge mindreading face-voice battery for children (CAM-C): complex emotion recognition in children with and without autism spectrum conditions. Mol Autism. 2015;6:22.

American Psychiatric Association. Diagnostic and statistical manual of mental disorders. 5th ed. VA: American Psychiatric Association; 2013.

Hudepohl MB, Robins DL, King TZ, Henrich CC. The role of emotion perception in adaptive functioning of people with autism spectrum disorders. Autism. 2015;19:107–12.

Foxe JJ, Molholm S. Ten years at the multisensory forum: musings on the evolution of a field. Brain Topogr. 2009;21:149–54.

Haviland JM, Lelwica M. The induced affect response: 10-week-old infants’ responses to three emotion expressions. Dev Psychol. 1987;23:97–104.

Leppänen JM, Moulson MC, Vogel-Farley VK, Nelson CA. An ERP study of emotional face processing in the adult and infant brain. Child Dev. 2007;78:232–45.

Herba C, Phillips M. Annotation: Development of facial expression recognition from childhood to adolescence: behavioural and neurological perspectives. J Child Psychol Psychiatry. 2004;45:1185–98.

Klin A, Jones W, Schultz R, Volkmar F, Cohen D. Visual fixation patterns during viewing of naturalistic social situations as predictors of social competence in individuals with autism. Arch Gen Psychiatry. 2002;59:809–16.

Dawson G, Webb SJ, McPartland J. Understanding the nature of face processing impairment in autism: insights from behavioral and electrophysiological studies. Dev Neuropsychol. 2005;27:403–24.

Papagiannopoulou EA, Chitty KM, Hermens DF, Hickie IB, Lagopoulos J. A systematic review and meta-analysis of eye-tracking studies in children with autism spectrum disorders. Soc Neurosci. 2014;9:610–32.

Guillon Q, Rogé B, Afzali MH, Baduel S, Kruck J, Hadjikhani N. Intact perception but abnormal orientation towards face-like objects in young children with ASD. Sci Rep. 2016;6:22119.

Baron-Cohen S, Wheelwright S, Jolliffe T. Is there a “Language of the Eyes”? Evidence from normal adults, and adults with autism or Asperger syndrome. Vis Cogn. 1997;4:311–31.

Pelphrey KA, Sasson NJ, Reznick JS, Paul G, Goldman BD, Piven J. Visual scanning of faces in autism. J Autism Dev Disord. 2002;32:249–61.

Berggren S, Engström A-C, Bölte S. Facial affect recognition in autism, ADHD and typical development. Cogn Neuropsychiatry. 2016;21:213–27.

Happé F. Autism: cognitive deficit or cognitive style? Trends Cogn Sci. 1999;3:216–22.

Elgar K, Campbell R. Annotation: the cognitive neuroscience of face recognition: implications for developmental disorders. J Child Psychol Psychiatry. 2001;42:705–17.

van der Geest JN, Kemner C, Verbaten MN, van Engeland H. Gaze behavior of children with pervasive developmental disorder toward human faces: a fixation time study. J Child Psychol Psychiatry. 2002;43:669–78.

Tanaka JW, Sung A. The “Eye Avoidance” hypothesis of autism face processing. J Autism Dev Disord. 2016;46:1538–52.

Deruelle C, Rondan C, Gepner B, Tardif C. Spatial frequency and face processing in children with autism and Asperger syndrome. J Autism Dev Disord. 2004;34:199–210.

Diehl JJ, Bennetto L, Watson D, Gunlogson C, McDonough J. Resolving ambiguity: a psycholinguistic approach to understanding prosody processing in high-functioning autism. Brain Lang. 2008;106:144–52.

Grossman RB, Tager-Flusberg H. Reading faces for information about words and emotions in adolescents with autism. Res Autism Spectr Disord. 2008;2:681–95.

Philip RCM, Whalley HC, Stanfield AC, Sprengelmeyer R, Santos IM, Young AW, Atkinson AP, Calder AJ, Johnstone EC, Lawrie SM, Hall J. Deficits in facial, body movement and vocal emotional processing in autism spectrum disorders. Psychol Med. 2010;40:1919–29.

Grossman JB, Klin A, Carter AS, Volkmar FR. Verbal bias in recognition of facial emotions in children with Asperger syndrome. J Child Psychol Psychiatry. 2000;41:369–79.

Castelli F. Understanding emotions from standardized facial expressions in autism and normal development. Autism. 2005;9:428–49.

Rosset DB, Rondan C, Da Fonseca D, Santos A, Assouline B, Deruelle C. Typical emotion processing for cartoon but not for real faces in children with autistic spectrum disorders. J Autism Dev Disord. 2008;38:919–25.

Tracy JL, Robins RW, Schriber RA, Solomon M. Is emotion recognition impaired in individuals with autism spectrum disorders? J Autism Dev Disord. 2011;41:102–9.

Harms MB, Martin A, Wallace GL. Facial emotion recognition in autism spectrum disorders: a review of behavioral and neuroimaging studies. Neuropsychol Rev. 2010;20:290–322.

Wong N, Beidel DC, Sarver DE, Sims V. Facial emotion recognition in children with high functioning autism and children with social phobia. Child Psychiatry Hum Dev. 2012;43:775–94.

Macdonald H, Rutter M, Howlin P, Rios P, Conteur AL, Evered C, Folstein S. Recognition and expression of emotional cues by autistic and normal adults. J Child Psychol Psychiatry. 1989;30:865–77.

Humphreys K, Minshew N, Leonard GL, Behrmann M. A fine-grained analysis of facial expression processing in high-functioning adults with autism. Neuropsychologia. 2007;45:685–95.

Rump KM, Giovannelli JL, Minshew NJ, Strauss MS. The development of emotion recognition in individuals with autism. Child Dev. 2009;80:1434–47.

Law Smith MJ, Montagne B, Perrett DI, Gill M, Gallagher L. Detecting subtle facial emotion recognition deficits in high-functioning autism using dynamic stimuli of varying intensities. Neuropsychologia. 2010;48:2777–81.

Sucksmith E, Allison C, Baron-Cohen S, Chakrabarti B, Hoekstra RA. Empathy and emotion recognition in people with autism, first-degree relatives, and controls. Neuropsychologia. 2013;51:98–105.

McCann J, Peppé S, Gibbon FE, O’Hare A, Rutherford M. Prosody and its relationship to language in school-aged children with high-functioning autism. Int J Lang Commun Disord. 2007;42:682–702.

Paul R, Augustyn A, Klin A, Volkmar FR. Perception and production of prosody by speakers with autism spectrum disorders. J Autism Dev Disord. 2005;35:205–20.

Lindner JL, Rosén LA. Decoding of emotion through facial expression, prosody and verbal content in children and adolescents with Asperger’s syndrome. J Autism Dev Disord. 2006;36:769–77.

McCann J, Peppé S. Prosody in autism spectrum disorders: a critical review. Int J Lang Commun Disord. 2003;38:325–50.

Golan O, Baron-Cohen S, Hill JJ, Rutherford MD. The “Reading the Mind in the Voice” test-revised: a study of complex emotion recognition in adults with and without autism spectrum conditions. J Autism Dev Disord. 2007;37:1096–106.

Hubbard K, Trauner DA. Intonation and emotion in autistic spectrum disorders. J Psycholinguist Res. 2007;36:159–73.

Järvinen-Pasley A, Peppé S, King-Smith G, Heaton P. The relationship between form and function level receptive prosodic abilities in autism. J Autism Dev Disord. 2008;38:1328–40.

Globerson E, Amir N, Kishon-Rabin L, Golan O. Prosody recognition in adults with high-functioning autism spectrum disorders: from psychoacoustics to cognition. Autism Res. 2015;8:153–63.

Chevallier C, Noveck I, Happé F, Wilson D. What’s in a voice? Prosody as a test case for the theory of mind account of autism. Neuropsychologia. 2011;49:507–17.

Grossman RB, Bemis RH, Plesa Skwerer D, Tager-Flusberg H. Lexical and affective prosody in children with high-functioning autism. J Speech Lang Hear Res. 2010;53:778.

Brennand R, Schepman A, Rodway P. Vocal emotion perception in pseudo-sentences by secondary-school children with autism spectrum disorder. Res Autism Spectr Disord. 2011;5:1567–73.

Brooks PJ, Ploog BO. Attention to emotional tone of voice in speech perception in children with autism. Res Autism Spectr Disord. 2013;7:845–57.

Grossman RB, Tager-Flusberg H. “Who said that?” Matching of low- and high-intensity emotional prosody to facial expressions by adolescents with ASD. J Autism Dev Disord. 2012;42:2546–57.

Hobson RP. The autistic child’s appraisal of expressions of emotions. J Child Psychol Psychiatry. 1986;27:321–42.

Rutherford MD, Baron-Cohen S, Wheelwright S. Reading the mind in the voice: a study with normal adults and adults with Asperger syndrome and high functioning autism. J Autism Dev Disord. 2002;32:189–94.

Fox R, McDaniel C. The perception of biological motion by human infants. Science. 1982;218:486–7.

Onishi KH, Baillargeon R. Do 15-month-old infants understand false beliefs? Science. 2005;308:255–8.

Warneken F, Tomasello M. Altruistic helping in human infants and young chimpanzees. Science. 2006;311:1301–3.

Southgate V, Senju A, Csibra G. Action anticipation through attribution of false belief by 2-year-olds. Psychol Sci. 2007;18:587–92.

Pavlova M, Krägeloh-Mann I, Sokolov A, Birbaumer N. Recognition of point-light biological motion displays by young children. Perception. 2001;30:925–33.

Centelles L, Assaiante C, Etchegoyhen K, Bouvard M, Schmitz C. From action to interaction: exploring the contribution of body motion cues to social understanding in typical development and in autism spectrum disorders. J Autism Dev Disord. 2013;43:1140–50.

Blake R, Turner LM, Smoski MJ, Pozdol SL, Stone WL. Visual recognition of biological motion is impaired in children with autism. Psychol Sci. 2003;14:151–7.

Cook J, Saygin AP, Swain R, Blakemore S-J. Reduced sensitivity to minimum-jerk biological motion in autism spectrum conditions. Neuropsychologia. 2009;47:3275–8.

Falck-Ytter T, Rehnberg E, Bölte S. Lack of visual orienting to biological motion and audiovisual synchrony in 3-year-olds with autism. PLoS One. 2013;8:e68816.

Kaiser MD, Delmolino L, Tanaka JW, Shiffrar M. Comparison of visual sensitivity to human and object motion in autism spectrum disorder. Autism Res. 2010;3:191–5.

Annaz D, Campbell R, Coleman M, Milne E, Swettenham J. Young children with autism spectrum disorder do not preferentially attend to biological motion. J Autism Dev Disord. 2012;42:401–8.

Moore DG, Hobson RP, Lee A. Components of person perception: an investigation with autistic, non-autistic retarded and typically developing children and adolescents. Br J Dev Psychol. 1997;15:401–23.

Murphy P, Brady N, Fitzgerald M, Troje NF. No evidence for impaired perception of biological motion in adults with autistic spectrum disorders. Neuropsychologia. 2009;47:3225–35.

Bertone A, Mottron L, Jelenic P, Faubert J. Motion perception in autism: a “complex” issue. J Cogn Neurosci. 2003;15:218–25.

Pellicano E, Gibson L, Maybery M, Durkin K, Badcock DR. Abnormal global processing along the dorsal visual pathway in autism: a possible mechanism for weak visuospatial coherence? Neuropsychologia. 2005;43:1044–53.

Pellicano E, Gibson LY. Investigating the functional integrity of the dorsal visual pathway in autism and dyslexia. Neuropsychologia. 2008;46:2593–6.

Parron C, Da Fonseca D, Santos A, Moore DG, Monfardini E, Deruelle C. Recognition of biological motion in children with autistic spectrum disorders. Autism. 2008;12:261–74.

Atkinson AP. Impaired recognition of emotions from body movements is associated with elevated motion coherence thresholds in autism spectrum disorders. Neuropsychologia. 2009;47:3023–9.

Hubert B, Wicker B, Moore DG, Monfardini E, Duverger H, Fonséca D, Deruelle C. Brief report: Recognition of emotional and non-emotional biological motion in individuals with autistic spectrum disorders. J Autism Dev Disord. 2007;37:1393.

Grèzes J, Wicker B, Berthoz S, de Gelder B. A failure to grasp the affective meaning of actions in autism spectrum disorder subjects. Neuropsychologia. 2009;47:1816–25.

Marco EJ, Hinkley LBN, Hill SS, Nagarajan SS. Sensory processing in autism: a review of neurophysiologic findings. Pediatr Res. 2011;69(5 Part 2):48R–54R.

O’Neill M, Jones RSP. Sensory-perceptual abnormalities in autism: a case for more research? J Autism Dev Disord. 1997;27:283–93.

Brandwein AB, Foxe JJ, Butler JS, Russo NN, Altschuler TS, Gomes H, Molholm S. The development of multisensory integration in high-functioning autism: high-density electrical mapping and psychophysical measures reveal impairments in the processing of audiovisual inputs. Cereb Cortex. 2013;23:1329–41.

Grossman RB, Steinhart E, Mitchell T, McIlvane W. “Look who’s talking!” Gaze patterns for implicit and explicit audio-visual speech synchrony detection in children with high-functioning autism. Autism Res. 2015;8:307–16.

Magnée MJ, de Gelder B, van Engeland H, Kemner C. Audiovisual speech integration in pervasive developmental disorder: evidence from event-related potentials. J Child Psychol Psychiatry. 2008;49:995–1000.

Mongillo EA, Irwin JR, Whalen DH, Klaiman C, Carter AS, Schultz RT. Audiovisual processing in children with and without autism spectrum disorders. J Autism Dev Disord. 2008;38:1349–58.

Taylor N, Isaac C, Milne E. A comparison of the development of audiovisual integration in children with autism spectrum disorders and typically developing children. J Autism Dev Disord. 2010;40:1403–11.

Irwin JR, Tornatore LA, Brancazio L, Whalen DH. Can children with autism spectrum disorders “Hear” a speaking face? Child Dev. 2011;82:1397–403.

Pierce K, Glad KS, Schreibman L. Social perception in children with autism: an attentional deficit? J Autism Dev Disord. 1997;27:265–82.

Hall GBC, Szechtman H, Nahmias C. Enhanced salience and emotion recognition in autism: a PET study. Am J Psychiatry. 2003;160:1439–41.

Golan O, Baron-Cohen S. Systemizing empathy: teaching adults with Asperger syndrome or high-functioning autism to recognize complex emotions using interactive multimedia. Dev Psychopathol. 2006;18:591–617.

Golan O, Baron-Cohen S, Golan Y. The “reading the mind in films” task [child version]: complex emotion and mental state recognition in children with and without autism spectrum conditions. J Autism Dev Disord. 2008;38:1534–41.

Ekman P. Facial expression and emotion. Am Psychol. 1993;48:384–92.

Wilson-Mendenhall CD, Barrett LF, Barsalou LW. Neural evidence that human emotions share core affective properties. Psychol Sci. 2013;24:947–56.

Griffiths PE. What emotions really are: the problem of psychological categories. Chicago: University of Chicago Press; 1997.

Izard C. Basic emotions, natural kinds, emotion schemas, and a new paradigm. Perspect Psychol Sci. 2007;2:260–80.

Harris PL. Children and emotion: the development of psychological understanding. Basil Blackwell; 1989.

Celani G, Battacchi MW, Arcidiacono L. The understanding of the emotional meaning of facial expressions in people with autism. J Autism Dev Disord. 1999;29:57–66.

Capps L, Yirmiya N, Sigman M. Understanding of simple and complex emotions in non-retarded children with autism. J Child Psychol Psychiatry. 1992;33:1169–82.

Elfenbein HA, Ambady N. On the universality and cultural specificity of emotion recognition: a meta-analysis. Psychol Bull. 2002;128:203–35.

Juslin PN, Laukka P. Communication of emotions in vocal expression and music performance: different channels, same code? Psychol Bull. 2003;129:770–814.

Yafai A-F, Verrier D, Reidy L. Social conformity and autism spectrum disorder: a child-friendly take on a classic study. Autism. 2014;18:1007–13.

Wechsler D. Wechsler Abbreviated Scale of Intelligence. 2nd ed. San Antonio: Pearson; 2011.

Wechsler D. Wechsler Intelligence Scale for Children-Fourth Edition. San Antonio: Psychological Corporation; 2003.

Wechsler D. Wechsler primary and preschool scale of intelligence—third edition. San Antonio: Harcourt Assessment; 2002.

Constantino JN, Gruber C. The Social Responsiveness Scale—second edition (SRS-2). Torrance: Western Psychological Services; 2012.

Bölte S, Poustka F, Constantino JN. Assessing autistic traits: cross-cultural validation of the social responsiveness scale (SRS). Autism Res. 2008;1:354–63.

Bölte S, Hubl D, Feineis-Matthews S, Prvulovic D, Dierks T, Poustka F. Facial affect recognition training in autism: can we animate the fusiform gyrus? Behav Neurosci. 2006;120:211–6.

Bölte S, Ciaramidaro A, Schlitt S, Hainz D, Kliemann D, Beyer A, Poustka F, Freitag C, Walter H. Training-induced plasticity of the social brain in autism spectrum disorder. Br J Psychiatry. 2015;207:149–57.

Baron-Cohen S, Golan O, Wheelwright S, Hill JJ. Mind reading: the interactive guide to emotions. London: Jessica Kingsley Limited; 2004.

O’Reilly H, Pigat D, Fridenson S, Berggren S, Tal S, Golan O, Bölte S, Baron-Cohen S, Lundqvist D. The EU-emotion stimulus set: a validation study. Behav Res Methods. 2016;48:567–76.

Lassalle A, Pigat D, O’Reilly H, Berggren S, Fridenson-Hayo S, Tal S, Elfström S, Råde A, Golan O, Bölte S, Baron-Cohen S, Lundqvist D. The EU-Emotion Voice Database. 2016.

World Health Organization. ICD-10—International Classification of Diseases (10th ed.). Geneva: World Health Organization; 1994.

American Psychiatric Association. Diagnostic and statistical manual of mental disorders. 4th ed. American Psychiatric Association; 2000.

Lord C, Rutter M, DiLavore P, Risi S, Gotham K, Bishop SL. Autism diagnostic observation schedule. 2nd ed. Los Angeles, CA: Western Psychological Services; 2012.

Scott FJ, Baron-Cohen S, Bolton P, Brayne C. The CAST (Childhood Asperger Syndrome Test): preliminary development of a UK screen for mainstream primary-school-age children. Autism. 2002;6:9–31.

Gepner B, Féron F. Autism: a world changing too fast for a mis-wired brain? Neurosci Biobehav Rev. 2009;33:1227–42.

Elfenbein HA, Beaupré M, Lévesque M, Hess U. Toward a dialect theory: cultural differences in the expression and recognition of posed facial expressions. Emotion. 2007;7:131–46.

Baron-Cohen S, Bowen DC, Holt RJ, Allison C, Auyeung B, Lombardo MV, Smith P, Lai M-C. The “reading the mind in the eyes” test: complete absence of typical sex difference in ~400 men and women with autism. PLoS One. 2015;10(8):e0136521.

Golan O, Ashwin E, Granader Y, McClintock S, Day K, Leggett V, Baron-Cohen S. Enhancing emotion recognition in children with autism spectrum conditions: an intervention using animated vehicles with real emotional faces. J Autism Dev Disord. 2010;40:269–79.

Kouo JL, Egel AL. The effectiveness of interventions in teaching emotion recognition to children with autism spectrum disorder. Rev J Autism Dev Disord. 2016;3:254–65.

Acknowledgements

We would like to thank the research participants and their families, as well as our dedicated research teams: in Britain: Kirsty Macmillan, Nisha Hickin, and Mathilde Matthews; in Sweden: Anna Råde and Nina Milenkovic; and in Israel: Tal Alfi, Hila Hakim, and Na’ama Amir.

Funding

The research leading to this work has received funding from the European Community’s Seventh Framework Programme (FP7) under grant agreement no. [289021]. SvB was supported by the Swedish Research Council (Grant No. 523-2009-7054), and SBC was supported by the Autism Research Trust, the MRC, the Wellcome Trust, and the NIHR CLAHRC EoE during the period of this work.

Availability of data and materials

For access to our database, please contact Shimrit Fridenson-Hayo at shimfri@gmail.com.

Authors’ contributions

SFH and ST collected the data in Israel. StB collected the data in Sweden. AL and DP collected the data in Britain. OG, SvB, and SBC obtained the funding and supervised the study in Israel, Sweden, and Britain, respectively. SFH and OG analyzed the data and wrote the manuscript. All authors read, commented, and approved the manuscript.

Competing interests

The authors declare that they have no competing interests.

Consent for publication

Not applicable.

Ethics approval and consent to participate

The study was approved by the Psychology Research Ethics Committee at Cambridge University, by the Institutional Review Board at Bar-Ilan University, and by the Regional Board of Ethical Vetting Stockholm. Participants’ assent and parents’ informed consent were received before inclusion in the study.


Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

Fridenson-Hayo, S., Berggren, S., Lassalle, A. et al. Basic and complex emotion recognition in children with autism: cross-cultural findings. Molecular Autism 7, 52 (2016). https://doi.org/10.1186/s13229-016-0113-9

DOI: https://doi.org/10.1186/s13229-016-0113-9