Abstract

Objectives

Clinical Practice Guidelines (CPGs) are designed to guide treatment decisions, yet adherence rates vary widely. To characterise perceived barriers and facilitators to cancer treatment CPG adherence in Australia, and estimate the frequency of previous qualitative research findings, a survey was distributed to Australian oncologists.

Results

The sample is described and validated guideline attitude scores reported for different groups. Differences in mean CPG attitude scores across clinician subgroups and associations between frequency of CPG use and clinician characteristics were calculated; with 48 respondents there was limited statistical power to find differences. Younger oncologists (< 50 years) and clinicians participating in three or more Multidisciplinary Team Meetings were more likely to routinely or occasionally use CPGs. Perceived barriers and facilitators were identified. Thematic analysis was conducted on open-text responses. Results were integrated with previous interview findings and presented in a thematic, conceptual matrix. Most barriers and facilitators identified earlier were corroborated by survey results, with minor discordance. Identified barriers and facilitators require further exploration within a larger sample to assess their perceived impact on cancer treatment CPG adherence in Australia, as well as to inform future CPG implementation strategies. This research was Human Research Ethics Committee approved (2019/ETH11722 and 52019568810127, ID:5688).

Introduction

Clinical Practice Guidelines (CPGs) are designed both to guide treatment decision making through synthesis of the best available evidence, and to reduce unwarranted clinical variation [1]. Cancer treatment CPG-adherent care is associated with improved patient outcomes [2, 3]; however, non-adherence persists [4,5,6,7,8,9,10,11,12,13,14,15]. In order to explore perceived barriers and facilitators to cancer treatment CPG adherence in Australia, a mixed methods study [16] was conducted. Previously published results include: a review characterising perceived determinants of cancer treatment CPG adherence [14]; a review presenting cancer treatment CPG-adherence rates and associated factors in Australia [15]; and a qualitative study identifying perceived barriers and facilitators to cancer CPG adherence in New South Wales (NSW), Australia, mapped across five themes [17]. This manuscript represents the second empirical phase of this sequential study [16] that aimed to quantify and generalise previously identified qualitative findings [17] in a broader sample of oncologists across Australia. Survey results are presented and integrated with previous qualitative findings.

Main text

Survey methods

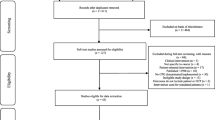

A purpose-designed survey was developed, informed by interview findings from a previous study [17] and the literature [18,19,20,21]. The 13-question, self-administered survey assessed clinician attitudes and demographic details. One question comprised the previously validated tool ‘Attitudes towards clinical practice guidelines’, which requires a 6-point Likert scale response (strongly agree to strongly disagree) to 18 sub-questions [22, 23]. Approximately 200 completed surveys were anticipated [16].

Three approaches to recruitment were used:

1) In February 2020, senior oncologists from seven hospitals (across four geographical catchments, representing half of the population of NSW, Australia [24]) invited, via email, an unspecified number of hospital-based oncologists to complete the survey, sending a reminder in March 2020. The invitation included a survey pack (an online survey link, a participant information and consent form, and a gift-card draw entry-form). Purposive sampling by the senior oncologists ensured clinicians from a range of seniority and disciplines were invited [25]. Recruitment was paused because of concerns regarding coronavirus disease (COVID-19) pandemic-related clinician burnout [26] and recommenced in March 2021.

2) In May 2021, the Clinical Oncology Society of Australia (COSA) emailed invitations to participate, with a survey pack, to 257 oncology specialist Society members; reminders were sent in June 2021.

3) In June 2022, 290 hard copy invitations and surveys (along with a survey pack and reply-paid envelope) were posted to clinicians across the seven hospitals who were listed on a NSW Government website of oncology Multidisciplinary Team (MDT) members (“CANREFER” [27]). This excluded clinicians who had previously completed the survey. All participants were encouraged to forward the survey link to colleagues.

Analysis

Descriptive statistics of the characteristics of the clinician sample were calculated using the Statistical Package for the Social Sciences, version 21 (SPSS, Chicago, USA), and presented as counts and proportions. An attitude score was calculated using the Attitudes Regarding Practice Guidelines tool [22, 23]. An analysis of variance was conducted to assess the statistical significance of differences in mean scores across clinician subgroups (Supplementary File 1). The associations between frequency of referring to CPGs, clinician demographics and practice patterns were explored, with statistical significance assessed by Fisher’s exact test [28]. Thematic analysis was conducted to examine open-text responses [29].
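As an illustration of the association testing described above, the following is a minimal sketch of a two-sided Fisher’s exact test for a 2×2 table, written with the Python standard library only. It is not the SPSS procedure used in the study, the function name `fisher_exact_2x2` is hypothetical, and the counts are invented for demonstration rather than taken from the survey data.

```python
from math import comb


def fisher_exact_2x2(a, b, c, d):
    """Two-sided Fisher's exact p-value for the 2x2 table [[a, b], [c, d]]."""
    row1, row2, col1 = a + b, c + d, a + c
    denom = comb(row1 + row2, col1)

    def prob(k):
        # Hypergeometric probability of k in the top-left cell, margins fixed.
        return comb(row1, k) * comb(row2, col1 - k) / denom

    lowest = max(0, col1 - row2)   # smallest feasible top-left count
    highest = min(row1, col1)      # largest feasible top-left count
    p_observed = prob(a)
    # Sum the probabilities of every table no more likely than the observed one.
    # The small tolerance guards against floating-point ties.
    return sum(
        p for p in (prob(k) for k in range(lowest, highest + 1))
        if p <= p_observed * (1 + 1e-9)
    )


# Hypothetical counts (NOT study data): rows = age group, columns = CPG use.
p_value = fisher_exact_2x2(12, 3, 5, 10)
print(f"p = {p_value:.4f}")
```

The exact test is a natural choice for a sample of this size because it does not rely on the large-sample approximation behind the chi-squared test; tables larger than 2×2 would need a generalised version of this calculation.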

Mixed method data integration of survey findings with previously reported interview findings was conducted at the methods level, through building, where interview findings informed the survey development [30]. The triangulation of the two data sets and data collection methods aimed to enhance trustworthiness [31, 32] and corroborate the interview findings through a larger sample [30]. The thematically coded interview data was previously quantitised [33] and integrated with the survey data at the data interpretation and presentation level [30] through a visual display of a thematic conceptual matrix [30, 34,35,36]. Each comparable finding from the interview and survey studies was identified and assigned an alphanumeric code, using the Methods for Aggregating The Reporting of Interventions in Complex Studies (MATRICS) method [35] (interview findings represented by “A”, survey findings by “B”, and each interview subtheme by a number). Survey findings were assessed as: (1) convergent with interview findings (in agreement); (2) complementary, offering additional information on the same issue; or (3) contradictory (discordant) [35, 37]. Interview subthemes that were not assessed in the survey were labelled silent (expected) [37]. Findings were labelled discordant, neutral [38], or in agreement if less than, exactly, or more than 50% of survey respondents reported the finding, respectively. Findings were merged into summary statements [35] and presented within the qualitative thematic framework [17].
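The 50% labelling rule and the alphanumeric coding described above can be sketched as follows. This is an illustrative reading of the rule rather than the authors’ actual procedure; the function names `label_finding` and `matrics_code` are hypothetical, and the counts in the usage lines are invented.

```python
def label_finding(n_endorsing, n_respondents):
    """Label a survey finding by the share of respondents who reported it."""
    if n_respondents <= 0:
        raise ValueError("n_respondents must be positive")
    # Compare doubled counts so that exactly 50% is detected without
    # relying on floating-point division.
    doubled = 2 * n_endorsing
    if doubled < n_respondents:
        return "discordant"
    if doubled == n_respondents:
        return "neutral"
    return "agreement"


def matrics_code(subtheme, in_interview=True, in_survey=True):
    """Build a code such as '1AB': subtheme number, then A (interview), B (survey)."""
    return f"{subtheme}{'A' if in_interview else ''}{'B' if in_survey else ''}"


# Hypothetical counts, for illustration only.
print(matrics_code(1), label_finding(33, 46))
print(matrics_code(6), label_finding(23, 46))
```

A subtheme that was raised in interviews but not assessed in the survey would carry only the “A” suffix under this sketch, corresponding to the silent (expected) label.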

Survey Results

In total, 48 surveys were completed (19, 15 and 14 surveys returned in the first, second and third waves, respectively), yielding estimated response rates of 5.8% and 4.8% in the second and third waves (with six postal surveys returned to the sender). An overall response rate was not calculated owing to snowball sampling and the unknown number of clinicians approached in wave one.
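The reported response rates can be reproduced with simple arithmetic. The sketch below assumes that the denominators are the numbers of invitations sent in waves two and three (257 and 290), without adjusting for the six postal surveys returned to the sender; the variable and function names are illustrative.

```python
def response_rate(returned, invited):
    """Response rate as a percentage of invitations sent."""
    return 100 * returned / invited


completed = {"wave 1": 19, "wave 2": 15, "wave 3": 14}
# The number invited in wave 1 is unknown, so no rate is computed for it.
invited = {"wave 2": 257, "wave 3": 290}

total = sum(completed.values())
rates = {wave: round(response_rate(completed[wave], n), 1)
         for wave, n in invited.items()}

print(total)   # 48
print(rates)   # {'wave 2': 5.8, 'wave 3': 4.8}
```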

Most clinicians were aged 40–69 years and practising in NSW. Most were medical oncologists (MOs; n = 15), radiation oncologists (ROs; n = 10) or surgeons (n = 15), practising as staff specialists or Visiting Medical Officers, who graduated from medicine between 1980 and 2009 and completed their medical and oncology training in Australia. Clinicians worked in a mix of public and private practice and typically practised only in metropolitan hospitals. Most clinicians were members of an MDT, attending 1–4 MDTs more than once per week. The most common cancers treated were breast, colorectal, upper gastrointestinal, lung, skin and haematological cancers. Just under half of the clinicians treated one cancer stream, while almost a third treated four or more cancer streams (Table 1). Most surgeons (11/15) and haematologists (3/3) reported treating one cancer stream, while most MOs (12/15) and ROs (8/10) reported treating multiple cancer streams.

Clinicians reported staying up to date by attending conferences (n = 39), reading journals (n = 36), attending MDTs (n = 23), discussing cases with colleagues (n = 15) and attending journal clubs or educational meetings (n = 12). Many clinicians routinely or occasionally referred to CPGs when making treatment decisions (33/46) and estimated that their practice was routinely adherent with CPG recommendations (32/46). Of clinicians who routinely referred to CPGs, most (13/17) treated two or more cancers, while those who referred to CPGs less frequently typically treated only one cancer stream (17/29). Clinicians mainly reported using CPGs developed by eviQ (n = 22), the National Comprehensive Cancer Network (n = 22), the American Society of Clinical Oncology (n = 15), Cancer Council Australia/National Health and Medical Research Council (n = 13) and the European Society of Medical Oncology (n = 12). Clinicians referred to CPGs to ensure their practice was current and evidence based (n = 23), to support treatment plans for complex/rare/unfamiliar cases (n = 12), and/or to seek consensus opinion when evidence was lacking (n = 2). Clinicians reported not referring to CPGs when CPGs were out of date (n = 6), when clinicians felt they were well informed through MDTs and journal club (n = 5), and when CPGs were not locally relevant (n = 2) or too generic (n = 2).

The mean CPG attitude score was 42.6 (95% CI 40.4–44.8), ranging from 23 to 59, with 60 being the most positive score possible. No significant differences in mean scores were found across clinician subgroups (Supplementary File 1); average scores indicated a tendency for positive attitudes towards cancer CPGs. The only clinician characteristics that were significantly associated with frequency of referring to CPGs were the age of clinicians (p = 0.007) and the number of MDTs clinicians attended (p = 0.03), with younger clinicians and those attending more MDTs referring to CPGs more frequently (Table 2). This may indicate that clinicians attending more MDTs (who treat more cancer sites) utilise CPGs to remain current with the evidence base across multiple cancer sites. Similarly, younger clinicians may engage with CPGs more frequently to support their professional development. However, neither higher attitude scores nor referring to CPGs necessarily results in CPG adherence, as demonstrated by the wide variation in adherence rates across Australia and different cancer streams detected previously [15].

Integration with previously published interview findings

Comparable findings from this survey and the previous interview study [17] were integrated and are presented below [35] (Table 3). Results that were discordant or complementary are labelled.

[Findings 1AB, 2AB, 3AB, 4AB, 5AB]

Clinicians considered CPGs to be helpful, educational tools that are reassuring frameworks for supporting treatment decisions. They were perceived to reduce clinical variation and improve patient care, while simultaneously being unable to cater for patient complexities. Facilitators included regular CPG updates, and inclusion of a summary of evidence that justifies a recommendation and highlights the level of underpinning evidence. Barriers included a lack of agreement with the CPG interpretation of evidence (discordant) and CPGs being difficult to navigate or too rigid (complementary).

[Findings 6AB, 7AB, 8AB, 9AB]

Patient preference was a barrier to CPG adherence and potential litigation a facilitator. Younger clinicians and those who attended more MDTs referred to CPGs more often. Several barriers were discordant across the studies: other clinicians’ hubris, equipoise and disciplinary preferences; concern about the side effects of CPG-recommended treatment; access challenges for rural patients; and concerns that publishing CPGs increased liability.

[Findings 10AB]

Easy access to CPGs was a facilitator, while other clinicians’ limited awareness of CPGs was perceived as a barrier; however, almost all surveyed clinicians reported being familiar with, and having access to, CPGs.

[Findings 11AB, 12AB]

Peer expectations to adhere to CPGs, multidisciplinary engagement and peer review of treatment decisions were facilitators, while lack of clinician time was a barrier. Surveyed clinicians were divided over whether limited access to CPG-recommended drugs was a barrier, and whether there was enough support and resources to implement CPGs (complementary).

[Findings 14AB, 15AB, 16AB]

Clinical audits, CPGs being based on unbiased synthesis of evidence or expert opinion and being developed by trusted organisations were facilitators. Adapting or tailoring international CPGs to meet local Australian needs and developing living CPGs, managed by a centralised national group, were proposed improvements.

The majority of these findings are in agreement across the two studies, and many have been previously recognised as barriers and facilitators to cancer CPG adherence in the literature [14]. The discordant findings, however, warrant further exploration. Further research is needed to understand treatment access barriers for patients living rurally (who are less likely to receive CPG-adherent care than those in metropolitan areas [39, 40]) and the impact on adherence [41]. Similarly, limited access to international CPG-recommended drugs that lack Pharmaceutical Benefits Scheme (PBS) subsidisation, and its impact on adherence, warrants investigation: universal PBS insurance ensures affordable cancer care by subsidising approved drugs [42]. Further understanding of the impact of organisational support on CPG adherence in Australia is also needed, given organisational support is an established determinant of CPG adherence [21, 43, 44].

Limitations

The purpose of this study was to assess the frequency of previously published qualitative findings by surveying a broader population of oncology specialists [45]. The sample was dominated by clinicians in NSW, limiting the generalisability of findings. Results from this study should be interpreted with caution, as associations may have been over- or under-estimated [46], the full survey was not validated, and participant self-selection [47] may have influenced findings. Response rates were lower than anticipated, despite using recommended strategies (postal surveys, incentives, follow-up) to increase participation [48], potentially reflecting COVID-19-related clinician busyness or burnout [26]. The extended recruitment period may also have influenced findings, although no data discrepancies were noted across the recruitment waves. The small sample size limited the study’s power to statistically compare characteristics and CPG attitude scores across clinician subgroups. Low response rates are common in clinician surveys [48,49,50], reducing the power to meaningfully compare use of CPGs across respondent subgroups. The comparison of means (e.g., mean CPG attitude scores) generally requires substantially smaller sample sizes than the comparison of categorical outcomes; validated scales should therefore be incorporated into surveys, wherever possible, to assess differences between subgroups. In eTable 1 (Supplementary file) we provide means and standard deviations of CPG attitude scores to enable estimation of the sample sizes required to detect differences in attitude scores between subgroups.
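To indicate how reported means and standard deviations could be used to plan a larger study, the sketch below applies the standard normal-approximation formula for comparing two independent means, n per group = 2 × ((z for 1 − α/2 + z for power) × SD / difference)². The standard deviation and target difference in the usage line are hypothetical placeholders, not values from eTable 1, and the function name `n_per_group` is illustrative.

```python
from math import ceil
from statistics import NormalDist


def n_per_group(sd, difference, alpha=0.05, power=0.80):
    """Approximate sample size per group to detect a difference in two means.

    Assumes equal group sizes, a common standard deviation and a two-sided
    test, using the normal approximation (slightly optimistic for small n).
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    return ceil(2 * ((z_alpha + z_beta) * sd / difference) ** 2)


# Hypothetical inputs: SD of 7.5 points, target difference of 5 points.
print(n_per_group(sd=7.5, difference=5))   # 36 per group
```

Because the required sample size scales with the square of SD/difference, halving the difference to be detected roughly quadruples the number of respondents needed per subgroup.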

The qualitative data that was quantitised [33] in the interview study [17] was not validated by a second reviewer [33], limiting its reliability and comparability, and potentially weakening the integration of the data sets [51]. Given the discordant findings identified, a follow-on confirmatory study with a larger sample size and more nuanced questions is needed to establish a clearer and more in-depth understanding of the determinants of cancer treatment CPG adherence in Australia.

This study has characterised key determinants of cancer treatment CPG adherence in Australia. These findings are intended to inform the development of CPG implementation recommendations and strategies to mitigate barriers and utilise facilitators of adherence.

Data Availability

The datasets generated and/or analysed during the current study are not publicly available due to ethics approval data confidentiality requirements, but are available (with restrictions) from the corresponding author on reasonable request and with permission of the South-Western Sydney Local Health District HREC and Macquarie University HREC.

Abbreviations

- COSA: Clinical Oncology Society of Australia
- COVID-19: Coronavirus disease
- CPG: Clinical Practice Guideline
- HREC: Human Research Ethics Committee
- MDT: Multidisciplinary Team
- MO: Medical Oncologist
- NSW: New South Wales
- PBS: Pharmaceutical Benefits Scheme
- RO: Radiation Oncologist

References

Harrison MB, Légaré F, Graham ID, Fervers B. Adapting clinical practice guidelines to local context and assessing barriers to their use. Can Med Assoc J. 2010;182(2):E78–E84. https://doi.org/10.1503/cmaj.081232.

Adelson P, Fusco K, Karapetis C, Wattchow D, Joshi R, Price T, et al. Use of guideline-recommended adjuvant therapies and survival outcomes for people with colorectal cancer at tertiary referral hospitals in South Australia. J Eval Clin Pract. 2018;24(1):135–44. https://doi.org/10.1111/jep.12757.

Hanna T, Shafiq J, Delaney G, Vinod S, Thompson S, Barton M. The population benefit of evidence-based radiotherapy: 5-year local control and overall survival benefits. Radiotherapy Oncol. 2018;126(2):191–7. https://doi.org/10.1016/j.radonc.2017.11.004.

Ng W, Jacob S, Delaney G, Do V, Barton M. Estimation of an optimal chemotherapy utilisation rate for upper gastrointestinal cancers: setting an evidence-based benchmark for the best-quality cancer care. Gastroenterol Res Pract. 2015. https://doi.org/10.1155/2015/753480.

Chagpar R, Xing Y, Chiang Y-J, Feig BW, Chang GJ, You YN, et al. Adherence to stage-specific treatment guidelines for patients with colon cancer. J Clin Oncol. 2012;30(9):972. https://doi.org/10.1200/JCO.2011.39.6937.

Eldin NS, Yasui Y, Scarfe A, Winget M. Adherence to treatment guidelines in stage II/III rectal cancer in Alberta, Canada. Clin Oncol. 2012;24(1):e9–e17. https://doi.org/10.1016/j.clon.2011.07.005.

Gagliardi G, Pucciarelli S, Asteria C, Infantino A, Romano G, Cola B, et al. A nationwide audit of the use of radiotherapy for rectal cancer in Italy. Tech Coloproctol. 2010;14(3):229–35. https://doi.org/10.1007/s10151-010-0597-9.

Dronkers EA, Mes SW, Wieringa MH, van der Schroeff MP, de Jong RJB. Noncompliance to guidelines in head and neck cancer treatment; associated factors for both patient and physician. BMC Cancer. 2015;15(1):1–10. https://doi.org/10.1186/s12885-015-1523-3.

Fong A, Ng W, Barton MB, Delaney GP. Estimation of an evidence-based benchmark for the optimal endocrine therapy utilization rate in breast cancer. The Breast. 2010;19(5):345–9. https://doi.org/10.1016/j.breast.2010.02.006.

Fong A, Shafiq J, Saunders C, Thompson A, Tyldesley S, Olivotto IA, et al. A comparison of systemic breast cancer therapy utilization in Canada (British Columbia), Scotland (Dundee), and Australia (Western Australia) with models of “optimal” therapy. The Breast. 2012;21(4):562–9. https://doi.org/10.1016/j.breast.2012.01.006.

Fong A, Shafiq J, Saunders C, Thompson AM, Tyldesley S, Olivotto IA, et al. A comparison of surgical and radiotherapy breast cancer therapy utilization in Canada (British Columbia), Scotland (Dundee), and Australia (Western Australia) with models of “optimal” therapy. The Breast. 2012;21(4):570–7. https://doi.org/10.1016/j.breast.2012.02.014.

Delaney G, Jacob S, Featherstone C, Barton M. The role of radiotherapy in cancer treatment: estimating optimal utilization from a review of evidence-based clinical guidelines. Cancer: Interdisciplinary International Journal of the American Cancer Society. 2005;104(6):1129–37. https://doi.org/10.1002/cncr.21324.

Kang Y-J, O’Connell DL, Tan J, Lew J-B, Demers A, Lotocki R, et al. Optimal uptake rates for initial treatments for cervical cancer in concordance with guidelines in Australia and Canada: results from two large cancer facilities. Cancer Epidemiol. 2015;39(4):600–11. https://doi.org/10.1016/j.canep.2015.04.009.

Bierbaum M, Rapport F, Arnolda G, Nic Giolla Easpaig B, Lamprell K, Hutchinson K, et al. Clinicians’ attitudes and perceived barriers and facilitators to cancer treatment clinical practice guideline adherence: a systematic review of qualitative and quantitative literature. Implement Sci. 2020;15:1–24. https://doi.org/10.1186/s13012-020-00991-3.

Bierbaum M, Rapport F, Arnolda G, Tran Y, Nic Giolla Easpaig B, Ludlow K, et al. Rates of adherence to cancer treatment guidelines in Australia and the factors associated with adherence: a systematic review. Asia-Pacific Journal of Clinical Oncology. 2023;Mar 7 (in press). https://doi.org/10.1111/ajco.13948.

Bierbaum M, Braithwaite J, Arnolda G, Delaney GP, Liauw W, Kefford R, et al. Clinicians’ attitudes to oncology clinical practice guidelines and the barriers and facilitators to adherence: a mixed methods study protocol. BMJ Open. 2020;10(3):e035448. https://doi.org/10.1136/bmjopen-2019-035448.

Bierbaum M, Rapport F, Arnolda G, Delaney GP, Liauw W, Olver I, et al. Clinical practice Guideline adherence in oncology: a qualitative study of insights from clinicians in Australia. PLoS ONE. 2022;17(12):e0279116. https://doi.org/10.1371/journal.pone.0279116.

Graham ID, Brouwers M, Davies C, Tetroe J. Ontario doctors’ attitudes toward and use of clinical practice guidelines in oncology. J Eval Clin Pract. 2007;13(4):607–15. https://doi.org/10.1111/j.1365-2753.2006.00670.x.

Brown B, Young J, Kneebone A, Brooks A, Dominello A, Haines M. Knowledge, attitudes and beliefs towards management of men with locally advanced prostate cancer following radical prostatectomy: an australian survey of urologists. BJU Int. 2016;117:35–44. https://doi.org/10.1111/bju.13037.

Shea AM, DePuy V, Allen JM, Weinfurt KP. Use and perceptions of clinical practice guidelines by internal medicine physicians. Am J Med Qual. 2007;22(3):170–6. https://doi.org/10.1177/1062860607300291.

Brouwers MC, Makarski J, Garcia K, Akram S, Darling GE, Ellis PM, et al. A mixed methods approach to understand variation in lung cancer practice and the role of guidelines. Implement Sci. 2014;9(1):36. https://doi.org/10.1186/1748-5908-9-36.

Quiros D, Lin S, Larson EL. Attitudes toward practice guidelines among intensive care unit personnel: a cross-sectional anonymous survey. Heart & Lung. 2007;36(4):287–97. https://doi.org/10.1016/j.hrtlng.2006.08.005.

Larson E. A tool to assess barriers to adherence to hand hygiene guideline. Am J Infect Control. 2004;32(1):48–51. https://doi.org/10.1016/j.ajic.2003.05.005.

NSW Department of Planning Industry and Environment. 2016 New South Wales State and Local Government Area Population Projections. Sydney, NSW: 2016.

Etikan I, Musa SA, Alkassim RS. Comparison of convenience sampling and purposive sampling. Am J Theoretical Appl Stat. 2016;5(1):1–4. https://doi.org/10.11648/j.ajtas.20160501.11.

Dobson H, Malpas CB, Burrell AJ, Gurvich C, Chen L, Kulkarni J, et al. Burnout and psychological distress amongst australian healthcare workers during the COVID-19 pandemic. Australasian Psychiatry. 2021;29(1):26–30. https://doi.org/10.1177/1039856220965045.

Cancer Institute NSW. Canrefer. Sydney, NSW; 2022 [Nov 2022]. Available from: https://www.canrefer.org.au/.

Warner P. Testing association with Fisher’s exact test. J Family Plann Reproductive Health Care. 2013;39(4):281–4. https://doi.org/10.1136/jfprhc-2013-100747.

Braun V, Clarke V. Using thematic analysis in psychology. Qualitative Res Psychol. 2006;3(2):77–101. https://doi.org/10.1191/1478088706qp063oa.

Fetters MD, Curry LA, Creswell JW. Achieving integration in mixed methods designs—principles and practices. Health Serv Res. 2013;48(6pt2):2134–56. https://doi.org/10.1111/1475-6773.12117.

Tashakkori A, Teddlie C, Johnson B. Mixed methods. In: International Encyclopedia of the Social & Behavioral Sciences. 2nd ed. Vol 15. Elsevier Ltd; 2015. p. 618–23.

O’Cathain A, Murphy E, Nicholl J. Three techniques for integrating data in mixed methods studies. BMJ. 2010;341. https://doi.org/10.1136/bmj.c4587.

Sandelowski M, Voils CI, Knafl G. On quantitizing. J Mixed Methods Res. 2009;3(3):208–22. https://doi.org/10.1177/1558689809334210.

Wendler MC. Triangulation using a meta-matrix. J Adv Nurs. 2001;35(4):521–5. https://doi.org/10.1046/j.1365-2648.2001.01869.x.

Hutchings HA, Thorne K, Jerzembek GS, Cheung W-Y, Cohen D, Durai D, et al. Successful development and testing of a method for aggregating the reporting of interventions in complex studies (MATRICS). J Clin Epidemiol. 2016;69:193–8. https://doi.org/10.1016/j.jclinepi.2015.08.006.

Onwuegbuzie AJ, Dickinson WB. Mixed methods analysis and information visualization: graphical display for effective communication of research results. Qualitative Rep. 2008;13(2):204–25.

Farmer T, Robinson K, Elliott SJ, Eyles J. Developing and implementing a triangulation protocol for qualitative health research. Qual Health Res. 2006;16(3):377–94. https://doi.org/10.1177/1049732305285708.

Gray JH, Densten IL. Integrating quantitative and qualitative analysis using latent and manifest variables. Qual Quant. 1998;32(4):419–31. https://doi.org/10.1023/A:1004357719066.

Craft PS, Buckingham JM, Dahlstrom JE, Beckmann KR, Zhang Y, Stuart-Harris R, et al. Variation in the management of early breast cancer in rural and metropolitan centres: implications for the organisation of rural cancer services. The Breast. 2010;19(5):396–401. https://doi.org/10.1016/j.breast.2010.03.032.

Varey AH, Madronio CM, Cust AE, Goumas C, Mann GJ, Armstrong BK, et al. Poor adherence to national clinical management guidelines: a population-based, cross-sectional study of the surgical management of melanoma in New South Wales, Australia. Ann Surg Oncol. 2017;24(8):2080–8. https://doi.org/10.1245/s10434-017-5890-7.

Gabriel G, Barton M, Delaney GP. The effect of travel distance on radiotherapy utilization in NSW and ACT. Radiotherapy Oncol. 2015;117(2):386–9. https://doi.org/10.1016/j.radonc.2015.07.031.

Wilson A, Cohen J. Patient access to new cancer drugs in the United States and Australia. Value in Health. 2011;14(6):944–52. https://doi.org/10.1016/j.jval.2011.05.004.

Gurses AP, Marsteller JA, Ozok AA, Xiao Y, Owens S, Pronovost PJ. Using an interdisciplinary approach to identify factors that affect clinicians’ compliance with evidence-based guidelines. Crit Care Med. 2010;38:S282–S91. https://doi.org/10.1097/CCM.0b013e3181e69e02.

Baatiema L, Otim ME, Mnatzaganian G, de-Graft Aikins A, Coombes J, Somerset S. Health professionals’ views on the barriers and enablers to evidence-based practice for acute stroke care: a systematic review. Implement Sci. 2017;12(1):1–15. https://doi.org/10.1186/s13012-017-0599-3.

O’Cathain A, Murphy E, Nicholl J. Why, and how, mixed methods research is undertaken in health services research in England: a mixed methods study. BMC Health Serv Res. 2007;7(1):1–11. https://doi.org/10.1186/1472-6963-7-85.

Hackshaw A. Small studies: strengths and limitations. Eur Respir J. 2008;32(5):1141–3. https://doi.org/10.1183/09031936.00136408.

Bethlehem J. Selection bias in web surveys. Int Stat Rev. 2010;78(2):161–88. https://doi.org/10.1111/j.1751-5823.2010.00112.x.

Cho YI, Johnson TP, VanGeest JB. Enhancing surveys of health care professionals: a meta-analysis of techniques to improve response. Evaluation & the Health Professions. 2013;36(3):382–407. https://doi.org/10.1177/01632787134964.

Markwell AL, Wainer Z. The health and wellbeing of junior doctors: insights from a national survey. Med J Aust. 2009;191(8):441–4. https://doi.org/10.5694/j.1326-5377.2009.tb02880.x.

Arnolda G, Winata T, Ting HP, Clay-Williams R, Taylor N, Tran Y, et al. Implementation and data-related challenges in the deepening our understanding of quality in Australia (DUQuA) study: implications for large-scale cross-sectional research. Int J Qual Health Care. 2020;32(Supplement1):75–83. https://doi.org/10.1093/intqhc/mzz108.

Oleinik A. Mixing quantitative and qualitative content analysis: Triangulation at work. Qual Quantity. 2011;45(4):859–73. https://doi.org/10.1007/s11135-010-9399-4.

Acknowledgements

The authors are grateful to the hospital contacts and COSA for their support in disseminating the survey invitations to participants, and to the clinicians who participated in this research.

Funding

MB is supported by an Australian government Research Training Program Scholarship stipend associated with the Australian Institute of Health Innovation, Macquarie University (ID:9100002). JB is chief investigator on a National Health and Medical Research Council Centre for Research Excellence Grant (APP1135048). The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

Author information

Contributions

MB designed the study, completed data collection, analysis and interpretation, and wrote the manuscript. FR, GA and JB reviewed and provided feedback on the study design, analysis, and manuscript. All authors read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

Ethics approval was received from the South-Western Sydney Local Health District Human Research Ethics Committee (HREC), and the Macquarie University HREC (2019/ETH11722 and 52019568810127, ID:5688) and governance approval was granted from seven hospital sites. All participants provided informed, written consent. All methods were performed in accordance with the relevant guidelines and regulations.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Bierbaum, M., Arnolda, G., Braithwaite, J. et al. Clinician attitudes towards cancer treatment guidelines in Australia. BMC Res Notes 16, 80 (2023). https://doi.org/10.1186/s13104-023-06356-5

DOI: https://doi.org/10.1186/s13104-023-06356-5