Abstract

Background

Hospitals in sub-Saharan Africa (SSA) continue to receive high numbers of severely ill (HIV-infected) patients with physical pain who may suffer from hepatic and renal dysfunction. Paracetamol is widely used for pain relief in this setting, but it is unknown whether therapeutic drug concentrations are attained. The aim of this study was to assess the occurrence of therapeutic, sub-therapeutic and toxic paracetamol concentrations in an adult SSA hospital population.

Methods

In a cross-sectional study, plasma paracetamol concentrations were measured in patients with an oral paracetamol prescription in a referral hospital in Mozambique. From August to November 2015, a maximum of four blood samples per patient were drawn at different time points for paracetamol concentration measurement and biochemical analysis. Study endpoints were the percentage of participants with therapeutic (≥ 10 and ≤ 20 mg/L), sub-therapeutic (< 10 mg/L) and toxic (> 75 mg/L) concentrations.

Results

Sixty-seven patients with a median age of 37 years, a body mass index of 18.2, a haemoglobin concentration of 10.3 g/dL and an albumin of 29 g/L yielded 225 samples. 13.4% of participants had one or more therapeutic paracetamol concentrations. 86.6% had a sub-therapeutic concentration at all time points and 70.2% had two or more concentrations below the lower limit of quantification. No potentially toxic concentrations were found.

Conclusions

Routine oral dosing practices in a SSA hospital resulted in substantial underexposure to paracetamol. Palliation is likely to be sub-standard and oral palliative drug pharmacokinetics and dispensing procedures in this setting need further investigation.

Introduction

Health care institutions in sub-Saharan Africa (SSA) continue to receive large numbers of severely ill HIV-infected patients with opportunistic infections and cancer, conditions known to be important causes of physical pain irrespective of treatment with antiretroviral drugs or chemotherapy [1, 2]. In the relative absence of opioid drugs in this region of the world, oral formulations of paracetamol (acetaminophen) are commonly prescribed for the relief of fever and pain. In the so-called WHO Pain Ladder, an analgesic treatment tool for physicians, the use of paracetamol is recommended as a first step [3, 4].

To improve palliative care in SSA health care institutions, a great deal of attention is paid to supply chains of analgesic drugs and training of health care workers on drug prescribing [3]. Information about analgesic drug concentrations is however scarce, whereas this could offer insight on the actual attainment of therapeutic drug concentrations and the appropriateness of existing dosing routines. Since kidney and liver disease are common in the general population as a result of the high prevalence of hypertension and hepatitis B, and over-the-counter drug and prescription medicine misuse is high, such information could also shed light on the occurrence of potentially toxic drug concentrations [5,6,7,8].

The aim of this pilot study was to evaluate plasma paracetamol drug concentrations in an adult SSA hospital population during routine oral paracetamol dosing, whilst investigating the occurrence of therapeutic, sub-therapeutic and potentially toxic drug concentrations.

Methods

Setting

Mozambique has an estimated adult HIV prevalence of 10.6% [9]. The Beira Central Hospital (HCB) is a 733-bed governmental referral health facility with 260 internal medicine beds, admitting up to 1500 patients monthly. The HIV prevalence on medicine wards is estimated to be at least 75% and up to 30% of patients die during hospital stay [10].

Study design

The current study was a cross-sectional pilot study and a sub-study of a larger population pharmacokinetic (PPK) study of antibiotics. In this study, pharmacokinetic (PK) data were collected from October 2014 until November 2015 from adult patients admitted to the medicine ward of the HCB, who were being treated with one or more of the following intravenously administered antimicrobials: benzylpenicillin, ampicillin, gentamicin and ceftriaxone.

Recruitment and data collection

Patients were selected on the basis of use of study antibiotics as documented in a patient’s medication administration record. Inclusion criteria were age ≥ 18 years and being willing and able to give informed consent. Exclusion criteria were the use of drugs known to significantly affect PK of the different study antibiotics, a hemoglobin level ≤ 6 g/dL, and any condition necessitating a blood transfusion, irrespective of hemoglobin level. PPK study participants with any quantitative oral paracetamol prescription were selected for the current sub-study. Only participants with an ‘as needed’ paracetamol prescription (pro re nata: PRN) were excluded.

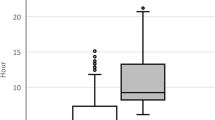

A participant’s weight and height were measured by the study team, and other baseline characteristics and paracetamol dosing information were captured as documented in the patient’s (medication) record. Over 2–3 consecutive days, a maximum of four blood draws were performed for the measurement of antibiotic drug concentrations: a trough level, a peak level and two levels at random time points. The same blood samples were used for the determination of paracetamol concentrations, thus providing random sampling relative to the moment of administration of paracetamol. The dispensing and ingestion of paracetamol were not directly observed. One blood sample was used for the measurement of albumin, liver enzyme and creatinine concentrations. Plasma was stored at − 80 °C in the local research laboratory until shipment on dry ice to the Netherlands for biochemical marker and drug concentration analysis.

Total plasma paracetamol concentrations were measured using a validated colorimetric enzymatic assay with the Cobas Integra 800 System Analyzer (Roche Diagnostics, Mannheim, Germany). The lower limit of quantification was 2 mg/L.

Study endpoints were the percentage of patients with a therapeutic paracetamol concentration, defined as ≥ 10 and ≤ 20 mg/L, the percentage of patients with a sub-therapeutic paracetamol concentration, defined as < 10 mg/L, and the percentage with a drug level that was considered potentially toxic, defined as > 75 mg/L [11,12,13].
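The endpoint definitions can be sketched as a small classifier. This is a minimal illustration, not the authors' analysis code: it treats the therapeutic bounds as inclusive (as stated in the abstract), the label for values between 20 and 75 mg/L is an assumption because the text does not name that band, and values below the 2 mg/L lower limit of quantification are assumed to be passed in as numbers below 2.

```python
LLOQ_MG_L = 2.0          # lower limit of quantification reported for the assay
THERAPEUTIC_LOW = 10.0   # mg/L, inclusive (per the abstract)
THERAPEUTIC_HIGH = 20.0  # mg/L, inclusive (per the abstract)
TOXIC_ABOVE = 75.0       # mg/L, potentially toxic if exceeded


def classify_concentration(conc_mg_l):
    """Label one plasma paracetamol concentration (mg/L)."""
    if conc_mg_l > TOXIC_ABOVE:
        return "potentially toxic"
    if conc_mg_l < THERAPEUTIC_LOW:
        return "sub-therapeutic"
    if conc_mg_l <= THERAPEUTIC_HIGH:
        return "therapeutic"
    # Band not named in the text; this label is a hypothetical placeholder.
    return "above therapeutic range"


def summarise_participant(concentrations_mg_l):
    """Per-participant flags matching the study endpoints."""
    labels = [classify_concentration(c) for c in concentrations_mg_l]
    return {
        "any_therapeutic": "therapeutic" in labels,
        "all_subtherapeutic": all(lab == "sub-therapeutic" for lab in labels),
        "any_potentially_toxic": "potentially toxic" in labels,
        "n_below_lloq": sum(c < LLOQ_MG_L for c in concentrations_mg_l),
    }
```

The per-participant summary mirrors how the endpoints are reported: a participant counts as therapeutic if at least one sample falls in range, and as sub-therapeutic only if every sample is below 10 mg/L.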

Data processing and analysis

Data were entered, cleaned and analysed using Excel 2011 (Microsoft, Redmond, WA, USA) and the Excel descriptive statistics add-in tool StatPlus 4.8 (AnalystSoft Inc., Walnut, CA, USA) for quantitative items.

Results

Study population

Participants for the current study were selected from the 143 PPK study participants enrolled during the last 4 months of the inclusion period. Of these, 78/143 (54.5%) patients had an oral paracetamol prescription. Five patients had a PRN prescription and six patients had no blood samples available for drug concentration measurement, leaving the study with a total of 67 participants. A majority of 57/67 participants (85.1%) had a 500 or 1000 mg three times per day prescription (Table 1).
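The participant flow above can be recomputed from the reported counts. The snippet is only an arithmetic sketch; the variable names are illustrative and the counts are those given in the text.

```python
enrolled_ppk = 143            # PPK participants in the last 4 months of inclusion
with_oral_prescription = 78   # had an oral paracetamol prescription
prn_only = 5                  # excluded: 'as needed' prescription
no_samples = 6                # excluded: no blood sample available

# Participants remaining after both exclusions.
participants = with_oral_prescription - prn_only - no_samples


def pct(numerator, denominator):
    """Percentage rounded to one decimal place."""
    return round(100 * numerator / denominator, 1)


print(participants)
print(pct(with_oral_prescription, enrolled_ppk))
print(pct(57, participants))  # 500 or 1000 mg three times per day
```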

At least one abnormal liver function marker was present in 34/67 (50.7%) participants and 30/67 (44.8%) had an estimated creatinine clearance below 80 mL/min.
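The text does not state which equation was used to estimate creatinine clearance. Purely as an illustration, the sketch below assumes the widely used Cockcroft-Gault equation and applies the 80 mL/min cut-off mentioned above; the function names and the unit conversion helper are hypothetical and not taken from the study.

```python
def creatinine_umol_to_mg_dl(creatinine_umol_l):
    """Convert serum creatinine from µmol/L to mg/dL."""
    return creatinine_umol_l / 88.4


def cockcroft_gault(age_years, weight_kg, creatinine_mg_dl, female):
    """Estimated creatinine clearance (mL/min), Cockcroft-Gault equation.

    Assumption: the study may have used a different estimating equation.
    """
    clearance = (140 - age_years) * weight_kg / (72 * creatinine_mg_dl)
    return clearance * 0.85 if female else clearance


def below_cutoff(clearance_ml_min, cutoff_ml_min=80.0):
    """True if clearance is below the cut-off used in the Results."""
    return clearance_ml_min < cutoff_ml_min
```

Because the equation scales with body weight, a low-weight population such as the one described here would tend towards lower estimates at a given serum creatinine.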

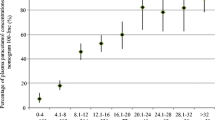

Paracetamol concentrations

A total of 225 blood samples were available for analysis. Of the 67 participants, 62 (92.5%) had two or more blood samples available for drug concentration measurement (Fig. 1). Plasma paracetamol concentrations were generally low, with 86.6% (58/67) of participants having sub-therapeutic levels at all time points and 70.2% (47/67) of participants having two or more paracetamol levels below the lower limit of quantification. One or more therapeutic drug levels were measured in 13.4% (9/67) of participants, most of whom had a 500 mg three times/day prescription. The highest concentration measured was 19.8 mg/L and no potentially toxic concentrations were found.
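Since the highest measured concentration (19.8 mg/L) lies within the therapeutic range, every participant either had sub-therapeutic levels at all time points or had at least one therapeutic level. The short check below, using only counts reported in this section, confirms that the two groups add up to the full study population; the names are illustrative.

```python
n_participants = 67
all_subtherapeutic = 58     # sub-therapeutic at every time point
any_therapeutic = 9         # one or more therapeutic levels
two_or_more_samples = 62    # participants with at least two samples


def pct(numerator, denominator):
    """Percentage rounded to one decimal place."""
    return round(100 * numerator / denominator, 1)


# The two endpoint groups are mutually exclusive and cover everyone.
groups_cover_population = (all_subtherapeutic + any_therapeutic == n_participants)

print(groups_cover_population)
print(pct(all_subtherapeutic, n_participants))
print(pct(any_therapeutic, n_participants))
print(pct(two_or_more_samples, n_participants))
```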

Discussion

In this pilot study, paracetamol concentrations were measured in a SSA hospital medicine ward population consisting of young, chronically and severely ill patients, as illustrated by a low median BMI and a high frequency of anaemia, hypoalbuminaemia, and renal and hepatic dysfunction, the latter reflected in the creatinine and liver enzyme results. Under routine paracetamol dosing practices, paracetamol concentrations were sub-therapeutic, if measurable at all, in the vast majority of patients, even though paracetamol is metabolized by the liver and eliminated by the kidneys, and dysfunction of both organs was common. Only a few patients had one or more therapeutic paracetamol concentrations. Potentially toxic drug concentrations were not found. One study with healthy volunteers and another with patients under spinal anaesthesia looked into the PK of 1 g of orally administered paracetamol (≈ 15 mg/kg) and found a mean Cmax of 12.3 and 18.0 mg/L respectively, although with high inter-individual variability [14, 15]. A study in an elderly population on a 1 g three times per day oral regimen reported a mean trough level of 1.3 mg/L [16]. Based on this evidence, it is conceivable that a substantial part of our study’s drug concentrations would be around or under the lower limit of the therapeutic range. Given our random sampling procedures, however, the common occurrence of (immeasurably) low paracetamol levels across all samples does not seem to fit the observations in the studies mentioned, even when a relatively low daily dose of paracetamol is taken into account. Although the plasma paracetamol concentration is not always predictive of an individual’s pain response, concentrations between 10 and 20 mg/L are often believed to represent the therapeutic range [11, 15]. Our study’s consistent pattern of paracetamol concentrations below 10 mg/L, or even below the lower limit of quantification, is therefore worrisome, as it may leave pain and fever inadequately treated.
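To make the reasoning about peaks, troughs and random sampling concrete, the sketch below uses a textbook one-compartment model with first-order absorption and elimination, superposed over repeated 8-hourly doses. It is not a model fitted in this study: all parameter values (bioavailability, volume of distribution, absorption rate, half-life) are illustrative assumptions chosen for the example, and the function names are hypothetical.

```python
import math

# Illustrative values only; none are estimated from this study's data.
F = 0.9                    # assumed oral bioavailability (fraction)
V_L = 54.0                 # assumed volume of distribution, litres
KA = 2.0                   # assumed absorption rate constant, 1/h
KE = math.log(2) / 2.5     # elimination constant for an assumed 2.5 h half-life
TAU_H = 8.0                # dosing interval of a three-times-daily regimen


def conc_single_dose(t_h, dose_mg):
    """Concentration (mg/L) t_h hours after one oral dose."""
    if t_h < 0:
        return 0.0
    scale = F * dose_mg * KA / (V_L * (KA - KE))
    return scale * (math.exp(-KE * t_h) - math.exp(-KA * t_h))


def conc_repeated_dosing(t_h, dose_mg, n_previous_doses=10):
    """Superpose the current dose and earlier doses given every TAU_H hours."""
    return sum(conc_single_dose(t_h + k * TAU_H, dose_mg)
               for k in range(n_previous_doses + 1))


def fraction_of_interval_at_or_above(threshold_mg_l, dose_mg, steps=480):
    """Share of one dosing interval spent at or above the threshold."""
    hits = sum(
        conc_repeated_dosing(i * TAU_H / steps, dose_mg) >= threshold_mg_l
        for i in range(steps)
    )
    return hits / steps


for dose in (500, 1000):
    share = fraction_of_interval_at_or_above(10.0, dose)
    print(f"{dose} mg every 8 h: {share:.0%} of the interval at or above 10 mg/L")
```

Under a model of this kind, a sample drawn at a random moment in the dosing interval has a probability of being therapeutic equal to the share of the interval spent in range, which is why a fair proportion of low values is expected even with full adherence, while a near-complete absence of measurable drug is not.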

Possible explanations may be found at a patient’s level as well as at the health system level. Interestingly, results from a clinical PK study comparing oral paracetamol absorption in patients while supine and standing demonstrated that in subjects taking a tablet while supine, esophageal transit of tablets was delayed. Paracetamol peak plasma concentrations occurred later while being significantly lower [17]. Our study population largely consisted of weak, bedridden patients who lack consistent assistance with eating, drinking and taking oral medication, and impaired oral drug absorption could therefore have contributed to the low drug concentrations found. In critically ill patients, systemic exposure to paracetamol can also decrease when the volume of distribution increases as a result of fluid shifts and hypoalbuminaemia [18].

Apart from the potential influences of PK processes, shortcomings in the efficiency of the local health system may have played a substantial role in patients not achieving therapeutic paracetamol levels. There is a shortage of motivated, well-trained health care workers, and few nurses may be responsible for large numbers of very ill patients [19]. This can lead to erroneous drug dispensing as well as inconsistent assistance with eating, drinking and taking oral medication.

High in-patient HIV-related morbidity and mortality as well as weaknesses in the health system are not unique to Mozambique, and we therefore suspect that underexposure to oral analgesic drugs also occurs in other health care institutions across SSA [20].

An important limitation of this study is that drug dispensing and ingestion were not directly observed and that blood samples were drawn at time points relative to the dosing of study antibiotics, and not relative to the dosing of paracetamol. Although it was thus not possible to relate paracetamol concentrations to actual doses and administration time points, the study’s approach rendered samples that were randomly distributed over the paracetamol-dosing interval, covering peak levels as well as troughs.

Conclusion

In a chronically and severely ill adult SSA hospital population with a paracetamol prescription, underexposure to paracetamol appears to be common under routine oral dosing practice. The occurrence of low plasma paracetamol concentrations is likely to lead to sub-optimal palliation. Although this pilot study has limited power to make inferences, the study results do seem to underline a need for a comprehensive oral palliative drug monitoring and evaluation agenda in SSA that addresses analgesic drug therapy at the health system level as well as at the patient level.

Abbreviations

- SSA: sub-Saharan Africa(n)
- PPK: population pharmacokinetic(s)
- PK: pharmacokinetic(s)
- BMI: body mass index
- PRN: pro re nata (‘as needed’)
- GGT: gamma-glutamyl transferase
- AST: aspartate aminotransferase
- ALT: alanine aminotransferase
- Cmax: peak plasma concentration

References

UNAIDS. Global AIDS update 2016. 2016. http://www.unaids.org/sites/default/files/media_asset/global-AIDS-update-2016_en.pdf. Accessed 24 Jan 2017.

Parker R, Stein DJ, Jelsma J. Pain in people living with HIV/AIDS: a systematic review. J Int AIDS Soc. 2014. https://doi.org/10.7448/IAS.17.1.18719.

Harding R, Higginson IJ. Palliative care in sub-Saharan Africa. Lancet. 2005;365:1971–7.

World Health Organization. Palliative care fact sheet No 402. 2015. http://www.who.int/mediacentre/factsheets/fs402/en/. Accessed 24 Jan 2017.

Schweitzer A, Horn J, Mikolajczyk RT, Krause G, Ott JJ. Estimations of worldwide prevalence of chronic hepatitis B virus infection: a systematic review of data published between 1965 and 2013. Lancet. 2015;386:1546–55.

Stanifer JW, Jing B, Tolan S, Helmke N, Mukerjee R, Naicker S, et al. The epidemiology of chronic kidney disease in sub-Saharan Africa: a systematic review and meta-analysis. Lancet Glob Health. 2014;2(3):e174–81.

Spearman CW, Sonderup MW. Health disparities in liver disease in sub-Saharan Africa. Liver Int. 2015;35:263–71.

Myers B, Siegfried N, Parry CD. Over-the-counter and prescription medicine misuse in Cape Town–findings from specialist treatment centres. S Afr Med J. 2003;93:367–70.

Conselho Nacional de Combate ao HIV e SIDA (CNCS). Global aids response progress report: country progress reports Mozambique. http://www.unaids.org/sites/default/files/country/documents//file%2C94670%2Cfr.pdf Accessed 26 Jun 2017.

Bos JC, Smalbraak L, Macome C, Gomes E, van Leth F, Prins JM. TB diagnostic process management of patients in a referral hospital in Mozambique in comparison with the 2007 WHO recommendations for the diagnosis of smear-negative pulmonary TB and extrapulmonary TB. Int Health. 2013;5:302–8.

Rumack BE. Acetaminophen misconceptions. Hepatology. 2004;40:10–5.

Koppen A, van Riel A, de Vries I, Meulenbelt J. Recommendations for the paracetamol treatment nomogram and side effects of N-acetylcysteine. Neth J Med. 2014;72:251–7.

Wallace CI, Dargan PI, Jones AL. Paracetamol overdose: an evidence based flowchart to guide management. Emerg Med J. 2002;19:202–5.

Langford RA, Hogg M, Bjorksten AR, Williams DL, Leslie K, Jamsen K, et al. Comparative plasma and cerebrospinal fluid pharmacokinetics of paracetamol after intravenous and oral administration. Anesth Analg. 2016;123:610–5. https://doi.org/10.1213/ANE.0000000000001463.

Singla NK, Parulan C, Samson R, Hutchinson J, Bushnell R, Beja EG, et al. Plasma and cerebrospinal fluid pharmacokinetic parameters after single-dose administration of intravenous, oral, or rectal acetaminophen. Pain Pract. 2012. https://doi.org/10.1111/j.1533-2500.2012.00556.x.

Bannwarth B, Pehourcq F, Lagrange F, Matoga M, Maury S, Palisson M, et al. Single and multiple dose pharmacokinetics of acetaminophen (paracetamol) in polymedicated very old patients with rheumatic pain. J Rheum. 2001;28:182–4.

Channer KS, Roberts CJ. Effect of delayed esophageal transit on acetaminophen absorption. Clin Pharmacol Ther. 1985;37:72–6.

De Maat MM, Tijssen TA, Brüggemann RJ, Ponssen HH. Paracetamol for intravenous use in medium- and intensive care patients: pharmacokinetics and tolerance. Eur J Clin Pharmacol. 2010;66:713–9.

World Health Organization. No health without a workforce. 2013. http://www.who.int/workforcealliance/knowledge/resources/hrhreport2013/en/. Accessed 24 Jan 2017.

Wajanga BMK, Webster LE, Peck RN, Downs JA, Mate K, Smart LR, et al. Inpatient mortality of HIV-infected adults in sub-Saharan Africa and possible interventions: a mixed method review. BMC Health Serv Res. 2014. https://doi.org/10.1186/s12913-014-0627-9.

Authors’ contributions

JCB, JMP and RAM conceived and designed the study; JCB prepared an application for the Mozambican National Committee for Bio-ethics in Health. MM, GN and JCB locally implemented the study. JCB and RVH analysed the data, and JCB carried out the interpretation of the data. JCB drafted the manuscript. RVH, RAM and JMP critically revised the manuscript for intellectual content. JMP is the guarantor of the paper. All authors read and approved the final manuscript.

Authors’ information

JCB and JMP are internists and infectious diseases specialists who have collaborated extensively with the Faculty of Health Sciences of the Catholic University of Mozambique (UCM) in Beira, with the Beira Central Hospital and with the Ministry of Health of the Republic of Mozambique (MISAU) since 2006 for the improvement and expansion of graduate and post-graduate internal medicine training.

Acknowledgements

In Mozambique, we are indebted to the late Carlos de Oliveira, internist and former head of the HCB Department of Internal Medicine, who made study office space available on the ward. We would like to thank Ms. Marcelina Duarte, former study team member, for her organizational work during the initial 3 months of the study. We also thank the CIDI laboratory nursing and support staff and the HCB nursing and medical staff for their share in ethics, good clinical and laboratory practice training and their cooperation, respectively. In the Netherlands we thank Ms. Femke Schrauwen, trial coordinator of the AMC biochemistry laboratory, and Ms. Marloes van der Meer and Marcel Pistorius, research analysts of the AMC pharmacology laboratory, for their useful input in the interpretation of the study’s laboratory testing results.

Competing interests

The authors declare that they have no competing interests.

Availability of data and materials

The dataset used and analysed during the current study is available from the corresponding author on reasonable request.

Consent for publication

Not applicable.

Ethics approval and consent to participate

The study, including the current sub-study, was reviewed and approved by the Mozambican National Committee for Bio-ethics in Health (CNBS: study ref. 118/CNBS/2013). A letter of approval was obtained from the general director of the HCB. Participants gave written informed consent. Those unable to read, write and/or understand Portuguese gave a thumbprint and an impartial, literate witness observed the entire informed consent process and subsequently co-signed the informed consent form.

Funding

This work was internally funded with AMC funding. Additionally, it was indirectly supported by the Gilead Foundation (July 14, 2014; IA 356007) and a Dutch private donor who wishes to remain anonymous (CA 356001), and whose professional activities do not create a conflict of interest for any of the authors. Both funding parties supported the local presence of JCB in Mozambique in the context of a long-running medical educational capacity building project with the Faculty of Health Sciences of the Catholic University of Mozambique (FCS-UCM).

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

Bos, J.C., Mistício, M.C., Nunguiane, G. et al. Paracetamol clinical dosing routine leads to paracetamol underexposure in an adult severely ill sub-Saharan African hospital population: a drug concentration measurement study. BMC Res Notes 10, 671 (2017). https://doi.org/10.1186/s13104-017-3016-8

DOI: https://doi.org/10.1186/s13104-017-3016-8