Abstract

Background

For children and young people with eye and vision conditions, research is essential to advancing evidence-based recommendations in diagnosis, prevention, treatments and cures. Patient ‘experience’ reflects a key measure of quality in health care (Department of Health. High Quality Care for All: NHS Next Stage Review Final Report: The Stationery Office (2008)); research participant ‘experiences’ are equally important. Therefore, in order to achieve child-centred, high-quality paediatric ophthalmic research, we need to understand participation experiences. We conducted a systematic review of existing literature; our primary outcome was to understand what children and young people, parents and research staff perceive to support or hinder positive paediatric eye and vision research experiences. Our secondary outcomes explored whether any adverse or positive effects were perceived to be related to participation experiences, and if any interventions to improve paediatric ophthalmic research experiences had previously been developed or used.

Methods

We searched MEDLINE, Embase, CINAHL, Web of Science, NICE Evidence and The Cochrane Library (CDSR and CENTRAL) from inception to November 2018 (updated July 2020), together with key journals (by hand), grey literature databases and Google Scholar, looking for evidence from the perspectives of children, young people, parents and staff with experience of paediatric ophthalmic research. The National Institute for Health Research (NIHR) Participant in Research Experience Survey (PRES) (National Institute for Health Research. Research Participant Experience Survey Report 2018–19 (2019); National Institute for Health Research. Optimising the Participant in Research Experience Checklist (2019)) identified ‘five domains’ pivotal to shaping positive research experiences; we used these domains as an ‘a priori’ framework to conduct a ‘best fit’ synthesis (Carroll et al., BMC Med Res Methodol. 11:29, 2011; Carroll et al., BMC Med Res Methodol. 13:37, 2013).

Results

Our search yielded 13,020 papers; two studies were eligible. These evaluated research experiences from the perspectives of parents and staff; the perspectives of children and young people themselves were not collected. No studies were identified addressing our secondary objectives. Synthesis confirmed the experiences of parents were shaped by staff characteristics, information provision, trial organisation and personal motivations, concurring with the ‘PRES domains’ (National Institute for Health Research. Optimising the Participant in Research Experience Checklist (2019)) and generating additional dimensions to participation motivations and the physical and emotional costs of study organisation.

Conclusions

The evidence base is limited and importantly omits the voices of children and young people. Further research, involving children and young people, is necessary to better understand the research experiences of this population, and so inform quality improvements for paediatric ophthalmic research care and outcomes.

Trial registration

Review registered with PROSPERO, International prospective register of systematic reviews: CRD42018117984. Registered on 11 December 2018.

Background

Clinical research will improve the healthcare we are able to deliver [1]. Ethically robust paediatric clinical studies work in partnership with children, young people and their families to weigh concerns about childhood vulnerability and their complex care needs against the need for children’s participation [2,3,4,5]. Paediatric research design needs to be feasible and acceptable to children and to their families [6]. Caldwell et al. [4] highlight the perils of “piggy-backing” children and young people (CYP) onto a research design intended for adult participants, where their child-specific clinical outcomes, needs and priorities may be neglected. Gillies et al. [7] discuss the ethical risks of poor experiences beyond trial entry (generally, not specifically in paediatrics), which can jeopardise retention and compromise the robustness of results. Patient ‘experience’, alongside safety and effectiveness, is recognised as a key quality measure of care [8]; this is no less the case for children and their families in research.

Unique childhood disease patterns, treatment responses and priorities for intervention acceptability mean that CYP with eye and vision conditions demand research attention [9,10,11,12]; evaluating and learning from their research experiences is critical to the quality of this endeavour [8, 13]. This systematic review seeks to understand the paediatric ophthalmic research experiences of CYP, families and research staff.

Planner et al. [14] champion the measurement of research experiences as a necessary feature of quality improvement and a mechanism for ‘patient-centred’ research design. Their scoping review of studies using a standardised measure of experience (1999–2016) found no consensus about how to measure research experience. Work to develop a valid, reliable and acceptable measure for use across trial portfolios is underway [15].

Meanwhile, since 2015, driven by aspirations to involve participants in shaping research delivery through feedback, the National Institute for Health Research (NIHR), via Local Clinical Research Networks (LCRNs), has been measuring research experiences using its ‘Participant in Research Experience Survey’ (PRES). The format of PRES continues to evolve, moving towards national standardisation and away from LCRN variation [16]; up until the 2020/2021 survey, three standardised questions around information provision and general research experience were mandated. In 2019, analysis of national standardised question responses (2018/2019) underpinned development of the ‘Optimising the Participant in Research Experience Checklist’; this identifies five domains significant to shaping positive research experiences, the dimensions of which can be derived from authors’ explanations and examples of each domain [17, 18] (see Table 1). It is striking to note the similarity of these five domains to those identified in a study of research retention strategies nearly a quarter of a century ago [19]. To illustrate the similarities, the strategies recommended by Given et al. [19] have been listed alongside the PRES checklist recommendations [18] (see Table 1).

Objectives

The primary objective of this systematic review was to understand what children, young people, their parents and research staff perceive to support or hinder a positive paediatric eye and vision research participation experience.

The secondary objectives were to:

i) Determine if any adverse or positive effects are perceived to be related to participation experiences

ii) Identify if any previous interventions have been developed or used to improve paediatric ophthalmic research experiences

Methods

This systematic review followed the Centre for Reviews and Dissemination (CRD) guidance for the conduct of healthcare reviews [20] and is reported in accordance with the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) [21]. The protocol was prospectively registered with PROSPERO (Registration Number CRD42018117984, 11 December 2018).

Search strategy

We searched the electronic databases MEDLINE, Embase, CINAHL, Web of Science, NICE Evidence and The Cochrane Library (CDSR and CENTRAL), from inception until November 2018 and updated the searches in July 2020. Scoping searches identified the most useful search terms and combinations; in order to accommodate our diverse objectives, we designed a sensitive search strategy (see Additional File 1). In addition, key journals were hand-searched, grey literature databases were searched and a focussed search in Google Scholar was conducted (see Additional File 2). References and citations of included studies were screened.

Study eligibility criteria

Eligibility criteria were defined using the SPIDER (Sample, Phenomenon of Interest, Design, Evaluation, Research type) review tool [22], a tool appropriate for framing qualitative questions [23] (see Table 2). To be included in the review, a study had to explore the paediatric eye and vision research participation experiences/perceptions/views/opinions of CYP and/or their parents and/or research staff. To avoid subjecting every ophthalmic study that included CYP to full text screening, we made a pragmatic decision to include only studies where outcomes relating to, or discussing, research experiences were reported in the title or abstract.

Study selection

Search results were exported into the Covidence software (© 2020 Covidence) and duplicates removed. Two reviewers (JM, MM) independently screened all titles and abstracts to identify potentially relevant articles. Any disagreements were resolved through discussion, with the topic expert (JM) taking the final decision. The full texts of these articles were then assessed for inclusion independently by two reviewers (JM, ADN) using the eligibility criteria outlined in Table 2. Where eligibility was unclear, additional information was sought from authors and JC resolved conflicts.

Quality assessment

The quality assessment tool by Hawker et al. [24] (Additional File 3) was selected to assess the overall quality of the included studies. Because our eligibility criteria included ‘any study design’, the Hawker et al. [24] tool was deemed suitable owing to its ability to cope with quality assessment across a potentially diverse group of empirical studies. Whilst debate continues about the assessment of qualitative methods [23], it was decided that presenting our assessment using these broad criteria would enable transparent decision-making for a range of methodologies. Hawker et al. [24] equated 10 = very poor, up to 40 = good. However, because it was unclear how a top score of 40 could be reached (with 9 questions each scoring 1–4), MM and JM agreed that for the purposes of this review, the tool would be adapted; 36 would be classed as the maximum score (very poor = 0–9, poor = 10–18, fair = 19–27, good = 28–36). Two reviewers (JM, KCT) appraised included studies and discussed their assessments to reach conclusions on study quality.
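The adapted banding described above can be expressed as a short sketch. This is an illustration only, assuming nine items each scored 1–4 and the four bands stated in the text; the names `total_score` and `quality_band` are hypothetical and do not come from Hawker et al. [24] or from the review's own materials.

```python
# Illustrative sketch of the adapted scoring bands described in the text.
# Assumptions: 9 items, each scored 1-4, giving a maximum total of 36.
# Function and constant names are hypothetical.

N_ITEMS = 9
MIN_ITEM_SCORE = 1
MAX_ITEM_SCORE = 4

# (lower bound, upper bound, label), bounds inclusive, as stated in the review.
BANDS = [
    (0, 9, "very poor"),
    (10, 18, "poor"),
    (19, 27, "fair"),
    (28, 36, "good"),
]


def total_score(item_scores):
    """Sum nine item scores after checking each lies in the 1-4 range."""
    if len(item_scores) != N_ITEMS:
        raise ValueError(f"expected {N_ITEMS} item scores, got {len(item_scores)}")
    for score in item_scores:
        if not MIN_ITEM_SCORE <= score <= MAX_ITEM_SCORE:
            raise ValueError(f"item score {score} outside {MIN_ITEM_SCORE}-{MAX_ITEM_SCORE}")
    return sum(item_scores)


def quality_band(total):
    """Map a total score to the adapted quality band."""
    for low, high, label in BANDS:
        if low <= total <= high:
            return label
    raise ValueError(f"total {total} outside the 0-36 range")
```

Under these assumptions, the totals of 30 and 34 reported later for the two included studies both fall in the ‘good’ band.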

Data extraction and synthesis

Two reviewers (JM, KCT) independently extracted data on study characteristics (author, publication date, country, setting, study sample, experience measure methods) and results from the included studies. The same two reviewers independently conducted a ‘best fit’ synthesis of the included study data against the five domains of the NIHR PRES framework (see Table 1) [25, 26]. This involved discussion of their independent interpretations, to reach a judgement on the definitions of the domains, actively seeking disconfirming data (i.e. falling outside the framework), as well as data falling within existing domains, to generate additional domains of research experience, as well as additional dimensions of existing domains. Differences in the synthesis between the two reviewers were resolved through discussion.

Results

Searches

The PRISMA [21] flowchart outlining the screening process is shown in Fig. 1.

Our search strategy identified 20,926 papers, 7906 of which were duplicates. Therefore, 13,020 were screened on title and abstract. This high volume of papers arose from the sensitivity of our search strategy, which did not include strings relating to study design or approach. This was to identify studies exploring experiences of research participation, as well as measured effects or interventions to improve experience (see Objectives). Of the 101 papers which progressed to full text review, one was unobtainable. Ninety-eight studies (n = 92 identified via databases/registers, n = 6 via other methods) were excluded as follows: duplicates (n = 6); wrong sample/population (n = 4, mostly adult samples); wrong phenomenon of interest (n = 30: 7 measuring other experiences, for example of clinical practice, of trial recruitment only, or of patient and public involvement activities, and 23 measuring experience of the trial intervention only); wrong evaluation (n = 23, did not measure experiences); wrong research type (n = 3, not empirical); wrong publication type (n = 32, no published datasets: protocols, studies in progress or abstract only). Of the 23 studies which focussed on the experience of the intervention only, some evaluated ophthalmic tests (n = 9) such as vision screening, intraocular pressure (IOP) measurement, perimetry, fundoscopy assessments and ptosis assessments, and others (n = 14) evaluated treatments for ophthalmic conditions such as amblyopia, allergic conjunctivitis and myopia. How children and young people experience interventions is important (including what measure is used and how the data are reported). However, after scrutiny by the review team, it was agreed that the broad experiences children had of participating in these studies were not measured; therefore, they were excluded. Two studies (reported in three papers) met the inclusion criteria: Dias et al. [27], Buck et al. [28] and Clarke et al. [29] (with Buck et al. [28] reporting results pertaining to this review more comprehensively than Clarke et al. [29]).
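As a simple consistency check, the screening counts reported above can be tallied in a few lines. This is a sketch only: the figures are copied from the text, while the variable names are hypothetical and nothing here reproduces the Covidence workflow itself.

```python
# Tally of the screening counts reported in the text (names are illustrative).

records_identified = 20926
duplicates_removed = 7906
screened_title_abstract = records_identified - duplicates_removed

# Full-text exclusions by reason, as listed in the text.
exclusion_reasons = {
    "duplicates": 6,
    "wrong sample/population": 4,
    "wrong phenomenon of interest": 30,
    "wrong evaluation": 23,
    "wrong research type": 3,
    "wrong publication type": 32,
}
excluded_total = sum(exclusion_reasons.values())

# Exclusions by source of identification.
excluded_by_source = {"databases/registers": 92, "other methods": 6}

# Breakdown of the 'wrong phenomenon of interest' category.
phenomenon_breakdown = {"other experiences": 7, "intervention only": 23}

# Breakdown of the 'intervention only' studies.
intervention_breakdown = {"ophthalmic tests": 9, "treatments": 14}

print("Screened on title and abstract:", screened_title_abstract)
print("Excluded at full text:", excluded_total)
```

The sub-totals agree with one another: the six exclusion reasons and the two sources each sum to the same excluded total, and the two breakdowns sum to their parent categories.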

Included study characteristics

Key characteristics and research participation experience measure methods for the two included studies are presented in Additional File 4.

Quality assessment

Quality assessment ratings of the two included studies are presented in Additional File 3. Both studies were graded ‘good’ (‘good’ range 28–36), with scores of 30 [28] and 34 [27]. Despite both studies being ‘good’, we were conscious of the following limitations when synthesising their findings. For Buck et al. [28], the wide range of data collection time points defocused the purpose of their evaluation by not accounting for altered perceptions over time. It would be interesting to know, for example, how insights differed between those interviewed just after enrolment and those interviewed 10 weeks later. In addition, their interviews were not recorded and transcribed verbatim; instead, notes were entered into a computer whilst conducting the interview. This may be what led to minimal exemplar quotes and a lack of detail in the results reported. More information on the prominence of the different themes identified would have enhanced the results section, together with details to explain certain aspects (for example the meaning of ‘communication’ in their results Table 3). The sample relevant to this review was small (n = 14); little rationale is given about the sample size or the demographic details of the interviewees.

For Dias et al. [27], the questionnaire itself was an adapted version of a survey used in the Framingham Heart Study [30]; no information is given about how either questionnaire was designed. Whether the items evaluated in the questionnaires were aspects of care that families would consider important to their experiences remains unknown, since there is no mention of involving families (or staff) in either. In addition, the closed-questionnaire format did not allow for ‘any other comments’ or for families to highlight ‘any other’ aspects of their research experiences not listed. Dias et al. [27] also used their surveys for parents to rate the aspects of the study ‘important to retention’. However, the results presented do not share the ratings, instead focussing on the comparison between family and staff opinions.

Primary outcome

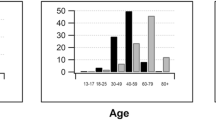

No data were identified where CYP were directly consulted on their own experiences of research participation. The perspectives of 425 parents of children between the ages of 6 months and 16 years were sought by Dias et al. [27] and Buck et al. [28] combined. Dias et al. [27] complemented parents’ perspectives by collecting the views of 35 research staff, although they sought staff perceptions of families’ trial experiences rather than staff members’ own perspectives or experiences.

Dias et al. [27] used a survey design and focussed broadly on how families liked more aspects of the COMET study [31] than staff had anticipated. Buck et al. [28] used telephone interviews to explore which aspects of the SamExo study [32] parents deemed acceptable.

The results for our primary objective are presented below, under the domains of the PRES framework detailed in Table 1. Table 3 indicates which studies contributed to each domain.

Relationship with research staff

Dias et al. [27] measured the extent to which parents ‘liked’ the following characteristics of staff: staff response to questions, friendliness, quality of eye care, positive encouragement, seeing the same staff at each visit. In alignment with the PRES framework (Table 1), this domain stood out as supporting positive experiences and being ‘liked’ by all 411 parents, with 95–98% awarding the highest rating (‘liked a lot’) to staff friendliness, response to questions, quality of eye care and positive encouragement [27]. It is worth noting that, as a quality assurance measure embedded within the COMET study, staff received training on the importance of ‘prompt responses to questions’ and had clear protocols for problem solving. Staff underestimated the extent to which parents valued each of the ‘staff characteristics’.

In Buck et al. [28], parents suggested ‘communication’ was needed to make the trial more acceptable. However, from their reporting, it is difficult to identify what this relates to: potentially ‘staff characteristics’, ‘information’ or ‘study organisation’.

Quality and timeliness of information

When parents talked about what would make the SamExo study more acceptable [28], the majority of suggestions related to the ‘study information’. In alignment with the PRES framework (Table 1), parents’ preferences focused on the format, content and comprehensiveness of information. One parent suggested simplification of the Patient Information Sheet (PIS), and another felt the randomisation explanation was ‘too detailed’. In relation to content of the information, despite most parents being ‘satisfied’, Buck et al. [28] reported parental concerns about the following missing or inadequate content: the operation (intervention) itself (e.g. how long it would take), success rates and outcomes, general information about the condition itself and the need for randomisation in the study design. Validating this deficiency, some parents revealed a lack of knowledge or understanding of randomisation during their interviews. A shortfall of information about ‘risks’ was also identified, with one parent commenting about the importance of the ‘NHS’ providing this information rather than having to rely on ‘online’ information which could be “dangerous”. Finally, parents requested extra information about the ‘costs of participation’, which the study authors assume to be solely related to ‘monetary’ costs. It is worth remembering the range of time points at which the interviews were conducted in Buck et al. [28] and considering how parents might view the information differently at 10 weeks compared to 2 days into the trial.

The focus of information provision by Dias et al. [27] related to ‘updates and progress’, where they evaluated parents’ views of their newsletters (93% of parents ‘liked’) and appointment reminders (telephone calls and postcards prior to visits) (98% of parents ‘liked’). Details of the newsletters’ content, format or frequency are not reported. Staff underestimated the extent to which these updates were ‘liked’.

Engagement with participants’ diverse motivations for participation

Altruistic

Buck et al. [28] aligned with the PRES framework (Table 1) by identifying some parents’ altruistic motivations for ‘doing their bit’ for research, though this was never their sole motivation. Dias et al. [27] categorised their newsletters as ‘reinforcements’, potentially aiming to ‘reinforce’ or nurture participating families’ altruistic motivations, though we are not privy to the newsletter content.

Health related

Improved monitoring and care of own condition: similarly to PRES (Table 1), both Buck et al. [28] and Dias et al. [27] report data suggesting that parents were motivated by an expectation that the best or ‘expert’ care, superior to regular clinical care, could be achieved through participation in research. Parents in Buck et al. [28] report being reassured that their child would be monitored. In Dias et al. [27], very high percentages of parents ‘liked’ the ‘quality of eye care’ (99%) and ‘completeness of eye exam’ (99%). Ninety-seven percent of parents ‘liked’ the ‘association with the College of Optometry’ and ninety-nine percent of parents ‘liked’ being ‘part of a nationwide study’, both of which could potentially signal to parents a nationwide availability of high quality and trustworthy care (with a standard of care set by the College of Optometry).

Hope of improvement in personal medical condition: for this dimension, in direct contrast to participants being motivated to participate through the hope of improvement, one parent [28] raised how parents may be ‘put off’ because the study was about ‘the eyesight’. No rationale is given, though the statement implies this parent felt ‘the eyesight’ was either an especially important aspect of their child’s health or an especially vulnerable aspect of their physiology. Either way, the sense is given that the threat of a potential decline in personal medical condition, through research participation, may act as a de-motivating factor.

Opportunity for (relatively) flexible treatment options

This new sub-dimension identified in Buck et al. [28] was added to the PRES framework (Table 4), based on parents’ lack of preference or timeframe for treatment, which thereby increased their willingness or motivation to join a randomised design trial. Parents said they had ‘nothing to lose’: they were happy to have surgery (the study intervention) but also happy to wait (until or if a constant strabismus appeared to be developing, or if parents requested surgery and the responsible clinical team agreed that this was appropriate, or at the end of the trial if randomised to the ‘active monitoring’ arm [32]). Some parents mentioned that they would not be ‘denied’ surgery, so it was a question of ‘when’ they would have surgery, not ‘if’ they would have surgery. Therefore, if joining the study resulted in a delay of surgery, this was an acceptable outcome to some parents whose child had participated. In addition, parents felt reassured they could change their mind about participation (withdrawing from study). Parents were therefore motivated for their child to participate by the variety, flexibility and potentially reversible (withdrawing from study) treatment options which study participation provided.

Opportunity to relinquish personal responsibility for unforeseen effects whilst trying new treatment

This second new sub-dimension added to the framework (Table 4) was derived from one parent who described wanting ‘someone else’ to make the decision for them [28]. Joining a randomised controlled trial (RCT) was described as a ‘positive’, through being exonerated of personal responsibility for a treatment decision by surrendering to random allocation. In the COMET trial [27], parents had to agree to accept the random assignment of treatment and continue for at least 3 years: their ‘COMET commitment’. Eighty-nine percent of parents ‘liked a lot’ the ‘COMET commitment’, though we do not learn any details about why. Potentially, like the parent in Buck et al. [28], parents in the COMET trial also liked the opportunity to surrender control of treatment. It would have been interesting to gain a deeper understanding of why parents ‘liked’ this commitment and indeed explore the feelings of the CYP themselves.

Material incentives designed in by researchers

This third additional sub-dimension (Table 4) was generated from data collected by Dias et al. [27] and related to material incentives, designed into studies by researchers. For Dias et al. [27], ‘thank you’ materials were classed by the authors as ‘incentives’ (movie passes, gift certificates to local attractions, T-shirts, photo frames and other items with the COMET logo, other small age-specific toys and trinkets) plus free glasses (including sports glasses) and free repair/maintenance of glasses; these were all incorporated into the study design and liked by all but 2% of parents.

Study organisation

Included study data aligned well with PRES (Table 1) in terms of organisational aspects appreciated or disparaged by participants. Within Dias et al. [27], families appreciated the flexibility and convenience of appointment times, which included evenings and weekends. When asked whether they liked the length of study (3 years), the majority did, though a small minority did not. Equally, a small minority gave less positive rankings about the ‘location and access to the study site’ [27]; there were four sites, though no details are given of which site(s) posed the problem or why. Staff overestimated the number of parents who disliked the study length and centre access.

In relation to accommodating anticipated ‘costs’ encountered through participation, as highlighted earlier, there was a mention of unanticipated costs in Buck et al. [28]; no details are given as to whether the costs incurred were felt reasonable. Within Dias et al. [27], related to the physical and emotional costs of participation, families and staff rated the eye drops (Anaesthetic, Tropicamide, Fluorescein) required for eye examinations as the least popular feature of the study. Excluding the physical and emotional costs of study ‘interventions’, the PRES framework (Table 1) only lightly touches on the physical and emotional costs of ‘research participation’, in quotes which relate to the emotional burden of ‘waiting’, for example. Perceived burden of eye drops [27] is an important consideration for eye and vision research, where the use of drops which sting for assessments is common. For CYP in particular, it is ethically important to pay special attention to the emotional and physical burden of trial participation. Therefore, adding this example of ‘assessment burden/discomfort/distress’ to this dimension builds in necessary depth to the ‘study organisation’ domain (Table 4).

Study environment

No data were identified relating to this domain of the framework.

Secondary outcomes

No data were identified to meet our secondary outcomes. The exploration by Dias et al. [27] of aspects ‘important to retention’ did not measure proven effects or associations between families’ preferences and retention.

Discussion

We found no evidence capturing CYP’s own experiences of ophthalmic research participation (objective 1) nor measured effects of participation experiences or interventions designed to improve paediatric ophthalmic research experiences (objective 2). Two studies were identified which captured parents’ experiences of their child’s ophthalmic research participation (objective 1).

From a parent perspective therefore, our primary review outcome both concurs with and expands on previous research participation experience evaluations [17, 18]. The extent to which the dimensions and domains of research care (Table 4) are accommodated in research design and delivery may enhance (or undermine) participants’ and their families’ experiences accordingly.

Like other adults [17, 18], parents’ experiences confirmed the important role of positive staff interactions [27], comprehensive and accessible information provision [27, 28] and engagement with participants’ personal motivations for taking part, such as hope for improved monitoring and care [27, 28] or material benefits one can receive as part of a study design (e.g. free maintenance and provision of glasses [27]). Parents were also motivated by flexible and potentially reversible (withdrawing from study) treatment options available via trial participation [28], including the option, afforded by intervention randomisation, to relinquish personal responsibility for a treatment decision [27, 28]. Our synthesis added to the understanding of concerns about health deterioration as a barrier, specifically that this may be especially salient in the context of ophthalmic research where ‘eyesight’ is regarded as a particularly precious and fragile resource [28]. Aligning with the PRES framework (Table 1), the degree to which study logistics were well organised, flexible, convenient and accessible was important [27]. In particular, our data flagged a need for greater attention to the physical and emotional burden of participation, for example, discomfort associated with research assessments [27]; this perhaps is a function of the paediatric population and the associated ethical considerations [33].

Returning to the validity of the survey data, the authors provided no account of how instruments were developed, nor any mention of exploratory evidence about participants’ concerns and priorities to underpin robust survey design. That said, the list of ‘staff characteristics’ posed [27] was broadly similar to examples given in PRES (Table 1). Additions were ‘positive encouragement’ and ‘seeing the same staff at each visit’, which were also staff characteristics highlighted by Given et al. [19] and which we know young people value in the context of routine long-term care [34]. ‘Positive encouragement’ may be particularly pertinent for CYP participating in eye and vision research, where tests and assessments can demand sustained focus and stillness and are often scheduled one after the other in trial protocols. Equally, alongside ‘encouragement’, focusing protocol design around the needs of CYP, and thereby avoiding extended sequences of testing, should also be a consideration.

As discussed in the assessment of quality, a more thorough qualitative design [28], or the inclusion of open text data collection [27], may have provided more understanding about why aspects of research participation were acceptable or ‘liked’. In some of the excluded studies (excluded on the basis of only evaluating ‘experience of the ophthalmic intervention’), the richness in data collected through qualitative methods led to greater understanding of experiences with some important clinical implications. For example, Carrara et al. [35], who conducted interviews to capture parental perspectives on their newborn visual function test, collected such rich data that a change to future practice followed (not handling the baby with one arm during the test), despite this concern being raised by only 1% of their population. In other studies where verbatim comments were formally collected, the understanding of results was also significantly enhanced. For example, in Patel et al. [36], in addition to a difficulty rating scale for static perimetry, comments by children explained that it was the rapid rate and intensity of stimuli presentation which raised the difficulty rating, despite the test being shorter in duration. Without formal collection or systematic analysis, ad hoc comments are less reliable (despite sometimes being added to concluding statements, e.g. Martin ([37] p676) “the children found it rather entertaining”).

Parents’ views, in the context of their important role supporting treatment or research participation, are crucial; but they do not necessarily dovetail with the priorities and concerns of CYP themselves [34, 38,39,40,41]. Though omitted in the included studies, it was heartening to see in a small number of the excluded studies (for example [36, 42], excluded due to only evaluating the ‘experience of the intervention’), attention given directly to CYP’s own perceptions. The United Nations Convention on the Rights of the Child (UNCRC) [43] asserts children’s rights to both “the highest attainable standard of health and to facilities for treatment” and to being asked their perspectives on matters which affect them; a robust evidence base for paediatric ophthalmic healthcare requires attention to the research experiences of CYP themselves and validated measures to monitor this.

Dias et al. [27] spent much time comparing families’ experience ratings with estimates made by staff. Whilst the benefit of staff ‘estimates’ was not immediately obvious, it was interesting to note a broad finding that staff consistently underestimated aspects of the experience families ‘liked’ (for example, staff characteristics, newsletters, appointment reminders). As Dias et al. [27] highlighted, it is important that staff have accurate knowledge of the aspects of research care families value, in order to correctly channel resources and focus (for example, writing or disseminating newsletters) to achieve positive participation experiences. The other notable discrepancy was that staff consistently overestimated negative ratings by families (for example, study centre access, selection of frames and eye drops). With ‘eye drops’, for example, there was a significant difference: 22% of families ‘disliked’ the eye drops, compared with the staff estimate that 83% of families ‘disliked’ them; in addition, unusually, 14% of families did not answer the eye drops question. The explanation for this tension is hindered by data collection methods limited to closed questionnaire surveys. This points to the value of in-depth qualitative approaches to gain insights into experiences, where context is collected to help explain and interpret findings. Despite efforts to gain honest reporting (responses being sent direct to the coordinating centre and anonymity for staff), these discrepancies of opinion could potentially highlight that families still felt uncomfortable reporting negative experiences, or may simply represent differing perspectives, emphasising the importance and value of collecting perspectives from various stakeholders, in particular CYP themselves.

The added value from collecting multiple perspectives was also evident in some of the excluded studies (excluded for only evaluating the ‘experience of the intervention’). For example, in Carrara et al. [35], parents raised concerns regarding testing newborns so ‘early’; in contrast, staff emphasised the need for an early test, to increase the chances of the baby being awake and the test therefore being easier to conduct. Similarly, Patel et al. [36] triangulated the perspectives of CYP with those of their parents and the examiner. Their methods, using an Examiner Based Assessment of Reliability (EBAR) score and comparing scores with the children’s difficulty rating, led to the interesting finding that no relationship was detected between the two. They found it was not always the tests children perceived as ‘hard/difficult’ that were unreliable, nor the ‘easy’ tests that were reliable.

Strengths and limitations

Whilst the sensitivity and scope of our literature search means we are confident no interventions to ‘improve the paediatric experience of eye and vision research participation’ have been developed and tested to date, it became apparent that excluding studies on the basis of ‘no mention of an ‘experience’ outcome within the title or abstract’ could be a potential limitation. Sometimes, where the ‘experience’ evaluation was not the main focus of a study, less formal reporting occurred; this also highlights a wider issue around the reporting of ‘experience’ measures and the varied levels of the importance placed upon this type of outcome within studies.

In addition, ‘experience’ is not well defined in the literature; a wide range of terminology and measures were identified to infer ‘experience’ outcomes (see Additional File 5). A similar variety of terms and measures was identified by Sekhon et al. [44] when reviewing the concept and definition of ‘acceptability’ in relation to health care interventions. They found a mixture of self-report measures (satisfaction measures, experiences or perceptions, interviews, side effects) and observed behaviour measures (dropout rates, reason for discontinuation, withdrawal rates).

Implications for future research

The evidence to understand paediatric eye and vision research experience is scarce; most importantly, it fails to include the voices of CYP themselves. Future research to expand the evidence base, using methodology accessible and acceptable to involve CYP, is recommended to:

a) Better understand paediatric eye and vision research participation experiences;

b) Direct how teams can maximise what enables CYP to have positive experiences and minimise what leads to CYP having poor experiences, in the design and delivery of eye and vision research;

c) Explore any effects of positive and negative experiences, including potential relationships with recruitment and retention to studies.

Equally, no validated instruments were used to measure experiences. Our review builds on the recommendations of Planner et al. [14] with the following suggestions:

a) That multiple perspectives are collected, including the voice of CYP themselves, in a format that is age appropriate and meaningful;

b) The inclusion of all stakeholders in the design of experience measures, to ensure instruments address aspects of research experiences important to participants;

c) That a qualitative component is included to ensure the richness of data required to enable full understanding;

d) That the dominance of the ‘experience of the intervention’, in the context of paediatric ophthalmic research participation experience, is further explored. Currently, the literature is mainly limited to this type of experience evaluation (which was excluded from this review); it is important to decipher which experience domains are the most important and impactful to stakeholders;

e) That further review is conducted of paediatric ophthalmic ‘intervention’ experience measures. Twenty-three papers were excluded from this review for measuring ‘experience of the trial intervention only’; though excluded, a similar paucity of robust, validated, child-friendly experience measures was noted;

f) That consideration is given to the definition and indexing of ‘experience’ terminology, together with expectations for formal reporting for ‘experience’ outcomes. This would help researchers consider the purpose of their experience evaluation(s) and the type of measure used.

Conclusion

Understanding the experiences of CYP taking part in eye and vision research is important to trial integrity; findings can direct improvements, enhancing research design and delivery and promoting quality, credible, child-centred research. However, the current limited evidence base only captures the experiences of parents and a small number of staff; the voices of CYP and their evaluations of their own experiences are missing. Our review adds detail to the current evidence base on aspects of research care pivotal to experiences; whether these additions are limited to eye and vision research is unknown. Further investigation, involving CYP, could expose a unique perspective, which could both inform the way research ‘experience’ is measured and lead to improvements in the quality of paediatric ophthalmic research care, which in turn will maximise the visual outcomes for CYP in the future.

Availability of data and materials

All datasets used and/or analysed during the current review are available from the corresponding author on reasonable request.

Abbreviations

CYP: Children and Young People

References

Department of Health and Social Care. Saving and improving lives: the future of UK clinical research delivery. 2021. Available from: https://www.gov.uk/government/publications/the-future-of-uk-clinical-research-delivery/saving-and-improving-lives-the-future-of-uk-clinical-research-delivery.

Staphorst M. Hearing the Voices of the Children: the views of children participating in clinical research. Erasmus University Rotterdam; 2017. Available from: https://repub.eur.nl/pub/100170/.

Nuffield Council on Bioethics. Children and clinical research: ethical issues. London; 2015. Available from: http://nuffieldbioethics.org/wp-content/uploads/Children-and-clinical-research-full-report.pdf.

Caldwell P, Murphy S, Butow P, Craig J. Clinical trials in children. Lancet. 2004;364(9436):803–11.

Carter B, Bray L, Dickinson A, Edwards M, Ford K. Child-centred nursing: promoting critical thinking. Sage; 2014. Available from: http://0-sk.sagepub.com.wam.city.ac.uk/books/child-centred-nursing-promoting-critical-thinking.

Edwards V, Wyatt K, Logan S, Britten N. Consulting parents about the design of a randomized controlled trial of osteopathy for children with cerebral palsy. Health Expect. 2011;14(4):429–38.

Gillies K, Entwistle V. Supporting positive experiences and sustained participation in clinical trials: looking beyond information provision. J Med Ethics. 2012;38(12):751–6.

Department of Health. High quality care for All: NHS next stage review final report: The Stationery Office; 2008. Available from: https://webarchive.nationalarchives.gov.uk/20130105061315/http:/www.dh.gov.uk/prod_consum_dh/groups/dh_digitalassets/@dh/@en/documents/digitalasset/dh_085828.pdf.

Smyth R, Weindling A. Research in children: ethical and scientific aspects. Lancet. 1999;354:SII21–4.

Tailor V, Banteka M, Khaw P, Dahlmann-Noor A. Delivering high-quality clinical trials and studies in childhood eye disease: challenges and solutions. 2014.

Nimbalkar S, Patel D, Phatak A. Are parents of preschool children inclined to give consent for participation in nutritional clinical trials? PLoS ONE. 2016;11(10):e0163502.

Lancaster G, Dodd S, Williamson P. Design and analysis of pilot studies: recommendations for good practice. J Eval Clin Pract. 2004;10(2):307–12.

National Institute for Health Research. Improving care by using patient feedback. 2019. Available from: https://discover.dc.nihr.ac.uk/content/themedreview-04237/improving-care-by-using-patient-feedback.

Planner C, Bower P, Donnelly A, Gillies K, Turner K, Young B. Trials need participants but not their feedback? A scoping review of published papers on the measurement of participant experience of taking part in clinical trials. Trials. 2019;20(1):381.

Bower P, Gillies K, Donnelly A, Young B, Sanders C, Turner K. Patient-centred trials: developing measures to improve the experience of people taking part in clinical trials. NIHR Funding and Awards; 2020. Available from: https://fundingawards.nihr.ac.uk/award/PB-PG-0416-20033.

Olivia L. Discussion about the history and development of PRES. Personal communication. 2021.

National Institute for Health Research. Research Participant Experience Survey Report 2018–19. 2019. Available from: https://www.nihr.ac.uk/documents/research-participant-experience-survey-report-2018-19/12109.

National Institute for Health Research. Optimising the participant in Research Experience Checklist. 2019. Available from: https://www.nihr.ac.uk/documents/optimising-the-participant-in-research-experience-checklist/21378?diaryentryid=60465.

Given BA, Keilman LJ, Collins C, Given CW. Strategies to minimize attrition in longitudinal studies. Nurs Res. 1990;39(3):184–7.

Centre for Reviews and Dissemination, University of York. Systematic reviews: CRD’s guidance for undertaking reviews in health care. York, England: CRD, University of York; 2008.

Moher D, Liberati A, Tetzlaff J, Altman D. Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. Ann Intern Med. 2009;151(4):264–9.

Cooke A, Smith D, Booth A. Beyond PICO: the SPIDER tool for qualitative evidence synthesis. Qual Health Res. 2012;22(10):1435–43.

Noyes J, Booth A, Cargo M, Flemming K, Harden A, Harris J, Garside R, Hannes K, et al. Chapter 21: qualitative evidence. Cochrane; 2022. Version 6.3, updated February 2022. Available from: https://training.cochrane.org/handbook/current/chapter-21.

Hawker S, Payne S, Kerr C, Hardey M, Powell J. Appraising the evidence: reviewing disparate data systematically. Qual Health Res. 2002;12(9):1284–99.

Carroll C, Booth A, Cooper K. A worked example of “best fit” framework synthesis: a systematic review of views concerning the taking of some potential chemopreventive agents. BMC Med Res Methodol. 2011;11(1):29.

Carroll C, Booth A, Leaviss J, Rick J. “Best fit” framework synthesis: refining the method. BMC Med Res Methodol. 2013;13(1):37.

Dias L, Schoenfeld E, Thomas J, Baldwin C, Mcleod J, Smith J, et al. Reasons for high retention in pediatric clinical trials: comparison of participant and staff responses in the Correction of Myopia Evaluation Trial. Clin Trials. 2005;2(5):443–52.

Buck D, Hogan V, Powell C, Sloper J, Speed C, Taylor R, et al. Surrendering control, or nothing to lose: parents’ preferences about participation in a randomised trial of childhood strabismus surgery. Clin Trials. 2015;12(4):384–93.

Clarke M, Hogan V, Buck D, Shen J, Powell C, Speed C, et al. An external pilot study to test the feasibility of a randomised controlled trial comparing eye muscle surgery against active monitoring for childhood intermittent exotropia [X (T)]. Health Technol Assess. 2015;19(39):1.

Marmor J, Oliveria S, Donahue R, Garrahie E, White M, Moore L, et al. Factors encouraging cohort maintenance in a longitudinal study. J Clin Epidemiol. 1991;44(6):531–5.

Gwiazda J, Hyman L, Hussein M, Everett D, Norton T, Kurtz D, et al. A randomized clinical trial of progressive addition lenses versus single vision lenses on the progression of myopia in children. Invest Ophthalmol Vis Sci. 2003;44(4):1492–500.

Buck D, McColl E, Powell C, Shen J, Sloper J, Steen N, et al. Surgery versus Active Monitoring in Intermittent Exotropia (SamExo): study protocol for a pilot randomised controlled trial. Trials. 2012;13(1):192.

Staphorst M, Hunfeld J, van de Vathorst S, Passchier J, van Goudoever J. Children’s self reported discomforts as participants in clinical research. Soc Sci Med. 2015;142:154–62.

Curtis-Tyler K, Arai L, Stephenson T, Roberts H. What makes for a ‘good’ or ‘bad’ paediatric diabetes service from the viewpoint of children, young people, carers and clinicians? A synthesis of qualitative findings. Arch Dis Child. 2015;100(9):826–33.

Carrara V, Darakomon M, Thin N, Paw N, Wah N, Wah H, et al. Evaluation and acceptability of a simplified test of visual function at birth in a limited-resource setting. PLoS ONE. 2016;11(6):e0157087.

Patel D, Cumberland P, Walters B, Russell-Eggitt I, Rahi J, Group OS. Study of Optimal Perimetric Testing in Children (OPTIC): feasibility, reliability and repeatability of perimetry in children. PLoS ONE. 2015;10(6):e0130895.

Martin L. Rarebit and frequency-doubling technology perimetry in children and young adults. Acta Ophthalmol Scand. 2005;83(6):670–7.

Carlton J. Developing the draft descriptive system for the child amblyopia treatment questionnaire (CAT-Qol): a mixed methods study. Health Qual Life Outcomes. 2013;11(1):1–9.

Steel D, Codina C, Arblaster G. Amblyopia treatment and quality of life: the child’s perspective on atropine versus patching. Strabismus. 2019;27(3):156–64.

O’Keeffe S, Weitkamp K, Isaacs D, Target M, Eatough V, Midgley N. Parents’ understanding and motivation to take part in a randomized controlled trial in the field of adolescent mental health: a qualitative study. Trials. 2020;21(1):1–13.

Chambers C, Giesbrecht K, Craig K, Bennett S, Huntsman E. A comparison of faces scales for the measurement of pediatric pain: children’s and parents’ ratings. Pain. 1999;83(1):25–35.

Tailor V, Glaze S, Unwin H, Bowman R, Thompson G, Dahlmann-Noor A. Saccadic vector optokinetic perimetry in children with neurodisability or isolated visual pathway lesions: observational cohort study. Br J Ophthalmol. 2016;100(10):1427–32.

UN. Convention on the Rights of the Child. New York: United Nations General Assembly; 1989. Available from: https://downloads.unicef.org.uk/wp-content/uploads/2010/05/UNCRC_united_nations_convention_on_the_rights_of_the_child.pdf?_ga=2.5908211.156742178.1553247995-1715386300.1553247995.

Sekhon M, Cartwright M, Francis J. Acceptability of health care interventions: a theoretical framework and proposed research agenda. Br J Health Psychol. 2017;23(3):519–31.

Acknowledgements

We would like to acknowledge Vijay K Tailor (Moorfields Eye Hospital NHS Foundation Trust, London, UK) for his contribution to the reliability of data extraction and quality assessment, in the developmental stages of the review.

Funding

This review was conducted as part of Jacqueline Miller’s PhD studies, funded by the National Institute for Health Research (NIHR) Moorfields Biomedical Research Centre (BRC): Award Reference NIHR-INF-0465.

Author information

Contributions

JM: conception, searches (title and abstract screening, full text review), quality assessment, data extraction, data synthesis, lead author of manuscript. KCT: conception, quality assessment, data extraction and synthesis, comments on manuscript draft. MM: searches (title and abstract screening), comments on manuscript draft. ADN: conception, searches (full text review), comments on manuscript draft. JC: conception, conflict resolution during searches, comments on manuscript draft. All authors read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

JM, KCT, MM and JC declare that they have no competing interests.

ADN is a medical advisor for Santen, Novartis, CooperVision and SightGlassVision.

Supplementary Information

Additional file 1. Search Strategy.

Additional file 2. Key journals hand-searched.

Additional file 3. Quality scoring using an adapted Hawker et al. (2002) assessment tool.

Additional file 4. Summary of papers which evaluate paediatric eye and vision research experiences.

Additional file 5. Terminology and measures identified to infer ‘experience’ outcomes.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Miller, J., Curtis-Tyler, K., Maden, M. et al. Paediatric eye and vision research participation experiences: a systematic review. Trials 24, 66 (2023). https://doi.org/10.1186/s13063-022-07021-1

DOI: https://doi.org/10.1186/s13063-022-07021-1