Abstract

Aligning sequencing reads onto a reference is an essential step of the majority of genomic analysis pipelines. Computational algorithms for read alignment have evolved in accordance with technological advances, leading to today’s diverse array of alignment methods. We provide a systematic survey of algorithmic foundations and methodologies across 107 alignment methods, for both short and long reads. We provide a rigorous experimental evaluation of 11 read aligners to demonstrate the effect of these underlying algorithms on speed and efficiency of read alignment. We discuss how general alignment algorithms have been tailored to the specific needs of various domains in biology.

Introduction

In April 2003, the high-throughput sequencing era started with the Human Genome Project, which led to the successful sequencing of a nearly complete human genome and establishment of a reference genome that is still in use [1]. The Human Genome Project cost approximately $3 billion over 13 years to sequence the genome of an individual human. Recent advances in high-throughput sequencing technologies have enabled cost-effective and time-efficient probing of the DNA sequences of living organisms through a process known as DNA sequencing [2]. Modern high-throughput sequencing techniques are capable of producing millions of nucleotide sequences of an individual’s DNA [3] and providing multifold coverage of whole genomes or particular genomic regions. The output of high-throughput sequencing consists of sets of relatively short genomic sequences, usually referred to as reads. Contemporary sequencing technologies are capable of generating tens of millions to billions of reads per sample, with read lengths ranging from a few hundred to a few million base pairs [4].

The trade-off for decreased cost and increased throughput offered by modern sequencing technologies is a larger margin of noise in sequencing data [5]. The magnitude of error rates in data produced by state-of-the-art sequencing platforms varies from ~10⁻³ for short reads to ~15 × 10⁻² for the relatively new long and ultra-long reads [6]. The increased error rate of today’s emerging long-read technologies may negatively impact biological interpretations. For example, errors in protein-coding regions can bias the accuracy of protein predictions [7]. Sequenced reads lack information about the order and origin (i.e., which part, homolog, and strand of the subject genome) of reads. The main challenge in genome analysis today is to reconstruct the complete genome of an individual. This process, read alignment (also known as read mapping), typically requires the reference genome which is used to determine the potential location of each read. Accuracy of alignment has a strong effect on many downstream analyses [8]. For example, most trans-eQTL signals were shown to be solely caused by alignment errors [9].

Read alignment can be performed in a brute force manner, but this is impractical for modern sequencing platforms capable of producing hundreds of millions of reads. Instead, today’s efficient bioinformatics algorithms enable fast and accurate read alignment and can be orders of magnitude faster than the naive brute force approach [10] (Supplementary Note 1). Read alignment enables observation of the differences between the read and the reference genome. These differences can be caused by either real genetic variants in the sequenced genome or errors generated by the sequencing platform. These sequencing errors and read lengths, which are typically short, make the read alignment problem computationally challenging. The continued increase in the throughput of modern sequencing technologies creates additional demand for efficient algorithms for read alignment. Over the past several decades, a plethora of tools were developed to align reads onto reference genomes across various domains of biology. Previous efforts that provide overviews of various algorithms and techniques used by read aligners are presented elsewhere [10,11,12], including studies that present benchmarks of existing tools [13, 14]. Since the time those efforts were published, many new alignment algorithms have been developed. Additionally, previous efforts lack a historical perspective on algorithm development.

Our review provides a historical perspective on how technological advancements in sequencing are shaping algorithm development across various domains of modern biology, and we systematically assess the underlying algorithms of a large number of aligners (n = 107). Algorithmic development and challenges associated with read alignment are to a large degree data- and technology-driven, and emerging highly accurate ultra-long-read sequencing techniques promise to expand the application of read alignment.

Where do reads come from—advantages and limitations of read alignment

One can study an individual genome using sequencing data in two ways: by mapping reads to a reference genome, if it exists, or by de novo assembling the reads. The complexity of the human genome, in combination with the short length of sequenced reads, poses substantial challenges to our ability to accurately assemble personal genomes [15]. Even recently introduced ultra-long reads [16] (up to 2 Mb) offer limited capacity to build a de novo assembly of an individual genome with no prior knowledge about the reference genome [16]. The presence of many repetitive regions in the human genome limits our ability to assemble a personal human genome as a single sequence. Emerging long-read sequencing technologies that are capable of producing ultra-long reads [16] promise to deliver more accurate assemblies [17]. However, the relatively high error rate of data output from recently developed long-read sequencing technologies often results in inaccuracies in the assembled genomes, especially when using low sequencing coverage [18, 19].

The read alignment problem is known to be solvable in polynomial time [20], while a polynomial-time solution for genome assembly is still unknown [20,21,22]. Genome assembly is typically slower and more computationally intensive than read alignment [17, 23, 24] due to the presence of repeats that are much longer than the typical read length. This makes assembly impractical in studies that involve large-scale clinical cohorts of thousands of individuals. At the same time, when the reference genome is unknown, long reads are a valuable resource for assembling genomes that are far more complex than the human genome, such as the hexaploid bread wheat genome [17, 23, 25].

The availability of a large number of alignment methods that are scalable to both read length and genome size has enabled read alignment to become an essential component of high-throughput sequencing analysis (Table 1) [26]. However, read alignment also has its own fundamental challenges. First, some challenges are caused by the incompleteness of the reference genomes that have multiple assembly gaps [16]. Reads originating from these gaps often remain unmapped or are incorrectly mapped to homologous regions. Second, the presence of repetitive regions of the genome confounds current read alignment techniques, which often map reads originating from one region to match several other repetitive regions (such reads are known as multi-mapped reads). In such cases, most read aligners simply report one location randomly selected among the possible mapping locations, in turn, significantly reducing the number of detected variants [27]. Third, read alignment techniques should tolerate differences between reads and the reference genome. These differences may correspond to a single nucleotide (including deletion, insertion, and substitution of a nucleotide) or to larger structural variants [28]. Fourth, read alignment algorithms need to align reads to both forward and reverse DNA strands of the same reference genome in order to tackle the strand bias problem, defined as the difference in genotypes identified by reads that map to forward and reverse DNA strands. Strand bias is likely caused by errors introduced during library preparation and not by mapping artifacts [27, 29].

Co-evolution of read alignment algorithms and sequencing technologies

Over the past few decades, we have observed an increase in the number of alignment tools developed to accommodate rapid changes in sequencing technology (Table 1). Published alignment tools use a variety of algorithms to improve the accuracy and speed of read alignment (Table 2). At the same time, the development of read alignment algorithms is impacted by rapid changes in sequencing technologies, such as read length, throughput, and error rates (Supplementary Table 1). For example, some of the first alignment algorithms (e.g., BLAT [38]) were designed to align expressed sequence tag (EST) sequences, which are 200 to 500 bp in length. Another early alignment algorithm, BLASTZ [39], was designed to align 1 Mb human contigs onto the mouse genome. After short reads became available, the majority of the algorithms have focused on the problem of aligning hundreds of millions of short reads to a reference genome. Recent sequencing technologies are capable of producing multi-megabase reads at the cost of high error rates (up to 20%)—a development that poses additional challenges for modern read alignment methods [17]. A recent improvement in circular consensus sequencing (CCS) allows a substantial reduction in sequencing error rates; for example, the error rate has dropped from 15% down to 0.0001% by sequencing the same molecule at least 30 times and further correcting errors by calculating consensus [136].

We have studied the underlying algorithms of 107 read alignment tools that were designed for the short- and long-read sequencing technologies and were published from 1988 to 2020 (Table 1). We defined read alignment as a three-step procedure (Supplementary Note 2). First, indexing with the aim of quickly locating genomic subsequences in the reference genome is performed. This step includes building a large index database from a reference genome and/or the set of reads (Fig. 1a, b). Second, global positioning is performed to determine the potential positions of each read in the reference genome. In this step, alignment algorithms use the prepared index to determine one or more possible regions of the reference genome that are likely to be similar to each read sequence (Fig. 1c, d). Lastly, pairwise alignment is performed between the read and each of the corresponding regions of the reference genome to determine the exact number, location, and type of differences between the read and corresponding region (Fig. 1e, f).

Fig. 1. Overview of a read alignment algorithm. a The seeds from the reference genome sequence are extracted. b Each extracted seed and all its occurrence locations in the reference genome are stored using the data structure of choice (suffix tree and hash table are presented as an example). Common prefixes of the seeds are stored once in the branches of the suffix tree, while the hash table stores each seed individually. c The seeds from each read sequence are extracted. d The occurrences of each extracted seed in the reference genome are determined by querying the index database. In this example, the three seeds from the first read appear adjacent at locations 5, 7, and 9 in the reference genome. Two of the same seeds appear also adjacent at another two locations (12 and 16). Other non-adjacent locations are filtered out (marked with X) as they may not span a good match with the first read. e The adjacent seeds are linked together to form a longer chain of seeds by examining the mismatches between the gaps. Pre-alignment filters can also be applied to quickly decide whether or not the computationally expensive DP calculation is needed. f Once the pre-alignment filter accepts the alignment between a read and a region in the reference genome, then DP-based (or non-DP-based) verification algorithms are used to generate the alignment file (in BAM or SAM formats), which contains alignment information such as the exact number of differences, location of each difference, and their type.

Hashing is the most popular technique for indexing the reference genome

The key goal of the indexing step is to facilitate quick and efficient querying over the whole reference genome sequence, producing a minimal memory footprint by storing the redundant subsequences of the reference genome only once [17, 20, 137]. Rapid advances in sequencing technologies have shaped the development of read alignment algorithms, and major changes in technology have rendered many tools obsolete. For example, some early methods [43, 44, 47, 48, 80] built the index database from the reads. Today’s longer read lengths and increased throughput of sequencing technologies make such an approach infeasible for analyzing modern sequencing data. Modern alignment algorithms typically build the index database from the reference genome and then use the subsequences of the reads (known as seeds or qgrams) to query the index database (Fig. 1a). In general, indexing the reference genome compared to the read set is a more practical and resource-frugal solution. Additionally, it allows reusing the constructed reference genome index across multiple samples.

We observe that the most popular indexing technique used by read alignment tools is hashing, which is used exclusively by 60.8% of our surveyed read aligner tools from various domains of biological research (Fig. 2). Hashing is also the most popular individual indexing method for aligners that can handle DNA-Seq data, accounting for 68.3% of the surveyed read aligner tools. Hash table indexing was first used in 1988 by FASTA [30, 138] and has since dominated the landscape of read alignment tools. Hashing was also the only dominant technique to be used until the BWT-FM index was introduced by Bowtie [55] (Fig. 3a). Its popularity can be explained by the simplicity and ease of implementation when compared to other indexing techniques. Other advantages and limitations of hashing are outlined in Table 2. The hash table is a data structure that stores the content of some short regions of the genome (e.g., seeds) and their corresponding locations in the reference genome (Fig. 1b). Such regions are also known as k-mers or qgrams [139]. After the genomic seeds are produced, the alignment algorithm extracts the seeds from each read and uses them as a key to query the hash table index. The hash table returns a location list storing all occurrence locations of the read seed in the reference genome.
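The hash-table lookup described above can be sketched in a few lines of Python. This is a minimal illustration and not the implementation of any surveyed tool: the function names `build_hash_index` and `query_seeds`, the toy reference string, and the seed length `k = 4` are all assumptions made for the example.

```python
from collections import defaultdict


def build_hash_index(reference, k):
    """Map every k-mer (seed) of the reference to the list of its start positions."""
    index = defaultdict(list)
    for pos in range(len(reference) - k + 1):
        index[reference[pos:pos + k]].append(pos)
    return index


def query_seeds(index, read, k):
    """For each k-mer of the read, return (read offset, seed, location list)."""
    hits = []
    for offset in range(len(read) - k + 1):
        seed = read[offset:offset + k]
        # .get() avoids inserting empty entries for seeds absent from the reference
        hits.append((offset, seed, index.get(seed, [])))
    return hits


# Toy data (hypothetical): a 12-bp "reference" and a 5-bp "read"
reference = "ACGTACGTTAGC"
index = build_hash_index(reference, 4)
hits = query_seeds(index, "ACGTT", 4)
for offset, seed, locations in hits:
    print(offset, seed, locations)
```

Each seed is stored individually as a key, so a repeated seed such as `ACGT` simply accumulates a longer location list. Real aligners typically encode seeds compactly (for example, 2 bits per base) rather than storing strings.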

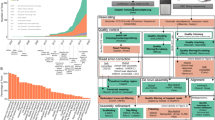

Fig. 2. Combination of algorithms utilized by read alignment tools. Sankey plot displaying the flow of surveyed tools using each indexing technique and pairwise alignment. For every indexing technique, the percentage of surveyed tools using the algorithm is displayed (BWT-FM 26.2%, BWT-FM and Hashing 2.8%, Hashing 60.8%, Other Suffix 10.3%). For every pairwise alignment technique, the percentage of surveyed tools using the algorithm is displayed (Smith-Waterman 28.3%, Hamming distance 19.2%, Needleman-Wunsch 16.2%, Other DP 14.1%, Non-DP Heuristic 13.1%, Multiple Methods 9.1%)

Fig. 3. The landscape of read alignment algorithms published from 1988 to 2020. a Histogram showing the cumulation of surveyed tools over time colored by the algorithm used for genome indexing. The first published aligner, FASTA, is labeled as well as the point at which Bowtie and BWA were introduced and changed the landscape of aligners. b The popularity of all surveyed aligners, judged by citations per year since the initial release. Tools are grouped by the algorithm used for genome indexing. The six overall most popular aligners are labeled. c Histogram showing the cumulation of surveyed tools over time colored by the algorithm used for pairwise alignment. The two aligners credited to have been the first to use the three most popular algorithms (FASTA: Smith-Waterman and Needleman-Wunsch, RMAP: Hamming distance) are labeled. d The popularity of each surveyed aligner, judged by citations per year since the initial release. Tools are grouped by the algorithm used for pairwise alignment. The six overall most popular aligners are labeled.

Alignment tools utilizing suffix-tree-based indexing are generally faster and more widely used

The second most popular approach to indexing is the suffix-tree-based techniques, used exclusively by 36.5% of the surveyed read aligner tools (Fig. 2) (Table 1). ERNE 2 [116], LAMSA [122], and lordFAST [128] are categorized separately since they combine hashing with a suffix-tree-based technique. A suffix tree is a tree-like data structure where separate branches represent different suffixes of the genome; the shared prefix between two suffixes of the genome is stored only once. Every leaf node of the suffix tree stores all occurrence locations of this unique suffix in the reference genome (Fig. 1b). Unlike a hash table, a suffix tree allows searching for both exact and inexact match seeds [140, 141] by walking through the tree branches from the root to a leaf node, detouring as needed, following the query sequence (Table 2). While some algorithms [142, 143] specifically rely on creating suffix trees, the most frequently chosen tools from this category use the Burrows-Wheeler Transform (BWT) and the FM index (hence called BWT-FM-based tools) to mimic the suffix-tree traversal process while generating a smaller memory footprint [99]. The performance of the read aligners in this category degrades as either the sequencing error rate increases or the genetic differences between the subject and the reference genome are more likely to occur [144, 145].
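To illustrate how a BWT-FM index mimics suffix-tree traversal, the following Python sketch builds a toy FM index and performs exact-match backward search. It rests on simplifying assumptions: the suffix array is built by naive sorting, the occurrence table is stored in full rather than sampled, and the names `build_fm_index` and `backward_search` are hypothetical. Production tools use far more compact and faster constructions.

```python
def build_fm_index(reference):
    """Build a toy FM index: suffix array, first-column offsets, occurrence table."""
    text = reference + "$"  # sentinel, lexicographically smaller than A/C/G/T
    # Naive suffix array construction (fine for toy inputs only)
    sa = sorted(range(len(text)), key=lambda i: text[i:])
    # BWT: the character preceding each sorted suffix (wraps to "$" for suffix 0)
    bwt = "".join(text[i - 1] for i in sa)
    alphabet = sorted(set(text))
    # first[c]: number of characters in the text strictly smaller than c
    first, total = {}, 0
    for c in alphabet:
        first[c] = total
        total += text.count(c)
    # occ[c][i]: number of occurrences of c in bwt[:i]
    occ = {c: [0] * (len(bwt) + 1) for c in alphabet}
    for i, ch in enumerate(bwt):
        for c in alphabet:
            occ[c][i + 1] = occ[c][i] + (1 if ch == c else 0)
    return sa, first, occ


def backward_search(pattern, sa, first, occ):
    """Return sorted reference positions where pattern occurs exactly."""
    lo, hi = 0, len(sa)  # half-open interval of suffix-array rows
    for ch in reversed(pattern):
        if ch not in first:
            return []
        lo = first[ch] + occ[ch][lo]
        hi = first[ch] + occ[ch][hi]
        if lo >= hi:
            return []
    return sorted(sa[lo:hi])


sa, first, occ = build_fm_index("ACGTACGTTAGC")
print(backward_search("ACGT", sa, first, occ))
print(backward_search("TAG", sa, first, occ))
```

The search consumes the pattern from its last character to its first, narrowing a range of suffix-array rows at each step. This is the operation that inexact-match extensions build on by trying alternative characters at chosen positions.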

The effect of read alignment algorithms on speed of alignment and computational resources

To measure the effect of read alignment algorithms on speed of alignment and computational resources, we have compared the running time and memory (RAM) required of eleven read alignment tools when applied to ten real WGS datasets (Fig. 4a, b). We used tools available via the Bioconda package manager [146]. We ran these tools using their default parameters. We randomly selected ten WGS samples from the 1000 Genomes Project. We excluded tools specifically designed for RNA-Seq or BS-Seq. Details on how the tools were installed and run are provided in Supplementary Note 3.

Fig. 4. The effect of read alignment algorithms on the speed of alignment and computational resources. Results of the benchmarking performed on 11 surveyed DNA read alignment tools that can be installed through Bioconda (RMAP, Bowtie, BWA, GSNAP, SMALT, LAST, SNAP, Bowtie2, Subread, HISAT2, and minimap2) additionally noted in Supplementary Table 2 and Supplementary Note 3. Each tool’s CPU time and RAM required were recorded for 10 different WGS samples from the 1000 Genomes Project. a, b Violin plots showing the relative performance (a CPU time and b RAM) of the benchmarked aligners. Aligners are ordered by year of release. c, d The relative performance (c CPU time and d RAM) of the benchmarked aligners grouped by the algorithm used for genome indexing and colored by individual aligners (BWT-FM CPU time vs. Suffix array CPU time: LRT, p value = 1.5 × 10⁻¹⁵, Hashing memory vs. BWT-FM memory: LRT, p value = 2.2 × 10⁻³, BWT-FM memory vs. Suffix Array memory: LRT, p value < 2 × 10⁻¹⁶). The legend of d is the same for c, e, and f. e The relative performance (CPU time) of the benchmarked aligners grouped by whether the tool was released before or after long-read technology was introduced (2013) and colored by individual aligners (LRT, p value = 3.7 × 10⁻¹¹). f The relative performance (CPU time) of the benchmarked aligners grouped by the algorithm used for pairwise alignment and colored by individual aligners (Needleman-Wunsch CPU time vs. Smith-Waterman CPU time: Wald, p value = 1.3 × 10⁻⁴, Needleman-Wunsch CPU time vs. Hamming Distance CPU time: Wald, p value = 9.3 × 10⁻⁷, Needleman-Wunsch CPU time vs. Non-DP Heuristic CPU time: Wald, p value = 1.8 × 10⁻¹⁰)

We found no significant difference in the runtime for BWT-FM tools and hashing-based tools when adjusting for year of publication, chain of seeds, and type of pairwise alignment (likelihood ratio test (LRT) p value = 0.5) (Fig. 4c, Supplementary Table 3, 4). SMALT [69] is an outlier to this observation, and it shows the highest execution time (Fig. 4c) as it uses a standard, non-accelerated pairwise alignment algorithm (the Smith-Waterman algorithm). BWT-FM-based tools did require, on average, 3.8× fewer computational resources when compared to hashing-based tools, adjusting for year of publication, chain of seeds, and type of pairwise alignment algorithm (LRT p value = 2.2 × 10⁻³) (Fig. 4d, Supplementary Table 5, 6). SNAP [81] shows the highest memory footprint (Fig. 4d) as its index uses much longer (> 20 bp) seeds than most other tools. The default suffix array implemented by LAST [78] requires, on average, 4.38× more running time and 3.58× more computational resources when compared to BWT-FM-based tools (LRT p value = 1.5 × 10⁻¹⁵ and < 2 × 10⁻¹⁶ for runtime and memory, respectively) (Fig. 4c, d, Supplementary Table 3, 4, 5, 6).

Despite the difference in performance driven by algorithms, we observed an overall improvement (9.2× reduction) in computation time of read alignment over time (s.e. = 0.09; LRT p value = 3.7 × 10⁻¹¹) (Fig. 4e, Supplementary Table 3, 4) but no significant improvement (only 1.57× reduction) of their memory requirements (s.e. = 0.24; LRT p value = 0.41) (Supplementary Figure 1, Supplementary Table 5, 6). Usually, the index is created separately for each genome. Some methods incorporate multiple genomes into a single index graph [58, 76, 115], while other methods use a de Bruijn graph for hashing [58, 116]. Although computing the genome index can take up to four hours, it usually needs to be computed only once and is often already precomputed for various species (Supplementary Figure 2). Updating the genome index can create a bottleneck in the analysis, especially for extremely large genome databases. Bloom-filter-based algorithms promise to provide an alternative way of indexing while preserving faster search times [125, 147].

We surveyed 28 BWT-FM-based tools to compare the popularity of the read alignment algorithms using the number of times the introductory publication has been cited in other papers. Of those, three aligners have accumulated more than 1000 citations per year since release, and 18% of the BWT-FM-based tools have been cited by at least 500 papers per year. In contrast, only two of the 63 hashing-based tools have more than 1000 citations per year, but those two aligners (BLAST [31] and Gapped BLAST [32]) are, by far, the most popular with 2726 and 3143 citations per year, respectively (Fig. 3b). Notably, tools cited more than 500 times per year were among the most effective both in terms of runtime and required computational resources (Supplementary Figure 3).

Majority of the tools utilize fixed-length seeding to find the global position of the read in the reference genome

The goal of the second step of read alignment is to find the global position of the read in the reference genome. This step is known as global positioning and uses the generated genome index to retrieve the locations (in the genome) of various seeds extracted from the sequencing reads (Fig. 1c). The read alignment algorithm uses the determined seed locations to reduce the search space from the entire reference genome to only the neighborhood region of each seed location (Supplementary Note 4).

The number of possible locations of a seed in the reference genome is affected by two key factors: the seed length and the seed type. The estimated number of such locations is extremely large for short seeds and can reach tens of thousands for the human genome. The high frequency of short seeds is due to the repetitive nature of most genomes, which creates a high probability of finding the same short seed frequently in a long string of only four DNA letters. A large number of possible locations for short seeds imposes a significant computational burden on read alignment algorithms [148, 149]. Only a few read alignment algorithms examine all the seed locations reported in the location list [102]. Most of the read alignment algorithms apply heuristic devices to avoid examining all the locations of the seed in the reference genome (Fig. 1d, Supplementary Note 4).
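One simple heuristic for avoiding an exhaustive examination of every seed location is to let seed hits "vote" for a candidate read start position and to keep only positions supported by several seeds. The Python sketch below illustrates this idea under stated assumptions: the function name `candidate_positions`, the threshold `min_seed_hits`, and the toy sequences are hypothetical, and the surveyed tools use a variety of other, often more elaborate, filtering and chaining strategies.

```python
from collections import Counter, defaultdict


def candidate_positions(reference, read, k, min_seed_hits=2):
    """Return reference start positions supported by at least min_seed_hits seeds."""
    # Hash index of the reference: seed -> list of start positions
    index = defaultdict(list)
    for pos in range(len(reference) - k + 1):
        index[reference[pos:pos + k]].append(pos)

    # A seed at read offset o that hits reference position p implies the read
    # would start at p - o (assuming no indels before the seed).
    votes = Counter()
    for offset in range(len(read) - k + 1):
        for pos in index.get(read[offset:offset + k], []):
            votes[pos - offset] += 1

    return sorted(start for start, n in votes.items() if n >= min_seed_hits)


reference = "ACGTACGTTAGC"
print(candidate_positions(reference, "ACGTT", 4))
print(candidate_positions(reference, "ACGTT", 4, min_seed_hits=1))
```

In this toy case the seed `ACGT` occurs twice in the reference, but only one of its locations is also supported by the adjacent seed `CGTT`, so a single candidate region is passed to the expensive verification step instead of two.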

Longer seed lengths can help reduce both the number of possible locations of a seed in the reference genome and the number of chosen seeds from each read. These benefits come at the cost of a possible reduction in alignment sensitivity, especially in cases where the mismatches between the read and the genome are located within the seed sequence. To enable increasing the seed length without reducing the alignment sensitivity, seeds can be generated as spaced seeds (Supplementary Note 4) [34,35,36,37, 139].
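A spaced seed can be sketched as a mask over a window of the sequence in which only some positions must match. In the Python example below, the mask string of `1` (must match) and `0` (ignored) characters, the function name `spaced_seeds`, and the sequences are assumptions chosen for illustration. The masks used by real tools are longer and carefully designed.

```python
def spaced_seeds(sequence, mask):
    """Extract spaced seeds: keep bases under '1' in the mask, skip bases under '0'."""
    keep = [i for i, bit in enumerate(mask) if bit == "1"]
    span = len(mask)  # the seed covers `span` bases but stores only len(keep) of them
    return [
        (pos, "".join(sequence[pos + i] for i in keep))
        for pos in range(len(sequence) - span + 1)
    ]


# The two sequences differ only at their third base (G vs. T).
original = spaced_seeds("ACGTA", "1101")
variant = spaced_seeds("ACTTA", "1101")
print(original)
print(variant)
```

The first seed of both sequences is identical because the differing base falls under the ignored position of the mask, so the seed still matches despite the mismatch. A contiguous seed of the same span would not.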

The majority of the surveyed alignment algorithms use seeds of fixed length at run time. Some algorithms generate seeds of various lengths [83, 108, 150] in order to reduce the hit frequencies while tolerating mismatches. Varying the seed length or using different types of seed during the same run is often referred to as hybrid seeding [108] and was used by 20 of the 107 surveyed alignment algorithms. The first tool to use variable-length seeds was GMAP [41]. Hybrid seeding with a hash-based index would require the creation of multiple hash tables of the same genome and would require extra computational resources. As a result, the vast majority of tools that use variable-length seeds use a suffix tree indexing technique (BWT-FM or other).

Majority of the tools utilize Hamming distance and Smith-Waterman to determine similarity between the read and its global positions in the reference genome

The goal of the last step of a read alignment algorithm is to determine regions of similarity between each read and the global positions of each read in the reference genome, which was determined in the previous step. These regions are potentially highly similar to the reads, but read alignment algorithms still need to determine the minimum number of differences between two genomic sequences, the nature of each difference, and the location of each difference in one of the two given sequences. Such information about the optimal location and the type of each edit is normally calculated using a verification algorithm (Fig. 1f) that first verifies the similarity between the query read and the corresponding region in the reference genome. Verification algorithms can be categorized into algorithms based on dynamic programming (DP) [151] and non-DP-based algorithms. The DP-based verification algorithms can be implemented as local alignment (e.g., Smith-Waterman [152]) or global alignment (e.g., Needleman-Wunsch [153]). DP-based verification algorithms can also be implemented as semi-global alignment, where the entirety of one sequence is aligned to one of the ends of the other sequence [108, 109, 117].
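The difference between local (Smith-Waterman-style) and global (Needleman-Wunsch-style) DP verification can be sketched with a single scoring routine. The sketch assumes a linear gap penalty and the illustrative scores match = +1, mismatch = −1, gap = −1. The function name `alignment_score` is hypothetical, and only the score (not the traceback) is computed.

```python
def alignment_score(read, region, local, match=1, mismatch=-1, gap=-1):
    """Score a read against a reference region with quadratic-time DP.

    local=True  -> Smith-Waterman-style: best-scoring pair of substrings.
    local=False -> Needleman-Wunsch-style: both sequences aligned end to end.
    """
    rows, cols = len(read) + 1, len(region) + 1
    score = [[0] * cols for _ in range(rows)]
    if not local:
        # Global alignment pays for leading gaps
        for i in range(1, rows):
            score[i][0] = i * gap
        for j in range(1, cols):
            score[0][j] = j * gap
    best = 0
    for i in range(1, rows):
        for j in range(1, cols):
            diag = score[i - 1][j - 1] + (match if read[i - 1] == region[j - 1] else mismatch)
            cell = max(diag, score[i - 1][j] + gap, score[i][j - 1] + gap)
            if local:
                cell = max(cell, 0)  # a local alignment may restart anywhere
                best = max(best, cell)
            score[i][j] = cell
    return best if local else score[-1][-1]


print(alignment_score("ACGT", "TTACGTTT", local=True))
print(alignment_score("ACGT", "TTACGTTT", local=False))
```

With these scores, the local alignment rewards the embedded exact match and ignores the flanking bases of the region, whereas the global alignment is penalized for the four unaligned flanking bases.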

The non-DP verification algorithms include Hamming distance [154] and the Rabin-Karp algorithm [155]. When one is interested in finding genetic substitutions, insertions, and deletions, DP-based algorithms are favored over non-DP algorithms. In general, the local alignment algorithm is preferred over global alignment when only a fraction of the read is expected to match with some regions of the reference genome due to, for example, large structural variations [63]. The Smith-Waterman [152] and Needleman-Wunsch [153] alignment algorithms were both first used by FASTA [30, 138] in 1988, which we categorize as “Multiple Methods” (Fig. 3c). Smith-Waterman remains the most popular algorithm and is used by 28.3% of our surveyed tools (Fig. 2). Needleman-Wunsch, in contrast, has only been used by 16.2% of our surveyed tools (Fig. 2). However, if we include the tools which allow for multiple methods, Smith-Waterman represents 38.3% and Needleman-Wunsch represents 26.2% of alignment algorithms used. This trend is due to the fact that 12 of the 13 tools classified as “Multiple Methods” use or allow both Smith-Waterman and Needleman-Wunsch. Non-DP verification using Hamming distance [154] has been the second most popular single technique since it was first used by RMAP [44] in 2008 (Fig. 3c). There is no significant correlation between the indexing technique used and the pairwise alignment algorithm chosen. Most major indexing techniques are used in conjunction with most pairwise alignments. However, BWT-FM-based aligners do comprise the largest percentage of tools that allow multiple pairwise alignment methods (Fig. 2).
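A small example shows why DP-based verification is favored when insertions and deletions matter. Hamming distance compares sequences position by position, so a single-base shift makes every position disagree, while DP-based edit distance recovers the two underlying edits. The function names and the toy sequences below are assumptions for illustration.

```python
def hamming_distance(a, b):
    """Number of mismatching positions; defined only for equal-length sequences."""
    if len(a) != len(b):
        raise ValueError("Hamming distance requires sequences of equal length")
    return sum(x != y for x, y in zip(a, b))


def edit_distance(a, b):
    """Minimum number of substitutions, insertions, and deletions (row-by-row DP)."""
    prev = list(range(len(b) + 1))
    for i, x in enumerate(a, 1):
        cur = [i]
        for j, y in enumerate(b, 1):
            cur.append(min(prev[j] + 1,              # deletion from a
                           cur[j - 1] + 1,           # insertion into a
                           prev[j - 1] + (x != y)))  # match or substitution
        prev = cur
    return prev[-1]


read = "ACGTACGT"
region = "CGTACGTA"  # the read shifted by one base
print(hamming_distance(read, region))
print(edit_distance(read, region))
```

Hamming distance is computed in linear time, which helps explain its appeal for verification when indels are not of interest, but here it reports eight mismatches for a pair that differs by one deletion and one insertion.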

As the number of differences between two sequences is not necessarily equivalent to the sum of the number of differences between the subsequences of these sequences, it is necessary to perform verification for the entire read sequence and the corresponding region in the reference sequence [156]. Existing DP-based algorithms can be inefficient as they require quadratic time and space complexity. Despite more than three decades of attempts to improve their algorithmic implementation, the fastest known edit distance computation algorithm is still nearly quadratic [157]. Some of the read alignment algorithms use DP only for seed chaining, which provides suboptimal alignment calculation [38, 40]. This approach is called sparse DP and is used in C4 [40], conLSH [135], and LAMSA [122]. An alternative way to accelerate the alignment algorithms is by reducing the maximum number of differences that can be detected by the verification algorithm, which reduces the search space of the DP algorithm and shortens the computation time [106, 158,159,160,161,162,163,164, 167, 168] (Supplementary Note 5).
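Limiting the maximum number of detectable differences shrinks the DP search space to a diagonal band: an alignment with at most `max_diff` edits can never stray more than `max_diff` cells from the main diagonal. The following Python sketch computes edit distance within such a band and rejects pairs that exceed the threshold. It is a generic illustration under stated assumptions (the name `banded_edit_distance` and unit edit costs) and not the method of any specific cited tool.

```python
def banded_edit_distance(a, b, max_diff):
    """Edit distance if it is <= max_diff, otherwise None.

    Only cells with |i - j| <= max_diff are computed, so the work is roughly
    O(max_diff * len(a)) instead of O(len(a) * len(b)).
    """
    if abs(len(a) - len(b)) > max_diff:
        return None  # the length difference alone already exceeds the threshold
    inf = max_diff + 1  # any value above the threshold means "too many edits"
    prev = {j: j for j in range(min(len(b), max_diff) + 1)}
    for i in range(1, len(a) + 1):
        cur = {}
        lo = max(0, i - max_diff)
        hi = min(len(b), i + max_diff)
        for j in range(lo, hi + 1):
            if j == 0:
                cur[j] = i
                continue
            best = prev.get(j - 1, inf) + (a[i - 1] != b[j - 1])  # match/substitution
            best = min(best,
                       prev.get(j, inf) + 1,      # deletion from a
                       cur.get(j - 1, inf) + 1)   # insertion into a
            cur[j] = min(best, inf)
        prev = cur
    distance = prev.get(len(b), inf)
    return distance if distance <= max_diff else None


print(banded_edit_distance("ACGTACGT", "CGTACGTA", 2))
print(banded_edit_distance("ACGTACGT", "CGTACGTA", 1))
```

Cells outside the band are treated as exceeding the threshold, which is safe because any path through them would already need more than `max_diff` edits.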

We found that tools which use the Needleman-Wunsch [153] algorithm are faster than tools which use other algorithms (faster by 3.57×, 4.14×, and 6.7× and Wald test p values 9.3 × 10⁻⁷, 1.8 × 10⁻¹⁰, and 1.3 × 10⁻⁴ for Hamming distance, non-DP heuristics, and SW algorithms, respectively) (Fig. 4f, Supplementary Table 3), adjusting for publication year, seed chaining, and indexing method. Despite the overall longer runtime of Hamming distance-based methods, the latest hashing-based tools (e.g., HISAT2 [133]) provide a comparable running time with the fastest Needleman-Wunsch-based tools. We also found significant differences in the amount of computational resources required by read alignment tools using different pairwise alignment algorithms after adjusting for publication year, type of seed, and indexing method (LRT; p value = 0.04) (Supplementary Figure 4, Supplementary Table 6). Notably, the algorithms with the smallest computational footprints use various types of pairwise alignment algorithms.

Influence of long-read technologies on the development of novel read alignment algorithms

Alignment of the long reads produced by modern long-read technologies [16, 136, 169] provides a unique possibility to discover previously undetectable structural variants [16, 170, 171]. Long reads also improve the construction of an accurate hybrid de novo assembly [16, 172], in cases where long and short reads are suffix-prefix overlapped, or in cases where reads are aligned using pairwise alignment algorithms, to construct an entire assembly graph. This is helpful when a reference genome is either unavailable [173, 174] or is complex and contains large repetitive genomic regions [175].

Existing long-read alignment algorithms still follow the three-step-based approach of short-read alignment. Some long-read alignment tools even divide every long read into short segments (e.g., 250 bp), align each short segment individually, and determine the mapping locations of each long read based on the adjacent mapping locations of these short segments [123, 127]. Some long-read alignment tools use hash-based indexing [110, 120, 176], while others use BWT-FM indexing [54, 98, 177]. The major challenge with the long-read alignment algorithms is dealing with large sequencing errors and a significantly large number of short seeds extracted from each long or ultra-long read [178]. Thus, the most recently developed long-read alignment algorithms require heuristically extracting fewer seeds per read length when compared to those extracted from short reads. Instead of creating a hash table for the full set of seeds, recent long-read alignment algorithms find the minimum representative set of seeds from a group of adjacent seeds within a genomic region. These representative seeds are called minimizers [179, 180] and can also be used to compress genomic data [181] or taxonomically profile metagenomic samples [182]. Long-read alignment algorithms [119, 124, 183] that use hashed minimizers as an indexing technique provide a faster alignment process compared to other algorithms that use conventional seeding or BWT-FM. They also provide a significantly faster (> 10×) indexing time (Supplementary Table 1). However, their accuracy degrades with the use of short reads as they process a fewer number of seeds per short read [124].
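The minimizer idea can be sketched as follows: slide a window over `w` consecutive k-mers and keep only the smallest k-mer in each window. This Python example assumes plain lexicographic ordering with ties broken by the leftmost position. Practical tools typically order k-mers by a hash value instead, and the name `minimizers` and the parameters `k = 3`, `w = 4` are chosen only for illustration.

```python
def minimizers(sequence, k, w):
    """Return the sorted set of (position, k-mer) minimizers of the sequence.

    For every window of w consecutive k-mers, the lexicographically smallest
    k-mer (leftmost on ties) is selected as the window's representative seed.
    """
    kmers = [sequence[i:i + k] for i in range(len(sequence) - k + 1)]
    selected = set()
    for start in range(len(kmers) - w + 1):
        window = range(start, start + w)
        pos = min(window, key=lambda i: (kmers[i], i))
        selected.add((pos, kmers[pos]))
    return sorted(selected)


sequence = "ACGTACGTTAGC"
selected = minimizers(sequence, k=3, w=4)
print(selected)
print(len(selected), "minimizers out of", len(sequence) - 3 + 1, "k-mers")
```

Adjacent windows usually share the same minimizer, so far fewer seeds need to be stored and queried than with exhaustive k-mer extraction. Two sequences that share a sufficiently long exact match are still guaranteed to share at least one minimizer.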

Box 1. Advantages and limitations of short- versus long-read alignment algorithms

• Error rate. The error rate of modern short-read sequencing technologies is smaller than that of modern long-read technologies.

• Genome coverage. Throughput (i.e., the number of reads) of modern short-read sequencing technologies is higher than that of modern long-read technologies.

• Global position. Determine a global position of the read by identifying the starting position or positions of the reads in the reference genome. This step is ambiguous with short reads, as the repetitive structure of the human genome causes such reads to align to multiple locations of the genome. In contrast, long reads are usually longer than the majority of repeat regions and are aligned to a single location in the genome.

• Local pairwise alignment. After determining the global position of each read, the algorithms map all bases of the read to the reference segments, located at these global positions, in order to account for indels. Due to the smaller error rate of short-read technologies, it is usually easier to perform local alignment on short reads than on long ones.

• Genomic variants. Single-nucleotide polymorphisms (SNPs) are easy to detect using short reads when compared to long reads due to the lower error rate and higher coverage of short-read sequencing technologies. Structural variants (SVs) are easy to detect with long reads, which span the entire SV region. Current long-read-based tools [184] are able to detect deletions and insertions with high precision. The sparse coverage of long reads may lower the sensitivity of detection.

Read alignment across various domains of biological research

We discuss the challenges and the features of these algorithms that are specific to the various domains of modern biological research. Often the domain-specific alignment problem can be solved by creating a novel tool from scratch or wrapping the existing algorithms into a domain-specific alignment tool (Supplementary Figures 5 and 6). Additionally, longer reads make the read alignment problem similar across areas of biological research. For example, tools recently designed to align long reads can handle both DNA and RNA-Seq reads [131].

RNA-Seq alignment

RNA sequencing is a technique used to investigate transcriptomics by generating millions of reads from a collection of human alternatively spliced isoform transcripts, referred to as a transcriptome [185]. RNA-Seq has been widely used for gene expression analysis as well as splicing analysis [14, 185, 186]. However, the alignment of RNA sequencing reads needs to overcome additional challenges when mapping the reads originating from the human transcriptome onto the reference genome. Those challenges arise due to differences between the human transcriptome and the human genome; these differences define a subset of alignment problems known as spliced alignment. Spliced alignment must take into account reads that span large gaps caused by spliced-out introns [185]. Reads spanning only a few bases across the junctions can easily be aligned to an adjacent intron or to a wrong location, making accurate alignment more difficult [14, 185].

Several spliced alignment tools have been developed to address this issue and align RNA-Seq reads in a splicing-aware manner (Table 1 and Fig. 1c). Hashing is the most popular technique among RNA-Seq aligners (Supplementary Figure 7). This is even more evident if we remove the RNA-Seq aligners that are wrappers of existing DNA-Seq alignment methods (Supplementary Figure 5). Over 60% of the RNA-Seq aligners which are wrappers of existing DNA-Seq alignment methods use Bowtie or Bowtie2 (Supplementary Figure 5). When considering only stand-alone RNA-Seq aligners, the number of aligners using hashing more than doubles the number of aligners using an FM index (Supplementary Figure 8).

The most popular tool based on the number of citations was TopHat2 [105] (Table 1). TopHat2 uses Bowtie2 to align reads that may span more than one exon by splitting the reads into smaller segments and stitching the segments together to form a whole read alignment. The stitched read alignment spans a splicing junction on the human genome. This method allows identification of the splicing junction without transcriptome alignment. A more recent tool, HISAT2, uses a hierarchical indexing algorithm that leverages the Burrows-Wheeler Transform and Ferragina-Manzini index to align parts of reads and extend the alignment [115]. Another popular RNA-Seq aligner, STAR, utilizes suffix arrays to identify maximal mappable prefixes, which are used as seeds or anchors, and stitches together the seeds that align within the same genomic window [104]. Although those tools can detect splicing junctions within their algorithm, it is possible to supply known gene annotation to increase the accuracy of a spliced alignment. The alignment accuracy, measured by correct read placement, can be increased by 5–10% by supplying known gene annotations [14, 185]. HISAT2 and STAR are able to align the reads accurately with or without a splicing junction [14]. Furthermore, the discovery and quantification of novel splicing junctions can be significantly improved using two passes in STAR, which generates a list of possible junctions in the first pass and aligns reads by leveraging those junctions in the second pass [187]. While spliced alignment can provide important splicing junction information, those tools require intensive computational resources [14].
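
The split-and-stitch strategy described above for TopHat2 can be sketched as follows. This is a simplified illustration, not TopHat2's implementation: exact string search stands in for the Bowtie2 alignment of each segment, the junction is assumed to fall exactly on a segment boundary, and the toy exons, intron, and function names are hypothetical.

```python
def map_segments(read, genome, seg_len):
    """Exact-match each fixed-length read segment; -1 marks an unmapped segment."""
    hits = []
    for off in range(0, len(read) - seg_len + 1, seg_len):
        hits.append((off, genome.find(read[off:off + seg_len])))
    return hits


def infer_junctions(hits, seg_len):
    """Report (intron_start, intron_end) where adjacent segments map far apart."""
    junctions = []
    for (_, p1), (_, p2) in zip(hits, hits[1:]):
        if p1 < 0 or p2 < 0:
            continue  # an unmapped segment gives no evidence for a junction
        if p2 > p1 + seg_len:
            # The genomic gap between two segments that are adjacent in the
            # read is interpreted as a spliced-out intron.
            junctions.append((p1 + seg_len, p2))
    return junctions


# Toy genome (hypothetical): two exons separated by a GT...AG intron.
exon1 = "GATTACAGGCTA"
intron = "GTAAGTTTTTTTTCAG"
exon2 = "CCGTTAGCATGC"
genome = "TTTT" + exon1 + intron + exon2 + "AAAA"

# A read from the mature transcript that spans the exon1-exon2 junction.
read = exon1[-8:] + exon2[:8]

hits = map_segments(read, genome, seg_len=8)
junctions = infer_junctions(hits, seg_len=8)
```

In this toy case the whole read cannot be found contiguously in the genome, but its two segments map on either side of the intron, which recovers the junction coordinates without any transcriptome reference.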

To align RNA-Seq reads onto the transcriptome reference instead of the genome reference, regular DNA aligners are typically used. Mapping to the transcriptome is usually performed to estimate expression levels of genes and alternatively spliced isoforms by assigning reads to genes and alternatively spliced isoforms [104, 188]. Since many alternatively spliced isoforms share exons, which are usually longer than the short reads, probabilistic models are used as it is impossible to uniquely assign reads to the isoform transcripts [189].

Alternatively, one can avoid computationally expensive alignment and instead perform pseudo-alignment with tools such as Kallisto [104] and Salmon [187]. Kallisto [190] uses a transcriptome de Bruijn graph as an index whose nodes are seeds. Kallisto determines the locations of each input read by matching seeds extracted from reads with the seeds of the index without performing sequence alignment. Kallisto also exploits the structure of the de Bruijn graph to avoid examining more than a few seeds located on the same path of the graph (between two junctions). This reduces the number of seed lookups in the index and hence reduces expensive memory accesses.
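
The core of pseudo-alignment, assigning a read to the set of transcripts compatible with all of its seeds, can be sketched as below. The sketch swaps the de Bruijn graph for a plain hash table from k-mer to transcript names, so it does not reproduce the path-skipping optimization; the isoform sequences and function names are hypothetical.

```python
def build_kmer_index(transcripts, k):
    """Map every k-mer to the set of transcripts that contain it."""
    index = {}
    for name, seq in transcripts.items():
        for i in range(len(seq) - k + 1):
            index.setdefault(seq[i:i + k], set()).add(name)
    return index


def pseudoalign(read, index, k):
    """Intersect the transcript sets of all indexed k-mers of the read."""
    compatible = None
    for i in range(len(read) - k + 1):
        hits = index.get(read[i:i + k])
        if hits is None:
            continue  # k-mer absent from the index (e.g., a sequencing error)
        compatible = set(hits) if compatible is None else compatible & hits
    return compatible or set()


# Toy transcriptome (hypothetical): two isoforms that share exons A and C.
exon_a = "ACGTTGCAAGGCTA"
exon_b = "TTCGGATCCATGAC"
exon_c = "GGTACCTTAGCGAT"
transcripts = {
    "isoform1": exon_a + exon_b + exon_c,
    "isoform2": exon_a + exon_c,  # exon B is skipped
}
K = 7
index = build_kmer_index(transcripts, K)

shared_read = exon_a[2:13]                  # lies entirely inside the shared exon A
junction_read = exon_a[-6:] + exon_c[:6]    # spans the A-C junction unique to isoform2

shared_set = pseudoalign(shared_read, index, K)
junction_set = pseudoalign(junction_read, index, K)
```

The read inside the shared exon stays ambiguous between both isoforms, which is why probabilistic models are needed downstream, whereas the junction-spanning read is compatible with a single isoform. No base-level alignment or genomic coordinate is produced in either case.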

In contrast, Salmon [190, 191] can optionally perform either pseudo-alignment or read alignment. Salmon approximates the locations of each input read by building a hashing index in conjunction with a suffix array index. The seeds extracted from each read are looked up in the hash table and then the suffix array provides all suffixes of the reference genome containing the matched seed. Similar to Kallisto, Salmon tries to reduce the number of seed lookups by finding the longest subsequence of the read that exactly matches the reference suffixes and excluding these regions from seed lookups.
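
The combination of a hash table and a suffix array described above can be sketched as follows. This is only loosely modeled on the description in the text and is not Salmon's actual implementation: the suffix array is built naively by sorting, and the function names, the toy reference, and the seed length K are assumptions made for illustration.

```python
def build_suffix_array(text):
    """Naive suffix array: start positions sorted by the suffix they begin."""
    return sorted(range(len(text)), key=lambda i: text[i:])


def build_kmer_intervals(text, sa, k):
    """Hash each k-mer to the half-open suffix-array interval of suffixes starting with it."""
    intervals = {}
    for rank, pos in enumerate(sa):
        kmer = text[pos:pos + k]
        if len(kmer) < k:
            continue  # suffix shorter than a seed
        lo, _ = intervals.get(kmer, (rank, rank))
        intervals[kmer] = (lo, rank + 1)
    return intervals


def longest_match(read, start, text, sa, intervals, k):
    """Longest exact match of read[start:] among suffixes sharing its first k-mer."""
    interval = intervals.get(read[start:start + k])
    if interval is None:
        return 0, []
    best_len, best_pos = 0, []
    for rank in range(*interval):
        pos, length = sa[rank], k
        while (start + length < len(read) and pos + length < len(text)
               and read[start + length] == text[pos + length]):
            length += 1
        if length > best_len:
            best_len, best_pos = length, [pos]
        elif length == best_len:
            best_pos.append(pos)
    return best_len, best_pos


def seed_read(read, text, sa, intervals, k):
    """Collect (read_pos, match_length, ref_positions) and count hash lookups."""
    hits, i, lookups = [], 0, 0
    while i + k <= len(read):
        lookups += 1
        length, positions = longest_match(read, i, text, sa, intervals, k)
        if length:
            hits.append((i, length, sorted(positions)))
            i += length  # skip the matched region: no seed lookups inside it
        else:
            i += 1
    return hits, lookups


# Toy reference (hypothetical) and an error-free read taken from it.
text = "ACGTTGCAAGGCTATTCGGATCCATGAC"
K = 5
sa = build_suffix_array(text)
intervals = build_kmer_intervals(text, sa, K)
read = text[5:21]
hits, lookups = seed_read(read, text, sa, intervals, K)
```

For this error-free 16-base read a single hash lookup suffices, because the first seed extends to a match covering the entire read; looking up every 5-mer separately would have required 12 lookups.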

In contrast to regular alignment algorithms, pseudo-alignment algorithms [190, 191] provide neither the precise alignment position of the read in the genome nor an alignment profile (e.g., CIGAR string). Instead, pseudo-alignment algorithms assign the reads to a corresponding gene and/or alternatively spliced isoform. Usually, such information can be sufficient to accurately estimate gene expression levels of the sample [192]. A higher sequencing depth has been shown to improve the accuracy of Salmon and to decrease the accuracy of Kallisto, as only Salmon exploits abundance information of each isoform to assist the seed matching [188].

Metagenomic alignment

Metagenomics is a technique used to investigate the genetic material in human or environmental microbial samples by generating millions of reads from the microbiome—a complex microbial community residing in the sample. Metagenomic data often contains an increased number of reads required to be aligned against more than hundreds of thousands of microbial genomes. For example, as of July 2018, the total number of nucleotides in NCBI’s collection of bacterial genomes measures over 204 times the number of nucleotides present in the Genome Reference Consortium Human Build 38 (Supplementary Note 6). The increased number of reads and the size of reference databases pose unique challenges to existing alignment algorithms when applied to metagenomics studies.

In targeted gene sequencing studies, such as those that sequence portions of the 16S ribosomal RNA of prokaryotes or internally transcribed spacers (ITS) of eukaryotes, a number of task-specific aligners are utilized to identify the origin of candidate reads or to perform homology searches. For example, Infernal [193] utilizes profile hidden Markov models to perform alignment based on RNA secondary structure information. Multiple sequence aligners are also utilized in metagenomic analysis pipelines such as QIIME [194], Mothur [195], and Megan [195, 196]. For example, NAST [195, 196, 197] and PyNAST [198] use 7-mer seeds and a BLAST alignment that is then further refined using a bidirectional search to handle indels. Similarly, MUSCLE [198, 199] uses an initial distance estimation based on k-mers and proceeds through a progressively constructed hierarchical guide tree while optimizing a log expectation for multiple sequence alignment [199].

For untargeted whole genome shotgun (WGS) metagenomic studies, the task of identifying the genomic or taxonomic origin of sequencing reads (referred to as “fragment recruitment” or “taxonomic read binning”) is even more difficult: individual reads can originate from multiple organisms due to shared homology or horizontal gene transfer, and reads may originate from previously unsequenced organisms. This has sparked the development of a variety of tools [200] which aim to identify the presence and relative abundance of taxa or organisms present in a metagenomic sample in a reference-free and/or alignment-free fashion (referred to as “taxonomic profiling”). Similar in spirit to RNA-Seq alignment, these tools avoid computationally expensive base-level alignment and perform pseudo-alignment or multiple types of k-mer matching to detect the presence of organisms in a metagenomic sample [182, 201, 202], as well as use minimizers to reduce computational time [182].
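
A k-mer matching classifier of the kind mentioned above can be sketched in a few lines. The sketch assumes exact k-mer matches and a simple majority vote, with k-mers shared between taxa voting for each of them; this is a simplification that does not correspond to any specific published tool, and the genomes, taxon names, and the min_hits threshold are hypothetical.

```python
from collections import Counter


def build_taxon_index(genomes, k):
    """Map every k-mer to the set of taxa whose genome contains it."""
    index = {}
    for taxon, seq in genomes.items():
        for i in range(len(seq) - k + 1):
            index.setdefault(seq[i:i + k], set()).add(taxon)
    return index


def classify(read, index, k, min_hits=2):
    """Assign the read to the taxon with the most k-mer votes, or None."""
    votes = Counter()
    for i in range(len(read) - k + 1):
        for taxon in index.get(read[i:i + k], ()):
            votes[taxon] += 1
    if not votes:
        return None, votes  # no k-mer is known: possibly an unsequenced organism
    taxon, count = votes.most_common(1)[0]
    return (taxon if count >= min_hits else None), votes


# Toy reference database (hypothetical sequences).
genomes = {
    "taxon_A": "ACGTTGCAAGGCTATTCGGATCCATGAC",
    "taxon_B": "GGTACCTTAGCGATCAGTCCGATTGACA",
}
K = 6
index = build_taxon_index(genomes, K)

read = genomes["taxon_B"][4:20]
assigned, votes = classify(read, index, K)
unknown, _ = classify("TTTTTTTTTTTT", index, K)
```

No base-level alignment is computed: the read is binned solely from the taxa that share its k-mers, and a read without any known k-mer is left unassigned rather than forced onto the closest reference.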

Other approaches handle growing reference database sizes by aligning reads onto a reduced reference database, sometimes composed of marker microbial genes that are present in specific taxa. Reads mapping to those genes can be used to determine the presence of specific taxa in a sample [203]. Such tools typically use existing DNA alignment algorithms (e.g., MetaPhlAn [203] uses the Bowtie2 aligner).

Even with the development of these new metagenomic tools, existing read alignment tools (e.g., MOSAIK, SOAP, and BWA) are still used for fragment recruitment purposes [204]. However, the use of existing read alignment tools for metagenomics carries a significant computational burden and is identified as the main bottleneck in the analysis of such data. This major limitation suggests the need for the development of alignment tools capable of handling the increased number of reads and reference genomes seen in such studies [205].

Metagenomics studies are also capable of functional annotation of microbiome samples by aligning the reads to genes, gene families, protein families, or metabolic pathways. Protein alignment is beyond the scope of this manuscript, but many of the algorithmic approaches previously discussed are utilized for functional annotation [204, 206]. For example, RAPSearch2 [204, 206] uses a collision-free hash table based on amino acid 6-mers. The protein aligner DIAMOND [207] utilizes a spaced-seed-and-extend approach based on a reduced alphabet and unique indexing of both reference and query sequences. Indexing of both the reference and the query reads provides multiple orders of magnitude in speed improvements over older tools (such as BLASTX) at the cost of increased memory usage. More recently, MMseqs2 [205] used consecutive, similar k-mer matches to further improve the speed of protein alignment.
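
The combination of a reduced amino acid alphabet, spaced seeds, and indexing of both reference and query can be illustrated with the sketch below. The grouping of amino acids, the seed mask, and the protein sequences are illustrative assumptions and should not be read as DIAMOND's actual parameters; translation of DNA reads into protein space and the extension step are omitted.

```python
# Illustrative grouping of the 20 amino acids into 11 classes (an assumption).
GROUPS = ["KREDQN", "C", "G", "H", "ILV", "M", "F", "Y", "W", "P", "STA"]
REDUCE = {aa: group[0] for group in GROUPS for aa in group}


def reduce_alphabet(protein):
    """Replace each residue by the representative of its group."""
    return "".join(REDUCE.get(aa, "X") for aa in protein)


def spaced_seeds(seq, mask):
    """Yield (position, seed), keeping only residues where the mask has '1'."""
    keep = [i for i, bit in enumerate(mask) if bit == "1"]
    for pos in range(len(seq) - len(mask) + 1):
        yield pos, "".join(seq[pos + i] for i in keep)


def index_seeds(seqs, mask):
    """Index the spaced seeds of all sequences after alphabet reduction."""
    index = {}
    for name, seq in seqs.items():
        for pos, seed in spaced_seeds(reduce_alphabet(seq), mask):
            index.setdefault(seed, []).append((name, pos))
    return index


def seed_matches(ref_index, query_index):
    """Join the two indexes on shared seeds instead of scanning per query."""
    matches = []
    for seed in ref_index.keys() & query_index.keys():
        for ref_hit in ref_index[seed]:
            for query_hit in query_index[seed]:
                matches.append((query_hit, ref_hit))
    return sorted(matches)


MASK = "1101011"  # hypothetical seed shape: 5 sampled positions over a span of 7
references = {"prot1": "MKVLSAGHWPCY"}
# Query with conservative changes (K>R, V>I, S>T) and one radical change (L>W).
queries = {"read1": "MRIWTAGHW"}

matches = seed_matches(index_seeds(references, MASK), index_seeds(queries, MASK))
```

The conservative substitutions vanish after alphabet reduction, and the radical substitution is tolerated by the one seed whose unsampled position covers it, so the query still produces a seed hit that an extension step could start from.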

Viral quasispecies alignment

RNA viruses such as human immunodeficiency virus (HIV) are highly mutable, with mutation rates as high as 10⁻⁴ per base per cell [208], allowing such viruses to form highly heterogeneous populations of closely related genomic variants commonly referred to as quasispecies [209]. Rare genomic variants, which are a few mutations away from the major strain, are often responsible for immune escape, drug resistance, viral transmission, and increase of virulence and infectivity of the viruses [210, 211]. Massively parallel sequencing techniques allow for sampling of intra-host viral populations at high depth and provide the ability to profile the full spectra of viral quasispecies, including rare variants.

Similar to other domains, accurate read alignment is essential for assembling viral genomic variants, including the rare ones. Aligning reads that originated from heterogeneous populations of closely related genomic variants to the reference viral genome gives rise to unique challenges for existing read alignment algorithms. For example, read alignment methods should be extremely sensitive to small genomic variations while being robust to artificial variations introduced by sequencing technologies. At the same time, the genetic difference between viral quasispecies of different hosts is usually substantial (unless they originated from the same viral outbreak or transmission cluster), which makes the application of predefined libraries of reference sequences for viral read alignment problematic or even impossible.

Currently, viral haplotyping tools [212, 213] and variant calling tools [214, 215] frequently rely on existing independent alignment tools. While viral samples contain several distinct haplotypes, read alignment tools such as BWA [145] and Bowtie [216] can only map reads to a single reference sequence. Since certain haplotypes may be further from or closer to the reference sequence, the reads emitted by such haplotypes may have different mapping quality. Some tools re-align reads to the consensus sequence instead of keeping the original alignment to the reference. Nevertheless, even alignment to the perfect reference or consensus sequence can reject perfectly valid short reads because of multiple mismatches. Rejection of such reads may cause loss of rare haplotypes and mutations. Systematic sequencing errors (such as homopolymer errors) frequently cause alignment errors. Although the sequencing error rate, both systematic and random, is comparatively low, such errors can be more frequent than the rarest variants. The alignment errors caused by sequencing errors may drastically reduce the sensitivity and specificity of haplotyping and variant calling methods (Supplementary Figure 9).

Aligning bisulfite-converted sequencing reads

Bisulfite-converted sequencing is a technique used to sequence methylated fragments [217, 218]. Bisulfite treatment converts unmethylated cytosines (C) to uracil, which is read as thymine (T) during sequencing, so most of the C in the reads appear as T. Since every sequenced T could either be a genuine genomic T or a converted C, special techniques are used to map those reads [219]. Some tools substitute all C in reads with wildcard bases, which can be aligned to C or T in the reference genome [37, 52], while other tools substitute all C by T in all reads and reference and work with a three-letter alphabet aligning to a C-to-T-converted genome [77, 96]. In contrast to RNA-Seq aligners, the FM index was the most popular technique among BS-Seq aligners (Supplementary Figure 10). One-third of the surveyed BS-Seq aligners were wrappers of existing DNA-Seq alignment methods (Supplementary Figure 6), with all three of those wrapping Bowtie or Bowtie2 (Supplementary Figure 6). As a result, when considering only stand-alone BS-Seq aligners, the numbers of aligners using each indexing algorithm become extremely similar (Supplementary Figure 11).
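
The three-letter alphabet strategy described above can be sketched as follows. The sketch handles only reads from the forward strand and uses exact string search in place of a real aligner; tools of this kind also need a G-to-A converted reference for the opposite strand. The genome, the read, and the function names are hypothetical.

```python
def c_to_t(seq):
    """Collapse the alphabet to three letters by converting every C to T."""
    return seq.replace("C", "T")


def map_bisulfite_read(read, genome):
    """Map in three-letter space, then call methylation from the original bases."""
    pos = c_to_t(genome).find(c_to_t(read))
    if pos < 0:
        return None, []
    calls = []
    for i, base in enumerate(read):
        if genome[pos + i] == "C":
            # C kept in the read: protected from conversion, i.e., methylated.
            # T in the read at a genomic C: converted, i.e., unmethylated.
            calls.append((pos + i, base == "C"))
    return pos, calls


# Toy example (hypothetical): the fragment genome[3:13] = "CGGACTCGAT" was
# sequenced after bisulfite treatment; the C at genomic position 7 was
# unmethylated and is therefore read as T.
genome = "TTACGGACTCGATAGCCTA"
read = "CGGATTCGAT"

direct_hit = genome.find(read)            # fails: the converted base is a mismatch
position, calls = map_bisulfite_read(read, genome)
```

Mapping in the converted space places the read at position 3 even though it does not match the original genome exactly, and comparing the unconverted read with the original genome afterwards yields one methylation call per genomic cytosine.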

Other domains

Other domains requiring specialized alignment include B and T cell receptor repertoire analysis. The repertoire data is generated using targeted repertoire sequencing protocols, known as BCR- or TCR-Seq. For example, tools designed to align reads to the V(D)J genes use combinations of fast alignment algorithms and more sensitive modified Smith-Waterman and Needleman-Wunsch algorithms [182, 220, 221].

Discrepancies between the reads and the reference may reveal the historical errors in the reference assembly

Genome sequencing datasets, especially those generated with long reads, provide a unique perspective to reveal errors in the reference assemblies (e.g., human reference genome) based on the discrepancies between the reads and the reference sequence. References and reads (e.g., resequencing data) are often produced using different technologies, and there are usually disagreements between references and reads that produce mapping errors. Similarly, some of these errors also come from the errors in the reads used for assembly, collapsed/merged duplications/repeats, and heterozygosity. For example, a study for structural variation discovery led to the identification of incorrectly inverted segments in the reference genome [222]. Similarly, Dennis et al. [223] characterized a duplicated gene that was not represented accurately because it collapsed in the reference genome. Therefore, using the most recent version of a reference genome is always the best practice, as demonstrated by an analysis of the latest version of the human genome [223, 224].

Structural errors in the reference genomes can be found and corrected by using various orthogonal technologies such as mate-pair and paired-end sequencing [225, 226], optical mapping [227], and linked-read sequencing [228]. Smaller-scale errors (i.e., substitutions and indels) can also be corrected using assembly polishing tools such as Pilon, which employs short-read sequencing data [229]. However, long reads are more powerful in detecting and correcting errors due to the fact that they can span the most common repeat elements. Long-read-based assembly polishers include Quiver [230] that uses Pacific Biosciences data, Nanopolish [231] that uses Nanopore sequencing, and Apollo [232] that can use read sets from any sequencing technology to polish large genomes. Additionally, more modern long-read genome assemblers, such as Canu [233], include built-in assembly polishing tools.

Discussion

Rapid advances in sequencing technologies shaped the landscape of modern read alignment algorithms leading to today’s diverse array of alignment methods. Those technological changes rendered some read alignment algorithms irrelevant—yet provide context for the development of new tools better suited for modern next-generation sequencing data. The development of alignment algorithms is shaped not only by the characteristics of sequencing technologies but also by the specific characteristics of the application domain. Often different biological questions can be answered using similar bioinformatics algorithms. For example, BLAT [38, 234], a tool that was originally designed to map EST and Sanger reads, is now used to map the assembled contigs to the reference genome [234]. Specific features of various domains of biological research, including whole transcriptome, adaptive immune repertoire, and human microbiome studies, confront the developer with a choice of developing a novel algorithm from scratch or adjusting existing algorithms.

In general, the read alignment problem is extremely challenging due to the large size of analyzed datasets and numerous technological limitations of modern sequencing platforms. A modern read aligner should not only be able to maintain a good balance between speed and memory usage but also be able to preserve small and large genetic variations. It should be capable of tackling numerous technological limitations and changes induced by the rapid evolution of sequencing technologies, such as the constant growth of read length and changes in error rates. In general, determining an accurate global position of the read in the reference genome provides no guarantee that accurate local pairwise alignment can be found. This is especially challenging for the error-prone long reads, where determining the accurate global position of the read in the reference genome is usually easy, but local pairwise alignment represents a substantial challenge due to a high error rate.

This review not only provides an understanding of the basic concepts of read alignment, its limitations, and how they are mitigated but also helps inform future directions in read alignment development. We believe the future is bright for read alignment algorithms, and we hope that the many examples of read alignment algorithms presented in this work inspire researchers and developers to enhance the field of computational genomics with accurate and scalable tools.

Availability of data and materials

All data and code required to produce the figures contained within this text are freely available on GitHub: https://github.com/Mangul-Lab-USC/review.technology.dictates.algorithms.

References

Weissenbach J. Human Genome Project: Past, Present, Future. In: The Human Genome; 2002. p. 1–9.

Shendure J, Ji H. Next-generation DNA sequencing. Nat Biotechnol. 2008;26:1135–45.

Metzker ML. Sequencing technologies — the next generation. Nat Rev Genet. 2009;11:31–46.

Payne A, Holmes N, Rakyan V, Loose M. BulkVis: a graphical viewer for Oxford nanopore bulk FAST5 files. Bioinformatics. 2019;35:2193–8.

Goodwin S, McPherson JD, McCombie WR. Coming of age: ten years of next-generation sequencing technologies. Nat Rev Genet. 2016;17:333–51.

Fox EJ, Reid-Bayliss KS, Emond MJ, Loeb LA. Accuracy of Next Generation Sequencing Platforms. Next Generation, Sequencing & Applications. 2014;1:106-14.

Watson M, Warr A. Errors in long-read assemblies can critically affect protein prediction. Nat Biotechnol. 2019;37:124–6.

Srivastava A, Malik L, Sarkar H, Zakeri M, Almodaresi F, Soneson C, Love MI, Kingsford C, Patro R. Alignment and mapping methodology influence transcript abundance estimation. Genome biology. 2020;21(1):1-29.

Saha A, Battle A. False positives in trans-eQTL and co-expression analyses arising from RNA-sequencing alignment errors. F1000Res. 2018;7:1860.

Schbath S, Martin V. Mapping Reads on a Genomic Sequence: An Algorithmic Overview and a Practical Comparative Analysis. J Comput Biol. 2012;19(6):796–813. https://doi.org/10.1089/cmb.2012.0022.

Fonseca NA, Rung J, Brazma A, Marioni JC. Tools for mapping high-throughput sequencing data. Bioinformatics. 2012;28:3169–77.

Li H, Homer N. A survey of sequence alignment algorithms for next-generation sequencing. Brief Bioinform. 2010;11:473–83.

Hatem A, Bozdağ D, Toland AE, Çatalyürek ÜV. Benchmarking short sequence mapping tools. BMC Bioinform. 2013;14:184.

Baruzzo G, et al. Simulation-based comprehensive benchmarking of RNA-seq aligners. Nat Methods. 2017;14:135–9.

Nagarajan N, Pop M. Sequence assembly demystified. Nat Rev Genet. 2013;14:157–67.

Jain M, Koren S, Miga KH, Quick J, Rand AC, Sasani TA, Tyson JR, Beggs AD, Dilthey AT, Fiddes IT, Malla S. Nanopore sequencing and assembly of a human genome with ultra-long reads. Nature biotechnology. 2018;36(4):338-45.

Sedlazeck FJ, Lee H, Darby CA, Schatz MC. Piercing the dark matter: bioinformatics of long-range sequencing and mapping. Nature Reviews Genetics. 2018;19(6):329-46.

Wenger AM, Peluso P, Rowell WJ, Chang PC, Hall RJ, Concepcion GT, Ebler J, Fungtammasan A, Kolesnikov A, Olson ND, Töpfer A. Accurate circular consensus long-read sequencing improves variant detection and assembly of a human genome. Nature biotechnology. 2019;37(10):1155-62.

Wee Y, Bhyan SB, Liu Y, Lu J, Li X, Zhao M. The bioinformatics tools for the genome assembly and analysis based on third-generation sequencing. Briefings in functional genomics. 2019;18(1):1-12.

Canzar S, Salzberg SL. Short Read Mapping: An Algorithmic Tour. Proc IEEE Inst Electr Electron Eng. 2017;105:436–58.

Steinberg KM, Schneider VA, Alkan C, Montague MJ, Warren WC, Church DM, Wilson RK. Building and improving reference genome assemblies. Proceedings of the IEEE. 2017;105(3):422-35.

Baichoo S, Ouzounis CA. Computational complexity of algorithms for sequence comparison, short-read assembly and genome alignment. Biosystems. 2017;156-157:72–85.

Ekblom R, Wolf JBW. A field guide to whole-genome sequencing, assembly and annotation. Evol Appl. 2014;7:1026–42.

Bradnam KR, et al. Assemblathon 2: evaluating de novo methods of genome assembly in three vertebrate species. Gigascience. 2013;2:10.

Zimin AV, et al. The first near-complete assembly of the hexaploid bread wheat genome, Triticum aestivum. Gigascience. 2017;6:1–7.

Flicek P, Birney E. Sense from sequence reads: methods for alignment and assembly. Nat Methods. 2009;6:S6–S12.

Firtina C, Alkan C. On genomic repeats and reproducibility. Bioinformatics. 2016;32:2243–7.

Weiss LA, et al. Association between microdeletion and microduplication at 16p11.2 and autism. N Engl J Med. 2008;358:667–75.

Guo Y, et al. The effect of strand bias in Illumina short-read sequencing data. BMC Genomics. 2012;13:666.

Pearson WR, Lipman DJ. Improved tools for biological sequence comparison. Proc Natl Acad Sci U S A. 1988;85:2444–8.

Altschul SF, Gish W, Miller W, Myers EW, Lipman DJ. Basic local alignment search tool. J Mol Biol. 1990;215:403–10.

Altschul SF, et al. Gapped BLAST and PSI-BLAST: a new generation of protein database search programs. Nucleic Acids Res. 1997;25:3389–402.

Ning Z, Cox AJ, Mullikin JC. SSAHA: a fast search method for large DNA databases. Genome Res. 2001;11(10):1725–9. https://doi.org/10.1101/gr.194201.

Egidi L, Manzini G. Better spaced seeds using Quadratic Residues. J Comput Syst Sci. 2013;79:1144–55.

Rizk G, Lavenier D. GASSST: global alignment short sequence search tool. Bioinformatics. 2010;26:2534–40.

Ma B, Tromp J, Li M. PatternHunter: faster and more sensitive homology search. Bioinformatics. 2002;18:440–5.

Wu TD, Nacu S. Fast and SNP-tolerant detection of complex variants and splicing in short reads. Bioinformatics. 2010;26:873–81.

Kent WJ. BLAT--the BLAST-like alignment tool. Genome Res. 2002;12(4):656–64. https://doi.org/10.1101/gr.229202.

Schwartz S, et al. Human-mouse alignments with BLASTZ. Genome Res. 2003;13:103–7.

Slater GSC, Birney E. Automated generation of heuristics for biological sequence comparison. BMC Bioinform. 2005;6:31.

Wu TD, Watanabe CK. GMAP: a genomic mapping and alignment program for mRNA and EST sequences. Bioinformatics. 2005;21:1859–75.

Lam TW, Sung WK, Tam SL, Wong CK, Yiu SM. Compressed indexing and local alignment of DNA. Bioinformatics. 2008;24:791–7.

Li H, Ruan J, Durbin R. Mapping short DNA sequencing reads and calling variants using mapping quality scores. Genome Res. 2008;18(11):1851–8. https://doi.org/10.1101/gr.078212.108.

Smith AD, Xuan Z, Zhang MQ. Using quality scores and longer reads improves accuracy of Solexa read mapping. BMC Bioinform. 2008;9:128.

Li R, Li Y, Kristiansen K, Wang J. SOAP: short oligonucleotide alignment program. Bioinformatics. 2008;24:713–4.

Ondov BD, Varadarajan A, Passalacqua KD, Bergman NH. Efficient mapping of Applied Biosystems SOLiD sequence data to a reference genome for functional genomic applications. Bioinformatics. 2008;24:2776–7.

Jiang H, Wong WH. SeqMap: mapping massive amount of oligonucleotides to the genome. Bioinformatics. 2008;24:2395–6.

Lin H, Zhang Z, Zhang MQ, Ma B, Li M. ZOOM! Zillions of oligos mapped. Bioinformatics. 2008;24(21):2431–7. https://doi.org/10.1093/bioinformatics/btn416.

De Bona F, Ossowski S, Schneeberger K, Rätsch G. Optimal spliced alignments of short sequence reads. Bioinformatics. 2008;24:i174–80.

Jean G, Kahles A, Sreedharan VT, De Bona F, Rätsch G. RNA-Seq read alignments with PALMapper. Curr Protoc Bioinform. 2010;Chapter 11:Unit 11.6.

Harris EY, Ponts N, Le Roch KG, Lonardi S. BRAT-BW: efficient and accurate mapping of bisulfite-treated reads. Bioinformatics. 2012;28:1795–6.

Xi Y, Li W. BSMAP: whole genome bisulfite sequence MAPping program. BMC Bioinform. 2009;10:232.

Homer N, Merriman B, Nelson SF. BFAST: an alignment tool for large scale genome resequencing. PLoS One. 2009;4:e7767.

Li H, Durbin R. Fast and accurate long-read alignment with Burrows–Wheeler transform. Bioinformatics. 2010;26:589–95.

Langmead B, Trapnell C, Pop M, Salzberg SL. Ultrafast and memory-efficient alignment of short DNA sequences to the human genome. Genome Biol. 2009;10:R25.

Schatz MC. CloudBurst: highly sensitive read mapping with MapReduce. Bioinformatics. 2009;25:1363–9.

Clement NL, et al. The GNUMAP algorithm: unbiased probabilistic mapping of oligonucleotides from next-generation sequencing. Bioinformatics. 2010;26:38–45.

Schneeberger K, et al. Simultaneous alignment of short reads against multiple genomes. Genome Biol. 2009;10:R98.

Eaves HL, Gao Y. MOM: maximum oligonucleotide mapping. Bioinformatics. 2009;25:969–70.

Campagna D, et al. PASS: a program to align short sequences. Bioinformatics. 2009;25:967–8.

Chen Y, Souaiaia T, Chen T. PerM: efficient mapping of short sequencing reads with periodic full sensitive spaced seeds. Bioinformatics. 2009;25:2514–21.

Weese D, Emde A-K, Rausch T, Döring A, Reinert K. RazerS--fast read mapping with sensitivity control. Genome Res. 2009;19:1646–54.

Rumble SM, et al. SHRiMP: accurate mapping of short color-space reads. PLoS Comput Biol. 2009;5:e1000386.

Li R, et al. SOAP2: an improved ultrafast tool for short read alignment. Bioinformatics. 2009;25:1966–7.

Malhis N, Butterfield YSN, Ester M, Jones SJM. Slider—maximum use of probability information for alignment of short sequence reads and SNP detection. Bioinformatics. 2009;25:6–13.

Hoffmann S, et al. Fast mapping of short sequences with mismatches, insertions and deletions using index structures. PLoS Comput Biol. 2009;5:e1000502.

Trapnell C, Pachter L, Salzberg SL. TopHat: discovering splice junctions with RNA-Seq. Bioinformatics. 2009;25:1105–11.

Chen P-Y, Cokus SJ, Pellegrini M. BS Seeker: precise mapping for bisulfite sequencing. BMC Bioinform. 2010;11:203.

Ponstingl H, Ning Z. SMALT - A New Mapper for DNA Sequencing Reads; 2010.

Malhis N, Jones SJM. High quality SNP calling using Illumina data at shallow coverage. Bioinformatics. 2010;26:1029–35.

Kurtz S. 2016. http://www.vmatch.de/virtman.pdf. Accessed Feb 2020.

Hach F, et al. mrsFAST: a cache-oblivious algorithm for short-read mapping. Nat Methods. 2010;7:576–7.

Wang K, et al. MapSplice: accurate mapping of RNA-seq reads for splice junction discovery. Nucleic Acids Res. 2010;38:e178.

Emde A-K, Grunert M, Weese D, Reinert K, Sperling SR. MicroRazerS: rapid alignment of small RNA reads. Bioinformatics. 2010;26:123–4.

Au KF, Jiang H, Lin L, Xing Y, Wong WH. Detection of splice junctions from paired-end RNA-seq data by SpliceMap. Nucleic Acids Res. 2010;38:4570–8.

Bryant DW Jr, Shen R, Priest HD, Wong W-K, Mockler TC. Supersplat--spliced RNA-seq alignment. Bioinformatics. 2010;26:1500–5.

Krueger F, Andrews SR. Bismark: a flexible aligner and methylation caller for Bisulfite-Seq applications. Bioinformatics. 2011;27:1571–2.

Kiełbasa SM, Wan R, Sato K, Horton P, Frith MC. Adaptive seeds tame genomic sequence comparison. Genome Res. 2011;21:487–93.

Flouri T, Iliopoulos CS, Pissis SP. DynMap: mapping short reads to multiple related genomes; 2011.

David M, Dzamba M, Lister D, Ilie L, Brudno M. SHRiMP2: sensitive yet practical SHort Read Mapping. Bioinformatics. 2011;27:1011–2.

Zaharia M, et al. Faster and More Accurate Sequence Alignment with SNAP. arXiv [cs.DS]. 2011.

Lunter G, Goodson M. Stampy: a statistical algorithm for sensitive and fast mapping of Illumina sequence reads. Genome Res. 2011;21:936–9.

Wood DLA, Xu Q, Pearson JV, Cloonan N, Grimmond SM. X-MATE: a flexible system for mapping short read data. Bioinformatics. 2011;27:580–1.

Huang S, et al. SOAPsplice: Genome-Wide ab initio Detection of Splice Junctions from RNA-Seq Data. Front Genet. 2011;2:46.

Chaisson MJ, Tesler G. Mapping single molecule sequencing reads using basic local alignment with successive refinement (BLASR): application and theory. BMC Bioinform. 2012;13:238.

Tennakoon C, Purbojati RW, Sung W-K. BatMis: a fast algorithm for k-mismatch mapping. Bioinformatics. 2012;28:2122–8.

Langmead B, Salzberg SL. Fast gapped-read alignment with Bowtie 2. Nat Methods. 2012;9:357–9.

Marco-Sola S, Sammeth M, Guigó R, Ribeca P. The GEM mapper: fast, accurate and versatile alignment by filtration. Nat Methods. 2012;9:1185–8.

Weese D, Holtgrewe M, Reinert K. RazerS 3: faster, fully sensitive read mapping. Bioinformatics. 2012;28:2592–9.

Mu JC, et al. Fast and accurate read alignment for resequencing. Bioinformatics. 2012;28:2366–73.

Emde A-K, Schulz MH. Detecting genomic indel variants with exact breakpoints in single- and paired-end sequencing data using SplazerS. Bioinformatics. 2012;28(5):619–27. https://doi.org/10.1093/bioinformatics/bts019.

Li Y, Terrell A, Patel JM. WHAM: A High-throughput Sequence Alignment Method; 2011.

Faust GG, Hall IM. YAHA: fast and flexible long-read alignment with optimal breakpoint detection. Bioinformatics. 2012;28:2417–24.

Hu J, Ge H, Newman M, Liu K. OSA: a fast and accurate alignment tool for RNA-Seq. Bioinformatics. 2012;28(14):1933–4. https://doi.org/10.1093/bioinformatics/bts294.

Zhang Y, et al. PASSion: a pattern growth algorithm-based pipeline for splice junction detection in paired-end RNA-Seq data. Bioinformatics. 2012;28:479–86.

Guo W, et al. BS-Seeker2: a versatile aligning pipeline for bisulfite sequencing data. BMC Genomics. 2013;14:774.

Liao Y, Smyth GK, Shi W. The Subread aligner: fast, accurate and scalable read mapping by seed-and-vote. Nucleic Acids Res. 2013;41:e108.

Li H. Aligning sequence reads, clone sequences and assembly contigs with BWA-MEM. arXiv [q-bio.GN]. 2013.

Siragusa E, Weese D, Reinert K. Fast and accurate read mapping with approximate seeds and multiple backtracking. Nucleic Acids Res. 2013;41:e78.

Sedlazeck FJ, Rescheneder P, von Haeseler A. NextGenMap: fast and accurate read mapping in highly polymorphic genomes. Bioinformatics. 2013;29:2790–1.

Gontarz PM, Berger J, Wong CF. SRmapper: a fast and sensitive genome-hashing alignment tool. Bioinformatics. 2013;29:316–21.

Alkan C, et al. Personalized copy number and segmental duplication maps using next-generation sequencing. Nat Genet. 2009;41:1061–7.

Philippe N, Salson M, Commes T, Rivals E. CRAC: an integrated approach to the analysis of RNA-seq reads. Genome Biol. 2013;14:R30.

Dobin A, et al. STAR: ultrafast universal RNA-seq aligner. Bioinformatics. 2013;29:15–21.

Kim D, et al. TopHat2: accurate alignment of transcriptomes in the presence of insertions, deletions and gene fusions. Genome Biol. 2013;14:R36.

Sahinalp SC, Vishkin U. Efficient approximate and dynamic matching of patterns using a labeling paradigm. In: Proceedings of 37th IEEE Conference on Foundations of Computer Science; 1996. p. 320–8.

Kerpedjiev P, Frellsen J, Lindgreen S, Krogh A. Adaptable probabilistic mapping of short reads using position specific scoring matrices. BMC Bioinform. 2014;15:100.

Liu Y, Popp B, Schmidt B. CUSHAW3: sensitive and accurate base-space and color-space short-read alignment with hybrid seeding. PLoS One. 2014;9:e86869.

Kim J, Li C, Xie X. Improving read mapping using additional prefix grams. BMC Bioinform. 2014;15(1):42. https://doi.org/10.1186/1471-2105-15-42.

Lee W-P, et al. MOSAIK: a hash-based algorithm for accurate next-generation sequencing short-read mapping. PLoS One. 2014;9:e90581.

Tárraga J, et al. Acceleration of short and long DNA read mapping without loss of accuracy using suffix array. Bioinformatics. 2014;30:3396–8.

Hach F, et al. mrsFAST-Ultra: a compact, SNP-aware mapper for high performance sequencing applications. Nucleic Acids Res. 2014;42:W494–500.

Butterfield YS, Kreitzman M. JAGuaR: junction alignments to genome for RNA-seq reads. PLoS One. 2014;9(7):e102398. https://doi.org/10.1371/journal.pone.0102398.

Bonfert T, Kirner E, Csaba G, Zimmer R, Friedel CC. ContextMap 2: fast and accurate context-based RNA-seq mapping. BMC Bioinform. 2015;16:122.

Kim D, Langmead B, Salzberg SL. HISAT: a fast spliced aligner with low memory requirements. Nat Methods. 2015;12:357–60.

Prezza N, Vezzi F, Käller M, Policriti A. Fast, accurate, and lightweight analysis of BS-treated reads with ERNE 2. BMC Bioinform. 2016;17(Suppl 4):69.

Sović I, et al. Fast and sensitive mapping of nanopore sequencing reads with GraphMap. Nat Commun. 2016;7:11307.

Amin MR, Skiena S, Schatz MC. NanoBLASTer: Fast alignment and characterization of Oxford Nanopore single molecule sequencing reads. In: 2016 IEEE 6th International Conference on Computational Advances in Bio and Medical Sciences (ICCABS); 2016. https://doi.org/10.1109/iccabs.2016.7802776.

Li H. Minimap and miniasm: fast mapping and de novo assembly for noisy long sequences. Bioinformatics. 2016;32:2103–10.

Liu B, Guan D, Teng M, Wang Y. rHAT: fast alignment of noisy long reads with regional hashing. Bioinformatics. 2016;32:1625–31.

Lin H-N, Hsu W-L. Kart: a divide-and-conquer algorithm for NGS read alignment. Bioinformatics. 2017;33:2281–7.

Liu B, Gao Y, Wang Y. LAMSA: fast split read alignment with long approximate matches. Bioinformatics. 2017;33:192–201.

Lin H-N, Hsu W-L. DART: a fast and accurate RNA-seq mapper with a partitioning strategy. Bioinformatics. 2018;34:190–7.

Li H. Minimap2: pairwise alignment for nucleotide sequences. Bioinformatics. 2018;34(18):3094–100. https://doi.org/10.1093/bioinformatics/bty191.

Dadi TH, et al. DREAM-Yara: an exact read mapper for very large databases with short update time. Bioinformatics. 2018;34:i766–72.

Marçais G, et al. MUMmer4: A fast and versatile genome alignment system. PLoS Comput Biol. 2018;14:e1005944.

Sedlazeck FJ, et al. Accurate detection of complex structural variations using single-molecule sequencing. Nat Methods. 2018;15:461–8.

Haghshenas E, Sahinalp SC, Hach F. lordFAST: sensitive and Fast Alignment Search Tool for LOng noisy Read sequencing Data. Bioinformatics. 2019;35:20–7.

Zhou Q, Lim J-Q, Sung W-K, Li G. An integrated package for bisulfite DNA methylation data analysis with Indel-sensitive mapping. BMC Bioinform. 2019;20:47.

Marić J, Sović I, Križanović K, Nagarajan N, Šikić M. Graphmap2 - splice-aware RNA-seq mapper for long reads. bioRxiv. 2019:720458.

Boratyn GM, Thierry-Mieg J, Thierry-Mieg D, Busby B, Madden TL. Magic-BLAST, an accurate RNA-seq aligner for long and short reads. BMC Bioinform. 2019;20(1):1–19.

Vasimuddin M, Misra S, Li H, Aluru S. Efficient Architecture-Aware Acceleration of BWA-MEM for Multicore Systems. In: 2019 IEEE International Parallel and Distributed Processing Symposium (IPDPS); 2019. https://doi.org/10.1109/ipdps.2019.00041.

Kim D, Paggi JM, Park C, Bennett C, Salzberg SL. Graph-based genome alignment and genotyping with HISAT2 and HISAT-genotype. Nat Biotechnol. 2019;37:907–15.

Liu B, et al. deSALT: fast and accurate long transcriptomic read alignment with de Bruijn graph-based index. bioRxiv. 2019. https://doi.org/10.1101/612176.

Chakraborty A, Bandyopadhyay S. conLSH: Context based Locality Sensitive Hashing for mapping of noisy SMRT reads. Comput Biol Chem. 2020;85:107206.

Wenger AM, et al. Accurate circular consensus long-read sequencing improves variant detection and assembly of a human genome. Nat Biotechnol. 2019. https://doi.org/10.1038/s41587-019-0217-9.

Yorukoglu D, Yu YW, Peng J, Berger B. Compressive mapping for next-generation sequencing. Nat Biotechnol. 2016;34:374–6.

Wilbur WJ, Lipman DJ. Rapid similarity searches of nucleic acid and protein data banks. Proc Natl Acad Sci U S A. 1983;80:726–30.

Burkhardt S, Kärkkäinen J. Better Filtering with Gapped q-Grams. Comb Pattern Matching. 2001:73–85. https://doi.org/10.1007/3-540-48194-x_6.

Ukkonen E. Approximate string-matching over suffix trees. In: Combinatorial Pattern Matching. Berlin Heidelberg: Springer; 1993. p. 228–42.

Ghodsi M, Pop M. Inexact Local Alignment Search over Suffix Arrays. In: 2009 IEEE International Conference on Bioinformatics and Biomedicine; 2009. p. 83–7.

Cokus SJ, et al. Shotgun bisulphite sequencing of the Arabidopsis genome reveals DNA methylation patterning. Nature. 2008;452:215–9.

Kurtz S, et al. Versatile and open software for comparing large genomes. Genome Biol. 2004;5:R12.

Medina I, et al. Highly sensitive and ultrafast read mapping for RNA-seq analysis. DNA Res. 2016;23:93–100.

Li H, Durbin R. Fast and accurate short read alignment with Burrows-Wheeler transform. Bioinformatics. 2009;25:1754–60.

Grüning B, et al. Bioconda: sustainable and comprehensive software distribution for the life sciences. Nat Methods. 2018;15:475–6.

Mohamadi H, Vandervalk BP. DIDA: Distributed Indexing Dispatched Alignment. PLoS One. 2015;10(4):e0126409. https://doi.org/10.1371/journal.pone.0126409.

Xin H, et al. Accelerating read mapping with FastHASH. BMC Genomics. 2013;14(Suppl 1):S13.

Xin H, Nahar S. Optimal seed solver: optimizing seed selection in read mapping. Bioinformatics. 2016;32(11):1632–42. https://doi.org/10.1093/bioinformatics/btv670.

Zhang H, Chan Y, Fan K, Schmidt B, Liu W. Fast and efficient short read mapping based on a succinct hash index. BMC Bioinform. 2018;19:92.

Eddy SR. What is dynamic programming? Nat Biotechnol. 2004;22:909.

Smith TF, Waterman MS. Identification of common molecular subsequences. J Mol Biol. 1981;147:195–7.

Needleman SB, Wunsch CD. A general method applicable to the search for similarities in the amino acid sequence of two proteins. J Mol Biol. 1970;48:443–53.

Hamming RW. Error detecting and error correcting codes. Bell Syst Tech J. 1950;29:147–60.

Karp RM, Rabin MO. Efficient randomized pattern-matching algorithms. IBM J Res Dev. 1987;31:249–60.

Calude C, Salomaa K, Yu S. Additive distances and quasi-distances between words. J Univ Comput Sci. 2002;8:141–52.

Backurs A, Indyk P. Edit Distance Cannot Be Computed in Strongly Subquadratic Time (unless SETH is false). In: Proceedings of the Forty-Seventh Annual ACM Symposium on Theory of Computing - STOC ’15; 2015. https://doi.org/10.1145/2746539.2746612.

Ukkonen E. Algorithms for approximate string matching. Inf Control. 1985;64(1–3):100–18.

Cole R, Hariharan R. Approximate String Matching: A Simpler Faster Algorithm. SIAM J Comput. 2002;31:1761–82.

Alser M, Hassan H, Kumar A, Mutlu O, Alkan C. Shouji: a fast and efficient pre-alignment filter for sequence alignment. Bioinformatics. 2019;35(21):4255–63.

Alser M, Hassan H, Xin H, Ergin O, Mutlu O, Alkan C. GateKeeper: a new hardware architecture for accelerating pre-alignment in DNA short read mapping. Bioinformatics. 2017;33(21):3355–63.

Alser M, Mutlu O, Alkan C. MAGNET: Understanding and Improving the Accuracy of Genome Pre-Alignment Filtering. arXiv [q-bio.GN]. 2017.

Kim JS, et al. GRIM-Filter: Fast seed location filtering in DNA read mapping using processing-in-memory technologies. BMC Genomics. 2018;19:89.

Alser M, Shahroodi T, Gómez-Luna J, Alkan C, Mutlu O. SneakySnake: a fast and accurate universal genome pre-alignment filter for CPUs, GPUs and FPGAs. Bioinformatics. 2020;36(22–23):5282–90.

Zhang J, et al. BGSA: A Bit-Parallel Global Sequence Alignment Toolkit for Multi-core and Many-core Architectures. Bioinformatics. 2018. https://doi.org/10.1093/bioinformatics/bty930.

Turakhia Y, Goenka SD, Bejerano G, Dally WJ. Darwin-WGA: A Co-processor Provides Increased Sensitivity in Whole Genome Alignments with High Speedup. In: 2019 IEEE International Symposium on High Performance Computer Architecture (HPCA); 2019. https://doi.org/10.1109/hpca.2019.00050.

Cali DS, et al. GenASM: A High-Performance, Low-Power Approximate String Matching Acceleration Framework for Genome Sequence Analysis. In: 2020 53rd Annual IEEE/ACM International Symposium on Microarchitecture (MICRO); 2020. p. 951–66.

Alser M, et al. Accelerating Genome Analysis: A Primer on an Ongoing Journey. IEEE Micro. 2020;40:65–75.

Kloosterman WP, et al. Characteristics of de novo structural changes in the human genome. Genome Res. 2015;25:792–801.

Vollger MR, et al. Long-read sequence and assembly of segmental duplications. Nat Methods. 2019;16:88–94.

Merker JD, Wenger AM. Long-read genome sequencing identifies causal structural variation in a Mendelian disease. Genet Med. 2018;20(1):159–63. https://doi.org/10.1038/gim.2017.86.

Goodwin S, et al. Oxford Nanopore sequencing, hybrid error correction, and de novo assembly of a eukaryotic genome. Genome Res. 2015;25:1750–6.