Abstract

Background

As part of a late onset GM2 gangliosidosis natural history study, digital health technology was utilized to monitor a group of patients remotely between hospital visits. This approach was explored as a means of capturing continuous data and moving away from focusing only on episodic data captured in traditional study designs. A strong emphasis was placed on real-time capture of symptoms and mobile Patient Reported Outcomes (mPROs) to identify the disease impact important to the patients themselves; an impact that may not always correlate with the measured clinical outcomes assessed during patient visits. This was supported by passive, continuous data capture from a wearable device.

Results

Adherence rates for wearing the device and completing the mPROs were 84 and 91%, respectively, resulting in a rich multidimensional dataset. As expected for a six-month proof-of-concept study in a slowly progressing disease, no statistically significant changes were observed in the clinical, mPRO, or wearable device data.

Conclusions

The study showed that patients were enthusiastic about and motivated to engage with the technology, as reflected in excellent adherence. The combination of mPROs and wearables generates feature-rich datasets and could be a useful, feasible way to capture remote, real-time insight into disease burden.

Introduction

The GM2 gangliosidoses, Tay-Sachs (TSD) and Sandhoff (SD) diseases, are neurodegenerative disorders caused by a deficiency of the lysosomal enzyme beta-hexosaminidase A (Hex A). The deficiency causes GM2 ganglioside to accumulate, particularly in neurons, where the rate of ganglioside synthesis is highest, leading to progressive neurodegeneration. Although TSD and SD are very rare (1 in 320,000 for TSD and even less frequent for SD [1]), common mutations in certain ethnic populations make them more frequent there. In the Ashkenazi Jewish population, the incidence of infantile TSD is about 1 in every 3500 newborns. Similarly, a common mutation (HEXA, p.Gly269Ser) in the eastern European population accounts for many of the individuals with late-onset TSD [2]. In contrast to infantile TSD or SD, the late-onset forms have symptom onset in adolescence or early adulthood, with ataxia, selective and progressive muscular atrophy leading to increased falls and difficulty rising from a chair or the floor, and, in TSD patients, dysarthria. The heterogeneity of the disease may also lead to misdiagnosis of older adults who have the disease and a history of neurological symptoms, because their presentation can be conflated with that of other neurodegenerative disorders [3]. SD patients often present with tingling, numbness or pain in their hands and feet.

There is currently no cure for TSD or SD. Research is focused on increasing Hex A activity, either by enzyme replacement therapy, for which the blood-brain barrier has been a formidable obstacle, or by gene delivery, and on substrate reduction of ganglioside precursors using small molecules [4, 5]. As new treatment options emerge, it is imperative to identify and validate appropriate outcome measures by which to evaluate potential therapeutic effects.

We believe that these measures should include patient-reported outcomes, to provide the patient’s perspective and give them a voice in their own health care [6]. The development of smartphone applications has made it possible to collect this information easily and often [7]. In addition, wearable devices can continuously measure the quality and quantity of physical activity [8, 9], providing valuable information on motor function.

The aim of this study was to assess the feasibility of using digital health technology to monitor GM2 patients remotely between hospital visits. The technology included a wearable device and a smartphone application to record patient-reported outcomes. This proof-of-concept study also focused on capturing patient feedback on use of the technology and exploring the outcome data it can provide. We plan to extend use of the technology to validate outcome measures that monitor disease progression, measure the effects of therapeutic intervention, and solicit further patient feedback on the impact of the disease on their activities of daily living.

Results

Eight consenting patients took part in the study and remained engaged for its duration. Their ages ranged from 28 to 61 years (mean ± SD, 44 ± 11 years); three were men and five were women.

Laboratory and clinical results from the clinical evaluations over the 6-month course of the study are shown in Table 1. There were no statistically significant differences between baseline and month six in any of the measures.

Adherence

Individual patients' adherence to wearing the device ranged from 35 to 96% over the 6-month study period, and the median cohort adherence rate was 84%. Wearable usage decreased slightly from month 3 to month 6, primarily because of decreased usage over a holiday period that coincided with device batteries reaching the end of their life. The mean (standard deviation) number of daily steps for the cohort of eight patients was 7253.2 (490.0), with a median of 6526.9 steps. Complete data are shown in Table 2.

For the wearable data, the median adherence rate (calculated from days on which the patient completed a minimum of 8 × 30-min epochs of data) was 91% (range: 63–97%). All patients gave at least two responses to each PRO over the 6-month period, but adherence to the PROs varied by patient and month and overall tended to decrease towards the end of the study (see Fig. 1).

Wearable data

Figure 2 illustrates the average steps per epoch over a 24-h period (midnight to midnight). On average, less activity was recorded between midnight and 7 am, consistent with typical sleep patterns. Patient NIH-APT-006, who recorded an average of more than 250 steps per epoch at night, worked night shifts.
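A 24-h profile like the one in Fig. 2 can be produced by averaging each of the 48 half-hour epochs across days. The sketch below assumes a hypothetical input format (one list of 48 step counts per day, starting at midnight) and hypothetical function names; it illustrates the averaging step only and is not the processing pipeline used in the study.

```python
from statistics import mean

EPOCHS_PER_DAY = 48  # 24 h split into 30-min epochs


def diurnal_profile(days):
    """Average steps in each 30-min epoch of the day, midnight to midnight.

    `days` is a hypothetical input format: a list of days, each a list of 48
    step counts, where index 0 covers 00:00-00:30.
    """
    if not days:
        raise ValueError("at least one day of data is required")
    for day in days:
        if len(day) != EPOCHS_PER_DAY:
            raise ValueError("each day must contain 48 epoch step counts")
    # zip(*days) groups the same clock-time epoch across all days
    return [mean(counts) for counts in zip(*days)]


def epoch_start(index):
    """Clock time at which an epoch starts, e.g. 14 -> '07:00'."""
    minutes = index * 30
    return f"{minutes // 60:02d}:{minutes % 60:02d}"
```

For example, the first 14 entries of `diurnal_profile(days)` cover midnight to 07:00, the window in which lower activity would be expected for patients with typical sleep patterns.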

Three wearable metrics were calculated (described in more detail in the Methods section): the average daily maximum (ADM), average daily steps (ADS), and average daily steps per 30-min epoch (ADE). Cohort analysis of ADM, ADS, ADE is presented in Table 3. No statistically significant changes were observed between baseline and month six.

Clinical event data

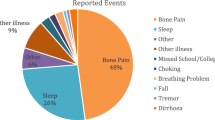

All eight patients used the app to record their symptoms (range: 8–79 events reported per patient). Seven patients reported a fall/near fall (66 events in total), and six reported choking/coughing (67 events). Other reported events were tremor (10 events), Other (49 events), and Other Illness (72 events).

Other Illness, which covered a broad range of options (“Vomiting”, “Headache”, “Cold”, “Cough” and “Diarrhea”), was the most frequently reported event from the pre-selected options (72 events), while missed college/ work was the least reported event (5 events).

Five patients reported ‘Other’ events using free text. The health-related responses included hiccups, leg/hip muscle spasm, headache, an arm injury, an appointment with a physician because of tiredness, lower back pain, acid reflux, short-term memory problems, a fall, migraine, neuropathy in the right hip, muscle cramp, incontinence, sharp pain in body parts, numbness/tingling, bone grinding (e.g. in the hip), and taking medication such as ibuprofen and Tylenol.

These self-reported clinical events are important in their own right and also provide context for the objective wearable data. In addition, reporting in real time reduces the reliance on memory recall for the details provided.

mPRO data

Table 4 shows the Rosenberg Self-Esteem Scale results. This widely used and validated measure is scored from 0 to 30, and a score below 15 indicates potentially problematic low self-esteem. The cohort average ranged from 14.4 to 15.4, suggesting that self-esteem in this cohort is on the low side [10]. PedsQL fatigue scores ranged from 46.5 to 61.1 on a scale of 0 to 100 (where lower scores indicate more fatigue), indicating fatigue in this cohort [11]. Self-reported “Impact on Family” was scored higher than “Impact of Disease”.

Health care visits data

Seven of the eight patients used the app to report healthcare visits at least once. Per patient, the number of reported visits ranged from 0 to 65 for Healthcare Professional (total n = 117), 0–2 for General Practitioner (n = 4), and 0–3 for Hospital (n = 3), making Healthcare Professional the most frequently reported visit type.

Correlations

Correlations were calculated between the three wearable metrics (ADM, ADS, ADE), three clinical measures (6-min walk test (6MWT), Brief Ataxia Rating Scale (BARS), and cadence from the GAITRite walking assessment) and the ten mPROs at baseline and at month 6. For clarity, Fig. 3 highlights only moderate to strong correlations (coefficients > 0.6 or < −0.6); p < 0.05 is indicated by an asterisk.

Fig. 3 Correlations between clinical measures, wearable and mPRO data for baseline (top) and month 6 (bottom). The upper triangles show positive correlations and the lower triangles show negative correlations; the darker the colour, the stronger the correlation. The Tremor scale is not included in the baseline correlations because it was zero for all patients.

At month 0, several measures were correlated with each other. The highest positive correlations were between the clinical walking assessments (6MWT and GAITRite cadence; 0.96) and between ADM and ADE (0.83), and the highest negative correlation was between disease impact (i.e. impact of late onset GM2) and ADS (−0.94). This may suggest that higher physical activity measured with the wearable device is linked to better walking performance and lower disease impact. The clinical walking assessments were also negatively correlated with the Impact Scales, but to a lesser extent than the wearable metrics. BARS score did not show any correlation with 6MWT or GAITRite. Correlations between the three wearable metrics and the impact factors were stronger at month 6 than at month 0.

Feedback survey

A feedback survey conducted at the end of the study indicated that all eight patients considered the app “valuable” for reporting their symptoms to their doctor in real time, with four patients rating it “very valuable”. Three of the eight patients (37.5%) said they were definitely likely to continue wearing the wristwatch and using the phone app long term. Two patients reported a “very good” overall impression, one “good”, and four “ok”. This feedback was instrumental in redeveloping the app and introducing a new wearable.

Discussion

This feasibility study demonstrated that utilizing mHealth with wearable technology was well accepted by patients over a six-month natural history study. Adherence to wearing the device remained greater than 65% throughout the six-month period for seven out of eight patients.

All patients engaged with the app (symptoms and mPROs) over the course of the study, and at the end of the study some patients chose to continue using the technology. Patient 003 had low adherence for the wearable data but high adherence to the PROs. A likely explanation is that the band on her device broke; a spare device was sent to her, and the switch resulted in data loss.

Collecting patient-generated data outside the hospital setting, for example during drug development, enables healthcare professionals to capture data remotely in real time. This not only helps researchers and healthcare professionals capture disease changes, but also reduces the burden on the healthcare system, because fewer hospital-based assessments may be needed, either during a clinical study or in clinical practice. Patients also benefit from having to attend fewer hospital appointments. Machine learning/artificial intelligence (ML/AI) methods could add further clinical value to the device and app by supporting analyses that would not be feasible with human resources alone.

The clinical data (Table 1) suggest that the physical ability of the patients in the 6MWT remained the same or slightly improved over the six months. Likewise, all the GAITRite parameters tended to be higher at month six, but the increase was not statistically significant. The BARS assessment remained stable over the duration of the six-month study. This indicates that disease state as measured by these parameters remained stable during this relatively short observation period for a disorder with a documented slow progression.

Figure 2 shows the average steps per epoch over a 24-h period. Tracking such repeated patterns longitudinally can reveal routines specific to each patient. Specific patterns arising from commuting and work breaks could be identified, and changes in them could act as indicators of disease progression. Data for any month in which a patient had very low levels of activity (i.e. engagement with wearing the device) were excluded from analysis if the patient had fewer than six active days in that month. Note that the wearable device used could not differentiate between data captured while it was being worn and data captured while it was not, so patients with low physical activity might have had days discounted if they had not been active for a total of 8 × 30-min epochs on those days.

The decrease in wearable usage from month 3 to month 6 is thought to be largely due to decreased usage over the holiday period, which coincided with device batteries reaching the end of their life. Several patients needed to replace the batteries in the wearable device and lost a few days of data as a result.

Table 3 shows that there were no statistically significant changes in the wearable metrics (ADM, ADS and ADE) over the six-month study period. This is consistent with the hospital-based 6MWT and GAITRite assessments. As the study started in August and finished in February, the mild decrease noted could be linked to seasonal variation and changes in the weather. The ADS values obtained from patients were high. This may partly reflect patients being conscious that the wearable was monitoring their ambulatory activity, which could have increased their motivation. Prior studies have shown that pedometer use increases the number of steps taken by 2000–2500 per day [12].

Engagement with the app for Events indicates its value for patients monitoring their symptoms in real time. The high number of reports of falls/near falls and choking/coughing is consistent with natural history data, since both are symptoms known to be associated with disease progression. The limited number of tremor-related events may reflect the fact that tremors were also reported as part of the weekly mPROs and that tremor is not a consistent symptom in all patients.

With a small number of patients and a large number of variables, the correlation analysis aims to suggest relationships rather than provide clear evidence. Figure 3 shows that highly active patients, as measured with the wearable, performed better in the clinical walking tests and reported lower disease impact. As the clinical assessments do not all agree (i.e. ataxia does not show a negative relationship to walking performance), the combination of mPROs and wearable data could provide additional information on disease impact. Correlations between the wearable metrics and impact scales increased at month 6 compared with month 0. This was unexpected, given that late-onset Tay-Sachs disease (LOTS) progresses slowly and there were few changes in ADM, ADS or ADE over the 6-month study period. As this was a natural history study with a small sample size, these observations cannot be explained with confidence, because the statistics are only indicative.

One of the insights gained through this study was that clinical measures do not always match patients' self-perceived disease impact. For example, patient 008, who reported the highest Wider Impact and Tremor mPRO scores, had the third-lowest disease impact according to the BARS score. However, the same patient reported the highest number of Events and the highest number of healthcare visits (“Psychiatrist for physical therapy”, “MRI as part of natural history study”, “Phlebotomist”, “Speech Therapist”, “Neurologist”, “Psychologist”, “Dietician” and “Urologist”). This shows that self-perceived disease impact is an important measure of disease burden and may not correlate with clinical testing. The low self-esteem observed in patients' PRO responses is expected in this patient population: low self-esteem, emotional health and psychological issues are highly reported in patients with rare genetic disorders [Rare Disease UK 2018 – Living with a rare condition: the effect on mental health].

As a consequence of the feedback from the patient survey, many improvements have been made, and a new wearable device has been identified which will be integrated into future studies. Additional features will be developed including an integration of video conferencing and secure messaging to enable telemedicine consultations.

Conclusions

In a highly motivated cohort of patients with a rare disease, mHealth and wearable technology was shown to be useful and feasible for capturing remote, real-time insight into disease burden. A longer observation period is likely to yield a clearer understanding of the nuances of disease progression and the individualized impact of disease burden, which can be used as outcome measures for therapeutic interventions.

Methods

Patients were recruited at the National Institutes of Health in the USA as part of an ongoing natural history study (02-HG-0107). All patients who were approached about the study consented to take part. Consenting patients were admitted for a three-day stay for clinical assessments at baseline and at the end of the 6-month study, including the Brief Ataxia Rating Scale (BARS) and subtest, the 6-min walk test (6MWT), neuroglyphics (a digital Archimedes spiral-drawing accuracy rating tool), the 9-hole peg test and the GAITRite walking assessment.

At the baseline visit, all consenting patients downloaded the Aparito app from Google Play (Android) or the App Store (iOS), and the app was paired with a 3D accelerometer device worn on the wrist. Patients were asked to wear the device continuously for the six-month duration of the study. The device captured data in 30-min epochs and calculated the number of steps taken in each 30-min period. In the context of this study, the term ‘activity’ means patient engagement when wearing the device, not physical activity (Fig. 4).

Three wearable metrics were computed as defined below:

i) The average daily maximum (ADM) is the maximum number of steps per epoch on each active day, averaged over all active days in the month.

ii) Average daily steps (ADS) is the total number of steps taken by a patient on active days in a month divided by the number of active days.

iii) The average daily steps per epoch (ADE) is calculated as follows: the total number of steps on an active day is divided by the number of active epochs, and this is then averaged over the number of active days in the month.

Data for any month in which a patient had very low levels of activity were excluded from analysis if the patient had fewer than six active days in that month.
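The three metric definitions and the exclusion rule can be expressed compactly. This is a minimal sketch under stated assumptions: a day counts as active when it has at least 8 active epochs (4 h), borrowing the adherence threshold from this section, and an epoch counts as active when it records at least one step, which the text does not define explicitly. The input format and function names are hypothetical.

```python
from statistics import mean

MIN_ACTIVE_EPOCHS = 8  # 8 x 30-min epochs = 4 h, the threshold used for adherence
MIN_ACTIVE_DAYS = 6    # months with fewer active days are excluded


def active_epochs(day):
    """Step counts of the epochs treated as active.

    Assumption: an epoch is 'active' if the device recorded at least one step.
    """
    return [steps for steps in day if steps > 0]


def is_active_day(day):
    """Assumption: a day is active if it has at least 8 active epochs."""
    return len(active_epochs(day)) >= MIN_ACTIVE_EPOCHS


def monthly_metrics(month):
    """Return (ADM, ADS, ADE) for one month, or None if the month is excluded.

    `month` is a hypothetical input: a list of days, each a list of step
    counts, one per 30-min epoch.
    """
    days = [day for day in month if is_active_day(day)]
    if len(days) < MIN_ACTIVE_DAYS:
        return None
    # ADM: maximum steps per epoch on each active day, averaged over active days
    adm = mean(max(day) for day in days)
    # ADS: total steps on active days divided by the number of active days
    ads = mean(sum(day) for day in days)
    # ADE: daily steps divided by that day's active epochs, averaged over active days
    ade = mean(sum(day) / len(active_epochs(day)) for day in days)
    return adm, ads, ade
```

Note that under these assumptions a genuinely sedentary day and a day the device was not worn look the same, which is the limitation the Discussion raises.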

The patient-facing app allowed patients to report any symptom or health-related problem in real time. The pre-configured health symptoms were listed in a drop-down menu: Choking / Coughing, Fall / Near Fall, Missed College / Work, Tremor, Other Illness, and Other (for this category, patients entered their symptom or problem as free text).

Ten mPROs were pushed to the app at pre-set intervals ranging from 8 to 60 days: the Tremor Impact Scale, Disease Impact Scale, Family Impact Scale, Wider Impact Scale, Impact Composite Scale, Perceived Stress, Global Self-worth, Rosenberg Self Esteem, CHU9D and PedsQL Multidimensional Fatigue Scale. These are described in the Appendix, and their schedules are given in Table 13 in the Appendix. Four patients continued using the app after the agreed 6-month study period, but these data are not reported in this paper.

Patients could also record planned or emergency visits to different health care professionals in a ‘Visits’ section of the app. The pre-configured visit types were general practitioner, healthcare professional and hospital, and patients had the option to provide further detail about each visit.

All wearable, clinical and mPRO data were tested for overall trends between baseline and month six using the Wilcoxon matched-pairs signed-rank test and the Hollander test for bivariate symmetry [13]. These tests do not assume normally distributed data and can accommodate tied values.
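For readers unfamiliar with the Wilcoxon matched-pairs (signed-rank) test, the sketch below computes an exact two-sided p-value by enumerating all 2^n sign assignments, which is tractable for a cohort of eight. It is an illustration, not the software used in the study: dropping zero differences and giving tied absolute differences average ranks is one common convention, and the function names are hypothetical. The Hollander test for bivariate symmetry is not shown.

```python
from itertools import product


def average_ranks(values):
    """1-based ranks, with tied values sharing the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of 0-based positions i..j, shifted to 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def wilcoxon_signed_rank_exact(baseline, month6):
    """Two-sided exact p-value for paired baseline/month-6 values."""
    diffs = [b - a for a, b in zip(baseline, month6) if b != a]  # drop zero differences
    n = len(diffs)
    if n == 0:
        return 1.0
    ranks = average_ranks([abs(d) for d in diffs])
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    expected = sum(ranks) / 2  # mean of W+ under the null hypothesis
    observed = abs(w_plus - expected)
    extreme = 0
    # Under the null, each rank is equally likely to carry a + or - sign
    for signs in product((0, 1), repeat=n):
        w = sum(r for r, s in zip(ranks, signs) if s)
        if abs(w - expected) >= observed - 1e-9:
            extreme += 1
    return extreme / 2 ** n
```

With eight patients who all improve, the smallest achievable two-sided p-value is 2/256 (about 0.008), which illustrates how little power a cohort of this size has.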

Correlation testing was planned before the start of the study. Because the study was exploratory, no adjustments were made to p-values for multiple comparisons. At both baseline and month six, relationships between the wearable data and the clinical and mPRO data, and within the set of three wearable metrics, were tested using Spearman's rank correlation test. This approach tests between-patient correlation at a single time point. Correlations with an absolute coefficient ≥ 0.6 were considered moderate to strong relationships [14].
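The correlation screening described above can be sketched as Spearman's rho (Pearson correlation of average ranks) with an exact permutation p-value and the 0.6 threshold. Enumerating all n! orderings is a swapped-in technique chosen because it is exact and feasible for n = 8 (40,320 permutations); it is not necessarily how the study's p-values were obtained, and all function names are hypothetical.

```python
from itertools import permutations
from math import sqrt


def average_ranks(values):
    """1-based ranks, with tied values sharing the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation; raises if either input is constant."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        # e.g. a scale scored zero by every patient, like Tremor at baseline
        raise ValueError("correlation is undefined for a constant variable")
    return sxy / sqrt(sxx * syy)


def spearman_rho(x, y):
    """Spearman's rho as the Pearson correlation of average ranks."""
    return pearson(average_ranks(x), average_ranks(y))


def spearman_exact_p(x, y):
    """Two-sided permutation p-value over all n! orderings (small n only)."""
    rx, ry = average_ranks(x), average_ranks(y)
    observed = abs(pearson(rx, ry))
    hits = total = 0
    for perm in permutations(ry):
        total += 1
        if abs(pearson(rx, perm)) >= observed - 1e-12:
            hits += 1
    return hits / total


def classify(rho, p, threshold=0.6, alpha=0.05):
    """(moderate-to-strong?, significant?) using |rho| >= 0.6 and p < 0.05."""
    return abs(rho) >= threshold, p < alpha
```

Because no multiplicity correction is applied, the flags from `classify` are screening signals only, consistent with the exploratory framing of the analysis.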

The rationale for testing all mPRO data against the three wearable metrics was to explore new PROs, because no disease-specific PROs are currently available for late-onset Tay-Sachs disease. The correlation analyses were therefore exploratory.

A day was counted as active on the device when a minimum of 4 h of data (i.e. 8 × 30-min epochs) was captured. Device adherence was calculated as the total number of active days divided by the total number of days in the 6-month study period. Adherence for the PRO responses presented in Table 2 was calculated by dividing the total number of actual responses per month by the number of expected responses per month for all PRO surveys over the 6-month period, multiplied by 100. The average adherence rate for each month was calculated by dividing the total number of actual responses by the number of patients (i.e. 8 patients), multiplied by 100.
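The day-level device adherence rule and the PRO response ratio can be sketched as follows. The function names and input shapes are hypothetical; the 8-epoch threshold comes from the text, and taking the cohort figure as the median of per-patient rates mirrors how the Results report it.

```python
from statistics import median

MIN_EPOCHS_PER_DAY = 8  # at least 4 h of data, i.e. 8 x 30-min epochs


def device_adherence(epochs_per_day, study_days):
    """Percentage of study days on which the device captured >= 8 epochs of data.

    `epochs_per_day` is a hypothetical list with the number of epochs that
    contained data on each day; days with no record at all can simply be absent.
    """
    active_days = sum(1 for n in epochs_per_day if n >= MIN_EPOCHS_PER_DAY)
    return 100 * active_days / study_days


def pro_adherence(actual_responses, expected_responses):
    """Percentage of scheduled PRO responses that were actually completed."""
    return 100 * actual_responses / expected_responses


def cohort_median(per_patient_rates):
    """Median adherence across patients."""
    return median(per_patient_rates)
```

Passing `study_days` separately keeps days with no data at all in the denominator, so a broken or uncharged device lowers adherence rather than silently shrinking the study period.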

To learn from the experience and to improve on the technical capabilities of the wearable device, patients were asked to answer a questionnaire at the end of the study. The questionnaire comprised five questions.

Availability of data and materials

The datasets used and/or analysed during the current study are available from the corresponding author on reasonable request.

Abbreviations

- ADE: Average daily steps per epoch
- ADM: Average daily maximum
- ADS: Average daily steps
- BARS: Brief Ataxia Rating Scale
- CHU 9D: Child Health Utility 9D
- mPROs: Mobile Patient Reported Outcomes
- 6MWT: 6-min walk test
- SD: Sandhoff disease
- TSD: Tay-Sachs disease

References

Lew R, Burnett L, Delatycki M, Proos A. Tay-Sachs disease: current perspectives from Australia. Appl. Clin. Genet. 2015;8:19.

Navon R, Kolodny EH, Mitsumoto H, Thomas GH, Proia RL. Ashkenazi-Jewish and non-Jewish adult GM2 gangliosidosis patients share a common genetic defect. Am J Hum Genet. 1990;46(4):817–21.

Deik A, Saunders-Pullman R. Atypical Presentation of Late-Onset Tay-Sachs Disease. Muscle Nerve. 2014;49(5):768–71.

Osher E, et al. Effect of cyclic, low dose pyrimethamine treatment in patients with Late Onset Tay Sachs: an open label, extended pilot study. Orphanet J. Rare Dis. 2015;10(1):45.

Solovyeva VV, et al. New Approaches to Tay-Sachs Disease Therapy. Front Physiol. 2018;9:1–11. https://pubmed.ncbi.nlm.nih.gov/30524313/.

Baumhauer JF. Patient-reported outcomes — are they living up to their potential? N Engl J Med. 2017;377(1):6–9.

Wang J, et al. Development of a smartphone application to monitor pediatric patient-reported outcomes. Stud Health Technol Inform. 2017;245(0):253–7.

Gresham G, et al. Wearable activity monitors in oncology trials: Current use of an emerging technology. Contemp. Clin. Trials. 2018;64:13–21.

Dobkin BH. Wearable motion sensors to continuously measure real-world physical activities. Curr Opin Neurol. 2013;26(6):602–8.

Sinclair SJ, Blais MA, Gansler DA, Sandberg E, Bistis K, LoCicero A. Psychometric properties of the Rosenberg self-esteem scale: overall and across demographic groups living within the United States. Eval Health Prof. 2010;33(1):56–80.

Haverman L, Limperg PF, van Oers HA, van Rossum MAJ, Maurice-Stam H, Grootenhuis MA. Psychometric properties and Dutch norm data of the PedsQL multidimensional fatigue scale for young adults. Qual Life Res. 2014;23(10):2841–7.

Tudor-Locke C, et al. How many steps/day are enough? For adults. Int J Behav Nutr Phys Act. 2011;8(79):2–19.

Hollander M, Wolfe DA, Chicken E. Nonparametric statistical methods. London: Wiley; 2015. https://www.wiley.com/en-gb/Nonparametric+Statistical+Methods%2C+3rd+Edition-p-9780470387375.

Akoglu H. User’s guide to correlation coefficients. Turk J Emerg Med. 2018;18(3):91–3.

Fecarotta S, et al. Long term follow-up to evaluate the efficacy of miglustat treatment in Italian patients with Niemann-Pick disease type C. Orphanet J. Rare Dis. 2015;10(1):22.

Cohen S, Kamarck T, Mermelstein R. A Global Measure of Perceived Stress. J. Health Soc. Behav. 1983;24(4):385.

Sabatelli RM, Anderson SA. Assessing outcomes in child and youth programs: a practical handbook revised edition. Connect: US Dep. Justice to State Connect; 2005.

Rosenberg M. Society and the adolescent self-image. Princeton University Press; 2015.

Furber G, Segal L. The validity of the Child Health Utility instrument (CHU9D) as a routine outcome measure for use in child and adolescent mental health services. Health Qual. Life Outcomes. 2015;13(1):22.

Canaway AG, Frew EJ. Measuring preference-based quality of life in children aged 6–7 years: a comparison of the performance of the CHU-9D and EQ-5D-Y—the WAVES pilot study. Qual Life Res. 2013;22(1):173–83.

Varni JW, Burwinkle TM, Szer IS. The PedsQL Multidimensional Fatigue Scale in pediatric rheumatology: reliability and validity. J. Rheumatol. 2004;31(12):2494–500.

Funding

This study was funded by a generous gift from the Buryk Foundation, a member of the National Tay-Sachs and Allied Diseases Association.

Author information

Contributions

EHD oversaw data analysis and contributed to writing the manuscript. JJ monitored the patients and conducted the 9-hole peg test. CT performed the BARS and neurological assessments. CJT was the principal investigator on the study and was responsible for the oversight of patient evaluations and review of the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

All patients gave informed consent, and the study was approved by the National Human Genome Research Institute Institutional Review Board.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests, except that ED is an employee of Aparito.

Appendix

Tremor Impact Scale

This scale was developed specifically for this study, because tremor is a known complication of the disease, and allowed patients to record tremor in a particular week and its impact on their ability to perform tasks. This PRO has a single domain scored from 0 to 3. The highest score, 3 (‘Yes, I had a severe tremor that impeded my ability to perform everyday tasks.’), is interpreted as the highest tremor severity, and 0 reflects ‘No, I did not have a tremor’. The complete range of questions with their possible answers and corresponding quantitative values is shown in Table 5 in Appendix.

Impact of Late Onset GM2 Scale

This scale was modified from the Niemann-Pick type C Patient/Parent Reported Scale, which was developed by the International Niemann-Pick Disease Association for use in its disease registry as an indicator of disease impact. Patients were asked about the impact of their disease on their ability to walk, coordination, speech, ability to swallow and cognitive abilities. This PRO has 5 domains, each scored on a range from 0–3 to 0–5 depending on the domain (Table 6 in Appendix), and a total score range from 0 to 19, where a high score implies high disease impact on an individual.

Impact on the family of a late onset GM2 Patient Scale

This scale was developed by the International Niemann-Pick Disease Association for use in its disease registry. This PRO allows patients to record how their disease has affected their family. Questions included whether family members had to give up things due to the patient’s illness (Table 7 in Appendix). This PRO has 4 domains: one is scored from 0 to 3, while the others are reverse scored from 3 to 0 (Table 7 in Appendix). The total score ranges from 0 to 12, where a higher total score implies a greater negative impact on family life.

Wider Impact scale

This scale was also developed by the International Niemann-Pick Disease Association for use in their disease registry. This PRO allows patients to record the wider impact of late onset GM2. The questions asked include the impact on the jobs/school of family members, and visits to emergency rooms due to the illness. A full list of the questions and possible answers can be found in Table 8 in Appendix. This PRO has a total of 4 domains, with a domain scoring range of 0–3 and 0–4 which is dependent on the domain being considered. This PRO has a total score range from 0 to 14 where a higher score implies a wider negative impact of the disease.

Impact Composite Scale

Impact Composite Scale consists of 3 different impact scales: the Impact of Late onset GM2 (Table 6 in Appendix), the Impact on the family of a late onset GM2 patient (Table 7 in Appendix), and the Wider Impact (Table 8 in Appendix). These were combined using a mean composite scoring system [15] to have a total score range from 0 to 1 where a higher score implies higher negative impact.
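The text does not spell out the mean composite scoring system from [15]. One plausible reading, used here purely as an assumption, is to divide each scale total by its stated maximum (19, 12 and 14) and average the three results, which reproduces the stated 0 to 1 range. The dictionary keys and function name are hypothetical.

```python
from statistics import mean

# Maximum possible totals stated in this Appendix for each impact scale
SCALE_MAXIMA = {"disease": 19, "family": 12, "wider": 14}


def impact_composite(totals):
    """Mean of the three impact totals after rescaling each to 0-1.

    Assumption: rescale-by-maximum then average; the method in [15] may differ.
    """
    rescaled = []
    for name, maximum in SCALE_MAXIMA.items():
        value = totals[name]
        if not 0 <= value <= maximum:
            raise ValueError(f"{name} total must be between 0 and {maximum}")
        rescaled.append(value / maximum)
    return mean(rescaled)
```

Rescaling first keeps the 19-point disease scale from dominating the 12- and 14-point scales in the composite.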

Perceived Stress

This scale was taken from the Perceived Stress Scale, a psychological instrument for measuring the perception of stress [16]. Patients rate how stressful they found specific situations. This PRO has 10 domains, each scored from 0 to 4, with some reverse scored from 4 to 0. The total score ranges from 0 to 40, where a high score implies a high level of perceived stress. A full list of the questions and possible answers can be found in Table 9 in Appendix.
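Reverse scoring is the only non-obvious step in totalling this scale. The sketch assumes that the app's item order matches the published 10-item Perceived Stress Scale, in which items 4, 5, 7 and 8 are positively worded and reverse scored; the actual order is given in Table 9 and may differ. The function name is hypothetical.

```python
# 1-based positions of reverse-scored items in the published PSS-10
# (assumed to match the app's item order in Table 9)
REVERSED_ITEMS = {4, 5, 7, 8}


def pss10_total(responses):
    """Total perceived stress score (0-40) from ten responses scored 0-4."""
    if len(responses) != 10:
        raise ValueError("expected 10 responses")
    total = 0
    for item, response in enumerate(responses, start=1):
        if not 0 <= response <= 4:
            raise ValueError("responses must be between 0 and 4")
        # Reverse-scored items contribute 4 - response instead of response
        total += 4 - response if item in REVERSED_ITEMS else response
    return total
```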

Global Self Worth

This PRO scale was modified from the Global Self-Worth Scale [17] and asks patients to rate their self-worth in specific situations. This PRO has 6 domains, each scored from 0 to 4, with some reverse scored from 4 to 0 (Table 10 in Appendix). The total score ranges from 0 to 24, where a higher score implies higher self-worth.

Rosenberg Self-Esteem

This PRO scale was modified from the Rosenberg Self-Esteem Scale [18] and asks patients to rate their self-esteem in specific situations. This PRO has 10 domains, each scored from 0 to 3, with some reverse scored from 3 to 0 (Table 11 in Appendix). The total score ranges from 0 to 30, where a higher score implies higher self-esteem.
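A minimal scoring sketch, including the below-15 cut-off used in the Results, follows. It assumes the app's item order matches the published Rosenberg scale, in which the negatively worded items 2, 5, 6, 8 and 9 are reverse scored; the actual order is given in Table 11. Function names are hypothetical.

```python
# 1-based positions of negatively worded items in the published Rosenberg scale
# (assumed to match the app's item order in Table 11)
REVERSED_ITEMS = {2, 5, 6, 8, 9}
LOW_SELF_ESTEEM_CUTOFF = 15  # totals below 15 suggest potentially problematic low self-esteem


def rosenberg_total(responses):
    """Total self-esteem score (0-30) from ten responses scored 0-3."""
    if len(responses) != 10:
        raise ValueError("expected 10 responses")
    total = 0
    for item, response in enumerate(responses, start=1):
        if not 0 <= response <= 3:
            raise ValueError("responses must be between 0 and 3")
        # Reverse-scored items contribute 3 - response instead of response
        total += 3 - response if item in REVERSED_ITEMS else response
    return total


def is_low_self_esteem(total):
    return total < LOW_SELF_ESTEEM_CUTOFF
```

Because half the items are reversed, a patient who answers every item at the same extreme scores 15, right at the cut-off, so cohort averages of 14.4 to 15.4 sit close to that boundary.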

CHU 9D

This PRO was taken from the Child Health Utility 9D (CHU 9D) (UK weighted tariff) [19], which was developed as a measure of health-related quality of life. This PRO has 9 domains, with domain weightings ranging from 0 to 0.1079 across all domains (Table 12 in Appendix). The total score ranges from 0.33 to 1, where a higher score implies better health [20].

PedsQL™ Multidimensional Fatigue Scale

This PRO, taken from the PedsQL™ Multidimensional Fatigue Scale [21], was used to measure patients' fatigue. The scale comprises 18 items: the General Fatigue Scale (6 items), Sleep/Rest Fatigue Scale (6 items) and Cognitive Fatigue Scale (6 items). Each subscale and the total scale are scored from 0 to 100, where a higher score implies fewer problems with fatigue.
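The standard PedsQL scoring procedure reverse-scores each 0 to 4 item and linearly transforms it to 0 to 100 (0 = 100, 1 = 75, 2 = 50, 3 = 25, 4 = 0), averages the answered items, and leaves a scale uncomputed when more than half of its items are missing. The sketch follows that procedure; representing missing answers as None, applying the same missing-data rule to the 18-item total, and the function names are assumptions of this example.

```python
def transform(response):
    """Reverse-score and rescale a 0-4 item to 0-100 (0 -> 100, 4 -> 0)."""
    if not 0 <= response <= 4:
        raise ValueError("responses must be between 0 and 4")
    return 100 - 25 * response


def scale_score(responses):
    """Mean transformed score of answered items; None if > 50% are missing."""
    answered = [r for r in responses if r is not None]
    if 2 * len(answered) < len(responses):  # more than half missing
        return None
    return sum(transform(r) for r in answered) / len(answered)


def total_score(general, sleep_rest, cognitive):
    """Total fatigue score over all 18 items (same missing-data rule assumed)."""
    return scale_score(general + sleep_rest + cognitive)
```

Averaging only the answered items means a missed question does not pull the score towards either end of the scale.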

Patient Reporting and Timing

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Davies, E.H., Johnston, J., Toro, C. et al. A feasibility study of mHealth and wearable technology in late onset GM2 gangliosidosis (Tay-Sachs and Sandhoff Disease). Orphanet J Rare Dis 15, 199 (2020). https://doi.org/10.1186/s13023-020-01473-x

DOI: https://doi.org/10.1186/s13023-020-01473-x