Abstract

Purpose/Objectives

The clinical effects of radiation dose-intensification in locally advanced non-small cell lung cancer (NSCLCa) and other cancers are challenging to predict and are ideally studied in randomized trials. The purpose of this study was to assess the use of dose-escalated radiation for locally advanced NSCLCa in the U.S. from 2004 to 2013, a period in which there were no published level 1 studies on dose-escalation.

Materials/Methods

We performed analyses on two cancer registry databases with complementary strengths and weaknesses: the National Oncology Data Alliance (NODA) 2004–2013 and the National Cancer Database (NCDB) 2004–2012. We classified locally advanced patients according to the use of dose-escalation (>70 Gy). We used adjusted logistic regression to assess the association of year of treatment with dose-escalated radiation use in two periods representing time before and after the closure of a cooperative group trial (RTOG 0617) on dose-escalation: 2004–2010 and 2010–2013. To determine the year in which a significant change in dose could have been detected had dose been prospectively monitored within the NODA network, we compared the average radiation dose for each year with the forecasted dose (average of the prior 3 years), adjusted for patient age and comorbidities.

Results

Within both the NODA and NCDB, use of dose-escalation increased from 2004 to 2010 (p < 0.0001) and decreased from 2010 to 2013 (p = 0.0018), even after controlling for potential confounders. Had the NODA network been monitoring radiation dose in this cohort, significant changes in average annual dose would have been detected at the end of 2008 and 2012.

Conclusions

Patterns of radiation dosing in locally advanced NSCLCa changed in the U.S. in the absence of level 1 evidence. Monitoring radiation dose is feasible using an existing national cancer registry data collection infrastructure.

Introduction

Incremental technical advances in linear accelerators have steadily improved the delivery of radiotherapy to a patient’s tumor while sparing the adjacent normal tissue. Leveraging advances in technology to dose-intensify is particularly compelling in diseases for which local control outcomes are poor, such as inoperable locally advanced non-small cell lung cancer (NSCLCa), which has an estimated 2-year local failure rate of 30% when treated with concurrent chemotherapy and standard-dose radiation [1]. However, the clinical effects of dose-intensification in NSCLCa and other cancers have been challenging to predict, and several clinical studies have failed to validate presumed benefits [1–3]. A recent example is RTOG 0617, which randomized inoperable locally advanced NSCLCa patients to 60 Gy (standard arm) or 74 Gy (dose-escalation arm) with concurrent chemotherapy [4]. Unexpectedly, patients treated on the dose-escalation arm had substantially worse median overall survival (20.3 months versus 28.7 months), despite having acceptable radiation treatment plans based on established normal tissue constraints.

We hypothesized that because the theoretical rationale for dose-intensified treatments is compelling, such treatments are gradually adopted even in the absence of high-level clinical evidence, and that this “radiation dose creep” may unexpectedly be causing harm. To assess the first of these hypotheses, that radiation dose creep occurs, we evaluated the patterns of radiation dosing in locally advanced NSCLCa between 2004 and 2013, a period in which there were no published level 1 studies on dose-escalation. We hypothesized that there would be an increasing trend in the use of dose-escalated radiation in the years preceding the closure of RTOG 0617 and a decreasing trend from immediately before the study’s closure to the end of the study period.

Finally, we sought to determine the year in which a significant change in radiation dosing practice patterns could have been detected in this study period had dose been prospectively monitored using commonly available cancer registry data.

Methods

Data sources

The primary analyses of this study were performed on data extracted from two cancer registry databases with complementary strengths and weaknesses: the National Oncology Data Alliance® (NODA) (Elekta Inc., Sunnyvale, CA), years 2004–2013, and the National Cancer Database (NCDB) (American College of Surgeons, Chicago, IL), years 2004–2012. The NODA captures newly diagnosed cancer cases at more than 150 hospitals in the U.S. and includes all of the data fields sent to state and federal cancer registries. The strength of the NODA is that it includes radiation dose fields that are assessed for internal validity by reviewers with specialized radiation oncology training. The NODA, however, may not be broadly representative of U.S. practice. To assess the generalizability of our observations, we performed the same analysis using data from the NCDB, a more representative database that captures information from approximately 70% of all newly diagnosed cancers in the U.S. [5]. A weakness of the NCDB is that radiation dose is not secondarily validated. Both the NODA and the NCDB require a minimum set of database fields to be completed by registrars to meet submission requirements, so not every case of locally advanced NSCLCa treated at participating institutions is necessarily captured within these databases.

Cohort identification

To minimize confounding, we sought to restrict our patterns of care analysis to patients likely meeting eligibility criteria for RTOG 0617 since this represents a cohort deemed potentially suitable for dose-escalated radiation by subject matter experts in the era of interest. Using manual chart review as the gold standard, we iteratively developed a cancer registry-based algorithm that classifies patients based on RTOG 0617 eligibility and whether they were treated with definitive-intent concurrent chemotherapy and radiation. To minimize misclassification errors, the algorithm was more restrictive than RTOG 0617 eligibility criteria with respect to tumor staging and prior allowable malignancies. The study cohorts included only patients with AJCC versions 6 and 7 clinical T3, N1 or T0-3, N2, M0, but excluded patients with derived AJCC v6 stage IIB, which included clinical T2, N1, and M0. The algorithm excluded patients treated to total doses of <59 Gy (to omit patients who received pre-operative radiation or palliative radiation or who discontinued radiation early) and patients with total doses of >80 Gy (to omit outliers likely related to reporting errors). We assessed the algorithm’s ability to predict RTOG 0617 eligibility (positive predictive value) in validation cohorts at cancer registry programs in two regionally distinct hospitals.
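The staging and dose filters described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `Case` record and its field names are hypothetical (they are not NODA or NCDB field names), T and N categories are assumed to be recorded without sub-letters, and the sketch omits the other parts of the algorithm, such as the checks for definitive-intent concurrent chemotherapy and prior malignancies.

```python
from dataclasses import dataclass


# Hypothetical registry record; field names are illustrative only.
@dataclass
class Case:
    clin_t: str           # clinical T category, e.g. "T2"
    clin_n: str           # clinical N category, e.g. "N2"
    clin_m: str           # clinical M category, e.g. "M0"
    total_dose_gy: float  # total delivered dose in Gy


def stage_eligible(case: Case) -> bool:
    """Clinical T3 N1 M0, or T0-3 N2 M0, as described in the text."""
    if case.clin_m != "M0":
        return False
    if case.clin_n == "N1":
        return case.clin_t == "T3"
    if case.clin_n == "N2":
        return case.clin_t in {"T0", "T1", "T2", "T3"}
    return False


def dose_eligible(case: Case) -> bool:
    """Drop total doses below 59 Gy and above 80 Gy."""
    return 59.0 <= case.total_dose_gy <= 80.0


def in_cohort(case: Case) -> bool:
    """Apply both filters; other eligibility checks are not modeled here."""
    return stage_eligible(case) and dose_eligible(case)
```

Because T2 N1 M0 never passes the stage filter, cases with derived AJCC v6 stage IIB of that type are excluded without a separate rule in this sketch.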

Internal and external validity

To assess internal validity (e.g., confounding and bias) within the NODA cohort, we used chi-square tests to compare the distribution of covariates between patients treated in the years before (2004–2010) and after (2011–2013) the early closure of RTOG 0617 (on June 17, 2011). We evaluated the external validity (e.g., generalizability) of our NODA findings in two ways. First, we used chi-square tests to compare the distribution of patient covariates in the NODA cohort (excluding 2013 cases) and the NCDB 2004–2012 cohort. In order to maximize the representativeness of the NCDB, we did not filter the NCDB cohort on radiation dose (unfiltered NCDB 2004–2012) for this first generalizability analysis. Second, after our a priori hypotheses were confirmed in the NODA cohort, we determined whether these patterns were also present in the NCDB, 2004–2012, which was similarly filtered on radiation dose (filtered NCDB 2004–2012).
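As a rough illustration of the covariate comparisons described above, the following sketch computes a Pearson chi-square statistic for a contingency table of counts (rows are the two periods or cohorts, columns are the categories of one covariate). The function names and the example counts are hypothetical and are not taken from the study data. The p-value helper is valid only for one degree of freedom (a 2×2 table); covariates with more categories would need a general chi-square distribution function from a statistics library.

```python
import math


def chi_square_statistic(table):
    """Pearson chi-square statistic for a table given as a list of rows of counts."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count under independence of row and column.
            expected = row_totals[i] * col_totals[j] / n
            stat += (observed - expected) ** 2 / expected
    return stat


def p_value_df1(stat):
    """Upper-tail p-value of a chi-square statistic with 1 degree of freedom."""
    return math.erfc(math.sqrt(stat / 2.0))


# Hypothetical 2x2 example: rows = period (2004-2010, 2011-2013),
# columns = sex (male, female). Counts are made up for illustration.
example = [[600, 500], [330, 303]]
stat = chi_square_statistic(example)
p = p_value_df1(stat)
```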

Primary outcome and control variables

The primary outcome was the use of dose-escalated radiation over two a priori-defined periods. Period 1 was defined from 1/1/2004 to 12/31/2010, the period before the early closure of the high dose arms of RTOG 0617 was announced to participating institutions. Period 2 was defined from 1/1/2010 to 12/31/2013, which is the period immediately before RTOG 0617 closure through the data collection period. We defined dose-escalated radiation as a total dose of >70 Gy, consistent with guidelines and ongoing national trials [6, 7]. Both NODA and NCDB capture delivered dose, not prescribed dose. We a priori selected patient and disease control variables that might affect a physician’s perception of the tolerability and effectiveness of dose-escalated radiation and characteristics of the diagnosing hospital that might affect patterns of care (Table 1). Characteristics of the diagnosing hospital were defined as previously described [8, 9]. We calculated confidence intervals around the estimated annual percentage of patients treated with dose-escalated radiation using the Clopper-Pearson method. We used logistic regression to assess the covariate-adjusted association of the year of treatment (continuous variable) with dose-escalated radiation use (categorical variable) in each period of interest. In a post hoc analysis, we characterized the use of total doses in the ‘low-standard dose’ range (≥59 Gy and <64 Gy), a dose most consistent with the superior arm of RTOG 0617.
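For readers unfamiliar with the Clopper-Pearson method, a minimal sketch of the exact binomial interval is given below, together with the >70 Gy classification rule. It uses only the Python standard library and finds each bound by bisection on the binomial tail probability; the function names are our own, the example counts are hypothetical, and this is not the software used in the study.

```python
import math


def is_dose_escalated(total_dose_gy):
    """Dose-escalated radiation as defined in the text: total dose > 70 Gy."""
    return total_dose_gy > 70.0


def binom_cdf(k, n, p):
    """P(X <= k) for X ~ Binomial(n, p)."""
    return sum(math.comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k + 1))


def _solve_increasing(func):
    """Bisection root of a function that increases from negative to positive on [0, 1]."""
    lo, hi = 0.0, 1.0
    for _ in range(100):
        mid = (lo + hi) / 2.0
        if func(mid) < 0:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2.0


def clopper_pearson(k, n, alpha=0.05):
    """Exact two-sided (1 - alpha) confidence interval for k events in n patients."""
    # Lower bound: the p at which P(X >= k) equals alpha / 2 (0 when k == 0).
    lower = 0.0 if k == 0 else _solve_increasing(
        lambda p: (1.0 - binom_cdf(k - 1, n, p)) - alpha / 2.0
    )
    # Upper bound: the p at which P(X <= k) equals alpha / 2 (1 when k == n).
    upper = 1.0 if k == n else _solve_increasing(
        lambda p: alpha / 2.0 - binom_cdf(k, n, p)
    )
    return lower, upper


# Hypothetical year: 25 of 110 eligible patients received > 70 Gy.
low, high = clopper_pearson(25, 110)
```

The interval is conservative by construction, which suits annual estimates based on modest numbers of patients.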

We used linear regression to determine the year in which a significant change in radiation dosing could have been detected had total radiation dose been prospectively monitored within the NODA network. We compared the actual average radiation dose (continuous variable) used for a given year across the network with a forecasted average dose based on the average of the prior three years, with adjustments for age and comorbidities. For 2005 and 2006, for which three years of prior data were not available, we forecasted based on the prior one and two years, respectively.
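The forecasting rule can be illustrated with a short sketch. It assumes, for simplicity, that the forecast is the unweighted mean of the annual average doses of up to three prior years; whether the study averaged annual means or pooled patients across years is not stated, and the sketch omits both the adjustment for age and comorbidities and the regression-based significance test. The function name and the dose values are hypothetical.

```python
from statistics import mean


def forecast_dose(annual_mean_dose, year, window=3):
    """Forecast for `year`: mean of the annual mean doses over up to `window` prior years.

    `annual_mean_dose` maps a calendar year to the network-wide mean dose in Gy.
    Early years with fewer than `window` prior years use whatever is available.
    """
    prior = [
        annual_mean_dose[y]
        for y in range(year - window, year)
        if y in annual_mean_dose
    ]
    if not prior:
        raise ValueError(f"no prior data available to forecast {year}")
    return mean(prior)


# Hypothetical annual mean doses in Gy (not study data).
doses = {2004: 64.0, 2005: 64.5, 2006: 64.8, 2007: 65.0, 2008: 65.6}

# Difference between the observed and forecasted dose for each monitored year.
deviations = {
    year: doses[year] - forecast_dose(doses, year)
    for year in sorted(doses)
    if year > min(doses)
}
```

In a monitoring setting, each year's deviation would then be tested against the a priori significance threshold rather than inspected by eye.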

Statistical significance was set a priori at 0.01 because multiple hypotheses were being tested. Missing values were uncommon so were excluded from statistical analyses.

Results

The positive predictive values for the RTOG 0617 eligibility algorithm were 90.2% (37/41 patients) and 91.2% (31/34) based on manual chart review at the first and second cancer registry programs, respectively. The most common reason for false positives within both validation sets was failure to meet RTOG 0617 performance status and pulmonary function test criteria. The positive predictive values of the algorithm with respect to total radiation dose were 97.5% (40/41) and 97.1% (33/34) at the first and second cancer registry programs, respectively.

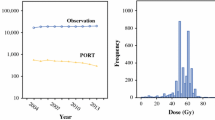

When applied to the NODA dataset, the algorithm identified 1733 patients treated between 2004 and 2013, of whom 499 (29%) were treated with dose-escalated radiation (Fig. 1). Table 1 shows the distribution of patient, disease and hospital factors in the period from 2004 to 2010 versus 2011–2013. The patients treated in 2004–2010 and 2011–2013 were similar with respect to age, sex, race, T-stage and diagnosing hospital academic status and metropolitan status. Table 2 shows a comparison of the distribution of patient, disease and contextual variables of the NODA primary analytic cohort (excluding 2013 data) and the NCDB 2004–2012 NSCLCa cohort without radiation dose exclusions (Fig. 1). The NODA cohort had a greater proportion of metropolitan and non-academic hospitals and borderline differences with respect to age, race, T-stage and comorbidity scores.

From 2004 to 2010 (Period 1), the use of dose-escalated radiation increased (p < 0.0001), even after adjusting for potential confounders (Table 3). From 2010 to 2013 (Period 2), the use of dose-escalated radiation decreased (p = 0.0018) (Table 4), even after adjusting for potential confounders. Specifically, the percentage of patients treated with dose-escalated radiation in the NODA increased from 22% (95% CI: 15–31%) in 2004 to 37% (95% CI: 31–43%) in 2010 and then declined to 20% (95% CI: 13–28%) in 2012, its lowest level in the decade (Fig. 2). Because RTOG 0617 was opened by 484 institutions and accrued 544 patients, we sought to determine whether accrual onto the trial itself might explain the apparent change in patterns of care in the NODA dataset. Of the 499 patients treated with dose-escalated radiation in the NODA dataset, only 20 (4%) patients were treated to 74 Gy during the period in which the high dose arms of RTOG 0617 were accruing.

An increasing utilization of dose-escalated radiation in Period 1 and a decreasing utilization in Period 2 were also observed in the NCDB 2004–2012 (Fig. 3, Tables 5 and 6). While the pattern of utilization was similar between the two datasets, the absolute estimates of utilization were lower in the NCDB. In the NCDB dataset, the percentage of patients treated with dose-escalated radiation increased from 7% (95% CI: 6–8%) in 2004 to 12% (95% CI: 11–14%) in 2010 and then decreased to 7% (95% CI: 6–9%) in 2012. The pattern of utilization of low-standard dose radiation (≥59 Gy and <64 Gy) over time was similar in the NODA and NCDB (Figs. 4 and 5, respectively). In the NODA, the percentage of patients treated using low-standard dose radiation decreased from 47% (95% CI: 37–56%) in 2004 to its lowest level of 31% (95% CI: 25–37%) in 2010 and then increased again to 44% (95% CI: 35–53%) in 2013. In the NCDB, the percentage of patients treated using low-standard dose radiation decreased from 49% (95% CI: 46–52%) in 2004 to its lowest level of 31% (95% CI: 29–33%) in 2010 and then increased again to 43% (95% CI: 41–45%) in 2012.

Using a rolling three-year average and adjusting for patient age and comorbidities, an increase in average radiation dose would have been first detected in 2008 had the NODA network been monitoring radiation dose in this lung cohort (2005–2007: 64.6 Gy, 95% CI [64.4–65.3 Gy] vs 2008: 65.5 Gy, 95% CI [65.1–66.3 Gy], adjusted p = 0.0084) (Fig. 6). A decrease in average radiation dose would have been first detected in 2012 (2009–2011: 65.6 Gy, 95% CI [65.3–66.0 Gy] vs 2012: 64.2 Gy, 95% CI [63.4–65.0 Gy], adjusted p = 0.0004).

Discussion

Our analyses show that the use of dose-escalated radiation (>70 Gy) in locally advanced NSCLCa increased in the U.S. between 2004 and 2010. This finding is consistent with our a priori hypothesis that “radiation dose creep” occurred in the absence of level 1 evidence, since no randomized comparisons of standard versus dose-escalated radiation were formally published over this period. Single-arm studies published in the 2000s suggested that dose-escalation of up to 74 Gy in 2 Gy fractions was safe and effective [10–13]. Questionnaire-based surveys show that the evidence was compelling enough to have already changed practice by the mid-2000s, particularly for physicians who considered themselves thoracic specialists [14]. The use of escalating radiation doses in this cohort likely reflects in part the disappointing local control outcomes achieved with the standard of care. When outcomes are poor, providers may be more open to deviating from standards of care. Even after the results of RTOG 0617 were communicated, it is clear that the radiation oncology community did not fully re-embrace the 60 Gy treatment paradigm. While utilization of doses >70 Gy declined to its lowest level in 2012, our data suggest that less than half of patients were treated using low-standard doses (≥59 Gy to <64 Gy) in 2012 and 2013. The perception that “more is better” appears to have persisted. Indeed, there is a growing interest in whether intermediate doses (64 Gy < dose < 74 Gy) might achieve the benefits of dose escalation without the excess costs [15]. Of course, the complex interplay of hypoxic [16], immune [17] and other in vivo responses to radiation and our limited understanding of the dose-response of normal tissues make the clinical effects of dose-escalation hard to predict and possibly counter-intuitive.

We also observed that the use of dose-escalation decreased between 2010 and 2013. The preliminary findings of RTOG 0617 were first presented at the annual meeting of ASTRO in 2011 [18]. The first peer-reviewed manuscript was published in 2015 [4]. It is remarkable that, based on abstracts alone, the rate of dose-escalation had declined by 2012 to levels observed in 2004. In some ways, the rapidity of the response is reassuring, but it raises the question: what would have been the consequences of dose creep for these patients had RTOG 0617 been delayed or never performed?

Finally, we demonstrated that an increasing average radiation dose in this cohort could have been detected by 2008 within the smaller NODA network. This result suggests that a national infrastructure for monitoring cohort-specific radiation dosing patterns already exists. Most centers in the U.S. now participate in the CoC’s credentialing program and so are already abstracting information on radiation dose. Thus, the CoC, SEER and many other programs that aggregate cancer registry data internationally could feasibly monitor dose to identify cohorts for which dose creep is occurring. In addition, our demonstration used a simple forecasting approach. More sophisticated analytical approaches could provide more detailed and more timely information [19].

This study has important limitations. First, neither the NODA nor the NCDB captures a statistically representative portion of the U.S. cancer population. While the NCDB captures approximately 70% of cancer cases, diagnoses from small and rural facilities may be under-represented. Second, the absolute estimates for the utilization of dose-escalated therapy varied between the NODA and the NCDB analyses. In contrast, the NODA and the NCDB generated similar estimates for the utilization of low-standard dose over the same time period. This discrepancy may reflect differences in the characteristics of the institutions represented within each dataset and challenges that registrars have in interpreting radiation summaries of patients who have both a regional and a boost treatment, which is more common in patients receiving dose-escalated therapy. The NODA likely contains more accurate dose data, but it lacks the representativeness of the NCDB. Given that the NODA and NCDB have complementary strengths and weaknesses, the true absolute utilization rate of dose-escalation therapy over this period probably falls somewhere between the NODA and NCDB estimates. Third, our analyses do not adjust for radiation technique (e.g., 3D conformal vs IMRT). While the NCDB does have a field identifying “treatment modality”, the options currently available to cancer registrars are not mutually exclusive. For example, a registrar can identify a treatment as 6MV photons or IMRT, though often a treatment is both. Because of this issue, we chose not to include this variable in our analyses. While increasing adoption of IMRT may in part explain the increasing utilization of dose-escalated radiation observed before 2011, it would not explain the decline after 2011.

Conclusion

Patterns of radiation dosing in locally advanced NSCLCa changed in the U.S. from 2004 to 2013 in the absence of level 1 evidence. These changes could have been identified using a simple radiation dose monitoring approach that uses data already aggregated from most cancer centers in the U.S.

Abbreviations

- ASTRO: American Society for Radiation Oncology

- CoC: Commission on Cancer

- NCDB: National Cancer Database

- NODA: National Oncology Data Alliance

- NSCLCa: Non-small cell lung cancer

- RTOG: Radiation Therapy Oncology Group

References

1. Curran Jr WJ, Paulus R, Langer CJ, et al. Sequential vs. concurrent chemoradiation for stage III non-small cell lung cancer: randomized phase III trial RTOG 9410. J Natl Cancer Inst. 2011;103(19):1452–60.

2. Incrocci L, Wortell RC, Alwuni S, et al. Hypofractionated vs Conventionally Fractionated Radiotherapy for Prostate Cancer: 5-year Oncologic Outcomes of the Dutch Randomized Phase 3 HYPRO Trial. Presented at the Annual Meeting of the American Society of Radiation Oncology, San Antonio, TX. 2015. (LBA 2).

3. Chan JL, Lee SW, Fraass BA, et al. Survival and failure patterns of high-grade gliomas after three-dimensional conformal radiotherapy. J Clin Oncol. 2002;20(6):1635–42.

4. Bradley JD, Paulus R, Komaki R, et al. Standard-dose versus high-dose conformal radiotherapy with concurrent and consolidation carboplatin plus paclitaxel with or without cetuximab for patients with stage IIIA or IIIB non-small-cell lung cancer (RTOG 0617): a randomised, two-by-two factorial phase 3 study. Lancet Oncol. 2015;16(2):187–99.

5. National Cancer Database. Am Coll Surg Comm Cancer. https://www.facs.org/quality%20programs/cancer/ncdb. Accessed 12 Jan 2017.

6. Chang JY, Kestin LL, Barriger RB, et al. ACR Appropriateness Criteria® Nonsurgical treatment for locally advanced non-small cell lung cancer: good performance status/definitive intent. https://acsearch.acr.org/docs/69394/Narrative/. American College of Radiology. Accessed 12 Jan 2017.

7. RTOG 1308 Protocol Information. https://www.rtog.org/ClinicalTrials/ProtocolTable/StudyDetails.aspx?study=1308. Accessed 12 Jan 2017.

8. CoC Accreditation Categories. https://www.facs.org/quality-programs/cancer/accredited/about/categories. Accessed 12 Jan 2017.

9. Rural–urban Continuum Codes. http://seer.cancer.gov/seerstat/variables/countyattribs/ruralurban.html. Accessed 12 Jan 2017.

10. Kong FM, Ten Haken RK, Schipper MJ, et al. High-dose radiation improved local tumor control and overall survival in patients with inoperable/unresectable non-small-cell lung cancer: long-term results of a radiation dose escalation study. Int J Radiat Oncol Biol Phys. 2005;63:324–33.

11. Rosenzweig KE, Fox JL, Yorke E, et al. Results of a phase I dose-escalation study using three-dimensional conformal radiotherapy in the treatment of inoperable nonsmall cell lung carcinoma. Cancer. 2005;103(10):2118–27.

12. Bradley JD, Moughan J, Graham MV, et al. A phase I/II radiation dose escalation study with concurrent chemotherapy for patients with inoperable stages I to III non-small-cell lung cancer: phase I results of RTOG 0117. Int J Radiat Oncol Biol Phys. 2010;77(2):367–72.

13. Rosenman JG, Halle JS, Socinski MA, et al. High-dose conformal radiotherapy for treatment of stage IIIA/IIIB non-small-cell lung cancer: technical issues and results of a phase I/II trial. Int J Radiat Oncol Biol Phys. 2002;54(2):348–56.

14. Kong FM, Cuneo KC, Wang L, et al. Patterns of practice in radiation therapy for non-small cell lung cancer among members of the American Society for Radiation Oncology. Pract Radiat Oncol. 2014;4(2):e133–41.

15. Rodrigues G, Oberije C, Senan S, et al. Is intermediate radiation dose escalation with concurrent chemotherapy for stage III non-small-cell lung cancer beneficial? A multi-institutional propensity score matched analysis. Int J Radiat Oncol Biol Phys. 2015;91(1):133–9.

16. Semenza GL. The hypoxic tumor microenvironment: a driving force for breast cancer progression. Biochim Biophys Acta. 2016;1863(3):382–91.

17. Kalbasi A, June CH, Haas N, Vapiwala N. Radiation and immunotherapy: a synergistic combination. J Clin Invest. 2013;123(7):2756–63.

18. Bradley J, Paulus R, Komaki R, et al. A randomized phase III comparison of standard-dose (60 Gy) versus high-dose (74 Gy) conformal chemoradiotherapy +/− cetuximab for stage IIIa/IIIb non-small cell lung cancer: Preliminary findings on radiation dose in RTOG 0617 (late-breaking abstract 2). Presented at the Annual Meeting of the American Society of Radiation Oncology, October 2–6, 2011, Miami, FL.

19. Guo W. Functional mixed effects models. Biometrics. 2002;58:121–8.

Acknowledgments

Not applicable.

Funding

This research received no external funding support.

Availability of data and materials

The data that support the findings of this study are available from the National Oncology Data Alliance and the National Cancer Database but restrictions apply to the availability of these data, which were used under license for the current study, and so are not publicly available.

Authors’ contributions

All authors were involved in the conception and design of the study, in the analysis and interpretation of the data, and in drafting the manuscript, and all have given final approval; JPC, MDW, TES and PG acquired the datasets.

Competing interests

John Christodouleas and Marjorie Van der Pas report employment status at Elekta, Inc.

Consent for publication

Not applicable.

Ethics approval and consent to participate

Both the NODA and NCDB have been de-identified in accordance with the Health Insurance Portability and Accountability Act. Institutional review board approval was obtained at the University of Pennsylvania and at City of Hope.

Scientific writers

Not applicable.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

Christodouleas, J.P., Hall, M.D., van der Pas, M.A. et al. The use of dose-escalated radiation for locally advanced non-small cell lung cancer in the U.S., 2004–2013. Radiat Oncol 12, 19 (2017). https://doi.org/10.1186/s13014-016-0755-y

DOI: https://doi.org/10.1186/s13014-016-0755-y