Abstract

Background

Existing reviews identify numerous studies of the relationship between urban built environment characteristics and obesity. These reviews do not generally distinguish between cross-sectional observational studies using single equation analytical techniques and other studies that may support more robust causal inferences. More advanced analytical techniques, including the use of instrumental variables and regression discontinuity designs, can help mitigate biases that arise from differences in observable and unobservable characteristics between intervention and control groups, and may represent a realistic alternative to scarcely-used randomised experiments. This review sought, first, to identify studies using more advanced analytical techniques or study designs and, second, to compare the results of analyses from those studies.

Methods

In March 2013, studies of the relationship between urban built environment characteristics and obesity were identified that incorporated (i) more advanced analytical techniques specified in recent UK Medical Research Council guidance on evaluating natural experiments, or (ii) other relevant methodological approaches including randomised experiments, structural equation modelling or fixed effects panel data analysis.

Results

Two randomised experimental studies and twelve observational studies were identified. Within-study comparisons of results, where authors had undertaken at least two analyses using different techniques, indicated that effect sizes were often critically affected by the method employed, and did not support the commonly held view that cross-sectional, single equation analyses systematically overestimate the strength of association.

Conclusions

Overall, the use of more advanced methods of analysis does not appear necessarily to undermine the observed strength of association between urban built environment characteristics and obesity when compared to more commonly-used cross-sectional, single equation analyses. Given observed differences in the results of studies using different techniques, further consideration should be given to how evidence gathered from studies using different analytical approaches is appraised, compared and aggregated in evidence synthesis.


Introduction

The global prevalence of obesity has increased in recent decades [1],[2]. A contributing factor could be changes to the urban built environment, including suburbanisation (urban sprawl), which have altered the availability of a variety of dietary and physical activity resources. The costs (including time costs) of walking and cycling are likely to be higher in cul-de-sac housing developments, for example, compared to densely populated urban areas with greater land-use mix and shorter distances between home, leisure, retail and work locations. Fewer footpaths (sidewalks) and cycle routes would likely reinforce this cost differential. However, a potential counterbalance to high physical activity costs in suburban areas may be relatively low costs of accessing healthy foods, which are more readily available in larger out-of-town supermarkets (stores), at least in the U.S. [3]. Fewer public transport facilities and less road traffic congestion may also affect the costs of physical activity, although their impact could operate in either direction in different contexts. Policymakers seeking to reduce the (relative) costs people face when choosing healthy behaviours might therefore choose to intervene in the design of urban built environments.

Existing reviews - such as the review by Feng and colleagues [4], hereafter the ‘Feng review’ - document a substantial number of cross-sectional observational studies of the relationship between urban built environment characteristics and obesity using single equation regression adjustment techniques. Typically these reviews do not distinguish between these more common study designs [5],[6], which can be used to test statistical associations and generate causal or interventional hypotheses [7],[8], and other studies that may (at least in principle) strengthen the basis for causal inferences and provide a better guide for policymaking.

In particular, more advanced analytical techniques have been proposed in recent UK Medical Research Council guidance [9] (hereafter “MRC guidance”; Table 1) on evaluating population health interventions using natural experiments, in which variation in exposure to interventions is not determined by researchers. These include difference-in-differences (DiD) [10],[11], instrumental variables [12],[13], and propensity scores [13]-[15], which are intended to mitigate bias resulting from differences in observable or unobservable characteristics between intervention and control groups. Such methods have been used extensively by economists in observational studies to evaluate public policies that are typically not tested in randomised experiments [16].
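Of these techniques, DiD is the simplest to state. The Python sketch below uses invented BMI values for a hypothetical neighbourhood intervention (the function name, record layout and numbers are illustrative assumptions, not drawn from any study or from MRC guidance) and computes the change in mean outcome in the intervention group minus the change in the control group.

```python
from statistics import mean

def did_estimate(records):
    """Difference-in-differences from (treated, post, outcome) records.

    Returns (change in intervention group) - (change in control group).
    This is only unbiased under the parallel-trends assumption: without
    the intervention, both groups' mean outcomes would have changed equally.
    """
    cells = {}
    for treated, post, outcome in records:
        cells.setdefault((treated, post), []).append(outcome)
    m = {key: mean(values) for key, values in cells.items()}
    change_treated = m[(True, True)] - m[(True, False)]
    change_control = m[(False, True)] - m[(False, False)]
    return change_treated - change_control

# Hypothetical BMI records before/after a neighbourhood intervention.
records = [
    (True, False, 28.5), (True, False, 27.5),    # intervention area, before
    (True, True, 27.0), (True, True, 28.0),      # intervention area, after
    (False, False, 26.5), (False, False, 27.5),  # control area, before
    (False, True, 27.0), (False, True, 27.4),    # control area, after
]
effect = did_estimate(records)
print(round(effect, 2))  # -0.7
```

Because the control group's change is subtracted, any time-invariant difference between the two areas (here, the intervention area starting with a higher mean BMI) drops out; the estimate is still biased if the two groups would have followed different trends anyway.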

These techniques can reduce the risk of ‘allocation bias’ (also known as ‘residual confounding’ in epidemiology [17] and ‘endogeneity’ or ‘self-selection bias’ in economics), which may arise particularly in observational studies [18],[19] if people’s decisions about where they live are correlated with unmeasured individual-level characteristics (e.g. attitude towards physical activity) and with the outcome(s) of interest (e.g. obesity) [6]. Whilst randomised experiments are considered the ‘gold standard’ study design for estimating the effect of an intervention, since observed effect sizes can generally be attributed to the intervention rather than to unobserved differences between individuals, they are infrequently employed in public health research [20]-[22]. Particular barriers to their use in built environment research include ethical and political objections to the random assignment of participants to neighbourhoods, or to the random assignment of neighbourhoods to receipt of interventions, alongside the difficulty of blinding participants to their group allocation and limiting the potential for participants to visit neighbouring areas. The more advanced techniques described in MRC guidance may therefore provide a more realistic, if hitherto under-used, alternative approach.
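In a linear setting, this mechanism corresponds to the standard omitted-variable bias result. As a minimal sketch, assume (for illustration only; the symbols are not taken from the reviewed studies) that BMI depends linearly on neighbourhood walkability W and an unmeasured attitude A, with an error term uncorrelated with both. A single equation regression of BMI on W alone then converges to:

```latex
\mathrm{BMI}_i = \alpha + \beta W_i + \gamma A_i + \varepsilon_i ,
\qquad
\operatorname{plim} \hat{\beta}_{\text{single equation}}
  = \beta + \gamma \, \frac{\operatorname{Cov}(W_i, A_i)}{\operatorname{Var}(W_i)}
```

If a favourable attitude leads people to choose more walkable neighbourhoods (Cov(W, A) > 0) and also lowers BMI (γ < 0), the bias term is negative and a single equation analysis exaggerates a negative association; if the unmeasured characteristics act in the opposite direction, the bias reverses sign.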

The objectives of the present study were (1) to identify studies of the relationship between urban built environment characteristics and obesity that have used more advanced analytical techniques or study designs, and (2) to explore whether the choice of methodological approach critically affects the results obtained. For instance, do more advanced analytical techniques consistently show a weaker association between the built environment and obesity than single equation techniques - as would be expected if, for example, people of normal weight are more likely to choose to live in more walkable neighbourhoods? Should this be the case, then researchers and policymakers need to consider how evidence gathered from studies using different analytical techniques is appraised, compared and aggregated in evidence synthesis.

Methods

Search strategy

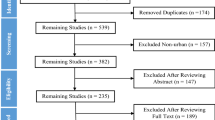

While recognising the difficulties of designing search filters based on built environment characteristics [23] or on study design labels and design features, which vary across disciplines [24], a purposive search strategy was devised to elicit studies that may support more robust causal inferences than cross-sectional, single equation approaches. To identify studies in addition to those included in the Feng review, a strategy was devised for the Ovid Medline (1950 to 2011) database that encompassed a broader range of built environment search terms (based on another review [25]) and included papers published after 2009. Grey literature searches began with Google Scholar (to March 2013). On identifying a number of relevant studies published by U.S. economists at the National Bureau of Economic Research (NBER), the search was subsequently extended to the online repository of the NBER Working Paper series (http://www.nber.org/papers) and, to ascertain whether similar studies had been published in Europe, the online repository for research papers published by the Centre for Health Economics, York, U.K. (http://www.york.ac.uk/che/publications/in-house/).

The search was completed in two stages. In Stage 1, the search was restricted to observational studies using the more advanced analytical techniques identified in MRC guidance [9] (Table 1, excluding cross-sectional studies using only single equation regression adjustment since these feature in existing reviews).

In Stage 2, study designs or methodological approaches were identified which may not necessarily require use of the particular advanced analytical techniques specified in MRC guidance but may, nonetheless, support more robust causal inference. Specifically, this encompassed: (1) randomised experiments, (2) structural equation models (SEMs) [26], a multivariate regression approach in which variables may influence one another reciprocally, either directly or through other variables as intermediaries, and (3) panel data studies that controlled for fixed effects. In fixed effects panel data studies - as in those using the DiD approach - only changes within individuals over time are analysed, so eliminating the risk of bias arising from time-invariant differences between individuals (including in potential confounding variables) [27]-[29]. Other cohort, longitudinal or repeated cross-sectional studies which could not account for unobserved differences between individuals were excluded.
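The within-individual logic of fixed effects can be illustrated with a small, noise-free panel constructed purely for illustration. In the Python sketch below, each hypothetical person has a fixed baseline BMI standing in for time-invariant unobservables, and people with lower baselines live in more walkable places. Pooling all observations mixes between-person and within-person variation, whereas subtracting each person's own means (the ‘within’ transformation) leaves only changes within individuals. The names `slope`, `within_transform` and the data are assumptions, not taken from any reviewed study.

```python
from statistics import mean

BETA = -0.5  # hypothetical true effect of one unit of walkability on BMI

# Hypothetical panel: person -> list of (walkability, BMI) over three waves.
# Each person's fixed baseline BMI stands in for time-invariant unobservables;
# people with lower baselines live in more walkable places (allocation bias).
baselines = {"A": 30.0, "B": 25.0, "C": 20.0}
walkability = {"A": [1, 2, 3], "B": [4, 5, 6], "C": [7, 8, 9]}
panel = {
    p: [(x, baselines[p] + BETA * x) for x in walkability[p]]
    for p in baselines
}

def slope(xs, ys):
    """Least-squares slope of ys on xs (regression with an intercept)."""
    mx, my = mean(xs), mean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    return sxy / sxx

def within_transform(panel):
    """Demean x and y within each person, discarding between-person differences."""
    xs, ys = [], []
    for obs in panel.values():
        px = [x for x, _ in obs]
        py = [y for _, y in obs]
        mx, my = mean(px), mean(py)
        xs += [x - mx for x in px]
        ys += [y - my for y in py]
    return xs, ys

pooled = slope([x for obs in panel.values() for x, _ in obs],
               [y for obs in panel.values() for _, y in obs])
fixed_effects = slope(*within_transform(panel))
print(pooled, fixed_effects)  # -2.0 -0.5
```

Pooling gives -2.0, four times the assumed effect of -0.5, because between-person differences in baseline BMI are attributed to walkability; the within estimate recovers -0.5 exactly only because the example has no noise. A person whose exposure never changes contributes nothing after demeaning, so if exposure were fixed for everyone (as when built environment data are collected at a single time point), `sxx` would be zero and `slope` would raise `ZeroDivisionError`.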

Analysis

Data were extracted from each of the identified studies relating to the methods, including characteristics of the study population, the dependent and independent variables, analytical technique(s) and study design(s) employed; and to the results, including parameter estimates for one or more methods of analysis, noting any mismatch between the results of analyses that used different approaches.

Results

Objective 1: Characteristics of included studies

Of eight studies identified in Stage 1 of the review, all used instrumental variables; of these, six were cross-sectional and two were repeated cross-sectional studies (Table 2). Zick and colleagues [35], for example, used individual-level cross-sectional data on 14,689 U.S. women, linked to a walkability measure incorporating characteristics relating to land-use diversity, population density and neighbourhood design. An instrumental variable was derived from those characteristics (e.g. church or school density) that were significantly associated with the walkability of the neighbourhood but, crucially, not with BMI. In five of the eight studies, proximity to major roads (which was not correlated with BMI) was similarly used as an exogenous source of variation in relevant independent variables (e.g. fast-food restaurant availability (4/8), which is greater near major roads because such outlets attract non-resident travellers). No studies identified in Stage 1 used the matching, propensity score, DiD or regression discontinuity design (RDD) analytical techniques.
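To illustrate how an instrument of this kind works, the Python sketch below simulates hypothetical data in which an unmeasured ‘attitude’ drives both walkability and BMI. All coefficients, the sample size and the seed are assumptions chosen for illustration, not values from any reviewed study. With one instrument and one exposure, the IV estimate reduces to the ratio Cov(Z, BMI) / Cov(Z, W), which coincides with two-stage least squares.

```python
import math
import random

def cov(a, b):
    """Sample covariance of two equal-length lists of floats."""
    ma, mb = math.fsum(a) / len(a), math.fsum(b) / len(b)
    return math.fsum((x - ma) * (y - mb) for x, y in zip(a, b)) / (len(a) - 1)

def simulate(n=50_000, true_effect=-0.5, seed=42):
    """Simulate hypothetical data in which an unobserved 'attitude' drives
    both where people live (walkability) and their BMI."""
    rng = random.Random(seed)
    z, walk, bmi = [], [], []
    for _ in range(n):
        attitude = rng.gauss(0, 1)    # unmeasured confounder
        instrument = rng.gauss(0, 1)  # shifts walkability; no direct path to BMI
        w = 0.8 * instrument + 0.8 * attitude + rng.gauss(0, 1)
        y = 27 + true_effect * w - 1.0 * attitude + rng.gauss(0, 1)
        z.append(instrument)
        walk.append(w)
        bmi.append(y)
    return z, walk, bmi

z, walk, bmi = simulate()
ols = cov(walk, bmi) / cov(walk, walk)  # single equation slope
iv = cov(z, bmi) / cov(z, walk)         # IV (Wald) estimate
print(f"single equation: {ols:.2f}  IV: {iv:.2f}  true: -0.50")
```

Here the single equation slope is pulled further from zero than the assumed effect of -0.5 (its probability limit is about -0.85), because attitude raises walkability and lowers BMI; reversing the sign of either attitude coefficient reverses the direction of the bias. The instrument is valid only because the simulation imposes that it has no direct path to BMI; with real data this exclusion restriction cannot be verified.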

Of six studies identified in Stage 2 (Table 3), two were randomised experiments. In one, the ‘Moving to Opportunity’ (MTO) study [37], families living in public housing in high-poverty areas of five U.S. cities were randomly assigned housing vouchers for private housing in lower-poverty neighbourhoods. Significant reductions in the likelihood of obesity were observed after five years amongst voucher recipients when compared to non-recipients. In the other study, the exposure (not administered by researchers) resulted from the random (and hence exogenous) allocation of first-year students to different university campus accommodation [38]. Three further studies identified in Stage 2 were fixed effects panel data analyses. Sandy and colleagues [42], for example, studied the impact of built environment changes in close proximity to individual households (derived from aerial photographs) on changes in the BMI of individual children over eight years. The sixth study was described as a structural equation modelling (SEM) study; using cross-sectional data, it modelled physical activity and obesity status using latent variables for the physical and social environments [39].

In the five observational studies that used data from multiple time periods (two in Stage 1 and three in Stage 2), although BMI data were collected in up to 25 different time periods, data on built environment characteristics were collected less frequently and in three cases were fixed at a single time point. This could reflect the relative difficulty of collecting historical built environment data [29],[43], which limits within-individual analysis to people who move location, rather than including those exposed to changes in the built environment around them.

Across both stages of the review, six studies (6/14, 43%) reported statistically significant relationships between built environment characteristics and obesity in the main analysis. Of these, four were instrumental variable studies identified in Stage 1 (statistically significant results were also reported for one of two obesity measures in one further study). Apart from the MTO study (for which the BMI results appeared only in the grey literature), all studies identified in the review were published after the Feng review had been completed in 2008, and all used data on U.S. participants. Nine studies (9/14) were published in sources that included “economic” or “economics” in their title.

Objective 2: Comparison of results using different methodological approaches

Within-study comparisons of results were possible in six of the eight instrumental variable studies identified in Stage 1 (Table 2). In two of these studies [32],[33], the results were statistically insignificant in both the instrumental variable and comparable single equation regression adjustment analyses. In four studies [31],[34]-[36], statistically significant results reported in the instrumental variable analysis, in the expected directions, were not replicated in comparable single equation analyses. This was also the case in subgroup analyses such as for females or non-white ethnic groups in the other two studies.

Similar differences were also observed in one of the three panel data studies identified in Stage 2 of the review (Table 3) [40], as well as in some subgroup analyses of the panel data study by Sandy and colleagues [42], in which statistically significant negative relationships between BMI and the density of fitness, kickball and volleyball facilities in the panel data analysis were statistically insignificant in the cross-sectional analysis.

These results suggest that use of cross-sectional, single equation analysis would have led to a lower estimate of the impact of built environment characteristics on obesity, whereas some authors had a prior hypothesis that these methods would have led to an overestimate of effect size arising from allocation bias. In contrast to an expectation that people of normal weight would prefer living in walkable neighbourhoods, for example, Zick and colleagues concluded that some neighbourhood features were positively associated with walkability and hence healthy living, but negatively related to other competing factors that people consider when choosing where to live, such as school quality, traffic levels and housing costs [35]. Similarly, although fast-food restaurants were expected to locate in areas with high demand [44], Dunn and colleagues suggested that a possible explanation for the statistically insignificant results identified in their instrumental variables study could be that these profit-maximizing firms operated in areas with low (not high) levels of obesity [32]. This may be because of higher average levels of education and income and lower levels of crime in those areas [33].

In contrast to the more common cases in which single equation, cross-sectional studies had relatively underestimated the impact of the built environment, in a small number of subgroup analyses of two of the panel data studies identified in Stage 2, statistically significant cross-sectional parameter estimates were not replicated in the panel data analysis (although in these two studies, the majority of parameter estimates were statistically insignificant regardless of the method of analysis) [41],[42].

A more unexpected result in the study by Sandy and colleagues was the statistically significant negative relationship identified between the number of fast-food restaurants and BMI in the panel data analysis, which contrasted with a statistically insignificant estimate in the cross-sectional analysis. The authors did not suggest that fast-food restaurants actually reduced BMI in children, but concluded that a recent moratorium on new outlets in the U.S. city of Los Angeles might be ineffective, perhaps because outlets are already so commonplace that children can access fast food regardless of whether a restaurant is present in their immediate neighbourhood [42].

All remaining studies produced results that were in line with expectations. Furthermore, no studies were identified in which the application of at least two methods led to contradictory results (e.g. one estimate showing a positive and the other showing a negative impact).

In two of the instrumental variable studies identified in Stage 1 (2/8) [3],[30], and in the randomised experimental and SEM studies identified in Stage 2 (3/6), results were not reported for any comparable alternative analyses.

Discussion

Objective 1: Use of more advanced methods

Despite increasing use of randomised experiments in policy areas where they are not normally expected [22],[45]-[47], just two randomised experiments were identified in the review [37],[38]. While RCTs ought not to be overlooked as an evaluation option [48],[49], the problem of “empty” systematic reviews would arise if non-randomised observational studies were excluded from evidence synthesis processes [50]. Scarce resources might then be diverted towards small-scale individual-level interventions [51], simply because RCTs of such interventions are more common, at the expense of large-scale population-level interventions, regardless of their relative cost-effectiveness [52].

The twelve identified non-randomised studies that used more advanced methodological approaches were all published during the past five years and, given that the Feng review identified 63 studies, already represent a sizeable contribution to the existing literature on the relationship between urban built environment characteristics and obesity. This indicates that, in the absence of evidence from RCTs, observational studies that employ the more advanced analytical methods are feasible and increasingly employed. In addition to their greater potential to support causal inference when compared to cross-sectional, single equation analyses, these observational studies may sometimes also provide more credible results than randomised experiments [53]-[57]. For example, large-scale, individual-level, retrospective data sets (e.g. the U.S. National Longitudinal Surveys (NLSY) and Behavioral Risk Factor Surveillance System (BRFSS), used in five studies) can potentially eliminate threats to internal validity that are likely to arise in public health intervention studies in which, unlike in placebo-controlled clinical trials, participants cannot be blinded to their group allocation. Lack of blinding can affect researchers’ treatment of participants [57] as well as participants’ behaviour and attrition rates. One-quarter of New York MTO participants were lost during follow-up, for example, although the impact on results was unclear [58]. Further, in terms of external validity, larger sample sizes (e.g. Courtemanche and Carden’s study included 1.64 million observations [36]), longer follow-up periods, a wider range of variables relating to individual-level characteristics and the possibility of linking individuals to spatially referenced exposure variables identified in other datasets can support robust analysis of large, population-level interventions or risk factors, as well as smaller population-subgroup analyses [9]. In one such study, for example, statistically significant effect sizes were observed only amongst ethnic minorities [33]. These analyses are typically infeasible in randomised experiments due to unrepresentative samples, high attrition rates, high costs or limited sample sizes. In Kapinos and Yakusheva’s study [38], for example, 386 students living in car-free campus accommodation, which was unrepresentative of external neighbourhoods, were followed up for just one year. Given an apparent mismatch in the schedules of experimental researchers and policy-makers [59], retrospective datasets can also support more rapid analyses and avoid the lengthy ethical approval processes associated with RCTs [45]. Nevertheless, all the identified studies featured U.S. participants (compared to 83% of the studies identified in the Feng review), which might be indicative of a scarcity of suitable datasets elsewhere, particularly in low- or middle-income countries [8].

Despite the apparent increased use of more advanced methodological approaches, not all the techniques recommended by the MRC for use in natural experimental studies featured in the identified studies. The absence of any study using the RDD or DiD approaches may be explained partly by a lack of suitable data and their relative inapplicability to built environment research, since policy interventions - particularly those involving the clear eligibility cut-offs that are required in RDD - may be relatively scarce. Further, most of the identified studies were published in economics journals, whereas none of the studies identified in the Feng review came from such sources. This could indicate that these techniques are relatively infrequently used by public health researchers, or unfamiliar to peer reviewers who are not economists [60]. However, in the case of propensity scores and matching, where the data requirements are similar to those of single equation techniques, some of their relative advantages over methods that control only for observable characteristics are not always acknowledged in existing guidelines [9]. First, they overcome the problem of wrongly specified functional forms, a recognised issue in built environment research [61]. Second, assuming that they are correctly applied [15], these techniques limit the potential for non-comparable individuals to be included in the treatment and control groups [14],[62],[63] (problems related to their inappropriate use are highlighted in the next section). This so-called lack of ‘common support’ could be problematic if, for example, the most walkable neighbourhoods were home to individuals with levels of observed characteristics (e.g. higher income and education levels) that do not feature at all amongst the population of the least walkable neighbourhoods [14].
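The common support problem can be sketched with a single discrete covariate. In the hypothetical Python example below, the propensity score for living in a highly walkable neighbourhood is simply the share of treated units within each income band (exact when that band is the only covariate), and units are kept only if their score lies inside the range observed in both the treated and control groups. This min/max rule is one common trimming rule among several; the bands, counts and function names are invented for illustration.

```python
from collections import defaultdict

def propensity_by_cell(units):
    """Share treated within each covariate cell: an exact propensity score
    when the only covariate is a discrete band."""
    counts = defaultdict(lambda: [0, 0])  # cell -> [treated, total]
    for cell, treated in units:
        counts[cell][0] += treated
        counts[cell][1] += 1
    return {cell: t / n for cell, (t, n) in counts.items()}

def on_common_support(units):
    """Keep units whose score lies within the overlap of the treated
    and control score ranges (min/max trimming rule)."""
    ps = propensity_by_cell(units)
    treated_ps = [ps[c] for c, t in units if t]
    control_ps = [ps[c] for c, t in units if not t]
    lo = max(min(treated_ps), min(control_ps))
    hi = min(max(treated_ps), max(control_ps))
    return [(c, t) for c, t in units if lo <= ps[c] <= hi]

# Hypothetical units: (income band, 1 if living in a highly walkable area).
units = ([("low", 0)] * 3 + [("mid", 1)] * 2 +
         [("mid", 0)] * 2 + [("high", 1)] * 3)
kept = on_common_support(units)
print(propensity_by_cell(units))  # {'low': 0.0, 'mid': 0.5, 'high': 1.0}
print(len(kept))                  # 4
```

All high-income units are treated (score 1.0) and all low-income units are controls (score 0.0), so neither band has comparable counterparts and only the middle band remains; a single equation regression would instead extrapolate across those bands through its assumed functional form.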

The review also revealed use of ambiguous or confusing study design labels - a recognised issue [24],[64], owing perhaps to the relative novelty of natural experimental approaches. For example, ‘natural experiments’ are sometimes defined in broad terms as studies ‘in which subsets of the population have different levels of exposure to a supposed causal factor’ [65],[66], or more narrowly, as studies in which ‘random or “as if” random assignment to treatment and control conditions constitutes the defining feature’ [9],[67]. Of the two identified studies that used “natural experiment” in their titles, the study by Sandy and colleagues constitutes a natural experiment only under the former definition [42]; the other, by Kapinos and Yakusheva, is better described by the latter [38]. Yet these are not intervention studies and may therefore lie outside the scope of the natural experimental studies described in MRC guidance, despite their having exploited variation that was outside the researchers’ control.

Established definitions of other terms, including fixed effects [68], quasi-experiments [6],[64], DiD and SEM, may also vary between disciplines. In the present review, Franzini and colleagues used SEM to describe an observational study that used latent variables for the physical environment based on various built environment indicators [39], while Zick and colleagues [35], in common with other examples [69],[70], used the term more broadly to encompass other multiple-equation analytical techniques, including instrumental variables. Elsewhere, the term SEM is used to describe a more specific research area which is distinct from the so-called ‘policy evaluation’ (or ‘reduced form’), multiple-equation methods that are the primary focus of the present paper [71],[72]. Rather than evaluating specific interventions or policy changes and striving to develop techniques that mimic the RCT study design, structural models can be cumulative, incorporating existing theories and past evidence to simulate an array of potential built environment changes [73]-[75] and may therefore offer one promising but hitherto unexplored area for developing a better understanding of causal mechanisms and pathways in this field.

Objective 2: Comparing effect sizes arising from different analytical approaches and implications for future primary research and guidance for evidence synthesis

Significant differences are - with some exceptions [76] - generally observed between the results of observational studies and randomised experiments [77]-[81]. However, comparisons of the results of observational studies that used different analytical techniques are uncommon. One unique series of studies in which different analytical techniques were used to evaluate the U.S. National Supported Work Demonstration programme, a 1970s job guarantee scheme for disadvantaged workers, is particularly insightful because statistically significant differences in effect sizes were observed when regression-adjustment, propensity score matching [82],[83] and DiD [84] methods were used in analyses of comparable data arising from the same RCT [16],[85].

One main finding of our review, that statistically significant relationships between features of the built environment and obesity were less likely when weaker, cross-sectional, single equation analyses were used, was unexpected, given the hypotheses of some authors (see Results section). Although this finding was based on a small number of within-study comparisons of results, it corresponds with a similar review of studies by McCormack and colleagues of the relationship between the built environment and physical activity which concluded that observed associations likely exist independent of residential location choices, an important contributor to allocation bias (although these studies focused primarily on using survey questions to elicit information about neighbourhood preferences and satisfaction, an approach that is associated with other sources of bias) [6]. A second main finding of our review was that 43% of identified studies reported statistically significant results in the main analysis, and that all statistically significant results were in directions that would be expected (except in one subgroup analysis). Although the estimated effect sizes were often still modest, a number of authors emphasised the potential of neighbourhood-level built environment interventions to influence the weight of large numbers of people [35]. Together with the Feng review which identified statistically significant effects in 48 of 63 studies (76%), these two main findings suggest that current interest in altering the design of urban built environments, amongst research and policymaking communities alike, seems warranted. Nevertheless, as in the two reviews by Feng and McCormack, the great heterogeneity in the range of built environment characteristics investigated limits the inferences that can be made about the specific changes to the built environment that are most likely to be cost-effective.

The finding that the use of different methods can make a difference to results suggests that, used appropriately, these more advanced methods should be considered as more robust approaches for establishing effect estimates of potentially causal associations between built environment characteristics and health-related outcomes. It also supports the case for improved tools to distinguish between studies in policy areas, including public health, criminology, education, the labour market and international development, where observational study designs are the norm [24],[86]-[90]. Existing evidence synthesis guidelines, including MOOSE [91] and GRADE [92] used in health research and the Maryland Scale of Scientific Methods [93] which was developed by criminologists and forms the basis of recent guidance for U.K. Government departments [81],[94],[95], are not typically sensitive to potentially important sources of bias, including allocation bias, which may arise [78],[90],[96],[97]. Meanwhile, more established tools, such as those developed by the Centre for Reviews and Dissemination [98], the Cochrane Collaboration [99] and PRISMA [100], focus solely on biases likely to be present in randomised intervention studies, including allocation concealment and attrition bias [99].

Nevertheless, enhancing these guidelines so that they are more sensitive to differences between observational study designs would be challenging. First, unlike the common distinction between RCT and non-RCT intervention research, it is not generally possible to state that any analytical technique is universally preferable to another in all observational settings [84]. Rather, a researcher’s choice of technique should be based on pragmatic and subjective judgements dependent on the data available and the study context. In many cases, none of the advanced analytical techniques would be suitable, and rarely would they be interchangeable. Second, each analytical technique has distinct features which must be borne in mind when interpreting results. For example, instrumental variable analyses rely on subjective, unverifiable judgements about the quality of the instrument [74],[101]-[104], and are therefore liable to be used inappropriately [60]. Reviewers of instrumental variable analyses must also consider the population subsample that has been used in the analysis [105],[106] and, in propensity score analyses, the characteristics of participants for whom there is common support [15],[107]. Sometimes this detail is overlooked or left unreported by study authors [15]. Hence reviewers or policymakers may conclude that the results of comparable cross-sectional, single equation studies provide a more reliable guide, despite the associated risk of allocation bias. Reporting guidelines designed for authors of observational studies (e.g. STROBE [108],[109]) could be better developed [77], both to alleviate inadequacies in the reporting of results and to encourage authors to report the results of a comparable single equation or cross-sectional analysis. Third, other important sources of bias may be overlooked if an assessment of study quality were based solely on the chosen analytical technique. Evident in the present paper, for example, were the use of self-reported rather than objectively measured BMI outcomes [4] and perceived rather than objectively measured characteristics of the built environment [110], differences in the strength of temporal evidence in longitudinal studies (i.e. whether a change in environmental characteristics actually preceded a change in obesity), varying attempts to control for residential self-selection using self-reported attitudes [6], and a trade-off between the use of large pre-existing administrative boundaries (e.g. the study by Powell and colleagues of adolescent BMI [41]) and more sophisticated approaches based on georeferenced micro-data (e.g. the study by Chen and colleagues [31]) (Tables 2 and 3). While the latter can provide a detailed description of each individual’s immediate living environment, bias could arise if individuals engaged in dietary or physical activity behaviours outside their immediate area [111].

Conclusion

Use of more advanced methods of analysis does not appear necessarily to undermine the observed strength of association between urban built environment characteristics and obesity when compared to more commonly-used cross-sectional, single equation analyses. Although differences were observed in the results of analyses that used different techniques, studies using these techniques cannot easily be ‘quality’-ranked against each other. Further research is required to guide the refinement of methods for evidence synthesis in this area.

References

Sassi F, Devaux M, Cecchini M, Rusticelli E: The Obesity Epidemic: Analysis of Past and Projected Future Trends in Selected OECD Countries. 2009, OECD, Paris

Finucane MM, Stevens GA, Cowan MJ, Danaei G, Lin JK, Paciorek CJ, Singh GM, Gutierrez HR, Lu Y, Bahalim AN, Farzadfar F, Riley LM, Ezzati M: National, regional, and global trends in body-mass index since 1980: systematic analysis of health examination surveys and epidemiological studies with 960 country-years and 9.1 million participants. Lancet. 2011, 377: 557-567. 10.1016/S0140-6736(10)62037-5.

Zhao Z, Kaestner R: Effects of urban sprawl on obesity. J Health Econ. 2010, 29: 779-787. 10.1016/j.jhealeco.2010.07.006.

Feng J, Glass TA, Curriero FC, Stewart WF, Schwartz BS: The built environment and obesity: a systematic review of the epidemiologic evidence. Health Place. 2010, 16: 175-190. 10.1016/j.healthplace.2009.09.008.

Ding D, Gebel K: Built environment, physical activity, and obesity: what have we learned from reviewing the literature?. Health Place. 2012, 18: 100-105. 10.1016/j.healthplace.2011.08.021.

McCormack G, Shiell A: In search of causality: a systematic review of the relationship between the built environment and physical activity among adults. Int J Behav Nutr Phys Act. 2011, 8: 125. 10.1186/1479-5868-8-125.

Bauman AE, Sallis JF, Dzewaltowski DA, Owen N: Toward a better understanding of the influences on physical activity: the role of determinants, correlates, causal variables, mediators, moderators, and confounders. Am J Prev Med. 2002, 23: 5-14. 10.1016/S0749-3797(02)00469-5.

Bauman AE, Reis RS, Sallis JF, Wells JC, Loos RJF, Martin BW: Correlates of physical activity: why are some people physically active and others not?. Lancet. 2012, 380: 258-271. 10.1016/S0140-6736(12)60735-1.

Craig P, Cooper C, Gunnell D, Haw S, Lawson K, Macintyre S, Ogilvie D, Petticrew M, Reeves B, Sutton M, Thompson S: Using Natural Experiments to Evaluate Population Health Interventions: Guidance for Producers and Users of Evidence. 2011, Medical Research Council, London

Qin J, Zhang B: Empirical-likelihood-based difference-in-differences estimators. J R Stat Soc Ser B Stat Methodol. 2008, 70: 329-349. 10.1111/j.1467-9868.2007.00638.x.

Grafova IB, Freedman VA, Lurie N, Kumar R, Rogowski J: The difference-in-difference method: assessing the selection bias in the effects of neighborhood environment on health. Econ Hum Biol. 2014, 13: 20-33.

Angrist JD, Imbens GW, Rubin DB: Identification of causal effects using instrumental variables. J Am Stat Assoc. 1996, 91: 444-455. 10.1080/01621459.1996.10476902.

Cousens S, Hargreaves J, Bonell C, Armstrong B, Thomas J, Kirkwood BR, Hayes R: Alternatives to randomisation in the evaluation of public-health interventions: statistical analysis and causal inference. J Epidemiol Community Health. 2011, 65: 576-581. 10.1136/jech.2008.082610.

Cao X: Exploring causal effects of neighborhood type on walking behavior using stratification on the propensity score. Environ Plann A. 2010, 42: 487-504. 10.1068/a4269.

Austin PC: A critical appraisal of propensity-score matching in the medical literature between 1996 and 2003. Stat Med. 2008, 27: 2037-2049. 10.1002/sim.3150.

Blundell R, Costa Dias M: Alternative Approaches to Evaluation in Empirical Microeconometrics. 2002, Centre for Microdata Methods and Practice, UCL, London

Guyatt GH, Oxman AD, Sultan S, Glasziou P, Akl EA, Alonso-Coello P, Atkins D, Kunz R, Brozek J, Montori V, Jaeschke R, Rind D, Dahm P, Meerpohl J, Vist G, Berliner E, Norris S, Falck-Ytter Y, Murad MH, Schünemann HJ: GRADE guidelines: 9: rating up the quality of evidence. J Clin Epidemiol. 2011, 64: 1311-1316. 10.1016/j.jclinepi.2011.06.004.

Mokhtarian PL, Cao X: Examining the impacts of residential self-selection on travel behavior: a focus on methodologies. Transp Res B Methodol. 2008, 42: 204-228. 10.1016/j.trb.2007.07.006.

Jones AM, Rice N: Econometric evaluation of health policies. The Oxford Handbook of Health Economics. Edited by: Glied S, Smith PC. 2011, Oxford University Press, Oxford

Petticrew M, Cummins S, Ferrell C, Findlay A, Higgins C, Hoy C, Kearns A, Sparks L: Natural experiments: an underused tool for public health?. Public Health. 2005, 119: 751-757. 10.1016/j.puhe.2004.11.008.

Jones A: Evaluating Public Health Interventions with Non-Experimental Data Analysis. 2006, University of York Health Econometrics and Data Group, York

Weatherly H, Drummond M, Claxton K, Cookson R, Ferguson B, Godfrey C, Rice N, Sculpher M, Sowden A: Methods for assessing the cost-effectiveness of public health interventions: key challenges and recommendations. Health Policy. 2009, 93: 85-92. 10.1016/j.healthpol.2009.07.012.

Gebel K, Bauman AE, Petticrew M: The physical environment and physical activity: a critical appraisal of review articles. Am J Prev Med. 2007, 32: 361-369. 10.1016/j.amepre.2007.01.020.

Higgins JPT, Ramsay C, Reeves BC, Deeks JJ, Shea B, Valentine JC, Tugwell P, Wells G: Issues relating to study design and risk of bias when including non-randomized studies in systematic reviews on the effects of interventions. Res Synthesis Methods. 2013, 4: 12-25. 10.1002/jrsm.1056.

Promoting and Creating Built or Natural Environments that Encourage and Support Physical Activity. 2008, National Institute for Health and Care Excellence, London

Kline RB: Principles and Practice of Structural Equation Modeling. 2011, The Guilford Press, New York, USA

Baltagi B: Econometric Analysis of Panel Data. 2008, Wiley, London

Martin A, Goryakin Y, Suhrcke M: Does active commuting improve psychological wellbeing? Longitudinal evidence from eighteen waves of the British Household Panel Survey. Prev Med. 2014, In Press

White MP, Alcock I, Wheeler BW, Depledge MH: Would you be happier living in a greener urban area? A fixed-effects analysis of panel data. Psychol Sci. 2013, 24 (6): 920-928. 10.1177/0956797612464659.

Anderson ML, Matsa DA: Are restaurants really supersizing America?. Am Econ J: Appl Econ. 2011, 3: 152-188.

Chen SE, Florax RJ, Snyder SD: Obesity and fast food in urban markets: a new approach using geo-referenced micro data. Health Econ. 2012, 22: 835-856.

Dunn RA: The effect of fast-food availability on obesity: an analysis by gender, race, and residential location. Am J Agric Econ. 2010, 92: 1149-1164. 10.1093/ajae/aaq041.

Dunn RA, Sharkey JR, Horel S: The effect of fast-food availability on fast-food consumption and obesity among rural residents: an analysis by race/ethnicity. Econ Hum Biol. 2012, 10: 1-13.

Fish JS, Ettner S, Ang A, Brown AF: Association of perceived neighborhood safety on body mass index. Am J Public Health. 2010, 100: 2296-2303. 10.2105/AJPH.2009.183293.

Zick C, Hanson H, Fan J, Smith K, Kowaleski-Jones L, Brown B, Yamada I: Re-visiting the relationship between neighbourhood environment and BMI: an instrumental variables approach to correcting for residential selection bias. Int J Behav Nutr Phys Act. 2013, 10: 27. 10.1186/1479-5868-10-27.

Courtemanche C, Carden A: Supersizing supercenters? The impact of Walmart Supercenters on body mass index and obesity. J Urban Econ. 2011, 69: 165-181. 10.1016/j.jue.2010.09.005.

Kling JR, Liebman JB, Katz LF, Sanbonmatsu L: Moving to Opportunity and Tranquility: Neighborhood Effects on Adult Economic Self-Sufficiency and Health from a Randomized Housing Voucher Experiment. 2004, National Bureau of Economic Research, Cambridge MA, USA

Kapinos KA, Yakusheva O: Environmental influences on young adult weight gain: evidence from a natural experiment. J Adolesc Health. 2011, 48: 52-58. 10.1016/j.jadohealth.2010.05.021.

Franzini L, Elliott MN, Cuccaro P, Schuster M, Gilliland MJ, Grunbaum JA, Franklin F, Tortolero SR: Influences of physical and social neighborhood environments on children's physical activity and obesity. Am J Public Health. 2009, 99: 271-278. 10.2105/AJPH.2007.128702.

Gibson DM: The neighborhood food environment and adult weight status: estimates from longitudinal data. Am J Public Health. 2011, 101: 71-78. 10.2105/AJPH.2009.187567.

Powell LM: Fast food costs and adolescent body mass index: evidence from panel data. J Health Econ. 2009, 28: 963-970. 10.1016/j.jhealeco.2009.06.009.

Sandy R, Liu G, Ottensmann J, Tchernis R, Wilson J, Ford OT: Studying the Child Obesity Epidemic with Natural Experiments. 2009, National Bureau of Economic Research, Cambridge MA, USA

Filomena S, Scanlin K, Morland KB: Brooklyn, New York foodscape 2007-2011: a five-year analysis of stability in food retail environments. Int J Behav Nutr Phys Act. 2013, 10: 46. 10.1186/1479-5868-10-46.

Chou S-Y, Grossman M, Saffer H: An economic analysis of adult obesity: results from the behavioral risk factor surveillance system. J Health Econ. 2004, 23: 565-587. 10.1016/j.jhealeco.2003.10.003.

White H: An Introduction to the use of Randomized Control Trials to Evaluate Development Interventions. 2011, The International Initiative for Impact Evaluation (3ie), Washington DC, USA

Haynes L, Service O, Goldacre B, Torgerson D: Test, Learn, Adapt: Developing Public Policy with Randomised Controlled Trials. 2012, Cabinet Office, London

Goldacre B: Building Evidence into Education. 2013, Department for Education, London

Macintyre S: Evidence based policy making. BMJ. 2003, 326: 5-6. 10.1136/bmj.326.7379.5.

Macintyre S: Good intentions and received wisdom are not good enough: the need for controlled trials in public health. J Epidemiol Community Health. 2011, 65: 564-567. 10.1136/jech.2010.124198.

Waddington H, White H, Snilstveit B, Hombrados JG, Vojtkova M, Davies P, Bhavsar A, Eyers J, Koehlmoos TP, Petticrew M, Valentine JC, Tugwell P: How to do a good systematic review of effects in international development: a tool kit. J Dev Effectiveness. 2012, 4: 359-387. 10.1080/19439342.2012.711765.

Martin A, Suhrcke M, Ogilvie D: Financial incentives to promote active travel: an evidence review and economic framework. Am J Prev Med. 2012, 43: e45-e57. 10.1016/j.amepre.2012.09.001.

Ogilvie D, Egan M, Hamilton V, Petticrew M: Systematic reviews of health effects of social interventions: 2. Best available evidence: how low should you go?. J Epidemiol Community Health. 2005, 59: 886-892. 10.1136/jech.2005.034199.

Jadad AR, Enkin MW: Bias in randomized controlled trials. Randomized Controlled Trials. 2008, Blackwell Publishing Ltd, London

Barrett CB, Carter MR: The power and pitfalls of experiments in development economics: some non-random reflections. Appl Econ Perspect Policy. 2010, 32: 515-548. 10.1093/aepp/ppq023.

Petticrew M: Commentary: sinners, preachers and natural experiments. Int J Epidemiol. 2011, 40: 454-456. 10.1093/ije/dyr023.

Rodrik D: The New Development Economics: We Shall Experiment, but How Shall We Learn?. 2008, John F. Kennedy School of Government, Cambridge MA, USA

Banerjee AV, Duflo E: The Experimental Approach to Development Economics. 2008, National Bureau of Economic Research, Cambridge MA, USA

Leventhal T, Brooks-Gunn J: Moving to opportunity: an experimental study of neighborhood effects on mental health. Am J Public Health. 2003, 93: 1576-1582. 10.2105/AJPH.93.9.1576.

Rutter J: Evidence and Evaluation in Policy Making: a Problem of Supply or Demand?. 2012, Institute for Government, London

Martens EP, Pestman WR, de Boer A, Belitser SV, Klungel OH: Instrumental variables: application and limitations. Epidemiology. 2006, 17: 260-267. 10.1097/01.ede.0000215160.88317.cb.

Bodea TD, Garrow LA, Meyer MD, Ross CL: Explaining obesity with urban form: a cautionary tale. Transportation. 2008, 35: 179-199. 10.1007/s11116-007-9148-2.

Heckman J, Ichimura H, Smith J, Todd P: Characterizing Selection Bias using Experimental Data. 1998, National Bureau of Economic Research, Cambridge MA, USA

Caliendo M, Kopeinig S: Some practical guidance for the implementation of propensity score matching. J Econ Surv. 2008, 22: 31-72. 10.1111/j.1467-6419.2007.00527.x.

DiNardo J: Natural experiments and quasi-natural experiments. The New Palgrave Dictionary of Economics. Edited by: Durlauf SN, Blume LE. 2008, Palgrave Macmillan, Basingstoke

Diamond JM, Robinson JA: Natural Experiments of History. 2010, Belknap, Cambridge MA, USA

Last JM: A Dictionary of Epidemiology. 2000, Oxford University Press, Oxford

Dunning T: Improving causal inference: strengths and limitations of natural experiments. Pol Res Q. 2008, 61: 282-293. 10.1177/1065912907306470.

Gelman A: Analysis of variance-why it is more important than ever. Ann Stat. 2005, 33: 1-53. 10.1214/009053604000001048.

Bagley MN, Mokhtarian PL: The impact of residential neighborhood type on travel behavior: a structural equations modeling approach. Ann Reg Sci. 2002, 36: 279-297. 10.1007/s001680200083.

Boone-Heinonen J, Gordon-Larsen P, Guilkey DK, Jacobs DR, Popkin BM: Environment and physical activity dynamics: the role of residential self-selection. Psychol Sport Exercise. 2011, 12: 54-60. 10.1016/j.psychsport.2009.09.003.

Heckman J: Building bridges between structural and program evaluation approaches to evaluating policy. J Econ Lit. 2010, 48: 356-398. 10.1257/jel.48.2.356.

Heckman J, Vytlacil E: Structural equations, treatment effects, and econometric policy evaluation. Econometrica. 2005, 73: 669-738. 10.1111/j.1468-0262.2005.00594.x.

Angrist JD, Pischke JS: The credibility revolution in empirical economics: how better research design is taking the con out of econometrics. J Econ Perspect. 2010, 24: 3-30.

Deaton A: Instruments, randomization, and learning about development. J Econ Lit. 2010, 48: 424-455. 10.1257/jel.48.2.424.

Levine BJ: A question too complex for statistical modeling. Am J Public Health. 2011, 101: 773-773.

Card D, Kluve J, Weber A: Active labour market policy evaluations: a meta-analysis. Econ J. 2010, 120: F452-F477. 10.1111/j.1468-0297.2010.02387.x.

Reeves B, Deeks J, Higgins J, Wells G: Including non-randomized studies. Cochrane Handbook for Systematic Reviews of Interventions. Edited by: Higgins J, Green S. 2011, John Wiley and Sons, Chichester, UK

Deeks J, Dinnes J, D'Amico R, Sowden A, Sakarovitch C, Song F, Petticrew M, Altman D: Evaluating non-randomised intervention studies. Health Technol Assess. 2003, 7 (27): 1-173.

Odgaard-Jensen J, Vist G, Timmer A, Kunz R, Akl E, Schünemann H, Briel M, Nordmann A, Pregno S, Oxman A: Randomisation to protect against selection bias in healthcare trials. Cochrane Database Syst Rev. 2011, 13 (4): Art. No.: MR000012. 10.1002/14651858.MR000012.pub3.

Glazerman S, Levy DM, Myers D: Nonexperimental versus experimental estimates of earnings impacts. Ann Am Acad Pol Soc Sci. 2003, 589: 63-93. 10.1177/0002716203254879.

Athanasopoulou A, Bradburn P, Hodgson H, Jennings A, Williams T, Kell M: Evaluation in Government. 2013, National Audit Office, London

Dehejia RH, Wahba S: Causal effects in nonexperimental studies: reevaluating the evaluation of training programs. J Am Stat Assoc. 1999, 94: 1053-1062. 10.1080/01621459.1999.10473858.

Dehejia RH, Wahba S: Propensity score-matching methods for nonexperimental causal studies. Rev Econ Stat. 2002, 84: 151-161. 10.1162/003465302317331982.

Smith JA, Todd PE: Does matching overcome LaLonde's critique of nonexperimental estimators?. J Econ. 2005, 125: 305-353. 10.1016/j.jeconom.2004.04.011.

LaLonde RJ: Evaluating the econometric evaluations of training programs with experimental data. Am Econ Rev. 1986, 76: 604-620.

Couch KA, Bifulco R: Can nonexperimental estimates replicate estimates based on random assignment in evaluations of school choice? a within-study comparison. J Policy Anal Manag. 2012, 31: 729-751. 10.1002/pam.20637.

Duvendack M, Hombrados JG, Palmer-Jones R, Waddington H: Assessing ‘what works’ in international development: meta-analysis for sophisticated dummies. J Dev Effectiveness. 2012, 4: 456-471. 10.1080/19439342.2012.710642.

Murray J, Farrington D, Eisner M: Drawing conclusions about causes from systematic reviews of risk factors: the Cambridge quality checklists. J Exp Criminol. 2009, 5: 1-23. 10.1007/s11292-008-9066-0.

Stang A: Critical evaluation of the Newcastle-Ottawa scale for the assessment of the quality of nonrandomized studies in meta-analyses. Eur J Epidemiol. 2010, 25: 603-605. 10.1007/s10654-010-9491-z.

Shemilt I, Mugford M, Vale L, Marsh K, Donaldson C, Drummond M: Evidence synthesis, economics and public policy. Res Synthesis Methods. 2010, 1: 126-135. 10.1002/jrsm.14.

Stroup DF, Berlin JA, Morton SC, Olkin I, Williamson GD, Rennie D, Moher D, Becker BJ, Sipe TA, Thacker SB: Meta-analysis of observational studies in epidemiology. J Am Med Assoc. 2000, 283: 2008-2012. 10.1001/jama.283.15.2008.

Guyatt GH, Oxman AD, Vist GE, Kunz R, Falck-Ytter Y, Alonso-Coello P, Schünemann HJ: GRADE: an emerging consensus on rating quality of evidence and strength of recommendations. BMJ. 2008, 336: 924-926. 10.1136/bmj.39489.470347.AD.

Sherman LW, Gottfredson D, MacKenzie D, Eck J, Reuter P, Bushway S: Preventing Crime: What Works, What Doesn't, What's Promising. 1997, Department of Justice, Washington DC, USA

Rapid Evidence Assessment Toolkit. 2013, ?, London

Campbell S, Harper G: Quality in Policy Impact Evaluation: Understanding the effects of Policy from other Influences (supplementary Magenta Book guidance). 2012, HM Treasury, London

Sanderson S, Tatt ID, Higgins JPT: Tools for assessing quality and susceptibility to bias in observational studies in epidemiology: a systematic review and annotated bibliography. Int J Epidemiol. 2007, 36 (3): 666-676. 10.1093/ije/dym018.

Thomas BH, Ciliska D, Dobbins M, Micucci S: A process for systematically reviewing the literature: providing the research evidence for public health nursing interventions. Worldviews Evid-Based Nurs. 2004, 1: 176-184. 10.1111/j.1524-475X.2004.04006.x.

Chapter 5: systematic reviews of economic evaluations. Systematic Reviews: CRD's Guidance for Undertaking Reviews in Health Care. 2009, University of York, York, 199-219.

Higgins J, Green S: Chapter 8: assessing risk of bias in included studies. Cochrane Handbook for Systematic Reviews of Interventions. 2011, The Cochrane Collaboration, London

Moher D, Liberati A, Tetzlaff J, Altman DG: Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. BMJ. 2009, 339: b2535. 10.1136/bmj.b2535.

Heckman J: Instrumental variables: a study of implicit behavioral assumptions used in making program evaluations. J Hum Resour. 1997, 32 (3): 441-462.

Imbens GW, Angrist JD: Identification and estimation of local average treatment effects. Econometrica. 1994, 62: 467-475. 10.2307/2951620.

Ichino A, Winter-Ebmer R: Lower and upper bounds of returns to schooling: an exercise in IV estimation with different instruments. Eur Econ Rev. 1999, 43: 889-901. 10.1016/S0014-2921(98)00102-0.

Heckman JJ, Urzua S: Comparing IV with structural models: what simple IV can and cannot identify. J Econ. 2010, 156: 27-37. 10.1016/j.jeconom.2009.09.006.

Newhouse JP, McClellan M: Econometrics in outcomes research: the use of Instrumental Variables. Annu Rev Public Health. 1998, 19: 17-34. 10.1146/annurev.publhealth.19.1.17.

Angrist J, Krueger AB: Instrumental Variables and the Search for Identification: From Supply and Demand to Natural Experiments. 2001, National Bureau of Economic Research, Cambridge MA, USA

Williamson E, Morley R, Lucas A, Carpenter J: Propensity scores: from naïve enthusiasm to intuitive understanding. Stat Methods Med Res. 2012, 21: 273-293. 10.1177/0962280210394483.

Von Elm E, Altman DG, Egger M, Pocock SJ, Gøtzsche PC, Vandenbroucke JP: The Strengthening the Reporting of Observational Studies in Epidemiology (STROBE) statement: guidelines for reporting observational studies. Lancet. 2007, 370: 1453-1457. 10.1016/S0140-6736(07)61602-X.

Boutron I, Moher D, Tugwell P, Giraudeau B, Poiraudeau S, Nizard R, Ravaud P: A checklist to evaluate a report of a nonpharmacological trial (CLEAR NPT) was developed using consensus. J Clin Epidemiol. 2005, 58: 1233-1240. 10.1016/j.jclinepi.2005.05.004.

Jones A, Bentham G, Foster C, Hillsdon M, Panter J: Tackling Obesities: Future Choices - Obesogenic Environments - Evidence Review. 2007, Government Office for Science, London

Fotheringham AS, Wong DW: The modifiable areal unit problem in multivariate statistical analysis. Environ Plann A. 1991, 23: 1025-1044. 10.1068/a231025.

Acknowledgements

The work was undertaken by the Centre for Diet and Activity Research (CEDAR), a UKCRC Public Health Research Centre of Excellence. Funding from the British Heart Foundation, Cancer Research UK, the Economic and Social Research Council, the Medical Research Council (MRC), the National Institute for Health Research, and the Wellcome Trust, under the auspices of the UK Clinical Research Collaboration, is gratefully acknowledged. David Ogilvie is also supported by the MRC [Unit Programme number MC_UU_12015/6]. No financial disclosures were reported by the authors of this paper.

Additional information

Competing interests

The authors declare that they have no competing interests.

Authors’ contributions

AM undertook the review and drafted the manuscript. DO and MS participated at all stages in the design of the study and helped to draft the manuscript, revising it critically for important intellectual content. All authors read and approved the final manuscript.

Rights and permissions

This article is published under an open access license.

About this article

Cite this article

Martin, A., Ogilvie, D. & Suhrcke, M. Evaluating causal relationships between urban built environment characteristics and obesity: a methodological review of observational studies. Int J Behav Nutr Phys Act 11, 142 (2014). https://doi.org/10.1186/s12966-014-0142-8
