Abstract

Objectives

Health interventions in a clinical setting may be complex. This is particularly true of clinical interventions which require systems reorganization or behavioural change, and/or when implementation involves additional challenges not captured within a clinical trial setting. Medical Research Council guidance on complex interventions highlights the need to consider economic evaluation alongside implementation. However, the extent to which this guidance has been adhered to, and how, is unclear. The failure to incorporate implementation within the evaluation of an intervention may hinder the translation of research findings into routine practice. This will have consequences for patient care. This study examined the methods used to address implementation within health research conducted through funding from the National Institute for Health Research (NIHR) Health Technology Assessment (HTA) programme.

Methods

We conducted a rapid review using a systematic approach. We included all NIHR HTA monographs published between 2014 and 2020 that contained the word “implementation” in the title or abstract. We assessed the studies against existing recommendations for specifying and reporting implementation approaches in research. Additional themes not included in these recommendations, but of particular relevance to our research question, were also identified and summarized in a narrative synthesis.

Results

The extent to which implementation was formally incorporated, and defined, varied among studies. Methods for examining implementation ranged from single stakeholder engagement events to the more comprehensive process evaluation. There was no obvious pattern as to whether approaches to implementation had evolved over recent years. Approximately 50% (22/42) of studies included an economic evaluation. Of these, two studies included the use of qualitative data obtained within the study to quantitatively inform aspects relating to implementation and economic evaluation in their study.

Discussion

A variety of approaches were identified for incorporating implementation within an HTA. However, they did not go far enough in terms of incorporating implementation into the actual design and evaluation. To ensure the implementation of clinically effective and cost-effective interventions, we propose that further guidance on how to incorporate implementation within complex interventions is required. Incorporating implementation into economic evaluation provides a step in this direction.


Contribution to the literature

- Current guidance on developing and evaluating complex interventions recommends that implementation should be considered as part of a cyclical process: development, feasibility/piloting, evaluation, and implementation.
- There are no formal guidelines or frameworks for how implementation can be incorporated within a holistic evaluation of a health technology.
- Our review sought to identify if, and how, implementation has been taken into account in NIHR HTA research over the last 6 years.
- Our review found that, although informal and inconsistent, methods are available to address implementation. Economic evaluation provides a set of tools which can aid implementation. However, further research and formal guidance are required to ensure the translation of research findings into clinical practice.

Background

Clinical research findings are often challenging to implement in routine clinical practice. This is particularly true of complex interventions which require significant system reorganization or behavioural change, or where implementation involves additional challenges that are not captured within a clinical trial setting. To ensure that potentially beneficial research findings are effectively translated into routine clinical practice, implementation must be considered.

There are many reasons why a potentially promising health technology observed in a clinical trial setting may not translate into an improvement in patient outcomes in a routine clinical setting [1]. Among these are the barriers presented by costs and consequences that are not observed in a trial setting. The underuse of potentially beneficial health interventions has consequences in terms of potential patient benefit forgone [2].

Given that limited resources are available to generate patient health outcomes in a publicly funded healthcare system, it is necessary to consider both the clinical effectiveness and cost-effectiveness of health technologies. This includes the choice of how, and indeed whether, to implement a health technology [3, 4]. Economic evaluation provides a tool by which researchers can determine not only whether or not a health technology should be implemented and the extent of implementation required, but also the conditions under which a technology would be expected to be cost-effective. In the realm of complex interventions, it may also be necessary to consider the cost-effectiveness of systems-level changes in healthcare provision and the cost-effectiveness of a single technology given alternative configurations of the healthcare system or clinical pathway.

Economic evaluation plays an increasingly crucial role in the evaluation of health technologies. Despite this, economic evaluation rarely considers the challenge of implementation explicitly. In recent years, some methodological tools have been developed which seek to bridge the gap between economic evaluation and implementation science [5,6,7,8]. Economic evaluation can potentially aid implementation in two ways. First, it can be used to compare alternative implementation strategies, i.e. by considering the costs and consequences of implementation strategy X compared with strategy Y [5]. Second, implementation challenges can be incorporated within the economic evaluation of the technology itself, e.g. by adopting a mixed-methods approach to economic evaluation [6,7,8].
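The first route can be illustrated with a minimal sketch. It assumes a deliberately simple model that is not taken from [5]: the technology yields a fixed incremental net monetary benefit per treated patient (INMB = λΔE - ΔC, with λ the cost-effectiveness threshold), each implementation strategy achieves a different uptake, and each strategy carries a one-off cost. The strategy names, uptakes, costs, population size and threshold are all hypothetical, as are the function names.

```python
from dataclasses import dataclass


@dataclass
class Strategy:
    """A hypothetical implementation strategy (illustrative values only)."""
    name: str
    uptake: float               # proportion of eligible patients who receive the technology
    implementation_cost: float  # one-off cost of the strategy (e.g. training, audit)


def inmb_per_patient(delta_qaly: float, delta_cost: float, threshold: float) -> float:
    """Incremental net monetary benefit of the technology for one treated patient."""
    return threshold * delta_qaly - delta_cost


def strategy_value(s: Strategy, population: int, inmb: float) -> float:
    """Population net benefit: patients reached x per-patient INMB, minus the strategy's cost."""
    return s.uptake * population * inmb - s.implementation_cost


# Assumed inputs: 0.05 QALYs gained at an extra cost of 500 per patient,
# valued at an assumed threshold of 20,000 per QALY.
inmb = inmb_per_patient(delta_qaly=0.05, delta_cost=500.0, threshold=20_000.0)
population = 10_000

x = Strategy("X: passive dissemination", uptake=0.30, implementation_cost=50_000.0)
y = Strategy("Y: facilitated training", uptake=0.60, implementation_cost=400_000.0)

value_x = strategy_value(x, population, inmb)
value_y = strategy_value(y, population, inmb)
preferred = max((x, y), key=lambda s: strategy_value(s, population, inmb))

print(f"INMB per patient: {inmb:,.0f}")
print(f"{x.name}: {value_x:,.0f}")
print(f"{y.name}: {value_y:,.0f}")
print(f"Preferred strategy: {preferred.name}")
```

Under these made-up inputs the more expensive strategy Y is preferred because its higher uptake more than pays for its cost; with a lower per-patient INMB the ranking could reverse, which is the kind of trade-off this route is meant to expose.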

Although typically the preserve of population health studies, complex interventions are increasingly relevant to interventions in a clinical setting. The line which distinguishes a “simple” from a “complex” intervention is blurred. Indeed, some argue that the distinction relates to the choice of research question, rather than to the intervention itself [9, 10]. From the perspective of a health technology assessment (HTA) body, whose remit is to consider the clinical effectiveness and cost-effectiveness of a health intervention, alongside equity and other social concerns, it could be argued that all interventions should be evaluated as complex interventions.

The importance of implementation is recognized in current Medical Research Council (MRC) guidance which highlights four phases for the assessment of complex interventions in a “cyclical sequence”: development, feasibility/piloting, evaluation, and implementation [11]. Furthermore, as part of the implementation element of a complex intervention, the MRC guidance highlights dissemination, surveillance and monitoring, and long-term follow-up as the key issues to consider—all following the evaluation process. There is no discussion of how implementation can be used to inform the evaluation process. The MRC guideline update is currently underway and will address additional elements including early economic evaluation alongside the consideration of implementation [12].

The National Institute for Health Research (NIHR) is the largest funder of health-related research in the United Kingdom. The need to undertake an economic evaluation of a health technology is a core component of the NIHR Health Technology Assessment (HTA) programme. Therefore, this rapid review sought to examine how implementation has been incorporated into NIHR HTA research over the past 6 years.

Methods

We conducted a rapid review, using a systematic approach [13], to examine how implementation has been taken into account within NIHR HTA research. We applied the Proctor et al. (2012) checklist to identify how issues relating to implementation had been included within each study [14, 15]. We also identified further themes that are relevant but not captured within the Proctor et al. (2012) checklist. A narrative synthesis was undertaken using these key themes to evaluate and discuss the identified studies.

Criteria for inclusion of studies

We included NIHR HTA monographs published from September 2014 to September 2020. All monographs that contained the word “implementation” in the title or abstract were included for review; details of the search terms are given in Table 1. No exclusions were made based on participants, interventions, comparisons, outcomes, or study design. As the purpose of this review was to evaluate how implementation had been incorporated into all of the identified studies, no quality assessment of these studies was required.

Database searched

We searched the NIHR HTA database via Medline.

Data extraction

All monographs were retrieved from Medline and exported to EndNote X7.0.2. Each monograph was initially reviewed, and its data extracted, by one researcher. For validation, a random sample of 10% of the monographs was subsequently reviewed independently by two additional researchers.
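The review does not state how the 10% validation sample was drawn. A minimal sketch of one reproducible way to do it is shown below, assuming a seeded simple random sample and rounding the sample size up (both assumptions); the identifiers and the function name `validation_sample` are hypothetical stand-ins for the 42 included monographs.

```python
import math
import random


def validation_sample(ids: list[str], fraction: float = 0.10, seed: int = 2020) -> list[str]:
    """Draw a reproducible simple random sample of records for independent re-review.

    The sample size is rounded up so that at least `fraction` of the records are checked.
    """
    if not 0 < fraction <= 1:
        raise ValueError("fraction must be in (0, 1]")
    k = math.ceil(fraction * len(ids))
    rng = random.Random(seed)  # fixed seed so the draw can be reported and repeated
    return sorted(rng.sample(ids, k))


# Hypothetical identifiers standing in for the 42 included monographs.
monographs = [f"HTA-{i:02d}" for i in range(1, 43)]
sample = validation_sample(monographs)
print(len(sample), sample)
```

Reporting the seed alongside the sampling rule would let readers reproduce exactly which monographs were double-checked.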

Data synthesis and presentation

All monographs were reviewed and assessed according to the Proctor et al. (2012) checklist. This checklist was designed to provide guidance for researchers planning an implementation study. It contains a list of criteria which the authors recommend should be addressed within a study which aims to evaluate implementation. It is based on a review of successful implementation study research grants and the broader literature on implementation studies. Other checklists have been used when assessing the quality of studies used to inform implementation [16, 17]. However, these checklists focus on the quality of the survey methods used to inform implementation, rather than on how implementation had been incorporated into the study. To date, we are not aware of any commonly accepted tool for incorporating implementation into the development and evaluation of a study. For this reason, we believed the Proctor et al. (2012) checklist served as a suitable tool for assessing the extent to which implementation issues have been incorporated within the studies included in our review. The key components relating to implementation that we used to critique the studies in our review, based on the Proctor et al. (2012) checklist, are identified in Table 2.

Because the Proctor et al. (2012) checklist has limitations for the purpose of this study, a narrative synthesis was used to identify additional themes relevant to the issue of implementation but not captured within the checklist [18, 19]. These themes were identified by the three study authors as themes which can aid the incorporation of implementation within economic evaluation. We presented these themes alongside each study in matrix form in Table 3. As there was no standardized metric across studies, a meta-analysis of results was not appropriate. We evaluated how the inclusion or exclusion of these additional themes served to hinder or facilitate the incorporation of implementation within the studies. We discussed the heterogeneity of our results in terms of the consistency of approach and any pattern of change over time. Limitations of our review, such as the databases searched and the themes identified, are considered in the Discussion section.

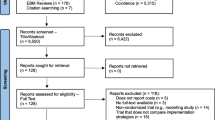

Results

Four hundred and forty-five studies were identified in the NIHR HTA programme between September 2014 and September 2020. Forty-two (9%) of these studies included the word “implementation” in the title or abstract (Fig. 1).

The extent to which implementation was formally incorporated in the analysis, and how implementation was defined, varied among studies. No studies were excluded from the review. Seven themes not included in the Proctor et al. (2012) checklist were of particular relevance to our research question (Table 3): study type; process evaluation (“the process of understanding the functioning of an intervention, by examining implementation, mechanisms of impact, and contextual factors” [20]); barriers and facilitators; quantitative evaluation of implementation; economic evaluation; recommendations; and future work.

Study type and setting

Twenty-one (50%) studies were based on either randomized controlled trials (RCTs) or pilot RCTs, while the remaining 50% were a mix of cohort studies, modelling studies, or literature reviews (one monograph was a methods study) (Table 3). Twenty-eight studies assessed an intervention which applied to a clinical setting, while the remaining studies involved a population intervention (Table 3). Ten of the included studies discussed “setting” and the extent to which there was a readiness to adopt a new intervention or a capacity to change (Table 2).

Process evaluation, stakeholder engagement, and barriers and facilitators

A full process evaluation was included within 15 of the studies (Table 3). Two studies included a conceptual model of the decision problem [21, 22] (Table 3). In evaluating an intervention aimed at reducing bullying and aggression in schools, Bonell et al. [23] used a conceptual model to map out and disaggregate the relationships between intervention inputs and how these were mediated via behavioural change and environmental change to produce health outcomes. A justification for the choice of interventions being considered was given in every monograph reviewed (Table 2).

Twenty-nine of the included studies reported engaging with stakeholders during the study (Table 2). Thirty-four studies included a discussion of barriers and facilitators to implementing an intervention (Table 3). This was the most common method by which implementation was considered within the studies included in this review.

Quantitative evaluation of implementation and economic evaluation

Twenty-three of the studies included an economic evaluation (Table 3). Three studies included the use of quantitative data from a process evaluation to address implementation within their economic evaluation [24,25,26] (Table 3). For example, Richards [26] used semi-structured interviews to elicit data on nurse time required to undertake psychological care alongside cardiac rehabilitation in a pilot RCT. These data were then used to estimate the cost of nurse time.

Francis et al. [25] undertook a multicentre RCT in 86 general practitioner (GP) offices to evaluate the use of point-of-care testing to guide the management of antibiotic prescriptions in patients with chronic obstructive pulmonary disease. Embedded within the trial, a process evaluation found that staff time and initial training and equipment costs were a potential barrier to implementation of testing in routine practice. These findings were included within the economic evaluation. The results of the economic evaluation were then presented in terms of cost-effectiveness (cost required to reduce the number of people consuming at least one dose of antibiotics by 1%), cost-utility (cost per quality-adjusted life-year [QALY]), and cost consequence (where costs were presented alongside clinical outcomes in tabular form to allow decision-makers to determine for themselves the value they place on each clinical outcome in the trial).
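To make the three reporting formats concrete, the sketch below derives all of them from one set of incremental (intervention minus usual care) results. The numbers are hypothetical and are not those of Francis et al.; a “1%” reduction is taken here to mean one percentage point, and the helper name `icer` is our own.

```python
def icer(delta_cost: float, delta_effect: float) -> float:
    """Incremental cost-effectiveness ratio: extra cost per extra unit of effect."""
    if delta_effect == 0:
        raise ZeroDivisionError("no difference in effect; ICER undefined")
    return delta_cost / delta_effect


# Hypothetical per-patient incremental results (intervention minus usual care).
delta_cost = 20.0                  # extra cost per patient, incl. testing, staff time and training
delta_antibiotic_users_pct = 20.0  # percentage-point fall in patients taking >= 1 antibiotic dose
delta_qaly = 0.002                 # QALYs gained per patient

# Cost-effectiveness: cost per one-point reduction in the proportion taking antibiotics.
ce = icer(delta_cost, delta_antibiotic_users_pct)

# Cost-utility: cost per QALY gained.
cu = icer(delta_cost, delta_qaly)

# Cost-consequence: outcomes listed side by side, with no single summary ratio,
# leaving decision-makers to weigh each outcome themselves.
consequences = {
    "Incremental cost per patient": delta_cost,
    "Reduction in antibiotic consumers (percentage points)": delta_antibiotic_users_pct,
    "QALYs gained per patient": delta_qaly,
}

print(f"Cost per 1-point reduction in antibiotic consumers: {ce:.2f}")
print(f"Cost per QALY gained: {cu:,.0f}")
for outcome, value in consequences.items():
    print(f"{outcome}: {value}")
```

The implementation costs identified in the process evaluation enter through `delta_cost`, so they shift every ratio at once while the cost-consequence listing keeps them visible on their own.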

Seguin et al. undertook a mixed-methods study to evaluate the use of self-sample kits for increasing HIV testing among black Africans in the United Kingdom [24]. The qualitative information collected in the process evaluation was used to guide the base-case economic evaluation and to indicate where sensitivity analyses were required around its assumptions. For example, the qualitative evaluation highlighted challenges in estimating the time required for a nurse to explain the intervention to the patient, since most of the appointment was spent explaining the study, obtaining consent, and recording baseline characteristics. As a result, reliable data on the time required to explain how to use the self-sample kit were not obtained. This informed the sensitivity analysis, in which the nurse time and cost required to explain the intervention were varied; the analysis showed that this parameter was highly unlikely to affect the overall cost-effectiveness results.
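A one-way sensitivity analysis of this kind varies a single uncertain input while holding everything else fixed, then checks whether the decision changes. The sketch below assumes a hypothetical outcome (incremental cost per additional person tested), a hypothetical nurse hourly cost, other incremental costs, test yield and acceptability threshold; none of these values, nor the function name, come from Seguin et al.

```python
def cost_per_additional_test(nurse_minutes: float,
                             nurse_cost_per_hour: float = 45.0,
                             other_incremental_cost: float = 10.0,
                             additional_tests_per_patient: float = 0.25) -> float:
    """Incremental cost per additional person tested (all defaults are hypothetical)."""
    nurse_cost = nurse_minutes / 60.0 * nurse_cost_per_hour
    return (nurse_cost + other_incremental_cost) / additional_tests_per_patient


# One-way sensitivity analysis: vary only the uncertain nurse time.
threshold = 200.0  # hypothetical maximum acceptable cost per additional test
results = {minutes: cost_per_additional_test(minutes) for minutes in (0, 5, 10, 15, 20)}

for minutes, ratio in results.items():
    verdict = "below" if ratio <= threshold else "above"
    print(f"{minutes:>2} min nurse time: {ratio:6.1f} per additional test ({verdict} threshold)")

conclusion_robust = all(ratio <= threshold for ratio in results.values())
print("Conclusion unchanged across range:", conclusion_robust)
```

If every value in the plausible range leaves the ratio on the same side of the threshold, as with these invented inputs, the missing nurse-time data do not alter the conclusion.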

In all three studies, implementation was incorporated by including additional implementation-related costs of delivering the intervention. No studies evaluated the relative cost-effectiveness of alternative implementation strategies.

Implementation was typically considered the remit of the qualitative researchers only, taking the form of a separate chapter which was then considered in the discussion section alongside the primary study results. Hence, there was little to no consideration of how implementation would impact on the economics of the intervention. For example, Little et al. undertook an RCT to investigate streptococcal management in primary care which included a nested qualitative study and economic evaluation [27]. The qualitative study gathered GP, nurse, and patient views regarding the challenges associated with the use of streptococcal tests in primary care. However, these data were not then used to consider the economics of alternative implementation strategies or to test the robustness of their results to alternative assumptions regarding implementation.

Clear recommendations for implementation and future work

No studies included within the review specified implementation as a primary objective. However, 23 of the studies referred to implementation within their study objectives as an issue for consideration. Thirty-three studies considered implementation in their discussion section only. Twenty-one of the included studies provided clear recommendations on implementation (Table 3). For example, Whitaker et al. (2016) suggested that future economic evaluations of interventions to reduce unwanted pregnancies in teenagers adopt a “multi-agency perspective”, owing to the potential cost impact of such interventions on not only health but also social care providers [22]. Surr et al. [28] evaluated the use of dementia care mapping (DCM) to reduce agitation and improve outcomes in care home residents with dementia. This was a pragmatic RCT of a complex intervention which included a process evaluation. The intervention was found to be neither clinically effective nor cost-effective. However, the process evaluation identified a significant challenge in adherence to the intervention: 10% of care homes did not participate in the intervention at all, and only 13% adhered to the intervention protocol at an acceptable level over the required period. Two homes withdrew from the study, one citing a personal belief that the intervention was ineffective. Therefore, recommendations included considering alternative modes of implementation that were not reliant on care home staff for delivery [28]. Implementation was discussed as an issue for “further research” in 22 of the included studies (Table 3).

Discussion

The extent to which implementation was formally considered varied among studies. Methods for examining implementation ranged from single stakeholder engagement events to the more comprehensive process evaluation. There was no obvious pattern as to whether approaches to implementation had evolved over recent years. Approximately half of the studies included an economic evaluation. However, it was uncommon for the economic analyses to incorporate issues relating to implementation. Where issues relating to implementation were included in the economic evaluation, this was limited to additional costs only. Where implementation of an intervention was considered more generally, such as in the process evaluation used in the Surr et al. [28] study, the authors found it difficult to determine whether the lack of effectiveness was the result of an inherent lack of efficacy in the intervention itself or of implementation challenges. This highlights the need to consider implementation alongside the evaluation of a health technology throughout the design and evaluation life cycle.

Current MRC guidance on developing and evaluating complex interventions stresses the importance of considering development, feasibility/piloting, evaluation, and implementation in a cyclical sequence. Specifically, it suggests involving stakeholders in the choice of question and the design of the research to ensure relevance. It also suggests taking context into account, so that benefits and costs which are not captured in the study can be incorporated into the analysis. Our review suggests that this guidance has not been consistently adhered to in HTA studies over the last 6 years (see Stakeholders and Setting in Table 2). There is no obvious trend in how studies have incorporated implementation issues over time. A possible reason for this lack of consistency is that, although the MRC provides guidance on what to include within an evaluation of a complex intervention, there is little guidance on how it should be included.

The Proctor et al. (2012) checklist provides a set of key issues which need to be considered when undertaking an implementation study. This review has assessed NIHR HTA studies over the last 6 years which have included implementation. Although the purpose of these studies was not explicitly to undertake an implementation study, it is worth considering to what extent they would be judged sufficient to undertake an implementation study based on the checklist suggested by Proctor et al. (2012). Our findings suggest that the studies identified in our review have not fully addressed implementation and that they need to go further. The necessary elements, such as team expertise, are often already available within the project team. What is required is guidance as to how quantitative and qualitative methods can be integrated, alongside early stakeholder engagement, so as to allow for implementation to be woven into every stage in the evaluation.

Methods for economic evaluation are well established for assessing the value for money of competing interventions, given a fixed budget constraint for the healthcare system. However, an intervention which appears highly cost-effective when these methods are applied as they would be to simple interventions may no longer be cost-effective once the process of implementation is considered. This is partly due to the impact complex interventions can have both on other services within the same disease area (e.g. acute treatment versus rehabilitation, patient pathways, and organizational challenges) and on non-health sectors (e.g. education, justice, and defence). The need to consider how the costs and benefits of health technologies fall on different sectors, and budgets, reinforces the need for economic evaluation which considers these trade-offs simultaneously. The question of whether costs “unrelated” to an intervention ought to be included within an economic evaluation remains contentious [29]. For example, mechanical thrombectomy is a costly, but cost-effective, treatment for patients with acute ischaemic stroke [30]. Should the initial fixed capital costs of the comprehensive stroke unit and the staff training required to undertake this procedure be included within an economic evaluation, or just the per-procedure variable costs? Drummond argues that if health benefits arising from an intervention are projected over an individual’s lifetime, then all healthcare costs should similarly be projected [31]. The recommended approach here would be to annuitize the initial capital cost over the useful life of the asset to produce an equivalent annual cost. However, the challenge remains of how to capture healthcare costs attributable to different budget holders within a single economic evaluation. Indeed, Wildman et al. suggest that new funding models may be required to address the challenge of matching benefits and opportunity costs which fall on different sectors when implementing complex interventions [32].
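The annuitization step can be made concrete. With an up-front capital cost K, a useful life of n years and an annual discount rate r, the equivalent annual cost is EAC = K / A(n, r), where A(n, r) = (1 - (1 + r)^-n) / r is the annuity factor. The sketch below is a minimal illustration rather than a prescribed method: it assumes end-of-year payments and no resale value, the function names are hypothetical, and the capital cost, 10-year life, 3.5% discount rate, annual procedure volume and variable cost for a thrombectomy-style service are invented for illustration.

```python
def annuity_factor(years: int, rate: float) -> float:
    """Present value of 1 unit paid at the end of each year for `years` years at discount `rate`."""
    if rate == 0:
        return float(years)
    return (1 - (1 + rate) ** -years) / rate


def equivalent_annual_cost(capital_cost: float, years: int, rate: float) -> float:
    """Spread an up-front capital cost over the asset's useful life (no resale value assumed)."""
    return capital_cost / annuity_factor(years, rate)


def cost_per_procedure(variable_cost: float, capital_cost: float, years: int,
                       rate: float, procedures_per_year: int) -> float:
    """Per-procedure cost including a share of the annuitized fixed cost."""
    return variable_cost + equivalent_annual_cost(capital_cost, years, rate) / procedures_per_year


# Hypothetical inputs: 1,000,000 of set-up costs (unit, equipment, training),
# a 10-year useful life, a 3.5% discount rate, 150 procedures per year and a
# variable cost of 8,000 per procedure.
eac = equivalent_annual_cost(1_000_000, years=10, rate=0.035)
per_proc = cost_per_procedure(8_000, 1_000_000, years=10, rate=0.035, procedures_per_year=150)

print(f"Equivalent annual cost: {eac:,.0f}")
print(f"Cost per procedure incl. capital share: {per_proc:,.0f}")
```

Because of discounting, the equivalent annual cost is higher than a straight-line split of K over n years, and the capital share per procedure falls as annual volume rises, which is one way implementation scale feeds back into cost-effectiveness.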

The preferred measure for estimating clinical benefits from a health economic evaluation perspective is the QALY. However, the costs and benefits of competing healthcare interventions are not always sufficiently captured by a QALY outcome. This is a particular issue when considering the implementation of a complex intervention, where multiple outcomes may be relevant to multiple stakeholders. Methods for economic evaluation which do not rely upon the QALY are available, including cost-effectiveness analysis using natural units, cost-consequence analysis, multiple-criteria decision analysis (MCDA), and discrete choice experiments (DCEs). However, these methods are not without their limitations, which have been discussed extensively elsewhere [33, 34].

In addition to the barriers imposed by implementation costs, and the problem of determining which outcomes ought to be considered, further barriers to implementation remain. These include issues relating to the design of the healthcare system and the political environment in which these decisions take place. Smith et al. suggest a range of solutions for addressing barriers to implementation which go beyond cost-effectiveness analysis [35]. These include the need to model and disaggregate a range of potential outcomes, depending on alternative implementation scenarios and system configurations; the use of qualitative and quantitative evaluation techniques; and the involvement of the public in the decision-making process.

While not used in any of the studies included in this review, existing methods are available for estimating the “value of implementation” within an economic evaluation [5, 6, 8]. These typically focus on estimating the potential cost-effectiveness of alternative implementation strategies, on the trade-off between directing resources towards further research or towards further implementation, or on establishing a “break-even” level of implementation at which an intervention may be cost-effective. However, these methods do not address the initial challenge of deciding which outcomes ought to be included when attempting to incorporate implementation issues into the economic evaluation of a complex intervention, nor how these outcomes should be evaluated. While useful, these methods tackle only a subset of the issues relating to implementation and are designed to be used following a cost-effectiveness analysis. We argue that we need to understand the potential challenges of implementation before we begin an economic evaluation so that these issues can be incorporated into the analysis.
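Two of these quantities can be sketched in a highly simplified, single-period form. This is an illustration under stated assumptions, not the specific methods of [5, 6, 8]: the per-patient incremental net monetary benefit (INMB) is taken as known and constant, the value of perfect implementation is the net benefit forgone among eligible patients who do not yet receive the technology, and the break-even uptake is the smallest uptake at which population net benefit covers a fixed implementation cost. All inputs and function names are hypothetical.

```python
def value_of_perfect_implementation(population: int, inmb_per_patient: float,
                                    current_uptake: float) -> float:
    """Net benefit forgone because not all eligible patients receive a cost-effective technology."""
    if inmb_per_patient <= 0:
        return 0.0  # nothing to gain from implementing a technology that is not cost-effective
    return population * inmb_per_patient * (1.0 - current_uptake)


def break_even_uptake(fixed_implementation_cost: float, population: int,
                      inmb_per_patient: float) -> float:
    """Smallest uptake at which population net benefit covers a fixed implementation cost."""
    if inmb_per_patient <= 0:
        return float("inf")  # cannot break even at any uptake
    return fixed_implementation_cost / (population * inmb_per_patient)


# Hypothetical inputs: 5,000 eligible patients, INMB of 300 per treated patient,
# 40% current uptake and an implementation programme costing 600,000.
population = 5_000
inmb = 300.0
vpi = value_of_perfect_implementation(population, inmb, current_uptake=0.4)
p_star = break_even_uptake(600_000.0, population, inmb)

print(f"Value of perfect implementation: {vpi:,.0f}")
print(f"Break-even uptake: {p_star:.0%}" if p_star <= 1 else "Cannot break even at any uptake")
```

Both calculations start from an INMB that has already been estimated, which illustrates the point above: they are applied after a cost-effectiveness analysis rather than shaping which outcomes it measures.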

More descriptive methods are also being developed to aid the economic evaluation of implementation. Anderson et al. (2016) advocate a more “realist” approach to economic evaluation in which, rather than on “measurement”, the focus is on understanding what works, for whom, and in what circumstances [36, 37]. More recently, McMeekin et al. demonstrated the use of conceptual modelling alongside economic evaluation to explore the relationship between the disease, treatment, and other potential mediators which affect the “success” of an intervention [38]. Both methodologies contrast with a more “black-box” approach to economic evaluation. Dopp et al. developed a framework for “mixed-method economic evaluation” in implementation science, highlighting the benefits to implementation science researchers of undertaking economic evaluations with context-specific information capable of informing the implementation process [7]. Each of these tools constitutes another piece in the puzzle of integrating implementation within economic evaluation.

The implementation of health technologies is a complex problem. As such, it is unlikely that a single new methodology or perspective will address all the potential challenges associated with implementation. However, the incorporation of implementation issues into economic evaluation provides one route by which we can begin to address this problem and produce research which is more useful to decision-makers in a “real-world” setting.

Economic evaluations are increasingly incorporated within clinical trials with the aim of supporting the reimbursement decision-making process [39]. Analogous to the introduction of economic evaluation into clinical trials, we believe that economic evaluation should play a key role in guiding the process of implementing new interventions into routine practice.

The NIHR HTA programme is only one funder of clinical research within the United Kingdom. An extensive search of other databases may have identified methods not included within our review. However, for pragmatic purposes, and due to the prominent role played by the NIHR HTA programme in setting the research agenda in the United Kingdom, we chose to limit our search to this database only. We limited our search to studies which included the word “implementation” within the title or abstract. There is a range of terms which may relate to implementation, e.g. capacity, acceptability, and stakeholder. However, as our aim was to capture how any of these issues relate specifically to the challenge of implementation, we think the choice to focus on this term is reasonable. It is a limitation of this study, but also a key point, that no guidance is available for evaluating how implementation has been incorporated within an HTA. To facilitate better implementation of research findings, further guidance will be required to help researchers decide how implementation ought to be considered within an economic evaluation from the outset and how these data should be analysed.

Conclusion

There are currently a variety of approaches available to incorporate implementation within an HTA. While they all provide some insight into the issues surrounding implementation, they do not go far enough in terms of evaluation and giving recommendations on specific implementation strategies. Furthermore, the issues of economic evaluation and implementation are typically considered in isolation—with implementation factors only considered after the economic evaluation has taken place. Given the MRC’s warning that an evaluation which does not include a “proper consideration of the practical issues of implementation will result in weaker interventions”, this is a surprising finding [11].

Our review has demonstrated a lack of consistency in how implementation has been incorporated within NIHR HTA-funded research and, hence, a need for further guidance in this area. We argue that implementation ought to be considered early in the evaluation of a complex intervention. We further argue that implementation and economic evaluation ought to be integrated, such that the economic implications of implementation issues are considered iteratively throughout the evaluation process. We recommend a more strategic approach to considering implementation: plan ahead and collect data which will allow for a quantitative analysis, supplemented by qualitative work to inform implementation. This can conveniently be done within the economic evaluation framework.

Authors' information

The main author is a research associate in Health Economics and Health Technology Assessment within the Institute of Health and Wellbeing at the University of Glasgow. He is undertaking a PhD on methods for incorporating implementation within the economic evaluation of HTAs.

Availability of data and materials

All data generated or analysed during this study are included in this published article.

References

Hoomans T, Severens JL. Economic evaluation of implementation strategies in health care. Implement Sci. 2014;9(1):168.

McGlynn EA, Asch SM, Adams J, Keesey J, Hicks J, DeCristofaro A, et al. The quality of health care delivered to adults in the United States. N Engl J Med. 2003;348(26):2635–45.

Eisman AB, Kilbourne AM, Dopp AR, Saldana L, Eisenberg D. Economic evaluation in implementation science: making the business case for implementation strategies. Psychiatry Res. 2020;283:112433.

Roberts SLE, Healey A, Sevdalis N. Use of health economic evaluation in the implementation and improvement science fields—a systematic literature review. Implement Sci. 2019;14(1):72.

Whyte S, Dixon S, Faria R, Walker S, Palmer S, Sculpher M, et al. Estimating the cost-effectiveness of implementation: is sufficient evidence available? Value Health. 2016;19(2):138–44.

Fenwick E, Claxton K, Sculpher M. The value of implementation and the value of information: combined and uneven development. Med Decis Making. 2008;28(1):21–32.

Dopp AR, Mundey P, Beasley LO, Silovsky JF, Eisenberg D. Mixed-method approaches to strengthen economic evaluations in implementation research. Implement Sci. 2019;14(1):2.

Walker SM, Faria R, Palmer SJ, Sculpher M. Getting cost-effective technologies into practice: policy research unit in economic evaluation of health and care interventions (EEPRU); 2014.

Petticrew M. When are complex interventions ‘complex’? When are simple interventions ‘simple’? Eur J Public Health. 2011;21(4):397–8.

Moore GF, Evans RE, Hawkins J, Littlecott HJ, Turley R. All interventions are complex, but some are more complex than others: using iCAT_SR to assess complexity. Cochrane Database Syst Rev. 2017;7:84.

Craig P, Dieppe P, Macintyre S, Michie S, Nazareth I, Petticrew M. Developing and evaluating complex interventions: the new Medical Research Council guidance. BMJ. 2008;337:a1655.

Craig P. Developing and evaluating complex interventions: current thinking (oral presentation). Economic Evaluation of Complex Interventions Symposium, University of Glasgow, 31 August 2017.

Dobbins M. Rapid Review Guidebook: steps for conducting a rapid review. 2017. Obtained on 24/5/2019. https://www.nccmt.ca/uploads/media/media/0001/01/a816af720e4d587e13da6bb307df8c907a5dff9a.pdf.

Proctor EK, Powell BJ, Baumann AA, Hamilton AM, Santens RL. Writing implementation research grant proposals: ten key ingredients. Implement Sci. 2012;7(1):96.

Neta G, Brownson RC, Chambers DA. Opportunities for epidemiologists in implementation science: a primer. Am J Epidemiol. 2018;187(5):899–910.

Burns KE, Duffett M, Kho ME, Meade MO, Adhikari NK, Sinuff T, et al. A guide for the design and conduct of self-administered surveys of clinicians. CMAJ. 2008;179(3):245–52.

Center for Evidence-Based Management (CEBMa). Critical Appraisal of a Cross-Sectional Study (Survey). 2014. Obtained on 18/09/2019. http://www.cebma.org/wp-content/uploads/Critical-Appraisal-Questions-for-a-Cross-Sectional-Study-july-2014.pdf.

Popay J, Roberts H, Sowden A, Petticrew M, Arai L, Rodgers M, et al. Guidance on the conduct of narrative synthesis in systematic reviews: a product from the ESRC Methods Programme. Version 1. 2006.

Campbell M, McKenzie JE, Sowden A, Katikireddi SV, Brennan SE, Ellis S, et al. Synthesis without meta-analysis (SWiM) in systematic reviews: reporting guideline. BMJ. 2020;368:l6890.

Moore GF, Audrey S, Barker M, Bond L, Bonell C, Hardeman W, et al. Process evaluation of complex interventions: Medical Research Council guidance. BMJ. 2015;350:h1258.

Bonell C, Fletcher A, Fitzgerald-Yau N, Hale D, Allen E, Elbourne D, et al. Initiating change locally in bullying and aggression through the school environment (INCLUSIVE): a pilot randomised controlled trial. Health Technol Assess. 2015;19(53):1–109.

Whitaker R, Hendry M, Aslam R, Booth A, Carter B, Charles JM, et al. Intervention now to eliminate repeat unintended pregnancy in teenagers (INTERUPT): a systematic review of intervention effectiveness and cost-effectiveness, and qualitative and realist synthesis of implementation factors and user engagement. Health Technol Assess. 2016;20(16):1–214.

Bonell C, Fletcher A, Fitzgerald-Yau N, Hale D, Allen E, Elbourne D, et al. Initiating change locally in bullying and aggression through the school environment (INCLUSIVE): a pilot randomised controlled trial. Health Technol Assess. 2015;19(53):1–109, vii–viii.

Seguin M, Dodds C, Mugweni E, McDaid L, Flowers P, Wayal S, et al. Self-sampling kits to increase HIV testing among black Africans in the UK: the HAUS mixed-methods study. Health Technol Assess. 2018;22(22):1–158.

Francis NA, Gillespie D, White P, Bates J, Lowe R, Sewell B, et al. C-reactive protein point-of-care testing for safely reducing antibiotics for acute exacerbations of chronic obstructive pulmonary disease: the PACE RCT. Health Technol Assess. 2020;24(15):1–108.

Richards SH, Campbell JL, Dickens C, Anderson R, Gandhi M, Gibson A, et al. Enhanced psychological care in cardiac rehabilitation services for patients with new-onset depression: the CADENCE feasibility study and pilot RCT. Health Technol Assess. 2018;22(30):1–220.

Little P, Hobbs FD, Moore M, Mant D, Williamson I, McNulty C, et al. Primary care streptococcal management (PRISM) study: in vitro study, diagnostic cohorts and a pragmatic adaptive randomised controlled trial with nested qualitative study and cost-effectiveness study. Health Technol Assess. 2014;18(6):1–101.

Surr CA, Holloway I, Walwyn RE, Griffiths AW, Meads D, Kelley R, et al. Dementia Care Mapping™ to reduce agitation in care home residents with dementia: the EPIC cluster RCT. Health Technol Assess. 2020;24(16):1–172.

Drummond MF, Sculpher MJ, Claxton K, Stoddart GL, Torrance GW. Methods for the Economic Evaluation of Health Care Programmes. 4th ed. Oxford: Oxford University Press; 2015. Obtained on 2/10/2020. https://books.google.co.uk/books?id=lvWACgAAQBAJ.

Heggie R, Wu O, White P, Ford GA, Wardlaw J, Brown MM, et al. Mechanical thrombectomy in patients with acute ischemic stroke: a cost-effectiveness and value of implementation analysis. Int J Stroke. 2019;15(8):881–98.

Drummond MF, Sculpher MJ, Claxton K, Stoddart GL, Torrance GW. Methods for the Economic Evaluation of Health Care Programmes. Oxford: Oxford University Press; 2015.

Wildman J, Wildman JM. Combining health and outcomes beyond health in complex evaluations of complex interventions: suggestions for economic evaluation. Value Health. 2019;22(5):511–7.

Marsh KD, Sculpher M, Caro JJ, Tervonen T. The use of MCDA in HTA: great potential, but more effort needed. Value Health. 2018;21(4):394–7.

Tinelli M, Ryan M, Bond C. What, who and when? Incorporating a discrete choice experiment into an economic evaluation. Health Econ Rev. 2016;6(1):31.

Glassman A, Giedion U, Smith PC, editors. What's in, what's out: designing benefits for universal health coverage. Brookings Institution Press; 2017.

Anderson R, Hardwick R. Realism and resources: towards more explanatory economic evaluation. Evaluation. 2016;22(3):323–41.

Dalkin SM, Greenhalgh J, Jones D, Cunningham B, Lhussier M. What’s in a mechanism? Development of a key concept in realist evaluation. Implement Sci. 2015;10(1):49.

McMeekin N, Briggs A, Wu O. PRM135: developing a conceptual modelling framework for economic evaluation. Value Health. 2017;20(9):A754–5.

Petrou S, Gray A. Economic evaluation alongside randomised controlled trials: design, conduct, analysis, and reporting. BMJ. 2011;342:d1548.

Acknowledgements

Not applicable.

Funding

This work was not funded.

Author information

Contributions

RH: design, data extraction and analysis, manuscript preparation. KB: design, independent validation of review (10% sample), comments on final manuscript. OW: design, independent validation of review (10% sample), comments on final manuscript. All authors read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Heggie, R., Boyd, K. & Wu, O. How has implementation been incorporated in health technology assessments in the United Kingdom? A systematic rapid review. Health Res Policy Sys 19, 118 (2021). https://doi.org/10.1186/s12961-021-00766-2

DOI: https://doi.org/10.1186/s12961-021-00766-2