Abstract

Background

Land Use Regression (LUR) is a popular method to explain and predict spatial contrasts in air pollution concentrations, but LUR models for ultrafine particles, such as particle number concentration (PNC) are especially scarce. Moreover, no models have been previously presented for the lung deposited surface area (LDSA) of ultrafine particles. The additional value of ultrafine particle metrics has not been well investigated due to lack of exposure measurements and models.

Methods

Air pollution measurements were performed in 2011 and 2012 in the eight areas of the Swiss SAPALDIA study at up to 40 sites per area for NO2 and at 20 sites in four areas for markers of particulate air pollution. We developed multi-area LUR models for biannual average concentrations of PM2.5, PM2.5 absorbance, PM10, PMcoarse, PNC and LDSA, as well as alpine, non-alpine and study area specific models for NO2, using predictor variables which were available at a national level. Models were validated using leave-one-out cross-validation, as well as independent external validation with routine monitoring data.

Results

Model explained variance (R2) was moderate for the various PM mass fractions PM2.5 (0.57), PM10 (0.63) and PMcoarse (0.45), and was high for PM2.5 absorbance (0.81), PNC (0.87) and LDSA (0.91). Study-area specific LUR models for NO2 (R2 range 0.52–0.89) outperformed combined-area alpine (R 2 = 0.53) and non-alpine (R 2 = 0.65) models in terms of both cross-validation and independent external validation, and were better able to account for between-area variability. Predictor variables related to traffic and national dispersion model estimates were important predictors.

Conclusions

LUR models for all pollutants captured spatial variability of long-term average concentrations, performed adequately in validation, and could be successfully applied to the SAPALDIA cohort. Dispersion model predictions or area indicators served well to capture the between area variance. For NO2, applying study-area specific models was preferable over applying combined-area alpine/non-alpine models. Correlations between pollutants were higher in the model predictions than in the measurements, so it will remain challenging to disentangle their health effects.

Similar content being viewed by others

Background

Several studies have documented large spatial contrasts in air pollution in European and US cities [1–4]. Land Use Regression (LUR) modeling has become a popular method for explaining the observed contrasts [5–10], as well as estimating outdoor pollution concentrations at the homes of participants of large epidemiological studies [11–14]. LUR relies on a spatially dense air pollution monitoring network, of which each site is characterized by a set of potential “predictor variables”, which are generally derived from Geographic Information Systems (GIS) [7]. In LUR, a regression model is developed which links the air pollution concentrations observed in the network to the most predictive environmental characteristics, such as traffic, land use and population. Depending on the pollutant, LUR modeling has been able to explain a moderate to large amount of spatial variability in concentration for a growing arsenal of pollutants.

Where traditionally, mostly nitrogen dioxides and particulate matter were modeled [7], a few recent LUR studies have also modeled ultrafine particles [15–21], mostly based on mobile [17, 20] or short period monitoring [16, 18, 19, 21], and therefore modeling concentrations which are not necessarily representative for the longer-term average. Long-term, spatially resolved monitoring campaigns for ultrafine particles (UFP) have been uncommon because the condensation particle counters which are typically used for measurements are costly and require daily maintenance. The MiniDisc devices used in our study could be deployed for longer periods with relatively little maintenance.

Typically, LUR models are developed for single cities, metropolitan areas or regions, and applied within the same geographical parameters. Some attempts have been presented to combine study areas [6, 10, 22–24], or transfer LUR models between areas [24–26]. Most studies conclude that locally developed models are favorable over combined-area or transferred models [10, 24–26]. However, it has been mentioned that combined-area models may allow epidemiological studies to pool epidemiological data from different areas and better exploit the between area contrasts, which would substantially increase the exposure range for some pollutants [22]. The background concentration difference between areas is often mentioned as a source of over- or under-prediction when applying these combined-area models, and has been addressed by including indicator variables [6], area-specific regional background [22] or recently satellite-observed background NO2 [23], or (like in this paper) larger scale dispersion models [10, 27]. Data for the latter two approaches may not be available for all pollutants, but these approaches have the advantage of being able to interpolate between study areas. The availability of common-source predictor data is crucial to the success of transferring LUR models or combining study areas [22, 25, 26]. If the allocation of land use categories differs between areas, or traffic intensity dataare obtained from different traffic models, this may compromise the quality of a combined-area model [10] or the ability to transfer models between areas [25].

This paper describes the development, performance and validation of multi-area LUR models for nitrogen dioxide (NO2), particulate matter <2.5 μm (PM2.5), PM2.5 filter absorbance, a marker for diesel exhaust particles (PM2.5 absorbance), particulate matter <10 μm (PM10), the coarse fraction calculated as the difference between PM10 and PM2.5 (PMcoarse), particle number concentration (PNC) and the lung deposited surface area (LDSA) of particles. For NO2, measurements and models cover all eight areas of the Swiss Study on Air Pollution and Lung and heart Diseases (SAPALDIA). For particle-related markers of air pollution, the study covers four of the eight areas. This paper presents one of the first LUR models representing long-term exposure to PNC and the first model for LDSA of ultrafine particles. Several toxicological studies suggest that the strength of the induced inflammatory response is related to the contact surface area of the pollutant with the alveolar cells, rather than its mass [28, 29], making it a biologically promising metric of exposure [30]. Moreover, the small size of ultrafine particles facilitates translocation into the bloodstream and uptake into cells [31]. However, due to the lack of exposure data, no epidemiological study on long-term effects of air pollution has evaluated the added value of its use. Furthermore, we evaluate the performance of study area-specific and multi-area NO2 models to predict concentrations of an independent validation dataset. The LUR models presented in this paper will be applied to the SAPALDIA study population to estimate subjects’ exposure to the above pollutants. The SAPALDIA cohort was initiated in 1991 and had follow-ups in 2002 and 2011 (SAPALDIA 3) [32, 33].

Methods

We developed LUR models for biannual average concentrations of NO2 for eight areas in Switzerland, and for PM2.5, PM2.5 absorbance, PM10, PMcoarse, PNC and LDSA for four areas, due to financial constraints, using a range of geographic predictor variables. NO2 models were developed for all eight areas combined, for each area separately, and for the alpine and non-alpine regions separately. All predictors were available nationwide. We used a supervised stepwise method to develop LUR models, maximizing model explained variance, while allowing only “plausible” directions of effect (e.g. increase in concentration for traffic, decrease in concentration for proximity to green space).

Air pollution measurement data

The SAPALDIA 3 measurement campaign and results have been described previously [34]. Briefly, eight study areas (Aarau, Basel, Davos, Geneva, Lugano, Montana, Payerne and Wald) were selected to cover the spatial distribution of SAPALDIA 3 cohort addresses (Additional file 1). Forty NO2 sampling sites were selected in each study area. In Basel, Geneva, Lugano and Wald, PM2.5, PM10, PNC and LDSA measurements were co-located with the NO2 samplers at 20 of the 40 sites. NO2 was measured with passive diffusion samplers (Passam AG, Männedorf, Switzerland), PM2.5 and PM10 were collected on filters using Harvard Impactors, PM2.5 absorbance was measured as reflectance on PM2.5 filters using a smoke stain reflectometer, and PNC and LDSA measurements were conducted with the Miniature Diffusion Size Classifier (MiniDiSC) (Fachhochschule Nordwestschweiz, Switzerland) [35]. All measurements were performed between January 2011 and December 2012. In each study area, regional background, urban background and traffic sites were selected. Because a substantial amount of spatial contrast is traffic-related, we chose to over-represent the number of street sites (±50 % of the total), including a wide range of different traffic intensities and street layouts. In Aarau, Davos, Montana and Payerne, all sites were measured simultaneously, three times for 14 days each in the cold, warm and intermediate seasons. In Basel, Geneva, Lugano and Wald, 20 sites (10 PM + NO2 and 10 NO2-only) were measured simultaneously for 14 days, while the remaining 20 sites were measured during the subsequent 14 days. This was again repeated 3 times in different seasons. A small number of sites for which valid measurements were only available for 1 season, were excluded from the analysis [34], explaining n < 40 for NO2 or n < 20 for PM2.5 or PM10 in some study areas (Table 2).

For each site, results from the three individual measurements were averaged to represent a bi-annual average over the years 2011 and 2012, by adjusting for the long-term concentration observed at a reference site which was centrally located in each study area. The temporal correction factor for each measurement was calculated as the ratio between the biannual mean and the average reference site measurement during the particular measurement period. Thus, it is assumed that the temporal variation observed at the fixed site monitor reflects the seasonal pattern of the entire area. This procedure has been extensively described before [34].

GIS predictor data

Sampling site coordinates were determined manually in GIS by investigators who had personally visited the sites, ensuring that the position was accurate relative to roads, and buildings. For each site, we used GIS to calculate 164 geographic predictor variables. To do this, we made use of digital datasets which were available on the European and National (Switzerland) level. A more detailed description of the predictor variables and how they were derived can be found in Table 1.

The following GIS source data were available:

-

1.

Building density

Building footprints were available for the year 2008 from Vector25, the digital landscape model of Switzerland [36]. Data covered the whole country plus several km beyond the borders. We calculated the total area covered by building footprints in various buffers.

-

2.

Population density

Aggregated census population data at a 100×100m grid were available for 2011 from the Bundesamt für Statistik (BfS) http://www.bfs.admin.ch/bfs/portal/de/index/dienstleistungen/geostat/datenbeschreibung/volks-__gebaeude-0.html for Switzerland only. For the border regions, we obtained a 100×100m CORINE (COoRdination of INformation on the Environment) population grid for 2000 for the surrounding countries (http://www.eea.europa.eu/data-and-maps/data/population-density-disaggregated-with-corine-land-cover-2000-2#tab-gisdata). The two grids were combined, adjusting for an average 4.65 % population growth in France, Germany, Italy and Austria over 2000–2011 (Additional file 2).

-

3.

Land use

Digital land cover (CORINE CLC-2006 Version 13, 02–2010) data were available from the European Environment Agency for the year 2006 for all of Europe including Switzerland. We calculated the surface area of 6 land use categories (high density residential, low density residential, airport, industry, natural and port), following re-classifications previously adopted in the ESCAPE [5, 6] and APMOSPHERE [37] projects.

-

4.

Digital road network

A digital road network (Vector 25) with resolution 1:25,000 was available from Swiss Topo (Bundesamt fur Landestopografie) at a national level for the year 2008, with modeled traffic intensity data for the same year. Road class (total 32 classes) and percentage heavy traffic were also available. Roads were distinguished as major if the traffic intensity was ≥ 5000 vehicles/24 h.

-

5.

Altitude

A swiss elevation map with a resolution of 200 m was available from the Bundesamt für Landestopografie (Federal Office for Topography), SwissTOPO, website: http://www.swisstopo.admin.ch/internet/swisstopo/de/home/products/height/dhm25.html. Sampling points were directly overlaid with this height grid.

-

6.

Dispersion model

PolluMap Gaussian dispersion model estimates were available for NO2, PM2.5 and PM10 at a 200×200m resolution, for the year 2010. These dispersion models have been shown to compare well to measurements, and details of their development have been published previously [38]. Sampling points were directly overlaid with the grids.

Few sites were within 500 m of a port or industrial area, or within 25 m of a major road, resulting in many 0-values for the smaller buffers of these land-use classes and traffic variables. Occasionally, these variables are selected as predictor variables, but this causes coefficients to be estimated with a lot of weight given to relatively few sites. This causes high Cook’s distance for the sites in question, and often unstable coefficients, which do not hold up in cross-validation, resulting in large differences between the model R2 and LOOCV R2, as previously noted for other LUR studies [5, 6]. The same happens for predictor variables which include extreme outliers. Therefore, we a priori restricted the set of eligible predictors, eliminating those which 1) had fewer than five sites with a value other than the most common value (typically 0); 2) had a maximum which was more than 3 times the P10-P90 range above P90, or 3) had a minimum which was more than 3 times the P10-P90 range under P10. The latter 2 criteria are based on the generally accepted criteria for outliers (lower limit P25-3*(P75-P25), upper limit P75 + 3*(P75-P25), but are less restrictive. The elimination process was repeated for each model, and so eligible variables varied, based on the number of sites, and on the selection of sites included in the model.

LUR model development

Linear regression models were developed using a supervised forward selection procedure, first evaluating univariate regressions. The corrected bi-annual average concentrations were evaluated against all eligible potential predictors. The predictor giving the highest adjusted explained variance (adjusted R2) was selected for inclusion in the model if the direction of effect was as defined a priori and the P-value was <0.10, following procedures used before [5, 6]. Subsequently, we evaluated if any of the remaining predictor variables further improved the model adjusted R2 by at least 0.01. Again, we selected the predictor giving the highest gain in adjusted R2, if it had a P-value <0.10 and the expected direction of effect. Additional variables were not selected if they changed the direction of effect of one of the previously included variables. This process continued until there were no more variables which fit the criteria, and improved the model adjusted R2 by at least 0.01.

As final steps, variables with a p-value above 0.10 were removed from the model. Furthermore, we checked that all Variance Inflation Factors (VIF) were lower than 3 (ensuring the absence of collinearity), and that Cook’s Distance values were below 1, ensuring the absence of highly influential observations disproportionately influencing a specific variable’s coefficient.

All analyses were done using SAS version 9.4. The Moran’s I statistic was calculated to indicate spatial autocorrelation of the model residuals (with weights proportional to the inverse distance squared). In addition, the significant dependence of model residuals on the study area was tested for the multi-area models (PROC GLM, with class variable for study area).

The modelling procedure is described in more detail in Additional file 5, Table 1. In the first phase of our modelling effort, we combined all measurement sites where the pollutant in question was measured to fit a single LUR model for each pollutant. We considered, but did not force in PolluMap model NO2 (for pollutant NO2), Pollumap PM2.5 (for pollutants PM2.5 and PM2.5 absorbance) and PM10 (for pollutants PM10 and PMcoarse). This way, we ensured continuity of exposure characterization across areas, and reduced the risk of over-fitting, which is higher for models developed based on a limited number of training sites [39–41]. No dispersion model predictors were evaluated for the main PNC and LDSA models. In the second phase (model 2, Additional file 5, Table 1), we addressed those multi-area models which did not adequately model between-area variability (resulting in significant spatial autocorrelation in the residuals). We tried to force PolluMap NO2 estimates into the NO2 model, to consider PolluMap NO2 for the PM2.5 absorbance model, and to consider PM2.5 and PM10 PolluMap for the PNC and LDSA models, In the third phase, we introduced area-indicators to account for between-area variance. These indicators were introduced to the model as dummy variables for each site in the area of Geneva, Lugano or Wald (Aarau being the reference). Ultimately, in the 4th phase,, we fitted an area-specific NO2 model for each study area to optimally capture local contrasts. In addition, we fitted an alpine (study areas Davos and Montana) and a non-alpine model (study areas Aarau, Basel, Geneva, Lugano, Payerne, Wald), retaining the option of making model predictions outside of the eight study areas, for sites above and below 1000 m, respectively. This was only possible for NO2, where 40 measurement sites per study area were available. We did not consider building local models for pollutants with only few sites (≤20) available per study area. The models that were ultimately selected for the epidemiological application are shown in the main paper. The selection process and the disregarded alternative models are shown in Additional file 5.

Validation and quality assurance

Model performance was evaluated in three ways: firstly by leave-one-out cross validation (LOOCV), where each site was sequentially left out from the model while the included variables were left unchanged. Each site’s value was then predicted from the model based on the n-1 sites, after which the R2 between observed and predicted values was calculated. Secondly, and only for those models combining multiple study areas, all sites from one particular study area were left out (leave-one-area-out cross validation (LOAOCV), while the remaining sites from the other areas were used to predict their values. The variables in the model were left unchanged. The predicted concentrations from the LOAOCV were compared to the measured concentrations, showing the degree of over- or under-prediction per study area.

Thirdly, external validation was performed using a set of routine monitoring stations from the National air quality monitoring network (NABEL) and several cantonal air monitoring agencies. We gathered data from all available stations in Switzerland which had annual average concentrations available for both 2011 and 2012 for NO2 (102 stations), PM2.5 (10 stations), elemental carbon (EC) as a marker of PM2.5 absorbance (these metrics are known to correlate well from previous studies [3], 18 stations), PM10 (82 stations) and PNC (13 stations). PMcoarse was calculated as the difference between PM10 and PM2.5 for 10 stations. No routine monitoring data was available for LDSA, since this is not measured as part of any routine monitoring networks. The predictor variables selected for the models were also extracted for these stations. To avoid predictions outside of the plausible concentration range, predictor values were truncated to the minimum and maximum values present in the various training data sets which were used to develop the LUR models [42]. Predictors derived from dispersion models were not truncated: these were assumed to reflect regional background pollution levels, and were therefore allowed to vary beyond the range present in the training dataset. For PM2.5, PM10 and PMcoarse, LUR models were then applied to all available stations. The PM2.5 absorbance and PNC models, which included area indicators, were applied to all stations which fell within 20 km of any of the measurement sites used to develop the model for that specific area. This resulted in 5 successful predictions for PM2.5 absorbance and 4 for PNC. Reducing the inclusion criterion further (e.g. to 10 km) would have resulted in too few sites (≤3) to allow any comparison. For NO2, we applied the area-specific models to stations within 10 km of the sites used for the modelling, the alpine models for stations above 1000 m and the non-alpine model for stations below 1000 m. Model predictions were then compared to the two-year (2011–2012) measured average concentrations, to best represent the time period for which the models were developed.

Sensitivity analyses

To allow a more direct comparison of model performance between pollutants, we additionally fitted an NO2 model for the same four study areas which also contributed to the PM and UFP models (Basel, Geneva, Lugano and Wald). We explored whether applying the local NO2 models within 10 km of the study areas, and alpine and non-alpine models everywhere else, gave the most accurate predictions (in comparison to applying alpine and non-alpine models only, or applying local models within 20 km of the study areas).

Results

Pollutant distribution characteristics are shown in Table 2 and are available in more detail in Eeftens et al. 2015 [34]. For all pollutants, substantial variation was present between as well as within the areas. Within-area contrasts were largest for NO2 and PMcoarse, while between-area contrasts were the dominant source of variability for the PM2.5, PM2.5 absorbance, PM10, PNC and LDSA [34].

The area-specific, alpine and non-alpine LUR models and LOOCV validation statistics for NO2 are presented in Table 3. Combined-area LUR models for PM2.5, PM2.5 absorbance, PMcoarse, PNC and LDSA are shown in Table 4. Pearson correlations between different pollutants are shown in Table 5 for the measured and for the LUR predicted concentrations. Model performance by area and LOAOCV statistics are shown in Additional file 3. Descriptive statistics of the predictor variables used in the models can be found in Additional file 4.

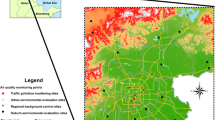

We observed substantial under- and over-prediction by study area, and significant spatial autocorrelation in the residuals for the eight-area NO2 model and the four-area PM2.5 absorbance, PNC and LDSA models (Additional file 5, Table 2). These models also showed dependence of the model residuals on study area, and substantial over- or under-prediction bias in some of the areas (Additional file 5, Table 3). We therefore ultimately fitted area-specific NO2 LUR models which could adequately capture local variability. Additionally, we fitted NO2 LUR models for alpine (Davos and Montana) and non-alpine (Aarau, Basel, Geneva, Lugano, Payerne, Wald) areas, which could be applied to predict NO2 exposures for addresses outside of the eight SAPALDIA areas, above and below 1000 m, respectively (Fig. 1). For PM2.5 absorbance, neither the PM2.5 nor the NO2 dispersion-model estimates were selected since neither explained the between area variability. For the novel markers of ultrafines (PNC and LDSA), allowing dispersion model estimates for PM10 and PM2.5 to enter the models resulted in no systematic under- of overprediction by area in the models (Additional file 5, Table 3). However, the inclusion of these dispersion-model estimates did not allow us to capture the spatial variation of ultrafines independently from the mass. To better explain between-area variability in these models, we introduced area-indicators for PM2.5 absorbance, PNC and LDSA.

Combined area NO2 models for alpine (Davos and Montana) and non-alpine (all others) areas yielded R2’s of 0.53 and 0.65, respectively, and performed similarly in cross-validation (LOOCV R 2 = 0.46 and 0.63, respectively) (Table 3). Area-specific NO2 models yielded an average R2 of 0.71 (range 0.52–0.89), which was higher than for the alpine and non-alpine NO2 models. LOOCV R2 values were on average 10 % (range 3–15 %) lower than model R2’s, indicating that models were generally less robust than the multi-area ones. None of the study area specific, nor the alpine or non-alpine NO2 models showed significant spatial autocorrelation. While the model residuals remained associated with the study area in the non-alpine model (Table 3), absolute over- and under-prediction for the different areas was small (Additional file 3). The alternative NO2 models without regional indicators, with PolluMap NO2 estimates, and with area indicators yielded an R2’s of 0.52, 0.52 and 0.64, respectively, but could not adequately explain between area variability, or were unable to predict exposures outside of the study areas (Additional file 5).

Multi-area LUR models explained a moderate amount of spatial variance for the different PM mass fractions PM2.5 (R 2 = 0.57), PM10 (R 2 = 0.63) and PMcoarse (R 2 = 0.45). Explained variance was higher for PM2.5 absorbance (R 2 = 0.81), PNC (R 2 = 0.87) and LDSA (R 2 = 0.91) (Table 4). For comparison, a model for NO2 based on the same four areas yielded a moderate R2 of 0.61 in sensitivity analyses (Additional file 5, Table 2). LOOCV R2 values were on average 5 % points (range 4–7 %) lower than model R2’s for all multi-area models, indicating that models were robust (Table 4). Multi-area models for PM2.5, PM10 and PMcoarse generally performed well in leave-one-area-out cross-validation, predicting similar amounts of within-area spatial variation, whether the area in question was included in the training dataset or not (Additional file 3). However, for all pollutants, the under- or over-prediction of absolute concentrations became more extreme if the area in question was left out from the training dataset (Additional file 3). Alternative models for PNC and LDSA which did not consider and regional indicators were unable to explain between area variability adequately. Alternative models which considered dispersion model predictors yielded high R2’s of 0.84 for PNC and 0.89 for LDSA. These models were able to predict between areas, but did not allow us to understand PNC and LDSA variability independently from PM mass. (Additional file 5).

LUR models were successfully applied to available routine monitoring sites and predicted 52 to 83 % of spatial variability for most pollutants (Table 6). Over- and under-prediction was small for NO2, PM2.5, PM2.5 absorbance and PM10 compared to the predicted concentration ranges. Over-prediction for PMcoarse was larger than for PM2.5 and PM10, likely because of the increased uncertainty that is introduced when subtracting PM2.5 from PM10. Sensitivity analysis showed that applying local NO2 models within 10 km of the study areas, and alpine and non-alpine models farther outside the study areas, outperformed applying alpine and non-alpine models only, or applying local models within 20 km of the study areas (Additional file 6). While the PNC model performed well in LOOCV, there was no association with the routinely measured averages at fixed monitoring sites. The four available routine monitors generally measured higher numbers of particles than our models predicted, and our PNC model predictions did not capture the spatial contrast measured by the four routine monitors well.

Discussion

We developed multi-area LUR models for NO2, PM2.5, PM2.5 absorbance, PM10, PMcoarse, PNC and LDSA with moderate to good explained variance across regions of Switzerland. Models for PM2.5 absorbance, PNC and LDSA explained the highest amount of spatial variance, and also performed best in LOOCV. Multi-area models for PM2.5, PM10 and PMcoarse adequately captured between-area contrasts, but area-indicators were necessary to adequately capture the between area contrasts for PM2.5 absorbance, PNC and LDSA. For NO2, study area specific models were preferable to any LUR models which combined areas, since they were able to explain more local spatial variance. However, they were most reliably applied within 10 km of the measurement sites which were used to develop the model. LOAOCV validation for the particulate air pollutant models shows that the multi-area models based on three areas predicted moderate to high amounts of spatial variation in the area which was left out. Validation using independent routine measurement sites shows that the developed models were able to predict moderate to high amounts of spatial variation, except for PNC. For LDSA, we could not verify our LUR model predictions against independent measurements.

LUR models for NO2, PM2.5, PM2.5 absorbance, PM10 and PMcoarse were previously developed based on measurement campaigns for the SAPALDIA study for the years 1993 and 2003 [10] and for the ESCAPE study for the years 2008 to 2010 [5, 6]. The previous SAPALDIA study also identified that fitting a combined-area model for NO2 was challenging because between area variability could not be adequately captured. The models developed in this paper generally performed similarly to previously developed models. Variations in performance may be explained by differences in the site selection procedure, different numbers of sites or the availability of better predictor variables. For a detailed discussion of this comparison and a table summarizing the performance of LUR models from the current and from previous studies in SAPALDIA study areas, we refer to Additional file 7.

There are few LUR models for PNC which can be compared with our model. In Switzerland, only one other LUR model was previously made for PNC [19]. This model was developed for the city of Basel and comprised repeated 20-min measurements at 57–59 measurement sites during three seasons. Median concentrations were log-transformed and a large fraction (50 %) of the (temporal) variability could be explained by the concurrently measured concentration at a nearby urban background site. Subsequently, spatial predictors derived from GIS, and other predictors related to season, meteorology, time and manually observed site characteristics were able to increase the explained variance to 58-68 % (depending on which predictor sets were evaluated) [19]. We cannot directly compare this model for PNC to ours, since it contained temporal as well as spatial terms and used log-transformed median concentrations. In comparison to PNC models from other countries [15–18, 20, 21], which are purely spatial, and used average, not-transformed concentration data, our models performed very well, yielding R2 values of 0.84 and 0.89, respectively. The Dutch PNC model for Amsterdam was the only other study based on weeklong observations per site [15], and had a notably higher explained variance (R 2 = 0.67, LOOCV R 2 = 0.57) than models which were based on shorter-term averages (10–60 min) [16, 18, 19, 21] or mobile monitoring [17, 20].

To understand the spatial predictors of PNC and LDSA independently of particle mass, our main models did not offer PM mass dispersion model estimates. However, in a sensitivity analysis, we allowed the use of dispersion model estimates for PM mass concentrations to enter the models, revealing a strong predictive power of the PM mass dispersion model estimate for the local levels of PNC and LDSA alike (Additional file 5). This may appear counterintuitive, given the rather different small-scale spatial distributions of freshly emitted particles – well indicated by PNC – as compared to the spatially more homogeneous PM2.5 and PM10. However, we found large spatial variability between study areas for long-term PNC and LDSA measurements [34]. The high predictive ability of dispersion model predictors in PNC and LDSA models and the high correlations in Table 5, show that between-area pollution contrasts are similar for NO2, PM mass, PNC and LDSA. The high spatial correlation between NO2, PNC and LDSA, which all characterize motor vehicle exhaust in the direct vicinity, was previously discussed in Eeftens et al. [34]. The selection strategy of choosing measurement sites close to residential locations, including side- and back-yards was aimed at capturing the residential variation of PNC and LDSA, rather than the high peaks along busy roads. Therefore, the spatial variability of PNC and LDSA also showed high correlations with other pollutants, such as PM2.5 and PM10 [34], and it could be predicted by dispersion modelled PM2.5 or PM10. (Additional file 5).

Variables related to traffic and major roads were selected in nearly all models, mostly in small buffer sizes (25 to 100 m). This has also been observed in earlier studies [5–7], and reflects the major impact of traffic on the adjacent roads on local air quality rather than reflecting the influence of ring roads and motorways. The dispersion model estimates from the Pollumap PM2.5 and PM10 models are found in the multi-area models for PM2.5, PM10 and PMcoarse, and account for a large fraction of the regional and between-area variability (Table 4). These models are too coarse to explain much of the local variability within study areas, and were only included in the area-specific NO2 models for Basel, Davos and Wald, but not as the first variable (Table 3). PM2.5, PM10, PMcoarse and PNC models further included large-buffer land-use variables on urban green and natural land, both serving as a sink of pollutants (negative coefficients). These land-use variables do not appear in the multi-area or local NO2 models. However, several of the local NO2 models include large to medium-size buffers of building density, population density and residential land, representing broader-scale urban activities. In addition, local NO2 models include industrial land-use (Aarau and Lugano) indicating local industrial sources. In the Basel and Lugano NO2 models, proximity to the Rhine river and Lugano lake was relevant as well. The Rhine above Basel hosts the most important port of Switzerland, and almost all the freight transport ships on the Rhine cross the city. The lake in Lugano is also used by boats, including regular boating services for tourists.

We evaluated model performance in three different ways: 1) using leave-one-out cross validation (LOOCV), which is widely used in previous LUR studies [5–7], 2) leave-one-area-out cross-validation (LOAOCV), as used by a previous study which combined study areas [22] and 3) by independent external validation, where the models were applied to independent sites from a routine measurement network. The second method gives an idea of how well the models predict within-area contrasts in areas which were not used to develop the model. The third method shows how well the models predict independent concentration contrasts at a national level.

Several recent studies pointed out that leave-one-out cross-validation overestimates the true model performance when applied to independent sites, especially if the models were developed based on a limited number of training sites, which increases the risk of over-fitting [39–41]. Our multi-area models are based on a minimum of 67 sites, and our study area specific models on a minimum of 37 sites, increasing the robustness of the models, which is apparent from a small difference between model R2 and LOOCV R2, as well as good results from LOAOCV and independent external validation.

Independent validation showed that we could predict moderate to large amounts of spatial variability for NO2, PM2.5, PM2.5 absorbance, PM10 and PMcoarse among routine monitoring sites, which were not used for model development. The comparison of our PNC model with the routine monitoring data must be interpreted with caution. In contrast to all other pollutants, the routine monitoring data for ultrafine particles are derived from different types of monitoring instruments, which measure different particle size ranges, and use optical or electric charge measurement principles. Therefore, the absence of agreement between the LUR model predictions and the routine monitoring for PNC likely highlights the limitation of comparing any metrics of absolute concentrations of ultrafine particles that are derived from multiple different instruments. Hence, this finding should not be interpreted as indicating poor quality of the LUR model.

We minimized the risk of over-fitting by setting a priori criteria for inclusion of variables into our model. These criteria were related to the expected direction of the effect, significance, and distribution of the predictor variables, producing models which were plausible, with coefficients that were robust and not dependent on a small number of sites. Strict selection criteria resulted in the inclusion of 3 to 5 predictors for the local NO2, PM2.5, PM10 and PMcoarse models, and 6 to 7 for the PM2.5 absorbance, PNC and LDSA models, which included area indicators. Similar screening strategies were used in other studies [43, 44].

While there are no strict rules for a minimum number of sampling sites, for developing LUR models, we observed that the model R2 and LOOCV R2 were larger for the area-specific NO2 models (based on up to 40 sites) and the alpine and non-alpine models than for the model fitted on all eight areas. The risk of over-fitting is larger when using smaller training sets in model building [39–41]. Therefore, we gave preference to the more robust multi-area models for the particulate pollutants, wherever systematic bias in predicting the between-area variability was not an issue. In LOAOCV, we show that the separate alpine and non-alpine NO2 models (Additional file 3) are indeed preferable to the NO2 model which combined all eight regions (Additional file 5), with lower over- and under-prediction by study area, and better explained variance within each area. In external validation, we further show that the alpine and non-alpine models predict better in the independent external validation (Table 6). The inability to properly model NO2 for the Alpine valleys with data from the flatter, much more populated areas of Switzerland and vice versa may be explained by the distinct topographies and meteorological conditions of alpine and non-alpine regions in Switzerland.

While the spatial distributions of different pollutants were already moderately to highly correlated in the SAPALDIA3 measurements, we observed that after applying the LUR models to the measurement sites on which they were fitted, the correlations between pollutants became even higher (Table 5). The LUR models all include different predictors, but these predictors are also correlated. Using these predictors to fit models “smoothes” the random variation in the measurements for all pollutants in a similar way. To not further complicate this challenge, our main models for PNC and LDSA did not allow dispersion modelled PM mass concentrations as predictors. The high correlations between predicted concentrations are a combination of the rather high spatial correlation of these pollutants (lower left part of Table 5) and the artifact of the modelling process. As a consequence, we may expect similarly high correlations when we apply our models to the residential sites of the SAPALDIA cohort participants. It is important to be aware of possible artifacts when using LUR modelled estimates in epidemiological health analyses, especially when fitting 2-pollutant models, for which the collinearity of the estimated exposures will be high. The implication is that epidemiological studies relying on LUR models are limited in disentangling the health effects attributable to the different pollutants, looking at PNC and LDSA independently from PM mass.

Because of the increasing number of LUR models, developed for different study areas, long-term cohort studies such as SAPALDIA have a multitude of exposure estimates available. To avoid multiple testing, we pre-selected models for the health analyses to prevent the use of too many models. Based on our LOAOCV (Additional file 3) and external validation results (Additional file 6), we concluded that long-term exposure to NO2 is best estimated by study-area specific models. We will use these models to predict long-term exposure to NO2 at the SAPALDIA home and work addresses which fall within 10 km of at least one of the measurement sites used to develop the model for that particular study area. For cohort participants who have moved further beyond the original SAPALDIA study areas, we will estimate the NO2 exposure based on the alpine and non-alpine models, respectively, which also performed adequately in external validation. The validity of the models presumably decreases with the distance from the sites used to develop the model. Therefore, an epidemiological sensitivity analysis may address if the effect estimates are affected if those people living further from the measurement sites are excluded. Exposure to PM2.5 absorbance, PNC and LDSA model will only be assigned to those coordinates within 20 km from the areas for which they were developed. Exposure to all particulate air pollutants (PM2.5, PM10 and PMcoarse) will be assigned to all homes and work addresses by applying the multi-area models developed for these pollutants to all subjects. Since these models are based on measurements made in Basel, Geneva, Lugano and Wald only, it will be necessary to verify in the epidemiological analyses that their application in the remaining four areas, as well as the rest of Switzerland does not affect the relationship with the health outcomes. This might be achieved by performing sensitivity analysis on only those participants who live within 10 km/20 km of the measurement sites which were used to develop the models. Appropriately, the Basel, Geneva, Lugano and Wald study areas are geographically diverse, which suggests the models can be applied in a wide geographical context. Moreover, external validation suggests that throughout Switzerland, the PM2.5, PM10 and PMcoarse models explain moderate to high amounts (R 2 = 0.83, 0.71 and 0.65, respectively) of spatial variation.

Conclusions

We were able to develop a set of LUR models capturing both the between and within-area variability in long-term pollutant concentrations in the study areas of the Swiss SAPALDIA cohort. Multi-area models for PM2.5, PM10, and PMcoarse, performed adequately in LOOCV, LOAOCV, and external validation. For PM2.5 absorbance, PNC and LDSA, area indicators were needed to capture the between area variance. Model and model performance (evaluated by LOOCV, and for PM2.5 absorbance by external validation) was high. For NO2, applying study-area specific models was preferable over applying combined-area alpine/non-alpine models. The study-area specific, alpine and non-alpine NO2 models, and multi-area models for the particulate air pollutants will be applied to derive exposure of participants of health studies such as the SAPALDIA cohort study. However, we observe high spatial correlations between the estimated pollutants, so the ability to fully disentangle their health effects may remain a challenge.

Abbreviations

- CORINE:

-

COoRdination of INformation on the Environment

- GIS:

-

Geographic information systems

- LDSA:

-

lung deposited surface area

- LOAOCV:

-

Leave-one-area-out cross-validation

- LOOCV:

-

leave-one-out cross-validation

- LUR:

-

land use regression

- NO2 :

-

nitrogen dioxide

- NOX :

-

nitrogen oxides

- PM2.5 :

-

mass concentration of particles less than 2.5 μm in size

- PM10 :

-

mass concentration of particles less than 10 μm in size

- PM2.5 absorbance:

-

measurement of the blackness of PM2.5 filters; a proxy for elemental carbon, which is the dominant light absorbing substance

- PMcoarse :

-

mass concentration of particles between 2.5 μm and 10 μm in size

- PNC:

-

particle number concentration

References

Cyrys J, Eeftens M, Heinrich J, Ampe C, Armengaud A, Beelen R, Bellander T, Beregszaszi T, Birk M, Cesaroni G et al. Variation of NO2 and NOx concentrations between and within 36 European study areas: Results from the ESCAPE study. Atmos Environ. 2012;62:374–90.

Eeftens M, Tsai M-Y, Ampe C, Anwander B, Beelen R, Bellander T, Cesaroni G, Cirach M, Cyrys J, de Hoogh K et al. Spatial variation of PM2.5, PM10, PM2.5 absorbance and PMcoarse concentrations between and within 20 European study areas and the relationship with NO2 – results of the ESCAPE project. Atmos Environ. 2012;62:303–17.

Hoek G, Meliefste K, Cyrys J, Lewné M, Bellander T, Brauer M, Fischer P, Gehring U, Heinrich J, van Vliet P. Spatial variability of fine particle concentrations in three European areas. Atmos Environ. 2002;36(25):4077–88.

Jerrett M, Burnett RT, Ma R, Pope III CA, Krewski D, Newbold KB, Thurston G, Shi Y, Finkelstein N, Calle EE. Spatial analysis of air pollution and mortality in Los Angeles. Epidemiology. 2005;16(6):727–36.

Beelen R, Hoek G, Vienneau D, Eeftens M, Dimakopoulou K, Pedeli X, Tsai M-Y, Künzli N, Schikowski T, Marcon A et al. Development of NO2 and NOx land use regression models for estimating air pollution exposure in 36 study areas in Europe – The ESCAPE project. Atmos Environ. 2013;72:10–23.

Eeftens M, Beelen R, de Hoogh K, Bellander T, Cesaroni G, Cirach M, Declercq C, Dedele A, Dons E, de Nazelle A et al. Development of Land Use Regression models for PM2.5, PM2.5 absorbance, PM10 and PMcoarse in 20 European study areas; results of the ESCAPE project. Environ Sci Technol. 2012;46(20):11195–205.

Hoek G, Beelen R, de Hoogh K, Vienneau D, Gulliver J, Fischer P, Briggs D. A review of land-use regression models to assess spatial variation of outdoor air pollution. Atmos Environ. 2008;42(33):7561–78.

Jerrett M, Arain A, Kanaroglou P, Beckerman B, Potoglou D, Sahsuvaroglu T, Morrison J, Giovis C. A review and evaluation of intraurban air pollution exposure models. J Expo Anal Environ Epidemiol. 2004;15(2):185–204.

Ryan PH, LeMasters GK. A review of land-use regression models for characterizing intraurban air pollution exposure. Inhal Toxicol. 2007;19(S1):127–33.

Liu S, Tsai M-Y, Keidel D, Gemperli A, Ineichen A, Hazenkamp-von Arx M, Bayer-Oglesby L, Rochat T, Künzli N, Ackermann-Liebrich U et al. Long-term exposure models for traffic related NO2 across geographically diverse areas over separate years. Atmos Environ. 2012;46:460–71.

Beelen R, Hoek G, van den Brandt PA, Goldbohm RA, Fischer P, Schouten LJ, Jerrett M, Hughes E, Armstrong B, Brunekreef B. Long-term effects of traffic-related air pollution on mortality in a Dutch cohort (NLCS-AIR study). Environ Health Perspect. 2008;116(2):196.

Beelen R, Raaschou-Nielsen O, Stafoggia M, Andersen ZJ, Weinmayr G, Hoffmann B, Wolf K, Samoli E, Fischer P, Nieuwenhuijsen M. Effects of long-term exposure to air pollution on natural-cause mortality: an analysis of 22 European cohorts within the multicentre ESCAPE project. Lancet. 2013;383:785–95.

Henderson SB, Beckerman B, Jerrett M, Brauer M. Application of land use regression to estimate long-term concentrations of traffic-related nitrogen oxides and fine particulate matter. Environ Sci Technol. 2007;41(7):2422–8.

Raaschou-Nielsen O, Andersen ZJ, Beelen R, Samoli E, Stafoggia M, Weinmayr G, Hoffmann B, Fischer P, Nieuwenhuijsen MJ, Brunekreef B et al. Air pollution and lung cancer incidence in 17 European cohorts: prospective analyses from the European Study of Cohorts for Air Pollution Effects (ESCAPE). Lancet Oncol. 2013;14(9):813–22.

Hoek G, Beelen R, Kos G, Dijkema M, Zee SC, Fischer PH, Brunekreef B. Land use regression model for ultrafine particles in Amsterdam. Environ Sci Technol. 2010;45(2):622–8.

Abernethy RC, Allen RW, McKendry IG, Brauer M. A land use regression model for ultrafine particles in Vancouver, Canada. Environ Sci Technol. 2013;47(10):5217–25.

Patton AP, Collins C, Naumova EN, Zamore W, Brugge D, Durant JL. An hourly regression model for ultrafine particles in a near-highway urban area. Environ Sci Technol. 2014;48(6):3272–80.

Rivera M, Basagaña X, Aguilera I, Agis D, Bouso L, Foraster M, Medina-Ramón M, Pey J, Künzli N, Hoek G. Spatial distribution of ultrafine particles in urban settings: a land use regression model. Atmos Environ. 2012;54:657–66.

Ragettli MS, Ducret-Stich RE, Foraster M, Morelli X, Aguilera I, Basagaña X, Corradi E, Ineichen A, Tsai M-Y, Probst-Hensch N. Spatio-temporal variation of urban ultrafine particle number concentrations. Atmos Environ. 2014;96:275–83.

Zwack LM, Paciorek CJ, Spengler JD, Levy JI. Modeling spatial patterns of traffic-related air pollutants in complex urban terrain. 2011.

Weichenthal S, Farrell W, Goldberg M, Joseph L, Hatzopoulou M. Characterizing the impact of traffic and the built environment on near-road ultrafine particle and black carbon concentrations. Environ Res. 2014;132:305–10.

Wang M, Beelen R, Bellander T, Birk M, Cesaroni G, Cirach M, Cyrys J, de Hoogh K, Declercq C, Dimakopoulou K. Performance of multi-city land use regression models for nitrogen dioxide and fine particles. Environ Health Perspect. 2014;122:843–9.

Vienneau D, de Hoogh K, Bechle MJ, Beelen R, van Donkelaar A, Martin RV, Millet DB, Hoek G, Marshall JD. Western European land use regression incorporating satellite- and ground-based measurements of NO2 and PM10. Environ Sci Technol. 2013;47(23):13555–64.

Vienneau D, de Hoogh K, Beelen R, Fischer P, Hoek G, Briggs D. Comparison of land-use regression models between Great Britain and the Netherlands. Atmos Environ. 2010;44(5):688–96.

Poplawski K, Gould T, Setton E, Allen R, Su J, Larson T, Henderson S, Brauer M, Hystad P, Lightowlers C et al. Intercity transferability of land use regression models for estimating ambient concentrations of nitrogen dioxide. J Expos Sci Environ Epidemiol. 2008;19(1):107–17.

Allen RW, Amram O, Wheeler AJ, Brauer M. The transferability of NO and NO2 land use regression models between cities and pollutants. Atmos Environ. 2011;45(2):369–78.

Wilton D, Szpiro A, Gould T, Larson T. Improving spatial concentration estimates for nitrogen oxides using a hybrid meteorological dispersion/land use regression model in Los Angeles, CA and Seattle, WA. Sci Total Environ. 2010;408(5):1120–30.

Schwarze P, Øvrevik J, Låg M, Refsnes M, Nafstad P, Hetland R, Dybing E. Particulate matter properties and health effects: consistency of epidemiological and toxicological studies. Hum Exp Toxicol. 2006;25(10):559–79.

Stoeger T, Reinhard C, Takenaka S, Schroeppel A, Karg E, Ritter B, Heyder J, Schulz H. Instillation of six different ultrafine carbon particles indicates a surface area threshold dose for acute lung inflammation in mice. Environ Health Perspect. 2006;114:328–33.

Wittmaack K. In search of the most relevant parameter for quantifying lung inflammatory response to nanoparticle exposure: particle number, surface area, or what? Environ Health Perspect. 2007;115(2):187–94.

Oberdörster G, Oberdörster E, Oberdörster J. Nanotoxicology: an emerging discipline evolving from studies of ultrafine particles. Environ Health Perspect. 2005;823–839.

Ackermann-Liebrich U. Schweizer Studie “Luftverschmutzung und Atemwegserkrankungen bei Erwachsenen: SAPALDIA.”. Atemw Lungenkr. 1993;5:190–4.

Martin B, Ackermann-Liebrich U, Leuenberger P, Künzli N, Stutz E, Keller R, Zellweger J-P, Wüthrich B, Monn C, Blaser K et al. SAPALDIA: methods and participation in the cross-sectional part of the swiss study on Air pollution and lung diseases in adults. Soz Praventivmed. 1997;42(2):67–84.

Eeftens M, Phuleria HC, Meier R, Aguilera I, Corradi E, Davey M, Ducret-Stich R, Fierz M, Gehrig R, Ineichen A. Spatial and temporal variability of ultrafine particles, NO2, PM2.5, PM2.5 absorbance, PM10 and PMcoarse in Swiss study areas. Atmos Environ. 2015;111:60–70.

Fierz M, Houle C, Steigmeier P, Burtscher H. Design, calibration, and field performance of a miniature diffusion size classifier. Aerosol Sci Tech. 2011;45(1):1–10.

Landestopographie Bf. VECTOR25–Das digitale Landschaftsmodell der Schweiz. Wabern: Informationsbroschüre des Bundesamts FÜR Landestopographie der Schweiz; 2000.

Beelen R, Hoek G, Pebesma E, Vienneau D, de Hoogh K, Briggs DJ. Mapping of background air pollution at a fine spatial scale across the European Union. Sci Total Environ. 2009;407(6):1852–67.

Liu L-JS, Curjuric I, Keidel D, Heldstab J, Künzli N, Bayer-Oglesby L, Ackermann-Liebrich U, Schindler C, team S. Characterization of source-specific air pollution exposure for a large population-based Swiss cohort (SAPALDIA). Environ Health Perspect. 2007;115:1638–45.

Basagaña X, Rivera M, Aguilera I, Agis D, Bouso L, Elosua R, Foraster M, de Nazelle A, Nieuwenhuijsen M, Vila J et al. Effect of the number of measurement sites on land use regression models in estimating local air pollution. Atmos Environ. 2012;54:634–42.

Johnson M, Isakov V, Touma JS, Mukerjee S, Özkaynak H. Evaluation of land-use regression models used to predict air quality concentrations in an urban area. Atmos Environ. 2010;44(30):3660–8.

Wang M, Beelen R, Eeftens M, Meliefste K, Hoek G, Brunekreef B. Systematic evaluation of land use regression models for NO2. Environ Sci Technol. 2012;46(8):4481–9.

Wang M, Beelen R, Basagana X, Becker T, Cesaroni G, de Hoogh K, Dedele A, Declercq C, Dimakopoulou K, Eeftens M et al. Evaluation of land use regression models for NO2 and particulate matter in 20 European study areas: the ESCAPE project. Environ Sci Technol. 2013;47(9):4357–64.

Mercer LD, Szpiro AA, Sheppard L, Lindström J, Adar SD, Allen RW, Avol EL, Oron AP, Larson T, Liu LJS et al. Comparing universal kriging and land-use regression for predicting concentrations of gaseous oxides of nitrogen (NOx) for the Multi-Ethnic Study of Atherosclerosis and Air Pollution (MESA Air). Atmos Environ. 2011;45(26):4412–20.

Sampson PD, Szpiro AA, Sheppard L, Lindström J, Kaufman JD. Pragmatic estimation of a spatio-temporal air quality model with irregular monitoring data. Atmos Environ. 2011;45(36):6593–606.

Beelen R, Voogt M, Duyzer J, Zandveld P, Hoek G. Comparison of the performances of land use regression modelling and dispersion modelling in estimating small-scale variations in long-term air pollution concentrations in a Dutch urban area. Atmos Environ. 2010;44(36):4614–21.

de Hoogh K, Wang M, Adam M, Badaloni C, Beelen R, Birk M, Cesaroni G, Cirach M, Declercq C, Dedele A, et al. Development of land use regression models for particle composition in twenty study areas in Europe. Environ Sci Technol. 2013;47(11):5778–86.

Acknowledgements

We thank the field workers collecting the exposure data: Marianne Rutschi, Aliocha Schaffner, Siventher Jeyachchandren, Helen Graf, Dominik Bürgi, Tobias Heckelmann, Luca Hüsler, Joseph Quinlan, Gregor Fessler and Alain Jost. We also honor Prof. Lee-Jane Sally Liu (1965–2011) who initiated this exposure assessment project. We also thank Dr. Robert Gehrig for his long-term contribution to this project and to SAPALDIA in general and the study participants for their help during the data collection period. The authors declare they have no actual or potential competing financial interests.

The SAPALDIA team

Study directorate: NM Probst-Hensch (PI; e/g); T Rochat (p), N Künzli (e/exp), C Schindler (s), JM Gaspoz (c)

Scientific team: JC Barthélémy (c), W Berger (g), R Bettschart (p), A Bircher (a), O Brändli (p), C Brombach (n), L Burdet (p), M Frey (p), U Frey (pd), MW Gerbase (p), D Gold (e), E de Groot (c), W Karrer (p), M Kohler (p), B Martin (pa), D Miedinger (o), L Nicod (p), M Pons (p), F Roche (c), T Rothe (p), P Schmid-Grendelmeyer (a), A Schmidt-Trucksäss (pa), A Turk (p), J Schwartz (e), D. Stolz (p), P Straehl (exp), JM Tschopp (p), A von Eckardstein (cc), E Zemp Stutz (e).

Scientific team at coordinating centers: M Adam (e/g), I Aguilera, C Autenrieth (pa), PO Bridevaux (p), D Carballo (c), I Curjuric (e), J Dratva (e), R Ducret-Stich (s), E Dupuis Lozeron (s), M Eeftens (exp), I Eze (e), E Fischer (g), M Germond (s), L Grize (s), S Hansen (e), A Hensel (s), M Imboden (g), A Ineichen (exp), D Keidel (s), A Kumar (g), N Maire (s), A Mehta (e), R Meier (exp), E Schaffner (s), T Schikowski (e), GA Thun (g), M Tarantino (s), M Tsai (exp)

(a) allergology, (c) cardiology, (cc) clinical chemistry, (e) epidemiology, (exp) exposure, (g) genetic and molecular biology, (m) meteorology, (n) nutrition, (o) occupational health, (p) pneumology, (pa) physical activity, (pd) pediatrics, (s) statistics

Local fieldworkers : Aarau: S Brun, G Giger, M Sperisen, M Stahel, Basel: C Bürli, C Dahler, N Oertli, I Harreh, F Karrer, G Novicic, N Wyttenbacher, Davos: A Saner, P Senn, R Winzeler, Geneva: F Bonfils, B Blicharz, C Landolt, J Rochat, Lugano: S Boccia, E Gehrig, MT Mandia, G Solari, B Viscardi, Montana: AP Bieri, C Darioly, M Maire, Payerne: F Ding, P Danieli A Vonnez, Wald: D Bodmer, E Hochstrasser, R Kunz, C Meier, J Rakic, U Schafroth, A Walder.

Administrative staff: C Gabriel, R Gutknecht.

Research support

The Swiss National Science Foundation (grants no 33CSCO-134276/1, 33CSCO-108796, 324730_135673, 3247BO-104283, 3247BO-104288, 3247BO-104284, 3247–065896, 3100–059302, 3200–052720, 3200–042532, 4026–028099, PMPDP3_129021/1, PMPDP3_141671/1), the Federal Office for the Environment, the Federal Office of Public Health, the Federal Office of Roads and Transport, the canton’s government of Aargau, Basel-Stadt, Basel-Land, Geneva, Luzern, Ticino, Valais, and Zürich, the Swiss Lung League, the canton’s Lung League of Basel Stadt/Basel Landschaft, Geneva, Ticino, Valais, Graubünden and Zurich, Stiftung ehemals Bündner Heilstätten, SUVA, Freiwillige Akademische Gesellschaft, UBS Wealth Foundation, Talecris Biotherapeutics GmbH, Abbott Diagnostics, European Commission 018996 (GABRIEL), Wellcome Trust WT 084703MA.

Author information

Authors and Affiliations

Corresponding author

Additional information

Competing interests

The authors declare they have no competing interests.

Authors’ contributions

HP, MYT, NK and NPH designed the study. AI, HP and MYT coordinated and performed the air pollution measurements which form the basis of the study. ME, IA, MYT and RM evaluated the air pollution and GIS data-quality for analysis. ME, CS, IA, MYT, NK, RDS and RM developed the analysis plan (including statistical methods) and interpreted the results. DK and RDS derived the SAPALDIA cohort’s geographical information. ME developed the LUR models and drafted the manuscript. All authors read and approved the final manuscript.

Additional files

Additional file 1:

Study area descriptions, numbers of sites and measurement periods. (DOCX 17 kb)

Additional file 2:

Population change in bordering countries of Switzerland over 2000–2011. (DOCX 16 kb)

Additional file 3:

Explained variance by area and leave-one-area-out cross-validation (LOAOCV) validation. (DOCX 19 kb)

Additional file 4:

Descriptive statistics of predictor variables used in alpine, non-alpine and area-specific NO2 LUR models, and (continued) in multi-area PM2.5, PM2.5 absorbance, PM10, PMcoarse, PNC and LDSA LUR models. (DOCX 25 kb)

Additional file 5:

Process of model selection and description and evaluation of alternative LUR models, which were not eventually selected. (DOCX 34 kb)

Additional file 6:

Results of independent external validation for NO2, using different cut-off distances for the application of local versus alpine/non-alpine LUR models (n = 102). (DOCX 14 kb)

Additional file 7:

Overview of model performance of LUR models built previously for Swiss study areas. (DOCX 60 kb)

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

Eeftens, M., Meier, R., Schindler, C. et al. Development of land use regression models for nitrogen dioxide, ultrafine particles, lung deposited surface area, and four other markers of particulate matter pollution in the Swiss SAPALDIA regions. Environ Health 15, 53 (2016). https://doi.org/10.1186/s12940-016-0137-9

Received:

Accepted:

Published:

DOI: https://doi.org/10.1186/s12940-016-0137-9