Abstract

Background

Following the recent development of a new approach to quantitative analysis of IgG concentrations in bovine serum using transmission infrared spectroscopy, the potential to measure IgG levels with technology and a device better suited to field use was investigated. A method using attenuated total reflectance infrared (ATR) spectroscopy in combination with partial least squares (PLS) regression was developed to measure bovine serum IgG concentrations. ATR spectroscopy has a distinct ease-of-use advantage that may open the door to routine point-of-care testing. Serum samples were collected from calves and adult cows and tested by a reference radial immunodiffusion (RID) method, and their ATR spectra were acquired. The spectra were linked to the RID-IgG concentrations and then split into two sets: calibration and prediction. The calibration set was used to build a calibration model, while the prediction set was used to assess the predictive performance and accuracy of the final model. The procedure was repeated for various spectral data preprocessing approaches.

Results

For the prediction set, the Pearson and concordance correlation coefficients between the IgG concentrations measured by RID and predicted by ATR spectroscopy were both 0.93. The Bland–Altman plot revealed no obvious systematic bias between the two methods. ATR spectroscopy showed a sensitivity of 88 %, specificity of 100 %, and accuracy of 94 % for detection of failure of transfer of passive immunity (FTPI), using IgG <1000 mg/dL as the FTPI cut-off value.

Conclusion

ATR spectroscopy in combination with multivariate data analysis shows potential as an alternative approach for rapid quantification of IgG concentrations in bovine serum and the diagnosis of FTPI in calves.


Background

Immunoglobulins are glycoproteins produced by B-lymphocytes and are a crucial component of the host’s adaptive immune system [1]. Immunoglobulin G (IgG) is the predominant class of immunoglobulin involved in the transfer of passive immunity to newborn calves via colostrum [2]. Failure of transfer of passive immunity (FTPI) occurs when calves fail to ingest or absorb sufficient IgG from colostrum, resulting in serum IgG concentrations <1000 mg/dL [3]. FTPI is a major predisposing risk factor for early neonatal losses associated with gastroenteritis, pneumonia, and septicemia [3, 4]. Reduced long-term productivity, decreased milk yield, and increased culling rates during first lactation have also been associated with FTPI [5, 6]. Monitoring IgG levels is important for assessing farm colostrum management and for reducing productivity losses associated with calfhood diseases [7].

A number of methods have been used to measure IgG concentrations in bovine serum. The most widely accepted reference method is the radial immunodiffusion (RID) assay, which provides direct measurement of IgG concentrations in bovine serum [8]. However, RID has significant drawbacks, including the time needed to obtain results (18–24 h), the use of labile reagents, high cost [9, 10], and discrepancies among RID kits due to inaccuracies in the internal standards [11]. Other methods, such as refractometry, the zinc sulfate turbidity test, the sodium sulfite turbidity test, serum γ-glutamyl transferase activity, the whole blood glutaraldehyde coagulation test, and ELISA, have been used to identify calves with FTPI with varying degrees of accuracy [2, 12, 13]. Despite these options, there is a need for an accurate, simple, rapid, and cost-effective method for quantifying IgG concentrations in bovine serum and diagnosing FTPI in dairy calves that can be readily translated to the farm, clinic, or small laboratory setting.

Infrared (IR) spectroscopy is one of the most important tools in modern analytical chemistry and is used for quantitative and qualitative analyses of many sample types [14, 15]. Various sampling techniques, including transmission and attenuated total reflectance methods, are used to obtain IR spectra from these samples. In transmission techniques, the sample is placed directly in the path of the IR beam, and the transmitted energy is measured to generate a spectrum [16, 17]. The ATR technique differs in that it measures the changes that occur in a totally internally reflected IR beam when the beam comes into contact with a sample [16, 17].
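The depth of sample probed by the totally internally reflected beam can be estimated with the standard expression for the penetration depth of the evanescent wave, d_p = λ / (2π n1 √(sin²θ − (n2/n1)²)), where n1 and n2 are the refractive indices of the ATR element and the sample and θ is the angle of incidence. The short Python sketch below evaluates it; the refractive indices (2.4 for the element, 1.5 for a dried protein film) and the 45° angle are illustrative assumptions, not the specifications of the instrument used in this study.

```python
import math


def penetration_depth_um(wavenumber_cm1, n_crystal=2.4, n_sample=1.5, angle_deg=45.0):
    """Evanescent-wave penetration depth d_p in micrometres.

    d_p = lambda / (2 * pi * n1 * sqrt(sin^2(theta) - (n2 / n1)^2))
    Default refractive indices and angle are illustrative assumptions only.
    """
    wavelength_um = 1e4 / wavenumber_cm1              # convert cm^-1 to micrometres
    theta = math.radians(angle_deg)
    radicand = math.sin(theta) ** 2 - (n_sample / n_crystal) ** 2
    if radicand <= 0:
        # Below the critical angle there is no total internal reflection.
        raise ValueError("angle of incidence is below the critical angle")
    return wavelength_um / (2 * math.pi * n_crystal * math.sqrt(radicand))


for nu in (3300, 1650, 1550):
    print(f"{nu} cm^-1: d_p = {penetration_depth_um(nu):.2f} um")
```

Under these assumptions d_p is of the order of 1 µm in the amide region and halves when the wavenumber doubles, so the effective pathlength is set largely by the optics and refractive indices rather than by the thickness of a dried film, provided the film is thicker than d_p.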

Transmission IR spectroscopy, in combination with partial least squares (PLS) regression, has been used to measure total protein, glucose, albumin, triglyceride, cholesterol, and urea in human serum samples [14, 18, 19]. In veterinary applications, it is also widely used for nutrient analysis of milk [20, 21] and for screening dairy cows for metabolic diseases such as ketosis [22]. However, technical difficulties commonly encountered with transmission techniques can make routine collection of high-quality spectra in the field difficult [23]. These include practical issues in filling and cleaning short-pathlength cells (for liquid samples), uncertainties in optical pathlength (for dried films), and the time required for sample submission and preparation [16, 17]. In contrast, attenuated total reflectance infrared (ATR) spectroscopy by its nature does not have issues associated with optical pathlength or sample thickness. The measurement is simple and fast and requires little or no sample preparation [24]. For these reasons, ATR has emerged as one of the most commonly used IR sampling techniques, with applications addressing various clinical diagnostic problems in human and veterinary medicine [25–27]. Most recently, robust, small-footprint ATR equipment has been manufactured that is ideally suited for field use on the farm or in the veterinary clinic or small laboratory [28].

The objectives of this study were to investigate the potential use of ATR, in combination with multivariate data analysis, for the rapid quantification of IgG concentrations in bovine serum, and for the diagnosis of FTPI in dairy calves. This study also investigated the effects of different spectral data pre-processing techniques on model performance and predictive accuracy.

Methods

Serum samples

Serum samples (n = 250) from Holstein-Friesian calves and adult dairy cows were used. Calf samples (n = 208, including eight collected prior to colostrum ingestion) had previously been collected for companion studies [29, 30], and adult dairy cow samples (n = 42) had been collected for the Maritime Quality Milk laboratory (Charlottetown, Prince Edward Island, Canada). Samples were stored at −80 °C at the University of Prince Edward Island until use. All samples were tested at the same time, using a radial immunodiffusion (RID) assay to quantify the serum IgG level and ATR spectroscopy to acquire the IR spectrum of each sample. The research protocol was reviewed and approved by the Animal Care Committee of the University of Prince Edward Island (Protocol number: 6006206).

RID assay for IgG

A commercial RID assay (Bovine IgG RID Kit, Triple J Farms; Bellingham, WA) was used as the reference method for determining serum IgG concentrations. Serum samples were thawed at room temperature and vortexed for 10 s. IgG was then measured using the bovine RID assay, which has a working range of 196–2748 mg/dL. For the eight samples collected prior to colostrum ingestion, IgG was quantified using the Bovine Ultra Low Level IgG RID Kit, which has a working range of 10–100 mg/dL. Each RID assay was performed according to the manufacturer’s instructions using 5 μL of undiluted serum per well. The diameters of the precipitation zones were measured after 18–24 h by the same individual, using a handheld caliper. Each sample and each of the manufacturer’s standards was tested in five replicates, with one replicate per RID plate, and the mean values were calculated. The assay standards were used to construct a calibration curve from which the IgG concentrations of the study serum samples were determined.
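A minimal sketch of an RID calibration curve, assuming the Mancini-type model in which the squared ring diameter is linear in IgG concentration. The text does not state which curve form was used, and a kit insert may specify another (for example, diameter against log concentration); the function names and the standard values below are hypothetical.

```python
import numpy as np


def fit_rid_curve(std_conc, std_diam_reps):
    """Least-squares line: concentration = slope * d^2 + intercept.

    std_diam_reps: array (n_standards, n_replicates) of ring diameters in mm.
    """
    d_mean = np.asarray(std_diam_reps, dtype=float).mean(axis=1)  # mean of replicates
    slope, intercept = np.polyfit(d_mean ** 2, np.asarray(std_conc, dtype=float), deg=1)
    return slope, intercept


def rid_igg(sample_diam_reps, slope, intercept):
    """IgG (mg/dL) for one sample from the mean of its replicate diameters."""
    d_mean = float(np.mean(sample_diam_reps))
    return slope * d_mean ** 2 + intercept


# Hypothetical standards (NOT kit values), generated so that d^2 = 20 + 0.02 * concentration
std_conc = np.array([250.0, 750.0, 1500.0, 2500.0])
true_d = np.sqrt(20.0 + 0.02 * std_conc)
jitter = np.array([-0.02, -0.01, 0.0, 0.01, 0.02])    # zero-mean scatter across 5 replicates
std_reps = true_d[:, None] + jitter[None, :]

slope, intercept = fit_rid_curve(std_conc, std_reps)
sample_reps = np.sqrt(40.0) + jitter                   # diameters corresponding to 1000 mg/dL
print(round(rid_igg(sample_reps, slope, intercept), 1))
```

Averaging the five replicate diameters before squaring is one reasonable choice; averaging the concentrations derived from each replicate would be an alternative.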

ATR assay for IgG

Infrared spectra were acquired using a customized 3-bounce attenuated total reflectance mid-infrared spectrometer (Cary 630 IR spectrometer, 3B Diamond ATR Module ZnSe element, Agilent Technologies, Danbury, Connecticut). Thawed serum samples were diluted 1:1 with sterile deionized water and vortexed at up to 2700 rpm for 10 s to homogenize them. Following dilution, 5 μL aliquots were spread evenly onto the ATR element of the spectrometer’s optics module and dried with a stream of air from a domestic hair dryer. Each sample dried completely within 3–4 min, forming a thin film on the optical element.

Spectra were collected over the wavenumber range 4000–650 cm−1 at a nominal resolution of 8 cm−1. For each spectrum, 32 scans were co-added to increase the signal-to-noise ratio. Before each measurement, the stage of the optics module was cleaned with 100 % ethanol and allowed to dry, and a new background spectrum was collected. Each serum sample was tested in five replicates. A total of 1250 (250 × 5) ATR spectra were collected and saved in GRAMS spectrum (SPC) format (GRAMS/AI version 7.02, Thermo Fisher Scientific) and then converted to PRN (printable) format. The PRN spectral data were imported into MATLAB® (version R2012b, MathWorks, Natick, MA), and further data analysis was performed using scripts written by the authors.
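Co-adding scans reduces uncorrelated (white) noise by roughly the square root of the number of scans, so 32 co-added scans should lower the noise about 5.7-fold. The simulation below illustrates this with a synthetic sine-wave "spectrum" and Gaussian noise; it is an idealized sketch, not a model of the actual detector noise of the spectrometer used.

```python
import numpy as np

rng = np.random.default_rng(0)
n_scans, n_points = 32, 5000
signal = np.sin(np.linspace(0, 4 * np.pi, n_points))               # synthetic stand-in spectrum
scans = signal + rng.normal(0.0, 0.05, size=(n_scans, n_points))   # independent noise per scan

single_noise = np.std(scans[0] - signal)                 # noise in one scan
coadded_noise = np.std(scans.mean(axis=0) - signal)      # noise after averaging 32 scans
print(f"noise reduction: {single_noise / coadded_noise:.2f} "
      f"(sqrt(32) = {np.sqrt(32):.2f})")
```

Real spectra also contain correlated noise and drift, which co-adding does not remove, so the sqrt(N) gain is an upper bound.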

Spectral pre-processing

Several pre-processing methods, including Savitzky–Golay smoothing (2nd-order polynomial, 9 points), first- and second-order derivative spectra [31], and two normalization methods (standard normal variate (SNV) and vector normalization) [32, 33], were applied to examine their effects, if any, on the calibration models. This was followed by selection of the 3700–2600 cm−1 and 1800–1300 cm−1 spectral regions, which exhibited the strongest absorptions in the original spectra. Because five replicate spectra were available for each serum sample, spectral outliers were detected using Dixon’s Q-test [34, 35] at each wavenumber. If a spectrum’s absorbance values were outliers (95 % confidence level) at more than 50 % of its data points, the complete spectrum was treated as an outlier and excluded from further analysis. The average of the remaining replicate spectra for each sample was used for subsequent analysis.
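The pre-processing chain described above can be sketched in Python with NumPy. This is a hypothetical re-implementation, not the authors’ MATLAB scripts: the Savitzky–Golay weights come from a local least-squares quadratic fit, edges are handled by repeating end values, SNV uses the sample standard deviation, vector normalization divides by the Euclidean norm, and 0.710 is assumed to be the commonly tabulated 95 % Dixon’s Q critical value for five observations.

```python
import numpy as np

Q_CRIT_95_N5 = 0.710  # assumed tabulated Dixon's Q critical value, 95 %, n = 5


def savgol_weights(window=9, polyorder=2):
    """Savitzky-Golay smoothing weights from a local least-squares polynomial fit."""
    half = window // 2
    x = np.arange(-half, half + 1)
    A = np.vander(x, polyorder + 1, increasing=True)  # columns 1, x, x^2
    return np.linalg.pinv(A)[0]                      # fitted value at the window centre


def savgol_smooth(spectrum, window=9, polyorder=2):
    """Smooth one spectrum; edges handled by repeating end values (a simplification)."""
    h = savgol_weights(window, polyorder)
    padded = np.pad(np.asarray(spectrum, dtype=float), window // 2, mode="edge")
    return np.convolve(padded, h[::-1], mode="valid")


def snv(spectrum):
    """Standard normal variate: centre and scale each spectrum on its own."""
    s = np.asarray(spectrum, dtype=float)
    return (s - s.mean()) / s.std(ddof=1)


def vector_normalize(spectrum):
    """Scale a spectrum to unit Euclidean norm."""
    s = np.asarray(spectrum, dtype=float)
    return s / np.linalg.norm(s)


def select_regions(wavenumbers, spectrum, regions=((2600, 3700), (1300, 1800))):
    """Keep only points inside the listed wavenumber regions (cm^-1)."""
    wavenumbers = np.asarray(wavenumbers, dtype=float)
    mask = np.zeros(len(wavenumbers), dtype=bool)
    for lo, hi in regions:
        mask |= (wavenumbers >= lo) & (wavenumbers <= hi)
    return wavenumbers[mask], np.asarray(spectrum)[mask]


def dixon_flags(replicates, q_crit=Q_CRIT_95_N5):
    """True where a replicate is a Dixon's Q outlier at that wavenumber."""
    reps = np.asarray(replicates, dtype=float)        # shape (n_replicates, n_points)
    order = np.argsort(reps, axis=0)
    srt = np.take_along_axis(reps, order, axis=0)
    spread = srt[-1] - srt[0]
    ok = spread > 0
    safe = np.where(ok, spread, 1.0)                  # avoid division by zero
    low = ok & ((srt[1] - srt[0]) / safe > q_crit)    # lowest value is suspect
    high = ok & ((srt[-1] - srt[-2]) / safe > q_crit) # highest value is suspect
    flags = np.zeros(reps.shape, dtype=bool)
    cols = np.arange(reps.shape[1])
    flags[order[0][low], cols[low]] = True
    flags[order[-1][high], cols[high]] = True
    return flags


def average_replicates(replicates, max_fraction=0.5):
    """Drop spectra flagged at more than max_fraction of points, then average the rest."""
    reps = np.asarray(replicates, dtype=float)
    keep = dixon_flags(reps).mean(axis=1) <= max_fraction
    return reps[keep].mean(axis=0), keep
```

Following the order in the text, each replicate would be smoothed, normalized, and trimmed to the selected regions before the five replicates of a sample are passed to average_replicates.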

Multivariate calibration model development

The 250 serum samples were sorted based on IgG concentrations obtained from the RID assays. Serum samples with IgG concentrations outside of the manufacturer’s stated performance range for the RID assay were excluded from further analysis (n = 50; 28 calves; 22 cows). The remaining samples (n = 200) were linked to their corresponding pre-processed IR spectra, and then split into two sets (prediction and calibration sets). The prediction set (n = 67) was identified by ordering all of the serum samples according to their corresponding IgG levels and selecting the spectra of every third serum sample as a member of the prediction set. Thus, the prediction set encompassed the full range of IgG values for use in testing the predictive performance of the calibration models. The remaining calibration set (n = 133) was randomly split into training (n = 67) and validation (n = 66) data sets for model development.
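The selection of the prediction set and the random training/validation split can be sketched as follows. The function name is hypothetical, and the sketch assumes that "every third sample" starts with the lowest-IgG sample; with 200 samples it reproduces the stated set sizes (67 prediction, 67 training, 66 validation).

```python
import numpy as np


def split_sets(igg, n_train=67, seed=0):
    """Return index arrays (prediction, training, validation)."""
    igg = np.asarray(igg, dtype=float)
    order = np.argsort(igg, kind="stable")                        # samples sorted by RID IgG
    prediction = order[::3]                                        # every third sample, from the lowest (assumed)
    calibration = np.delete(order, np.arange(0, len(order), 3))   # the remaining samples
    rng = np.random.default_rng(seed)
    shuffled = rng.permutation(calibration)                        # random training/validation split
    return prediction, shuffled[:n_train], shuffled[n_train:]
```

Taking every third sample of the sorted list spreads the prediction set across the full IgG range, while the random split of the calibration set is redrawn on every Monte Carlo repeat.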

Calibration models were developed using PLS regression to relate spectroscopic features (pre-processed wavenumber bands) to the reference RID serum IgG concentrations. PLS regression was first applied to the training set, with 1 to 30 PLS factors retained, to develop 30 trial calibration models. Each of these models was used to calculate the IgG concentration of each sample in the validation set, and an error estimate (the sum of the squared differences between the RID IgG values and the ATR-predicted IgG values) was calculated. This procedure was repeated 10,000 times, each time with a new random split of the calibration set into training and validation sets. The root mean squared error of Monte Carlo cross validation (RMMCCV) [36, 37] was calculated for each of the 30 trial calibration models, and the optimal number of PLS factors was chosen as the one giving the lowest RMMCCV. Once the number of PLS factors was determined, the training and validation sets were combined (i.e., all samples in the calibration set) to build the final PLS calibration model with that number of factors.
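The factor-selection procedure can be sketched with a NumPy implementation of PLS1 (single-response PLS via the NIPALS algorithm) and Monte Carlo cross validation. This is an illustrative re-implementation under stated assumptions, not the authors’ code: RMMCCV is computed here as the square root of the pooled mean squared validation error over all repeats, and the function names are hypothetical.

```python
import numpy as np


def pls1_models(X, y, n_factors):
    """Fit PLS1 by NIPALS; return [(coef, intercept)] for 1..n_factors factors."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    x_mean, y_mean = X.mean(axis=0), y.mean()
    Xr, yr = X - x_mean, y - y_mean            # mean-centred working copies
    W, P, q = [], [], []
    for _ in range(n_factors):
        w = Xr.T @ yr
        w /= np.linalg.norm(w)                 # weight vector
        t = Xr @ w                             # scores
        tt = t @ t
        p = Xr.T @ t / tt                      # X loadings
        qa = yr @ t / tt                       # y loading
        Xr = Xr - np.outer(t, p)               # deflate X
        yr = yr - qa * t                       # deflate y
        W.append(w)
        P.append(p)
        q.append(qa)
    W, P, q = np.column_stack(W), np.column_stack(P), np.array(q)
    models = []
    for k in range(1, n_factors + 1):
        # Regression vector for the first k factors: W (P'W)^-1 q
        coef = W[:, :k] @ np.linalg.solve(P[:, :k].T @ W[:, :k], q[:k])
        models.append((coef, y_mean - x_mean @ coef))
    return models


def rmmccv(X, y, max_factors=30, n_train=67, n_repeats=10_000, seed=0):
    """Root mean squared Monte Carlo CV error for 1..max_factors PLS factors."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    rng = np.random.default_rng(seed)
    sse = np.zeros(max_factors)
    n_val_total = 0
    for _ in range(n_repeats):
        idx = rng.permutation(len(y))          # new random training/validation split
        tr, va = idx[:n_train], idx[n_train:]
        for k, (coef, b0) in enumerate(pls1_models(X[tr], y[tr], max_factors)):
            sse[k] += np.sum((y[va] - (X[va] @ coef + b0)) ** 2)
        n_val_total += len(va)
    return np.sqrt(sse / n_val_total)
```

The optimal number of factors is int(np.argmin(errors)) + 1, and the final model is the last entry of pls1_models(X_cal, y_cal, n_opt). With 10,000 repeats and 30 factors this pure-NumPy loop is slow, so fewer repeats are convenient for quick checks.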

Evaluation of the calibration models

The predictive accuracy of each multivariate calibration model was assessed using the independent prediction data set (n = 67). Agreement between IgG concentrations measured by the RID assay and predicted by the ATR assay was first assessed for both the calibration and prediction sets using a scatter plot, the Pearson correlation coefficient, and the concordance correlation coefficient. A Bland–Altman plot [38, 39] was then used to examine the differences between RID and ATR assay values for the prediction set, thereby assessing the interchangeability of the two assays.
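The agreement statistics named above can be computed as follows. Lin’s concordance correlation coefficient is written with population (divide-by-n) moments, and the Bland–Altman limits of agreement as the mean difference ± 1.96 SD of the differences, the conventional choice; the function names are illustrative.

```python
import numpy as np


def pearson_r(x, y):
    return float(np.corrcoef(x, y)[0, 1])


def lin_ccc(x, y):
    """Lin's concordance correlation coefficient (population moments)."""
    x, y = np.asarray(x, dtype=float), np.asarray(y, dtype=float)
    s_xy = np.mean((x - x.mean()) * (y - y.mean()))
    return float(2 * s_xy / (x.var() + y.var() + (x.mean() - y.mean()) ** 2))


def bland_altman(rid, atr):
    """Mean difference (ATR - RID) and 95 % limits of agreement."""
    d = np.asarray(atr, dtype=float) - np.asarray(rid, dtype=float)
    bias, sd = d.mean(), d.std(ddof=1)
    return float(bias), (float(bias - 1.96 * sd), float(bias + 1.96 * sd))
```

Unlike Pearson’s r, the concordance coefficient is penalized by a constant offset between methods, which is why both are reported; each point on a Bland–Altman plot is the mean of the two methods against their difference.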

The precision of both the ATR and RID methods was investigated using the prediction set samples. The mean and standard deviation (SD) of the five replicates of each serum sample were calculated, and from these a modified coefficient of variation, CV* = CV × (1 + 1/(4n)) with n = 5, was determined [40]. For each sample and each analytical method, the CV* was then plotted against the mean IgG concentration.
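The modified coefficient of variation can be computed per sample as below. The sketch assumes the sample (n − 1) standard deviation, which the text does not specify, and the function name is hypothetical.

```python
import numpy as np


def cv_star_percent(replicates):
    """Modified CV (%) for one sample: CV* = CV * (1 + 1/(4n))."""
    r = np.asarray(replicates, dtype=float)
    n = r.size
    cv = 100.0 * r.std(ddof=1) / r.mean()      # sample SD (assumption)
    return cv * (1.0 + 1.0 / (4 * n))
```

For five replicates the correction factor is 1 + 1/20 = 1.05, i.e. CV* is 5 % larger than the plain CV.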

Finally, the potential utility of the ATR method was further assessed using the ratio of predictive deviation (RPD: the population standard deviation of the RID-determined IgG concentrations divided by the RMSEP of the ATR method) and the range error ratio (RER: the range of the RID-determined IgG concentrations divided by the RMSEP of the ATR method) [41]. According to this framework, an RPD <2 indicates a poorly predictive model; values between 2.0 and 2.5 are adequate for qualitative evaluation or screening; values >2.5 (or RER >10) are regarded as acceptable for quantification; and values >3 (or RER >20) suggest that the model is suitable for very accurate quantitative analysis [41].
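A sketch of RMSEP, RPD, RER, and the RPD interpretation bands from [41]. RPD uses the population standard deviation as stated; how values falling exactly on a band boundary are assigned is an assumption, and the function names are hypothetical.

```python
import numpy as np


def rmsep(reference, predicted):
    r, p = np.asarray(reference, dtype=float), np.asarray(predicted, dtype=float)
    return float(np.sqrt(np.mean((r - p) ** 2)))


def rpd_rer(reference, predicted):
    """Return (RPD, RER) for a prediction set."""
    e = rmsep(reference, predicted)
    r = np.asarray(reference, dtype=float)
    return float(r.std()) / e, float(r.max() - r.min()) / e   # r.std() is the population SD


def rpd_category(rpd):
    if rpd < 2.0:
        return "poorly predictive"
    if rpd <= 2.5:
        return "screening"
    if rpd <= 3.0:
        return "quantification"
    return "very accurate quantification"
```

Applied to the RPD of 2.7 reported in the Results, rpd_category returns "quantification", while the reported RER of 9.1 falls just short of the RER >10 criterion.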

Clinical utility of ATR assay

To evaluate the clinical utility of the ATR assay for diagnosing FTPI (serum IgG <1000 mg/dL) in dairy calves, test characteristics were calculated from 2×2 tables for the prediction set and for the entire data set. These calculations were performed with Stata version 13.0 statistical software (StataCorp, College Station, TX). Sensitivity (Se) was defined as the proportion of samples with FTPI, as determined by RID, that were classified as positive by the ATR assay. Conversely, specificity (Sp) was defined as the proportion of samples without FTPI that were classified as negative by the ATR assay. Accuracy was defined as the proportion of samples that were correctly classified by the ATR assay.
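The 2×2 classification and the test characteristics defined above can be sketched as follows; the study itself used Stata, and these Python function names are hypothetical. FTPI is taken as IgG <1000 mg/dL, with RID as the reference.

```python
import numpy as np


def diagnostic_counts(rid_igg, atr_igg, cutoff=1000.0):
    """Return (TP, FN, FP, TN) with FTPI defined as IgG < cutoff (mg/dL)."""
    truth = np.asarray(rid_igg, dtype=float) < cutoff   # FTPI by the reference RID assay
    test = np.asarray(atr_igg, dtype=float) < cutoff    # FTPI by the ATR assay
    tp = int(np.sum(truth & test))
    fn = int(np.sum(truth & ~test))
    fp = int(np.sum(~truth & test))
    tn = int(np.sum(~truth & ~test))
    return tp, fn, fp, tn


def se_sp_acc(tp, fn, fp, tn):
    """Sensitivity, specificity, and accuracy from 2x2 counts."""
    return tp / (tp + fn), tn / (tn + fp), (tp + tn) / (tp + fn + fp + tn)
```

Using the entire-data-set counts implied by the Results (94 true positives, 8 false negatives, 0 false positives, 98 true negatives), se_sp_acc(94, 8, 0, 98) gives a sensitivity of about 0.92, a specificity of 1.00, and an accuracy of 0.96.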

Results

RID assay

The IgG concentrations of the serum samples with concentrations within the range of the RID assays (n = 200) ranged from 5.5 to 2983 mg/dL, with an average and SD of 1122 and 866 mg/dL, respectively. Separate IgG concentration statistics (mean, SD and range) for the calibration set and the prediction set are summarized in Table 1.

ATR spectra

A typical ATR spectrum of bovine serum over the wavenumber range 4000–650 cm−1 is shown in Fig. 1. Strong absorption bands at 1650 cm−1 and 1550 cm−1 correspond to C=O stretching and N–H bending vibrations, respectively, while a broad, strong absorption band centered at 3300 cm−1 was attributed to N–H stretching vibrations [14, 15]. The noise in the 2200–1900 cm−1 range was due to absorption by the diamond coating on the ZnSe ATR element. The 4000–3800 cm−1 region was a true baseline, free of appreciable sample absorption, and was therefore highly reproducible across all spectra.

Multivariate calibration model

Trial PLS models were built to compare several pre-processing methods. The best results were obtained from spectra smoothed with a 9-point Savitzky–Golay filter and scaled by SNV (Table 2). The optimum number of PLS factors for this model was 14 (Fig. 2), based on the lowest IgG RMMCCV (332 mg/dL). Fig. 2 also shows the root mean squared error of calibration (RMSEC) and the root mean squared error of prediction (RMSEP) plotted against the number of PLS factors.

Plots of RMMCCV and RMSEC for the calibration (n = 133) data set, and RMSEP for the prediction (n = 67) data set. The optimum number of PLS factors was determined to be 14, based on the lowest RMMCCV. RMMCCV: Root mean squared error in the Monte Carlo cross validation value; RMSEC: Root mean squared error of calibration; RMSEP: Root mean squared error of prediction; PLS: Partial least squares

Calibration model validation

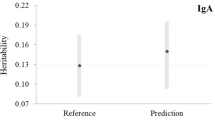

Figure 3 shows a scatter plot of IgG concentrations measured by the RID assay against those predicted by ATR spectroscopy for the calibration and prediction sets. The prediction set showed dispersion similar to that of the calibration set, with no evident over-fitting or under-fitting, indicating that a robust calibration model was developed. The Pearson correlation coefficients (r) for the calibration and prediction sets were 0.97 and 0.93, respectively, and the concordance correlation coefficients were likewise 0.97 and 0.93, respectively.

Scatter plots comparing immunoglobulin G (IgG) concentrations measured by the radial immunodiffusion (RID) assay with those predicted by the ATR-based assay for 200 bovine serum samples. The correlation coefficients (r) were 0.97 and 0.93 for the calibration and prediction data sets, respectively. The squares denote the samples from the calibration set and the circles indicate the samples from the prediction set. The two assays are considered comparable if the data points lie close to the reference line.

The Bland–Altman plot (Fig. 4) showed a mean difference between the concentrations obtained by ATR and RID of −30 mg/dL, close to zero, indicating no obvious bias between the two methods. The 95 % limits of agreement ranged from −670 to 611 mg/dL, which is relatively small in comparison with the range of IgG concentrations (~3000 mg/dL) obtained from the RID assay. The precision of the ATR and RID analytical methods is summarized graphically in Fig. 5a and b. The mean CV* was 20 % for ATR analyses and 8.6 % for RID analyses. The RPD and RER values were 2.7 and 9.1, respectively.

Bland–Altman plot. The mean of the immunoglobulin G (IgG) concentrations measured by the radial immunodiffusion (RID) and ATR methods (x-axis) is plotted against the difference in IgG concentrations between the two methods (y-axis) for the prediction set samples (n = 67). The dashed lines represent the 95 % limits of agreement (−670 to 611 mg/dL), and the solid line represents the mean difference between the ATR and RID assays (−30 mg/dL), indicating no appreciable systematic difference between the two methods.

ATR sensitivity and specificity for detection of FTPI

The test characteristics of the ATR assay for diagnosis of FTPI (serum IgG <1000 mg/dL) were determined for the prediction set and the entire data set. The sensitivity, specificity, and accuracy are shown in Table 3. Within the entire data set, 102 of 200 samples had RID-determined IgG concentrations <1000 mg/dL, giving a true FTPI prevalence of 51 %. The ATR assay gave IgG concentrations <1000 mg/dL for 94 of 200 samples, giving an apparent FTPI prevalence of 47 %. There were no false positives and eight false negatives (Table 3).

Discussion

In this study, the ATR assay was successfully developed as a tool for the rapid measurement of IgG concentrations in bovine serum. Method development required a large calibration data set spanning a wide range of IgG concentrations to build a multivariate regression model that could then be applied to determine the IgG concentrations of new serum samples [42].

The performance of the analytical method depends on the spectral pre-processing approach chosen [43]. To identify the optimal choice, different pre-processing strategies were evaluated, and the most accurate was selected according to model performance metrics (i.e., lowest RMMCCV and r closest to 1) and confirmed by its high predictive accuracy (i.e., low RMSEP and high RPD and RER values) [41]. Regardless of the normalization method applied before PLS analysis, spectral smoothing was universally beneficial (Table 2). In contrast to other related studies [44], derivative spectra provided no improvement.

The ATR assay showed higher Pearson and concordance correlation coefficients than have been reported for previous transmission IR spectroscopy-based IgG assays for bovine serum [44], equine serum and plasma [10, 45], and alpaca serum [46]. Agreement between the ATR and RID assays was poorer at high IgG concentrations than at low IgG concentrations (Fig. 4). This may be attributed to the large number of serum samples with IgG concentrations below 1000 mg/dL (102 of 200). As a result, calibration model development was weighted towards low IgG concentrations, which are the more important concentrations for the diagnosis of FTPI in farm animals [3, 4]. Similar findings have been reported for transmission IR spectroscopy-based IgG assays for bovine serum [44] and for IR-based assays in other species [45, 46].

The precision of the ATR analytical method was lower than that of the reference RID assay, as previously observed for a transmission IR spectroscopy-based assay [44]. The relatively large CV* for the ATR assay occurs because the samples in the prediction set were not involved in optimizing the calibration model (to ensure that the model performance is not overly optimistic). Nevertheless, even with this conservative estimate of precision, the CV* of the IgG concentrations for the prediction samples lies within the acceptable range (not exceeding 20 %) according to the quality control standards of the US Food and Drug Administration [47].

In anticipation of its application in the field, the ATR-based IgG assay was evaluated for its capacity to diagnose a clinically relevant problem, the occurrence of FTPI, using an IgG concentration cut-off value of 1000 mg/dL [3]. In the entire data set, the ATR-based assay showed excellent sensitivity (0.92) and specificity (1.0), values markedly better than those reported for a previously described transmission IR spectroscopy-based assay [44]. Compared with other methods reported for assessing FTPI in neonates, these results are equivalent to or better than those of most published assays [4, 13, 48]. The eight false negatives for the ATR method corresponded to samples with RID-determined IgG values between 727 and 886 mg/dL, relatively close to the 1000 mg/dL diagnostic cut-off. These values indicate only partial FTPI, so misdiagnosis of these animals poses a substantially lower risk of morbidity and mortality than it would for animals with lower IgG concentrations [48, 49]. No false positives were identified by ATR spectroscopy. A very low false positive rate has previously been noted for transmission IR spectroscopy-based assays for camelids (no false positives out of 175 samples) [46] and for bovine samples (4 false positives out of 200 samples) [44].

At present, the RID assay is acknowledged as the reference standard test for quantification of IgG in bovine serum [8]. In practice, however, measurement of IgG by RID is time consuming (18–24 h), requires labile reagents, and is expensive. In contrast, the ATR assay described in the current work is rapid (one test can be completed within 3–4 min using 5 μL of sample), and dilution in deionized water is the only sample preparation step required. These attributes, combined with the practical advantages of compact, portable ATR spectrometers [16, 17], suggest a real possibility of using the technique in the field for pre-calving assessment of dams, management of colostrum, and ensuring adequate transfer of passive immunity to neonatal calves.

Conclusions

Attenuated total reflectance infrared (ATR) spectroscopy in combination with multivariate data analysis is a feasible alternative for the rapid quantification of IgG concentrations in bovine serum and has the potential to effectively assess FTPI in neonatal calves. Testing of different pre-processing approaches revealed that spectral smoothing (without derivative spectra) substantially improved analytical performance and accuracy compared with otherwise identical methods without spectral smoothing.

Abbreviations

- IgG: Immunoglobulin G
- FTPI: Failure of transfer of passive immunity
- ATR: Attenuated total reflectance
- RID: Radial immunodiffusion
- PLS: Partial least squares
- RMMCCV: Root mean squared error Monte Carlo cross validation
- RMSEC: Root mean squared error of calibration
- RMSEP: Root mean squared error of prediction
- CV: Coefficient of variation
- RPD: Ratio of predictive deviation
- RER: Range error ratio

References

Rehman S, Bytnar D, Berkenbosch JW, Tobias JD. Hypogammaglobulinemia in pediatric ICU patients. J Intensive Care Med. 2003;18(5):261–4.

Weaver DM, Tyler JW, VanMetre DC, Hostetler DE, Barrington GM. Passive transfer of colostral immunoglobulins in calves. J Vet Intern Med. 2000;14(6):569–77.

Godden S. Colostrum management for dairy calves. Vet Clin North Am Food Anim Pract. 2008;24(1):19–39.

Tyler JW, Besser TE, Wilson L, Hancock DD, Sanders S, Rea DE. Evaluation of a whole blood glutaraldehyde coagulation test for the detection of failure of passive transfer in calves. J Vet Intern Med. 1996;10(2):82–4.

DeNise SK, Robison JD, Stott GH, Armstrong DV. Effects of passive immunity on subsequent production in dairy heifers. J Dairy Sci. 1989;72(2):552–4.

Heinrichs AJ, Heinrichs BS. A prospective study of calf factors affecting first-lactation and lifetime milk production and age of cows when removed from the herd. J Dairy Sci. 2011;94(1):336–41.

Furman-Fratczak K, Rzasa A, Stefaniak T. The influence of colostral immunoglobulin concentration in heifer calves’ serum on their health and growth. J Dairy Sci. 2011;94(11):5536–43.

McBeath DG, Penhale WJ, Logan EF. An examination of the influence of husbandry on the plasma immunoglobulin level of the newborn calf, using a rapid refractometer test for assessing immunoglobulin content. Vet Rec. 1971;88(11):266–70.

Bielmann V, Gillan J, Perkins NR, Skidmore AL, Godden S, Leslie KE. An evaluation of Brix refractometry instruments for measurement of colostrum quality in dairy cattle. J Dairy Sci. 2010;93(8):3713–21.

Riley CB, McClure JT, Low-Ying S, Shaw RA. Use of Fourier-transform infrared spectroscopy for the diagnosis of failure of transfer of passive immunity and measurement of immunoglobulin concentrations in horses. J Vet Intern Med. 2007;21(4):828–34.

Ameri M, Wilkerson MJ. Comparison of two commercial radial immunodiffusion assays for detection of bovine immunoglobulin G in newborn calves. J Vet Diagn Invest. 2008;20(3):333–6.

Parish SM, Tyler JW, Besser TE, Gay CC, Krytenberg D. Prediction of serum IgG1 concentration in Holstein calves using serum gamma glutamyltransferase activity. J Vet Intern Med. 1997;11(6):344–7.

Tyler JW, Hancock DD, Parish SM, Rea DE, Besser TE, Sanders SG, et al. Evaluation of 3 assays for failure of passive transfer in calves. J Vet Intern Med. 1996;10(5):304–7.

Shaw RA, Kotowich S, Leroux M, Mantsch HH. Multianalyte serum analysis using mid-infrared spectroscopy. Ann Clin Biochem. 1998;35(Pt 5):624–32.

Shaw RA, Mantsch HH. Infrared spectroscopy in clinical and diagnostic analysis. In: Meyer RA, Chichester E, editors. Encyclopedia of Analytical Chemistry: Applications Theory and Instrumentation. UK: Wiley Online Library; 2000. p. 83–102.

Smith BC. Fundamentals of Fourier transform infrared spectroscopy. 2nd ed. Boca Raton: CRC Press; 2011.

Sun D. Infrared spectroscopy for food quality analysis and control. 1st ed. London: Academic; 2009.

Budinova G, Salva J, Volka K. Application of molecular spectroscopy in the mid-infrared region to the determination of glucose and cholesterol in whole blood and in blood serum. Appl Spectrosc. 1997;51(5):631–5.

Ward KJ, Haaland DM, Robinson MR, Eaton RP. Post-prandial blood glucose determination by quantitative mid-infrared spectroscopy. Appl Spectrosc. 1992;46:959–65.

Rutten M, Bovenhuis H, Heck J, van Arendonk J. Predicting bovine milk protein composition based on Fourier transform infrared spectra. J Dairy Sci. 2011;94(11):5683–90.

Rutten M, Bovenhuis H, Heck J, van Arendonk J. Prediction of β-lactoglobulin genotypes based on milk Fourier transform infrared spectra. J Dairy Sci. 2011;94(8):4183–8.

Hansen PW. Screening of dairy cows for ketosis by use of infrared spectroscopy and multivariate calibration. J Dairy Sci. 1999;82(9):2005–10.

Oberg KA, Fink AL. A new attenuated total reflectance Fourier transform infrared spectroscopy method for the study of proteins in solution. Anal Biochem. 1998;256(1):92–106.

Fahrenfort J. Attenuated total reflection: A new principle for the production of useful infra-red reflection spectra of organic compounds. Spectrochim Acta. 1961;17(7):698–709.

Baldauf NA, Rodriguez-Romo LA, Yousef AE, Rodriguez-Saona LE. Differentiation of Selected Salmonella enterica Serovars by Fourier Transform Mid-Infrared Spectroscopy. Appl Spectrosc. 2006;60(6):592–8.

Ellis DI, Broadhurst D, Kell DB, Rowland JJ, Goodacre R. Rapid and quantitative detection of the microbial spoilage of meat by Fourier transform infrared spectroscopy and machine learning. Appl Environ Microbiol. 2002;68(6):2822–8.

Gupta M, Irudayaraj J, Schmilovitch Z, Mizrach A. Identification and quantification of foodborne pathogens in different food matrices using FTIR spectroscopy and artificial neural networks. Trans ASABE. 2006;49(4):1249–55.

Seigneur A, Hou S, Shaw RA, McClure J, Gelens H, Riley CB. Use of Fourier-transform infrared spectroscopy to quantify immunoglobulin G concentration and an analysis of the effect of signalment on levels in canine serum. Vet Immunol Immunopathol. 2015;163(1–2):8–15.

Fecteau G, Van Metre DC, Pare J, Smith BP, Higgins R, Holmberg CA, et al. Bacteriological culture of blood from critically ill neonatal calves. Can Vet J. 1997;38(2):95–100.

Fecteau G, Pare J, Van Metre DC, Smith BP, Holmberg CA, Guterbock W, et al. Use of a clinical sepsis score for predicting bacteremia in neonatal dairy calves on a calf rearing farm. Can Vet J. 1997;38(2):101–4.

Savitzky A, Golay MJ. Smoothing and differentiation of data by simplified least squares procedures. Anal Chem. 1964;36(8):1627–39.

Barnes R, Dhanoa M, Lister SJ. Standard normal variate transformation and de-trending of near-infrared diffuse reflectance spectra. Appl Spectrosc. 1989;43(5):772–7.

Barnes R, Dhanoa M, Lister S. Letter: correction to the description of standard normal variate (SNV) and De-trend (DT) transformations in Practical Spectroscopy with Applications in Food and Beverage Analysis, 2nd edition. J Near Infrared Spectrosc. 2004;1(3):185–6.

Dean R, Dixon W. Simplified statistics for small numbers of observations. Anal Chem. 1951;23(4):636–8.

Rorabacher DB. Statistical treatment for rejection of deviant values: critical values of Dixon’s “Q” parameter and related subrange ratios at the 95% confidence level. Anal Chem. 1991;63(2):139–46.

Picard RR, Cook RD. Cross-validation of regression models. J Am Stat Assoc. 1984;79(387):575–83.

Xu Q, Liang Y. Monte Carlo cross validation. Chemometrics Intellig Lab Syst. 2001;56(1):1–11.

Altman DG, Bland JM. Measurement in medicine: the analysis of method comparison studies. Statistician. 1983;32(3):307–17.

Bland JM, Altman DG. Comparing two methods of clinical measurement: a personal history. Int J Epidemiol. 1995;24(1):S7–S14.

Salkind NJ. Encyclopedia of research design. Thousand Oaks: Sage; 2010.

Williams PC, Sobering D. How do we do it: a brief summary of the methods we use in developing near infrared calibrations, Near infrared spectroscopy: The future waves. UK: NIR Publications; 1996. p. 185–8.

Murray I. Near infrared reflectance analysis of forages. In: Recent advances in animal nutrition. London: Butterworths; 1986. p. 141–56.

Zeaiter M, Roger J, Bellon-Maurel V. Robustness of models developed by multivariate calibration. Part II: The influence of pre-processing methods. TrAC Trends Anal Chem. 2005;24(5):437–45.

Elsohaby I, Riley CB, Hou S, McClure JT, Shaw RA, Keefe GP. Measurement of serum immunoglobulin G in dairy cattle using Fourier-transform infrared spectroscopy: A reagent free approach. Vet J. 2014;202(3):510–5.

Hou S, McClure JT, Shaw RA, Riley CB. Immunoglobulin G measurement in blood plasma using infrared spectroscopy. Appl Spectrosc. 2014;68(4):466–74.

Burns J, Hou S, Riley C, Shaw R, Jewett N, McClure J. Use of fourier‐transform infrared spectroscopy to quantify immunoglobulin G concentrations in alpaca serum. J Vet Intern Med. 2014;28:639–45.

US Department of Health and Human Services. Guidance for industry: bioanalytical method validation. 2001. http://www.fda.gov/downloads/Drugs/.../Guidances/ucm070107.pdf

Lee SH, Jaekal J, Bae CS, Chung BH, Yun SC, Gwak MJ, et al. Enzyme-linked immunosorbent assay, single radial immunodiffusion, and indirect methods for the detection of failure of transfer of passive immunity in dairy calves. J Vet Intern Med. 2008;22(1):212–8.

Fecteau G, Arsenault J, Pare J, Van Metre DC, Holmberg CA, Smith BP. Prediction of serum IgG concentration by indirect techniques with adjustment for age and clinical and laboratory covariates in critically ill newborn calves. Can J Vet Res. 2013;77(2):89–94.

Acknowledgments

The authors acknowledge Cynthia Mitchell for her technical assistance, Professor Gilles Fecteau (Faculté de Médecine Vétérinaire, Université de Montréal) for providing some of the samples used in this study and William Chalmers for technical assistance in preparation of the manuscript. This research was funded by the Atlantic Canada Opportunities Agency (AIF: 195174). Personal funding for I. Elsohaby was provided by Mission Office, Ministry of Higher Education and Scientific Research, Egypt.


Additional information

Competing interests

The authors declare that they have no competing interests.

Authors’ contributions

IE carried out the radial immunodiffusion and ATR assays, performed the statistical analysis and wrote the first draft of the manuscript. SH wrote the MATLAB scripts and took part in drafting and revising the manuscript. RAS participated in the design of the studies and also took part in drafting and revising the manuscript. CBR, JTM and GPK were responsible for the whole project, generated the working hypothesis, provided the samples, obtained financial support, revised the draft and wrote the final version of the manuscript. All authors read and approved the final manuscript.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

Elsohaby, I., Hou, S., McClure, J.T. et al. A rapid field test for the measurement of bovine serum immunoglobulin G using attenuated total reflectance infrared spectroscopy. BMC Vet Res 11, 218 (2015). https://doi.org/10.1186/s12917-015-0539-x


DOI: https://doi.org/10.1186/s12917-015-0539-x