Abstract

Background

Primary care has been described as the ‘bedrock’ of the National Health Service (NHS), accounting for approximately 90% of patient contacts, but it is facing significant challenges. Against a backdrop of a rapidly ageing population with increasingly complex health challenges, policy-makers have encouraged primary care commissioners to increase the usage of data when making commissioning decisions. Purported benefits include cost savings and improved population health. However, research on evidence-based commissioning has concluded that commissioners work in complex environments and that closer attention should be paid to the interplay of contextual factors and evidence use. The aim of this review was to understand how and why primary care commissioners use data to inform their decision making, what outcomes this leads to, and what factors or contexts promote and inhibit their usage of data.

Methods

We developed initial programme theory by identifying barriers and facilitators to using data to inform primary care commissioning based on the findings of an exploratory literature search and discussions with programme implementers. We then located a range of diverse studies by searching seven databases as well as grey literature. Using a realist approach, which has an explanatory rather than a judgemental focus, we identified recurrent patterns of outcomes and their associated contexts and mechanisms related to data usage in primary care commissioning to form context-mechanism-outcome (CMO) configurations. We then developed a revised and refined programme theory.

Results

Ninety-two studies met the inclusion criteria, informing the development of 30 CMOs. Primary care commissioners work in complex and demanding environments, and the usage of data is promoted and inhibited by a wide range of contexts including specific commissioning activities, commissioners’ perceptions and skillsets, their relationships with external providers of data (analysis), and the characteristics of data themselves. Data are used by commissioners not only as a source of evidence but also as a tool for stimulating commissioning improvements and as a warrant for convincing others about decisions commissioners wish to make. Despite being well-intentioned users of data, commissioners face considerable challenges when trying to use them, and have developed a range of strategies to deal with ‘imperfect’ data.

Conclusions

There are still considerable barriers to using data in certain contexts. Understanding and addressing these will be key in light of the government’s ongoing commitments to using data to inform policy-making, as well as increasing integrated commissioning.

Background

Evidence-based policy refers to the different ways in which policy decisions and initiatives can be supported or influenced by (research) evidence [1]. Purported benefits include greater workforce productivity, more efficient use of public resources, and higher likelihood of implementing successful programmes [2, 3]. A wide variety of qualitative and quantitative evidence can be used in evidence-based policy, and the perceived utility of evidence can vary by stakeholder [2].

Within the English National Health Service (NHS), those responsible for delivering evidence-based policy include healthcare commissioners [4]. Commissioning refers to the proactive and strategic process of planning, purchasing, contracting, and monitoring of health services to meet population health needs [5, 6]. The term ‘primary care commissioning’ can refer to both commissioning led by primary care as well as the commissioning of primary care services themselves (which is the focus of this review), i.e. the commissioning of services provided within general practice [7] and of other primary medical services, i.e. dentistry, community pharmacy and ophthalmology services [8]. Since 2022, integrated care boards (ICBs) and integrated care systems (ICSs) have been legal entities with statutory powers and responsibilities tasked with delivering joined-up support and care, with the former taking on responsibility for primary care commissioning [7, 9]. With general practice accounting for 90% of patient consultations (while receiving about 8% of the NHS budget), primary care commissioning is integral to the sustainability of the NHS as a whole, given that general practitioners prevent overuse of more expensive health services and enable provision of cost-effective treatment [10,11,12,13].

Legal requirements and practical support are in place to promote evidence-based policy and commissioning, including the 2012 Health and Social Care Act which created a statutory duty for the usage of research evidence to help improve patient outcomes and achieve value for money [14, 15]. NHS England established Data Services to ensure that information about the performance and impact of NHS services was available to commissioners [16]. In addition, numerous guides and toolkits have been developed to promote and facilitate evidence-based commissioning, such as NHS RightCare and the NHS Atlas of Variation in Healthcare [15, 17,18,19].

It has been argued that evidence-based commissioning is particularly pertinent as the NHS is facing severe financial and demographic challenges, with costs in expenditure expected to rise in the medium- to long-term even according to conservative estimates [20, 21]. Some health economists have argued that in order to remain financially sustainable, commissioners must utilise data on healthcare expenditure and understand drivers of variation of activity to help achieve significant cost savings [20, 22]. Despite policy commitment to using data as a key enabler of cost savings and improved health outcomes, the limited research literature on this topic has criticised the utility of the data available and found that commissioners encounter challenges using them meaningfully [17, 23, 24]. There is also limited research on the usage of evidence and decision making in British healthcare commissioning [5, 25]. A recurrent theme in existing research is that evidence use in commissioning is a multifaceted and complex process that can vary by person and context, that evidence is not always used to inform decision-making [4, 5, 26], and that attention should be placed on understanding the role of context affecting evidence use [4, 27, 28].

In this review, we focus on data, defined as quantitative information, including nominal data, and seek to unpack the complex and context-dependent processes underpinning the usage (or not) of data in commissioning. We aim to make a novel contribution by asking:

- How and why do commissioners use data to inform primary care commissioning?
- What outcomes does this lead to?
- What factors or contexts promote and inhibit the usage of data in primary care commissioning decisions?

Methods

Review process

We used a realist synthesis approach, i.e. a theory-driven approach based on a realist philosophy of science, with particular emphasis on understanding causation [29]. This attempts to unpack the relationships between contexts, mechanisms, and outcomes to understand failures, successes, and other possible intended and unintended outcomes of programmes and interventions [29]. Recurrent patterns of outcomes (or demi-regularities) and associated contexts and mechanisms are, where possible, linked to substantive theories, thus forming context-mechanism-outcome (CMO) configurations [29, 30]. The five review steps are based on Pawson’s suggested iterative steps and those outlined by Papoutsi et al. [31,32,33]. We provide a brief summary of these steps, with a more detailed overview provided in Additional file 1 [34,35,36,37,38,39,40,41,42,43,44,45,46,47,48,49,50].

This review aligns with the Realist And MEta-narrative Evidence Syntheses: Evolving Standards (RAMESES) publication standards [29].

Step 1: Locate existing theories

Initial programme theory (Additional file 2) [4, 6, 17, 26, 51,52,53,54,55,56,57,58,59,60,61,62] was built by identifying barriers and facilitators to using data to inform primary care commissioning contained in 15 studies located via an exploratory literature search (Additional file 3) and informal conversations with a former and current NHS commissioner and a public health worker.

Step 2: Search for evidence

Studies were identified via three broad and interdisciplinary formal literature searches completed between March 2019 and March 2022 (Additional file 3) and reference linking.

Step 3: Select studies

The criteria outlined to select studies are shown in Table 1.

Step 4: Extract and organise data

Following full-text screening, we imported the included studies (Additional file 4) into NVivo 12 [39] (a qualitative data management software package) for coding.

Step 5: Synthesise the evidence according to a realist logic of analysis

We initially coded all relevant concepts and ideas as well as any substantive theories mentioned in the included studies. Studies were then re-read, and several forms of reasoning were used to identify contexts, mechanisms, and outcomes, namely induction, deduction, retroduction, and abduction. Once draft CMOs had been developed, we used several further forms of reasoning as suggested by Pawson, namely juxtaposition, reconciliation, consolidation, and situating, to refine and develop the CMOs (see Additional file 1 for further details).

Results

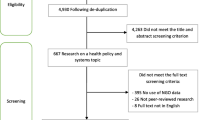

Following de-duplication, the searches identified 3852 studies for screening. Ninety-two studies were included in the review (Fig. 1). As outlined in Table 2, most of the studies used either qualitative or mixed-methods approaches.

The 30 CMOs developed (representing the final programme theory) are linked back to the initial draft programme theory in Additional file 5 [4, 6, 17, 28, 51,52,53, 55, 56, 58, 60, 63,64,65,66,67,68,69,70,71,72,73,74,75,76,77,78,79,80,81,82,83,84,85,86,87,88,89,90,91,92,93,94,95,96,97,98,99,100,101] alongside supporting quotes and, where applicable, substantive theories.

Category: Steps of the commissioning cycle

Commissioners’ usage of data was sometimes related to specific commissioning activities or steps of the commissioning cycle. Data were used both as a source of evidence to inform commissioning decisions and as a tool to stimulate improvements. When deciding commissioning priorities, commissioners considered data to be a credible source of evidence for prioritisation, potentially because they perceived them as providing the most ‘objective’ guidance (CMO 1). Four CMOs related to the ‘monitoring and evaluation’ step of the commissioning cycle, where data were used as a tool by commissioners with the aim of improving clinician and service provider performance. Commissioners shared data (e.g. on referral rates and prescribing costs) with clinicians showing how their performance compared with that of their peers, based on beliefs that peer pressure and using other clinicians as a reference point (and the resulting competition) could stimulate improvement. They also believed that clinicians were more receptive to feedback from their peers than from commissioners (CMO 2). In contexts where commissioners felt clinicians or service providers did not know their performance was below average and commissioners did not want to be seen as ‘managing performance,’ commissioners shared data with them due to a perception that this could empower clinicians and service providers to come up with their own solutions (CMO 3). If data indicated potential for improvement but commissioners suspected that clinicians required help to achieve these improvements and, as in CMO 3, did not wish to be seen as ‘managing performance’ or judging, commissioners sometimes offered support to outliers and underperformers because they wanted to maintain good relationships by being perceived as supportive (CMO 4). In instances where commissioners shared data with clinicians, linking these data to tailored suggestions for action increased clinician engagement and helped them understand how to make improvements (CMO 5).

Category: Characteristics of data

The actual and perceived characteristics of data influenced how commissioners used them. ‘Actual’ characteristics relate to objective attributes of data such as being data about health inequalities or being part of a combined dataset. Perceived characteristics of data relate to more subjective factors including beliefs about data, such as commissioners believing data did not reflect local circumstances.

Contexts promoting the usage of data

The types of data themselves served as a facilitator to commissioners using them (CMOs 8–9, 14, 17–19). For example, commissioners were inclined to use data on health inequalities, which they perceived as useful to achieving an important moral and policy objective (CMO 8). Commissioners were more inclined to use data that were ‘real time’ or recent, since they found them useful for providing immediate support (e.g. to outliers) and enabling speedy decisions, and because they trusted that the data reflected the current situation (CMO 19). Commissioners also wanted access to data showing trends and developments over time, which they could use for monitoring and which they perceived to reduce the risk of drawing false conclusions (CMO 14). Combined datasets, i.e. datasets combining data from different sources such as secondary and primary care, enabled commissioners to gain a fuller understanding of the ‘patient journey’ and how and when patients used different care services (CMO 9). These combined datasets facilitated comparisons by providing practices with data to compare themselves with, enabling cross-comparisons with Clinical Commissioning Groups (CCGs) across a regional area, and allowing for comparisons with practices that had a similar profile. These comparisons were facilitated because commissioners could identify multiple datapoints related to a practice or a CCG (e.g. deprivation levels, population factors), thereby allowing them to choose a suitably similar comparator. Commissioners also valued data linked to cost implications, especially the estimated short-term costs of potential interventions, since they were often allocated annual budgets (CMO 17). When commissioners could segment or ‘drill down’ in data, they could create more targeted and tailored commissioning decisions, because they could segment and disaggregate data in multiple ways, including by population group, health condition, and service use by ethnic group (CMO 18).

There were also several contexts relating to perceived or more subjective characteristics of data that facilitated their usage (CMOs 6, 7, 13, 20). Where commissioners wished to better understand data, they sometimes supplemented them with qualitative information to increase data validity and gain a fuller and more meaningful understanding (CMO 6). Commissioners sometimes preferred using local data or thought that national or ‘universal’ data (e.g. from trials or research papers) required ‘contextualisation’ (i.e. the process of applying local knowledge to data) or ideally supplementation with local data (CMO 7). Presenting key pieces of data in a succinct, easily digestible manner (e.g. as summaries on a single A4 page) increased commissioners’ engagement with data (CMO 13). Commissioners were also able to use ‘imperfect’ data such as incomplete data, provided they understood their limitations and this was the only type of data they had access to, since they believed this was better than using no data at all (CMO 20).

Contexts inhibiting the usage of data

Several characteristics of data inhibited their usage (CMOs 10–12, 15–16). If commissioners felt that factors outside of clinicians’ or service providers’ control were impacting benchmarking/variation data, and data did not allow for a ‘like for like’ comparison, they became less inclined to use data because they felt the data were not valid (CMO 10). Similarly, commissioners doubted the credibility of data not presented in an interoperable way, i.e. with consistent definitions, and had difficulty drawing conclusions from them (CMO 11). Feelings of mistrust and a subsequent disinclination to use data were also triggered in contexts where (clinical) commissioners perceived data to be in tension with their own knowledge or information from clinicians (CMO 12) or where commissioners suspected that commissioning data were inaccurate or contradictory (CMO 15). If commissioners had access to more data than they could manage, they experienced ‘data overload,’ leaving them frustrated, unable to access (certain) data quickly, and unsure about what to prioritise (CMO 16).

Category: Commissioners’ capabilities, roles, working environment, and intentions

Commissioners’ capabilities, including their skillsets, capacity for analysis, and understanding of data, as well as their working environment, influenced how they used data.

Contexts promoting the usage of data

Two CMOs (CMO 22, 24) related to potential interventions that could promote the usage of data: commissioners’ ability to choose data and metrics (e.g. those used to track the progress or uptake of a programme) increased their engagement with data because they were able to choose those they believed were meaningful and valid (CMO 22). Having a ‘data champion’ within the commissioning team to support and promote the usage of data could increase engagement with data and persuade people to use them (CMO 24). In addition, commissioners used data (sometimes selectively) as a source of evidence to persuade others and justify proposals due to a perception that they were an ‘objective’ source of evidence that could increase the legitimacy of proposals (CMO 26). Data were sometimes chosen selectively (rather than systematically) to support what commissioners already wanted or had decided to do prior to looking at evidence (CMO 26).

Contexts inhibiting the usage of data

If commissioners were subjected to financial pressures, they sometimes chose to make decisions based on little or no evidence (including data) because they felt obliged to prioritise financial issues (CMO 21). Where commissioners lacked the skills to analyse and interpret data, they could not understand them or draw insights from them due to a knowledge gap (CMO 25). Similarly, commissioners were sometimes unable to operationalise data because they had difficulty understanding the drivers of data trends, e.g. the drivers of costs or the reasons behind variation in prescribing rates (CMO 26).

Category: Interpersonal relationships with and perceptions of external providers

External providers are organisations who provide commissioners with data and/or data analysis including NHS organisations such as commissioning support units (CSUs), academic partners, and private firms such as consultancies and analytics providers. The relationships commissioners had with these external providers influenced how data were used.

Contexts promoting the usage of data

If commissioners perceived external support as able to provide new or different skills, especially analytical skills, thereby producing new insights, commissioners were more inclined to use the data and outputs produced (CMO 27). The relationships between commissioners and external providers were also key to facilitating data usage. Where commissioners and external providers worked on data production and analysis collaboratively in a way that aligned with the principles of coproduction (e.g. commissioners and external providers having an active and equal relationship, active involvement of commissioners in service design, dialogue between commissioners and external providers), commissioners appeared more inclined to use the outputs (CMO 28). Commissioners who developed relationships with external providers of data (analysis) they perceived to be satisfactory (as evidenced by feelings of trust, closeness, cohesion, etc.) were more likely to use data provided by the external providers (CMO 29).

Contexts inhibiting the usage of data

If there is a real or perceived divergence of interest or information asymmetry between commissioners and the external providers of data (analysis), commissioners may feel mistrustful (CMO 30). This was informed by the theory of the principal-agent problem, which involves two parties exchanging resources: the principal disposes of resources to an agent, who accepts the resources and is willing to further the interests of the principal (e.g. the principal may give the agent money in exchange for the agent’s skills, or in this case commissioners may hire external consultants) [102]. There is also risk of a potential divergence of interest between the principal and the agent, meaning the principal cannot ensure that the agent will act in their interest [103].

Discussion

By drawing on 92 studies, we completed the first realist review to focus specifically on the usage of data in primary care commissioning. The resulting 30 CMOs and programme theory offer a novel perspective on the contexts that can facilitate and hinder the usage of data: although commissioners are often eager and willing to use data to inform commissioning decisions, they face a range of challenges that can impede their use, and addressing these will require changes to be made to the data themselves, as well as the manner in which data are presented and shared with commissioners. These CMOs are interrelated, and to increase the usage of data in commissioning it will not be sufficient to address individual CMOs in isolation.

Comparison to existing literature

Realist research has investigated how policy-makers use evidence and research in countries such as Australia, France, and Canada [49, 104, 105]. Many of the mechanisms identified that promoted and inhibited the usage of evidence (or data) in these studies were similar to those identified in our review, with the actual, ‘objective’ characteristics of evidence being only one factor impacting its usage, in addition to individual, environmental, and organisational factors. A novel contribution of this study is the complexity of outcomes, focussing not only on binary outcomes relating to whether data were used or not but also on more nuanced ones such as using data in conjunction with other forms of evidence or employing strategies to use ‘imperfect’ data.

Non-realist studies of evidence-based decision making in policy have correspondingly found that the usage of evidence in decision-making is a complex, context-dependent process and that evidence is often underutilised. The findings of this study have confirmed many of the findings of non-realist research on evidence use in policy-making, e.g. that a gulf or disconnect between decision-makers and researchers (or evidence providers) can prevent evidence from being used [106], or that a lack of time and resources inhibits evidence use [107]. Non-realist research on evidence use in policy-making has concluded that there is a need for context-specific research about the best approaches for incorporating research evidence into decision making [106] and that further research is needed on how and why different types of evidence are used in decision-making [107]. This study has built on this by investigating in more detail the contexts impacting the usage of data, as well as providing insights on specific types of data such as variation data or combined datasets.

The findings of this study are concordant with findings that interventions to increase commissioners’ usage of evidence by providing them with more evidence or embedding researchers in commissioning organisations have not always been successful, confirming that increased access to evidence and its producers is not necessarily sufficient to increase its uptake and that more complex contextual factors are at play. A study evaluating whether access to a demand-led evidence briefing service improved the use of research evidence by commissioners found that this did not improve the uptake and use of research evidence compared to less targeted and intensive alternatives [14]. An evaluation of a ‘researcher in residence’ model in three organisations, including a CCG, concluded that it had potential to produce knowledge that could be used in practice, but challenges remained, including how best to embed researchers in their host environment and the development of relationships with commissioners [108].

Strengths and limitations

The strengths of this study include its novel contribution to the literature, since it is, to the best of our knowledge, the first study to synthesise secondary literature on the usage of data as a form of evidence in primary care commissioning decisions. The application of a realist lens to this topic has provided an elucidation of contexts and their inherent interrelatedness that promote and inhibit the usage of data in primary care commissioning, issues that had received limited attention in the existing literature. This study has also provided insights on the contexts affecting the usage of specific types of data such as variation data or combined datasets. Understanding the latter is particularly pertinent in light of NHS policy to support more integrated commissioning in ICBs and ICSs, since these will have to commission ‘joined up’ health and care services across, e.g. secondary and primary care. A large amount of diverse secondary literature (including grey literature) was synthesised, thereby potentially increasing the validity of the findings.

A limitation of this study is that synthesised studies were largely atheoretical, and there is not one specific NHS-articulated programme theory underpinning the usage of data in primary care commissioning. It was therefore challenging to create an initial programme theory and it was not possible to do so in a realist format, which could have facilitated and accelerated CMO development. In addition, there are several limitations inherent to any realist review, including that more informal or ‘off the record’ information on contextual factors such as interpersonal relationships or power struggles may not be documented in studies [109]. A realist review is not reproducible in the same sense as a Cochrane review, but quality assurance can be provided by researchers being explicit about review methods [109]. While we have attempted to make our methods and reasoning transparent, the CMOs developed may have been different had this study been conducted by a commissioner or another policy-maker.

Implications for practice and policy

Based on the CMOs, we have developed several policy recommendations that could potentially facilitate and increase commissioners’ usage of data. As previously mentioned, our CMOs are interrelated, and it is unlikely that implementing a single recommendation or addressing a single CMO can effect change. In addition, each commissioning organisation likely has a different baseline in terms of how (often) data are used, meaning not every recommendation is applicable to every organisation. Therefore, the following recommendations are offered for commissioners to consider; addressing them will likely increase the chances that data are used in commissioning, but their applicability will vary:

- Increase collaboration with the external providers of data: when commissioners can develop relationships and collaborate with the external providers of data, they can better communicate their needs and co-produce relevant evidence. This can also facilitate feelings of trust and make commissioners less wary of external providers’ motivations, thereby making them more likely to use the data.
- Implement a ‘data champion’ in each commissioning team: commissioners are receptive to messaging and leadership from their peers around data. Having a data champion in their commissioning team who they view as an equal can increase engagement with data.
- Give commissioners access to up-to-date, locally relevant, and manipulatable datasets: commissioners want access to data they can perform their own analyses on, rather than static data, as this can make the data more useful and meaningful to them.
- Improve the availability of meaningful integrated data: with the advent of ICSs, integrated, combined datasets (including combining data across different types of care, e.g. primary and secondary care as well as data on social determinants of health e.g. inequality data) are more important than ever. Being able to see the full ‘patient journey’ and considering how non-medical factors impact health outcomes can enable customised commissioning.
- Define skills and competencies for commissioners and provide training: commissioners come from a wide range of academic backgrounds and prior work experiences. Some commissioners lack the capability to perform or interpret data analysis. Defining the skills that are expected of commissioners in terms of data analysis and providing training could increase engagement with data.

Implications for research

In mid-2022, CCGs were dissolved and replaced by ICSs, with ICBs of each ICS taking on a range of commissioning responsibilities, including primary care services [9]. Several recent NHS policy documents have outlined the importance of ICSs using data to deliver population health improvements, address health inequalities, and develop ICS-wide fully linked datasets from data that are still mostly held separately by individual services and their commissioners [110]. Future research could test the applicability of the CMOs developed in this study to ICSs, in particular CMO 9 (combined datasets) and CMO 11 (interoperability), and refine and develop new CMOs as required.

Given the government’s ‘National Data Strategy’ and its commitment to using ‘better data’ for ‘better decision making’ to deliver more tailored and efficient policies and make savings [111], future research could assess, compare, and contrast how similar areas of policy-making (such as social care, education, or the justice system) use data to inform the planning and commissioning of services. By identifying common barriers and facilitators to using data in policy-making as well as methods for overcoming the concomitant challenges, policy-makers could learn from each other and improve their own practice of evidence use.

Conclusions

Considering the NHS’s increased ambition, commitment to, and investment in using data to inform commissioning, combined with the financial pressures stemming from an ageing population facing increasingly complex health challenges, the need to understand how data can be used effectively to inform commissioning is greater than ever. One encouraging finding from this research is that commissioners do value data as a source of evidence to inform their decision-making, perceiving data to be ‘rational,’ persuasive, and useful if presented and used correctly. Although commissioners are often eager and willing to use data to inform commissioning decisions, they face a range of challenges that can impede their use, and addressing these where they occur will require changes to be made to the data themselves, as well as the way data are presented and shared with commissioners. In addition, increasing commissioners’ trust in the quality of data and strengthening their relationships with and trust in those who provide them with data could facilitate data usage. There is evidence of a disconnect between how NHS policy-makers and providers of data believe the data are used in commissioning and how they are actually used, and greater collaboration and exchange between them and commissioners could facilitate better usage.

Availability of data and materials

Datasets used in the review are available from the corresponding author upon reasonable request.

Abbreviations

- CCGs: Clinical Commissioning Groups

- CSUs: Commissioning support units

- CMO: Context-mechanism-outcome

- ICBs: Integrated care boards

- ICSs: Integrated care systems

- NHS: National Health Service

- RAMESES: Realist And MEta-narrative Evidence Syntheses: Evolving Standards

References

Plewis I. Educational inequalities and education action zones. In: Pantazis C, Gordon D, editors. Tackling inequalities: Where are we now and what can be done? 1st ed. Bristol: Policy Press; 2000. p. 87–100.

Brownson RC, Fielding JE, Maylahn CM. Evidence-based public health: a fundamental concept for public health practice. Annu Rev Public Health. 2009;30:175–201. https://doi.org/10.1146/annurev.publhealth.031308.100134.

Kneale D, Rojas-Garcia A, Raine R, Thomas J. The use of evidence in English local public health decision-making: a systematic scoping review. Implement Sci. 2017;12. https://doi.org/10.1186/s13012-017-0577-9

Wye L, Brangan E, Cameron A, Gabbay J, Klein JH, Pope C. Evidence based policy making and the ‘art’ of commissioning - how English healthcare commissioners access and use information and academic research in ‘real life’ decision-making: an empirical qualitative study. BMC Health Serv Res. 2015;15:430. https://doi.org/10.1186/s12913-015-1091-x.

Maniatopoulos G, Haining S, Allen J, Wilkes S. Negotiating commissioning pathways for the successful implementation of innovative health technology in primary care. BMC Health Serv Res. 2019;19:648. https://doi.org/10.1186/s12913-019-4477-3.

Shaw SE, Smith JA, Porter A, Rosen R, Mays N. The work of commissioning: a multisite case study of healthcare commissioning in England’s NHS. BMJ Open. 2013;3:e003341. https://doi.org/10.1136/bmjopen-2013-003341.

McDermott I, Checkland K, Moran V, Warwick-Giles L. Achieving integrated care through commissioning of primary care services in the English NHS: a qualitative analysis. BMJ Open. 2019;9. https://doi.org/10.1136/bmjopen-2018-027622

Surrey Heartlands Health and Care Partnership. Primary care commissioning. 2023. (Accessed 6 May 2023, at https://www.surreyheartlands.org/primary-care-commissioning.)

Charles A. Integrated care systems explained: making sense of systems, places and neighbourhoods. King’s Fund, 2022. (Accessed 1 Apr 2023, at https://www.kingsfund.org.uk/publications/integrated-care-systems-explained.)

Roland M, Everington S. Tackling the crisis in general practice. BMJ. 2016;352:i942. https://doi.org/10.1136/bmj.i942.

Checkland K, McDermott I, Coleman A, Warwick-Giles L, Bramwell D, Allen P, Peckham S. Planning and managing primary care services: lessons from the NHS in England. Public Money Manage. 2018;38:261–70. https://doi.org/10.1080/09540962.2018.1449467.

Goodwin N, Dixon A, Poole T, Raleigh V. Improving the quality of care in general practice. King’s Fund, 2011. (Accessed 1 May 2023, at https://www.kingsfund.org.uk/publications/improving-quality-care-general-practice.)

Paddison CAM, Rosen R. Tackling the crisis in primary care. BMJ. 2022;377:o1485. https://doi.org/10.1136/bmj.o1485.

Wilson PM, Farley K, Bickerdike L, Booth A, Chambers D, Lambert M, Thompson C, Turner R, Watt IS. Does access to a demand-led evidence briefing service improve uptake and use of research evidence by health service commissioners? A controlled before and after study. Implement Sci. 2017;12:20. https://doi.org/10.1186/s13012-017-0545-4.

NHS England. The role of research and evidence in commissioning. (Accessed 1 Apr 2022, at https://www.innovationagencynwc.nhs.uk/media/PDF/NHSI_FINAL_INFOGRAPHIC.pdf.)

NHS England. Data services. (Accessed 1 Oct 2021, at https://www.england.nhs.uk/data-services/.)

Schang L, Morton A, DaSilva P, Bevan G. From data to decisions? Exploring how healthcare payers respond to the NHS Atlas of Variation in Healthcare in England. Health Policy. 2014;114:79–87. https://doi.org/10.1016/j.healthpol.2013.04.014.

Cripps M. Leadership Q&A: NHS RightCare. 2018. (Accessed 1 Jan 2023, at https://www.hsj.co.uk/leadership-qanda/leadership-qanda-nhs-rightcare/7021633.article.)

West of England Academic Health Science Network. Two new online toolkits launched to support NHS commissioners. 2016. (Accessed 1 Oct 2021, at https://www.weahsn.net/news/online-toolkits-launched-to-support-nhs-commissioners/.)

Rodriguez Santana I, Aragón MJ, Rice N, Mason AR. Trends in and drivers of healthcare expenditure in the English NHS: a retrospective analysis. Health Econ Rev. 2020;10:20. https://doi.org/10.1186/s13561-020-00278-9.

Kingston A, Robinson L, Booth H, Knapp M, Jagger C, for the Mp. Projections of multi-morbidity in the older population in England to 2035: estimates from the Population Ageing and Care Simulation (PACSim) model. Age Ageing. 2018;47:374–380. https://doi.org/10.1093/ageing/afx201

House of Commons Science and Technology Committee. The big data dilemma. 2016. (Accessed 1 Feb 2022, at https://publications.parliament.uk/pa/cm201516/cmselect/cmsctech/468/468.pdf.)

Dropkin G. RightCare: wrong answers. J Public Health. 2017;40:e367–74. https://doi.org/10.1093/pubmed/fdx136.

Arie S. Can we save the NHS by reducing unwarranted variation? BMJ. 2017;358:j3952. https://doi.org/10.1136/bmj.j3952.

Sabey A. An evaluation of a training intervention to support the use of evidence in healthcare commissioning in England. Int J Evid Based Healthc. 2020;18:58–64.

Clarke A, Taylor-Phillips S, Swan J, Gkeredakis E, Mills P, Powell J, Nicolini D, Roginski C, Scarbrough H, Grove A. Evidence-based commissioning in the English NHS: who uses which sources of evidence? A survey 2010/2011. BMJ Open. 2013;3. https://doi.org/10.1136/bmjopen-2013-002714

Gabbay J, le May A, Pope C, Brangan E, Cameron A, Klein JH, Wye L. Uncovering the processes of knowledge transformation: the example of local evidence-informed policy-making in United Kingdom healthcare. Health Res Policy Syst. 2020;18:110. https://doi.org/10.1186/s12961-020-00587-9.

Swan J, Gkeredakis E, Manning RM, Nicolini D, Sharp D, Powell J. Improving the capabilities of NHS organisations to use evidence: a qualitative study of redesign projects in Clinical Commissioning Groups. Health Serv Deliv Res. 2017;5. https://doi.org/10.3310/hsdr05180

Wong G, Greenhalgh T, Westhorp G, Buckingham J, Pawson R. RAMESES publication standards: realist syntheses. BMC Med. 2013;11:21. https://doi.org/10.1186/1741-7015-11-21.

Jagosh J. Realist synthesis for public health: building an ontologically deep understanding of how programs work, for whom, and in which contexts. Annu Rev Public Health. 2019;40:361–72. https://doi.org/10.1146/annurev-publhealth-031816-044451.

Papoutsi C, Mattick K, Pearson M, Brennan N, Briscoe S, Wong G. Interventions to improve antimicrobial prescribing of doctors in training (IMPACT): a realist review. Health Serv Deliv Res. 2018;6. https://doi.org/10.3310/hsdr06100

Papoutsi C, Mattick K, Pearson M, Brennan N, Briscoe S, Wong G. Social and professional influences on antimicrobial prescribing for doctors-in-training: a realist review. J Antimicrob Chemother. 2017;72:2418–30. https://doi.org/10.1093/jac/dkx194.

Pawson R, Greenhalgh T, Harvey G, Walshe K. Realist synthesis: an introduction. 2004. (Accessed 1 Dec 2022, at https://www.betterevaluation.org/sites/default/files/RMPmethods2.pdf.)

Gray A. The commissioning cycle. Milton Keynes Clinical Commissioning Group, 2012. (Accessed 1 Jan 2022, at https://studylib.net/doc/5355452/the-commissioning-cycle.)

NHS England. Commissioning cycle. (Accessed 1 Jan 2021, at https://www.england.nhs.uk/get-involved/resources/commissioning-engagement-cycle/.)

Wong G. Data gathering in realist reviews: looking for needles in haystacks. In: Emmel N, Greenhalgh J, Manzano A, Monaghan M, Dalkin S, editors. Doing realist research. London: SAGE Publications Ltd; 2018. p. 131–46.

Pawson R. Evidence-based policy: a realist perspective. London: Sage; 2006.

Gough D, Thomas J, Oliver S. Clarifying differences between review designs and methods. Syst Rev. 2012;1. https://doi.org/10.1186/2046-4053-1-28

QSR International Pty Ltd. NVivo (version 12). 2018.

Greenhalgh T, Pawson R, Wong G, Westhorp G, Greenhalgh J, Manzano A, Jagosh J. Retroduction in realist evaluation. 2017. (Accessed 1 Dec 2022, at http://www.ramesesproject.org/media/RAMESES_II_Retroduction.pdf.)

Jagosh J. Retroductive theorizing in Pawson and Tilley’s applied scientific realism. J Crit Realism. 2020;19:121–30. https://doi.org/10.1080/14767430.2020.1723301.

Blaikie N. Retroduction. In: Lewis-Beck MS, Bryman A, Liao TF, editors. The SAGE Encyclopedia of Social Science Research Methods. Thousand Oaks: SAGE Publications, Inc.; 2004. p. 972.

Jagosh J, Pluye P, Wong G, Cargo M, Salsberg J, Bush PL, Herbert CP, Green L, Greenhalgh T, Macaulay AC. Critical reflections on realist review: insights from customizing the methodology to the needs of participatory research assessment. Res Synth Methods. 2014;5:131–41. https://doi.org/10.1002/jrsm.1099.

Fletcher AJ. Applying critical realism in qualitative research: methodology meets method. Int J Soc Res Methodol. 2016;20:181–94. https://doi.org/10.1080/13645579.2016.1144401.

Mukumbang FC, Marchal B, Van Belle S, van Wyk B. Using the realist interview approach to maintain theoretical awareness in realist studies. Qual Res. 2019;20:485–515. https://doi.org/10.1177/1468794119881985.

Eastwood JG, Jalaludin BB, Kemp LA. Realist explanatory theory building method for social epidemiology: a protocol for a mixed method multilevel study of neighbourhood context and postnatal depression. Springerplus. 2014;3. https://doi.org/10.1186/2193-1801-3-12

Wong G, Westhorp G, Pawson R, Greenhalgh T. Realist synthesis: RAMESES training materials. 2013. (Accessed 1 Dec 2022, at https://www.ramesesproject.org/media/Realist_reviews_training_materials.pdf.)

Haynes A, Rowbotham SJ, Redman S, Brennan S, Williamson A, Moore G. What can we learn from interventions that aim to increase policy-makers’ capacity to use research? A realist scoping review. Health Res Policy Syst. 2018;16. https://doi.org/10.1186/s12961-018-0277-1

Haynes A, Brennan S, Redman S, Williamson A, Makkar SR, Gallego G, Butow P. Policymakers’ experience of a capacity-building intervention designed to increase their use of research: a realist process evaluation. Health Res Policy Syst. 2017;15:99. https://doi.org/10.1186/s12961-017-0234-4.

Haynes A, Brennan S, Carter S, O’Connor D, Schneider C, Turner T, Gallego G, the Ct. Protocol for the process evaluation of a complex intervention designed to increase the use of research in health policy and program organisations (the SPIRIT study). Implement Sci. 2014;9. https://doi.org/10.1186/s13012-014-0113-0

Smith J, Shaw S, Porter A, Rosen R, Blunt I, Davies A, Eastmure E, Mays N. Commissioning high quality care for people with long-term conditions. Final report. Nuffield Trust, 2013. (Accessed 1 Dec 2022, at https://www.nuffieldtrust.org.uk/files/2017-01/commissioning-high-quality-care-for-long-term-conditions-web-final.pdf.)

Curry N, Goodwin N, Naylor CD, Robertson R. Practice-based commissioning: reinvigorate, replace or abandon? King’s Fund, 2008. (Accessed 1 Dec 2022, at https://www.kingsfund.org.uk/sites/default/files/Practice-based-Commissioning-Reinvigorate-replace-abandon-Curry-Goodwin-Naylor-Robertson-Kings-Fund-November-2008.PDF.)

Wye L, Brangan E, Cameron A, Gabbay J, Klein J, Pope C. Knowledge exchange in health-care commissioning: case studies of the use of commercial, not-for-profit and public sector agencies, 2011–14. Health Serv Deliv Res. 2015;3. https://doi.org/10.3310/hsdr03190

Naylor C, Goodwin N. Building high-quality commissioning: what role can external organisations play? King’s Fund, 2010. (Accessed 1 Apr 2022, at https://www.kingsfund.org.uk/projects/building-high-quality-commissioning-what-role-can-external-commissioners-play.)

McDermott I, Warwick-Giles L, Gore O, Moran V, Bramwell D, Coleman A, Checkland K. Understanding primary care co-commissioning: Uptake, development, and impacts. Final report. PRUComm, 2018. (Accessed 1 Dec 2022, at https://prucomm.ac.uk/2018/03/21/understanding-primary-care-co-commissioning-uptake-development-and-impacts/.)

Holder H, Robertson R, Ross S, Bennett LB, Gosling J, Curry N. Risk or reward? The changing role of CCGs in general practice. King’s Fund, 2015. (Accessed Dec 2022, at https://www.kingsfund.org.uk/sites/default/files/field/field_publication_file/risk-or-reward-the-changing-role-of-CCGs-in-general-practice.pdf.)

Schang L, Morton A. LSE/Right Care project on NHS Commissioners’ use of the NHS Atlas of Variation in Healthcare. Case studies of local uptake. In: Right Care Casebook Series. http://www.rightcare.nhs.uk/atlas. 2012.

Elliott H, Popay J. How are policy makers using evidence? Models of research utilisation and local NHS policy making. J Epidemiol Community Health. 2000;54:461–8. https://doi.org/10.1136/jech.54.6.461.

Weiss C. The many meanings of research utilization. Public Adm Rev. 1979;39:426–31. https://doi.org/10.2307/3109916.

Smith J, Curry N, Mays N, Dixon J. Where next for commissioning in the English NHS? King’s Fund and Nuffield Trust, 2010. (Accessed 1 Dec 2022, at http://researchonline.lshtm.ac.uk/3828/.)

Wye L, Brangan E, Cameron A, Gabbay J, Klein JH, Anthwal R, Pope C. What do external consultants from private and not-for-profit companies offer healthcare commissioners? A qualitative study of knowledge exchange. BMJ Open. 2015;5:e006558. https://doi.org/10.1136/bmjopen-2014-006558.

Miller R, Peckham S, Coleman A, McDermott I, Harrison S, Checkland K. What happens when GPs engage in commissioning? Two decades of experience in the English NHS. J Health Serv Res Policy. 2016;21:126–33. https://doi.org/10.1177/1355819615594825.

Swan J, Clarke A, Nicolini D, Powell J, Scarbrough H, Roginski C, Gkeredakis E, Mills P, Taylor-Phillips S. Evidence in management decisions (EMD): advancing knowledge utilization in healthcare management. National Institute for Health Research, 2012. (Accessed 1 Dec 2022, at http://www.netscc.ac.uk/hsdr/files/project/SDO_FR_08-1808-244_V01.pdf.)

Russell J, Greenhalgh T. Rhetoric, evidence and policymaking: a case study of priority setting in primary care. Research Department of Primary Care and Population Health, UCL, 2009. (Accessed 1 Apr 2022, at https://discovery.ucl.ac.uk/id/eprint/15560/1/15560.pdf.)

Smith J, Regen E, Shapiro J, Baines D. National evaluation of general practitioner commissioning pilots: lessons for primary care groups. Br J Gen Pract. 2000;50:469–72.

Dewhurst R. A matter of facts. Public Finance 2008:20–21.

Allison R, Lecky DM, Beech E, Costelloe C, Ashiru-Oredope D, Owens R, McNulty CAM. What antimicrobial stewardship strategies do NHS commissioning organizations implement in primary care in England? JAC Antimicrob Resist. 2020;2. https://doi.org/10.1093/jacamr/dlaa020

NHS National Treatment Agency for Substance Misuse. Addiction to medicine: an investigation into the configuration and commissioning of treatment services to support those who develop problems with prescription-only or over-the-counter medicine. The National Treatment Agency for Substance Misuse, 2011. (Accessed 1 Dec 2022, at https://www.bl.uk/collection-items/addiction-to-medicine-an-investigation-into-the-configuration-and-commissioning-of-treatment-services-to-support-those-who-develop-problems-with-prescriptiononly-or-overthecounter-medicine.)

Alderson SL, Farragher TM, Willis TA, Carder P, Johnson S, Foy R. The effects of an evidence- and theory-informed feedback intervention on opioid prescribing for non-cancer pain in primary care: a controlled interrupted time series analysis. PLoS Med. 2021;18:e1003796. https://doi.org/10.1371/journal.pmed.1003796.

Palin V, Tempest E, Mistry C, van Staa TP. Developing the infrastructure to support the optimisation of antibiotic prescribing using the learning healthcare system to improve healthcare services in the provision of primary care in England. BMJ Health Care Inform. 2020;27:e100147. https://doi.org/10.1136/bmjhci-2020-100147.

Holmes RD, Bate A, Steele JG, Donaldson C. Commissioning NHS dentistry in England: issues for decision-makers managing the new contract with finite resources. Health Policy. 2009;91:79–88. https://doi.org/10.1016/j.healthpol.2008.11.007.

Billings JR. Investigating the process of community profile compilation. J Res Nurs. 1996;1:270–83. https://doi.org/10.1177/174498719600100405.

Currie G, Croft C, Chen Y, Kiefer T, Staniszewska S, Lilford R. The capacity of health service commissioners to use evidence: a case study. Health Serv Deliv Res. 2018;6:1–198. https://doi.org/10.3310/hsdr06120.

Evans D. The impact of a quasi-market on sexually transmitted disease services in the UK. Soc Sci Med. 1999;49:1287–98. https://doi.org/10.1016/S0277-9536(99)00203-8.

Salway S, Turner D, Mir G, Bostan B, Carter L, Skinner J, Gerrish K, Ellison GTH. Towards equitable commissioning for our multiethnic society: a mixed-methods qualitative investigation of evidence utilisation by strategic commissioners and public health managers. Health Serv Deliv Res. 2013;1. https://doi.org/10.3310/hsdr01140

Storey J, Holti R, Hartley J, Marshall M, Matharu T. Clinical leadership in service redesign using Clinical Commissioning Groups: a mixed-methods study. Health Serv Deliv Res. 2018;6. https://doi.org/10.3310/hsdr06020

Shepherd S. Power to the people. Health Serv J. 2009;119:4–5.

Chinamasa CF. Priority Setting among Primary Care Trusts in Northwest England: Approaches, Processes and Use of Evidence. UK: The University of Manchester; 2007.

Checkland K, Hammond J, Sutton M, Coleman A, Allen P, Mays N, Mason T, Wilding A, Warwick-Giles L, Hall A. Understanding the new commissioning system in England: contexts, mechanisms and outcomes. NIHR, 2018. (Accessed 20 Dec 2022, at https://prucomm.ac.uk/assets/uploads/blog/2018/11/Understanding-the-new-commissioning-system-in-England-FINAL-REPORT-PR-R6-1113-25001-post-peer-review-v2.pdf.)

Riley VA, Gidlow C, Ellis NJ. Uptake of NHS health check: issues in monitoring. Prim Health Care Res Dev. 2018:1–4. https://doi.org/10.1017/S1463423618000592

Cowie A. Facing the future: A study of the changing pattern of contracting within a National Health Service Community Trust 1994-2001. UK: The University of Manchester; 2002.

Dhillon A, Godfrey AR. Using routinely gathered data to empower locally led health improvements. London J Prim Care. 2013;5:92. https://doi.org/10.1080/17571472.2013.11493387.

Porter A, Mays N, Shaw SE, Rosen R, Smith J. Commissioning healthcare for people with long term conditions: the persistence of relational contracting in England’s NHS quasi-market. BMC Health Serv Res. 2013;13:S2. https://doi.org/10.1186/1472-6963-13-S1-S2.

McCafferty S, Williams I, Hunter D, Robinson S, Donaldson C, Bate A. Implementing world class commissioning competencies. J Health Serv Res Policy. 2012;17(Suppl 1):40–8. https://doi.org/10.1258/jhsrp.2011.011104.

Ellins J, Glasby J. Together we are better? Strategic needs assessment as a tool to improve joint working in England. J Integr Care. 2011;19:34–41. https://doi.org/10.1108/14769011111148159.

Shepperd S, Adams R, Hill A, Garner S, Dopson S. Challenges to using evidence from systematic reviews to stop ineffective practice: an interview study. J Health Serv Res Policy. 2013;18:160–6. https://doi.org/10.1177/1355819613480142.

Williams I, Bryan S. Cost-effectiveness analysis and formulary decision making in England: findings from research. Soc Sci Med. 2007;65:2116–29. https://doi.org/10.1016/j.socscimed.2007.06.009.

Offredy M. An exploratory study of the role and training needs of one primary care trust’s professional executive committee members. Prim Health Care Res Dev. 2005;6:149–61. https://doi.org/10.1191/1463423605pc227oa.

Moran V, Allen P, McDermott I. Investigating recent developments in the commissioning system: final report. Policy Research Unit in Commissioning and the Healthcare System (PRUComm), 2018. (Accessed 1 Dec 2022, at https://prucomm.ac.uk/assets/uploads/blog/2018/11/Recent-developments-in-commissioning-report_FOR-WEBSITE.pdf.)

Wyatt D, Cook J, McKevitt C. Perceptions of the uses of routine general practice data beyond individual care in England: a qualitative study. BMJ Open. 2018;8:e019378. https://doi.org/10.1136/bmjopen-2017-019378.

Department of Health. Informing healthier choices: Information and intelligence for healthy populations. 2006. (Accessed 1 Dec 2020, at https://www.bipsolutions.com/docstore/pdf/12834.pdf.)

Checkland K, Coleman A, Perkins N, McDermott I, Petsoulas C, Wright M, Gadsby E, Peckham S. Exploring the ongoing development and impact of Clinical Commissioning Groups. 2014. (Accessed 1 Dec 2022, at https://prucomm.ac.uk/assets/files/exploring-ongoing-development.pdf.)

Hollingworth W, Rooshenas L, Busby J, Hine CE, Badrinath P, Whiting PF, Moore THM, Owen-Smith A, Sterne JAC, Jones HE, et al. Using clinical practice variations as a method for commissioners and clinicians to identify and prioritise opportunities for disinvestment in health care: a cross-sectional study, systematic reviews and qualitative study. Health Soc Care Deliv Res. 2015;3. https://doi.org/10.3310/hsdr03130

Smith DP, Gould MI, Higgs G. (Re)surveying the uses of Geographical Information Systems in Health Authorities 1991–2001. Area. 2003;35:74–83. https://doi.org/10.1111/1475-4762.00112.

Wade E, Smith J, Peck E, Freeman T. Commissioning in the reformed NHS: policy into practice. 2006. (Accessed 1 Dec 2022, at https://www.semanticscholar.org/paper/Commissioning-in-the-reformed-NHS-%3A-policy-into-Wade-Smith/505bafeeb8137e18c2ea30e5b9b74a6374bdd9a9.)

Robinson S, Dickinson H, Freeman T, Rumbold B, Williams I. Structures and processes for priority-setting by health-care funders: a national survey of primary care trusts in England. Health Serv Manage Res. 2012;25:113–20. https://doi.org/10.1258/hsmr.2012.012007.

Naylor C, Goodwin N. The use of external consultants by NHS commissioners in England: what lessons can be drawn for GP commissioning? J Health Serv Res Policy. 2011;16:153–60. https://doi.org/10.1258/jhsrp.2010.010081.

Checkland K, Coleman A, Harrison S, Hiroeh U. ‘We can’t get anything done because...’: making sense of ‘barriers’ to practice-based commissioning. J Health Serv Res Policy. 2009;14:20–6. https://doi.org/10.1258/jhsrp.2008.008043

Harries U, Elliott H, Higgins A. Evidence-based policy-making in the NHS: exploring the interface between research and the commissioning process. J Public Health. 1999;21:29–36. https://doi.org/10.1093/pubmed/21.1.29.

McDermott I, Coleman A, Perkins N, Osipovič D, Petsoulas C, Checkland K. Exploring the GP ‘added value’ in commissioning: what works, in what circumstances, and how? Final Report. PRUComm, 2015. (Accessed 1 Dec 2022, at https://prucomm.ac.uk/assets/uploads/blog/2015/10/CCG2-final-report-post-review-v3-final.pdf.)

Sheaff R, Charles N, Mahon A, Chambers N, Morando V, Exworthy M, Byng R, Mannion R, Llewellyn S. NHS commissioning practice and health system governance: a mixed-methods realistic evaluation. Health Serv Deliv Res. 2015;3. https://doi.org/10.3310/hsdr03100

Braun D, Guston DH. Principal-agent theory and research policy: an introduction. Sci Public Policy. 2003;30:302–8. https://doi.org/10.3152/147154303781780290.

Buchanan A. Principal/agent theory and decision making in health care. Bioethics. 1988;2:317–33. https://doi.org/10.1111/j.1467-8519.1988.tb00057.x.

Tyler I, Pauly B, Wang J, Patterson T, Bourgeault I, Manson H. Evidence use in equity focused health impact assessment: a realist evaluation. BMC Public Health. 2019;19:230. https://doi.org/10.1186/s12889-019-6534-6.

Martin-Fernandez J, Aromatario O, Prigent O, Porcherie M, Ridde V, Cambon L. Evaluation of a knowledge translation strategy to improve policymaking and practices in health promotion and disease prevention setting in French regions: TC-REG, a realist study. BMJ Open. 2021;11:e045936. https://doi.org/10.1136/bmjopen-2020-045936.

Orton L, Lloyd-Williams F, Taylor-Robinson D, O’Flaherty M, Capewell S. The use of research evidence in public health decision making processes: systematic review. PLOS ONE. 2011;6:e21704. https://doi.org/10.1371/journal.pone.0021704.

Turner S, D’Lima D, Hudson E, Morris S, Sheringham J, Swart N, Fulop NJ. Evidence use in decision-making on introducing innovations: a systematic scoping review with stakeholder feedback. Implement Sci. 2017;12:145. https://doi.org/10.1186/s13012-017-0669-6.

Vindrola-Padros C, Eyre L, Baxter H, Cramer H, George B, Wye L, Fulop NJ, Utley M, Phillips N, Brindle P, et al. Addressing the challenges of knowledge co-production in quality improvement: learning from the implementation of the researcher-in-residence model. BMJ Qual Saf. 2019;28:67–73. https://doi.org/10.1136/bmjqs-2017-007127.

Pawson R, Greenhalgh T, Harvey G, Walshe K. Realist review - a new method of systematic review designed for complex policy interventions. J Health Serv Res Policy. 2005;10(Suppl 1):21–34. https://doi.org/10.1258/1355819054308530.

Green H, Evans S. What are Integrated Care Systems and what are the population health data and intelligence changes? NIHR, 2022. (Accessed 2 Mar 2023, at https://arc-eoe.nihr.ac.uk/news-insights/news-latest/what-are-integrated-care-systems-and-what-are-population-health-data-and.)

Gov.UK. National Data Strategy (policy paper). 2020. (Accessed 1 Mar 2023, at https://www.gov.uk/government/publications/uk-national-data-strategy/national-data-strategy#data-3-4.)

Acknowledgements

We thank Maryam Ahmadyar for helping with the screening and study selection phase. We also thank the three people who had informal conversations with us to facilitate the development of the programme theory.

Funding

This project was completed as part of Alexandra Jager’s DPhil (PhD) project, funded by an NIHR SPCR Studentship in Primary Health Care.

Author information

Contributions

Study design: AJ, GW, CP. Searches: AJ, NR. Data acquisition: AJ, NR. Data analysis: AJ, GW, CP. Initial draft manuscript: AJ, GW, CP. Reviewing and editing of manuscript: AJ, GW, CP, NR. All authors have read and agreed to the final version of the manuscript.

Authors’ information

N/A.

Ethics declarations

Ethics approval and consent to participate

Not applicable as this is a synthesis of existing literature.

Consent for publication

Not applicable as this is drawing on previously published data that we appropriately reference in the paper and supplementary materials.

Competing interests

The authors declare no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Additional file 1. Detailed overview of 5-step review process.

Additional file 2. Emerging CMO configurations.

Additional file 3. Literature research.

Additional file 4. Table of included studies.

Additional file 5. Final programme theory.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Jager, A., Wong, G., Papoutsi, C. et al. The usage of data in NHS primary care commissioning: a realist review. BMC Med 21, 236 (2023). https://doi.org/10.1186/s12916-023-02949-w
