Abstract

Background

The use of routine immunization data by health care professionals in low- and middle-income countries remains an underutilized resource in decision-making. Despite the significant resources invested in developing national health information systems, systematic reviews of the effectiveness of data use interventions are lacking. Applying a realist review methodology, this study synthesized evidence of effective interventions for improving data use in decision-making.

Methods

We searched PubMed, POPLINE, Centre for Agriculture and Biosciences International Global Health, and African Journals Online for published literature. Grey literature was obtained from conference, implementer, and technical agency websites and requested from implementing organizations. Articles were included if they reported on an intervention designed to improve routine data use or reported outcomes related to data use, and targeted health care professionals as the principal data users. We developed a theory of change a priori for how we expect data use interventions to influence data use. Evidence was then synthesized according to data use intervention type and level of the health system targeted by the intervention.

Results

The searches yielded 549 articles, of which 102 met our inclusion criteria, including 49 from peer-reviewed journals and 53 from grey literature. A total of 66 articles reported on immunization data use interventions and 36 articles reported on data use interventions for other health sectors. We categorized 68 articles as research evidence and 34 articles as promising strategies. We identified ten primary intervention categories, including electronic immunization registries, which were the most reported intervention type (n = 14). Among the research evidence from the immunization sector, 32 articles reported intermediate outcomes related to data quality and availability, data analysis, synthesis, interpretation, and review. Only 17 articles reported data-informed decision-making as an intervention outcome, which could be explained by the lack of consensus around how to define and measure data use.

Conclusions

Few immunization data use interventions have been rigorously studied or evaluated. The review highlights gaps in the evidence base, which future research and better measures for assessing data use should attempt to address.

Background

Within global health, it is widely acknowledged that a cornerstone of well-functioning health systems is sufficiently high-quality data to guide decision-making around health service delivery. Calls to improve the quality and use of data feature prominently in several national plans of action and in global strategies like the Global Vaccine Action Plan. Donor bodies, including The Global Fund to Fight AIDS, Tuberculosis and Malaria; the US President’s Emergency Plan for AIDS Relief (PEPFAR); and Gavi, the Vaccine Alliance, among others, have also identified data quality and data use as strategic focus areas. While investments in national health information systems and advances in information technology have improved the timeliness, quality, and availability of health data, data remain an underutilized resource in decision-making, especially at the level of health care delivery [1, 2]. In the immunization sector, data use is recognized as lacking in the design and implementation of programs, leading to calls for more evidence regarding effective strategies to improve data use [3].

Although the barriers to using health data have been relatively well studied and point to insufficient skills in data use core competencies among health workers, lack of trust in data due to poor quality, and inadequate availability because of fragmented data across multiple sources, among others [1, 4,5,6,7,8], to date there is no formal review of evidence from existing efforts to strengthen immunization data use. To address this gap, we conducted a realist systematic review of existing research evidence on immunization data use interventions in low- and middle-income countries (LMICs). Our review was designed to answer two specific research questions:

1. What are the most effective interventions to improve the use of data for immunization program and policy decision-making?

2. Why do these interventions produce the outcomes that they do?

Methods

To answer our research questions, we conducted a realist review of the evidence from published and grey literature. Realist review is a theory-driven type of literature review that aims to test and refine the underlying assumptions for how an intervention is supposed to work and under what conditions [9, 10]. While traditional systematic review approaches follow a highly specified methodology with predetermined eligibility criteria to answer a specific research question, the realist review methodology (described elsewhere) involves an equally rigorous process for systematic synthesis of evidence but is more iterative and methodologically flexible [9, 10].

We developed a review protocol (Appendix A) with input from a technical steering committee composed of ten global and regional senior leaders in the areas of immunization, data quality, and data use from the Pan American Health Organization (PAHO), World Health Organization (WHO) headquarters, the Bill & Melinda Gates Foundation, PATH, the US Centers for Disease Control and Prevention, the United Nations Children’s Fund, and Gavi, as well as country representatives from both the Better Immunization Data Initiative (BID Initiative) Learning Network and Improving Data Quality for Immunizations core project countries.

We first developed a theory of change (TOC) (Fig. 1) to establish the theoretical framework for how we expect data use interventions to influence data use. The hypotheses and assumptions reflected in the TOC were informed by existing health information and data use frameworks, as well as systematic reviews on topics related to health information system strengthening and evidence-informed decision-making [11,12,13,14,15,16]. We adopted the WHO definitions of data quality and data use. WHO’s data quality review framework defines data quality according to four dimensions: completeness and timeliness, internal consistency of reported data, external consistency, and external comparisons of population data [17]. Data-informed decision-making is defined by WHO as a process in which data collected by the health system are converted into usable information through data processing, analysis, synthesis, interpretation, review, and discussion, then used to decide on a course of action [18]. From this literature, we identified six barriers to data use (demand, access and availability, quality, skills, structure and process, and communication) and three behavioral drivers (capability, motivation, and opportunity), which are represented in the TOC as mechanisms of data use interventions [11,12,13,14]. We hypothesize that to be effective, any intervention must address one or more of these mechanisms. Likewise, we expect that interventions addressing these mechanisms will lead to intermediate outcomes including data quality and availability, analysis, synthesis, and discussion of data, which we posit are also necessary precursors to data use. From this point, the actual use of data to make program and health service delivery decisions is captured by the data use actions, which are based on the WHO Global Framework to Strengthen Immunization and Surveillance Data for Decision-making [15]. 
The data use actions represent our outcome of interest in this review; they specify where data are used, by whom, and for what purpose.

Literature search

We searched PubMed, POPLINE, Centre for Agriculture and Biosciences International Global Health, and African Journals Online for published articles. Search terms included vaccine, immunization, data quality, data use, health information system, health management information system, logistics management information system, electronic medical record, electronic health record, electronic patient record, medical record system, immunization register, home-based record, and supply chain data. The search strategies retained for each database are included in Table 1. We purposively filled gaps with additional searches on specific intervention categories. We also performed “reference mining” by searching the references of retrieved articles for additional relevant publications. Using the same search terms, we searched for grey literature on vaccine and digital health conference, implementer, and technical agency websites. A complete list of the websites searched for grey literature is included in the review protocol (Appendix A). We collaborated with colleagues from PAHO to collect literature from country offices in the Latin America and Caribbean region and relied on input from the steering committee to identify immunization data use interventions and implementing organizations. We contacted these organizations to collect unpublished evaluations, studies, and reviews of data use interventions. The first round of searches was conducted between January and April 2018 and a second round was conducted between June and August 2018. During the second round, we included literature from other health sectors such as HIV/AIDS and maternal and child health to fill gaps in the immunization evidence.

Inclusion criteria

During the first round of data collection, literature that met all the following criteria was eligible for inclusion:

1. Focus is on routine health system data. The literature reported on use of routine immunization data, which we defined as data that are continuously collected by health information systems and used by immunization programs to monitor and improve service delivery. This excluded surveillance data used for detecting disease outbreaks; financial and human resources data; and other nonroutine data, such as survey data and research evidence.

2. An intervention is reported. The literature reported on an intervention designed to improve routine data use.

3. Data use outcomes are reported. The literature reported on intervention outcomes related to data use for decision-making.

4. Intervention targeted data users. The intervention targeted health care professionals (e.g., health workers, managers, and decision-makers) as the principal users of routine data. This excluded interventions that targeted recipients of health care services (e.g., patients or communities).

The second round of data collection expanded the first criterion to include literature from other health sectors while all other criteria remained the same. We included systematic reviews that met our inclusion criteria during the second round of data collection and consulted the primary studies for additional information only if the results of the primary studies were not sufficiently detailed in the systematic reviews.
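As an illustration only, the screening rule described above can be sketched as a simple predicate. The sketch assumes each piece of literature has already been judged by a reviewer against each criterion; the `Record` class, its field names, and the `is_eligible` function are hypothetical and do not come from the review protocol.

```python
from dataclasses import dataclass


@dataclass
class Record:
    """Reviewer judgments for one piece of literature (hypothetical field names)."""
    routine_data: bool           # criterion 1: reports on routine health system data
    immunization: bool           # criterion 1, first round only: immunization sector
    intervention: bool           # criterion 2: an intervention is reported
    data_use_outcomes: bool      # criterion 3: data use outcomes are reported
    targets_professionals: bool  # criterion 4: health care professionals are the data users


def is_eligible(record: Record, round_number: int) -> bool:
    """Return True only if the record meets every criterion for the given round."""
    if round_number not in (1, 2):
        raise ValueError("round_number must be 1 or 2")
    # The second round relaxed only the sector restriction in the first criterion.
    sector_ok = record.immunization or round_number == 2
    return (
        record.routine_data
        and sector_ok
        and record.intervention
        and record.data_use_outcomes
        and record.targets_professionals
    )


# Example: a routine HIV data use study would be screened out in round one
# but would be eligible in round two.
hiv_study = Record(True, False, True, True, True)
print(is_eligible(hiv_study, 1), is_eligible(hiv_study, 2))
```

The predicate requires all criteria at once, mirroring the statement that literature had to meet all of the listed criteria; the actual screening was a reviewer judgment on abstracts and full text, not an automated rule.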

Data extraction and study quality assessment

A six-step process was used in which we:

1. Read the literature abstracts to determine if they met the inclusion criteria.

2. Classified each piece of included literature based on the primary data use intervention type.

3. Read the full text of included articles and coded text segments using ATLAS.ti.

4. Extracted characteristics of the intervention package, including the intervention design and strategies, the types of health care professionals and levels of the health system targeted by the intervention, implementation settings, outcomes, and details on how the interventions functioned.

5. Assessed the quality of the immunization data use articles categorized as evidence using the Mixed Methods Appraisal Tool checklist [19].

6. Compiled the immunization data use metadata for each record in a Microsoft Excel workbook and visualized them in an evidence gap map using Tableau.

Articles were read by a three-member review team and coded according to a coding tree based on the TOC. Approximately 20% of the articles were cross-read and coded to ensure consistent coding among reviewers.

Analysis

We did not exclude literature based on study design or quality, but rather segmented the included literature into two categories: evidence referred to studies and evaluations that applied scientific research methods or evaluation design, and promising strategies referred to grey or published literature that did not qualify as a study or evaluation but described an intervention with strong theoretical plausibility of improving data use.

For each intervention category, we analyzed the intervention’s effect on data use by health care professionals at different levels of the health system. We recorded how the interventions functioned and what mechanisms made them successful, as well as the reasons why interventions did not show evidence of effectiveness. We synthesized the results according to the intermediate outcomes of data quality and availability; data analysis, synthesis, interpretation, and review; and data use actions at different levels of the health system, as conveyed in our TOC. By mapping the evidence to the TOC, we tested the hypothesized relationships between intervention strategies, mechanisms, and data use outcomes and visualized the results in an evidence gap map. We presented a synthesis of our preliminary findings during a workshop held in Washington, DC, May 16 and 17, 2018, with members of the steering committee and other immunization stakeholders. During the workshop, we identified gaps in the immunization literature and decided to conduct a second round of data collection. For intervention categories that had limited evidence and were applicable outside of immunization, we expanded the review to include evidence from other health sectors.

Assessing strength of evidence

For each intervention category, we rated the strength of evidence that the intervention resulted in the intermediate outcomes and data use actions outlined in our TOC. We assigned a strength of evidence rating of high, moderate, low, or very low based on a subjective estimation of four domains: (a) study design; (b) quality; (c) number of studies and their agreement; and (d) context dependence of the evidence (Table 2).

Results

Search results.

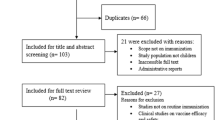

The database searches yielded a total of 426 articles in the first round and an additional 123 articles in the second round of data collection (Fig. 2). Of these 549 articles, 102 met our inclusion criteria, including 49 from peer-reviewed journals and 53 from grey literature. A total of 66 articles reported on immunization data use interventions and 36 articles reported on data use interventions for other health sectors. We categorized 68 articles as research evidence and 34 articles as promising strategies. Ninety-five articles concerned interventions implemented in LMICs and seven articles related to interventions in high-income countries. We identified ten primary intervention categories (Table 3). Electronic immunization registries (EIRs) were the most reported primary intervention (n = 14), followed by decision support systems (n = 13) and multicomponent interventions (n = 13). Most articles described a primary intervention type and were often complemented by secondary intervention components. For example, EIRs were typically implemented with training and/or supportive supervision. We categorized an intervention as multicomponent when there were multiple equally emphasized strategies.
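The counts reported above can be cross-checked with simple arithmetic. The short sketch below uses only the figures stated in the text; the variable and function names are illustrative.

```python
# Counts as reported in the search results above.
first_round, second_round = 426, 123
total_retrieved = 549
included = 102

# Each breakdown partitions the same set of included articles.
breakdowns = {
    "source": {"peer-reviewed journals": 49, "grey literature": 53},
    "sector": {"immunization": 66, "other health sectors": 36},
    "category": {"research evidence": 68, "promising strategies": 34},
    "setting": {"LMICs": 95, "high-income countries": 7},
}


def counts_are_consistent() -> bool:
    """Check that both search rounds and every breakdown sum to the stated totals."""
    if first_round + second_round != total_retrieved:
        return False
    return all(sum(parts.values()) == included for parts in breakdowns.values())


print(counts_are_consistent())
```

Each breakdown sums to the 102 included articles, and the two search rounds sum to the 549 articles retrieved.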

We plotted the results of the 66 articles that reported on immunization data use interventions by primary intervention type and evidence of intermediate outcomes and data use actions in an evidence gap map (Fig. 3). The gap map visualizes all pieces of evidence, including the strength and directionality of the evidence, and promising strategies. A complete list of the immunization data use articles and their quality appraisal scores is included in Appendix B. A detailed synthesis of evidence by intervention category and data use outcomes is included in Appendix C.
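To make the structure of an evidence gap map concrete, the following is a minimal sketch of the underlying tabulation: articles are counted per combination of primary intervention type and reported outcome, and combinations with no articles are the gaps. The records are placeholders for illustration only and are not data from this review; the function names are hypothetical, and the sketch omits the strength and directionality of evidence that the actual map (built in Tableau) also displays.

```python
from collections import Counter
from itertools import product


def gap_map_counts(records):
    """Count articles in each (intervention, outcome) cell of the map."""
    cells = Counter()
    for record in records:
        for outcome in record["outcomes"]:
            cells[(record["intervention"], outcome)] += 1
    return cells


def empty_cells(cells, interventions, outcomes):
    """List (intervention, outcome) pairs with no articles, i.e., evidence gaps."""
    return [pair for pair in product(interventions, outcomes) if cells[pair] == 0]


# Placeholder records for illustration only; NOT data from the review.
records = [
    {"intervention": "intervention A", "outcomes": ["data quality", "district data use"]},
    {"intervention": "intervention A", "outcomes": ["data quality"]},
    {"intervention": "intervention B", "outcomes": ["district data use"]},
]

cells = gap_map_counts(records)
gaps = empty_cells(
    cells,
    interventions=["intervention A", "intervention B"],
    outcomes=["data quality", "district data use"],
)
print(gaps)
```

An article that reports several outcomes contributes to several cells, so cell counts need not sum to the number of articles.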

Overview of included articles

Intermediate outcomes: availability of timely, high-quality data

A total of 35 articles reported evidence related to data availability, timeliness, and/or quality (Table 4). Among the immunization literature, 22 articles reported an improvement in the availability, timeliness, and/or quality of data. Digital information system interventions (EIR, logistics management information systems [LMIS], and HMIS) were the most common intervention type to report improvements in data availability [20,21,22, 25] and data quality [1, 20, 22, 25,26,27, 42, 120]. Five articles, mostly EIR interventions, did not find any effect on data availability, timeliness, and/or quality [24, 26, 28, 29, 35]. There was moderate-strength evidence that digital information system interventions were associated with improved data quality owing to the ease of automated feedback and functionalities such as logical checks and warning prompts for improbable or missing data entries [121]. In the included literature from other health sectors, a systematic review of District Health Information Software 2 (DHIS2) implementation found that as health workers gained timelier access to data through the electronic platform, they reported a greater sense of ownership and responsibility for producing high-quality data [42]. Likewise, a multi-country case study of DHIS2 in seven African countries found that more data use generated demand for higher-quality data [1]. Digital information system interventions that were reinforced by other data use activities were more likely to improve data quality than interventions that did not account for structural, behavioral, and other barriers. For example, a nonexperimental, mixed-methods study in South Africa found that human resources shortages, limited awareness of the importance of data, and lack of support and feedback mechanisms undermined the effectiveness of the DHIS platform at improving data quality [43].
Challenges at the point of data entry into digital information systems also lessened data quality, especially data completeness. Numerous articles reported inconsistent data entry due to heavy workloads, parallel paper and electronic reporting systems, disruption to existing workflows, unreliable internet connection, lack of trained staff, and faulty equipment [22, 26, 28, 54]. There was low-strength evidence to suggest that challenges associated with manual data entry could be overcome by digitization of paper immunization records and other mHealth solutions [22, 23, 26, 30,31,32, 100].

There was moderate- to high-strength evidence of improved data quality from repeated data quality assessments and low-strength evidence for data review meetings as part of broader efforts to develop health information infrastructure [60,61,62,63, 66,67,68, 72]. These interventions worked by bringing greater visibility and awareness to data quality issues. Likewise, interventions were more effective when implemented alongside supportive supervision and other forms of feedback that held health workers accountable and developed their skills to address data quality issues [60, 64, 72].

Intermediate outcomes: data are analyzed, synthesized, interpreted, and reviewed

A total of 23 articles reported evidence related to data analysis, synthesis, interpretation, and review. Among the immunization literature, 14 articles reported an improvement in outcomes related to data analysis and interpretation, which we considered necessary steps for transforming data into useful information for decision-making. Moderate-strength evidence suggested that health workers using digital information systems had increased ability to synthesize and interpret routine data, such as identifying defaulters, areas of low coverage, and vaccine stock levels [23, 25, 26, 47]. This outcome was more commonly reported at district and provincial than facility levels. One nonexperimental mixed-methods study found no evidence of improvements in data analysis and interpretation by health facility workers, which was attributed to the absence of feedback and support mechanisms [24]. Instead, interventions that supported health workers in making sense of their data, such as data review meetings, monitoring charts and dashboards, and training, were most likely to report improvements in data analysis and interpretation [48, 49, 55, 62, 94]. There was moderate-strength evidence that monitoring charts and data dashboards, both paper and electronic, increased tracking of immunization coverage [48, 54, 55]. These tools helped health workers synthesize disparate pieces of data, thus improving their ability to detect and react to problems. They were most effective when integrated within established data review and decision-making processes, such as monthly review meetings, and reinforced by supportive supervision and other forms of feedback. The content of data review meetings and how they were structured also influenced their success. 
For example, building on recommendations and discussion from previous meetings reinforced learning [73], while emphasizing data completeness and accuracy over immunization program target achievement may have improved attitudes about data use [78, 122]. More effective were data review interventions that incorporated quality improvement approaches, such as Rapid Appraisal of Program Implementation in Districts and Plan-Do-Study-Act cycles, because they provided a structured approach to problem-solving [73]. Low-strength evidence suggests that peer learning networks increased collaborative data review and problem-solving by health workers [78, 79, 123]. These interventions leveraged social network platforms like WhatsApp and other forums to bring together health workers across health system levels, departments, and functions, which helped motivate health workers and build their analytic skills.

Data use actions: data use in communities and health facilities

A total of 21 articles reported evidence related to data use at the community and health facility levels. Among the immunization literature, 11 articles reported an improvement in data use by frontline health workers and two articles found no evidence of improvement. At the facility level, data use interventions placed more emphasis on improving data collection practices and data quality. There was low-strength evidence that health facilities used data from digital information systems to make decisions and take action. Two nonexperimental mixed-methods studies of EIR interventions found an increase in facility health workers who self-reported using data to guide their actions [25, 27], but other studies of EIR and HMIS interventions did not detect a change [26, 29, 43, 44]. Challenges such as data entry burdens, poor infrastructure, and lack of feedback from the district and higher levels contributed to inconsistent use.

There was low- to moderate-strength evidence from other interventions. Three articles found that health facilities used monitoring charts and data dashboards to review whether they were meeting targets, respond to low vaccine coverage, and follow up on defaulters [49, 54, 55]. Data quality assessments were the most common intervention type to result in data use by health facilities, but this use centered on resolving data quality issues rather than improving service delivery [60,61,62, 66,67,68]. Supportive supervision interventions targeting the facility level, such as the Data Improvement Team intervention in Uganda, showed mixed evidence of effectiveness. Results from routine project monitoring found an increase in the proportion of health facilities with documented evidence that routine immunization data were used for decision-making, but a rapid organizational-level survey found that none of the health facilities sampled had implemented Data Improvement Team recommendations related to data use [86, 92]. Reasons for inaction included insufficient availability of required materials, inadequate human resources capacity (e.g., new and untrained staff, and low motivation), and a poor management structure that lacked clarity around roles and responsibilities related to data analysis and use. Training in various forms and intensities was a secondary component in at least 17 interventions reviewed. There was low-strength, mostly anecdotal, evidence that one-off training interventions contributed to data use [95, 124]. However, when training was implemented as part of a multicomponent intervention, or reinforced by supportive supervision, these interventions had moderate-strength evidence of improving data use [96,97,98, 108].

Data use actions: data use at the district level

A total of 17 articles reported evidence related to data use at the district level. Among the immunization literature, ten articles measured an improvement in data use by health districts and two articles found no change. LMIS interventions were the most common intervention type to find evidence of increased data use by health districts. Reported data use outcomes were related to supply chain management and included data use in vaccine forecasting and delivery, response to stockouts and cold chain equipment breakdowns, and decisions involving monitoring and supervising health facilities [23, 36, 100, 109]. LMIS interventions that leveraged additional data use strategies were most effective. In Mozambique, for example, the success of the Dedicated Logistics System was influenced by recruiting logisticians responsible for data collection and entry, thus relieving facility health workers from the task; incorporating built-in data visualization to support analysis; and coordinating monthly data review meetings to identify bottlenecks and solutions to improve performance [125].

Similar to LMIS, EIR interventions assume that making data more available and accessible to users will lead to improvements in data use. Although EIRs were the most common data use intervention in our review, only four studies and evaluations measured data use outcomes. Project data from EIR interventions in Tanzania and Zambia found an increase at project midline in the proportion of district-level health workers who self-reported taking action in response to their data [25, 27], although an external evaluation of the same intervention in Tanzania found no significant change in data use between baseline and midline [26]. The evaluators noted that it may have been too early to measure significant changes because of multiple implementation delays. An evaluation of the SmartCare electronic medical record intervention in Zambia found no effect on data use, since most facilities were not entering immunization data into the system; in addition to challenges with the acceptability and feasibility of the system, health workers could not identify ways to use data for action [29]. Other studies of EIR interventions did not measure data use outcomes but found improvements in vaccine coverage that could have been associated with data use if health workers used EIR data to follow up on defaulters and target under-immunized children [20, 21].

Decision support system interventions, such as monitoring charts and data dashboards, helped district health workers organize and analyze data and then use the information to strengthen facility performance and data quality. Project data from the implementation of an immunization data dashboard in Nigeria’s DHIS2 platform found that at state and local government area levels, health workers used the dashboard to track facility performance, monitor immunization coverage trends, and target facilities for training or supportive supervision [54]. Effectiveness was enhanced by deploying DHIS2 implementation officers to provide hands-on learning and support to state and local government area immunization teams, and incorporating data use within existing processes, such as monthly review meetings. In this and other studies, decision support systems had moderate-strength evidence of effectiveness [73, 74, 92]. Conversely, computerized decision support systems (CDSS) had low-strength evidence of effectiveness. Such systems employ algorithm-based software to help data users interpret and transform data into usable information for decision-making. A mixed-methods evaluation of a CDSS intervention in Papua New Guinea found that district health workers in low-performing regions were more likely to use the knowledge-based system to give feedback to health facilities, which increased the immunization rate, but health workers from higher-performing districts did not perceive any utility in reviewing data [47]. The literature on CDSS from other health sectors and settings did not show an effect on data use or clinical outcomes [50, 51].

Data use actions: data use at the national level

A total of three articles, including two from the immunization literature and one from the other health sector literature, reported evidence related to data use at the national level. Many data use interventions for national-level stakeholders did not meet our inclusion criteria because they focused on decision-making informed by research evidence and survey data instead of routine data. The literature we found provided low-strength, often anecdotal, evidence of data use. For example, in Ghana and Kenya, anecdotal evidence suggested that immunization information system assessments led to concrete follow-up actions, such as improving the managerial and supervisory skills of subdistrict staff in Ghana and incorporating data quality into coursework and continuing education curricula for health professionals [65]. In peer learning networks such as the BID Initiative Learning Network, Expanded Programme on Immunization managers and other national-level participants self-reported becoming more data oriented in their work and making decisions based on data [27]. The Data for Decision making project, which included interdisciplinary in-service training for mid-level policymakers, program managers, and technical advisors, found anecdotal evidence of data use for strengthening surveillance systems, and for advocating for, developing, and implementing national policies [95].

Discussion

We found that the state of the evidence around what works to improve immunization data use is still nascent. Although much of the published literature provides insights into the barriers related to data use [4,5,6,7,8], few data use interventions have been rigorously studied or evaluated. We found more evidence of interventions impacting the intermediate outcomes in our TOC, such as data quality, availability, analysis, synthesis, interpretation, and review, but less evidence on what works to support data-informed decision-making. This could be explained by the lack of consensus around how to define and measure data use. Although promising strategies have not yet proven effective, we included them because they provide insight into intervention designs that have potential for future success.

By applying a realist review methodology, we developed stronger theories about what works to improve the use of data based on the evidence and promising strategies currently available. We concluded that multicomponent interventions with mutually reinforcing strategies to address barriers at various stages of data use were most effective. Furthermore, interventions were more likely to succeed and be sustained over the long term if they institutionalized data use through dedicated staff positions for data management, routine data review meetings, national training curricula, and guidelines on data use for frontline staff. We found potential for digital systems but note that barriers still exist. While the transition from paper to digital, along with adoption of digital information systems, has made higher-quality data more available to decision-makers in real time, it has not automatically translated into greater data use. Digital systems have been more successful at the district level and above, where operational challenges are fewer than at the facility level. It is also necessary to pair digital systems with activities that reinforce data use.

On the topic of data quality, the results of this review confirm that poor data quality is an important barrier to data use and that data quality is a necessary precursor to it, but we found limited evidence that interventions focused singularly on data quality improved data use. This is because health workers may lack the necessary skills to analyze and translate data into information that is useful for making decisions on program implementation. There is more compelling evidence to suggest that data use interventions can lead to improvements in data quality. We found that as health workers began using their data, they were able to identify inconsistencies and take corrective action. Data use also generated demand for higher-quality data, and as quality improved, users trusted the data more, which in turn reinforced its use.

Our primary focus was on the use of immunization data in LMICs, but we included evidence from other health sectors and relevant publications from high-income countries that further corroborated and deepened our findings. We found considerable evidence on improving the quality and use of HIV data, owing in large part to the strategic focus and investments in data use by PEPFAR. Our conclusions agree with other literature on the topic. For example, the finding that multicomponent interventions are likely more effective than single-component interventions is supported by other health systems research [6, 108].

We noted particular gaps in the evidence on what works to improve data use at the facility level. Our findings suggest that interventions at this level have focused more on improving data collection practices and data quality, and less on data use. More emphasis on building data use skills during frontline health worker pre- and in-service training and continuing education (e.g., statistics, data interpretation, and data management) and cultivating a culture of data use may have a greater effect on strengthening data quality and use, but this should be tested in future research. In addition, the operational barriers and administrative challenges faced by digital information system interventions point to the need for a phased approach, ensuring that data use infrastructure, human resources capacity, and skills-building are in place before a full digital transition.

We posit that the lack of consensus around how to define and measure data use may in part explain the dearth of rigorously evaluated data use interventions in the published and grey literature. There is therefore a critical need to develop better measures for assessing data use in decision-making to better understand the effectiveness of these interventions. Evaluation designs must also account for complex interventions, which encompass most of the interventions reported here. We do not necessarily recommend investment only in experimental design studies to establish effectiveness; rather, we found that the most useful and richest evidence came from mixed-methods studies and evaluations that described why and how the intervention worked, for whom, and where it worked.

Strengths and limitations

A key strength of this review was its inclusiveness and methodological flexibility, afforded by the realist review approach. Realist review methodologies are increasingly used for synthesizing evidence on complex interventions because of their suitability for examining not only whether an intervention works, but also how the intervention works and under what conditions. The realist approach made it possible to include various types of information and evidence, such as experimental and nonexperimental study designs, grey literature, project evaluations, and reports. Although much of the evidence was from grey literature and therefore of lesser quality, it provided important evidence and learnings that otherwise would be overlooked. In addition to being the first systematic synthesis of evidence on data use interventions in LMICs, our review adds to the growing body of realist review literature by demonstrating realist methodology applied to the review and synthesis of evidence in public health.

Most data use interventions were composed of multiple strategies, and although we attempted to segment the findings according to the primary intervention type, it was not possible to fully disentangle the effects of individual strategies and activities. For this reason, we cannot recommend which interventions or packages of interventions are most effective, but we can provide stronger theories about what may work and why. Another limitation was our reliance on what was reported in the literature that provided the basis for our findings. Not all the literature adequately described how the intervention functioned or identified the contextual factors within which the intervention was implemented that may have contributed to its success or failure. Because we did not have the opportunity to interview the stakeholders responsible for implementing the interventions, we may have missed important contextual considerations.

Finally, the focus on routine immunization data alone was helpful in constraining the review timeline and process but risks further siloing of immunization programs. We eventually expanded the review to include literature from other health sectors; however, these efforts were not as comprehensive and likely failed to capture all the available evidence on the topic. By including systematic reviews during the second round of data collection, we were able to capture evidence from data use interventions that have already been synthesized for other health sectors. The results of this review, along with other future reviews of data use more broadly, should be considered together to inform strategic and cross-programmatic investments in interventions to improve data use.

Conclusions

This review helps fill a critical gap in what is known about the state of the evidence on interventions to improve routine health data. While our findings are presented primarily through the lens of using data to make decisions in immunization programs, they remain relevant for other health sectors. The evidence on effective interventions and promising strategies detailed in this review will help program implementers, policymakers, and funders choose approaches with the highest potential for improving vaccine coverage and equity. We anticipate that these findings will also be of interest to researchers and evaluators to prioritize gaps in the existing knowledge.

Availability of data and materials

The article names of published and grey literature that support the findings of this study are available in the interactive evidence gap map (linked here: https://www.technet-21.org/en/topics/idea#evidence-gap-map). Complete citations for all referenced literature are included in the reference list.

Abbreviations

- AIDS: Acquired Immune Deficiency Syndrome
- BID Initiative: Better Immunization Data Initiative
- CABI: Centre for Agriculture and Biosciences International
- CDSS: Computerized decision support system(s)
- DHIS2: District Health Information Software 2
- EIR: Electronic immunization registry
- Gavi: Gavi, the Vaccine Alliance
- HIV: Human immunodeficiency virus
- HMIS: Health management information system(s)
- LMICs: Low- and middle-income countries
- LMIS: Logistics management information system(s)
- mHealth: Mobile health
- PAHO: Pan American Health Organization
- PEPFAR: US President’s Emergency Plan for AIDS Relief
- POPLINE: Population Information Online
- TOC: Theory of change
- WHO: World Health Organization

References

Karuri J, Waiganjo P, Orwa D, Manya A. DHIS2: The tool to improve health data demand and use in Kenya. Journal of Health Informatics in Developing Countries. 2014 Mar 18;8(1). Available from: http://www.jhidc.org/index.php/jhidc/article/view/113

Nutley T, Gnassou L, Traore M, Bosso AE, Mullen S. Moving data off the shelf and into action: an intervention to improve data-informed decision making in Côte d’Ivoire. Glob Health Action. 2014 Oct 1;7. Available from: https://www.ncbi.nlm.nih.gov/pmc/articles/PMC4185136/

World Health Organization. SAGE Working Group on Quality and Use of Global Immunization and Surveillance Data. 2018. Available from: https://www.who.int/immunization/policy/sage/sage_wg_quality_use_global_imm_data/en/

Qazi MS, Ali M. Health Management Information System utilization in Pakistan: Challenges, pitfalls and the way forward. BioScience Trends. 2011;5(6):245–54.

D’Adamo M, Fabic MS, Ohkubo S. Meeting the health information needs of health workers: what have we learned? Journal of Health Communication. 2012 Jun;17(sup2):23–9.

MEASURE Evaluation. Barriers to Use of Health Data in Low- and Middle-Income Countries: A Review of the Literature. Chapel Hill, NC: MEASURE Evaluation; 2018 May. Available from: https://www.measureevaluation.org/resources/publications/wp-18-211

Kumar M, Gotz D, Nutley T, Smith JB. Research gaps in routine health information system design barriers to data quality and use in low- and middle-income countries: a literature review. Int J Health Plann Manage. 2018 Jan;33(1):e1–9.

Lippeveld T. Routine Health Facility and Community Information Systems: Creating an Information Use Culture. Glob Health Sci Pract. 2017 Sep 27;5(3):338–40.

Pawson R, Greenhalgh T, Harvey G, Walshe K. Realist review–a new method of systematic review designed for complex policy interventions. J Health Serv Res Policy. 2005 Jul;10 Suppl 1:21–34.

Rycroft-Malone J, McCormack B, Hutchinson AM, DeCorby K, Bucknall TK, Kent B, et al. Realist synthesis: illustrating the method for implementation research. Implementation Science. 2012 Apr 19;7(1):33.

Nutley T, Reynolds HW. Improving the use of health data for health system strengthening. Glob Health Action. 2013 Feb 13;6. Available from: https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3573178/

Aqil A, Lippeveld T, Hozumi D. PRISM framework: a paradigm shift for designing, strengthening and evaluating routine health information systems. Health Policy Plan. 2009 May;24(3):217–28.

Langer L, Tripney J, Gough D, University of London, Social Science Research Unit, Evidence for Policy and Practice Information and Co-ordinating Centre. The science of using science: researching the use of research evidence in decision making. 2016.

Michie S, van Stralen MM, West R. The behaviour change wheel: A new method for characterising and designing behaviour change interventions. Implementation Science. 2011 Apr 23;6:42.

World Health Organization. Global Framework to Strengthen Immunization and Surveillance Data for Decision making. Geneva, Switzerland: WHO; 2018 Jan.

Zuske M, Jarrett C, Auer C, Bosch-Capblanch X, Oliver S. Health Information System Use: Framework Synthesis (draft). Swiss Tropical and Public Health Institute. 2017.

World Health Organization. Data quality review: a toolkit for facility data quality assessment. Geneva, Switzerland: WHO; 2017.

World Health Organization. Monitoring the Building Blocks of Health Systems: A Handbook of Indicators and their Measurement Strategies. World Health Organization; 2010. Available from: https://www.who.int/healthinfo/systems/WHO_MBHSS_2010_full_web.pdf

Pace R, Pluye P, Bartlett G, Macaulay AC, Salsberg J, Jagosh J, et al. Testing the reliability and efficiency of the pilot Mixed Methods Appraisal Tool (MMAT) for systematic mixed studies review. Int J Nurs Stud. 2012 Jan;49(1):47–53.

Nguyen NT, Vu HM, Dao SD, Tran HT, Nguyen TXC. Digital immunization registry: evidence for the impact of mHealth on enhancing the immunization system and improving immunization coverage for children under one year old in Vietnam. Mhealth. 2017 Jul 19;3. Available from: https://www.ncbi.nlm.nih.gov/pmc/articles/PMC5547172/

Groom H, Hopkins DP, Pabst LJ, Murphy Morgan J, Patel M, Calonge N, et al. Immunization information systems to increase vaccination rates: A community guide systematic review. Journal of Public Health Management and Practice. 2015;21(3):227–48.

Keny A, Biondich P, Grannis S, Were MC. Adequacy and quality of immunization data in a comprehensive electronic health record system. Journal of Health Informatics in Africa. 2013 Sep 20;1(1). Available from: https://www.jhia-online.org/index.php/jhia/article/view/40

Dell N, Breit N, Chaluco T, Crawford J, Borriello G. Digitizing paper forms with mobile imaging technologies. In ACM Press; 2012.

Trumbo S, Contreras M, Garcia A, Diaz F, Gomez M, Carrion V, et al. Improving immunization data quality in Peru and Mexico: Two case studies highlighting challenges and lessons learned. Vaccine. 2018 Nov 29;36(50):7674–81.

Kindoli R. BID Initiative Midline Report, Immunization Data Quality and Use in Arusha Region, Tanzania. PATH; 2017 Sep.

Macdonald M. Evaluation of the Better Immunization Data Initiative- Mid-term report: Tanzania. 2018 Feb.

Zulu C. BID Initiative Midline Report, Zambia. PATH; 2018 Apr.

World Health Organization, PATH. Optimize: Guatemala Report. Seattle, WA: PATH; 2013. Available from: http://www.path.org/publications/files/TS_opt_guatemala_rpt.pdf

Centers for Disease Control and Prevention (CDC). Evaluation of the quality of data in and acceptability of immunisation data collected by electronic medical records, in comparison paper-based records.

Chowdhury M, Sarker A, Sahlen KG. Evaluating the data quality, efficiency and operational cost of MyChild Solution in Uganda. 2018 Jul. Available from: https://shifo.org/doc/rmnch/MyChildExternalEvaluationUganda2018.pdf

Chowdhury M, Sarker A, Sahlen KG. Questioning the MyChild Solution in Afghanistan. 2018 Jul. Available from: https://shifo.org/doc/rmnch/MyChildExternalEvaluationAfghanistan2018.pdf

Sowe A, Putilala O, Sahlen KG. Assessment of MyChild Solution in The Gambia: Data quality, administrative time efficiency, operation costs, and users’ experiences and perceptions. 2018 Jul. Available from: https://shifo.org/doc/rmnch/MyChildExternalEvaluationReportGambia2018.pdf

Westley E, Greene S, Tarr G, Hawes S. Uruguay’s National Immunization Program Register. University of Washington Global Health START Program Report. 2014 May.

Gilbert SS, Thakare N, Ramanujapuram A, Akkihal A. Assessing stability and performance of a digitally enabled supply chain: Retrospective of a pilot in Uttar Pradesh, India. Vaccine. 2017 Apr;35(17):2203–8.

Nshunju R, Ezekiel M, Njau P, Ulomi I. Assessing the Effectiveness of a Web-Based vaccine Information Management System on Immunization-Related Data Functions: An Implementation Research Study in Tanzania. USAID, Maternal and Child Survival Program; 2018 Jul.

Haidar N, Metiboba L, Katuka A, Adamu F. eHealth Africa: LoMIS Stock impact Evaluation report July 2014 - July 2017.

World Health Organization, PATH. Optimize: Albania Report. Seattle, WA: PATH; 2013. Available from: https://path.azureedge.net/media/documents/TS_opt_albania_rpt.pdf

VillageReach. Data for management: It’s not just another report. 2015. Available from: http://www.villagereach.org/wp-content/uploads/2015/04/VillageReach_Data-for-Management_Final-20-PPSeries.pdf

Aman M, Bernstein R, Habib H, Khalid M, Rao A, Soomro AA. Deliver Logistics Management Information System: Final Evaluation Report. US Agency for International Development; 2016 Dec. Available from: https://pdf.usaid.gov/pdf_docs/PA00MK1K.pdf

World Health Organization, PATH. Optimize: Tunisia Report. Seattle, WA: PATH; 2013. Available from: https://path.azureedge.net/media/documents/TS_opt_tunisia_rpt.pdf

World Health Organization, PATH. Optimize: Vietnam Report. Seattle, WA: PATH; 2013. Available from: https://path.azureedge.net/media/documents/TS_opt_vietnam_rpt.pdf

Dehnavieh R, Haghdoost A, Khosravi A, Hoseinabadi F, Rahimi H, Poursheikhali A, et al. The District Health Information System (DHIS2): A literature review and meta-synthesis of its strengths and operational challenges based on the experiences of 11 countries. Health Inf Manag. 2018 Jan 1;1833358318777713.

Garrib A, Stoops N, McKenzie A, Dlamini L, Govender T, Rohde J, et al. An evaluation of the District Health Information System in rural South Africa. S Afr Med J. 2008 Jul;98(7):549–52.

Kihuba E, Gathara D, Mwinga S, Mulaku M, Kosgei R, Mogoa W, et al. Assessing the ability of health information systems in hospitals to support evidence-informed decisions in Kenya. Glob Health Action. 2014;7:24859.

Wickremasinghe D, Hashmi IE, Schellenberg J, Avan BI. District decision-making for health in low-income settings: a systematic literature review. Health Policy Plan. 2016 Sep;31 Suppl 2:ii12-24.

Mutemwa RI. HMIS and decision-making in Zambia: re-thinking information solutions for district health management in decentralized health systems. Health Policy Plan. 2006 Jan;21(1):40–52.

Cibulskis RE, Posonai E, Karel SG. Initial experience of using a knowledge based system for monitoring immunization services in Papua New Guinea. J Trop Med Hyg. 1995 Apr;98(2):107–13.

Weeks RM, Svetlana F, Noorgoul S, Valentina G. Improving the monitoring of immunization services in Kyrgyzstan. Health Policy Plan. 2000 Sep;15(3):279–86.

Jain M, Taneja G, Amin R, Steinglass R, Favin M. Engaging Communities With a Simple Tool to Help Increase Immunization Coverage. Global Health: Science and Practice. 2015 Mar 1;3(1):117–25.

Moja L, Kwag KH, Lytras T, Bertizzolo L, Brandt L, Pecoraro V, et al. Effectiveness of computerized decision support systems linked to electronic health records: a systematic review and meta-analysis. Am J Public Health. 2014 Dec;104(12):e12-22.

Vedanthan R, Blank E, Tuikong N, Kamano J, Misoi L, Tulienge D, et al. Usability and feasibility of a tablet-based Decision-Support and Integrated Record-keeping (DESIRE) tool in the nurse management of hypertension in rural western Kenya. Int J Med Inform. 2015 Mar;84(3):207–19.

Poy A, van den Ent MMVX, Sosler S, Hinman A, Brown S, Sodha S, et al. Monitoring Results in Routine Immunization: Development of Routine Immunization Dashboard in Selected African Countries in the Context of Polio Eradication Endgame Strategic Plan. J Infect Dis. 2017;216(suppl_1):S226–36.

Nutley T, McNabb S, Salentine S. Impact of a decision-support tool on decision making at the district level in Kenya. Health Res Policy Sys. 2013;11(34).

Centers for Disease Control and Prevention (CDC). Implementation of the District Health Information System Routine Immunization Module in Nigeria. 2016 Apr.

World Health Organization, UNICEF, Centers for Disease Control and Prevention, USAID. In-depth Evaluation of Reaching Every District Approach. 2007. Available from: http://www.who.int/immunization/sage/1_AFRO_1_RED_Evaluation_Report_2007_Final.pdf

Pan American Health Organization (PAHO). Vaxeen: a Digital and Intelligent Immunization Assistant. PAHO Immunization Newsletter. 2015 Mar; Available from: https://www.paho.org/hq/dmdocuments/2016/SNE3701qtr.pdf

JSI Research & Training Institute, Inc. Home-based Record Redesigns That Worked: Lessons from Madagascar & Ethiopia. 2017. Available from: https://publications.jsi.com/JSIInternet/Inc/Common/_download_pub.cfm?id=18694&lid=3

VillageReach. Informed Design: How Modeling Can Provide Insights to Improve Vaccine Supply Chains. 2017. Available from: http://www.villagereach.org/wp-content/uploads/2017/10/ModelingOverview_Final.pdf

World Health Organization. WHO recommendations on home-based records for maternal, newborn and child health. 2018. Available from: https://apps.who.int/iris/bitstream/handle/10665/274277/9789241550352-eng.pdf

Wagenaar BH, Gimbel S, Hoek R, Pfeiffer J, Michel C, Manuel JL, et al. Effects of a health information system data quality intervention on concordance in Mozambique: time-series analyses from 2009–2012. Popul Health Metr. 2015 Mar 26;13. Available from: https://www.ncbi.nlm.nih.gov/pmc/articles/PMC4377037/

Muthee V, Bochner AF, Osterman A, Liku N, Akhwale W, Kwach J, et al. The impact of routine data quality assessments on electronic medical record data quality in Kenya. PLoS ONE. 2018;13(4):e0195362.

O’Hagan R, Marx MA, Finnegan KE, Naphini P, Ng’ambi K, Laija K, et al. National Assessment of Data Quality and Associated Systems-Level Factors in Malawi. Glob Health Sci Pract. 2017 27;5(3):367–81.

Bosch-Capblanch X, Ronveaux O, Doyle V, Remedios V, Bchir A. Accuracy and quality of immunization information systems in forty-one low income countries. Tropical Medicine & International Health. 2009 Jan 1;14(1):2–10.

Gimbel S, Mwanza M, Nisingizwe MP, Michel C, Hirschhorn L, AHI PHIT Partnership Collaborative. Improving data quality across 3 sub-Saharan African countries using the Consolidated Framework for Implementation Research (CFIR): results from the African Health Initiative. BMC Health Serv Res. 2017 21;17(Suppl 3):828.

Scott C, Clarke K, Grevendonk J, Dolan S, Ahmed HO, Kamau P, et al. Country Immunization Information System Assessments — Kenya, 2015 and Ghana, 2016. MMWR Morb Mortal Wkly Rep. 2017;66.

Ministere de la Sante et de L’hygiene Publique du Republique de Cote d’Ivoire. Rapport de mise en œuvre du DQS et du LQAS dans les 83 Districts sanitaires de la Côte d’Ivoire Septembre-Octobre 2017.

Pan American Health Organization (PAHO), World Health Organization (WHO), Ministerio de Salud Publica y Asistencial Social de El Salvador. Situación de Vacunas e Inmunizaciones en El Salvador. 2009.

Pan American Health Organization (PAHO). Evaluación Internacional del Programa Ampliado de Inmunizaciones de Paraguay 2000–2011. 2011.

Pan American Health Organization (PAHO). Grenada Immunization Information System Assessment. 2018.

Aqil A. PRISM Case Studies: Strengthening and Evaluating RHIS. 2018. Available from: https://www.measureevaluation.org/resources/publications/sr-08-43

Pan American Health Organization (PAHO), Ministerio De Salud, Panamá. Autoevaluación de la calidad de los datos de inmunización (DQS) Panamá: Informe Final. 2014.

Braa J, Heywood A, Sahay S. Improving quality and use of data through data-use workshops: Zanzibar, United Republic of Tanzania. Bull World Health Organ. 2012 May 1;90(5):379–84.

Shimp L, Mohammed N, Oot L, Mokaya E, Kiyemba T, Ssekitto G, et al. Immunization review meetings: Low Hanging Fruit for capacity building and data quality improvement? Pan African Medical Journal. 2017;27. Available from: http://www.panafrican-med-journal.com/content/series/27/3/21/full/

LaFond AK, Kanagat N, Sequeira JS, Steinglass R, Fields R, Mookherji S. Drivers of Routine Immunization System Performance at the District Level: Study Findings from Three Countries, Research Brief No. 3. Arlington, VA: JSI Research & Training Institute, Inc., ARISE Project for the Bill & Melinda Gates Foundation; 2012. p. 18. Available from: http://arise.jsi.com/wp-content/uploads/2012/08/Arise_3CountryBrief_final508_8.27.12.pdf

Robinson JS, Burkhalter BR, Rasmussen B, Sugiono R. Low-cost on-the-job peer training of nurses improved immunization coverage in Indonesia. Bull World Health Organ. 2001;79(2):150–8.

Shieshia M, Noel M, Andersson S, Felling B, Alva S, Agarwal S, et al. Strengthening community health supply chain performance through an integrated approach: Using mHealth technology and multilevel teams in Malawi. J Glob Health. 2014 Dec;4(2).

Chandani Y, Duffy M, Lamphere B, Noel M, Heaton A, Andersson S. Quality improvement practices to institutionalize supply chain best practices for iCCM: Evidence from Rwanda and Malawi. Res Social Adm Pharm. 2017 Nov;13(6):1095–1109.

Li M. How Social Network Platforms can Improve the Use of Data. MEASURE Evaluation; 2017 Nov.

JSI Research & Training Institute, Inc. IMPACT Team Networks-Empowering people with data. 2016.

Pan American Health Organization (PAHO). NOTI-PAI: An Innovative Feature of Bogotá’s Immunization Registry. PAHO Immunization Newsletter. 2012 Dec; Available from: https://www.paho.org/hq/dmdocuments/2013/SNE3406.pdf

JSI Research & Training Institute, Inc. Reaping the fruits of IMPACT Team work in Kirinyaga County. 2017.

PATH. BID Learning Network Results. 2018.

JSI Research & Training Institute, Inc., United Nations Population Fund. Myanmar Supply Chain Quality Improvement Teams Pilot Results. 2016. Available from: https://publications.jsi.com/JSIInternet/Inc/Common/_download_pub.cfm?id=16436&lid=3

JSI Research & Training Institute, Inc. Pakistan visibility and analytics network project: Empowering people with data.

PATH. How Mobile Electronic Devices are Connecting Health Workers to Improve Data Quality and Data Use for Better Health Decisions: Experience from BID Initiative in Tanzania.

Ward K, Mugenyi K, Benke A, Luzze H, Kyozira C, Immaculate A, et al. Enhancing Workforce Capacity to Improve Vaccination Data Quality, Uganda. Emerging Infectious Diseases. 2017 Dec;23(13). Available from: http://wwwnc.cdc.gov/eid/article/23/13/17-0627_article.htm

He P, Yuan Z, Liu Y, Li G, Lv H, Yu J, et al. An evaluation of a tailored intervention on village doctors use of electronic health records. BMC Health Serv Res. 2014 May;14:217.

Rowe AK, de Savigny D, Lanata CF, Victora CG. How can we achieve and maintain high-quality performance of health workers in low-resource settings? Lancet. 2005 Sep 17–23;366(9490):1026–35.

Bosch-Capblanch X, Garner P. Primary health care supervision in developing countries. Trop Med Int Health. 2008 Mar;13(3):369–83.

Vasan A, Mabey DC, Chaudhri S, Brown Epstein HA, Lawn SD. Support and performance improvement for primary health care workers in low- and middle-income countries: a scoping review of intervention design and methods. Health Policy Plan. 2017 Apr 1;32(3):437–452.

Beltrami J, Wang G, Usman HR, Lin LS. Quality of HIV Testing Data Before and After the Implementation of a National Data Quality Assessment and Feedback System. J Public Health Manag Pract. 2017 May/Jun;23(3):269–275.

Centers for Disease Control and Prevention (CDC). Uganda Immunization Data Improvement Teams (DIT): Improving the quality and use of immunization data. 2018 Jan.

Centers for Disease Control and Prevention (CDC). STOP Immunization and Surveillance DATA Specialist (ISDS) Strategy. 2018 Feb.

Courtenay-Quirk C, Spindler H, Leidich A, Bachanas P. Building Capacity for Data-Driven Decision Making in African HIV Testing Programs: Field Perspectives on Data Use Workshops. AIDS Educ Prev. 2016 Dec;28(6):472–84.

Pappaioanou M, Malison M, Wilkins K, Otto B, Goodman RA, Churchill RE, et al. Strengthening capacity in developing countries for evidence-based public health. Social Science & Medicine. 2003 Nov;57(10):1925–37.

Rolle IV, Zaidi I, Scharff J, Jones D, Firew A, Enquselassie F, et al. Leadership in strategic information (LSI) building skilled public health capacity in Ethiopia. BMC Res Notes. 2011 Aug 12;4:292.

Ledikwe JH, Reason LL, Burnett SM, Busang L, Bodika S, Lebelonyane R, et al. Establishing a health information workforce: innovation for low- and middle-income countries. Hum Resour Health. 2013 Jul 18;11:35.

Centers for Disease Control and Prevention (CDC). Data for Decision Making (DDM) Training Program Best Practices. 2018.

Centers for Disease Control and Prevention (CDC). Data for Decision Making (DDM) Training Program Best Practices.

Jandee K, Kaewkungwal J, Khamsiriwatchara A, Lawpoolsri S, Wongwit W, Wansatid P. Effectiveness of Using Mobile Phone Image Capture for Collecting Secondary Data: A Case Study on Immunization History Data Among Children in Remote Areas of Thailand. JMIR mHealth and uHealth. 2015 Jul 20;3(3):e75.

Ramanujapuram A, Akkihal A. Improving Performance of Rural Supply Chains Using Mobile Phones: Reducing Information Asymmetry to Improve Stock Availability in Low-resource Environments. In ACM Press; 2014. p. 11–20.

Negandhi P, Chauhan M, Das AM, Neogi SB, Sharma J, Sethy G. Mobile-based effective vaccine management tool: An m-health initiative implemented by UNICEF in Bihar. Indian J Public Health. 2016 Oct-Dec;60(4):334–335.

Campbell N, Schiffer E, Buxbaum A, McLean E, Perry C, Sullivan TM. Taking knowledge for health the extra mile: participatory evaluation of a mobile phone intervention for community health workers in Malawi. Glob Health Sci Pract. 2014 Feb 6;2(1):23–34.

Srinivasan P. UNDP device to be used in two Rajasthan blocks for real-time vaccine information. Hindustan Times. 2017 Nov 2. Available from: https://www.hindustantimes.com/jaipur/undp-device-to-be-used-in-two-rajasthan-blocks-for-real-time-vaccine-information/story-T7SxoGlvWavNfbGCGxH33O.html

GlaxoSmithKline. The Power of Partnerships. Transforming Vaccine Coverage in Mozambique. 2016 Apr.

Escalante M, Calderon M, Lu J, Ruiz J, Cabrejos J, Michel F. Strengthening Peruvian immunization records through mobile data collection using the ODK app. WHO Global Immunization Newsletter. 2016 Dec. Available from: https://www.who.int/immunization/GIN_December_2016.pdf

World Health Organization, PATH. Optimize: South Sudan Report. 2013. Available from: https://www.who.int/immunization/programmes_systems/supply_chain/optimize/south_sudan_optimize_report.pdf

Rowe AK, Rowe SY, Peters DH, Holloway KA, Chalker J, Ross-Degnan D. Effectiveness of strategies to improve health care provider practices in low- and middle-income countries: a systematic review. Lancet Glob Health. 2018 Oct;6:e1163-e1175.

Heidebrecht CL, Quach S, Pereira JA, Quan SD, Kolbe F, Finkelstein M, et al. Incorporating Scannable Forms into Immunization Data Collection Processes: A Mixed-Methods Study. Tang P, editor. PLoS ONE. 2012 Dec 18;7(12):e49627.

Sudhof L, Amoroso C, Barebwanuwe P, Munyaneza F, Karamaga A, Zambotti G, et al. Local use of geographic information systems to improve data utilisation and health services: mapping caesarean section coverage in rural Rwanda. Trop Med Int Health. 2013 Jan;18(1):18–26.

Chandani Y, Andersson S, Heaton A, Noel M, Shieshia M, Mwirotsi A, et al. Making products available among community health workers: Evidence for improving community health supply chains from Ethiopia, Malawi, and Rwanda. J Glob Health. 2014 Dec;4(2):020405.

JSI Research & Training Institute, Inc., PEPFAR, MEASURE Evaluation. Applying User-Centered Design to Data Use Challenges: What We Learned. 2017 May. Available from: https://www.measureevaluation.org/resources/publications/tr-17-161

Wheldon S. Back to Basics: Routine Immunization Tools Used for Analysis and Decision Making at the Toga Health Post. John Snow Research & Training Institute, Inc. (JSI); 2015. Available from: https://www.mpffs6apl64314hd71fbb11y-wpengine.netdna-ssl.com/wp-content/uploads/2016/04/UI-FHS-Case-Study_Tools-at-Toga-HP.pdf

Universal Immunization through Improved Family Health Services (UI-FHS). Reaching every district using quality improvement methods (RED-QI): a Guide for Immunization Program Managers. John Snow Research & Training Institute, Inc. (JSI); 2015. Available from: https://mpffs6apl64314hd71fbb11y-wpengine.netdna-ssl.com/wp-content/uploads/2015/05/UI-FHS_HowtoGuide.pdf

Shifo Foundation. MyChild Solution Components Brief.

Rowe AK. Health Care Provider Performance Review. May 2018. PowerPoint Presentation.

JSI Research & Training Institute, Inc. Building Routine Immunization Capacity, Knowledge and Skills (BRICKS) – Comprehensive Framework for Strengthening and Sustaining Immunization Program Competencies, Leadership and Management. 2016. Available from: https://www.jsi.com/JSIInternet/Inc/Common/_download_pub.cfm?id=17119&lid=3

Shifo Foundation. Leaving no child behind – closing equity gaps and strengthening outreach performance with MyChild Outreach. 2017.

Whelan F. Setting a new pace: How Punjab, Pakistan, achieved unprecedented improvements in public health outcomes. April 2018. Available from: https://cdn.buttercms.com/4TIb4qf2QxnGCHjqKuRr

Frøen JF, Myhre SL, Frost MJ, Chou D, Mehl G, Say L, et al. eRegistries: Electronic registries for maternal and child health. BMC Pregnancy and Childbirth. 2016 Jan 19;16:11.

Pan American Health Organization (PAHO). Immunization Registries in Latin America: Progress and Lessons Learned. PAHO Immunization Newsletter. 2012 Dec. Available from: https://www.paho.org/hq/dmdocuments/2013/SNE3406.pdf

Mavimbe JC, Braa J, Bjune G. Assessing immunization data quality from routine reports in Mozambique. BMC Public Health. 2005 Oct;5(1). Available from: https://doi.org/10.1186/1471-2458-5-108

BID. BID Initiative Midline Report: Immunization Data Quality and Use in Arusha Region, Tanzania. 2017.

JSI Research & Training Institute, Inc., Centers for Disease Control and Prevention. Data for Decision Making Project. 1994. Available from: ftp://ftp.cdc.gov/pub/publications/mmwr/other/ddmproj.pdf

VillageReach. Enhanced Visibility, Analytics and Improvement for the Mozambique Immunization Supply Chain. 2015 Apr. Available from: http://www.villagereach.org/wp-content/uploads/2016/07/Enhanced-Visibility-Analytics-and-Improvement-for-Mozambique.pdf

Acknowledgements

We would like to acknowledge the Immunization Data: Evidence for Action (IDEA) steering committee members who contributed their time and expertise to advising on the review design and methodology, and provided ongoing input throughout the review process: Alain Nyembo, Ana Morice, Carolina Danovaro, Daniella Figueroa-Downing, David Novillo, Francis Dien Mwansa, Jaleela Jawad, Jan Grevendonk, Marta Gacic-Dobo, and Peter Bloland. In addition, we would like to acknowledge the contributions of our colleagues at the Pan American Health Organization (Martha Velandia, Marcela Contreras, Robin Mowson, and Elsy Dumit-Bechara) for their collaboration in collecting and reviewing literature from the Latin America and Caribbean region and for providing valuable feedback and recommendations along the way. We would like to recognize the rest of the PATH IDEA team (Laurie Werner, Hallie Goertz, Tara Newton, Celina Kareiva, Nikki Gurley, Emma Stewart, Erin Frye-Sosne, and Emma Korpi) for contributing to this review in multiple stages of the process. Finally, we would like to thank Kaleb Brownlow and Kendall Krause of the Bill & Melinda Gates Foundation for providing their expertise and technical input throughout the review process and for supporting this work.

Funding

This work was funded by a grant from the Bill & Melinda Gates Foundation (OPP1180507). The views expressed in the paper are solely those of the authors and do not necessarily reflect the views of the Gates Foundation or of the authors' employers.

Author information

Contributions

AO contributed to the study design and performed the overall analysis, interpretation of data, and reporting of results. JS made substantial contributions to the study design, and reviewed and contributed to the interpretation and reporting of results. NS was a major contributor to the analysis, interpretation of data, and reporting of results for multiple data use interventions. All authors read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Osterman, A.L., Shearer, J.C. & Salisbury, N.A. A realist systematic review of evidence from low- and middle-income countries of interventions to improve immunization data use. BMC Health Serv Res 21, 672 (2021). https://doi.org/10.1186/s12913-021-06633-8

DOI: https://doi.org/10.1186/s12913-021-06633-8