Abstract

Background

Practice facilitation is a promising strategy to enhance care processes and outcomes in primary care settings. It requires that practices and their facilitators engage as teams to drive improvement. In this analysis, we explored the practice and facilitator factors associated with greater team engagement at the mid-point of a 12-month practice facilitation intervention focused on implementing cardiovascular prevention activities in practice. Understanding factors associated with greater engagement with facilitators in practice-based quality improvement can assist practice facilitation programs with planning and resource allocation.

Methods

One hundred thirty-six small to medium-sized ambulatory primary care practices that participated in the EvidenceNOW initiative’s NC Cooperative, named Heart Health Now (HHN), met the eligibility criteria for this analysis. We explored the practice and facilitator factors associated with greater team engagement at the mid-point of a 12-month intervention using a retrospective cohort design that included baseline survey data, monthly practice activity implementation data and information about facilitators’ experience. Generalized linear mixed-effects models (GLMMs) identified variables associated with greater odds of team engagement using an ordinal scale for level of team engagement.

Results

Among our practice cohort, over half were clinician-owned and 27% were Federally Qualified Health Centers. The mean number of clinicians was 4.9 (SD 4.2) and approximately 40% of practices were in Medically Underserved Areas (MUA). GLMMs identified a best fit model. The model, presented as odds ratios (OR), 95% confidence intervals, and [p values], suggests greater odds of higher team engagement with greater practice QI leadership, OR 17.31 (5.24–57.19), [0.00], and with practice location in a MUA, OR 7.25 (1.8–29.20), [0.005]. No facilitator characteristics were independently associated with greater engagement.

Conclusions

Our analysis provides information for practice facilitation stakeholders to weigh when considering which practices may be more amenable to embracing facilitation services.

Background

Cardiovascular disease (CVD) is the leading cause of death in the USA and is associated with diminished life quality, staggering healthcare costs, and years of life lost [1]. Yet, it is estimated that over 200,000 such deaths could be prevented annually by implementing efforts to reduce CVD risk factors. At the primary care practice level, efforts to recommend appropriate aspirin use, better manage blood pressure and high cholesterol, and encourage smoking cessation are key to reducing CVD, a strategy referred to as the “ABCS” of CVD prevention [2].

Primary CVD prevention is the focus of the EvidenceNOW initiative funded by the Agency for Healthcare Research and Quality (AHRQ), in which seven regional cooperatives, including Heart Health Now (HHN), the North Carolina cooperative, assisted small to medium-sized primary care practices in implementing quality improvement (QI) activities to enhance CVD prevention strategies.

HHN practices were offered on-site support via practice facilitators who joined with practice teams to adopt and implement CVD prevention using QI methods. Activities included abstracting and using ABCS-related clinical quality measures from electronic health records (EHR) to drive change, implementing evidence-based care protocols, and enhancing general QI knowledge and skills.

Practice facilitation is an especially promising approach to guide care redesign in primary care settings [3] and has been associated with improved outcomes for patients with a variety of health conditions, including enhanced diagnosis and treatment of asthma, reduced hospitalizations for asthma [4], improved testing behaviors [5], improved office workflows for caring for patients using opioids [6], and enhanced adherence to condition-specific [7, 8] and cancer screening guidelines [9]. In Rogers’ Diffusion of Innovations, practice facilitators are referred to as “change agents” [10]. They empower practices to become their own agents of change, which distinguishes facilitation from a traditional consulting model; practices thus improve while simultaneously building internal capacity for change [11]. Facilitators enable others to act, as opposed to telling or persuading them to do so [12], serve as cross-pollinators of ideas, and are key resource providers for the practices they serve [13, 14].

Although the evidence for the impact of practice facilitation on outcomes is building, it is a relatively new strategy and questions remain as to what underlies effective facilitation methods. For instance, investigators note challenges with establishing relationships between practice facilitators and practice staff teams, an issue mainly attributed to the continuous array of competing demands that commandeer practice time and resources [15]. For example, in a trial designed to test the impact of practice facilitation on improving diabetes care, disruptions such as staff turnover, moving to a new practice location, and installing a new EHR delayed the facilitation start time by nearly 2 years [3]. Others describe challenges facilitators face getting into practices, establishing trust, and finding practice leaders who prioritize QI work [16, 17]. Suboptimal engagement with practices during the study time period has been identified as a barrier to 1) fully implementing study activities, 2) a facilitator’s ability to learn about and work within a practice’s culture, and 3) a practice’s ability to leverage all that facilitation can offer [16,17,18]. These studies and others have led to questions regarding whether there are unmeasured baseline characteristics that are barriers to engaging with facilitators [18, 19].

In this analysis, we explore one piece of this puzzle using data from the HHN study, specifically whether there are practice characteristics and practice facilitator-level variables associated with greater levels of engagement between the facilitators and the practices they served. Understanding which factors may enhance or impair facilitator-practice team engagement may help practice facilitation organizations improve project planning and workforce deployment and reduce delays with project implementation.

Methods

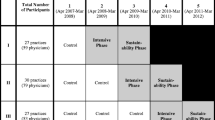

Study design and setting: HHN is a stepped wedge cluster randomized trial designed to evaluate the effect of practice facilitation services on CVD outcomes. Practice recruitment ran from May 2015 to December 2015. Five hundred thirty-eight practices in NC that offered primary care services, had 10 or fewer providers, and had an electronic health record were considered for participation in the larger HHN trial. Our focus was on independent practices, Rural Health Centers, and Federally Qualified Health Centers (FQHC), often located in Medically Underserved Areas (MUA) [20], that lack organizational support for workflow redesign and development of tailored quality reports derived from their EHRs. We allowed 21 practice sites owned by health systems to participate because of geographic separation and limited practice support from their parent organizations. Two hundred ninety-two practices ultimately enrolled, and 47 of these dropped out prior to the start of the practice facilitation intervention, leaving 245 practices that were offered facilitation services. To be eligible, practices needed to agree to form a QI team, meet with a practice facilitator monthly, and permit access to their EHR data so that the data could be abstracted and used to create dashboards and other resources regarding clinical quality performance on the appropriate use of aspirin, blood pressure control, cholesterol control and smoking cessation (the ABCS) measures. Practices were randomized to 7 different site activation months that established the randomized cohorts. Facilitators were expected to engage with practice staff members monthly for 12 consecutive months, but were available for additional phone, email, web conference, and onsite consultations if needed. Facilitators were trained to assign a monthly score to assess each practice’s progress using implementation measures described below. More details of the HHN study are in the study protocol manuscript [21].

The HHN practice facilitators, employed by the North Carolina Area Health Education Centers (NC AHEC) practice support program, base their work on a set of changes that, if implemented, could enhance CVD care processes and outcomes (i.e., key drivers of implementation). The extent of adoption of these drivers is captured using the Key Driver Implementation Scale (KDIS) developed by the NC AHEC practice support program, a tool rooted in the Chronic Care Model [22, 23] and used in NC AHEC projects and program analyses [24,25,26]. KDIS scales are ordinal scales, generally with 4 or 5 options, that capture when activities are adopted and how fully they are implemented into standard work [26, 27]. The key drivers are part of a larger framework that provides the logic as to how key activities performed within each driver domain can lead to improved outcomes. The framework is thus similar to a logic diagram or protocol, but was created specifically to support ambulatory care practices in their change efforts. The 4 key driver domains are: 1) optimal use of clinical information systems, 2) adoption of evidence-based care protocols, 3) regular use of and referral for patient self-management support and 4) optimization of the care team. The intervention activities that are tested and implemented in practices are purposefully adaptive to address the needs, skills and resources of each clinic. Additionally, the KDIS captures levels of practice Team Engagement (TE) and QI Leadership as defined below and detailed in Tables 1 and 2 respectively. TE indicates that practice facilitators are included as part of a practice team that collectively works to devise, implement, and evaluate small tests of change. The level of this engagement is captured using a 0–3 score.

We focused our analysis on the approximate mid-point of the 12-month intervention, when engagement in active implementation is expected. This choice was based upon the long tenure of NC AHEC’s practice support program with other statewide QI initiatives and an expectation that, if initial engagement was suboptimal, changes could be made to optimize our ability to retain practices and capture our main results data.

To guide our analysis, we developed a conceptual model based upon a white paper by Geonnotti et al. that details strategies for facilitators to enhance their engagement with practices in QI [15]. Our model expands upon this and posits that facilitator and practice-level characteristics are important to creating effective practice staff–practice facilitator project implementation teams. We chose data elements that may enhance or impair the ability of practices to find time and/or have the relevant motivation to engage with facilitators in the HHN trial. These include variables such as practice size, location in a medically underserved area (MUA) or not, payer mix, involvement in other quality initiatives, practice-level measures of burnout, readiness and adaptive reserve, leadership support for QI, and practice facilitators’ prior experiences and tenure with facilitation (Fig. 1).

Data sources

We collected practice- and facilitator-level data from 4 sources described below and in Table 2. We restricted our analysis to the 136 practices that met our inclusion criteria, defined as having 1) responded to both baseline surveys and 2) the requisite practice-level KDIS data (Fig. 2).

Baseline practice characteristics survey (PCS)

Completed by a lead practice provider or administrator, this survey captured demographics including number of providers, patient visit volume, payer mix, practice location, number of disruptive changes experienced in the year prior to HHN participation, and experience with other quality initiatives (Table 3).

Baseline practice member survey (PMS)

Practice managers distributed up to 5 surveys to individuals with different roles to obtain a variety of perspectives. These included questions about staff burnout, adaptive reserve and readiness to engage in the study. For our analysis, where more than one person provided responses, practice means were calculated.

Practice facilitator data

NC AHEC program leaders provided information about the facilitators such as: 1) years of facilitation experience, 2) existence of prior working relationships between a specific facilitator and a practice, 3) whether the practice facilitator originally assigned remained the practice’s facilitator for the duration of the intervention, and 4) prior working relationship between a practice and the NC AHEC practice support program.

Key driver implementation scale (KDIS)

Practice facilitators documented practice KDIS, TE and Leadership scores that reflect observations made during each month. The TE score included 4 options scored from 0 to 3 where a “0” indicated no engagement of the practice team with the facilitator while a “3” indicated that a practice team works with a facilitator in a regular and effective manner (Table 1). Practice Leadership (Table 2) and TE scores were calculated by averaging scores from months 4 to 6.

Outcome measure

We defined the TE outcome as “adequate” if the mean TE score was ≥2 at 6 months, calculated as the average over the 4- to 6-month interval where at least 2 scores were available. This threshold was chosen by our NC AHEC leadership and investigator team based upon extensive experience with QI project implementation in both ambulatory practices and health care settings, and because this score represents a practice’s behavior of having regular QI meetings vs. having irregularly scheduled or no QI meetings (see Table 1 definitions).
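The outcome rule described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `te_outcome`, its parameters, and the use of `None` for a missing monthly score are hypothetical and are not taken from the HHN analysis code.

```python
from statistics import mean
from typing import Optional, Sequence


def te_outcome(
    scores_m4_to_m6: Sequence[Optional[float]],
    threshold: float = 2.0,
    min_scores: int = 2,
) -> Optional[bool]:
    """Classify mid-point Team Engagement (TE) for one practice.

    scores_m4_to_m6 holds the monthly TE scores (0-3) for months 4, 5 and 6,
    with None marking a month without a score (hypothetical encoding).
    Returns True for "adequate" TE (mean >= threshold), False otherwise,
    and None when fewer than min_scores scores are available.
    """
    available = [s for s in scores_m4_to_m6 if s is not None]
    if len(available) < min_scores:
        return None  # outcome cannot be computed for this practice
    return mean(available) >= threshold


# Illustrative practices (made-up scores, not study data)
print(te_outcome([2, 3, None]))     # two scores available, mean 2.5
print(te_outcome([1, 2, 2]))        # mean below 2
print(te_outcome([3, None, None]))  # only one score available
```

Returning `None` rather than `False` for practices with too few scores keeps "not adequate" distinct from "not assessable", which mirrors the way such practices fall outside the analysis rather than counting against engagement.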

Analyses

Descriptive statistics summarizing practice characteristics are in Table 3. We estimated the intracluster correlation coefficient (ICC) to measure the clustering effect by comparing the relative levels of between- and within-facilitator variability [34]. The estimated ICC was 0.345, i.e., 34.5% of the total variability in TE scores is attributable to differences among facilitators. To adjust for the effect of clustering by practice facilitator, we used a generalized linear mixed-effects model (GLMM) with a random intercept for each practice facilitator. We identified variables associated with TE scores of ≥2 vs. <2 (Table 4) by fitting all possible models with predictors one at a time. Predictors with a p value equal to or less than 0.1 were defined as significant and were included in candidate models. The final model was the one with the best fit, i.e., the lowest Akaike information criterion (AIC) value [35, 36]. The adjusted odds ratios and standard errors of the models are reported in Table 4.
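For readers less familiar with these quantities, the sketch below shows the standard definitions of the ICC (between-cluster variance as a share of total variance) and the AIC (2k minus twice the log-likelihood), and how a lowest-AIC model would be picked. It is a minimal illustration, not the authors' code: the function names are hypothetical, and all numeric inputs are made up, with the variance components chosen only so that the ICC matches the reported 0.345.

```python
from typing import Dict, Tuple


def icc(between_var: float, within_var: float) -> float:
    """Intracluster correlation: share of total variance between clusters."""
    total = between_var + within_var
    if total <= 0:
        raise ValueError("total variance must be positive")
    return between_var / total


def aic(log_likelihood: float, n_params: int) -> float:
    """Akaike information criterion: 2k - 2 ln(L); lower is better."""
    return 2 * n_params - 2 * log_likelihood


def best_by_aic(candidates: Dict[str, Tuple[float, int]]) -> str:
    """Return the name of the candidate model with the lowest AIC.

    candidates maps a model name to (log-likelihood, number of parameters).
    """
    return min(candidates, key=lambda name: aic(*candidates[name]))


# Hypothetical variance components that reproduce an ICC of 0.345
print(round(icc(between_var=0.345, within_var=0.655), 3))

# Hypothetical candidate models: (log-likelihood, parameter count)
models = {
    "leadership + MUA": (-70.0, 3),
    "leadership + MUA + ownership": (-69.5, 5),
}
print(best_by_aic(models))
```

In the hypothetical comparison, the larger model improves the log-likelihood only slightly, so its two extra parameters cost more than they gain and the smaller model has the lower AIC.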

Results

Among 245 HHN practices, 136 met inclusion criteria. Over half were clinician owned and 27% were federally qualified health centers (FQHC) or FQHC look-alikes (Table 3). Approximately 40% of practices were located in a medically underserved area (MUA). Nearly 28% of practices had previously worked with the NC AHEC practice support program and 75% of practices had the same facilitator for the duration of the intervention. The overall mean TE score was 1.6 (SE 0.4) and the median was 2. Among the practices that met inclusion criteria, 103/136 (76%) had contact with their facilitators during all 6 of the 6 months included in this analysis. Among those deemed ineligible that had practice-level data, only 17% had this same level of contact.

In GLMMs with a single predictor, higher leadership scores, practicing in a MUA location, and having higher percentages of uninsured patients were associated with greater odds of achieving a TE score of ≥2 at the intervention mid-point (see Table 4). Conversely, having higher percentages of patients with dual Medicaid/Medicare insurance was associated with lower odds. When comparing independently owned practices to Federally Qualified Health Centers/health departments and separately to practices that are hospital owned or part of larger health care systems, independently owned practices were less likely to achieve a TE score of 2 or greater. Practice levels of burnout, adaptive reserve, and readiness were not associated with levels of TE.

The final GLMM was selected based on the smallest AIC value. The following factors were associated with greater odds of achieving a TE score of ≥2: 1) greater practice leadership and 2) practice location in a MUA. No practice facilitator characteristics were significantly associated with the outcome. None of the models that included payer mix demonstrated statistical significance.
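As a reading aid, the odds ratios and 95% confidence intervals reported in the abstract for this model can be translated back to the log-odds scale. The sketch below assumes Wald-type intervals with a critical value of 1.96, which the text does not state explicitly; the function name `log_scale_summary` is hypothetical.

```python
import math
from typing import Tuple


def log_scale_summary(
    odds_ratio: float, ci_low: float, ci_high: float, z: float = 1.96
) -> Tuple[float, float, float]:
    """Translate a reported OR and CI to the log-odds scale.

    Returns (beta, se, midpoint_or), where beta = ln(OR), se is the standard
    error implied by a Wald interval exp(beta +/- z * se) (an assumption),
    and midpoint_or is the geometric midpoint of the interval, which should
    equal the OR if the interval is symmetric on the log scale.
    """
    beta = math.log(odds_ratio)
    se = (math.log(ci_high) - math.log(ci_low)) / (2 * z)
    midpoint_or = math.sqrt(ci_low * ci_high)
    return beta, se, midpoint_or


# Values as reported in the abstract
for label, (or_, lo, hi) in {
    "QI leadership": (17.31, 5.24, 57.19),
    "MUA location": (7.25, 1.8, 29.20),
}.items():
    beta, se, mid = log_scale_summary(or_, lo, hi)
    print(f"{label}: beta={beta:.2f}, implied SE={se:.2f}, midpoint OR={mid:.2f}")
```

For both predictors the geometric midpoint of the interval agrees with the reported odds ratio to within rounding, which is consistent with intervals built symmetrically around the coefficient on the log-odds scale. The wide intervals correspond to fairly large implied standard errors, so the point estimates should be read with that imprecision in mind.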

Discussion

Our evaluation shares insights into specific practice characteristics associated with greater odds of engagement of practice facilitators with their practice QI teams at the midpoint of the 12-month HHN CVD prevention trial. We are not aware of other studies that have quantitatively evaluated levels of engagement of facilitators with their teams in this manner.

Our data suggest that greater engagement with facilitators was associated with 1) practices with leaders who support QI implementation and 2) practices located in MUAs, with the former having the greater relative impact.

Based upon our experience with other NC based practice support projects, we were not surprised that practices located in more remote areas, thus likely with fewer internal resources for implementing practice changes, may be more open and welcoming to facilitation services. We are not aware of any literature that has analyzed similar associations.

We were also not surprised to see the strong association of practice leadership with TE. Within the practice transformation literature, more effective leadership has been associated with greater engagement of practice teams in change activities [37]. In an editorial, Bohmer outlines key physician leadership activities critical to organizational change, including leadership responsibilities with 1) defining care goals, 2) ensuring that “clinical microsystems” can execute such goals, 3) engaging in data-driven QI methods, and 4) modeling how to step beyond usual boundaries in order to drive organizations towards “relentless” improvement [38]. We believe that a key facet of strong leadership is the ability to create and support high functioning teams and, in the case of leveraging facilitation resources, to pave the way for skilled facilitators to become part of practice QI team structures. Less effective leaders may be less able or inclined to support high functioning teams.

Although not included in the final model selected based upon lower AIC, results of another model that included leadership and practice ownership type suggested that, compared to independently owned practices, practices that are Federally Qualified Health Centers engaged with their facilitators more readily. This same signal was noted for health system/hospital/faculty practices compared to independently owned practices. Regarding practice ownership type, we suspect that practices owned by health systems or hospitals may be more receptive to including facilitators in their teams, as facilitation may be a more familiar improvement strategy in such settings than in independently owned practices that may function more autonomously. Additionally, Federally Qualified Health Centers must have an ongoing quality improvement/assurance system [39]. They are specifically expected to address ways to adhere to evidence-based guidelines, standards of care, and standards in the provision of health care services. As part of this, they complete quarterly QI/quality assurance assessments and must implement follow-up actions as deemed necessary. Independently owned practices can certainly engage in a variety of programs, but are not systematically expected to do so like FQHCs.

We were surprised by the lack of associations between practice level burnout, adaptive reserve, and organizational readiness with TE. We expected that practices with higher levels of burnout might view the study as an additional burden, thus would be more challenged with engagement. We were not able to find other studies where the burnout measure has been calculated at the practice level and did not have a vetted algorithm for generating practice level scores. Without work in establishing theoretical underpinnings and construct validity of the burnout measure at the practice level, it is difficult to understand if null findings are at least partly a measurement issue. The challenge with interpreting these null findings also applies to the adaptive reserve and organizational readiness outcomes. The adaptive reserve measure has not been rigorously validated as its own measure as it was a 23-item instrument that emerged from factor analyses performed on responses of 31 uniquely motivated practices in the National Demonstration Project [40] and the organizational readiness instrument has not been tested yet for predictive validity.

No practice facilitator-level measures had independent effects on the TE outcome in our multivariable models. We suspect that there could be personality, communication, or other facilitator characteristics that may impact engagement, but we did not collect such data. Mold et al., in a study where 5 different facilitators guided 12 practices in implementing activities to enhance preventive services, found no effect of the individual facilitator on their study outcomes [41].

As stated, one of the most notable results of this analysis was the influence of greater leadership support for quality improvement on the odds of reaching a TE score of 2 at the study mid-point. This raises the question as to whether there are effective interventions that can help practice leaders increase their understanding and opinions of team-based QI prior to a study’s implementation. It may be important to research how to better engage practices where QI team engagement is sub-optimal, for instance to determine if certain types of activities are a better fit and/or if delving into actions that can provide “quick wins” can enhance engagement. There are potential policy implications to consider related to our findings. If the level of practice leadership for QI is critical to a practice team’s ability and willingness to engage with facilitators, then there may be opportunities to help leaders understand the value of QI by using different techniques based upon where leaders are along the change continuum. Several helpful strategies are described in the white paper “Engaging Primary Care Practices in QI” by Geonnotti et al. These include helping practices by 1) relieving “pain points”, 2) preparing for inevitable changes in health care quality reporting, 3) linking QI work with core values and larger missions of practice organizations, 4) demonstrating how QI can result in lower administrative task burden to clinicians, 5) exposing new practices to early adopters and enthusiastic QI opinion leaders, and 6) using “proxy relationships”, thus already trusted sources, to help make the case for QI work [15]. Understanding if there are specific actions that can enhance leaders’ enthusiasm for QI may be an important topic for the facilitation research agenda.

Additionally, it is possible that practices in non-MUA areas or with specific types of ownership are better served with different types of facilitation services. This fits squarely with a statement by McHugh et al., who shared their experiences with suboptimal practice engagement in the Heart Health in the Heartland study [17]. They noted that more research is needed to identify best strategies for practice engagement and to understand if, in some cases, simple targeted practice facilitator support may be more useful than the comprehensive support provided in larger initiatives like Heart Health Now.

Of note, we did not see an association between practice size, used as a continuous measure, and our outcome, while other studies have indicated that smaller practices may be more likely to engage with facilitators due to a lack of internal staff members who can take on QI tasks [42]. Our study focused on small to medium-sized practices only, which may be part of why we did not see associations between size and our outcomes as others have. In another study, practices with fewer than 3 providers demonstrated improvement in one of the overall clinical outcomes, again suggesting that practice size may matter [43]. McHugh et al., in their qualitative analysis of another EvidenceNOW collaborative’s experience, posit that smaller practices may be better supported through less complex interventions than what was included in the HHN study, a potential reason for our not seeing an effect of practice size in our analysis [17].

Limitations

Our study’s findings must be considered in light of its limitations. First, our practices were small to medium-sized practices that deliver primary care in NC and chose to participate in HHN, and thus may not be representative of all primary care practices.

The KDIS measurement instrument was developed by experts in primary care quality improvement to guide and capture implementation efforts within primary care practices and has not been subjected to rigorous validation processes. However, the KDIS is used in multiple NC AHEC projects and analyses where we continue to understand its value as an implementation effectiveness measurement tool [24,25,26, 44]. The TE outcome measure and the KDIS Leadership measures were scored by each practice’s facilitator, thus there is a potential for same source bias.

Additionally, many practices were not eligible due to missing data. We suspect that practices that met our inclusion criteria, thus put efforts into filling out practice surveys and activity implementation, could have been more engaged with the study than those with missing data, which may potentially bias our results towards the null. Additionally, as staff turnover is common in practice, it is possible that different staff members provided responses to the baseline surveys vs. those who participated in team activities during the intervention phase, potentially complicating results interpretation.

Conclusion

In our analysis, practice engagement with practice facilitators appears to be greater in practices located in MUAs and those with greater involvement of leadership in quality improvement efforts. The impact of leadership may be particularly important based upon this analysis and the years of facilitation experience in NC and beyond. How to engage with leaders to optimize the use of facilitation resources and how to enhance leadership support for QI are topics to include in the facilitation research agenda going forward. Additionally, the research community may benefit from reflecting on the experiences of EvidenceNOW and other large-scale primary care research projects and work to devise practical measures that capture practice-level constructs and commit to their testing and validation.

Availability of data and materials

The data set supporting this analysis is available upon request by contacting Dr. Jacqueline Halladay via email (Jacqueline_halladay@med.unc.edu).

Abbreviations

- GLMM: Generalized linear mixed-effects model
- MUA: Medically Underserved Area
- CVD: Cardiovascular disease
- ABCS: Aspirin, Blood Pressure, Cholesterol, Smoking
- AHRQ: Agency for Healthcare Research and Quality
- HHN: Heart Health Now
- QI: Quality Improvement
- EHR: Electronic Health Record
- FQHC: Federally Qualified Health Center
- KDIS: Key Driver Implementation Scale
- NC AHEC: North Carolina Area Health Education Center
- TE: Team Engagement
- PCS: Practice Characteristics Survey
- PMS: Practice Member Survey
- ICC: Intracluster correlation coefficient
- AIC: Akaike information criterion

References

Heidenreich PA, Trogdon JG, Khavjou OA, et al. Forecasting the future of cardiovascular disease in the United States: a policy statement from the American Heart Association. Circulation. 2011;123(8):933–44.

Frieden TR, Berwick DM. The “million hearts” initiative--preventing heart attacks and strokes. N Engl J Med. 2011;365(13):e27.

Parchman ML, Noel PH, Culler SD, et al. A randomized trial of practice facilitation to improve the delivery of chronic illness care in primary care: initial and sustained effects. Implement Sci. 2013;8:93.

Bryce FP, Neville RG, Crombie IK, Clark RA, McKenzie P. Controlled trial of an audit facilitator in diagnosis and treatment of childhood asthma in general practice. BMJ (Clinical research ed). 1995;310(6983):838–42.

Hogg W, Baskerville N, Lemelin J. Cost savings associated with improving appropriate and reducing inappropriate preventive care: cost-consequences analysis. BMC Health Serv Res. 2005;5(1):20.

Cardarelli R, Weatherford S, Schilling J, et al. Improving chronic pain management processes in primary care using practice facilitation and quality improvement: the central Appalachia inter-professional pain education collaborative. J Patient-Centered Res Rev. 2017;4(4):247–55.

Hulscher ME, van Drenth BB, van der Wouden JC, Mokkink HG, van Weel C, Grol RP. Changing preventive practice: a controlled trial on the effects of outreach visits to organise prevention of cardiovascular disease. Qual Health Care. 1997;6(1):19–24.

Hogg W, Lemelin J, Moroz I, Soto E, Russell G. Improving prevention in primary care: evaluating the sustainability of outreach facilitation. Can Fam Physician. 2008;54(5):712–20.

Dietrich AJ, O'Connor GT, Keller A, Carney PA, Levy D, Whaley FS. Cancer: improving early detection and prevention. A community practice randomised trial. BMJ (Clinical research ed). 1992;304(6828):687–91.

Rogers EM. Diffusion of innovations. 5th ed. New York: Free Press; 2003.

Grumbach K, Bainbridge E, Bodenheimer T. Facilitating improvement in primary care: the promise of practice coaching. Issue brief (Commonwealth Fund). 2012;15:1–14.

Harvey G, Loftus-Hills A, Rycroft-Malone J, et al. Getting evidence into practice: the role and function of facilitation. J Adv Nurs. Mar 2002;37(6):577–88.

Cook R. Primary care. Facilitators: looking forward. Health Visitor. Dec 1994;67(12):434–5.

Fullard E, Fowler G, Gray M. Facilitating prevention in primary care. Br Med J (Clin Res Ed). 1984;289(6458):1585–7.

Geonnotti K, Taylor EF, Peikes D, Schottenfeld L, Burak H, McNellis R, Genevro J. Engaging Primary Care Practices in Quality Improvement: Strategies for Practice Facilitators. AHRQ Publication No. 15-0015-EF. Rockville: Agency for Healthcare Research and Quality; 2015.

Liddy CE, Blazhko V, Dingwall M, Singh J, Hogg WE. Primary care quality improvement from a practice facilitator's perspective. BMC Fam Pract. 2014;15:23.

McHugh M, Brown T, Liss DT, Walunas TL, Persell SD. Practice facilitators’ and leaders’ perspectives on a facilitated quality improvement program. Ann Fam Med. 2018;16(Suppl 1):S65–71.

Gold R, Bunce A, Cowburn S, et al. Does increased implementation support improve community clinics’ guideline-concordant care? Results of a mixed methods, pragmatic comparative effectiveness trial. Implement Sci. 2019;14(1):100.

Wang A, Pollack T, Kadziel LA, et al. Impact of practice facilitation in primary care on chronic disease care processes and outcomes: a systematic review. J Gen Intern Med. Nov 2018;33(11):1968–77.

HRSA. Medically underserved areas and populations (MUA/Ps); 2016. https://bhw.hrsa.gov/shortage-designation/muap. Accessed April 21, 2018.

Weiner BJ, Pignone MP, DuBard CA, et al. Advancing heart health in North Carolina primary care: the heart health NOW study protocol. Implement Sci. 2015;10:160.

Wagner EH, Austin BT, Davis C, Hindmarsh M, Schaefer J, Bonomi A. Improving chronic illness care: translating evidence into action. Health Aff (Millwood). Nov-Dec 2001;20(6):64–78.

Margolis PA, DeWalt DA, Simon JE, et al. Designing a large-scale multilevel improvement initiative: the improving performance in practice program. J Contin Educ Heal Prof. 2010;30(3):187–96.

Cykert S, Lefebvre A, Bacon T, Newton W. Meaningful use in chronic care: improved diabetes outcomes using a primary care extension center model. N C Med J. 2016;77(6):378–83.

Donahue KE, Halladay JR, Wise A, et al. Facilitators of transforming primary care: a look under the hood at practice leadership. Ann Fam Med. 2013;11(Suppl 1):S27–33.

Halladay JR, DeWalt DA, Wise A, et al. More extensive implementation of the chronic care model is associated with better lipid control in diabetes. J Am Board Fam Med. 2014;27(1):34–41.

Knox L, Taylor EF, Geonnotti K, Machta R, Kim J, Nysenbaum J, Parchman, M. Developing and Running a Primary Care Practice Facilitation Program: A How-to Guide (Prepared by Mathematica Policy Research under Contract No. HHSA290200900019I TO 5.) AHRQ Publication No. 12-0011. Rockville: Agency for Healthcare Research and Quality; 2011.

Shea CM, Jacobs SR, Esserman DA, Bruce K, Weiner BJ. Organizational readiness for implementing change: a psychometric assessment of a new measure. Implement Sci. 2014;9(1):7.

Solberg LI, Asche SE, Margolis KL, Whitebird RR. Measuring an organization's ability to manage change: the change process capability questionnaire and its use for improving depression care. Am J Med Qual. 2008;23(3):193–200.

Dolan ED, Mohr D, Lempa M, et al. Using a single item to measure burnout in primary care staff: a psychometric evaluation. J Gen Intern Med. 2015;30(5):582–7.

Miller WL, Crabtree BF, Nutting PA, Stange KC, Jaen CR. Primary care practice development: a relationship-centered approach. Ann Fam Med. 2010;8(Suppl 1):S68–79 S92.

Nutting PA, Crabtree BF, Stewart EE, et al. Effect of facilitation on practice outcomes in the National Demonstration Project model of the patient-centered medical home. Ann Fam Med. 2010;8(Suppl 1):S33–44 S92.

Balasubramanian BA, Chase SM, Nutting PA, et al. Using learning teams for reflective adaptation (ULTRA): insights from a team-based change management strategy in primary care. Ann Fam Med. 2010;8(5):425–32.

Killip S, Mahfoud Z, Pearce K. What is an intracluster correlation coefficient? Crucial concepts for primary care researchers. Ann Fam Med. May-Jun 2004;2(3):204–8.

Aho K, Derryberry D, Peterson T. Model selection for ecologists: the worldviews of AIC and BIC. Ecology. 2014;95(3):631–6.

Akaike H. Information Theory and an Extension of the Maximum Likelihood Principle. In B. N. Petrov, & F. Csaki (Eds.), Proceedings of the 2nd International Symposium on Information Theory. Budapest: Akademiai Kiado; 1973. pp. 267–281.

Stout S, Zallman L, Arsenault L, Sayah A, Hacker K. Developing high-functioning teams: factors associated with operating as a “real team” and implications for patient-centered medical home development. Inquiry. 2017;54:46958017707296.

Bohmer RM. Leading clinicians and clinicians leading. N Engl J Med. 2013;368(16):1468–70.

Services UDoHaH. HRSA health center program; 2018. https://bphc.hrsa.gov/programrequirements/compliancemanual/chapter-10.html#titletop.

Nutting PA, Crabtree BF, Miller WL, Stewart EE, Stange KC, Jaen CR. Journey to the patient-centered medical home: a qualitative analysis of the experiences of practices in the National Demonstration Project. Ann Fam Med. 2010;8(Suppl 1):S45–56 S92.

Mold JW, Aspy CA, Nagykaldi Z. Implementation of evidence-based preventive services delivery processes in primary care: an Oklahoma physicians resource/research network (OKPRN) study. J Am Board Fam Med. 2008;21(4):334–44.

Rogers ES, Cuthel AM, Berry CA, Kaplan SA, Shelley DR. Clinician perspectives on the benefits of practice facilitation for small primary care practices. Ann Fam Med. 2019;17(Suppl 1):S17–23.

Harris MF, Parker SM, Litt J, et al. Implementing guidelines to routinely prevent chronic vascular disease in primary care: the preventive evidence into practice cluster randomised controlled trial. BMJ Open. 2015;5(12):e009397.

Henderson KH, DeWalt DA, Halladay J, et al. Organizational leadership and adaptive Reserve in Blood Pressure Control: the heart health NOW study. Ann Fam Med. 2018;16(Suppl 1):S29–34.

Acknowledgements

This work was presented at the North American Primary Care Research Group’s International Conference on Practice Facilitation (ICPF) in Tampa, FL in December 2018 and in Bethesda, MD in June 2018.

The manuscript has not been previously published and is not under consideration in the same or substantially similar form in any other peer-reviewed media.

The parent Heart Health Now trial, as a prospective randomized trial, conforms to the CONSORT guidelines; however, these do not apply to this analysis, which uses a retrospective cohort design.

Funding

This study was funded by the Agency for Healthcare Research and Quality via award number 1R18HS023912. The Heart Health Now study is registered on ClinicalTrials.gov (NCT02585557). The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

Author information

Contributions

All authors listed (JRH, BJW, JIK, DAD, SP, JF, AL, MM, DB, CC, KH, SC) have contributed sufficiently to the project to be included as authors. All authors have read and approved the manuscript and consent to its publication.

Ethics declarations

Ethics approval and consent to participate

The Institutional Review Board at UNC Chapel Hill deemed the Heart Health Now study exempt; therefore, the need for consent was waived.

Consent for publication

Not applicable.

Competing interests

The authors declare that, to the best of their knowledge, no competing interests, financial or otherwise, exist.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Halladay, J.R., Weiner, B.J., In Kim, J. et al. Practice level factors associated with enhanced engagement with practice facilitators; findings from the heart health now study. BMC Health Serv Res 20, 695 (2020). https://doi.org/10.1186/s12913-020-05552-4
