Abstract

Background

Although public reporting of hospital performance is becoming common, it remains uncertain whether public reporting leads to improvement in clinical outcomes. This study was conducted to evaluate whether enrollment in a quality reporting project is associated with improvement in quality of care for patients with acute myocardial infarction.

Methods

We conducted a quasi-experimental study using a hospital census survey and a national inpatient database in Japan. Hospitals enrolled in a ministry-led quality reporting project were matched with non-reporting control hospitals by one-to-one propensity score matching using hospital characteristics. Using the inpatient data of acute myocardial infarction patients hospitalized in the matched hospitals during 2011–2013, difference-in-differences analyses were conducted to evaluate the changes in unadjusted and risk-adjusted in-hospital mortality rates over time that were attributable to the intervention.

Results

Matching between hospitals created a cohort of 30,220 patients with characteristics similar between the 135 reporting and 135 non-reporting hospitals. Overall in-hospital mortality rates were 13.2% in both the reporting and non-reporting hospitals. There was no significant association between hospital enrollment in the quality reporting project and change over time in unadjusted mortality (OR, 0.98; 95% CI, 0.80–1.22). In 28,168 patients eligible for evaluation of risk-adjusted mortality, enrollment was also not associated with change in risk-adjusted mortality (OR, 0.98; 95% CI, 0.81–1.17).

Conclusions

Enrollment in the quality reporting project was not associated with short-term improvement in quality of care for patients with acute myocardial infarction. Additional efforts may be necessary to improve quality of care.

Background

Public reporting of hospital performance data is becoming increasingly common worldwide [1]. Release of performance data is designed to increase transparency and accountability [2], and theoretically leads to improvement in quality of care through two pathways: selection of better-performing providers and change in patterns of care [3]. However, studies have also suggested unintended and negative consequences of public reporting, such as avoidance of treating severe patients [4,5,6]. Therefore, evaluation of the effects of public reporting is important for deciding what, how, and whether to report.

Observational studies that assessed the association between reporting of hospital performance data and improvement in clinical outcomes have produced inconsistent results [4, 7,8,9,10]. In a cluster randomized trial, public release of quality indicators did not significantly improve quality of care for patients with acute myocardial infarction (AMI) or congestive heart failure [11]. Overall, there is insufficient evidence to conclude that public reporting leads to improvement in clinical outcomes [12, 13].

Recent large-scale quasi-experimental studies from the United States have added to the literature by reporting no significant association between enrollment in a quality improvement program and improvement in clinical outcomes of Medicare beneficiaries or patients hospitalized in academic hospitals [14, 15]. Meanwhile, evidence is lacking for other programs and patient populations. In Japan, a quality reporting project led by the Ministry of Health, Labour and Welfare (MHLW) involving hospital groups was introduced in 2010 [16, 17]. The objectives were to improve quality of care and to promote quality reporting in Japan. Nationwide hospital group organizations participating in the project were to select quality indicators (QIs), collect and summarize data from multiple participating hospitals within each group, and publicly report the QIs using their websites or other means. The MHLW required that process and outcome QIs for specific diseases (e.g., cancer, stroke, AMI, and diabetes), patient safety, and regional cooperation be selected, and that organizations report their results and suggestions for improvements to the MHLW. Each year, 2 to 3 organizations were selected for partial funding by the MHLW, after which they could continue the reporting at their own expense. However, the effectiveness of the project remains unclear.

In the present study, we evaluated the association between enrollment in the Japanese quality reporting project and improvement in quality of care for patients with AMI. We hypothesized that enrollment in the project would lead to improvements in outcome indicators (unadjusted mortality, risk-adjusted mortality, and mortality of patients undergoing percutaneous coronary intervention [PCI]) and a process indicator (treatment with aspirin within 2 days of admission). Propensity score matching by national hospital census data was used to select control hospitals for comparison, and difference-in-differences analyses using data from a nationwide administrative database were conducted.

Methods

The study was approved by the Institutional Review Board of The University of Tokyo (approval number: 3501). Because of the anonymous nature of the data, the need for informed consent was waived.

Data source

In Japan, a lump-sum payment system was introduced in acute-care hospitals from 2003. The Diagnosis Procedure Combination (DPC) database is a national administrative database for patients admitted to hospitals with implementation of the payment system (DPC hospitals) [18]. Participation in the database is mandatory for academic hospitals and voluntary for community hospitals. Participating hospitals provide administrative claims and abstract discharge data for all their acute-care inpatients. The database includes the following information: hospital identification code; patient demographic and clinical information; admission and discharge statuses; main and secondary diagnoses; surgeries and procedures performed; medications; and special reimbursements for specific conditions. Diagnoses are recorded using International Classification of Diseases, Tenth Revision (ICD-10) codes. Suspected diagnoses are allowed to be recorded, and are designated as such. Surgeries, drugs, procedures, and special reimbursements are coded according to the Japanese fee schedule for reimbursement, and their daily use or application is recorded. Clinical information recorded in the database includes the Killip class, a classification for AMI patients based on physical signs of heart failure (class I to class IV in ascending order of severity) [19, 20].

The Reporting System for Functions of Medical Institutions is a census survey of hospitals in Japan initiated in 2014 [21]. It includes detailed structural information for institutions such as location, hospital type (DPC hospitals divided into category 1 [university hospitals], category 2 [community hospitals with equivalent functions to university hospitals], and category 3 [other DPC hospitals], and non-DPC hospitals), numbers of acute-care and long-term-care beds, numbers of nurses and physical therapists, and number of imaging devices. Process information including numbers of inpatients, ambulance acceptances, and out-of-hours hospitalizations is also recorded. In addition, electronically recorded claims data are used to identify monthly numbers of specific procedures performed in each institution, including operations, mechanical ventilation, and renal replacement therapy.

Hospital and patient selection

Using the DPC database, patients hospitalized for AMI (confirmed main diagnosis with ICD-10 codes I21.x) between July 2010 and March 2014 were searched, and hospitals with at least 10 AMI hospitalizations between July 2010 and March 2011 were identified. The websites of organizations participating in the reporting project were also searched to identify hospitals that reported performance data in fiscal years 2011, 2012, and 2013. Fiscal years in Japan start in April and end in March. Enrollment statuses for the 3 years and data from the 2014 Reporting System for Functions of Medical Institutions were linked with the DPC data. DPC category 1 hospitals (university hospitals) were excluded because university hospitals were rare among the hospitals affiliated with the organizations participating in the reporting project. Hospitals that were no longer categorized as DPC hospitals according to the 2014 data and hospitals that discontinued reporting during the study period were also excluded. Reporting hospitals were defined as those that started reporting in any year from 2011 through 2013, and non-reporting hospitals were defined as those that did not report in any of the 3 years. The restrictions of the DPC database prohibited direct contact with the participating hospitals. Thus, a survey on the details of each hospital’s reporting program and implementation status was not conducted.
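
The selection rules above can be sketched in code. The following Python snippet is only an illustration under stated assumptions: the `Hospital` record, its field names, and the `classify` function are hypothetical and are not part of the DPC database or of the study's actual data processing.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Tuple

STUDY_YEARS = (2011, 2012, 2013)  # Japanese fiscal years (April to March)
MIN_AMI_CASES = 10                # threshold for July 2010 to March 2011


@dataclass
class Hospital:
    # All field names are hypothetical, chosen for this illustration only.
    hospital_id: str
    baseline_ami_cases: int      # AMI hospitalizations, July 2010 to March 2011
    dpc_category: int            # 1 = university hospital; 2 or 3 = other DPC
    is_dpc_in_2014: bool         # still a DPC hospital in the 2014 census data
    reported: Dict[int, bool]    # fiscal year -> performance data were reported


def classify(h: Hospital) -> Optional[Tuple[str, Optional[int]]]:
    """Return ("reporting", start_year), ("non-reporting", None),
    or None when the hospital is excluded."""
    if h.baseline_ami_cases < MIN_AMI_CASES:
        return None  # too few AMI hospitalizations in the baseline period
    if h.dpc_category == 1 or not h.is_dpc_in_2014:
        return None  # university hospital, or no longer a DPC hospital
    flags = [bool(h.reported.get(year, False)) for year in STUDY_YEARS]
    if not any(flags):
        return ("non-reporting", None)
    start = flags.index(True)
    if not all(flags[start:]):
        return None  # discontinued reporting during the study period
    return ("reporting", STUDY_YEARS[start])


if __name__ == "__main__":
    example = Hospital("H001", 25, 3, True, {2012: True, 2013: True})
    print(classify(example))  # ("reporting", 2012)
```

In this sketch, a hospital that reported in 2011 but not in 2012 is treated as having discontinued reporting and is excluded, in line with the exclusion described above.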

Variables

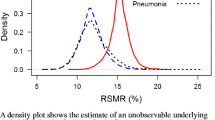

The hospital-level characteristics examined in the study are presented in Table 1. In Japan, there is a local area division based on medical services supply termed the Secondary Medical Area [21]. The annual number of ambulance acceptances within the Secondary Medical Area associated with each hospital was summarized and used as a regional characteristic. In addition to the characteristics obtained from the hospital survey data, the July 2010–March 2011 DPC data were used to identify the number of AMI patients, whether there were hospitalizations to intensive care units (ICUs) and emergency centers, and whether hospitals conducted coronary artery bypass graft (CABG) surgery. As a variable for performance prior to introduction of the quality reporting project, each hospital’s risk-adjusted mortality rate for AMI patients, as proposed by the Quality Indicator/Improvement Project (QIP) [22], was calculated. QIP risk-adjusted mortality takes into account age, sex, and Killip class for risk adjustment. In the derivation of the risk-adjusted mortality rate, patients from all hospitals with at least 10 cases of AMI identified in the DPC database in the 6-month period were included to calculate the overall mortality.
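
The exact QIP formula is given in Additional file 1: Table S1 and is not reproduced here. As a rough illustration only, the sketch below assumes the common indirect-standardization form, in which a hospital's observed deaths are divided by the deaths expected from a patient-level risk model and the ratio is scaled by the overall mortality rate. The function name, its arguments, and the example numbers are hypothetical.

```python
from typing import Sequence


def risk_adjusted_rate(observed_deaths: int,
                       predicted_risks: Sequence[float],
                       overall_rate: float) -> float:
    """Indirectly standardized mortality rate for one hospital.

    predicted_risks holds one predicted probability of death per patient,
    assumed here to come from a model using age, sex, and Killip class.
    overall_rate is the mortality rate across all eligible hospitals.
    This form is an assumption, not the documented QIP definition.
    """
    expected_deaths = sum(predicted_risks)
    if expected_deaths <= 0:
        raise ValueError("expected deaths must be positive")
    return observed_deaths / expected_deaths * overall_rate


if __name__ == "__main__":
    # Hypothetical hospital: 40 low-risk and 10 high-risk patients.
    risks = [0.05] * 40 + [0.30] * 10   # expected deaths = 5
    print(risk_adjusted_rate(6, risks, overall_rate=0.12))
```

With 6 observed deaths against about 5 expected, the hypothetical hospital's adjusted rate is about 1.2 times the overall rate.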

The main outcomes of the study were 2 types of mortality in AMI patients: unadjusted in-hospital mortality and QIP risk-adjusted in-hospital mortality. The in-hospital mortality of patients admitted by ambulance and subsequently treated with PCI was also evaluated. As an indicator of process, treatment of AMI patients with aspirin within 2 days of admission was examined. The indicators were selected from the QIs determined by the National Hospital Organization and the QIP. The inclusion/exclusion criteria and risk adjustment methods for the indicators are presented in Additional file 1: Table S1.

Statistical analysis

To adjust for differences in hospital characteristics between the reporting and non-reporting hospitals, one-to-one propensity score matching of hospitals [23] was performed. To estimate the propensity score, a logistic regression model with enrollment in the reporting project as the dependent variable was first fitted. Hospital and regional characteristics and risk-adjusted mortality in the 6 months prior to 2011, presented in Table 1, were entered as independent variables. The c-statistic was used to evaluate the discriminatory ability of the logistic regression model. Using the estimated propensity scores, nearest neighbor matching without replacement, within 0.2 times the standard deviation of the estimated propensity scores, was conducted. The standardized difference was used to compare the characteristics between the two groups before and after matching [24, 25]. An absolute standardized difference of > 10% was considered indicative of imbalance.
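
The matching and balance-checking steps can be illustrated with a short Python sketch. It is not the Stata procedure used in the study. It assumes that propensity scores have already been estimated, processes reporting hospitals in the order given (a greedy approach), and uses the sample standard deviation of all scores for the caliper; these choices, the function names, and the example scores are assumptions made for illustration.

```python
import math
from statistics import mean, stdev, variance
from typing import Dict, List, Sequence, Tuple


def caliper_match(treated: Dict[str, float],
                  controls: Dict[str, float],
                  caliper_sd: float = 0.2) -> List[Tuple[str, str]]:
    """Greedy 1:1 nearest neighbor matching without replacement.

    treated and controls map hospital IDs to propensity scores. A pair is
    accepted only if the scores differ by at most caliper_sd times the
    standard deviation of all propensity scores.
    """
    caliper = caliper_sd * stdev(list(treated.values()) + list(controls.values()))
    available = dict(controls)  # controls not yet matched
    pairs = []
    for t_id, t_score in treated.items():
        if not available:
            break
        # Closest remaining control on the propensity score.
        c_id = min(available, key=lambda c: abs(available[c] - t_score))
        if abs(available[c_id] - t_score) <= caliper:
            pairs.append((t_id, c_id))
            del available[c_id]  # without replacement
    return pairs


def standardized_difference(x_treated: Sequence[float],
                            x_control: Sequence[float]) -> float:
    """Standardized difference (in %) for a continuous variable."""
    pooled_sd = math.sqrt((variance(x_treated) + variance(x_control)) / 2)
    return 100 * (mean(x_treated) - mean(x_control)) / pooled_sd


if __name__ == "__main__":
    reporting = {"A": 0.60, "B": 0.35, "C": 0.90}            # hypothetical scores
    non_reporting = {"x": 0.58, "y": 0.30, "z": 0.33, "w": 0.10}
    print(caliper_match(reporting, non_reporting))
    print(standardized_difference([300, 400, 500], [200, 300, 400]))
```

In the hypothetical example, hospital C has no control within the caliper and is left unmatched, which mirrors how matching reduced the 146 reporting hospitals to 135 pairs.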

The data for AMI patients hospitalized in the matched hospitals from April 2011 to March 2014 were then used to conduct difference-in-differences analyses. Non-reporting hospitals were operationally assigned the same enrollment year as their matched reporting counterparts, and patients hospitalized before the initiation of reporting were excluded. Logistic regression models predicting the outcomes were fitted, with the following entered as independent variables: reporting status of the admitted hospital (reporting vs. non-reporting); year when the reporting was started (as a continuous variable); a yearly time variable representing the number of years after hospital enrollment (difference between year of patient hospitalization and hospital enrollment year, as a continuous variable); and an interaction term multiplying the reporting status and the post-enrollment time variable. Analyses were performed with adjustment for clustering within hospitals using cluster-robust standard errors. The c-statistic was used to evaluate the discriminatory ability of the logistic regression model. The difference-in-differences approach is an econometric method used to evaluate the effect of a policy change by isolating the change in outcome over time related to the intervention from the change experienced over time without the intervention [26, 27]. In this study, the interaction term of the reporting status and the post-enrollment time variable represented the influence per year exerted by the reporting on the outcomes after enrollment, considering the year when reporting was started as the baseline. Enrollment year was entered in the models to adjust for pre-enrollment trends.
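
For reference, the model described above can be written as follows. The symbols are notation introduced here for illustration and do not appear in the original analysis: for patient i in hospital h, R_h is 1 for a reporting hospital and 0 otherwise, S_h is the (assigned) enrollment year, and T_ih is the number of years between enrollment and hospitalization.

```latex
\operatorname{logit}\,\Pr(Y_{ih} = 1)
  = \beta_0 + \beta_1 R_h + \beta_2 S_h + \beta_3 T_{ih}
  + \beta_4 \,(R_h \times T_{ih})
```

Under this notation, exp(β4) is the odds ratio for the interaction term, that is, the per-year change in the odds of the outcome in reporting hospitals relative to the change in non-reporting hospitals, and exp(β1) compares the two groups in the baseline year.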

In addition to the main analysis, 3 further analyses were conducted with the same regression model used for the main analysis and selection of patients hospitalized in different time periods. First, to examine whether there were changes in outcomes immediately following enrollment, a difference-in-differences analysis between the year when reporting was started and the year before that was performed (2-year, before-after analysis). Second, patients hospitalized in the year before enrollment were added to the main analysis, assuming that quality improvement occurs immediately in the year of enrollment and that the amount of improvement stays the same thereafter. In these analyses, hospitals that started reporting in 2011 and their matched counterparts were excluded because of the lack of full-year data in 2010. Third, the main analysis was repeated excluding hospitals that started reporting in 2013, the final year of observation.
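
The logic of the 2-year before-after comparison can be illustrated with a crude calculation. In a logistic model containing only group, period, and their interaction, the exponentiated interaction coefficient equals a ratio of odds ratios. The sketch below computes that ratio from counts; it ignores the enrollment-year covariate and the clustering adjustment used in the actual models, and the function names and counts are hypothetical.

```python
from typing import Tuple

Counts = Tuple[int, int]  # (deaths, patients)


def odds(deaths: int, patients: int) -> float:
    """Odds of death given a count of deaths and the number of patients."""
    return deaths / (patients - deaths)


def did_odds_ratio(rep_before: Counts, rep_after: Counts,
                   ctl_before: Counts, ctl_after: Counts) -> float:
    """Crude difference-in-differences estimate on the odds scale.

    Returns the before-after odds ratio in reporting hospitals divided by
    the before-after odds ratio in non-reporting hospitals.
    """
    or_reporting = odds(*rep_after) / odds(*rep_before)
    or_control = odds(*ctl_after) / odds(*ctl_before)
    return or_reporting / or_control


if __name__ == "__main__":
    # Hypothetical counts: mortality falls equally in both groups,
    # so no change is attributed to reporting.
    print(did_odds_ratio((130, 1000), (120, 1000), (130, 1000), (120, 1000)))
```

A ratio near 1 means that mortality changed by a similar amount in both groups, so no change is attributed to reporting even if mortality fell overall.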

A 2-sided P value of < 0.05 was considered significant. Statistical analyses were conducted using Stata/MP version 14.0 (StataCorp, College Station, TX, USA).

Results

Hospital characteristics and propensity score matching

Two hundred hospitals reported hospital performance in 2011 under the MHLW project. Subsequently, the participating hospitals increased to 327 in 2012 and 438 in 2013. Both DPC and non-DPC hospitals participated in the project.

From the DPC database, 28,548 AMI patients admitted between July 2010 and March 2011 were identified. Among these patients, 27,597 were from 613 hospitals with at least 10 admissions.

The flow of hospital selection is presented in Fig. 1. After linkage with the Reporting System for Functions of Medical Institutions data and exclusion of hospitals, 146 hospitals that participated in the reporting project (48, 69, and 29 hospitals started reporting in 2011, 2012, and 2013, respectively) and 327 non-reporting hospitals were identified. There were 21,004 AMI admissions from these 473 hospitals in the 6-month period. The hospital characteristics before matching are presented in Table 1. The reporting hospitals were larger and better-equipped than the non-reporting hospitals, and admitted more AMI patients.

Propensity score matching between the reporting and non-reporting hospitals produced 135 pairs of hospitals. The c-statistic was 0.667. These hospitals admitted 12,516 AMI patients in the 6-month period. The hospital characteristics of the matched hospitals are also presented in Table 1. Most of the characteristics were balanced between the 2 groups of hospitals. Of the 135 matched reporting hospitals, 42, 66, and 27 hospitals started reporting in 2011, 2012, and 2013, respectively.

Patient characteristics and outcomes after hospital matching

Overall, 30,220 AMI hospitalizations during the April 2011–March 2014 period from the 270 matched hospitals were included in the analysis of mortality, and 3998 of these patients (13.2%) died during hospitalization. The inclusion criteria for QIP risk-adjusted mortality reduced the number of eligible patients to 28,168 for risk-adjusted mortality analysis, and there were 3330 deaths (11.8%). Table 2 presents the characteristics of the patients admitted to the reporting and non-reporting hospitals during different time periods. At 2 years after the start of reporting, the reporting hospitals admitted fewer patients categorized as Killip class I compared with the non-reporting hospitals. Otherwise, patients admitted to the reporting hospitals had similar characteristics to those admitted to the matched non-reporting hospitals. There were 13,370 AMI patients admitted by ambulance and treated with PCI, and 276 deaths (2.1%) among these patients. Aspirin was administered within 2 days of admission in 22,655 of 26,989 patients who met the inclusion criteria for the process QI (83.9%). The outcomes among the patients admitted to the reporting and non-reporting hospitals during different time periods are presented in Table 3. There was no apparent difference in outcomes between the reporting and non-reporting hospitals.

Difference-in-differences analyses

The results of the logistic regression analyses are presented in Table 4. For all 4 indicators, the odds ratios for reporting status were not significant, indicating that hospital performances were similar between the reporting and non-reporting hospitals in the baseline years. In addition, the baseline performances did not differ significantly across the years that reporting was started. For all 4 indicators, the interaction term was not significant, indicating that reporting was not associated with changes in quality of care. The results of the 3 sensitivity analyses are presented in Table 5. In all 3 analyses, the interaction terms were not significant. The c-statistics of the models in the main analysis and the 3 additional analyses are presented in Additional file 1: Table S2.
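
To see why the interaction terms are described as not significant, one can approximately recover the test statistic from a reported odds ratio and its 95% confidence interval. The Python sketch below applies this to the two mortality estimates quoted in the Abstract, assuming a normal approximation on the log-odds scale. Because the published values are rounded, the results are approximate, and the function name is hypothetical.

```python
import math
from typing import Tuple


def z_and_p_from_ci(odds_ratio: float, ci_low: float, ci_high: float,
                    z_crit: float = 1.959964) -> Tuple[float, float]:
    """Approximate z statistic and 2-sided P value from an OR and its 95% CI."""
    # The CI spans 2 * z_crit standard errors on the log scale.
    se = (math.log(ci_high) - math.log(ci_low)) / (2 * z_crit)
    z = math.log(odds_ratio) / se
    # Two-sided P value under the standard normal distribution.
    p = math.erfc(abs(z) / math.sqrt(2))
    return z, p


if __name__ == "__main__":
    for label, estimate in [("unadjusted", (0.98, 0.80, 1.22)),
                            ("risk-adjusted", (0.98, 0.81, 1.17))]:
        z, p = z_and_p_from_ci(*estimate)
        print(f"{label}: z = {z:.2f}, P = {p:.2f}")
```

Both intervals include 1, so the recovered P values are well above the 0.05 threshold stated in the Methods.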

Discussion

The present study utilized 2 large databases to evaluate the association between enrollment in the hospital quality reporting project in Japan and improvement in quality of care for patients with AMI. Within the study period, enrollment was associated with neither improvement in process of care nor improvement in outcomes. Reporting of QIs alone may not be sufficient to achieve short-term improvement in quality of care.

There are 2 key assumptions for difference-in-differences analyses: parallel trends and common shocks [26, 27]. Selection of an appropriate control group to meet these assumptions is challenging, and matching is recommended when treatment and control groups differ in pre-intervention levels or trends [27]. In the present study, participation of hospitals in the reporting project was not randomized, and imbalance between the reporting and non-reporting hospitals was an expected result. For example, large hospitals may allocate more resources to improve their quality of care. In addition, an institution’s role in the regional healthcare system may influence whether or not it participates in the project. We obtained detailed hospital characteristics from the census survey data for hospitals and conducted propensity score matching using hospital and regional variables. After the propensity score matching, the characteristics were well-balanced between the reporting and non-reporting hospitals. Furthermore, the numbers of patients and their characteristics were similar across the patients hospitalized in the matched hospitals. Although it cannot be tested whether the 2 assumptions hold true, these results suggest that the matching created valid comparison groups.

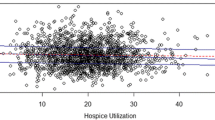

Using the data for patients admitted to the 270 matched hospitals, we examined 4 measures of quality of care. Reporting was not associated with change in mortality of AMI patients, and this finding was observed for both unadjusted and risk-adjusted mortality. Limiting the patients to those who underwent PCI after arrival by ambulance did not change this finding. We also examined a process indicator for AMI patients. Although not significant, there was a small increase over years in the probability of receiving aspirin within 2 days of admission. However, there was no apparent increase that could be attributed to the reporting status of hospitals. Some previous observational studies reported improvements in quality of care after the introduction of quality reporting [8, 10]. However, these studies either did not account for secular trends or their control groups had insufficient comparability. More rigorously designed studies showed no association between enrollment in a quality reporting program in the United States and improvement in quality of surgical care [14, 15]. The present study showed similar results for AMI patients in Japan.

Multiple investigators have suggested that avoidance of treating severe patients is one of the unintended and negative consequences of public reporting [4,5,6]. To evaluate whether this phenomenon existed, we compared the numbers and backgrounds of the treated patients between the matched reporting and non-reporting hospitals. In the years after participation in the project, there was no apparent difference in the numbers of treated AMI patients between the matched reporting and non-reporting hospitals. Furthermore, the patient backgrounds remained generally similar between the 2 groups over the years. As an exception, the proportion of patients with less severe AMI decreased in the reporting hospitals, which is the opposite of what avoidance of severe patients would produce. These results suggest that unintended consequences of public reporting, such as avoidance of treating more severe patients, were unlikely.

Previous studies on hospital quality reporting were primarily conducted in the United States, and the present study adds to the body of evidence by reporting results from hospitals operating in a different setting. The healthcare system in Japan is characterized by universal insurance coverage and a nationally uniform fee schedule for reimbursement [28, 29]. In addition, primary care physicians play little role as gatekeepers and patients have free access to virtually all hospitals [29,30,31]. The resulting competition among hospitals may make them sensitive to their performance and motivate them to improve their quality of care. However, in the present study, there was no evidence of a short-term incremental effect of the reporting. QI reporting alone may be insufficient, and additional efforts may be necessary to improve quality of care. Further research is required to achieve kaizen in healthcare.

Several limitations of the present study should be considered. First, we used administrative data for both hospital matching and outcome assessments. There could be unmeasured hospital characteristics that act as confounders, and the matching may not be perfect. Also, measures of quality depended on outcomes, processes, and risk factors that were obtainable from the DPC database. Second, precise information for each hospital’s quality reporting program was unobtainable, and it was unclear whether the program was actually carried out. Likewise, the control hospitals may have implemented original quality improvement programs, either as a spillover effect or as an independent attempt. Third, the observation period after the start of the program was 3 years at most. The long-term effect of the reporting program was unobservable in this study. Lastly, AMI was the focus of this study. Further research is required to determine the effect of quality reporting on other conditions.

Conclusions

Enrollment in the ministry-led quality reporting project in Japan was not associated with short-term improvement in quality of care of patients with AMI. Further research is required to identify additional efforts that could improve quality of care.

Abbreviations

- AMI: acute myocardial infarction
- CABG: coronary artery bypass graft
- DPC: Diagnosis Procedure Combination
- ICD-10: International Classification of Diseases, Tenth Revision
- ICU: intensive care unit
- MHLW: Ministry of Health, Labour and Welfare
- PCI: percutaneous coronary intervention
- QI: quality indicator
- QIP: Quality Indicator/Improvement Project

References

1. Groene O, Skau JKH, Frølich A. An international review of projects on hospital performance assessment. Int J Qual Health Care. 2008;20:162–71.

2. Lansky D. Improving quality through public disclosure of performance information. Health Aff (Millwood). 2002;21:52–62.

3. Berwick DM, James B, Coye MJ. Connections between quality measurement and improvement. Med Care. 2003;41(1 Suppl):I30–8.

4. Moscucci M, Eagle KA, Share D, et al. Public reporting and case selection for percutaneous coronary interventions: an analysis from two large multicenter percutaneous coronary intervention databases. J Am Coll Cardiol. 2005;45:1759–65.

5. Apolito RA, Greenberg MA, Menegus MA, et al. Impact of the New York state cardiac surgery and percutaneous coronary intervention reporting system on the management of patients with acute myocardial infarction complicated by cardiogenic shock. Am Heart J. 2008;155:267–73.

6. Joynt KE, Blumenthal DM, Orav EJ, et al. Association of public reporting for percutaneous coronary intervention with utilization and outcomes among Medicare beneficiaries with acute myocardial infarction. JAMA. 2012;308:1460–8.

7. Ghali WA, Ash AS, Hall RE, et al. Statewide quality improvement initiatives and mortality after cardiac surgery. JAMA. 1997;277:379–82.

8. Peterson ED, DeLong ER, Jollis JG, et al. The effects of New York’s bypass surgery provider profiling on access to care and patient outcomes in the elderly. J Am Coll Cardiol. 1998;32:993–9.

9. Baker DW, Einstadter D, Thomas C, et al. The effect of publicly reporting hospital performance on market share and risk-adjusted mortality at high-mortality hospitals. Med Care. 2003;41:729–40.

10. Hibbard JH, Stockard J, Tusler M. Hospital performance reports: impact on quality, market share, and reputation. Health Aff (Millwood). 2005;24:1150–60.

11. Tu JV, Donovan LR, Lee DS, et al. Effectiveness of public report cards for improving the quality of cardiac care: the EFFECT study: a randomized trial. JAMA. 2009;302:2330–7.

12. Fung CH, Lim YW, Mattke S, et al. Systematic review: the evidence that publishing patient care performance data improves quality of care. Ann Intern Med. 2008;148:111–23.

13. Ketelaar NABM, Faber MJ, Flottorp S, et al. Public release of performance data in changing the behaviour of healthcare consumers, professionals or organisations. Cochrane Database Syst Rev. 2011:CD004538.

14. Osborne NH, Nicholas LH, Ryan AM, et al. Association of hospital participation in a quality reporting program with surgical outcomes and expenditures for Medicare beneficiaries. JAMA. 2015;313:496–504.

15. Etzioni DA, Wasif N, Dueck AC, et al. Association of hospital participation in a surgical outcomes monitoring program with inpatient complications and mortality. JAMA. 2015;313:505–11.

16. Shimada G, Horikawa C, Fukui T. Measurement of quality of healthcare: promotion project by Ministry of Health, Labour and Welfare. Nihon Naika Gakkai Zasshi. 2012;101:3413–8.

17. Ministry of Health, Labour and Welfare. http://www.mhlw.go.jp/stf/seisakunitsuite/bunya/0000171022.html. Accessed 2 March 2018.

18. Yasunaga H, Matsui H, Horiguchi H, et al. Clinical epidemiology and health services research using the diagnosis procedure combination database in Japan. Asian Pacific J Dis Manag. 2013;7:19–24.

19. Killip T, Kimball JT. Treatment of myocardial infarction in a coronary care unit: a two year experience with 250 patients. Am J Cardiol. 1967;20:457–64.

20. DeGeare VS, Boura JA, Grines LL, et al. Predictive value of the Killip classification in patients undergoing primary percutaneous coronary intervention for acute myocardial infarction. Am J Cardiol. 2001;87:1035–8.

21. Ministry of Health, Labour and Welfare. Medical Care. http://www.mhlw.go.jp/english/policy/health-medical/medical-care/index.html. Accessed 30 April 2017.

22. Kyoto University Department of Healthcare Economics and Quality Management. Quality Indicator/Improvement Project. http://med-econ.umin.ac.jp/QIP/. Accessed 30 April 2017.

23. D’Agostino RB Jr. Propensity score methods for bias reduction in the comparison of a treatment to a non-randomized control group. Stat Med. 1998;17:2265–81.

24. Austin PC. A critical appraisal of propensity-score matching in the medical literature between 1996 and 2003. Stat Med. 2008;27:2037–49.

25. Austin PC. Using the standardized difference to compare the prevalence of a binary variable between two groups in observational research. Commun Stat Simul Comput. 2009;38:1228–34.

26. Dimick JB, Ryan AM. Methods for evaluating changes in health care policy: the difference-in-differences approach. JAMA. 2014;312:2401–2.

27. Ryan AM, Burgess JF Jr, Dimick JB. Why we should not be indifferent to specification choices for difference-in-differences. Health Serv Res. 2015;50:1211–35.

28. Ikegami N, Yoo BK, Hashimoto H, et al. Japanese universal health coverage: evolution, achievements, and challenges. Lancet. 2011;378:1106–15.

29. Hashimoto H, Ikegami N, Shibuya K, et al. Cost containment and quality of care in Japan: is there a trade-off? Lancet. 2011;378:1174–82.

30. Ikegami N, Campbell JC. Japan’s health care system: containing costs and attempting reform. Health Aff (Millwood). 2004;23:26–36.

31. Toyabe S, Akazawa K. Referral from secondary care and to aftercare in a tertiary care university hospital in Japan. BMC Health Serv Res. 2006;6:11.

Funding

This work was supported by grants from the Ministry of Health, Labour and Welfare, Japan; Ministry of Education, Culture, Sports, Science and Technology, Japan; and the Japan Agency for Medical Research and Development (AMED). The views expressed are those of the authors and not necessarily those of the funding bodies.

Availability of data and materials

The datasets analyzed during the current study are not publicly available due to contracts with the hospitals providing data to the database.

Author information

Contributions

HY1 designed the study, conducted analyses, interpreted the results, and drafted the manuscript. MK designed the study, interpreted the results, and revised the manuscript. SO, KM, and HM analyzed and interpreted the data and revised the manuscript. KF and TI collected the data, interpreted the results, and revised the manuscript. HY2 designed the study, collected the data, interpreted the results, and revised the manuscript. All authors have read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

The study was approved by the Institutional Review Board of The University of Tokyo (approval number: 3501). Because of the anonymous nature of the data, the need for informed consent was waived.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Additional file

Additional file 1:

Table S1. Inclusion/exclusion criteria and risk adjustment methods for the quality indicators used as outcomes. Table S2. C-statistics of logistic regression models predicting the outcomes. (DOC 42 kb)

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

Yamana, H., Kodan, M., Ono, S. et al. Hospital quality reporting and improvement in quality of care for patients with acute myocardial infarction. BMC Health Serv Res 18, 523 (2018). https://doi.org/10.1186/s12913-018-3330-4

DOI: https://doi.org/10.1186/s12913-018-3330-4