Abstract

Background

Significant effort has been directed at developing prediction tools to identify patients at high risk of unplanned hospital readmission, but it is unclear what these tools add to clinicians’ judgment. In our study, we assess clinicians’ abilities to independently predict 30-day hospital readmissions, and we compare their abilities with a common prediction tool, the LACE index.

Methods

Over a period of 50 days, we asked attendings, residents, and nurses to predict the likelihood of 30-day hospital readmission on a scale of 0–100% for 359 patients discharged from a General Medicine Service. For readmitted versus non-readmitted patients, we compared the mean and standard deviation of the clinician predictions and the LACE index. We compared receiver operating characteristic (ROC) curves for clinician predictions and for the LACE index.

Results

For readmitted versus non-readmitted patients, attendings predicted a risk of 48.1% versus 31.1% (p < 0.001), residents predicted 45.5% versus 34.6% (p = 0.002), and nurses predicted 40.2% versus 30.6% (p = 0.011), respectively. The LACE index for readmitted patients was 11.3, versus 10.1 for non-readmitted patients (p = 0.003). The area under the curve (AUC) derived from the ROC curves was 0.689 for attendings, 0.641 for residents, 0.628 for nurses, and 0.620 for the LACE index. Logistic regression analysis suggested that the LACE index added predictive value only to resident predictions, and not to attending or nurse predictions (p < 0.05).

Conclusions

Attendings, residents, and nurses were able to independently predict readmissions as well as the LACE index. Improvements in prediction tools are still needed to effectively predict hospital readmissions.

Background

Thirty-day hospital readmissions are costly, and can be frustrating for both patients and clinicians. As such, they are increasingly scrutinized, and significant efforts are directed at quantifying, understanding, and preventing them.

One part of these efforts has been the development of risk models to help identify patients at risk for hospital readmission. A popular model has been the LACE index, due to its simplicity and comparable accuracy to other models [1,2,3]. It is calculated by taking into account the length of hospitalization (L), acuity of admission (A), the patient’s Charlson Comorbidity Index (C), and the number of visits to the emergency department during the previous 6 months (E). Scores range from 1 (representing low risk of readmission), to 19 (representing a high risk of readmission). Since its derivation, the LACE index has been found to be variably predictive of hospital readmission in published reports [4,5,6]. However, it continues to be utilized as a risk stratification tool due to its simplicity and the felt need for such prediction models.
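As a rough illustration of how the four components combine into a single score, the sketch below computes a LACE index in Python. The point values are not given in this article; they are taken from the published derivation of the index [13] as commonly reproduced, and should be treated as an assumption to be checked against that source. The function name and argument names are hypothetical.

```python
def lace_index(los_days, acute_admission, charlson, ed_visits_6mo):
    """Return a LACE score from its four components.

    Point values are an assumption based on the published derivation [13];
    they are not stated in this article.
    """
    if min(los_days, charlson, ed_visits_6mo) < 0:
        raise ValueError("components must be non-negative")

    # L: length of stay in days
    if los_days < 1:
        l_points = 0
    elif los_days <= 3:
        l_points = int(los_days)
    elif los_days <= 6:
        l_points = 4
    elif los_days <= 13:
        l_points = 5
    else:
        l_points = 7

    # A: acuity of admission (emergent/acute admission scores 3)
    a_points = 3 if acute_admission else 0

    # C: Charlson Comorbidity Index (4 or more scores 5)
    c_points = charlson if charlson <= 3 else 5

    # E: emergency department visits in the previous 6 months (capped at 4)
    e_points = min(ed_visits_6mo, 4)

    return l_points + a_points + c_points + e_points


# Hypothetical patient: 5-day acute admission, Charlson 2, one prior ED visit
print(lace_index(los_days=5, acute_admission=True, charlson=2, ed_visits_6mo=1))
```

Under these assumed point values, the maximum attainable score is 7 + 3 + 5 + 4 = 19, which matches the upper bound described above.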

The felt need for readmission prediction models derives at least in part from the belief that providers are unable to predict readmissions without them. However, the body of literature supporting this belief is thin. Allaudeen and colleagues addressed this question in “Inability of Providers to Predict Unplanned Readmissions”, where they compared the predictive ability of prospectively surveyed clinicians with that of the Probability of Readmission score (Pra), another predictive model. Their final assessment, based on 159 patients, was that the care providers and the Pra were equally poor predictors of readmission [7]. Their results were similar to a previous report by the ESCAPE trialists, who reported that physicians, nurses, and a separate prediction model did not successfully predict 6-month (instead of 30-day) hospital readmissions for patients with heart failure [8]. No similar comparison has been published for the LACE index, which was developed later and has been more widely adopted, and no subsequent study has directly confirmed the finding that hospital care providers are poor predictors of 30-day readmissions. On the contrary, a separate study found that nurses who were surveyed about patient discharge readiness were better able to predict discharge readiness than the patients themselves, though in this study, emergency department visits and readmissions were combined as a single outcome measure [9].

The hypothesis that providers are unable to predict hospital readmissions without the use of prediction tools is therefore supported only by a very thin body of literature. We seek to add to that body of literature with this project, in which we have designed a survey to assess providers’ ability to predict 30-day hospital readmissions, and have compared their predictive ability with that of the widely-utilized prediction tool, the LACE index.

Methods

A 3-item survey was designed to assess the abilities of providers to predict 30-day readmissions. In the first item, we asked providers to estimate the risk of readmission on a continuous scale from 0 to 100%. In the second item, we asked them to indicate whether the patient could be described as having any of the following risk factors for readmission: having poor understanding of their disease, having poor adherence to therapy, having poor social support or access to care, having a condition that is likely to relapse or worsen, requiring therapy that is likely to result in a complication, having a high likelihood of developing a new medical condition that would require readmission, having an organ transplant, or having a previous readmission [7, 10,11,12]. In the last item, the provider indicated his or her role: resident, attending, or nurse. The surveys were collected by a trained medical student who attempted to collect all surveys in person on the day of discharge. Surveys that could not be collected on the day of discharge were accepted within 48 h of discharge. The surveys were collected between June 4 and July 24, 2015, a period of 50 days. The LACE index was generated by our electronic health record (EHR), based on visit history and encounter diagnoses, and was collected for each patient on the day of discharge, or within 48 h of discharge. Readmissions were tracked through an electronic health system quality report derived from the health system’s EHR to identify patients discharged from and readmitted to our institution, and this report was manually confirmed through chart review.

Survey respondents were the General Internal Medicine Physician attendings and residents rotating on a General Internal Medicine ward at an academic referral hospital, as well as the primary patient nurses who were supporting this service. Surveys were collected from 29 attendings, 19 residents, and 129 nurses. Attempts were made to collect surveys from each provider on each patient who was discharged from the General Internal Medicine service, resulting in 377 surveys collected. Surveys were excluded from analysis if the patients were readmitted before the surveys were completed, if they died within 30 days of their admission, if they were discharged on hospice, or if they had a POLST that specified “Do not rehospitalize”, resulting in a final sample of 359 patients for analysis.

Overall risk assessments were evaluated in two ways. First, risk scores for each provider type, as well as the LACE index, were compared between readmitted and non-readmitted patients. Scores were summarized using means and standard deviations, and were compared between groups using two-sample t-tests. Second, receiver operating characteristic (ROC) curves were estimated for each score, with discrimination evaluated using the area under the curve (AUC). Estimates and 95% confidence intervals (95% CI) for AUCs were computed for each provider type, as well as for the LACE index, and were tested for differences using logistic regression models. Additionally, value-added of risk scores relative to the LACE index was assessed using logistic regression models controlling for the patient’s LACE index. To evaluate performance of risk assessments in patients with the pre-specified risk factors for readmission, AUCs were computed for patients identified as having poor understanding of their disease, having poor adherence to therapy, having poor social support or access to care, having a condition that is likely to relapse or worsen, requiring therapy that is likely to result in a complication, having a high likelihood of developing a new medical condition that would require readmission, having an organ transplant, or having a previous readmission. No inferences were performed due to small sample sizes. We also calculated odds ratios for readmission in these pre-specified subgroups. P-values less than 0.05 were considered statistically significant. All analyses were performed using SAS v. 9.4 (SAS Institute Inc., Cary, NC).
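To make the discrimination measure concrete, the following is a minimal Python sketch of how an AUC can be computed directly from a set of risk scores and observed outcomes. It uses the rank-based (Mann-Whitney) interpretation of the AUC: the probability that a randomly chosen readmitted patient received a higher score than a randomly chosen non-readmitted patient, with ties counted as one half. This is not the SAS procedure used in the study, and the function name and toy data are hypothetical.

```python
def auc_from_scores(scores, outcomes):
    """Estimate the AUC by comparing every readmitted/non-readmitted pair.

    scores   : predicted risk (e.g. 0-100% estimates or LACE indices)
    outcomes : 1 if the patient was readmitted within 30 days, else 0
    """
    readmitted = [s for s, y in zip(scores, outcomes) if y == 1]
    not_readmitted = [s for s, y in zip(scores, outcomes) if y == 0]
    if not readmitted or not not_readmitted:
        raise ValueError("need at least one patient in each outcome group")

    concordant = 0.0
    for r in readmitted:
        for n in not_readmitted:
            if r > n:
                concordant += 1.0   # readmitted patient ranked higher
            elif r == n:
                concordant += 0.5   # tie counts as half
    return concordant / (len(readmitted) * len(not_readmitted))


# Toy data (hypothetical, not from the study): six patients, two readmitted
toy_scores = [80, 60, 40, 30, 20, 10]
toy_outcomes = [1, 0, 1, 0, 0, 0]
print(auc_from_scores(toy_scores, toy_outcomes))  # 7 of 8 pairs concordant -> 0.875
```

Because the calculation depends only on the ordering of scores, it applies equally to a 0 to 100% clinician estimate and to an integer LACE index, although a coarser scale will tend to produce more ties.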

Our institutional review board waived approval for this study, and all study participants provided verbal consent to participate.

Results

Of the 359 cases included in our analysis, 78 were readmitted to the hospital within 30 days, a readmission rate of 22%. Characteristics of the patients admitted to the General Internal Medicine wards during the two months in which our study took place are described in Table 1.

Attendings, residents, and nurses all predicted higher likelihood of readmission for the readmitted patients compared to non-readmitted patients, with differences in the predictions reaching statistical significance (p < 0.05, Table 2). Readmitted patients also had a mean LACE index that was significantly higher than non-readmitted patients (p < 0.05). Of note, there was considerable overlap in the LACE indices for readmitted and non-readmitted patients, and similar overlap for the providers’ predictions.

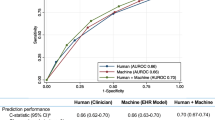

To assess the tradeoff between the sensitivity and specificity of the LACE index and providers’ predictions, we constructed ROC curves (Fig. 1). This assessment suggested that attendings were best able to distinguish patients who would be readmitted from those who would not be, followed by residents, nurses, and the LACE index. We were not able to demonstrate statistical significance in the difference between the AUC of the different predictors and the LACE index (p > 0.05).

Fig. 1 Receiver Operating Characteristic Curves for the LACE index and Clinicians’ Subjective Predictions. The figure shows the ROC curves for the LACE index (red) and for the predictions of attendings (blue), residents (green), and nurses (purple). The area under the curve (AUC) derived from the ROC curves was 0.689 for attendings (95% CI 0.603, 0.776), 0.641 for residents (95% CI 0.543, 0.739), 0.628 for nurses (95% CI 0.540, 0.716), and 0.620 for the LACE index (95% CI 0.521, 0.718).

We next hypothesized that while the LACE index appeared not to be superior to the providers’ predictions in terms of distinguishing patients at risk for readmission, it might nevertheless be a useful tool if it could add predictive power when combined with provider predictions. However, when we assessed this using a logistic regression analysis, we found that the LACE index added predictive value only to residents’ predictions, and not to attendings’ or nurses’ predictions (p < 0.05, Table 3).

Finally, we asked providers to subjectively identify patient characteristics that might place patients at risk for readmission. Our most notable result from these data was that nurses were able to predict 30-day hospital readmissions with an AUC of 0.778 for patients whom they described as having a poor understanding of their illness. Indeed, each provider group demonstrated areas of relative strength and weakness (Table 4), though again we were not able to demonstrate statistical significance in the differences of the providers’ predictions for different groups. When we calculated odds ratios to estimate the risk of readmission in patients identified as having the different characteristics, we found that there was an elevated risk of readmission when attendings believed that patients were poorly adherent to their therapies (OR 1.81, 95% CI 1.04, 3.16) or had severe disease (OR 2.16, 95% CI 1.21, 3.86); or when residents believed that patients were medically complex (OR 2.00, 95% CI 1.15, 3.48) or had a previous admission (OR 2.89, 95% CI 1.46, 5.72). Other subgroups of patients (e.g. those identified by nurses as having poor adherence, or those identified by attendings as having poor social support) did not have an elevated risk of readmission by this analysis.
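For readers less familiar with how an odds ratio and its confidence interval are obtained from a 2×2 table, the Python sketch below shows one common approach, the Woolf (log-based) interval. The article does not state which method SAS was asked to use, so this choice is an assumption, and the counts in the example are hypothetical rather than study data.

```python
import math


def odds_ratio_ci(flagged_readmitted, flagged_not_readmitted,
                  unflagged_readmitted, unflagged_not_readmitted, z=1.96):
    """Odds ratio with an approximate 95% CI (Woolf log method, assumed here).

    "Flagged" means the clinician marked the patient as having the risk factor.
    """
    cells = (flagged_readmitted, flagged_not_readmitted,
             unflagged_readmitted, unflagged_not_readmitted)
    if min(cells) <= 0:
        raise ValueError("all four cells must be positive for this method")

    a, b, c, d = cells
    odds_ratio = (a * d) / (b * c)
    # Standard error of log(OR) is the root of the summed reciprocal counts
    se_log_or = math.sqrt(sum(1.0 / x for x in cells))
    lower = math.exp(math.log(odds_ratio) - z * se_log_or)
    upper = math.exp(math.log(odds_ratio) + z * se_log_or)
    return odds_ratio, lower, upper


# Hypothetical counts (not from the study)
or_, lo, hi = odds_ratio_ci(20, 30, 10, 40)
print(f"OR {or_:.2f}, 95% CI {lo:.2f}, {hi:.2f}")
```

An interval whose lower bound exceeds 1, as in each of the four results reported above, indicates an elevated risk of readmission at the conventional 0.05 level.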

Discussion

Our results suggest that attendings, residents, and nurses perform as well as the LACE index, an industry-standard and widely utilized prediction tool, in predicting 30-day hospital readmissions. The AUC for the LACE index in our population of 0.620 (95% CI 0.521, 0.718) was qualitatively consistent with the originally reported LACE index c-statistic of 0.684 (95% CI 0.679–0.691) [13]. Clinicians performed just as well, with AUCs that were higher but not statistically different from the LACE index. These results conflict with a previous report, in which neither providers nor the risk model that was assessed (the Pra) were able to outperform chance in predicting 30-day readmissions [7]. We cannot know with certainty what accounts for the difference in our results, but possibilities include the difference in our patient population, including our inclusion of clinicians’ predictions for patients over age 18 instead of only for patients over age 65; as well as the approximately 7 years that elapsed since that previous report, during which much attention and clinician effort has been directed at reducing hospital readmissions.

However, while both clinicians and the LACE index performed better than chance in predicting 30-day hospital readmissions, it is fair to question if that performance was clinically useful. Predictions based on the LACE index and clinician expertise both achieved AUCs of less than 0.7 (often considered a target for “good” predictive capacity), reflecting significant overlap in the predictions for readmitted and non-readmitted patients. In spite of the “improved” ability of physicians and a newer prediction model to predict hospital readmissions, the predictive capacity of both clinicians and the LACE index remained suboptimal.

In our patients, the limited usefulness of the LACE index appears to have been driven by overall high LACE indices for both readmitted and non-readmitted patients (Table 2). Our results are similar to previous studies in which the LACE index did not accurately predict readmissions in an older UK population, or in patients readmitted with heart failure [4, 6]. In both of these previous reports, the Charlson Comorbidity Indices (CCI) were high, resulting in a higher LACE index. Our population is that of a referral academic medical center, which also has a relatively high CCI. Our result, when considered together with the previous reports described above, has particular cautionary relevance to centers that treat patients with high CCIs. Interestingly, the limitations in clinician predictions also seem to have been driven by an excessively high estimate of readmission risk, with clinicians predicting a higher rate of readmissions than was actually observed. These results underscore the need for ongoing efforts to improve prediction tools, as well as the need for ongoing education of clinicians.

When considering how to improve prediction tools, it will be important to focus on what these tools can add to clinician expertise. Interestingly, in our cohort, the LACE index added predictive value only for less experienced clinicians (residents), and not for more experienced clinicians (attendings and nurses). Clearly, prediction tools should consistently offer predictive capacity not available to clinicians without them; however, this criterion has generally not been assessed in the development of new prediction tools. Our results suggest that prediction tools may warrant validation in the local context of clinician expertise and patient population characteristics, if their usefulness is to be ensured, and if the significant effort and expense of implementing them in health systems is to be justified.

We and others have made initial attempts to clarify what factors play into clinician expertise (or lack thereof) regarding hospital readmissions, as this could be useful information when developing prediction tools to constructively supplement clinician expertise. We did this by asking our clinicians to identify possible risk factors for readmission, and we found that when clinicians identified patients as having poor understanding or severe disease, the odds ratio of readmission was higher; furthermore, the AUCs were highest when clinicians identified patients as having poor understanding, poor adherence, or severe disease. Along these lines, a recent multicenter survey reported that physicians most commonly attributed readmissions to patient factors such as poor understanding or poor social support [11]. Taken together, our results and those of our predecessors suggest that clinician attention and expertise may disproportionately center on variables in the “social” realm (patients with poor understanding, adherence, or support), but may also be limited by clinician “blind spots” where further work to improve the usefulness of readmission prediction tools might be productively directed. In their report, Allaudeen et al. attempted to address these “blind spots” by assessing if clinicians could correctly predict the reasons for potential readmissions. They found that providers generally underestimated the risk of complications of therapy, and hypothesized that this may have contributed to providers’ poor ability to predict readmissions [7]. Our results could be interpreted as supporting this result: when physicians identified patients as receiving high-risk therapy, the AUC was lower (Table 4). An alternative explanation, however, might be that high-risk therapy is an overall less reliable risk factor for hospital readmission.

Our work has some limitations. First, our results were obtained in a cohort of physicians and patients in a single medical center, and may not be applicable to patients and physicians in other settings. Second, our mechanism of detecting readmissions was unable to detect patients readmitted to hospitals not covered by our hospitalist practice group. Because the LACE index and provider estimates of readmission risk were generally high for both readmitted and non-readmitted patients, the most likely effect this had on our results was to dilute the predictive capacity of both the providers and the LACE index, though we cannot rule out that patients who were readmitted elsewhere were actually assigned a low risk of readmission by providers, and a high LACE index, which would improve the performance of the LACE index while worsening the performance of the providers. Third, the generation of the LACE index through our EHR depends on completing the problem list in the EHR, a process that is dependent on providers, and which would affect the LACE index if not done accurately. Fourth, the LACE index has a possible range of scores of 1–19, whereas we asked providers to predict the risk of readmission on a scale from 0 to 100. We did this because percentages are familiar to clinicians, and to facilitate the construction of ROC curves; however, the more expanded scale available to providers may have biased the results in their favor. Finally, the identification of risk factors for readmission was subjective, and may have been limited by clinicians’ lack of knowledge regarding patients’ circumstances (e.g. patients would not have been identified as being nonadherent to therapy if the clinicians were unaware of their nonadherence).

Conclusions

We have shown that clinicians are able to predict 30-day hospital readmissions as well as an industry-standard prediction tool. However, both clinician predictions and available prediction tools remain suboptimal. The LACE index, an objective tool for predicting readmissions, added predictive capacity to the predictions of less experienced (resident) clinicians, but its usefulness was limited in a patient population with a high burden of medical comorbidities, and it did not improve the predictions of experienced clinicians. Clinicians demonstrated relative strength in predicting readmissions in patients with poor understanding of their illness or poor adherence to their therapies. Further work should be directed at developing tools that enhance clinicians’ abilities to predict hospital readmissions.

Abbreviations

- 95% CI: 95% confidence interval

- AUC: Area under the curve

- CCI: Charlson comorbidity index

- EHR: Electronic health record

- OR: Odds ratio

- POLST: Physician orders for life-sustaining treatment

- Pra: Probability of readmission score

- Q1: 25th percentile

- Q3: 75th percentile

- ROC curve: Receiver operating characteristic curve

- SD: Standard deviation

References

1. Yu S, Farooq F, van Esbroeck A, Fung G, Anand V, Krishnapuram B. Predicting readmission risk with institution-specific prediction models. Artif Intell Med. 2015; https://doi.org/10.1016/j.artmed.2015.08.005.

2. Kansagara D, Englander H, Salanitro A, Kagen D, Theobald C, Freeman M, Kripalani S. Risk prediction models for hospital readmission: a systematic review. JAMA. 2011;306(15):1688–98.

3. Kahlon S, Pederson J, Majumdar SR, Belga S, Lau D, Fradette M, et al. Association between frailty and 30-day outcomes after discharge from hospital. CMAJ. 2015;187:799–804.

4. Wang H, Robinson RD, Johnson C, Zenarosa NR, Jaysawl RD, Keithly J, et al. Using the LACE index to predict hospital readmissions in congestive heart failure patients. BMC Cardiovasc Disord. 2014;14:97.

5. Tan SY, Low LL, Yang Y, Lee KH. Applicability of a previously validated readmission predictive index in medical patients in Singapore: a retrospective study. BMC Health Serv Res. 2013;13:366.

6. Cotter PE, Bhalla VK, Wallis SJ, Biram RWS. Predicting readmissions: poor performance of the LACE index in an older UK population. Age Ageing. 2012;41(6):784–9.

7. Allaudeen N, Schnipper JL, Orav EJ, Wachter RM, Vidyarthi AR. Inability of providers to predict unplanned readmissions. J Gen Intern Med. 2011;26(7):771–6.

8. Yamokoski LM, Hasselblad V, Moser DK, Binanay C, Conway GA, Glotzer JM, et al. Prediction of rehospitalization and death in severe heart failure by physicians and nurses of the ESCAPE trial. J Card Fail. 2007;13(1):8–13.

9. Weiss M, Yakusheva O, Bobay K. Nurse and patient perceptions of discharge readiness in relation to postdischarge utilization. Med Care. 2010;48(5):482–6.

10. Saab D, Nisenbaum R, Dhalla I, Hwang SW. Hospital readmissions in a community-based sample of homeless adults: a matched-cohort study. J Gen Intern Med. 2016 [Epub ahead of print].

11. Herzig SJ, Schnipper JL, Doctoroff L, Kim CS, Flanders SA, Robinson EJ, et al. Physician perspectives on factors contributing to readmissions and potential prevention strategies: a multicenter survey. J Gen Intern Med. 2016 Jun 9 [Epub ahead of print, accessed Jun 15, 2016].

12. Krumholz HM. Post-hospital syndrome – a condition of generalized risk. N Engl J Med. 2013;368(2):100–2.

13. van Walraven C, Dhalla IA, Bell C, Etchells E, Stiell IG, Zarnke A, et al. Derivation and validation of an index to predict early death or unplanned readmission after discharge from hospital to the community. CMAJ. 2010;182(6):551–7.

Acknowledgements

Not applicable.

Funding

None

Availability of data and materials

The datasets generated and/or analysed during the current study are not publicly available due to patient privacy concerns, but are available from the corresponding author on reasonable request.

Author information

Contributions

WDM significantly contributed to the design of the study, the analysis and interpretation of data, and was the major contributor in writing the manuscript. KN contributed to the design of the study, the collection of data, the analysis and interpretation of data, and writing the manuscript. SV contributed to the design of the study, was the major contributor to the statistical analysis and interpretation of data, and also assisted in writing the manuscript. ED contributed to the design of the study, the analysis and interpretation of data, and writing the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

The Institutional Review Board waived the need for approval.

Consent for publication

Not applicable

Competing interests

The authors declare that they have no competing interests.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

Miller, W.D., Nguyen, K., Vangala, S. et al. Clinicians can independently predict 30-day hospital readmissions as well as the LACE index. BMC Health Serv Res 18, 32 (2018). https://doi.org/10.1186/s12913-018-2833-3

DOI: https://doi.org/10.1186/s12913-018-2833-3