Abstract

Background

Wound care represents a considerable challenge, especially for newly graduated nurses. The development of a mobile application is envisioned to improve knowledge transfer and facilitate evidence-based practice. The aim of this study was to establish expert consensus on the initial content of the algorithm for a wound care mobile application for newly graduated nurses.

Methods

Experts participated in online surveys conducted in three rounds. Twenty-nine expert wound care nurses participated in the first round, and 25 participated in the two subsequent rounds. The first round, which was qualitative, included a mandatory open-ended question soliciting suggestions for items to be included in the mobile application. The responses underwent content analysis. The subsequent two rounds were quantitative, with experts being asked to rate their level of agreement on a 5-point Likert scale. These rounds were carried out iteratively, allowing experts to review their responses and see anonymized results from the previous round. We calculated the weighted kappa to determine the within-subject stability of individual responses between the quantitative rounds. A consensus threshold of 80% was predetermined.

Results

In total, 80 items were divided into 6 categories based on the results of the first round. Of these, 75 (93.75%) achieved consensus during the two subsequent rounds. Notably, 5 items (6.25%) did not reach consensus. The items with the highest consensus related to the signs and symptoms of infection, pressure ulcers, and the essential elements for healing. Conversely, items such as toe pressure measurement, wounds around drains, and frostbite failed to achieve consensus.

Conclusions

The results of this study will inform the development of the initial content of the algorithm for a wound care mobile application. Expert participation and their insights on infection-related matters have the potential to support evidence-based wound care practice. Ongoing debates surround items without consensus. Finally, this study establishes expert wound care nurses’ perspectives on the competencies anticipated from newly graduated nurses.

Background

Chronic wounds constitute a public health concern with wide-ranging implications for individuals and healthcare systems [1]. A meta-analysis of three observational studies indicates that chronic wounds stemming from various underlying causes occur at a rate of 2.21 cases per 1,000 individuals [2]. This global issue not only imposes a substantial financial burden but also profoundly impacts the quality of life for those affected [3, 4]. The aging of the population and the increasing prevalence of chronic diseases such as diabetes are contributing to the rise in chronic wound cases, resulting in increasingly complex and demanding care needs [2, 4].

Wound healing and care have been integral to nursing practice from its inception [5]. Even today, wound care remains a fundamental aspect of the nurse’s role. Evidence-based practice in nursing plays a crucial role in preventing or mitigating the harmful effects of wounds [6]. Given the complexity of clinical cases and the rapid advancement of treatments, maintaining the best practices in wound care necessitates the continual transfer of up-to-date knowledge and a preventative approach [7, 8]. Despite the availability of continuing education and nursing guidelines, a gap between theory and practice persists [9, 10].

This gap is particularly evident among newly graduated nurses, defined as those who have completed an accredited nursing program and are within their first 12 months of practice [11]. As they enter the workforce, newly graduated nurses often experience a lack of expertise and confidence, making it challenging to navigate the current clinical environment, which is characterized by high dynamism, intensity, and a heavy workload due to increasingly complex patient care [11]. In this demanding transition period, these nurses face numerous challenges in developing the competence and autonomy expected of them. Problems arise in tasks such as analyzing and organizing data, as well as prioritizing care [12,13,14], all of which are essential elements in wound care. Consequently, newly graduated nurses face difficulties in categorizing and treating pressure injuries, choosing the appropriate dressing, and adequately preparing the wound bed [15,16,17]. The gap they face in wound care is exacerbated by multiple barriers, such as an inadequate level of knowledge, limited access to specialized resources, and a lack of adapted tools [15, 18, 19].

This problem is particularly evident in the province of Quebec, Canada, where nurses have a high level of professional autonomy in wound care, including setting treatment plans and providing care and treatment. They may also be authorized to prescribe for wound-related matters [20]. However, this autonomy presents challenges for newly graduated nurses when managing multifactorial wound problems independently.

To address these barriers, mHealth offers a promising solution to enhance wound care and promote evidence-based practice [21,22,23,24,25]. Authors who have explored the impact of employing a mobile wound care app among newly graduated nurses note that it aids in their continuing education [26], streamlines wound care management [27] and provides guidance in selecting the appropriate dressing [28]. The rapid development and increased utilization of mHealth in wound care have been accelerated by the COVID-19 pandemic in recent years [29,30,31]. While these advancements hold the potential to enhance nursing practices, they also introduce certain risks. The development and evaluation of wound care mobile applications are often inadequately supervised, potentially exposing users to unvalidated content or content influenced by commercial biases [25, 32, 33].

In this context, it becomes crucial to design a new, validated technology for the next generation of nurses. O’Cathain et al. [34] suggest that developers should collect primary data from individuals who can identify the initial components of the technology, contextualizing it to the specific usage environment. Wound care experts are pivotal in the development of such a mobile application. Despite this valuable opportunity to enhance the development process, the unique perspectives of wound care experts regarding the components of mobile applications for this field are rarely addressed in the literature. This aspect remains unexplored with regard to new graduate nurses.

Methods

Study aim

The aim of this study is to establish expert consensus on the initial content for the algorithm that will form the basis of a wound care mobile application designed for newly graduated nurses. This study is part of a multi-method research project and aims to compile a comprehensive list of items that experts consider essential for the application. By combining insights from the existing literature with the items identified by experts, an initial algorithm for the application will be created.

Study design

In this study, we employed the e-Delphi technique, as described by Keeney et al. [35]. The term stands for ‘electronic Delphi’ and refers to a digital adaptation of the original Delphi technique [36]. The classic Delphi method aimed “to obtain the most reliable consensus of opinion of a group of experts by a series of intensive questionnaires interspersed with controlled feedback” [36, p. 458]. The e-Delphi technique, which has gained prominence since its use by MacEachren et al. [37], is a valid and reliable approach to consensus-building that uses online questionnaires instead of physical mail-outs [35, 38].

Participants

To create the expert panel, we employed the eligibility criteria approach [35]. This approach involves selecting experts based on specific criteria derived from the study’s purpose. The chosen participants needed to be experts who currently possessed wound care competence and objective perceptions [39, 40]. Their willingness to participate was also a critical factor for the success of the e-Delphi exercise [41]. Additionally, experts should not only have knowledge and experience but also the ability and availability to participate in the study [42]. Table 1 shows the criteria that all experts had to meet. These criteria were designed to be both exclusive enough to minimize bias and inclusive enough to ensure an adequate number of study participants.

An essential aspect of ensuring the rigor of the e-Delphi exercise is a diverse panel. The inclusion of experts from various backgrounds enriches the research by offering a wide range of opinions, diverse perspectives that stimulate debate and solution development, and the sharing of ideas [41,42,43,44]. Our panel was intentionally diverse, comprising individuals with scientific (researchers), clinical (nursing staff), and academic (educators) backgrounds.

In this context, where the quality of the expert panel takes precedence over its size [35], nonprobabilistic sampling techniques are essential [45]. We chose purposive sampling given that experts were selected based on specific criteria [35]. Recruitment was done in collaboration with the Regroupement québécois en soins de plaies and l’Association des infirmières et infirmiers stomothérapeutes du Québec. We used in-person invitations during a scientific event and electronic invitations sent to members. The electronic invitation included a direct link to the questionnaire introduction, accompanied by an explanatory video. Following the guidelines of Dillman et al. [46], we sent two reminder emails two and four weeks after the initial invitation. Networking, such as word-of-mouth, social networks, contacts, and the snowball effect, was also employed as a recruitment method.

Data collection

We collected data individually using the SurveyMonkey® online survey platform, chosen for its information security, user-friendly interface, and versatility (available on computers, tablets, and smartphones) [47]. Each questionnaire was developed for this study and underwent pre-testing by three experts not included in the sample to ensure face validity and content validity [41, 48, 49]. English translations of the questionnaires are available in Additional File 1. Similar to the classic Delphi technique, the first round of the e-Delphi exercise was qualitative and involved a mandatory open question for brainstorming:

What items should be part of the mobile application that will be created to support evidence-based wound care practice for newly graduated nurses?

The aim of this first round was to identify the items that would form the basis for the subsequent consultation rounds. This initial round also allowed us to gather socio-professional data from the participants.

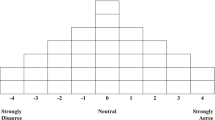

In the following rounds, the items from the previous round were presented. A 5-point Likert scale, ranging from 1 (strongly disagree) to 5 (strongly agree), was used to determine the level of agreement among the experts regarding each item. After each thematic section, an empty text box was provided to allow participants to add comments. Before each round, a personalized email with a questionnaire link was sent to the participants. Following the methodological recommendation [35], experts were given a two-week window to complete the questionnaire. An initial, personalized reminder email was dispatched one week after sending the questionnaire, followed by a second reminder one week after the deadline. Some participants were granted a two-week extension upon request. The subsequent rounds employed an iterative process where participants could view their responses and the anonymized results of the prior round, including the average agreement score for each item. They were encouraged to consider other viewpoints and, if necessary, to revise their responses in light of this new information. Similar to the classic Delphi technique, the experts did not have direct interactions or meetings with one another [50].

Definition of consensus

Consensus was operationally defined as 80%, following the criteria outlined by Keeney et al. [35]. This could manifest as either a consensus of agreement (when 80% of participants indicated a Likert scale score of 4 or more) or a consensus of disagreement (when 80% of participants indicated a Likert scale score of 2 or less) [35]. Neutral responses (score of 3) were not counted in the 80%. In addition to percentages, the diminishing number of comments was considered as an indicator of consensus and data saturation [35, 51].
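The consensus rule described above can be illustrated with a minimal sketch. It is not the analysis code used in the study; the function name `classify_consensus` is hypothetical, and two points are assumptions: neutral ratings are kept in the denominator but counted toward neither side, and the threshold is treated as "at least 80%".

```python
from typing import Literal

ConsensusStatus = Literal["agreement", "disagreement", "none"]


def classify_consensus(ratings: list[int], threshold_pct: int = 80) -> ConsensusStatus:
    """Classify one item's 5-point Likert ratings against the consensus rule.

    Assumption: neutral ratings (3) remain in the denominator but count
    toward neither agreement nor disagreement.
    """
    if not ratings:
        raise ValueError("ratings must not be empty")
    n = len(ratings)
    agree = sum(1 for r in ratings if r >= 4)      # scores of 4 or 5
    disagree = sum(1 for r in ratings if r <= 2)   # scores of 1 or 2
    # Integer comparison avoids floating-point edge cases at exactly 80%.
    if agree * 100 >= threshold_pct * n:
        return "agreement"
    if disagree * 100 >= threshold_pct * n:
        return "disagreement"
    return "none"
```

With a panel of 25, an item rated 4 or 5 by 20 experts reaches consensus of agreement under this rule, whereas an item rated 4 or 5 by 18 experts (72%) does not.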

Analysis

After the completion of the first round, each participant’s eligibility was verified, including checking their professional status on the professional college website. Qualitative data were then directly extracted from SurveyMonkey® into a Microsoft Word® file for deductive content analysis [35, 52]. Following a thorough reading of and familiarization with the data, responses regarding the inclusion of items in the mobile application were collated and broken down into units of analysis. Subsequently, they underwent a second reading to attain a comprehensive understanding of the data and gain an overview. Afterward, an unconstrained categorization matrix was developed based on Wounds Canada’s Cycle [7], which is a systematic approach for developing personalized wound prevention and management plans. All qualitative data underwent content scrutiny and were coded for correspondence with the identified categories. Duplicate statements were removed, and similar ones were consolidated. The anonymized raw data, along with the final consolidated and categorized list, were shared with another research team member to ensure that the process did not alter the meaning of any statements. For the second round, the questionnaire with items from the first round was generated [35, 42], with questions divided by categories.

Following the completion of the second round, data were extracted from SurveyMonkey® into a Microsoft Excel® file and then anonymized. Descriptive analysis of the data was performed using SPSS® software (version 28), with frequency tables for each item and descriptive statistics (response rate, agreement and disagreement rates, mean, median, standard deviation, and quartiles) computed [35, 51].
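As an illustration of the per-item descriptive statistics listed above, the following sketch uses only the Python standard library. It is not the SPSS® procedure used in the study; the function name `describe_item` is hypothetical, and the quartile method (`"inclusive"`) is an assumption that may differ slightly from SPSS® defaults.

```python
import statistics


def describe_item(ratings: list[int]) -> dict[str, float]:
    """Summarize one item's 5-point Likert ratings (needs at least two ratings)."""
    n = len(ratings)
    # statistics.quantiles returns the three quartile cut points for n=4.
    q1, _median, q3 = statistics.quantiles(ratings, n=4, method="inclusive")
    return {
        "n": n,
        "agreement_rate": sum(r >= 4 for r in ratings) / n,
        "disagreement_rate": sum(r <= 2 for r in ratings) / n,
        "mean": statistics.mean(ratings),
        "median": statistics.median(ratings),
        "sd": statistics.stdev(ratings),  # sample standard deviation
        "q1": q1,
        "q3": q3,
        "iqr": q3 - q1,
    }
```

Applied to each item after a round, the resulting dictionary gives the agreement and disagreement rates alongside the mean, median, standard deviation, and quartiles in one place.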

Items that had achieved consensus were excluded from the third round to shorten the questionnaire and reduce expert fatigue [35]. Items without consensus were identified and combined to create the next round’s questionnaire. Weighted kappa values (k) were calculated for each item to assess the stability of within-subject responses between quantitative rounds [35, 51, 53]. The k values could range from 0.00 to 1.00, with interpretation details provided in Table 2.
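To make the within-subject stability measure concrete, here is a minimal sketch of Cohen's weighted kappa for one item, comparing each expert's rating in one round with the same expert's rating in the next. The weighting scheme is not stated in the text, so linear disagreement weights are an assumption here, and the function name `weighted_kappa` is hypothetical. In general the statistic can also take negative values when agreement is worse than chance.

```python
def weighted_kappa(round_a: list[int], round_b: list[int],
                   categories: tuple[int, ...] = (1, 2, 3, 4, 5)) -> float:
    """Cohen's weighted kappa with linear weights (assumed scheme).

    round_a[i] and round_b[i] are the same expert's ratings of one item
    in two successive rounds.
    """
    if not round_a or len(round_a) != len(round_b):
        raise ValueError("both rounds need the same, non-zero number of ratings")
    k = len(categories)
    index = {c: i for i, c in enumerate(categories)}
    n = len(round_a)

    # Cross-tabulate paired ratings as counts.
    observed = [[0] * k for _ in range(k)]
    for a, b in zip(round_a, round_b):
        observed[index[a]][index[b]] += 1
    row_totals = [sum(observed[i]) for i in range(k)]
    col_totals = [sum(observed[i][j] for i in range(k)) for j in range(k)]

    weighted_observed = 0.0
    weighted_expected = 0.0
    for i in range(k):
        for j in range(k):
            weight = abs(i - j) / (k - 1)  # linear disagreement weight
            weighted_observed += weight * observed[i][j]
            weighted_expected += weight * row_totals[i] * col_totals[j] / n
    if weighted_expected == 0:
        raise ValueError("kappa is undefined when all ratings fall in one category")
    return 1 - weighted_observed / weighted_expected
```

Because the expected disagreement depends on the marginal totals, a change by a single expert in a small panel can move the value noticeably.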

The qualitative data collected from the open-ended questions at the end of each section of the questionnaire were synthesized through content analysis [52]. These qualitative data were integrated with the quantitative data to inform decisions regarding the retention, rejection, or addition of items, as well as adjustments to item wording and instructions [55].

Data collection and analysis were conducted simultaneously from September 2022 to February 2023. This paper has been prepared following the CREDES checklist in the EQUATOR network [56].

Results

Participants’ characteristics

A total of 29 wound care experts from all regions of Quebec participated in the first round of the e-Delphi exercise. Their characteristics are summarized in Table 3. Most of the panel had over 15 years of nursing experience (n = 21, 72.4%), and the majority came from clinical backgrounds (n = 26, 89.7%). Of the initial respondents, 25 completed both the second and third rounds, resulting in a retention rate of 86.21%. Four experts did not complete the subsequent rounds; because they did not reply to our emails, the reasons for their attrition could not be documented.

Number of rounds

This e-Delphi study required three rounds of consultation, with 75 items achieving consensus by the end of the third round. The quantitative evidence of convergence is reinforced by the decrease in the number of subjective comments. A detailed overview of all steps and response rates is shown in Additional File 2.

Identified items and level of consensus

First round

All collected statements (n = 186) were refined to 80 items through the elimination of duplicates and the consolidation of similar entries. From the first round, six categories emerged, which included initial assessment (30 items), goals of care (3 items), integrated team (2 items), plan of care (26 items), outcomes evaluation (2 items) based on Wounds Canada’s Wound Prevention and Management Cycle [7], and technical aspects of the application (17 items). Thus, a total of 80 items were included in the questionnaire for the subsequent round of consultation.

Second round

Of the 80 items, 66 attained consensus (82.5%), while 14 did not (17.5%). Table 4 presents a selection of items that achieved consensus, listed in descending order by agreement level and mean score. Items without consensus are presented in Additional File 3. A comprehensive list of items, classified by acceptance level and category, is provided as supplementary information in Additional File 4.

Furthermore, in round 2, a total of 22 comments were synthesized. Here are four examples:

“Toe pressure is not available in many settings.” -Participant 18R

“Toe pressure: not all hospitals are equipped to do it.” -Participant 1A

“I would also add examples of directives to put in the therapeutic nursing plan.” -Participant 11K

“For me, the dressing is secondary […]. It’s so easy to get lost with all the kinds out there, I think someone who’s starting out should focus on the healing phase and not focus on the category of dressing.” -Participant 17Q

Third round

In the third round of consultation, of the 14 items carried over, 9 achieved final consensus (64.29%), while 5 did not (35.71%) (see Additional File 3). The table also shows the kappa values indicating within-subject agreement between rounds 2 and 3. Ten items showed fair to moderate agreement (k from 0.21 to 0.60), while the remaining four items exhibited substantial agreement (k from 0.63 to 0.80) [54]. Notably, no qualitative comments were received during this final round.
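The labels used above ("fair", "moderate", "substantial") can be mapped from a kappa value with a small helper. Table 2 is not reproduced in this text, so the cut-offs below are an assumption: they follow the widely used Landis and Koch bands, which are consistent with the ranges reported here. The function name `interpret_kappa` is hypothetical.

```python
def interpret_kappa(kappa: float) -> str:
    """Return a verbal label for a kappa value (assumed Landis and Koch bands)."""
    if not -1.0 <= kappa <= 1.0:
        raise ValueError("kappa must lie between -1 and 1")
    if kappa < 0:
        return "poor"
    # Upper bound of each band, checked in ascending order.
    bands = [
        (0.20, "slight"),
        (0.40, "fair"),
        (0.60, "moderate"),
        (0.80, "substantial"),
        (1.00, "almost perfect"),
    ]
    for upper, label in bands:
        if kappa <= upper:
            return label
    return "almost perfect"
```

Under these assumed bands, the reported range of 0.21 to 0.60 spans "fair" and "moderate", and 0.63 to 0.80 falls within "substantial".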

Discussion

The aim of this study was to establish expert consensus on the initial content for an algorithm to be used in creating a mobile application for wound care, specifically designed for newly graduated nurses. The e-Delphi approach was used. Wound care experts achieved consensus on 75 items for inclusion in the algorithm of the future application. Although four experts left the panel after the first round, the response rate remained high in each round, surpassing the 70% threshold necessary to maintain methodological rigor [35] and outperforming rates seen in other e-Delphi studies of wound care experts [58,59,60].

Several strategies, such as incorporating an animated explanatory video on the initial questionnaire screen, avoiding distribution during the holiday season, and sending personalized email reminders, contributed to the usability of the online questionnaire and mitigated attrition [61]. Additionally, the removal of consensus items from the second round resulted in a more concise third-round questionnaire. While this methodological choice may have contributed to participant retention, it also meant that items already achieving consensus in round 2 had no opportunity to achieve even greater consensus [35]. In addition to the reminders sent, the high response rate can be attributed to the experts’ implicit recognition of the subject’s significance. This level of commitment aligns with the findings of Belton et al. [62], who noted that experts are more likely to continue participating when they perceive the purpose and relevance of the Delphi exercise or when the consensus’s outcome directly affects them. However, this recognition may introduce bias, as individuals with dissenting opinions are more likely to drop out of the study [62].

Composition of the expert panel

While there are no formal, universal guidelines on the required number of experts for a representative panel in a consensus method, the number of experts who completed the e-Delphi exercise is considered satisfactory. The choice of sample size depends on various factors, including the consensus objective, the chosen method, available time, and practical logistics [35, 48, 63, 64]. Wound care e-Delphi studies have shown a wide range of sample sizes, from 14 [65] to 173 participants [60]. Most publications and consensus method guides suggest that a minimum of six participants is necessary for reliable results [35, 41, 42, 64, 66, 67]. While larger sample sizes enhance result reliability, groups exceeding 12 participants may encounter challenges related to attrition and coordination [43, 68]. In their methodological paper on the adequacy of utilizing a small number of experts in a Delphi panel, Akins et al. [69] argue that reliable results and response stability can be achieved with a relatively small expert panel (n = 23) provided they are selected based on strict inclusion criteria. This was particularly relevant in the present study due to the limited number of experts specializing in wound care.

Beyond the numbers, it is important to emphasize that the representativeness of the sample serves a qualitative rather than statistical purpose, focusing on the quality of the expert panel rather than its size [35]. The heterogeneity of the expert panel is a critical element in the rigorous implementation of a consensus method by expanding the range of perspectives, fostering debate, and stimulating the development of innovative solutions [41, 50, 61]. This principle is strongly supported by Niederberger and Spranger [70], who suggest drawing experts from diverse backgrounds to create a broad knowledge base that can yield more robust and creative results. Additionally, the heterogeneity of the expert panel helps mitigate potential conflicts of interest related to publications, clinical environments, or affiliations with universities.

Consensus

The literature on Delphi techniques does not provide a universally agreed-upon definition of consensus [35]. However, the 80% consensus threshold used in this study exceeds the thresholds proposed in some methodological literature, such as 51% [71] and 75% [63]. This level of consensus aligns with other Delphi studies in wound care, typically ranging between 75% [58] and 80% [72, 73]. The results of this e-Delphi study indicate consensus for 75 items based on descriptive statistics and analysis of comments. The decreasing number of comments and the interquartile ranges less than or equal to one demonstrate convergence of opinions. In addition to scientific criteria, practical factors such as available time and participant fatigue were considered. Consequently, the e-Delphi concluded after three rounds, as consensus was achieved for most items. This aligns with the typical practice of Delphi exercises, which often involve two or three rounds [70]. It was unlikely that a fourth round would introduce new items. Consensus aims to reconcile differences rather than eliminate them. Hence, it was decided to address remaining areas of debate and less stable items in the subsequent stage of the application design process, utilizing another method: focus groups with prospective application users.

The substantial number of items that gained consensus in the second round suggests the complexity of considerations for safe wound care delivery. Many of them, including clinical situation assessment and factors affecting wound healing, were considered highly essential. The top 10 consensus items, separated by minimal differences, clustered closely together. Notably, the distribution of agreement was markedly skewed, with experts more likely to strongly agree or agree (score of 4 or 5) than to disagree (score of 1 or 2) or remain neutral (score of 3).

The item that achieved the strongest consensus in this study, namely “signs and symptoms of infection”, aligns with the latest guidelines from the International Wound Infection Institute [74]. This high ranking was anticipated due to ongoing concerns surrounding antimicrobial resistance and the pressing need to improve practices related to the assessment and management of wound infections [74]. In addition to “signs and symptoms of infection”, there was also significant consensus on the appropriate timing for wound cultures. Wound cultures are often unnecessarily requested when wounds lack clinical signs of infection, resulting in approximately 161,000 wound cultures performed annually in Quebec and an average annual expenditure exceeding CAN$15.6 million [75]. This problem could be addressed with the future application, which would recommend performing a culture exclusively to guide treatment decisions after a clinical diagnosis of infection based on signs and symptoms [74, 76]. The experts’ positions on these infection-related issues therefore have the potential to foster safe, evidence-based wound care practice.

Some items, although considered essential, received notably lower average agreement levels. This was particularly evident in items related to dressings, including trade names and government reimbursement codes, which achieved some of the lowest consensus in the second round. Qualitative comments shed light on this phenomenon, suggesting that experts prioritize fundamental wound care principles: the identification and management of causal factors and adequate wound bed preparation should precede the selection of a dressing [77, 78]. Similarly, the assessment of the ankle-brachial index and its indications also achieved some of the lowest consensus scores during the second round. This finding reflects Quebec’s initial wound-care training, which designates the ankle-brachial index as a subject reserved for university-level education [79]. This implies that recently graduated nurses from colleges may lack the necessary knowledge in this aspect of vascular assessment. Nonetheless, it is recommended as an item to be included, and this result fuels the ongoing debate regarding university training as the standard for entry into the profession [80].

It is worth noting the shift in opinions between the second and third rounds, which underscores the value of the iterative process in the e-Delphi technique employed. The wide range of kappa coefficients highlights the impact of the process and feedback on the evolving views of experts. It is essential to remember that kappa measures the level of agreement within individual experts between two rounds, not among the experts on the panel [51]. For example, some experts may have revised their opinions due to decreased confidence and aligned with the majority’s view. While methodologically adequate, the sample size can be considered statistically small, so a single expert changing their stance can significantly affect the kappa coefficient [53, 81]. Scheibe et al. describe these variations as “inevitable” [82, p.272]. However, the average responses after the third round changed by less than one point for each item that progressed from the second round, demonstrating the overall stability of the aggregate rank and the reliability of the agreement for these items [83]. In quantifying the extent of disagreement, the range of the standard deviation of items that achieved consensus in the third round decreased. This suggests a reduction in outliers and a convergence of viewpoints as the rounds progressed [51]. These results support the conclusions of Greatorex and Dexter [83], namely that the results of each item submitted to the Delphi technique must have acceptable mean and standard deviation values to represent a consensus.

Non-consensus

The remaining areas of debate after this study include less frequently encountered wounds such as frostbite and wounds around drains. One of the items that failed to achieve consensus was toe pressure measurement, which had the lowest level of agreement. Despite a considerable increase in the level of agreement (from 56 to 72%), the mean remained almost unchanged, and the standard deviation remained the same, indicating that the experts who had strongly disagreed continued to do so. The qualitative data obtained during this study supported this result, highlighting a major issue related to the availability of the equipment required for this measurement. Nevertheless, toe pressure measurement is recommended when the vessels are incompressible, as is the case for nearly 20% of people with diabetes [84].

Two items achieved persistent disagreement: the inclusion of links to independent studies on different products and international best practice guidelines. The diversity of opinions requires further exploration but could reflect a desire to ensure that the application is efficient and user-friendly. Given the current context of shortages and the increasing reliance on digital technology in the wake of the pandemic, clinical decision-support tools must be effective and developed in a way that does not contribute to work overload [85].

Implications

Four main implications can be drawn from this study. First, as mentioned earlier, the results will inform the development of the algorithm that will be used to create a wound care mobile application. Second, the high levels of consensus demonstrated in this study indicate strong support among experts for the creation of digital wound care tools, which can help bridge the existing gap between wound care theory and practice. Third, presenting items thematically can assist stakeholders in utilizing parts of the results to create tools such as a comprehensive and holistic initial assessment tool. Finally, this study defines the expectations of expert wound care nurses regarding the competencies new nurses should possess upon entering the profession. While the future application can support knowledge, it cannot replace training, which forms the foundation of skill development. Therefore, this study provides a set of items that could be used to enhance initial training and professional development. For future research, it will be important to validate and compare these results with those of scientific and academic nurses. Given that the expert panel for this study primarily consisted of clinical nurses, experts from the fields of research and education were under-represented. Given this composition, it is not possible to establish statistically significant differences between these groups (e.g., academic vs. clinical backgrounds). This would be an interesting avenue to explore with a larger sample and with members from various health disciplines.

Strengths

The primary strength of this study lies in the choice of the e-Delphi technique and its transparent and rigorous implementation to achieve consensus in a field where empirical data are often lacking [35]. Given the challenges posed by the COVID-19 pandemic and the uncertainties surrounding in-person meetings, the use of an online questionnaire remains an unquestionable advantage, which justifies the choice of the e-Delphi technique. Moreover, experts are highly unlikely to travel long distances to participate in discussion groups, as suggested by the nominal group method [86]. Additionally, the asynchronous completion of the questionnaire sets the e-Delphi technique apart, recognizing the considerable challenge of coordinating the already busy schedules of experts.

The adoption of the e-Delphi technique in this study, following the classic Delphi technique used in nursing since the mid-1970s [41], offered several advantages. It was cost-effective, efficient, environmentally friendly, and not constrained by geographical boundaries. Additionally, it allowed for pretesting, had no sampling limits, and enabled asynchronous participation, ensuring data accessibility for the research team at any time and location [35, 87–90]. Considering the variable schedules of expert wound care nurses, these benefits undoubtedly contributed to the high retention rate. The iterative e-Delphi process enhanced the experts’ reflexivity, leading to a wealth of data. Beyond the advantages of standardization, such as improved external validity, this collaborative approach enhances the acceptability of these items [55].

Another strength of this study is the protection of inter-participant anonymity. The e-Delphi technique enabled experts from diverse backgrounds and levels of expertise to express their views without fear of bias or judgment from others. This approach minimizes potential biases associated with dominant group opinions, social influences, and the halo effect [87]. Additionally, each participant’s input held equal weight in the process [35, 63].

Limitations

This study has several limitations. First, all the participating experts were from Quebec, which introduces a geographical bias, restricting the generalizability of the results beyond this region. This choice was deliberate to ensure that the experts had a deep understanding of the specific context in which the future application would be used. It is important to recognize that the findings of Delphi studies are typically specific to the expert panel [35, 40]. Second, the use of purposive sampling introduced an inherent selection bias [45]. Additionally, network recruitment might have led experts to recommend like-minded colleagues. To mitigate this, the experts were recruited with the goal of achieving the broadest possible representation and encompassing a wide range of viewpoints. Another methodological limitation is that this e-Delphi study did not facilitate direct interaction between the experts, which prevented in-depth debate and discussion.

Despite the anonymity, the experts might have been swayed by the opinions of their peers or the results of previous rounds, potentially leading to a conformity bias associated with the bandwagon effect, in which participants withhold their honest opinions [63, 67]. Conversely, an anchoring bias may have led experts to hold to their initial positions rather than consider alternative perspectives [63, 67]. Last, it is important to remember that expert consensus does not represent absolute truth. Instead, it represents a valuable outcome based on the opinions of a selected group of experts and must be interpreted critically and contextually in conjunction with the literature.

Conclusions

Experts were actively engaged and given the opportunity to contribute to bridging the gap between theory and practice. With the e-Delphi technique, consensus was successfully reached on the initial content to be included in the algorithm for a wound care mobile application intended for newly graduated nurses. This marks the beginning of further research and development for this digital tool. The next phase involves validating these results with prospective users, creating a prototype, and conducting laboratory testing.

Data availability

The datasets analysed during the current study are available from the corresponding author on reasonable request.

Abbreviations

- BWAT: Bates-Jensen Wound Assessment Tool
- COVID-19: Coronavirus Disease 2019
- CREDES: Conducting and REporting of DElphi Studies
- e-Delphi: electronic Delphi
- EQUATOR: Enhancing the QUAlity and Transparency Of health Research
- IQR: Interquartile range
- k: kappa value
- SD: Standard deviation

References

Olsson M, Jarbrink K, Divakar U, Bajpai R, Upton Z, Schmidtchen A, Car J. The humanistic and economic burden of chronic wounds: a systematic review. Wound Repair Regen. 2019;27(1):114–25. https://doi.org/10.1111/wrr.12683.

Martinengo L, Olsson M, Bajpai R, Soljak M, Upton Z, Schmidtchen A, Car J, Järbrink K. Prevalence of chronic wounds in the general population: systematic review and meta-analysis of observational studies. Ann Epidemiol. 2019;29:8–15. https://doi.org/10.1016/j.annepidem.2018.10.005.

Nussbaum SR, Carter MJ, Fife CE, Davanzo J, Haught R, Nusgart M, Cartwright D. An economic evaluation of the impact, cost, and medicare policy implications of chronic nonhealing wounds. Value Health. 2018;21(1):27–32. https://doi.org/10.1016/j.jval.2017.07.007.

Sen CK. Human wound and its burden: updated 2020 compendium of estimates. Adv Wound Care. 2021;10(5):281–92. https://doi.org/10.1089/wound.2021.0026.

Nightingale F. Notes on nursing: what it is, and what it is not. New York, NY: D. Appleton; 1865.

Kielo E, Suhonen R, Salminen L, Stolt M. Competence areas for registered nurses and podiatrists in chronic wound care, and their role in wound care practice. J Clin Nurs. 2019;28(21–22):4021–34. https://doi.org/10.1111/jocn.14991.

Orsted HL, Keast DH, Forest-Lalande L, Kuhnke JL, O’Sullivan-Drombolis D, Jin S, Haley J, Evans R. Best practice recommendations for the prevention and management of wounds. In: Foundations of best practice for skin and wound management. Wounds Canada. 2018. https://www.woundscanada.ca/docman/public/health-care-professional/bpr-workshop/165-wc-bpr-prevention-and-management-of-wounds/file. Accessed 17 Nov 2023.

Stacey M. Why don’t wounds heal? Wounds Int. 2016;7(1):16–21. https://woundsinternational.com/journal-articles/why-dont-wounds-heal/.

Gagnon J, Lalonde M, Polomeno V, Beaumier M, Tourigny J. Le transfert des connaissances en soins de plaies chez les infirmières: une revue intégrative des écrits. Rech Soins Infirm. 2020;4(143):45–61. https://doi.org/10.3917/rsi.143.0045.

Lin F, Gillespie BM, Chaboyer W, Li Y, Whitelock K, Morley N, Morrissey S, O’Callaghan F, Marshall AP. Preventing surgical site infections: facilitators and barriers to nurses’ adherence to clinical practice guidelines - a qualitative study. J Clin Nurs. 2019;28(9–10):1643–52. https://doi.org/10.1111/jocn.14766.

Duchscher JB. A process of becoming: the stages of new nursing graduate professional role transition. J Contin Educ Nurs. 2008;39(10):441–50. https://doi.org/10.3928/00220124-20081001-03.

Casey K, Fink R, Krugman M, Propst J. The graduate nurse experience. J Nurs Adm. 2004;34(6):303–11. https://doi.org/10.1097/00005110-200406000-00010.

del Bueno DJ. Why can’t new grads think like nurses? Nurse Educ. 1994;19(4):9–11. https://doi.org/10.1097/00006223-199407000-00008.

Li S, Kenward K. A national survey of nursing education and practice of newly licensed nurses. JONAS Healthc Law Ethics Regul. 2006;8(4):110–5. https://doi.org/10.1097/00128488-200610000-00004.

Gagnon J, Lalonde M, Beaumier M, Polomeno V, Tourigny J. Étude descriptive des facilitateurs et des obstacles dans le transfert des connaissances en soins de plaies chez des infirmiers et des infirmières nouvellement diplômées travaillant au Québec. Rev Francoph Int De Rech Infirm. 2022;8(1):100267. https://doi.org/10.1016/j.refiri.2022.100267.

Kielo E, Salminen L, Stolt M. Graduating student nurses’ and student podiatrists’ wound care competence - an integrative literature review. Nurse Educ Pract. 2018;29:1–7. https://doi.org/10.1016/j.nepr.2017.11.002.

Stephen-Haynes J. Preregistration nurses’ views on the delivery of tissue viability. Br J Nurs. 2013;22(20):S18–23. https://doi.org/10.12968/bjon.2013.22.Sup20.S18.

Monaghan T. A critical analysis of the literature and theoretical perspectives on theory-practice gap amongst newly qualified nurses within the United Kingdom. Nurse Educ Today. 2015;35(8):e1–7. https://doi.org/10.1016/j.nedt.2015.03.006.

Murray M, Sundin D, Cope V. New graduate registered nurses’ knowledge of patient safety and practice: a literature review. J Clin Nurs. 2018;27(1–2):31–47. https://doi.org/10.1111/jocn.13785.

Ordre des infirmières et infirmiers du Québec. Le champ d’exercice et les activités réservées des infirmières et infirmiers. OIIQ. 2016. https://www.oiiq.org/documents/20147/237836/1466_doc.pdf. Accessed 4 Aug 2023.

Patel A, Irwin L, Allam D. Developing and implementing a wound care app to support best practice for community nursing. Wounds UK. 2019;15(1):90–5. https://www.wounds-uk.com/download/wuk_article/7840.

Kulikov PS, Sandhu PK, Van Leuven KA. Can a smartphone app help manage wounds in primary care? J Am Assoc Nurse Pract. 2019;31(2):110–5. https://doi.org/10.1097/JXX.0000000000000100.

Zhang Q, Huang W, Dai W, Tian H, Tang Q, Wang S. Development and clinical uses of a mobile application for smart wound nursing management. Adv Skin Wound Care. 2021;34(6):1–6. https://doi.org/10.1097/01.ASW.0000749492.17742.4e.

Shamloul N, Ghias MH, Khachemoune A. The utility of smartphone applications and technology in wound healing. Int J Low Extrem Wounds. 2019;18(3):228–35. https://doi.org/10.1177/1534734619853916.

Moore Z, Angel D, Bjerregaard J, O’Connor T, McGuiness W, Kröger K, Rasmussen B, Yderstræde KB. eHealth in wound care: from conception to implementation. J Wound Care. 2015;24(Sup5):S1–44. https://doi.org/10.12968/jowc.2015.24.Sup5.S1.

Friesen MR, Hamel C, McLeod RD. A mHealth application for chronic wound care: findings of a user trial. Int J Environ Res Public Health. 2013;10(11):6199–214. https://doi.org/10.3390/ijerph10116199.

Beitz JM, Gerlach MA, Schafer V. Construct validation of an interactive digital algorithm for ostomy care. J Wound Ostomy Cont Nurs. 2014;41(1):49–54. https://doi.org/10.1097/01.WON.0000438016.75487.cc.

Jun YJ, Shin D, Choi WJ, Hwang JH, Kim H, Kim TG, Lee HB, Oh TS, Shin HW, Suh HS, et al. A mobile application for wound assessment and treatment: findings of a user trial. Int J Low Extrem Wounds. 2016;15(4):344–53. https://doi.org/10.1177/1534734616678522.

Barakat-Johnson M, Kita B, Jones A, Burger M, Airey D, Stephenson J, Leong T, Pinkova J, Frank G, Ko N, et al. The viability and acceptability of a virtual wound care command centre in Australia. Int Wound J. 2022;19(7):1769–85. https://doi.org/10.1111/iwj.13782.

Kim PJ, Homsi HA, Sachdeva M, Mufti A, Sibbald RG. Chronic wound telemedicine models before and during the COVID-19 pandemic: a scoping review. Adv Skin Wound Care. 2022;35(2):87–94. https://doi.org/10.1097/01.ASW.0000805140.58799.aa.

Lucas Y, Niri R, Treuillet S, Douzi H, Castaneda B. Wound size imaging: ready for smart assessment and monitoring. Adv Wound Care. 2021;10(11):641–61. https://doi.org/10.1089/wound.2018.0937.

Koepp J, Baron MV, Hernandes Martins PR, Brandenburg C, Kira ATF, Trindade VD, Ley Dominguez LM, Carneiro M, Frozza R, Possuelo LG, et al. The quality of mobile apps used for the identification of pressure ulcers in adults: systematic survey and review of apps in App Stores. JMIR Mhealth Uhealth. 2020;8(6):e14266. https://doi.org/10.2196/14266.

Shi C, Dumville JC, Juwale H, Moran C, Atkinson R. Evidence assessing the development, evaluation and implementation of digital health technologies in wound care: a rapid scoping review. J Tissue Viability. 2022;31(4):567–74. https://doi.org/10.1016/j.jtv.2022.09.006.

O’Cathain A, Croot L, Duncan E, Rousseau N, Sworn K, Turner K, Yardley L, Hoddinott P. Guidance on how to develop complex interventions to improve health and healthcare. BMJ Open. 2019;9(8):e029954. https://doi.org/10.1136/bmjopen-2019-029954.

Keeney S, Hasson F, McKenna H. The Delphi technique in nursing and health research. Oxford, UK: Wiley-Blackwell; 2011.

Dalkey N, Helmer O. An experimental application of the Delphi method to the use of experts. Manage Sci. 1963;9(3):458–67. https://doi.org/10.1287/mnsc.9.3.458.

MacEachren AM, Pike W, Yu C, Brewer I, Gahegan M, Weaver SD, Yarnal B. Building a geocollaboratory: supporting human–environment Regional Observatory (HERO) collaborative science activities. Comput Environ Urban Syst. 2006;30(2):201–25. https://doi.org/10.1016/j.compenvurbsys.2005.10.005.

Donohoe H, Stellefson M, Tennant B. Advantages and limitations of the e-Delphi technique: implications for health education researchers. Am J Health Educ. 2012;43(1):38–46. https://doi.org/10.1080/19325037.2012.10599216.

Jairath N, Weinstein J. The Delphi methodology: a useful administrative approach. Can J Nurs Adm. 1994;7(3):29–42. PMID: 7880844. https://pubmed.ncbi.nlm.nih.gov/7880844/.

Goodman CM. The Delphi technique: a critique. J Adv Nurs. 1987;12(6):729–34. https://doi.org/10.1111/j.1365-2648.1987.tb01376.x.

Powell C. The Delphi technique: myths and realities. J Adv Nurs. 2003;41(4):376–82. https://doi.org/10.1046/j.1365-2648.2003.02537.x.

Jünger S, Payne S. The crossover artist: consensus methods in health research. In: Walshe C, Brearley S, editors. Handbook of theory and methods in applied health research. Cheltenham, UK: Edward Elgar Publishing; 2020. pp. 188–213.

Murphy MK, Black NA, Lamping DL, McKee CM, Sanderson CF, Askham J, Marteau T. Consensus development methods, and their use in clinical guideline development. Health Technol Assess. 1998;2(3):i–iv. https://doi.org/10.3310/hta2030.

Trevelyan EG, Robinson PN. Delphi methodology in health research: how to do it? Eur J Integr Med. 2015;7(4):423–8. https://doi.org/10.1016/j.eujim.2015.07.002.

Polit DF, Beck CT. Nursing research: generating and assessing evidence for nursing practice. 11th ed. Philadelphia, PA: Wolters Kluwer; 2021.

Dillman DA, Smyth JD, Christian LM. Internet, phone, mail, and mixed-mode surveys: the tailored design method. 4th ed. Hoboken, NJ: Wiley; 2014.

SurveyMonkey. Legal terms and policies. 2023. https://www.surveymonkey.com/mp/legal/. Accessed 15 Jun 2023.

Hasson F, Keeney S, McKenna H. Research guidelines for the Delphi survey technique. J Adv Nurs. 2000;32(4):1008–15. https://doi.org/10.1046/j.1365-2648.2000.t01-1-01567.x.

Clibbens N, Walters S, Baird W. Delphi research: issues raised by a pilot study. Nurse Res. 2012;19(2):37–44. https://doi.org/10.7748/cnp.v1.i7.pg21.

Couper MR. The Delphi technique: characteristics and sequence model. Adv Nurs Sci. 1984;7(1):72–7. https://doi.org/10.1097/00012272-198410000-00008.

Holey EA, Feeley JL, Dixon J, Whittaker VJ. An exploration of the use of simple statistics to measure consensus and stability in Delphi studies. BMC Med Res Methodol. 2007;7(1):52. https://doi.org/10.1186/1471-2288-7-52.

Elo S, Kyngas H. The qualitative content analysis process. J Adv Nurs. 2008;62(1):107–15. https://doi.org/10.1111/j.1365-2648.2007.04569.

Chaffin WW, Talley WK. Individual stability in Delphi studies. Technol Forecast Soc Change. 1980;16(1):67–73. https://doi.org/10.1016/0040-1625(80)90074-8.

Anthony DM. Understanding advanced statistics: a guide for nurses and health care researchers. New York, NY: Churchill Livingstone; 1999.

Dionne CE, Tremblay-Boudreault V. L’approche Delphi. In: Corbière M, Larivière N, editors. Méthodes qualitatives, quantitatives et mixtes. 2nd ed. Québec: Presses de l’Université du Québec; 2020. pp. 173–91.

Jünger S, Payne S, Brine J, Radbruch L, Brearley S. Guidance on conducting and REporting DElphi studies (CREDES) in palliative care: recommendations based on a methodological systematic review. Palliat Med. 2017;31(8):684–706. https://doi.org/10.1177/0269216317690685.

National Institutes of Health. Sex & gender. Office of Research on Women’s Health. n.d. https://orwh.od.nih.gov/sex-gender#ref-2-foot. Accessed 19 Jun 2023.

Eskes AM, Maaskant JM, Holloway S, van Dijk N, Alves P, Legemate DA, Ubbink DT, Vermeulen H. Competencies of specialised wound care nurses: a European Delphi study. Int Wound J. 2014;11(6):665–74. https://doi.org/10.1111/iwj.12027.

Keast DH, Bain K, Hoffmann C, Swanson T, Dowsett C, Lázaro-Martínez JL, Karlsmark T, Münter K-C, Ruettimann Liberato de Moura M, Brennan MR, et al. Managing the gap to promote healing in chronic wounds: an international consensus. Wounds Int. 2020;11(3):58–63. https://www.woundsinternational.com/journals/issue/624/article-details/managing-gap-promote-healing-chronic-wounds-international-consensus.

Serena T, Bates-Jensen B, Carter MJ, Cordrey R, Driver V, Fife CE, Haser PB, Krasner D, Nusgart M, Smith APS, et al. Consensus principles for wound care research obtained using a Delphi process. Wound Repair Regen. 2012;20(3):284–93. https://doi.org/10.1111/j.1524-475X.2012.00790.x.

Hall DA, Smith H, Heffernan E, Fackrell K. Recruiting and retaining participants in e-Delphi surveys for core outcome set development: evaluating the COMiT’ID study. PLoS ONE. 2018;13(7):e0201378. https://doi.org/10.1371/journal.pone.0201378.

Belton I, MacDonald A, Wright G, Hamlin I. Improving the practical application of the Delphi method in group-based judgment: a six-step prescription for a well-founded and defensible process. Technol Forecast Soc Change. 2019;147:72–82. https://doi.org/10.1016/j.techfore.2019.07.002.

Keeney S, Hasson F, McKenna H. Consulting the oracle: ten lessons from using the Delphi technique in nursing research. J Adv Nurs. 2006;53(2):205–12. https://doi.org/10.1111/j.1365-2648.2006.03716.x.

Fink A, Kosecoff J, Chassin M, Brook RH. Consensus methods: characteristics and guidelines for use. Am J Public Health. 1984;74(9):979–83. https://doi.org/10.2105/AJPH.74.9.979.

Haesler E, Swanson T, Ousey K, Carville K. Clinical indicators of wound infection and biofilm: reaching international consensus. J Wound Care. 2019;28(Sup3b):s4–12. https://doi.org/10.12968/jowc.2019.28.Sup3b.S4.

Jones J, Hunter D. Consensus methods for medical and health services research. BMJ. 1995;311(7001):376–81. https://doi.org/10.1136/bmj.311.7001.376.

Winkler J, Moser R. Biases in future-oriented Delphi studies: a cognitive perspective. Technol Forecast Soc Change. 2016;105:63–76. https://doi.org/10.1016/j.techfore.2016.01.021.

Kea B, Sun BC-A. Consensus development for healthcare professionals. Intern Emerg Med. 2015;10(3):373–83. https://doi.org/10.1007/s11739-014-1156-6.

Akins RB, Tolson H, Cole BR. Stability of response characteristics of a Delphi panel: application of bootstrap data expansion. BMC Med Res Methodol. 2005;5:37. https://doi.org/10.1186/1471-2288-5-37.

Niederberger M, Spranger J. Delphi technique in health sciences: a map. Front Public Health. 2020;8(457):1–10. https://doi.org/10.3389/fpubh.2020.00457.

McKenna HP. The Delphi technique: a worthwhile research approach for nursing? J Adv Nurs. 1994;19(6):1221–5. https://doi.org/10.1111/j.1365-2648.1994.tb01207.x.

LeBlanc K, Baranoski S, Christensen D, Langemo D, Edwards K, Holloway S, Gloeckner M, Williams A, Campbell K, Alam T, et al. The art of dressing selection: a consensus statement on skin tears and best practice. Adv Skin Wound Care. 2016;29(1):32–46. https://doi.org/10.1097/01.ASW.0000475308.06130.df.

Pope E, Lara-Corrales I, Mellerio J, Martinez A, Schultz G, Burrell RP, Goodman L, Coutts P, Wagner J, Allen U, et al. A consensus approach to wound care in Epidermolysis Bullosa. J Am Acad Dermatol. 2012;67(5):904–17. https://doi.org/10.1016/j.jaad.2012.01.016.

International Wound Infection Institute. Wound infection in clinical practice. 2022. https://woundinfection-institute.com/wp-content/uploads/IWII-CD-2022-web-1.pdf. Accessed 8 Feb 2023.

Institut national d’excellence en santé et en services sociaux. La culture de plaie: pertinence et indications. INESSS. 2020. https://www.inesss.qc.ca/fileadmin/doc/INESSS/Rapports/Biologie_medicale/INESSS_Avis_Culture_plaie.pdf. Accessed 8 Feb 2023.

Ward D, Holloway S. Validity and reliability of semi-quantitative wound swabs. Br J Community Nurs. 2019;24(Sup12):S6–11. https://doi.org/10.12968/bjcn.2019.24.Sup12.S6.

Sibbald RG, Elliott JA, Persaud-Jaimangal R, Goodman L, Armstrong DG, Harley C, Coelho S, Xi N, Evans R, Mayer DO, et al. Wound bed preparation 2021. Adv Skin Wound Care. 2021;34(4):183–95. https://doi.org/10.1097/01.ASW.0000733724.87630.d6.

Atkin L, Bućko Z, Conde Montero E, Cutting K, Moffatt C, Probst A, Romanelli M, Schultz GS, Tettelbach W. Implementing TIMERS: the race against hard-to-heal wounds. J Wound Care. 2019;28(Sup3a):S1–50. https://doi.org/10.12968/jowc.2019.28.Sup3a.S1.

Comité de la formation des infirmières. Avis - Technologies de l’information et de la communication (TIC) dans la formation infirmière initiale. OIIQ. 2017. https://www.oiiq.org/documents/20147/1306159/avis-tic-ca-20170615-16.pdf/b97aa514-d8d9-ae62-046e-852ce299796d. Accessed.

Ordre des infirmières et infirmiers du Québec. La petite histoire de 1917 à 1991. Montréal, QC: OIIQ; 2008.

Lange T, Kopkow C, Lützner J, Günther K-P, Gravius S, Scharf H-P, Stöve J, Wagner R, Schmitt J. Comparison of different rating scales for the use in Delphi studies: different scales lead to different consensus and show different test-retest reliability. BMC Med Res Methodol. 2020;20(1):28. https://doi.org/10.1186/s12874-020-0912-8.

Scheibe M, Skutsch M, Schofer J. Experiments in Delphi methodology. In: Linstone HA, Turoff M, editors. The Delphi method: techniques and applications. Boston, MA: Addison-Wesley; 1975. pp. 262–87.

Greatorex J, Dexter T. An accessible analytical approach for investigating what happens between the rounds of a Delphi study. J Adv Nurs. 2000;32(4):1016–24. https://doi.org/10.1046/j.1365-2648.2000.t01-1-01569.x.

Hembling BP, Hubler KC, Richard PM, O’Keefe WA, Husfloen C, Wicks R, Dressor H. The limitations of ankle brachial index when used alone for the detection/screening of peripheral arterial disease in a population with an increased prevalence of diabetes. J Vasc Ultrasound. 2007;31(3):149–51. https://doi.org/10.1177/154431670703100304.

Chance EA, Horne R. Relationship between nursing, health technology and work overload: a praxeological approach. J Nurs Healthc Manage. 2021;4(1):1–16. https://www.scholarena.com/article/Relationship-Between-Nursing.pdf.

Allen J, Dyas J, Jones M. Building consensus in health care: a guide to using the nominal group technique. Br J Community Nurs. 2004;9(3):110–4. https://doi.org/10.12968/bjcn.2004.9.3.12432.

Barrett D, Heale R. What are Delphi studies? Evid Based Nurs. 2020;23(3):68–9. https://doi.org/10.1136/ebnurs-2020-103303.

Keeney S, Hasson F, McKenna HP. A critical review of the Delphi technique as a research methodology for nursing. Int J Nurs Stud. 2001;38(2):195–200. https://doi.org/10.1016/S0020-7489(00)00044-4.

Linstone HA, Turoff M. The Delphi method: techniques and applications. Boston, MA: Addison-Wesley; 1975.

McPherson S, Reese C, Wendler MC. Methodology update: Delphi studies. Nurs Res. 2018;67(5):404–10. https://doi.org/10.1097/NNR.0000000000000297.

Acknowledgements

We would like to express our sincerest gratitude to the expert wound care nurses who participated in this study and gave their valuable time to complete the e-Delphi rounds. We would also like to thank the Regroupement Québécois en Soins de Plaies for their support in recruiting experts. Finally, we would like to thank Julie Meloche, statistician, for her contribution to the production of the statistics and interpretation of the results.

Funding

This work is part of a PhD thesis supported by the Canadian Institutes of Health Research Doctoral Research Award (number: 202111FBD-476880-67262), the Fonds de Recherche du Québec Santé (number: 2022-2023-BF2-319284), and the University of Ottawa. The funders had no role in the study design, the collection, analysis, and interpretation of data, the writing of the manuscript, or the decision to publish.

Author information

Contributions

JG: Conceptualization, Methodology, Formal analysis, Investigation, Writing - Original Draft, Writing - Review & Editing, Visualization, Funding acquisition. JC: Conceptualization, Validation, Writing - Review & Editing. SP: Conceptualization, Validation, Writing - Review & Editing. ML: Conceptualization, Methodology, Validation, Writing - Review & Editing, Supervision. All authors read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

This research received approval from the University of Ottawa’s Research Ethics Board (reference: H-07-22-8163, date: 07-09-2022). It was performed in accordance with relevant guidelines and regulations. Study participation was voluntary, and informed consent was obtained from all participants. They were assured that the collected data would remain strictly confidential and that they had the option to withdraw from participation at any time. All study information and contact details for the research team were provided on the first page of the online questionnaire.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Gagnon, J., Chartrand, J., Probst, S. et al. Content of a wound care mobile application for newly graduated nurses: an e-Delphi study. BMC Nurs 23, 331 (2024). https://doi.org/10.1186/s12912-024-02003-x

DOI: https://doi.org/10.1186/s12912-024-02003-x