Abstract

Electrocardiogram (ECG) signals are very important for heart disease diagnosis. In this paper, a novel early prediction method based on Nested Long Short-Term Memory (Nested LSTM) is developed for sudden cardiac death risk detection. First, wavelet denoising and normalization techniques are utilized for reliable reconstruction of ECG signals from extreme noise conditions. Then, a nested LSTM structure is adopted, which can guide the memory forgetting and memory selection of ECG signals, so as to improve the data processing ability and prediction accuracy of ECG signals. To demonstrate the effectiveness of the proposed method, four different models with different signal prediction techniques are used for comparison. The extensive experimental results show that this method can realize an accurate prediction of the cardiac beat’s starting point and track the trend of ECG signals effectively. This study holds significant value for timely intervention for patients at risk of sudden cardiac death.

Introduction

Sudden cardiac death (SCD) is defined as death that usually occurs within an hour of the onset of symptoms and arises from an underlying cardiac disease. It is a complication of a number of cardiovascular diseases and is often unexpected [1, 2]. Clinical manifestations may include chest pain, shortness of breath, fatigue, weakness, persistent angina pectoris, and arrhythmia [3]. At present, there are few effective methods to predict the occurrence of SCD in individuals without prior cardiac issues. As a simple, easy-to-use, and reliable analysis tool, the electrocardiogram (ECG) provides abundant information for the diagnosis and treatment of cardiovascular disease. Based on ECG signals, abnormal and significant fluctuations can be detected in patients before the onset of SCD [4, 5]. For example, ventricular fibrillation (VF) is an important manifestation of SCD, and the trend of VF can be obtained by monitoring ECG signals [6, 7]. However, the ECG is a weak signal with strong nonlinearity, non-stationarity, and randomness, all of which affect the final diagnostic results. Therefore, accurate prediction of ECG signals plays a pivotal role in the early detection and prevention of SCD [8, 9].

At present, traditional machine learning models, such as classification and regression models, are commonly applied to ECG prediction; these models make forecasts based on historical data [10,11,12,13]. For example, Liu et al. [14] developed a cardiac arrest classification model utilizing wavelet transform and the AdaBoost algorithm. This model distinguishes cardiac arrest from ECG signals and predicts its occurrence with an accuracy of 97.56% within 5 minutes before the event. Ebrahimzadeh et al. [15] employed a Multi-Layer Perceptron (MLP) to classify abnormal ECG signals, with the aim of predicting SCD. Their model demonstrates increasing prediction accuracy as it approaches the critical point of sudden death. Sengupta et al. [16] utilized Random Forest, Least Squares Discriminant, and Support Vector Machine in the classification of 12-lead ECG signals to predict abnormal myocardial relaxation and assess the likelihood of SCD. Hou et al. [17] presented a deep learning-based algorithm that combined an LSTM-based auto-encoder (LSTM-AE) network with a support vector machine (SVM) for ECG arrhythmia classification. This method exhibits high accuracy, sensitivity, and specificity in classifying various heartbeat types. Kaya et al. [18] proposed an approach that combines angle transform (AT) and LSTM for the automatic identification of congestive heart failure (CHF) and arrhythmia (ARR) using ECG signals. However, most of these methods perform classification by contrasting patients’ ECG signals with those of healthy individuals, and therefore struggle to address time-dependent dynamic system modeling problems [19, 20].

With the rapid development of artificial intelligence, neural networks have been broadly applied in signal processing and have achieved excellent performance. Jin et al. [21] proposed a regression model based on the Regularized Extreme Learning Machine (RELM) to predict ECG signals by analyzing the correlation between ECG and human gait. Zheng et al. [22] employed a traditional Echo State Network (ESN), a type of Recurrent Neural Network, to forecast ECG signals, yielding better results than conventional regression machine learning techniques. Wang et al. [23] devised a cardiac beat prediction algorithm for SCD based on ECG, establishing a time series prediction model for dynamic human ECG signals to anticipate ECG signal patterns. Sakib et al. [24] employed a Reservoir Computing (RC)-based ESN method for magnetocardiography (MCG) monitoring, which helped to detect cardiac activity.

In recent years, several powerful sequence models have been proposed to assist with ECG analysis, owing to their ability to explore time-frequency features [25,26,27,28,29,30]. As one of the most commonly used sequence models, the Long Short-Term Memory (LSTM) network has proven effective at tracking information over extended periods [31, 32]. For instance, Liu et al. [33] employed LSTM to predict influenza trends and achieved better results than linear models. Balci et al. [34] presented a hybrid Attention-based LSTM-XGBoost algorithm for detecting atrial fibrillation (AF) in long-recorded ECG data. Combined with preprocessing techniques, this method achieves high accuracy, offering a reliable support system for clinicians and facilitating data tracking in long ECG record reviews.

However, traditional LSTM networks exhibit weak robustness and low prediction accuracy in complex tasks, as the memory cells store memories unrelated to the current time step [35, 36]. To address this issue, we propose an integrated approach combining data preprocessing and prediction model construction to predict ECG signal trends in advance. First, data preprocessing is performed on the original ECG signals, including wavelet denoising, normalization, and phase space reconstruction. Then, the Nested LSTM model is utilized for signal prediction. At this step, an inner LSTM unit is adopted, which guides the memory forgetting and memory selection of ECG signals, so as to improve the data processing ability and prediction accuracy for ECG signals.

This paper is organized as follows. The Introduction section briefly reviews the existing ECG signal prediction methods. The Theory and calculation section presents the proposed method and its algorithms in detail. The Experiment and results section provides the implementation details of the experiments and verifies the effectiveness and superiority of the proposed method through analysis of the results. The Conclusion section summarizes this paper.

Theory and calculation

Model construction

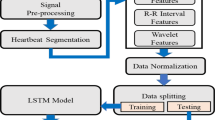

The model construction proposed in this paper is presented in Fig. 1, including the data preprocessing strategy, the prediction model Nested LSTM and model evaluation.

Preprocessing methods

Considering the uncertainty and complexity of ECG signals, it is difficult to capture the trend of the data directly. Therefore, we employ a data preprocessing strategy to ensure data quality and remove unwanted noise from the ECG signals. The preprocessing methods include signal denoising, normalization, and phase space reconstruction.

Signal denoising

Due to limb movement, breathing, and electromagnetic interference from the surrounding environment, ECG signals are accompanied by a lot of noise, including baseline drift, power frequency interference, electromyographic interference, and motion artifacts, all of which can affect the prediction results. The frequency ranges and signal energy ranges of the four types of noise are as follows: (1) Baseline drift noise: the noise frequency is less than 5 Hz; the energy is concentrated between 0.01 Hz and 1 Hz. (2) Power frequency interference noise: the noise frequency is 50 Hz or 60 Hz; the energy is concentrated in frequency components near the power frequency. (3) Myoelectric interference noise: the noise frequency range is 5 Hz to 2000 Hz; the energy is concentrated between 10 Hz and 500 Hz, depending on the frequency of muscle activity. (4) Motion artifact noise: the noise frequency range is 3 Hz to 14 Hz, depending on the frequency and amplitude of motion; the energy is concentrated between 5 Hz and 10 Hz, reflecting the frequency components caused by the subject’s movement.

Therefore, it is necessary to denoise the ECG signals. The wavelet denoising method supports multi-resolution analysis and can characterize the local features of signals in both the time and frequency domains, which makes it well suited to analyzing non-stationary signals such as ECG signals. For this reason, the wavelet denoising method is used to denoise the ECG signals in this paper. Its process is shown in Fig. 2. The specific steps are as follows:

- Input the original ECG signals containing noise;

- Decompose the original ECG signals into 7 layers through the wavelet transform, with DB6 selected as the wavelet function;

- Extract the wavelet coefficients of each layer, including approximation coefficients and detail coefficients;

- Obtain the threshold of each layer by using unbiased likelihood estimation; that is, give a threshold L for each layer, calculate its likelihood estimate, and then minimize the likelihood of L to obtain the threshold of each layer. The details of determining the threshold are as follows:

  - Step 1: After squaring the wavelet coefficients of each resolution level, arrange them in ascending order to obtain the vector \(P=[P_1,P_2,...,P_N]\), where N represents the number of wavelet coefficients.

  - Step 2: Calculate the risk vector R based on the vector P, and take the smallest \(R_i\) in the risk vector as the risk value. The formulas are as follows:

    $$\begin{aligned} R=[R_1,R_2,...,R_N] \end{aligned}$$ (1)

    $$\begin{aligned} R_i=\frac{N-2i+(N-i)P_i+\sum _{k=1}^{i}P_k}{N} \end{aligned}$$ (2)

  - Step 3: The threshold value L is calculated from the squared wavelet coefficient \(P_i\) corresponding to the risk value \(R_i\):

    $$\begin{aligned} L=\frac{\textrm{median}\left( \left| W(j-1,k) \right| \right) }{0.6745}\sqrt{P_i} \end{aligned}$$ (3)

- Denoise the decomposed 7-layer signals according to the selected threshold; if \({X_i}\) is the ECG signal of the i-th layer after denoising, and \({d_i}\) is the ECG signal of the i-th layer before denoising, each layer is denoised as follows:

  $$\begin{aligned} {X_i} = \left\{ \begin{array}{ll} {d_i},&{} \left| {d_i} \right| \ge {L_i} \\ 0,&{} \left| {d_i} \right| < {L_i} \end{array}\right. \quad i = 1,2, \cdots ,7 \end{aligned}$$ (4)

- Reconstruct the 7-layer signals through the inverse wavelet transform; the reconstructed ECG signal is:

  $$\begin{aligned} X = {X_1} + {X_2} + \cdots + {X_{7}} \end{aligned}$$ (5)

  The reconstruction uses the inverse wavelet transform. The steps are as follows:

  - Step 1: For the highest level of detail coefficients and the lowest-frequency approximation coefficients, use the inverse high-pass filter and inverse low-pass filter of the wavelet basis function for upsampling and convolution to obtain the reconstructed signal.

  - Step 2: For each remaining level of detail and approximation coefficients, use the inverse high-pass filter and inverse low-pass filter of the wavelet basis function for upsampling and convolution to obtain the reconstructed signal.

  - Step 3: Repeat steps 1 and 2 until all levels of reconstruction are completed.

- Output the denoised ECG signals.
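The threshold selection and thresholding steps above can be sketched in a few lines of Python. This is only an illustrative sketch, not the authors' implementation: the function names `sure_threshold` and `hard_threshold` are hypothetical, the noise scale is estimated from the same coefficient vector that is being thresholded (an assumption, since the text does not spell out which coefficients \(W(j-1,k)\) refers to), and the comparison uses the absolute value of each coefficient.

```python
import math
import statistics


def sure_threshold(coeffs):
    """Select a threshold by minimizing the risk R_i (Steps 1-3 of the text)."""
    n = len(coeffs)
    # Step 1: squared coefficients sorted in ascending order.
    p = sorted(c * c for c in coeffs)
    # Step 2: evaluate R_i for i = 1..N, keeping a running sum of P_1..P_i.
    best_risk, best_p, running = math.inf, p[0], 0.0
    for i, p_i in enumerate(p, start=1):
        running += p_i
        risk = (n - 2 * i + (n - i) * p_i + running) / n
        if risk < best_risk:
            best_risk, best_p = risk, p_i
    # Step 3: scale sqrt(P_i) by median(|w|) / 0.6745.
    # Assumption: the median is taken over the same coefficient vector.
    sigma = statistics.median(abs(c) for c in coeffs) / 0.6745
    return sigma * math.sqrt(best_p)


def hard_threshold(coeffs, threshold):
    """Keep coefficients whose magnitude reaches the threshold, zero the rest."""
    return [c if abs(c) >= threshold else 0.0 for c in coeffs]


if __name__ == "__main__":
    detail = [0.1, -0.2, 0.05, 3.0, -0.15, 0.1, -2.5, 0.08]  # made-up values
    thr = sure_threshold(detail)
    print(thr, hard_threshold(detail, thr))
```

In a full pipeline this would be applied to the detail coefficients of each of the 7 decomposition levels before the inverse transform.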

Normalization

To obtain better fitting results and prevent the training from diverging, the training set is standardized as follows:

$$\begin{aligned} X^{*} = \frac{X - \overline{X}}{\sigma } \end{aligned}$$ (6)

Where \(X^{*}\) denotes the standardized training set, X denotes the training set, \(\overline{X}\) denotes the average value of the training set, and \(\sigma\) denotes the standard deviation of the training set.
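As a minimal sketch of this standardization step, the following Python function subtracts the mean of the training set and divides by its standard deviation. The name `standardize` is hypothetical, and the use of the population standard deviation (dividing by the number of samples) is an assumption, since the text does not say which variant is used.

```python
import math


def standardize(train):
    """Return the standardized samples plus the mean and standard deviation used."""
    mean = sum(train) / len(train)
    # Assumption: population standard deviation (divide by N, not N - 1).
    std = math.sqrt(sum((x - mean) ** 2 for x in train) / len(train))
    return [(x - mean) / std for x in train], mean, std


if __name__ == "__main__":
    z, mean, std = standardize([1.0, 2.0, 3.0, 4.0])  # made-up values
    print(z, mean, std)
```

Returning the mean and standard deviation makes it possible to apply the same transformation to later data and to map predictions back to the original scale.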

Phase space reconstruction

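In model training, we aim to predict the ECG signal at the next time step based on historical data, so prior data must be used as the training data. In this paper, the final training set is constructed by phase space reconstruction. If the reconstruction dimension is m, the time delay is \(\tau\), and the ECG signals of the normalized training set are \(X = \left[ {x_1}, {x_2}, \cdots , {x_{n + 1 + (m - 1)\tau }} \right]\), then the reconstructed training set, consisting of the input matrix \(X_{in}\) and the output vector \(Y_{out}\), is:

$$\begin{aligned} X_{in} = \left[ \begin{array}{cccc} {x_1} &{} {x_{1 + \tau }} &{} \cdots &{} {x_{1 + (m - 1)\tau }} \\ {x_2} &{} {x_{2 + \tau }} &{} \cdots &{} {x_{2 + (m - 1)\tau }} \\ \vdots &{} \vdots &{} &{} \vdots \\ {x_n} &{} {x_{n + \tau }} &{} \cdots &{} {x_{n + (m - 1)\tau }} \end{array}\right] \end{aligned}$$ (7)

$$\begin{aligned} Y_{out} = \left[ {x_{2 + (m - 1)\tau }}, {x_{3 + (m - 1)\tau }}, \cdots , {x_{n + 1 + (m - 1)\tau }} \right] ^{T} \end{aligned}$$ (8)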

The ECG signals fed to the input layer of the prediction model are reconstructed according to Equations (7) and (8). Starting from the first sampling point of the selected ECG signal, the 1st to 99th sampling points form the first input sample and the 100th sampling point is the corresponding output sample, and so on. The input sample of the \(i\)-th training pair consists of sampling points \(i\) to \(i+98\), and the output sample is sampling point \(i+99\), where \(i = 1, 2, \ldots , 5000-99\). A total of 4901 input-output sample pairs are generated, which form the training data set of the model.
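The construction rule can be illustrated with a short sliding-window sketch. The function name `phase_space_pairs` is hypothetical; the defaults `m=99` and `tau=1` are inferred from the description above (99 consecutive input points followed by one output point) rather than stated explicitly as parameter values in the text.

```python
def phase_space_pairs(x, m=99, tau=1):
    """Build (input, output) pairs from a 1-D signal by delay embedding.

    Each input holds m samples spaced tau apart; the output is the sample
    that immediately follows the last input sample.
    """
    n = len(x) - 1 - (m - 1) * tau  # number of pairs that fit in the signal
    inputs = [[x[i + k * tau] for k in range(m)] for i in range(n)]
    targets = [x[i + 1 + (m - 1) * tau] for i in range(n)]
    return inputs, targets


if __name__ == "__main__":
    signal = list(range(5000))  # stand-in for 5000 normalized ECG samples
    inputs, targets = phase_space_pairs(signal)
    print(len(inputs), len(inputs[0]), targets[0], targets[-1])
```

With a 5000-sample signal this yields 4901 pairs, matching the count given in the text.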

Nested LSTM model

Information exhibits time correlation, and historical information can hold valuable clues for predicting future events. Traditional machine learning methods only have short-term memory, which limits their predictions when little information is available. LSTM introduces a controllable self-loop that creates a path along which gradients can flow for a long time, which makes it especially suitable for time series tasks and for tracking information over longer periods. As a result, extended LSTM models have received increasing attention.

Nested LSTM shares the same input layer, hidden layer, and output layer as LSTM, and its unit structure is illustrated in Fig. 3. In this figure, a new inner LSTM structure replaces the memory cell of the traditional LSTM. Access to the inner memory is gated in the same way as in the outer unit. Therefore, the Nested LSTM can access the inner memory more selectively, which gives the Nested LSTM prediction model stronger processing capabilities for ECG signals and higher prediction accuracy. This allows the Nested LSTM to capture and utilize more intricate temporal patterns in the data, making it well suited to tasks that require detailed information processing and precise prediction of electrocardiogram signals.

Nested LSTM is divided into an inner LSTM and an outer LSTM. The gating system of both the inner and outer LSTM is consistent with that of the traditional LSTM and has four components: forget gate, input gate, candidate memory cell, and output gate. The calculation equations are as follows.

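Forget gate:

$$\begin{aligned} {f_t} = \sigma \left( {W_{fx}}{x_t} + {W_{fh}}{h_{t - 1}} + {b_f} \right) \end{aligned}$$

Input gate:

$$\begin{aligned} {i_t} = \sigma \left( {W_{ix}}{x_t} + {W_{ih}}{h_{t - 1}} + {b_i} \right) \end{aligned}$$

Candidate memory cell:

$$\begin{aligned} {\widetilde{c}_t} = \tanh \left( {W_{cx}}{x_t} + {W_{ch}}{h_{t - 1}} + {b_c} \right) \end{aligned}$$

Memory cell: The input and hidden states of the inner LSTM are (with \(\odot\) denoting element-wise multiplication):

$$\begin{aligned} \overline{x}_t = {i_t} \odot {\widetilde{c}_t}, \quad \overline{h}_{t - 1} = {f_t} \odot {c_{t - 1}} \end{aligned}$$

The memory cell update method of the outer LSTM is:

$$\begin{aligned} {c_t} = \overline{h}_t \end{aligned}$$

Output gate:

$$\begin{aligned} {o_t} = \sigma \left( {W_{ox}}{x_t} + {W_{oh}}{h_{t - 1}} + {b_o} \right) \end{aligned}$$

A new round of hidden state:

$$\begin{aligned} {h_t} = {o_t} \odot \tanh \left( {c_t} \right) \end{aligned}$$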

Where \(\sigma\) denotes the sigmoid function. In the outer LSTM, \({W_{fx}}\) and \({W_{fh}}\) denote the weight matrices of the forget gate; \({W_{ix}}\) and \({W_{ih}}\) denote the weight matrices of the input gate; \({W_{cx}}\) and \({W_{ch}}\) denote the weight matrices of the candidate memory cell; \({W_{ox}}\) and \({W_{oh}}\) denote the weight matrices of the output gate; \({b_f}\), \({b_i}\), \({b_c}\) and \({b_o}\) denote the biases of the forget gate, input gate, candidate memory cell, and output gate, respectively. In the inner LSTM, \({\overline{W}_{fx}}\) and \({\overline{W}_{fh}}\) denote the weight matrices of the forget gate; \({\overline{W}_{ix}}\) and \({\overline{W}_{ih}}\) denote the weight matrices of the input gate; \({\overline{W}_{cx}}\) and \({\overline{W}_{ch}}\) denote the weight matrices of the candidate memory cell; \({\overline{W}_{ox}}\) and \({\overline{W}_{oh}}\) denote the weight matrices of the output gate; \(\overline{b}_f\), \(\overline{b}_i\), \(\overline{b}_c\) and \(\overline{b}_o\) denote the biases of the forget gate, input gate, candidate memory cell, and output gate, respectively; and \(\overline{x}_t\), \(\overline{h}_{t - 1}\) and \(\overline{c}_{t - 1}\) denote the current input, the hidden state of the previous round, and the memory cell of the previous round, respectively.

The output of the output layer is:

$$\begin{aligned} {y_t} = {W_{yh}}{h_t} \end{aligned}$$

Where \({W_{yh}}\) denotes the weight matrix of the output layer.

The Nested LSTM model is a deep neural network that incorporates both feedforward and feedback mechanisms. The feedforward mechanism completes the forward calculation of the Nested LSTM using equations (6)-(20). In contrast, the feedback mechanism employs the error backpropagation algorithm to train and update various network parameters.
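To make the forward calculation concrete, the sketch below runs a single Nested LSTM time step with scalar states. It is an illustration under stated assumptions, not the authors' code: it follows the commonly described Nested LSTM formulation (the inner input is the gated candidate, the inner hidden state is the gated previous outer memory, and the outer memory is the new inner hidden state), it uses `tanh` for the candidate and output activations, and it replaces weight matrices with scalar weights stored in plain dictionaries. The names `PARAM_NAMES`, `gates`, and `nested_lstm_step` are hypothetical.

```python
import math

# Scalar stand-ins for the weight matrices and biases of one LSTM level.
PARAM_NAMES = ("W_fx", "W_fh", "b_f", "W_ix", "W_ih", "b_i",
               "W_cx", "W_ch", "b_c", "W_ox", "W_oh", "b_o")


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def gates(p, x, h_prev):
    """Forget gate, input gate, candidate and output gate of one LSTM level."""
    f = sigmoid(p["W_fx"] * x + p["W_fh"] * h_prev + p["b_f"])
    i = sigmoid(p["W_ix"] * x + p["W_ih"] * h_prev + p["b_i"])
    g = math.tanh(p["W_cx"] * x + p["W_ch"] * h_prev + p["b_c"])
    o = sigmoid(p["W_ox"] * x + p["W_oh"] * h_prev + p["b_o"])
    return f, i, g, o


def nested_lstm_step(outer, inner, x_t, h_prev, c_prev, c_inner_prev):
    """One time step; returns the new outer hidden state and both memories."""
    f, i, g, o = gates(outer, x_t, h_prev)
    # The inner LSTM replaces the usual update c_t = f * c_prev + i * g.
    x_inner = i * g            # inner input
    h_inner_prev = f * c_prev  # inner hidden state of the previous round
    f_in, i_in, g_in, o_in = gates(inner, x_inner, h_inner_prev)
    c_inner = f_in * c_inner_prev + i_in * g_in
    h_inner = o_in * math.tanh(c_inner)
    c_t = h_inner              # outer memory cell is the inner hidden state
    h_t = o * math.tanh(c_t)
    return h_t, c_t, c_inner


if __name__ == "__main__":
    params = {name: 0.1 for name in PARAM_NAMES}  # arbitrary illustrative values
    print(nested_lstm_step(params, params, 1.0, 0.0, 0.0, 0.0))
```

In a real model the scalars become matrices and vectors and the step is applied along the 99-point input window, with the final hidden state passed to the output layer.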

The training process of the feedback mechanism first needs to define the loss function:

Where \({E_t}\) denotes the error at time t, E denotes the total error, \({y_t}\) denotes the training value, and \(\hat{y}_t\) is the target value.

The weight matrix and the bias term of each gating system need to be updated by the loss function [31]. Its process is shown in Fig. 4 and the specific steps are as follows:

- Step 1: Initialize the parameters of the prediction model and set the error threshold.

- Step 2: Input the ECG signal training set.

- Step 3: Perform the forward calculation according to equations (6)-(20) to obtain the output corresponding to the current input.

- Step 4: Define the loss function.

- Step 5: Compute the gradient of the loss function with respect to each weight, and then update the weight matrices and the bias terms along the gradient direction.

- Step 6: Judge whether the training error is less than the error threshold; if yes, go to Step 7; if not, return to Step 3.

- Step 7: End of training.
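The control flow of these steps can be sketched with a deliberately simple stand-in model. The example below is not the Nested LSTM training procedure itself: it fits a one-input linear predictor by gradient descent, and the function name `train`, the learning rate, the half squared-error loss, and the `max_epochs` safety limit are all assumptions introduced for illustration. Only the loop structure (forward pass, loss, gradient update, threshold check) mirrors the steps above.

```python
def train(samples, lr=0.1, error_threshold=1e-6, max_epochs=10_000):
    """Gradient-descent loop with an error-threshold stopping rule."""
    w, b = 0.0, 0.0                       # Step 1: initialize parameters
    total_error = float("inf")
    for _ in range(max_epochs):           # Step 2: iterate over the training set
        grad_w = grad_b = total_error = 0.0
        for x, target in samples:
            y = w * x + b                 # Step 3: forward calculation
            err = y - target
            total_error += 0.5 * err * err  # Step 4: loss (assumed form)
            grad_w += err * x
            grad_b += err
        if total_error < error_threshold:   # Step 6: stop when error is small
            break                            # Step 7: end of training
        w -= lr * grad_w / len(samples)      # Step 5: gradient update
        b -= lr * grad_b / len(samples)
    return w, b, total_error


if __name__ == "__main__":
    data = [(x, 2.0 * x + 1.0) for x in (-1.0, -0.5, 0.0, 0.5, 1.0)]
    print(train(data))
```

The epoch limit is a practical guard so that the loop terminates even if the error threshold is never reached.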

Experiment and results

Data source

In this study, ECG signals were obtained from the Sudden Cardiac Death Holter Database on the PhysioNet website [37], a resource for complex physiological and biomedical signal research. The database features 20 patient groups who experienced actual cardiac arrest and exhibited underlying sinus rhythm, continuous pacing, or atrial fibrillation prior to the event. Medical experts have annotated these ECG signals, identifying the onset of sudden cardiac death. The dataset for this study consists of 20 ECG segments of 20 seconds each (16 seconds before and 4 seconds after the SCD event). Detailed information on the 20 groups of ECG signals used in this paper is shown in Table 1. For each subject, a segment of the ECG signal is randomly selected as the training set, so that a separate model is built for each subject. Training samples are built with a sliding window over the selected signal: the input sample of the \(i\)-th training pair consists of sampling points \(i\) to \(i+98\), and the output sample is sampling point \(i+99\), where \(i = 1, 2, \ldots , 5000-99\). A total of 4901 input-output sample pairs are generated, which form the training data set of the model.

Data preprocessing results

Feeding the raw ECG signals directly to the model may introduce noise and decrease prediction accuracy. Therefore, a wavelet denoising method is employed to remove noise. The denoising outcomes are displayed in Figs. 5, 6 and 7.

From Figs. 5, 6 and 7, it is evident that the signals 30.dat and 31.dat exhibit noticeable baseline drift noise before denoising, while signal 32.dat exhibits both baseline drift and EMG noise. However, after denoising, the signals 30.dat-32.dat become more stable (as supported by Table 2). This demonstrates that the enhanced wavelet denoising method employed in this study effectively eliminates baseline drift and EMG signal noise, indicating its capability to denoise ECG signals.

This study assesses the denoising effect through visual inspection and the signal-to-noise ratio (SNR), a metric that measures the ratio of signal energy to noise energy. It is defined as follows:

$$\begin{aligned} SNR = 10\log _{10}\left( \frac{\sum _{n} {x^2}(n)}{\sum _{n} {\left[ x(n) - {x_m}(n) \right] }^2} \right) \end{aligned}$$

Where x(n) denotes the original signal, and \({x_m}(n)\) denotes the denoised signal. We can evaluate the denoising effect by comparing the SNR of the ECG signal before and after denoising. The SNR results are shown in Table 2.
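As a small worked sketch of the SNR calculation, the function below uses the common definition in decibels: ten times the base-10 logarithm of the signal energy divided by the energy of the difference between the original and denoised signals. This definition and the name `snr_db` are assumptions for illustration; the paper's exact convention may differ.

```python
import math


def snr_db(original, denoised):
    """SNR in dB, treating (original - denoised) as the noise component."""
    signal_energy = sum(x * x for x in original)
    noise_energy = sum((x - y) ** 2 for x, y in zip(original, denoised))
    return 10.0 * math.log10(signal_energy / noise_energy)


if __name__ == "__main__":
    original = [1.0, -1.0, 1.0, -1.0]   # made-up values
    denoised = [0.9, -0.9, 0.9, -0.9]
    print(snr_db(original, denoised))
```

For these made-up values the energy ratio is 100, which corresponds to 20 dB.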

Table 2 demonstrates that a portion of the noise in the aforementioned ECG signals has been removed, as evidenced by the increase in SNR after denoising. This finding also indicates that the raw ECG signals contain a significant amount of noise, which could affect the prediction outcomes. Consequently, it is necessary to denoise the ECG signals prior to making predictions.

Prediction results and analysis

The Nested LSTM model was used to predict the risk of actual cardiac arrest for the 20 groups of ECG signals. In the experiment, we trained the model 20 times in total, and each training run took between 43 s and 58 s. A selection of prediction results is presented in Figs. 8, 9 and 10. The error is calculated as the difference between the true value and the predicted value.

Figures 8, 9, and 10 illustrate notable fluctuations in the ECG signal. The actual values are represented in blue, while the fitted predictions are shown in red. The proposed method captures these variations and follows the trend of the ECG signal closely. The experimental results highlight the potential of predicting sudden cardiac death (SCD) before its occurrence, which holds life-saving implications for patients.

There are several techniques for classifying the risk of SCD. Support Vector Machines (SVM) is a classical classification method that is widely used. Echo State Networks (ESN) and Long Short-Term Memory (LSTM) networks are adaptive data analysis methods that have been employed in SCD detection. Bidirectional LSTM (Bi-LSTM) is an improved method of LSTM, comprising forward and backward LSTM components, which enables the summarization of temporal information from both past and future contexts. To validate the performance of the Nested LSTM model, the four models mentioned above are compared. In order to quantitatively assess the performance of the predictive models, two types of error measurements are employed in the experiment: mean absolute error (MAE) and root mean square error (RMSE).

$$\begin{aligned} MAE = \frac{1}{N}\sum _{n = 1}^{N} \left| {Y_n} - \widehat{Y_n} \right| \end{aligned}$$

$$\begin{aligned} RMSE = \sqrt{\frac{1}{N}\sum _{n = 1}^{N} {\left( {Y_n} - \widehat{Y_n} \right) }^2} \end{aligned}$$

Where N denotes the length of the predicted ECG signal, \({Y_n}\) denotes the predicted value, and \(\widehat{Y_n}\) denotes the target value. The prediction error results are averaged. The RMSE results of the above models are shown in Table 3 and Figs. 11 and 12, the MAE results are shown in Table 4 and Figs. 13 and 14, and the average results are shown in Table 5.
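The two error measures can be computed directly from paired predicted and target sequences, as in the minimal sketch below. The function names `mae` and `rmse` are hypothetical, and the sample values are made up purely to show the arithmetic.

```python
import math


def mae(predicted, target):
    """Mean absolute error between two equally long sequences."""
    return sum(abs(p - t) for p, t in zip(predicted, target)) / len(predicted)


def rmse(predicted, target):
    """Root mean square error between two equally long sequences."""
    return math.sqrt(
        sum((p - t) ** 2 for p, t in zip(predicted, target)) / len(predicted)
    )


if __name__ == "__main__":
    predicted = [1.0, 2.0, 3.0]  # made-up values
    target = [1.0, 3.0, 5.0]
    print(mae(predicted, target), rmse(predicted, target))
```

Because RMSE squares each error before averaging, it penalizes occasional large deviations more strongly than MAE does.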

RMSE can reflect the overall error of signal prediction. According to Figs. 11, 12 and Table 3, it can be observed that the SVM model has the highest RMSE. The SVM model, which lacks a feedback structure and cannot obtain historical information characteristics, performs poorly in ECG signal prediction. The ESN model, despite having a feedback structure, is simple and also exhibits a large error. The LSTM and Bi-LSTM models have low RMSE values, with their results being quite similar. Nested LSTM enhances the memory function of LSTM and extracts historical information features more accurately. When compared to the SVM, ESN, LSTM, and Bi-LSTM models, the RMSE of Nested LSTM is the smallest.

MAE represents the average of the absolute error between the predicted value and the observed value. According to Figs. 13, 14 and Table 4, it can be seen that the SVM model also has the largest MAE, and the Nested LSTM has the smallest MAE among the five models.

According to Table 5, the SVM model has the largest average RMSE and average MAE, and the Nested LSTM model has the smallest average RMSE and average MAE. When compared to the SVM, ESN, LSTM, and Bi-LSTM models, the average RMSE of Nested LSTM is reduced by 61.1%, 41.2%, 20.5%, and 17.9%, respectively, and the average MAE is reduced by 88.7%, 78.2%, 25.8%, and 32.6%, respectively. In conclusion, the Nested LSTM model demonstrates a strong nonlinear mapping ability for ECG signals.
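The percentage reductions quoted here are presumably relative reductions with respect to each baseline model; the text does not state the formula, so the following is an assumption. With \(E_{base}\) denoting the average error (RMSE or MAE) of a baseline model and \(E_{NLSTM}\) that of the Nested LSTM, both symbols introduced here only for illustration, the reduction would be:

$$\begin{aligned} \text {Reduction} = \frac{E_{base} - E_{NLSTM}}{E_{base}} \times 100\% \end{aligned}$$

Under this reading, a reduction of 61.1% means that the Nested LSTM error is 38.9% of the baseline error.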

Conclusion

In this study, we present an early prediction method for sudden cardiac death (SCD) risk that uses Nested LSTM and the sequential features of the electrocardiogram (ECG) to predict a patient’s ECG signals. ECG prediction is an effective approach for the early prediction of SCD risk. One limitation of traditional prediction methods is their low prediction accuracy for strongly nonlinear ECG signals, which may make them inappropriate for practical applications. Thus, it is highly desirable to develop an optimized ECG prediction model with high prediction accuracy. With an emphasis on the timeliness and accuracy of prediction, this paper exploits the nonlinear mapping capability of Nested LSTM for ECG signals. The memory cell of Nested LSTM is replaced by an inner LSTM, which has strong memory ability. To demonstrate the effectiveness and applicability of the proposed model, the ECG signals of 20 groups of actual cardiac arrest patients are used in the empirical study. The experimental results show that the proposed model achieves better performance than the other four models.

The current similar methods include classification and regression techniques. References [14,15,16] employ classification models to anticipate abnormal and non-abnormal ECG patterns, whereas references [21,22,23] utilize regression models to align with ECG signal trends and identify ECG outliers. Distinguished from conventional classification techniques, this approach excels in forecasting the onset of SCD heartbeats, effectively capturing the dynamic, nonlinear, and non-stationary nature of time series, and adeptly accommodating the irregular trends in electrocardiogram signals. Furthermore, in contrast to traditional regression methodologies, the study devises an encompassing strategy that merges data preprocessing with predictive model development for ECG prediction. Empirical findings demonstrate a notable reduction in fitting errors, specifically in terms of RMSE and MAE, underscoring the efficacy of this novel methodology.

As for future work, we will study the multi-step prediction method of ECG signal characteristics, and use a series of deep learning methods and reinforcement learning methods to reduce multi-step prediction errors. More significantly, the practical applicability of ECG signal prediction methods will be verified in SCD diagnostic applications, potentially saving patients’ lives from SCD events.

Availability of data and materials

The datasets used and/or analysed during the current study are available from the corresponding author on reasonable request.

References

Barker J, Li X, Khavandi S, Koeckerling D, Mavilakandy A, Pepper C, et al. Machine learning in sudden cardiac death risk prediction: a systematic review. Europace. 2022;24(11):1777–87.

Seely KD, Crockett KB, Nigh A. Sudden cardiac death in a young male endurance athlete. J Osteopath Med. 2023;123(10):461–5.

Alon B, Andrew B, Moss AJ, Ilan G. Genetics of sudden cardiac death. Curr Cardiol Rep. 2018;13(5):364–76.

Ribeiro AH, Ribeiro MH, Paixo G, Oliveira DM, Ribeiro A. Author Correction: Automatic diagnosis of the 12-lead ECG using a deep neural network. Nat Commun. 2020;11(1):2227.

Potter EL, Rodrigues C, Ascher D, Marwick TH. Machine Learning Applied to Energy Waveform ECG for Prediction of Stage B Heart Failure in the Community. J Am Coll Cardiol. 2020;75(11):1894.

Desai U, Martis RJ, Gurudas Nayak C, Seshikala G, Sarika K, Shetty KR. Decision support system for arrhythmia beats using ECG signals with DCT, DWT and EMD methods: A comparative study. J Mech Med Biol. 2016;16(01):1640012.

Desai U, Nayak CG, Seshikala G, Martis RJ, Fernandes SL. Automated diagnosis of tachycardia beats. In: Smart Computing and Informatics: Proceedings of the First International Conference on SCI 2016, Volume 1. Springer Publishing: Smart Innovation, Systems and Technologies; 2018. pp. 421–9. https://www.springer.com/series/8767.

Howell SJ, Alday E, German D, Bender A, Tereshchenko L. Lifetime sex-specific sudden cardiac death prediction using ECG global electrical heterogeneity: the atherosclerosis risk in communities (ARIC) study. Eur Heart J. 2019;40(Supplement_1):3516.

Kaji H, Iizuka H, Sugiyama M. ECG-Based Concentration Recognition With Multi-Task Regression. IEEE Trans Biomed Eng. 2019;66(1):101–10.

Hossain MA, Hossain ME, Rahaman MA. Multipurpose medical assistant robot (Docto-Bot) based on internet of things. Int J Electr Comput Eng. 2021;11(6):5558.

Faruk N, Abdulkarim A, Emmanuel I, Folawiyo YY, Adewole KS, Mojeed HA, et al. A comprehensive survey on low-cost ECG acquisition systems: Advances on design specifications, challenges and future direction. Biocybernetics Biomed Eng. 2021;41(2):474–502.

Mireles C, Sanchez M, Cruz-Ortiz D, Salgado I, Chairez I. Home-care nursing controlled mobile robot with vital signal monitoring. Med Biol Eng Comput. 2023;61(2):399–420.

Liu B, Chen W, Wang Z, Pouriyeh S, Han M. RAdam-DA-NLSTM: A Nested LSTM-Based Time Series Prediction Method for Human-Computer Intelligent Systems. Electronics. 2023;12(14):3084.

Liu G, Wang Y, Cai J. Analysis of epileptic seizure detection method based on improved genetic algorithm optimization back propagation neural network. Biomed Eng Res. 2019;36(2):95–100.

Ebrahimzadeh E, Manuchehri MS, Amoozegar S, Araabi BN, Soltanian-Zadeh H. A time local subset feature selection for prediction of sudden cardiac death from ECG signal. Med Biol Eng Comput. 2017;56(7):1253–70.

Mohammad K, Khadijeh R, Ateke G, Maryam A. Early detection of sudden cardiac death using nonlinear analysis of heart rate variability. Biocybernetics Biomed Eng. 2018;38(4):931–40.

Hou B, Yang J, Wang P, Yan R. LSTM-based auto-encoder model for ECG arrhythmias classification. IEEE Trans Instrum Meas. 2019;69(4):1232–40.

Kaya Y, Kuncan F, Tekin R. A new approach for congestive heart failure and arrhythmia classification using angle transformation with LSTM. Arab J Sci Eng. 2022;47(8):10497–513.

Yuan T, Chen Y, Liu S. Prediction Model for Ionospheric Total Electron Content Based on Deep Learning Recurrent Neural Network. J Space Sci. 2018;38(1):48–57.

Attia ZI, Noseworthy PA, Lopez-Jimenez F, Asirvatham SJ, Deshmukh AJ, Gersh BJ, et al. An artificial intelligence-enabled ECG algorithm for the identification of patients with atrial fibrillation during sinus rhythm: a retrospective analysis of outcome prediction. Lancet (London, England). 2019;394(10201):861–7.

Jin CW. Research on association analysis between human gait and ECG with machine learning. Hangzhou University of Electronic Science and technology; 2019.

Zheng X, Zhou Q, Cai G, Zhu Q. Research progress of identification methods based on ECG. Beijing Biomed Eng. 2016;35(2):208–13.

Wang H, Yang J, Liu X. Prediction of sudden cardiac death based on ECG signal and echo state network. Laser J. 2019;264(9):187–90.

Sakib S, Fouda MM, Al-Mahdawi M, Mohsen A, Oogane M, Ando Y, et al. Noise-removal from spectrally-similar signals using reservoir computing for MCG monitoring. In: ICC 2021-IEEE International Conference on Communications. IEEE; 2021. pp. 1–6. https://ieeexplore.ieee.org/xpl/conhome/9500243/proceeding.

Xue Y, Zhang Q, Neri F. Self-adaptive particle swarm optimization-based echo state network for time series prediction. Int J Neural Syst. 2021;31(12):2150057.

Rjoob K, Bond R, Finlay D, et al. Machine learning and the electrocardiogram over two decades: Time series and meta-analysis of the algorithms, evaluation metrics and applications. Artif Intell Med. 2022;132:102381.

Shahi S, Fenton FH, Cherry EM. A machine-learning approach for long-term prediction of experimental cardiac action potential time series using an autoencoder and echo state networks. Chaos: An Interdisciplinary J Nonlinear Sci. 2022;32(6):1–14.

Qing L, Lulu X. Comparison and analysis of research hotspot trend prediction models based on machine learning algorithm - BP neural network, Support Vector Machine and LSTM model. Mod Intell. 2019;39(04):24–34.

Kamarul Azman S, Isbeih Y, Moursi MS, Elbassioni K. A Unified Online Deep Learning Prediction Model for Small Signal and Transient Stability. IEEE Trans Power Syst. 2020;35(6):4585–98.

He Z, Han M, Han B. Pattern-adaptive time series prediction via online learning and paralleled processing using CUDA. In: 2019 IEEE 16th international conference on mobile ad hoc and sensor systems workshops (MASSW). IEEE; 2019. pp. 31–6. https://ieeexplore.ieee.org/xpl/conhome/9044469/proceeding.

Hochreiter S, Schmidhuber J. Long Short-Term Memory. Neural Comput. 1997;9(8):1735–80.

Wang S, Gang H, Guosheng H, Chunli X, Haiqiang L. Application of long-term and short-term memory network based on gray wolf optimization algorithm in time series prediction. Chin Sci Pap. 2017;12(20):32–7.

Liu L, Han M, Zhou Y, Wang Y. LSTM recurrent neural networks for influenza trends prediction. In: Bioinformatics Research and Applications: 14th International Symposium, ISBRA 2018, Beijing, China, June 8-11, 2018, Proceedings 14. Springer Publishing: Lecture Notes in Computer Science; 2018. pp. 259–64. https://www.springer.com/series/0558.

Furkan B. A Hybrid Attention-based LSTM-XGBoost Model for Detection of ECG-based Atrial Fibrillation. Gazi Univ J Sci Part A Eng Innov. 2022;9(3):199–210.

Moniz JRA, Krueger D. Nested LSTMs. Proc Mach Learn Res. 2017;77(1):530–44.

Ma X, Li Y, Cui Z, Wang Y. Forecasting Transportation Network Speed Using Deep Capsule Networks with Nested LSTM Models. IEEE Trans Intell Transp Syst. 2021;22(8):4813–24.

Goldberger AL, Amaral LA, Glass L, Hausdorff JM, Ivanov PC, Mark RG, et al. PhysioBank, PhysioToolkit, and PhysioNet: components of a new research resource for complex physiologic signals. Circulation. 2000;101(23):e215–20.

Acknowledgements

All authors conceptualized the study. W.K. and C.W. participated in data collection, analysis, and interpretation of data, and drafted the manuscript; Z.K. and L.B.T. participated in interpretation of results; H.M. guided the revision of the paper. All authors have critically reviewed the manuscript and approved the final version.

Funding

This research was funded by the National Science and Technology Program under Grant No. G2022016011L; the LingYan Research and Development Projects of the Science and Technology Department of Zhejiang Province, China, under Grants No. 2022C03122, No. 2024C01165, and No. 2023C03189; and the Public Welfare Technology Application and Research Project of Zhejiang Province, China, under Grant No. LGF21F010004.

Author information

Contributions

All authors conceptualized the study. W.K. and C.W. participated in data collection, analysis, and interpretation of data, and drafted the manuscript; Z.K. and L.B.T. participated in interpretation of results; H.M. guided the revision of the paper. All authors have critically reviewed the manuscript and approved the final version.

Ethics declarations

Ethics approval and consent to participate

The dataset used in this research is publicly available and can be accessed at https://physionet.org/content/sddb/1.0.0/.

Consent for publication

Not applicable.

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Wang, K., Zhang, K., Liu, B. et al. Early prediction of sudden cardiac death risk with Nested LSTM based on electrocardiogram sequential features. BMC Med Inform Decis Mak 24, 94 (2024). https://doi.org/10.1186/s12911-024-02493-4

DOI: https://doi.org/10.1186/s12911-024-02493-4