Abstract

Purpose

With the in-depth application of machine learning(ML) in clinical practice, it has been used to predict the mortality risk in patients with traumatic brain injuries(TBI). However, there are disputes over its predictive accuracy. Therefore, we implemented this systematic review and meta-analysis, to explore the predictive value of ML for TBI.

Methodology

We systematically retrieved literature published in PubMed, Embase.com, Cochrane, and Web of Science as of November 27, 2022. The prediction model risk of bias(ROB) assessment tool (PROBAST) was used to assess the ROB of models and the applicability of reviewed questions. The random-effects model was adopted for the meta-analysis of the C-index and accuracy of ML models, and a bivariate mixed-effects model for the meta-analysis of the sensitivity and specificity.

Result

A total of 47 papers were eligible, including 156 model, with 122 newly developed ML models and 34 clinically recommended mature tools. There were 98 ML models predicting the in-hospital mortality in patients with TBI; the pooled C-index, sensitivity, and specificity were 0.86 (95% CI: 0.84, 0.87), 0.79 (95% CI: 0.75, 0.82), and 0.89 (95% CI: 0.86, 0.92), respectively. There were 24 ML models predicting the out-of-hospital mortality; the pooled C-index, sensitivity, and specificity were 0.83 (95% CI: 0.81, 0.85), 0.74 (95% CI: 0.67, 0.81), and 0.75 (95% CI: 0.66, 0.82), respectively. According to multivariate analysis, GCS score, age, CT classification, pupil size/light reflex, glucose, and systolic blood pressure (SBP) exerted the greatest impact on the model performance.

Conclusion

According to the systematic review and meta-analysis, ML models are relatively accurate in predicting the mortality of TBI. A single model often outperforms traditional scoring tools, but the pooled accuracy of models is close to that of traditional scoring tools. The key factors related to model performance include the accepted clinical variables of TBI and the use of CT imaging.

Similar content being viewed by others

Instruction

Traumatic brain injury (TBI) is a major global health problem, with 950,000 TBI cases per year [1], which is mainly triggered by falls and road injuries. With an increasing population density, the aging of the population, and the use of motor vehicles, motorcycles, and bicycles, the incidence of TBI may continue to increase over time, and patients with TBI are prone to death and disability [2]. Among various injuries, TBI accounts for 1/3 to 1/2 of all deaths from injuries and is the leading cause of disability in patients aged below 40 [3]. TBI has caused a heavy burden in low- and middle-income countries due to the high incidence, mortality, disability, social economic losses, and reduced life expectancy and life quality [4]. Therapeutic treatments are often determined based on the prognosis of patients. Hence, reliable prognosis is essential for a neurosurgeon to decide whether to perform surgical treatment [5]. The use of calculation tools for the predication of TBI patient’s prognosis facilitates physicians to increase or reduce the frequency of interventions in patients with good or poor prognoses respectively.

The scoring tools currently applied in clinical practice to judge the prognosis of TBI patients include the Glasgow Outcome Scale and Head Marshall CT Classification [6, 7], with differences in the use of scoring tools for different wards, for instance, SOFA (Sequential Organ Failure Assessment), APACHE II (Acute Physiology and Chronic Health Disease Classification System II), and GCS (Glasgow Coma Scale) are often used in intensive care units (ICUs) to roughly assess the prognosis of patients [8]. However, diversified evaluation nodes and usage scenarios often make these tools fail to achieve an expected predictive performance in the precise stratification of TBI patients. For example, there are controversies between the GCS score and the Full Outline of Unresponsiveness (FOUR) Score, although the latter was developed to address the evaluation shortcomings of the former [9, 10].

Moreover, there are currently many types of scoring tools available, but neither comprehensive and systematic exploration of the predictive factors related to prognosis, nor horizontal comparison has been reported. Whether there are differences in predicting prognosis between these tools and how to choose an appropriate clinical scoring tool remain to be elucidated. Over the years, some scholars have applied the machine learning(ML) method to establish predictive models for patients with traumatic brain injuries(TBI), including neural network(NN), Naive Bayes(NB), logistic regression(LR), decision tree(DT), and support vector machine(SVM) [11, 12]. ML prediction models differ from previous scoring tools in predictive factors. However, there is a lack of evidence to underpin that the prediction performance of predictive factors in ML models is better than that of traditional scoring tools. Moreover, whether ML prediction models have better predictive performance than clinical scoring tools remains controversial. The latest guidelines for severe brain injury management do not provide an answer to this question. Therefore, we conducted this systematic review and meta-analysis to explore the predictive value of ML versus traditional scoring tools in the mortality risk stratification of TBI patients.

Methodology

The systematic review was implemented in accordance with the Preferred Reporting Items for Systematic Reviews and Meta-Analyses of Diagnostic Test Accuracy Reviews (PRISMA-DTA) statements. The study protocol has been registered in the International Prospective Register of Systematic Reviews (PROSPERO) and approved prior to the start of the study (ID: CRD42022377756).

Inclusion and exclusion criteria

Inclusion criteria

-

(1)

The study subjects are patients diagnosed with TBI;

-

(2)

The study types are case-control studies, cohort studies, nested case-control studies, and case-cohort studies;

-

(3)

A complete prediction model for mortality risk was constructed;

-

(4)

Studies without external verification were also included;

-

(5)

Different studies on ML algorithms were published based on the same data set;

-

(6)

Studies reported in English were included.

Exclusion criteria

-

(1)

Studies of the following types: meta-analysis, review, guidance, expert opinion, etc.;

-

(2)

Only risk factors were analyzed, and no complete ML model was constructed;

-

(3)

Studies lacking the following outcome measures which are used to assess the accuracy of the ML model: Roc, C-statistics, C-index, sensitivity, specificity, accuracy, recovery rate, accuracy rate, confusion matrix, diagnostic four-grid table, F1 score, and calibration curve;

-

(4)

Studies on the accuracy of single-factor prediction.

Retrieval strategy

As of November 27, 2022, a systematic retrieval was conducted in the databases including PubMed, Embase.com, Cochrane, and Web of Science. The retrieval terms include subject terms and free text words. The following terms (including synonyms and closely related words) are used as index terms or free text words: "traumatic brain injuries", and "machine learning". Only peer-reviewed articles are included. The complete retrieval strategy for all databases is provided in Table S1.

Literature screening and data extraction

We imported the retrieved literature into Endnote, screened the initial eligible original studies by title and abstract after checking for duplication, downloaded the full texts of the potentially eligible studies, and then determined the final eligible original studies according to a full-text review. Before data extraction, a standard data extraction spreadsheet was prepared. The extracted data contained the title of the literature, the author of the literature, year of publication, country of the author, study type, patient source, background of TBI occurred, time of death, number of dead samples, total sample size, number of dead samples in the training set, total sample size in the training set, generation method of the verification set, number of dead samples in the verification set, sample size of the verification set, processing method for missing values, variable screening method, type of model used, and modeling variables. The literature screening and data extraction mentioned above were implemented by two independent investigators (JW and MJY). Dissents, if any, were solved by a third investigator (HCW).

Risk of bias assessment

Two investigators used the prediction model risk of bias(ROB) assessment tool (PROBAST) to assess the ROB of each model in each study and the applicability of our reviewed questions [13]. Disagreements, if any, were solved by a third investigator. Based on a series of specific questions, each model was respectively assessed as having a "high", "unclear" or "low" ROB in four domains (participators, predictors, outcomes, and analysis). The same scale was used to assess the applicability of each model to our reviewed questions in three domains (participators, predictors, and results).

Outcome measures

To quantitatively summarize the prediction performance of ML models, we extracted discrimination and calibration measures. The discriminative ability quantified the capacity of the models to distinguish individual development from non-development results. The C-index or the area under the receiver operating characteristic curve (AUROC) with a 95% confidence interval (95% CI) was extracted. When the number of death events was very small in original studies, the C-index was insufficient to reflect the accuracy of models in predicting death cases, so our outcome measures also included sensitivity and specificity. If none of such outcomes were available but the AUC value was reported, sensitivity and specificity were obtained from the Roc curve based on the optimal Youden index.

Statistical methods

Meta-analysis was performed on the C-index and accuracy of ML models. If the C-index lacks 95% CI and standard errors, we estimated the standard errors by referring to the studies of Debray TP et al. [14]. Given the differences in the variables included in the ML models and the inconsistency in parameters, a random-effects model was preferred for the meta-analysis of the C-index. In addition, a bivariate mixed-effects model was adopted for the meta-analysis of the sensitivity and specificity. The meta-analysis was conducted using R4.2.0 (R development Core Team, Vienna, http://www.R-project.org).

Result

Literature screening results

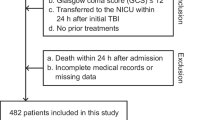

The study selection process is shown in Fig. 1. A total of 3,701 unique records were identified, and 76 reports were reviewed. After excluding studies with no full texts, conference abstracts, registration protocols, failure to provide any outcome measures for ML accuracy, and failure to create a new ML model, 47 studies were finally included [11, 12, 15,16,17,18,19,20,21,22,23,24,25,26,27,28,29,30,31,32,33,34,35,36,37,38,39,40,41,42,43,44,45,46,47,48,49,50,51,52,53,54,55,56,57,58,59].

Basic characteristics of the included literature

The 47 included studies were from Asia (N = 17, 36%), Europe (N = 16, 34%), America (N = 12, 26%), and Middle East (N = 2, 4%). The total number of subjects was 2,080,819. The study type included case-control studies (N = 32), prospective cohort studies (N = 10), and retrospective cohort studies (N = 4). Most articles were mainly published in 2022 (N = 10), followed by 2020 (N = 7), and 2018 (N = 6). Totally, 156 models were included, including 122 newly-developed ML models, of which there were 98 ML models for predicting in-hospital mortality, and 24 ML models for predicting out-of-hospital mortality. The ML models for predicting in-hospital mortality mainly included the SVM (N = 16), DT (N = 13), LR (N = 12), random forest(RF) (N = 10), K-Nearest Neighbor(KNN) (N = 8), and NN (N = 8).

Predictors

In the newly-developed ML models, the most common variable was the GCS score (N = 88; 9.6%), age (N = 74; 8.1%), CT classification (N = 72; 7.9%), pupil size/light reflex (N = 31; 3.4%), glucose (N = 30; 3.3%), systolic blood pressure (N = 30; 3.3%), hypotension (N = 15; 1.6%), heart rate (N = 15; 1.6%), and hypoxemia: (N = 14; 1.5%). The specific predictors are presented in Table S2.

Risk of bias assessment result

Most study subjects were distributed in case-control studies, and the ROB was low in the domain of participants. Regarding the domain of predictors, there was a high bias in whether predictors were assessed without clear result data (N = 83; 68%). Because most studies included were case-control studies, and only a small number of them were prospective studies and retrospective cohort studies, the ROB in outcomes was low or unclear. As for the statistical analysis, there was a high bias (N = 67) in terms of whether the sample size was reasonable, which was mainly caused by the lack of an independent verification cohort or a sample size that was less than 100 for verification. There was a small amount of high bias in terms of whether the selection of predictors by a single-factor analysis was avoided, while the rest were at a low or unclear ROB (Fig. 2).

Meta-analysis Result

In-hospital mortality

Newly-developed machine learning models

The pooled C-index of 98 ML prediction models in predicting in-hospital mortality was 0.86 (95% CI: 0.84, 0.87) in the training set (Fig. 3). The sensitivity and specificity of 64 models were reported, and the pooled sensitivity and specificity were 0.79 (95% CI: 0.75, 0.82) and 0.89 (95% CI: 0.86, 0.92), respectively. For the in-hospital mortality, the verification set only included LR (N = 3) [C-index: 0.86 (95% CI: 0.81, 0.90); sensitivity: 0.59 to 0.66; specificity: 0.93 to 0.92]. The most common algorithms in ML prediction included the SVM (N = 16) [C-index: 0.87 (95% CI: 0.83, 0.92); sensitivity: 0.86 (95% CI: 0.80, 0.91); specificity: 0.87 (95% CI: 0.74, 0.94)], the DT (N = 13) [C-index: 0.82 (95% CI: 0.76, 0.88); sensitivity: 0.83 (95% CI: 0.67, 0.92); specificity: 0.93 (95% CI: 0.78, 0.98)], the LR (N = 12) [C-index: 0.88 (95% CI: 0.83, 0.93); sensitivity: 0.68 (95% CI: 0.58, 0.77); specificity: 0.91 (95% CI: 0.85, 0.95)], the RF (N = 10) [C-index: 0.85 (95% CI: 0.81, 0.89); sensitivity: 0.86 (95% CI: 0.73, 0.93); specificity: 0.92 (95% CI: 0.76, 0.98)], the NN (N = 8) [C-index: 0.91 (95% CI: 0.88, 0.93); sensitivity: 0.78 (95% CI: 0.57, 0.91); specificity: 0.92 (95% CI: 0.87, 0.95)], and the KNN (N = 8) [C-index: 0.79 (95% CI: 0.70, 0.88)] (Tables 1 and 2).

Mature Clinically Recommended Tools

There were 16 clinical scoring tools and some tools externally validated in large-scale cohort studies for predicting the in-hospital mortality, with a pooled C-index of 0.86 (95% CI: 0.85, 0.88) in the training set. The sensitivity and specificity of 11 tools were reported, and the pooled sensitivity and specificity were 0.71 (95% CI: 0.64, 0.78) and 0.84 (95% CI: 0.78, 0.89), respectively. In the verification set, there were only 3 tools predicting in-hospital mortality, with a pooled C-index of 0.687 (95% CI: 0.525, 0.848), with no sensitivity or specificity (Figs. 3, 4 and 5a, b).

Out-of-hospital mortality

Newly developed machine learning models

There were 24 ML prediction models predicting the out-of-hospital mortality in the training set, with a pooled C-index of 0.83 (95%CI: 0.81, 0.85). The sensitivity and specificity 4 models were reported, and the pooled sensitivity and specificity were 0.74 (95%CI: 0.67, 0.81) and 0.75 (95%CI: 0.66, 0.82), respectively. In the verification set, there were 9 prediction models predicting the out-of-hospital mortality, and the pooled C-index was 0.82 (95%CI: 0.81, 0.83), with no sensitivity or specificity. The most common algorithm of ML prediction models for out-of-hospital mortality was the LR (N = 10) [C-index: 0.84 (95%CI: 0.81, 0.88); sensitivity: 0.70 to 0.77; specificity: 0.61 to 0.75] (Tables 1 and 2).

Mature Clinically Recommended Tools

In the training set, there were 18 clinical scoring tools and some tools externally validated in large-scale cohort studies for predicting out-of-hospital mortality, and the pooled C-index was 0.817 (95%CI: 0.789, 0.845). The sensitivity and specificity of 7 tools were reported, and the pooled sensitivity and specificity were 0.75 (95%CI: 0.68, 0.80) and 0.79 (95%CI: 0.73, 0.84), respectively. In the verification set, there were only 4 tools predicting out-of-hospital mortality, and the pooled C-index was 0.813 (95%CI: 0.789, 0.836), with no sensitivity or specificity.

Discussion

Main findings

Our study is the first of its kind to systematically review the performance of ML in predicting the mortality of patients with TBI. According to the meta-analysis of 47 studies, ML models are highly accurate in predicting the mortality of patients with TBI. For instance, the C-index of most ML models such as the SVM, DT, LR, RF, and NN was greater than 0.8, especially the C-index of the NN, which was greater than 0.9. In addition, our studies also included some clinical scoring tools. The C-index of GCS predicting in-hospital mortality was 0.88 (95% CI: 0.85, 0.89), the sensitivity was 0.50 to 0.51, and the specificity was 0.90. Further, external validated tools in large-scale cohort studies were also included, such as CRASH core [C-index: 0.92 (95% CI: 0.89, 0.96); sensitivity: 0.73; specificity: 0.90] and IMPACT ct [C-index: 0.88 (95%CI: 0.84, 0.91); sensitivity: 0.80; specificity: 0.78]. In terms of in-hospital mortality, there was no huge difference in the overall prediction performance of ML and traditional scoring tools, but the prediction performance of the NN and batch normalization was outstanding. Regarding out-of-hospital mortality, the prediction performance of ML was slightly superior to traditional clinical scoring and some tools externally validated in large cohort studies. Recently published studies have revealed that there is a trend that the performance of the ML model is superior to that of LR, which may be related to the recent improvements in ML algorithms and the increasing popularity of special statistical software. The clinical decision support system should be constantly updated and improved, to meet the high requirements for risk prediction models in clinical practice, and further realize multidisciplinary collaborative decision-making as well as the benchmarking and monitoring of achievements. However, for predicting out-of-hospital mortality, LR is still the most frequently used model, which is easy to understand and realize owing to its simplicity, with no need for complex mathematical knowledge. In addition, LR can better interpret the result as well as the influence of variables on the result by coefficients, making it easy to analyze and understand. Furthermore, LR is easy to realize, because most programming languages provided corresponding bases and tools, making it easy to develop and apply.

Clinical practice findings

In clinical practice, the initial assessment and treatment of acute TBI are still dependent on the GCS scale. GCS remains the most widely used scoring system for assessing the severity of TBI because it is fast and direct. Despite its utility, GCS also has important limitations, and cannot provide sufficient information, especially in the diagnosis of mild sub-clinical TBI and the prediction of TBI lineage. In the case of mild sub-clinical TBI, patients may have no obvious neurological symptoms, but GCS can only determine the score by evaluating the eye, oral, and limb reactions. Therefore, it may be not sensitive enough and may fail to detect mild TBI, especially mild cognitive and emotional disorders, which may affect the life quality and work capacity of patients. In addition, GCS cannot predict the recovery and long-term prognosis of patients. Although GCS can provide estimated information about the severity of brain injuries, it is still inaccurate to predict the recovery conditions and prognosis of patients by merely relying on the GCS score [60]. Although GCS can be used to predict in-hospital mortality, objectivity and accuracy are still poor, for instance, the speech components of intubated patients cannot be accurately assessed. In such cases, some doctors give the lowest possible score, while others infer the speech reaction score from other neurological manifestations [61]. Besides, GCS cannot detect subtle clinical changes in unconscious patients, such as abnormal brainstem reflexes and abnormal limb postures [62]. Add four score: therefore, we also pay attention to the comprehensive nonresponsiveness scale (four score) for predicting mortality in TBI. It is a new coma scale developed for the limitations of GCs, including eye opening (E), motor function (m), brainstem reflex (b) and respiratory mode (R). Compared with GCS, four score has similar mortality prediction ability, It seems that it can be used as an alternative to predict the early mortality of TBI patients in ICU [63]. Because it has some advantages, for example, in intubated patients, all components of the four score can be scored, but the GCS score cannot be scored [64]. However, its advantages are not obvious. Evidence based medicine evidence shows that the GCS and four scores are of equal value in predicting in-hospital mortality and adverse outcomes. The similar performance of these scores in the evaluation of TBI patients allows medical staff to choose to use any of them according to the situation at hand [65]. And we found that there is no comparative study between ML and the total nonresponsiveness scale in TBI.

In contrast, ML models use a large amount of real data for training and prediction, have wider coverage, contain more variables, and can reveal potential correlations and rules. The development of clinical scoring tools is inevitably subject to the subjective influence of investigators, and some variables may be ignored or over-considered. Additionally, ML models can carry out accurate and detailed predictions based on manifestations and characteristics of cases, thereby avoiding clinical scoring tools’ problem that their results may be too vague and too generalized. Furthermore, ML models can not only learn and optimize prediction algorithms adaptively, thus improving prediction accuracy, but also can implement personalized prediction and design of therapeutic plans based on the characteristics and historical data on patients [66]. However, clinical scoring tools tend to recommend templates and standardized therapeutic plans, which cannot satisfy the needs of different patients. Finally, ML prediction models can process various types of data, including imageology, laboratory, and biochemical information, to help doctors comprehensively evaluate the conditions of patients, while clinical scoring tools usually focus only on a few important indexes, so some implicit influencing factors may be ignored.

ML models used for medical purposes have their respective specificity and applicability, and different models are required for different diseases and clinical problems [67, 68]. However, due to the lack of studies with large sample sizes and in absence of a clear consensus, it is difficult to select a suitable model. Therefore, it is necessary to comprehensively analyze different algorithms and hyper-parameters to find the optimal model [69], and meanwhile consider the precision, complexity and training time of the model. Finally, the performance of the selected model can be assessed by the techniques such as cross-verification, so as to ensure its best efficiency in practical application [70]. ML algorithms make some hypotheses for the relationship between predictors and the target variables, which is called learning deviation, and introduce deviation into the model. The hypothesis made by ML algorithms indicates that some algorithms are more suitable for certain datasets than other algorithms [71]. Therefore, the degree of improvement by ML and the clinical influence are still uncertain. Nonetheless, it is certain that the big data analysis of ML is highly effective for the absorption and assessment of massive complex medical healthcare data. In addition, among the variables we calculated, the GCS score, age, CT classification, pupil, and glucose assay ranked top. GCS, as a clinical scoring tool, has become a variable in the ML model, which is not beyond our expectations, because it contains some key indexes for assessing the prognosis such as language, awareness, and action [72]. Interestingly, we have found the significance of CT classification in predicting the prognosis of TBI, which conforms to the clinical application of CT imaging to intuitively assess TBI of patients, predict the prognosis, help determine therapeutic strategies, and provide information for preparing such rehabilitation plan [73]. However, given the huge amount of CT imaging data, hundreds to thousands of images can be obtained per scanning, with complex data, including many different tissues and structures. Sometimes, artifacts may also affect the quality of images. Therefore, it is difficult to make progress in the traditional studies on CT data and clinical disease changes. Nevertheless, ML can automatically process a large number of clinical CT imaging data and extract useful characteristics, with no need for manual intervention, significantly lowering the time and costs required for clinical CT imaging data processing and analysis, and improving work efficiency. ML algorithms can rapidly and accurately identify and position key organs, structures, and anomalies, and further help doctors make more accurate and scientific medical decisions. In addition, it can also make personalized therapeutic plans, allowing more accurate treatment for patients and improving therapeutic effects [74]. In the future, studies on ML and CT imaging will be further implemented, and ML will be applied in a more extensive range. In this process, larger cohort studies are required to support its clinical application. Meanwhile, interdisciplinary cooperation becomes more and more important, including the cooperation of experts from the medicine, computer science, and mathematical sectors, to speed up the application and development of ML in CT imaging.

Our advantage is that we have conducted a comprehensive retrieval strategy and a throughout analysis. We have included any models for predicting the mortality of TBI, including not only ML models but also traditional tools externally validated in large cohort studies and traditional scoring tools. The most important advantage of ML algorithms is to more effectively handle missing data, and implement more complex calculations. It can perform a non-linear prediction of outcomes, without relying on the data distribution hypothesis, such as the independence and multiple collinearities of observation. Owing to outstanding model identification capacity, ML can help clinicians make decisions, and it has also been demonstrated to have an outstanding performance in identifying endocrine diseases [75] and predicting mortality of inflammatory diseases, etc. [76] .

Our study also has certain limitations. Firstly, the study only included the articles written in English, so it may fail to fully consider the relevant literature in other languages. Secondly, data conversion was used in data extraction, which may result in certain deviations. The AUC curve, sensitivity, and specificity were not reported in some of the included studies. Finally, although the meta-analysis has fully summarized the characteristics and trends of relevant literature, more refined explorations are still required, for instance, on the prediction capacity of ML for TBI mortality of different patient groups in different regions, which can provide a clear understanding of the actual feasibility of ML in predicting TBI mortality.

Conclusion

According to the systematic review and meta-analysis, ML models can predict mortality of TBI, with an excellent discriminative ability in case-control studies. The key factors related to the model performance include the accepted clinical variables of TBI and the use of CT imaging. Although a single model is often superior to traditional scoring tools, the assessment of the summary performance is restricted by the heterogeneity of studies. Therefore, it is necessary to develop a standardized report guideline on ML for TBI. Currently, ML models are rarely used clinically, so it is in urgent demand for determining their clinical influence on different patient groups, to ensure their universality and apply the theoretical knowledge to clinical practices.

Availability of data and materials

All data generated or analyzed during this study are included in this published article (and its Supplementary Information files).

References

Dewan MC, Rattani A, Gupta S, Baticulon RE, Hung YC, Punchak M, Agrawal A, Adeleye AO, Shrime MG, Rubiano AM et al. Estimating the global incidence of traumatic brain injury. J Neurosurg 2018;130(4):1080–97.

Collaborators GTBIaSCI. Global, regional, and national burden of traumatic brain injury and spinal cord injury, 1990–2016: a systematic analysis for the Global Burden of Disease Study 2016. Lancet Neurol. 2019;18(1):56–87.

Daugherty J, Waltzman D, Sarmiento K, Xu L. Traumatic Brain Injury-Related Deaths by Race/Ethnicity, Sex, Intent, and Mechanism of Injury - United States, 2000–2017. MMWR Morbidity and mortality weekly report. 2019;68(46):1050–6.

De Silva MJ, Roberts I, Perel P, Edwards P, Kenward MG, Fernandes J, Shakur H, Patel V. Patient outcome after traumatic brain injury in high-, middle- and low-income countries: analysis of data on 8927 patients in 46 countries. Int J Epidemiol. 2009;38(2):452–8.

Williamson T, Ryser MD, Abdelgadir J, Lemmon M, Barks MC, Zakare R, Ubel PA. Surgical decision making in the setting of severe traumatic brain injury: A survey of neurosurgeons. PLoS ONE. 2020;15(3):e0228947.

McMillan T, Wilson L, Ponsford J, Levin H, Teasdale G, Bond M. The Glasgow Outcome Scale – 40 years of application and refinement. Nat reviews Neurol. 2016;12(8):477–85.

Degos V, Lescot T, Zouaoui A, Hermann H, Préteux F, Coriat P, Puybasset L. Computed tomography-estimated specific gravity of noncontused brain areas as a marker of severity in human traumatic brain injury. Anesth Analg. 2006;103(5):1229–36.

Karami Niaz M, Fard Moghadam N, Aghaei A, Mardokhi S. Evaluation of mortality prediction using SOFA and APACHE IV tools in trauma and non-trauma patients admitted to the ICU. Eur J Med Res. 2022;27(1):188.

Saika A, Bansal S, Philip M, Devi BI, Shukla DP. Prognostic value of FOUR and GCS scores in determining mortality in patients with traumatic brain injury. Acta Neurochir (Wien). 2015;157(8):1323–8.

Wijdicks EF, Bamlet WR, Maramattom BV, Manno EM, McClelland RL. Validation of a new coma scale: The FOUR score. Ann Neurol. 2005;58(4):585–93.

van der Ploeg T, Nieboer D, Steyerberg EW. Modern modeling techniques had limited external validity in predicting mortality from traumatic brain injury. J Clin Epidemiol. 2016;78:83–9.

Rau CS, Kuo PJ, Chien PC, Huang CY, Hsieh HY, Hsieh CH. Mortality prediction in patients with isolated moderate and severe traumatic brain injury using machine learning models. PLoS ONE. 2018;13(11):e0207192.

Wolff RF, Moons KGM, Riley RD, Whiting PF, Westwood M, Collins GS, Reitsma JB, Kleijnen J, Mallett S. PROBAST: A Tool to Assess the Risk of Bias and Applicability of Prediction Model Studies. Ann Intern Med. 2019;170(1):51–8.

Debray TP, Damen JA, Riley RD, Snell K, Reitsma JB, Hooft L, Collins GS, Moons KG. A framework for meta-analysis of prediction model studies with binary and time-to-event outcomes. Stat Methods Med Res. 2019;28(9):2768–86.

Rostami E, Gustafsson D, Hanell A, Howells T, Lenell S, Lewen A, Enblad P. Prognosis in moderate-severe traumatic brain injury in a Swedish cohort and external validation of the IMPACT models. Acta Neurochir (Wien). 2022;164(3):615–24.

Nourelahi M, Dadboud F, Khalili H, Niakan A, Parsaei H. A machine learning model for predicting favorable outcome in severe traumatic brain injury patients after 6 months. Acute Crit Care. 2022;37(1):45–52.

Raj R, Luostarinen T, Pursiainen E, Posti JP, Takala RSK, Bendel S, Konttila T, Korja M. Machine learning-based dynamic mortality prediction after traumatic brain injury. Sci Rep. 2019;9(1):17672.

Abdelhamid SS, Scioscia J, Vodovotz Y, Wu J, Rosengart A, Sung E, Rahman S, Voinchet R, Bonaroti J, Li S et al. Multi-Omic Admission-Based Prognostic Biomarkers Identified by Machine Learning Algorithms Predict Patient Recovery and 30-Day Survival in Trauma Patients. Metabolites. 2022;12(9):774.

Tu KC, Eric Nyam TT, Wang CC, Chen NC, Chen KT, Chen CJ, Liu CF, Kuo JR. A Computer-Assisted System for Early Mortality Risk Prediction in Patients with Traumatic Brain Injury Using Artificial Intelligence Algorithms in Emergency Room Triage. Brain. Sci 2022;12(5):612.

Wong TH, Nadkarni NV, Nguyen HV, Lim GH, Matchar DB, Seow DCC, King NKK, Ong MEH. One-year and three-year mortality prediction in adult major blunt trauma survivors: a National Retrospective Cohort Analysis. Scand J Trauma Resusc Emerg Med. 2018;26(1):28.

Rau CS, Wu SC, Chien PC, Kuo PJ, Chen YC, Hsieh HY, Hsieh CH. Prediction of Mortality in Patients with Isolated Traumatic Subarachnoid Hemorrhage Using a Decision Tree Classifier: A Retrospective Analysis Based on a Trauma Registry System. Int J Environ Res Public Health. 2017;14(11):1420.

Abujaber A, Fadlalla A, Gammoh D, Abdelrahman H, Mollazehi M, El-Menyar A. Prediction of in-hospital mortality in patients on mechanical ventilation post traumatic brain injury: machine learning approach. BMC Med Inform Decis Mak. 2020;20(1):336.

Christie SA, Hubbard AE, Callcut RA, Hameed M, Dissak-Delon FN, Mekolo D, Saidou A, Mefire AC, Nsongoo P, Dicker RA, et al. Machine learning without borders? An adaptable tool to optimize mortality prediction in diverse clinical settings. J Trauma Acute Care Surg. 2018;85(5):921–7.

Raj R, Wennervirta JM, Tjerkaski J, Luoto TM, Posti JP, Nelson DW, Takala R, Bendel S, Thelin EP, Luostarinen T, et al. Dynamic prediction of mortality after traumatic brain injury using a machine learning algorithm. NPJ Digit Med. 2022;5(1):96.

Maeda Y, Ichikawa R, Misawa J, Shibuya A, Hishiki T, Maeda T, Yoshino A, Kondo Y. External validation of the TRISS, CRASH, and IMPACT prognostic models in severe traumatic brain injury in Japan. PLoS ONE. 2019;14(8):e0221791.

Hsu SD, Chao E, Chen SJ, Hueng DY, Lan HY, Chiang HH. Machine Learning Algorithms to Predict In-Hospital Mortality in Patients with Traumatic Brain Injury. J Pers Med. 2021;11(11):1144.

Zheng RZ, Zhao ZJ, Yang XT, Jiang SW, Li YD, Li WJ, Li XH, Zhou Y, Gao CJ, Ma YB, et al. Initial CT-based radiomics nomogram for predicting in-hospital mortality in patients with traumatic brain injury: a multicenter development and validation study. Neurol Sci. 2022;43(7):4363–72.

Wu E, Marthi S, Asaad WF. Predictors of Mortality in Traumatic Intracranial Hemorrhage: A National Trauma Data Bank Study. Front Neurol. 2020;11:587587.

Zhang G, Wang M, Cong D, Zeng Y, Fan W. Traumatic injury mortality prediction (TRIMP-ICDX): A new comprehensive evaluation model according to the ICD-10-CM codes. Med (Baltim). 2022;101(31):e29714.

Camarano JG, Ratliff HT, Korst GS, Hrushka JM, Jupiter DC. Predicting in-hospital mortality after traumatic brain injury: External validation of CRASH-basic and IMPACT-core in the national trauma data bank. Injury. 2021;52(2):147–53.

Matsuo K, Aihara H, Nakai T, Morishita A, Tohma Y, Kohmura E. Machine Learning to Predict In-Hospital Morbidity and Mortality after Traumatic Brain Injury. J Neurotrauma. 2020;37(1):202–10.

Morris RS, Tignanelli CJ, deRoon-Cassini T, Laud P, Sparapani R. Improved Prediction of Older Adult Discharge After Trauma Using a Novel Machine Learning Paradigm. J Surg Res. 2022;270:39–48.

Gao L, Smielewski P, Li P, Czosnyka M, Ercole A. Signal Information Prediction of Mortality Identifies Unique Patient Subsets after Severe Traumatic Brain Injury: A Decision-Tree Analysis Approach. J Neurotrauma. 2020;37(7):1011–9.

Ronning PA, Pedersen T, Skaga NO, Helseth E, Langmoen IA, Stavem K. External validation of a prognostic model for early mortality after traumatic brain injury. J Trauma. 2011;70(4):E56–61.

Amorim RL, Oliveira LM, Malbouisson LM, Nagumo MM, Simoes M, Miranda L, Bor-Seng-Shu E, Beer-Furlan A, De Andrade AF, Rubiano AM, et al. Prediction of Early TBI Mortality Using a Machine Learning Approach in a LMIC Population. Front Neurol. 2019;10:1366.

Guimarães KAA, de Amorim RLO, Costa MGF, Costa Filho CFF. Predicting early traumatic brain injury mortality with 1D convolutional neural networks and conventional machine learning techniques. Inf Med Unlocked 2022, 31. https://doi.org/10.1016/j.imu.2022.100984.

Daley M, Cameron S, Ganesan SL, Patel MA, Stewart TC, Miller MR, Alharfi I, Fraser DD. Pediatric severe traumatic brain injury mortality prediction determined with machine learning-based modeling. Injury. 2022;53(3):992–8.

Hale AT, Stonko DP, Brown A, Lim J, Voce DJ, Gannon SR, Le TM, Shannon CN. Machine-learning analysis outperforms conventional statistical models and CT classification systems in predicting 6-month outcomes in pediatric patients sustaining traumatic brain injury. Neurosurg Focus. 2018;45(5):E2.

Feng JZ, Wang Y, Peng J, Sun MW, Zeng J, Jiang H. Comparison between logistic regression and machine learning algorithms on survival prediction of traumatic brain injuries. J Crit Care. 2019;54:110–6.

Zhu P, Hussein NM, Tang J, Lin L, Wang Y, Li L, Shu K, Zou P, Xia Y, Bai G, et al. Prediction of Early Mortality Among Children With Moderate or Severe Traumatic Brain Injury Based on a Nomogram Integrating Radiological and Inflammation-Based Biomarkers. Front Neurol. 2022;13:865084.

Gravesteijn BY, Nieboer D, Ercole A, Lingsma HF, Nelson D, van Calster B, Steyerberg EW. collaborators C-T: Machine learning algorithms performed no better than regression models for prognostication in traumatic brain injury. J Clin Epidemiol. 2020;122:95–107.

Han J, King NK, Neilson SJ, Gandhi MP, Ng I. External validation of the CRASH and IMPACT prognostic models in severe traumatic brain injury. J Neurotrauma. 2014;31(13):1146–52.

Harrison DA, Griggs KA, Prabhu G, Gomes M, Lecky FE, Hutchinson PJ, Menon DK, Rowan KM. Risk Adjustment In Neurocritical care Study I: External Validation and Recalibration of Risk Prediction Models for Acute Traumatic Brain Injury among Critically Ill Adult Patients in the United Kingdom. J Neurotrauma. 2015;32(19):1522–37.

Lesko MM, Jenks T, O'Brien SJ, Childs C, Bouamra O, Woodford M, Lecky F. Comparing model performance for survival prediction using total Glasgow Coma Scale and its components in traumatic brain injury. J Neurotrauma. 2013;30(1):17–22.

Li X, Lu C, Wang J, Wan Y, Dai SH, Zhang L, Hu XA, Jiang XF, Fei Z. Establishment and validation of a model for brain injury state evaluation and prognosis prediction. Chin J Traumatol. 2020;23(5):284–9.

Nelson DW, Rudehill A, MacCallum RM, Holst A, Wanecek M, Weitzberg E, Bellander BM. Multivariate outcome prediction in traumatic brain injury with focus on laboratory values. J Neurotrauma. 2012;29(17):2613–24.

Rached M, Gaudet JG, Delhumeau C, Walder B. Comparison of two simple models for prediction of short term mortality in patients after severe traumatic brain injury. Injury. 2019;50(1):65–72.

Abujaber A, Fadlalla A, Gammoh D, Abdelrahman H, Mollazehi M, El-Menyar A. Prediction of in-hospital mortality in patients with post traumatic brain injury using National Trauma Registry and Machine Learning Approach. Scand J Trauma Resusc Emerg Med. 2020;28(1):44.

Raj R, Siironen J, Kivisaari R, Hernesniemi J, Skrifvars MB. Predicting outcome after traumatic brain injury: development of prognostic scores based on the IMPACT and the APACHE II. J Neurotrauma. 2014;31(20):1721–32.

Rughani AI, Dumont TM, Lu Z, Bongard J, Horgan MA, Penar PL, Tranmer BI. Use of an artificial neural network to predict head injury outcome. J Neurosurg. 2010;113(3):585–90.

Shi HY, Hwang SL, Lee KT, Lin CL. In-hospital mortality after traumatic brain injury surgery: a nationwide population-based comparison of mortality predictors used in artificial neural network and logistic regression models. J Neurosurg. 2013;118(4):746–52.

Sut N, Simsek O. Comparison of regression tree data mining methods for prediction of mortality in head injury. Expert Syst Appl. 2011;38(12):15534–9.

Weimer JM, Nowacki AS, Frontera JA. Withdrawal of Life-Sustaining Therapy in Patients With Intracranial Hemorrhage: Self-Fulfilling Prophecy or Accurate Prediction of Outcome? Crit Care Med. 2016;44(6):1161–72.

Kaewborisutsakul A, Tunthanathip T. Development and internal validation of a nomogram for predicting outcomes in children with traumatic subdural hematoma. Acute Crit Care. 2022;37(3):429–37.

Servia L, Montserrat N, Badia M, Llompart-Pou JA, Barea-Mendoza JA, Chico-Fernandez M, Sanchez-Casado M, Jimenez JM, Mayor DM, Trujillano J. Machine learning techniques for mortality prediction in critical traumatic patients: anatomic and physiologic variables from the RETRAUCI study. BMC Med Res Methodol. 2020;20(1):262.

Bhattacharyay S, Milosevic I, Wilson L, Menon DK, Stevens RD, Steyerberg EW, Nelson DW, Ercole A. participants C-Ti: The leap to ordinal: Detailed functional prognosis after traumatic brain injury with a flexible modelling approach. PLoS ONE. 2022;17(7):e0270973.

Hashemi B, Amanat M, Baratloo A, Forouzanfar MM, Rahmati F, Motamedi M, Safari S. Validation of CRASH Model in Prediction of 14-day Mortality and 6-month Unfavorable Outcome of Head Trauma Patients. Emerg (Tehran Iran). 2016;4(4):196–201.

Signorini DF, Andrews PJ, Jones PA, Wardlaw JM, Miller JD. Predicting survival using simple clinical variables: a case study in traumatic brain injury. J Neurol Neurosurg Psychiatry. 1999;66(1):20–5.

Andrews PJ, Sleeman DH, Statham PF, McQuatt A, Corruble V, Jones PA, Howells TP, Macmillan CS. Predicting recovery in patients suffering from traumatic brain injury by using admission variables and physiological data: a comparison between decision tree analysis and logistic regression. J Neurosurg. 2002;97(2):326–36.

Maas AI, Stocchetti N, Bullock R. Moderate and severe traumatic brain injury in adults. Lancet Neurol. 2008;7(8):728–41.

Prasad K. The Glasgow Coma Scale: a critical appraisal of its clinimetric properties. J Clin Epidemiol. 1996;49(7):755–63.

Chakrabarti D, Ramesh VJ, Manohar N. Brainstem Contusion: A Fallacy of GCS-BIS Synchrony. J Neurosurg Anesthesiol. 2016;28(4):429–30.

Nyam TE, Ao KH, Hung SY, Shen ML, Yu TC, Kuo JR. FOUR Score Predicts Early Outcome in Patients After Traumatic Brain Injury. Neurocrit Care. 2017;26(2):225–31.

Sadaka F, Patel D, Lakshmanan R. The FOUR score predicts outcome in patients after traumatic brain injury. Neurocrit Care. 2012;16(1):95–101.

Ahmadi S, Sarveazad A, Babahajian A, Ahmadzadeh K, Yousefifard M. Comparison of Glasgow Coma Scale and Full Outline of UnResponsiveness score for prediction of in-hospital mortality in traumatic brain injury patients: a systematic review and meta-analysis. Eur J Trauma Emerg Surg 2022. PMID: 36152069.

Lee S, Reddy Mudireddy A, Kumar Pasupula D, Adhaduk M, Barsotti EJ, Sonka M, Statz GM, Bullis T, Johnston SL, Evans AZ et al. Novel Machine Learning Approach to Predict and Personalize Length of Stay for Patients Admitted with Syncope from the Emergency Department. J Pers Med. 2022;13(1):7.

Sajda P. Machine learning for detection and diagnosis of disease. Annu Rev Biomed Eng. 2006;8:537–65.

Shickel B, Tighe PJ, Bihorac A, Rashidi P. Deep EHR: A Survey of Recent Advances in Deep Learning Techniques for Electronic Health Record (EHR) Analysis. IEEE J biomedical health Inf. 2018;22(5):1589–604.

Ansarullah SI, Mohsin Saif S, Abdul Basit Andrabi S, Kumhar SH, Kirmani MM, Kumar DP. An Intelligent and Reliable Hyperparameter Optimization Machine Learning Model for Early Heart Disease Assessment Using Imperative Risk Attributes. J Healthc Eng. 2022;2022:9882288.

Adnan M, Alarood AAS, Uddin MI, Ur Rehman I. Utilizing grid search cross-validation with adaptive boosting for augmenting performance of machine learning models. PeerJ Comput Sci. 2022;8:e803.

Bernhardt M, Jones C, Glocker B. Potential sources of dataset bias complicate investigation of underdiagnosis by machine learning algorithms. Nat Med. 2022;28(6):1157–8.

Jennett B, Teasdale G, Braakman R, Minderhoud J, Knill-Jones R. Predicting outcome in individual patients after severe head injury. Lancet (London England). 1976;1(7968):1031–4.

Majercik S, Bledsoe J, Ryser D, Hopkins RO, Fair JE, Brock Frost R, MacDonald J, Barrett R, Horn S, Pisani D, et al. Volumetric analysis of day of injury computed tomography is associated with rehabilitation outcomes after traumatic brain injury. J Trauma Acute Care Surg. 2017;82(1):80–92.

Hough DM, Yu L, Shiung MM, Carter RE, Geske JR, Leng S, Fidler JL, Huprich JE, Jondal DY, McCollough CH, et al. Individualization of abdominopelvic CT protocols with lower tube voltage to reduce i.v. contrast dose or radiation dose. AJR Am J Roentgenol. 2013;201(1):147–53.

Ahn JC, Noh YK, Rattan P, Buryska S, Wu T, Kezer CA, Choi C, Arunachalam SP, Simonetto DA, Shah VH, et al. Machine Learning Techniques Differentiate Alcohol-Associated Hepatitis From Acute Cholangitis in Patients With Systemic Inflammation and Elevated Liver Enzymes. Mayo Clin Proc. 2022;97(7):1326–36.

Zhang Z, Yang L, Han W, Wu Y, Zhang L, Gao C, Jiang K, Liu Y, Wu H. Machine Learning Prediction Models for Gestational Diabetes Mellitus: Meta-analysis. J Med Internet Res. 2022;24(3):e26634.

Acknowledgements

We would like to thank the researchers and study participants for their contributions.

Funding

The authors declare that they did not receive any funding from any source.

Author information

Authors and Affiliations

Contributions

Conceptualization: Jue Wang; Methodology: Jue Wang; Formal analysis and investigation: Jue Wang and Ming Jing Yin; Writing - original draft preparation: Jue Wang; Writing - review and editing: Jue Wang; Supervision: Han Chun Wen.And all authors commented on previous versions of the manuscript. All authors read and approved the final manuscript.

Corresponding author

Ethics declarations

Ethics approval and consent to participate

All analyses were based on previous published studies, thus no ethical approval and patient consent are required.

Consent for publication

Not applicable.

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Additional file 1: Table S1.

Literature search strategy.

Additional file 2: Table S2.

Predictors.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Wang, J., Yin, M.J. & Wen, H.C. Prediction performance of the machine learning model in predicting mortality risk in patients with traumatic brain injuries: a systematic review and meta-analysis. BMC Med Inform Decis Mak 23, 142 (2023). https://doi.org/10.1186/s12911-023-02247-8

Received:

Accepted:

Published:

DOI: https://doi.org/10.1186/s12911-023-02247-8