Abstract

Background

Clinical decision support (CDS) tools built using adult data do not typically perform well for children. We explored how best to leverage adult data to improve the performance of such tools. This study assesses whether it is better to build CDS tools for children using data from children alone or to use combined data from both adults and children.

Methods

Retrospective cohort study using data from 2017 to 2020. Participants included all individuals (adults and children) receiving an elective surgery at a large academic medical center that provides adult and pediatric services. We predicted need for mechanical ventilation or admission to the intensive care unit (ICU). Predictor variables included demographic, clinical, and service utilization factors known prior to surgery. We compared machine learning and regression-based predictive models, each built using either a pediatric or a combined adult-pediatric cohort. We compared model performance based on the area under the receiver operating characteristic curve (AUROC).

Results

While we found that adults and children have different risk factors, machine learning methods are able to appropriately model the underlying heterogeneity of each population and produce equally accurate predictive models whether using data only from pediatric patients or combined data from both children and adults. Results from regression-based methods were improved by the use of pediatric-specific data.

Conclusions

CDS tools for children can successfully use combined data from adults and children if the model accounts for underlying heterogeneity, as in machine learning models.

Background

Clinical decision support (CDS) tools are increasingly being used to assist providers during routine clinical care and for treatment decisions. While tools that are developed for specialized environments or specific populations often have better performance [1], there is a logistical cost to implementing and maintaining multiple models. The development of population-specific CDS tools requires significant additional effort for development, implementation, training, and maintenance. Moreover, development of subgroup-specific CDS tools may not be analytically feasible due to the relatively small size of the data sets that would be used for development. Therefore, there is a critical need to determine whether it is better to have multiple tools developed for specific patient populations, or more generalized tools that perform well—though perhaps not optimally—across multiple populations and environments.

Pediatric patients are an important subgroup for which it may be necessary to develop specialized CDS tools. It is well recognized that children have different physiological profiles, risk factors, and event rates for different clinical outcomes and adverse events [2,3,4,5,6,7]. Moreover, there are well documented differences in patterns of healthcare utilization and outcomes between adult and pediatric populations. Because many hospitals serve both pediatric and adult patients, it is necessary to determine whether CDS tools should be built specifically for different age groups versus for all patients. To date, the CDS tools that have been developed using adult data and then applied to children have not performed well [8,9,10,11,12]. Moreover, various pediatric-specific CDS tools or risk indices have been developed to reasonable levels of success, particularly when using modern predictive techniques incorporating machine learning [13,14,15,16,17].

At the beginning of the COVID-19 pandemic, we were tasked with developing a CDS tool for hospital resource utilization after planned elective surgeries (including anticipated length of stay, discharge to a skilled nursing facility, intensive care unit (ICU) admission, and requirement for mechanical ventilation) [18]. Since the start of the pandemic, surgical leadership has used the CDS tool in conjunction with knowledge of the local COVID-19 infection rates to determine whether to continue with or postpone an elective surgical case if the tertiary care hospital were to become resource-constrained. The data are pulled directly from our Epic-based system into a datamart. An R script generates the requisite predictions, which are then visualized within a Tableau dashboard. In order to deploy the CDS tool quickly, we designed it to operate across all age groups and implemented it within a Tableau dashboard that has been in use since June 2020. Since implementing the CDS tool, we have had the opportunity to examine its applicability within the pediatric patient population. Herein, we directly compare the performance of two sets of CDS tools designed to predict post-surgical resource utilization: one trained on a mixed adult-pediatric data set, the other trained solely on a pediatric data set. Additionally, we examine whether a machine-learning algorithm that is equipped to model heterogeneity (i.e., interactions of different characteristics) is better suited to operate across patient populations than a model that cannot model these interactions. Overall, our results show that while children have different risk factors than adults, machine-learning approaches are well suited to modeling these heterogeneities in a mixed sample.

Methods

Data

Study setting

This study was conducted using data from the electronic health records (EHR) system at Duke University Health System, which consists of three hospitals: a large tertiary care hospital and two community hospitals. Pediatric surgeries are almost exclusively performed at the tertiary care center. Our institution has used an integrated Epic system since 2014, which covers the three hospitals in our system as well as a network of over 100 primary care and outpatient specialty clinics.

Cohort

We abstracted patient and encounter data for all elective surgeries from January 1, 2017, to March 1, 2020 (i.e., prior to the COVID-19 pandemic). There is no formal specification within our EHR for elective surgery. Instead, we included procedures coded with the admission source “Surgery Admit Inpatient.” This code corresponds to instances where the patient is admitted directly to the hospital for surgery rather than, for example, via the emergency department. Additionally, we excluded procedures taking place on a Saturday or Sunday and any procedures that were not marked as completed. We defined a pediatric patient as any patient 18 years of age or younger on the date of their surgery. Patients were considered adults if they were 19 years of age or older at the time of surgery. We developed two cohorts for the purposes of model development. The “combined” cohort included all patients, regardless of age. The “pediatric” cohort excluded patients 19 years of age or older.
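The inclusion rules above can be sketched as a simple filter. This is an illustration only, not the code used in the study: the record field names (`admission_source`, `surgery_date`, `birth_date`, `completed`) are hypothetical, and computing age in whole years is an assumption.

```python
from datetime import date

PEDIATRIC_MAX_AGE = 18  # pediatric: 18 years of age or younger on the surgery date
STUDY_START, STUDY_END = date(2017, 1, 1), date(2020, 3, 1)

def age_on(birth_date, on_date):
    """Age in whole years on a given date (assumed definition of age)."""
    years = on_date.year - birth_date.year
    if (on_date.month, on_date.day) < (birth_date.month, birth_date.day):
        years -= 1
    return years

def is_eligible(record):
    """Apply the elective-surgery proxy rules to one record (hypothetical fields)."""
    return (
        record["admission_source"] == "Surgery Admit Inpatient"
        and STUDY_START <= record["surgery_date"] <= STUDY_END
        and record["surgery_date"].weekday() < 5  # Monday=0 ... Friday=4
        and record["completed"]
    )

def build_cohorts(records):
    """Return the combined cohort (all ages) and the pediatric cohort."""
    combined = [r for r in records if is_eligible(r)]
    pediatric = [
        r for r in combined
        if age_on(r["birth_date"], r["surgery_date"]) <= PEDIATRIC_MAX_AGE
    ]
    return combined, pediatric
```

The pediatric cohort is derived from the combined cohort, so both share identical inclusion rules and differ only in the age restriction.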

Predictor variables

We abstracted patient-level predictor variables known prior to the time of surgery, including patient demographics, service utilization history, medications prescribed in the past year, comorbidities, and surgery-specific factors. We abstracted pre-surgical CPT codes and grouped them by specialty. We retained all codes that had at least 25 total instances, resulting in 284 unique procedure groupings. A total of 53 unique predictor variables (with multiple levels each) were considered (Additional file 1: Tables 1 and 2). For binary variables such as comorbidities and medications, this list was winnowed such that each model used predictors present in at least 0.5% of cases. These binary predictors were calculated separately for the combined and pediatric cohorts. The model based on the combined cohort used 48 predictor variables, while the model based on the pediatric cohort used 34 predictor variables.
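The prevalence filter for binary predictors can be illustrated as follows. This is a minimal sketch that assumes each case is a dictionary of 0/1 indicators; the function and variable names are hypothetical. Because prevalence is computed within whichever cohort is passed in, the combined and pediatric cohorts can retain different predictor sets, as described above.

```python
def winnow_binary_predictors(rows, binary_names, min_prevalence=0.005):
    """Keep binary predictors present in at least min_prevalence of cases."""
    n_cases = len(rows)
    kept = []
    for name in binary_names:
        prevalence = sum(1 for row in rows if row[name]) / n_cases
        if prevalence >= min_prevalence:
            kept.append(name)
    return kept
```

Calling the function once per cohort mirrors the statement that the binary predictors were calculated separately for the combined and pediatric cohorts.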

Outcome variable definition

In the initial development of the CDS tool, we were tasked with predicting four outcomes related to hospital resource utilization: overall length of stay, admission to the intensive care unit (ICU), requirement for mechanical ventilation, and discharge to a skilled nursing facility. Because children are rarely discharged to a skilled nursing facility and evaluating continuous outcomes poses unique challenges, we focused on the two binary outcomes: admission to the ICU and requirement for mechanical ventilation.

Statistical Analysis

Descriptive statistics

We compared the pediatric and adult patient populations. We report standardized mean differences (SMDs) where an SMD > 0.10 indicates that the two groups are out of balance.
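For readers unfamiliar with the SMD, the sketch below shows one common way to compute it. The exact formula used in the study is not stated here, so the pooled standard deviation definition (the square root of the average of the two group variances) is an assumption.

```python
from math import sqrt
from statistics import mean, variance

def smd_continuous(group_a, group_b):
    """Absolute difference in means divided by the pooled standard deviation."""
    pooled_sd = sqrt((variance(group_a) + variance(group_b)) / 2)
    return abs(mean(group_a) - mean(group_b)) / pooled_sd

def smd_binary(group_a, group_b):
    """SMD for 0/1 indicators, using the binomial variance p * (1 - p)."""
    p_a, p_b = mean(group_a), mean(group_b)
    pooled_sd = sqrt((p_a * (1 - p_a) + p_b * (1 - p_b)) / 2)
    return abs(p_a - p_b) / pooled_sd
```

Under this definition, a characteristic with an SMD above 0.10 would be flagged as imbalanced between adults and children. Both functions require some variation in at least one group, otherwise the pooled standard deviation is zero.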

Predictive model algorithms

A predictive model may not transport well from one patient group to another if each group has different underlying risk factors for the outcomes of interest. Analytically, this would mean that there is an interaction between a demographic characteristic (i.e., age) and a risk factor (e.g., weight). To assess this hypothesis, we considered three modeling approaches. In our initial work we used the Random Forests (RF) algorithm [19]. RF is a machine-learning algorithm that consists of an aggregation of decision trees; one feature of decision trees is that they are well suited for modeling interactions. The second approach was LASSO logistic regression. LASSO is an extension of logistic regression that performs an implicit variable selection to generate more stable predictions [20]. Like typical regression models, LASSO does not explicitly model interactions. Our final model was also a LASSO model to which we explicitly added an interaction term between age and each predictor.
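To make the third approach concrete, the sketch below shows how explicit age interactions could be appended to a set of numeric predictors before fitting a penalized regression. It is illustrative only: the column naming scheme and the dictionary-based row format are assumptions, and the study itself fit its LASSO models in R.

```python
def add_age_interactions(rows, predictor_names, age_name="age"):
    """Return copies of rows with an age-by-predictor product for each predictor."""
    expanded = []
    for row in rows:
        new_row = dict(row)
        for name in predictor_names:
            if name != age_name:
                # The product term lets a linear model give the predictor
                # a different effect at different ages.
                new_row[f"{age_name}_x_{name}"] = row[age_name] * row[name]
        expanded.append(new_row)
    return expanded
```

A tree-based method such as RF can discover this kind of age-dependent effect through successive splits, whereas a regression model only captures it when the product terms are supplied.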

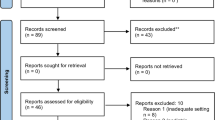

Analysis workflow

Our overall workflow is shown in Fig. 1. We randomly divided the full dataset into training (two-thirds) and testing (one-third) sets. From the training set, we created two analytic training cohorts: a combined dataset of adults and children and a subset of children alone. For the testing set, we only used children to assess how the different models perform in a pediatric population. We fit the models on the training data using cross-validation to choose optimal tuning parameters and applied the best model to the independent test data. Overall, we fit a total of 12 models that combined two outcomes, two cohorts (combined and pediatric), and three modeling approaches. To assess performance during the COVID period, we abstracted data on pediatric encounters from March 2020 to January 2022.
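The splitting scheme and the resulting grid of 12 models can be sketched as below. This is a simplified illustration under stated assumptions: records are dictionaries with a hypothetical `age` field, the split is a plain random shuffle, and the labels in the model grid are placeholder names rather than identifiers from the study code.

```python
import random
from itertools import product

OUTCOMES = ["icu_admission", "mechanical_ventilation"]
COHORTS = ["combined", "pediatric"]
APPROACHES = ["random_forest", "lasso", "lasso_with_age_interactions"]
MODEL_GRID = list(product(OUTCOMES, COHORTS, APPROACHES))  # 2 x 2 x 3 = 12 models

def split_workflow(records, seed=0, train_fraction=2 / 3, pediatric_max_age=18):
    """Split once, then derive both training cohorts and the pediatric test set."""
    rng = random.Random(seed)
    shuffled = records[:]
    rng.shuffle(shuffled)
    n_train = round(len(shuffled) * train_fraction)
    train, test = shuffled[:n_train], shuffled[n_train:]
    train_combined = train
    train_pediatric = [r for r in train if r["age"] <= pediatric_max_age]
    test_pediatric = [r for r in test if r["age"] <= pediatric_max_age]
    return train_combined, train_pediatric, test_pediatric
```

Because both training cohorts come from the same training partition, no child in the pediatric test set contributes to either the combined or the pediatric models.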

Model metrics

For each of the 12 models we calculated global performance metrics, including the area under the receiver operating characteristic curve (AUROC) and the calibration slope. We used a bootstrap to calculate 95% confidence intervals and a permutation test to assess differences between the model AUROCs. To gain insights into the differences between the combined and pediatric models, we used the RF model fit to identify the most important variables within each model.
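The sketch below illustrates how these metrics might be computed. It is not the study's implementation: the percentile bootstrap and the paired permutation scheme (randomly swapping the two models' predictions for each patient) are assumptions, since the specific variants are not described here. Labels are assumed to be coded 0/1 and to include both classes.

```python
import random

def auroc(labels, scores):
    """Probability that a random positive case outscores a random negative case."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUROC needs at least one positive and one negative case")
    wins = sum((p > q) + 0.5 * (p == q) for p in pos for q in neg)
    return wins / (len(pos) * len(neg))

def bootstrap_ci(labels, scores, n_boot=1000, alpha=0.05, seed=0):
    """Percentile bootstrap confidence interval for the AUROC."""
    rng = random.Random(seed)
    n = len(labels)
    stats = []
    while len(stats) < n_boot:
        idx = [rng.randrange(n) for _ in range(n)]
        ys = [labels[i] for i in idx]
        if 0 < sum(ys) < n:  # skip resamples that contain only one class
            stats.append(auroc(ys, [scores[i] for i in idx]))
    stats.sort()
    lower = stats[int((alpha / 2) * n_boot)]
    upper = stats[int((1 - alpha / 2) * n_boot) - 1]
    return lower, upper

def permutation_p_value(labels, scores_a, scores_b, n_perm=1000, seed=0):
    """Paired permutation test for a difference in AUROC between two models."""
    rng = random.Random(seed)
    observed = abs(auroc(labels, scores_a) - auroc(labels, scores_b))
    extreme = 0
    for _ in range(n_perm):
        perm_a, perm_b = [], []
        for s_a, s_b in zip(scores_a, scores_b):
            if rng.random() < 0.5:  # swap the two models' predictions
                s_a, s_b = s_b, s_a
            perm_a.append(s_a)
            perm_b.append(s_b)
        if abs(auroc(labels, perm_a) - auroc(labels, perm_b)) >= observed:
            extreme += 1
    return (extreme + 1) / (n_perm + 1)
```

The pairwise AUROC shown here is quadratic in the number of cases, which is acceptable for a test set of this size; a rank-based implementation would scale better.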

Decision rule analysis

We assessed the impact each model would have on a generated decision rule. As in our initial CDS tool, we transformed the predicted probabilities into discrete categorizations of low, medium and high risk. The lower cutoff was calibrated in all models to correspond to a sensitivity of 95%, such that 95% of the positive training data fell into the medium- or high-risk categories and 5% into the low-risk category. This focus on sensitivity was intended to overestimate rather than underestimate risk in assigning categories. The upper cutoff was set to maximize the utility of the high-risk category, thereby creating a model with a large positive predictive value (PPV = true positives/predicted positives) while still encompassing a significant portion of the data. Because of the large difference in baseline probability between ICU admission and requirement for mechanical ventilation, this threshold was set separately for each outcome. We determined that an 80% PPV was optimal for models predicting ICU admission and a 50% PPV was optimal for models predicting requirement for mechanical ventilation.
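One way to derive the two cutoffs is sketched below. The selection rules are assumptions made for illustration: the lower cutoff is taken as the highest score that still keeps the target share of positive cases at or above it, and the upper cutoff as the lowest score at which the PPV among flagged cases reaches the target, so that the high-risk category stays as large as possible. In the study, the cutoffs were calibrated on training data.

```python
from math import floor

def lower_cutoff(labels, scores, target_sensitivity=0.95):
    """Highest cutoff that keeps at least target_sensitivity of positives above it."""
    pos_scores = sorted(s for y, s in zip(labels, scores) if y == 1)
    # Number of positive cases allowed to fall into the low-risk category.
    n_allowed_below = floor(len(pos_scores) * (1 - target_sensitivity) + 1e-9)
    return pos_scores[n_allowed_below]

def upper_cutoff(labels, scores, target_ppv):
    """Lowest cutoff whose flagged cases reach the target PPV, or None if none does."""
    for cutoff in sorted(set(scores)):
        flagged = [y for y, s in zip(labels, scores) if s >= cutoff]
        if sum(flagged) / len(flagged) >= target_ppv:
            return cutoff
    return None

def categorize(score, low, high):
    """Map a predicted probability to a low, medium, or high risk category."""
    if score < low:
        return "low"
    if high is not None and score >= high:
        return "high"
    return "medium"
```

With targets of 0.80 for ICU admission and 0.50 for mechanical ventilation, `upper_cutoff` would be called once per outcome, matching the outcome-specific thresholds described above.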

All analyses were performed in R version 3.6.3. The ranger and glmnet packages were used for the RF and LASSO models, respectively [21, 22]. This work was declared exempt by the DUHS IRB.

Results

Cohort description

We abstracted data on a total of 42,209 elective surgeries, of which 39,547 (94%) were for patients 19 years of age and older and 2,662 (6%) were for patients 18 years of age or younger. Table 1 presents patient information stratified by age. As expected, there were meaningful differences (SMD > 0.10) for almost all patient characteristics, highlighting the differences between adult and pediatric patients. Table 2 shows surgery characteristics stratified by age, similarly showing meaningful differences in resource utilization, severity, and procedure type.

Predictive model performance

We built 6 models for each of the two clinical outcomes of interest – ICU admission and requirement for mechanical ventilation. After building models based on their respective training sets (n = 27,182 for combined adult and pediatric patients; n = 1,815 for pediatric patients only), we evaluated all models against the pediatric testing set (n = 894; results shown in Table 3). The best prediction model for ICU admission had an AUROC of 0.945 (95% CI: 0.928, 0.960) in the test data while the best model for requirement for mechanical ventilation had an AUROC of 0.862 (95% CI: 0.919, 0.902). ROC plots are shown in Additional file 1: Fig. 1a and b. There were significant differences in performance between the different models when using the combined adult/pediatric data. In predicting ICU admission and requirement for mechanical ventilation, the RF models performed better overall than the LASSO models (ICU admission: p < 0.001; requirement for mechanical ventilation: p < 0.023). Performance was not significantly different between the RF models using combined adult/pediatric data and pediatric data alone (ICU admission: p = 0.886; requirement for mechanical ventilation: p = 0.112). Conversely, the performance of the pediatric LASSO models was significantly better than LASSO models developed with combined adult/pediatric data (ICU admission: p < 0.002; requirement for mechanical ventilation: p < 0.028). Incorporation of explicit age-based interactions in the LASSO model attenuated differences between models developed with combined adult/pediatric data and pediatric data alone, and there was only a significant difference in performance for models predicting ICU admission (ICU admission p < 0.004; requirement for mechanical ventilation p = 0.077). Testing the model on 1428 pediatric encounters during the COVID period yielded very similar performance (Additional file 1: Table 3).

Decision rule performance

We assessed the accuracy of decision rules for each model using the pediatric test data (Fig. 2). We set the desired sensitivity of the low threshold at 95%. We set the PPV of ICU admission and requirement for mechanical ventilation at 80% and 50%, respectively. While the RF models showed no difference in global performance (based on AUROC), there were slight differences in the performance of a decision rule (Table 3). Importantly, the models developed using only pediatric data were closer to the desired decision rule metrics than models developed using combined adult/pediatric data for prediction of both ICU admission and requirement for mechanical ventilation. Of note, the models trained on combined adult/pediatric data were more sensitive than the models trained on pediatric data alone for both ICU admission (0.979 vs. 0.935) and requirement for mechanical ventilation (0.976 vs. 0.941).

Variable importance

While RF does not generate beta coefficients, this machine learning approach can identify “important” predictors, or predictors that play a role in achieving the prediction accuracy of the model. Table 4 shows the top 10 variables identified by the RF models for both combined adult/pediatric and purely pediatric cohorts. All four models included age, height, weight, previous ambulatory encounters, specialty, and service among the top predictors. Notably, the important predictors for the different models varied with respect to the specific surgery CPT code, comorbidities, and medication usage.

Discussion and conclusion

We sought to assess the performance of CDS tools to predict resource utilization after pediatric elective surgeries using either combined adult/pediatric data or pediatric data alone. Our results indicate that models using a traditional regression-based method exhibit better performance if they are built with cohort-specific pediatric data than with combined adult/pediatric data. In contrast, models using a machine learning method (RF) exhibited similar performance whether built using combined adult/pediatric data or pediatric data alone. These findings suggest that machine learning-based models may be able to more appropriately account for key differences between pediatric and adult patient populations. Moreover, these findings have important implications for the development of CDS tools for different populations.

CDS tools are increasingly used to help guide clinical care and decision making; however, very few of these tools are developed specifically for pediatric populations [23]. In our experience, data from pediatric patients are frequently removed from the datasets used to develop CDS tools. Moreover, datasets for specific clinical subpopulations, such as pediatric patients, are frequently not large enough to train the models that underlie these tools. Further, it is valuable to be able to use a single CDS across multiple patient populations. For example, while tools exist for hospital readmission for specific sub-populations [24, 25], we have found it easier to use a generalized hospital readmission risk score at our own institution [26]. Being able to leverage data from both adult and pediatric patients in the development of CDS tools could facilitate the development of CDS tools that perform well among pediatric patient populations.

Though we found that CDS tools based on combined adult and pediatric data perform well, this does not imply that children and adults have the same risk factors. Other groups have found that models trained solely on adults do not translate well to children [8], including tools for comorbidity indices [9], emergency medical services (EMS) dispatch triage protocols [10], mortality scores [11], and surgery duration [12]. Similarly, we found that models built with regression-based methods using combined adult and pediatric data did not perform as well within the pediatric population as a model built on pediatric data alone. Importantly, explicitly including interaction terms for age improved the transportability of a regression-based model developed with combined adult and pediatric data to a pediatric population. These findings are supported by the examination of the top predictor variables from the models using combined adult and pediatric data versus pediatric data alone, in which adult and pediatric populations were found to have different important predictors for the clinical outcomes of interest. Our findings demonstrate that accurately capturing and modeling differences associated with clinical sub-populations is a critical component in developing transportable CDS tools.

Our study has several strengths as well as some limitations. We leveraged a large dataset from a diverse patient population to develop and test multiple modeling approaches. However, as a single center study, model development and performance are dependent on local context, including composition of the local patient population, types of data commonly captured within the EHR, and the clinical scenario for which the CDS tool is being developed. Moreover, study results are likely to be dependent on the relative size of the pediatric population available for model development. It is possible that a health system with a high volume of pediatric surgeries may have a pediatric population that is large enough to develop a CDS tool specific to that population. Our findings are also specific to CDS tools designed to predict post-surgical resource utilization and may or may not be generalizable to other CDS types. Therefore, we do not view these as definitive results that model developers should always combine adult and pediatric data. Instead, our study provides an overall approach that can be used to develop and evaluate different models for CDS tools.

Overall, our findings demonstrate that while adults and children have different risk factors and characteristics that are important for predicting clinical outcomes, appropriate machine learning techniques can generate CDS tools that effectively model outcomes for both pediatric and adult populations. Importantly, this finding suggests that even for clinical outcomes for which the relevant pediatric patient population may be small, models may be developed using data from adults, provided that the model accounts for the interaction between age and important patient- and procedure-level factors. Further, these findings indicate that there are additional opportunities and clinical scenarios that may be amenable to the development and application of CDS tools.

Availability of data and materials

The datasets generated and/or analyzed during the current study are not publicly available due to patient privacy but are available from the corresponding author on reasonable request.

Change history

12 May 2022

A Correction to this paper has been published: https://doi.org/10.1186/s12911-022-01846-1

References

Ding X, Gellad ZF, Mather C, et al. Designing risk prediction models for ambulatory no-shows across different specialties and clinics. J Am Med Inform Assoc JAMIA. 2018;25(8):924–30. https://doi.org/10.1093/jamia/ocy002.

Figaji AA. Anatomical and physiological differences between children and adults relevant to traumatic brain injury and the implications for clinical assessment and care. Front Neurol. 2017;8:685. https://doi.org/10.3389/fneur.2017.00685.

Hattis D, Ginsberg G, Sonawane B, et al. Differences in pharmacokinetics between children and adults—II Children’s variability in drug elimination half‐lives and in some parameters needed for physiologically‐based pharmacokinetic modeling. Risk Anal. 2003;23(1):117–142. https://doi.org/10.1111/1539-6924.00295

Rogers DM, Olson BL, Wilmore JH. Scaling for the VO2-to-body size relationship among children and adults. J Appl Physiol. 1995;79(3):958–67. https://doi.org/10.1152/jappl.1995.79.3.958.

Nadkarni VM. First documented rhythm and clinical outcome from in-hospital cardiac arrest among children and adults. JAMA. 2006;295(1):50. https://doi.org/10.1001/jama.295.1.50.

Podewils LJ, Liedtke LA, McDonald LC, et al. A national survey of severe influenza-associated complications among children and adults, 2003–2004. Clin Infect Dis. 2005;40(11):1693–6. https://doi.org/10.1086/430424.

McGregor TL, Jones DP, Wang L, et al. Acute kidney injury incidence in noncritically Ill hospitalized children, adolescents, and young adults: a retrospective observational study. Am J Kidney Dis. 2016;67(3):384–90. https://doi.org/10.1053/j.ajkd.2015.07.019.

Mack EH, Wheeler DS, Embi PJ. Clinical decision support systems in the pediatric intensive care unit. Pediatr Crit Care Med J Soc Crit Care Med World Fed Pediatr Intensive Crit Care Soc. 2009;10(1):23–8. https://doi.org/10.1097/PCC.0b013e3181936b23.

Jiang R, Wolf S, Alkazemi MH, et al. The evaluation of three comorbidity indices in predicting postoperative complications and readmissions in pediatric urology. J Pediatr Urol. 2018;14(3):244.e1-244.e7. https://doi.org/10.1016/j.jpurol.2017.12.019.

Fessler SJ, Simon HK, Yancey AH, Colman M, Hirsh DA. How well do General EMS 911 dispatch protocols predict ED resource utilization for pediatric patients? Am J Emerg Med. 2014;32(3):199–202. https://doi.org/10.1016/j.ajem.2013.09.018

Castello FV, Cassano A, Gregory P, Hammond J. The Pediatric Risk of Mortality (PRISM) Score and Injury Severity Score (ISS) for predicting resource utilization and outcome of intensive care in pediatric trauma. Crit Care Med. 1999;27(5):985–8. https://doi.org/10.1097/00003246-199905000-00041.

Bravo F, Levi R, Ferrari LR, McManus ML. The nature and sources of variability in pediatric surgical case duration. Paediatr Anaesth. 2015;25(10):999–1006. https://doi.org/10.1111/pan.12709.

Master N, Zhou Z, Miller D, Scheinker D, Bambos N, Glynn P. Improving predictions of pediatric surgical durations with supervised learning. Int J Data Sci Anal. 2017;4(1):35–52. https://doi.org/10.1007/s41060-017-0055-0.

Killien EY, Mills B, Errett NA, et al. Prediction of pediatric critical care resource utilization for disaster triage. Pediatr Crit Care Med J Soc Crit Care Med World Fed Pediatr Intensive Crit Care Soc. 2020;21(8):e491–501. https://doi.org/10.1097/PCC.0000000000002425.

Wulff A, Montag S, Rübsamen N, et al. Clinical evaluation of an interoperable clinical decision-support system for the detection of systemic inflammatory response syndrome in critically ill children. BMC Med Inform Decis Mak. 2021;21(1):62. https://doi.org/10.1186/s12911-021-01428-7.

Oofuvong M, Ratprasert S, Chanchayanon T. Risk prediction tool for use and predictors of duration of postoperative oxygen therapy in children undergoing non-cardiac surgery: a case-control study. BMC Anesthesiol. 2018;18(1):137. https://doi.org/10.1186/s12871-018-0595-4.

McLeod DJ, Asti L, Mahida JB, Deans KJ, Minneci PC. Preoperative risk assessment in children undergoing major urologic surgery. J Pediatr Urol. 2016;12(1):26.e1-7. https://doi.org/10.1016/j.jpurol.2015.04.044.

Goldstein BA, Cerullo M, Krishnamoorthy V, et al. Development and performance of a clinical decision support tool to inform resource utilization for elective operations. JAMA Netw Open. 2020;3(11):e2023547. https://doi.org/10.1001/jamanetworkopen.2020.23547.

Breiman L. Random forests. Mach Learn. 2001;45(1):5–32. https://doi.org/10.1023/A:1010933404324.

Tibshirani R. Regression shrinkage and selection via the lasso. J R Stat Soc Ser B Methodol. 1996;58(1):267–88. https://doi.org/10.1111/j.2517-6161.1996.tb02080.x.

Wright MN, Ziegler A. ranger: A fast implementation of random forests for high dimensional data in C++ and R. J Stat Softw. 2017;77(1). https://doi.org/10.18637/jss.v077.i01.

Friedman J, Hastie T, Tibshirani R. Regularization paths for generalized linear models via coordinate descent. J Stat Softw. 2010;33(1):1–22. https://doi.org/10.18637/jss.v033.i01.

Goldstein BA, Navar AM, Pencina MJ, Ioannidis JPA. Opportunities and challenges in developing risk prediction models with electronic health records data: a systematic review. J Am Med Inform Assoc JAMIA. 2017;24(1):198–208. https://doi.org/10.1093/jamia/ocw042.

Eapen ZJ, Liang L, Fonarow GC, et al. Validated, electronic health record deployable prediction models for assessing patient risk of 30-day rehospitalization and mortality in older heart failure patients. JACC Heart Fail. 2013;1(3):245–51. https://doi.org/10.1016/j.jchf.2013.01.008.

Perkins RM, Rahman A, Bucaloiu ID, et al. Readmission after hospitalization for heart failure among patients with chronic kidney disease: a prediction model. Clin Nephrol. 2013;80(6):433–40. https://doi.org/10.5414/CN107961.

Gallagher D, Zhao C, Brucker A, et al. Implementation and continuous monitoring of an electronic health record embedded readmissions clinical decision support tool. J Pers Med. 2020;10(3):E103. https://doi.org/10.3390/jpm10030103.

Acknowledgements

We have no acknowledgements.

Funding

This research did not receive any specific grant from funding agencies in the public, commercial, or not-for-profit sectors.

Author information

Contributions

PS analyzed and interpreted the data regarding resource utilization, under guidance from BG. PS, BG, and JH were contributors in writing the manuscript. RT, KH, and JR provided clinical expertise in study design and analysis. All authors read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

All methods were carried out in accordance with relevant institutional and governmental policies and regulations. This work was approved by the Duke University Health System Institutional Review Board under protocol number Pro00065513 as exempt research. As this study is a retrospective chart review using de-identified data, the DUHS IRB approved it as exempt from additional informed consent requirements.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

The original version of this article was revised: part of the ‘Outcome Variable Definition’ and the entirety of the ‘Descriptive statistics’ subsection was missing.

Supplementary information

Additional file 1: Supplemental Table 1. Predictor variables used in each model. Supplemental Table 2. CPT code ranges used (286, including None and Other). Supplemental Table 3. Performance of models on pediatric patients (n = 1428) during the COVID period (March 2020 – January 2022). Supplemental Figure 1. ROC plots for ICU and ventilator models based on training population and model type.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Sabharwal, P., Hurst, J.H., Tejwani, R. et al. Combining adult with pediatric patient data to develop a clinical decision support tool intended for children: leveraging machine learning to model heterogeneity. BMC Med Inform Decis Mak 22, 84 (2022). https://doi.org/10.1186/s12911-022-01827-4

DOI: https://doi.org/10.1186/s12911-022-01827-4