Abstract

Background

Parent-reported experience measures are part of pediatric Quality of Care (QoC) assessments. However, existing measures were not developed for use across multiple healthcare settings or throughout the illness trajectory of seriously ill children. Formative work involving in-depth interviews with parents of children with serious illnesses generated 66 draft items describing key QoC processes. Our present aim is to develop a comprehensive parent-reported experience measure of QoC for children with serious illnesses and evaluate its content validity and feasibility.

Methods

For evaluating content validity, we conducted a three-round Delphi expert panel review with 24 multi-disciplinary experts. Next, we pre-tested the items and instructions with 12 parents via cognitive interviews to refine clarity and understandability. Finally, we pilot-tested the full measure with 30 parents using self-administered online surveys to finalize the structure and content.

Results

The Delphi expert panel review reached consensus on 68 items. Pre-testing with parents of seriously ill children led to consolidation of some items. Pilot-testing supported feasibility of the measure, resulting in a comprehensive measure comprising 56 process assessment items, categorized under ten subthemes and four themes: (1) Professional qualities of healthcare workers, (2) Supporting parent-caregivers, (3) Collaborative and holistic care, and (4) Efficient healthcare structures and standards. We named this measure the PaRental Experience with care for Children with serIOUS illnesses (PRECIOUS).

Conclusions

PRECIOUS is the first comprehensive parent-reported experience measure of QoC for children with serious illnesses, and it has the potential to standardize QoC assessment from parental perspectives. PRECIOUS allows for QoC process evaluation across contexts (such as geographic location or care setting), different healthcare workers, and over the illness trajectory for children suffering from a range of serious illnesses.

Background

Each year, over 21 million children globally suffer from serious life-threatening and life-limiting illnesses [1, 2], placing significant burden of care on their parents and the health system. Parents of these children often need to navigate convoluted healthcare systems to make crucial medical and financial decisions [3]. As a result, they too are susceptible to adverse health and psychosocial outcomes [4]. Given the established relationship between parental and child well-being [5, 6], healthcare workers (HCWs) should strive to meet the needs of both groups and approach the parent/child dyad as a single unit of care [7,8,9].

According to the Donabedian Quality of Care (QoC) model [10], process measures evaluate the activities that revolve around care delivery, such as communication between patients and HCWs and timely notifications of clinical or lab test results. Parent-Reported Experience Measures (PaREMs) are process measures that help us to understand “what” and “how” care activities occurred from the perspective of parents [11, 12], acknowledging them not only as proxies of the child-patient but also as the other key recipient of care services [13,14,15]. PaREMs are invaluable for reporting and driving process improvements within and across health and social care settings, including homes, hospices, and clinics [16, 17]. They support parental engagement, which can in turn educate both parents and HCWs, inform policymakers, and improve service delivery and governance [18].

Most existing validated experience measures are designed for adults with serious illnesses. Moreover, measures intended for children, such as the widely used Consumer Assessment of Healthcare Providers and Systems surveys (only a couple of which have child versions), were not designed for parents of seriously ill children with unique care needs. Rather, most measures are for children who are not necessarily seriously ill or who are otherwise healthy but hospitalized episodically. Our published scoping review, which mapped and evaluated PaREMs for parents of seriously ill children, showed that there is no single PaREM that is applicable across the illness trajectory and the diverse service providers involved in a seriously ill child’s care network [19]. For example, while the Measure of Processes of Care (MPOC) addresses family-centred behaviors of HCWs from community-based centres, it is not intended for acute or hospital care. Conversely, the Quality of Children’s Palliative Care Instrument (QCPCI) assesses specialist hospital care throughout the illness, but excludes community-based services. The Pediatric Integrated Care Survey [20] captures parental experiences of care coordination across settings, but omits some QoC domains crucial for the chronic, serious illness journey, such as parental empowerment, caregiving stress reduction, and access to financial and informational resources, all of which are vital for parents managing prolonged child illnesses with high caregiving demands.

Given the complex and multidisciplinary nature of pediatric serious illnesses, there is a need for a comprehensive PaREM that can assess quality of care across diverse settings and HCWs for seriously ill children and their parents. Such measures should also strive to be widely relevant both across contexts (such as geographic location or care setting) and throughout the illness trajectory for children suffering from a range of serious illnesses. As the first in a series of steps, we conducted a qualitative study to identify key care processes that are important to parents of seriously ill children and relevant across care settings, HCWs, and the illness trajectory [21]. Through this formative work, we drafted 66 preliminary QoC items categorized into four themes and 10 subthemes (Table 1). Each care process described parental priorities regarding care practices, interactions, services and procedures throughout the illness trajectory and across HCWs. In the present study, to address limitations in existing PaREMs, we aim to establish content validity, assess feasibility (through survey response rates and completion time) and provide preliminary evidence of construct validity for a newly developed measure that standardizes QoC evaluation from the parental perspective across the care continuum and over time.

Methods

The study was conducted in Singapore, a multi-cultural and multi-ethnic country in Southeast Asia. Singapore’s healthcare system is a mixed financing model that blends individual responsibility through compulsory savings (‘Medisave’) and government subsidies with national insurance (‘Medishield’) for comprehensive coverage [22]. We first revised the initial 66 QoC items to present them from a first-person perspective and added standardized stems (e.g., Over the past 12 months, our child's healthcare workers…), response options (i.e., Always to Never) and real-world examples to clarify complex terminology (e.g., …advised us on how to reduce our child's medical expenses, such as access to subsidies or financing schemes). We then adopted a multi-method, multi-stakeholder approach, incorporating a modified Delphi expert panel review, the establishment of a steering committee, and pre-testing and pilot-testing with parents.

Delphi expert panel review

The Delphi technique is a structured group communication and consensus building process, which iteratively engages experts to evaluate complex real-world issues [23, 24]. This established and effective method gathers and synthesizes informed opinions on focused areas of interest [25]. The approach, widely used to develop guidelines, criteria, quality indicators, and policy frameworks, is grounded in expert opinions and lived experiences [26, 27]. We used a modified online Delphi approach to determine the content validity of the drafted items [28], which has been similarly used to determine the content validity of items in adult patient-reported experience measures [29], quality appraisal tools [30], and surveys measuring evidence-based practice [31]. This technique was particularly helpful for us to aggregate existing expertise and the item set developed from earlier formative work.

Delphi expert panel review: participants and procedures

We conducted a three-round modified online Delphi expert panel review from April to June 2022, moderated by three facilitators (EAF, CM, FAJL) [32]. While the classic Delphi methodology employs four rounds [33], we employed three rounds because others have found that this enables adequate reflection on group responses and is appropriate to reach consensus [34]. We defined an “expert” as an individual who has over one year of experience working directly with children suffering from serious illnesses and their families, either directly providing care services to them or conducting research related to them. We purposively sampled local experts from multiple disciplines across the major institutions caring for children with serious illnesses. This purposive sampling technique ensured that the content would be relevant to the target parent population and aligned with the local sociocultural and healthcare system. In line with our aim to develop a widely applicable PaREM, we also included international experts with experience in designing parent-reported experience measures in other settings. We invited each expert via email to seek their consent. The panel included HCWs from multiple disciplines and researchers working with children with serious illnesses or pediatric measure development. In addition, in the spirit of family-centered care, we included parent-caregivers as experts as they can provide the most authentic and first-hand experience as end-users. We provided each expert with a handout (Additional file 1) explaining the study’s purpose, procedures, and materials before the review.

During each two-week round, experts independently provided input, which the facilitators used to modify the measure during the one-week breaks between rounds. We hosted all survey links on Qualtrics (NUS Enterprise). In Round One, experts voted for each item on a three-point Likert scale in response to the question “Does the item appropriately capture the subtheme…”. The response options were: (i) ‘No, not appropriate’; (ii) ‘Yes, with changes to item or response options’ with accompanying free-text input; and (iii) ‘Yes, no changes to recommend’. After experts had voted on the items within each subtheme individually, we presented them with all corresponding items under that theme and asked whether any key processes were missing from a QoC perspective. If they responded ‘Yes’, they were prompted to suggest additional processes using a free-text box.

In Round Two, experts continued to vote on each item in response to the same question. Because a free-text response box accompanied every item, enabling experts to elaborate on their responses and offer open-ended input, the response options were reduced to two: (i) ‘No, not appropriate’; and (ii) ‘Yes, appropriate’.

In Round Three, we presented experts with a full list of all items, subthemes and themes and requested that they suggest further improvements to any aspect, such as word choice, redundancies, order of items, etc. To complete the Delphi process, experts had to respond to all three rounds, otherwise we marked their participation as incomplete.

As outlined in Fig. 1, feedback from each round informed subsequent modifications to the items, exemplifying the Delphi method’s iterative, consensus-building, and expert-driven nature. Items in each round were modified and added from previous rounds, allowing for the items’ iterative evolution. Experts also had access to prior rounds’ results, facilitating reflection and allowing possible adjustment of their own views. Results and expert feedback for each item per round were shared anonymously with all experts to prevent bias and protect participants from potential negative perceptions of their views.

Delphi expert panel review analysis

For every round, we compiled aggregated ratings, determined whether consensus was achieved, and modified the previous version of the measure accordingly. We predefined consensus as 70% agreement, as commonly suggested and used in Delphi studies [35], to represent a substantial majority without being impractical. Agreement was calculated as the proportion of experts who responded either ‘Yes, with changes’ or ‘Yes, no changes to recommend’ in the first round, and who responded ‘Yes, appropriate’ in the second round. The aim was to achieve expert agreement that each item appropriately represented its subtheme’s targeted aspect of quality of care (the ‘construct’). Drafted items that had attained consensus were termed “candidate” items and retained for the next phase of pre-testing.
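The consensus rule above can be illustrated with a minimal sketch. The vote data are hypothetical, the function names are ours, and we assume that an agreement share exactly equal to 70% counts as consensus, which the text does not specify.

```python
from collections import Counter

CONSENSUS_THRESHOLD = 0.70  # predefined consensus level stated in the text

# Response options counted as agreement in each round, as described above
AGREE = {
    1: {"Yes, with changes", "Yes, no changes to recommend"},
    2: {"Yes, appropriate"},
}


def agreement_share(votes, round_number):
    """Return the proportion of votes that count as agreement in a round."""
    counts = Counter(votes)
    agree = sum(counts[option] for option in AGREE[round_number])
    return agree / len(votes)


def reached_consensus(votes, round_number, threshold=CONSENSUS_THRESHOLD):
    """True when the agreement share meets the threshold (>= is an assumption)."""
    return agreement_share(votes, round_number) >= threshold


# Hypothetical Round One votes from 24 experts on a single item
example_votes = (
    ["Yes, no changes to recommend"] * 10
    + ["Yes, with changes"] * 8
    + ["No, not appropriate"] * 6
)

print(agreement_share(example_votes, 1))    # 18 of 24 experts agree
print(reached_consensus(example_votes, 1))
```

In this hypothetical case 18 of 24 experts agree (75%), so the item would be carried forward as a candidate item.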

Establishment of steering committee

After establishing content validity of the candidate items through the modified Delphi process, we formed a multidisciplinary steering committee to guide the development of the full measure. This committee, consisting of experts in measure development (MG), health services research (TO, RM) and palliative care research (EAF, CM), met regularly to refine the measure. Additionally, an experienced former nurse provided input on early drafts, considering local sociocultural and linguistic nuances.

Pretesting

From July to September 2022, we pretested the measure using cognitive debriefing interviews with parents of seriously ill children [36] to identify potentially imprecise wording, poor ordering of items, and mismatches between intended and interpreted meanings of items [37]. We incrementally evaluated and improved the relevance of the candidate items and instructions. To test whether the QoC processes remained relevant to parents of children at various developmental stages, we staggered the interviews across four batches. Each batch corresponded to one age group of children: early childhood (0 – 5 years), middle childhood (6 – 12 years), adolescence (13 – 17 years), and young adulthood (18 – <21 years). After each batch of interviews, interim steering committee meetings were held for iterative review and modification of items and instructions.

For pre-testing, we targeted a sample size of 12 participants, aiming for three parents per child age group. This sample size was selected to provide at least a 70% chance of detecting any omission that 10% of the population would deem significant. By the complement rule in probability theory, the probability that none of 12 participants raises such an omission is (1 – 0.1)^12 ≈ 0.28 [38]. Thus, with 12 participants, we had a 72% likelihood of identifying important omissions noted by 10% of parents.
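The sample size reasoning can be reproduced with a short sketch. It assumes that participants raise an omission independently of one another, each with probability equal to the population prevalence. The function names are illustrative and not part of the study.

```python
import math


def detection_probability(prevalence, n):
    """Probability that at least one of n participants raises an issue
    that a fraction `prevalence` of the population would raise."""
    return 1 - (1 - prevalence) ** n


def min_sample_size(prevalence, target):
    """Smallest n whose detection probability reaches `target`."""
    return math.ceil(math.log(1 - target) / math.log(1 - prevalence))


p_miss = (1 - 0.10) ** 12                    # nobody among 12 raises the issue
p_detect = detection_probability(0.10, 12)

print(f"{p_miss:.2f}")                       # 0.28
print(f"{p_detect:.2f}")                     # 0.72
print(min_sample_size(0.10, 0.70))           # 12
```

Under these assumptions, 12 is the smallest sample that reaches the 70% target, and it yields a 72% detection probability.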

Pretesting participants and procedures

We recruited adult (>21 years old) parents of seriously ill children (<21 years old) in Singapore through referrals from study collaborators. Parents of young adults (18 to < 21 years) were included as the majority of individuals with developmental disabilities continue to be cared for in their family homes [39]. We conducted face-to-face interviews in parents’ homes or through videoconference based on their preference; all interviews were audio-recorded and transcribed. All interviews were conducted by the first author, a female health services researcher with experience in qualitative methods who had no prior or dependent relationship with any parent. We used a think-aloud approach during interviews, in which parents verbalized their thoughts while completing the measure [40]. This allowed us to evaluate the meaning of parents’ answers, the degree of difficulty encountered in completing the measure, and the nature of completion problems. We also employed a concurrent ‘verbal-probe’ method [41], where we asked parents specific questions after each item based on Tourangeau’s four-stage cognitive model of question response [42] to determine how information is understood, retrieved, judged, and reported. An illustration of the verbal probes used in the cognitive interviews is shown in Table 2. After parents completed the measure, we individually debriefed them about their general impressions of its length and overall ease, asked them to highlight any difficulty in understanding the items, and asked them to explain whether the care processes were relevant to them.

Pretesting analysis

We conducted cognitive interviews and steering committee discussions iteratively across the four batches. After each cognitive interview, we summarized responses to items and verbal probes from the think-aloud approach. We identified items where parents had difficulty understanding terms or phrasing and highlighted those that they had interpreted differently than intended. Verbal probes helped us to compare parents’ understanding of items to the intended meaning. We also noted items where a high proportion (>25%) of parents selected the ‘Not Applicable’ response option, indicating a lack of clarity or relevance to parental experiences.
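The ‘Not Applicable’ screening rule can be sketched as follows. The item names and responses are hypothetical, and the share is computed over all responses to an item, which is our assumption about the denominator.

```python
NA = "Not Applicable"
NA_FLAG_THRESHOLD = 0.25  # items strictly above this share are flagged


def na_share(responses):
    """Proportion of responses to one item that are 'Not Applicable'."""
    return sum(1 for r in responses if r == NA) / len(responses)


def flag_items(responses_by_item, threshold=NA_FLAG_THRESHOLD):
    """Return the items whose 'Not Applicable' share exceeds the threshold."""
    return [
        item
        for item, responses in responses_by_item.items()
        if na_share(responses) > threshold
    ]


# Hypothetical responses from 12 parents to two items
pretest = {
    "item_a": ["Always"] * 9 + [NA] * 3,     # 25%: not above the threshold
    "item_b": ["Sometimes"] * 8 + [NA] * 4,  # 33%: flagged
}

print(flag_items(pretest))
```

With 12 parents, the strict ">25%" rule flags an item only when at least four parents select ‘Not Applicable’.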

Pilot-testing

After successfully completing pretesting, we conducted pilot-testing [37] from October to December 2022 to identify potential issues in administering the measure during full-scale validation and to generate preliminary data on construct validity and measurement properties in the target population.

Pilot-testing participants and procedures

We recruited 30 parents (>21 years old) of seriously ill children (<18 years old) in Singapore, following the recommended sample size for pilot studies in measure development [43]. We recruited a diverse sample of parents through partner organizations, parent-advocates, and co-investigators. Parents were invited to self-administer an online survey hosted on Qualtrics (NUS Enterprise), which included sociodemographic questions and three measures: our new PaREM and two measures previously identified in the scoping review, the MPOC-20 [44] and the QCPCI [45]. MPOC-20 measures parents’ perceptions of the family-centeredness of community services, and QCPCI evaluates hospital-based palliative care for children with life-threatening conditions. Both are QoC measures targeting different care settings but applicable to both short- and long-term care. MPOC-20 and QCPCI were therefore included because they have some constructs that overlap with our PaREM and were deemed useful for evaluating the measurement properties of the new measure in full-scale validation. Higher scores denote better QoC in all measures. We randomized the order of administration of the three measures to mitigate order effects.

Pilot-testing analysis

We followed standard scoring, reporting and interpretation protocols for MPOC-20 and QCPCI scores according to CanChild’s and Widger et al.’s guidelines, respectively. PRECIOUS utilized a 5-point Likert scale ranging from Never = 0 to Always = 4, with a “Not Applicable” response option for context-dependent processes. After scoring the measures, we conducted a preliminary assessment of the construct validity of the PRECIOUS subscales by descriptively comparing subscale scores across the three PaREMs. For each PRECIOUS item, we calculated the number of valid responses, percentage of parents choosing ‘Never’ (floor), 25th percentile, mean (SD), 50th percentile, 75th percentile, percentage of parents choosing ‘Always’ (ceiling), and minimum and maximum scores. We also tracked time to complete the entire survey and response rates: 1) proportion eligible, 2) proportion consenting, 3) dropout rate, and 4) data completeness (item-response). To assess convergent validity, we calculated Spearman’s correlation coefficient (ρ) between PRECIOUS, QCPCI and MPOC-20 subscales, and an overall Quality of Care rating from QCPCI. MPOC-20 did not include a global QoC rating.
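The item-level summary described above can be sketched with the Python standard library. This is an illustration only: the scores are hypothetical, ‘Not Applicable’ is represented as None and excluded from valid responses, floor and ceiling percentages are taken over valid responses, and the inclusive quantile method is one of several percentile conventions. None of these choices is confirmed by the text.

```python
import statistics


def describe_item(scores):
    """Summarize one item scored 0 (Never) to 4 (Always).

    None marks a 'Not Applicable' response and is excluded.
    """
    valid = [s for s in scores if s is not None]
    # Quartiles via linear interpolation on the observed range (assumed method)
    p25, p50, p75 = statistics.quantiles(valid, n=4, method="inclusive")
    return {
        "n_valid": len(valid),
        "floor_pct": 100 * valid.count(0) / len(valid),    # share choosing 'Never'
        "p25": p25,
        "mean": statistics.mean(valid),
        "sd": statistics.stdev(valid),
        "p50": p50,
        "p75": p75,
        "ceiling_pct": 100 * valid.count(4) / len(valid),  # share choosing 'Always'
        "min": min(valid),
        "max": max(valid),
    }


# Hypothetical responses from ten parents to a single item
example_scores = [0, 1, 2, 2, 3, 4, 4, None, 4, 2]
summary = describe_item(example_scores)

for name, value in summary.items():
    print(f"{name}: {value:.2f}")
```

Applying the same function to every item yields a table with one row per item and one column per statistic.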

Results

Delphi expert panel review results

Of the 33 experts invited, 26 consented to participate and 24 completed all Delphi rounds (2 did not complete all rounds). Their experience covered various areas: hospice care (n = 1), pediatric palliative care (n = 2), pediatric complex care (n = 1), intensive or critical care (n = 2), measure development (n = 2), allied health (n = 4), pediatric nursing (n = 4), home care (n = 2), health services research (n = 2), and parent-caregiving (n = 4). Experts had diverse experience levels, with 8 having over 20 years of experience, 6 having 10-19 years, 4 having 5-9 years, and 6 having 1-4 years of experience. Twenty experts (83%) were from Singapore and four (17%) were international experts from Canada (n = 2), the United Kingdom (n = 1), and the United States of America (n = 1).

Detailed results specifying all aggregated ratings, consensus and modifications across rounds one and two are presented in Additional file 2. In summary, after Round One, we eliminated one item due to lack of consensus: “I have the ability to choose my child's healthcare workers.” We attained consensus on 65 items and modified 49 items. For instance, we reworded the original item “I receive the same information from different healthcare workers" to "I receive consistent information from different healthcare workers,” based on feedback that HCWs should avoid providing conflicting advice to parents but do not need to provide identical information. Experts proposed 14 new processes for panel review in Round Two that were not covered by existing items, including “…communicate with my child in a way that is sensitive to his/her needs”.

After Round Two, we eliminated 7 items due to lack of consensus or high degree of overlap with other items, including “… make sure my child's medical equipment are properly maintained beyond healthcare facilities”. We attained consensus on 72 items, with 41 being modified.

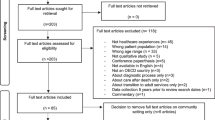

After Round Three, we consolidated and incorporated expert recommendations, and the facilitators conducted a review to combine overlapping items. For example, “… ensure I am fully informed” and “…give me information on my child's condition in a timely manner” were merged into one item. We thus removed 4 items and modified 20, while 48 remained unchanged. For example, we clarified the wording of the item “… give me all available management options for my child” to “…inform me of all available medical options for my child.” No new items were proposed during Round Three. Overall, consensus was achieved on 68 candidate items under four themes and 10 subthemes at the end of the Delphi review. Figure 2 presents a flowchart summarizing the aims, procedures and results of various phases of the measure development process.

Steering committee actions following Delphi expert panel review

After reviewing the 68 candidate items, the steering committee suggested removing two items that were conceptually indistinguishable, standardized item response options, and refined the instructions. These resulted in the full measure with 66 items for pre-testing. We named the measure the PaRental Experience with care for Children with serIOUS illnesses (PRECIOUS).

Pretesting results

We conducted cognitive interviews with 12 parents of seriously ill children (characteristics in Table 3). Additional file 3 contains details of pre-testing feedback and modifications across cognitive interviews. Parents of children in Early childhood, Middle childhood and Adolescence interpreted the items similarly and reported that existing processes were comprehensive and relevant. However, parents of Young Adults felt that their experience with, and hence the relevance of, PRECIOUS items changed substantially after their child left the purview of the pediatric health system.

Steering committee actions following pre-testing

Given that parents of Young Adults transitioning from the pediatric to adult health system reported that PRECIOUS items were less relevant to them, the steering committee decided to limit the measure to children under 18 years old in subsequent phases. Additionally, we took extra care to ensure clarity of the statements without modifying the intended construct. For example, the item “…discussed with us how the scope of care could be tailored to provide comfort for my child” was clarified to “…discussed with us how care could be adjusted to improve my child’s comfort”.

Broadly, the modifications made to the items throughout the iterative process of pre-testing reduced the number of items, simplified the terms and sentences used, and reduced the number of examples. For example, parents found the item “…gave us opportunities to advocate or speak up for my child” to be cumbersome and this was streamlined to “…listened to us when we spoke up for my child”. Pre-testing concluded with most items being modified and 9 being removed; 57 items were retained in the measure (abbreviated items in Table 4, full measure in Additional file 4).

Pilot-testing results

Thirty parents completed the pilot-test (characteristics in Table 3). Summarized pilot-testing results are reported in Table 5. Parents took a median time of 16.6 minutes (Interquartile range: 11.0 – 26.7) to complete consent, sociodemographic data collection, and the three measures (PRECIOUS, QCPCI, and MPOC-20). Dropout rate was 25%, which is commonly seen in web surveys [46,47,48], suggesting that completing all three PaREMs in full-scale validation is feasible. Four PRECIOUS items had high non-response rates (‘Not Applicable’), ranging from 27% to 57%.

All three PaREMs revealed current gaps in care. On QCPCI, overall QoC was rated as 2.3/4.0 on average, just above the ‘Good’ rating of 2.0. Parents reported other subscales between 2.42 – 2.72 out of 4.0. This range corresponded to frequencies between ‘Sometimes’ (2.0) and ‘Frequently’ (3.0). Sibling support was found to be ‘Rarely’ experienced with a mean score of 1.05 among the children with siblings (n = 14, 47%). The five MPOC-20 subscales showed that parental needs were ‘Sometimes’ (4.0) met with mean scores ranging from 3.89 – 4.77 out of 7.0. On PRECIOUS, the four subscales ranged from 2.25 – 2.80 out of 4.0, marginally above a frequency of ‘Sometimes’ (2.0). Results for each item of PRECIOUS (full results in Additional file 5) suggested similar gaps in care processes. Thirty-three percent of parents reported ‘Seldom’ having access to sufficient financial support for non-medical expenses, such as therapy. Emotional support for the child was also ‘Seldom’ experienced with a mean score of 1.08/4.0.

Table 6 presents Spearman’s ρ between the four PRECIOUS subscales, MPOC-20 subscales, and QCPCI subscales. The PRECIOUS subscales were significantly correlated with the Global Rating of QoC, MPOC-20 and QCPCI subscales, except for QCPCI’s Sibling Support and MPOC-20’s Providing General Information.
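For readers unfamiliar with the statistic in Table 6, Spearman’s ρ is the Pearson correlation computed on ranks, with tied values sharing the mean of their ranks. The sketch below uses hypothetical subscale scores and does not reproduce any study data. It also omits the significance test.

```python
def average_ranks(values):
    """Rank values from 1 to n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend the block while the next value ties with the current one
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        mean_rank = (start + end) / 2 + 1
        for k in range(start, end + 1):
            ranks[order[k]] = mean_rank
        start = end + 1
    return ranks


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    mean_x, mean_y = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / (var_x * var_y) ** 0.5


def spearman_rho(x, y):
    """Spearman's rho: Pearson correlation of the average ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical subscale means for five parents on two measures
scale_a = [2.1, 2.8, 3.0, 1.5, 3.6]  # e.g. a 0-4 subscale
scale_b = [3.9, 4.4, 5.1, 3.2, 6.0]  # e.g. a 1-7 subscale

print(spearman_rho(scale_a, scale_b))
```

Because the statistic depends only on rank order, it can compare subscales scored on different ranges, such as 0 to 4 and 1 to 7.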

Steering committee actions following pilot-testing

Among the four items with high non-response rates, the committee decided to retain three for future testing with a larger sample size due to the small sample of the pilot-test. One item was removed, “…asked us if we wanted to contribute to the community of seriously ill children, such as letting us support other families or participating in research” (non-response rate of 38%). We created an infographic (Additional file eFigure 1) with selected results and distributed it to the study team and parents who had agreed to be recontacted. These recipients were invited to provide any additional feedback. Finally, based on the steering committee’s discussions, the instructions of PRECIOUS were revised for simplicity. For example, “Sharing your experience will help in improving the quality of care your family and future families will receive” was shortened to “Sharing your views will help improve the quality of care in the future”.
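The item counts reported across the development phases can be checked with a simple running tally. All figures come from the text above. The only assumption is that all 14 processes proposed in Round One entered Round Two as items, which is consistent with the reported totals.

```python
# Each entry is (phase description, change in number of items)
stages = [
    ("Formative work: draft items", +66),
    ("Delphi Round One: item eliminated", -1),
    ("Delphi Round One: new processes proposed", +14),
    ("Delphi Round Two: items eliminated", -7),
    ("Delphi Round Three: items removed", -4),
    ("Steering committee: items removed", -2),
    ("Pretesting: items removed", -9),
    ("Pilot-testing: item removed", -1),
]

running_totals = []
total = 0
for label, change in stages:
    total += change
    running_totals.append(total)
    print(f"{label:<45} {change:+4d}  -> {total}")
```

The tally passes through 65, 79, 72, 68, 66 and 57 items and ends at the 56 items of the final measure.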

Discussion

To enhance QoC for seriously ill children, service providers should regularly evaluate care processes that parents consider important. Existing PaREMs for seriously ill children typically focus on specific care settings, resulting in a fragmented assessment of QoC [19]. To address this gap, we have developed the PRECIOUS measure to encourage closer collaboration between and within care teams and settings. PRECIOUS is a comprehensive PaREM that provides HCWs with a holistic view of parental priorities, comprising 56 specific, well-defined QoC processes that are categorized into four themes (Efficient healthcare structures and standards, Supporting parent caregivers, Collaborative & holistic care, and Professional qualities of healthcare workers) and 10 subthemes. It is applicable to parents of seriously ill children (<18 years old) across multiple care settings and throughout their illness trajectory. Designed as a process measure to capture parental experience, PRECIOUS complements outcome measures and quality indicators related to effectiveness and safety, thereby highlighting potential areas for intervention.

Developing PRECIOUS also revealed that parents of seriously ill children often share common challenges and experiences, irrespective of the child patient’s specific illness or age. This aligns with broader findings indicating shared parental experiences across various clinical scenarios. For instance, research on children with medical complexity [49] and tracheotomized children [50, 51] showed that despite varied diagnoses, the challenges faced by parents are consistent. These findings highlight the value of a versatile measure capable of evaluating care quality across the spectrum of pediatric serious illnesses. PRECIOUS subscales correlated with other QoC measures developed for the illness trajectory, namely MPOC-20 (for community care) and QCPCI (for hospital care), suggesting that it may be applicable across both illness categories and settings. Furthermore, these correlations highlight the interconnectedness of care experiences, underscoring the importance of introducing comprehensive measures like PRECIOUS in expanding health and social care systems [52,53,54].

In considering the limitations of our measure, it is crucial to acknowledge the context-specific nature of our findings, which primarily pertain to the healthcare system in a high-income and socio-culturally diverse Southeast Asian country. While PRECIOUS was developed with a global perspective in mind and involved international experts in the Delphi expert panel, whether it can be applied in different healthcare settings is unknown at present [55, 56]. Diversity in pediatric healthcare delivery models and cultural expectations regarding child-patient care across settings calls for careful consideration and potential adjustment of items to address context-dependent practices (e.g., availability of universal coverage of insurance, access to general and/or tertiary healthcare) and sociocultural norms (e.g., cultural and religious beliefs in dealing with disability) [57]. Importantly, this paper presents key measure development steps, but PRECIOUS should be subjected to further validation, continuous assessment, and refinement before and after it is implemented in varied contexts.

Future research should adapt and validate the measure across different healthcare environments, thereby enhancing its universal applicability and effectiveness in improving care for seriously ill children globally. Exploring differences in parental experiences based on the child's phase of illness and whether the child is communicative could also be part of future efforts. Lastly, developing a shorter version is a key future goal because the comprehensive nature of PRECIOUS may make it cognitively burdensome. Having established the content validity and feasibility of the 56-item PRECIOUS measure, our next step is to establish its measurement properties through a larger study.

Conclusions

The 56-item PaREM PRECIOUS has been developed to (i) capture key care processes important to parents of seriously ill children; (ii) be applicable across contexts (such as geographic location or care setting) and different HCWs, and (iii) be relevant throughout the illness trajectory for children suffering from a range of serious illnesses. When fully validated and integrated into routine care, PRECIOUS will provide a standardized evaluation of QoC processes across diverse healthcare settings over time.

Availability of data and materials

The datasets used and/or analyzed during the current study are available from the corresponding author on reasonable request.

Abbreviations

- QoC: Quality of Care
- PRECIOUS: PaRental Experience with care for Children with serIOUS illnesses
- HCWs: Healthcare workers
- PaREMs: Parent-Reported Experience Measures
- MPOC: Measure of Processes of Care
- QCPCI: Quality of Children’s Palliative Care Instrument

References

Wood F, Simpson S, Barnes E, Hain R. Disease trajectories and ACT/RCPCH categories in paediatric palliative care. Palliat Med. 2010;24(8):796–806.

Chambers L. A guide to children’s palliative care. 4th ed. Bristol: Together for Short Lives; 2018.

King SM, Rosenbaum PL, King GA. Parents’ perceptions of caregiving: development and validation of a measure of processes. Dev Med Child Neurol. 1996;38(9):757–72.

Berman L, Raval MV, Ottosen M, Mackow AK, Cho M, Goldin AB. Parent perspectives on readiness for discharge home after neonatal intensive care unit admission. J Pediatr. 2019;205:98–104.e4.

Newland LA. Family well-being, parenting, and child well-being: pathways to healthy adjustment. Clin Psychol. 2015;19(1):3–14.

Raina P, O’Donnell M, Rosenbaum P, Brehaut J, Walter SD, Russell D, et al. The health and well-being of caregivers of children with cerebral palsy. Pediatrics. 2005;115(6):e626–36.

Grange A, Bekker H, Noyes J, Langley P. Adequacy of health-related quality of life measures in children under 5 years old: systematic review. J Adv Nurs. 2007;59(3):197–220.

Institute for Patient- and Family-Centered Care. What is patient- and family-centered care? Bethesda: Institute for Patient- and Family-Centered Care; 2010.

Curtis K, Foster K, Mitchell R, Van C. Models of care delivery for families of critically ill children: an integrative review of international literature. J Pediatr Nurs. 2016;31(3):330–41.

Donabedian A. Evaluating the quality of medical care. 1966. Milbank Q. 2005;83(4):691–729.

Verma R. Overview: what are PROMs and PREMs. St Leonards, Australia: Agency for Clinical Innovation (ACI); 2016.

Weldring T, Smith SMS. Article Commentary: Patient-Reported Outcomes (PROs) and Patient-Reported Outcome Measures (PROMs). Health Serv Insights. 2013;6:HSI.S11093.

Tremblay D, Roberge D, Berbiche D. Determinants of patient-reported experience of cancer services responsiveness. BMC Health Serv Res. 2015;15(1):1–10.

Schembri S. Experiencing health care service quality: through patients’ eyes. Aust Health Rev. 2015;39(1):109–16.

Ahmed F, Burt J, Roland M. Measuring patient experience: concepts and methods. Patient. 2014;7(3):235–41.

World Health Organization. Quality of care: a process for making strategic choices in health systems. Geneva: World Health Organization; 2006.

Brady BM, Zhao B, Niu J, et al. Patient-Reported Experiences of Dialysis Care Within a National Pay-for-Performance System. JAMA Intern Med. 2018;178(10):1358–67. https://doi.org/10.1001/jamainternmed.2018.3756.

Bombard Y, Baker GR, Orlando E, Fancott C, Bhatia P, Casalino S, et al. Engaging patients to improve quality of care: a systematic review. Implement Sci. 2018;13(1):98.

Ang FJL, Finkelstein EA, Gandhi M. Parent-reported experience measures of care for children with serious illnesses: a scoping review. Pediatr Crit Care Med. 2022;23:1e416.

Ziniel SI, Rosenberg HN, Bach AM, Singer SJ, Antonelli RC. Validation of a parent-reported experience measure of integrated care. Pediatrics. 2016;138(6):e20160676.

Ang FJL, Chow CTC, Chong P-H, Tan TSZ, Amin Z, Buang SNH, et al. A qualitative exploration of parental perspectives on quality of care for children with serious illnesses. Front Pediatr. 2023;11:1167757.

Haseltine WA. Affordable excellence: the Singapore health system. Washington, D.C.: Brookings Institution Press; 2013.

Hsu C-C, Sandford BA. The Delphi technique: making sense of consensus. Pract Assessment Res Eval. 2007;12(10). https://doi.org/10.7275/pdz9-th90.

Jorm AF. Using the Delphi expert consensus method in mental health research. Aust N Z J Psychiatry. 2015;49(10):887–97.

Jünger S, Payne SA, Brine J, Radbruch L, Brearley SG. Guidance on Conducting and REporting DElphi Studies (CREDES) in palliative care: recommendations based on a methodological systematic review. Palliat Med. 2017;31(8):684–706.

Jones J, Hunter D. Consensus methods for medical and health services research. BMJ. 1995;311(7001):376.

Biondo PD, Nekolaichuk CL, Stiles C, Fainsinger R, Hagen NA. Applying the Delphi process to palliative care tool development: lessons learned. Support Care Cancer. 2008;16:935–42.

Falzarano M, Pinto ZG. Seeking consensus through the use of the Delphi technique in health sciences research. J Allied Health. 2013;42(2):99–105.

Bull C, Crilly J, Latimer S, Gillespie BM. Establishing the content validity of a new emergency department patient-reported experience measure (ED PREM): a Delphi study. BMC Emerg Med. 2022;22(1):65.

Hong QN, Pluye P, Fàbregues S, Bartlett G, Boardman F, Cargo M, et al. Improving the content validity of the mixed methods appraisal tool: a modified e-Delphi study. J Clin Epidemiol. 2019;111:49-59.e1.

Fernández-Domínguez JC, Sesé-Abad A, Morales-Asencio JM, Sastre-Fullana P, Pol-Castañeda S, de Pedro-Gómez JE. Content validity of a health science evidence-based practice questionnaire (HS-EBP) with a web-based modified Delphi approach. Int J Qual Health Care. 2017;28(6):764–73.

Akins RB, Tolson H, Cole BR. Stability of response characteristics of a Delphi panel: application of bootstrap data expansion. BMC Med Res Methodol. 2005;5(1):1–12.

Erffmeyer RC, Erffmeyer ES, Lane IM. The Delphi technique: an empirical evaluation of the optimal number of rounds. Group & Organization Studies. 1986;11(1–2):120–8.

Iqbal S, Pipon-Young L. The Delphi method. Psychologist. 2009;22:598–601.

Diamond IR, Grant RC, Feldman BM, Pencharz PB, Ling SC, Moore AM, et al. Defining consensus: a systematic review recommends methodologic criteria for reporting of Delphi studies. J Clin Epidemiol. 2014;67(4):401–9.

Denzin NK, Lincoln YS, editors. The SAGE handbook of qualitative research. 4th ed. Thousand Oaks: SAGE; 2011.

Ruel E, Wagner WE, III, Gillespie BJ. The practice of survey research: theory and applications. Thousand Oaks, California; 2016. Available from: https://methods.sagepub.com/book/the-practice-of-survey-research.

Rényi A. Probability theory. Courier Corporation; 2007.

Parish S, Pomeranz A, Hemp R, Rizzolo M, Braddock D. Family support for families of persons with developmental disabilities in the US: Status and trends. Policy Research Brief 1–12. Minneapolis: Research and Training Center on Community Living, Institute …; 2001.

Charters E. The use of think-aloud methods in qualitative research an introduction to think-aloud methods. Brock Educ J. 2003;12(2). https://doi.org/10.26522/brocked.v12i2.38.

Willis GB, Artino AR Jr. What do our respondents think we’re asking? Using cognitive interviewing to improve medical education surveys. J Grad Med Educ. 2013;5(3):353–6.

Tourangeau R. Cognitive sciences and survey methods. Cognitive aspects of survey methodology: building a bridge between disciplines. Washington: National Academy Press; 1984.

Johanson GA, Brooks GP. Initial scale development: sample size for pilot studies. Educ Psychol Measur. 2010;70(3):394–400.

King S, King G, Rosenbaum P. Evaluating health service delivery to children with chronic conditions and their families: development of a refined Measure of Processes of Care (MPOC−20). Child Health Care. 2004;33(1):35–57.

Widger K, Brennenstuhl S, Duc J, Tourangeau A, Rapoport A. Factor structure of the Quality of Children’s Palliative Care Instrument (QCPCI) when completed by parents of children with cancer. BMC Palliat Care. 2019;18(1):1–9.

Manfreda KL, Vehovar V, editors. Survey design features influencing response rates in web surveys. The international conference on improving surveys (ICIS). Copenhagen: ICIS 2002 International Conference on Improving Surveys; 2002.

Dillman DA, Eltinge JL, Groves R, Little RJ. Survey nonresponse. New York: Wiley; 2002.

Bosnjak M, Tuten TL. Prepaid and promised incentives in web surveys: an experiment. Soc Sci Comput Rev. 2003;21(2):208–17.

McLorie EV, Hackett J, Fraser LK. Understanding parents’ experiences of care for children with medical complexity in England: a qualitative study. BMJ Paediatrics Open. 2023;7(1):e002057.

Chandran A, Sikka K, Thakar A, Lodha R, Irugu D, Kumar R, et al. The impact of pediatric tracheostomy on the quality of life of caregivers. Int J Pediatr Otorhinolaryngol. 2021;149:110854.

Johnson RF, Brown A, Brooks R. The family impact of having a child with a tracheostomy. Laryngoscope. 2021;131(4):911–5.

Cohen E, Lacombe-Duncan A, Spalding K, MacInnis J, Nicholas D, Narayanan UG, et al. Integrated complex care coordination for children with medical complexity: a mixed-methods evaluation of tertiary care-community collaboration. BMC Health Serv Res. 2012;12(1):366.

Newham JJ, Forman J, Heys M, Cousens S, Lemer C, Elsherbiny M, et al. Children and Young People’s Health Partnership (CYPHP) Evelina London model of care: protocol for an opportunistic cluster randomised controlled trial (cRCT) to assess child health outcomes, healthcare quality and health service use. BMJ Open. 2019;9(8):e027301.

Turchi RM, Antonelli RC, Norwood KWJ, Adams RC, Brei TJ, Burke RT, et al. Patient- and family-centered care coordination: a framework for integrating care for children and youth across multiple systems. Pediatrics. 2014;133(5):e1451–60.

Latif AS. The importance of understanding social and cultural norms in delivering quality health care-a personal experience commentary. Trop Med Infect Dis. 2020;5(1):22.

Napier AD, Ancarno C, Butler B, Calabrese J, Chater A, Chatterjee H, et al. Culture and health. Lancet. 2014;384(9954):1607–39.

Wiener L, McConnell DG, Latella L, Ludi E. Cultural and religious considerations in pediatric palliative care. Palliat Support Care. 2013;11(1):47–67.

Acknowledgements

We are very grateful for the trust of all families who participated in this study. We thank the Star PALS team at HCA Hospice Limited, Rare Disorders Society (Singapore) and Children’s Cancer Foundation for their support in the outreach and data collection for this study. Finally, we thank Ms Bairavi Joann for her support in reviewing early drafts of the PRECIOUS measure.

Funding

FJLA was funded by a President’s Graduate Fellowship while conducting this study during her doctoral training at Duke-NUS Medical School, Singapore. This work was supported by Lien Centre for Palliative Care, Duke-NUS, the “Estate of Tan Sri Khoo Teck Puat” and the SingHealth Duke-NUS Global Health Institute (SDGHI_PHDRG_FY2022_0001-01). Funders were not involved in the design of the study; in data collection, analysis, or interpretation; or in writing the manuscript.

Author information

Contributions

FJLA was the principal investigator and was primarily responsible for the design, conduct (including data collection, analysis, and interpretation), and write-up of this manuscript. EAF oversaw all aspects of the study design, conduct, interpretation, and manuscript write-up. EAF, TO, CM, RM, and MG were steering committee members and were involved in the study conceptualization and design, had access to de-identified data, and were directly involved with data analysis and interpretation. PHC, CC-TC, ZA, TSZT and KT were involved in the study design, data collection, and interpretation of results. All authors revised drafts critically for important intellectual content and read and approved the final manuscript. Each author agreed to be accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved.

Ethics declarations

Ethics approval and consent to participate

The National University of Singapore Institutional Review Board (NUS-IRB) approved studies involving human participants separately. Delphi panel review: NUS-IRB-2022–135; Pre-testing and pilot-testing: NUS-IRB-2022–301. All participants provided informed consent to participate. All study procedures were performed in accordance with the ethical standards of the institutional review board and with the 1964 Declaration of Helsinki and its later amendments or comparable ethical standards.

Consent for publication

Not applicable.

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Ang, F.J.L., Gandhi, M., Ostbye, T. et al. Development of the Parental Experience with Care for Children with Serious Illnesses (PRECIOUS) quality of care measure. BMC Palliat Care 23, 66 (2024). https://doi.org/10.1186/s12904-024-01401-x

DOI: https://doi.org/10.1186/s12904-024-01401-x