Abstract

Background

The experience sampling method (ESM) is an intensive longitudinal research method.

Participants complete questionnaires at multiple times about their current or very recent state. The design of ESM studies is complex. People with psychosis have been shown to be less adherent to ESM study protocols than the general population. It is not known how to design studies that increase adherence to study protocols. The lack of a typology of design choices makes it hard for researchers to decide how to collect data in a way that supports methodological rigour, quality of reporting, and the ability to synthesise findings. The aims of this systematic review were to characterise the design choices made in ESM studies monitoring the daily lives of people with psychosis, and to synthesise evidence relating data completeness to different design choices.

Methods

A systematic review was conducted of published literature on studies using ESM with people with psychosis. Studies were included if they used digital technology for data collection and reported the completeness of the data set. The constant comparative method was used to identify design decisions, combining inductive identification of design decisions with simultaneous comparison of the design decisions observed across studies. Weighted regression was used to identify design decisions that predicted data completeness. The review was pre-registered (PROSPERO CRD42019125545).

Results

Thirty-eight studies were included. A typology of design choices used in ESM studies was developed, which comprised three superordinate categories of design choice: Study context, ESM approach and ESM implementation. Design decisions that predict data completeness include the type of ESM protocol used, the length of time participants are enrolled in the study, and whether there is contact with the research team during data collection.

Conclusions

This review identified a range of design decisions used in ESM studies in the context of psychosis, including design decisions that influence data completeness. The findings will help the design and reporting of future ESM studies. Although results are presented with a focus on psychosis, the findings can be applied across different mental health populations.


Background

The experience sampling method (ESM) is an intensive longitudinal research method [1]. ESM is conducted in real world settings as a participant goes about their daily life [2]. Participants complete self-report questions about transient experiences at multiple times, typically followed by questions relating to current environment or context [3]. Prior to the advent of digital technologies, ESM involved filling in a diary or booklet [4]. Most ESM designs are now computerised and allow researchers to identify the exact time a momentary assessment was completed [5]. This review focuses specifically on digital ESM because paper-based approaches are increasingly redundant, and data completeness, the focus of the review, is likely to be strongly influenced by the data collection approach.

ESM can provide an accurate assessment of phenomena as they occur [2]. It allows researchers to gain more ecologically valid insights into the impact of daily events on participants, which are difficult to measure under laboratory conditions [6]. ESM can be used to examine temporal precedence between variables [2]. By asking participants to report experiences over a period of time, researchers can investigate fluctuations between variables which may not be captured using other methods [7].

ESM has been used widely in mental health research [8], and a review of its use has identified a number of applications, including improving understanding of symptoms and social interactions, identifying causes of symptom variation and evaluating treatments [9]. ESM is a valid approach for capturing mental health states in participants with psychosis [10].

Data completeness is a particular challenge in ESM. Missing data are common in ESM research [11]. Data incompleteness can occur for a number of reasons. For example, participants may find ESM burdensome and time consuming [2], leading to reduced adherence to the study protocol and hence reduced data quantity [12] and quality [13]. Incomplete data sets can cause important aspects of experience to be overlooked by researchers and can also bias the statistical models used for analysis [14]. People with psychosis have been shown to be less adherent to ESM study protocols than the general population [13]. Studies that recruit people with psychosis have higher rates of participant withdrawal, resulting in fewer participants included in final analyses [15].

ESM design

Conducting an ESM study involves making several design decisions [16], for example when and how frequently participants answer questionnaires. A questionnaire prompt may be sent to participants at pre-defined intervals (time contingent protocol) or at random times (signal contingent protocol), or the questionnaire may be completed when a predefined event has occurred (event contingent protocol) [17]. Studies can also use hybrid designs, which combine sampling protocols [9]. Setting the frequency of questionnaire prompts involves considering participant burden as well as how rapidly the target phenomenon is expected to vary [4].
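The difference between the two scheduled protocols can be made concrete with a short scheduling sketch. It is illustrative only and not taken from any included study: the function names, the 09:00 to 21:00 measurement window and the prompt counts are hypothetical assumptions. Event contingent prompts are not generated by a schedule, because the participant initiates them when the predefined event occurs. A hybrid design could merge a participant's event entries with one of the scheduled lists.

```python
import random
from datetime import datetime, timedelta


def time_contingent(start: datetime, end: datetime, interval_min: int) -> list:
    """Time contingent protocol: prompts at fixed, pre-defined intervals."""
    prompts = []
    t = start
    while t <= end:
        prompts.append(t)
        t += timedelta(minutes=interval_min)
    return prompts


def signal_contingent(start: datetime, end: datetime, n_prompts: int,
                      rng: random.Random) -> list:
    """Signal contingent protocol: prompts at random times within the window."""
    span_s = (end - start).total_seconds()
    offsets = sorted(rng.uniform(0, span_s) for _ in range(n_prompts))
    return [start + timedelta(seconds=s) for s in offsets]


# Hypothetical measurement day from 09:00 to 21:00.
day_start = datetime(2021, 7, 1, 9, 0)
day_end = datetime(2021, 7, 1, 21, 0)

fixed = time_contingent(day_start, day_end, interval_min=120)
random_prompts = signal_contingent(day_start, day_end, 7, random.Random(1))
print([t.strftime("%H:%M") for t in fixed])
# ['09:00', '11:00', '13:00', '15:00', '17:00', '19:00', '21:00']
```

Both lists contain seven prompts. The time contingent times are fully predictable to the participant, whereas the signal contingent times change with each draw.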

There is evidence that design decisions influence completion rates. For example, longer questionnaires have been associated with higher levels of participant burden [18]. Protocol adherence has been shown to reduce over time, and also to be dependent on the time of day a questionnaire is received [13]. A systematic review investigating compliance with study protocols and retention in ESM studies in participants with severe mental illness found that frequent assessments and short intervals between questionnaires reduce data completeness, and increasing participant reimbursement increases data completeness [15].

There is a need for greater consistency in the design of ESM studies [9]. ESM is a collection of methods and is usually reported in relation to general characteristics rather than a defined set of design options [4]. When designing an ESM study, researchers have insufficient evidence on which to base design decisions [18]. Designs of ESM studies are often based on individual research questions [16], leading to a large heterogeneity of designs [15]. Additional methodological research is needed in order for studies to be replicable and standardised [9].

Developing consistency in design is impeded by the absence of a typology of design decisions. No typology for ESM design choices currently exists. A typology could help to define and classify ESM research methods [19], increasing both methodological rigour in developing and reporting individual studies and the ability to compare or combine findings.

Review aims

The aim of this systematic review is to characterise the design choices made in digital ESM studies monitoring the daily lives of people with psychosis. The objectives in relation to ESM studies involving people with psychosis are:

(1) to develop a typology of design choices used in digital ESM studies; and

(2) to synthesise evidence relating data completeness to different ESM design choices.

Methods

A systematic review of the literature was carried out following PRISMA guidance [20]. Studies published in academic journals that met inclusion criteria were assessed for methodological quality. The constant comparative method [21] was used to identify design decisions to produce a typology. Weighted regression was used to identify design decisions that predicted data completeness.

Eligibility criteria

Inclusion criteria:

• Participants: papers that reported on participants with a clinical or research diagnosis of psychosis (as a category or by specific diagnosis, e.g., schizophrenia), either as the study population or as a separately reported and disaggregable sub-group

• Methods: studies using ESM to monitor participants with psychosis

• Studies which used digital technology to administer ESM

• Studies which included experience sampling as part of a wider design, e.g. as part of an intervention

• English language full text articles, reviews and conference abstracts

• Papers published from January 2009 to July 2021

• Studies which either reported the completeness of data or gave sufficient data to allow calculation of data completeness where not specifically reported

Exclusion criteria:

• Studies recruiting non-clinically diagnosed adults, i.e. participants self-reporting psychosis without clinical or research validation

• Studies using non-digital approaches to data collection

• Lifelogging, quantified self and other self-tracking approaches used by individuals to record personal data, since these are not research methodologies used to collect data for scientific purposes

Data sources and search strategy

A systematic search was developed and conducted in collaboration with two information specialists with expertise in systematic review searches (authors EY and NT). The data sources and associated search strategy are described below.

Six sources were used.

First, the following electronic databases were searched with a date limit of January 2009 to July 2021 (date of last search): Medline, Embase, PsycInfo (all via Ovid), Cochrane Library, and Web of Science Core Collection. The search terms are described in detail in additional file 1.

Second, the tables of contents of the following journals were hand-searched: Journal of Medical Internet Research, Journal of Medical Internet Research (Mental health), Journal of Medical Internet Research (mHealth and uHealth), Journal of Methods in Psychiatric Research, Psychiatric Rehabilitation Journal, Psychiatric Services, Psychological Assessment, Schizophrenia Bulletin and Schizophrenia Research. Issues from 2009 to July 2021 were searched. These journals were chosen because they regularly published recovery-related papers.

Third, web-based searches were conducted using Google Scholar, ResearchGate and Academia.edu, with the search terms ‘experience sampling’ and ‘psychosis’, ‘ecological momentary assessment’ and ‘psychosis’, ‘experience sampling’ and ‘schizophrenia’, and ‘ecological momentary assessment’ and ‘schizophrenia’. Because of the large number of results found on Google Scholar, only the first five pages (100 results) per search string were searched.

Fourth, grey literature searches were conducted using OpenGrey. This was conducted using the same search terms used for the web-based searches.

Fifth, backward citation tracking was conducted by hand-searching the reference lists of all included papers, and forward citation tracking of papers citing included studies was conducted using Scopus and Google Scholar.

Finally, a panel of five experts with expertise in experience sampling methods was consulted for additional studies meeting the inclusion criteria.

Data extraction and appraisal

Eligible citations were collated and uploaded to EndNote, and duplicates were removed. The titles of all identified citations were screened for relevance against the inclusion criteria by ED and FN, who rated all of the studies for inclusion. Full text was obtained for potentially relevant papers, and eligibility was decided by the lead author. Data were extracted into an Excel spreadsheet developed as a Data Abstraction Table (DAT) for the review. The complete DAT can be found in Additional file 2.

Quality assessment

In the absence of a typology for reporting of ESM studies, recommended reporting criteria for ambulatory studies [22] were used. Studies were assigned points based on whether they had reported elements of the study design recommended by the guidelines. Examples of recommended reporting criteria include ‘explanation of the rationale for the sampling design’ and ‘full description of the hardware and software used to collect data’. Corresponding to the number of items in the reporting criteria, the maximum possible score was 12 points. Studies scoring 0 to 6 were arbitrarily considered to be low quality, and studies scoring 7 to 12 were considered high quality. This rating was carried out by ED.
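The scoring rule can be written in a few lines. This is a sketch that assumes one point per checklist item, which is consistent with the maximum score of 12 equalling the number of items. The function name and the True/False input format are hypothetical.

```python
def quality_rating(items_reported: list) -> tuple:
    """Score a study against a 12-item reporting checklist.

    Assumes one point per reported item. A score of 0-6 is rated low
    quality and 7-12 high quality, following the cut-off in the text.
    """
    if len(items_reported) != 12:
        raise ValueError("expected one True/False entry per checklist item (12)")
    score = sum(bool(item) for item in items_reported)
    return score, ("high" if score >= 7 else "low")


print(quality_rating([True] * 7 + [False] * 5))  # (7, 'high')
```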

Subgroup analysis was undertaken for studies included in Objective 2 (predictors of data completeness). Subgroup analysis was not undertaken for the Objective 1 typology because the aim was to develop an exhaustive typology [23].

Data analysis

To meet Objective 1 (design typology), design decisions were iteratively identified from the included papers. A preliminary typology of design decisions was developed by analysts who were familiar with the field of ESM (ED, MC, MS). This preliminary typology was used as headings in the initial version of the Data Abstraction Table (DAT). The constant comparative method [21] was used to refine the preliminary typology, by combining inductive category coding with simultaneous comparison of incidents observed [24]. Included papers were coded using existing DAT headings, and further or combined categories were iteratively identified [25]. The DAT was then structured using all identified design decisions and corresponding data extracted from each study [26]. The preliminary typology was extended, and its categories combined, through discussion amongst researchers ED, MC, FN and MS.

To meet Objective 2, the outcome of data completeness was defined as the percentage of questionnaires completed by participants in each study out of the total possible under that study's protocol. This was taken directly from the paper where possible. Where the percentage was not reported, completeness was calculated by dividing the total number of questionnaires completed during the study by the total number possible under the study protocol and expressing the result as a percentage. The percentage represents the total data completeness for each study. Studies differed in the number of questionnaires and the number of participants, and questionnaires were completed over time. The percentage therefore includes variation both between and within participants. Each of the categories from the typology was used as a predictor variable. Additional predictor variables included in the analysis were the mean age of study participants and the percentage of male participants.
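The completeness calculation can be sketched as follows. The sketch assumes a fixed protocol in which every participant could receive the same number of prompts per day for the same number of days. The helper names and the study figures are hypothetical. For studies with variable enrolment periods, the possible total would instead need to be summed across participants.

```python
def possible_prompts(n_participants: int, n_days: int, prompts_per_day: int) -> int:
    """Total questionnaires allowed by a fixed protocol (hypothetical helper)."""
    return n_participants * n_days * prompts_per_day


def completeness_pct(completed: int, possible: int) -> float:
    """Percentage of possible questionnaires that were completed."""
    if possible <= 0:
        raise ValueError("possible must be positive")
    return 100.0 * completed / possible


# Hypothetical study: 20 participants, 6 days, 10 prompts per day.
total = possible_prompts(20, 6, 10)   # 1200
print(completeness_pct(780, total))   # 65.0
```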

A weighted regression was carried out. This approach treats each study's completeness as a summary statistic with unknown variance, and hence unknown standard error. Each completeness percentage is weighted by the number of participants in the study. The outcome is therefore analysed as in a standard regression, except that each estimate of completeness is given a weight dependent on its sample size. The number of questionnaires (denominator variable) was also included as a predictor to see whether it predicted completeness.
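The weighting idea can be illustrated with weighted least squares for a single continuous predictor, where each study's weight is its number of participants. This is a minimal sketch: the function name and the study values are invented purely for illustration, and the code is not the authors' analysis script. The text does not state which software was used.

```python
def weighted_linear_fit(x, y, w):
    """Weighted least squares fit of y = b0 + b1 * x.

    x: predictor per study (e.g. days enrolled)
    y: completeness percentage per study
    w: weight per study (here, number of participants)
    """
    sw = sum(w)
    x_bar = sum(wi * xi for wi, xi in zip(w, x)) / sw  # weighted mean of x
    y_bar = sum(wi * yi for wi, yi in zip(w, y)) / sw  # weighted mean of y
    sxy = sum(wi * (xi - x_bar) * (yi - y_bar) for wi, xi, yi in zip(w, x, y))
    sxx = sum(wi * (xi - x_bar) ** 2 for wi, xi in zip(w, x))
    if sxx == 0:
        raise ValueError("predictor has no variation")
    b1 = sxy / sxx
    b0 = y_bar - b1 * x_bar
    return b0, b1


# Hypothetical studies: days enrolled, completeness (%), participants.
days = [6, 7, 14, 28]
completeness = [72.0, 70.0, 64.0, 55.0]
participants = [30, 120, 25, 10]
b0, b1 = weighted_linear_fit(days, completeness, participants)
```

Larger studies pull the fitted line towards their own completeness values. With equal weights, the estimates reduce to ordinary least squares. Only the relative weights matter, so multiplying every weight by a constant leaves `b0` and `b1` unchanged.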

Predictor variables were entered into the weighted regression model. Design features not used in any included study, such as 2.1.1 ESM protocol: signal contingent, were excluded. For continuous predictor variables, cut-points were used to produce broadly equal sized categories, e.g. participant gender (0%-32% male, 33%-65% male, 66%-100% male). For each predictor variable, the first category was used as the reference category. Each of the other categories was compared with the reference individually and, where relevant, in grouped combinations.
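Banding and reference coding can be sketched for a single categorical predictor. The bands are the ones quoted in the text. The handling of fractional percentages at the band boundaries is an assumption, the function names and study values are hypothetical, and comparisons of grouped combinations of categories are not shown. The sketch relies on the fact that, with one categorical predictor, the weighted least squares fit for each category equals that category's weighted mean. Each beta is therefore that mean minus the reference category's mean.

```python
def percent_male_band(pct_male: float) -> str:
    """Assign a study to one of the three bands quoted in the text.

    Assumption: fractional values below 33 go to the first band and
    values below 66 to the second (e.g. 32.5% falls in '0-32%').
    """
    if pct_male < 33:
        return "0-32%"
    if pct_male < 66:
        return "33-65%"
    return "66-100%"


def reference_coded_effects(groups, y, w, levels):
    """Weighted regression on one categorical predictor, first level as reference."""
    means = {}
    for level in levels:
        sw = sum(wi for g, wi in zip(groups, w) if g == level)
        if sw == 0:
            raise ValueError(f"no studies in category {level!r}")
        means[level] = sum(wi * yi for g, yi, wi in zip(groups, y, w) if g == level) / sw
    ref = levels[0]
    betas = {level: means[level] - means[ref] for level in levels[1:]}
    return means[ref], betas  # (intercept, betas relative to the reference)


levels = ["0-32%", "33-65%", "66-100%"]
bands = [percent_male_band(p) for p in [20, 40, 50, 70]]  # hypothetical studies
intercept, betas = reference_coded_effects(bands, [60, 70, 80, 75], [10, 10, 30, 20], levels)
print(intercept, betas)  # 60.0 {'33-65%': 17.5, '66-100%': 15.0}
```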

A p-value for each predictor was calculated using an ANOVA comparing model fit (R squared value) between the model containing that predictor and the intercept-only model (i.e. no variables). Significant predictors were then explored further by examining the beta values in their models alongside the corresponding p-values. These were tabulated to show the impact on completeness and to explore which differences between categories of the predictor were significantly associated with completeness.
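The comparison with the intercept-only model can be sketched as a nested-model F test on weighted residual sums of squares, for one categorical predictor. This mirrors what a nested-model ANOVA reports (for example R's `anova()` applied to two `lm` fits). However, it is an assumption about the procedure, because the text does not name the software. The function name and the toy data are hypothetical. The p-value would come from the upper tail of an F distribution with `(df1, df2)` degrees of freedom, for example via `scipy.stats.f.sf(f_stat, df1, df2)`. That step is omitted here to keep the sketch dependency-free.

```python
def nested_f_test(groups, y, w):
    """Compare a one-categorical-predictor weighted model with the intercept-only model.

    Returns the F statistic, its degrees of freedom and the weighted R squared.
    """
    n = len(y)
    sw = sum(w)
    grand_mean = sum(wi * yi for wi, yi in zip(w, y)) / sw
    rss0 = sum(wi * (yi - grand_mean) ** 2 for wi, yi in zip(w, y))  # intercept only

    levels = sorted(set(groups))
    group_mean = {}
    for level in levels:
        lw = sum(wi for g, wi in zip(groups, w) if g == level)
        group_mean[level] = sum(wi * yi for g, yi, wi in zip(groups, y, w) if g == level) / lw
    rss1 = sum(wi * (yi - group_mean[g]) ** 2 for g, yi, wi in zip(groups, y, w))

    df1 = len(levels) - 1   # extra parameters in the predictor model
    df2 = n - len(levels)   # residual degrees of freedom (studies minus parameters)
    if df1 < 1 or df2 < 1 or rss1 == 0:
        raise ValueError("need two or more categories, spare degrees of freedom and non-zero residuals")
    f_stat = ((rss0 - rss1) / df1) / (rss1 / df2)
    r_squared = 1 - rss1 / rss0
    return f_stat, df1, df2, r_squared


f_stat, df1, df2, r2 = nested_f_test(["a", "a", "b", "b"], [60, 62, 70, 72], [1, 1, 1, 1])
print(f_stat, df1, df2)  # 50.0 1 2
```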

Results

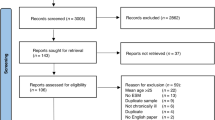

Thirty-eight publications were included in the review. The study selection process is summarised in Fig. 1 using the PRISMA flowchart [27].

Characteristics of included publications are presented in Table 1.

Quality assessment

All 38 studies were assessed for quality. Overall, 14 (37%) were evaluated as high quality and 24 (63%) as low quality.

Participants

The 38 included studies recruited a total of 2,722 participants with psychosis. Overall, 51% (n = 1,380) of participants were male. The mean age was 41 years. Other participant demographic variables were reported inconsistently across studies. Data were collected from 2,643 (97%) participants in the community and 79 (3%) who were inpatients at the time of data collection. Participants had diagnoses including schizophrenia, schizophrenia spectrum disorder, psychosis, non-affective psychotic disorder, bipolar disorder, schizophreniform disorder, schizo-affective disorder, delusional disorder, psychotic disorder not otherwise specified (NOS), depression with psychotic symptoms, first episode psychosis and major depression.

ESM design choices

Design choices are summarised in Table 2. Not all design decisions were reported across all studies.

Data completeness

The percentage of data completeness was obtained for 29 studies. The remaining nine studies expressed data completeness as either the percentage or the number of participants who completed more than a predefined threshold, so it was not possible to determine the exact data completeness percentage. Data completeness across included studies is summarised in Fig. 2.

Objective 1: Typology of design choices used in ESM studies

Analysis of included publications identified 24 design decisions. Three superordinate themes were identified from the designs: Study context, ESM approach and ESM implementation.

Superordinate theme 1: Study context

The Study Context theme describes decisions made when designing an ESM study that are not specific to ESM. These are shown in Table 3.

Superordinate theme 2: ESM Approach

ESM approach describes the design decisions relating specifically to experience sampling and are shown in Table 4.

Superordinate theme 3: ESM Implementation

The theme of ESM implementation is shown in Table 5.

Objective 2: Predictors of data completeness

A weighted regression of design decisions included in the typology was conducted, and the significance of each design choice as a predictor of data completeness is shown in Table 6.

The regression identified six candidate predictors of data completeness: ESM protocol, length of time per measurement, total time in the study, research team contact, accepted response rate and collecting other data. The findings from the weighted regression for specific values of these six candidate predictors are shown in Table 7.

Table 7 shows that using a time contingent protocol rather than a signal contingent protocol was significantly associated with data completeness around 12% lower. Greater data collection burden was consistently associated with reduced data completeness. Every extra hour of daily measurement duration reduced data completeness by 2%, every additional day enrolled in the study reduced it by 0.5%, and collecting other data alongside ESM data reduced it by 19%. Finally, researcher-initiated contact with participants increased data completeness by 17.5% compared with participant-initiated contact.
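The per-day coefficient can be read with a worked example. This assumes that the reported 0.5% is a linear change in percentage points of completeness, and that it holds across the range of study lengths being compared; neither is stated explicitly. Under those assumptions, the single-predictor model would predict the following difference between a 7-day and a 14-day study:

```latex
\Delta \widehat{C} = \hat{\beta}_{\text{day}} \times \Delta d = (-0.5) \times (14 - 7) = -3.5 \ \text{percentage points}
```

Each predictor appears to have been assessed in its own model against the intercept-only model. Per-predictor differences of this kind should therefore not simply be added together across predictors.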

Sensitivity analysis

The analysis was repeated including only the 10 high-quality studies that reported data completeness as a percentage. The quality assessment ratings for each study are shown in Additional file 2. Fourteen studies were rated as high quality. The quality criteria met by the fewest studies were justification of sample size (met by 3 studies) and rationale for the sampling design (met by 5 studies). The weighted regression identified 3 design decisions that predicted data completeness: sample size (p = 0.012), other data collected (p = 0.006) and hardware used (p = 0.045). The statistically significant predictors, with beta values, standard errors and p-values, can be found in Additional file 3.

Discussion

This systematic review identified design decisions used in experience sampling studies of people with psychosis. The resulting typology identified three superordinate themes relating to design decisions in ESM studies: Study context, ESM approach and ESM implementation. Weighted regression was then used to identify six design decisions that predicted data completeness: ESM protocol, other data collected, length of time in study, measurement duration, accepted response rate and contact with the research team.

Objective 1: Typology of design choices used in digital ESM studies

A systematic search of published literature on ESM allowed the creation of a typology that accurately represents the methods used in the field [65]. The resulting typology can help researchers to choose designs, establish a common language and provide the field of ESM research with an organisational structure [66].

Four ESM protocols were included in the typology: event contingent, signal contingent, time contingent and hybrid assessments. Three ESM protocols are commonly cited in the ESM literature [67]. A questionnaire prompt may be sent to participants at pre-defined intervals (time contingent) or at random times (signal contingent), or the questionnaire may be completed when a predefined event has occurred (event contingent) [17]. The term ‘hybrid assessment’ has been used to describe combined protocols.

A sampling protocol is often selected based on the variables of interest [16]. The choice of protocol may depend on whether the variables are discrete, relating to distinct events such as social interactions, or continuous, with less clearly identifiable boundaries, such as mood [4]. Discrete events are well suited to event contingent protocols because they have definable beginning and end points. Rather than waiting for a signal or prompt, participants fill out a questionnaire when a discrete event occurs. Time contingent and signal contingent protocols are better suited to measuring continuous variables, because participants are not required to identify the beginning or end of a pre-defined event in order to complete a questionnaire [9].

Signal and time contingent protocols can be carried out at fixed or flexible time points [9]. Some authors described their signal contingent designs as stratified [37, 39, 43] or semi-random [16]. In stratified sampling, questionnaires are sent at random time points within pre-programmed time windows whose parameters are unknown to the participant [58]. An example is a protocol in which at least one beep occurred within each 90-min window, with a minimum of 15 min and a maximum of 3 h between consecutive beeps. The stratified protocol is intended to balance the requirement for collecting variable and valid data against participant burden [43]. The data may be more variable than with a time contingent protocol, because participants cannot anticipate the timing of the signal. Participants may therefore be less likely to alter their daily life or habits to accommodate the sampling. Some stratified sampling protocols personalised the daily measurement period to each participant’s waking hours [39, 58].
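The example stratified protocol can be sketched as follows. This is an illustrative simplification, not the protocol of any cited study. It draws exactly one beep uniformly at random within each consecutive 90-min block (the text says at least one), and redraws the whole day if any two beeps fall less than 15 min apart. With one beep per 90-min block, two consecutive beeps can be at most 2 × 90 = 180 min apart, so the 3-h maximum holds automatically. The function name, its parameters and the wake times are hypothetical.

```python
import random
from datetime import datetime, timedelta


def stratified_beeps(start: datetime, n_blocks: int, rng: random.Random,
                     block_min: float = 90, min_gap_min: float = 15,
                     max_tries: int = 1000) -> list:
    """One random beep per consecutive block, redrawn until all gaps are >= min_gap_min."""
    for _ in range(max_tries):
        beeps = [start + timedelta(minutes=i * block_min + rng.uniform(0, block_min))
                 for i in range(n_blocks)]
        gaps = [(b - a).total_seconds() / 60 for a, b in zip(beeps, beeps[1:])]
        if all(gap >= min_gap_min for gap in gaps):
            return beeps
    raise RuntimeError("could not satisfy the minimum gap; check the parameters")


# Personalising to waking hours: the same 10 blocks (15 h) start at each
# participant's hypothetical wake time.
early_riser = stratified_beeps(datetime(2021, 7, 1, 6, 30), 10, random.Random(7))
late_riser = stratified_beeps(datetime(2021, 7, 1, 9, 0), 10, random.Random(7))
for t in early_riser[:3]:
    print(t.strftime("%H:%M"))
```

Both participants are sampled for the same number of hours, but their windows begin and end at different times. Reusing the seed only makes the shift easy to see: the two schedules share one beep pattern, offset by the 150-min difference in wake time.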

Another design choice relating to ESM approach is whether the digital technology used for data collection was provided by the researchers or participants were required to use their own smartphones. The present review found there to be no significant difference in data completeness between studies which provided a device and those in which participants used their own phone. There is conflicting opinion about this in ESM literature [22]. Disadvantages of using participant-owned devices may include increased distractions from other applications on the phone and decreased uniformity of study procedures [68]. Advantages may include reduced study costs and also reduced requirement for participants to meet researchers face to face [69], which could reduce participant burden. A meta-analysis on ESM protocol compliance in substance users found no significant difference in adherence rates for participants who used their own phone compared with participants who used researcher provided devices [70].

The typology identified measures used in ESM studies that were derived from psychometrically validated scales, as well as measures that were not validated. Many of the non-validated measures were created by the research team for the purpose of the study. In ESM studies, researchers have often selected items from longer, validated measures and adapted the questions to fit the study time frame [22], often because of the lack of validated measures available for use in ESM studies [71]. Researchers should bear in mind that adding “right now” to a questionnaire item does not necessarily make it appropriate for measuring momentary states [3]. Measuring momentary experiences differs from measuring the general, retrospectively reported phenomena assessed by cross-sectional questionnaires [9]. When choosing questionnaires for ESM studies, researchers should take into account the momentary nature of the phenomena and develop items that accurately capture how they are experienced over the course of the study [72].

The typology also identified support offered to participants once data collection has commenced. This is a common method of encouraging protocol adherence [13]. It can take the form of technical support, motivational support, or emotional support.

This study found no significant association between data completeness and reimbursement to participants. However, reimbursement can involve a number of different strategies, including providing added incentives to participants who achieve high levels of protocol adherence, withholding payment if compliance falls below a certain threshold, and providing payment at regular face to face meetings [22]. The value of participant reimbursement has been found to be positively associated with protocol adherence [15]. However, the authors of that review note that they did not consider the strategy used to provide the incentives, and instead calculated a total incentive for each study. A meta-analysis that examined studies providing reimbursement proportional to the number of questionnaires completed found no increase in protocol adherence [70]. This difference in findings indicates that more research is needed in this area, particularly on the influence of different reimbursement strategies on data completeness.

Applicability to different populations

Design choices included in the typology are consistent with those described in suggested ESM reporting guidelines for research in psychopathology [22]. This suggests that the typology can be applied across different mental health populations. Future research is required to validate the typology for use with transdiagnostic groups. Examples include using it to collect data from participants with depression, or to measure discrete variables such as self-harming behaviours [73], for which event contingent protocols may be used more frequently [4].

Objective 2: Design decisions that predict data completeness

The ESM protocol used predicted data completeness: using a signal contingent rather than a time contingent protocol was associated with data completeness around 12% higher. This contrasts with previous research showing that signal contingent sampling may be perceived as more burdensome by study participants [74], leading to lower levels of adherence than other protocols [75]. The authors of the latter study suggest that the greater predictability of time contingent protocols may increase adherence, because participants are able to integrate responding to questionnaires into their daily routine [75]. Knowing when to expect questionnaire prompts may allow participants to plan their daily tasks around the scheduled questionnaires [15].

A study of ESM in participants with substance dependence found that participants may prefer to isolate themselves, or to be in a quiet environment, when responding to questionnaires [76]. In this case, the additional burden of anticipating the signal at a certain time and finding a quiet environment may account for lower data completeness with a time contingent protocol. Similarly, participants with psychosis in our review may have experienced cognitive impairments such as reduced attention, which would limit their ability to integrate data collection into daily life. A signal contingent prompt that asks for an immediate response may therefore be easier to act on. Each ESM protocol has advantages and disadvantages, and findings on their effects on data completeness are inconsistent. It has therefore been suggested that the choice of protocol should be based on the requirements of the study [15], for example the nature of the variables of interest [4].

Design decisions relating to scheduling were found to predict data completeness. Longer study lengths and longer daily measurement duration predicted lower levels of data completeness. For every day participants were enrolled in a study, data completeness reduced by 0.5%. Similarly, every additional hour of measurement duration per day reduced data completeness by 2%. These findings are consistent with previous research. One study analysed predictors of adherence to ESM protocols in a pooled data set of 10 ESM studies. The sample consisted of 1,717 participants, of whom 15% had experienced psychosis. The results showed that ESM protocol adherence declined over the study days [13]. More generally, the problems experienced by people living with psychosis, such as negative symptoms and amotivation, may require lower burden data collection procedures.

Some studies have customised the daily sampling period for each participant, for example by personalising it to each participant’s waking hours [29, 34, 36]. Sampling took place for the same number of hours per day for each participant but began and ended at different times. This review only included the total number of hours of sampling per day in the analysis. Future research could investigate the relationship between personalised scheduling and data completeness, including schedules that integrate diurnal variation in symptomatology into data collection. For example, an individual who is more preoccupied with hallucinatory experiences in the morning may be more able to respond to data collection prompts later in the day.

Monitoring participants once ESM has commenced has been recommended as a way to encourage protocol adherence [22]. Support from researchers during the data collection phase is initiated either by researchers or by participants. Researcher-initiated contact with participants throughout the study increased data completeness by 17.5% compared with participant-initiated contact. These findings support active researcher support once data collection has commenced, which may be particularly beneficial if participants find the study procedures burdensome.

Strengths and limitations

One strength of this study is the rigorous search strategy, which was designed in collaboration with two information specialists with expertise in conducting systematic review searches in the field of mental health. Another strength is the use of several analysts with differing expertise, including clinical expertise, mental health services research, and technology research and design.

Several limitations can be identified. Only studies that reported data completeness, or for which it could be calculated, were included. Studies that did not report completeness could have been included for Objective 1, which may have increased the generalisability of the findings. Similarly, only studies that used digital ESM were included, which may also have limited generalisability. Title, abstract and full-text screening was carried out by only one author (ED), which may have introduced inclusion bias. In the absence of an appropriate quality assessment tool, recommended reporting guidelines were used instead, and these may not fully capture study quality. Finally, a meta-regression could not be carried out, and the weighted regression conducted instead does not account for study-level estimates of variance, so conclusions drawn from the analysis should be interpreted with caution. Additionally, only a small number of studies (n = 10) were included in the sensitivity analysis.
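The difference between a weighted regression and a meta-regression lies in the weights. The sketch below assumes, for illustration only, that studies are weighted by sample size, and contrasts this with inverse-variance weights of the kind a fixed-effect meta-regression would use (a random-effects meta-regression would additionally estimate a between-study variance). All study values are hypothetical, and the review's own weighting scheme is not restated in this section.

```python
import numpy as np


def weighted_regression(x, y, weights):
    """Weighted least squares fit of y = b0 + b1 * x.

    Solves the normal equations (X'WX) b = X'Wy with W = diag(weights)
    and returns [b0, b1].
    """
    X = np.column_stack([np.ones(len(x)), np.asarray(x, dtype=float)])
    W = np.diag(np.asarray(weights, dtype=float))
    y = np.asarray(y, dtype=float)
    return np.linalg.solve(X.T @ W @ X, X.T @ W @ y)


# Hypothetical study-level data (NOT the reviewed studies).
days = [6, 7, 14, 21, 30]                     # days enrolled
completeness = [78, 74, 75, 68, 66]           # % of prompts answered
n_participants = [20, 45, 30, 60, 25]
var_completeness = [9.0, 4.0, 6.0, 2.5, 8.0]  # sampling variances, often unreported

b_sample_size = weighted_regression(days, completeness, n_participants)
b_inverse_var = weighted_regression(days, completeness,
                                    [1 / v for v in var_completeness])
print("sample-size weights:", b_sample_size.round(2))
print("inverse-variance weights:", b_inverse_var.round(2))
```

Sample-size weights ignore how precisely each study's completeness was estimated, whereas inverse-variance weights require those variances, which primary studies often do not report.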

Conclusions

The study addresses a knowledge gap related to design decisions for ESM studies recruiting people with psychosis. The typology of design choices used in ESM studies identifies key design decisions to consider when designing and implementing an experience sampling study. The typology could be used to inform the design of future experience sampling studies in transdiagnostic mental health populations. The review also identifies a number of predictors of data completeness. This knowledge could help future researchers to increase the likelihood of achieving fuller data sets.

Future research might seek to add additional design choices to the typology and to refine design decisions as the field advances. Future research may also examine how the typology is used by researchers when designing ESM studies. Researchers may also validate the typology for use with different mental health populations.

Availability of data and materials

All data generated or analysed during this study are included in this published article and its supplementary information files.

Abbreviations

- ESM: Experience Sampling Method
- DAT: Data abstraction table

References

Bolger N, Laurenceau J-P. Preface. Intensive longitudinal methods. London: Guilford; 2013. p. ix–x.

Palmier-Claus JE, Haddock G, Varese F. Why the experience sampling method. In: Palmier-Claus JE, Haddock G, Varese F, editors. Experience sampling in mental health research. London: Routledge; 2019. p. 1–7.

Kimhy D, Vakhrusheva J. Experience sampling in the study of psychosis. In: Palmier-Claus JE, Haddock G, Varese F, editors. Experience sampling in mental health research. London: Routledge; 2019. p. 38–52.

Shiffman S, Stone AA, Hufford MR. Ecological momentary assessment. Annu Rev Clin Psychol. 2008;4:1–32.

Burke LE, Shiffman S, Music E, Styn MA, Kriska A, Smailagic A, et al. Ecological momentary assessment in behavioral research: addressing technological and human participant challenges. J Med Internet Res. 2017;19(3):e77. https://doi.org/10.2196/jmir.7138.

Conner T, Mehl M. Ambulatory assessment: Methods for studying everyday life. Emerging trends in the social and behavioral sciences: An interdisciplinary, searchable, and linkable resource. 2015. https://onlinelibrary.wiley.com/browse/book/10.1002/9781118900772/all-topics. Accessed 28 Oct 2022.

Bolger N, Davis A, Rafaeli E. Diary methods: Capturing life as it is lived. Annu Rev Psychol. 2003;54(1):579–616.

Kimhy D, Myin-Germeys I, Palmier-Claus J, Swendsen J. Mobile assessment guide for research in schizophrenia and severe mental disorders. Schizophr Bull. 2012;38(3):386–95.

Myin-Germeys I, Kasanova Z, Vaessen T, Vachon H, Kirtley O, Viechtbauer W, et al. Experience sampling methodology in mental health research: new insights and technical developments. World Psychiatry. 2018;17(2):123–32.

Ben-Zeev D, McHugo GJ, Xie H, Dobbins K, Young MA. Comparing retrospective reports to real-time/real-place mobile assessments in individuals with schizophrenia and a nonclinical comparison group. Schizophr Bull. 2012;38(3):396–404.

Depp C, Kaufmann CN, Granholm E, Thompson W. Emerging applications of prediction in experience sampling. In: Palmier-Claus JE, Haddock G, Varese F, editors. Experience sampling in mental health research. London: Routledge; 2019.

Fuller-Tyszkiewicz M, Skouteris H, Richardson B, Blore J, Holmes M, Mills J. Does the burden of the experience sampling method undermine data quality in state body image research? Body Image. 2013;10(4):607–13.

Rintala A, Wampers M, Myin-Germeys I, Viechtbauer W. Response compliance and predictors thereof in studies using the experience sampling method. Psychol Assess. 2019;31(2):226.

McLean D, Nakamura J, Csikszentmihalyi M. Explaining System Missing: Missing Data and Experience Sampling Method. Soc Psychol Personal Sci. 2017;8(4):434–41.

Vachon H, Viechtbauer W, Rintala A, Myin-Germeys I. Compliance and retention with the experience sampling method over the continuum of severe mental disorders: meta-analysis and recommendations. J Med Internet Res. 2019;21(12):e14475.

Janssens K, Bos E, Rosmalen J, Wichers M, Riese H. A qualitative approach to guide choices for diary design. BMC Med Res Methodol. 2018;18(1):140.

Wheeler L, Reis HT. Self-recording of everyday life events: Origins, types, and uses. J Pers. 1991;59(3):339–54.

Eisele G, Vachon H, Lafit G, Kuppens P, Houben M, Myin-Germeys I, et al. The effects of sampling frequency and questionnaire length on perceived burden, compliance, and careless responding in experience sampling data in a student population. Assessment. 2020;29(2):136–51. https://doi.org/10.1177/1073191120957102.

Beissel-Durrant G. A Typology of Research Methods Within the Social Sciences. 2004.

Moher D, Liberati A, Tetzlaff J, Altman DG. Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. Ann Intern Med. 2009;151(4):264–9.

Glaser B, Strauss A. The discovery of grounded theory. New Brunswick: Aldine Transaction; 1967.

Trull TJ, Ebner-Priemer UW. Ambulatory assessment in psychopathology research: A review of recommended reporting guidelines and current practices. J Abnorm Psychol. 2020;129(1):56.

Bailey K. Typologies and taxonomies in social science. In: Bailey K, editor. Typologies and taxonomies. California: Sage publications; 1994. p. 1–16.

Goetz J, Lecompte M. Ethnographic Research and the Problem of Data Reduction. Anthropol Educ Q. 1981;12:51–70.

Dye J, Schatz I, Rosenberg B, Coleman S. Constant comparison method: A kaleidoscope of data. The qualitative report. 2000;4:1–10.

Glaser B. The constant comparative method of qualitative analysis. Soc Probl. 1965;12:436–45.

Page M, McKenzie J, Bossuyt P, Boutron I, Hoffmann T, Mulrow C. The PRISMA 2020 statement: an updated guideline for reporting systematic reviews. BMJ. 2021;372:n71.

Ben-Zeev D, Frounfelker R, Morris SB, Corrigan PW. Predictors of self-stigma in schizophrenia: New insights using mobile technologies. J Dual Diagn. 2012;8(4):305–14.

Ben-Zeev D, Morris S, Swendsen J, Granholm E. Predicting the occurrence, conviction, distress, and disruption of different delusional experiences in the daily life of people with schizophrenia. Schizophr Bull. 2012;38(4):826–37.

So SH-W, Peters ER, Swendsen J, Garety PA, Kapur S. Detecting improvements in acute psychotic symptoms using experience sampling methodology. Psychiatry Res. 2013;210(1):82–8.

Reininghaus U, Kempton MJ, Valmaggia L, Craig TK, Garety P, Onyejiaka A, et al. Stress sensitivity, aberrant salience, and threat anticipation in early psychosis: an experience sampling study. Schizophr Bull. 2016;42(3):712–22.

Klippel A, Myin-Germeys I, Chavez-Baldini U, Preacher KJ, Kempton M, Valmaggia L, et al. Modeling the interplay between psychological processes and adverse, stressful contexts and experiences in pathways to psychosis: an experience sampling study. Schizophr Bull. 2017;43(2):302–15.

Moitra E, Gaudiano BA, Davis CH, Ben-Zeev D. Feasibility and acceptability of post-hospitalization ecological momentary assessment in patients with psychotic-spectrum disorders. Compr Psychiatry. 2017;74:204–13.

Dupuy M, Misdrahi D, N’Kaoua B, Tessier A, Bouvard A, Schweitzer P, et al. Mobile cognitive testing in patients with schizophrenia: A controlled study of feasibility and validity. Journal de Thérapie Comportementale et Cognitive. 2018;28(4):204–13.

Ainsworth J, Palmier-Claus JE, Machin M, Barrowclough C, Dunn G, Rogers A, et al. A comparison of two delivery modalities of a mobile phone-based assessment for serious mental illness: native smartphone application vs text-messaging only implementations. J Med Internet Res. 2013;15(4):e60.

Granholm E, Holden JL, Mikhael T, Link PC, Swendsen J, Depp C, et al. What Do People With Schizophrenia Do All Day? Ecological Momentary Assessment of Real-World Functioning in Schizophrenia. Schizophr Bull. 2020;46(2):242–51.

Bell IH, Rossell SL, Farhall J, Hayward M, Lim MH, Fielding-Smith SF, et al. Pilot randomised controlled trial of a brief coping-focused intervention for hearing voices blended with smartphone-based ecological momentary assessment and intervention (SAVVy): Feasibility, acceptability and preliminary clinical outcomes. Schizophr Res. 2020;216:479–87.

Reininghaus U, Oorschot M, Moritz S, Gayer-Anderson C, Kempton MJ, Valmaggia L, et al. Liberal acceptance bias, momentary aberrant salience, and psychosis: an experimental experience sampling study. Schizophr Bull. 2019;45(4):871–82.

So SH-w, Chung LK-h, Tse C-Y, Chan SS-m, Chong GH-c, Hung KS-y, et al. Moment-to-moment dynamics between auditory verbal hallucinations and negative affect and the role of beliefs about voices. Psychol Med. 2021;51(4):661–7.

Jongeneel A, Aalbers G, Bell I, Fried EI, Delespaul P, Riper H, et al. A time-series network approach to auditory verbal hallucinations: Examining dynamic interactions using experience sampling methodology. Schizophr Res. 2020;215:148–56.

Granholm E, Ben-Zeev D, Fulford D, Swendsen J. Ecological momentary assessment of social functioning in schizophrenia: impact of performance appraisals and affect on social interactions. Schizophr Res. 2013;145(1–3):120–4.

Brenner CJ, Ben-Zeev D. Affective forecasting in schizophrenia: comparing predictions to real-time Ecological Momentary Assessment (EMA) ratings. Psychiatr Rehabil J. 2014;37(4):316.

Hartley S, Haddock G, e Sa DV, Emsley R, Barrowclough C. An experience sampling study of worry and rumination in psychosis. Psychol Med. 2014;44(8):1605–14.

Kimhy D, Vakhrusheva J, Liu Y, Wang Y. Use of mobile assessment technologies in inpatient psychiatric settings. Asian J Psychiatr. 2014;10:90–5.

Edwards CJ, Cella M, Tarrier N, Wykes T. The optimisation of experience sampling protocols in people with schizophrenia. Psychiatry Res. 2016;244:289–93.

Mulligan LD, Haddock G, Emsley R, Neil ST, Kyle SD. High resolution examination of the role of sleep disturbance in predicting functioning and psychotic symptoms in schizophrenia: A novel experience sampling study. J Abnorm Psychol. 2016;125(6):788.

Kimhy D, Wall MM, Hansen MC, Vakhrusheva J, Choi CJ, Delespaul P, et al. Autonomic regulation and auditory hallucinations in individuals with schizophrenia: an experience sampling study. Schizophr Bull. 2017;43(4):754–63.

Steenkamp L, Weijers J, Gerrmann J, Eurelings-Bontekoe E, Selten J-P. The relationship between childhood abuse and severity of psychosis is mediated by loneliness: an experience sampling study. Schizophr Res. 2022;241:306–11.

Swendsen J, Ben-Zeev D, Granholm E. Real-time electronic ambulatory monitoring of substance use and symptom expression in schizophrenia. Am J Psychiatry. 2011;168(2):202–9.

Moran EK, Culbreth AJ, Barch DM. Ecological momentary assessment of negative symptoms in schizophrenia: Relationships to effort-based decision making and reinforcement learning. J Abnorm Psychol. 2017;126(1):96.

Kimhy D, Delespaul P, Ahn H, Cai S, Shikhman M, Lieberman JA, et al. Concurrent measurement of “real-world” stress and arousal in individuals with psychosis: assessing the feasibility and validity of a novel methodology. Schizophr Bull. 2010;36(6):1131–9.

Blum LH, Vakhrusheva J, Saperstein A, Khan S, Chang RW, Hansen MC, et al. Depressed mood in individuals with schizophrenia: a comparison of retrospective and real-time measures. Psychiatry Res. 2015;227(2–3):318–23.

Edwards CJ, Cella M, Emsley R, Tarrier N, Wykes TH. Exploring the relationship between the anticipation and experience of pleasure in people with schizophrenia: An experience sampling study. Schizophr Res. 2018;202:72–9.

Cella M, He Z, Killikelly C, Okruszek Ł, Lewis S, Wykes T. Blending active and passive digital technology methods to improve symptom monitoring in early psychosis. Early Interv Psychiatry. 2019;13(5):1271–5.

Visser KF, Esfahlani FZ, Sayama H, Strauss GP. An ecological momentary assessment evaluation of emotion regulation abnormalities in schizophrenia. Psychol Med. 2018;48(14):2337–45.

Johnson EI, Grondin O, Barrault M, Faytout M, Helbig S, Husky M, et al. Computerized ambulatory monitoring in psychiatry: a multi-site collaborative study of acceptability, compliance, and reactivity. Int J Methods Psychiatr Res. 2009;18(1):48–57.

Fielding-Smith SF, Greenwood KE, Wichers M, Peters E, Hayward M. Associations between responses to voices, distress and appraisals during daily life: an ecological validation of the cognitive behavioural model. Psychol Med. 2022;52(3):538–47.

Harvey PD, Miller ML, Moore RC, Depp CA, Parrish EM, Pinkham AE. Capturing Clinical Symptoms with Ecological Momentary Assessment: Convergence of Momentary Reports of Psychotic and Mood Symptoms with Diagnoses and Standard Clinical Assessments. Innov Clin Neurosci. 2021;18(1–3):24.

Hanssen E, Balvert S, Oorschot M, Borkelmans K, van Os J, Delespaul P, et al. An ecological momentary intervention incorporating personalised feedback to improve symptoms and social functioning in schizophrenia spectrum disorders. Psychiatry Res. 2020;284:112695.

Ben-Zeev D, Buck B, Chander A, Brian R, Wang W, Atkins D, et al. Mobile RDoC: Using Smartphones to Understand the Relationship Between Auditory Verbal Hallucinations and Need for Care. Schizophrenia Bulletin Open. 2020;1(1):sgaa060.

Durand D, Strassnig M, Moore R, Depp C, Pinkham A, Harvey P. Social Functioning and Social Cognition in Schizophrenia and Bipolar Disorder: Using Ecological Momentary Assessment to Identify the Origin of Bias Self Reports. Biol Psychiat. 2021;89(9 Supplement):S131–2.

Ludwig L, Mehl S, Krkovic K, Lincoln TM. Effectiveness of emotion regulation in daily life in individuals with psychosis and nonclinical controls—An experience-sampling study. J Abnorm Psychol. 2020;129(4):408.

Hermans KS, Myin-Germeys I, Gayer-Anderson C, Kempton MJ, Valmaggia L, McGuire P, et al. Elucidating negative symptoms in the daily life of individuals in the early stages of psychosis. Psychol Med. 2021;51(15):2599–609.

Strassnig M, Harvey P, Depp C, Granholm E. Smartphone-Based Assessment of Real-World Sedentary Behavior in Schizophrenia. Biol Psychiat. 2020;87(9 Supplement):S339–40.

Du Toit JL, Mouton J. A typology of designs for social research in the built environment. Int J Soc Res Methodol. 2013;16(2):125–39.

Teddlie C, Tashakkori A. A general typology of research designs featuring mixed methods. Res Sch. 2006;13(1):12–28.

Himmelstein PH, Woods WC, Wright AG. A comparison of signal- and event-contingent ambulatory assessment of interpersonal behavior and affect in social situations. Psychol Assess. 2019;31(7):952.

Meers K, Dejonckheere E, Kalokerinos EK, Rummens K, Kuppens P. mobileQ: A free user-friendly application for collecting experience sampling data. Behav Res Methods. 2020;52(4):1510–5.

Hofmann W, Patel PV. SurveySignal: A convenient solution for experience sampling research using participants’ own smartphones. Soc Sci Comput Rev. 2015;33(2):235–53.

Jones A, Remmerswaal D, Verveer I, Robinson E, Franken IH, Wen CKF, et al. Compliance with ecological momentary assessment protocols in substance users: a meta-analysis. Addiction. 2019;114(4):609–19.

Fisher CD, To ML. Using experience sampling methodology in organizational behavior. J Organ Behav. 2012;33(7):865–77.

Varese F, Haddock G, Palmier-Claus J. Designing and conducting an experience sampling study. In: Palmier-Claus J, Haddock G, Varese F, editors. Experience sampling in mental health research. New York: Routledge; 2019. p. 9–17.

Armey MF, Schatten HT, Haradhvala N, Miller IW. Ecological momentary assessment (EMA) of depression-related phenomena. Curr Opin Psychol. 2015;4:21–5.

Piasecki TM, Hufford MR, Solhan M, Trull TJ. Assessing clients in their natural environments with electronic diaries: rationale, benefits, limitations, and barriers. Psychol Assess. 2007;19(1):25.

Walsh E, Brinker JK. Temporal considerations for self-report research using short message service. Journal of Media Psychology. 2015.

Serre F, Fatseas M, Debrabant R, Alexandre J-M, Auriacombe M, Swendsen J. Ecological momentary assessment in alcohol, tobacco, cannabis and opiate dependence: A comparison of feasibility and validity. Drug Alcohol Depend. 2012;126(1–2):118–23.

Acknowledgements

Not applicable.

Funding

This research was funded by the NIHR Nottingham Biomedical Research Centre. MC is additionally supported by NIHR MindTech MedTech Co-operative. The views expressed are those of the author(s) and not necessarily those of the NHS, the NIHR or the Department of Health and Social Care.

Author information

Contributions

This systematic review was planned and designed by ED, MS, CC and MC. The literature search was carried out by EY and NT. The screening was carried out by ED. Quality checking was performed by ED. Data were analysed by ED, FN, CC, MC, CN and MS. All the authors have read and approved the manuscript.

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Additional file 1.

Sources, search strategy, and study selection.

Additional file 2.

Data abstraction table.

Additional file 3.

Additional Table 1. Overall p-values for predictors of percentage completeness for high quality studies only (n=10). Additional Table 2. Statistically significant predictors with beta values, standard errors and p-values, for high quality studies (n=10).

Additional file 4.

Quality assessment.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Deakin, E., Ng, F., Young, E. et al. Design decisions and data completeness for experience sampling methods used in psychosis: systematic review. BMC Psychiatry 22, 669 (2022). https://doi.org/10.1186/s12888-022-04319-x

DOI: https://doi.org/10.1186/s12888-022-04319-x