Abstract

Background

Prognostic indicators, treatments, and survival estimates vary by cancer type. Therefore, disease-specific models are needed to estimate patient survival. Our primary aim was to develop models to estimate survival duration after treatment for skeletal-related events (SREs) (symptomatic bone metastasis, including impending or actual pathologic fractures) in men with metastatic bone disease due to prostate cancer. Such disease-specific models could be added to the PATHFx clinical-decision support tool, which is available worldwide, free of charge. Our secondary aim was to determine disease-specific factors that should be included in an international cancer registry.

Methods

We analyzed records of 438 men with metastatic prostate cancer who sustained SREs that required treatment with radiotherapy or surgery from 1989–2017. We developed and validated 6 models for 1-, 2-, 3-, 4-, 5-, and 10-year survival after treatment. Model performance was evaluated using calibration analysis, Brier scores, area under the receiver operator characteristic curve (AUC), and decision curve analysis to determine the models’ clinical utility. We characterized the magnitude and direction of model features.

Results

The models exhibited acceptable calibration, accuracy (Brier scores < 0.20), and classification ability (AUCs > 0.73). Decision curve analysis determined that all 6 models were suitable for clinical use. The order of feature importance was distinct for each model. In all models, 3 factors were positively associated with survival duration: younger age at metastasis diagnosis, proximal prostate-specific antigen (PSA) < 10 ng/mL, and slow-rising alkaline phosphatase velocity (APV).

Conclusions

We developed models that estimate survival duration in patients with metastatic bone disease due to prostate cancer. These models require external validation but should meanwhile be included in the PATHFx tool. PSA and APV data should be recorded in an international cancer registry.

Similar content being viewed by others

Introduction

In the United States, prostate cancer is the most common diagnosed malignancy and the second leading cause of cancer death in men [1, 2]. The clinical treatment decision-making process is challenging because prostate cancer is a complex disease. Several tumor markers and biomarkers are associated with prognosis. For example, proximal prostate-specific antigen (PSA) (defined as the most recent value measured at least 6 months before developing metastasis) < 10 ng/mL is an independent predictor of metastasis-free survival among men with biochemical recurrence after undergoing radical prostatectomy [3]. In addition, the change in alkaline phosphatase concentration over time, alkaline phosphatase velocity (APV), is a prognostic biomarker associated with overall survival in men with castration-resistant prostate cancer [4, 5]. Metwalli et al. [5] found that higher APV was also an independent predictor of overall survival, as well as for bone metastasis–free survival in patients with castration-resistant prostate cancer, where APV ≥ 50 (upper quartile) is “quick rising”, APV of 0 is “no rising”, and all other APV values are “slow rising.” High APV (uppermost quartile of velocity) is also predictive of distant metastasis–free survival in patients who have undergone radical prostatectomy and experienced biochemical recurrence [4].

The approach to treating men with metastatic bone disease due to prostate cancer requires balanced consideration of clinical benefits, life expectancy, comorbidities, quality of life, and the risk of adverse effects. Clinical practice guidelines [6] published recently by the Musculoskeletal Tumor Society recommend that physicians consider using clinical support tools, such as PATHFx, available worldwide at no cost at www.pathfx.org. The tool is designed to estimate a patient’s survival trajectory by estimating survival after treatment for a skeletal-related event (SRE), which is defined as pathologic fracture; spinal cord compression requiring surgical treatment; or nonsurgical treatment, including radiotherapy, cryotherapy, or radiofrequency ablation. PATHFx currently estimates the likelihood of survival at 1, 3, 6, 12, 18 and 24 months after surgical or nonsurgical intervention or an SRE [7,8,9,10,11]. However, patients with metastatic prostate cancer often live much longer than 24 months after an SRE. For this reason, disease-specific models should be developed to augment—or take the place of—the generic models currently powering the PATHFx decision-making support tool. Its use supports shared decision making by ensuring that treatment strategies align with each patient’s personal survival trajectory and functional goals.

PATHFx has been externally validated in several centers worldwide, continual advances in the treatment of patients with advanced cancer require that the models be updated regularly. For this reason, we updated the six PATHFx models using recent data obtained from patients undergoing contemporary systemic therapy, including targeted agents, and immunotherapy [12]. Validation data for this study were derived from the International Bone Metastasis Registry, which helps ensure that the updated models are applicable to various patient populations worldwide. This commitment to lifecycle management ensures that PATHFx remains applicable as treatment philosophies change and new therapies become available, thereby providing clinicians with the most current, broadly applicable tool to estimate survival in this patient population.

Currently, the PATHFx tool groups cancer diagnoses according to historical data on survival rates. Because prognostic indicators, treatment protocols, and survival estimates vary widely by cancer type, it may be beneficial to develop disease-specific survival models. Such models would make use of prognostic information unique to men with metastatic prostate cancer, such as proximal PSA and APV.

Our primary purpose was to develop models to estimate the duration of survival after treatment for skeletal-related events (SREs) in men with metastatic bone disease due to prostate cancer. Such models could inform the PATHFx clinical decision support tool, which currently groups cancer types according to historical survival data. Our secondary purpose was to determine disease-specific factors that should be included in an international cancer registry.

Methods

Guidelines

This retrospective prognostic classification study followed the Transparent Reporting of a Multivariable Prediction Model for Individual Prognosis or Diagnosis guidelines [13] and the Guidelines for Developing and Reporting Machine Learning Predictive Models in Biomedical Research [14].

Data source and patient selection criteria

The study population comprised 29,000 men enrolled in the institutional review board–approved Center for Prostate Disease Research (CPDR) Multicenter National Database Program [15]. We reviewed the records of men who sustained a SRE due to metastatic prostate cancer and subsequently required treatment with radiotherapy or surgery between 1989 and 2017. There were 1,404 men with prostate cancer that metastasized to bone in the data set. Of these, 438 patients had sufficient information to calculate APV, defined as the slope of the linear regression line of alkaline phosphatase values obtained after the diagnosis of metastatic bone disease, plotted against time in years.

Outcome

We developed six models designed to estimate the likelihood of survival at 1, 2, 3, 4, 5, and 10 years after treatment of an SRE.

Demographic, clinical, and pathologic features

Consistent with previous methods of using APV as a prognostic feature, and because of the strong skew and non-normality of the APV distribution, APV was binned into the uppermost quartile (“quick-rising”) of all observed values and then compared with the lower 3 quartiles combined (“slow-rising”) and zero value (“no-rising”) [4, 5]. Proximal PSA, defined as PSA concentration at the time of diagnosis of metastatic bone disease, was missing in 11% of these records. Data for all other features were complete. For each consecutive time point, the number of patients decreased because of censoring. Patient demographic and clinical data extracted for analysis were as follows: self-reported race (black or white/other), presence of comorbidities, age at first known bone metastasis, proximal PSA, APV values, method of local treatment of the primary tumor (radiotherapy or surgery), adjuvant therapy (radiotherapy, chemotherapy, and hormonal therapy) and date of death.

Categorical and continuous features included in the models and the proportions of missing data are listed in Tables 1 and 2. We used Bayes factor (BF) analysis to compare the cohorts. BF analysis considers the strength of evidence supporting or contradicting the study hypothesis. The analysis is categorized by the following: BF ≥ 100 indicates strong supporting evidence for the alternative hypothesis; BF < 100 indicates strong supporting evidence for the null hypothesis; and BF of approximately 0 indicates no probable difference between the 2 groups [16, 17].

Model development

We selected gradient boosting machine (GBM) modeling because it is a decision tree machine learning technique that builds an ensemble of shallow and weak trees or learners in succession (rather at than all at once as in random forest machine learning), so each tree learns and improves from the previous iteration. GBM modeling trains models in a gradual, additive, and sequential manner, which strengthens the final product [18, 19]. The final model is built on the strength of previous, smaller predictors.

We used Python, version 3.7.4 (Python Software Foundation, Beaverton, OR) to develop the models. For each model, we split the data 80/20 into train and test groups and a further 80/20 split of the train group into train and validation sets stratified by the binary outcome feature across all groups. Data were shuffled to create random order before splitting into listed groups. Because the number of patients at each time point differed, the exact number of records in the train, validation, and test groups vary by time point and cohort. Data types were changed to integer, float, and object as applicable. Missing data were imputed using the multiple imputation by chained equations algorithm from the IterativeImputer package. Our data were preprocessed to scale using the MinMaxScaler package of sklearn.preprocessing. Six GBM models were created, 1 for each of the 6 survival time points, using the train set and the GradientBoostingClassifier package in sklearn.ensemble.

Feature selection

Because of our limited data set, we made all categorical features binary. This allowed for a more transparent analysis of results. We performed feature selection using Boruta Random Forest algorithm; all features were included. Features were further characterized for magnitude and direction of each feature’s association with the outcome (patient survival) using the local interpretable model–agnostic explanations (LIME) package in R software for the models [20].

Model regulation

GBM models continue improving to minimize error at the risk of overfitting. For internal validation, we used a cross-validated grid search to direct our choice of parameters using GridSearch CV within sklearn.model_selection package (Python Software Foundation). Our scoring measure of interest was the AUC. For each model, we selected parameters that produced the highest AUC in the validation set. Parameters of interest were learning rate, number of estimators, maximum depth of tree, minimum number of samples per node to be considered for splitting, minimum number of samples required in a terminal node, and subsample percentage included for each tree.

Performance assessment

We created predictive values for each model by using each corresponding test set. First, calibration plots were used to visualize the concurrence of the predicted probabilities with the observed frequencies in the data set. Then, discriminatory ability was determined by estimating the AUC. Next, Brier scores were used to determine overall accuracy of the predictions. The Brier score measures distance between the actual outcome and the predicted probability assigned to the outcome for each observation, where the best possible Brier score for accuracy is 0 and the worst is 1 [21, 22]. Finally, we determined whether the models possessed clinical utility by using decision curve analysis [23, 24], as described previously in this patient population [12].

Results

Participants

Continuous and categorical features for the train and test sets are listed in Tables 1 and 2, respectively. As expected, we found no difference between continuous features in the train and test sets (BF of approximately 0) (Table 1). When comparing categorical features, we found no difference between the 2 groups (BF of approximately 0) for treatment type and survival (yes/no) at any time point (Table 2).

Model development and validation

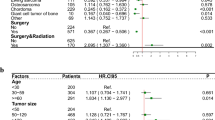

The AUC was between 0.73 and 0.86 for all 6 models (Table 3). Brier scores were < 0.20 and demonstrated the model’s predictions were accurate. The relative influence table for the 6 models in Fig. 1 shows the degree of influence for each feature on the overall model. Proximal PSA and patient age at the time of first-known SRE consistently had the most influence across all models. Treatment method and APV became increasingly influential with the later time period models.

A-F This figure shows both the relative influence of each feature and whether the feature has a positive or negative association with survival. The directionality (to support or contradict the outcome of interest) of each level of the model features is ranked by average weight of feature level across all cases. Blue bars (positive feature weight) are associated with features that are associated with survival; red bars (negative feature weight) represent features that are negatively associated with survival at (A) 1 year, (B) 2 years, (C) 3 years, (D) 4 years, (E) 5 years, and (F) 10 years

Global application (Model-level interpretation)

In earlier survival estimate models, proximal PSA and age at diagnosis had more influence on the outcome variable. Notably, APV was an important feature at all time points; quick-rising APV was more influential in later survival estimate mode. Unexpectedly, the method of treating the primary disease also had strong influence; however, treatment-naïve status was more influential on survival than was radiotherapy and/or chemotherapy.

Local application (Patient-level interpretation)

To trust and apply models correctly, clinicians must be able to interpret them at the patient level [20]. The positive and negative directionality of each model is shown in Fig. 1. Overall, features positively associated with survival were younger age at metastasis diagnosis, proximal PSA < 10 ng/mL, slow-rising APV, no-rising APV, radiotherapy treatment, and hormonal or chemotherapy treatment (Fig. 1). Features negatively associated with survival were older age at metastasis diagnosis, proximal PSA > 10 ng/mL, quick-rising APV values, and being treatment-naïve (Fig. 1). The patient-level interpretations were consistent with global-level model application.

Clinical utility

Decision curve analysis showed that physicians may achieve better outcomes by using the 6 models described above, rather than assuming all will survive, or none will survive for 1, 2, 3, 4, 5, and 10 years, respectively (Fig. 2). Decision curve analysis measures the net benefit of using a clinical support tool across different threshold probabilities defined as the point of equipoise when considering 2 courses of treatment (e.g., nonsurgical vs. surgical for short-term survival estimates, less invasive vs. more invasive for longer-term estimates). Low-threshold probabilities are associated with healthier patients, whereby physicians have a low threshold to offer surgery. In contrast, high-threshold probabilities are associated with patients in which surgery carries greater risk.

A-F Decision curve analyses of each of the 6 models designed to estimate patient survival at (A) 1 year, (B) 2 years, (C) 3 years, (D) 4 years, (E) 5 years, and (F) 6 years after treatment or surgery for skeletal-related events due to bone metastasis from prostate cancer. These results suggest that all the models (dotted line) should be used rather than assuming all patients (continuous line) or no patients (thick continuous line) will survive longer than the period of each predictive model

Discussion

The duration of survival for prostate cancer patients with metastatic bone disease is difficult to predict. We successfully developed models to estimate survival in patients with prostate cancer who have metastatic bone disease to help clinicians navigate treatment algorithms. Previous studies have shown that APV is predictive of distant metastasis–free survival [4, 5, 25]. In this study, we showed that machine learning–based models can predict survival in prostate cancer patients, and that these models improve in both discriminatory ability and accuracy with the addition of APV data. Specifically, the patient’s primary disease treatment type and APV became increasingly influential in the later time period models. Although further external validation studies are required, these data justify inclusion of these models in the PATHFx tool, an open-source clinical decision-making support tool for survival estimation (https://www.pathfx.org) [7].

Patient race and ethnicity may provide important information on genetic and socioeconomic factors pertaining to disease [26, 27]. Race was self-reported by patients at the time of enrollment and divided into 2 broad categories (white/other or black). Using the CPDR database, Cullen et al. [28] found self-reported black race was not a predictor of poorer overall survival among participants in the CPDR Multicenter National Database Program undergoing active surveillance, despite race-based differences in baseline clinical risk characteristics.

Although PATHFx is validated, it does not offer disease-specific estimates of survival [12]. The prostate cancer–specific models at 1 and 2 years can be compared directly with the PATHFx 1- and 2-year models in terms of discriminatory ability (AUC) and accuracy of prediction (Brier score). The new 1-year prostate disease–specific model we developed (AUC = 0.85; Brier score = 0.07) was superior to the PATHFx (version 3.0) 1-year model (AUC = 0.78; Brier score = 0.18). However, the 2-year prostate disease–specific model (AUC = 0.80; Brier score = 0.17) was no better than the PATHFx 2-year model (AUC = 0.82; Brier score = 0.12). Based on this direct comparison, the 1-year prostate disease–specific model could be used independently to accurately determine survival duration in men with metastatic prostate cancer. However, predictive algorithms continue to improve with exposure to more data [29]. Therefore, we believe there is room for improvement by incorporating additional PATHFx variables, such as hemoglobin concentration and absolute lymphocyte count.

Although the classification ability of the prostate-specific models derived in this study is no better than that of the current PATHFx tool [12], we have developed 4 additional models that estimate survival at 3, 4, 5, and 10 years. Validation statistics and decision curve analysis indicate that these models are suitable for clinical use. Incorporating these prostate cancer–specific models into the PATHFx clinical support tool is part of our continued responsibility to provide accurate estimations of survival to help clinicians and patients navigate complex treatment algorithms. Unlike traditional statistical decision rules, the accuracy of machine learning–based models can be improved over time with better machine learning methods, more data, changes in practice, changes in the patient population, and/or better understanding of disease processes [29].

When evaluating the results of this study, its limitations must be considered. It is possible that other statistical techniques could be used to develop similar prognostic models for prostate cancer. Our author group has extensive experience using various machine learning techniques. Some techniques are prone to overfitting and produce overly optimistic results. Therefore, we implemented GBM with hyperparameter tuning to mitigate the risk of overfitting. Our study was limited by missing data, which can result in incomplete codification of train data and overfitting; however, we mitigated these effects by using a “holdout” validation set. Despite these results, external validation studies are necessary before these models can be recommended for use in other patient populations. Beyond APV, there may be other laboratory-related features to consider for use in the model; however, the data are not complete in the CPDR database. The number of features available for the model was a limitation. Only 31% of the CPDR data had APV data, so we restricted the data set to the 438 records with APV values. Nevertheless, we expect the models to continue to improve as more data become available.

Although the addition of APV to the models improved performance, we may see continued improvement in model performance by including additional demographic and laboratory-based patient data. For example, Stattin et al. [30] found that a panel of kallikrein marker (human kallikrein-related peptidase 2 [hK2] and total, free, and intact PSA) is strongly predictive of distant metastasis in men with modestly elevated PSA. As these data are collected and added to national and international prostate cancer registries, we could continue to augment the models for survival estimations. Additionally, different mechanisms exist to measure and categorize APV, and previously determined [4, 5, 25] cut points were used in this analysis. Furthermore, PSMA PET and bone scintigraphy have been shown to predict the survival of end-stage prostate cancer patients [31]. It possible that integrating APV into PATHFx with these imaging biomarkers may further strengthen survival estimates.

By including disease-specific information such as APV, we have developed a tool that helps predict survival duration in men with metastatic bone disease due to prostate cancer. Although external validation studies are required to support its use in other patient populations, these data justify inclusion of these models in the PATHFx tool. In addition, data used in the GBM model, including APV and proximal PSA, should be included in the International Bone Metastasis Registry.

Availability of data and materials

The datasets generated and/or analyzed during the current study are not publicly available due to Department of Defense regulations, but may be available from the corresponding author on reasonable request.

Abbreviations

- APV:

-

Alkaline Phosphatase Velocity

- AUC:

-

Area Under The Curve

- BF:

-

Bayes Factor

- CPDR:

-

Center for Prostate Disease Research

- GBM:

-

Gradient Boosting Machine

- LIME:

-

Local Interpretable Model–Agnostic Explanations

- PSA:

-

Prostate-Specific Antigen

- SRE:

-

Skeletal-Related Event

References

Siegel RL, Miller KD, Jemal A. Cancer statistics, 2019. CA Cancer J Clin. 2019;69(1):7–34.

Jemal A, Siegel R, Ward E, Hao Y, Xu J, Thun MJ. Cancer statistics, 2009. CA Cancer J Clin. 2009;59(4):225–49.

Markowski MC, Suzman D, Chen Y, Trock BJ, Cullen J, Feng Z, Antonarakis ES, Paller CJ, Han M, Partin AW, et al. PSA doubling time (PSADT) and proximal PSA value predict metastasis-free survival (MFS) in men with biochemically recurrent prostate cancer (BRPC) after radical prostatectomy (RP). J Clin Oncol. 2017;35(15):5075.

Salter CA, Cullen J, Kuo C, Chen Y, Hurwitz L, Metwalli AR, Dimitrakoff J, Rosner IL. Alkaline Phosphatase Kinetics Predict Metastasis among Prostate Cancer Patients Who Experience Relapse following Radical Prostatectomy. Biomed Res Int. 2018;2018:4727089.

Metwalli AR, Rosner IL, Cullen J, Chen Y, Brand T, Brassell SA, Lesperance J, Porter C, Sterbis J, McLeod DG. Elevated alkaline phosphatase velocity strongly predicts overall survival and the risk of bone metastases in castrate-resistant prostate cancer. Urol Oncol. 2014;32(6):761–8.

Musculoskeletal Tumor Society (MSTS), American Society for Radiation Oncology (ASTRO), American Society of Clinical Oncology (ASCO): The Treatment of Metastatic Carcinoma and Myeloma of the Femur: Clinical Practice Guideline. Available at:https://www.astro.org/ASTRO/media/ASTRO/Patient%20Care%20and%20Research/PDFs/MSTSBonemetsGLPC.pdf. Accessed on 30 Apr 2021.

Forsberg JA, Eberhardt J, Boland PJ, Wedin R, Healey JH. Estimating survival in patients with operable skeletal metastases: An application of a bayesian belief network. PLoS One. 2011;6(5):e19956.

Forsberg JA, Sjoberg D, Chen QR, Vickers A, Healey JH. Treating metastatic disease: Which survival model is best suited for the clinic? Clin Orthop. 2013;471(3):843–50.

Forsberg JA, Wedin R, Bauer HC, Hansen BH, Laitinen M, Trovik CS, Keller JO, Boland PJ, Healey JH. External validation of the Bayesian Estimated Tools for Survival (BETS) models in patients with surgically treated skeletal metastases. BMC Cancer. 2012;12:493.

Meares C, Badran A, Dewar D. Prediction of survival after surgical management of femoral metastatic bone disease - A comparison of prognostic models. J Bone Oncol. 2019;15:100225.

Ogura K, Gokita T, Shinoda Y, Kawano H, Takagi T, Ae K, Kawai A, Wedin R, Forsberg JA. Can a multivariate model for survival estimation in skeletal metastases (PATHFx) be externally validated using Japanese patients? Clin Orthop. 2017;475(9):2263–70.

Anderson AB, Wedin R, Fabbri N, Boland P, Healey J, Forsberg JA. External Validation of PATHFx Version 3.0 in Patients Treated Surgically and Nonsurgically for Symptomatic Skeletal Metastases. Clin Orthop. 2020;478(4):808–18.

Collins GS, Reitsma JB, Altman DG, Moons KG. Transparent Reporting of a multivariable prediction model for Individual Prognosis or Diagnosis (TRIPOD): the TRIPOD statement. Ann Intern Med. 2015;162(1):55–63.

Luo W, Phung D, Tran T, Gupta S, Rana S, Karmakar C, Shilton A, Yearwood J, Dimitrova N, Ho TB, et al. Guidelines for Developing and Reporting Machine Learning Predictive Models in Biomedical Research: A Multidisciplinary View. J Med Internet Res. 2016;18(12):e323.

Brassell SA, Dobi A, Petrovics G, Srivastava S, McLeod D. The Center for Prostate Disease Research (CPDR): a multidisciplinary approach to translational research. Urol Oncol. 2009;27(5):562–9.

Goodman SN. Toward evidence-based medical statistics. 1: The P value fallacy. Ann Intern Med. 1999;130(12):995–1004.

Goodman SN. Introduction to Bayesian methods I: measuring the strength of evidence. Clin Trials. 2005;2(4):282–90 (discussion 301-284, 364-278).

Natekin A, Knoll A. Gradient boosting machines, a tutorial. Front Neurorobot. 2013;7:21.

Friedman JH. Greedy function approximation: A gradient boosting machine. Ann Statist. 2001;29(5):1189–232.

Ribeiro MT, Singh S, Guestrin C: Model-Agnostic Interpretability of Machine Learning. Available at https://arxiv.org/abs/1606.05386. Accessed on Mar 16. In.; 2020.

Brier GW. Verification of Forecasts Expressed in Terms of Probability. Mon Weather Rev. 1950;78(1):1–3.

Assel M, Sjoberg DD, Vickers AJ. The Brier score does not evaluate the clinical utility of diagnostic tests or prediction models. Diagn Progn Res. 2017;1:19.

Vickers AJ, Van Calster B, Steyerberg EW. Net benefit approaches to the evaluation of prediction models, molecular markers, and diagnostic tests. BMJ. 2016;352:i6.

Vickers AJ, Elkin EB. Decision curve analysis: a novel method for evaluating prediction models. Med Decis Making. 2006;26(6):565–74.

Hammerich KH, Donahue TF, Rosner IL, Cullen J, Kuo HC, Hurwitz L, Chen Y, Bernstein M, Coleman J, Danila DC, et al. Alkaline phosphatase velocity predicts overall survival and bone metastasis in patients with castration-resistant prostate cancer. Urol Oncol. 2017;35(7):460 e421-460 e428.

Newman LA, Kaljee LM. Health Disparities and Triple-Negative Breast Cancer in African American Women: A Review. JAMA Surg. 2017;152(5):485–93.

Leopold SS, Beadling L, Calabro AM, Dobbs MB, Gebhardt MC, Gioe TJ, Manner PA, Porcher R, Rimnac CM, Wongworawat MD. Editorial: The Complexity of Reporting Race and Ethnicity in Orthopaedic Research. Clin Orthop. 2018;476(5):917–20.

Cullen J, Brassell SA, Chen Y, Porter C, L’Esperance J, Brand T, McLeod DG. Racial/Ethnic patterns in prostate cancer outcomes in an active surveillance cohort. Prostate Cancer. 2011;2011:234519.

Liu Y, Chen PC, Krause J, Peng L. How to Read Articles That Use Machine Learning: Users’ Guides to the Medical Literature. JAMA. 2019;322(18):1806–16.

Stattin P, Vickers AJ, Sjoberg DD, Johansson R, Granfors T, Johansson M, Pettersson K, Scardino PT, Hallmans G, Lilja H. Improving the Specificity of Screening for Lethal Prostate Cancer Using Prostate-specific Antigen and a Panel of Kallikrein Markers: A Nested Case-Control Study. Eur Urol. 2015;68(2):207–13.

Seifert R, Herrmann K, Kleesiek J, Schäfers M, Shah V, Xu Z, et al. Semiautomatically Quantified Tumor Volume Using 68Ga-PSMA-11 PET as a Biomarker for Survival in Patients with Advanced Prostate Cancer. J Nuclear Med. 2020;61:1786–92. https://doi.org/10.2967/jnumed.120.242057.

Acknowledgements

We would like to acknowledge the Prostate Cancer Research Program for arranging helpful institutional support and thank Darryl Nousome, MPH, PhD, for his contributions. For their editorial assistance, we thank Jenni Weems, MS, Kerry Kennedy, BA, and Rachel Box, MS, in the Editorial Services group of The Johns Hopkins Department of Orthopaedic Surgery.

Funding

This was funded through CDMRP to USUHS. 2016 Defense Medical Research and Development Program Clinical Research Intramural Initiative—Precision Medicine Research Award Log# DM160500; USU Project #: SUR-90–4284; HJF Cooperative Agreement # HU0001-17–2-0027; HJF Award #: 65284–309263-1.00.

Author information

Authors and Affiliations

Contributions

Study design (A.B.A., C.G., R.W., J.A.F.); analysis (A.B.A., C.G., R.W., C.K., J.C., J.A.F.); data collection (C.K., B.R.C.); writing (A.B.A., C.G., R.W., J.C., J.A.F.); revision (A.B.A., C.G., R.W., C.K., Y.C., J.C., J.A.F.); obtaining funding (J.C., J.A.F.); study concept and supervision (J.A.F.). The author(s) read and approved the final manuscript.

Corresponding author

Ethics declarations

Ethics approval and consent to partcipate

The study was approved by the Institutional Review Board of the Uniformed Services University of Health Sciences. Protocol #SUR-90–4284. All methods were carried out in accordance with relevant guidelines and regulations. The Institutional Review Board of the Uniformed Services University of Health Sciences waived informed consent.

Consent for publication

Not applicable.

Competing interests

One author (JAF) is a paid consultant to the Solsidan Group, LLC, Maryland, USA and two authors (RW and JAF) are minority shareholders in Prognostix AB, Stockholm, Sweden. All other authors report no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

The views expressed are those of the author and do not reflect the official policy of the Department of the Army/Navy/Air Force, Department of Defense, or U.S. Government.

Location of Work: The work was performed at Walter Reed National Military Medical Center and Center for Prostate Disease Research.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Anderson, A.B., Grazal, C., Wedin, R. et al. Machine learning algorithms to estimate 10-Year survival in patients with bone metastases due to prostate cancer: toward a disease-specific survival estimation tool. BMC Cancer 22, 476 (2022). https://doi.org/10.1186/s12885-022-09491-7

Received:

Accepted:

Published:

DOI: https://doi.org/10.1186/s12885-022-09491-7