Abstract

Background

Several machine learning (ML) classifiers for thyroid nodule diagnosis were compared in terms of accuracy, sensitivity, specificity, negative predictive value (NPV), positive predictive value (PPV), and area under the receiver operating characteristic curve (AUC). A total of 525 patients with thyroid nodules (malignant, n = 228; benign, n = 297) underwent conventional ultrasonography, strain elastography, and contrast-enhanced ultrasound. Six algorithms were compared: support vector machine (SVM), linear discriminant analysis (LDA), random forest (RF), logistic regression (LG), GlmNet, and K-nearest neighbors (K-NN). The diagnostic performance of 13 suspicious sonographic features for discriminating benign from malignant thyroid nodules was assessed using each ML algorithm. To compare the algorithms, a 10-fold cross-validation paired t-test was applied to the differences in algorithm performance.

Results

The logistic regression algorithm had better diagnostic performance than the other ML algorithms. However, its performance was only slightly higher than that of GlmNet, LDA, and RF. The accuracy, sensitivity, specificity, NPV, PPV, and AUC obtained with logistic regression were 86.48%, 83.33%, 88.89%, 87.42%, 85.20%, and 92.84%, respectively.

Conclusions

The experimental results indicate that GlmNet, SVM, LDA, LG, K-NN, and RF exhibit slight differences in classification performance.

Background

The reported incidence of thyroid nodules is high following the widespread use of high-resolution ultrasound (US) in clinical practice. Ultrasonography plays an important role in the diagnosis of thyroid nodules because it is noninvasive, economical, and convenient. Most thyroid nodules are benign; however, differentiating malignant from benign nodules is challenging because early clinical symptoms are hidden [1, 2]. Known suspicious US features of thyroid nodules relate to margins, borders, calcification, and shape [3, 4]. In this paper, we chose 13 features, including conventional US features and features based on new imaging techniques such as strain elastosonography (SE) and contrast-enhanced ultrasound (CEUS); see the Materials section for details.

Machine learning (ML) is one of the fastest-developing fields in computer science. With the development of artificial intelligence, ML serves as a useful reference tool for classification.

Several types of classifiers are used in ML. The support vector machine (SVM), random forest (RF), logistic regression, GlmNet, linear discriminant analysis (LDA), and K-nearest neighbors (K-NN) are among the most common classifiers.

The original SVM was proposed by Vapnik and Chervonenkis in 1963. The current standard version was proposed by Cortes and Vapnik in 1993. SVM is a core machine-learning technique for a variety of classification and regression problems; it produces nonlinear boundaries by constructing a linear boundary in a large, transformed version of the feature space [5]. SVM has been applied to many types of problems, such as object recognition, handwritten digit recognition, and image and text classification. The general form of the decision function f(x) for SVM is

\[f(x) = \sum_{i} \alpha_i y_i k(x, x_i) + b\]

where k(x, xi) is the kernel function, b is the bias, 0 ≤ αi ≤ C, and Σ(αiyi) = 0. Here, αi is obtained through training, and C is a penalty parameter set by the user [5,6,7]. In this study, the Gaussian kernel function \(k_{\gamma}(X, X^{\prime}) = \exp(-\gamma \|X - X^{\prime}\|^{2})\) was used to handle nonlinear classification [5]. The SVM with a Gaussian kernel was implemented in MATLAB using LIBSVM, a publicly available library for SVMs.

Figure 1 shows the architecture of an SVM, where x = [x1, x2, …, xn] is an n-dimensional input feature vector and y is the decision value.
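As a minimal sketch of how a decision value is obtained from a Gaussian kernel, the following Python snippet evaluates f(x) for a toy model. The support vectors, the coefficients αi, the bias b, the value of γ, and the +1/−1 label coding are all illustrative assumptions; they are not values fitted in this study, which used LIBSVM in MATLAB.

```python
import math

def gaussian_kernel(x, z, gamma):
    """Gaussian (RBF) kernel: exp(-gamma * ||x - z||^2)."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, z))
    return math.exp(-gamma * sq_dist)

def svm_decision_value(x, support_vectors, labels, alphas, bias, gamma):
    """Kernel SVM decision value: sum_i alpha_i * y_i * k(x, x_i) + b."""
    return bias + sum(
        a * y * gaussian_kernel(x, sv, gamma)
        for sv, y, a in zip(support_vectors, labels, alphas)
    )

# Hypothetical toy model on three binary features (not from the paper).
support_vectors = [(1, 1, 0), (0, 0, 1)]
labels = [+1, -1]      # assumed coding: +1 = malignant, -1 = benign
alphas = [0.8, 0.8]    # chosen so that sum(alpha_i * y_i) = 0
bias, gamma = 0.0, 0.5

f = svm_decision_value((1, 1, 0), support_vectors, labels, alphas, bias, gamma)
predicted = +1 if f >= 0 else -1   # the sign of f(x) gives the class
```

In this toy case the query coincides with the first support vector, so the first kernel term equals 1 and the second is damped by the squared distance of 3 between the two vectors.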

RFs were first proposed by Breiman and Cutler. RF is a versatile machine-learning algorithm that can perform regression, classification, and dimensionality reduction. A random forest is a combination of decision trees, where each tree depends on the values of a random vector sampled independently [8]. For many problems, the performance of random forests is quite similar to that of bootstrap aggregating (bagging); it depends on the strength of the individual trees in the forest and the correlation between them [5]. The steps of the algorithm are as follows:

- N samples are randomly drawn with replacement from the dataset.
- m features are randomly sampled from all the features, and a splitting strategy (CART) is used to select one of the m features as the split attribute of the node.
- The above two steps are repeated n times to generate n decision trees, which form the random forest.
- For new data, each tree makes a decision, and the majority vote is taken as the final category.
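The steps above can be sketched in plain Python as follows. This is a simplified illustration under stated assumptions, not the implementation used in this study: each "tree" is a single-split stump on binary features chosen by Gini impurity (a stand-in for a full CART tree), and the toy data, function names, and parameter values are hypothetical.

```python
import random
from collections import Counter

def gini(labels):
    """Gini impurity of a list of 0/1 labels."""
    if not labels:
        return 0.0
    p = sum(labels) / len(labels)
    return 2 * p * (1 - p)

def fit_stump(X, y, feature_ids):
    """Choose the candidate binary feature with the lowest weighted Gini impurity."""
    def split_impurity(j):
        left = [t for row, t in zip(X, y) if row[j] == 0]
        right = [t for row, t in zip(X, y) if row[j] == 1]
        return (len(left) * gini(left) + len(right) * gini(right)) / len(y)

    best = min(feature_ids, key=split_impurity)
    majority = Counter(y).most_common(1)[0][0]
    leaf = {}
    for value in (0, 1):
        side = [t for row, t in zip(X, y) if row[best] == value]
        # An empty branch falls back to the overall majority class.
        leaf[value] = Counter(side).most_common(1)[0][0] if side else majority
    return best, leaf

def fit_forest(X, y, n_trees, m, seed=0):
    rng = random.Random(seed)
    forest = []
    for _ in range(n_trees):                                   # step 3: repeat n times
        idx = [rng.randrange(len(X)) for _ in range(len(X))]   # step 1: bootstrap sample
        feats = rng.sample(range(len(X[0])), m)                # step 2: m random features
        forest.append(fit_stump([X[i] for i in idx], [y[i] for i in idx], feats))
    return forest

def predict(forest, row):
    votes = [leaf[row[j]] for j, leaf in forest]               # step 4: majority vote
    return Counter(votes).most_common(1)[0][0]

# Hypothetical toy data: feature 0 matches the label, the others are noise.
X = [(1, 0, 1), (1, 1, 0), (1, 0, 0), (0, 1, 1), (0, 0, 1), (0, 1, 0)]
y = [1, 1, 1, 0, 0, 0]
forest = fit_forest(X, y, n_trees=25, m=2)
prediction = predict(forest, (1, 0, 0))
```

Restricting each split to m randomly chosen features is what decorrelates the trees; with m equal to the total number of features the procedure reduces to plain bagging.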

K-nearest neighbors (K-NN) is memory-based and requires no preprocessing of the samples and no model to be fit [5, 9]. Given a query point x0, the k training points closest to x0 are found, and x0 is then classified by majority vote among these k neighbors [5]. The decision rule is defined as follows:

\[\hat{Y}(X) = \frac{1}{k} \sum_{x_i \in N_k(X)} y_i\]

where Nk(X) is the neighborhood of X, defined by the k training points closest to X, and yi is the label of xi.
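A minimal Python sketch of this rule is given below. Squared Euclidean distance is an assumption (the distance metric is not stated in the text), and the training points are hypothetical.

```python
from collections import Counter

def knn_predict(x0, X_train, y_train, k):
    """Classify x0 by majority vote among its k nearest training points."""
    def sq_dist(a, b):
        return sum((p - q) ** 2 for p, q in zip(a, b))

    # sorted() is stable, so equidistant points keep their input order.
    order = sorted(range(len(X_train)), key=lambda i: sq_dist(x0, X_train[i]))
    neighbors = order[:k]                     # indices of the neighborhood N_k(x0)
    votes = Counter(y_train[i] for i in neighbors)
    return votes.most_common(1)[0][0]

# Hypothetical toy data with two binary features.
X_train = [(0, 0), (0, 1), (1, 0), (1, 1), (1, 1)]
y_train = [0, 0, 0, 1, 1]
label = knn_predict((1, 1), X_train, y_train, k=3)
```

With binary features many training points are equidistant from the query, so the tie-breaking order can affect which points enter the neighborhood; an odd k at least avoids tied votes in the two-class case.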

Logistic regression is a generalized linear regression model and is the most common algorithm used in binary classification problems. The decision function of the logistic regression is

where sigmoid(·) is the activation function and x is the matrix of the input data. The predicted label is 1 if Z ≥ 0.5 and 0 if Z < 0.5.
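The thresholding rule can be illustrated with a short Python sketch. It assumes the common form Z = sigmoid(w·x + b); the weight vector w, the bias b, and their values are hypothetical and are not taken from this study.

```python
import math

def sigmoid(t):
    """Logistic function mapping a real number to (0, 1)."""
    return 1.0 / (1.0 + math.exp(-t))

def predict_label(x, weights, bias, threshold=0.5):
    """Return (label, Z), where Z = sigmoid(w . x + b) and label = 1 if Z >= threshold."""
    z = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)
    return (1 if z >= threshold else 0), z

# Hypothetical coefficients for three binary features.
weights = [1.2, 0.7, -0.4]
bias = -1.0

label, z = predict_label([1, 1, 0], weights, bias)
```

Because sigmoid(0) = 0.5, thresholding Z at 0.5 is equivalent to checking whether the linear score w·x + b is non-negative.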

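GlmNet fits a generalized linear model by penalized maximum likelihood. For binary classification, GlmNet solves the following penalized binomial log-likelihood problem:

\[\min_{\beta_0, \beta} \; -\frac{1}{N} \sum_{i=1}^{N} \left[ y_i (\beta_0 + x_i^{T}\beta) - \log\left(1 + e^{\beta_0 + x_i^{T}\beta}\right) \right] + \lambda P_{\alpha}(\beta)\]

where

\[P_{\alpha}(\beta) = \frac{1 - \alpha}{2} \|\beta\|_2^{2} + \alpha \|\beta\|_1\]

Here, N is the number of samples, yi ∈ {0, 1} is the label of sample xi, β0 and β are the intercept and coefficients, α is the mixing factor, λ is the regularization parameter, and Pα(β) is the elastic net penalty. The model reduces to ridge regression when α = 0 and to lasso regression when α = 1.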
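To make the role of the mixing factor concrete, the sketch below evaluates the elastic net penalty in the form documented for glmnet, (1 − α)/2 · ||β||₂² + α · ||β||₁, which is assumed here. The coefficient vector is hypothetical.

```python
def elastic_net_penalty(beta, alpha):
    """Elastic net penalty: (1 - alpha)/2 * ||beta||_2^2 + alpha * ||beta||_1."""
    l1 = sum(abs(b) for b in beta)
    l2_squared = sum(b * b for b in beta)
    return (1 - alpha) / 2 * l2_squared + alpha * l1

# Hypothetical coefficient vector (intercept excluded, as it is not penalized).
beta = [0.5, -1.0, 0.0, 2.0]

ridge = elastic_net_penalty(beta, alpha=0.0)   # pure ridge: half the squared L2 norm
lasso = elastic_net_penalty(beta, alpha=1.0)   # pure lasso: the L1 norm
mixed = elastic_net_penalty(beta, alpha=0.5)   # equal blend of the two
```

In the full objective this value is multiplied by λ, so α controls the shape of the penalty while λ controls its overall strength.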

LDA is widely used for dimensionality reduction and data classification. The principle of LDA is to project the labeled data into a lower-dimensional space so that the projected points can be easily distinguished and points of the same category lie closer together in the projected space. In other words, LDA maximizes the between-class distance and minimizes the within-class distance [10]. The mapping function is

where X is the dataset to be categorized. The original central point of Category i is

where Di represents the set of points belonging to category i and n is the number of Di.

The variance before the projection of category i is

The central point after the projection of category i is:

The variance after the projection of Category i is

where Yi is the data set after Di mapping.

Assuming that there are two categories in the dataset, the loss function is

where \(S_B^{2} = (m_1 - m_2)^{2}\) and \(S_w^{2} = S_1^{2} + S_2^{2}\).

The goal is to find the W that maximizes J(W).
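For reference, the standard two-class Fisher formulation that is consistent with the quantities named above can be written as follows. This is a sketch under assumptions: the symbols \(\mu_i\) and \(\Sigma_i\) for the center and scatter before projection, and the unnormalized form of the scatter, are introduced here for illustration, while \(m_i\), \(S_i^2\), \(S_B^2\), \(S_w^2\), and \(J(W)\) follow the text.

```latex
\begin{aligned}
Y &= W^{T} X, \\
\mu_i &= \frac{1}{n} \sum_{x \in D_i} x, \qquad
\Sigma_i = \sum_{x \in D_i} (x - \mu_i)(x - \mu_i)^{T}, \\
m_i &= \frac{1}{n} \sum_{y \in Y_i} y = W^{T} \mu_i, \qquad
S_i^{2} = \sum_{y \in Y_i} (y - m_i)^{2} = W^{T} \Sigma_i W, \\
J(W) &= \frac{S_B^{2}}{S_w^{2}} = \frac{(m_1 - m_2)^{2}}{S_1^{2} + S_2^{2}}.
\end{aligned}
```

Under this formulation, J(W) is maximized by any W proportional to \((\Sigma_1 + \Sigma_2)^{-1}(\mu_1 - \mu_2)\), provided that \(\Sigma_1 + \Sigma_2\) is invertible.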

The motivation behind this study is to develop a better understanding of the classification process; to evaluate known classifiers in terms of accuracy, sensitivity, specificity, NPV, PPV, and AUC; and to analyze their weaknesses and strengths in differentiating malignant from benign nodules. These issues are important for the application of ML classifiers in thyroid research and for clinicians and researchers who would like to understand the classification process and its analysis.

Results

The performance of the classifiers is summarized in Table 1. Logistic regression performed relatively well and achieved the highest accuracy (86.48%), the best classification performance among the six classifiers. However, the differences in performance among the six classifiers were only slight.
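As a worked example of how the threshold-based metrics relate to a confusion matrix, the Python sketch below computes them from four counts. The counts are an assumption: they are not reported in this paper and were chosen only because, with 228 malignant and 297 benign nodules, they reproduce the percentages reported for logistic regression. AUC is omitted because it requires the continuous classifier scores.

```python
def diagnostic_metrics(tp, fn, tn, fp):
    """Threshold-based diagnostic metrics from confusion-matrix counts."""
    return {
        "accuracy": (tp + tn) / (tp + fn + tn + fp),
        "sensitivity": tp / (tp + fn),   # true positive rate
        "specificity": tn / (tn + fp),   # true negative rate
        "ppv": tp / (tp + fp),           # positive predictive value
        "npv": tn / (tn + fn),           # negative predictive value
    }

# Assumed counts (not reported in the paper): 190 + 38 = 228 malignant nodules,
# 264 + 33 = 297 benign nodules.
metrics = diagnostic_metrics(tp=190, fn=38, tn=264, fp=33)
percent = {name: round(100 * value, 2) for name, value in metrics.items()}
```

With these counts the rounded values are 86.48 (accuracy), 83.33 (sensitivity), 88.89 (specificity), 85.2 (PPV), and 87.42 (NPV). If Table 1 reports averages over folds, the pooled counts could differ from this illustration.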

A statistical test was applied to the differences in classifier performance to compare the classifiers quantitatively [11]. A 10-fold cross-validation paired t-test was applied to each pair of classifiers at a significance level of 0.05; two classifiers were considered significantly different when the p-value was < 0.05. Table 2 shows the p-values of the paired t-tests. The results indicate no significant differences among the six classifiers.
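The paired t-test over cross-validation folds can be sketched as follows. The per-fold accuracies are hypothetical, and instead of computing a p-value the sketch compares the statistic with 2.262, the two-sided 0.05 critical value of Student's t distribution with 9 degrees of freedom (10 folds minus 1).

```python
import math

def paired_t_statistic(scores_a, scores_b):
    """t statistic of the per-fold differences between two classifiers."""
    diffs = [a - b for a, b in zip(scores_a, scores_b)]
    k = len(diffs)
    mean = sum(diffs) / k
    variance = sum((d - mean) ** 2 for d in diffs) / (k - 1)   # sample variance
    return mean / math.sqrt(variance / k)

# Hypothetical accuracies of two classifiers on the same 10 folds.
acc_a = [0.87, 0.85, 0.88, 0.84, 0.86, 0.89, 0.85, 0.87, 0.86, 0.88]
acc_b = [0.86, 0.86, 0.87, 0.85, 0.85, 0.88, 0.86, 0.86, 0.87, 0.87]

t = paired_t_statistic(acc_a, acc_b)
T_CRITICAL = 2.262            # two-sided, alpha = 0.05, 9 degrees of freedom
significant = abs(t) > T_CRITICAL
```

The test is paired because both classifiers are evaluated on the same folds, so fold-to-fold variation in difficulty cancels in the differences. The sketch does not handle the degenerate case in which all differences are identical, where the variance is zero.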

Discussion

In this analysis, cross-validation and the paired t-test were applied to tune parameters and to assess differences in classifier performance, respectively. The experimental results indicate that GlmNet, SVM, LDA, logistic regression, K-NN, and random forests exhibit only slight differences in classification performance. This result may stem from our data, as all variables and labels are binary.

In clinical research, many classifiers are available for real applications, and it is useful for clinicians to be able to select an optimal one. Our comprehensive comparison study is an effort to help clinicians with such real problems.

Conclusions

The strength of this study is that 13 features, comprising gender, SE, and CEUS in combination with 10 conventional US features, were used to compare different classifiers in the diagnosis of malignant and benign disease. This study had a few limitations. First, the sample size was small and the study was retrospective; the established model requires further research to validate and support it, and large-sample studies are expected to be performed in the future. Second, the data in this study were binary. Finally, applying new methods such as deep learning to other types of data may be a good approach for thyroid nodule diagnosis [12, 13].

Materials and method

Materials

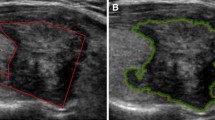

A database of 525 patients (396 females and 129 males) who underwent conventional US, SE, and CEUS at Shenzhen Second People’s Hospital was retrospectively reviewed. The patients were subdivided into two groups based on the final pathology results: those with benign thyroid nodules (n = 297) and those with malignancy (n = 228). We chose 13 features based on our clinical experience, using as much of the data available from our current imaging equipment as possible; all features are listed in Table 3. In this study, the 10 conventional US features of malignancy were: irregular margins, ill-defined borders, taller-than-wide shapes, hypoechogenicity or marked hypoechogenicity, microcalcification, posterior echo attenuation, peripheral acoustic halo, interrupted thyroid capsule, central vascularity, and suspected cervical lymph node metastasis. The images were selected according to clinical experience.

SE is an advanced technology used to evaluate tissue elasticity through the action of an external force. Under the same conditions, soft materials are more distorted than hard materials [2]. The degree of distortion under an external force was used to evaluate tissue hardness. Based on the fact that benign thyroid nodules are softer than malignant nodules, SE is used to differentiate benign from malignant nodules [2].

The SE score was based on Xu’s scoring system [14] as follows: Score 1: the nodule is predominantly white; Score 2: the nodule is predominantly white with a few black portions; Score 3: the nodule is equally white and black; Score 4: the nodule is predominantly black with a few white spots; Score 5: the nodule is almost completely black; and Score 6: the nodule is completely black without white spots. A nodule was considered malignant if the score was greater than 4.

CEUS is a new technique in which microbubbles, which are smaller than erythrocytes, are infused into the blood capillaries. Owing to the ultrasound scattering produced by the microbubbles in the capillaries, CEUS can estimate the blood perfusion features of thyroid nodules to evaluate angiogenesis [2].

By comparing the echogenicity brightness of the thyroid nodule with that of the surrounding parenchyma at peak enhancement, the degree of enhancement was classified as hypo-, iso-, hyper-, or no enhancement. According to the echogenicity intensity within the thyroid nodule, the enhancement was classified as homogeneous or heterogeneous. A nodule was regarded as malignant if the pattern of enhancement was heterogeneous hypoenhancement.
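The two imaging rules described above (SE score greater than 4; heterogeneous hypoenhancement on CEUS) can be encoded as binary features, as in the sketch below. The function names and the string codes for the enhancement categories are hypothetical and are not taken from this study.

```python
def se_suspicious(score):
    """Binary SE feature: 1 if the elastography score (1 to 6) is greater than 4."""
    if score not in range(1, 7):
        raise ValueError("SE score must be an integer from 1 to 6")
    return int(score > 4)

def ceus_suspicious(degree, homogeneity):
    """Binary CEUS feature: 1 only for heterogeneous hypoenhancement."""
    # Assumed codes: degree in {"hypo", "iso", "hyper", "none"},
    # homogeneity in {"homogeneous", "heterogeneous"}.
    return int(degree == "hypo" and homogeneity == "heterogeneous")

# Example nodule with SE score 5 and heterogeneous hypoenhancement.
features = [se_suspicious(5), ceus_suspicious("hypo", "heterogeneous")]
```

Encoding each rule as 0 or 1 matches the binary nature of the variables noted in the Discussion.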

Method

All statistical analysis in this study was conducted using MATLAB software, version R2015a.

Different classifiers had different tuning parameters. The LDA and logistic regression classifiers had no tunable parameters. RF had two parameters: the number of randomly selected variables (m) and the number of decision trees (ntree). Because ntree was fixed at its default value of 500, m was the only tunable RF parameter in this study. The tunable parameter of K-NN was the number of neighbors (K). SVM and GlmNet each had two tunable parameters: the Gaussian kernel parameter (γ) and the penalty coefficient (C) for SVM, and the mixing factor (α) and the regularization parameter (λ) for GlmNet.

In this study, five-fold cross-validation was used to tune the classifier parameters. In each fold of the 10-fold cross-validation, the optimal tunable parameters were selected from a grid of values by five-fold cross-validation on the training data, maximizing classification accuracy. Table 4 provides the grid of parameter values from which the optimal parameters were chosen. Classifier performance, including sensitivity, specificity, accuracy, PPV, NPV, and AUC, was evaluated using 10-fold cross-validation.
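The nested procedure (10 outer folds for evaluation, five inner folds for tuning) can be sketched as follows. This is an illustration under assumptions rather than the MATLAB code used in this study: folds are formed by simple striding without shuffling or stratification, and `evaluate` is a hypothetical caller-supplied function that trains on one index set with the given parameters and returns the accuracy on another.

```python
def k_fold_indices(indices, k):
    """Split a list of indices into k disjoint folds of nearly equal size."""
    return [indices[i::k] for i in range(k)]

def nested_cv(n_samples, grid, evaluate, outer_k=10, inner_k=5):
    """Mean outer-fold score, with parameters tuned by inner cross-validation."""
    all_idx = list(range(n_samples))
    outer_scores = []
    for test_idx in k_fold_indices(all_idx, outer_k):
        held_out = set(test_idx)
        train_idx = [i for i in all_idx if i not in held_out]

        def inner_score(params):
            # Mean validation accuracy over the inner folds of the training data.
            scores = []
            for val_idx in k_fold_indices(train_idx, inner_k):
                val = set(val_idx)
                fit_idx = [i for i in train_idx if i not in val]
                scores.append(evaluate(fit_idx, val_idx, params))
            return sum(scores) / inner_k

        best = max(grid, key=inner_score)          # grid search on training data only
        outer_scores.append(evaluate(train_idx, test_idx, best))
    return sum(outer_scores) / outer_k

# Dummy evaluator for illustration: accuracy peaks when the parameter equals 3.
def dummy_evaluate(train_idx, test_idx, params):
    return 1.0 - abs(params - 3) / 10

score = nested_cv(525, grid=[1, 3, 5], evaluate=dummy_evaluate)
fold_sizes = sorted(len(f) for f in k_fold_indices(list(range(525)), 10))
```

Tuning inside each outer training set keeps the held-out fold unseen during parameter selection, so the outer score is not biased by the tuning step. With 525 samples, the 10 outer folds contain 52 or 53 samples each.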

Availability of data and materials

The datasets used and analysed during the current study are available from the corresponding author on reasonable request.

Abbreviations

- ML: Machine learning
- US: Conventional ultrasonography
- SE: Strain elastosonography
- CEUS: Contrast-enhanced ultrasound
- SVM: Support vector machine
- LDA: Linear discriminant analysis
- RF: Random forest
- LG: Logistic regression
- K-NN: K-nearest neighbors
- NPV: Negative predictive value
- PPV: Positive predictive value
- AUC: Area under the receiver operating characteristic curve

References

1. Batawil N, Alkordy T. Ultrasonographic features associated with malignancy in cytologically indeterminate thyroid nodules. Eur J Surg Oncol. 2014;40(2):182–6.

2. Pang T, Huang L, Deng Y, Wang T, Chen S, Gong X, Liu W. Logistic regression analysis of conventional ultrasonography, strain elastosonography, and contrast-enhanced ultrasound characteristics for the differentiation of benign and malignant thyroid nodules. PLoS One. 2017;12(12):e0188987.

3. Zhao RN, Zhang B, Yang X, Jiang YX, Lai XJ, Zhang XY. Logistic regression analysis of contrast-enhanced ultrasound and conventional ultrasound characteristics of sub-centimeter thyroid nodules. Ultrasound Med Biol. 2015;41(12):3102–8.

4. Chng CL, Kurzawinski TR, Beale T. Value of sonographic features in predicting malignancy in thyroid nodules diagnosed as follicular neoplasm on cytology. Clin Endocrinol. 2015;83(5):711.

5. Franklin J. The elements of statistical learning: data mining, inference and prediction. Publ Am Stat Assoc. 2010;99(466):567–567.

6. Drucker H, Burges CJC, Kaufman L, Smola AJ, Vapnik V. Support vector regression machines. Adv Neural Inf Process Syst. 1997;28(7):779–84.

7. Cortes C, Vapnik V. Support-vector networks. Mach Learn. 1995;20(3):273–97.

8. Breiman L. Random forests. Mach Learn. 2001;45(1):5–32.

9. Cover T, Hart P. Nearest neighbor pattern classification. IEEE Trans Inf Theory. 1967;13(1):21–7.

10. Hastie T, Tibshirani R, Friedman J. The Elements of Statistical Learning. New York: Springer; 2009.

11. Hothorn T, Hornik K, Zeileis A. Unbiased recursive partitioning: a conditional inference framework. J Comput Graph Stat. 2006;15(3):651–74.

12. Zhu YC, AlZoubi A, Jassim S, Jiang Q, Zhang Y, Wang YB, Ye XD, Du H. A generic deep learning framework to classify thyroid and breast lesions in ultrasound images. Ultrasonics. 2021;110:106300. https://doi.org/10.1016/j.ultras.2020.106300.

13. Zhu YC, Jin PF, Bao J, Jiang Q, Wang X. Thyroid ultrasound image classification using a convolutional neural network. Ann Transl Med. 2021;9(20):1526. https://doi.org/10.21037/atm-21-4328.

14. Zhang YF, He Y, Xu HX, Xu XH, Liu C, Guo LH, Liu LN, Xu JM. Virtual touch tissue imaging on acoustic radiation force impulse elastography. J Ultrasound Med. 2014;33(4):585–95.

Acknowledgements

Not applicable.

Funding

This work was supported in part by Grants JCYJ20140414170821285 and JCYJ20150529164154046 from Shenzhen Science and Technology Innovation Committee and JCYJ20160422113119640 from Shenzhen Fundamental Research Project.

Author information

Contributions

Conceptualization, Weixiang Liu, Jianguang Liang and Xianfen Diao; Methodology, Tiantian Pang and Xiaogang Li; Validation, Tiantian Pang, Xianfen Diao, Jianguang Liang and Xiaogang Li; Formal Analysis, Tiantian Pang; Investigation, Leidan Huang and Xuehao Gong; Data Curation, Xuehao Gong and Leidan Huang; Writing–Original Draft Preparation, Tiantian Pang.

Ethics declarations

Ethics approval and consent to participate

All subjects gave their informed consent for inclusion before they participated in the study. The study was conducted in accordance with the Declaration of Helsinki, and the protocol was approved by the Ethics Committee of Shenzhen Second People’s Hospital.

Consent for publication

Not applicable.

Competing interests

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Liang, J., Pang, T., Liu, W. et al. Comparison of six machine learning methods for differentiating benign and malignant thyroid nodules using ultrasonographic characteristics. BMC Med Imaging 23, 154 (2023). https://doi.org/10.1186/s12880-023-01117-z
