Abstract

Background

Evidence-Based Medicine (EBM) integrates best available evidence from literature and patients’ values, which then informs clinical decision making. However, there is a lack of validated instruments to assess the knowledge, practice and barriers of primary care physicians in the implementation of EBM. This study aimed to develop and validate an Evidence-Based Medicine Questionnaire (EBMQ) in Malaysia.

Methods

The EBMQ was developed based on a qualitative study, a literature review and an expert panel. Face and content validity were verified by the expert panel, and the questionnaire was piloted among 10 participants. Primary care physicians with or without EBM training who could understand English were recruited from December 2015 to January 2016. The EBMQ was administered at baseline and two weeks later. A higher score indicates better knowledge, better practice of EBM and fewer barriers towards the implementation of EBM. We hypothesized that the EBMQ would have three domains: knowledge, practice and barriers.

Results

The final version of the EBMQ consists of 84 items: 42 items were measured on a nominal scale and 42 items were measured on a 5-point Likert scale. Flesch reading ease was 61.2. A total of 343 participants were approached, of whom 320 agreed to participate (response rate = 93.2%). Factor analysis revealed that the EBMQ had eight domains after 13 items were removed: “EBM websites”, “evidence-based journals”, “types of studies”, “terms related to EBM”, “practice”, “access”, “patient preferences” and “support”. Cronbach alpha for the overall EBMQ was 0.909, whilst the Cronbach alpha for the individual domains ranged from 0.657 to 0.933. The EBMQ was able to discriminate between doctors with and without EBM training for 24 out of 42 items. At test-retest, kappa values ranged from 0.155 to 0.620.

Conclusions

The EBMQ was found to be a valid and reliable instrument to assess the knowledge, practice and barriers towards the implementation of EBM among primary care physicians in Malaysia.

Background

Evidence-based medicine (EBM) is defined as the conscientious, explicit and judicious integration of the best available evidence from the literature with patients’ values, which then informs clinical decision making [1]. Practicing EBM helps doctors make a proper diagnosis and select the best treatment available to treat or manage a disease [2]. The use of EBM in the clinical setting is thought to provide the best standard of medical care at the lowest cost [3].

Evidence-based medicine has had an increasing impact on primary care in recent years [4]. It involves patients in decision making and influences the development of guidelines and quality standards for clinical practice [4]. Primary care physicians are the first point of contact for patients [5]. They have a high workload and, at the same time, need to uphold the quality of healthcare [6]. Therefore, it is important for them to treat patients based on research evidence, clinical expertise and patient preferences [7]. However, integrating EBM into clinical practice in primary care is challenging as there are variations in team composition, organisational structures, culture and working practices [8].

A literature search revealed that the main barriers reported internationally were lack of time, lack of resources, negative attitudes towards EBM and inadequate EBM skills [9]. A qualitative study conducted in 2014 found that the barriers unique to primary care physicians in Malaysia were lack of awareness of, and attention to, patient values, even though patient values form a key element of EBM. These physicians also still preferred to obtain information from their peers and, interestingly, used WhatsApp, a smartphone messaging application [10].

Therefore, an instrument is needed to determine the knowledge, practice and barriers of primary care physicians regarding the implementation of EBM. Such an instrument would help to identify gaps on a larger scale and to improve the implementation of EBM in clinical practice. A systematic review by Shaneyfelt et al. [11] reported that 104 instruments have been developed to evaluate the acquisition of skills by healthcare professionals to practice EBM. These instruments assessed one or more of the following domains of EBM: knowledge, attitude, search strategies, frequency of use of evidence sources, current applications, intended future use and confidence in practice. However, only eight instruments were validated: four instruments assessed competency in EBM teaching and learning [12,13,14,15,16], whilst four assessed knowledge, attitude and skills [16,17,18,19]. Furthermore, no instrument has assessed the knowledge, practice and barriers in the implementation of EBM. Therefore, this study aimed to develop and validate the English version of the Evidence-Based Medicine Questionnaire (EBMQ), which was designed to assess the knowledge, practice and barriers of primary care physicians regarding the implementation of EBM.

Methods

Development of the evidence-based medicine questionnaire

A literature search was conducted in PubMed using keywords such as “Evidence-based medicine”, “general practitioners”, “primary care physicians” and “survey/questionnaire”. From this search, nine relevant studies were identified [12,13,14,15,16, 19, 20]. However, only one instrument [20] evaluated the attitude and needs of primary care physicians. Twenty-four items from this questionnaire and findings from two previous qualitative studies in rural and urban primary care settings in Malaysia [10, 21] were used to develop the EBMQ (version 1). The EBMQ was developed in English, as English is used in the training of doctors in medical schools and is also taught as a second language in all public schools in Malaysia.

Face and content validity of the EBMQ were verified by an expert panel which consisted of nine academicians (a nurse, a pharmacist and seven primary care physicians). Each item was reviewed, and the relevance and appropriateness of each item were discussed (version 2). A pilot test was then conducted on ten medical officers with a minimum of one year of working experience and without any postgraduate qualification. They were asked to state verbally whether any items were difficult to understand. The feedback received was that the font was too small and that there was no option under “place of work” for those working in a university hospital. Changes were made based on these comments to produce version 3, which was then pilot tested in another two participants. No difficulties were encountered. Hence, version 3 was used as the final version.

The evidence based medicine questionnaire (EBMQ)

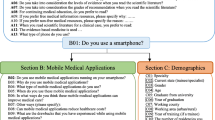

The EBMQ consists of 84 items in 6 sections, as shown in Table 1. Only 55 items (33 items in the “knowledge” domain, 9 items in the “practice” domain and 13 items in the “barriers” domain) were measured on a Likert scale and could be validated. The final version of the EBMQ is provided in Additional file 1. A higher score indicates better knowledge, better practice of EBM and fewer barriers in practicing EBM.

Participants took 15 to 20 min to complete the EBMQ. We hypothesized that the EBMQ would have 3 domains: knowledge, practice and barriers.

Validation of the evidence-based medicine questionnaire

Participants

Primary care physicians with or without EBM training, who could understand English and who attended a Diploma in Family Medicine workshop, were recruited from December 2015 to January 2016.

Sample size

Sample size calculation was based on a participant-to-item ratio of 5:1 to perform factor analysis [22]. There are 55 items in the EBMQ. Hence, the minimum number of participants required was 55 × 5 = 275.

Procedure

Permission was obtained from the Academy of Family Physicians Malaysia to recruit participants who attended their workshops. For those who agreed, written informed consent was obtained. Participants were then asked to fill in the EBMQ at baseline. Two weeks later, the EBMQ was mailed to each participant, with a postage-paid return envelope. If a reply was not obtained within a week, participants were contacted via email and/or SMS, and reminded to send in their completed EBMQ form as soon as possible.

Data analysis

Data were analyzed using the Statistical Package for Social Sciences (SPSS) version 22 (Chicago, IL, USA). Normality could not be assumed; hence, non-parametric tests were used. Categorical variables were presented as frequencies and percentages, while continuous variables were presented as medians and interquartile ranges (IQR).

Validity

Flesch reading ease

The readability of the EBMQ was assessed using Flesch reading ease. This was calculated based on the average number of syllables per word and the average number of words per sentence [23]. An average document should have a score of 60–70 [23].
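As an illustration of how such a score is obtained, the standard Flesch formula is 206.835 − 1.015 × (average words per sentence) − 84.6 × (average syllables per word). The Python sketch below applies it to pre-counted totals. The function name and the example counts are hypothetical and are not taken from the EBMQ; how words, sentences and syllables were counted in this study is not stated, so the counting step is deliberately left out.

```python
def flesch_reading_ease(total_words, total_sentences, total_syllables):
    """Flesch reading ease from pre-counted totals (a higher score is easier to read)."""
    if total_words <= 0 or total_sentences <= 0:
        raise ValueError("word and sentence counts must be positive")
    words_per_sentence = total_words / total_sentences
    syllables_per_word = total_syllables / total_words
    return 206.835 - 1.015 * words_per_sentence - 84.6 * syllables_per_word


# Hypothetical counts, for illustration only (not the EBMQ text).
example_score = flesch_reading_ease(
    total_words=100, total_sentences=5, total_syllables=150
)
print(round(example_score, 2))
```

Shorter sentences and shorter words both raise the score, which is why a value in the 60–70 band is read as plain, average-difficulty text.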

Exploratory factor analysis

Exploratory factor analysis (EFA) was used to test the underlying structure of the EBMQ. EFA is a type of factor analysis used to identify the number of latent variables that underlie an entire set of items [24]. EFA was performed to explore how the items could be grouped into specific factors and to provide information about the validity of each item in each domain. It is important to ensure that the items in each domain of the EBMQ are related to their underlying factors.

Sampling adequacy and the suitability of the data for factor analysis were assessed using the Kaiser-Meyer-Olkin (KMO) measure and Bartlett’s test of sphericity. Principal component analysis with promax rotation was used for data reduction, and components with eigenvalues > 1 were retained. A KMO value > 0.6, individual factor loadings > 0.5, average variance extracted (AVE) > 0.5 and composite reliability (CR) > 0.7 indicate good structure within the domains [25, 26].
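The AVE and CR thresholds can be made concrete with a short sketch. It assumes the commonly used definitions based on standardized factor loadings, in which AVE is the mean of the squared loadings and CR is the squared sum of loadings divided by that quantity plus the summed error variances (1 minus each squared loading). The study does not state which formulas were applied, and the function names and example loadings below are hypothetical.

```python
def average_variance_extracted(loadings):
    """Mean of the squared standardized loadings for one factor."""
    return sum(value ** 2 for value in loadings) / len(loadings)


def composite_reliability(loadings):
    """Squared sum of loadings over itself plus the summed error variances."""
    squared_sum = sum(loadings) ** 2
    error_variance = sum(1 - value ** 2 for value in loadings)
    return squared_sum / (squared_sum + error_variance)


def meets_structure_criteria(loadings):
    """Check the cut-offs quoted in the text: loadings > 0.5, AVE > 0.5, CR > 0.7."""
    return (
        all(value > 0.5 for value in loadings)
        and average_variance_extracted(loadings) > 0.5
        and composite_reliability(loadings) > 0.7
    )


# Hypothetical standardized loadings for a three-item factor (not study data).
example_loadings = [0.7, 0.8, 0.6]
print(round(average_variance_extracted(example_loadings), 3))
print(round(composite_reliability(example_loadings), 3))
print(meets_structure_criteria(example_loadings))
```

In this hypothetical case every loading exceeds 0.5 and CR exceeds 0.7, yet AVE falls just short of 0.5, which shows that the criteria are not interchangeable and need to be checked together.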

Discriminative validity

To assess discriminative validity, participants were divided into those with and without EBM training. We hypothesized that participants with EBM training would have better knowledge, better practice and fewer barriers than those without EBM training. The Chi-square test was used to determine if there was any difference between the two groups. A p-value < 0.05 was considered statistically significant.
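A minimal sketch of the Chi-square comparison for a single item is given below, assuming a contingency table with one row per training group and one column per response category. The analysis in this study was run in SPSS; whether response categories were collapsed is not stated, and the counts here are hypothetical. The p-value helper uses the closed-form Chi-square survival function, which holds only for an even number of degrees of freedom.

```python
import math


def chi_square_statistic(table):
    """Pearson Chi-square statistic and degrees of freedom for an r x c table."""
    row_totals = [sum(row) for row in table]
    column_totals = [sum(column) for column in zip(*table)]
    grand_total = sum(row_totals)
    statistic = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * column_totals[j] / grand_total
            statistic += (observed - expected) ** 2 / expected
    degrees_of_freedom = (len(table) - 1) * (len(table[0]) - 1)
    return statistic, degrees_of_freedom


def chi_square_p_value_even_df(statistic, degrees_of_freedom):
    """Upper-tail p-value; this closed form is valid for even degrees of freedom only."""
    if degrees_of_freedom <= 0 or degrees_of_freedom % 2 != 0:
        raise ValueError("closed form requires a positive, even number of degrees of freedom")
    half = statistic / 2
    term = 1.0
    total = 1.0
    for k in range(1, degrees_of_freedom // 2):
        term *= half / k
        total += term
    return math.exp(-half) * total


# Hypothetical counts (not study data): rows are with / without EBM training,
# columns are three collapsed response categories for one item.
example_table = [[10, 20, 30], [30, 20, 10]]
statistic, df = chi_square_statistic(example_table)
p_value = chi_square_p_value_even_df(statistic, df)
print(statistic, df, p_value < 0.05)
```

For tables with an odd number of degrees of freedom, such as a 2 × 2 table, a statistics package would be needed for the p-value, since the closed form above does not apply.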

Reliability

Internal consistency

Internal consistency was assessed to estimate the reliability of the items in the EBMQ, using Cronbach’s α coefficient. A Cronbach’s α value of 0.5–0.69 is acceptable, while values of 0.70–0.90 indicate strong internal consistency [27]. Corrected item-total correlations should be > 0.2 to be considered acceptable [28]. If omitting an item increased the Cronbach’s α significantly, the item was excluded.
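For readers who want to see how the coefficient is obtained, the sketch below computes Cronbach’s α from a participants-by-items score matrix using the usual formula, α = k/(k − 1) × (1 − sum of item variances / variance of total scores), where k is the number of items. The function name and the example responses are hypothetical, and the values in this study were produced in SPSS rather than with this code.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for rows of participants and columns of item scores."""
    item_count = len(responses[0])
    if item_count < 2 or len(responses) < 2:
        raise ValueError("need at least two items and two participants")
    # Sample variance of each item across participants.
    item_variances = [variance(column) for column in zip(*responses)]
    # Sample variance of each participant's total score.
    total_variance = variance([sum(row) for row in responses])
    return (item_count / (item_count - 1)) * (1 - sum(item_variances) / total_variance)


# Hypothetical 5-point Likert responses from five participants to three items.
example_responses = [
    [4, 5, 4],
    [3, 3, 4],
    [5, 5, 5],
    [2, 3, 2],
    [4, 4, 5],
]
print(round(cronbach_alpha(example_responses), 3))
```

Re-running the function with one column dropped gives the "alpha if item deleted" value that underlies the exclusion rule described above.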

Test-retest reliability

Test-retest reliability was assessed to measure the stability of the items in the EBMQ over time, by administering the same questionnaire twice and examining the consistency of participants’ answers. The intra-class correlation coefficient (ICC) was used to assess the total score at test-retest. An ICC value of 0.7 was considered acceptable [29]. ICC values between 0.75 and 1.00 indicate high reliability, values between 0.60 and 0.74 good reliability, values between 0.40 and 0.59 fair reliability, and values below 0.40 low reliability [30].
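The interpretation bands quoted above can be written as a small helper. This is only a sketch: the function name is hypothetical, and the bands are treated as continuous, so a value such as 0.745 (which falls in the gap between 0.74 and 0.75) is assigned to the "good" band.

```python
def classify_reliability(icc):
    """Map an ICC value to the reliability bands quoted in the text [30]."""
    if icc > 1.0:
        raise ValueError("an ICC cannot exceed 1.0")
    if icc >= 0.75:
        return "high"
    if icc >= 0.60:
        return "good"
    if icc >= 0.40:
        return "fair"
    return "low"


# Hypothetical coefficients, for illustration only.
for value in (0.82, 0.66, 0.45, 0.20):
    print(value, classify_reliability(value))
```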

Results

A total of 343 primary care doctors were approached; of whom 320 agreed to participate (response rate = 93.2%). The majority of them were female (69.4%) with a median age of 32.2 years [IQR = 4.0]. Nearly all (97.2%) were medical officers, working in government health clinics (54.4%) and possessed no postgraduate qualifications after their basic medical degree (78.4%). All participants had heard about EBM, but only 222 (69.7%) had attended an EBM course (Table 2).

Validity

Flesch reading ease of the EBMQ was 61.2. Initially, we hypothesized that the “knowledge” domain would have two factors. However, EFA found that the “knowledge” domain had four factors (“evidence-based medicine websites”, “evidence-based journals”, “type of studies” and “terms related to EBM”) after nine items were removed (item C1: “Clinical Practice Guidelines”, item C7: “Dynamed”, item C11: “InfoPoems”, item C4: “Cochrane”, item C8: “TRIP database”, item C15: “BestBETs”, item C9: “MEDLINE”, item C17: “Medscape” and item C16: “UpToDate”). This model explained 54.3% of the variation (Table 3).

EFA found that the “practice” domain had only one factor with eight items after one item (item 9: “I prefer to manage patients based on my experience”) was removed. This model explained 49.0% of the variation (Table 3).

We hypothesized that the “barriers” domain would have only one factor. However, EFA revealed that the “barriers” domain had three factors (“access”, “support” and “patient’s preferences”) after three items were removed (item 7: “I can consult the specialist anytime to answer my queries”, item 10: “I have the authority to change the management of patients in my clinic” and item 11: “There are incentives for me to practice EBM”). This model explained 49.9% of the variation (Table 3).

Discriminative validity

In the “knowledge” domain, doctors who had EBM training had significantly higher scores in 13 out of 24 items compared to those without training. In the “practice” domain, doctors who had EBM training had significantly higher scores in 5 out of 8 items compared to those without training. In the “barriers” domain, doctors who had EBM training had significantly higher scores in 5 out of 10 items compared to those without training (Table 4).

Reliability

Cronbach alpha for the overall EBMQ was 0.909, whilst the values for individual domains ranged from 0.657 to 0.933 (Table 4). All corrected item-total correlation (CITC) values were > 0.2. At retest, 185 of the 320 participants completed the EBMQ (response rate = 57.8%), as n = 135 (42.2%) were uncontactable. Thirty items had good or fair correlations (r = 0.418–0.620), while 12 items had low correlations (r < 0.4) (Table 5).

Discussion

The EBMQ was found to be a valid and reliable instrument to assess the knowledge, practice and barriers of primary care physicians regarding the implementation of EBM. The final EBMQ consists of 42 items in 8 domains after 13 items were removed. The Flesch reading ease was 61.2. This indicates that the EBMQ can be easily understood by 13- to 15-year-old students who study English as a first language [23].

Initially, we hypothesized that there were two factors in the “knowledge” domain: “sources related to EBM” and “terms related to EBM”. However, EFA revealed that the “knowledge” domain had four factors: “evidence-based medicine websites”, “evidence-based journals”, “terms related to EBM” and “type of studies” after 9 items were removed. This was because “sources related to EBM” was further divided into three factors. This is not surprising, because knowledge is a broad concept that can be further recategorized. EFA revealed that the “practice” domain had one factor, which concurred with our initial hypothesis. One item (item P9: “I prefer to manage patients based on my experience”) was removed as it concerned doctors’ experience rather than their practice. Initially, we hypothesized that there was one factor in the “barriers” domain. However, EFA revealed that there were three factors: “access to resources”, “patient preferences towards EBM” and “support from the management” after three items were removed. This may be because regrouping the items into three factors, rather than a single barrier, provided a better description of the barriers encountered by the primary care physicians. As highlighted in the literature [9, 31], there are many barriers to the practice of EBM, and some have also been categorized according to the specific type of barrier.

The EBMQ was able to discriminate the knowledge, practice and barriers between doctors with and without EBM training. In the knowledge domain, there were significant differences for all items in “terms related to EBM”. This is not surprising, as doctors with EBM training would have been exposed to these terms. No difference was found between those with and without EBM training in “information sources related to EBM”, as those who did not attend EBM training could still access online information resources. Several studies reported improved knowledge but did not report in detail which areas of knowledge improved. Hence, we could not compare their findings with ours [32,33,34,35].

Our findings also showed that doctors with EBM training had better practice of EBM. This is consistent with several studies that reported changes in practice [32, 36,37,38,39], although others reported no changes in practice [35, 40]. However, the authors of those studies commented that their findings were not meaningful as practice was self-perceived. In addition, doctors in our study who attended EBM training had fewer barriers regarding the implementation of EBM in their clinical practice. They seemed to have better access to resources, more patients with a positive attitude towards EBM, and better support from management to practice EBM compared to those without EBM training. This could be because doctors with EBM training knew how to overcome problems that would prevent them from practicing EBM. In the systematic review [41], the barriers to the implementation of EBM remain unclear as they were not reported.

The overall Cronbach’s alpha was > 0.7, and the values for the individual domains ranged from 0.657 to 0.933, all within the acceptable range. This indicates that the EBMQ has adequate psychometric properties, which was similar to previous studies [12, 14,15,16, 19, 42]. The majority (71.4%) of the items in the EBMQ had good or fair correlation at test-retest, which indicates that the EBMQ has achieved adequate reliability. Conducting the reliability testing two weeks later did not affect the methodology, as the acceptable time interval for test-retest reliability is approximately 2 weeks [28]. Discriminative validity was assessed using the baseline data rather than the retest data, which may then have an impact on the methodology.

To our knowledge, this was the first validation study to assess discriminative validity (i.e. between doctors with and without EBM training) for an instrument assessing the implementation of EBM. One limitation of this study was that participants were recruited whilst attending a Family Medicine module workshop. These participants may be more interested in the practice of EBM than other general practitioners, as they were already inclined to further their postgraduate studies. Hence, our results may not be generalizable.

Conclusions

The EBMQ was found to be a valid and reliable instrument to assess the knowledge, practice and barriers of primary care physicians towards EBM in Malaysia. The EBMQ can be used to assess doctors’ practices and barriers in the implementation of EBM. Information gathered from the administration of the EBMQ will assist policy makers to identify the level of knowledge, practice and barriers of EBM and to improve its uptake in clinical practice. Although the findings of this study are not generalizable, they may be of interest to primary care physicians in other countries.

Abbreviations

- AVE: Average variance extracted
- CITC: Corrected item-total correlation
- CR: Composite reliability
- EBM: Evidence-based medicine
- EBMQ: Evidence-based medicine questionnaire
- EFA: Exploratory factor analysis
- ICC: Intra-class correlation coefficient
- IQR: Interquartile range
- KMO: Kaiser-Meyer-Olkin
- SPSS: Statistical Package for Social Sciences

References

Sackett DL, Rosenberg WMC, Gray JAM, Haynes RB, Richardson WS. Evidence based medicine: what it is and what it isn't. BMJ. 1996;312:71–2.

Saarni SI, Gylling HA. Evidence based medicine guidelines: a solution to rationing or politics disguised as science? J Med Ethics. 2004;30:171–5.

Lewis SJ, Orland BI. The importance and impact of evidence-based medicine. J Manag Care Pharm. 2004;10:S3–5.

Slowther A, Ford S, Schofield T. Ethics of evidence based medicine in the primary care setting. J Med Ethics. 2004;30:151–5.

Kumar R. Empowering primary care physicians in India. J Fam Med Prim Care. 2012;1:1–2.

Mohr DC, Benzer JK, Young GJJD. Provider workload and quality of care in primary care settings: moderating role of relational climate. Med Care. 2013;51:108–14.

Tracy CS, Dantas GC, Upshur REG. Evidence-based medicine in primary care: qualitative study of family physicians. BMC Fam Prac. 2003;4:6–6.

Craig P, Dieppe P, Macintyre S, Michie S, Nazareth I, Petticrew M. Developing and evaluating complex interventions: the new Medical Research Council guidance. BMJ. 2008;337:a1655.

Sadeghi-Bazargani H, Tabrizi JS, Azami-Aghdash S. Barriers to evidence-based medicine: a systematic review. J Eval Clin Pract. 2014;20:793–802.

Hisham R, Liew SM, Ng CJ, Mohd Nor K, Osman IF, Ho GJ, Hamzah N, Glasziou P. Rural doctors’ views on and experiences with evidence-based medicine: the FrEEDoM qualitative study. PLoS One. 2016;11:e0152649.

Shaneyfelt T, Baum KD, Bell D, Feldstein D, Houston TK, Kaatz S, Whelan C, Green M. Instruments for evaluating education in evidence-based practice: a systematic review. JAMA. 2006;296:1116–27.

Ruzafa-Martinez M, Lopez-Iborra L, Moreno-Casbas T, Madrigal-Torres M. Development and validation of the competence in evidence based practice questionnaire (EBP-COQ) among nursing students. BMC Med Educ. 2013;13:19.

Adams S, Barron S. Development and testing of an evidence-based practice questionnaire for school nurses. J Nurs Meas. 2010;18:3–25.

Johnston JM, Leung GM, Fielding R, Tin KY, Ho LM. The development and validation of a knowledge, attitude and behaviour questionnaire to assess undergraduate evidence-based practice teaching and learning. Med Educ. 2003;37:992–1000.

Fritsche L, Greenhalgh T, Falck-Ytter Y, Neumayer H, Kunz R. Do short courses in evidence based medicine improve knowledge and skills? Validation of Berlin questionnaire and before and after study of courses in evidence based medicine. BMJ. 2002;325:1338–41.

Ramos KD, Schafer S, Tracz SM. Validation of the Fresno test of competence in evidence based medicine. BMJ. 2003;326:319–21.

Rice KHJ, Abrefa-Gyan T, Powell K. Evidence-based practice questionnaire: a confirmatory factor analysis in a social work sample. Adv Soc Work. 2010;11:158–73.

Iovu MB, Runcan P. Evidence-based practice: knowledge, attitudes, and beliefs of social workers in Romania. Revista de Cercetare si Interventie Sociala. 2012;38:54–70.

Upton D, Upton P. Development of an evidence-based practice questionnaire for nurses. J Adv Nurs. 2006;53:454–8.

McColl A, Smith H, White P, Field J. General practitioners’ perceptions of the route to evidence based medicine: a questionnaire survey. BMJ. 1998;316:361–5.

Blenkinsopp A, Paxton P. Symptoms in the pharmacy: a guide to the management of common illness. 3rd ed. Oxford. Blackwell Science; 1998.

Gorsuch RL. Factor analysis. 2nd ed. Hillsdale: Lawrence Erlbaum Associates; 1983.

Flesch R. A new readability yardstick. J Appl Psychol. 1948;32:221–33.

van der Eijk C, Rose J. Risky business: factor analysis of survey data – assessing the probability of incorrect dimensionalisation. PLoS One. 2015;10:e0118900.

Kaiser HF. A second generation little jiffy. Psychometrika. 1970;35:401–15.

Hidalgo B, Goodman M. Multivariate or multivariable regression? Am J Pub Health. 2013;103:39–40.

Cronbach LJ. Coefficient alpha and the internal structure of tests. Psychometrika. 1951;16:297–334.

Streiner DL, Norman GR. Health measurement scales: a practical guide to their development and use. 2nd ed. Oxford: Oxford University Press; 1995.

Terwee CB, Bot SD, de Boer MR, van der Windt DA, Knol DL, Dekker J, Bouter LM, de Vet HC. Quality criteria were proposed for measurement properties of health status questionnaires. J Clin Epidemiol. 2007;60:34–42.

Cicchetti DV. Guidelines, criteria, and rules of thumb for evaluating normed and standardized assessment instruments in psychology. Psychol Assess. 1994;6:284–90.

Zwolsman S, te Pas E, Hooft L, Wieringa-de Waard M, van Dijk N. Barriers to GPs’ use of evidence-based medicine: a systematic review. Br J Gen Pract. 2012;62:e511–21.

Dizon JM, Grimmer-Somers K, Kumar S. Effectiveness of the tailored evidence based practice training program for Filipino physical therapists: a randomized controlled trial. BMC Med Educ. 2014;14:147.

Chen FC, Lin MC. Effects of a nursing literature reading course on promoting critical thinking in two-year nursing program students. J Nurs Res. 2003;11:137–47.

Bennett S, Hoffmann T, Arkins M. A multi-professional evidence-based practice course improved allied health students’ confidence and knowledge. J Eval Clin Pract. 2011;17:635–9.

McCluskey A, Lovarini M. Providing education on evidence-based practice improved knowledge but did not change behaviour: a before and after study. BMC Med Educ. 2005;5:40.

Levin RF, Fineout-Overholt E, Melnyk BM, Barnes M, Vetter MJ. Fostering evidence-based practice to improve nurse and cost outcomes in a community health setting: a pilot test of the advancing research and clinical practice through close collaboration model. Nurs Adm Q. 2011;35:21–33.

Stevenson K, Lewis M, Hay E. Do physiotherapists’ attitudes towards evidence-based practice change as a result of an evidence-based educational programme? J Eval Clin Pract. 2004;10:207–17.

Kim SC, Brown CE, Ecoff L, Davidson JE, Gallo AM, Klimpel K, Wickline MA. Regional evidence-based practice fellowship program: impact on evidence-based practice implementation and barriers. Clin Nurs Res. 2013;22:51–69.

Lizarondo LM, Grimmer-Somers K, Kumar S, Crockett A. Does journal club membership improve research evidence uptake in different allied health disciplines: a pre-post study. BMC Res Notes. 2012;5:588.

Yost J, Ciliska D, Dobbins M. Evaluating the impact of an intensive education workshop on evidence-informed decision making knowledge, skills, and behaviours: a mixed methods study. BMC Med Educ. 2014;14:13.

Hecht L, Buhse S, Meyer G. Effectiveness of training in evidence-based medicine skills for healthcare professionals: a systematic review. BMC Med Educ. 2016;16:103.

Rice K, Hwang J, Abrefa-Gyan T, Powell K. Evidence-based practice questionnaire: a confirmatory factor analysis in a social work sample. Adv Soc Sci. 2010;11:158–73.

Acknowledgements

We would like to thank the participants of this study.

Funding

This study was funded by University of Malaya Research Grant (RP037A-15HTM).

Availability of data and materials

The datasets used and/or analysed during the current study are available from the corresponding author on reasonable request.

Author information

Contributions

NCJ and LSM conceived the study and CYC, KEM, NSH, SO, LPY, KLA participated in its design and coordination. RH, NCJ, LSM and LSMP contributed to data analysis and interpretation. KC provided statistical advice, data analysis and interpretation. RH drafted the manuscript and all the authors critically revised it and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

This study received ethics approval from the University of Malaya Medical Centre Medical Ethics Committee (MREC: 962.9). Informed written consent was obtained from all participants who agreed to participate in this study.

Competing interests

The authors declare that they have no competing interests.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Additional file

Additional file 1:

The Evidence-Based Medicine Questionnaire (EBMQ), The final version of EBMQ to assess doctors’ knowledge, practice and barriers regarding the implementation of Evidence-Based Medicine in primary care. (DOCX 228 kb)

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

Hisham, R., Ng, C.J., Liew, S.M. et al. Development and validation of the Evidence Based Medicine Questionnaire (EBMQ) to assess doctors’ knowledge, practice and barriers regarding the implementation of evidence-based medicine in primary care. BMC Fam Pract 19, 98 (2018). https://doi.org/10.1186/s12875-018-0779-5
