Abstract

Background

Systematic reviews can apply the Appraisal of Guidelines for Research & Evaluation (AGREE) II tool to critically appraise clinical practice guidelines (CPGs) for treating low back pain (LBP). However, when appraisals rate the quality of the same CPG differently, stakeholders, clinicians, and policy-makers may find it difficult to reach a single judgement of that CPG's quality. We aimed to determine the proportion of overlapping CPGs for LBP in appraisals that applied AGREE II. We also compared inter-rater reliability and variability across appraisals.

Methods

For this meta-epidemiological study we searched six databases for appraisals of CPGs for LBP. We collected the general characteristics of the appraisals; the unit of analysis was the CPG evaluated in each appraisal. The inter-rater reliability and variability of AGREE II domain scores and overall assessments were measured using the intraclass correlation coefficient and descriptive statistics.

Results

Overall, 43 of 106 CPGs (40.6%) overlapped across seventeen appraisals. About half of the appraisals (53%) reported a protocol registration. Reporting of AGREE II assessments was heterogeneous and generally poor: overall assessment 1 (overall CPG quality) was reported in 11 appraisals (64.7%) and overall assessment 2 (recommendation for use) in four (23.5%). Inter-rater reliability was substantial or almost perfect in 78.3% of overlapping CPGs. The most variable domains were Domain 6 (mean interquartile range [IQR] 38.6), Domain 5 (mean IQR 28.9), and Domain 2 (mean IQR 27.7).

Conclusions

More than one third of CPGs for LBP have been re-appraised in the last six years, with CPG quality confirmed in most assessments. Our findings suggest that, before conducting a new appraisal, researchers should check systematic review registers for existing appraisals. Clinicians need to rely on updated, high-quality CPGs whose quality is confirmed by perfect agreement across multiple appraisals.

Trial Registration

Protocol Registration OSF: https://osf.io/rz7nh/


Introduction

Low back pain (LBP) is a major contributor to years lived with disability and a leading cause of activity limitation and absence from work [1, 2]. In response to the global burden of LBP, major medical societies and specialized working groups have developed clinical practice guidelines (CPGs) for its diagnosis and management [3, 4]. The principles of CPG design are well established, but the growing proliferation of CPGs has cast doubt on their quality [5]. The current gold standard for appraising CPG quality is the Appraisal of Guidelines for REsearch & Evaluation (AGREE) instrument, developed by the AGREE Collaboration in 2003 [6,7,8]. The updated version, known as AGREE II, consists of 23 appraisal criteria (items) grouped into six independent quality domains, plus two overall assessment items: one to evaluate overall CPG quality (overall assessment 1) and one to judge whether a CPG should be recommended for use in practice (overall assessment 2) [9]. Because developing a CPG de novo takes substantial time and resources, it may be more efficient to adapt a high-quality CPG (or selected recommendations) for local use, when one is available [10,11,12]. Systematic review authors can apply AGREE II to critically appraise CPGs for LBP [13,14,15,16,17], but stakeholders, clinicians, and policy-makers may find it difficult to identify the highest-quality CPG when appraisals give different quality ratings for the same CPG. In this study we aimed to determine the proportion of CPGs evaluated in more than one appraisal (i.e., overlapping CPGs) and to measure the inter-rater reliability (IRR) and variability of AGREE II scores for overlapping CPGs.

Materials and methods

Meta-epidemiological study

The study was reported in accordance with the guidelines for reporting meta-epidemiological methodology research [18], because a specific reporting checklist for methodological studies (the MethodologIcal STudy reportIng Checklist [MISTIC]) is currently under development [19]. The protocol is available on the public Open Science Framework (OSF) repository at https://osf.io/rz7nh/.

Search strategy and study selection

We summarized the findings of systematic reviews that applied the AGREE II tool to appraise the quality of CPGs for LBP. We defined these systematic reviews as “appraisals”. For details about the AGREE II instrument, see https://www.agreetrust.org/resource-centre/agree-ii/.

We systematically searched six databases (PubMed, EMBASE, CINAHL, Web of Science, PsycINFO, PEDro) from January 1, 2010 through March 3, 2021; the start date reflects the publication of AGREE II in 2010 [6]. The full search strategy is presented in Additional file 1.

Eligibility criteria

Two independent reviewers screened titles and abstracts against the following eligibility criteria: 1) systematic reviews (i.e., CPG appraisals) that used the AGREE II tool to evaluate CPG quality; 2) CPGs for LBP prevention, diagnosis, management, and treatment, irrespective of cause (e.g., non-specific LBP, spondylolisthesis, lumbar stenosis, radiculopathy); and 3) reported AGREE II ratings. Appraisals of mixed populations (e.g., neck and back pain) were included when data on back pain were reported separately. Disagreements between reviewers were resolved by consulting a third reviewer. Rayyan software [20] was used to manage screening and selection.

Data extraction

Data were entered on a pre-defined data extraction form (Excel spreadsheet). Two authors extracted the data for: study author, year of publication, protocol registration, number of raters, training in use of the AGREE II tool, population, intervention, exclusion criteria for each appraisal, references of CPGs, AGREE II items/domain scores and two overall assessments: overall assessment 1 (overall CPG quality [measured on a 1-7 scale]) and overall assessment 2 (recommendation for use [yes, yes with modifications, no]). When reported by the appraisers, quality ratings (high, moderate, low) were also extracted.

The reporting of overall assessments varied across appraisals. For overall assessment 2, we collected the proportion of raters who selected each category, "yes", "yes with modifications", or "no" (e.g., 75% of raters judged "yes", 25% "yes with modifications", and 0% "no"), and labelled this the "raw recommendation for use". For appraisals that reported only a single recommendation (such as "yes") without percentages for all three categories, we assigned that category by default and labelled it the "final recommendation for use" [21].
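The coding rule for overall assessment 2 can be expressed as a small function. This is a hypothetical sketch: the record layout, the category labels, and the name `code_overall_assessment_2` are assumptions for illustration, not the authors' extraction form.

```python
from typing import Dict, Optional

# Hypothetical category labels for AGREE II overall assessment 2.
CATEGORIES = ("yes", "yes with modifications", "no")


def code_overall_assessment_2(
    percentages: Optional[Dict[str, float]] = None,
    single_category: Optional[str] = None,
) -> Dict[str, object]:
    """Code one appraisal's overall assessment 2 for one CPG (hypothetical schema)."""
    if percentages is not None:
        # Percentages reported for all three categories: keep the full distribution.
        missing = [c for c in CATEGORIES if c not in percentages]
        if missing:
            raise ValueError(f"missing categories: {missing}")
        return {
            "type": "raw recommendation for use",
            "distribution": {c: percentages[c] for c in CATEGORIES},
        }
    if single_category in CATEGORIES:
        # Only a single judgment reported: assign that category by default.
        return {"type": "final recommendation for use", "category": single_category}
    # Nothing usable was reported.
    return {"type": "not reported"}


# The example from the text: 75% "yes", 25% "yes with modifications", 0% "no".
print(code_overall_assessment_2({"yes": 75, "yes with modifications": 25, "no": 0})["type"])
# -> raw recommendation for use
```

Keeping "raw" and "final" as distinct record types, rather than collapsing a raw distribution into its majority category, preserves the distinction the text relies on when it later reports heterogeneity of reporting.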

We contacted the corresponding authors when AGREE II domain scores and overall assessments were not reported. When no response was received, we calculated the domain scores from the AGREE II item scores using the AGREE II formulas [9].
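The AGREE II manual scales each domain score against the minimum and maximum totals possible for that domain: scaled score = (obtained score - minimum possible score) / (maximum possible score - minimum possible score) x 100, where the maximum is 7 x items x raters and the minimum is 1 x items x raters. A minimal Python sketch of that calculation, assuming complete item scores (every rater scored every item on the 1-7 scale); the function name `scaled_domain_score` is hypothetical.

```python
from typing import Sequence


def scaled_domain_score(item_scores: Sequence[Sequence[int]]) -> float:
    """Scaled AGREE II domain score on a 0-100 scale.

    item_scores[r][i] is the 1-7 score that rater r gave to item i of one domain.
    """
    if not item_scores or not item_scores[0]:
        raise ValueError("need at least one rater and one item")
    n_raters = len(item_scores)
    n_items = len(item_scores[0])
    if any(len(row) != n_items for row in item_scores):
        raise ValueError("every rater must score every item in the domain")
    if any(not 1 <= s <= 7 for row in item_scores for s in row):
        raise ValueError("AGREE II items are scored from 1 to 7")
    obtained = sum(sum(row) for row in item_scores)
    max_possible = 7 * n_items * n_raters  # every rater gives 7 to every item
    min_possible = 1 * n_items * n_raters  # every rater gives 1 to every item
    return 100 * (obtained - min_possible) / (max_possible - min_possible)
```

For example, four raters scoring a three-item domain as [5, 6, 6], [6, 6, 7], [2, 4, 3], and [3, 3, 2] obtain 53 within a possible range of 12-84, giving (53 - 12) / (84 - 12) x 100, or about 56.9%.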

Data synthesis and analysis

The characteristics of the eligible appraisals were summarized using descriptive statistics. Overlap was defined as the number of times a CPG was re-assessed for quality in different appraisals using the AGREE II tool. We measured IRR and variability of the AGREE II domain scores for CPGs assessed by at least three appraisals. We used the average intraclass correlation coefficient (ICC), with its 95% confidence interval (CI), across the six domain scores to quantify agreement among appraisals of the same overlapping CPG [22]. The degree of agreement was graded according to Landis and Koch [23]: slight (0.01-0.20); fair (0.21-0.40); moderate (0.41-0.60); substantial (0.61-0.80); and almost perfect (0.81-1.00). For quantitative variables (AGREE II domain scores and overall assessment 1), we measured variability with the interquartile range (IQR), i.e., the difference between the third and first quartiles (Q3-Q1). For qualitative variables (overall assessment 2 and quality ratings), we measured variability as agreement or disagreement of judgments. We defined "perfect agreement" as all appraisals giving the same judgment for the same CPG (e.g., all judged it "high quality"; IRR = 1). Variability of each of the six domain scores for the overlapping CPGs (assessed by at least three appraisals) is reported as the mean IQR. Statistical significance was set at P < 0.05, and all tests were two-sided. Data analysis was performed using STATA [24].
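The agreement statistic can be sketched in Python. The text does not state which ICC form was used; as an assumption, this sketch computes the two-way random-effects, average-measures ICC (ICC(2,k) in Shrout and Fleiss notation) from ANOVA mean squares, with the six domain scores of one CPG as rows and the appraisals as columns. The 95% CI is omitted for brevity, and the function name `icc_2k` is hypothetical.

```python
from typing import Sequence


def icc_2k(scores: Sequence[Sequence[float]]) -> float:
    """Two-way random-effects, average-measures ICC (Shrout & Fleiss ICC(2,k)).

    scores[i][j] is the score of target i (here, one AGREE II domain) from
    rater j (here, one appraisal). The matrix must be complete.
    """
    n = len(scores)  # number of targets (rows)
    k = len(scores[0]) if n else 0  # number of raters (columns)
    if n < 2 or k < 2 or any(len(row) != k for row in scores):
        raise ValueError("need a complete matrix with at least 2 rows and 2 columns")
    grand = sum(sum(row) for row in scores) / (n * k)
    row_means = [sum(row) / k for row in scores]
    col_means = [sum(scores[i][j] for i in range(n)) / n for j in range(k)]
    # Partition the total sum of squares into targets, raters, and residual.
    ss_total = sum((x - grand) ** 2 for row in scores for x in row)
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_error = ss_total - ss_rows - ss_cols
    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))
    denominator = ms_rows + (ms_cols - ms_error) / n
    if denominator == 0:
        raise ValueError("ICC is undefined when all scores are identical")
    return (ms_rows - ms_error) / denominator
```

As a sanity check, a matrix in which every appraisal gives identical domain scores returns 1.0. Stata's `icc` command offers this and other model choices; which one was used determines whether results would match.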

Results

Search results

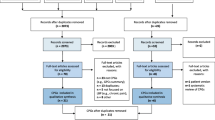

The systematic search retrieved 254 records. After removal of duplicates, 192 records remained, of which 163 were excluded on title and abstract screening. The full texts of the remaining 29 were examined, and 12 did not meet the inclusion criteria (Fig. 1). Finally, 17 appraisals that applied the AGREE II tool were included in the analysis [17, 25,26,27,28,29,30,31,32,33,34,35,36,37,38,39,40].

Characteristics of CPG appraisals

Table 1 presents the general characteristics of the 17 appraisals. The median year of publication was 2020 (range, 2015-2021). Eleven appraisals assessed CPGs for LBP only, and six assessed CPGs not restricted to LBP (e.g., chronic musculoskeletal pain). Seven appraisals (41.2%) reported protocol registration in PROSPERO and two (11.8%) in other online registries or repositories. Three appraisals (17.6%) involved four AGREE II raters; the remainder involved two or three. Six appraisals (35.3%) stated that the raters had received training in using the tool. Ratings for all six domains were reported in 14 appraisals (82.4%) [17, 25,26,27,28,29,30,31,32,33,34,35, 37, 40] and all 23 item scores in two [36, 39]. Overall assessment 1 (overall CPG quality) was reported in 11 appraisals (64.7%) [25,26,27,28,29,30, 32,33,34, 37, 40] and overall assessment 2 (recommendation for use) in four (23.5%) [25, 27, 28, 32]. A quality rating (not part of the AGREE II tool) was given in nine appraisals (53%) [17, 26, 28, 29, 33, 34, 36, 37, 39]. One appraisal reported AGREE II ratings in supplementary materials that were unavailable [38]. Four authors [29, 35,36,37] supplied missing data on request.

Overlapping CPGs

A total of 43/106 CPGs (40.6%) overlapped across the 17 appraisals (i.e., were assessed by at least two appraisals), and 23 CPGs [42,43,44,45,46,47,48,49,50,51,52,53,54,55,56,57,58,59,60,61,62,63,64,65] had been assessed by at least three appraisals. The six CPGs that overlapped most often were issued by: the National Institute for Health and Care Excellence (NICE) 2016 [63] (9 appraisals), the American College of Physicians (ACP) 2017 [47] (8 appraisals), the American Physical Therapy Association (APTA) 2012 [56] (8 appraisals), the Belgian Health Care Knowledge Centre (KCE) 2017 [43] (6 appraisals), the American Pain Society (APS) 2009 [65] (5 appraisals), and the Council on Chiropractic Guidelines and Practice Parameters (CCGPP) 2016 [55] (5 appraisals). Table 2 presents the overlapping CPGs.
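Counting overlap amounts to tallying, for each CPG, how many distinct appraisals assessed it. A minimal sketch in Python, assuming a hypothetical mapping from appraisal IDs to the CPG IDs each one evaluated; the IDs and the name `overlap_summary` are illustrative, not the study data.

```python
from collections import Counter
from typing import Dict, Iterable


def overlap_summary(appraisals: Dict[str, Iterable[str]]) -> Dict[str, object]:
    """Summarize how often CPGs were re-assessed across appraisals.

    appraisals maps a (hypothetical) appraisal ID to the CPG IDs it evaluated.
    """
    counts: Counter = Counter()
    for cpg_ids in appraisals.values():
        counts.update(set(cpg_ids))  # count each CPG at most once per appraisal
    n_unique = len(counts)
    overlapping = [cpg for cpg, n in counts.items() if n >= 2]
    return {
        "unique_cpgs": n_unique,
        "overlapping": len(overlapping),
        "proportion_overlapping": len(overlapping) / n_unique if n_unique else 0.0,
        "assessed_by_at_least_three": sorted(cpg for cpg, n in counts.items() if n >= 3),
        "counts": counts,
    }
```

With the study's totals, 43 overlapping CPGs out of 106 unique CPGs give a proportion of 43/106, or about 0.406 (40.6%).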

Inter-rater reliability

Table 3 presents the average ICCs of the overlapping CPGs assessed by at least three appraisals. IRR was almost perfect in 13 CPG ratings (56.6%), substantial in five (21.7%), moderate in two (8.7%), fair in one (4.3%), and slight in two (8.7%). Agreement was highest for ACP 2017 [47], APTA 2012 [56], and APS 2009 [65], and lowest for NICE 2009 [64], the Toward Optimized Practice Low Back Pain Working Group (TOP) 2017 [53], and the American Society of Interventional Pain Physicians (ASIPP) 2013 [49]. Among the most often overlapping CPGs (assessed by at least five appraisals), IRR was almost perfect for all except KCE 2017 [43] (substantial).
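Mapping each CPG's ICC to the Landis and Koch bands and tallying the share of CPGs in each band can be sketched as follows. Treating the bands as contiguous intervals with upper cut-offs at 0.20, 0.40, 0.60, and 0.80 (so that a value such as 0.205 is not left unclassified) and labelling negative values "poor", as Landis and Koch did, are assumptions of this sketch; the function names are hypothetical.

```python
from collections import Counter
from typing import Dict, Iterable


def landis_koch(value: float) -> str:
    """Label an agreement coefficient with the Landis and Koch (1977) bands."""
    if value < 0:
        return "poor"
    if value <= 0.20:
        return "slight"
    if value <= 0.40:
        return "fair"
    if value <= 0.60:
        return "moderate"
    if value <= 0.80:
        return "substantial"
    return "almost perfect"


def band_percentages(iccs: Iterable[float]) -> Dict[str, float]:
    """Percentage of CPGs whose ICC falls in each band."""
    labels = [landis_koch(v) for v in iccs]
    if not labels:
        return {}
    counts = Counter(labels)
    return {label: 100 * n / len(labels) for label, n in counts.items()}
```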

Variability in domain scores

The most variable domains of overlapping CPGs (assessed by at least three appraisals) were Domain 6 - Editorial Independence (mean IQR 38.6), Domain 5 - Applicability (mean IQR 28.9), and Domain 2 - Stakeholder Involvement (mean IQR 27.7). Across all domains, the most variable CPG was TOP 2017 [53] (mean IQR 51.4) and the least variable was the Institute for Clinical Systems Improvement (ICSI) 2018 CPG [44] (mean IQR 11) (Table 4). Domain 6 – Editorial Independence was also the most variable domain among the most often overlapping CPGs (assessed by at least five appraisals) (Fig. 2).
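The mean IQR per domain can be sketched in Python as the average, across CPGs, of each CPG's Q3 - Q1 for that domain. The quartile definition is an assumption: this sketch uses `statistics.quantiles(..., method="inclusive")` (linear interpolation between order statistics, Python 3.8+), and Stata's quartile conventions may give slightly different values on small samples. The nested-dictionary layout and function names are hypothetical.

```python
import statistics
from typing import Dict, List, Sequence


def iqr(values: Sequence[float]) -> float:
    """Q3 - Q1, interpolating linearly between order statistics."""
    q1, _median, q3 = statistics.quantiles(values, n=4, method="inclusive")
    return q3 - q1


def mean_iqr_by_domain(scores: Dict[str, Dict[str, List[float]]]) -> Dict[str, float]:
    """Mean IQR per domain across CPGs.

    scores[cpg][domain] holds that domain's 0-100 scores from each appraisal.
    """
    iqrs_per_domain: Dict[str, List[float]] = {}
    for domains in scores.values():
        for domain, values in domains.items():
            iqrs_per_domain.setdefault(domain, []).append(iqr(values))
    return {domain: statistics.mean(v) for domain, v in iqrs_per_domain.items()}
```

Averaging per-CPG IQRs, rather than pooling all scores for a domain into one IQR, keeps between-CPG quality differences from being counted as disagreement between appraisals.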

Fig. 2 Variability of the six AGREE II domains applied to the most often overlapping CPGs (assessed by at least five appraisals). The vertical axis represents AGREE II domain scores (0-100); the horizontal axis represents the six AGREE II domains. Legend. Domain 1: Scope and Purpose, Domain 2: Stakeholder Involvement, Domain 3: Rigour of Development, Domain 4: Clarity of Presentation, Domain 5: Applicability, Domain 6: Editorial Independence. ACP: American College of Physicians; APS: American Pain Society; APTA: American Physical Therapy Association; CCGPP: Council on Chiropractic Guidelines and Practice Parameters; KCE: Belgian Health Care Knowledge Centre; NICE: National Institute for Health and Care Excellence. *NICE 2016 was assessed by nine appraisals, but domain scores were available for eight; ACP 2017 was assessed by eight appraisals, but domain scores were available for seven.

Variability of overall assessments 1 and 2

Because of missing data and heterogeneous reporting (e.g., a 0-100 or 1-7 scale for overall assessment 1; raw or final recommendation for use for overall assessment 2), we transparently reported the judgments of the two overall assessments for overlapping CPGs assessed by at least three appraisals in Table 5. For overall assessment 2, perfect agreement was achieved in 5/20 CPG assessments (25%), reporting was heterogeneous in 8/20 (40%), and agreement was incomplete in 7/20 (35%). For quality ratings (high, moderate, low), perfect agreement was achieved in 10/19 (53%), while the remaining 9/19 (47%) did not completely agree.
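The agreement categories used for overall assessment 2 and quality ratings can be sketched as a simple classification of each CPG's judgments. In this hypothetical sketch, each judgment is a (reporting format, category) pair, and mixed formats are flagged as heterogeneous reporting before agreement is checked; for quality ratings, the same format label can be passed for every judgment. The names and labels are assumptions.

```python
from collections import Counter
from typing import Dict, List, Tuple

Judgment = Tuple[str, str]  # (reporting format, category), hypothetical coding


def agreement_status(judgments: List[Judgment]) -> str:
    """Classify the judgments that different appraisals gave one CPG."""
    if len(judgments) < 2:
        return "insufficient data"
    if len({fmt for fmt, _ in judgments}) > 1:
        # e.g. one appraisal reports a raw, another a final recommendation
        return "heterogeneous reporting"
    if len({cat for _, cat in judgments}) == 1:
        return "perfect agreement"
    return "no complete agreement"


def summarize(per_cpg: Dict[str, List[Judgment]]) -> Dict[str, Tuple[int, float]]:
    """Count CPGs per agreement status and give each count as a percentage."""
    counts = Counter(agreement_status(j) for j in per_cpg.values())
    total = sum(counts.values())
    return {status: (n, round(100 * n / total, 1)) for status, n in counts.items()}
```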

Table 6 presents the variability in the most often overlapping CPGs (assessed by at least five appraisals). Overall assessment 1 varied most for KCE 2017 [43] (IQR 23 on a 0-100 scale) and least for NICE 2016 [63] (IQR 9.4 on a 0-100 scale). Agreement in quality ratings was perfect for NICE 2016 [63] (3/3 high quality), APTA 2012 [56] (3/3 low quality), and CCGPP 2016 [55] (2/2 high quality).

Recommended CPGs

Additional file 3 lists the CPGs that can be recommended for clinicians based on: overall assessment 2 (i.e., yes recommendation for use); quality rating (i.e., high); agreement of appraisals that overlapped for the same CPG (i.e., perfect agreement as measured by the ICC); and updated status of publication. Overall, NICE 2016 [63] and CCGPP 2016 [55] ranked first and second, respectively.

Discussion

More than one third of CPGs for LBP have been re-assessed by different appraisals in the last six years. This implies a potential waste of time and resources, since many appraisals assessed the same CPGs. Researchers contemplating an AGREE II appraisal of CPGs for LBP should think carefully before embarking on a new systematic review, and editors should bear in mind that much has already been published. Although the PRISMA [86] and PROSPERO [87, 88] initiatives have been in place for more than 10 years, only about half (53%) of the appraisals were registered as systematic reviews. Nonetheless, substantial or almost perfect agreement in 78% of AGREE II ratings confirmed CPG quality. Agreement was highest for ACP 2017 [47], APTA 2012 [56], and APS 2009 [65], and lowest for NICE 2009 [64], TOP 2017 [53], and ASIPP 2013 [49].

Here we compared similarities and differences across appraisals. A plausible explanation for discrepancies in the degree of agreement on CPGs is that a single AGREE II item can cover several distinct pieces of information. Raters may focus on some aspects more than others because the tool provides no composite weighting for the judgement [55]. In addition, discordance may stem from differences in the availability of, and ease of access to, supplementary content that informs domain judgments. AGREE II does, however, recommend that raters read the CPG document in full, as well as any accompanying documents [9].

Analysis of variability within domains across appraisals of the same CPG showed that the three most variable domains were Domain 6 – Editorial Independence, Domain 5 – Applicability, and Domain 2 – Stakeholder Involvement. Domain 6 items were poorly reported for some CPGs, so potential financial conflicts of interest among CPG developers, stakeholders, and industry could not be ruled out [89]. Conflicts of interest can arise for anyone involved in CPG development (funders, systematic review authors, panel members, patients or their representatives, peer reviewers, researchers) [90] and can bias recommendations, with consequences for patients [91, 92]. Affiliations, member roles, and the management of potential conflicts of interest in the recommendation process must be transparently reported to improve judgment consistency. There is an important difference between declaring an interest and determining and managing a potential conflict of interest [93, 94]. While not all interests constitute a potential conflict, the assessment must be fully described before a decision is taken [95]. Furthermore, inadequate information results in unclear conflict of interest statements, which open the way to subjective judgments and variation in scores for this domain. One solution would be a document that explicitly links interests and conflicts of interest to each CPG recommendation, making the judgment transparent.

Unsurprisingly, Domain 2 – Stakeholder Involvement also varied widely, because it shares the same problem: incomplete description of CPG development groups. This domain broadly assesses how patient values, preferences, and experiences were sought (e.g., patient/public participation in the CPG development group, external review, interviews, or literature review), and these approaches could be perceived as valid alternatives rather than a combination of actions. For example, one would expect patients to sit on an LBP CPG development panel rather than the developers merely consulting the literature on patient values. Direct participation better reflects patient involvement because it influences guideline development, implementation, and dissemination. CPGs developed without patient involvement may ultimately not be acceptable for use [96].

Domain 5 – Applicability has also been found to be poorly and heterogeneously reported for other conditions [5, 97]. One reason for its variability is that the items in this domain often rely on information supplementary to the main guideline document, which may no longer be retrievable, especially if the CPG is outdated. Implementation is not always considered an integral part of CPG development. Without an assessment of CPG uptake (e.g., monitoring/audit, facilitators of and barriers to its application), recommendations may not be fully and adequately translated into clinical practice [5]. In some cases, monitoring is not enough without guidance or solutions to overcome barriers. Balancing these judgments is difficult and may result in variability for this domain.

Finally, because of missing data (overall assessment 1 not reported in 35% of appraisals; overall assessment 2 not reported in 76%) and heterogeneous reporting (1-7 or 0-100 point scales; final or raw recommendation for use), we found it difficult to synthesize agreement and draw implications for clinical practice. Though not required by the AGREE II tool, a quality rating (high, moderate, low) was reported in 53% of appraisals, but agreement on it was perfect for only about half of the overlapping CPGs. Our findings are consistent with a previous study of CPG appraisals in rehabilitation, in which reporting of the two overall assessments was poor and quality ratings for the same CPG ranged from low to high in more than one fourth of appraisals when different cut-offs were applied [21].

In general, variability can be partly explained by the different number of items in each domain, the number of raters, and the subjective rating of AGREE II items, which is prone to leniency and strictness biases [98].

Another factor that could explain variability is suboptimal use of the AGREE II tool: 65% of the CPG appraisals in our sample did not report whether the raters had received training in the use of AGREE II [99], and only 18% involved at least four raters, as recommended in the AGREE II manual [9]. Clinical and methodological competences should always be well balanced among raters and reported, to ensure adherence to high standards. We strongly suggest that appraisals report whether raters received AGREE II training [99]. Problems with the validity of AGREE II appraisals may also arise when training resources (e.g., AGREE II video tutorials; the "My AGREE PLUS" platform) are not consistently updated [100].

Strengths and limitations

This is the first meta-epidemiological study to examine overlap among appraisals applying the AGREE II tool to CPGs for LBP. The sizeable sample of appraisals, encompassing CPGs for LBP prevention, diagnosis, and treatment, supports the external validity of our findings. Nevertheless, some limitations must be noted. Our unit of analysis was the overlapping CPG assessed by at least three appraisals, a sample that included CPGs assessed by up to eight appraisals, which may have increased judgment variability. However, when we conservatively restricted the analysis to CPGs assessed by at least five appraisals, the results showed patterns similar to those in the larger primary sample. We assessed the variability of overall assessments and quality ratings only when the data were available and homogeneously reported; we did not standardize or convert judgments that were reported heterogeneously (e.g., 1-7 or 0-100 point scale; final or raw recommendation for use). Because of this cautious strategy, we could not measure the variability of overall assessments for the whole sample, since the data were missing from 35% (overall assessment 1) to 76% (overall assessment 2) of appraisals. Such rates of poor reporting have been documented before [97, 101, 102], and similar findings were reported for a large sample of CPGs on rehabilitation (35% overall assessment 1 and 58% overall assessment 2) [21].

Implications

We suggest that the time and resources spent on LBP appraisals can be optimized when raters follow the AGREE II manual recommendations for conducting (e.g., number of raters; AGREE II training) and reporting (e.g., overall assessment 2) an appraisal. Before starting a new appraisal, researchers should check academic databases and systematic review registers (e.g., PROSPERO) for published appraisals. Journal editors could also help reduce redundancy by checking submitted manuscripts for compliance with the AGREE II manual and high standards of reporting. Finally, the AGREE Enterprise should invest in promoting more transparent and detailed reporting (i.e., the rationale for judgments on AGREE II domains and overall assessments). Considering all criteria together, namely overall assessment 2 (i.e., a "yes" recommendation for use), quality rating (i.e., high), agreement among appraisals of the same CPG (i.e., perfect agreement), and up-to-date publication status, we found that NICE 2016 [63] and CCGPP 2016 [55] would be of value and benefit to clinicians treating patients with LBP.

We recognize that a CPG has a limited life span, and that time elapses between the systematic search for evidence answering its clinical questions and the publication of the guideline itself [27]. The validity of recommendations more than three years old is often questionable [103].

Conclusion

We found that more than one third of the CPGs in our sample had been re-assessed for quality by multiple appraisals during the last six years. Reporting of recommendations for use (i.e., overall assessment 2) was poor and heterogeneous, leaving their applicability in clinical practice unclear. Clinicians need to be able to rely on high-quality CPGs that are based on updated evidence and whose quality is confirmed by perfect agreement across multiple appraisals.

Availability of data and materials

The datasets generated and analysed during the current study are available in the OSF repository, https://osf.io/rz7nh/.

Abbreviations

- ACOEM: American College of Occupational and Environmental Medicine
- ACP: American College of Physicians
- AGREE II: Appraisal of Guidelines for Research & Evaluation II
- ACR: American College of Radiology
- AOA: American Osteopathic Association
- APS: American Pain Society
- APTA: American Physical Therapy Association
- ASIPP: American Society of Interventional Pain Physicians
- BPS: British Pain Society
- CAAM: China Association of Acupuncture-Moxibustion
- CCGI: Canadian Chiropractic Guideline Initiative
- CI: Confidence Interval
- CPGs: Clinical Practice Guidelines
- CCGPP: Council on Chiropractic Guidelines and Practice Parameters
- DAI: Deutsches Ärzteblatt International
- DHA: Danish Health Authority
- DSA: Dutch Society of Anesthesiologists
- IQR: Interquartile Range
- ICC: Intraclass Correlation Coefficient
- ICSI: Institute for Clinical Systems Improvement
- IRR: Inter-rater reliability
- KCE: Belgian Health Care Knowledge Centre
- KIOM: Korea Institute of Oriental Medicine
- KNGF: Koninklijk Nederlands Genootschap voor Fysiotherapie
- LBP: Low Back Pain
- MSK: Musculoskeletal
- NASS: North American Spine Society
- NICE: National Institute for Health and Care Excellence
- NVL: Nationale Versorgungs Leitlinie
- OA1: Overall assessment 1
- OA2: Overall assessment 2
- OMG: Ottawa Methods Group
- PARM: Philippine Academy of Rehabilitation Medicine
- PEDro: Physiotherapy Evidence Database
- PSP: Polish Society of Physiotherapy
- PRISMA: Preferred Reporting Items for Systematic Reviews and Meta-Analyses
- Q: Quartile
- SIGN: Scottish Intercollegiate Guidelines Network
- SOEGCP: State of Oregon Evidence-based Clinical Guidelines Project
- TOP: Toward Optimized Practice Low Back Pain Working Group
- VADoD: Veterans Affairs/Department of Defense Collaboration Office

References

Hoy D, March L, Brooks P, Blyth F, Woolf A, Bain C, et al. The global burden of low back pain: estimates from the Global Burden of Disease 2010 study. Ann Rheum Dis. 2014;73(6):968–74.

Vrbanic TS. Low back pain–from definition to diagnosis. Reumatizam. 2011;58(2):105–7.

O’Connell NE, Cook CE, Wand BM, Ward SP. Clinical guidelines for low back pain: a critical review of consensus and inconsistencies across three major guidelines. Best Pract Res Clin Rheumatol. 2016;30(6):968–80.

O’Sullivan K, O’Keeffe M, O’Sullivan P. NICE low back pain guidelines: opportunities and obstacles to change practice. Br J Sports Med. 2017;51(22):1632–3.

Alonso-Coello P, Irfan A, Sola I, Gich I, Delgado-Noguera M, Rigau D, et al. The quality of clinical practice guidelines over the last two decades: a systematic review of guideline appraisal studies. Qual Saf Health Care. 2010;19(6):e58.

AGREE Next Steps Consortium. Appraisal of guidelines for research and evaluation II: AGREE II instrument. Available at http://www.agreetrust.org/. 2013.

Brouwers MC, Kho ME, Browman GP, Burgers JS, Cluzeau F, Feder G, et al. AGREE II: advancing guideline development, reporting and evaluation in health care. CMAJ. 2010;182(18):E839-42.

Guyatt G, Vandvik PO. Creating clinical practice guidelines: problems and solutions. Chest. 2013;144(2):365–7.

AGREE Next Steps Consortium. Appraisal of guidelines for research and evaluation II: AGREE II instrument. Available at https://www.agreetrust.org/wp-content/uploads/2017/12/AGREE-II-Users-Manual-and-23-item-Instrument-2009-Update-2017.pdf. 2017.

Schunemann HJ, Wiercioch W, Brozek J, Etxeandia-Ikobaltzeta I, Mustafa RA, Manja V, et al. GRADE Evidence to Decision (EtD) frameworks for adoption, adaptation, and de novo development of trustworthy recommendations: GRADE-ADOLOPMENT. J Clin Epidemiol. 2017;81:101–10.

Fervers B, Burgers JS, Voellinger R, Brouwers M, Browman GP, Graham ID, et al. Guideline adaptation: an approach to enhance efficiency in guideline development and improve utilisation. BMJ Qual Saf. 2011;20(3):228–36.

Van der Wees PJ, Moore AP, Powers CM, Stewart A, Nijhuis-van der Sanden MW, de Bie RA. Development of clinical guidelines in physical therapy: perspective for international collaboration. Phys Ther. 2011;91(10):1551–63.

van Tulder MW, Tuut M, Pennick V, Bombardier C, Assendelft WJ. Quality of primary care guidelines for acute low back pain. Spine. 2004;29(17):E357-62.

Bouwmeester W, van Enst A, van Tulder M. Quality of low back pain guidelines improved. Spine. 2009;34(23):2562–7.

Doniselli FM, Zanardo M, Manfrè L, Papini GDE, Rovira A, Sardanelli F, et al. A critical appraisal of the quality of low back pain practice guidelines using the AGREE II tool and comparison with previous evaluations: a EuroAIM initiative. Eur Spine J. 2018;27(11):2781–90.

Dagenais S, Tricco AC, Haldeman S. Synthesis of recommendations for the assessment and management of low back pain from recent clinical practice guidelines. Spine J. 2010;10(6):514–29.

Meroni R, Piscitelli D, Ravasio C, Vanti C, Bertozzi L, De Vito G, et al. Evidence for managing chronic low back pain in primary care: a review of recommendations from high-quality clinical practice guidelines. Disabil Rehabil. 2019;43(7):1029–43.

Murad MH, Wang Z. Guidelines for reporting meta-epidemiological methodology research. Evid Based Med. 2017;22(4):139–42.

Lawson DO, Puljak L, Pieper D, Schandelmaier S, Collins GS, Brignardello-Petersen R, et al. Reporting of methodological studies in health research: a protocol for the development of the MethodologIcal STudy reportIng Checklist (MISTIC). BMJ Open. 2020;10(12):e040478.

Ouzzani M, Hammady H, Fedorowicz Z, Elmagarmid A. Rayyan-a web and mobile app for systematic reviews. Syst Rev. 2016;5(1):210.

Bargeri S, Iannicelli V, Castellini G, Cinquini M, Gianola S. AGREE II appraisals of clinical practice guidelines in rehabilitation showed poor reporting and moderate variability in quality ratings when users apply different cuff-offs: a methodological study. J Clin Epidemiol. 2021;139:222–31.

Bartko JJ. On various intraclass correlation reliability coefficients. Psychol Bull. 1976;83(5):762–5. https://doi.org/10.1037/0033-2909.83.5.762.

Landis JR, Koch GG. The measurement of observer agreement for categorical data. Biometrics. 1977;33(1):159–74.

StataCorp. Stata Statistical Software: Release 15. College Station: StataCorp LLC; 2017.

Acevedo JC, Sardi JP, Gempeler A. Systematic review and appraisal of clinical practice guidelines on interventional management for low back pain. Revista de la Sociedad Espanola del Dolor. 2016;23(5):243–55.

Anderson DB, Luca KD, Jensen RK, Eyles JP, Van Gelder JM, Friedly JL, et al. A critical appraisal of clinical practice guidelines for the treatment of lumbar spinal stenosis. Spine J. 2021;21(3):455–64.

Castellini G, Iannicelli V, Briguglio M, Corbetta D, Sconfienza LM, Banfi G, et al. Are clinical practice guidelines for low back pain interventions of high quality and updated? A systematic review using the AGREE II instrument. BMC Health Serv Res. 2020;20(1):970.

Corp N, Mansell G, Stynes S, Wynne-Jones G, Morso L, Hill JC, et al. Evidence-based treatment recommendations for neck and low back pain across Europe: a systematic review of guidelines. Eur J Pain. 2021;25(2):275–95.

Doniselli FM, Zanardo M, Manfre L, Papini GDE, Rovira A, Sardanelli F, et al. A critical appraisal of the quality of low back pain practice guidelines using the AGREE II tool and comparison with previous evaluations: a EuroAIM initiative. Eur Spine J. 2018;27(11):2781–90.

Ernstzen DV, Louw QA, Hillier SL. Clinical practice guidelines for the management of chronic musculoskeletal pain in primary healthcare: a systematic review. Implement Sci. 2017;12(1):1.

Franz K, van Wijnen H. Physiotherapeutic clinical guidelines for the management of nonspecific low back pain: literature review. Physioscience. 2015;11(2):53–61.

Hoydonckx Y, Kumar P, Flamer D, Costanzi M, Raja SN, Peng PL, et al. Quality of chronic pain interventional treatment guidelines from pain societies: assessment with the AGREE II instrument. Eur J Pain. 2020;24(4):704–21.

Krenn C, Horvath K, Jeitler K, Zipp C, Siebenhofer-Kroitzsch A, Semlitsch T. Management of non-specific low back pain in primary care - a systematic overview of recommendations from international evidence-based guidelines. Prim Health Care Res Dev. 2020;21:e64.

Lin I, Wiles L, Waller R, Goucke R, Nagree Y, Gibberd M, et al. What does best practice care for musculoskeletal pain look like? Eleven consistent recommendations from high-quality clinical practice guidelines: systematic review. Br J Sports Med. 2020;54(2):79–86.

Ng JY, Mohiuddin U, Azizudin AM. Clinical practice guidelines for the treatment and management of low back pain: a systematic review of quantity and quality. Musculoskelet Sci Pract. 2021;51:102295.

Nordin M, Randhawa K, Torres P, Yu HN, Haldeman S, Brady O, et al. The global spine care initiative: a systematic review for the assessment of spine-related complaints in populations with limited resources and in low- and middle-income communities. Eur Spine J. 2018;27:816–27.

Rathbone T, Truong C, Haldenby H, Riazi S, Kendall M, Cimek T, et al. Sex and gender considerations in low back pain clinical practice guidelines: a scoping review. BMJ Open Sport Exerc Med. 2020;6(1):e000972.

Stander J, Grimmer K, Brink Y. A user-friendly clinical practice guideline summary for managing low back pain in South Africa. S Afr J Physiother. 2020;76(1):1366.

Wong JJ, Cote P, Sutton DA, Randhawa K, Yu H, Varatharajan S, et al. Clinical practice guidelines for the noninvasive management of low back pain: a systematic review by the Ontario Protocol for Traffic Injury Management (OPTIMa) Collaboration. Eur J Pain. 2017;21(2):201–16.

Yaman ME, Gudeloglu A, Senturk S, Yaman ND, Tolunay T, Ozturk Y, et al. A critical appraisal of the North American Spine Society guidelines with the Appraisal of Guidelines for Research and Evaluation II instrument. Spine J. 2015;15(4):777–81.

Ng JY, Mohiuddin U. Quality of complementary and alternative medicine recommendations in low back pain guidelines: a systematic review. Eur Spine J. 2020;29(8):1833–44.

Zhao H, Liu B, Liu Z, Xie L-m, Fang Y-g, Zhu Y, et al. Clinical practice guidelines of using acupuncture for low back pain. World J Acupuncture-moxibustion. 2016;26:1–13.

Van Wambeke P, Desomer A, Ailiet L, Berquin A, Dumoulin C, Depreitere B, et al. Low back pain and radicular pain: assessment and management. KCE Rep. 2017;287.

Thorson D, Campbell R, Massey M, Mueller B, McCathie B, Richards H, et al. Adult Acute and Subacute Low Back Pain. Bloomington: Institute for Clinical Systems Improvement (ICSI); 2018.

Stochkendahl MJ, Kjaer P, Hartvigsen J, Kongsted A, Aaboe J, Andersen M, et al. National clinical guidelines for non-surgical treatment of patients with recent onset low back pain or lumbar radiculopathy. Eur Spine J. 2018;27(1):60–75.

Staal J, Hendriks E, Heijmans M, Kiers H, Lutgers-Boomsma A, Rutten G, et al. KNGF Clinical Practice Guideline for Physical Therapy in patients with low back pain. Amersfoort, The Netherlands: Royal Dutch Society for Physical Therapy (KNGF); 2013.

Qaseem A, Wilt TJ, McLean RM, Forciea MA, Denberg TD, Clinical Guidelines Committee of the American College of Physicians, et al. Noninvasive treatments for acute, subacute, and chronic low back pain: a clinical practice guideline from the American College of Physicians. Ann Intern Med. 2017;166(7):514–30.

Pangarkar SS. VA/DoD clinical practice guideline for diagnosis and treatment of low back pain. 2017.

Manchikanti L, Abdi S, Atluri S, Benyamin RM, Boswell MV, Buenaventura RM, et al. An update of comprehensive evidence-based guidelines for interventional techniques in chronic spinal pain. Part II: guidance and recommendations. Pain Physician. 2013;16(2 Suppl):49–283.

Kreiner DS, Shaffer WO, Baisden JL, Gilbert TJ, Summers JT, Toton JF, et al. An evidence-based clinical guideline for the diagnosis and treatment of degenerative lumbar spinal stenosis (update). Spine J. 2013;13(7):734–43.

Kreiner DS, Hwang SW, Easa JE, Resnick DK, Baisden JL, Bess S, et al. An evidence-based clinical guideline for the diagnosis and treatment of lumbar disc herniation with radiculopathy. Spine J. 2014;14(1):180–91.

Kassolik K, Rajkowska-Labon E, Tomasik T, Pisula-Lewandowska A, Gieremek K, Andrzejewski W, et al. Recommendations of the Polish Society of Physiotherapy, the Polish Society of Family Medicine and the College of Family Physicians in Poland in the field of physiotherapy of back pain syndromes in primary health care. Fam Med Prim Care Rev. 2017;3:323–34.

Toward Optimized Practice Low Back Pain Working Group. Evidence-Informed Primary Care Management of Low Back Pain. Edmonton: Toward Optimized Practice; 2017.

Goertz M, Thorson D, Bonsell J, Bonte B, Campbell R, Haake B, et al. Adult acute and subacute low back pain. Institute for Clinical Systems Improvement. 2012;8.

Globe G, Farabaugh RJ, Hawk C, Morris CE, Baker G, Whalen WM, et al. Clinical practice guideline: chiropractic care for low back pain. J Manipulative Physiol Ther. 2016;39(1):1–22.

Delitto A, George SZ, Van Dillen L, Whitman JM, Sowa G, Shekelle P, et al. Low back pain: clinical practice guidelines linked to the International Classification of Functioning, Disability, and Health from the Orthopaedic Section of the American Physical Therapy Association. J Orthop Sports Phys Ther. 2012;42(4):A1–57.

Chou R, Cote P, Randhawa K, Torres P, Yu H, Nordin M, et al. The Global Spine Care Initiative: applying evidence-based guidelines on the non-invasive management of back and neck pain to low- and middle-income communities. Eur Spine J. 2018;27(Suppl 6):851–60.

Chenot J-F, Greitemann B, Kladny B, Petzke F, Pfingsten M, Schorr SG. Non-specific low back pain. Dtsch Ärztebl Int. 2017;114(51–52):883.

Cheng L, Lau K, Lam W. Evidence-based guideline on prevention and management of low back pain in working population in primary care. Xianggang Uankeyixueyuan Yuekan Hong Kong Pract. 2012;34:106–15.

Bussieres AE, Stewart G, Al-Zoubi F, Decina P, Descarreaux M, Haskett D, et al. Spinal manipulative therapy and other conservative treatments for low back pain: a guideline from the Canadian chiropractic guideline initiative. J Manipulative Physiol Ther. 2018;41(4):265–93.

Brosseau L, Wells GA, Poitras S, Tugwell P, Casimiro L, Novikov M, et al. Ottawa Panel evidence-based clinical practice guidelines on therapeutic massage for low back pain. J Bodyw Mov Ther. 2012;16(4):424–55.

Scottish Intercollegiate Guidelines Network (SIGN). Management of chronic pain. SIGN publication no. 136. Edinburgh: SIGN; 2013.

National Institute for Health and Care Excellence (NICE). Low Back Pain and Sciatica in Over 16s: Assessment and Management. London: NICE; 2016.

Low Back Pain: Early Management of Persistent Non-specific Low Back Pain. London: Royal College of General Practitioners; 2009.

Chou R, Loeser JD, Owens DK, Rosenquist RW, Atlas SJ, Baisden J, et al. Interventional therapies, surgery, and interdisciplinary rehabilitation for low back pain: an evidence-based clinical practice guideline from the American Pain Society. Spine. 2009;34(10):1066–77.

Hegmann KT, Travis R, Belcourt RM, Donelson R, Eskay-Auerbach M, Galper J, et al. Diagnostic tests for low back disorders. J Occup Environ Med. 2019;61(4):e155–68.

Hegmann KT, Travis R, Belcourt RM. Low back disorders. 2016.

Patel ND, Broderick DF, Burns J, Deshmukh TK, Fries IB, Harvey HB, et al. ACR appropriateness criteria low back pain. J Am Coll Radiol. 2016;13(9):1069–78.

Task Force on the Low Back Pain Clinical Practice Guidelines. American Osteopathic Association guidelines for osteopathic manipulative treatment (OMT) for patients with low back pain. J Osteopath Med. 2016;116(8):536–49.

Chou R, Qaseem A, Snow V, Casey D, Cross JT Jr, Shekelle P, et al. Diagnosis and treatment of low back pain: a joint clinical practice guideline from the American College of Physicians and the American Pain Society. Ann Intern Med. 2007;147(7):478–91.

Lee J, Gupta S, Price C, Baranowski A. Low back and radicular pain: a pathway for care developed by the British Pain Society. Br J Anaesth. 2013;111(1):112–20.

Itz CJ, Willems PC, Zeilstra DJ, Huygen FJ. Dutch multidisciplinary guideline for invasive treatment of pain syndromes of the lumbosacral spine. Pain Pract. 2016;16(1):90–110.

Airaksinen O, Brox JI, Cedraschi C, Hildebrandt J, Klaber-Moffett J, Kovacs F, et al. European guidelines for the management of chronic nonspecific low back pain. Eur Spine J. 2006;15(Suppl 2):s192.

Deer TR, Grider JS, Pope JE, Falowski S, Lamer TJ, Calodney A, et al. The MIST guidelines: the Lumbar Spinal Stenosis Consensus Group guidelines for minimally invasive spine treatment. Pain Pract. 2019;19(3):250–74.

Jun JH, Cha Y, Lee JA, Choi J, Choi T-Y, Park W, et al. Korean medicine clinical practice guideline for lumbar herniated intervertebral disc in adults: an evidence based approach. Eur J Integr Med. 2017;9:18–26.

Kreiner DS, Hwang S, Easa J, Resnick DK, Baisden J, Bess S, et al. Diagnosis and treatment of lumbar disc herniation with radiculopathy. NASS clinical guidelines. Burr Ridge: NASS; 2012.

Kreiner DS, Baisden J, Mazanec D, Patel R, Bess RS, Burton D, et al. Diagnosis and Treatment of Adult Isthmic Spondylolisthesis. Evidence-Based Clinical Guidelines for Multidisciplinary Spine Care. North American Spine Society. 2014.

Matz PG, Meagher R, Lamer T, Tontz WL Jr, Annaswamy TM, Cassidy RC, et al. Guideline summary review: an evidence-based clinical guideline for the diagnosis and treatment of degenerative lumbar spondylolisthesis. Spine J. 2016;16(3):439–48.

Bundesärztekammer (BÄK), Kassenärztliche Bundesvereinigung (KBV), Arbeitsgemeinschaft der Wissenschaftlichen Medizinischen Fachgesellschaften (AWMF). Nationale VersorgungsLeitlinie Nicht-spezifischer Kreuzschmerz – Langfassung, 2. Auflage. Version 1. [National Guideline: Non-specific low back pain. Long version: 2nd edition, version 1]. 2017.

Philippine Academy of Rehabilitation Medicine (PARM). Low back pain management guideline. Quezon City: PARM; 2012.

Picelli A, Buzzi MG, Cisari C, Gandolfi M, Porru D, Bonadiman S, et al. Headache, low back pain, other nociceptive and mixed pain conditions in neurorehabilitation. Evidence and recommendations from the Italian Consensus Conference on Pain in Neurorehabilitation. Eur J Phys Rehabil Med. 2016;52(6):867–80.

Livingston C, King V, Little A, Pettinari C, Thielke A, Gordon C. Evidence-based clinical guidelines project. Evaluation and management of low back pain: a clinical practice guideline based on the joint practice guideline of the American College of Physicians and the American Pain Society (diagnosis and treatment of low back pain). Salem: Office for Oregon Health Policy and Research; 2011.

Toward Optimized Practice Program. Evidence-informed primary care management of low back pain: clinical practice guidelines. 3rd ed. Alberta: Institute of Health Economics; 2015. p. 49.

Toward Optimized Practice. Guideline for the Evidence Informed Primary Care Management of Low Back Pain. 2009.

Chiodo A, Alvarez D, Graziano G, Haig A, Harrison R, Park P, et al. Acute low back pain: guidelines for clinical care [with consumer summary]. 2010.

Liberati A, Altman DG, Tetzlaff J, Mulrow C, Gøtzsche PC, Ioannidis JP, et al. The PRISMA statement for reporting systematic reviews and meta-analyses of studies that evaluate health care interventions: explanation and elaboration. J Clin Epidemiol. 2009;62:e1–34.

Booth A, Clarke M, Dooley G, Ghersi D, Moher D, Petticrew M, et al. The nuts and bolts of PROSPERO: an international prospective register of systematic reviews. Syst Rev. 2012;1:2.

Booth A, Clarke M, Ghersi D, Moher D, Petticrew M, Stewart L. An international registry of systematic-review protocols. Lancet. 2011;377(9760):108–9.

Chen Q, Li L, Chen Q, Lin X, Li Y, Huang K, et al. Critical appraisal of international guidelines for the screening and treatment of asymptomatic peripheral artery disease: a systematic review. BMC Cardiovasc Disord. 2019;19(1):17.

Traversy G, Barnieh L, Akl EA, Allan GM, Brouwers M, Ganache I, et al. Managing conflicts of interest in the development of health guidelines. CMAJ. 2021;193(2):49–54.

Norris SL, Holmer HK, Ogden LA, Burda BU. Conflict of interest in clinical practice guideline development: a systematic review. PLoS One. 2011;6(10):e25153.

Shaneyfelt TM, Centor RM. Reassessment of clinical practice guidelines: go gently into that good night. JAMA. 2009;301(8):868–9.

Schunemann HJ, Al-Ansary LA, Forland F, Kersten S, Komulainen J, Kopp IB, et al. Guidelines international network: principles for disclosure of interests and management of conflicts in guidelines. Ann Intern Med. 2015;163(7):548–53.

Alhazzani W, Lewis K, Jaeschke R, Rochwerg B, Moller MH, Evans L, et al. Conflicts of interest disclosure forms and management in critical care clinical practice guidelines. Intensive Care Med. 2018;44(10):1691–8.

6. Declaration and management of interest. In: WHO Handbook for Guideline Development. 2nd ed. Geneva: World Health Organization; 2014. Available: https://apps.who.int/iris/bitstream/handle/10665/145714/9789241548960_eng.pdf (accessed 26 Nov 2019).

Armstrong MJ, Mullins CD, Gronseth GS, Gagliardi AR. Impact of patient involvement on clinical practice guideline development: a parallel group study. Implement Sci. 2018;13(1):55.

Dijkers MP, Ward I, Annaswamy T, Dedrick D, Feldpausch J, Moul A, et al. Quality of rehabilitation clinical practice guidelines: an overview study of AGREE II appraisals. Arch Phys Med Rehabil. 2020;101(9):1643–55.

Cheng KHC, Hui CH, Cascio WF. Leniency bias in performance ratings: the big-five correlates. Front Psychol. 2017;8:521.

AGREE Enterprise. AGREE II training tools. https://www.agreetrust.org/resource-centre/agree-ii/agree-ii-training-tools/. Accessed 29 June 2021.

AGREE Enterprise. Frequently asked questions. https://www.agreetrust.org/about-the-agree-enterprise/frequently-asked-questions/. Accessed 28 June 2021.

Hoffmann-Esser W, Siering U, Neugebauer EA, Brockhaus AC, Lampert U, Eikermann M. Guideline appraisal with AGREE II: Systematic review of the current evidence on how users handle the 2 overall assessments. PLoS One. 2017;12(3):e0174831.

Hoffmann-Esser W, Siering U, Neugebauer EAM, Lampert U, Eikermann M. Systematic review of current guideline appraisals performed with the Appraisal of Guidelines for Research & Evaluation II instrument: a third of AGREE II users apply a cut-off for guideline quality. J Clin Epidemiol. 2018;95:120–7.

Martinez Garcia L, Sanabria AJ, Garcia Alvarez E, Trujillo-Martin MM, Etxeandia-Ikobaltzeta I, Kotzeva A, et al. The validity of recommendations from clinical guidelines: a survival analysis. CMAJ. 2014;186(16):1211–9.

Acknowledgements

The authors thank J.D. Baggott and Kenneth Adolf Britsch for language revision, and the authors of the appraisals who helped us track down missing data.

Funding

The study was supported by the Italian Ministry of Health “Linea 3 – Valutazione della qualità delle attuali linee guida in ortopedia e in riabilitazione” [Line 3: Evaluation of the quality of current guidelines in orthopaedics and rehabilitation]. The funding sources had no controlling role in the study design, data collection, analysis, interpretation or report writing. The registered study protocol is available in the OSF repository (https://osf.io/rz7nh/).

Author information


Contributions

Concept development (providing the idea for the research): SG, GC. Design (planning the methods to generate the results): SG, GC, SB, RM, MC, VI. Supervision: SG, GC, RM, MC. Data collection: SB, VI. Analysis and interpretation (statistics, evaluation and presentation of the results): SB, VI, SG, GC, RM, MC. Writing: SB, SG, GC. Critical review (revising the manuscript for intellectual content, not spelling and grammar checking): SG, GC, SB, RM, MC, VI. All authors read and approved the final manuscript.


Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Additional file 1. Search strategy.

Additional file 2. Exclusion criteria of appraisals.

Additional file 3. List of CPGs recommended for use, with quality ratings, OA2, ICC and publication status.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Gianola, S., Bargeri, S., Cinquini, M. et al. More than one third of clinical practice guidelines on low back pain overlap in AGREE II appraisals. Research wasted? BMC Med Res Methodol 22, 184 (2022). https://doi.org/10.1186/s12874-022-01621-w


DOI: https://doi.org/10.1186/s12874-022-01621-w