Abstract

Background

In everyday life, negative emotions can be implicitly regulated by positive stimuli without any conscious cognitive engagement; however, the effects of such implicit regulation on mood, and the related neural mechanisms, remain poorly investigated in the literature. Improving implicit emotion regulation could nevertheless reduce psychological burden and would therefore be clinically relevant for treating psychiatric disorders with strong affective symptomatology.

Results

Music training reduced the negative emotional state elicited by negative odours. However, this change was not reflected at the brain level.

Conclusions

In a context of affective rivalry, musical training enhances implicit regulatory processes. Our findings provide a first basis for future studies on implicit emotion regulation in clinical populations.


Background

The ability to regulate emotions effectively has a direct impact on our well-being [1, 2]. This ability constantly influences decision-making processes and has considerable consequences for our daily social interactions [3, 4]. Emotion regulation can be understood as either an implicit (automatic, without monitoring or insight) or an explicit (monitored, with conscious effort and awareness) regulatory process acting on internal affective states [5]. From a neurocognitive perspective, this regulation has historically been seen as the result of bottom-up or top-down brain activation processes, mainly describing cortical-subcortical interactions. Both types of process carry salient information about our internal and/or external environment and lead to a certain affective state and associated behavioural reactions [6,7,8,9,10]. More recent views, however, see regulation as a more complex process involving interactions on multiple levels [11, 12].

Indeed, our affective state is often generated and updated by incoming multisensory stimuli that can be of a conflicting nature [13]. In such circumstances, which we have previously referred to as a state of ‘affective rivalry’, we usually face difficulties in regulating and integrating affective states [13]. Difficulties in regulating emotions can converge into dysregulated mood states, which may act as a risk factor for the development of affective disorders such as pathological anxiety and clinical depression [14,15,16]. Accordingly, these difficulties might be particularly pronounced in patients diagnosed with an affective disorder. To eventually aid the treatment of affective disorders, we aim to train adaptive emotion regulation in incongruent emotional states, first in healthy volunteers (the present study) and, in future research, in clinical populations.

In a previous paper, we presented a design that encompassed both multisensory integration and affective rivalry, and investigated at the behavioural and functional brain level how implicit regulatory processes during incongruent emotional situations affected mood in healthy participants [13]. Although positive and negative music as well as pleasant and unpleasant odours are well-established stimuli for independently inducing congruent emotions such as happiness, sadness or disgust [17,18,19,20,21], to our knowledge no study at that time had combined these modalities as rivals to investigate the resulting emotional state. To do so, we created a state of “affective rivalry” in the laboratory using auditory (musical) and olfactory stimuli. We confirmed that negative olfactory and positive auditory stimuli are independently capable of inducing congruent emotional states, and moreover demonstrated that, when a negative olfactory stimulus is paired with a positive auditory stimulus, the olfactory stimulus dominates the emotional response. This result is likely related to the unique “hard-wired” anatomical connections of the olfactory system and its evolutionary salience [22]. By contrast, the affective perception of music is assumed to involve both automatic bottom-up and more cognitively influenced top-down regulation processes [19, 23, 24], and music is capable of inducing strong positive and negative emotions, and even emotionally neutral states [19, 25, 26]. Despite this strong potential to induce affectively valenced states, considerable interindividual variance in this effect has been observed [27, 28]. In this study, we focus on the feeling state induced by emotions rather than on their cognitive labelling, which has been described separately [29, 30].

The results of our previous study support the hypothesis that music can implicitly modulate the subjective experience of an existing negative affective state. The interaction of valenced music and valenced odours represents a regulatory process that is "uninstructed, effortless and proceeds without awareness", which is how implicit emotion regulation can be defined [5]. Implicit emotion regulation is commonly investigated with fear inhibition and emotional conflict paradigms, which are associated with activity in the ventral anterior cingulate and ventromedial prefrontal cortex [5, 6, 31]. To date, however, whether this modulation has long-term effects on mood remains to be investigated, at both the behavioural and the brain level [32]. Such effects, if present, could then help to stabilize dysregulated emotional states. For instance, a musical intervention such as music-listening could be applied both preventively and as an adjunct to standard treatments of affective disorders in order to achieve better emotion regulation outcomes [33].

In the present study, we investigated whether, and to what extent, music-listening was able to implicitly modulate emotion regulation during an affectively rival situation. To address this question, we employed the same affective rivalry design as in our previous study. Specifically, we investigated whether the regulatory effects of positive auditory stimuli on mood induction by an unpleasant odour can be modulated by a three-week musical training, and to what extent such an experience can lead to functional changes at the brain level. We predicted a significant positive effect of the musical intervention on individual mood levels. We anticipated that such a change might reflect improved implicit emotion counter-regulation abilities, probably mediated by changes in activity in brain areas previously associated with implicit emotion regulation or salience processing, such as the (dorsal) anterior cingulate cortex, inferior frontal cortex, amygdala and ventromedial prefrontal cortex [6, 34]. Because we predicted that regular music-listening modulates emotion regulation and can improve counter-regulation abilities, we refer to our intervention as musical training, in the sense of passive music-listening rather than active music performance; musical experience or simply music-listening would also be applicable terms.

Results

Behavioural results

The three ratings are reported in turn: disgust ratings first, followed by music valence ratings and finally ratings of the emotional state. Means and standard errors are reported in Table 1.

Disgust ratings of olfactory stimuli

The two groups did not differ at baseline in disgust rating scores (all p > 0.05). Negative odour stimuli were rated as significantly more disgusting than the neutral odour stimuli (t = 22.375, p < 0.001). Before the training (T0), a significant effect of the negative olfactory stimuli (O) on disgust ratings was found across the sample (F(1,30) = 556.13, p < 0.001, ηp² = 0.95), independently of the occurrence of neutral or positive auditory stimuli (A × O, p > 0.05). No significant effect of the positive auditory stimuli (A) was found (t = −0.764, p = 0.45). At the end of the training (T1), a general effect of time (F(1,30) = 32.55, p < 0.001, ηp² = 0.52) and a significant interaction between negative olfactory stimulation and time (O × T; F(1,30) = 13.27, p = 0.001, ηp² = 0.31) were found. Post hoc paired t-tests indicated reduced disgust toward the negative stimuli across the sample at the second measurement (t = 3.863, p = 0.001). The corresponding interaction for auditory stimuli was not significant (A × T, p > 0.05). The decrease in disgust ratings over time differed significantly between the two groups (T × G; F(1,30) = 4.71, p = 0.038, ηp² = 0.136): the training group generally showed a stronger decrease in disgust across all conditions. The more specific interaction effects (A × O × T, O × T × G and A × O × T × G) were not significant (all p > 0.05), which might be related to a floor effect in the conditions without a disgusting odour (O0). Figure 1a shows an overview of the disgust ratings.

Fig. 1 Results of (a) the disgust ratings of the olfactory stimuli, (b) the valence ratings of the auditory stimuli, and (c) the ratings of the emotional states. The ratings are shown for the first (T0) and second (T1) measurement of the control group (CG), which received no intervention, and the training group (TG), which received a twenty-day musical training between the two measurements. The four conditions are A0O− (neutral auditory combined with negative olfactory stimulation), A0O0 (neutral auditory combined with neutral olfactory stimulation), A+O− (positive auditory combined with negative olfactory stimulation), and A+O0 (positive auditory combined with neutral olfactory stimulation).

Valence ratings of auditory stimuli

The two groups did not differ at baseline in auditory rating scores (all p > 0.05). Across both measurements, the effect of the auditory stimuli (A) on the valence ratings of the music was significant (F(1,30) = 69.31, p < 0.001; ηp² = 0.69). Positive auditory stimuli were rated significantly more positively than neutral auditory stimuli (t = −7.118, p < 0.001). A significant effect of the olfactory stimuli on the valence ratings of the music was also found (F(1,30) = 67.61, p < 0.001; ηp² = 0.69). Specifically, positive auditory stimuli were rated as more pleasant during neutral than during negative olfactory stimulation (t = −5.974, p < 0.001).

No other effects were significant, but two trends were observed. First, there was a trend for a general effect of time (F(1,30) = 3.96, p = 0.056; ηp² = 0.12), with valence ratings of the auditory stimuli tending to increase. Second, there was a trend for an interaction between olfactory stimuli and time (O × T; F(1,30) = 3.93, p = 0.056; ηp² = 0.12), with a stronger increase in valence ratings in conditions with disgusting odours. See Fig. 1b for an overview of the valence ratings of the auditory stimuli.

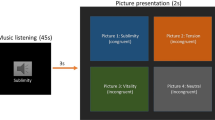

Fig. 2 Schematic visualization of the experimental design. Example trial (duration 32 s), one of the 48 trials presented in the fMRI task. Each trial began with the presentation of an auditory stimulus (duration 16 s, with a fade-in during the first second and a fade-out during the last second). Four olfactory stimuli (duration 1 s each, separated by breaks of 2 s) were administered while the auditory stimulus continued. All four olfactory stimuli were either negative or neutral. The onset of the first olfactory pulse was jittered at random, with a delay of 1.0, 1.5, 2.0, or 2.5 s relative to the beginning of the auditory stimulus, i.e. always after the fade-in period had been completed. The stimulus combination was followed by three ratings (duration 11 s, 3.66 s per rating) and a baseline period (duration 5 s), during which a fixation cross was shown [13].

Ratings of emotional state

The two groups did not differ at baseline in emotional rating scores (all p > 0.05). Overall, significant effects of the olfactory stimuli (F(1,30) = 105.81, p < 0.001; ηp² = 0.78) and of the auditory stimuli (F(1,30) = 45.19, p < 0.001; ηp² = 0.60) on ratings of the individual affective state were found. Under the same auditory condition, the emotional state was rated significantly more negative during negative than during neutral olfactory stimulation (t = −10.176, p < 0.001), while the effect of negative olfactory stimuli on the emotional state was weaker when combined with positive music (A × O; F(1,30) = 7.21, p = 0.012; ηp² = 0.19).

We found a significant general effect of time (F(1,30) = 17.67, p < 0.001; ηp² = 0.37), with emotional well-being increasing across all conditions. Furthermore, the interaction between olfactory stimuli and time was significant (O × T; F(1,30) = 11.91, p = 0.002; ηp² = 0.28), reflecting a stronger increase in well-being at the second measurement in the condition in which a disgusting odour was administered. The interaction between auditory stimuli and time was also significant (A × T; F(1,30) = 13.01, p = 0.001; ηp² = 0.30), with a larger difference between the auditory conditions at the second measurement. Regarding the training, we observed a significant interaction of time and group (T × G; F(1,30) = 10.92, p = 0.002; ηp² = 0.27): only the group with musical training showed an increase in emotion ratings, whereas the no-training group remained stable. Furthermore, we found a trend for an interaction between negative olfactory stimuli, time and group (O × T × G; F(1,30) = 3.38, p = 0.07; ηp² = 0.10), with the control group (CG) feeling emotionally worse than the training group (TG) during negative odours at the second measurement. Despite the small sample size, we also found a trend suggesting that the modulation of the emotional state during negative odours by positive auditory stimuli differed between groups over time (A × O × T × G; F(1,30) = 3.69, p = 0.06; ηp² = 0.11). Indeed, at the second measurement, the musical training group felt significantly better than the control group during the combination of positive auditory stimuli and negative odours (A+O−; t = −3.034, p = 0.005). Figure 1c shows an overview of the ratings of the emotional states.
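The reported effect sizes can be cross-checked against the F values: for an effect with df₁ numerator and df₂ error degrees of freedom, partial eta squared is ηp² = F·df₁ / (F·df₁ + df₂). The short Python sketch below is an illustration, not part of the original analysis; it assumes df = (1, 30) for every effect in the emotional-state analysis (32 participants split into two groups) and recomputes ηp² from the reported F values.

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# (effect, reported F, reported partial eta squared) for the emotional-state ratings.
# Assumption: every effect was tested with df = (1, 30).
REPORTED = [
    ("O", 105.81, 0.78),
    ("A", 45.19, 0.60),
    ("A x O", 7.21, 0.19),
    ("T", 17.67, 0.37),
    ("O x T", 11.91, 0.28),
    ("A x T", 13.01, 0.30),
    ("T x G", 10.92, 0.27),
    ("O x T x G", 3.38, 0.10),
    ("A x O x T x G", 3.69, 0.11),
]

for label, f_value, eta_reported in REPORTED:
    eta = partial_eta_squared(f_value, 1, 30)
    print(f"{label:>14}: recomputed {eta:.3f}, reported {eta_reported:.2f}")
```

All recomputed values agree with the reported ones to two decimals.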

Imaging results

Main effect of positive auditory and negative olfactory stimulation

The main effects of the two stimuli were similar to those reported for the first measurement in our initial study [13]; however, the imaging data revealed no significant group differences after the musical training.

Interaction of auditory and olfactory stimulation before and after the musical training

None of the main effects or differential contrasts revealed a significant interaction with training group.

Discussion

In our previous study, we showed that both auditory and olfactory stimuli are able to generate congruent emotions, but the pattern found during affectively rival stimulation suggested that implicit emotion regulation works unidirectionally when negative olfaction is paired with positive music: the music did not relieve the negative affect caused by the smell, but rather the smell tainted the enjoyment of the music and overall well-being, which makes sense from an evolutionary perspective. We assumed that disgust ratings of the olfactory stimuli and valence ratings of the musical stimuli potentially reflect lower-level (e.g. generation) emotional perception of the stimuli, while thinking about and judging one's current emotional state reflects higher-order regulatory processes. Relations with behavioural ratings found in the previous study indicated that modulation of brain activation in the areas identified there may also guide the conscious labelling of one's emotional state and of the valence of external stimuli. Thus, as discussed earlier, these areas may link integrative brain activation to emotional experience and therefore play a central role in implicit emotion regulation [13].

In the present training study, we investigated whether a three-week musical training can enhance implicit emotion regulation abilities on mood within an affective rivalry paradigm using auditory and olfactory stimuli. We found that the musical training induced a change in the subjective affective state, in line with our initial hypothesis: only participants who received musical training reported a less negative affective state when negative odours were paired with positive music. This effect suggests that regular music-listening might increase implicit emotion regulation abilities when unpleasant, emotionally conflicting contexts occur. Passive listening to classical music twice per day might have modulated attention towards the musical stimuli presented during the experiment. If the musical stimuli drew attention more strongly, this might have had a ‘protective’ function against the impact of the negative olfactory stimulus. The training might therefore have led to a stronger implicit regulatory effect of positive auditory stimulation on the evaluation of the subjective affective state in this group. However, the training did not induce any change at the whole-brain level. Nor did we find any change in the activity of brain areas typically involved in emotion regulation processes, such as the dorsolateral prefrontal cortex (DLPFC; e.g. attentional processes), or in areas that signal the need to regulate per se (ventrolateral prefrontal cortex; VLPFC) [34]. Despite these null results at the whole-brain level, the behavioural effect found after the training indicates an enhanced implicit regulatory mechanism in a context of daily-life affective rivalry.
These results are in line with those of systematic music therapy studies in clinical populations [35,36,37,38]. However, to the authors’ knowledge, no study to date has investigated the effects of musical training on implicit emotion regulation processes in a context of affective rivalry. Whether changes in brain function following musical training lead to enhanced implicit emotion regulation skills remains to be investigated. One possible explanation for the incongruence between behavioural and brain findings is that the duration and/or intensity of our musical training was insufficient to induce functional changes at the brain level, or that the design was not sufficiently powered to detect subtle changes in brain activity in interaction with training and stimulation. This would be in line with recent results from a music-based emotion regulation momentary assessment study in distressed participants, which indicate a significant music-related improvement in emotion regulation abilities only after two months of musical intervention (and not before) [39]. Another possible explanation relates to the nature of implicit emotion regulatory changes per se. Emotion regulation processes might change over the course of the musical training, leading participants to develop more conscious strategies of emotion regulation (for instance, shifting from implicit to more explicitly controlled strategies) that we perhaps could not capture with this specific fMRI paradigm, which is principally designed to investigate implicit emotion regulation processes during the paired presentation of the stimuli [40]. Finally, we cannot exclude that the behavioural changes were triggered by ‘social desirability’ effects on subjective affective ratings: participants in the training group, although not informed, may have assumed a purpose behind the training, and such an assumption may have influenced their affective ratings.
In summary, the present study represents a first attempt to investigate the neurocognitive mechanisms of implicit emotion regulation during affective rivalry and offers a first basis for similar future neuroimaging studies in clinical populations [41].

Limitations

We did not assess subjective ratings of unpaired auditory and olfactory stimuli; thus, we cannot be certain of the valence of these stimuli on their own. Nevertheless, we would argue that pairing with neutral stimulation can serve as a reasonable control condition and that, especially for positive music (paired with non-scented air), the values reflect unbiased reports. Furthermore, we cannot rule out that the differences rather reflect a valence effect, that is, that negative stimulation wins over positive stimulation. Our data cannot refute this possibility, which poses an interesting question for future research.

Some participants may have practised music as amateurs for many years, ending more than one year before the study. This may have influenced the impact of the musical excerpts on their emotional state. Because we used very well-known musical excerpts, former amateurs may have been more familiar with them and with musical stimuli in general; this might diminish the effect of the music-listening training (as they were already trained beforehand) and thus reduce the experimental effect for those individuals. One year of musical inactivity is unlikely to wash out many years of training during childhood and adolescence, as musical training effects are known to persist [42, 43].

Although the auditory stimuli used in this study have been shown to induce affect-congruent emotions, participant-selected musical pieces might lead to a stronger effect, which could be a topic for future research (see also Blood et al. 2001 [44]).

Conclusions

In this study, we show that a three-week musical training leads to a more positive affective state when negative odours are paired with positive music. However, the three-week musical training was not associated with significant functional brain changes. These results suggest that implicit emotion regulation processes can be positively modulated by regular music-listening practice, which might, however, only marginally influence related brain activation.

Methods

Participants and general procedure

Thirty-two volunteers participated in the experiment, divided into a music intervention group (n = 16, mean age = 24.93 years, SEM = 0.80) and a non-intervention control group (n = 16, mean age = 25.25 years, SEM = 0.76). The mean educational level was 13 years of school (SEM = 0.1) in both the intervention group (SEM = 0.1) and the non-intervention group (SEM = 0.06). The participants and the task were identical to those of our previous study on affective rivalry [13], in which we analysed only the first measurement, with groups collapsed. The participants did not listen to music for more than 60 min per day, had not played or practised any musical instrument in the last 12 months, and had never been professional musicians. All participants were right-handed and had no history of neurological or psychiatric disorders or severe head trauma, and no known abnormalities in olfactory or auditory function, as assessed with the semi-structured interview SKIDPIT [45].

Each participant was tested twice: once at the beginning of the experiment and again 21 days after the first measurement. The experimental procedures and task were identical in the two testing sessions. During each of the two fMRI measurements, we exposed the participants to unpleasant odours delivered with an olfactometer, together with auditory stimulation. Participants were instructed to listen actively to the pieces of music but were not asked to perform any kind of cognitive mood regulation. The music intervention group completed a twenty-day musical training between the two measurements. Participants in both groups were not informed about the aim of the study and were asked not to change their behavioural patterns during the experiment.

Musical training

The music intervention, or musical training, consisted of passively listening to a selection of classical music twice a day, in the morning and in the evening, for about 15 min per session. The music was selected based on previous studies that have shown its efficacy in inducing positive emotional states [19, 41, 46,47,48,49,50,51,52] (Table 2). The music pieces were combined into 20 sessions of approximately 15 min; to maintain a high level of variety, only a few shorter pieces occurred more than once (at most twice). The sequence of the 20 morning sessions was randomised for each participant, and the reverse sequence was used for the evening sessions.
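The counterbalancing rule for the training sessions can be made concrete with a small Python sketch. It assumes that the 20 playlists are identified by the numbers 1 to 20 and that "reverse sequence" means the evening order is the participant's morning order read backwards, so that each playlist is heard once in the morning and once in the evening over the 20 days; the per-participant seed and the function name are hypothetical.

```python
import random

N_SESSIONS = 20  # number of ~15-min playlists, as stated in the text


def training_schedule(seed: int) -> list[tuple[int, int, int]]:
    """Return (day, morning_playlist, evening_playlist) for one participant.

    Assumption: the evening order is the morning order reversed.
    """
    rng = random.Random(seed)  # hypothetical per-participant seed
    morning = list(range(1, N_SESSIONS + 1))
    rng.shuffle(morning)  # independent random order for each participant
    evening = morning[::-1]
    return [(day + 1, m, e) for day, (m, e) in enumerate(zip(morning, evening))]


for day, m, e in training_schedule(seed=7)[:3]:
    print(f"day {day}: morning playlist {m}, evening playlist {e}")
```

Under this reading, the playlist heard on the first morning is the one heard on the last evening, which spreads each playlist's two exposures across the training period.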

The music was provided online through an internet server and additionally on a CD in case no internet connection was available. Each participant logged in with an individual username/password combination within a defined time window in the morning and in the evening, and the sessions were time-logged. The participants were explicitly asked not to do anything else while listening to the music, and at the end of each session they answered two pro forma questions about the music to maintain high levels of attention during the sessions.

Auditory stimuli

The auditory stimuli were generated from musical scales and pieces previously shown to induce positive or neutral emotional states, which were then evaluated and selected in a separate pre-study with an independent set of subjects. Based on the ratings in the pre-study, a set of 8 positive (Table 2) and 4 neutral auditory pieces (1. C major scale ascending, 2. C major scale descending, 3. Adagio from Mozart’s Clarinet Concerto and 4. Allegro moderato from Beethoven’s Piano Concerto No. 4) was selected for presentation during the fMRI task. The volume of the sequences was levelled and adjusted individually for each participant before the experiment to ensure that the sound was clearly perceivable. Details about the pre-study and the generation of the auditory stimuli are presented in our previous study [13]. All excerpts used as positive auditory stimuli were taken from the music pieces selected for the training.

Olfactory stimuli

The participants were exposed to one olfactory condition (the unpleasant odour hydrogen sulphide, H2S, in nitrogen) and a neutral baseline condition, during which the regular ambient airflow was held constant with no odour superimposed [13]. Odours were applied unirhinally to the right nostril, which has been reported to yield better BOLD responses in several studies [53,54,55]. Stimuli were presented with a Burghart OM4 olfactometer [56].

fMRI task and valence ratings

One session included 48 blocks. In each block, the auditory stimuli were presented in combination with the olfactory stimuli during the first 16 s, followed by three valence ratings with a total duration of 11 s and finally a baseline period of 5 s (Fig. 2). For the valence ratings, participants indicated on a 5-point scale a) how disgusting they found the smell (0 = not at all to 5 = extremely), b) how they rated the music (0 = very negative to 5 = very positive; 2.5 = neutral), and c) how they currently felt (0 = very bad to 5 = very good; 2.5 = neutral). A fixation cross was visible during stimulus presentation and the baseline period. We assumed that disgust ratings of the olfactory stimuli and valence ratings of the musical stimuli potentially reflect conceptually lower-level (e.g. generation) emotional perception of the stimuli, while thinking about and judging one's current emotional state reflects conceptually higher-order regulatory processes.

The auditory stimuli (A) were either neutral (A0) or positive (A+) and were faded in linearly during the first second and faded out linearly during the last second.
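The linear fade can be written as a gain envelope over the 16-s stimulus: the gain rises from 0 to 1 during the first second, stays at 1, and falls back to 0 during the last second. The Python sketch below assumes the gain is a function of time in seconds from stimulus onset; the text does not state how the fade was actually implemented (for example, pre-rendered in the audio files or applied at playback).

```python
STIM_DURATION_S = 16.0  # auditory stimulus length
FADE_S = 1.0  # duration of the linear fade-in and fade-out


def fade_gain(t: float, duration: float = STIM_DURATION_S, fade: float = FADE_S) -> float:
    """Linear amplitude gain at time t (s) after onset for a stimulus with linear fades."""
    if t < 0.0 or t > duration:
        return 0.0  # outside the stimulus
    # Minimum of the rising ramp, the falling ramp and full volume.
    return min(1.0, t / fade, (duration - t) / fade)


print([fade_gain(t) for t in (0.0, 0.5, 1.0, 8.0, 15.5, 16.0)])
# [0.0, 0.5, 1.0, 1.0, 0.5, 0.0]
```

Because the first odour pulse starts at least 1.0 s after music onset, every pulse falls within the full-volume part of this envelope.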

Simultaneously, either normal air (neutral, O0) or H2S (negative, O−) was presented as the olfactory condition (O). To minimise habituation effects, H2S was applied in 4 pulses of 1 s each, separated by breaks of 2 s, a procedure that has been successfully applied in previous studies [17, 57, 58]. The onset of the first olfactory pulse was jittered at random relative to the beginning of each auditory stimulus. Visual presentation in the scanner, including the ratings, was controlled with the software Presentation (Neurobehavioral Systems Inc., Berkeley, CA, USA). Participants gave their responses by moving a cursor to the desired location via button boxes (LumiTouch, Photon Control, Burnaby, Canada). Each of the four stimulus combinations (A0O−, A0O0, A+O−, A+O0) was presented 12 times in randomised order. The same procedures were applied during the second fMRI measurement 21 days later.
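The trial structure can be summarised as a schedule generator: 12 repetitions of each of the four stimulus combinations in random order, 32-s trials (16 s stimulation, 11 s ratings, 5 s baseline), and four 1-s odour pulses separated by 2-s breaks, with the first pulse delayed by 1.0, 1.5, 2.0 or 2.5 s after music onset (values from the design figure). This Python sketch is illustrative only: the experiment itself was run in Presentation, and the names, the seeding and the assumption that the jitter is drawn independently and uniformly per trial are ours.

```python
import random
from dataclasses import dataclass

CONDITIONS = ["A0O-", "A0O0", "A+O-", "A+O0"]
REPS_PER_CONDITION = 12
STIM_S, RATING_S, BASELINE_S = 16.0, 11.0, 5.0  # trial phases in seconds
TRIAL_S = STIM_S + RATING_S + BASELINE_S  # 32 s per trial
JITTERS_S = [1.0, 1.5, 2.0, 2.5]  # delay of the first odour pulse after music onset
N_PULSES, PULSE_S, BREAK_S = 4, 1.0, 2.0


@dataclass
class Trial:
    onset: float  # music onset relative to session start (s)
    condition: str
    pulse_onsets: list[float]  # odour pulse onsets (s); H2S for O-, plain air for O0


def make_session(seed: int) -> list[Trial]:
    """Hypothetical session: randomised condition order with jittered odour pulses."""
    rng = random.Random(seed)
    order = CONDITIONS * REPS_PER_CONDITION
    rng.shuffle(order)
    trials = []
    for i, condition in enumerate(order):
        onset = i * TRIAL_S
        first = onset + rng.choice(JITTERS_S)  # assumed uniform per-trial jitter
        pulses = [first + k * (PULSE_S + BREAK_S) for k in range(N_PULSES)]
        trials.append(Trial(onset, condition, pulses))
    return trials


session = make_session(seed=1)
print(len(session), session[0])
```

With these timings the last pulse ends at most 12.5 s after music onset, i.e. before the fade-out begins at 15 s.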

fMRI data acquisition

Functional imaging was performed on a 3 T Trio MR scanner (Siemens Medical Systems, Erlangen, Germany) using echo-planar imaging (EPI) sensitive to BOLD contrast (whole brain, T2*, voxel size: 3.4 × 3.4 × 3.3 mm³, matrix size 64 × 64, field of view [FoV] = 220 × 220 mm², 36 axial slices, slice gap = 0.3 mm, ascending acquisition, echo time [TE] = 30 ms, repetition time [TR] = 2 s, flip angle [α] = 77°, approx. 780 volumes, slice orientation: AC-PC).
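A quick arithmetic check of these parameters, using only numbers given in the text: 48 trials of 32 s amount to 1536 s of task, i.e. 768 volumes at TR = 2 s, slightly below the approximately 780 volumes acquired (the text does not specify what the additional volumes covered); and a 220-mm field of view sampled on a 64 × 64 matrix gives an in-plane voxel size of about 3.4 mm.

```python
N_TRIALS = 48
TRIAL_S = 32.0  # s per trial (16 s stimulation + 11 s ratings + 5 s baseline)
TR_S = 2.0  # repetition time, s
FOV_MM = 220.0  # in-plane field of view, mm
MATRIX = 64  # in-plane matrix size

task_s = N_TRIALS * TRIAL_S
task_volumes = task_s / TR_S
in_plane_mm = FOV_MM / MATRIX

print(f"task duration: {task_s:.0f} s ({task_s / 60:.1f} min)")
print(f"volumes covering the task: {task_volumes:.0f}")
print(f"in-plane voxel size: {in_plane_mm:.3f} mm")
```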

Statistical data analysis

Behavioural data

All analyses were performed with IBM SPSS Statistics (version 24). Repeated-measures analyses of variance (rmANOVA) were calculated for the three dependent variables (disgust, music valence and emotional state ratings) using two factors: auditory stimulation (two levels: A0, A+) and olfactory stimulation (two levels: O0, O−). This model yielded a 2 × 2 design per session. To investigate the effect of the musical training on the subjective affective state, two factors were added: time (T) as a within-subjects factor with two levels (T0: before training, T1: after training), and group (G) as a between-subjects factor with two levels (control group, CG: no musical training; training group, TG: with musical training). Thus, a 2 × 2 × 2 × 2 repeated-measures ANOVA was implemented in SPSS. Significant interaction effects were decomposed by post hoc paired-sample t-tests where applicable. Greenhouse–Geisser corrected p-values were used. Note that SPSS implements a mixed-effects model that accounts for subject as a random effect.
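Because every within-subject factor in this design has only two levels, each within-subject effect, and each interaction among within-subject factors, has one numerator degree of freedom and reduces to a single contrast score per participant; sphericity therefore holds trivially for these tests (Greenhouse–Geisser ε = 1). The mixed-ANOVA F for such an effect, and for its interaction with group, can then be computed from the contrast scores alone. The stdlib-only Python sketch below illustrates this equivalence for a balanced two-group design (16 participants per group here); it is not the SPSS procedure used in the study, and the contrast scores (for example, for O × T, the T1 minus T0 change in the O− minus O0 difference, averaged over auditory conditions) are assumed to be precomputed.

```python
from statistics import mean


def mixed_anova_contrast(group1: list[float], group2: list[float]) -> dict[str, float]:
    """F tests for a 1-df within-subject contrast in a balanced two-group design.

    Each list holds one contrast score per participant. Returns F for the
    within-subject effect (mean contrast differs from 0) and for its interaction
    with group (group means differ), both on df = (1, N - 2).
    """
    if len(group1) != len(group2):
        raise ValueError("this sketch assumes equal group sizes")
    n = len(group1)
    n_total = 2 * n
    m1, m2 = mean(group1), mean(group2)
    grand = (m1 + m2) / 2
    # Pooled within-group sum of squares of the contrast scores (the error term).
    ss_error = sum((d - m1) ** 2 for d in group1) + sum((d - m2) ** 2 for d in group2)
    df_error = n_total - 2
    ms_error = ss_error / df_error
    f_within = n_total * grand ** 2 / ms_error
    f_within_x_group = n * ((m1 - grand) ** 2 + (m2 - grand) ** 2) / ms_error
    return {
        "F_within": f_within,
        "F_within_x_group": f_within_x_group,
        "df_error": float(df_error),
    }


print(mixed_anova_contrast([1.0, 3.0], [2.0, 4.0]))
```

For the toy input above, the function returns F_within = 12.5 and F_within_x_group = 0.5 on df = (1, 2). The interaction F equals the squared pooled-variance two-sample t statistic on the same contrast scores, which is why a group comparison of per-participant differences tests a time × group interaction.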

fMRI data processing

The functional and anatomical images were preprocessed and analysed using the FMRIB Software Library (FSL; Oxford Centre for Functional Magnetic Resonance Imaging of the Brain, University of Oxford, UK; www.fmrib.ox.ac.uk/fsl) [59]. The first three volumes of each functional time series were discarded to exclude non-steady-state magnetization effects and initial transient signal changes. Functional images were spatially smoothed with a Gaussian kernel of 5 mm full width at half maximum (FWHM) to reduce inter-subject variability. Further preprocessing steps included three-dimensional movement correction using FMRIB’s Linear Image Registration Tool (MCFLIRT) [60]. In addition, Independent Component Analysis based Automatic Removal of Motion Artifacts (ICA-AROMA) was used for denoising [61, 62]: AROMA classifies independent components as motion-related noise and (non-aggressively) regresses the time courses of these components out of the data. Subsequently, a high-pass filter with a cutoff of 100 s was applied. Prior to group analyses, individual functional images were normalised to MNI space in a two-step procedure combining linear and non-linear registration.
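Two unit conversions are implicit in these settings. A Gaussian kernel with FWHM = 5 mm has a standard deviation σ = FWHM / (2√(2 ln 2)) ≈ 2.12 mm. A 100-s high-pass cutoff at TR = 2 s corresponds to a filter sigma of 25 volumes if, as we understand FEAT's convention, the sigma is set to cutoff / (2 · TR); treat that convention as an assumption. The Python sketch below only does the arithmetic and does not call FSL.

```python
import math


def fwhm_to_sigma(fwhm: float) -> float:
    """Standard deviation of a Gaussian kernel with the given full width at half maximum."""
    return fwhm / (2.0 * math.sqrt(2.0 * math.log(2.0)))


def highpass_sigma_volumes(cutoff_s: float, tr_s: float) -> float:
    """High-pass filter sigma in volumes, assuming sigma = cutoff / (2 * TR)."""
    return cutoff_s / (2.0 * tr_s)


print(f"smoothing kernel sigma: {fwhm_to_sigma(5.0):.2f} mm")
print(f"high-pass filter sigma: {highpass_sigma_volumes(100.0, 2.0):.1f} volumes")
```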

For the first-level analysis, four explanatory variables (EVs; A0O−, A0O0, A+O−, A+O0) were defined in the general linear model (block design). Each block was modelled as lasting 16 s (from onset to end) and labelled according to the underlying combination of olfactory and auditory stimuli. The onset functions and durations were convolved with the canonical hemodynamic response function (HRF). Additional nuisance regressors for instructions and any displays of no interest, as well as for possible confounding order effects in the presentation of olfactory/auditory stimuli between the first (T0) and second (T1) fMRI session, were added as covariates of no interest. Contrasts were built from the resulting parameter estimates. They reflected the main effect per condition (A0O−, A0O0, A+O−, A+O0), the main effects of positive auditory and negative olfactory stimulation (A+ and O−), as well as differential effects (positive minus neutral auditory and negative minus neutral olfactory stimulation). In a fixed-effects analysis per subject, the repeated measurements for all these contrasts were modelled at an intermediate level (average effect collapsed over conditions and difference between the measurement time points). The parameter estimates from this intermediate level were entered into a two-sample t-test at the group level.
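To illustrate the block model, the Python sketch below builds a 16-s boxcar regressor, convolves it with a double-gamma HRF on a fine time grid and samples it once per TR (2 s). The HRF used here is the common SPM-style double gamma (peak gamma with shape 6, undershoot gamma with shape 16, undershoot ratio 1/6) as a stand-in; FSL's own double-gamma implementation may differ in detail. The contrast weights assume the EV order A0O−, A0O0, A+O−, A+O0 and are our illustration of the differential contrasts and of the A × O interaction, not values taken from the study's design files.

```python
import math

TR_S = 2.0  # repetition time in seconds, as reported

# Illustrative contrast weights; EV order assumed: A0O-, A0O0, A+O-, A+O0.
CONTRASTS = {
    "A+ > A0": [-1, -1, 1, 1],
    "O- > O0": [1, -1, 1, -1],
    "A x O": [-1, 1, 1, -1],  # (A+O- minus A+O0) minus (A0O- minus A0O0)
}


def double_gamma_hrf(t: float) -> float:
    """SPM-style double-gamma HRF: gamma(6) peak minus gamma(16) undershoot scaled by 1/6."""
    if t <= 0.0:
        return 0.0
    peak = t ** 5 * math.exp(-t) / math.gamma(6)
    undershoot = t ** 15 * math.exp(-t) / math.gamma(16)
    return peak - undershoot / 6.0


def block_regressor(onsets_s: list[float], duration_s: float, n_vols: int,
                    tr_s: float = TR_S, dt: float = 0.1,
                    hrf_len_s: float = 32.0) -> list[float]:
    """Boxcar (1 inside each block) convolved with the HRF, sampled once per TR."""
    n_fine = int(round(n_vols * tr_s / dt))
    box = [0.0] * n_fine
    for onset in onsets_s:
        start = int(round(onset / dt))
        stop = min(n_fine, int(round((onset + duration_s) / dt)))
        for i in range(start, stop):
            box[i] = 1.0
    kernel = [double_gamma_hrf(k * dt) * dt for k in range(int(round(hrf_len_s / dt)))]
    # Discrete causal convolution on the fine time grid.
    conv = [
        sum(box[i - k] * kernel[k] for k in range(min(i + 1, len(kernel))))
        for i in range(n_fine)
    ]
    step = int(round(tr_s / dt))
    return [conv[j * step] for j in range(n_vols)]


# Two 16-s blocks of one condition starting at 0 s and 32 s, sampled over 40 volumes.
regressor = block_regressor([0.0, 32.0], 16.0, n_vols=40)
print([round(v, 3) for v in regressor[:12]])
```

Each contrast vector sums to zero, so it compares conditions rather than testing overall activation against baseline.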

In this way, we examined group differences in the main effects of conditions, in the differential contrasts, and in their interactions. Of these contrasts, the time × group interaction on brain activity for condition A+O− most directly tested the hypothesis that training interacts with automatic emotion regulation. Furthermore, group differences in the interaction terms would indicate a modulation of integration and appraisal processes.
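The time × group interaction can be sketched in the same spirit: per subject, the two sessions are reduced to a mean and a change score (T1 − T0), and the change scores are compared between the two groups with a two-sample t-test. This is a plain ordinary-least-squares illustration with made-up numbers and hypothetical function names; FSL's group-level modelling (e.g. FLAME) additionally carries lower-level variance estimates, which this sketch ignores.

```python
import math

import numpy as np


def intermediate_level(cope_t0, cope_t1):
    """Per-subject summary of one contrast measured at T0 and T1:
    returns (mean across sessions, change T1 - T0)."""
    t0 = np.asarray(cope_t0, dtype=float)
    t1 = np.asarray(cope_t1, dtype=float)
    return (t0 + t1) / 2.0, t1 - t0


def two_sample_t(x, y):
    """Student's two-sample t statistic (pooled variance) and its degrees of freedom."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    nx, ny = x.size, y.size
    pooled = ((nx - 1) * x.var(ddof=1) + (ny - 1) * y.var(ddof=1)) / (nx + ny - 2)
    t = (x.mean() - y.mean()) / math.sqrt(pooled * (1.0 / nx + 1.0 / ny))
    return t, nx + ny - 2


# Made-up per-subject contrast estimates for A+O- (illustration only, not study data).
_, change_training = intermediate_level([0.0, 1.0, 2.0], [1.0, 3.0, 5.0])
_, change_control = intermediate_level([1.0, 1.0, 1.0], [5.0, 6.0, 7.0])
t, df = two_sample_t(change_training, change_control)
print(round(t, 3), df)  # -3.674 4
```

Testing the group difference in the change scores is equivalent to testing the time × group interaction, because the interaction is exactly the difference between groups in (T1 − T0).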

Availability of data and materials

The datasets generated and/or analysed during the current study are not publicly available because participants did not give consent for data sharing. When ethics approval for the current study was requested, data sharing was not yet standard practice. The ethics committee of the Medical School, RWTH Aachen University, Germany, subsequently decided that data may not be shared if participants were not asked to consent to data sharing.

Abbreviations

- ANOVA: Analysis of variance
- AROMA: Automatic Removal of Motion Artifacts
- BOLD: Blood oxygen level dependent
- CD: Compact disc
- DLPFC: Dorsolateral prefrontal cortex
- e.g.: Exempli gratia
- EPI: Echo-planar imaging
- EV: Explanatory variables
- fMRI: Functional magnetic resonance imaging
- FMRIB: Functional Magnetic Resonance Imaging of the Brain
- FSL: FMRIB Software Library
- HRF: Hemodynamic response function
- ICA: Independent Component Analysis
- MCFLIRT: Movement Correction using FMRIB’s Linear Image Registration Tool
- MNI: Montreal Neurological Institute
- rmANOVA: Repeated measures analysis of variance
- SPSS: Statistical Product and Service Solutions
- VLPFC: Ventrolateral prefrontal cortex

References

De Castella K, Goldin P, Jazaieri H, Ziv M, Dweck CS, Gross JJ. Beliefs about emotion: links to emotion regulation, well-being, and psychological distress. Basic Appl Soc Psychol. 2013;35(6):497–505.

Gross JJ, John OP. Individual differences in two emotion regulation processes: implications for affect, relationships, and well-being. J Pers Soc Psychol. 2003;85(2):348–62.

Berking M, Wupperman P. Emotion regulation and mental health: recent findings, current challenges, and future directions. Curr Opin Psychiatry. 2012;25(2):128–34.

Eftekhari A, Zoellner LA, Vigil SA. Patterns of emotion regulation and psychopathology. Anxiety Stress Coping. 2009;22(5):571–86.

Gyurak A, Gross JJ, Etkin A. Explicit and implicit emotion regulation: a dual-process framework. Cogn Emot. 2011;25(3):400–12.

Etkin A, Buchel C, Gross JJ. The neural bases of emotion regulation. Nat Rev Neurosci. 2015;16(11):693–700.

Gross JJ. Antecedent- and response-focused emotion regulation: divergent consequences for experience, expression, and physiology. J Pers Soc Psychol. 1998;74(1):224–37.

Kohn N, Kellermann T, Gur RC, Schneider F, Habel U. Gender differences in the neural correlates of humor processing: implications for different processing modes. Neuropsychologia. 2011;49(5):888–97.

Mauss IB, Cook CL, Cheng JY, Gross JJ. Individual differences in cognitive reappraisal: experiential and physiological responses to an anger provocation. Int J Psychophysiol. 2007;66(2):116–24.

Ochsner KN, Ray RR, Hughes B, McRae K, Cooper JC, Weber J, Gabrieli JD, Gross JJ. Bottom-up and top-down processes in emotion generation: common and distinct neural mechanisms. Psychol Sci. 2009;20(11):1322–31.

Morawetz C, Riedel MC, Salo T, Berboth S, Eickhoff SB, Laird AR, Kohn N. Multiple large-scale neural networks underlying emotion regulation. Neurosci Biobehav Rev. 2020;116:382–95.

Smith R, Lane RD. The neural basis of one’s own conscious and unconscious emotional states. Neurosci Biobehav Rev. 2015;57:1–29.

Berthold-Losleben M, Habel U, Brehl AK, Freiherr J, Losleben K, Schneider F, Amunts K, Kohn N. Implicit affective rivalry: a behavioral and fMRI study combining olfactory and auditory stimulation. Front Behav Neurosci. 2018;12:313.

Aldao A, Nolen-Hoeksema S, Schweizer S. Emotion-regulation strategies across psychopathology: a meta-analytic review. Clin Psychol Rev. 2010;30(2):217–37.

Cisler JM, Olatunji BO. Emotion regulation and anxiety disorders. Curr Psychiatry Rep. 2012;14(3):182–7.

Joormann J, Stanton CH. Examining emotion regulation in depression: a review and future directions. Behav Res Ther. 2016;86:35–49.

Habel U, Koch K, Pauly K, Kellermann T, Reske M, Backes V, Seiferth NY, Stocker T, Kircher T, Amunts K, et al. The influence of olfactory-induced negative emotion on verbal working memory: individual differences in neurobehavioral findings. Brain Res. 2007;1152:158–70.

Krumhansl CL. An exploratory study of musical emotions and psychophysiology. Can J Exp Psychol. 1997;51(4):336–53.

Mitterschiffthaler MT, Fu CH, Dalton JA, Andrew CM, Williams SC. A functional MRI study of happy and sad affective states induced by classical music. Hum Brain Mapp. 2007;28(11):1150–62.

Seubert J, Rea AF, Loughead J, Habel U. Mood induction with olfactory stimuli reveals differential affective responses in males and females. Chem Senses. 2008;34(1):77–84.

Vernet-Maury E, Alaoui-Ismaili O, Dittmar A, Delhomme G, Chanel J. Basic emotions induced by odorants: a new approach based on autonomic pattern results. J Auton Nerv Syst. 1999;75(2–3):176–83.

Lundstrom J, Boesveldt S, Albrecht J. Central processing of the chemical senses: an overview. ACS Chem Neurosci. 2011;2(1):5–16.

Koelsch S, Fritz T, Cramon DYV, Muller K, Friederici AD. Investigating emotion with music: an fMRI study. Hum Brain Mapp. 2006;27(3):239–50.

Moore KS. A systematic review on the neural effects of music on emotion regulation: implications for music therapy practice. J Music Ther. 2013;50(3):198–242.

Koelsch S. Towards a neural basis of music-evoked emotions. Trends Cogn Sci. 2010;14(3):131–7.

Peretz I, Hebert S. Toward a biological account of music experience. Brain Cogn. 2000;42(1):131–4.

Kreutz G, Ott U, Teichmann D, Osawa P, Vaitl D. Using music to induce emotions: Influences of musical preference and absorption. Psychol Music. 2008;36(1):101–26.

Vuoskoski J. Personality, style, and music use. In: Ashley R, Timmers R, editors. The Routledge companion to music cognition. Abingdon: Routledge; 2017. p. 453–63.

Schubert E. The influence of emotion, locus of emotion and familiarity upon preference in music. Psychol Music. 2007;35(3):499–515.

Zentner M, Grandjean D, Scherer KR. Emotions evoked by the sound of music: characterization, classification, and measurement. Emotion. 2008;8(4):494–521.

Koole SL, Rothermund K. “I feel better but I don’t know why”: the psychology of implicit emotion regulation. Cogn Emot. 2011;25(3):389–99.

Hou J, Song B, Chen ACN, Sun C, Zhou J, Zhu H, Beauchaine TP. Review on neural correlates of emotion regulation and music: implications for emotion dysregulation. Front Psychol. 2017;8:501.

Chanda ML, Levitin DJ. The neurochemistry of music. Trends Cogn Sci. 2013;17(4):179–93.

Kohn N, Eickhoff SB, Scheller M, Laird AR, Fox PT, Habel U. Neural network of cognitive emotion regulation—an ALE meta-analysis and MACM analysis. Neuroimage. 2014;87:345–55.

Porter S, McConnell T, McLaughlin K, Lynn F, Cardwell C, Braiden HJ, Boylan J, Holmes V, Music in Mind Study Group. Music therapy for children and adolescents with behavioural and emotional problems: a randomised controlled trial. J Child Psychol Psychiatry. 2017;58(5):586–94.

Fachner J, Gold C, Erkkila J. Music therapy modulates fronto-temporal activity in rest-EEG in depressed clients. Brain Topogr. 2013;26(2):338–54.

Chu H, Yang CY, Lin Y, Ou KL, Lee TY, O’Brien AP, Chou KR. The impact of group music therapy on depression and cognition in elderly persons with dementia: a randomized controlled study. Biol Res Nurs. 2014;16(2):209–17.

Raglio A, Attardo L, Gontero G, Rollino S, Groppo E, Granieri E. Effects of music and music therapy on mood in neurological patients. World J Psychiatry. 2015;5(1):68–78.

Hides L, Dingle G, Quinn C, Stoyanov SR, Zelenko O, Tjondronegoro D, Johnson D, Cockshaw W, Kavanagh DJ. Efficacy and outcomes of a music-based emotion regulation mobile app in distressed young people: Randomized controlled trial. JMIR mHealth uHealth. 2019;7(1):e11482.

Braunstein LM, Gross JJ, Ochsner KN. Explicit and implicit emotion regulation: a multi-level framework. Soc Cogn Affect Neurosci. 2017;12(10):1545–57.

Peretz I, Gagnon L, Bouchard B. Music and emotion: perceptual determinants, immediacy, and isolation after brain damage. Cognition. 1998;68(2):111–41.

Schellenberg EG. Long-term positive associations between music lessons and IQ. J Educ Psychol. 2006;98(2):457–68.

White-Schwoch T, Carr KW, Anderson S, Strait DL, Kraus N. Older adults benefit from music training early in life: biological evidence for long-term training-driven plasticity. J Neurosci. 2013;33(45):17667–74.

Blood AJ, Zatorre RJ. Intensely pleasurable responses to music correlate with activity in brain regions implicated in reward and emotion. Proc Natl Acad Sci USA. 2001;98(20):11818–23.

Demal U. SKIDPIT-light Screeningbogen. Wien: Universität Wien; 1999.

Halberstadt JB, Niedenthal PM, Kushner J. Resolution of lexical ambiguity by emotional state. Psychol Sci. 1995;6(5):278–82.

Niedenthal PM, Setterlund MB. Emotion congruence in perception. Pers Soc Psychol Bull. 1994;20(4):401–11.

Kallinen K. Emotional ratings of music excerpts in the western art music repertoire and their self-organization in the Kohonen neural network. Psychol Music. 2005;33(4):373–93.

Baumgartner T, Esslen M, Jäncke L. From emotion perception to emotion experience: Emotions evoked by pictures and classical music. Int J Psychophysiol. 2006;60(1):34–43.

van Tricht MJ, Smeding HMM, Speelman JD, Schmand BA. Impaired emotion recognition in music in Parkinson’s disease. Brain Cogn. 2010;74(1):58–65.

Olafson KM, Ferraro FR. Effects of emotional state on lexical decision performance. Brain Cogn. 2001;45(1):15–20.

Terwogt MM, Van Grinsven F. Musical expression of moodstates. Psychol Music. 1991;19(2):99–109.

Broman DA, Olsson MJ, Nordin S. Lateralization of olfactory cognitive functions: effects of rhinal side of stimulation. Chem Senses. 2001;26(9):1187–92.

Kobal G, Kettenmann B. Olfactory functional imaging and physiology. Int J Psychophysiol. 2000;36(2):157–63.

Zatorre RJ, Jones-Gotman M. Right-nostril advantage for discrimination of odors. Percept Psychophys. 1990;47(6):526–31.

Bougeard R, Fischer C. The role of the temporal pole in auditory processing. Epileptic Disord Int Epilepsy J Videotape. 2002;4(Suppl 1):S29–32.

Koch K, Pauly K, Kellermann T, Seiferth NY, Reske M, Backes V, Stocker T, Shah NJ, Amunts K, Kircher T, et al. Gender differences in the cognitive control of emotion: an fMRI study. Neuropsychologia. 2007;45(12):2744–54.

Schneider F, Habel U, Reske M, Toni I, Falkai P, Shah NJ. Neural substrates of olfactory processing in schizophrenia patients and their healthy relatives. Psychiatry Res Neuroimaging. 2007;155(2):103–12.

Jenkinson M, Beckmann CF, Behrens TEJ, Woolrich MW, Smith SM. FSL. Neuroimage. 2012;62(2):782–90.

Jenkinson M, Bannister P, Brady M, Smith S. Improved optimization for the robust and accurate linear registration and motion correction of brain images. Neuroimage. 2002;17(2):825–41.

Pruim RHR, Mennes M, Buitelaar JK, Beckmann CF. Evaluation of ICA-AROMA and alternative strategies for motion artifact removal in resting state fMRI. Neuroimage. 2015;112:278–87.

Pruim RHR, Mennes M, van Rooij D, Llera A, Buitelaar JK, Beckmann CF. ICA-AROMA: a robust ICA-based strategy for removing motion artifacts from fMRI data. Neuroimage. 2015;112:267–77.

Williams JR. The Declaration of Helsinki and public health. Bull World Health Organ. 2008;86(8):650–2.

Acknowledgements

The Interdisciplinary Centre for Clinical Research—Brain Imaging Facility, Aachen supported the analysis of this study.

Funding

This work was supported by the Faculty of Medicine, RWTH Aachen University (START program 138/09) and by the German Research Foundation (DFG, IRTG 1328, International Research Training Group).

Author information

Contributions

MB-L Implementation of the study, Statistics, Imaging process, Programming, Writing, and Manuscript editing; SP Writing, and Manuscript editing; UH Supervision, Infrastructure, Conceptualization, and Manuscript editing; KL Conceptualization, Music evaluation, Supervision music, and Manuscript editing; FS Supervision, Infrastructure, and Manuscript editing; KA Supervision, Infrastructure, and Manuscript editing; NK Implementation of the study, Statistics, Imaging process, Programming, Writing, and Manuscript editing.

Ethics declarations

Ethics approval

The study was approved by the ethics committee of the Medical School, RWTH Aachen University, Germany (EK 058/11) and was carried out in accordance with the Declaration of Helsinki in its latest form [63]. Participants were reimbursed at the end of each experiment.

Competing interests

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Berthold-Losleben, M., Papalini, S., Habel, U. et al. A short-term musical training affects implicit emotion regulation only in behaviour but not in brain activity. BMC Neurosci 22, 30 (2021). https://doi.org/10.1186/s12868-021-00636-1

DOI: https://doi.org/10.1186/s12868-021-00636-1