Abstract

Normalization is a crucial step in the analysis of single-cell RNA-sequencing (scRNA-seq) counts data. Its principal objectives are reduction of systematic biases primarily introduced through technical sources and transformation of counts to make them more amenable for the application of established statistical frameworks. In the standard workflows, normalization is followed by feature selection to identify highly variable genes (HVGs) that capture most of the biologically meaningful variation across the cells. Here, we make the case for a revised workflow by proposing a simple feature selection method and showing that we can perform feature selection before normalization by relying on observed counts. We highlight that the feature selection step can be used to not only select HVGs but to also identify stable genes. We further propose a novel variance stabilization transformation inclusive residuals-based normalization method that in fact relies on the stable genes to inform the reduction of systematic biases. We demonstrate significant improvements in downstream clustering analyses through the application of our proposed methods on biological truth-known as well as simulated counts datasets. We have implemented this novel workflow for analyzing high-throughput scRNA-seq data in an R package called Piccolo.

Similar content being viewed by others

Introduction

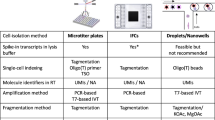

Bulk RNA sequencing (RNA-seq) studies have led to a significant improvement in our understanding of gene expression profiles associated with healthy as well as diseased states of various tissue types. However, these studies only provide an averaged view at the tissue level in which subtle but crucial distinctions of the constituent cell-types and states are obscured. Rapid advances in single-cell RNA-seq (scRNA-seq) protocols and platforms over the past decade have now facilitated investigation of transcriptional profiles at the level of individual cells, thereby enabling identification of distinct cell-types and cell states [1,2,3,4,5], as well as stages of development and differentiation [6, 7].

In contrast to measurements on bulk tissues, single-cell measurements have significantly greater uncertainty due to the low amounts of starting material as well as low capture efficiencies of the protocols (typically, high-throughput protocols only capture between 5 and 20\(\%\) of the molecules present in each cell [8]). As a result, even deeply sequenced datasets may have up to \(50\%\) zeros [9]. The high sparsity poses a significant challenge during the computational analysis. Early attempts to build statistical models to explain the relationship between the observed counts and the true underlying gene expression levels relied on zero-inflation models to explain the excess zeros. However, data generated using newer scRNA-seq protocols that rely on unique molecular identifiers (UMIs) have been shown to be sufficiently described using simpler statistical models that do not include zero inflation [10, 11]. In this paper, any reference to scRNA-seq data will specifically pertain to UMI counts data.

A critical step in the computational analyses of both bulk RNA-seq and scRNA-seq datasets is that of normalization. One of its key objectives is to reduce the biases introduced by technical sources or even biological sources such as cell cycle state, so that we can confidently identify true biological differences [9, 12,13,14]. Owing to the small amount of mRNAs captured per cell, the effect of these biases is more pronounced in the case of scRNA-seq data, further underscoring the need to reduce the impact of these biases on downstream analyses. Typically, normalization is performed by re-scaling the observed counts using cell-specific size factors to reduce the differences in sampling depths (total counts) between the cells. The scaled counts are then transformed with the help of a monotonic non-linear function (usually the logarithm function) to stabilize the variances of genes across different mean expression levels.

In the standard scRNA-seq workflow (implemented for instance in Seurat [15,16,17,18] and Scanpy [19]) normalization is followed by a feature selection step that focuses on identifying genes that capture most of the biological variation across the cells while eliminating genes that do not exhibit meaningful biological variation. This sequence of steps—normalization followed by feature selection—in the standard workflow appears quite reasonable, especially given the fact that differentially expressed genes can be identified reliably only after reducing the sampling depth differences between the cells. However, objective (i). identification of genes that are differentially expressed between groups of cells, is not the same as objective (ii). identification of highly variable genes (HVGs). While the identification of differentially expressed genes between distinct groups of cells requires that the sampling depth differences be reduced through normalization, we show that it isn’t necessary to perform normalization in order to identify HVGs.

In this article, we propose a simple feature selection method that relies on a regression-based approach to estimate dispersion coefficients for the genes based on their observed counts. Using this method, we show that feature selection can be performed before normalization. Importantly, during the feature selection step we not only identify variable genes, but also shortlist stable genes. The variation in the counts of these stable genes is expected to primarily reflect the biases introduced by the technical sources, and can therefore be used to estimate cell-specific size factors in order to perform normalization. During normalization we also need to ensure variance stabilization, especially when relying on principal components analysis (PCA) for dimensionality reduction. Keeping this in mind, we propose a residuals-based normalization method that not only reduces the impact of sampling depth differences between the cells but simultaneously ensures variance stabilization by explicitly relying on a monotonic non-linear transformation (default choice is the log transformation).

We demonstrate significant improvements in downstream clustering analyses enabled by the application of our feature selection and normalization methods on biological truth-known as well as simulated counts datasets. Based on these results, we make the case for a revised scRNA-seq analysis workflow in which we first perform feature selection and subsequently perform normalization using our residuals-based approach. We have implemented this novel scRNA-seq workflow in an R package called Piccolo.

Results

Genes with small counts exhibit quasi-Poisson variance

Genes with low mean expression levels show Poisson-like variance of their counts

As pointed out by Sarkar and Stephens [11], a good starting point for understanding the nature of the scRNA-seq counts data is to recall that the observed counts reflect contributions from both the underlying expression levels of the genes as well as the measurement errors. This necessitates that the contributions from the two be carefully distinguished in order to better understand and explain the true biological variation. Based on their analysis, they found that a simple Poisson distribution sufficed to explain measurement error, while a simple Gamma distribution often sufficed to explain the variation in expression levels across cells. The observation model built using these two distributions yields the Gamma-Poisson (or negative binomial (NB)) distribution which is well-known as a plausible model to explain over-dispersed counts. Under this model, the mean-variance relationship is given by,

where \(\alpha _{NB}\) is the NB over-dispersion coefficient (\(\alpha _{NB} = 0\) yields the familiar Poisson mean-variance relationship: \(\sigma ^2 = \mu\)).

A more familiar expression for the NB mean-variance relationship is,

where \(\theta\) is referred to as the inverse over-dispersion coefficient.

Another mean-variance relationship closely related to the Poisson and the NB is the quasi-Poisson (QP) wherein,

where \(\alpha _{QP}\) is the QP dispersion coefficient (\(\alpha _{QP} = 1\) yields the familiar Poisson mean-variance relationship; \(\alpha _{QP} > 1\) would be associated with counts over-dispersed with respect to the Poisson, while \(\alpha _{QP} < 1\) would indicate counts under-dispersed with respect to the Poisson).

We began our investigation into the nature of UMI counts by considering the mean-variance relationship of counts for genes in a technical negative control data set [10](hereafter referred to as Svensson 1). This data set consisted of droplets containing homogenous solutions of endogenous RNA as well as spike-in transcripts. The variability of counts in this data arises solely due to technical sources and is not attributable to any underlying biological source. The left panel in Fig. 1A shows the variance (\(\sigma ^2\)) vs mean (\(\mu\)) log-log plot for Svensson 1. Each dot in the plot corresponds to a gene. The colors of the dots reflect the local point density, with darker shades (deep blues) indicating low density and brighter shades (bright yellow) indicating high density. The black line depicts the variance expected under the Poisson model (\(\sigma ^2 = \mu\)).

We begin by noting that the UMI counts are heteroskedastic in nature since the variances of the genes depend on the mean (larger variances corresponding to larger means). If we then move on to focus on genes with low mean expression levels (especially \(\mu < 0.1\)), we can see that the mean-variance relationship appears to be well-approximated by the Poisson model since their observed variances lie close to the black line. It’s only for genes with higher mean expression levels (especially \(\mu > 1\)) that the variance of the observed counts exceed the variance expected under the Poisson model. For comparison with counts data with inherent biological variation, we looked at the NIH/3T3 fibroblast cell line data set [10] (hereafter referred to as NIH/3T3) and the 10X Genomics Peripheral Blood Mononuclear Cells (PBMC) 33k data set [20] (hereafter referred to as PBMC 33k). Even for these two datasets, most of the genes with low mean expression levels exhibit Poisson-like mean-variance relationship (middle and right panels in Fig. 1A).

The Poisson-like nature of the mean-variance relationship at low expression levels can be made clearer with the help of a simple example. Consider a gene with \(\mu = 0.01\). Let us assume that the counts of this gene are actually NB distributed and that \(\alpha _{NB} = \frac{1}{\theta } = 1\) is the true estimate of the over-dispersion coefficient. The variance of the counts will then be \(\sigma _{NB}^2 = 0.01 + 1*(0.01)^2 = 0.0101\). In comparison, the expected variance under the Poisson model will be \(\sigma _{Pois}^2 = 0.01\). The percent difference between the two variances is a mere \(1\%\). Thus, even if we approximated the variance for the counts of this gene with that predicted by the simple Poisson model, the estimated variance would differ by only \(1\%\) from the true NB variance. The percentage difference between the Poisson and the NB variances would of course increase for larger values of \(\alpha _{NB}\), however, estimates of \(\alpha _{NB}\) for real biological datasets typically range between 0.01 and 1 [21, 22].

Genes with low mean expression exhibit quasi-Poisson variance. In all the plots, each dot represents a gene and the color of the dots reflect the local point density, with brighter shades (yellow) indicating high density and darker shades (deep blue) indicating low density. A Variance (\(\sigma ^2\)) vs mean (\(\mu\)) log-log scatter plots for the Svensson 1 technical control (left panel), NIH/3T3 fibroblast cell line (center panel), and PBMC 33k (right panel) datasets. The solid black line corresponds to the Poisson model (\(\sigma ^2 = \mu\)). For genes with low mean expression levels, the variance can be adequately described by the Poisson model. B Quasi-Poisson dispersion coefficients (\(\alpha _{QP}\)) vs mean (\(\mu\)) log-log scatter plots for the Svensson 1 (left panel), NIH/3T3 (center panel), and PBMC 33k (right panel) datasets. \(\alpha _{QP}\) for each gene were estimated from the observed counts using a regression-based approach

We can formalize the discussion above with the help of Eqs. 1 and 3. We note that when \(\alpha _{NB}\mu<< 1\),

where we defined \(\alpha _{QP}\) in terms of \(\alpha _{NB}\) and \(\mu\) as \(\alpha _{QP} = 1+\alpha _{NB}\mu\) (see Appendix for a discussion on viewing QP variance as a special case of NB variance).

Thus, we conclude that counts of genes with low mean expression levels and moderate over-dispersion (such that \(\alpha _{NB}\mu<< 1\)) exhibit variances that do not deviate significantly from the variances predicted under the Poisson model.

QP dispersion coefficients can be obtained using a regression-based approach

For the NB distribution, the usual approach is to use maximum-likelihood estimation (MLE) to obtain estimates for the over-dispersion parameter (\(\alpha _{NB}\)). This approach apart from being computationally intensive has the weakness that if in fact the distribution is not NB, the maximum-likelihood estimator is inconsistent. Cameron and Trivedi proposed a regression-based test that offers a more robust alternative by requiring only the estimates of the mean and variance for each gene [23, 24]. The test is set up to estimate over-dispersion beyond the null model (Poisson distribution) by specifying the alternate model in the form of a scalar multiple of a function of the mean,

where the scalar multiple (\(\alpha\)) is estimated using least squares regression (see Appendix).

The QP mean-variance relation (see Eq. 3) corresponds precisely to such an alternative hypothesis in which the variance is simply a scalar multiple (\(\alpha _{QP}\)) of the mean. This enables us to use this simple yet robust regression-based approach to estimate the QP dispersion coefficients (\(\alpha _{QP}\)) for each gene by simply relying on the estimates of their mean and variance.

QP variance for counts of genes with low mean expression is simply due to the observed counts being small and is not biological in origin

We obtained estimates for \(\alpha _{QP}\) for all genes using the regression-based approach. Based on these estimates, we filtered out genes that were under-dispersed compared to the Poisson model (\(\alpha _{QP} < 1\)). For the remaining genes, we plotted \(\alpha _{QP}\) vs \(\mu\) log-log plots (Fig. 1B). For Svensson 1 (left panel in Fig. 1B), it is evident that for genes with low mean expression levels their \(\alpha _{QP}\) values lie close to 1 (\(10^0\)). In particular, we note that the mean of the \(\alpha _{QP}\) for genes with \(\mu < 0.1\) is 1.0474. This shows that for these genes with low mean expression their \(\alpha _{QP}\) values are close to 1 which is consistent with the observation that the counts of these genes exhibit Poisson-like variance. Since Svensson 1 is a technical control data set, this observation further supports the simple Poisson distribution as an appropriate model for explaining measurement error, particularly for genes with low mean expression levels. Furthermore, genes with \(\mu < 0.1\) do not appear to exhibit any dependence on \(\mu\). We evaluate this quantitatively by using the non-parametric Kendall’s rank and Spearman’s rank correlation tests to determine whether there is a statistical dependence between \(\alpha _{QP}\) and \(\mu\) values for genes with \(\mu < 0.1\) (see Methods). Both tests evaluate how well the relationship between two variables can be described using a monotonic function. For both tests, the correlation coefficients - \(\tau\) and \(\rho\) respectively—indicate a statistical dependence if the values are close to \(+1\) or \(-1\), while values of \(\tau\) or \(\rho\) closer to 0 indicate the absence of such a statistical dependence. The resultant correlation coefficient values of \(\tau = 0.04611706\) (\(p = 0.001244\)) and \(\rho = 0.0880\) (\(p = 3.475E-05\)), support the assertion that there is no statistical dependence between \(\alpha _{QP}\) and \(\mu\) values for genes with low mean expression levels.

For NIH/3T3 (middle panel in Fig. 1B) and PBMC 33k (right panel in Fig. 1B), despite greater variability in \(\alpha _{QP}\) due to the inherent biological variability in the data, similar observations were made—namely that the \(\alpha _{QP}\) values are close to 1 for genes with \(\mu < 0.1\) and that there was a lack of dependence between \(\alpha _{QP}\) and \(\mu\) for those genes. For NIH/3T3, the mean of the \(\alpha _{QP}\) for genes with \(\mu < 0.1\) is 1.1388, and both Kendall’s correlation coefficient \(\tau = 0.0644\) (\(p = 1.28E-05\)) and Spearman’s correlation coefficient \(\rho = 0.1014\) (\(p = 4.084e-06\)) indicate that there is no statistical dependence between \(\alpha _{QP}\) and \(\mu\) for genes with \(\mu < 0.1\). For PBMC 33k, the mean of the \(\alpha _{QP}\) for genes with \(\mu < 0.1\) is 1.3710, and the Kendall’s correlation coefficient \(\tau = 0.1349\) (\(p < 2.2E-16\)) and Spearman’s correlation coefficient \(\rho = 0.2035\) (\(p < 2.2E-16\)) once again suggesting that there is no significant statistical dependence between the \(\alpha _{QP}\) values and \(\mu\) for genes with low mean expression levels.

To summarize, we observed that the counts for genes with low mean expression levels exhibit QP variance (see Appendix for a discussion on how the lack of dependence between \(\alpha _{QP}\) and \(\mu\) for genes with low mean expression levels manifests as a non-decreasing relationship between their \(\theta\) and \(\mu\)). It is important to highlight here that we observed this relationship not just in the biological datasets (NIH/3T3 and PBMC 33k) but also in the technical control data set (Svensson 1), suggesting that this relationship is not biological in origin and can be understood more simply in terms of the fact that for genes with small counts the variance of those counts can barely exceed the variance expected under the Poisson distribution.

Feature selection can be performed before normalization

Standard scRNA-seq workflow is based on an assumption that reflects a confusion between the distinct objectives of identification of variable genes and differentially expressed genes

In the standard scRNA-seq workflow, identification of HVGs—genes that exhibit greater variability in counts compared to other features in the data set—is performed only after normalization. This particular sequence in the workflow is based on the assumption that unless the systematic biases are reduced or eliminated, we cannot reliably identify features that best capture the biological variability inherent in the data. A more careful examination reveals that this assumption reflects a confusion between two fundamentally distinct objectives. The first objective— identification of HVGs - as mentioned above, is about identifying genes that exhibit higher variability of counts compared to other features in the data set; we expect that these genes capture most of the biological variability in the data set. The second objective—identification of differentially expressed (DE) genes—is about identifying genes that exhibit differences in their expression levels between distinct sets of cells. For identifying DE genes, it is indeed imperative that the systematic biases are reduced or eliminated in order to identify genes that truly reflect actual biological differences between the distinct groups of cells being compared. However, it is possible to identify HVGs without first accounting for the systematic biases through normalization since these biases owing to their systematic nature are expected to manifest as additional but consistent sources of variation for the counts of genes across cells. This is in fact the assumption underlying the normalization approaches that rely on estimation of cell-specific size factors to re-scale and adjust the observed counts.

We can state the expectation discussed above in terms of the QP dispersion coefficients. Suppose we have gene A and gene B with comparable mean expression levels (\(\mu _A \approx \mu _B\)) such that,

where \({\tilde{\alpha }}_{QP}^A\) and \({\tilde{\alpha }}_{QP}^B\) are the QP dispersion coefficients of gene A and gene B in the hypothetical case where there is no systematic bias. Our expectation is that even in the presence of systematic biases, the relative magnitudes of the dispersion coefficients for the bias-affected counts will be such that,

where \(\alpha _{QP}^A\) and \(\alpha _{QP}^B\) are the respective QP dispersion coefficients obtained from the observed counts of genes A and B. Thus, the expectation is that genes that exhibit high variability in their counts due to underlying biological differences will exhibit high variability even in the presence of systematic biases. We can test whether this is a reasonable expectation by introducing systematic biases into a given data set and then verifying whether the genes we identify as variable for the modified data set are consistent with the ones identified for the original data set. Before proceeding to perform such a test, however, we first need to lay down a method to identify HVGs.

Feature selection method based on QP dispersion coefficients identifies HVGs and stable genes

As discussed earlier, distinguishing between measurement and biological processes by separately modeling their contributions to observed counts enables a better understanding and interpretation of the observed counts [11]. It also provides a simple and straightforward basis for identifying and filtering out features that are relatively uninformative prior to any downstream analysis: if the measurement model sufficiently describes the observed counts for a given gene, then no meaningful biological inference can be drawn based on the counts of that gene. This is so since all the variation in the counts for that gene can be attributed to the measurement process itself, with negligible or no contribution from any biological process. We call such genes whose counts are adequately described by the measurement model as biologically uninformative. Since a simple Poisson distribution suffices to explain measurement error, using the estimates for \(\alpha _{QP}\) we can easily identify genes that are likely to be biologically uninformative—genes with \(\alpha _{QP} \le 1\) do not exhibit over-dispersion with respect to the Poisson measurement model and can be filtered out.

Feature selection can be performed before normalization based on dispersion coefficients estimated using the observed counts. A Quasi-Poisson dispersion coefficient (\(\alpha _{QP}\)) vs mean (\(\mu\)) log-log scatter plots for genes that exhibited over-dispersion with respect to the Poisson (\(\sigma ^2 > \mu\)) for the Svensson 1 technical control data set. Each dot represents a gene. The dashed gray vertical lines illustrate how the genes are binned based on their mean expression levels (in this figure, there are 10 bins). Genes within adjacent pairs of dashed vertical lines belong to the same bin. For each bin, the default choice of \(\alpha _{QP(Reference|Bin)}\) is the \(\alpha _{QP}\) corresponding to 10th quantile within the bin. B Violin-box plots showing the percentage match between the top 3000 highly variable genes (HVGs) identified by our feature selection method for the original (unsubsampled) and corresponding 100 subsampled datasets. The original 5 datasets (PBMC 33k, NIH/3T3 cell line, Mouse cortex, Mouse lung, and PBMC r1) were obtained using different single-cell platforms—10X Chromium v1, 10X Chromium v3, DroNC-seq, Drop-seq, and inDrops respectively. The box/violin plots are colored according to the platforms. For all 5 datasets, more than \(80\%\) of the top 3000 HVGs shortlisted for the subsampled datasets matched the top 3000 HVGs of the original datasets. C \(\alpha _{QP} - \alpha _{QP(Reference|Bin)}\) vs mean (\(\mu\)) linear-log scatter plot for genes that exhibited over-dispersion with respect to the Poisson (\(\sigma ^2 > \mu\)) for the Svensson 1 data set. The dashed red horizontal line illustrates the threshold to shortlist HVGs. Genes with \(\alpha _{QP} - \alpha _{QP(Reference|Bin)} > 20\) in this case are shortlisted as HVGs. On the other hand, genes with \(\alpha _{QP} - \alpha _{QP(Reference|Bin)} < 0\) are shortlisted as stable genes. D \(\alpha _{QP} - \alpha _{QP(Reference|Bin)}\) vs mean (\(\mu\)) linear-log scatter plot for genes that exhibited over-dispersion with respect to the Poisson (\(\sigma ^2 > \mu\)) for the PBMC 33k data set. Labeled genes with \(\alpha _{QP} - \alpha _{QP(Reference|Bin)} > 20\) are shortlisted as HVGs. On the other hand, genes with \(\alpha _{QP} - \alpha _{QP(Reference|Bin)} < 0\) are shortlisted as stable genes (some labeled in figure). Inset in top left corner—light colored histogram corresponding to all genes with \(\alpha _{QP} > 1\), and dark colored histogram corresponding to the top 3000 HVGs. Note that the HVGs are shortlisted across the different expression levels with no preferential selection based on the mean expression level of the genes

While we can simply rely on the \(\alpha _{QP}\) to identify biologically uninformative genes, identification of HVGs based on just the magnitudes of \(\alpha _{QP}\) would result in a bias towards genes with higher mean expression levels (see Fig. 1B). In order to address this and ensure that there is no preferential selection of genes with higher mean expression levels, we propose the following approach to shortlist HVGs:

-

Group the genes into bins (default is 1000 bins) based on their mean expression levels; each bin contains approximately the same number of genes with comparable mean expression levels

-

Sort the genes within each bin into quantiles based on their \(\alpha _{QP}\)

-

Obtain the \(\alpha _{QP}\) corresponding to the reference quantile within each bin (default reference is the 10th quantile)—we refer to this as \(\alpha _{QP(Reference|Bin)}\)

-

Calculate \(\alpha _{QP} - \alpha _{QP(Reference|Bin)}\) for each gene - larger values indicate greater over-dispersion

We illustrate the binning process involved in our feature selection method for Svensson 1 (Fig. 2A). For ease of illustration we show 10 bins (see Appendix and Additional file 1: Fig. S13 for a discussion on how the number of bins impact the identification of HVGs), where each bin corresponds to a segment or region between the vertical dashed lines. Since Svensson 1 is a technical control data set, we don’t expect to see much variability in the expression levels of the genes, and indeed in Fig. 2C we can see that there are just two genes (spike-in transcripts ERCC-00074 and ERCC-00130) that exceed the threshold of 20 for \(\alpha _{QP} - \alpha _{QP(Reference|Bin)}\) (red horizontal dashed line). For PBMC 33k, in contrast, we observe 15 genes that exceed the threshold of 20 for \(\alpha _{QP} - \alpha _{QP(Reference|Bin)}\) (Fig. 2D). The threshold of 20 was picked simply to illustrate how the HVGs may be shortlisted. In practice, we can rank the genes based on the magnitudes of \(\alpha _{QP} - \alpha _{QP(Reference|Bin)}\) and shortlist the top 3000 genes. An important point to highlight here is that the shortlisted HVGs do not exclude genes with low mean expression—note the inset panel in the top left corner of Fig. 2D, where we show the histogram based on the mean expression levels for the HVGs (darker shade) together with the histogram for all genes with \(\alpha _{QP} > 1\) (lighter shade).

Aside from identifying variable genes, we can also shortlist genes that do not exhibit much variability in their counts. We refer to these genes as stable genes. Identification of such genes is very useful since we expect that the variability in the counts of these genes is primarily attributable to the measurement process and is not confounded by biological differences between the cells. Thus, we can rely on these genes to obtain more reliable estimates for the cell-specific size factors in order to reduce the impact of the differences in sampling depths. Based on our feature selection method, genes with \(\alpha _{QP} - \alpha _{QP(Reference|Bin)} < 0\) are shortlisted as stable genes. For PBMC 33k, two of these genes (PFDN2 and RPL32) are labeled in Fig. 2D below the horizontal black line at \(\alpha _{QP} - \alpha _{QP(Reference|Bin)} = 0\).

HVGs can be consistently identified despite the introduction of systematic biases

Having introduced the feature selection method, we can now test the expectation that the HVGs can be identified despite the presence of systematic biases. To perform the test, we picked 5 UMI counts datasets obtained from different platforms: PBMC 33k (10X Genomics Chromium v1), NIH/3T3 (10X Genomics Chromium v3), Mouse Cortex r1 (DroNC-seq) [8], Mouse Lung (Drop-seq) [25], and PBMC r1 (inDrops) [8]; r1 denotes replicate 1. For each of these datasets, we randomly picked \(30\%\) of the cells and subsampled the counts in those cells to a fraction of the original total count. The fractions were allowed to take one of the following values - 0.3, 0.4, 0.5, 0.6, 0.7 - and were picked randomly for each cell. We did this 100 times for each of the 5 datasets. Using our feature selection method, we shortlisted the top 3000 HVGs in each of the subsampled datasets and compared with the top 3000 HVGs in the respective original (unsubsampled) datasets to see how many of the top 3000 HVGs matched between the two. We observed that on average more than \(80\%\) of the top 3000 HVGs shortlisted for the subsampled datasets matched the top 3000 HVGs obtained from the original datasets across the different platforms and tissue types (Fig. 2B). Thus, despite the introduction of random systematic biases there is good agreement between the HVGs obtained for the original datasets and the HVGs obtained for corresponding datasets with the introduced biases. This supports our assertion that feature selection can be performed prior to normalization.

Normalization can be performed using a residuals-based approach that includes variance stabilization

Cell-specific size factors should be estimated using stable genes

The basic objective of normalization is to reduce systematic biases introduced due to technical or potentially uninteresting biological sources (such as cell size, cell cycle state) before further downstream analyses such as clustering and differential expression. The most common approach to reduce such systematic biases is to scale the counts within each cell by cell-specific size factors [13, 26]. The simplest estimates are given by,

where \(X_{gc}\) is the observed count of gene g in cell c, and C is the total number of cells. The numerator in Eq. (6) is the total UMI count in cell c (\(N_{c}\)), while the denominator is the mean of the total counts of the cells.

There is an intimate link between the estimates of size factors given by Eq. (6) and the estimates for expected means (\({\hat{\mu }}_{gc}\)) under the assumption that the counts are Poisson distributed. The size factors as given by Eq. (6) should be viewed as estimates under the approximation that the counts are Poisson distributed (see Appendix). Keeping this in mind, we argue that it is most appropriate to calculate the size factors by relying on the stable genes identified using our feature selection method. The counts of these genes do not exhibit significant over-dispersion compared to the Poisson, and as discussed earlier, we expect that the primary source of the variability of their counts is the measurement process which is what we are trying to account for with the help of the size factors. Therefore, we propose the following refinement to the estimation of the size factors,

where \(Stable \, g\) refers to the set of stable genes.

Limitations of widely used normalization methods

In the standard scRNA-seq workflow, normalization and feature selection are typically followed by dimensionality reduction using principal components analysis (PCA). An important step prior to PCA is to ensure variance stabilization by transforming the size factor adjusted counts using the acosh, log, sqrt functions etc. This ensures that genes with higher expression levels (and as a consequence larger variance) do not contribute disproportionately to the overall variance as evaluated through PCA. The log-based variance stabilization transformation (hereafter referred to as logSF) is the most popular method according to which the transformed counts are given by,

where the pseudocount of 1 ensures that the log transformation works with zero counts, and in fact returns zeros for these counts even after transformation. However, the logSF normalization is not very effective since the total counts of the cells (\(N_c\)) shows up as a primary source of variation in PCA even after normalization (see Additional file 1: Fig. S15). This can be traced to the fact that under this transformation, the zeros remain zeros while only the non-zero counts are scaled according to the size factors. Systematic differences in the number of zero counts between the cells can therefore be identified as a major source of variation even after transformation [27, 28].

Residuals-based approaches proposed by Hafemeister et al. [20], Townes et al. [27], and more recently by Lause et al. [21] provide alternatives that lead to much more effective normalization (see Additional file 1: Fig. S16). The Pearson residuals under the NB model are approximately given by,

where \(X_{gc}\) is the observed count of gene g in cell c, \({\hat{\mu }}_{gc}\) is the estimated mean of gene g in cell c, and \({\hat{\alpha }}_g\) is the estimated over-dispersion parameter for gene g.

The increased effectiveness of normalization with the Pearson residuals can be attributed to \({\hat{\mu }}_{gc}\) taking into account the systematic differences in the total counts between the cells. Unlike the case with logSF normalization where zero counts are transformed back to zeros, the zero counts are instead transformed to negative residual values whose magnitudes vary depending on the total counts of the respective cells.

The rationale underlying the residuals-based approach is that the null model should correspond to the measurement process so that the residuals provide estimates for deviations away from the expectations under the measurement model. However, the sparsity and the skewed nature of the counts distributions pose significant challenges to achieving effective variance stabilization with the help of residuals-based methods. The lack of variance stabilization becomes especially noticeable for genes that are robustly expressed in only a subset of cells while showing negligible expression in the rest of the cells (such genes would be considered as markers of the specific cell sub-populations in which they are expressed) [22] (see Appendix for more discussion on this). Furthermore, for genes with very low mean expression levels (and as a consequence extremely small estimated standard deviations) even cells with just 1 or 2 UMI counts sometimes end up with unusually large residual values that are then addressed through heuristic approaches [20, 21, 29].

Novel residuals-based normalization that includes variance stabilization

Keeping in mind the limitations of the widely used normalization methods discussed above, we propose a conceptually simple residuals-based normalization method that reduces the influence of systematic biases by relying on size factors estimated using stable genes while simultaneously ensuring variance stabilization by explicitly relying on a variance stabilization transformation.

In order to motivate our approach, we begin by pointing out that z-scores are simply Pearson residuals corresponding to the normal distribution. Assuming counts, \(Y_{gc}\), that are normally distributed,

the MLEs for \(\mu _g\) and \(\sigma _{g}^2\) are given by, \({\hat{\mu _g}} = (\sum _c Y_{gc})/C\) and \({\hat{\sigma }}_{g}^2 = (\sum _c (Y_{gc} - {\hat{\mu _g}})^2)/C\), respectively. The corresponding Pearson residuals-based on these estimates are given by,

which as already noted above correspond to z-scores (for simplicity, we assumed that there are no differences in total counts between the cells).

In order to compute z-scores for our data, we first need to apply a variance stabilization transformation to the raw counts (\(X_{gc}\)) to bring their distribution closer to the normal distribution. The variance stabilization transformation can be performed using monotonic non-linear functions, g(X), such that the transformed counts are given by,

To compute the residuals, we need estimates for the means and variances of Y based on estimates for means and variances of X. We can arrive at approximations for both using a Taylor series expansion around \(X = \mu\) (see Appendix). In particular for \(g(X) = log(X+1)\), the first order approximations of the mean and variance are given by (the first-order approximation to the variance of a transformed random variable is also known as the Delta method attributed to R. A. Dorfman [30]),

At this point we need estimates for the means (\({\hat{\mu }}_{gc}\)) and the variances (\({\hat{\sigma }}_{gc}^2\)) that account for the systematic biases. Note, that the usual estimate for the mean expression level of gene g based on the observed counts (\(\mu _g = \frac{\sum _c X_{gc}}{C}\)) includes biological as well as technical effects. However, we are interested in inferring the mean expression level and variance that is primarily reflective of the underlying biology. This can be accomplished by relying on the size factors (Eq. (7)) to adjust the observed counts of the respective cells and then obtaining the estimates for mean and variance based on the scaled counts. Thus,

Using the estimates for mean and variance for gene g, we get the following estimates for mean and variance of gene g in each cell c,

With these estimated means (\({\hat{\mu }}_{gc}\)) and variances (\({\hat{\sigma }}_{gc}^2\)) that account for the systematic biases, from Eqs. (10) and (11) we get,

and

Based on these first-order approximations for means and variances under the \(log(X+1)\) transformation, we now define our z-scores based normalization,

This log-stabilized z-score transformation is the default normalization method in our R package called Piccolo (see Appendix for a discussion on other variance stabilization approaches implemented in Piccolo). Hereafter, we refer to it as the Piccolo normalization.

First-order approximations for estimates of mean and variance under variance stabilization transformation are valid for majority of the genes

The first-order approximations for the mean and variance of the counts under the variance stabilization transformation in our residuals-based normalization are based on the assumption that the transformed values (raw counts transformed by the variance stabilizing transformation) vary linearly over the range of raw counts close to the mean expression level. We tested the validity of this assumption for each gene by fitting a straight line (using linear regression) through the log-transformed values corresponding to those raw counts that fall within one standard deviation away from the mean expression value. We relied on the resultant adjusted \(R^2\) (coefficient of determination) values to evaluate the validity of the assumption since values of adjusted \(R^2\) close to 1 indicate that the transformed values can be approximated to lie along a straight line over the corresponding range of raw counts.

Piccolo normalization reduces sampling depth differences between cells and also ensures effective variance stabilization. A 2-dimensional (2D) scatter plots based on the first 2 PCs of the Svensson 1 technical control data set. Each dot is a cell and is colored according to the size factors; brighter shades (yellow) correspond to larger size factors and darker shades (deep blue) correspond to smaller size factors. The left panel shows the 2D PC scatter plot for the raw counts, while the right panel shows the 2D PC scatter plot for the z-scores (residuals) obtained from Piccolo normalization. The coefficient (\(\rho\)) in the top-right corner of the panels shows the canonical correlation coefficient between the size factors and the top 5 PCs. Smaller values of \(\rho\) indicate that the impact of the sampling depth differences has been reduced more effectively by the normalization. Piccolo normalization reduces the impact of sampling depth on the overall variation as evaluated through PCA. B Variance vs mean (\(\mu\)) linear-log scatter plots for the top 3000 HVGs of the PBMC 33k data set after applying respective normalizations - Piccolo (top-left panel), Analytic Pearson residuals (top-right), Scran logSF (bottom-left), and SCTransform v2 (bottom-right). The y-axis scale was limited to a maximum value of 5 to aid visual comparison; genes with variance greater than 5 were clipped to have the maximum value of 5. The residuals obtained using Piccolo exhibit variances that do not vary much beyond 1 unlike the the residuals obtained with Analytic Pearson and SCTransform v2

We found that for all UMI counts datasets included in our study, more than \(85\%\) of the genes had adjusted \(R^2\) values greater than 0.8 (see Additional file 1: Table 1 and Fig. S18). Thus, for UMI counts data obtained using high-throughput protocols the first-order approximation utilized in our normalization method is valid, particularly for genes with small counts.

Piccolo normalization reduces the impact of sampling depth differences between cells while simultaneously ensuring variance stabilization

As stated earlier, the objective of normalization is to reduce or eliminate the systematic biases in counts between cells that are not reflective of actual biological differences. Since technical control data do not have any biological source of variation, differences in sampling depths are expected to be the major source of variation between the cells (droplets). In terms of PCA, this would translate to sampling depth showing up as a major contributor in one of the first few principal components (PCs).

In Fig. 3A, we show the scatter plots of cells based on their coordinates along PC1 and PC2. The colors of the dots reflect the size factors of the respective cells, with brighter shades (yellow) indicating larger size factors. Recall that larger size factors correspond to cells with larger sampling depths across the stable genes (see Eq. (7)). In order to evaluate whether size factors correlate with the first few PCs, we calculated the canonical correlation coefficient (\(\rho\)) [31] between the size factors and the top 5 PCs; \(\rho\) close to 1 would indicate strong correlation between the size factors and one of the top 5 PCs. For Svensson 1 raw counts (left panel Fig. 3A), we can clearly observe a color gradient along PC1, with cells with larger size factors lying predominantly on the left and cells with smaller size factors predominantly on the right. Thus for raw counts, sampling depth differences indeed show up as a major source of variation between the cells. The value of the canonical correlation coefficient - \(\rho = 0.97\) - supports this further.

Next, we applied the Piccolo normalization to Svensson 1 (right panel in Fig. 3A) and confirmed that the sampling depth differences are no longer identified as a major source of variation by PCA. In fact, not only do we not observe a color gradient along PC1 or PC2, but even the canonical correlation coefficient between the size factors and the top 5 PCs is significantly reduced to \(\rho = 0.3\) which suggests a weak correlation. Similar observations were made for another technical control data set [32] (see Fig. S17). These results demonstrate that the Piccolo normalization is able to reduce the impact of systematic differences in sampling depths.

To examine the effectiveness of variance stabilization, we looked at the variances of the residuals after applying normalization to the raw counts. We compared Piccolo with two other residuals-based normalization methods - analytic Pearson [21](Analytic Pearson), and the regularized NB regression approach in scTransform [20, 29] (SCTransform v2). For reference, we also looked at the simple logSF based normalization approach implemented in Scran [12] (Scran logSF). Note, Scran relies on pooling of cells to arrive at estimates for the cell-specific size factors that are then used for the logSF normalization.

For the PBMC 33k data set, we first used our feature selection method to shortlist the top 3000 HVGs, and then applied the Piccolo normalization to compute the residuals corresponding to the raw counts of these HVGs. The Analytic Pearson residuals were also computed for these top 3000 HVGs identified with our feature selection method. This enables a direct comparison between the two methods since the residuals were calculated using the same set of features. In contrast, for SCTransform v2 and Scran logSF, the top 3000 HVGs were shortlisted using their own respective approaches. For each of the normalization methods, we then calculated the variances of the residuals. These variances are shown in the variance-mean linear-log plots in Fig. 3B. It is apparent that the residuals obtained from Piccolo (Fig. 3B, top-left panel) exhibit much lesser scatter compared to the other two residuals-based approaches (Fig. 3B, top-right panel and bottom-right panel). In Fig. 3B bottom-left panel, we also show the variance of the log-transformed normalized values obtained with Scran logSF for reference. We note that the log-transformed values also exhibit much lesser deviation from 1 compared to the raw counts based residuals methods. Note, given the heteroskedastic nature of our counts data the increase in the variances of the transformed values (obtained from log-transformation based normalization or our variance stabilized residuals based normalization) as the mean expression levels increase is not eliminated. However, compared to the raw counts this dependence is reduced for the transformed values which plays an important role when we employ PCA downstream.

Piccolo feature selection and normalization lead to concrete improvements in downstream cell clustering

Residuals-based normalization which includes a variance stabilization transformation preserves cell-cell similarities between cells that share cell-type identities

Normalization is typically followed by dimensionality reduction and unsupervised clustering to identify groups of cells with similar expression profiles. Depending on the biological system, the groups of cells may correspond to distinct cell-types, or states. The identification of such groups is a pivotal step since it informs crucial downstream analyses such as differential expression and marker genes identification. The most popular scRNA-seq workflows (for example, Seurat [15,16,17,18] and Scanpy [19]) employ PCA to perform dimensionality reduction. Based on the PCs, k-nearest neighbour (k-NN) graphs are generated (with cells as nodes) in which communities of cells that are most similar to each other are detected using graph-partitioning algorithms such as Leiden [33] and Louvain [34].

To investigate how well our normalization method preserves cell-cell similarities between cells that share cell-type identities, we began by examining a truth-known data set (data set in which the cell-type identities of the cells are already known) prepared by Duo et al. [35] using cells purified with cell-type specific isolation kits by Zheng et al. [36]. Briefly, they prepared the data set by shortlisting purified cells belonging to 8 PBMC cell-types - B-cells, CD14 monocytes, CD56 NK cells, CD4 T-helper cells, memory T-cells, naive T-cells, naive cytotoxic T-cells, and regulatory T-cells - such that there were roughly equal numbers of cells corresponding to each cell-type in the final data set (between 400-600 cells per cell-type). We refer to this data set as Zheng Mix 8eq. Since Zheng Mix 8eq consists of a mix of well-separated cell-types (for instance, B-cells vs T-cells) and similar cell-types (different types of T-cells), it provides a simple yet reasonably challenging scenario for evaluating how well cells belonging to the different cell-types can be distinguished after normalizing the counts with the respective normalization methods.

We used Piccolo to shortlist the top 3000 HVGs and applied our normalization to obtain the residuals for those HVGs. For Analytic Pearson, the residuals were computed for the top 3000 HVGs shortlisted using our feature selection method, while for Scran logSF and SCTransform v2 the transformed counts and residuals were respectively computed for the top 3000 HVGs shortlisted using their own methods. Subsequently, we performed PCA and shortlisted the first 50 PCs. We then used a simple k-NN based classification approach based on the PCs to predict cell-type labels for each cell by relying on the known cell-type labels (see Methods). Finally, we evaluated the extent of the agreement between the predicted labels and the known labels by calculating the following clustering metrics: the Macro F1 score, the adjusted Rand index (ARI), and the adjusted mutual information (AMI).

In Fig. 4A, we show the Uniform Manifold Approximation and Projection (UMAP) [37] plots for Zheng Mix 8eq with Piccolo normalization (top-left panel), Analytic Pearson (top-right panel), Scran logSF (bottom-left panel), and SCTransform v2 (bottom-right panel). In the plots, each dot represents a cell and is colored according to the known cell-type labels (legend provided at the bottom of the 4 panels). Qualitatively, it is apparent from the UMAP plots that the B-cells, CD56 NK cells, and CD14 monocytes can easily be distinguished compared to the rest. As expected, it’s more difficult to distinguish between the different kinds of T-cells, with memory and naive cytotoxic T-cells being the only ones that are comparatively easier to distinguish, particularly with the residuals-based approaches. The values of ARI, AMI, and Macro F1 quantifying the extent of the agreement between the predicted and the known cell labels are listed in the bottom-right corner of the panels for the respective normalization methods. While there isn’t a significant difference in the metrics between the 4 normalization methods, we do observe the highest values with Piccolo.

Piccolo normalization preserves cell-cell similarities between cells sharing cell-type identities. A 2D UMAP plots after applying respective normalizations for the top 3000 HVGs of the Zheng Mix 8eq data set (consists of 3994 cells with roughly equal numbers of cells belonging to 8 distinct PBMC cell-types). Dots represent cells and are colored using the known cell-type labels (legend at the bottom of the panel). Clustering metrics - ARI, AMI, Macro F1 - based on comparisons between predicted cell labels (obtained using a kNN-based classification approach) and known cell labels are listed in the bottom-right corner in each panel. B Violin-box plots of the clustering metrics obtained for 100 subsets of the Zheng Mix 8eq data set. The colors correspond to the respective normalization methods used. For all the 100 subsets, the highest values of the metrics was observed with Piccolo (red) (seeAdditional file 1: Table 2). C Violin-box plots comparing the clustering metrics obtained for 100 subsets of the Zheng Mix 8eq data set with Piccolo normalization used with HVGs shortlisted obtained from other methods; Piccolo (Scran LogSF) and Piccolo (SCTransform v2) refer to the methods where the top 3000 HVGs were obtained using Scran logSF and SCTransform v2 respectively, and then the normalization was performed on those HVGs using Piccolo. Piccolo yielded higher values of the clustering metrics despite relying on HVGs shortlisted by other methods (see Additional file 1: Table 3). D Comparisons of overlap between the k-NN inferred separately on two halves of data split by genes. Relative k-NN overlap was calculated by dividing the mean overlap per data set by its average across all normalization methods. Colored dots indicate averages across the 100 splits (small grey dots) per normalization method - their colors are consistent with the colors in panel B. This panel is similar to Fig. 2a in [22] and highlights that Piccolo (red) surpasses the other methods in fulfilling the necessary condition of k-NN consistency

However, these observations are not sufficient to argue for or against any of the methods. For a more robust comparison between the normalization methods, we created 100 subsets of Zheng Mix 8eq by randomly picking \(50\%\) of the cells in the original data set 100 times. For each of the 100 subsets, we used the same approach as discussed above for the 4 normalization methods and computed the respective ARI, AMI, and Macro F1 scores for the predicted cell labels based on the kNN-based classification approach. In Fig. 4B, we show the violin-box plots for the ARI, AMI, and Macro F1 scores for the 100 subsets. The colors correspond to the respective normalization methods used (red corresponds to Piccolo). We can clearly see that for all 3 clustering metrics, the highest values were consistently obtained with Piccolo normalization, reflecting that the best agreement between predicted and known labels is achieved using our feature selection and normalization method. For each clustering metric, we used paired Wilcoxon tests to quantify whether the differences between the values of the metric obtained with Piccolo normalization and other normalization methods were statistically significant (see Methods). For all 3 metrics, the values obtained with Piccolo normalization were found to be consistently higher than those obtained with other methods (all paired Wilcoxon test p-values were found to be highly significant - \(p < 1E-11\), see Additional file 1: Table 2).

For our analyses on Zheng Mix 8eq so far, while Piccolo and Analytic Pearson normalization were applied on the same set of HVGs, the sets of HVGs for Scran logSF and SCTransform v2 were different. Thus, some of the differences in the results are attributable to the differences in the sets of HVGs. To compare just the normalization methods, we used the top 3000 HVGs shortlisted by Scran logSF and SCTransform v2 respectively, and computed the residuals using Piccolo normalization for these respective HVGs. Piccolo (Scran logSF) denotes the method wherein the HVGs were obtained from Scran logSF and then the Piccolo normalization was performed for those HVGs. Similarly, Piccolo (SCTransform v2) denotes the method wherein the HVGs were obtained from SCTransform v2 and then the Piccolo normalization was performed for those HVGs. By applying these methods, we arrived at clustering metrics for the 100 subsets which are shown using the violin-box plots in Fig. 4C. The colors correspond to the feature selection and normalization methods used. We used paired Wilcoxon tests to compare the values of the metrics obtained with the normalization method that was used to shortlist the HVGs, with values of the metrics obtained with Piccolo normalization using those HVGs. For ARI and Macro F1, the values obtained with Piccolo normalization (Piccolo (Scran logSF) and Piccolo (SCTransform v2)) were consistently higher than the values obtained with the respective normalization methods with which the HVGs were shortlisted (paired Wilcoxon test \(p < 1E-09\)). For AMI, while the values with Piccolo (Scran logSF) were consistently higher than with Scran logSF (paired Wilcoxon test \(p < 1E-17\)), the values obtained with Piccolo (SCTransform v2) were not as significantly high compared to the ones obtained with SCTransform v2 (paired Wilcoxon test \(p < 0.06\)) (see Additional file 1: Table 3). Overall, it is clear that even with other HVGs, Piccolo normalization is better at preserving cell-cell similarities between cells of the same cell-type compared to the other normalization methods. Moreover, from Fig. 4C what is most striking is that the values of the clustering metrics obtained using our feature selection and normalization are the highest overall (red violin-box plot corresponds to Piccolo). This clearly suggests that not only is the performance of Piccolo normalization consistently better than the other normalization approaches (assessed with the aid of the clustering metrics), but even our feature selection method is effective at shortlisting HVGs that better inform the differences between cells with distinct cell-type identities.

Piccolo maintains consistency of k-nearest neighbors - a necessity for ensuring robustness of downstream analyses involving nearest neighbor graphs

In a recent article comparing different transformations for scRNA-seq data, Ahlmann-Eltze and Huber highlighted the k-nearest neighbor (k-NN) graph as a fundamental data structure which is used to infer cell-types, states, trajectories etc [22]; to remind, the k-NN graph in scRNA-seq analyses is obtained by relying on a lower-dimensional representation of the cells using PCA, and subsequently shortlisting the k-NNs of each cell based on Euclidean distances in the PC space (typically k is 10). They pointed out that the consistency of the k-NNs is a necessary (albeit not sufficient) condition for the robustness of downstream analyses that rely on k-NN graphs. They evaluated k-NN consistency by evenly splitting each data set into two halves based on the genes, such that the two resultant subsets contained mutually exclusive sets of genes (they referred to them as gene subset 1 and gene subset 2, see Fig. 2a in [22]). They then applied the respective transformation approaches to the gene subset 1 and gene subset 2 datasets separately and obtained k-NNs for each cell corresponding to each of the subsets. Using these 2 sets of k-NN cells for each cell, they performed a pairwise comparison to determine the extent of overlap between them and thereby assess consistency.

We performed a similar analysis for the Zheng Mix 8eq (10X), Mouse Cortex r1 (DroNC-seq), and PBMC r1 (inDrops) datasets (see Methods). While Ahlmann-Eltze and Huber only considered 10X derived UMI counts datasets, we included datasets obtained from other droplet-based high-throughput technologies to showcase the performance of Piccolo in preserving k-NN consistency. We performed the gene-based splits for each data set 100 times. k-NN overlaps were obtained per cell and then averaged across all the cells to arrive at one mean estimate per iteration. Relative k-NN overlap values were calculated by dividing these mean NN overlap values by their average across all iterations for all 4 normalization methods. Figure 4D shows the resultant values of the relative k-NN overlaps for each normalization method for the 100 splits (small grey dots). The large colored dots indicate the averages across the 100 splits per normalization method; colors of the dots were kept consistent with the colors used in panel B for each of the methods. Unlike Fig. 2a in [22] where the authors aggregated the relative k-NN overlap values across the datasets, we show the relative k-NN overlaps for each data set separately to highlight that Piccolo (red) easily surpasses the other methods in fulfilling the necessary condition of k-NN consistency despite basic differences in these droplet-based high-throughput technologies (all paired Wilcoxon test p-values between Piccolo and other methods were less than \(2.2E-10\)). Based on these results, we conclude that our proposed normalization method ensures the consistency of k-NNs and will therefore enable more robust inferences to be drawn from downstream analyses that rely on k-NN graphs.

Piccolo enables identification of groups containing few cells as well as groups with cells that express fewer differentially expressed genes

To further investigate the performance of Piccolo, we utilized 3 simulation tools—SPARSim [38], Splat [39], and SCRIP [40]— to simulate counts based on estimation of parameters derived from real UMI counts datasets. We selected these 3 tools since they enable de novo simulations of single cell counts wherein we can specify both the number of groups of cells, as well as the extent of differential expression between the groups. In a recent benchmarking study of scRNA-seq simulation methods [41], SPARSim was in fact found to rank among the best in terms of overall performance (see Fig. 2 in [41]). In order to generate simulated counts for user-specified number of groups, all 3 methods require a homogeneous cell population as input to estimate the parameters for simulation. Keeping this in mind, we prepared a data set consisting exclusively of B cells obtained from the Zheng Mix 8eq data set. In addition, we used the NIH/3T3 and HEK293T datasets since the cells in these two datasets are derived from cell lines and therefore constitute homogeneous cell populations.

We explored the following two simple simulation scenarios to benchmark and compare the normalization methods:

Piccolo enables identification of groups containing fewer cells as well as groups of cells with fewer differentially expressed genes. A Violin-box plots of the clustering metrics—ARI, AMI, Macro F1—obtained for simulated counts data generated for Scenario 1 based on parameters derived from 3 different real datasets (each row corresponds to one data set) and 3 different simulation tools (each column corresponds to one simulation tool). The colors correspond to the respective normalization methods used. For most simulated counts datasets, the highest values of the metrics were observed with Piccolo (red). B Heatmap showing the mean F1 scores per group aggregated over all the simulated counts data for Scenario 1 for each of the normalization methods. The tiles of the heatmap are colored according to the mean F1 scores with larger scores corresponding to brighter shades (yellow), and lower scores corresponding to darker shades (deep blue). Piccolo has the highest mean F1 scores for all 6 groups, with the improvement especially notable for Group 6 (group with fewest cells). C Same as panel B but for Scenario 2 (see Additional file 1: Fig. S22). Piccolo has the highest mean F1 scores for all 6 groups. Note, colors are scaled differently in panels B and C to bring out the differences between the mean F1 scores in the respective simulation scenarios

-

Scenario 1: 6 groups of cells with different number of cells per group, while keeping the extent of differential expression between the groups roughly the same. The objective behind this simulation was to examine whether the groups with the fewest cells can be reliably identified after applying the different normalization methods.

-

Scenario 2: 6 groups of cells with the same number of cells per group, but with different numbers of differentially expressed genes in each group. The objective behind this simulation was to examine how well we can distinguish between cells belonging to distinct groups, especially the ones that have fewer differentially expressed genes.

For each scenario and for each of the 3 reference datasets, we simulated 50 datasets using the 3 simulation tools respectively (see Methods for details). This gave us 450 simulated counts datasets for each scenario discussed above (900 in total). For each data set, we applied the 4 normalization methods and applied PCA to the residuals/transformed counts to shortlist the first 50 PCs. This was followed by the application of our k-NN based classification approach to predict the labels for each cell based on the known cell-group labels. We then quantified the agreement between the predicted labels and the known labels by estimating ARI, AMI, and Macro F1 values. In panel A in Fig. 5, we show the violin-box plots for the 3 clustering metrics for the simulated datasets generated for Scenario 1 (see Additional file 1: Fig. S22 for the corresponding panels for Scenario 2). The colors correspond to the normalization methods used. The panels in Fig. 5A are arranged such that the rows correspond to the reference datasets and the columns correspond to the simulation tool that was used to simulate the 50 datasets. We used paired Wilcoxon tests to compare the values of the metrics obtained with other normalization methods with those obtained after applying Piccolo. The results are summarized in Additional file 1: Tables 4, 5, 6, 7, 8 and 9. Overall, we observed that regardless of the data set or the simulation tool used to generate the simulated counts, the clustering metrics yielded after applying Piccolo (red) were consistently among the highest. Only for the simulated counts datasets generated by SPARSim for Scenario 2 based on the NIH/3T3 and HEK293T datasets were the clustering metrics obtained with Scran LogSF marginally but consistently higher (see Additional file 1: Fig. S22 and Table 5). In stark contrast, for the simulated counts datasets generated by Splat for both Scenario 1 and Scenario 2 based on the NIH/3T3 and HEK293T datasets the clustering metrics obtained with Scran LogSF were consistently the lowest, while with Piccolo we continued to observe the highest values.

In order to evaluate how well the methods enabled the identification of rarer cell populations (Group 6 in Scenario 1), as well as groups of cells with fewer differentially expressed genes (Group 6 in Scenario 2), we aggregated the group-wise F1 scores across all the simulated datasets for each scenario. Panels B and C show the heatmaps of the mean F1 scores per group for Scenario 1 and Scenario 2 respectively. The tiles of the heatmap have been colored based on the values of the F1 scores, with brighter shades (yellow) indicating larger values (greater agreement between the predicted and the known group labels), and darker shades (dark blue) indicating smaller values (less agreement between the predicted and the known group labels). For both scenarios, the highest mean F1 scores for all the groups were obtained after using Piccolo. Note in particular, the differences between the mean F1 scores for Group 6 for Scenario 1. The fact that these improvements are so noticeable after aggregating across 450 datasets underscores the concrete improvements in cell-cell grouping enabled by our feature selection and normalization method.

The consistency with which we observed higher values of the clustering metrics with Piccolo becomes all the more striking when we take into account the differences in the nature of the counts data generated by the different simulation frameworks. It is well understood that all simulation frameworks have limitations, and cannot faithfully capture all the attributes of real datasets [41]. Overall, SPARSim generated counts that appeared to closely follow the expected mean-variance relationship (see \(\sigma ^2\) vs. \(\mu\) log-log and \(\alpha _{QP}\) vs. \(\mu\) log-log plots in Additional file 1: Figs. S4, S5 and S6). SCRIP generated simulated counts data exhibited the most unusual mean-variance behavior, especially for genes with larger \(\mu\) (see Additional file 1: Figs. S10, S11 and S12). This was surprising considering that it was proposed as an improvement over the popular Splat framework. Given that our normalization method relies on first-order approximations for the mean and variance of the log-transformed counts, we expected that such differences in the nature of the counts would lead to significant variations in the performance of Piccolo and potentially lead to poor results. However, as demonstrated with the aid of these sets of simulations the method is robust to such differences as long as they don’t deviate too significantly from the expected behavior for counts usually obtained from high-throughput protocols.

In summary, with the help of Piccolo we observed concrete improvements in the clustering outputs, both in terms of identification of rarer cell groups as well as groups of cells that expressed fewer DE genes. The simulation scenarios were deliberately kept simple in order to facilitate a more straightforward comparison and interpretation. When we factor in the differences in the nature of counts generated by the different simulation tools as well as the fact that the counts were generated based on parameters derived from 3 independent datasets, the robustness of the improvements enabled by Piccolo become all the more compelling.

Discussion

We began our investigation into the nature of UMI counts by examining the mean-variance relationships of the observed counts for the genes and showed that for genes with small counts the variance of the counts can be approximated quite well by the quasi-Poisson variance. We pointed out that this quasi-Poisson nature of the variance simply reflects the fact that the counts for the respective genes are small in most cells. We followed this by examining and questioning the assumption underlying typical scRNA-seq workflows. In a typical scRNA-seq workflow, feature selection is preceded by normalization. Implicit in this sequence of steps is the assumption that features which exhibit high biological variability can be identified only after taking into account the systematic technical biases. We pointed out that this assumption reflects a confusion between the distinct objectives of identification of the differentially expressed genes, and the HVGs. While it is imperative that the counts be normalized to account for the systematic biases prior to a differential expression analysis, we showed that in fact it is possible to identify HVGs based on just the observed counts. We proposed a simple approach for feature selection that relies on quasi-Poisson dispersion coefficients estimated from the observed counts using a regression-based method. A key advantage with assessing the overall variability of counts for each gene prior to normalization is the ability to identify genes whose counts do not vary significantly across the cells. We refer to these genes as stable genes. We posited that the variability of counts for such stable genes is primarily reflective of the systematic biases (such as sampling depth differences) and can more reliably inform the estimation of size factors for each cell. Based on these observations, we propose a revision of the scRNA-seq workflow in which feature selection precedes, and in fact informs normalization (see Fig. 6).

Before proceeding to discuss the salient aspects of our revised workflow and the normalization method proposed in this paper, it will be helpful to summarize and highlight some key aspects of the existing methods. In a recent article comparing different transformations for scRNA-seq data, Ahlmann-Eltze and Huber showed that the simple logSF normalization outperformed the residuals-based normalization methods particularly in ensuring consistency of the k-nearest neighbors [22]; consistency was evaluated by evenly splitting the data into two halves such that the two subsets contained mutually exclusive sets of genes, and inquiring whether the cells shared the same sets of neighbouring cells between the split subsets (nearest neighbour cells were identified based on Euclidean distances between the cells in the PC space). This consistency is primarily attributable to the variance stabilization ensured by the log-transformation. However, as pointed out earlier, a significant disadvantage of the logSF normalization is that it is not very effective at reducing the sampling depth differences between the cells (Additional file 1: Fig. S15). In contrast, the residuals-based methods reduce sampling-depth differences between the cells much more effectively (Additional file 1: Fig. S16). However, a drawback of the residuals-based approaches is that they are unable to effectively reduce and stabilize the variances, particularly for marker genes (see Appendix and [22]).

Keeping in mind the respective advantages and disadvantages of the logSF normalization and the residuals-based methods described above, we proposed a residuals-based (z-scores) based normalization method which includes a variance stabilization transformation (the default transformation function used in Piccolo is the log, see Appendix for descriptions of other options).

The revised scRNA-seq workflow implemented in Piccolo. We contrast the conventional scRNA-seq workflow (left) with the revised scRNA-seq workflow (right) proposed by us and implemented in Piccolo. The starting point for all analyses are the counts matrices. In the conventional workflow, the counts are first normalized and variance stabilized followed by feature selection to identify HVGs. Dimensionality reduction is then performed on the normalized counts. In contrast, in the revised workflow proposed by us, we first perform feature selection to identify both the HVGs as well as stable genes. We rely on the stable genes to estimate cell-specific size factors for performing normalization. During normalization, we also ensure variance stabilization which in turn leads to significant improvement while performing dimensionality reduction with PCA

Given the observation that the majority of counts obtained from high-throughput technologies are small, we relied on first-order approximations for the means and variances of the transformed counts to estimate the residuals (see Additional file 1: Fig. S18). In addition, the residuals relied on means and variances that were adjusted using the size factors estimated with the help of stable genes. Thus, our conceptually straightforward approach simultaneously ensures variance stabilization and reduces the impact of the sampling depth differences thereby leading to concrete improvements in the downstream clustering performance.

We first applied our normalization method on technical control datasets (Fig. 3A and Additional file 1: Fig. S17) and demonstrated that it does reduce the impact of sampling depth differences between the cells. Using the PBMC 33k data set (and other datasets, see Additional file 1: Fig. S19), we showed that our normalization method also ensures effective variance stabilization. We then applied Piccolo to a truth-known data set, and showed that it improves downstream clustering analysis and is able to preserve cell-cell similarities between cells that share cell-type identities better than other popular normalization methods. We also examined how well the consistency of k-NNs was ensured by Piccolo, and demonstrated that it surpasses the other methods in satisfying this basic requirement to ensure the robustness of downstream analyses that rely on k-NN graphs. With the help of simulations, we were also able to show that Piccolo consistently enabled the identification of groups that contain small number of cells, whereas other normalization methods failed to consistently and reliably ensure the same. In addition, we also showed that with Piccolo we can better distinguish between groups that express fewer differentially expressed genes. These results are especially relevant biologically when viewed from the perspective of the identification of rare cell-types, or distinguishing between cell states.

While our method offers significant improvements over the existing workflows, we now discuss some of its limitations. Beginning with feature selection, a fundamental assumption underlying our bin-based approach is that across all expression levels there are always some genes which are not biologically variable. This assumption is not motivated by biological observations and has been made to facilitate ease of computation. It is possible that we overlook some HVGs because of this assumption. In our current implementation, we mitigate this by keeping the level for the reference dispersion coefficient in each bin relatively low (10th quantile is the default). However, there is definite scope for further improvement of the feature selection process to ensure that the HVGs are more effectively identified. Another point of consideration tied to feature selection is the use of stable genes for estimating cell-specific size factors. Due to the sparsity of the data, it is possible that the counts across the stable genes for some cells are all zero resulting in the size factor estimates to be zero for those cells. In Piccolo, we address this by iteratively adding sets of genes from the bottom of the list of HVGs till none of the cells have a size factor estimate of zero. Despite this limitation, we still expect that these size factors will not be confounded by actual biological variation as much as when we estimate them using all the genes (since that includes the HVGs). With regard to our normalization method, we relied on the first-order approximations for both the mean and the variance under the variance stabilization transformation. These approximations will work well as long as the non-linear transformation function is approximately linear in the range of the observed counts (see Additional file 1: Fig. S18). For small counts, this is indeed true, however for larger counts these approximations may lead to incorrect estimates. Given the nature of the droplet-based UMI counts data at present, our results suggest that the first-order approximations work quite well. We point out here that for datasets that exhibit larger counts and less sparsity, the conventional approach of the log-based normalization can be expected to work reliably and is available as one of the options in the Piccolo R package. We also want to note that in this study we did not discuss and elaborate on differential expression (DE) analysis which forms a vital component of all scRNA-seq studies. This was done to focus attention on the conceptual clarifications and simplifications for the core steps of feature selection and normalization that shape all downstream analyses, including identification of DE genes. We remind here that if we are unable to consistently and reliably identify groups of cells that actually correspond to distinct cell-types or states, then the downstream DE analyses are unlikely to be as informative and helpful. In the worst cases, they may even be misleading. Briefly, we would like to mention here that after applying our normalization method to the observed counts, the distribution of the residuals are brought closer to the normal distribution, thus making it possible to employ the two-sample Student’s t-test with the null hypothesis that the means of the two samples are the same. Typically, in scRNA-seq analyses the Wilcoxon rank-sum test, which is a non-parametric alternative to the two-sample t-test, is the preferred choice.