Abstract

In this paper, we provide an overview of some recently introduced principles and ideas for speech enhancement with linear filtering and explore how these are related and how they can be used in various applications. This is done in a general framework where the speech enhancement problem is stated as a signal vector estimation problem, i.e., with a filter matrix, where the estimate is obtained by means of a matrix-vector product of the filter matrix and the noisy signal vector. In this framework, minimum distortion, minimum variance distortionless response (MVDR), tradeoff, maximum signal-to-noise ratio (SNR), and Wiener filters are derived from the conventional speech enhancement approach and the recently introduced orthogonal decomposition approach. For each of the filters, we derive their properties in terms of output SNR and speech distortion. We then demonstrate how the ideas can be applied to single- and multichannel noise reduction in both the time and frequency domains as well as binaural noise reduction.


1 Review

1.1 Introduction

The problem of speech enhancement, or noise reduction as it is also sometimes called, is a well-known, longstanding problem with important applications in, for example, speech communication systems and hearing aids, where additive noise can, and often does, have a detrimental impact on the speech quality. Although the problem is a classical one and many solutions have been proposed throughout the years, it has arguably not been well understood, even for the comparably simple case of linear filters. Indeed, it is not until quite recently that steps have been taken to accurately formulate the problem and characterize the desirable properties of possible solutions. Simply put, the performance of speech enhancement methods can be assessed in terms of two quantities, namely noise reduction and speech distortion, and an optimal solution to the speech enhancement problem would, thus, explicitly take both into account. As an example that this has historically not been done, consider the classical and well-known Wiener filter. It is usually derived from a mean-square error (MSE) criterion, and it is not until recently that its properties in terms of noise reduction and speech distortion have been thoroughly analyzed [1]. Although it was shown in [1] that the Wiener filter indeed improves the signal-to-noise ratio (SNR) (this had not been shown before), it does so without any explicit control over the amount of distortion incurred on the speech signal. As a result, other filters are worth considering. The minimum variance distortionless response (MVDR) filter, in principle, guarantees that no distortion is incurred on the speech signal while the noise is reduced as much as possible. The maximum SNR filter, on the other hand, yields the highest possible output SNR but does so at the cost of a considerable amount of speech distortion. Methods that compete with linear filtering include spectral subtraction methods [2], subspace methods [3, 4], and statistical methods [5–7].

In this paper, we continue the research into methods for speech enhancement based on linear filtering. More specifically, we provide a brief overview of linear filters derived from the conventional approach and the recently introduced orthogonal decomposition approach. We do so in a more general framework than what is typical: the speech enhancement problem is stated as the problem of finding a rectangular filter matrix for estimating the speech signal vector from a noisy signal observation vector. Using the two aforementioned approaches, we derive the maximum SNR, Wiener, tradeoff, and MVDR filters and analyze and relate their properties. All of the derived filters are based on second-order statistics of the observed signal as well as the noise. While estimation of these statistics is not considered herein, there exist multiple well-known methods for conducting this estimation in practice in both single-channel [8, 9] and multichannel [10–12] scenarios. Finally, we demonstrate and discuss the application of the filters in various settings, including time and frequency domain enhancement and single- and multichannel enhancement.

The rest of the paper is organized as follows. In Section 1.2, we introduce the signal model and basic assumptions and state the problem at hand. We then, in Section 1.3, address the problem using the conventional approach, define various useful performance measures, and derive and compare some optimal filters. We then proceed to present an alternative approach based on the orthogonal decomposition in Section 1.4, and we use this to derive optimal filters. These are then also compared in terms of their noise reduction and speech distortion properties. In Section 1.5, we show how the two approaches can be applied in various speech enhancement contexts before concluding on the work in Section 2.

1.2 Signal model and problem formulation

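We consider the very general signal model of an observation signal vector of length $M$:

$$\mathbf{y} = \begin{bmatrix} y_1 & y_2 & \cdots & y_M \end{bmatrix}^T = \mathbf{x} + \mathbf{v},$$

where the superscript $T$ is the transpose operator, and $\mathbf{x}$ and $\mathbf{v}$ are the speech and noise signal vectors, respectively, which are defined similarly to the noisy signal vector, $\mathbf{y}$. We assume that the components of the two vectors $\mathbf{x}$ and $\mathbf{v}$ are zero mean, stationary, and circular. We further assume that these two vectors are uncorrelated, i.e., $E(\mathbf{x}\mathbf{v}^H) = E(\mathbf{v}\mathbf{x}^H) = \mathbf{0}_{M \times M}$, where $E(\cdot)$ denotes mathematical expectation, the superscript $H$ is the conjugate-transpose operator, and $\mathbf{0}_{M \times M}$ is a matrix of size $M \times M$ with all its elements equal to 0. In this context, the correlation matrix (of size $M \times M$) of the observations is:

$$\boldsymbol{\Phi}_{\mathbf{y}} = E(\mathbf{y}\mathbf{y}^H) = \boldsymbol{\Phi}_{\mathbf{x}} + \boldsymbol{\Phi}_{\mathbf{v}},$$

where $\boldsymbol{\Phi}_{\mathbf{x}} = E(\mathbf{x}\mathbf{x}^H)$ and $\boldsymbol{\Phi}_{\mathbf{v}} = E(\mathbf{v}\mathbf{v}^H)$ are the correlation matrices of $\mathbf{x}$ and $\mathbf{v}$, respectively. In the rest of this paper, we assume that the rank of the speech correlation matrix, $\boldsymbol{\Phi}_{\mathbf{x}}$, is equal to $P \le M$ and the rank of the noise correlation matrix, $\boldsymbol{\Phi}_{\mathbf{v}}$, is equal to $M$.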

In order to be able to derive appropriate performance measures and optimal linear filters that can achieve a clear objective according to these measures, it is of great importance to define, without any ambiguity, the desired signal that we want to estimate or extract from the observations. Also, in general, $\mathbf{y}$ should be written explicitly as a function of this desired signal. In some contexts, $x_1$, the first element of $\mathbf{x}$, is the desired signal; in other situations, the whole vector $\mathbf{x}$ or part of it is the desired signal vector. Therefore, in a general manner, our desired signal vector is defined as:

$$\mathbf{x}_Q = \begin{bmatrix} x_1 & x_2 & \cdots & x_Q \end{bmatrix}^T,$$

where $1 \le Q \le M$. In the same way, we define the vector $\mathbf{v}_Q$ as the first $Q$ components of $\mathbf{v}$. Then, the objective of speech enhancement (or noise reduction) is to estimate $\mathbf{x}_Q$ from $\mathbf{y}$. This should be done in such a way that the noise is reduced as much as possible with no or little distortion of the desired signal vector [1, 13–15]. In the rest of this study, we consider two important cases: without (conventional approach) and with the orthogonal decomposition of the speech signal vector.

1.3 Speech enhancement with the conventional approach

1.3.1 Principle

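Our objective is to estimate $\mathbf{x}_Q$ from $\mathbf{y}$, even though $\mathbf{y}$ is not an explicit function of $\mathbf{x}_Q$. With linear filtering techniques [3, 4, 16–20], the desired signal vector is estimated as:

$$\mathbf{z} = \mathbf{H}\mathbf{y} = \mathbf{x}_{\mathrm{fd}} + \mathbf{v}_{\mathrm{rn}}, \tag{4}$$

where $\mathbf{z}$ is supposed to be the estimate of $\mathbf{x}_Q$,

$$\mathbf{H} = \begin{bmatrix} \mathbf{h}_1^H \\ \mathbf{h}_2^H \\ \vdots \\ \mathbf{h}_Q^H \end{bmatrix}$$

is a rectangular filtering matrix of size $Q \times M$, $\mathbf{h}_q$, $q = 1, 2, \ldots, Q$ are complex-valued filters of length $M$, $\mathbf{x}_{\mathrm{fd}} = \mathbf{H}\mathbf{x}$ is the filtered desired signal, and $\mathbf{v}_{\mathrm{rn}} = \mathbf{H}\mathbf{v}$ is the residual noise. We deduce that the correlation matrix of $\mathbf{z}$ is:

$$\boldsymbol{\Phi}_{\mathbf{z}} = E(\mathbf{z}\mathbf{z}^H) = \boldsymbol{\Phi}_{\mathbf{x}_{\mathrm{fd}}} + \boldsymbol{\Phi}_{\mathbf{v}_{\mathrm{rn}}}, \tag{6}$$

where $\boldsymbol{\Phi}_{\mathbf{x}_{\mathrm{fd}}} = \mathbf{H}\boldsymbol{\Phi}_{\mathbf{x}}\mathbf{H}^H$ and $\boldsymbol{\Phi}_{\mathbf{v}_{\mathrm{rn}}} = \mathbf{H}\boldsymbol{\Phi}_{\mathbf{v}}\mathbf{H}^H$.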

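An interesting particular case is $Q = P = 1$. In this scenario, Equation 4 simplifies to:

$$z = \mathbf{h}^H \mathbf{y},$$

where $\mathbf{h}$ is a complex-valued filter of length $M$. Since $\boldsymbol{\Phi}_{\mathbf{x}}$ is a rank 1 matrix, it can be written as:

$$\boldsymbol{\Phi}_{\mathbf{x}} = \phi_{x_1} \mathbf{d}\mathbf{d}^H,$$

where $\phi_{x_1} = E(|x_1|^2)$ is the variance of $x_1$ and $\mathbf{d}$ is a vector of length $M$, whose first element is equal to 1.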

1.3.2 Performance measures

We are now ready to define the most important performance measures in the context of linear filtering.

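The input SNR is defined as:

$$\mathrm{iSNR} = \frac{\operatorname{tr}(\boldsymbol{\Phi}_{\mathbf{x}_Q})}{\operatorname{tr}(\boldsymbol{\Phi}_{\mathbf{v}_Q})}, \tag{9}$$

where $\operatorname{tr}(\cdot)$ denotes the trace of a square matrix, and $\boldsymbol{\Phi}_{\mathbf{x}_Q} = E(\mathbf{x}_Q\mathbf{x}_Q^H)$ and $\boldsymbol{\Phi}_{\mathbf{v}_Q} = E(\mathbf{v}_Q\mathbf{v}_Q^H)$ are the correlation matrices (of size $Q \times Q$) of $\mathbf{x}_Q$ and $\mathbf{v}_Q$, respectively.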

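The output SNR, obtained from Equation 6, helps quantify the SNR after filtering. It is given by:

$$\mathrm{oSNR}(\mathbf{H}) = \frac{\operatorname{tr}(\mathbf{H}\boldsymbol{\Phi}_{\mathbf{x}}\mathbf{H}^H)}{\operatorname{tr}(\mathbf{H}\boldsymbol{\Phi}_{\mathbf{v}}\mathbf{H}^H)}.$$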

Then, the main objective of speech enhancement is to find an appropriate H that makes the output SNR greater than the input SNR. Consequently, the quality of the noisy signal may be enhanced.

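The noise reduction factor quantifies the amount of noise being rejected by $\mathbf{H}$. This quantity is defined as the ratio of the power of the original noise over the power of the noise remaining after filtering, i.e.,

$$\xi_{\mathrm{nr}}(\mathbf{H}) = \frac{\operatorname{tr}(\boldsymbol{\Phi}_{\mathbf{v}_Q})}{\operatorname{tr}(\mathbf{H}\boldsymbol{\Phi}_{\mathbf{v}}\mathbf{H}^H)}.$$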

Any good choice of H should lead to ξnr(H)≥1, in which case the noise has been attenuated.

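The desired speech signal can be distorted by the rectangular filtering matrix. Therefore, the speech reduction factor is defined as:

$$\xi_{\mathrm{sr}}(\mathbf{H}) = \frac{\operatorname{tr}(\boldsymbol{\Phi}_{\mathbf{x}_Q})}{\operatorname{tr}(\mathbf{H}\boldsymbol{\Phi}_{\mathbf{x}}\mathbf{H}^H)}.$$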

For optimal filters, we should have ξsr(H)≥1 as the optimal filter would otherwise amplify the desired signal.

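By making the appropriate substitutions, one can derive the relationship among the measures defined so far, i.e.,

$$\frac{\mathrm{oSNR}(\mathbf{H})}{\mathrm{iSNR}} = \frac{\xi_{\mathrm{nr}}(\mathbf{H})}{\xi_{\mathrm{sr}}(\mathbf{H})}.$$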

This fundamental expression indicates the equivalence between gain/loss in SNR and distortion (for both speech and noise).

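Another way to measure the distortion of the desired signal vector due to the filtering operation is via the speech distortion index defined as:

$$\upsilon_{\mathrm{sd}}(\mathbf{H}) = \frac{E\{(\mathbf{x}_{\mathrm{fd}} - \mathbf{x}_Q)^H(\mathbf{x}_{\mathrm{fd}} - \mathbf{x}_Q)\}}{E(\mathbf{x}_Q^H\mathbf{x}_Q)} = \frac{\operatorname{tr}[(\mathbf{H} - \mathbf{I}_{\mathrm{i}})\boldsymbol{\Phi}_{\mathbf{x}}(\mathbf{H} - \mathbf{I}_{\mathrm{i}})^H]}{\operatorname{tr}(\boldsymbol{\Phi}_{\mathbf{x}_Q})},$$

where $\mathbf{I}_{\mathrm{i}} = [\mathbf{I}_Q \;\; \mathbf{0}_{Q \times (M-Q)}]$ selects the first $Q$ elements of a vector of length $M$. The speech distortion index is always greater than or equal to 0 and should be upper bounded by 1 for optimal rectangular filtering matrices (see endnote a); the higher the value of $\upsilon_{\mathrm{sd}}(\mathbf{H})$ is, the more the desired signal is distorted.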

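We define the error signal vector between the estimated and desired signals as:

$$\mathbf{e} = \mathbf{z} - \mathbf{x}_Q = \mathbf{H}\mathbf{y} - \mathbf{x}_Q,$$

which can also be expressed as the sum of two uncorrelated error signal vectors:

$$\mathbf{e} = \mathbf{e}_{\mathrm{ds}} + \mathbf{e}_{\mathrm{rs}},$$

where

$$\mathbf{e}_{\mathrm{ds}} = \mathbf{x}_{\mathrm{fd}} - \mathbf{x}_Q = (\mathbf{H} - \mathbf{I}_{\mathrm{i}})\mathbf{x}$$

is the signal distortion due to the rectangular filtering matrix and

$$\mathbf{e}_{\mathrm{rs}} = \mathbf{v}_{\mathrm{rn}} = \mathbf{H}\mathbf{v}$$

represents the residual noise. Therefore, the MSE criterion is:

$$J(\mathbf{H}) = \operatorname{tr}[E(\mathbf{e}\mathbf{e}^H)] = \operatorname{tr}(\boldsymbol{\Phi}_{\mathbf{x}_Q}) + \operatorname{tr}(\mathbf{H}\boldsymbol{\Phi}_{\mathbf{y}}\mathbf{H}^H) - \operatorname{tr}(\mathbf{H}\boldsymbol{\Phi}_{\mathbf{x}}\mathbf{I}_{\mathrm{i}}^T) - \operatorname{tr}(\mathbf{I}_{\mathrm{i}}\boldsymbol{\Phi}_{\mathbf{x}}\mathbf{H}^H),$$

where

$$\mathbf{I}_{\mathrm{i}} = \begin{bmatrix} \mathbf{I}_Q & \mathbf{0}_{Q \times (M-Q)} \end{bmatrix}$$

is the identity filtering matrix, with $\mathbf{I}_Q$ being the $Q \times Q$ identity matrix. Using the fact that $E(\mathbf{e}_{\mathrm{ds}}\mathbf{e}_{\mathrm{rs}}^H) = \mathbf{0}_{Q \times Q}$, $J(\mathbf{H})$ can be expressed as the sum of two other MSEs, i.e.,

$$J(\mathbf{H}) = J_{\mathrm{ds}}(\mathbf{H}) + J_{\mathrm{rs}}(\mathbf{H}),$$

where

$$J_{\mathrm{ds}}(\mathbf{H}) = \operatorname{tr}[E(\mathbf{e}_{\mathrm{ds}}\mathbf{e}_{\mathrm{ds}}^H)] = \operatorname{tr}(\boldsymbol{\Phi}_{\mathbf{x}_Q})\,\upsilon_{\mathrm{sd}}(\mathbf{H})$$

and

$$J_{\mathrm{rs}}(\mathbf{H}) = \operatorname{tr}[E(\mathbf{e}_{\mathrm{rs}}\mathbf{e}_{\mathrm{rs}}^H)] = \frac{\operatorname{tr}(\boldsymbol{\Phi}_{\mathbf{v}_Q})}{\xi_{\mathrm{nr}}(\mathbf{H})}.$$

We deduce that

$$\frac{J_{\mathrm{ds}}(\mathbf{H})}{J_{\mathrm{rs}}(\mathbf{H})} = \mathrm{iSNR} \times \xi_{\mathrm{nr}}(\mathbf{H}) \times \upsilon_{\mathrm{sd}}(\mathbf{H}) = \mathrm{oSNR}(\mathbf{H}) \times \xi_{\mathrm{sr}}(\mathbf{H}) \times \upsilon_{\mathrm{sd}}(\mathbf{H}).$$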

We observe how the MSEs are related to the different performance measures.
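As a rough numerical illustration of the performance measures of this section, the following NumPy sketch evaluates them for an arbitrary rectangular filtering matrix. The function names (`performance_measures`, `random_correlation`), the dimensions, and the randomly generated correlation matrices are assumptions made for the example and do not come from the paper.

```python
import numpy as np

def performance_measures(H, Phi_x, Phi_v):
    """iSNR, oSNR, noise/speech reduction factors and speech distortion index
    for a Q x M filtering matrix H (conventional approach)."""
    Q, M = H.shape
    I_i = np.hstack([np.eye(Q), np.zeros((Q, M - Q))])  # identity filtering matrix [I_Q 0]
    tr = lambda A: np.trace(A).real
    sig_in = tr(Phi_x[:Q, :Q])              # tr(Phi_xQ)
    noise_in = tr(Phi_v[:Q, :Q])            # tr(Phi_vQ)
    sig_out = tr(H @ Phi_x @ H.conj().T)    # power of the filtered desired signal
    noise_out = tr(H @ Phi_v @ H.conj().T)  # power of the residual noise
    D = H - I_i
    return {
        "iSNR": sig_in / noise_in,
        "oSNR": sig_out / noise_out,
        "xi_nr": noise_in / noise_out,
        "xi_sr": sig_in / sig_out,
        "v_sd": tr(D @ Phi_x @ D.conj().T) / sig_in,
    }

def random_correlation(M, rank, rng):
    """Hypothetical helper: random Hermitian correlation matrix of a given rank."""
    A = rng.standard_normal((M, rank)) + 1j * rng.standard_normal((M, rank))
    return A @ A.conj().T / rank

rng = np.random.default_rng(0)
M, P, Q = 6, 3, 2
Phi_x = random_correlation(M, P, rng)                     # rank-P speech statistics
Phi_v = random_correlation(M, M, rng) + 0.1 * np.eye(M)   # full-rank noise statistics
H = rng.standard_normal((Q, M)) + 1j * rng.standard_normal((Q, M))
m = performance_measures(H, Phi_x, Phi_v)
print(m)
```

For any nonzero filtering matrix, the ratio `m["oSNR"] / m["iSNR"]` should match `m["xi_nr"] / m["xi_sr"]` up to rounding, and the identity filtering matrix should give a speech distortion index of zero with no change in SNR.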

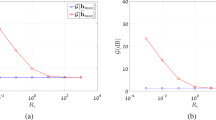

1.3.3 Optimal filters

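Let $\lambda_{\max}$ be the maximum eigenvalue of the matrix $\boldsymbol{\Phi}_{\mathbf{v}}^{-1}\boldsymbol{\Phi}_{\mathbf{x}}$ with corresponding eigenvector $\mathbf{b}_{\max}$. It can be shown that the maximum SNR filtering matrix is given by [20]:

$$\mathbf{H}_{\max} = \begin{bmatrix} \beta_1 \mathbf{b}_{\max}^H \\ \beta_2 \mathbf{b}_{\max}^H \\ \vdots \\ \beta_Q \mathbf{b}_{\max}^H \end{bmatrix},$$

where $\beta_q$, $q = 1, 2, \ldots, Q$ are arbitrary complex numbers with at least one of them different from 0. The corresponding output SNR is:

$$\mathrm{oSNR}(\mathbf{H}_{\max}) = \lambda_{\max}.$$

The output SNR with the maximum SNR filtering matrix is always greater than or equal to the input SNR, i.e., $\mathrm{oSNR}(\mathbf{H}_{\max}) \ge \mathrm{iSNR}$. We also have $\mathrm{oSNR}(\mathbf{H}) \le \lambda_{\max}$, $\forall \mathbf{H}$. The best way to find the $\beta_q$s is by minimizing distortion. By substituting $\mathbf{H}_{\max}$ into the distortion-based MSE and minimizing with respect to the $\beta_q$s, we get

$$\mathbf{H}_{\max} = \frac{\mathbf{I}_{\mathrm{i}}\boldsymbol{\Phi}_{\mathbf{x}}\mathbf{b}_{\max}\mathbf{b}_{\max}^H}{\mathbf{b}_{\max}^H\boldsymbol{\Phi}_{\mathbf{x}}\mathbf{b}_{\max}},$$

where the denominator equals $\lambda_{\max}$ when $\mathbf{b}_{\max}$ is normalized such that $\mathbf{b}_{\max}^H\boldsymbol{\Phi}_{\mathbf{v}}\mathbf{b}_{\max} = 1$.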

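If we differentiate the MSE criterion, $J(\mathbf{H})$, with respect to $\mathbf{H}$ and equate the result to zero, we find the Wiener filtering matrix:

$$\mathbf{H}_{\mathrm{W}} = \mathbf{I}_{\mathrm{i}}\boldsymbol{\Phi}_{\mathbf{x}}\boldsymbol{\Phi}_{\mathbf{y}}^{-1} = \mathbf{I}_{\mathrm{i}}\left(\mathbf{I}_M - \boldsymbol{\Phi}_{\mathbf{v}}\boldsymbol{\Phi}_{\mathbf{y}}^{-1}\right),$$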

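where $\mathbf{I}_M$ is the $M \times M$ identity matrix. The output SNR with the Wiener filtering matrix is always greater than or equal to the input SNR, i.e., $\mathrm{oSNR}(\mathbf{H}_{\mathrm{W}}) \ge \mathrm{iSNR}$. Obviously, we have

$$\mathrm{oSNR}(\mathbf{H}_{\mathrm{W}}) \le \mathrm{oSNR}(\mathbf{H}_{\max})$$

and, in general,

$$\upsilon_{\mathrm{sd}}(\mathbf{H}_{\mathrm{W}}) \le \upsilon_{\mathrm{sd}}(\mathbf{H}_{\max}).$$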

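To better compromise between noise reduction and speech distortion, we can minimize the speech distortion index with the constraint that the noise reduction factor is equal to a positive value that is greater than 1, i.e.,

$$\min_{\mathbf{H}} J_{\mathrm{ds}}(\mathbf{H}) \quad \text{subject to} \quad J_{\mathrm{rs}}(\mathbf{H}) = \beta \operatorname{tr}(\boldsymbol{\Phi}_{\mathbf{v}_Q}),$$

where $0 < \beta < 1$ to ensure that we get some noise reduction. The previous optimization leads to the tradeoff filter:

$$\mathbf{H}_{\mathrm{T},\mu} = \mathbf{I}_{\mathrm{i}}\boldsymbol{\Phi}_{\mathbf{x}}\left(\boldsymbol{\Phi}_{\mathbf{x}} + \mu\boldsymbol{\Phi}_{\mathbf{v}}\right)^{-1} = \mathbf{I}_{\mathrm{i}}\boldsymbol{\Phi}_{\mathbf{x}}\left[\boldsymbol{\Phi}_{\mathbf{y}} + (\mu - 1)\boldsymbol{\Phi}_{\mathbf{v}}\right]^{-1},$$

where $\mu > 0$ is a Lagrange multiplier. The output SNR with the tradeoff filtering matrix is always greater than or equal to the input SNR, i.e., $\mathrm{oSNR}(\mathbf{H}_{\mathrm{T},\mu}) \ge \mathrm{iSNR}$, $\forall \mu > 0$. Usually, $\mu$ is chosen in a heuristic way, so that for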

- $\mu = 1$, $\mathbf{H}_{\mathrm{T},1} = \mathbf{H}_{\mathrm{W}}$, which is the Wiener filtering matrix;

- $\mu > 1$, results in a filtering matrix with low residual noise at the expense of high speech distortion (as compared to Wiener); and

- $\mu < 1$, results in a filtering matrix with high residual noise and low speech distortion (as compared to Wiener).
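The maximum SNR, Wiener, and tradeoff filtering matrices of the conventional approach can be sketched numerically as follows. This is a minimal illustration under assumed random statistics; the function names, dimensions, and the values of $\mu$ are hypothetical. The closed forms used are the tradeoff matrix $\mathbf{I}_{\mathrm{i}}\boldsymbol{\Phi}_{\mathbf{x}}(\boldsymbol{\Phi}_{\mathbf{x}} + \mu\boldsymbol{\Phi}_{\mathbf{v}})^{-1}$ (Wiener for $\mu = 1$) and the rank-one matrix $\boldsymbol{\beta}\mathbf{b}_{\max}^H$ with the distortion-minimizing $\boldsymbol{\beta}$.

```python
import numpy as np

def herm(A):
    """Conjugate transpose."""
    return A.conj().T

def output_snr(H, Phi_x, Phi_v):
    return np.trace(H @ Phi_x @ herm(H)).real / np.trace(H @ Phi_v @ herm(H)).real

def tradeoff_filter(Phi_x, Phi_v, Q, mu=1.0):
    """I_i Phi_x (Phi_x + mu Phi_v)^{-1}; mu = 1 gives the Wiener filtering matrix."""
    # Phi_x[:Q, :] equals I_i Phi_x; the matrix being inverted is Hermitian.
    return herm(np.linalg.solve(Phi_x + mu * Phi_v, herm(Phi_x[:Q, :])))

def max_snr_filter(Phi_x, Phi_v, Q):
    """Maximum SNR matrix beta b_max^H with the minimum-distortion choice of beta."""
    lam, B = np.linalg.eig(np.linalg.solve(Phi_v, Phi_x))  # eigenpairs of Phi_v^{-1} Phi_x
    k = np.argmax(lam.real)
    b = B[:, k]
    beta = Phi_x[:Q, :] @ b / np.vdot(b, Phi_x @ b).real
    return np.outer(beta, b.conj()), lam[k].real

rng = np.random.default_rng(0)
M, P, Q = 6, 3, 2
A = rng.standard_normal((M, P)) + 1j * rng.standard_normal((M, P))
Phi_x = A @ herm(A)                        # rank-P speech correlation matrix
N = rng.standard_normal((M, M)) + 1j * rng.standard_normal((M, M))
Phi_v = N @ herm(N) + 0.1 * np.eye(M)      # full-rank noise correlation matrix

H_max, lam_max = max_snr_filter(Phi_x, Phi_v, Q)
H_W = tradeoff_filter(Phi_x, Phi_v, Q, mu=1.0)
H_hi = tradeoff_filter(Phi_x, Phi_v, Q, mu=5.0)   # more noise reduction, more distortion
H_lo = tradeoff_filter(Phi_x, Phi_v, Q, mu=0.2)   # less noise reduction, less distortion

print("lambda_max      :", lam_max)
print("oSNR(H_max)     :", output_snr(H_max, Phi_x, Phi_v))
print("oSNR(H_W)       :", output_snr(H_W, Phi_x, Phi_v))
```

Increasing $\mu$ should lower the residual noise power $\operatorname{tr}(\mathbf{H}\boldsymbol{\Phi}_{\mathbf{v}}\mathbf{H}^H)$ and raise the distortion $J_{\mathrm{ds}}(\mathbf{H})$, which is the tradeoff described in the list above.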

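We should have for $\mu \ge 1$,

$$\mathrm{iSNR} \le \mathrm{oSNR}(\mathbf{H}_{\mathrm{W}}) \le \mathrm{oSNR}(\mathbf{H}_{\mathrm{T},\mu}) \le \mathrm{oSNR}(\mathbf{H}_{\max}) = \lambda_{\max},$$

and for $\mu \le 1$,

$$\mathrm{iSNR} \le \mathrm{oSNR}(\mathbf{H}_{\mathrm{T},\mu}) \le \mathrm{oSNR}(\mathbf{H}_{\mathrm{W}}) \le \mathrm{oSNR}(\mathbf{H}_{\max}) = \lambda_{\max}.$$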

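Another filter can be derived by just minimizing $J_{\mathrm{ds}}(\mathbf{H})$. We obtain the minimum distortion (MD) rectangular filtering matrix:

$$\mathbf{H}_{\mathrm{MD}} = \mathbf{I}_{\mathrm{i}}\boldsymbol{\Phi}_{\mathbf{x}}\boldsymbol{\Phi}_{\mathbf{x}}^{\dagger},$$

where $\boldsymbol{\Phi}_{\mathbf{x}}^{\dagger}$ is the pseudoinverse of $\boldsymbol{\Phi}_{\mathbf{x}}$. If $\boldsymbol{\Phi}_{\mathbf{x}}$ is a full-rank matrix, $\mathbf{H}_{\mathrm{MD}}$ becomes the identity filter, $\mathbf{I}_{\mathrm{i}}$, which does not affect the observations. The MD filter is very close to the well-known MVDR filter.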

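For $Q = P = 1$, it is possible to derive the MVDR filter. Indeed, by minimizing the variance of the filter's output, $\phi_z = \mathbf{h}^H\boldsymbol{\Phi}_{\mathbf{y}}\mathbf{h}$, or the variance of the residual noise, $\phi_{v_{\mathrm{rn}}} = \mathbf{h}^H\boldsymbol{\Phi}_{\mathbf{v}}\mathbf{h}$, subject to the distortionless constraint, $\mathbf{h}^H\mathbf{d} = 1$, we easily get

$$\mathbf{h}_{\mathrm{MVDR}} = \frac{\boldsymbol{\Phi}_{\mathbf{y}}^{-1}\mathbf{d}}{\mathbf{d}^H\boldsymbol{\Phi}_{\mathbf{y}}^{-1}\mathbf{d}} = \frac{\boldsymbol{\Phi}_{\mathbf{v}}^{-1}\mathbf{d}}{\mathbf{d}^H\boldsymbol{\Phi}_{\mathbf{v}}^{-1}\mathbf{d}}.$$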

It can be checked that Jds(hMVDR)=0, proving that hMVDR is distortionless.
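The rank-one case can be checked numerically with the standard closed form $\mathbf{h}_{\mathrm{MVDR}} = \boldsymbol{\Phi}^{-1}\mathbf{d} / (\mathbf{d}^H\boldsymbol{\Phi}^{-1}\mathbf{d})$, where $\boldsymbol{\Phi}$ may be either the noise or the noisy correlation matrix. The sketch below uses randomly generated, purely hypothetical values for $\mathbf{d}$, $\phi_{x_1}$, and $\boldsymbol{\Phi}_{\mathbf{v}}$; the helper name `mvdr` is also an assumption of this example.

```python
import numpy as np

def mvdr(Phi, d):
    """MVDR filter Phi^{-1} d / (d^H Phi^{-1} d) for a Hermitian positive-definite Phi."""
    u = np.linalg.solve(Phi, d)
    return u / np.vdot(d, u)

rng = np.random.default_rng(2)
M = 5
# Hypothetical vector d with first element equal to 1, as required by the model.
d = np.r_[1.0, rng.standard_normal(M - 1) + 1j * rng.standard_normal(M - 1)]
phi_x1 = 2.0                                     # assumed variance of x_1
Phi_x = phi_x1 * np.outer(d, d.conj())           # rank-1 speech correlation matrix
A = rng.standard_normal((M, M)) + 1j * rng.standard_normal((M, M))
Phi_v = A @ A.conj().T + 0.1 * np.eye(M)
Phi_y = Phi_x + Phi_v

h_v = mvdr(Phi_v, d)      # derived from the noise statistics
h_y = mvdr(Phi_y, d)      # derived from the noisy-signal statistics

i_1 = np.zeros(M); i_1[0] = 1.0                  # selects x_1 (identity filter for Q = 1)
J_ds = np.vdot(h_v - i_1, Phi_x @ (h_v - i_1)).real
o_snr = np.vdot(h_v, Phi_x @ h_v).real / np.vdot(h_v, Phi_v @ h_v).real
print("h^H d =", np.vdot(h_v, d), " J_ds =", J_ds, " oSNR =", o_snr)
```

Both forms should give the same filter, satisfy $\mathbf{h}^H\mathbf{d} = 1$, and yield $J_{\mathrm{ds}} = 0$ up to rounding errors.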

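It is also possible to derive the MVDR (square) filtering matrix for $Q = M$. Using the well-known eigenvalue decomposition [21], the speech correlation matrix can be diagonalized as:

$$\boldsymbol{\Phi}_{\mathbf{x}} = \mathbf{Q}_{\mathbf{x}}\boldsymbol{\Lambda}_{\mathbf{x}}\mathbf{Q}_{\mathbf{x}}^H,$$

where

$$\mathbf{Q}_{\mathbf{x}} = \begin{bmatrix} \mathbf{q}_{\mathbf{x},1} & \mathbf{q}_{\mathbf{x},2} & \cdots & \mathbf{q}_{\mathbf{x},M} \end{bmatrix}$$

is a unitary matrix, i.e., $\mathbf{Q}_{\mathbf{x}}^H\mathbf{Q}_{\mathbf{x}} = \mathbf{Q}_{\mathbf{x}}\mathbf{Q}_{\mathbf{x}}^H = \mathbf{I}_M$, and

$$\boldsymbol{\Lambda}_{\mathbf{x}} = \operatorname{diag}(\lambda_{\mathbf{x},1}, \lambda_{\mathbf{x},2}, \ldots, \lambda_{\mathbf{x},M})$$

is a diagonal matrix. The orthonormal vectors $\mathbf{q}_{\mathbf{x},1}, \mathbf{q}_{\mathbf{x},2}, \ldots, \mathbf{q}_{\mathbf{x},M}$ are the eigenvectors corresponding, respectively, to the eigenvalues $\lambda_{\mathbf{x},1}, \lambda_{\mathbf{x},2}, \ldots, \lambda_{\mathbf{x},M}$ of the matrix $\boldsymbol{\Phi}_{\mathbf{x}}$, where $\lambda_{\mathbf{x},1} \ge \lambda_{\mathbf{x},2} \ge \cdots \ge \lambda_{\mathbf{x},P} > 0$ and $\lambda_{\mathbf{x},P+1} = \lambda_{\mathbf{x},P+2} = \cdots = \lambda_{\mathbf{x},M} = 0$. Let

$$\mathbf{Q}_{\mathbf{x}} = \begin{bmatrix} \mathbf{T}_{\mathbf{x}} & \boldsymbol{\Xi}_{\mathbf{x}} \end{bmatrix},$$

where the $M \times P$ matrix $\mathbf{T}_{\mathbf{x}}$ contains the eigenvectors corresponding to the nonzero eigenvalues of $\boldsymbol{\Phi}_{\mathbf{x}}$ and the $M \times (M-P)$ matrix $\boldsymbol{\Xi}_{\mathbf{x}}$ contains the eigenvectors corresponding to the null eigenvalues of $\boldsymbol{\Phi}_{\mathbf{x}}$. It can be verified that

$$\mathbf{I}_M = \mathbf{T}_{\mathbf{x}}\mathbf{T}_{\mathbf{x}}^H + \boldsymbol{\Xi}_{\mathbf{x}}\boldsymbol{\Xi}_{\mathbf{x}}^H. \tag{43}$$

Notice that $\mathbf{T}_{\mathbf{x}}\mathbf{T}_{\mathbf{x}}^H$ and $\boldsymbol{\Xi}_{\mathbf{x}}\boldsymbol{\Xi}_{\mathbf{x}}^H$ are two orthogonal projection matrices of rank $P$ and $M-P$, respectively. Hence, $\mathbf{T}_{\mathbf{x}}\mathbf{T}_{\mathbf{x}}^H$ is the orthogonal projector onto the speech subspace (where all the energy of the speech signal is concentrated), or the range of $\boldsymbol{\Phi}_{\mathbf{x}}$, and $\boldsymbol{\Xi}_{\mathbf{x}}\boldsymbol{\Xi}_{\mathbf{x}}^H$ is the orthogonal projector onto the null subspace of $\boldsymbol{\Phi}_{\mathbf{x}}$. Using Equation 43, we can write the speech vector as:

$$\mathbf{x} = \mathbf{Q}_{\mathbf{x}}\mathbf{Q}_{\mathbf{x}}^H\mathbf{x} = \mathbf{T}_{\mathbf{x}}\mathbf{T}_{\mathbf{x}}^H\mathbf{x}. \tag{44}$$

We deduce from Equation 44 that the distortionless constraint is:

$$\mathbf{H}\mathbf{T}_{\mathbf{x}} = \mathbf{T}_{\mathbf{x}},$$

since, in this case, $\mathbf{H}\mathbf{x} = \mathbf{H}\mathbf{T}_{\mathbf{x}}\mathbf{T}_{\mathbf{x}}^H\mathbf{x} = \mathbf{T}_{\mathbf{x}}\mathbf{T}_{\mathbf{x}}^H\mathbf{x} = \mathbf{x}$. Now, from the criterion:

$$\min_{\mathbf{H}} \operatorname{tr}(\mathbf{H}\boldsymbol{\Phi}_{\mathbf{v}}\mathbf{H}^H) \quad \text{subject to} \quad \mathbf{H}\mathbf{T}_{\mathbf{x}} = \mathbf{T}_{\mathbf{x}},$$

we find the MVDR:

$$\mathbf{H}_{\mathrm{MVDR}} = \mathbf{T}_{\mathbf{x}}\left(\mathbf{T}_{\mathbf{x}}^H\boldsymbol{\Phi}_{\mathbf{v}}^{-1}\mathbf{T}_{\mathbf{x}}\right)^{-1}\mathbf{T}_{\mathbf{x}}^H\boldsymbol{\Phi}_{\mathbf{v}}^{-1}. \tag{47}$$

Equation 47 can also be expressed as:

$$\mathbf{H}_{\mathrm{MVDR}} = \mathbf{T}_{\mathbf{x}}\left(\mathbf{T}_{\mathbf{x}}^H\boldsymbol{\Phi}_{\mathbf{y}}^{-1}\mathbf{T}_{\mathbf{x}}\right)^{-1}\mathbf{T}_{\mathbf{x}}^H\boldsymbol{\Phi}_{\mathbf{y}}^{-1}.$$

Of course, for $P = M$, the MVDR filtering matrix simplifies to the identity matrix, i.e., $\mathbf{H}_{\mathrm{MVDR}} = \mathbf{I}_M$. As a consequence, we can state that the higher the dimension of the null space of $\boldsymbol{\Phi}_{\mathbf{x}}$ is, the more efficient the MVDR is in terms of noise reduction. The best scenario corresponds to $P = 1$. We can verify that $J_{\mathrm{ds}}(\mathbf{H}_{\mathrm{MVDR}}) = 0$.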
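A minimal sketch of the square MVDR filtering matrix follows, built from the eigenvectors of $\boldsymbol{\Phi}_{\mathbf{x}}$ that belong to its nonzero eigenvalues and using the standard constrained least-squares form $\mathbf{T}_{\mathbf{x}}(\mathbf{T}_{\mathbf{x}}^H\boldsymbol{\Phi}_{\mathbf{v}}^{-1}\mathbf{T}_{\mathbf{x}})^{-1}\mathbf{T}_{\mathbf{x}}^H\boldsymbol{\Phi}_{\mathbf{v}}^{-1}$. The function name `mvdr_square`, the relative eigenvalue threshold `tol`, and the random test matrices are assumptions for illustration only.

```python
import numpy as np

def mvdr_square(Phi_x, Phi_v, tol=1e-10):
    """Square (Q = M) MVDR filtering matrix and the speech-subspace basis T_x."""
    lam, Qx = np.linalg.eigh(Phi_x)              # eigenvalues in ascending order
    T = Qx[:, lam > tol * lam.max()]             # eigenvectors of the nonzero eigenvalues (M x P)
    W = np.linalg.solve(Phi_v, T)                # Phi_v^{-1} T_x
    # W^H equals T_x^H Phi_v^{-1} because Phi_v is Hermitian.
    H = T @ np.linalg.solve(T.conj().T @ W, W.conj().T)
    return H, T

rng = np.random.default_rng(4)
M, P = 6, 2
A = rng.standard_normal((M, P)) + 1j * rng.standard_normal((M, P))
Phi_x = A @ A.conj().T                           # rank-P speech correlation matrix
B = rng.standard_normal((M, M)) + 1j * rng.standard_normal((M, M))
Phi_v = B @ B.conj().T + 0.1 * np.eye(M)

H_mvdr, T_x = mvdr_square(Phi_x, Phi_v)
noise_in = np.trace(Phi_v).real
noise_out = np.trace(H_mvdr @ Phi_v @ H_mvdr.conj().T).real
print("rank of speech subspace:", T_x.shape[1])
print("noise reduction factor :", noise_in / noise_out)

# Full-rank speech statistics (P = M): the filter should reduce to the identity.
H_full, _ = mvdr_square(Phi_x + 0.5 * np.eye(M), Phi_v)
```

The returned matrix should leave the speech subspace untouched (`H_mvdr @ T_x` equals `T_x`), while the noise power after filtering cannot exceed the input noise power, since the identity matrix also satisfies the constraint.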

1.4 Speech enhancement with the orthogonal decomposition of the speech signal vector

1.4.1 Principle

Another perspective for speech enhancement is to extract the desired signal vector, x Q , from x. This way, the observation signal vector, y, will be an explicit function of x Q . As a consequence, the objectives that we wish to achieve are much easier to handle.

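In this section, we assume that the elements $x_q$, $q = 1, 2, \ldots, Q$ are not fully coherent, so that $\boldsymbol{\Phi}_{\mathbf{x}_Q}$ is a full-rank matrix. To extract $\mathbf{x}_Q$ from $\mathbf{x}$, we need to decompose $\mathbf{x}$ into two orthogonal components: one correlated with (or a linear transformation of) the desired signal vector and the other one orthogonal to $\mathbf{x}_Q$, which will, hence, be considered as an interference signal vector. Specifically, the vector $\mathbf{x}$ is decomposed into the following form [22, 23]:

$$\mathbf{x} = \boldsymbol{\Phi}_{\mathbf{x}\mathbf{x}_Q}\boldsymbol{\Phi}_{\mathbf{x}_Q}^{-1}\mathbf{x}_Q + \mathbf{x}_{\mathrm{i}} = \mathbf{x}_{\mathrm{d}} + \mathbf{x}_{\mathrm{i}},$$

where

$$\mathbf{x}_{\mathrm{d}} = \boldsymbol{\Gamma}_{\mathbf{x}\mathbf{x}_Q}\mathbf{x}_Q$$

is a linear transformation of the desired signal vector, $\boldsymbol{\Phi}_{\mathbf{x}\mathbf{x}_Q} = E(\mathbf{x}\mathbf{x}_Q^H)$ is the cross-correlation matrix (of size $M \times Q$) between $\mathbf{x}$ and $\mathbf{x}_Q$, $\boldsymbol{\Gamma}_{\mathbf{x}\mathbf{x}_Q} = \boldsymbol{\Phi}_{\mathbf{x}\mathbf{x}_Q}\boldsymbol{\Phi}_{\mathbf{x}_Q}^{-1}$, and

$$\mathbf{x}_{\mathrm{i}} = \mathbf{x} - \mathbf{x}_{\mathrm{d}}$$

is the interference signal vector. It is easy to see that $\mathbf{x}_{\mathrm{d}}$ and $\mathbf{x}_{\mathrm{i}}$ are orthogonal (see endnote b), i.e.,

$$E(\mathbf{x}_{\mathrm{d}}\mathbf{x}_{\mathrm{i}}^H) = \mathbf{0}_{M \times M}.$$

We observe that the first $Q$ elements of $\mathbf{x}_{\mathrm{d}}$ and $\mathbf{x}_{\mathrm{i}}$ are equal to $\mathbf{x}_Q$ and $\mathbf{0}_{Q \times 1}$, respectively. Now, we can express the observation signal vector as an explicit function of $\mathbf{x}_Q$, i.e.,

$$\mathbf{y} = \boldsymbol{\Gamma}_{\mathbf{x}\mathbf{x}_Q}\mathbf{x}_Q + \mathbf{x}_{\mathrm{i}} + \mathbf{v} = \mathbf{x}_{\mathrm{d}} + \mathbf{x}_{\mathrm{i}} + \mathbf{v}.$$

With this approach, the estimator is:

$$\mathbf{z}' = \mathbf{H}'\mathbf{y} = \mathbf{x}'_{\mathrm{fd}} + \mathbf{x}'_{\mathrm{ri}} + \mathbf{v}'_{\mathrm{rn}},$$

where

$$\mathbf{H}' = \begin{bmatrix} \mathbf{h}_1^{\prime H} \\ \mathbf{h}_2^{\prime H} \\ \vdots \\ \mathbf{h}_Q^{\prime H} \end{bmatrix}$$

is a rectangular filtering matrix of size $Q \times M$, $\mathbf{h}'_q$, $q = 1, 2, \ldots, Q$ are complex-valued filters of length $M$, $\mathbf{x}'_{\mathrm{fd}} = \mathbf{H}'\boldsymbol{\Gamma}_{\mathbf{x}\mathbf{x}_Q}\mathbf{x}_Q$ is the filtered desired signal, $\mathbf{x}'_{\mathrm{ri}} = \mathbf{H}'\mathbf{x}_{\mathrm{i}}$ is the residual interference, and $\mathbf{v}'_{\mathrm{rn}} = \mathbf{H}'\mathbf{v}$ is the residual noise. The correlation matrix of $\mathbf{z}'$ is then:

$$\boldsymbol{\Phi}_{\mathbf{z}'} = \boldsymbol{\Phi}_{\mathbf{x}'_{\mathrm{fd}}} + \boldsymbol{\Phi}_{\mathbf{x}'_{\mathrm{ri}}} + \boldsymbol{\Phi}_{\mathbf{v}'_{\mathrm{rn}}}, \tag{56}$$

where $\boldsymbol{\Phi}_{\mathbf{x}'_{\mathrm{fd}}} = \mathbf{H}'\boldsymbol{\Phi}_{\mathbf{x}_{\mathrm{d}}}\mathbf{H}^{\prime H}$, with $\boldsymbol{\Phi}_{\mathbf{x}_{\mathrm{d}}} = \boldsymbol{\Gamma}_{\mathbf{x}\mathbf{x}_Q}\boldsymbol{\Phi}_{\mathbf{x}_Q}\boldsymbol{\Gamma}_{\mathbf{x}\mathbf{x}_Q}^H$ being the correlation matrix (whose rank is equal to $Q$) of $\mathbf{x}_{\mathrm{d}}$, $\boldsymbol{\Phi}_{\mathbf{x}'_{\mathrm{ri}}} = \mathbf{H}'\boldsymbol{\Phi}_{\mathbf{x}_{\mathrm{i}}}\mathbf{H}^{\prime H}$, with $\boldsymbol{\Phi}_{\mathbf{x}_{\mathrm{i}}} = E(\mathbf{x}_{\mathrm{i}}\mathbf{x}_{\mathrm{i}}^H) = \boldsymbol{\Phi}_{\mathbf{x}} - \boldsymbol{\Phi}_{\mathbf{x}_{\mathrm{d}}}$ being the correlation matrix of $\mathbf{x}_{\mathrm{i}}$, and $\boldsymbol{\Phi}_{\mathbf{v}'_{\mathrm{rn}}} = \mathbf{H}'\boldsymbol{\Phi}_{\mathbf{v}}\mathbf{H}^{\prime H}$.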

1.4.2 Performance measures

The input SNR is identical to the definition given in Equation 9.

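From Equation 56, we deduce the output SNR:

$$\mathrm{oSNR}(\mathbf{H}') = \frac{\operatorname{tr}(\mathbf{H}'\boldsymbol{\Phi}_{\mathbf{x}_{\mathrm{d}}}\mathbf{H}^{\prime H})}{\operatorname{tr}(\mathbf{H}'\boldsymbol{\Phi}_{\mathrm{in}}\mathbf{H}^{\prime H})},$$

where

$$\boldsymbol{\Phi}_{\mathrm{in}} = \boldsymbol{\Phi}_{\mathbf{x}_{\mathrm{i}}} + \boldsymbol{\Phi}_{\mathbf{v}}$$

is the correlation matrix of the interference-plus-noise. The obvious objective is to find an appropriate $\mathbf{H}'$ in such a way that $\mathrm{oSNR}(\mathbf{H}') \ge \mathrm{iSNR}$.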
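The orthogonal decomposition can be carried out directly on the second-order statistics. The sketch below assumes that the desired signal vector consists of the first $Q$ elements of $\mathbf{x}$, so that $E(\mathbf{x}\mathbf{x}_Q^H)$ is the first $Q$ columns of $\boldsymbol{\Phi}_{\mathbf{x}}$ and the transformation matrix is $\boldsymbol{\Gamma} = E(\mathbf{x}\mathbf{x}_Q^H)\boldsymbol{\Phi}_{\mathbf{x}_Q}^{-1}$. The function names and the random matrices are hypothetical.

```python
import numpy as np

def orthogonal_decomposition(Phi_x, Q):
    """Split Phi_x into the desired part Phi_xd and the interference part Phi_xi."""
    Phi_xQ = Phi_x[:Q, :Q]                        # correlation matrix of x_Q (must be full rank)
    Phi_xxQ = Phi_x[:, :Q]                        # cross-correlation E(x x_Q^H), M x Q
    Gamma = Phi_xxQ @ np.linalg.inv(Phi_xQ)       # x_d = Gamma x_Q
    Phi_xd = Gamma @ Phi_xQ @ Gamma.conj().T      # rank-Q correlation matrix of x_d
    Phi_xi = Phi_x - Phi_xd                       # correlation matrix of the interference x_i
    return Gamma, Phi_xd, Phi_xi

def output_snr_od(H, Phi_xd, Phi_in):
    """Output SNR where the interference is counted together with the noise."""
    return np.trace(H @ Phi_xd @ H.conj().T).real / np.trace(H @ Phi_in @ H.conj().T).real

rng = np.random.default_rng(5)
M, P, Q = 6, 4, 2
A = rng.standard_normal((M, P)) + 1j * rng.standard_normal((M, P))
Phi_x = A @ A.conj().T
B = rng.standard_normal((M, M)) + 1j * rng.standard_normal((M, M))
Phi_v = B @ B.conj().T + 0.1 * np.eye(M)

Gamma, Phi_xd, Phi_xi = orthogonal_decomposition(Phi_x, Q)
Phi_in = Phi_xi + Phi_v                           # interference-plus-noise correlation matrix

I_i = np.hstack([np.eye(Q), np.zeros((Q, M - Q))])
i_snr = np.trace(Phi_x[:Q, :Q]).real / np.trace(Phi_v[:Q, :Q]).real
print("iSNR      :", i_snr)
print("oSNR(I_i) :", output_snr_od(I_i, Phi_xd, Phi_in))
```

Because the first $Q$ rows of $\boldsymbol{\Gamma}$ form the identity and the first $Q$ rows and columns of $\boldsymbol{\Phi}_{\mathbf{x}_{\mathrm{i}}}$ vanish, the identity filtering matrix should leave the SNR unchanged, which gives a quick sanity check of the decomposition.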

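The noise reduction factor is:

$$\xi_{\mathrm{nr}}(\mathbf{H}') = \frac{\operatorname{tr}(\boldsymbol{\Phi}_{\mathbf{v}_Q})}{\operatorname{tr}(\mathbf{H}'\boldsymbol{\Phi}_{\mathrm{in}}\mathbf{H}^{\prime H})}.$$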

A reasonable choice of H′ should give a value of the noise reduction factor greater than 1, meaning that the noise and interference have been attenuated by the filter.

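The speech reduction factor is defined as:

$$\xi_{\mathrm{sr}}(\mathbf{H}') = \frac{\operatorname{tr}(\boldsymbol{\Phi}_{\mathbf{x}_Q})}{\operatorname{tr}(\mathbf{H}'\boldsymbol{\Phi}_{\mathbf{x}_{\mathrm{d}}}\mathbf{H}^{\prime H})}.$$

A rectangular filtering matrix that does not affect the desired signal requires the constraint (see endnote c):

$$\mathbf{H}'\boldsymbol{\Gamma}_{\mathbf{x}\mathbf{x}_Q} = \mathbf{I}_Q.$$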

Hence, ξsr(H′)=1 in the absence of (correlated) distortion and ξsr(H′)>1 in the presence of distortion.

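Again, we have the fundamental relationship:

$$\frac{\mathrm{oSNR}(\mathbf{H}')}{\mathrm{iSNR}} = \frac{\xi_{\mathrm{nr}}(\mathbf{H}')}{\xi_{\mathrm{sr}}(\mathbf{H}')}.$$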

When no distortion occurs, the gain in SNR coincides with the noise reduction factor.

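We can also quantify the distortion with the speech distortion index:

$$\upsilon_{\mathrm{sd}}(\mathbf{H}') = \frac{E\{(\mathbf{x}'_{\mathrm{fd}} - \mathbf{x}_Q)^H(\mathbf{x}'_{\mathrm{fd}} - \mathbf{x}_Q)\}}{E(\mathbf{x}_Q^H\mathbf{x}_Q)} = \frac{\operatorname{tr}[(\mathbf{H}'\boldsymbol{\Gamma}_{\mathbf{x}\mathbf{x}_Q} - \mathbf{I}_Q)\boldsymbol{\Phi}_{\mathbf{x}_Q}(\mathbf{H}'\boldsymbol{\Gamma}_{\mathbf{x}\mathbf{x}_Q} - \mathbf{I}_Q)^H]}{\operatorname{tr}(\boldsymbol{\Phi}_{\mathbf{x}_Q})}.$$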

The speech distortion index is always greater than or equal to 0 and should be upper bounded by 1 for optimal filtering matrices, which corresponds to the case where the filtering matrix is just a matrix of zeros; so the higher the value of υsd(H′) is, the more the desired signal is distorted.

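The error signal is:

$$\mathbf{e}' = \mathbf{z}' - \mathbf{x}_Q = \mathbf{H}'\mathbf{y} - \mathbf{x}_Q.$$

It can be written as the sum of two orthogonal error signal vectors:

$$\mathbf{e}' = \mathbf{e}'_{\mathrm{ds}} + \mathbf{e}'_{\mathrm{rs}},$$

where

$$\mathbf{e}'_{\mathrm{ds}} = \mathbf{x}'_{\mathrm{fd}} - \mathbf{x}_Q = (\mathbf{H}'\boldsymbol{\Gamma}_{\mathbf{x}\mathbf{x}_Q} - \mathbf{I}_Q)\mathbf{x}_Q$$

is the signal distortion due to the rectangular filtering matrix and

$$\mathbf{e}'_{\mathrm{rs}} = \mathbf{x}'_{\mathrm{ri}} + \mathbf{v}'_{\mathrm{rn}} = \mathbf{H}'\mathbf{x}_{\mathrm{i}} + \mathbf{H}'\mathbf{v}$$

represents the residual interference-plus-noise. Having defined the error signal, we can now write the MSE criterion:

$$J(\mathbf{H}') = \operatorname{tr}[E(\mathbf{e}'\mathbf{e}^{\prime H})] = J_{\mathrm{ds}}(\mathbf{H}') + J_{\mathrm{rs}}(\mathbf{H}'),$$

where

$$J_{\mathrm{ds}}(\mathbf{H}') = \operatorname{tr}[E(\mathbf{e}'_{\mathrm{ds}}\mathbf{e}_{\mathrm{ds}}^{\prime H})] = \operatorname{tr}(\boldsymbol{\Phi}_{\mathbf{x}_Q})\,\upsilon_{\mathrm{sd}}(\mathbf{H}')$$

and

$$J_{\mathrm{rs}}(\mathbf{H}') = \operatorname{tr}[E(\mathbf{e}'_{\mathrm{rs}}\mathbf{e}_{\mathrm{rs}}^{\prime H})] = \frac{\operatorname{tr}(\boldsymbol{\Phi}_{\mathbf{v}_Q})}{\xi_{\mathrm{nr}}(\mathbf{H}')}.$$

We deduce that

$$\frac{J_{\mathrm{ds}}(\mathbf{H}')}{J_{\mathrm{rs}}(\mathbf{H}')} = \mathrm{iSNR} \times \xi_{\mathrm{nr}}(\mathbf{H}') \times \upsilon_{\mathrm{sd}}(\mathbf{H}') = \mathrm{oSNR}(\mathbf{H}') \times \xi_{\mathrm{sr}}(\mathbf{H}') \times \upsilon_{\mathrm{sd}}(\mathbf{H}'),$$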

showing how the MSEs are related to the different performance measures.

1.4.3 Optimal filters

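Let $\lambda'_{\max}$ be the maximum eigenvalue of the matrix $\boldsymbol{\Phi}_{\mathrm{in}}^{-1}\boldsymbol{\Phi}_{\mathbf{x}_{\mathrm{d}}}$ with corresponding eigenvector $\mathbf{b}'_{\max}$. We easily find that the maximum SNR filtering matrix with minimum distortion is:

$$\mathbf{H}'_{\max} = \frac{\boldsymbol{\Phi}_{\mathbf{x}_Q}\boldsymbol{\Gamma}_{\mathbf{x}\mathbf{x}_Q}^H\mathbf{b}'_{\max}\mathbf{b}_{\max}^{\prime H}}{\mathbf{b}_{\max}^{\prime H}\boldsymbol{\Phi}_{\mathbf{x}_{\mathrm{d}}}\mathbf{b}'_{\max}}$$

and

$$\mathrm{oSNR}(\mathbf{H}'_{\max}) = \lambda'_{\max}.$$

The output SNR with the maximum SNR filtering matrix is always greater than or equal to the input SNR, i.e., $\mathrm{oSNR}(\mathbf{H}'_{\max}) \ge \mathrm{iSNR}$. We also have $\mathrm{oSNR}(\mathbf{H}') \le \lambda'_{\max}$, $\forall \mathbf{H}'$.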

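The minimization of the MSE criterion leads to the Wiener filtering matrix:

$$\mathbf{H}'_{\mathrm{W}} = \boldsymbol{\Phi}_{\mathbf{x}_Q}\boldsymbol{\Gamma}_{\mathbf{x}\mathbf{x}_Q}^H\boldsymbol{\Phi}_{\mathbf{y}}^{-1} = \mathbf{I}_{\mathrm{i}}\boldsymbol{\Phi}_{\mathbf{x}}\boldsymbol{\Phi}_{\mathbf{y}}^{-1},$$

which is identical to the Wiener filter obtained with the classical approach. Even though the Wiener filter obtained with the two different approaches is the same, its evaluation with the performance measures is slightly different due to the conceptual difference between the two methods. We always have $\mathrm{oSNR}(\mathbf{H}'_{\mathrm{W}}) \le \mathrm{oSNR}(\mathbf{H}'_{\max})$.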

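We can rewrite the Wiener filtering matrix as:

$$\mathbf{H}'_{\mathrm{W}} = \left(\boldsymbol{\Phi}_{\mathbf{x}_Q}^{-1} + \boldsymbol{\Gamma}_{\mathbf{x}\mathbf{x}_Q}^H\boldsymbol{\Phi}_{\mathrm{in}}^{-1}\boldsymbol{\Gamma}_{\mathbf{x}\mathbf{x}_Q}\right)^{-1}\boldsymbol{\Gamma}_{\mathbf{x}\mathbf{x}_Q}^H\boldsymbol{\Phi}_{\mathrm{in}}^{-1}. \tag{75}$$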

This form is interesting because it shows an obvious link with some other optimal filtering matrices as it will be verified later.

Another way to express the Wiener filter is:

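The MVDR (see endnote d) rectangular filtering matrix is obtained by minimizing the MSE of the residual interference-plus-noise, $J_{\mathrm{rs}}(\mathbf{H}')$, subject to the constraint that the desired signal vector is not distorted. Mathematically, this is equivalent to:

$$\min_{\mathbf{H}'} \operatorname{tr}(\mathbf{H}'\boldsymbol{\Phi}_{\mathrm{in}}\mathbf{H}^{\prime H}) \quad \text{subject to} \quad \mathbf{H}'\boldsymbol{\Gamma}_{\mathbf{x}\mathbf{x}_Q} = \mathbf{I}_Q.$$

The solution to the above optimization problem is:

$$\mathbf{H}'_{\mathrm{MVDR}} = \left(\boldsymbol{\Gamma}_{\mathbf{x}\mathbf{x}_Q}^H\boldsymbol{\Phi}_{\mathrm{in}}^{-1}\boldsymbol{\Gamma}_{\mathbf{x}\mathbf{x}_Q}\right)^{-1}\boldsymbol{\Gamma}_{\mathbf{x}\mathbf{x}_Q}^H\boldsymbol{\Phi}_{\mathrm{in}}^{-1},$$

which is interesting to compare to the Wiener filtering matrix in Equation 75. We can rewrite the MVDR as:

$$\mathbf{H}'_{\mathrm{MVDR}} = \left(\boldsymbol{\Gamma}_{\mathbf{x}\mathbf{x}_Q}^H\boldsymbol{\Phi}_{\mathbf{y}}^{-1}\boldsymbol{\Gamma}_{\mathbf{x}\mathbf{x}_Q}\right)^{-1}\boldsymbol{\Gamma}_{\mathbf{x}\mathbf{x}_Q}^H\boldsymbol{\Phi}_{\mathbf{y}}^{-1}.$$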

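We should always have

$$\mathrm{oSNR}(\mathbf{H}'_{\mathrm{MVDR}}) \le \mathrm{oSNR}(\mathbf{H}'_{\mathrm{W}}) \le \mathrm{oSNR}(\mathbf{H}'_{\max}).$$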

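By minimizing the speech distortion index with the constraint that the noise reduction factor is equal to a positive value that is greater than 1, we find the tradeoff filtering matrix:

$$\mathbf{H}'_{\mathrm{T},\mu'} = \boldsymbol{\Phi}_{\mathbf{x}_Q}\boldsymbol{\Gamma}_{\mathbf{x}\mathbf{x}_Q}^H\left(\boldsymbol{\Phi}_{\mathbf{x}_{\mathrm{d}}} + \mu'\boldsymbol{\Phi}_{\mathrm{in}}\right)^{-1},$$

which can be rewritten as:

$$\mathbf{H}'_{\mathrm{T},\mu'} = \left(\mu'\boldsymbol{\Phi}_{\mathbf{x}_Q}^{-1} + \boldsymbol{\Gamma}_{\mathbf{x}\mathbf{x}_Q}^H\boldsymbol{\Phi}_{\mathrm{in}}^{-1}\boldsymbol{\Gamma}_{\mathbf{x}\mathbf{x}_Q}\right)^{-1}\boldsymbol{\Gamma}_{\mathbf{x}\mathbf{x}_Q}^H\boldsymbol{\Phi}_{\mathrm{in}}^{-1}, \tag{82}$$

where $\mu' \ge 0$ is a Lagrange multiplier. Usually, $\mu'$ is chosen in an ad hoc way, so that for

- $\mu' = 1$, $\mathbf{H}'_{\mathrm{T},1} = \mathbf{H}'_{\mathrm{W}}$, which is the Wiener filtering matrix;

- $\mu' = 0$ [from Equation 82], $\mathbf{H}'_{\mathrm{T},0} = \mathbf{H}'_{\mathrm{MVDR}}$, which is the MVDR filtering matrix;

- $\mu' > 1$, results in a filtering matrix with low residual noise (as compared to Wiener) at the expense of high speech distortion; and

- $\mu' < 1$, results in a filtering matrix with high residual noise and low speech distortion (as compared to Wiener).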

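We always have $\mathrm{oSNR}(\mathbf{H}'_{\mathrm{T},\mu'}) \le \mathrm{oSNR}(\mathbf{H}'_{\max})$, $\forall \mu' \ge 0$. We should also have for $\mu' \ge 1$,

$$\mathrm{oSNR}(\mathbf{H}'_{\mathrm{MVDR}}) \le \mathrm{oSNR}(\mathbf{H}'_{\mathrm{W}}) \le \mathrm{oSNR}(\mathbf{H}'_{\mathrm{T},\mu'}) \le \mathrm{oSNR}(\mathbf{H}'_{\max}),$$

and for $\mu' \le 1$,

$$\mathrm{oSNR}(\mathbf{H}'_{\mathrm{MVDR}}) \le \mathrm{oSNR}(\mathbf{H}'_{\mathrm{T},\mu'}) \le \mathrm{oSNR}(\mathbf{H}'_{\mathrm{W}}) \le \mathrm{oSNR}(\mathbf{H}'_{\max}).$$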

The case Q=M is interesting because for both approaches, performance measures and optimal square filtering matrices are identical. We can draw the same conclusions for the case Q=P=1.
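To make the link between the MVDR, Wiener, and tradeoff filtering matrices of the orthogonal decomposition approach concrete, the following sketch evaluates the family $(\mu'\boldsymbol{\Phi}_{\mathbf{x}_Q}^{-1} + \boldsymbol{\Gamma}^H\boldsymbol{\Phi}_{\mathrm{in}}^{-1}\boldsymbol{\Gamma})^{-1}\boldsymbol{\Gamma}^H\boldsymbol{\Phi}_{\mathrm{in}}^{-1}$ for different values of $\mu'$. The function name `od_tradeoff_filter` and the random statistics are assumptions made for this example.

```python
import numpy as np

def od_tradeoff_filter(Phi_x, Phi_v, Q, mu=1.0):
    """Tradeoff matrix of the orthogonal decomposition approach.
    mu = 0 gives the MVDR matrix and mu = 1 the Wiener matrix."""
    Phi_xQ = Phi_x[:Q, :Q]
    Gamma = Phi_x[:, :Q] @ np.linalg.inv(Phi_xQ)
    Phi_in = Phi_x - Gamma @ Phi_xQ @ Gamma.conj().T + Phi_v   # interference-plus-noise
    W = np.linalg.solve(Phi_in, Gamma)                         # Phi_in^{-1} Gamma
    G = Gamma.conj().T @ W                                     # Gamma^H Phi_in^{-1} Gamma
    H = np.linalg.solve(mu * np.linalg.inv(Phi_xQ) + G, W.conj().T)
    return H, Gamma, Phi_in

rng = np.random.default_rng(6)
M, P, Q = 6, 4, 2
A = rng.standard_normal((M, P)) + 1j * rng.standard_normal((M, P))
Phi_x = A @ A.conj().T
B = rng.standard_normal((M, M)) + 1j * rng.standard_normal((M, M))
Phi_v = B @ B.conj().T + 0.1 * np.eye(M)
Phi_y = Phi_x + Phi_v

H_mvdr, Gamma, Phi_in = od_tradeoff_filter(Phi_x, Phi_v, Q, mu=0.0)
H_wiener, _, _ = od_tradeoff_filter(Phi_x, Phi_v, Q, mu=1.0)

# MVDR expressed with the noisy-signal statistics instead of Phi_in.
Wy = np.linalg.solve(Phi_y, Gamma)
H_mvdr_y = np.linalg.solve(Gamma.conj().T @ Wy, Wy.conj().T)

residual = lambda H: np.trace(H @ Phi_in @ H.conj().T).real
print("H_mvdr @ Gamma:\n", np.round(H_mvdr @ Gamma, 6))
print("residual interference-plus-noise (MVDR, Wiener):",
      residual(H_mvdr), residual(H_wiener))
```

With $\mu' = 0$ the product `H_mvdr @ Gamma` should be the $Q \times Q$ identity (no distortion of the desired signal vector), whereas the Wiener matrix accepts some distortion in exchange for a lower residual interference-plus-noise power.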

1.5 Application examples

In this section, we show how the two approaches can be applied to different applications of speech enhancement.

1.5.1 Single-channel noise reduction in the time domain

The single-channel noise reduction problem in the time domain consists of recovering the zero-mean desired signal (or clean speech) $x(t)$, $t$ being the discrete-time index, from the noisy observation (microphone signal) [1]:

$$y(t) = x(t) + v(t), \tag{85}$$

where v(t), assumed to be a zero-mean random process, is the unwanted additive noise that can be either white or colored but is uncorrelated with x(t).

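The signal model given in Equation 85 can be put into a vector form by considering the $L$ most recent successive time samples, i.e.,

$$\mathbf{y}(t) = \begin{bmatrix} y(t) & y(t-1) & \cdots & y(t-L+1) \end{bmatrix}^T = \mathbf{x}(t) + \mathbf{v}(t),$$

where $\mathbf{x}(t)$ and $\mathbf{v}(t)$ are defined in a similar way to $\mathbf{y}(t)$. We define the desired signal vector as:

$$\mathbf{x}_Q(t) = \begin{bmatrix} x(t) & x(t-1) & \cdots & x(t-Q+1) \end{bmatrix}^T, \quad 1 \le Q \le L,$$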

that we can estimate from y(t) with either of the two methods. Estimating the desired signal using conventional, rectangular filters was considered in [24], while [22] considers the orthogonal decomposition approach. Simulation results showing the performance of the two filtering methods for single-channel noise reduction are also found in [22, 24].
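As a small synthetic illustration of time-domain single-channel filtering (not one of the experiments reported in [22, 24]), the sketch below applies the Wiener filter for $Q = 1$ to an AR(1) process in white noise. The signal model, the parameter values, and the assumption that the noise correlation matrix is known exactly are all choices made for this example only.

```python
import numpy as np

rng = np.random.default_rng(1)
N, L, a = 20000, 10, 0.9          # samples, filter length, AR(1) coefficient (hypothetical)

# Synthetic "speech": unit-variance AR(1) process; noise: unit-variance white noise (0 dB input SNR).
w = np.sqrt(1.0 - a**2) * rng.standard_normal(N)
x = np.zeros(N)
for t in range(1, N):
    x[t] = a * x[t - 1] + w[t]
v = rng.standard_normal(N)
y = x + v

# Row k holds y(t) = [y(t), y(t-1), ..., y(t-L+1)] with t = k + L - 1.
Y = np.lib.stride_tricks.sliding_window_view(y, L)[:, ::-1]

Phi_y = Y.T @ Y / Y.shape[0]      # sample estimate of the noisy correlation matrix
Phi_v = np.eye(L)                 # noise statistics assumed known here
Phi_x = Phi_y - Phi_v             # from Phi_y = Phi_x + Phi_v

# Wiener filter for Q = 1: h^T = (first row of Phi_x) Phi_y^{-1}.
h = np.linalg.solve(Phi_y, Phi_x[0, :])
z = Y @ h                         # estimate of x(t) for t = L-1, ..., N-1

x_ref = x[L - 1:]
mse_in = np.mean((y[L - 1:] - x_ref) ** 2)
mse_out = np.mean((z - x_ref) ** 2)
print(f"MSE before filtering: {mse_in:.3f}, after filtering: {mse_out:.3f}")
```

In practice the noise statistics would have to be estimated, for example with the methods cited in the introduction, and a larger $Q$ would use the first $Q$ rows of $\boldsymbol{\Phi}_{\mathbf{x}}$ instead of only the first one.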

1.5.2 Single-channel noise reduction in the time-frequency domain

Using the short-time Fourier transform (STFT), Equation 85 can be rewritten in the time-frequency domain as [13, 25]:

$$Y(k,n) = X(k,n) + V(k,n),$$

where the zero-mean complex random variables Y(k,n), X(k,n), and V(k,n) are the STFTs of y(t), x(t), and v(t), respectively, at frequency bin k∈{0,1,…,K−1} and time frame n. Here, the sample X(k,n) is the desired signal.

The simplest way to estimate X(k,n) is by applying a positive gain to Y(k,n) with the conventional approach. However, the noise reduction performance may be limited.

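A more general approach to estimate the desired signal is by filtering the observation signal vector of length $P$ [25]:

$$\mathbf{y}(k,n) = \begin{bmatrix} Y(k,n) & Y(k,n-1) & \cdots & Y(k,n-P+1) \end{bmatrix}^T = \mathbf{x}(k,n) + \mathbf{v}(k,n),$$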

and using the orthogonal decomposition to extract X(k,n) from x(k,n), which is defined in a similar way to y(k,n). Thanks to this approach, the non-negligible interframe correlation is taken into account, which is not the case when just a gain is used. As a consequence, we can better compromise between noise reduction and speech distortion.

STFT-based filtering methods for single-channel noise reduction were considered in, e.g., [26–28], where experimental results can also be found.

1.5.3 Multichannel noise reduction in the time domain

In the multichannel scenario, we have a microphone array with $M$ sensors that captures a convolved source signal in some noise field. In the time domain, the received signals are expressed as [29, 30]:

$$y_m(t) = g_m(t) * s(t) + v_m(t) = x_m(t) + v_m(t), \quad m = 1, 2, \ldots, M, \tag{90}$$

where g m (t) is the acoustic impulse response from the unknown speech source, s(t), location to the m th microphone, ∗ stands for linear convolution, and x m (t) and v m (t) are, respectively, the convolved speech and additive noise at microphone m. We assume that the signals x m (t)=g m (t)∗s(t) and v m (t) are uncorrelated, zero mean, real, and broadband. By definition, x m (t) is coherent across the array. The noise signals, v m (t), are typically only partially coherent across the array.

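By processing the data by blocks of $L$ time samples, the signal model given in Equation 90 can be put into a vector form as:

$$\mathbf{y}_m(t) = \mathbf{x}_m(t) + \mathbf{v}_m(t), \quad m = 1, 2, \ldots, M,$$

where

$$\mathbf{y}_m(t) = \begin{bmatrix} y_m(t) & y_m(t-1) & \cdots & y_m(t-L+1) \end{bmatrix}^T$$

is a vector of length $L$, and $\mathbf{x}_m(t)$ and $\mathbf{v}_m(t)$ are defined similarly to $\mathbf{y}_m(t)$. It is more convenient to concatenate the $M$ vectors $\mathbf{y}_m(t)$, $m = 1, 2, \ldots, M$ together as:

$$\underline{\mathbf{y}}(t) = \begin{bmatrix} \mathbf{y}_1^T(t) & \mathbf{y}_2^T(t) & \cdots & \mathbf{y}_M^T(t) \end{bmatrix}^T = \underline{\mathbf{x}}(t) + \underline{\mathbf{v}}(t),$$

where the vectors $\underline{\mathbf{x}}(t)$ and $\underline{\mathbf{v}}(t)$ of length $ML$ are defined in a similar way to $\underline{\mathbf{y}}(t)$.

We consider $\mathbf{x}_1(t)$ as the desired signal vector. Our problem may then be stated as follows: given $\underline{\mathbf{y}}(t)$, our aim is to preserve $\mathbf{x}_1(t)$ while minimizing the contribution of $\underline{\mathbf{v}}(t)$. Both approaches can be used, but the one based on the orthogonal decomposition is more appropriate since it will better exploit the correlation among the convolved speech signals at the microphones for noise reduction. The orthogonal decomposition approach for multichannel noise reduction was considered in, e.g., [31, 32], where experimental results can also be found.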

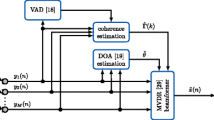

1.5.4 Multichannel noise reduction in the frequency domain

In the frequency domain, at the frequency index $f$, Equation 90 can be expressed as:

$$Y_m(f) = G_m(f)S(f) + V_m(f) = X_m(f) + V_m(f), \quad m = 1, 2, \ldots, M,$$

where Y m (f), G m (f), S(f), V m (f), and X m (f) are the frequency-domain representations of y m (t), g m (t), s(t), v m (t), and x m (t), respectively.

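It is more convenient to write the $M$ frequency-domain microphone signals in a vector notation:

$$\mathbf{y}(f) = \mathbf{g}(f)S(f) + \mathbf{v}(f) = \mathbf{x}(f) + \mathbf{v}(f) = \mathbf{d}(f)X_1(f) + \mathbf{v}(f), \tag{95}$$

where

$$\mathbf{y}(f) = \begin{bmatrix} Y_1(f) & Y_2(f) & \cdots & Y_M(f) \end{bmatrix}^T,$$

with $\mathbf{x}(f) = S(f)\mathbf{g}(f)$, $\mathbf{g}(f)$, and $\mathbf{v}(f)$ defined similarly to $\mathbf{y}(f)$, and

$$\mathbf{d}(f) = \begin{bmatrix} 1 & \dfrac{G_2(f)}{G_1(f)} & \cdots & \dfrac{G_M(f)}{G_1(f)} \end{bmatrix}^T = \frac{\mathbf{g}(f)}{G_1(f)}.$$

The expression in Equation 95 depends explicitly on the desired signal, $X_1(f)$, that we want to estimate from $\mathbf{y}(f)$.

There is another interesting way to write Equation 95. First, it is easy to see that

$$X_m(f) = \gamma_{X_m X_1}(f)X_1(f), \quad m = 1, 2, \ldots, M, \tag{97}$$

where

$$\gamma_{X_m X_1}(f) = \frac{E[X_m(f)X_1^*(f)]}{E[|X_1(f)|^2]}$$

is the partially normalized [with respect to $X_1(f)$] coherence function between $X_m(f)$ and $X_1(f)$, and the superscript $*$ denotes complex conjugation. Using Equation 97, we can rewrite Equation 95 as:

$$\mathbf{y}(f) = \boldsymbol{\gamma}_{\mathbf{x}X_1}(f)X_1(f) + \mathbf{v}(f),$$

where

$$\boldsymbol{\gamma}_{\mathbf{x}X_1}(f) = \begin{bmatrix} \gamma_{X_1 X_1}(f) & \gamma_{X_2 X_1}(f) & \cdots & \gamma_{X_M X_1}(f) \end{bmatrix}^T = \frac{E[\mathbf{x}(f)X_1^*(f)]}{E[|X_1(f)|^2]} = \mathbf{d}(f)$$

is the partially normalized [with respect to $X_1(f)$] coherence vector (of length $M$) between $\mathbf{x}(f)$ and $X_1(f)$. This shows that the two approaches for noise reduction are identical. More details on multichannel noise reduction in the frequency domain as well as experimental results can be found in [25, 33].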
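The statement that the coherence vector coincides with the relative transfer function vector can be checked on simulated data for a single frequency bin. In the sketch below, the transfer functions `g`, the source samples `S`, and the noise `V` are randomly generated stand-ins, and the clean speech components are available only because this is a simulation. The MVDR filter at the end uses the standard form $\boldsymbol{\Phi}_{\mathbf{v}}^{-1}\boldsymbol{\gamma} / (\boldsymbol{\gamma}^H\boldsymbol{\Phi}_{\mathbf{v}}^{-1}\boldsymbol{\gamma})$.

```python
import numpy as np

rng = np.random.default_rng(3)
M, N = 4, 2000                                   # microphones, number of frames (hypothetical)

g = rng.standard_normal(M) + 1j * rng.standard_normal(M)   # stand-in for G_m(f) at one bin
S = rng.standard_normal(N) + 1j * rng.standard_normal(N)   # stand-in for S(f) over N frames
X = np.outer(g, S)                               # X_m(f) = G_m(f) S(f), shape M x N
V = 0.5 * (rng.standard_normal((M, N)) + 1j * rng.standard_normal((M, N)))
Y = X + V

# Partially normalized coherence vector between x(f) and X_1(f), from sample averages.
gamma = (X @ X[0].conj()) / np.sum(np.abs(X[0]) ** 2)

# MVDR filter built on the coherence vector and the sample noise correlation matrix.
Phi_v = V @ V.conj().T / N
u = np.linalg.solve(Phi_v, gamma)
h = u / np.vdot(gamma, u)

Z = h.conj() @ Y                                 # Z(f) = h^H y(f), estimate of X_1(f)
speech_out = h.conj() @ X                        # filtered speech component
noise_in = np.mean(np.abs(V[0]) ** 2)            # noise power at the reference microphone
noise_out = np.mean(np.abs(h.conj() @ V) ** 2)   # residual noise power
print("max |gamma - g/G_1|:", np.max(np.abs(gamma - g / g[0])))
print("noise power in/out :", noise_in, noise_out)
```

Since the speech component at every microphone is a scaled copy of $X_1(f)$ in this model, the filtered speech should equal $X_1(f)$ exactly, and the residual noise power cannot exceed the noise power at the reference microphone.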

1.5.5 Binaural noise reduction

Binaural noise reduction [34] consists of the estimation of the received speech signal at two different microphones with a sensor array of $M$ microphones. One estimate is for the left ear and the other for the right ear. This way, and thanks to our binaural hearing system, we are able to localize the speech source in space. In the frequency domain, we can estimate, for example, $X_1(f)$ and $X_2(f)$. As explained above, the two methods are the same in this case. In the time domain, we can estimate, for example, $\mathbf{x}_1(t)$ and $\mathbf{x}_2(t)$. Here, the method based on the orthogonal decomposition is more appropriate since it may distort the signals less. Distortion in binaural noise reduction is problematic since it may affect the cues for localization and separation. Experimental results and further theoretical details on binaural noise reduction using the approaches mentioned herein are found in [35, 36].

2 Conclusions

In this paper, we have given a brief overview of linear filtering methods for speech enhancement based on two approaches: a so-called conventional approach and an approach based on the orthogonal decomposition. In the context of these two different approaches, various optimal filters (e.g., MVDR, maximum SNR, and Wiener filters) have been derived and their properties in terms of different performance measures have been assessed and compared. These performance measures, simply put, quantify the properties of the filters and approaches in terms of noise reduction and speech distortion and show how they offer different tradeoffs between the two. We have also demonstrated how the approaches can be applied in various speech enhancement contexts, including single- and multichannel enhancement in both the time and frequency domains and in binaural noise reduction.

Endnotes

a The upper bound comes from the fact that this distortion is obtained when the filtering matrix only contains zeros, which should be the maximum expected distortion.

b It is legitimate to consider xi as an interference, since the desired signal is entirely in xd, and xi and xd are uncorrelated.

c Here, the distortionless constraint is in the sense that we can perfectly recover the desired signal vector, even though the residual interference can add some uncorrelated distortion to the desired signal.

d We use the terminology MVDR because we can completely extract the desired signal with this filter.

References

[1] Chen J, Benesty J, Huang Y, Doclo S: New insights into the noise reduction Wiener filter. IEEE Trans. Audio Speech Lang. Process. 2006, 14(4):1218-1234. doi:10.1109/TSA.2005.860851

[2] Boll S: Suppression of acoustic noise in speech using spectral subtraction. IEEE Trans. Acoust. Speech Signal Process. 1979, 27(2):113-120. doi:10.1109/TASSP.1979.1163209

[3] Ephraim Y, Van Trees HL: A signal subspace approach for speech enhancement. IEEE Trans. Speech Audio Process. 1995, 3(4):251-266. doi:10.1109/89.397090

[4] Jensen SH, Hansen PC, Hansen SD, Sørensen JA: Reduction of broad-band noise in speech by truncated QSVD. IEEE Trans. Speech Audio Process. 1995, 3(6):439-448. doi:10.1109/89.482211

[5] McAulay RJ, Malpass ML: Speech enhancement using a soft-decision noise suppression filter. IEEE Trans. Acoust. Speech Signal Process. 1980, 28(2):137-145. doi:10.1109/TASSP.1980.1163394

[6] Ephraim Y, Malah D: Speech enhancement using a minimum mean-square error log-spectral amplitude estimator. IEEE Trans. Acoust. Speech Signal Process. 1985, 33(2):443-445. doi:10.1109/TASSP.1985.1164550

[7] Srinivasan S, Samuelsson J, Kleijn WB: Codebook-based Bayesian speech enhancement for nonstationary environments. IEEE Trans. Audio Speech Lang. Process. 2007, 15(2):441-452. doi:10.1109/TASL.2006.881696

[8] Rabiner LR, Sambur MR: An algorithm for determining the endpoints of isolated utterances. Bell Syst. Tech. J. 1975, 54(2):297-315. doi:10.1002/j.1538-7305.1975.tb02840.x

[9] Martin R: Noise power spectral density estimation based on optimal smoothing and minimum statistics. IEEE Trans. Speech Audio Process. 2001, 9(5):504-512. doi:10.1109/89.928915

[10] Freudenberger J, Stenzel S, Venditti B: A noise PSD and cross-PSD estimation for two-microphone speech enhancement systems. Proc. IEEE Workshop Statist. Signal Process. 2009, 709-712. doi:10.1109/SSP.2009.5278478

[11] Lotter T, Vary P: Dual-channel speech enhancement by superdirective beamforming. EURASIP J. Appl. Signal Process. 2006, 2006(1):1-14. doi:10.1155/ASP/2006/63297

[12] Hendriks RC, Gerkmann T: Noise correlation matrix estimation for multi-microphone speech enhancement. IEEE Trans. Audio Speech Lang. Process. 2012, 20(1):223-233. doi:10.1109/TASL.2011.2159711

[13] Benesty J, Chen J, Huang Y, Cohen I: Noise Reduction in Speech Processing. Springer, Berlin; 2009.

[14] Loizou P: Speech Enhancement: Theory and Practice. CRC Press, Boca Raton; 2007.

[15] Vary P, Martin R: Digital Speech Transmission: Enhancement, Coding and Error Concealment. John Wiley & Sons Ltd, Chichester, England; 2006.

[16] Doclo S, Moonen M: GSVD-based optimal filtering for single and multimicrophone speech enhancement. IEEE Trans. Signal Process. 2002, 50(9):2230-2244. doi:10.1109/TSP.2002.801937

[17] Dendrinos M, Bakamidis S, Carayannis G: Speech enhancement from noise: a regenerative approach. Speech Commun. 1991, 10(1):45-57. doi:10.1016/0167-6393(91)90027-Q

[18] Hu Y, Loizou PC: A generalized subspace approach for enhancing speech corrupted by colored noise. IEEE Trans. Speech Audio Process. 2003, 11:334-341. doi:10.1109/TSA.2003.814458

[19] Hermus K, Wambacq P, Hamme HV: A review of signal subspace speech enhancement and its application to noise robust speech recognition. EURASIP J. Adv. Signal Process. 2007, 2007:1-15, Article ID 45821.

[20] Benesty J, Chen J: Optimal Time-Domain Noise Reduction Filters - A Theoretical Study. Springer, Berlin; 2011.

[21] Golub GH, van Loan CF: Matrix Computations. The Johns Hopkins University Press, Baltimore; 1996.

[22] Benesty J, Chen J, Huang Y, Gaensler T: Time-domain noise reduction based on an orthogonal decomposition for desired signal extraction. J. Acoust. Soc. Am. 2012, 132(1):452-464. doi:10.1121/1.4726071

[23] Long T, Chen J, Benesty J, Zhang Z: Single-channel noise reduction using optimal rectangular filtering matrices. J. Acoust. Soc. Am. 2013, 133:1090-1101. doi:10.1121/1.4773269

[24] Jensen JR, Benesty J, Christensen MG, Chen J: A class of optimal rectangular filtering matrices for single-channel signal enhancement in the time domain. IEEE Trans. Audio Speech Lang. Process. 2013, 21(12):2595-2606. doi:10.1109/TASL.2013.2280215

[25] Benesty J, Chen J, Habets E: Speech Enhancement in the STFT Domain. Springer, Berlin; 2011.

[26] Benesty J, Huang Y: A perspective on single-channel frequency-domain speech enhancement. Synth. Lect. Speech Audio Process. 2011, 8(1):1-101.

[27] Huang H, Zhao L, Chen J, Benesty J: A minimum variance distortionless response filter based on the bifrequency spectrum for single-channel noise reduction. Digit. Signal Process. 2014, 33:169-179. doi:10.1016/j.dsp.2014.06.008

[28] Chen J, Benesty J: Single-channel noise reduction in the STFT domain based on the bifrequency spectrum. Proc. IEEE Int. Conf. Acoust., Speech, Signal Process. 2012, 97-100. doi:10.1109/ICASSP.2012.6287826

[29] Benesty J, Huang Y, Chen J: Microphone Array Signal Processing. Springer, Berlin; 2008.

[30] Brandstein M, Ward D (Eds): Microphone Arrays - Signal Processing Techniques and Applications. Springer, Berlin, Germany; 2001.

[31] Benesty J, Souden M, Chen J: A perspective on multichannel noise reduction in the time domain. Appl. Acoust. 2013, 74(3):343-355. doi:10.1016/j.apacoust.2012.08.002

[32] Jensen JR, Christensen MG, Benesty J: Multichannel signal enhancement using non-causal, time-domain filters. Proc. IEEE Int. Conf. Acoust., Speech, Signal Process. 2013, 7274-7278. doi:10.1109/ICASSP.2013.6639075

[33] Souden M, Benesty J, Affes S: On optimal frequency-domain multichannel linear filtering for noise reduction. IEEE Trans. Audio Speech Lang. Process. 2010, 18(2):260-276. doi:10.1109/TASL.2009.2025790

[34] Kollmeier B, Peissig J, Hohmann V: Binaural noise-reduction hearing aid scheme with real-time processing in the frequency domain. Scand. Audiol. Suppl. 1993, 38:28-38.

[35] Benesty J, Chen J, Huang Y: Binaural noise reduction in the time domain with a stereo setup. IEEE Trans. Audio Speech Lang. Process. 2011, 19(8):2260-2272. doi:10.1109/TASL.2011.2119313

[36] Chen J, Benesty J: A time-domain widely linear MVDR filter for binaural noise reduction. Proc. IEEE Workshop Appl. of Signal Process. to Aud. and Acoust. 2011, 105-108. doi:10.1109/ASPAA.2011.6082262

Acknowledgements

This research was funded by the Villum Foundation and the Danish Council for Independent Research, Grant ID: DFF – 1337-00084.


Competing interests

The authors declare that they have no competing interests.

Authors’ contributions

All authors contributed equally to this work. All authors read and approved the final manuscript.

Jacob Benesty, Mads Græsbøll Christensen, Jesper Rindom Jensen and Jingdong Chen contributed equally to this work.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made.

The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

To view a copy of this licence, visit https://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Benesty, J., Christensen, M.G., Jensen, J.R. et al. A brief overview of speech enhancement with linear filtering. EURASIP J. Adv. Signal Process. 2014, 162 (2014). https://doi.org/10.1186/1687-6180-2014-162
