Abstract

In order to qualify quantum algorithms for industrial NP-Hard problems, comparing them to available polynomial approximate classical algorithms and not only to exact exponential ones is necessary. This is a great challenge as, in many cases, bounds on the reachable approximation ratios exist according to some highly-trusted conjectures of Complexity Theory. An interesting setup for such qualification is thus to focus on particular instances of these problems known to be “less difficult” than the worst-case ones and for which the above bounds can be outperformed: quantum algorithms should perform at least as well as the conventional approximate ones on these instances, up to very large sizes. We present a case study of such a protocol for two industrial problems drawn from the strongly developing field of smart-charging of electric vehicles. Tailored implementations of the Quantum Approximate Optimization Algorithm (QAOA) have been developed for both problems, and tested numerically with classical resources either by emulation of Pasqal’s Rydberg atom based quantum device or using Atos Quantum Learning Machine. In both cases, quantum algorithms exhibit the same approximation ratios as conventional approximation algorithms or improve them. These are very encouraging results, although still for instances of limited size as allowed by studies on classical computing resources. The next step will be to confirm them on larger instances, on actual devices, and for more complex versions of the problems addressed.

Similar content being viewed by others

1 Introduction

In the quest for a quantum advantage, situating quantum algorithms and technologies with respect to classical approaches for solving NP-hard problems is a key question. Seminal works from Shor and Grover were a major breakthrough in this perspective in the early developments of quantum computing. Based on the theoretical model of quantum computation, they developed quantum algorithms that provide respectively an exponential speed-up for integer factorization [1], and a quadratic speed-up on exhaustive search in an unstructured database [2], a principle applicable to any NP problem providing at most such a speed-up in terms of query complexity [3, 4]. However, implementing these algorithms requires fault-tolerant quantum machines handling a large number of qubits, which have yet to be built.

While many technological obstacles currently impede the creation of such machines, experimental physicists have been capable of controlling quantum systems precisely enough to simulate complex many-body quantum systems. These quantum devices present strong quantum properties and offer scientists a control on the quantum aspects of physical systems. They can have sizes of several hundreds of quantum particles, and because of the unavoidable coupling between the system and its environment, these quantum platforms fall in the category of Noisy Intermediate Scale Quantum (NISQ) devices [5]. Among them, there is a strong belief that Analog Quantum Simulators (AQS) can perform specific tasks intractable for classical computers in polynomial time, such as the dynamical simulation of strongly interacting quantum Hamiltonians [6, 7], and it is expected that AQS will be among the first to propose useful applications in the short-term [8]. Lately, there has been growing interest in knowing if the quantum characteristics of these devices can be steered towards outperforming classical computers on industry-relevant tasks. An active field of research is currently guided towards combinatorial optimization, where the Hilbert space spanned by the many-body quantum system is used to efficiently encode a high-dimensional discrete problem.

In this context, algorithms that can run on such NISQ devices have been developed. Quantum Annealing [9], the Quantum Adiabatic Algorithm [10], and the Quantum Approximate Optimization Algorithm [11] are among the most promising ones. A better understanding of the performances of these approaches on industrial NP-hard problems is of great interest, both for quantum computing adoption and for the application domains concerned.

Approximate results are of great interest for practical applications, specifically industrial ones where a close-to-optimal solution is of significant value when exact solutions are unreachable due to exponential conventional computing time. Interestingly, some NP-hard problems are easier to approximate than others. For example, while the \(0/1\)-Knapsack problem is NP-complete, it admits a fully polynomial-time approximation scheme (PTAS) [12]. To grasp the performances and the quality of solutions provided by NISQ algorithms, we must rigorously compare them to their approximate classical counterparts. Determining where quantum approaches sit in the approximation complexity landscape is key in understanding their potential for practical problems. One should be aware that for some NP-hard problems bounds have been proven on these ratios which cannot be exceeded by classical algorithms unless some very strongly-believed conjectures of Complexity Theory would be broken; thus, improving these ratios beyond these bounds in these cases would establish a quantum supremacy for solving some NP-hard problems. Another desirable scenario is that quantum approaches find comparable approximation ratios as classical algorithms but faster or at a lower cost, which would already provide significant value for industries.

Taking this latter point into consideration, an interesting workout for qualification of quantum approaches is to focus on particular instances of NP-Hard problems known to be “less-difficult” than the worst-case ones, in the sense that approximation algorithms do exist for them that could outperform the above bounds, which are precisely established for the worst-case instances: our quantum algorithms should perform at least as well as the conventional approximate ones on these instances, up to very large sizes.

In this article, we present and discuss a case study based on this protocol, for two problems drawn from the rapidly growing sector of smart-charging of electrical vehicles in which EDF, the French utility for electricity production and supply, is strongly involved. Once appropriately modeled, these problems appear as classical NP-hard graph theory problems, Max-k-Cut and Maximum Independent Set (MIS) respectively. To solve these problems, we develop dedicated extensions of the Quantum Approximate Optimization Algorithm (QAOA) [11, 13]. Besides providing numerical results evaluating the performances of these approaches on real data sets of electrical vehicle loads, the aim of this paper is to illustrate practical issues faced when trying to address real industrial problems with available NISQ frameworks. In particular, achieving good performances with quantum approaches requires the design of hardware-efficient procedures, that exploit the strengths of a given quantum processor. In this framework, hardware and software are jointly developed in order to optimize the execution of the overall implementation. Among the variety of platforms that are currently being investigated, programmable arrays of single neutral atoms manipulated by light beams appear as a very powerful and scalable technology to manipulate up to a few thousands qubits [14–16]. For the problems under consideration, we provide some implementation details on such platform as developed and commercialized by the company Pasqal.

The paper is organized as follows: Sect. 2 describes the smart-charging of electrical vehicles problems (SC1) and (SC2) which serve as use-cases; Sect. 3.2 and 3.3 present the extension of QAOA to Max-k-Cut and the implementation of the approach proposed in Ref. [13] to solve MIS, respectively; Sect. 4 is dedicated to our results and Sect. 5 to conclusions and further works. Complementary material is presented in the Appendix.

2 Two smart-charging problems and their modeling as NP-hard problems

Electric Mobility will admittedly play a key role in solving major environmental and public health problems in the next decades. It should indeed participate in reducing greenhouse gas and fine particles emissions. Besides, the use of vehicle batteries both as energy storage and power supply devices (“Vehicle to Grid” or “V2G”) could significantly improve the flexibility of the electric system, reducing the use of fossil fuels during high-peak in demand, assuming the energy stored in the batteries comes from renewable resources.

Many difficult problems lie ahead of this scheme, related to the optimal management of the electric system in terms of cost while satisfying various hard technical constraints. These include, among others, the modulation of electricity demand taking into account the potentially high demand specific to electric vehicle loads, the needs of electric vehicle users, and the availability of buffer required to guarantee the frequency stability of the grid. “Smart-charging” refers to the process of optimally managing the charge and discharge of electrical vehicles in this framework. It appears as a mandatory condition to allow electric mobility expansion.

Many such problems take the form of typical scheduling and Operational Research problems. They are large sized combinatorial optimization problems, many of them known to be NP-hard/complete.

The following sections describe two samples of these problems, and their modeling for quantum resolution.

2.1 Vocabulary and common hypotheses

Both problems will be tackled under the following assumptions:

-

A load station is made up of several charging points, each of them loading at most a single electric vehicle (EV) at a given time step;

-

The charging points are parallel identical machines that supply the same power. The charging time of a given EV is thus independent of the charging point it is scheduled on;

-

We consider neither additional job characteristics and constraints (release/due dates, charging profile imposed by the battery state) nor global resource constraints on the load station (maximal power deliverable at a given time step);

-

Preemption is not allowed: a load task cannot be interrupted to be resumed later, on the same charging point or another one.

2.2 Minimization of total weighted load completion time (SC1) and Max-k-Cut

We consider \(J = \lbrace 1, \dots,n \rbrace \) charging jobs of n EVs with durations \(T = \lbrace t_{1}, \dots,t_{n} \rbrace \), to be scheduled on a set \(I = \lbrace 1, \dots,k \rbrace \) of k charging points. An integer weight \(w_{j} > 0\) is associated to each job j, measuring its importance. For example, we might want to prioritize the charge of emergency vehicles. The time at which a load j ends, called the completion time is noted \(C_{j}\) and we want to minimize the weighted total time of completion of the charges \(\sum_{j\in J}w_{j}C_{j}\).

(SC1) is a classical scheduling problem known to be NP-hard in the general case, polynomial on a single machine or without priorities/weights attached to the jobs [17]. If the number of machines m is fixed, (SC1) is NP-hard in the weak sense that it can be solved by pseudo-polynomial algorithms,Footnote 1 typically based on dynamic programming. In the case where m is not fixed, (SC1) is NP-Hard in the strong sense, meaning that no such pseudo-polynomial algorithm exists except if \(P=NP\).

Different approximation approaches have been studied for (SC1), until some PTAS in the general case and FPTASFootnote 2 in the weakly NP-Hard variant were established [18–21].

Interestingly for our purpose, some of these approximation approaches were based on the reformulation of (SC1) as a weighted Max-k-Cut problem, a problem that can be tackled by the Quantum Approximation Optimization Algorithm (QAOA), at least in its \(k=2\) set up i.e. Max-Cut.

Consider the complete graph \(G=(V,E)\) whose vertices V correspond to the n jobs in J, and with a weight assigned to each edge \((k,j)\) in E defined by \(w_{kj}= \min \{w_{k} t_{j}; w_{j} t_{k}\}\). The maximal k-cut of this graph provides with the optimal allotment of the n jobs to the k machines. Informally, this relies on the well known “Smith Rule” [22] stating that once jobs have been assigned to machines, the optimal scheduling is given by executing them in the non-increasing order defined by the ratio \(\frac{w_{j}}{t_{j}}\). On this basis, minimizing the weighted total completion time of the tasks can be shown to be equivalent to maximizing the above weight on crossing edges between the subsets of a m-partition of V [18, 19].

For both Max-Cut and Max-k-Cut, known polynomial randomized approximation algorithms obtain high approximation ratios \(C/C_{\mathrm{opt}}\)—where C represents the average value of the solution provided by the algorithm and \(C_{\mathrm{opt}}\) the optimal one –, namely 0.878567 [23] and \((1-\frac{1}{k}+ 2\frac{\ln k}{k^{2}} )\) [24], respectively (the latter is improved for small values of m in Ref. [25]). Moreover, improving these ratios is proved to be NP-Hard, unless some highly-believed conjectures of Complexity Theory would be false. In the case of Max-Cut, improving the approximation ratio from 0.878567 up to \((\frac{16}{17}=0.941176)\) is NP-Hard [26] and thus out of reach of any polynomial classical algorithms unless \(P=NP\), as it is to increase the ratio upon \(1-1/34 k\) for Max-k-Cut [27]. Likewise, both problems are APX-Hard and thus, unless \(P=NP\), they have no PTAS [24, 28]. Finally, tighter results were proven under the “Unique Games Conjecture”, namely that improving the above original ratios for Max-Cut and Max-k-Cut established in Ref. [23] and Ref. [24] is NP-Hard [29].

This means that such improvements by some quantum algorithms would establish a “quantum supremacy” on NP-Complete problems, unless these conjectures turn out to be false, which is considered unlikely. Here, we do not aim at achieving such an approximation ratio improvement with quantum algorithms in the general/worst-case. Alternately, we analyze their performances on some graphs drawn from real-world problems considering the performances obtained by the best-known randomized approximation classical algorithms. We observe that QAOA outperforms Goemans and Williamson ratio on these particular instances of Max-Cut. Similar results were observed in Ref. [30] on small random graphs. Establishing that such improvement would hold true for worst-case and large graphs instances is still an open question, and will remain so as long as we stay in the NISQ era. In any case, reaching similar approximation ratios as classical solutions, but faster or at a lower energy cost would already be of significant value for industries.

In this context, it should be noted that although these limits to improving the approximation ratios are valid for general/worst-case instances of Max-k-Cut, it does not mean that they are valid for particular instances with a specific structure. Thus, since efficient classical approximation schemes are known for (SC1), we can expect its instances reformulated as instances of Max-k-Cut to be easier to approximate than general/worst-case ones. We will see that this is indeed the case, both when using classical and quantum algorithms.

2.3 Optimal scheduling of load time intervals within groups (SC2) and MIS

We now consider the following problem (SC2): given a set of load tasks represented as intervals on a timeline, such that each of them belongs to a specific group, for example distinct vehicle fleets of a company, select a subset of these loads (i) which maximizes the number of non-overlapping tasks and (ii) such that at most one load in each group is completed. The goal is here to both minimize the completion time of the selected loads and to guarantee that no group will be over-represented in the schedule.

This problem belongs to the class of Interval Scheduling problems [31]. More precisely, it is a Group Interval Scheduling, or Job Interval Selection problem. It can be restricted without loss of generality to the case where all the groups contain the same number of tasks, k. It is NP-Complete for \(k \geq 3\), and has no PTAS for \(k \geq 2\) unless \(P=NP\) [32]. Some polynomial approximation ratios have been obtained in the general case, namely 0.5 in Ref. [32], improved to 0.63211 in Ref. [33], while polynomial algorithms exist in cases where some parameters are fixed [34].

Let \(I = \lbrace (s_{1},e_{1}) \dots (s_{nk},e_{nk}) \rbrace \), where n is the number of groups, be the set of intervals representing load job starting and ending dates, and \(G=(V,E)\) be the graph whose vertices in V correspond to intervals in I, and with an edge \((i,j)\) in E iff interval i and j overlap or i and j belong to the same group. A set S of vertices is called an independent set when no two elements of S are adjacent in the graph. Clearly, an independent set of G represents a feasible solution to the problem, and its Maximum Independent Set (MIS) is the optimal one.Footnote 3

Following our protocol for quantum algorithm qualification, we will limit ourselves to specific instances of (SC2) that can be formulated as MIS on two-dimensional Unit-Disk (UD) graphs. These geometrical graph are graphs in which two vertices are coupled by an edge if the distance between them is below a threshold value. This choice is motivated by two reasons. First, as described at Sect. 3.3 below, Pasqal’s neutral atom quantum processor is particularly well suited to natively implement the MIS on Unit-Disk graphs. Second, the MIS on such graphs, although remaining NP-Complete, is known to be “less difficult” to approximate than the MIS on general graphs, and has a PTAS while the general MIS does not [35, 36]. Of course, this protocol requires to transform (SC2) graphs to Unit-Disk graphs, a procedure we presented and analyzed in Sect. 3.3.2.

3 Quantum approaches to smart-charging problems

In this section, we describe how to use quantum approaches for solving the problems presented above.

3.1 QAOA in a nutshell

The “Quantum Approximate Optimization Algorithm” (QAOA) computes approximate solutions to combinatorial optimization problems, with a theoretical guarantee of convergence when the depth of the quantum circuit increases [11].

QAOA is a variational algorithm for combinatorial problems in which a quantum processor works hand-in-hand with a classical counterpart, as illustrated in Fig. 1 (see Ref. [37] for a review on variational algorithm). The quantum processor is used to prepare a wave function \(|\mathbf{z}_{\boldsymbol{\gamma },\boldsymbol{\beta }}\rangle \). In the most general case, \(|\mathbf{z}\rangle =|z_{1} z_{2}\cdots z_{n}\rangle \) represents a n-qudit state vector, with \(z_{i} \in \{0,1,\ldots,d\}\), and the subscript in \(|\mathbf{z}_{\boldsymbol{\gamma },\boldsymbol{\beta }}\rangle \) indicates that the state belongs to a family of states that is parameterized by the angles γ and β. More specifically, \(|\mathbf{z}_{\boldsymbol{\gamma },\boldsymbol{\beta }}\rangle \) is generated by the successive application of unitaries generated by the non-commutative operators M̂ and Ĉ, with angles given by \(\boldsymbol{\beta }=(\beta _{1},\beta _{2},\ldots,\beta _{p})\) and \(\boldsymbol{\gamma }=(\gamma _{1},\gamma _{2},\ldots,\gamma _{p})\), respectively. Given an initial state \(|\mathbf{z}_{0} \rangle \), the wavefunction prepared by the quantum processor takes the following form,

A common choice for the cost operator Ĉ (also sometimes referred to as energy operator) is the diagonal operator in the computational basis, \(\hat{C}|\mathbf{z}\rangle = C(\mathbf{z})|\mathbf{z}\rangle \), where \(C(\cdot)\) is the cost function to be optimized for, while the mixing operator M̂ induces transitions between states in the computational basis [11, 38]. In the following, we will always reformulate our problems under the form of minimization problems, by changing the sign of the cost function of the original problems. The ground state of the energy operator Ĉ corresponds to the optimal solution to the optimization problem. The dimension p of the vectors γ and β is called the depth of the algorithm.

Principle of the QAOA algorithm. A quantum processor, which can be operated in either digital or analog mode, is used to prepare an ansatz wavefunction from which we construct the mean value \(\langle \hat{C} \rangle _{\mathrm{obs}}\) using numerous measurements. A classical optimizer then updates the variational parameters. Some problems are naturally tailored to the analog mode of the platform, while others require a digital mapping. In digital mode, the unitaries are built from quantum circuits, made of elementary quantum gates acting each on one or a few qubits. In the analog mode, the unitaries are built from sequences of Hamiltonians that can be controlled in a continuous manner

The quantum state is then measured to construct an statistical estimator \(\langle \hat{C} \rangle _{\mathrm{obs}}\) of the cost function to be minimized. A classical optimization procedure uses this estimator to update the variational parameters γ and β for the next iteration. This loop repeats until convergence to a final state, from which an estimate of the solution to the problem is extracted. The approximation ratio \(\langle \hat{C} \rangle /C_{\mathrm{opt}}\), where \(C_{\mathrm{opt}}=\min_{\mathbf{z}} C(\mathbf{z})\), measures the quality of the approximation yielded by QAOA.

Once optimal parameters for QAOA are found the optimal assignment of variables is returned by repeatedly preparing and measuring the quantum circuit while saving the best result. As was pointed in [11], a solution with value at least \(\langle \hat{C} \rangle - 1\) is obtained with probability \(1 - \frac{1}{m}\) after \(O(m \log m)\) launches where \(m = C_{\mathrm{opt}} \leq |E|\). Thus the sampling step adds only a polynomial factor and is in general not accounted in the run-time analysis of QAOA.

In the following, we will consider two distinct ways to prepare the ansatz wavefunction (1) on Pasqal quantum processors. In the first one, the quantum processor is used in digital mode, and the trial wavefunction is the output of a quantum circuit composed of discrete quantum gates. In the second one, the quantum processor is used in an analog manner and the trial wavefunction results from the application of a continuously parameterized Hamiltonian.

3.2 Max-k-Cut

We describe here an extension of QAOA to deal with weighted Max-k-Cut problems using quantum circuits. We also introduce a hardware-efficient implementation of this procedure on arrays of neutral atoms.

3.2.1 Encoding on the quantum processor

We show here how to extend QAOA to Max-k-Cut problems on weighted graphs. Denoting by \(z_{i}\in \{1,2,\ldots,k\}\) the subset node i belongs to, the goal of Max-k-Cut is to minimize the cost function:

where the edge \(w_{uv}\) participates to the cost function if nodes u and v are in separated subsets.

Originally QAOA was designed for optimization problems. In that case, a problem with n variables naturally maps to a system of n qubits. For optimization over integer-valued variables one has to define a correspondence between basis states of a Hilbert space of some dimension and solutions to the initial problem, and then restrict the quantum evolution to the feasible domain.

A possible approach to tackle this issue is to apply the unary encoding [38] that for any natural \(k > 2\) attributes k qubits to each node and a node u is colored in c if \(|z_{u}\rangle = |0\dots 010\dots 0\rangle \) where the bitstring \(z_{u}\) has only one 1 on the position c. This encoding uses Nk qubits for an instance with N nodes and requires some non-trivial modifications to the mixing operator M in order to keep the evolution in the feasible subspace, which is spanned by basis states with only one 1 per node. This encoding was also used in Ref. [39] for solving graph-coloring problem with QAA.

We suggest instead to use the conventional binary encoding of integers for \(k=2^{l}\). In this encoding each node is associated to a set of l qubits \(\mathbf{z} = \{ z^{(0)}, \dots,z^{(l-1)}\}\) that will indicate the color of the node. More specifically, having qubits in the state \(\mathbf{z}_{u}\) corresponds to having the node u in the subset \(z_{u}=z^{(0)}z^{(1)}\cdots z^{(l-1)}\). Note that we need \(Nl \approx N\ln k\) qubits to encode the entire coloring of the graph. In the specific case where \(k=2^{l}\), the computational basis that spans the Hilbert space of our platform corresponds exactly to all the \(2^{Nl}\) possible colorings of the graph. It means that, contrary to the unary encoding, no modification to the operator M is required. This approach is easier to compile to elementary gates and less expensive in terms of number of qubits than the unary encoding. A natural extension for all natural k would be to use qudits instead of qubits [40].

For binary encoding we can build a cost operator Ĉ that is diagonal in the computational basis, such that \(\hat{C}|\mathbf{z}_{1} \dots \mathbf{z}_{N} \rangle = C(\mathbf{z}_{1} \dots \mathbf{z}_{N} )|\mathbf{z}_{1} \dots \mathbf{z}_{N} \rangle \), by writing:

where \(\sigma _{u}^{(i)}\) corresponds to a Pauli-Z matrix \(\sigma _{z}\) acting on the atom \(i \in \{0, \dots,l-1\}\) associated to node u. The operator C in Eq. (3) can be decomposed as a sum of operators with \(\{2,4, \dots, 2l \}\)-body interaction terms. Interaction terms involving more than 2-body operators are not directly implementable on most quantum computing platforms which only support 2-qubit gates. Instead, they can be decomposed as sums of two-body terms, which can be realized with CNOT gates. An example of the resulting circuit for an edge is shown in Fig. 2.

Because of the completeness of the Max-k-Cut graphs that we are studying, the cost operator in Eq. (3) involves coupling terms between all qubits. However, all quantum chips have a finite connectivity in practice. The physical realization of the desired terms between remote qubits thus requires the introduction of a large number of SWAP gates, that are detrimental to the performance of the procedure. Next, we introduce a hardware-efficient implementation of Max-k-Cut on Rydberg atom arrays which minimizes this overhead.

3.2.2 Towards a hardware-efficient implementation of Max-k-Cut on Rydberg atom arrays

Mapping the QUBO (Quadratic unconstrained binary optimization) representation of the Max-k-Cut problem as discussed above introduces an overhead in the number of CNOT gates as a result of the decomposition of multi-spin terms into pair interactions. An alternative to the QUBO representation of the problem is the parity encoding, originally proposed in Ref. [41] (Lechner–Hauke–Zoller scheme) and recently extended to applications using QAOA [42].

Let us summarize here the main steps of the parity transformation for completeness and then apply it to the Max-k-Cut problem. The parity encoding encodes the relative orientation of several logical qubits as a single physical qubit. With this encoding, each physical qubit represents the parity of several logical qubits, e.g. the physical spin \(\hat{z}_{12} = z_{1} z_{2}\) represents the product of two logical bits in the QUBO representation. Here, \(\hat{z}_{ij}\) is used as short notation of the z-component of the physical qubit \(\sigma _{ij}^{(z)}\) and \(z_{i}\) is used for the logical qubits \(\sigma _{i}^{(z)}\). The parity encoding represents the full problem in terms of the parity variables. This is not trivially possible, as the number of degrees of freedom of the logical graph are N (the number of logical spins), while the number of physical spins is K, the number of edges in the graph. To compensate for this difference, a number of \(C = K - N +1\) constraints need to be introduced. These constraints are conditions on the physical qubits that have to be fulfilled. In the Lechner–Hauke–Zoller scheme, these constraints are constructed from closed loops in the logical graph which results in a physical implementation that consists of K qubits and \(K-N+1\) 4-body constraints. Remarkably, the problem is fully encoded in the local fields while all interactions are uniform and problem independent [41].

Applying the parity encoding to the Max-4-cut problem allows us to reduce the number of CNOT gates to order \(N^{2}\). In the first step, we make use of the parity transformation of each term \(z^{0}_{i} z^{0}_{j} \rightarrow \hat{z}^{0}_{ij}\) and \(z^{1}_{i} z^{1}_{j} \rightarrow \hat{z}^{1}_{ij}\). As a second step we transform the 4-body term of the two-coloring problem which has the form \(z^{0}_{i} z^{0}_{j} z^{1}_{i} z^{1}_{j}\). We notice, that this term in the parity encoding is \(\hat{z}^{0}_{i}\hat{z}^{1}_{i}\) and thus a simple pair interaction between two physical qubit. Remarkably, the pair interaction can be performed between nearest neighbors by introducing a two-layer setup as depicted in Fig. 3.

Parity transformation of the Max-4-cut problem. The setup consists of two layers of qubits (blue bottom and red top). The bottom layer represents the 0th bit of the color and the top layer the 1st bit of the color. The qubits within the same layer interact via 4-body constraints [41] (green squares). The interaction terms \(\hat{z}^{0}_{i}\hat{z}^{1}_{i} = z^{0}_{i} z^{0}_{j} z^{1}_{i} z^{1}_{j}\) are efficiently encoded as pair gates between neighboring qubits of the two layers (black lines). Note, that the 4-body constraints are decomposed into parallelizable CNOT gates within the layers [42]

The cost function using the above construction reads as

Let us now comment on the resources requirements for this hardware-efficient implementation. The number of qubits is \(N(N-1)\) and the number of CNOT gates is \(9N^{2} - 41N + 48\). In the above example for \(N=5\) the number of qubits is \(N_{p}=20\) and number of CNOT Gates is \(N_{c} = 68\). In addition, the CNOT gates can be fully parallelized which results in a constant depth of global gates. In comparison, the number of qubits in the standard gate model is N and the number of CNOT gates to be calculated but assumed to be order \(N^{3}\). This encoding provides thus a strong advantage in platforms which show a capacity to scale up the number of qubits.

With a number of thousands of qubits of next generation neutral atom devices [16] in combination with the prospect of full parallelization of the parity encoding, Max-k-Cut represents a realistic application of near-term quantum devices.

3.3 MIS

In this Section we present how to solve a MIS problem using a QAOA procedure on a neutral atom processor working in an analog mode [13, 43]. We first show how an analog control over the atoms allows us to realize a Hamiltonian reproducing the MIS cost function in the case of Unit Disks (UD) graphs. Then, we discuss a procedure enabling us to transform more generic interval graphs to UD graphs.

3.3.1 Rydberg blockade and graph independent sets

When looking for the MIS of a graph G, we separate the nodes into two distinct classes: an independent one and the others. We can attribute a status z to each node, where \(z_{i} = 1\) if node i is attributed to the independent set, and \(z_{i}=0\) otherwise. The Maximum Independent Set corresponds to the minima of the following cost function:

where \(U \gg \Delta (G)\), where \(\Delta (G)\) is the degree of the vertex with maximum degree and \(\langle i,j \rangle \) represents adjacent nodes. In this cost function, we want to promote a maximal number of atoms to the 1 state, but the fact that \(U \gg 1\) strongly penalizes two adjacent vertices in state 1. The minimum of \(C(z_{0},\dots,z_{N})\) therefore corresponds to the maximum independent set of the graph.

Interestingly, the operator Ĉ associated with the cost function of Eq. (5) can be natively realized on a neutral atom platform [13], with some constraints on the graph edges. We map a ground state and a Rydberg state of each atom to a spin \(1/2\), where \(|1 \rangle = |r \rangle \) is a Rydberg state and \(|0 \rangle = |g \rangle \) is a ground state. An atom in a Rydberg state has an excited electron with a very high principal quantum number and therefore exhibits a huge electric dipole moment. As such, when two atoms are excited to Rydberg states, they exhibit a strong van der Waals interaction. Placing N atoms at positions \(\mathbf{r}_{j}\) in a 2D plane, and coupling the ground state \(|0\rangle \) to the Rydberg state \(|1\rangle \) with a laser system enables the realization of the Hamiltonian:

Here, Ω and δ are respectively the Rabi frequency and detuning of the laser system and ħ is the reduced Planck constant. The first two terms of Eq. (6) govern the transition between states \(|0\rangle \) and \(|1 \rangle \) induced by the laser, while the third term represents the repulsive Van der Waals interaction between atoms in the \(|1\rangle \) state. More precisely, \(n_{i} = (\sigma _{i} ^{z} + 1)/2\) counts the number of Rydberg excitations at position i. The interaction strength between two atoms decays as \(|\mathbf{r}_{i}-\mathbf{r}_{j}|^{-6}\).

The shift in energy originating from the presence of two nearby excited atoms induces the so-called Rydberg blockade phenomenon, illustrated in Fig. 4(a). More precisely, if two atoms are separated by a distance smaller than the Rydberg blockade radius \(r_{b} = (C_{6}/\hbar \Omega )^{1/6}\), the repulsive interaction will prevent them from being excited at the same time. On the other hand, the sharp decay of the interaction allows us to neglect this interaction term for atoms distant of more than \(r_{b}\). As such, for \(\Omega =0\), the Hamiltonian in Eq. (6) is diagonal in the computational basis and enables to realize \(H |z_{1},\dots,z_{N}\rangle =(\hbar \delta /2) C(z_{1},\dots,z_{N})|z_{1}, \dots,z_{N}\rangle \), with the cost function specified in Eq. (5), and for which there is a link between atoms i and j if they are closer than \(r_{b}\) apart.

(a) Illustration of the Rydberg blockade effect. When two atoms are far apart, \(|\mathbf{r}_{i}-\mathbf{r}_{j}|>r_{b}\), they don’t interact. On the other hand, if they are separated by less than the Rydberg blockade radius, \(|\mathbf{r}_{i}-\operatorname{\mathbf{}}_{j}|< r_{b}\), a strong interaction prevents the two atoms to be in the state \(|1\rangle \) at the same time. (b) Rydberg blockade and independent sets of a graph. Rydberg atoms correspond to the nodes of a UD-graph. There are edges between adjacent nodes if the distance between them is smaller than the Rydberg blockade radius \(r_{b}\), as illustrated for the top-left node. Due to the Rydberg blockade effect, the dynamics of the system is restricted to independent sets

We have seen that atom arrays enable to study UD-MIS problems with QAOA using an analog control, which will likely offer better performances as compared to a digital approach. In the following, we present a way to transform interval graphs into UD graphs.

3.3.2 From interval graphs to unit disk graphs

Graphs such as the ones used to model our Job Interval Selection problem (SC2) do not correspond to UD graphs, as they are unions of interval intersection graphs (the edges corresponding to the overlapping tasks) and of cluster graphs (the set of complete disjoint cliques corresponding to the groups) and thus one-dimensional intersection graphs, rather than two-dimensional ones. Hence, we have to transform our scheduling graphs in order to implement the search of their MIS on the quantum machine.

The mapping between the original problem graph and the locations of the Rydberg atom/qubits in the machine can be obtained by solving the following continuous quadratic constraint satisfaction problem (QP):

where:

-

\(G=(V,E)\) is the original interval graph of the problem;

-

\(\overline{G}=(V,\overline{E})\) is G’s complementary, i.e. the graph whose vertices \((i,j)\) are connected iff they are not connected in G;

-

r is the Rydberg blockade radius, i.e. the upper bound on the distance between two connected vertices, \(\rho \sim r/3\) a given factor defining the lower bound on their distance, illustrating the minimal spacing between separated atoms that is experimentally feasible;

-

\((x_{i}, y_{i})\) are the coordinates in the Euclidean plan of the atom/qubit representing the node \(i\in V\);

-

L̅ defines the maximum square area available to place atoms on the machine, while imposing the above constraints.

Experimentally, the Rydberg blockade radius r can be around 15μm, for minimal distances between atoms of the order of 5μm, and the atoms are contained in a region characterized by \(\bar{L}\sim 100 \mu \)m.

Ideally, the transformation of the original (SC2) graph into one respecting the constraints of the quantum device, i.e. the solving of the (QP) problem above, should be (i) always feasible, and (ii) sufficiently efficient to preserve the quantum advantage expected when solving the UD MIS problem on the quantum machine, thanks to the “natural” embedding of this problem on it, as shown in the Sect. 3.3.1 above.

Unfortunately these two conditions cannot be met in all cases.

Firstly, depending on the machine characteristics \((r,\rho,\overline{L})\) and the structure of the original interval graph G, (QP) does not necessarily have feasible solutions. For example, strongly connected graphs should make difficult to satisfy the constraint of bringing together the atoms corresponding to connected nodes in the rings delimited by \((r,\rho )\).

Secondly, due to the lower bound in constraints (8) and to constraints (9), (QP) is not convex, and is thus NP-hard in the general case. Classical reformulation-linearizations of non-convex quadratic programs are available for (QP)-like problems [44–46], leading to integer/binary linear constraint satisfaction problems (CSP) that can be tackled by well-studied conventional techniques. However the drawback of this approach is the large number of binary variables. Such a formulation for (QP) is presented in the Appendix A. It was tested on our graph instances using the Ibm Cplex solver. Results revealed that such an approach can compromise the potential quantum advantage expected on the UD-MIS part.

As an alternative, we used a more compact linear formulation which, although not providing a guarantee to find an existing solution in the elapsed time allowed, did perform well on the majority of smart-charging graphs tested. The idea is to replace the quadratic constraints by linear ones but expressing the inclusion in the rings defined by \((r,\rho )\) by means of a given set of parametrized radius. The resulting model is as follows:

where \(f_{\phi }\) is a set of clauses enforcing the belonging of the point \((x_{j},y_{j})\) to the radius defined by the angle ϕ intersecting the ring of center \((x_{i},y_{i})\) defined by \((r,\rho )\). For example:

Because of the “or” and “absolute value” terms, this formulation is still combinatorial, but much more efficient than the previous one.

This formulation was tested with Ibm Cplex solver. It provided 84 UD graphs to be implemented for UD-MIS search on the Rydberg atom based quantum machine, starting from 100 instances of real smart-charging graphs of 12 to 15 nodes each, limiting the (\(\mathit{QPR}_{\Phi }\)) CSP resolution time to 60s for each instance.

This approach to reformulate (SC2) problems into UD-MIS tractable on Pasqal’s quantum machine seems thus valid. However, in the experiments conducted up to now and presented here, we had to solve the (QP) problem this way only for graph instances whose number of vertices was limited. Tackling larger load scheduling problems on larger quantum processors with this method might prove vain, due to the cost of computing the corresponding UD graph of Rydberg atoms, assuming this one does exist.

Our method should thus be considered as a hybrid classical/quantum heuristic for solving (SC2)-like smart-charging problems—NP-Hard with no PTAS—by first trying to quickly find a UD representation of the graph, then aiming to exploit the expected quantum advantage gained when solving the UD-MIS problem on a Rydberg atom quantum machine. Clearly, any improvement of the “mapping” between the original scheduling problem and its representation on the quantum processor will benefit to the method. This is an important research objective that we leave for future work.

4 Results

4.1 Analytical result for Max-Cut: the mean cost in the QAOA1 state

An analytical expression for Max-Cut on unweighted graphs for \(p=1\) was derived in [47]. We extend this result to the case of complete weighted graphs. For an edge \(\langle u, v \rangle \) the mean value of \(C_{u,v}\) for chosen \(\gamma, \beta \) is:

This is an important result for numerical experiments as the obtained expression allows to compute the mean cost in the QAOA1 state without any call to a (simulated) quantum computer in polynomial time, which in turn is useful to analyze the performance of QAOA.

In this expression, we realize that there is a close link between the value of the QAOA angle γ and the weight of the edge \(w_{uv}\). Discussions on the normalization of the graph edges and its impact on the energy landscape are covered in Appendix B.

4.2 Numerical results

In the following, we present a performance analysis of the various procedures presented above. For Max-Cut at depth \(p=1\), we use the analytical formula (15). For Max-4-Cut, MIS, and Max-Cut at depth \(p>1\), we compute the mean cost by Monte-Carlo estimation, by simulating the quantum evolution either on the Atos Quantum Learning Machine [48] or using the QuTIP library [49] on the OCCIGEN supercomputer based in Montpellier, France.

4.2.1 Real data set used

Data were driven from a set of 2250 loads performed during May 2017 on identical charging points of the Belib’s network of load stations located in Paris, France [50].

For both problems (SC1) and (SC2), an instance is a series of chronological loads characterized by their duration for (SC1) and starting/end times for (SC2). The instance size is the number of loads it contains. A data-set is a set of instances whose first load is randomly chosen among the 2250, according to a uniform law. Once an instance is built:

-

for (SC1), a priority is randomly assigned to each load, according to a Poisson’s law, enforcing a constant distribution of the different priority levels in each instance;Footnote 4

-

for (SC2), the belonging to a group is randomly assigned to each load according two a uniform law parameterized by the number of groups and the number of loads in the instance.

4.2.2 Minimization of total weighted load completion time (SC1)

First, we compare the performance of QAOA and the randomized algorithm on Max-Cut instances of different sizes \(N \in [6, 8, 10, 15, 30, 50, 70, 100, 150]\).

The purpose of our performance analysis is not to demonstrate that QAOA beats the best classical algorithm but rather to compare it to a solution of the same nature. Indeed, both QAOA and randomized algorithm return a sample from a certain probability distribution (built by a quantum circuit and uniform distributions respectively).

We leave for future work a more advanced comparison with local classical algorithms of bounded depth, that were suggested as fair competitors to QAOA in [51].

In order to compute the exact optimum \(C_{opt}\) we use brute-force search for Max-Cut on small instances (with up to 30 nodes) and the dynamic program algorithm presented in Ref. [52] for the initial scheduling problem on bigger instances.

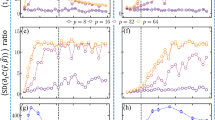

As expected, we observe on the top panel of Fig. 5 that, for a fixed value of N, the approximation ratio improves with the QAOA depth p.

Top panel: Evolution of the approximation ratio of QAOA with depth p for the Max-Cut problem. Bottom panel: Evolution of the average approximation ratio with the instance size for QAOA at depth \(p=1\) (dashed orange line) and for the randomized algorithm on the initial scheduling problem (dotted blue line)

Surprisingly, we also notice that the approximation ratio gets better with the size of the Max-Cut instance. Such behavior is also observed for the randomized algorithm, as illustrated on the bottom panel of Fig. 5. In this numerical experiment we observe that QAOA finds better solutions than the randomized algorithm.

The good performances of the randomized algorithm shown on the bottom panel of Fig. 5 suggest that the difficulty of the problem under consideration decreases with its size. To confirm this insight, let us consider the simpler case of unweighted Max-Cut on a complete graph. In this scenario, choosing a cut at random gives an approximation ratio of \([ \sum_{k} \binom{N}{k}k(N-k) ]/(N^{2}/4)\) which goes to 1 in the large N limit. This fact is illustrated in Fig. 6, where we show the approximation ratios of all possible cuts for different values of N. This plot shows that, for a fixed positive value of the normalized cut number, the corresponding approximation ratio approaches 1 in the large N limit. While our findings suggest that the presence of \(\mathcal{O}(1)\) weights in our problems leads to the same behavior, it does not extend to instances in which the magnitude of the weights would increase with N.

We now present numerical results for Max-4-Cut. Using the normalization factor introduced in Appendix B, we ran QAOA for \(p=7\) layers, and plotted the statistical distribution of the approximation ratio achieved for 98 different instances (Fig. 7). As expected, the approximation ratio increases to 1 with the number of layers. The high value of the approximation ratio achieved is an encouraging result, showing that quantum approaches are comfortably higher than the classical minimal approximation guarantee of 0.857487 [53], even at low depths. At \(p=1\), the tail of the distribution indicates that some instances have been poorly optimized. The fact however that this tail disappears in the next layers shows that initial poor optimization can be corrected in the following layers. This is due to the fact that at the end of each layer our classical algorithm implements a rapid local optimization on all parameters.

Max-4-Cut. QAOA for \(p=7\) layers, and the statistical distribution of the approximation ratio achieved for 98 instances. Proper re-weighting of the graph uniforms the instances. The high value of the approximation ratio achieved is an encouraging result, showing that quantum approaches are comfortably higher than the classical approximation minimal guarantee of 0.857487 [53], even at low depths. While initial optimization might not be ideal, as suggested by the tail of distribution on the first layer, it is corrected by the global smoothing at the next layer

4.2.3 Optimal scheduling of load time intervals within groups (SC2)

Previous studies on quantum approaches for solving UD-MIS investigated the influence of quantum noise QAOA [43], and compared the performances of Quantum Annealing procedures [54] to classical approximation algorithms.Footnote 5

For the classical loop of QAOA, we use here a new global optimization process (Egg optimization) described in the Appendix C.1 that uses a global optimization process called differential evolution (DE) [55]. Using (DE) enables to escape from local minima quite easily. Another important advantage is that the function evaluations can be done in parallel. More specifically, we ran our program on the OCCIGEN supercomputer based in Montpellier, France, where we used 28 CPU cores in parallel for our calculations. This reduced by a factor of 8.8 the time of derivation. In addition, we reduced the amount of phase space addressed by making educated guesses from layer \(p-1\) to layer p, strongly inspired by Ref. [56], and demonstrated precise results with much fewer function calls. The combination of educated guesses and parallel function evaluations made for a consequent speed-up, bringing down typical calculation times of half a day to an hour. Finally, the global optimizer (DE) has shown strong results in the context of noisy and changing landscape [57], a typical behavior of our noisy intermediate-scale quantum platforms. Using (DE) might prove robust in an experimental setup.

As can be seen in Fig. 8(a), the performances of QAOA for solving the UD-MIS problem are good in average, exceeding approximation ratios of 0.95 after seven QAOA layers. As can be noted in the figure, the distribution of the approximation ratio for each layer is rather wide. At the third layer, the distribution starts to separate in two bulks. The lower bulk stagnates by the fourth layer as the approximation ratio stays inferior to 0.85 until the last layer, while the approximation of the upper bulk increases to one as the depth grows. For completeness, we show on panel (b) and (c) of Fig. 8 a typical graph instance of each group. The instances in the lower bulk correspond to worst-case scenarios and represent 9.5% of the instances. Understanding the characteristics of the worst instances is of crucial importance to characterize the quantum approaches. Indeed in the approximation theory the quality of an algorithm is benchmarked on worst-case instances. The approximation ratio achieved by the algorithm on these particular instances is a guarantee from below for any other instance. Finding worst-case scenario has been investigated in the past for Max-Cut on uniform 3-regular graphs at depths \(p=\{1,2,3\}\) [11, 58]. Obtaining a lower bound guarantee of QAOA on UD-MIS, which we leave for future work, would enable us to compare the quantum approach to the classical approximation scheme to assess an eventual quantum advantage.

(a) For each layer, the median is plotted and the violin envelop represents the distribution of the approximation ratio achieved for each of the 84 points. While the approximation ratio grows rapidly to 1 in most cases, there seems to be some instances that are harder to optimize. We isolate a graph representative of the best-case bulk (b) and one for the worst-case bulk (c). Further understanding of the worst-case graphs is important in order to estimate the quality of a quantum approximation algorithm

5 Conclusions and perspectives

Qualifying quantum algorithms on difficult optimization problems is of great importance to evaluate the benefit of quantum computing, as these problems are at the core of many industrial applications where they often constitute performance bottlenecks.

Two major principles must be implemented in such a process:

-

1.

Rely on a collaboration between experts in the application field under study and quantum computing experts, in order to design fine-tuned, ad-hoc, software-hardware solutions;

-

2.

Benchmark quantum algorithms not only against exact classical algorithms, by nature exponential on this class of problems, but also versus available approximate polynomial ones.

The first point is crucial, because quantum solutions can often take advantage of the specific characteristics of the targeted quantum hardware. The second point is required for a fair-play competition between quantum and classical approaches for difficult optimization, in order to precisely evaluate a potential quantum advantage.

This paper reports a case study based on this protocol in the field of smart-charging of electric vehicles. We specified two smart-charging problems, which, although stylized to be treated by available quantum approaches, stay representative of the real operational problems currently solved by the EDF subsidiaries involved in the field. We developed a hardware-efficient implementation of QAOA on quantum devices based on Rydberg atoms arrays to solve these two problems, respectively modeled as “sub-difficult” instances of Max-k-Cut and MIS NP-hard problems. We implemented these procedures on a real data-set of 2250 loads, and compared quantum solutions to classical approximate ones, up to current limits of classical simulation of quantum hardware with \(N\leq 20\) qubits. In both cases, quantum algorithms behave correctly, obtaining high approximation ratios, coherently with the fact that both applications are modeled as “less difficult” instances than the worst-case ones of these two NP-Complete problems.

These results, obtained through a rigorous protocol, are very encouraging. Future works will involve testing the quantum approaches on the real Rydberg atoms quantum processor developed by Pasqal in the 100-1000 qubits range [16]; making the smart-charging problems more realistic by incorporating new constraints (e.g maximal available power on the load station), a real challenge as this should make the associated Hamiltonians to be implemented on the processor more complex; more specifically from an application viewpoint, looking for efficient heuristics to transform general graphs in unit-disk ones, which would drastically simplify the procedure for quantum solving of MIS. On this latter point, another interesting option is to explore smart-charging problems which are “naturally” two dimensional—e.g. based on the “autonomy radius” of vehicles or the “action radius” of charging points –, thus replacing the costly and hypothetical resolution of the (UD) problem by a simple scale reduction in the plan.

Availability of data and materials

The code used for the simulations is available at https://github.com/pasqal-io/SmartCharging, while the data generated and used for the figures is accessible at https://figshare.com/account/home#/projects/101369. The data driven from the Belib network of load stations are public and available at www.data.gouv.fr/fr/datasets/.

Notes

An algorithm is said to be pseudo-polynomial if it is polynomial in the numeric values of its data, but super-polynomial in the length of their binary encoding.

A Polynomial Time Approximation Scheme (PTAS) is a family of ε-parametrized polynomial algorithms allowing to approximate optimal solutions “arbitrary closely” by a factor of \((1 - \varepsilon )\), \(\varepsilon >0\). When the algorithms are also polynomial in their parameter ε, one talks of Full Polynomial Time Approximation Scheme (FPTAS)

The above formulation supposes that a starting date is assigned to each load task, in order to represent it as an interval on the time line. In a real smart-charging management system, such dates could be fixed by the users of the vehicles, imposed by technical constraints or decided by the smart-charging manager.

Note that, for the small instances studied here, classical brute-force algorithm can find the exact solution in a short time

References

Shor PW. Algorithms for quantum computation: discrete logarithms and factoring. In: Proceedings 35th annual symposium on foundations of computer science. 1994. p. 124–34.

Grover LK. A fast quantum mechanical algorithm for database search. In: Proceedings of the twenty-eighth annual ACM symposium on theory of computing. STOC’96. New York: Association for Computing Machinery; 1996. p. 212–9. ISBN 978-0-89791-785-8. https://doi.org/10.1145/237814.237866.

Bennett CH, Bernstein E, Brassard G, Vazirani U. Strengths and weaknesses of quantum computing. SIAM J Comput. 1997;26(5):1510–23. https://doi.org/10.1137/s0097539796300933.

Viamontes GF, Markov IL, Hayes JP. Is quantum search practical?. Comput Sci Eng. 2005;7(3):62–70.

Preskill J. Quantum computing in the NISQ era and beyond. Quantum. 2018;2:79. https://doi.org/10.22331/q-2018-08-06-79

Choi J-Y, Hild S, Zeiher J, Schauss P, Rubio-Abadal A, Yefsah T, Khemani V, Huse DA, Bloch I, Gross C. Exploring the many-body localization transition in two dimensions. Science. 2016;352(6293):1547–52. https://doi.org/10.1126/science.aaf8834.

Scholl P, Schuler M, Williams HJ, Eberharter AA, Barredo D, Schymik K-N, Lienhard V, Henry L-P, Lang TC, Lahaye T, Läuchli AM, Browaeys A. Programmable quantum simulation of 2D antiferromagnets with hundreds of Rydberg atoms. 2020. arXiv e-prints arXiv:2012.12268.

Kendon V. Quantum computing using continuous-time evolution. 2020. arXiv:2004.00704.

Satoshi M, Hidetoshi N. Mathematical foundation of quantum annealing. 2008. arXiv:0806.1859.

Farhi E, Goldstone J, Gutmann S, Quantum SM. Computation by Adiabatic Evolution. 2000. arXiv:quant-ph/0001106.

Farhi E, Goldstone J, Gutmann S. A Quantum Approximate Optimization Algorithm. 2014. arXiv:1411.4028.

Ibarra OH, Kim CE. Fast approximation algorithms for the knapsack and sum of subset problems. J ACM. 1975;22(4):463–8. https://doi.org/10.1145/321906.321909.

Pichler H, Sheng-Tao W, Zhou L, Choi S, Quantum LM. Optimization for Maximum Independent Set Using Rydberg Atom Arrays. 2018. arXiv:1808.10816v1.

Saffman M, Walker TG, Mølmer K. Quantum information with Rydberg atoms. Rev Mod Phys. 2010;82(3):2313–63. https://doi.org/10.1103/RevModPhys.82.2313.

Saffman M. Quantum computing with atomic qubits and Rydberg interactions: progress and challenges. J Phys B, At Mol Phys. 2016;49(20):202001. https://doi.org/10.1088/0953-4075/49/20/202001.

Henriet L, Beguin L, Signoles A, Lahaye T, Browaeys A, Reymond G-O, Jurczak C. Quantum computing with neutral atoms. Quantum. 2020;4:327. https://doi.org/10.22331/q-2020-09-21-327.

Graham RL, Lawler EL, Lenstra JK, Rinnooy Kan AHG. Optimization and approximation in deterministic sequencing and scheduling: a survey. Ann Discrete Math. 1979;5:287–326.

Skutella M. Semidefinite relaxations for parallel machine scheduling. In: 39th annual symposium on foundations of computer science. Palo Alto, CA, USA. 1998. p. 472–81.

Heng Y, Yinyu Y, Jiawei Z. An approximation algorithm for scheduling two parallel machines with capacity constraints. Discrete Appl Math. 2003;130:449–67.

Skutella M, Woeginger GJ. A PTAS for minimizing the total weighted completion time on identical parallel machines. CORE Discussion Papers. Report No.: 1999029. Université catholique de Louvain, Center for Operations Research and Econometrics (CORE); 1999. https://ideas.repec.org/p/cor/louvco/1999029.html.

Woeginger G. When does a dynamic programming formulation guarantee the existence of a fully polynomial time approximation scheme (FPTAS)? INFORMS J Comput. 2000;12:57–74. https://doi.org/10.1287/ijoc.12.1.57.11901.

Smith WE. Various optimizers for single-stage production. Nav Res Logist Q. 1956;3:59–66.

Goemans MX, Williamson DP. Improved approximation algorithms for maximum cut and satisfiability problems using semidefinite programming. J ACM. 1995;42(6):1115–45.

Frieze A, Jerrum M. Improved approximation algorithms for Max k-Cut and Max bisection. Integer Program Comb Optim. 1995;920:1–13.

de Klerk E, Pasechnik D, Warners J. On approximate graph colouring and Max k-Cut algorithms based on the ϑ function. J Comb Optim. 2004;8(3):267–94.

Hastad J. Some optimal inapproximality results. J ACM. 2001;48(4):798–859.

Kann V, Khanna S, Lagergren J, Panconesi A. On the hardness of approximating Max-k-Cut and its dual. Chic J Theor Comput Sci. 1997;2:1–18.

Papadimitriou CH, Yannakakis M. Optimization, approximation, and complexity classes. J Comput Syst Sci. 1991;43(3):425–40.

Khot S, Kindler G, Mossel E, O’Donnell R. Optimal inapproximability results for MAX-CUT and other 2-variable CSPs? SIAM J Comput. 2007;37(1):319–57.

Crooks GE. Performance of the Quantum Approximate Optimization Algorithm on the Maximum Cut Problem. 2018. arXiv e-prints arXiv:1811.08419.

Kolen AWJ, Lenstra JK, Papadimitriou CH, Spieksma FCR. Interval scheduling: a survey. New York: Wiley; 2007. www.interscience.wiley.com.

Spieksma FCR. On the approximability of an interval scheduling problem. J Sched. 1999;2(5):215–27.

Chuzhoy J, Ostrovsky R, Rabani Y. Approximation algorithms for the job interval selection problem and related scheduling problemsInterval. Math Oper Res. 2006;31(4):730–8.

Bevern R, Mnich M, Niedermeier R, Mathias W. Interval Scheduling and Colorful Independent Sets. 2014. arXiv:1402.0851v2.

Chan TM, Har-Peled S. Approximation algorithms for maximum independent set of pseudo-disks. Discrete Comput Geom. 2012;48(2):373–92.

Nieberg T, Hurink J, Kern W. A robust PTAS for maximum WeightIndependent sets in unit disk graphs. In: International workshop on graph-theoretic concepts in computer science. 2004. p. 214–21.

Cerezo M, Arrasmith A, Babbush R, Benjamin SC, Endo S, Fujii K, McClean JR, Mitarai K, Yuan X, Cincio L, Coles PJ. Variational Quantum Algorithms. 2020. arXiv e-prints arXiv:2012.09265.

Hadfield S, Wang Z, O’Gorman B, Rieffel EG, Venturelli D, Biswas R. From the Quantum Approximate Optimization Algorithm to a Quantum Alternating Operator Ansatz. 2017. arXiv e-prints arXiv:1709.03489.

Kudo K. Constrained quantum annealing of graph coloring. 2018.

Bravyi S, Kliesch A, Koenig R, Tang E. Hybrid quantum-classical algorithms for approximate graph coloring. 2020. arXiv e-prints arXiv:2011.13420.

Lechner W, Hauke P, Zoller P. A quantum annealing architecture with all-to-all connectivity from local interactions. Sci Adv. 2015;1(9):e1500838.

Lechner W. Quantum approximate optimization with parallelizable gates. IEEE Trans Quantum Eng. 2020;1:1–6.

Henriet L. Robustness to spontaneous emission of a variational quantum algorithm. Phys Rev A. 2020;101(1):012335. https://doi.org/10.1103/PhysRevA.101.012335.

Park J, General BS. Heuristics for Nonconvex Quadratically Constrained Quadratic Programming. 2017. arXiv:1703.07870.

Elloumi S, Lambert A. Global solution of non-convex quadratically constrained programs. In: Optimization methods and software. vol. 34. London: Taylor & Francis; 2019. p. 98–114.

Billionnet A, Elloumi S, Lambert A. Linear Reformulations of Integer Quadratic Programs. Model Comput Optim Inf Syst Manag Sci. 2008;14.

Wang Z, Hadfield S, Jiang Z, Rieffel EG. Quantum approximate optimization algorithm for MaxCut: a fermionic view. Phys Rev A. 2018;97(2):022304. https://doi.org/10.1103/physreva.97.022304.

Quantum Learning Machine. https://atos.net/en/solutions/quantum-learning-machine.

Johansson JR, Nation PD, Nori F. QuTiP 2: a Python framework for the dynamics of open quantum systems. Comput Phys Commun. 2013;184(4):1234–40. https://doi.org/10.1016/j.cpc.2012.11.019.

Belib réseau parisien de bornes de recharges pour véhicules électriques. www.data.gouv.fr/fr/datasets/belib-reseau-parisien-de-bornes-de-recharges-accelerees-22-kw-ac-dc-pour-vehicules-electriques/; 2017.

Hastings MB. Classical and quantum bounded depth approximation algorithms. 2019.

Sahni SK. Algorithms for scheduling independent tasks. J ACM. 1976;23(1):116–27. https://doi.org/10.1145/321921.321934.

de Klerk E, Pasechnik DV, Warners JP. On approximate graph colouring and max-k-cut algorithms based on the θ-function. J Comb Optim. 2004;8(3):267–94.

Serret MF, Marchand B, Ayral T. Solving optimization problems with Rydberg analog quantum computers Realistic requirements for quantum advantage using noisy simulation and classical benchmarks. 2020. arXiv:2006.11190.

Storn R, Price K. Differential evolution—a simple and efficient heuristic for global optimization over continuous spaces. J Glob Optim. 1997;11(4):341–59.

Zhou L, Wang S-T, Choi S, Pichler H, Lukin MD. Quantum approximate optimization algorithm: performance, mechanism, and implementation on near-term devices. Phys Rev X. 2020;10:021067. https://doi.org/10.1103/PhysRevX.10.021067.

Rocca P, Oliveri G, Massa A. Differential evolution as applied to electromagnetics. IEEE Antennas Propag Mag. 2011;53(1):38–49.

Wurtz J, Love PJ. Bounds on MAXCUT QAOA performance for p>1. 2020.

Egger DJ, Marecek J, Woerner S. Warm-starting quantum optimization. 2020.

Shaydulin R, Safro I, Larson J. Multistart methods for quantum approximate optimization. 2019 IEEE high performance extreme computing conference (HPEC). 2019. https://doi.org/10.1109/hpec.2019.8916288.

Shaydulin R, Alexeev Y. Evaluating quantum approximate optimization algorithm: a case study. In: 2019 tenth international green and sustainable computing conference (IGSC). 2019. p. 1–6. https://doi.org/10.1109/IGSC48788.2019.8957201.

Brandao FGSL, Broughton M, Farhi E, Gutmann S, For NH. Fixed Control Parameters the Quantum Approximate Optimization Algorithm’s Objective Function Value Concentrates for Typical Instances. 2018.

Guerreschi GG, Smelyanskiy M. Practical optimization for hybrid quantum-classical algorithms. 2017.

Nelder JA, Mead R. A simplex method for function minimization. Comput J. 1965;7:308–13.

Gill PE, Murray W, Wright MH. Practical optimization. Classics in applied mathematics. Philadelphia: SIAM; 2019. ISBN 978-1-61197-560-4. https://books.google.fr/books?id=G-vEDwAAQBAJ.

Powell MJD. A direct search optimization method that models the objective and constraint functions by linear interpolation. In: Advances in optimization and numerical analysis. Gomez S, Hennart J-P, editors. Dordrecht: Springer; 1994. p. 51–67. ISBN 978-94-015-8330-5. https://doi.org/10.1007/978-94-015-8330-5_4.

Acknowledgements

We thank Thomas Ayral, Antoine Browaeys, Thierry Lahaye and Christophe Jurczak for discussions. This work was developed as a collaboration within the European Commission in the Horizon 2020 FET-Quantum Flagship project PASQuanS (817482).

Funding

WL was supported by the Austrian Science Fund (FWF) through a START grant under Project No. Y1067-N27 and the SFB BeyondC Project No. F7108-N38, the Hauser-Raspe foundation. This material is based upon work supported by the Defense Advanced Research Projects Agency (DARPA) under Contract No. HR001120C0068. Any opinions, findings and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of DARPA. It was granted access to the HPC resources of CINES under the allocation 2019-A0070911024 made by GENCI. It was also supported by EDF R&D, the Research and Development Division of Electricité de France and by Loria, the Lorraine Research Laboratory in Computer Science and its Applications University of Lorraine, France.

Author information

Authors and Affiliations

Contributions

All authors contributed to all the parts of the paper. All authors read and approved the final manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Additional information

Abbreviations

Analog Quantum Simulators (AQS), Differential Evolution (DE), MIS: Maximum Independent set, Noisy Intermediate Scale Quantum (NISQ), Polynomial-time Approximation Scheme (PTAS), Quantum Approximate Optimization Algorithm (QAOA), Unit-Disk (UD).

Appendices

Appendix A: Integer linear reformulation of the (QP) problem

The (QP) problem introduced Sect. 3.3.2 can be solved by a classical reformulation-linearization of non-convex quadratic programs into binary/integer ones, by considering variables \(x_{i}\) and \(y_{i}\) as integers and replacing them by their binary expansions in constraints(8) and (9), then adding binary variables and appropriate constraints to represent the products between them [44–46]. The resulting binary/integer linear model is as follows:

Once rewritten this way, and assuming that it is feasible, (QP) can be solved by any conventional techniques dedicated to linear discrete constraint satisfaction problems. However, it is wort noting that this formulation quickly leads to a large amount of binary variables, since for each couple \((x_{i},y_{i})\) of continuous variables modeling a vertex in the original problem, we introduce \(2\log (\overline{L})\) binary variables \((bx_{i},by_{i})\) to expand its integer representation, and \((\log (\overline{L}))^{2}\) binary variables \(wx_{i,j}^{k,k'}\)/\(wy_{i,j}^{k,k'}\) to express each product in the constraints related to the edges.

Appendix B: Optimizing the classical loop of QAOA

Although translation of a combinatorial problem focuses on the quantum loop of the QAOA, the quality of the classical optimizer part is of crucial importance. Indeed, an inefficient classical optimizer will bring excessive overhead to the whole process. For practical use, it is of crucial importance to use an optimizer tailored to the machine. In the following paragraphs, we present results on the research for the best classical optimization process.

In the (SC1) problem, the weights \(w_{uv}\) of the edges in the graph are imposed by real data. These weights appear in Eq. (15) and multiply the parameterized angle γ. They therefore impact the phase that is applied to each basis state of the computational basis in the QAOA cycle. To visualize the impact of weights, we plot the energy (or cost-function) landscape at level \(p=1\) as a function of the parameters \((\gamma,\beta )\). Figure 9 contains numerical simulation of the energy landscape for the Max-Cut problem, evaluated on a graph of size 10, with a resolution of 30 points along each axis. For the same problem, we re-weight the adjacency matrix of the graph by different factors. On the first figure, a high density of peaked valleys and hills indicates that optimization is difficult and would require an important amount of function evaluations to find a decent solution. The amount of peaks and valleys is due to the fact that the cost Hamiltonian adds a phase term to each basis vector \(z_{i}\). Indeed, applying Ĉ with an angle γ modifies \(|z_{i}\rangle \) to \(e^{i\gamma C_{i}}|z_{i} \rangle \), where \(C_{i}\) corresponds to the cost of the coloring \(z_{i}\). Modifying the graph weights consequently modifies the the phase applied to each basis state \(z_{i}\). A smaller \(C_{i}\), as seen in the third figure, smooths the cost-function landscape enabling adequate local optimization. Artificially reducing \(C_{i}\) too much however might over-smooth the landscape, reducing the possible phases that QAOA can apply, hence missing the global minima. We want \(C_{i}\) to be big enough to allow basis states \(z_{i}\) to acquire a phase \(e^{i\phi }\) in a comfortable range. At the same time, we do not believe a phase \(\phi > 2 \pi \) is necessary. The re-weighting \(C_{i} = R^{*} C_{i}\), where \(R^{*} =\frac{2\pi }{\max (C_{i})}\) satisfies the two previous conditions. Numerically, we find that re-weighting the adjacency matrices of all instances by the factor R indeed concentrates all optimal parameters in a restricted zone of parameter space.

The zoom effect. From left to right, we normalized an instance from (SC1) respectively by \(R/5\), \(R/2\) and R to observe the effect on the energy landscape of \(\mathrm{QAOA}_{1}\). The closer to R, the better the zoom on the global minima. The re-weighting of a graph affects the energy landscape: it can therefore be used as leverage to either zoom on the point of interest to apply local optimization, or on the contrary it might be used to zoom out of barren plateaus to explore more interesting phase spaces

Practically of course, calculating \(R^{*}\) implies knowledge of \(\max {C_{i}}\), which corresponds to the exact solution to the initial problem. We therefore propose the upper bound \(R =w_{\max } \frac{N^{2}}{4}\), where \(w_{\max } = \max_{u,v}w_{uv}\). It is calculated from the best-cut on a complete graph where all weights would be equal to \(w_{\max }\).

In the scenario of (SC1), we see that the choice for the optimizer depends on the normalization of the weights. If one manages a good normalization, then local methods of optimization can be used with high guarantees of success. In general, we advocate the use of a global optimizer if there is no prior knowledge of the energy landscape.

Appendix C: Finding correct parameters: the Egg optimization and local vs. global methods

3.1 C.1 Educated global guess (Egg) optimization

In order to find the best variational parameters for p layers, we develop a method based on the idea of making an educated guess from previous layers to the new one [56]. The educated global guess (Egg) optimization process uses the differential evolution (DE) [55] rather than a local optimization in an attempt to find the global optimum in a wrinkled energy landscape. (DE) works by starting with an ensemble of points in the phase-space, called the agent population. Then, theses agents are moved around by recombining their coordinates, and the function is evaluated for these new agents. If the new position brings an improvement, it is kept, otherwise it is discarded. This process is repeated until convergence to a minimum, although there is no guarantee that the global minima will be found. While it cannot be sure that our method will always work perfectly, the constant growth of the approximation ratio as p increases in our results is a reassuring indicator, as illustrated on the top panel of Fig. 8. A second major hurdle in the optimization process is the high-dimensionality of the phase space: for p layers, we need to find the global minimum of a space of size 2p. We strongly reduced the complexity of the problem by making a global educated guess for the optimal parameters at layer p using the optimal parameters found at layer \(p-1\) (Alg. 1). The heuristic of an educated guess from the previous layer to the new one was developed in Ref. [56]. Our version uses a global optimizer rather than a local one in an attempt to find the global optima in a wrinkled energy landscape. This very much improved computation time by reducing the amount of phase space addressed, and demonstrated precise results with much fewer function calls than local methods (see Appendix C). The algorithm is described in pseudo-code below (Alg. 1). It works as follows: find optimal parameters \((\gamma _{1}^{*},\beta _{1}^{*})\) for \(p=1\). For the next layer, optimize the function \(C: (\gamma _{2},\beta _{2}) \mapsto C (\gamma _{1}^{*},\gamma _{2}, \beta _{1}^{*},\beta _{2})\). As such, two variables are already fixed and the space to explore is once again only bi-dimensional. Once the optimization ends on the two new coordinates, a local optimization is done on all the coordinates. This quick step enables to re-calibrate the previous parameters: it is therefore possible to achieve a trotterization process for high values of p.

3.2 C.2 Local vs. global methods

In Ref. [10] the grid search was proposed to find optimal parameters for QAOA. This method returns a global optima but quickly becomes intractable while growing the depth p. Thus a practical approach is to use numerical optimization methods. They may be divided in two groups: global and local methods. Global optimization procedures (such as grid search) address a higher amount of the search space while the local ones return a solution that lays in a neighborhood of a predefined initial point.

An experimental protocol for QAOA should specify the method to use in the parameter optimization step as well as its additional parameters. Local optimization routines are often used in works on applied QAOA [59–61], however the reasons why a certain method is chosen for a particular application are usually omitted. For (SC1) we compared different methods in terms of their performances (evaluated by the approximation ratio of the QAOA with returned parameters) and costs (measured in number of calls of the function to optimize, i.e. a function that computes \(\langle \mathbf{z}_{\boldsymbol{\gamma, \beta }} |\hat{C}| \mathbf{z}_{ \boldsymbol{\gamma, \beta }} \rangle \)).

In order to apply a local optimization routine one has to specify a good initialization point. Without any prior knowledge about the parameter space it is chosen randomly from a set of possible values (usually several points are studied in parallel). However in some applications a pattern in optimal parameters is observed. Such pattern may rely optimal parameter values at different depths, as in [56], or the values for certain groups of instances [62].

For \(\mathrm{QAOA}_{1}\) the equality \(\gamma =0\) implies the absence of any quantum effect (the resulting distribution stays uniform). In Fig. 10 we observe that the absolute values of β and γ get smaller for instances with bigger \(|V|\), but in general it does not imply that the quantum effect becomes less important as γ may changed to an arbitrary positive value by multiplying all weights with some factor. We also can’t claim that \(\mathrm{QAOA}_{1}\) output distribution gets closer to uniform even if we observed that their mean values effectively get closer.

We found that at depth \(p=1\) optimal parameter values are close for instances with the same number of nodes \(|V|\) and that they get concentrated while growing the size of the graph 10. For \(p=1\) we developed an initialization procedure for local methods that relies on the observed pattern. At depths \(p>1\) we used the INTERP heuristic introduces in Ref. [56] which is based on the intuition that optimal parameters at depth p are close to a linear transformation of optimal values at \(p-1\), which seems to be true for our instances (see Fig. 10).

A choice of the optimization routine has a high impact on the performance and cost of an experimental implementation of QAOA as was shown in Ref. [63]. By experimentally comparing different optimization methods we observed that for local methods, a gradient-free Nelder–Mead [64] is the best choice for our purposes: its performance is comparable to the one of the quasi-Newton BFGS [65] (and both methods outperform the COBYLA routine [66] which is often used in works on applied QAOA [59]) while it requires less evaluations of the objective function (see Fig. 11).

Cost and performance of different numerical optimization methods on Max-Cut (SC1), for graphs with size \(N= 10\). Differential Evolution (DE) corresponds to a global optimization algorithm, inspired by the INTERP method. While yielding results very close to Nelder–Mead, it does so by requiring very little number of function evaluations. Building optimization processes such as DE that require little function evaluations is key in the NISQ era as it ensures quicker performances on unstable devices