Abstract

Correlation plenoptic imaging (CPI) is a scanning-free diffraction-limited 3D optical imaging technique exploiting the peculiar properties of correlated light sources. CPI has been further extended to samples of interest to microscopy, such as fluorescent or scattering objects, in a modified architecture named correlation light-field microscopy (CLM). Interestingly, experiments have shown that the noise performance of CLM is significantly improved over the original CPI scheme, leading to better images and faster acquisition. In this work, we provide a theoretical foundation for this advantage by investigating the properties of both the signal-to-noise and the signal-to-background ratios of CLM and the original CPI setup.


1 Introduction

Plenoptic imaging is based on retrieving both the spatial distribution and the propagation direction of light within the single exposure of a digital camera [1,2,3]; this enables both refocusing in post-processing and scanning-free 3D imaging. The technique is currently employed in several applications that include microscopy [4,5,6,7], particle image velocimetry [8], wavefront and remote sensing [9,10,11,12,13], particle tracking and sizing [14], and stereoscopy [1, 15, 16]. Being capable of acquiring multiple 2D viewpoints of the sample of interest within a single shot, plenoptic apparatuses are amongst the fastest devices for performing scanning-free 3D imaging [17], as already shown recently for surgical robotics [18], imaging of animal neuronal activity [7], blood-flow visualization [19], and endoscopy [20]. The novelty of correlation plenoptic imaging (CPI) [21,22,23,24,25], compared to conventional plenoptic imaging devices, is to employ a correlated light source, which makes it possible to collect information on the intensity distribution and the propagation direction of light on two distinct sensors, rather than one [2, 26, 27]. The most immediate advantage is to overcome the strong tradeoff between spatial and directional resolution affecting conventional plenoptic imaging implementations [28,29,30,31,32,33,34,35,36]. CPI, however, has the main drawback of no longer being a single-shot technique. In fact, in order to retrieve the amount of information required to reconstruct the 3D scene of interest, an average of the correlations between intensity fluctuations separately measured by the two sensors must be performed over many frames, each frame representing a different statistical realization of the correlated light source, which is generally chosen to be a source of entangled beams or chaotic light. To aim at real-time imaging, the number of required frames should thus be reduced as much as possible.
On the other hand, the number of statistical realizations that are sampled cannot be reduced too much without negatively affecting the image quality, namely, its signal-to-noise ratio (SNR) and signal-to-background ratio (SBR). Understanding the relationship linking the image quality with the number of acquired frames would thus provide a powerful tool for optimizing the measurement time of CPI and determining the necessary number of frames for reaching the desired output quality. This has been done in Ref. [37], where the noise properties of the original CPI scheme [21, 25] (therein referred to as SETUP1) have been outlined.

In the present work, we offer a detailed study of the SNR and the SBR characterizing correlation light-field microscopy (CLM), a versatile CPI architecture oriented to microscopy [38]. As we shall better explain in the next section, CLM enables extending the use of CPI to both self-emitting (e.g. fluorescent) and scattering samples, and has the potential to be more robust to the presence of turbulence in the surroundings of the sample. Additionally, experimental evidence has shown that CLM is characterized by far superior noise performance compared to the original CPI scheme [39]. In this paper, we develop a solid theoretical background for interpreting and understanding this effect by comparing the expected noise performance of CLM with that of the original CPI scheme [21, 25, 37]. The paper is organized as follows. In Sect. 2, we report the analysis of the SNR and SBR characterizing both the unprocessed point-to-point correlation function of CLM and its final refocused image; the results are compared with those obtained for the original CPI architecture [37]. In Sect. 3, we discuss the theoretical and practical implications of the presented results, while in Sect. 4, we report their theoretical and methodological basis. For the reader’s convenience, lengthy computations and exact formulas are reported in the Appendix.

2 Results

2.1 CLM: working principle, correlation function and refocusing algorithm

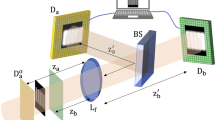

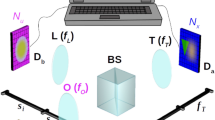

Let us start by briefly presenting the two CPI architectures that will be compared throughout the paper: the original scheme [21], reported in Fig. 1a, and CLM [38], shown in Fig. 1b. The original scheme is a plenoptic enhancement of ghost imaging with chaotic light [40], whereas CLM is a plenoptic enhancement of an ordinary microscope. As emphasized in the figure, throughout the whole paper, the two architectures will be identified with the symbols \({\mathcal {G}}\) (as a placeholder for the scheme based on ghost imaging) and \({\mathcal {M}}\) (for the microscopy-oriented scheme). In both setups, light emitted by a chaotic source is split into two paths a and b by means of a beam-splitter (BS) and is recorded at the end of each path by the high-resolution detectors \(D_{a}\) and \(D_{b}\). Despite the intrinsic differences between the two schemes, the roles of \(D_{a}\) and \(D_{b}\) are similar: in both cases, \(D_{b}\) is dedicated to retrieving information about the direction of propagation of light, while \(D_{a}\) collects an image of the sample that can be either focused or out of focus, depending on where the sample is placed along the optical axis. The crucial difference lies in the fact that a conventional image of the object (i.e. based on intensity measurement) is available on \(D_a\) in scheme \({\mathcal {M}}\), but cannot be retrieved in scheme \({\mathcal {G}}\). In fact, the original CPI scheme is based on so-called ghost imaging [40]: the focused image of the object can only be retrieved by measuring correlations between a bucket (i.e. single-pixel) detector (here represented by the entire sensor \(D_{b}\), upon integration over all pixels) collecting light scattered/reflected/transmitted by the object, and a high-resolution detector (here \(D_{a}\)) placed at a distance from the source equal to the object-to-source distance.
However, as we shall see, in both setups, if the object is out of focus, the focused image can still be recovered by means of a refocusing algorithm applied to the point-by-point correlation of the intensity fluctuations measured by \(D_{a}\) and \(D_{b}\).

In scheme \({\mathcal {G}}\), light from a chaotic source is split by a beam splitter (BS). The transmitted beam illuminates the object, passes through an imaging lens, and is recorded by detector \(D_{b}\), which is placed in the conjugate plane of the source, as generated by lens L. The reflected beam impinges on detector \(D_{a}\). When the object-to-source distance is equal to the distance between \(D_a\) and the source (\(z_a=z_b\)), the focused ghost image of the object can be retrieved through correlations between each pixel of \(D_a\) and the overall (integrated) signal from \(D_b\). When the object-to-source distance differs from the \(D_a\)-to-source distance (\(z_a\ne z_b\)), the ghost image is blurred, but can be refocused by reconstructing the point-to-point correlation between each pixel of \(D_a\) and \(D_b\) and applying a proper refocusing algorithm. Scheme \({\mathcal {M}}\), instead, belongs to a novel subclass of CPI schemes in which the object is placed before the beam-splitter, is modelled as a chaotic source, and can be transmissive, reflective, scattering, or even self-emitting. The setup involves an objective lens (O), a tube lens (T), and an auxiliary lens (L), with focal lengths \(f_{O}\), \(f_{T}\), \(f_{L}\), respectively. Detector \(D_{a}\) is fixed in the back focal plane of the tube lens, while the sample is at an arbitrary distance \(z_{a}\) from the objective lens; its conventional image is directly focused on \(D_a\) when \(z_{a}=f_{O}\), and is blurred otherwise. Detector \(D_{b}\) retrieves the image of the objective lens, as produced by the lens L. In this setup as well, correlating intensity fluctuations on the two detectors provides the plenoptic information needed to reconstruct the focused image of the object even when the object is placed away from the conjugate plane of detector \(D_a\), as defined by the objective and the tube lens.

We shall now demonstrate that CLM entails an improvement of the noise properties with respect to the original CPI protocol. To this end, we shall consider the pixel-by-pixel correlation of the intensity fluctuations measured on the two disjoint detectors \(D_{a}\) and \(D_{b}\). More specifically, the intensity patterns \(I_{A}\left( \varvec{\rho }_{a}\right)\) and \(I_{B}\left( \varvec{\rho }_{b}\right)\), with \(\varvec{\rho }_{a,b}\) the transverse coordinate on each detector plane, are recorded in time to reconstruct the correlation function

\[\Gamma (\varvec{\rho }_{a},\varvec{\rho }_{b})=\left\langle \Delta I_{A}(\varvec{\rho }_{a})\,\Delta I_{B}(\varvec{\rho }_{b})\right\rangle , \qquad (1)\]

where \(\Delta I_{K}(\varvec{\rho }_{k})=I_{K}(\varvec{\rho }_{k})-\left\langle I_{K}(\varvec{\rho }_{k})\right\rangle\) for \(K=A,B\) and \(k=a,b\) are the fluctuations of intensities, with respect to their average values, registered on each pixel of the two detectors. The expectation values in Eq. (1) and in the average intensity should be evaluated considering the source statistics, but they can be approximated by time averages for stationary and ergodic sources [41].
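In practice, the expectation value is replaced by an average over the acquired frames. A minimal NumPy sketch of such an estimator is given below; it assumes that each frame has been flattened into a 1D vector of pixel intensities, and the function name `correlation_function` and the array layout are illustrative choices rather than part of the original analysis.

```python
import numpy as np

def correlation_function(frames_a, frames_b):
    """Frame-averaged estimate of <dI_A(rho_a) dI_B(rho_b)>.

    frames_a: array of shape (N_f, n_a), intensities on D_a (one row per frame)
    frames_b: array of shape (N_f, n_b), intensities on D_b (one row per frame)
    Returns an (n_a, n_b) array indexed by (pixel of D_a, pixel of D_b).
    """
    frames_a = np.asarray(frames_a, dtype=float)
    frames_b = np.asarray(frames_b, dtype=float)
    # Fluctuations with respect to the frame-averaged intensity of each pixel
    d_a = frames_a - frames_a.mean(axis=0)
    d_b = frames_b - frames_b.mean(axis=0)
    # Average over frames of the product of fluctuations, for every pixel pair
    return d_a.T @ d_b / frames_a.shape[0]
```

Each column of the returned array, at fixed pixel of \(D_b\), is one point-of-view image on \(D_a\).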

In both setups, the correlation function of Eq. (1) at a fixed \(\varvec{\rho }_{b}\) encodes a coherent image of the object [21, 38, 42, 43]. Each point \(\varvec{\rho }_{b}\) on the plane of detector \(D_{b}\) corresponds to a different point of view on the scene (as further discussed in Sec. 2.3), and the images corresponding to different \(\varvec{\rho }_{b}\) coordinates are shifted one with respect to the other. If the position of the object in each setup satisfies a specific focusing condition (\(z_a=z_b\) for \({\mathcal {G}}\), \(z_a=f_O\) for \({\mathcal {M}}\)), the relative shift of such images vanishes, and one can integrate over the detector \(D_{b}\) to obtain an incoherent image characterized by an improved SNR with respect to the single images. However, if the (intensity or correlation-based) image of the object is out of focus, before piling up the single coherent images through integration over \(D_{b}\), they must be realigned according to the following refocusing algorithm:

where the parameters \((\alpha ,\beta )\) depend on the specific CPI architecture:

where \(M = S_2/(S_1+z_b)\) is the magnification of the image of the source on \(D_b\), in setup \({\mathcal {G}}\), and \(M_a=f_T / f_O\) and \(M_b=S_I/S_O\) are the magnifications in arm a and in arm b, respectively, in setup \({\mathcal {M}}\). It is easy to verify that in the focused cases (\(z_a=z_b\) for \({\mathcal {G}}\), \(z_a=f_O\) for \({\mathcal {M}}\)), there is no need to either shift or scale the variables since the coherent images are already aligned and the high-SNR incoherent image can be obtained by simply integrating over \(D_b\).
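As an illustration of the realignment step, the sketch below works in one transverse dimension and assumes a refocusing rule of the form \(\Sigma _{\mathrm {ref}}(x_a)=\sum _{x_b}\Gamma (\alpha x_a+\beta x_b, x_b)\), in which each viewpoint is rescaled by \(\alpha\) and shifted by \(\beta x_b\) before the sum over \(D_b\). This functional form, the linear interpolation, and the name `refocus` are assumptions made for illustration; the values of \((\alpha ,\beta )\) are those prescribed for each architecture.

```python
import numpy as np

def refocus(gamma, x_a, x_b, alpha, beta):
    """Realign and sum the viewpoints of a sampled correlation function.

    gamma: array of shape (len(x_a), len(x_b)), sampled Gamma(x_a, x_b)
    x_a:   increasing 1D grid of coordinates on D_a
    x_b:   1D grid of coordinates on D_b
    Assumed rule: sum over x_b of Gamma(alpha * x_a + beta * x_b, x_b).
    """
    gamma = np.asarray(gamma, dtype=float)
    sigma = np.zeros(len(x_a))
    for j, xb in enumerate(x_b):
        # Coordinates on D_a at which the j-th viewpoint must be read
        sample_at = alpha * x_a + beta * xb
        # Linear interpolation; points outside the sensor contribute zero
        sigma += np.interp(sample_at, x_a, gamma[:, j], left=0.0, right=0.0)
    return sigma
```

With \(\alpha =1\) and \(\beta =0\) the function reduces to a plain integration over \(D_b\), which corresponds to the focused case discussed above.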

2.2 Noise properties of the refocused images in the two CPI architectures

Information on the noise affecting the refocused images can be obtained by considering the variance \({\mathcal {F}}(\varvec{\rho }_a)\) of the signal \(\Sigma _{\mathrm {ref}}\left( \varvec{\rho }_{a}\right)\). If a sequence of \(N_{f}\) frames is collected in order to evaluate the refocused image, the root-mean-square error affecting the evaluation of this quantity can be estimated by \(\sqrt{{\mathcal {F}}\left( \varvec{\rho }_{a}\right) /N_{f}}\). Therefore, the signal-to-noise ratio reads

\[\mathrm {SNR}(\varvec{\rho }_{a})=\frac{\Sigma _{\mathrm {ref}}(\varvec{\rho }_{a})}{\sqrt{{\mathcal {F}}(\varvec{\rho }_{a})/N_{f}}}. \qquad (4)\]

Derivation and properties of the variance term \({\mathcal {F}}\) can be found in Sect. 4. Due to its intrinsically local nature, the SNR of Eq. (4) cannot be used as an indicator of the quality of the refocused image in its entirety. We thus introduce a global figure of merit, the signal-to-background ratio, defined as:

where \(\Sigma _{\mathrm {\text {sig}}}\) is the refocusing function, evaluated at a point where signal is expected, and the quantities in the denominators are reference values of \(\Sigma\) and \({\mathcal {F}}\), evaluated for the background, namely, those parts of the image in which essentially no signal is expected. The distinction between SNR and SBR becomes relevant whenever the variance of the refocused image depends on the signal magnitude, with the two being substantially identical when uniform noise throughout the image is expected (as, e.g., in ghost imaging). The analytical expressions for SNR and SBR in the two CPI architectures of interest are quite cumbersome even in a geometrical-optics approximation, and enable only a few qualitative considerations. To overcome this difficulty and gain better insight into the differences in noise performance of the two schemes, numerical simulations have been carried out.
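For concreteness, the local SNR introduced at the beginning of this subsection can be evaluated pixel by pixel once the refocused signal and its single-frame variance are known. The following sketch only encodes the statement that the root-mean-square error is \(\sqrt{{\mathcal {F}}/N_f}\); the function name `snr_refocused` and its arguments are hypothetical.

```python
import numpy as np

def snr_refocused(sigma_ref, variance, n_frames):
    """Local SNR of a refocused image averaged over n_frames frames.

    sigma_ref: refocused signal Sigma_ref at each pixel of D_a
    variance:  single-frame variance F at the same pixels
    """
    sigma_ref = np.asarray(sigma_ref, dtype=float)
    variance = np.asarray(variance, dtype=float)
    # Root-mean-square error on the frame average
    rms_error = np.sqrt(variance / n_frames)
    return sigma_ref / rms_error
```

Dividing the result by \(\sqrt{N_f}\) gives a frame-independent quantity, which is the normalization used when comparing the two setups.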

2.2.1 Simulations of refocused images in the two CPI architectures

Here we shall discuss the results of the numerical simulations of the noise performances obtained in the two CPI architectures under consideration, for two different objects (see Fig. 2): a set of three equally spaced and uniformly transmissive slits, analogous to a typical element of a negative resolution test target, and a set of N equally spaced Gaussian slits, with N varying from 1 to 8. Specifically, the latter masks are characterized by field transmittance profile

with

and \(\{x_i\}_{1\le i\le N}\) the set of slit centers along x.

The noise performances of the two architectures will be compared as the transmissive area of the two objects is changed, which is obtained by varying the slit size for the first object, and N for the second. In the latter case, variation of N also enables checking the capability of the two setups to resolve an increasing number of details. In fact, the noise properties of ghost imaging depend only on the total transmissive area of the object, and not on the number of separate details of which it is composed [40]. Considering the difference in image formation between CLM and the (ghost imaging-based) original CPI scheme, it is interesting to check whether this property also holds in the CPI architectures under study.

On the left hand side, the object binary transfer function used for the simulations to which Figs. 3 and 7 refer: three equally spaced transmissive slits with varying parameter d; on the right hand side, the Gaussian object transfer function of Eq. (6), used for simulations in which the number of details is varied from 1 to 8

Let us start by considering the refocused images of a planar mask made of three parallel transmissive slits, as from Fig. 2a. In order to match the parameters for \({\mathcal {G}}\) and \({\mathcal {M}}\), we consider in both cases a mask placed at a distance of \(26.6\,\mathrm {mm}\) from the focusing element, namely \(1\,\mathrm {mm}\) farther than the conjugate plane of the sensor. The resolution at the focus for \({\mathcal {M}}\) is defined by the typical point-spread function of the microscope [38]. On the other hand, \({\mathcal {G}}\) is not capable of imaging the object with intensity measurements, but only through ghost imaging, namely by correlating the total intensity collected by \(D_{b}\) (regarded as a bucket detector) and the signals acquired by each pixel of \(D_{a}\). Therefore, as in a focused ghost imaging system, the output image is given by

so that the resolution at focus can be defined through the positive point-spread function \(\mathrm {PSF_{CPI}}\) (see [21]) as in conventional imaging. The resolution can be made equal for the two setups by matching their numerical apertures and choosing the source-to-\(D_a\) distance in \({\mathcal {G}}\) to be equal to the objective focal length in \({\mathcal {M}}\). Moreover, the two objects are illuminated by chaotic light of the same wavelength \(\lambda =550\,\mathrm {nm}\), while the numerical apertures of the imaging devices are equal and give a resolution \(\Delta x = 3\,\mu \mathrm {m}\) on the plane in which the correlation image is focused. In the comparison reported in Fig. 3, we can observe that the performance of setup \({\mathcal {M}}\) is better for all the considered values of the center-to-center slit distance, providing evidence of the advantage entailed by this setup.

SNR of the refocused images \(\Sigma _{\mathrm {ref}}\) of the triple slit reported in Fig. 2, placed \(1\,\)mm away from the plane at focus, averaged over the central points of the three slits and normalized with the square root of the frame number \(N_f\), evaluated for the \({\mathcal {M}}\) (solid blue line) and \({\mathcal {G}}\) (dashed red line) architectures characterized by the same numerical aperture, hence, resolution of the focused image

Let us now consider the noise performance of \({\mathcal {G}}\) and \({\mathcal {M}}\) in the case of the second object, while varying the number of slits N, and considering both focused and refocused images. In both protocols, focused images are characterized by diffraction-limited resolution, which we set to \(\Delta x=1.0\,\mu \mathrm {m}\) in both setups. In this condition, the term \({\mathcal {F}}_{0}\) in \({\mathcal {M}}\) has the strongest spatial modulation, while it smooths out as the object is moved away from the focused plane. Such a spatial dependence, which is not present in the corresponding term for \({\mathcal {G}}\), is expected to have a great impact on the SBR.

Comparison of the SNR (left panel) and SBR (right panel) of the final image obtained from Eq. A2. The plots refer to the case of focused images, obtained in \({\mathcal {G}}\) (red dots) and \({\mathcal {M}}\) (blue dots) schemes, for a varying number of Gaussian slits within an object with transmittance profile reported in Eq. (6) and Fig. 2b

Comparison of the SBR of the final image obtained from Eq. A2 in the case of a deeply out-of-focus object. The images are obtained in setups \({\mathcal {G}}\) (red dots) and \({\mathcal {M}}\) (blue dots) for a varying number of Gaussian slits within an object with transmittance profile reported in Eq. (6) and Fig. 2b

In the case of focused images, the simulations reported in Fig. 4 have been performed with the center-to-center slit distance \(|x_{i}-x_{i-1} |=3\Delta x\) and width \(w_x=\Delta x\), thus making the object details very close to the best achievable resolution in both setups. The SNR is averaged on the maxima of the image; to compute SBR, the signal and the background are evaluated by averaging, respectively, over the maxima and the minima of the image.

In the out-of-focus case, another feature of the refocusing functions has to be taken into account to rule out contributions that are not strictly related to the pure noise performance: a noise comparison can only make sense as long as the image quality is the same from an optical point of view, namely if the details in the images are refocused with the same visibility

\[{\mathcal {V}}=\frac{d^{+}-d^{-}}{d^{+}+d^{-}}, \qquad (9)\]

where \(d^{+}\) is the average value of the image on the peaks (corresponding to the slit centers) and \(d^{-}\) is the value in the minima between adjacent peaks. In both setups, as the object is moved away from the plane at focus, the visibility of refocused images decreases, with a slightly different trend in the two setups. Therefore, the slit distance in the out-of-focus regime has been optimized so that, if the object is placed at the same distance from the focused plane in the two setups, the refocusing visibility is 99% in both setups. We also made sure to consider a case in which the object is very far from the plane at focus, choosing a distance from the focused plane equal to 30 times the natural depth of focus of the systems, which is the same for both setups. In this deeply out-of-focus regime, the SNR is calculated in the maxima of the refocused function and the SBR comes out to be identical to the SNR; we thus report in Fig. 5 only the result for the SBR. While the noise profile in \({\mathcal {G}}\) is always constant, independently of the object distance, it is not constant in \({\mathcal {M}}\), where it flattens out the more the object is moved away from the conjugate plane of the detector, making no difference as to where the term \({\mathcal {F}}_{\text {back}}\) in Eq. (5) is evaluated. On the other hand, the term \(\Sigma _{\text {back}}\) tends to vanish in a resolved image.
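The visibility check used to match the optical quality of the two setups can be sketched as follows. The code assumes the standard contrast definition \((d^{+}-d^{-})/(d^{+}+d^{-})\), with \(d^{-}\) averaged over the minima; the function name `visibility` and the index-based interface are illustrative assumptions.

```python
import numpy as np

def visibility(image, peak_idx, valley_idx):
    """Contrast between slit peaks and the minima separating them.

    image:      1D refocused profile
    peak_idx:   indices of the slit centers (maxima)
    valley_idx: indices of the minima between adjacent peaks
    Assumes the contrast definition (d+ - d-) / (d+ + d-).
    """
    image = np.asarray(image, dtype=float)
    d_plus = image[peak_idx].mean()     # average value on the peaks
    d_minus = image[valley_idx].mean()  # average value in the minima
    return (d_plus - d_minus) / (d_plus + d_minus)
```

A profile whose minima drop to zero gives a visibility of 1, while a toy profile with peaks at 3 and a minimum at 1 gives 0.5.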

For a better quantification of the advantage of \({\mathcal {M}}\) over \({\mathcal {G}}\) in terms of SBR (which, in the out-of-focus case, also tends to coincide with SNR), we report in Fig. 6 the ratio of the values obtained in the two setups.

2.3 Noise properties of the correlation function in the two CPI architectures

The refocused image is a useful tool in any plenoptic imaging device, since it gathers all the information available from the collected data on the objects on a given plane, increasing the SNR and suppressing contributions from other planes. However, each 2D slice of \(\Gamma (\varvec{\rho }_a,\varvec{\rho }_b)\) contains information on the object, providing, for each fixed \(\varvec{\rho }_b\), a high-depth-of-field image observed from a given point of view. If the object is planar and characterized by the field transmittance \(A(\varvec{\rho })\), the correlation function in a geometrical approximation reads, up to irrelevant factors,

where \(\alpha\) and \(\beta\) are reported in Eq. (3), \(n=1\) for \({\mathcal {G}}\), and \(n=2\) for \({\mathcal {M}}\). As anticipated, we see from Eq. (10) that the correlation function, as the detector points are varied, provides a shifted and scaled representation of the aperture function of the sample. In particular, varying the coordinate \(\varvec{\rho }_{b}\) is equivalent to varying the point of view on the object imaged on the detector \(D_{a}\). An intuitive explanation of this effect is that the correlation with the pixels of \(D_{b}\) automatically selects rays emitted by a well-defined part of the source, in \({\mathcal {G}}\), or passing through a well-defined part of the objective lens, in \({\mathcal {M}}\). When dealing with a 2D object, as in our case, refocusing might look like a useless operation, since the object profile is already contained in each single viewpoint. However, the need to refocus arises from the fact that single perspectives are typically too noisy for the object to be distinguished from the background: thus, summing them together improves the SNR and the SBR by the square root of the number of statistically independent perspectives. Unlike the first CPI experiment [25], which was based on scheme \({\mathcal {G}}\), a recent CLM experiment [39] provided single point-of-view images with high enough SNR for the objects to be recognized. Extending the study and comparison of noise performances directly to the correlation function rather than to the final refocused images is thus interesting and worth investigating.
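The square-root scaling with the number of independent perspectives can be checked with a toy Monte Carlo model. The sketch below assumes that every perspective carries the same signal plus independent Gaussian noise, which is a simplification of the actual statistics of the correlation function; the function name and all parameter values are hypothetical.

```python
import numpy as np

def snr_after_summing(n_views, signal=1.0, noise_std=5.0,
                      n_trials=200_000, seed=0):
    """Empirical SNR of the sum of n_views independent noisy perspectives.

    Toy model: each perspective is `signal` plus zero-mean Gaussian noise
    of standard deviation `noise_std`, independent across perspectives.
    """
    rng = np.random.default_rng(seed)
    views = signal + noise_std * rng.standard_normal((n_trials, n_views))
    summed = views.sum(axis=1)  # "refocused" value in each trial
    return summed.mean() / summed.std()
```

In this model the expected SNR is \(\sqrt{n}\,\times\) `signal / noise_std`, so summing 16 perspectives should raise the SNR of a single perspective by a factor close to 4.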

We define the noise affecting the correlation function as the square root of the variance of the product of intensity fluctuations on \(D_{a}\) and \(D_{b}\), divided by \(\sqrt{N_f}\). In this case, there is no suppression of lower-order terms, so that all eight-point correlators contribute to the variance, which reads

The SNR is thus defined as

The definition of SBR can be generalized to the study of the correlation function by considering the signal evaluated at a generic pair of points \((\varvec{\rho }_{a},\varvec{\rho }_{b})\) and the background evaluated at a reference point \((\varvec{\rho }_{a}^{\prime },\varvec{\rho }_{b})\) in which the expected intensity of every considered point-of-view image is practically vanishing:

As is evident, the SNR is determined by the dimensionless quantity \(\Gamma /{\mathcal {I}}_{A}{\mathcal {I}}_{B}\). To estimate this ratio, we consider the cases of focused and out-of-focus images in the geometrical approximation, whose interpretation is much simpler than that of the refocusing function, as we shall see shortly below.

2.3.1 Focused case

For \({\mathcal {G}}\), the correlation \(\Gamma (\varvec{\rho }_{a},\varvec{\rho }_{b})\) encodes the image of the object intensity transmission function \(|A|^2\) with unitary magnification, while the intensity on the detector \(D_{a}\) is uniform. Taking, for definiteness, \(\varvec{\rho }_{b}=0\), corresponding to the central point of view, we obtain

yielding

with \(p_{{\mathcal {G}}}\) a \(\varvec{\rho }_a\)-independent constant, related to the setup parameters and the object total transmittance. Therefore, both quantities vanish if the signal is small.

For \({\mathcal {M}}\), both \(\Gamma\) and the intensity on \(D_{a}\) encode an image of the squared object intensity profile,

where \(f_{O}\) is the objective focal length, and \(f_{T}\) the tube lens focal length. Therefore, the SNR is approximately independent of the spatial modulation of the signal

with \(p_{{\mathcal {M}}}\) a \(\varvec{\rho }_a\)-independent constant, related to the setup parameters and the object total transmittance. On the other hand, since the intensity \({\mathcal {I}}_{A}\) vanishes out of the object profile, we get

provided \(|A(-(f_O/f_T)\varvec{\rho }_a)|\) is not vanishing.

2.3.2 Out-of-focus case

In the out-of-focus case, intensity on the spatial sensor remains uniform in the case of \({\mathcal {G}}\),

with \(z_{a}\) the source-to-\(D_{a}\) distance and \(z_{b}\) the source-to-object distance, but tends to become uniform even in the case of \({\mathcal {M}}\), where

Therefore, one obtains formally similar results in the case of \({\mathcal {G}}\)

and \({\mathcal {M}}\)

The quantities \(q_{{\mathcal {G}}}\) and \(q_{{\mathcal {M}}}\) are constant with respect to the object transverse coordinates, depending instead on the setup parameters, on the object axial position and on the total transmittance. Therefore, in the out of focus case, the possible advantage of \({\mathcal {M}}\) depends on the detailed structure of the intensities and the correlation function.

2.3.3 Simulations of correlation functions

We repeated the analysis of SNR and SBR for the correlation functions in the same modalities discussed in Sect. 2.2.1. In all the cases that follow, the central viewpoint is analysed, namely the correlation image formed by rays passing through the center of the focusing element, as encoded in \(\Gamma (\varvec{\rho }_a,\varvec{\rho }_b=0)\).

The study of SNR on the images of out-of-focus triple-slit masks is reported in Fig. 7, with the same parameter choice used to characterize noise in the refocused images. By comparing the results with those reported in Fig. 3, it is evident that, as expected, the SNR of the single viewpoint is lower than the SNR on refocused images. On the other hand, the relative advantage entailed by \({\mathcal {M}}\) over \({\mathcal {G}}\) is even amplified.

We also report simulations, at focus and out of focus, with the objects defined in Eq. (6) and characterized by a varying number of slits, choosing the same parameters as in Sect. 2.2.1. The apertures defining the resolution at focus have been set to be equal in the two setups, together with all the other parameters. Simulations at focus, reported in Fig. 8, were performed on an object with details close to the diffraction limit. In the out-of-focus regime, whose results are reported in Fig. 9, we placed the object at a distance from the focused plane equal to 30 times the depth of focus in both setups, with the object parameters adjusted in such a way to have correlation images encoded in \(\Gamma (\varvec{\rho }_a,\varvec{\rho }_b=0)\) resolved at 99% visibility. Finally, Fig. 10 shows the ratios between the SBR obtained in \({\mathcal {M}}\) and \({\mathcal {G}}\), in the cases at focus and out of focus.

SNR of the central point-of-view images of the correlation function \(\Gamma (\varvec{\rho }_a,0)\) of the triple slit reported in Fig. 2, averaged over the central points of the three slits and normalized with the square root of the frame number \(N_f\). The setup and object parameters are the same as those reported in Fig. 3. Solid blue line refers to setup \({\mathcal {M}}\), and dashed red line to \({\mathcal {G}}\)

Comparison of the SNR (upper panel) and SBR (lower panel) at focus for the correlation function \(\Gamma (\varvec{\rho }_a,0)\) obtained in setups \({\mathcal {G}}\) (red dots) and \({\mathcal {M}}\) (blue dots), by varying the number of details (Gaussian slits) of an object with transmittance profile (6)

Comparison of the two SBR in the deeply out-of-focus regime for the correlation functions \(\Gamma (\varvec{\rho }_a,0)\) obtained in setups \({\mathcal {G}}\) (red dots) and \({\mathcal {M}}\) (blue dots), by varying the number of details (Gaussian slits) of an object with transmittance profile (6)

Ratio between the SBR of the correlation functions \(\Gamma (\varvec{\rho }_a,0)\) obtained in setup \({\mathcal {M}}\) and \({\mathcal {G}}\), by varying the number of details (Gaussian slits) of an object with transmittance profile (6). The left panel refers to the case at focus, while the right panel to the considered out-of-focus case

3 Discussion

We have compared the performances, in terms of signal-to-noise and signal-to-background ratios, of two setups for correlation plenoptic imaging. Both schemes are characterized by the 3D imaging capability typical of plenoptic devices. However, in setup \({\mathcal {G}}\), images of the scene can be retrieved only by measuring intensity correlations, while setup \({\mathcal {M}}\) can even work as an ordinary microscope, if the object is placed in the main lens focal plane.

The analysis we performed shows a solid advantage of \({\mathcal {M}}\) in terms of SNR and SBR, both for the correlation function, representing a set of viewpoints on the scene, and for the refocused image, in which all perspectives are properly merged. The case of the SBR computed in setup \({\mathcal {M}}\) for the refocused image of an N-slit object is particularly striking, since it shows that the capability to discriminate the signal (i.e. the slit peaks) from the background increases with the number of slits, as opposed to the decreasing behavior observed in the case of \({\mathcal {G}}\). The SNR behaves as expected in all cases, with an overall decreasing trend with increasing number of object details, showing an advantage of \({\mathcal {M}}\) over \({\mathcal {G}}\) by a factor that is small in the focused case, which is only moderately interesting, but can range from 3 to 9 in the much more relevant out-of-focus case, where it tends to coincide with SBR. The differences found in terms of SNR and SBR are especially significant from the perspective of reducing the acquisition times. Indeed, an increase by a factor C in the SNR at a fixed \(N_f\) reduces by a factor \(C^2\) the number of frames (hence, the acquisition time) required to reach a specific SNR value, targeted in an experiment or an application. Though the experimental measurements reported in Ref. [25] and Ref. [39] are not matched in terms of imaging parameters, it is evident that the quality of the images in the latter case, based on a setup of type \({\mathcal {M}}\), improved markedly compared to the former, in which setup \({\mathcal {G}}\) was used, even though the number of frames in Ref. [39] was smaller by at least one order of magnitude.
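The quadratic relation between SNR gain and acquisition time can be made explicit with a short calculation. The sketch below assumes only that the SNR grows as \(\sqrt{N_f}\); the function names and the numerical values in the usage note are illustrative and are not taken from the simulations.

```python
import math

def frames_needed(target_snr, snr_per_sqrt_frame):
    """Smallest N_f such that snr_per_sqrt_frame * sqrt(N_f) >= target_snr.

    snr_per_sqrt_frame is the SNR normalized by sqrt(N_f), i.e. the
    SNR that would be obtained from a single frame.
    """
    return math.ceil((target_snr / snr_per_sqrt_frame) ** 2)

def frame_reduction(snr_gain):
    """Factor by which N_f shrinks when the SNR improves by snr_gain."""
    return snr_gain ** 2
```

For example, with a hypothetical normalized SNR of 0.5, reaching an SNR of 10 requires 400 frames; an SNR gain of 3 cuts the required frames by a factor of 9, and a gain of 9 by a factor of 81.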

The characterization of the fluctuations that affect the measurement of correlation functions can provide an interesting basis for developing new measurement protocols, in which noise contributions that carry no relevant information on the images can be removed a priori in a deterministic way. A similar task was performed in the case of ghost imaging [44] and in Refs. [32, 45]. Future research will be devoted to generalizing the procedure to different correlation plenoptic imaging architectures.

4 Methods

The deterministic propagation from the source to the detectors along the two optical paths a and b depends on the transmission functions of the object and the lenses. We model the field by a scalar function V, related to the intensity by \(I=|V|^2\), assuming that polarization degrees of freedom are not relevant in the considered systems. In free space, a monochromatic field with frequency \(\omega\) and wavenumber \(k=\omega /c\), evaluated on a plane at a general longitudinal position z, is related to the same field at \(z_{0}<z\) by the paraxial transfer function:
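For reference, the standard paraxial (Fresnel) propagator can be written as in the sketch below. The normalization and phase conventions, as well as the notation \(V_{z}(\varvec{\rho })\) for the field on the plane at longitudinal position z, are assumptions made here for illustration and may differ from those adopted in the original equation.

```latex
V_{z}(\varvec{\rho}) = \int d^{2}\rho'\, V_{z_{0}}(\varvec{\rho}')\,
\frac{-ik}{2\pi (z-z_{0})}\, e^{ik(z-z_{0})}
\exp\!\left[\frac{ik}{2(z-z_{0})}\,(\varvec{\rho}-\varvec{\rho}')^{2}\right]
```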

We shall treat radiation emission by the chaotic source as an approximately Gaussian random process, stationary and ergodic. In particular, the field \(V_{S}(\varvec{\rho }_{s})\) at a point \(\varvec{\rho }_{s}\) on the source will be characterized by a Gaussian-Schell equal-time correlator [41]

where \(I_{s}\) is a constant in \({\mathcal {G}}\), and is the object intensity profile in \({\mathcal {M}}\); \(\sigma _g\) is the transverse coherence length. We will consider sources characterized by negligible transverse coherence, accordingly replacing the mutual coherence function \(\exp (-\varvec{\rho }^{2}/2\sigma _{g}^{2})\) in the propagation integrals with \(2\pi \sigma _{g}^{2}\delta ^{(2)}(\varvec{\rho })\). Under this hypothesis, Eqs. (25)-(26) fully define the correlation function in Eq. (1).
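The prefactor \(2\pi \sigma _{g}^{2}\) in the delta-function replacement is the area under the two-dimensional Gaussian \(\exp (-\varvec{\rho }^{2}/2\sigma _{g}^{2})\). The following sketch checks this numerically with a midpoint rule; the function name, the grid parameters and the value of `sigma_g` are hypothetical and serve only as an illustration.

```python
import math


def gaussian_area_2d(sigma_g: float, half_width_in_sigmas: float = 8.0, n: int = 2001) -> float:
    """Midpoint-rule estimate of the 2D integral of exp(-rho^2 / (2 sigma_g^2))."""
    half = half_width_in_sigmas * sigma_g
    step = 2 * half / n
    # The integrand factorizes in x and y, so the 2D integral
    # is the square of the 1D integral along one axis.
    one_d = step * sum(
        math.exp(-((-half + (i + 0.5) * step) ** 2) / (2 * sigma_g**2))
        for i in range(n)
    )
    return one_d**2


sigma_g = 0.3  # hypothetical coherence length, arbitrary units
print(gaussian_area_2d(sigma_g), 2 * math.pi * sigma_g**2)
```

The two printed numbers should agree to within numerical precision, so that the delta-function replacement preserves the integrated weight of the Gaussian.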

The refocused image can be expressed as

in terms of the physical observable

We obtain information on the noise affecting the refocused images from the statistical fluctuations of the observable (28) around its average \(\Sigma _{\mathrm {ref}}(\varvec{\rho }_{a})\), namely

with \(\Phi\) a positive function related to the local fluctuations of intensity correlations [compare with the definition (28)]. Since it involves intensity correlations up to fourth order, \(\Phi\) contains correlation terms up to eighth order in the electric fields.
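As a simplified numerical counterpart of this fluctuation analysis, the sketch below estimates the correlation of intensity fluctuations for a single pair of pixels from a set of frames, together with the signal-to-noise ratio of the frame-averaged estimate. It is not the full refocusing observable (28): the function name, the single-pixel-pair setting and the choice of estimating the variance from the same frames are assumptions made only for illustration.

```python
import math
from statistics import fmean


def correlation_and_snr(i_a, i_b):
    """Frame-averaged correlation of intensity fluctuations between one pixel
    of detector A and one pixel of detector B, and the SNR of that average.

    i_a, i_b: per-frame intensities, one value per frame."""
    if len(i_a) != len(i_b) or len(i_a) == 0:
        raise ValueError("need two non-empty sequences of equal length")
    n_f = len(i_a)
    mean_a, mean_b = fmean(i_a), fmean(i_b)
    # Product of intensity fluctuations, frame by frame.
    products = [(a - mean_a) * (b - mean_b) for a, b in zip(i_a, i_b)]
    gamma = fmean(products)
    # Per-frame variance of the product; the average over N_f frames
    # fluctuates with variance (per-frame variance) / N_f.
    variance = fmean([(p - gamma) ** 2 for p in products])
    snr = gamma / math.sqrt(variance / n_f) if variance > 0 else math.inf
    return gamma, snr


gamma, snr = correlation_and_snr([1.0, 2.0, 3.0, 4.0], [2.0, 4.0, 6.0, 8.0])
print(gamma, snr)
```

With independent frames, this estimate of the SNR grows as \(\sqrt{N_f}\), which is the scaling underlying the comparison of acquisition times.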

To compute the quantities (27)–(29), it is necessary to evaluate up to eight-point field correlators. By using the Gaussian approximation, we will assume that the Isserlis-Wick theorem is valid for the correlators that involve an equal number of V’s and \(V^{*}\)’s, namely

with \(\mathrm {P}\) a permutation of the primed indexes, while all other correlators, including \(\left\langle V\right\rangle\) itself, vanish [37].
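In its standard form, the Isserlis-Wick decomposition expresses a 2n-point correlator as \(\sum _{\mathrm {P}}\prod _{j}\langle V^{*}(\varvec{\rho }_{j})V(\varvec{\rho }'_{\mathrm {P}j})\rangle\), namely a sum over the n! permutations of the primed indexes. The sketch below evaluates this sum from a matrix of two-point correlators; the function name and the matrix layout `two_point[j][k]` \(=\langle V^{*}(\varvec{\rho }_{j})V(\varvec{\rho }'_{k})\rangle\) are assumptions introduced for illustration.

```python
from itertools import permutations
from math import prod


def wick_correlator(two_point):
    """Sum over permutations P of prod_j two_point[j][P(j)], where
    two_point[j][k] is the two-point correlator <V*(rho_j) V(rho'_k)>.
    Entries may be real or complex numbers."""
    n = len(two_point)
    return sum(
        prod(two_point[j][perm[j]] for j in range(n))
        for perm in permutations(range(n))
    )


# Eight-point correlator (n = 4) with all two-point correlators equal to 1:
# every one of the 4! = 24 permutations contributes 1.
print(wick_correlator([[1] * 4 for _ in range(4)]))
```

The eight-point correlators needed for the variance thus decompose into 24 products of two-point correlators, each of which is fixed by the source correlator and the deterministic propagation.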

Since field propagation from the source to the detectors is purely deterministic, the eight-point expectation values in (29), involving the fields at the detectors A and B, are determined by the eight-point correlators of the field at the source. In both setups, the most relevant term in \({\mathcal {F}}(\varvec{\rho }_{a})\) is determined by the only contribution to \(\Phi (\varvec{\rho }_{a},\varvec{\rho }_{b1},\varvec{\rho }_{b2})\) in (29) that is factorized with respect to the fields at the two detectors:

The self-correlations of intensity fluctuations are defined, in analogy to (1), as

with \(D=A,B\). The integrand of (31) is concentrated around \(\varvec{\rho }_{b1}-\varvec{\rho }_{b2}=0\) (see also [37]), but shows no concentration on the \(\varvec{\rho }_{b1}\) plane. Instead, the other terms that characterize \(\Phi (\varvec{\rho }_{a},\varvec{\rho }_{b1},\varvec{\rho }_{b2})\) show concentration in both variables \(\varvec{\rho }_{b1}\) and \(\varvec{\rho }_{b2}\). This property is reflected in the fact that \({\mathcal {F}}-{\mathcal {F}}_{0}\) is typically suppressed with respect to \({\mathcal {F}}_{0}\) by a factor of the order of the ratio between the intensity of light contained in a coherence area on \(D_{B}\) and the total intensity transmitted to \(D_{B}\). This feature is demonstrated in Ref. [37] for \({\mathcal {G}}\), and in the Appendix for \({\mathcal {M}}\). Hence, throughout the paper, we have approximated the variance of the measured correlations by the term \({\mathcal {F}}_{0}\).

Data Availability Statement

This manuscript has no associated data or the data will not be deposited. [Authors’ comment: There are no associated data available.]

References

E.H. Adelson, J.Y. Wang, Single lens stereo with a plenoptic camera. IEEE Trans. Pattern Anal. Mach. Intell. 14, 99 (1992)

R. Ng, M. Levoy, M. Brédif, G. Duval, M. Horowitz, P. Hanrahan, Light field photography with a hand-held plenoptic camera, Stanford University Computer Science Tech Report CSTR 2005-02, (2005)

G. Lippmann, Épreuves réversibles donnant la sensation du relief. J. Phys. Theor. Appl. 7, 821 (1908)

M. Levoy, R. Ng, A. Adams, M. Footer, M. Horowitz, Light field microscopy. ACM Trans. Graph. 25, 924 (2006)

M. Broxton, L. Grosenick, S. Yang, N. Cohen, A. Andalman, K. Deisseroth, M. Levoy, Wave optics theory and 3-d deconvolution for the light field microscope. Opt. Express 21, 25418 (2013)

W. Glastre, O. Hugon, O. Jacquin, H.G. de Chatellus, E. Lacot, Demonstration of a plenoptic microscope based on laser optical feedback imaging. Opt. Express 21, 7294 (2013)

R. Prevedel, Y.-G. Yoon, M. Hoffmann, N. Pak, G. Wetzstein, S. Kato, T. Schrödel, R. Raskar, M. Zimmer, E.S. Boyden, A. Vaziri, Simultaneous whole-animal 3d imaging of neuronal activity using light-field microscopy. Nat. Methods 11, 727 (2014)

T.W. Fahringer, K.P. Lynch, B.S. Thurow, Volumetric particle image velocimetry with a single plenoptic camera. Meas. Sci. Technol. 26, 115201 (2015)

C. W. Wu, The plenoptic sensor, Ph.D. thesis, University of Maryland, College Park, (2016)

Y. Lv, R. Wang, H. Ma, X. Zhang, Y. Ning, X. Xu, SU-G-IeP4-09: Method of human eye aberration measurement using plenoptic camera over large field of view. Med. Phys. 43, 3679 (2016)

C. Wu, J. Ko, C.C. Davis, Using a plenoptic sensor to reconstruct vortex phase structures. Opt. Lett. 41, 3169 (2016)

C. Wu, J. Ko, C.C. Davis, Imaging through strong turbulence with a light field approach. Opt. Express 24, 11975 (2016)

F.V. Pepe, G. Scala, G. Chilleri, D. Triggiani, Y.-H. Kim, V. Tamma, Distance sensitivity of thermal light second-order interference beyond spatial coherence. Eur. Phys. J. Plus 137, 647 (2022)

E.M. Hall, B.S. Thurow, D.R. Guildenbecher, Comparison of three-dimensional particle tracking and sizing using plenoptic imaging and digital in-line holography. Appl. Opt. 55, 6410 (2016)

S. Muenzel, J.W. Fleischer, Enhancing layered 3d displays with a lens. Appl. Opt. 52, D97 (2013)

M. Levoy, P. Hanrahan, Light field rendering, In: Proceedings of the 23rd Annual Conference on Computer Graphics and Interactive Techniques (Association for Computing Machinery, New York, 1996), pp. 31-42

X. Xiao, B. Javidi, M. Martinez-Corral, A. Stern, Advances in three-dimensional integral imaging: sensing, display, and applications. Appl. Opt. 52, 546 (2013)

A. Shademan, R. S. Decker, J. Opfermann, S. Leonard, P. C. Kim, A. Krieger, Plenoptic cameras in surgical robotics: Calibration, registration, and evaluation, In: Proceedings of the 2016 IEEE International Conference on Robotics and Automation (ICRA) (IEEE, New York, 2016), pp. 708-714

M.F. Carlsohn, A. Kemmling, A. Petersen, L. Wietzke, 3d real-time visualization of blood flow in cerebral aneurysms by light field particle image velocimetry. Proc. SPIE 9897, 989703 (2016)

H.N. Le, R. Decker, J. Opferman, P. Kim, A. Krieger, J.U. Kang, 3-d endoscopic imaging using plenoptic camera, In: CLEO: Applications and Technology (Optical Society of America, Washington, DC, 2016), paper AW4O.2

M. D’Angelo, F.V. Pepe, A. Garuccio, G. Scarcelli, Correlation plenoptic imaging. Phys. Rev. Lett. 116, 223602 (2016)

F.V. Pepe, G. Scarcelli, A. Garuccio, M. D’Angelo, Plenoptic imaging with second-order correlations of light. Quantum Meas. Quantum Metrol. 3, 20 (2016)

F.V. Pepe, F. Di Lena, A. Garuccio, G. Scarcelli, M. D’Angelo, Correlation plenoptic imaging with entangled photons. Technol.-Open Access Multidiscip. Eng. J. 4, 17 (2016)

F.V. Pepe, O. Vaccarelli, A. Garuccio, G. Scarcelli, M. D’Angelo, Exploring plenoptic properties of correlation imaging with chaotic light. J. Opt. 19, 114001 (2017)

F.V. Pepe, F. Di Lena, A. Mazzilli, E. Edrei, A. Garuccio, G. Scarcelli, M. D’Angelo, Diffraction-limited plenoptic imaging with correlated light. Phys. Rev. Lett. 119, 243602 (2017)

T.G. Georgiev, A. Lumsdaine, Focused plenoptic camera and rendering. J. Electron. Imaging 19, 021106 (2010)

T. Georgiev, A. Lumsdaine, The multifocus plenoptic camera. Proc. SPIE 8299, 829908 (2012)

T.B. Pittman, Y.H. Shih, D.V. Strekalov, A.V. Sergienko, Optical imaging by means of two-photon quantum entanglement. Phys. Rev. A 52, R3429 (1995)

M. Genovese, Real applications of quantum imaging. J. Opt. 18, 073002 (2016)

O. Schwartz, J.M. Levitt, R. Tenne, S. Itzhakov, Z. Deutsch, D. Oron, Superresolution microscopy with quantum emitters. Nano Lett. 13, 5832 (2013)

Y. Israel, R. Tenne, D. Oron, Y. Silberberg, Quantum correlation enhanced super-resolution localization microscopy enabled by a fibre bundle camera. Nat. Commun. 8, 14786 (2017)

T. Dertinger, R. Colyer, G. Iyer, S. Weiss, J. Enderlein, Fast, background-free, 3D super-resolution optical fluctuation imaging (SOFI). Proc. Natl. Acad. Sci. USA 106, 22287 (2009)

G. Barreto Lemos, V. Borish, G. D. Cole, S. Ramelow, R. Lapkiewicz, A. Zeilinger, Quantum imaging with undetected photons, Nature 512, 409 (2014)

M. D’Angelo, Y.H. Kim, S.P. Kulik, Y. Shih, Identifying entanglement using quantum ghost interference and imaging. Phys. Rev. Lett. 92, 233601 (2004)

G. Scarcelli, Y. Zhou, Y. Shih, Random delayed-choice quantum eraser via two-photon imaging. Eur. Phys. J. D 44, 167 (2007)

M. D’Angelo, A. Mazzilli, F.V. Pepe, A. Garuccio, V. Tamma, Characterization of two distant double-slits by chaotic light second-order interference. Sci. Rep. 7, 2247 (2017)

G. Scala, M. D’Angelo, A. Garuccio, S. Pascazio, F.V. Pepe, Signal-to-noise properties of correlation plenoptic imaging with chaotic light. Phys. Rev. A 99, 053808 (2019)

A. Scagliola, F. Di Lena, A. Garuccio, M. D’Angelo, F.V. Pepe, Correlation plenoptic imaging for microscopy applications. Phys. Lett. A 384, 126472 (2020)

G. Massaro, D. Giannella, A. Scagliola, F. Di Lena, G. Scarcelli, A. Garuccio, F. V. Pepe, M. D’Angelo, Light-field microscopy with correlated beams for extended volumetric imaging at the diffraction limit, arXiv:2110.00807 (2021)

A. Valencia, G. Scarcelli, M. D’Angelo, Y. Shih, Two-photon imaging with thermal light. Phys. Rev. Lett. 94, 063601 (2005)

L. Mandel, E. Wolf, Optical coherence and quantum optics (Cambridge University Press, Cambridge, 1995)

S. Wadood, K. Liang, Y. Zhou, J. Yang, M. Alonso, X. Qian, T. Malhotra, S. Hashemi Rafsanjani, A. Jordan, R. Boyd, A. Vamivakas, Experimental demonstration of superresolution of partially coherent light sources using parity sorting. Opt. Express 29, 22034–22043 (2021)

S. Kurdziałek, Back to sources - the role of losses and coherence in super-resolution imaging revisited. Quantum 6, 697 (2022)

F. Ferri, D. Magatti, L.A. Lugiato, A. Gatti, Differential ghost imaging. Phys. Rev. Lett. 104, 253603 (2010)

S. Kurdziałek, R. Demkowicz-Dobrzański, Super-resolution optical fluctuation imaging-fundamental estimation theory perspective. J. Opt. 23, 075701 (2021)

Acknowledgements

GS is supported by QuantEra 2/2020 grant; GM, FP and MD acknowledge the support by Istituto Nazionale di Fisica Nucleare (INFN) projects PICS4ME and TOPMICRO, and project Qu3D, supported by the Italian Istituto Nazionale di Fisica Nucleare, the Swiss National Science Foundation (grant 20QT21_187716 “Quantum 3D Imaging at high speed and high resolution”), the Greek General Secretariat for Research and Technology, the Czech Ministry of Education, Youth and Sports, under the QuantERA programme, which has received funding from the European Union’s Horizon 2020 research and innovation programme. GS thanks M. Pawłowski and R. Demkowicz-Dobrzański for the support which led to the realization of the present work.

Funding

Open access funding provided by Università degli Studi di Bari Aldo Moro within the CRUI-CARE Agreement.

Appendix A Exact expressions for the refocusing function

The refocusing function \(\Sigma _{\text {ref}}\) for \({\mathcal {M}}\) reads

where \(P_O\) is the objective lens pupil function, \({\mathcal {P}}\left( \kappa \right) =\int d\rho |P_{O}\left( \rho \right) |^{2}e^{-i\kappa \cdot \rho }\) is the Fourier transform of the objective intensity transmittance, and \(M=f_T/f_O\) is the magnification of focused objects.

The dominant term \({\mathcal {F}}_{0}\) of the variance \({\mathcal {F}}\) reads

with \(\Gamma _{AA}\) (\(\Gamma _{BB}\)) the intensity self-correlation between two points on the detector \(D_A\) (\(D_B\)). Thus,

where \({\mathcal {A}}\left( \kappa \right) =\int d\rho |A\left( \rho \right) |^{2}e^{-i\kappa \cdot \rho }\) is the Fourier transform of the object intensity transmittance. The corresponding expressions for \({\mathcal {G}}\) are reported in Ref. [37].

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Massaro, G., Scala, G., D’Angelo, M. et al. Comparative analysis of signal-to-noise ratio in correlation plenoptic imaging architectures. Eur. Phys. J. Plus 137, 1123 (2022). https://doi.org/10.1140/epjp/s13360-022-03295-1

DOI: https://doi.org/10.1140/epjp/s13360-022-03295-1