Abstract

Within the Friedmann–Lemaître–Robertson–Walker (FLRW) framework, the Hubble constant \(H_0\) is an integration constant. Thus, consistency of the model demands observational constancy of \(H_0\). We demonstrate redshift evolution of best fit \(\Lambda \)CDM parameters \((H_0, \Omega _{m})\) in Pantheon+ supernove (SNe). Redshift evolution of best fit cosmological parameters is a prerequisite to finding a statistically significant evolution as well as identifying alternative models that are competitive with \(\Lambda \)CDM in a Bayesian model comparison. To assess statistical significance, we employ three different methods: (i) Bayesian model comparison, (ii) mock simulations and (iii) profile distributions. The first shows a marginal preference for the vanilla \(\Lambda \)CDM model over an ad hoc model with 3 additional parameters and an unphysical jump in cosmological parameters at \(z=1\). From mock simulations, we estimate the statistical significance of redshift evolution of best fit parameters and negative dark energy density (\(\Omega _m > 1\)) to be in the \(1-2 \sigma \) range, depending on the criteria employed. Importantly, in direct comparison to the same analysis with the earlier Pantheon sample we find that statistical significance of redshift evolution of best fit parameters has increased, as expected for a physical effect. Our profile distribution analysis demonstrates a shift in \((H_0, \Omega _m)\) in excess of \(95\%\) confidence level for SNe with redshifts \(z > 1\) and also shows that a degeneracy in MCMC posteriors is not equivalent to a curve of constant \(\chi ^2\). Our findings can be interpreted as a statistical fluctuation or unexplored systematics in Pantheon+ or \(\Lambda \)CDM model breakdown. The first two possibilities are disfavoured by similar trends in independent probes.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

The (flat) \(\Lambda \)CDM cosmological model is an extremely successful minimal model that returns seemingly consistent cosmological parameters across Type Ia supernovae (SNe) [1, 2], Cosmic Microwave Background [3] and baryon acoustic oscillations [4]. Despite this success, comparison of early and late Universe cosmological parameters has revealed discrepancies [5,6,7,8,9,10,11]. The origin [12, 13] and resolution [14, 15] of these anomalies is a topic of debate. We observe that the \(\Lambda \)CDM model describes approximately 13 billion years of evolution of the Hubble parameter H(z) in the late Universe (conservatively redshifts \(z \lesssim 30\)) with a single fitting parameter, matter density today \(\Omega _m\).Footnote 1 Objectively, given the prevailing belief that \(\Omega _m \sim 0.3\), this marks billions of years of evolution with effectively no free parameters.

As originally pointed out [16] (see also [17]), within the FLRW framework, any mismatch between H(z), an unknown function inferred from Nature, and a theoretical assumption on the effective EoS \(w_{\text {eff}}(z)\), e. g. the \(\Lambda \)CDM model, must mathematically lead to a Hubble constant \(H_0\) that evolves with effective redshift. Simply put, a redshift-dependent \(H_0\) is indicative of a bad model [16]. This prediction can be tested in the late Universe, where the \(\Lambda \)CDM model reduces to two fitting parameters:

To date, independent studies have documented decreasing \(H_0\) trends within model (1) across strong lensing time delay (SLTD) [18, 19], Type Ia supernovae (SNe) [20,21,22,23,24,25] and combinations of cosmological data sets [26,27,28]. Moreover, quasar (QSO) Hubble diagrams [29,30,31] show a preference for larger than expected \(\Omega _m\) values, \(\Omega _m \gtrsim 1\) [32,33,34,35]. It was subsequently noted that \(\Omega _m\) increases with effective redshift in SNe and QSO samples [24, 36, 37]. Although the trend in any given observable is not overly significant, e. g. \(\lesssim 2 \sigma \) for SLTD [18, 19], combining probabilities from independent observables using Fisher’s method, the significance increases quickly [25].

Simple binned mock \(\Lambda \)CDM data analysis [25, 38] suggests that evolution of \((H_0, \Omega _m)\) best fit parameters must be expected in any data set that only provides either observational Hubble H(z) or angular diameter \( D_{A}(z)\) or luminosity distance \(D_{L}(z)\) constraints.Footnote 2 If true, one can expect to separate any given sample into low and high redshift subsamples and see discrepancies in the \((H_0, \Omega _m)\)-plane. Here, we highlight the feature in the latest Pantheon+ SNe sample [39, 40].Footnote 3 The main message of this letter is that evolution of \((H_0, \Omega _m)\) with effective redshift persists in Pantheon+ SNe. Furthermore, an increasing \(\Omega _m\) trend, evident at higher redshifts, continues beyond \(\Omega _m=1\) giving rise to negative DE densities at \(z \gtrsim 1\). In light of concerns highlighted in [45,46,47], the Pantheon+ sample improves on redshift corrections [48]. Thus, errors in the handling of redshifts can be precluded as the origin of the trend. It is worth stressing again that [25, 38] provide a mathematical proof that redshift evolution of best fit \(\Lambda \)CDM parameters cannot be ruled out in mock Planck-\(\Lambda \)CDM data. There are then two relevant questions. Is redshift evolution of best fit \(\Lambda \)CDM parameters evident in observed data? If so, what is its statistical significance?

In cosmology the default is to assess statistical significance with Markov Chain Monte Carlo (MCMC). The increasing \(\Omega _m\) trend is evident in MCMC posteriors, but as we demonstrate, the \(H_0\) posterior is subject to projection effects due to a degeneracy (banana-shaped contour) in the 2D \((H_0, \Omega _m)\) posterior. In the literature, this is interpreted as the data failing to constrain the model, but as we will show in Sect. 5, this is a misconception because it is not supported by the \(\chi ^2\) (see also [49]). We overcome the MCMC degeneracy in three complementary ways. First, we provide a Bayesian comparison between the \(\Lambda \)CDM model and the \(\Lambda \)CDM model with a split at redshift \(z_{\text {split}}\), where \((H_0, \Omega _m)\) are allowed to adopt different values at low and high redshift. Secondly, we employ a frequentist comparison between best fits of the observed data and mock data that focuses on different criteria quantifying evolution in the sample. Finally, we analyse the \(\chi ^2\) through profile distributions. For the Pantheon+ sample split at \(z_{\text {split}}=1\) we find a shift in the cosmological parameters that exceeds 95% confidence level. Note, Pantheon+ is presented as a sample in the redshift range \(0 < z \le 2.26\), but redshift evolution of cosmological parameters is evident in the \(\Lambda \)CDM model from \(z = 0.7\) onwards.

Hints of negative DE densities, especially at higher redshifts, are widespread in the literature, so our observations in Pantheon+ may be unsurprising. Indeed, while \(\Lambda \)CDM mock analysis in [25, 38] confirms that \(\Omega _m > 1\) best fits are precluded with low redshift data, this is no longer true at higher redshifts. We stress again that this is a purely mathematical feature of the \(\Lambda \)CDM model. Starting with studies incorporating Lyman-\(\alpha \) BAO [50], one of the first observables discrepant with Planck-\(\Lambda \)CDM [51,52,53], claims of negative DE densities at higher redshifts, including anti-de Sitter (AdS) vacua at high redshift [54, 55] (however see [56])Footnote 4 and features in data reconstructions [62,63,64,65,66], have been noticeable.Footnote 5 This has led to extensive attempts to model negative DE densities [71,72,73,74,75,76,77,78,79,80,81], most simply as sign switching \(\Lambda \) models [82,83,84,85,86]. Given the sparseness of SNe data beyond \(z=1\), claims of negative DE densities are usually attributed to Lyman-\(\alpha \) BAO,Footnote 6 but here we see the same feature in state of the art Pantheon+ SNe. It is plausible that selection effects are at play (see discussion in [20]), but if the arguments in [25, 38] hold up, then \(\Omega _m > 1\) \(\Lambda \)CDM best fits to data in high redshift bins cannot be precluded. On the contrary, they can be expected.

2 Preliminaries

Our analysis starts by following and recovering results in [88] (see also [39]). We set the stage with a preliminary consistency check. In short, we extremise the likelihood,

where \({\vec {Q}}\) is a 1701-dimensional vector and \(C_{\text {stat+sys}}\) is the covariance matrix of the Pantheon+ sample [39]. The Pantheon+ sample has 1701 SN light curves, 77 of which correspond to galaxies hosting Cepheids in the low redshift range \(0.00122 \le z \le 0.01682\). In order to break the degeneracy between \(H_0\) and the absolute magnitude M of Type Ia, we define the vector

where \(m_i\) and \(\mu _i \equiv m_i - M\) denote the apparent magnitude and distance modulus of the \(i^{\text {th}}\) SN, respectively. The cosmological model, which we assume to be the \(\Lambda \)CDM model (1), enters through the following relations:

Extremising the likelihood, one arrives at the best fit values,

which are in perfect agreement with [88]. We estimate the \(1 \sigma \) confidence intervals through an MCMC exploration of the likelihood with emcee [89], finding excellent agreement with Fisher matrix analysis [88],

It is interesting to compare Pantheon+ constraints on \(\Omega _m h^2\) \((h:= H_0/100)\) directly with Planck. In Fig. 1 we highlight a \(3.7 \sigma \) tension,Footnote 7 which importantly impacts the high redshift behaviour of \(H(z) \sim H_0 \sqrt{\Omega _m} (1+z)^{\frac{3}{2}}\) in the late Universe. This is interesting, as we start to see evolution in best fit \(\Lambda \)CDM parameters at higher redshifts. Given the tension in the Hubble constant [5,6,7,8,9], our focus here is on \(H_0\) and by extension \(\Omega _m\), since both parameters are correlated when one fits data. Of course, if the fitting parameters \(H_0\) and \(\Omega _m\) change with effective redshift, there is no guarantee that \(\Omega _m h^2\) is a constant. If the constancy of \(\Omega _m h^2\) can be tested, this allows one to study the assumption that matter is pressureless. Such studies will require data exclusively in the matter dominated regime where DE and radiation sectors are irrelevant. Given the sparcity of high redshift \(z > 1\) data, competitive studies are still a few years off.

\(3.7 \sigma \) tension between Planck and Pantheon+ for the parameter combination that dictates the high redshift behaviour of the Hubble parameter H(z) in the late Universe. We made use of GetDist [90]

3 Splitting Pantheon+

Having confirmed the results quoted in [88], we depart from earlier analysis and crop the Pantheon+ covariance matrix in order to isolate the \(77 \times 77\)-dimensional covariance matrix \(C_{\text {Cepheid}}\) corresponding to SNe in Cepheid host galaxies and define a new likelihood that is only sensitive to the absolute magnitude M,

We can now split the remaining 1624 SNe into low and high redshift samples, which we demarcate through a redshift \(z_{\text {split}}\). One can crop the original covariance matrix accordingly to get \(C_{\text {SN}}\) for either the low or high redshift sample, but we will primarily focus on the high redshift subsample with \(z > z_{\text {split}}\). The reason being that SNe samples have a low effective redshift, \(z_{\text {eff}} \sim 0.3\), and it is well documented that Planck values \(\Omega _m \sim 0.3\) are preferred. The hypothesis we explore is that such results overlook evolution at higher redshifts, so this explains the focus on high redshift subsamples. In summary, we study the new likelihood,

where we have defined,

The redshift range of the Pantheon+ sample [39] is \(0.00122 \le z \le 2.26137\), so we take \(z_{\text {split}}\) in this range. In the next section we begin the tomographic analysis of splitting the Pantheon+ sample into a low and high redshift subsample. We remark that the likelihoods presented in Eqs. (2) and (8) omit a constant normalisation. Being a constant, it plays no role when one fits data, and is thus routinely omitted in the literature [39]. However, this term is relevant when one performs Bayesian model comparison. We will reinstate the normalisation later.

4 Analysis

It is widely recognised that confronting exclusively high redshift SNe data to the \(\Lambda \)CDM model, MCMC inferences are typically impacted by degeneracies, i. e. banana-shaped posteriors, in the \((H_0, \Omega _m)\)-plane. Later we confirm the impact of projection effects on MCMC posteriors as priors are relaxed in the presence of a degeneracy.Footnote 8 We overcome the degeneracy in MCMC marginalisation through three different prongs of attack that only rest upon on the likelihood or \(\chi ^2\). Here it is worth noting that MCMC is merely an algorithm, whereas the \(\chi ^2\) is a measure or metric of how well a point in parameter space fits the data. First, we provide a Bayesian model comparison based on the Akaike Information Criterion (AIC) [91] between the \(\Lambda \)CDM model and a \(\Lambda \)CDM model allowing a jump in cosmological parameters \((H_0, \Omega _m)\) at a fixed redshift. Despite the vanilla \(\Lambda \)CDM model being preferred by the AIC, the analysis demonstrates that an alternative model, even a physically ad hoc model that contradicts the basic fundamentals of FLRW, becomes more competitive if the \(\Lambda \)CDM fitting parameters change with effective redshift when confronted to data. Secondly, in a frequentist analysis we resort to a comparison between best fits of observed and mock data in the same redshift range with the same data quality to ascertain the significance of evolution. Finally, later in section 5, we employ profile distributions as a secondary frequentist approach.

4.1 Bayesian interpretation

One may interpret the results in Table 1 as a comparison between two models. The first is the \(\Lambda \)CDM model fitted over the entire redshift range of the SNe, \(0.00122 \le z \le 2.26137\), with three parameters \((H_0, \Omega _m, M)\), while the second is the \(\Lambda \)CDM model with a split at redshift \(z_{\text {split}}\) allowing the model to adopt different values of \((H_0, \Omega _m)\) above and below the split. Note that the likelihood (8) separates SNe in Cepheid host galaxies and their only role is to constrain M. For this reason, one is only fitting two effective parameters \((H_0, \Omega _m)\). Furthermore, by introducing the data split, we are comparing this effective two parameter model \((H_0, \Omega _m)\) with an effective five parameter model \((H^{(1)}_0, \Omega ^{(1)}_m, H^{(2)}_0, \Omega ^{(2)}_m, z_{\text {split}})\). Table 1 presents improvements in the \(\chi ^2\) without the normalisation corresponding to the logarithm of the determinant of the covariance matrix \(C_{\text {stat+sys}}\). Since we truncate out \(C_{\text {stat+sys}}\) entries when we split the SNe, this increases the normalisation, thereby penalising the model with the split beyond the 3 extra parameters introduced. We will quantify this number in turn, but only in competitive settings relative to the \(\Lambda \)CDM model where the improvement in \(\chi ^2\) in Table 1 is enough to overcome the additional parameters, i. e. \(\Delta \chi ^2 < -6\).Footnote 9

It should be noted that while model A is the vanilla \(\Lambda \)CDM model, the model B that serves as a foil to \(\Lambda \)CDM is a contradiction, because if \(H_0\) and \(\Omega _m\) change with effective redshift, this violates the mathematical requirement that both are integration constants. For this reason, model B could never replace \(\Lambda \)CDM. Nevertheless, the result is instructive as Bayesian model comparison is prevalent in the cosmology literature. That being said, the focus of this paper is performing a consistency check of the \(\Lambda \)CDM model and this does not necessitate a model B. What the analysis here shows is that we are getting close to a point in time where models incorporating evolution in the fitting parameters \(H_0\) and \(\Omega _m\) may be more competitive than \(\Lambda \)CDM, simply based on SNe data alone.

We recall the Akaike information criterion (AIC) [91],

where \(\chi _{\text {min}}^2\) is the minimum of the \(\chi ^2\), d is the number of free parameters and \(|C_{\text {stay+sys}}|\) denotes the determinant of the Pantheon+ covariance matrix \(C_{\text {stat+sys}}\). Since the latter is a constant, it has no bearing on the best fit parameters, but it impacts the AIC analysis.Footnote 10 However, since \(C_{\text {stat+sys}}\) is a large matrix with small numerical entries, determining the absolute value of \(|C_{\text {stat+sys}}|\) within machine precision is difficult. One can simplify the problem by noting that the \(77 \times 77\) matrix \(C_{\text {Cepheid}}\) is common to both the \(\Lambda \)CDM model and the \(\Lambda \)CDM model with a jump in cosmological parameters, so it contributes to both AIC values and drops out. Thus, we only need to the study the \(1624 \times 1624\) covariance matrix \(C_{\text {SN}}\), but this is still a large matrix with small numerical entries.

Since the \(\Lambda \)CDM model with a jump in cosmological parameters necessitates three additional parameters, i. e. \(\Delta d = 3\), this penalty can only be absorbed to give a lower AIC if \(\Delta \chi _{\text {min}}^2 < -6\). The results of splitting the Pantheon+ sample and fitting the \(\Lambda \)CDM model to data below and above \(z = z_{\text {split}}\) are shown in Table 1. We find that refitting the low redshift sample typically leads to small improvements in \(\chi ^2\), whereas refits of the high redshift sample lead to greater improvements. This outcome is expected if there is evolution across the sample; the evolution is only expected at higher redshifts because SNe samples have a low effective redshift, and as we have noted, SNe samples generically prefer Planck values \(\Omega _m \sim 0.3\). In particular, \(z_{\text {split}}=1\) gives rise to greatest reduction in \(\chi ^2_{\text {min}}\) with respect to the \(\Lambda \)CDM model without the split. However, we need to make sure that differences in \(\ln |C_{\text {SN}}|\) do not counter the improvement in \(\chi _{\text {min}}^2\).

To that end, consider

where A, B and C are respectively \(1599 \times 1599, 1599 \times 25\) and \(25 \times 25\)-dimensional matrices. Note that the dimensionalities are fixed by the choice of \(z_{\text {split}}=1\). The determinant of this block diagonal matrix is

provided the matrix A is invertible. Note that when one introduces the split at \(z_{\text {split}}=1\), one sets \(B = 0\). As a result, the difference in the \(\ln |C_{\text {SN}}|\) is

where \(\ln |A|\) contributes equally to competing AIC values and thus drops out. This removes the problem with machine precision leaving us a comparison of the logarithm of the determinant of smaller \(25 \times 25\) matrices. We are now left with an easy calculation. The AIC changes by \(\Delta \text {AIC} = \Delta \ln |C_{\text {stat+sys}}|+\Delta \chi ^2_{\text {min}} + 2 \Delta d = 1.5-1-6.2 +2 (5-2) = 0.3\), when one replaces the vanilla \(\Lambda \)CDM model with a (contradictory) \(\Lambda \)CDM model with a jump in the parameters \((H_0, \Omega _m)\) at \( z_{\text {split}} = 1\). Thus, despite the evolution seen in \((H_0, \Omega _m)\), Pantheon+ SNe data still has a marginal preference for the vanilla \(\Lambda \)CDM model over a physically “ad hoc model” with 3 additional parameters.

Given that our model B is not only a contradiction, but also has 3 additional parameters, it is not really a serious contender. That being said, the take-home message is clear. If the \(\Lambda \)CDM fitting parameters \((H_0, \Omega _m)\) change with effective redshift in a statistically significant way (see later analysis in Sect. 5 for confirmation), thereby failing our consistency check for a given split into low and high redshift subsamples, this opens the door for competing models. A physically motivated minimal extension of the \(\Lambda \)CDM model evidently may lead to a reversal in the conclusion that the \(\Lambda \)CDM model is preferred.

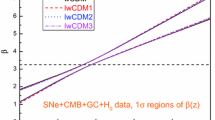

We close this section with additional comments. The change of \((H_0, \Omega _m)\) parameters with effective redshift constitutes a decreasing \(H_0\)/ increasing \(\Omega _m\) best fit trend with effective redshift. This is consistent with earlier analysis of the Pantheon SNe sample [24, 25]. Moreover, as is clear from Fig. 2 of [24] and Table 1, this trend begins at \(z = 0.7\). Upgrading the Pantheon to Pantheon+ samples has not changed this trend. A final point worth stressing is that best fits beyond \(z_{\text {split}} = 1\) prefer a \(\Lambda \)CDM model with negative DE densities, \(\Omega _m > 1\). This is simply a feature of the Pantheon+ [39, 40] data set, but since Risaliti-Lusso QSOs [30, 31] have a strong preference for \(\Omega _m > 1\) inferences in the \(\Lambda \)CDM model at high redshifts, the observations are consistent and both data sets warrant further study.

4.2 An illustration of MCMC bias

Having identified the split that enhances the improvement in fit, here we fix \(z_{\text {split}}=1\) and present MCMC posteriors for data above and below the split. In Fig. 2 the results of this exercise can be seen, where we have allowed for different uniform priors on \(\Omega _m\). There are a number of take-home messages. First, the low redshift (\(H_0, \Omega _m\)) posteriors are Gaussian, as expected, whereas the high redshift (\(H_0, \Omega _m\)) posteriors are not. Secondly, the peak of the \(\Omega _m\) posterior is found in the \(\Omega _m > 1\) regime, but it is robust to changes in the \(\Omega _m\) prior. Thus, imposing \(\Omega _m \le 1\) would simply cut off the peak in the high redshift \(\Omega _m\) posterior. Thirdly, the \(H_0\) posterior is sensitive to the \(\Omega _m\) prior. This is easy to understand as a projection effect. In short, as we relax the prior, the 2D MCMC posterior probes more of the top left corner of the \((H_0, \Omega _m)\)-plane. Configurations in this corner only differ appreciably in \(\Omega _m\), while getting projected onto more or less the same lower value of \(H_0\). Ultimately, what one concludes from the 2D MCMC posteriors is that the data is not good enough to constrain the model. The assumption then is that points in parameter space along the banana-shaped contour give rise to more or less the same values of \(\chi ^2\). As we shall show later, this assumption is false (see also [49]). Of course, the peak of the marginalised 1D \(H_0\) posterior cannot be tracking the minimum of the \(\chi ^2\) as its value is unique up to machine precision. We will now introduce two independent methodologies, mock simulations and profile distributions, which track the minimum of the \(\chi ^2\), and we will assess the statistical significance of evolution between low and high redshift subsamples.

MCMC posteriors for low and high redshift subsamples for the 2 cosmological parameters \((H_0, \Omega _m)\) and the 1 nuisance parameter M, the absolute magnitude of Type Ia SN. The low redshift posteriors are Gaussian, but the high redshift posteriors are not, in line with expectations. Extending the uniform \(\Omega _m\) prior leads to shifts in the peak of the \(H_0\) posterior due to a projection effect. Imposing the standard \(\Omega _m \le 1\) prior cuts off the peak of the \(\Omega _m\) distribution in the high redshift subsample

4.3 Frequentist interpretation

Here we adopt the same likelihood (8), but estimate the probability of finding a decreasing \(H_0\)/increasing \(\Omega _m\) best fit trend and negative DE densities as prominent in mock data. It should be noted that whenever one finds an unusual signal in cosmological data, it is standard practice to run mock simulations to ascertain if the signal is statistically significant or not. Here, the decreasing \(H_0\)/increasing \(\Omega _m\) trend in best fits is the unusual signal that we wish to test. Since we search for evolution trends and one expects little evolution at low z in mocks with good statistics, it is more efficient to remove low redshift SNe and restrict attention to the 210 SNe in the redshift range \(z > 0.5\). Thus, given a realisation of SNe data, we choose a cut-off redshift \(z_{\text {cut-off}}\) in the range \(z_{\text {cut-off}} \in \{0.5, 0.6, 0.7, 0.8, 0.9, 1, 1.1, 1.2 \}\) and remove SNe with \(z \le z_{\text {cut-off}}\). This gives us 8 nested subsamples and for each subsample, we fit the \(\Lambda \)CDM model and record the best fit \((H_0, \Omega _m)\) values. We then construct the sums

where \(H_0\) and \(\Omega _m\) denote the best fits at each \(z_{\text {cut-off}}\), and the difference is relative to the best fits of the full sample (Table 2). See [24] for earlier analysis with the Pantheon sample, where similar sums were employed but with a fixed (not fitted) M. Sums close to zero correspond to realisations of the data with no specific trend that averages to zero. As is clear from Table 1, in Pantheon+ we see a decreasing \(H_0\) and increasing \(\Omega _m\) trend, so we expect \(\sigma _{H_0} < 0\) and \(\sigma _{\Omega _m} > 0\) in Pantheon+ SNe; the concrete numbers are \(\sigma _{H_0} = -115.50\) and \(\sigma _{\Omega _m} = 9.27\) to two decimal places. The advantage of constructing a sum is that it places no particularly importance on the choice of \(z_{\text {split}}\).

Our goal now is to construct Pantheon+ SNe mocks in the redshift range \(z > 0.5\) that are statistically consistent with no evolution in \((H_0, \Omega _m\)). To begin, we fit the full sample using the likelihood (8), identify best fits and \(1 \sigma \) confidence intervals through the inverse of a Fisher matrix (see [88]). We record the result in Table 2, noting that the result agrees almost exactly with (6), despite differences in the likelihood, i. e. (2) versus (8). Note, we could also run an MCMC chain, but we have already demonstrated that the errors are Gaussian in Sect. 2, so whether one uses an MCMC chain or random numbers generated in normal distributions from Table 2, one does not expect a great difference.

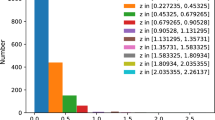

We next generate an array of 3000 \((H_0, \Omega _m, M)\) values randomly in normal distributions with central value corresponding to the best fit value and \(1 \sigma \) corresponding to the errors in Table 2. One could alternatively fix the injected cosmological parameters to the best fits in Table 2, but our approach here allows for greater randomness. For each entry in this array, we construct \(m_i = \mu _{\text {model}} (H_0, \Omega _m, z_i)+M\) for the 210 SNe in the redshift range \(0.5 < z \le 2.26137\). We then generate 210 new values of the apparent magnitude \(m_i\) by generating a random multivariate normal with the covariance matrix \(C_{\text {SN}}\) in (9) truncated from the Pantheon+ covariance matrix \(C_{\text {stat+sys}}\). This gives us one mock realisation of the data for each entry in our \((H_0, \Omega _m, M)\) array, which we fit back to the \(\Lambda \)CDM model for the nested subsamples in order to identify best fit parameters and the sums (14). Note, our mocking procedure drops correlations between \((H_0, \Omega _m, M)\), but this is not expected to make a big difference, since as can be seen from the yellow contour in Fig. 2, which is representative of the full sample, none of the parameters are strongly correlated. Moreover, we do not generate new SNe in Cepheid hosts, so M and its constraints are the same in mock and real data. This is justifiable because M should be insensitive to cosmologyFootnote 11 and here our focus is studying evolution of \((H_0, \Omega _m)\) best fits in high redshift cosmological data. Once this is done for all 3000 realisations, we count the number of mock realisations that give both \(\sigma _{H_0} \le -115.50\) and \(\sigma _{\Omega _{m}} \ge 9.27\). Essentially, by ranking the mocks by \(\sigma _{H_0}\) and \(\sigma _{\Omega _m}\), one can assign a percentile or probability to the observed Pantheon+ sample, just as one would do with the heights of children in a class. Note, in both these exercises it is unimportant what the probability density function (PDF) looks like, simply that numbers are smaller or larger than a certain number. In Fig. 3 we show the result of this exercise. As expected, our mock PDFs are peaked on \(\sigma _{H_0} = \sigma _{\Omega _m} = 0\). From 3000 mocks, we find 240 with more extreme values than the values we find in Pantheon+ SN. This gives us a p-value of \(p = 0.08\) (\(1.4 \sigma \) for a one-sided normal).

We next consider the likelihood of finding negative DE densities (\(\Omega _m > 1\)), as well as the likelihood of finding \(\Omega _m\) best fits as large as the Pantheon sample \( \Omega _m \gtrsim 3\), in the three final entries in Table 1. This can be done by recording best fit \(\Omega _m\) values from mocks with \(z_{\text {cut-off}} \in \{1.0, 1.1, 1.2\}\). From 3000 mocks, we find 298 that maintain \(\Omega _m > 1\) best fits and 77 that maintain larger \(\Omega _m\) best fits than the Pantheon+ sample. This gives us probabilities of \(p=0.1\) (\(1.3 \sigma \)) and \(p=0.026\) (\(1.9 \sigma \)), respectively. In other words, we find negative DE densities in the same redshift range one mock in 10 and larger \(\Omega _m\) best fits one mock in 38. In Fig. 4 we show a subsample of the mock best fits.

A sample of 300 mock best fits for SNe with \(z > z_{\text {cut-off}} \in \{1.0, 1.1, 1.2\}\). 6 mocks (blue) return \(\Omega _m\) best fits that remain above the \(\Omega _m\) best fits in Pantheon+ (solid black), 25 (green) that remain above \(\Omega _m = 1\) and 269 (red) where best fits are recorded below \(\Omega _m = 1\). We impose the bound \(0 \le \Omega _m \le 5\) and some points saturate these bounds

4.4 Pantheon+ covariance matrix and \(z_{\text {split}} = 1\)

Here we comment on how representative are the high redshift best fit \((H_0, \Omega _m)\) values if one splits the sample at \(z_{\text {split}}=1\). We perform this particular analysis so that we can directly compare to profile distributions in the next section. However, in the process we find a secondary result on the covariance matrix that is worth commenting upon. In contrast to the earlier sums, this means that we have singled out a particular redshift by hand and we are assessing the probability of a more specific event. For this reason we expect a probability less than \(p = 0.08\). Once again we perform mock analysis, but surprisingly find that none of best fits to 10,000 mocks fits the data as well as the real data. This may be partly due to the difference in preferred cosmological parameters, but is also expected to be due to a potential overestimation of the Pantheon+ covariance matrix [92].

Distribution of \(\chi ^2\) from 10,000 mocks of \(z > 1\) SNe data, where input parameters are picked in normal distributions consistent with Table 2. The red line corresponds to the value in the Pantheon+ sample. None of our mock data result in smaller \(\chi ^2\) values than real data

Concretely, we construct an array of 10,000 \((H_0, \Omega _m, M)\) mock input parameters by employing the best fits and \(1 \sigma \) confidence intervals in Table 2 as central values and standard deviations for normal distributions. For each entry in this array, we generate a new mock copy of the 25 data points in the Pantheon+ sample above \(z=1\), which we then fit back to the model and record 10,000 \((H_0, \Omega _m, M)\) best fits. Note, we are once again constructing high redshift subsamples that are representative of the full sample by construction. In Fig. 5 we show a comparison of the \(\chi ^2\) from mock data (blue PDF) versus \(\chi ^2\) from real data (red line); none of our mocks lead to lower values of \(\chi ^2\). Nevertheless, if one focuses on best fits, we find both smaller values of \(H_0\) and larger values of \(\Omega _m\) in 375 cases from 10,000 simulations, giving us a probability of \(p = 0.0375\) of finding more extreme best fits. This corresponds to \( 1.8 \sigma \) for a one-sided normal. In the next section we will compare this statistical significance to profile distributions with the same data in the same redshift range. The key point here is that profile distributions provides a consistency check of our mock simulations. In short, if our mocks are trustworthy, we expect to see a \(\sim 1.8 \sigma \) discrepancy in independent profile distribution analysis. A secondary point is that more extreme values of the \(\chi ^2\) are not found, which seems to support observations in [92] that the Pantheon+ covariance matrix is overestimated.

4.5 Restoring the covariance matrix

Earlier we truncated out an off-diagonal block from the Pantheon+ covariance matrix in likelihood (2) in order to decouple 77 SNe in Cepheid hosts from the remaining 1624 SNe and thus define the new likelihood (8). Since this is heavy handed if one only wants to focus on high redshift SNe, here we restore the off-diagonal entries in the covariance matrix. The results are shown in Table 3, where it is evident that the decreasing \(H_0\)/increasing \(\Omega _m\) best fit trend with effective redshift is robust beyond \(z_{\text {split}} = 0.7\). Moreover, we now find that SNe beyond \(z_{\text {split}} = 0.9\) return best fits consistent with negative DE density. We have relaxed the bounds on \(\Omega _m\) in order to accommodate best fits that saturate the bounds and the number of SNe excludes the 77 calibrating SNe. We also record a reduction in \(\chi ^2\) relative to the best fit values in Table 1, where the difference here is that we use (a truncation of) likelihood (2) and not likelihood (8). This provides a sanity check that our best fits are finding new minima as we change likelihood. Evidently, the re-introduction of off-diagonal entries in the covariance matrix impacts best fits, but not the features of interest. As noted in the previous section, the Pantheon+ covariance matrix appears overestimated [92].

5 Profile distributions

In this section we follow the methodology in [93], more specifically [49], where we refer the reader for further details. As explained in [38], removing low redshift H(z) or \(D_{L}(z)\) or \(D_{A}(z)\) data pushes the (flat) \(\Lambda \)CDM model into a non-Gaussian regime where projection effects are unavoidable. If one wants to test the constancy of \(\Lambda \)CDM cosmological parameters in the late Universe, and not simply resort to adopting a working assumption, then one has to overcome these effects. Profile distributions [93] allow one to construct probability density functions that are properly tracking the minimum of the \(\chi ^2\). The latter is by definition the point in model parameter space that best fits the data. As is clear from Fig. 2, where there is a degeneracy (banana-shaped contour) in the \((H_0, \Omega _m)\)-plane, the peak of the \(H_0\) posterior is sensitive to the prior, so it evidently tells one very little about the point in parameter space that best fits the data. Note that profile distributions [93] are simply a variant of profile likelihoods (see section 4 of Ref. [94]), where instead of optimising one recycles the MCMC chain. As a result, the input for both Bayesian and frequentist analysis is the information in the MCMC chain, thereby allowing a more direct comparison between the two approaches.

Here we focus on \(z_{\text {split}}=1\) as both our Bayesian and frequentist mock analysis suggests that this is the redshift split where evolution is most significant. Note, one can of course find sample splits with less evolution, but if one is interested in the self-consistency of a data set within the context of the \(\Lambda \)CDM model, it behoves us to focus on the most extreme cases. Following [49, 93] we fix a generous uniform prior \(\Omega _{m} \in [0, 8]\) and run a long MCMC chain for SNe with \(z > z_{\text {split}} = 1\). The prior has been chosen large enough so that the expected best fit \(\Omega _m \sim 3.4\) from Table 1 (\(z_{\text {split}} = 1\) row) can be recovered from the resulting distribution. We identify the minimum of the \( \chi ^2\), \(\chi ^2_{\text {min}}\), from the full MCMC chain. Next we break up the \(H_0\) and \(\Omega _m\) range into bins and record the lowest value of the \(\chi ^2\) in each bin, which gives us \(\chi ^2_{\text {min}}(H_0)\) and \(\chi ^2_{\text {min}}(\Omega _m)\), respectively. We can then define \(\Delta \chi ^2_{\text {min}}(H_0):= \chi ^{2}_{\text {min}}(H_0) - \chi ^2_{\text {min}}\) for \(H_0\) and an analogous \(\Delta \chi ^2_{\text {min}}(\Omega _m)\) for \(\Omega _m\). We next construct the distributions \(R(H_0) = e^{-\frac{1}{2} \Delta \chi ^2_{\text {min}}(H_0)}\) and \(R(\Omega _m) = e^{-\frac{1}{2} \Delta \chi ^2_{\text {min}}(\Omega _m)}\), which by construction are peaked at \(R(H_0) = R(\Omega _m) = 1\) in the bin with the overall minimum of the \(\chi ^2\) for the full MCMC chain.

It should be stressed that it is easy to select large enough priors for \(H_0\) so that \(R(H_0)\) decays to zero within the priors. Nevertheless, as is clear from Fig. 2, \(\Omega _m\) distributions become broad in high redshift bins and the fall off may be extremely gradual. However, once one switches from MCMC posteriors to profile distributions, we are no longer worried about the volume of parameter space explored in MCMC marginalisation, but simply that each bin is populated and the minimum of the \(\chi ^2\) in each bin has been identified. Thus, it is enough that the MCMC algorithm visits all bins at least once and any empty bin we omit. Concretely, we allow for 200 bins for both \(H_0\) and \(\Omega _m\).

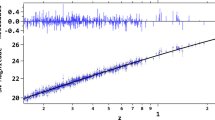

In Fig. 6 we show the unnormalised \(R(H_0)\) and \(R(\Omega _m)\) distributions for high redshift SNe with \(z > z_{\text {split}}=1\). The first point to appreciate is that the peaks of the distributions are close to the best fits in Table 1. Note, this provides a consistency check on the best fits, since extremising the \(\chi ^2\) through gradient descent and hopping around parameter space through MCMC marginalisation are independent. This provides a further test of the robustness of least squares fitting in this context (see also appendix). Secondly, as is evident from the dots to the left of the \(R(H_0)\) peak, \(R(H_0)\) goes to zero at both small and large values of \(H_0\) that are well within our priors. In contrast, as anticipated, the \(R(\Omega _m)\) distribution is almost constant beyond \(\Omega _m \sim 2\) but nevertheless shows a gradual fall off. The fall off towards smaller values of \(\Omega _m\) is considerably sharper. Thirdly, note that the dots essentially follow a curve, but some small bobbles are evident in bins. These features can be ironed out by running a longer MCMC chain. Finally, both \(R(H_0)\) and \(R(\Omega _m)\) confirm that the best fit for \(z > 1\) SNe is not connected to the best fit for the full sample (black lines) through a curve of constant \(\chi ^2\). Thus, we see a degeneracy in (Bayesian) MCMC analysis, but there is no counterpart in a frequentist treatment that involves the \(\chi ^2\). We conclude that it is misconception in the literature that a degeneracy in MCMC posteriors is equivalent to a constant \(\chi ^2\) curve. We remind the reader again that the \(\chi ^2\) is a measure of how well a point in parameter space fits the data.

We next turn our attention to assessing the statistical significance. The black lines in Fig. 6 denote the best fit values for the full sample from Table 2. Thus, these are the expected values if there is no evolution in the sample. To assess the evolution, we normalise the \(R(H_0)\) distribution by dividing through by the area under the full curve, which is most simply evaluated by numerically integrating using Simpson’s rule. We then impose a threshold \(\kappa \le 1\) and retain only the \(H_0\) bins with \(R(H_0) > \kappa \). Integrating under the curve for the retained \(H_0\) values and normalising accordingly one gets a probability p [49]. In Fig. 6 we use dashed, dotted and dashed-dotted lines to denote \(p \in \{0.68, 0.95, 0.997 \}\) corresponding to \(1 \sigma \), \(2 \sigma \) and \(3 \sigma \), respectively, in a Gaussian distribution. Evidently the best fit for the full sample (black line) is removed from the \(H_0\) peak by a statistical significance in the \(95 \%\) to \(99.7\%\) confidence level range. By adjusting the threshold \(\kappa \) further, one finds the area under the curve and the associated probability that terminates at the black line. We find that the black line is located at the \(97.2\%\) confidence level, the equivalent of \(2.2 \sigma \) for a Gaussian distribution. This can be directly compared with \(1.8 \sigma \) from our earlier analysis based on mock simulations. We note that there is a slight difference, but it is worth stressing that two independent techniques agree on a \(\sim 2 \sigma \) discrepancy.

In principle one could repeat the analysis with \(R(\Omega _m)\), but the distribution is broad and has been impacted by our priors. Changing the priors is expected to change the statistical significance of any inference using \(R(\Omega _m)\), so we omit the analysis. If this is unclear, note that restricting the range to \(\Omega _m \in [0, 4]\), would still allow a peak, but the dashed and dotted lines corresponding to \(68\%\) and \(95\%\) of the area under the curve would all shift. The robust take-away is that the peak of the \(R(\Omega _m)\) distribution coincides with negative DE density, \(\Omega _m > 1\). However, there is an important distinction here with MCMC. As we see from Fig. 2, due to a degeneracy in the 2D \((H_0, \Omega _m)\) posterior, changing the \(\Omega _m\) priors can impact the \(H_0\) posterior, whereas with profile distributions the number of times the MCMC algorithm visits a given \(H_0\) bin is unimportant, simply the minimum \(\chi ^2\) in the \(H_0\) bin is relevant. This important difference means that profile distributions are insensitive to changes in prior, modulo the fact that by changing the prior one either extends or cuts the distribution, but the peak does not move.

6 Discussion

The take-home message is that a decreasing \(H_0\)/increasing \(\Omega _m\) best fit trend observed in the Pantheon SNe sample [41] at low significance \(\sim 1 \sigma \) [24] (see [20,21,22, 26, 27, 37] for the \(H_0\) or \(\Omega _m\) trend alone) persists in the Pantheon+ sample [39, 40] with significance \(\sim 1.4 \sigma \) under similar assumptions that do not focus on a particular \(z_{\text {split}}\). Moreover, calibrated \(z >1\) SNe return \(\Omega _m > 1\) best fits, thereby signaling negative DE densities in the \(\Lambda \)CDM model. Note, this outcome is not overly surprising, because one cannot preclude \(\Omega _m > 1\) best fits at high redshifts even in mock Planck-\(\Lambda \)CDM data; beyond some redshift \(\Omega _m > 1\) best fits become probable. This is a mathematical feature of the \(\Lambda \)CDM model [25, 38]. Using profile distributions [93] (see also [49]), a technique which allows us to correct for projection and/or volume effects in MCMC marginalisation, we have independently confirmed the significance at \(\gtrsim 2 \sigma \). Similar features are evident in the literature, most notably Lyman-\(\alpha \) BAO [50] and QSOs standardised through fluxes in UV and X-ray [29,30,31]. Moreover, recent large SNe samples have led to larger \(\Omega _m\) values that are \(1.5 \sigma \) [95] to \(2 \sigma \) [96] discrepant with Planck [3]. From Fig. 4 of Ref. [96] it is obvious that the sample has a high effective redshift. Note, in contrast to [96], where the high effective redshift is an inherent property of the sample, here we deliberately increase the effective redshift of the Pantheon+ sample by binning it.

To put these results in context we return to the generic solution of the Friedmann equation [16],

where \(w_{\text {eff}}(z)\) is the effective EoS. We observe that evolution of \(H_0\) (and \(\Omega _m\)) with effective redshift in the Pantheon+ sample is consistent with a disagreement between the assumed EoS, here the \(\Lambda \)CDM model, and H(z) inferred from Nature. These anomalies are not confined to SNe and we see related features elsewhere [18, 19, 24, 25]. Moreover, JWST is also reporting anomalies that may be cosmological in origin [97,98,99]; JWST anomalies may prefer a phantom DE EoS [99] (however see [100, 101]), which may be a proxy for negative DE densities at higher redshifts. If persistent cosmological tensions [5,6,7,8,9,10,11] are due to systematics, one expects no evolution in \(H_0\) from (15), but this runs contrary to what we are seeing. Our “evolution test”, which may be regarded as a consistency check of the \(\Lambda \)CDM model confronted to data, hence gives a complementary handle on establishing \(\Lambda \)CDM tensions, especially \(H_0\) tension. Note, it is routine to fit data sets in cosmology and simply assume that cosmological parameters are not evolving with effective redshift. Our analysis tests this assumption.

Admittedly, this one result may not be enough to falsify \(\Lambda \)CDM. That being said, if evolution is present, as our Bayesian model comparison shows, this opens up the door for finding alternative models that fit the data better than vanilla \(\Lambda \)CDM. On the contrary, without any change of \((H_0, \Omega _m)\) with redshift across expansive Type Ia SNe samples, as is the standard assumption in the literature, there is little hope of finding an alternative that beats \(\Lambda \)CDM in Bayesian model comparison. From this perspective, our consistency check then feeds into standard Bayesian analysis. However, there is a key difference. Physics demands that models are predictive, i. e. return the same fitting parameters at all epochs, whereas Bayesian methods only assess the goodness of fit and are cruder. Note also that the high redshift subsamples of Pantheon+ we study are small, so they are prone to statistical fluctuations. However, since we see similar trends beyond SNe [25], this makes a statistical fluctuation interpretation less likely. A second possibility is unexplored systematics in \(z > 1\) SNe identified largely through the Hubble Space Telescope (HST) [102,103,104,105]. There is unquestionable value in flagging these anomalies so that they can be explored. If one can eliminate these two, the only remaining possibility is that we must regard the trend as corroborating evidence that \(\Lambda \)CDM tensions are physical and the model is breaking down.

Going forward, if the next generation of SNe data [106] increases the statistical significance of the anomaly, as we have seen here in transitioning from Pantheon to Pantheon+, then there are interesting implications. First, any increasing trend in \(\Omega _m\) with effective redshift prevents one separating \(H_0\) and \(S_8 \propto \sqrt{\Omega _m}\) tensions. This is obvious. Interestingly, sign switching \(\Lambda \) models, which perform well alleviating \(H_0\)/\(S_8\) tensions [84], fit well with our main message here, i.e. negative DE at higher redshifts. Secondly, \(\Lambda \)CDM model breakdown allows us to re-evaluate the longstanding observational cosmological constant problem [107]. Thirdly, and most consequentially, it is likely that changes to the DE sector cannot prevent evolution in \(\Omega _m\), because DE is traditionally irrelevant at higher redshifts. Ultimately, if late-time DE does not or cannot come to the rescue [108], this brings the assumption of pressureless matter scaling as \(a^{-3}\) with scale factor a into question in late Universe FLRW cosmology. Finally, if the evolution of \(\Lambda \)CDM parameters we discussed here is substantiated in future, it rules out the so-called early resolutions to \(H_0\)/\(S_8\) tensions [13], such as early dark energy [109].

Data availability

This manuscript has no associated data or the data will not be deposited. [Authors’ comment: All data analysed in this work is publicly available and references have been provided.]

Notes

The Hubble constant \(H_0\) is merely the scale of H(z), so it does not dictate evolution. \(\Omega _m\) is also in effect an integration constant from the continuity equation.

Note that \(D_L(z)\) and \(D_{A}(z)\) are not independent within FLRW setting, since \(D_{L}(z) = (1+z)^2 D_{A}(z)\).

\(\Omega _m > 1\) best fits also appear in high redshift observational Hubble data (OHD) [25] that incorporates historical Lyman-\(\alpha \) BAO [50]. However, when updated to the latest Lyman-\(\alpha \) BAO constraints [53, 87], one finds a \(\Omega _m < 1\) best fit, admittedly one that still precludes the Planck \(\Omega _m\) value at greater than \(95 \%\) confidence level [49]. Thus, improvements in data quality can remove signatures of negative DE densities by bringing \(\Omega _m\) values back closer to Planck values.

The relevant constraints are \(\Omega _m h^2 = 0.1430 \pm 0.0014\) and \(\Omega _m h^2 = 0.18 \pm 0.01\) for Planck and Pantheon+, respectively.

In the cosmology literature, it is routinely assumed that all points within MCMC \(68 \%\) credible intervals provide an equally good fit to the data, even in the presence of a degeneracy. As demonstrated in [49], this need not be the case; one can find settings where frequentist confidence intervals are constrained, but MCMC credible intervals are at best inconclusive, thereby undermining any analysis that rests only on MCMC.

Whenever the covariance matrix C has large dimensionality and small entries, it is difficult to determine its determinant |C|; beyond a certain dimensionality, one encounters \(|C| = 0 \Rightarrow \ln |C| = -\infty \) within machine precision. Nevertheless, when the dimensionality becomes smaller, a finite, non-zero determinant is calculable.

We thank an anonymous EPJC referee for pointing this important point out.

If this is not the case, then Type Ia SNe as standardisable candles make little sense. Indeed, it should be safe to replace the 77 SNe in Cepheid hosts with a Gaussian prior on M.

References

A.G. Riess et al. [Supernova Search Team], Astron. J. 116, 1009–1038 (1998). arXiv:astro-ph/9805201

S. Perlmutter et al. [Supernova Cosmology Project], Astrophys. J. 517, 565–586 (1999). arXiv:astro-ph/9812133

N. Aghanim et al. [Planck], Astron. Astrophys. 641, A6 (2020). arXiv:1807.06209 [astro-ph.CO]

D.J. Eisenstein et al. [SDSS], Astrophys. J. 633, 560–574 (2005). arXiv:astro-ph/0501171

A.G. Riess, W. Yuan, L.M. Macri, D. Scolnic, D. Brout, S. Casertano, D.O. Jones, Y. Murakami, L. Breuval, T.G. Brink, et al. Astrophys. J. Lett. 934(1), L7 (2022). arXiv:2112.04510 [astro-ph.CO]

W.L. Freedman, Astrophys. J. 919(1), 16 (2021). arXiv:2106.15656 [astro-ph.CO]

D.W. Pesce, J.A. Braatz, M.J. Reid, A.G. Riess, D. Scolnic, J.J. Condon, F. Gao, C. Henkel, C.M.V. Impellizzeri, C.Y. Kuo, et al. Astrophys. J. Lett. 891(1), L1 (2020). arXiv:2001.09213 [astro-ph.CO]

J.P. Blakeslee, J.B. Jensen, C.P. Ma, P.A. Milne, J.E. Greene, Astrophys. J. 911(1), 65 (2021). arXiv:2101.02221 [astro-ph.CO]

E. Kourkchi, R.B. Tully, G.S. Anand, H.M. Courtois, A. Dupuy, J.D. Neill, L. Rizzi, M. Seibert, Astrophys. J. 896(1), 3 (2020). arXiv:2004.14499 [astro-ph.GA]

T.M.C. Abbott et al. [DES], arXiv:2105.13549 [astro-ph.CO]

M. Asgari et al., [KiDS], Astron. Astrophys. 645, A104 (2021). arXiv:2007.15633 [astro-ph.CO]

L. Perivolaropoulos, F. Skara, New Astron. Rev. 95, 101659 (2022). arXiv:2105.05208 [astro-ph.CO]

E. Abdalla, G. Franco Abellán, A. Aboubrahim, A. Agnello, O. Akarsu, Y. Akrami, G. Alestas, D. Aloni, L. Amendola, L.A. Anchordoqui, et al. JHEAp 34, 49–211 (2022). arXiv:2203.06142 [astro-ph.CO]

E. Di Valentino, O. Mena, S. Pan, L. Visinelli, W. Yang, A. Melchiorri, D.F. Mota, A.G. Riess, J. Silk, Class. Quantum Gravity 38(15), 153001 (2021). arXiv:2103.01183 [astro-ph.CO]

N. Schöneberg, G. Franco Abellán, A. Pérez Sánchez, S.J. Witte, V. Poulin, J. Lesgourgues, Phys. Rep. 984, 1–55 (2022). arXiv:2107.10291 [astro-ph.CO]

C. Krishnan, E.Ó. Colgáin, M.M. Sheikh-Jabbari, T. Yang, Phys. Rev. D 103(10), 103509 (2021). arXiv:2011.02858 [astro-ph.CO]

C. Krishnan, R. Mondol, arXiv:2201.13384 [astro-ph.CO]

K.C. Wong, S.H. Suyu, G.C.F. Chen, C.E. Rusu, M. Millon, D. Sluse, V. Bonvin, C.D. Fassnacht, S. Taubenberger, M.W. Auger et al., Mon. Not. R. Astron. Soc. 498(1), 1420–1439 (2020). arXiv:1907.04869 [astro-ph.CO]

M. Millon, A. Galan, F. Courbin, T. Treu, S.H. Suyu, X. Ding, S. Birrer, G.C.F. Chen, A.J. Shajib, D. Sluse et al., Astron. Astrophys. 639, A101 (2020). arXiv:1912.08027 [astro-ph.CO]

M.G. Dainotti, B. De Simone, T. Schiavone, G. Montani, E. Rinaldi, G. Lambiase, Astrophys. J. 912(2), 150 (2021). arXiv:2103.02117 [astro-ph.CO]

M.G. Dainotti, B. De Simone, T. Schiavone, G. Montani, E. Rinaldi, G. Lambiase, M. Bogdan, S. Ugale, Galaxies 10(1), 24 (2022). arXiv:2201.09848 [astro-ph.CO]

M. Dainotti, B. De Simone, G. Montani, T. Schiavone, G. Lambiase, arXiv:2301.10572 [astro-ph.CO]

W.W. Yu, L. Li, S.J. Wang, arXiv:2209.14732 [astro-ph.CO]

E.Ó. Colgáin, M.M. Sheikh-Jabbari, R. Solomon, G. Bargiacchi, S. Capozziello, M.G. Dainotti, D. Stojkovic, Phys. Rev. D 106(4), L041301 (2022). arXiv:2203.10558 [astro-ph.CO]

E. Ó Colgáin, M.M. Sheikh-Jabbari, R. Solomon, M.G. Dainotti, D. Stojkovic, arXiv:2206.11447 [astro-ph.CO]

C. Krishnan, E. Ó Colgáin, Ruchika, A.A. Sen, M.M. Sheikh-Jabbari, T. Yang, Phys. Rev. D 102(10), 103525 (2020). arXiv:2002.06044 [astro-ph.CO]

X.D. Jia, J.P. Hu, F.Y. Wang, arXiv:2212.00238 [astro-ph.CO]

J.P. Hu, F.Y. Wang, Mon. Not. R. Astron. Soc. 517(1), 576–581 (2022). arXiv:2203.13037 [astro-ph.CO]

G. Risaliti, E. Lusso, Astrophys. J. 815, 33 (2015). arXiv:1505.07118 [astro-ph.CO]

G. Risaliti, E. Lusso, Nat. Astron. 3(3), 272–277 (2019). arXiv:1811.02590 [astro-ph.CO]

E. Lusso, G. Risaliti, E. Nardini, G. Bargiacchi, M. Benetti, S. Bisogni, S. Capozziello, F. Civano, L. Eggleston, M. Elvis et al., Astron. Astrophys. 642, A150 (2020). arXiv:2008.08586 [astro-ph.GA]

T. Yang, A. Banerjee, E.Ó. Colgáin, Phys. Rev. D 102(12), 123532 (2020). arXiv:1911.01681 [astro-ph.CO]

N. Khadka, B. Ratra, Mon. Not. R. Astron. Soc. 497(1), 263–278 (2020). arXiv:2004.09979 [astro-ph.CO]

N. Khadka, B. Ratra, Mon. Not. R. Astron. Soc. 502(4), 6140–6156 (2021). arXiv:2012.09291 [astro-ph.CO]

N. Khadka, B. Ratra, Mon. Not. R. Astron. Soc. 510(2), 2753–2772 (2022). arXiv:2107.07600 [astro-ph.CO]

S. Pourojaghi, N.F. Zabihi, M. Malekjani, Phys. Rev. D 106(12), 123523 (2022). arXiv:2212.04118 [astro-ph.CO]

E. Pastén, V. Cárdenas, arXiv:2301.10740 [astro-ph.CO]

E.Ó. Colgáin, M.M. Sheikh-Jabbari, R. Solomon, Phys. Dark Univ. 40, 101216 (2023). arXiv:2211.02129 [astro-ph.CO]

D. Brout, D. Scolnic, B. Popovic, A.G. Riess, J. Zuntz, R. Kessler, A. Carr, T.M. Davis, S. Hinton, D. Jones et al., Astrophys. J. 938(2), 110 (2022). arXiv:2202.04077 [astro-ph.CO]

D. Scolnic, D. Brout, A. Carr, A.G. Riess, T.M. Davis, A. Dwomoh, D.O. Jones, N. Ali, P. Charvu, R. Chen et al., Astrophys. J. 938(2), 113 (2022). arXiv:2112.03863 [astro-ph.CO]

D.M. Scolnic et al. [Pan-STARRS1], Astrophys. J. 859(2), 101 (2018). arXiv:1710.00845 [astro-ph.CO]

A. Conley et al. [SNLS], Astrophys. J. Suppl. 192, 1 (2011). arXiv:1104.1443 [astro-ph.CO]

M. Sullivan et al. [SNLS], Astrophys. J. 737, 102 (2011). arXiv:1104.1444 [astro-ph.CO]

D. Brout, G. Taylor, D. Scolnic, C.M. Wood, B.M. Rose, M. Vincenzi, A. Dwomoh, C. Lidman, A. Riess, N. Ali, et al. Astrophys. J. 938(2), 111 (2022). https://doi.org/10.3847/1538-4357/ac8bcc. arXiv:2112.03864 [astro-ph.CO]

T.M. Davis, S.R. Hinton, C. Howlett, J. Calcino, Mon. Not. R. Astron. Soc. 490(2), 2948–2957 (2019). arXiv:1907.12639 [astro-ph.CO]

M. Rameez, S. Sarkar, Class. Quantum Gravity 38(15), 154005 (2021). arXiv:1911.06456 [astro-ph.CO]

C.L. Steinhardt, A. Sneppen, B. Sen, Astrophys. J. 902(1), 14 (2020). arXiv:2005.07707 [astro-ph.CO]

A. Carr, T.M. Davis, D. Scolnic, D. Scolnic, K. Said, D. Brout, E.R. Peterson, R. Kessler, Publ. Astron. Soc. Austral. 39, e046 (2022). arXiv:2112.01471 [astro-ph.CO]

E. Ó Colgáin, S. Pourojaghi, M.M. Sheikh-Jabbari, D. Sherwin, arXiv:2307.16349 [astro-ph.CO]

É. Aubourg, S. Bailey, J.E. Bautista, F. Beutler, V. Bhardwaj, D. Bizyaev, M. Blanton, M. Blomqvist, A.S. Bolton, J. Bovy et al., Phys. Rev. D 92(12), 123516 (2015). arXiv:1411.1074 [astro-ph.CO]

T. Delubac et al. [BOSS], Astron. Astrophys. 574, A59 (2015). arXiv:1404.1801 [astro-ph.CO]

A. Font-Ribera et al. [BOSS], JCAP 05, 027 (2014). arXiv:1311.1767 [astro-ph.CO]

H. du Mas des Bourboux, J. Rich, A. Font-Ribera, V. de Sainte Agathe, J. Farr, T. Etourneau, J.M. Le Goff, A. Cuceu, C. Balland, J.E. Bautista, Astrophys. J. 901(2), 153 (2020). arXiv:2007.08995 [astro-ph.CO]

K. Dutta, Ruchika, A. Roy, A.A. Sen, M.M. Sheikh-Jabbari, Gen. Relat. Gravit. 52(2), 15 (2020). arXiv:1808.06623 [astro-ph.CO]

A.A. Sen, S.A. Adil, S. Sen, Mon. Not. R. Astron. Soc. 518(1), 1098–1105 (2022). arXiv:2112.10641 [astro-ph.CO]

L. Visinelli, S. Vagnozzi, U. Danielsson, Symmetry 11(8), 1035 (2019). arXiv:1907.07953 [astro-ph.CO]

G. Ye, Y.S. Piao, Phys. Rev. D 101(8), 083507 (2020). arXiv:2001.02451 [astro-ph.CO]

G. Ye, Y.S. Piao, Phys. Rev. D 102(8), 083523 (2020). arXiv:2008.10832 [astro-ph.CO]

H. Wang, Y.S. Piao, Phys. Lett. B 832, 137244 (2022). arXiv:2201.07079 [astro-ph.CO]

H. Wang, Y.S. Piao, arXiv:2209.09685 [astro-ph.CO]

J.Q. Jiang, Y.S. Piao, Phys. Rev. D 105(10), 103514 (2022)

E. Mörtsell, S. Dhawan, JCAP 09, 025 (2018). arXiv:1801.07260 [astro-ph.CO]

V. Poulin, K.K. Boddy, S. Bird, M. Kamionkowski, Phys. Rev. D 97(12), 123504 (2018). arXiv:1803.02474 [astro-ph.CO]

Y. Wang, L. Pogosian, G.B. Zhao, A. Zucca, Astrophys. J. Lett. 869, L8 (2018). arXiv:1807.03772 [astro-ph.CO]

A. Bonilla, S. Kumar, R.C. Nunes, Eur. Phys. J. C 81(2), 127 (2021). arXiv:2011.07140 [astro-ph.CO]

L.A. Escamilla, J.A. Vazquez, arXiv:2111.10457 [astro-ph.CO]

V. Sahni, A. Shafieloo, A.A. Starobinsky, Astrophys. J. Lett. 793(2), L40 (2014). arXiv:1406.2209 [astro-ph.CO]

E. Ozulker, Phys. Rev. D 106(6), 063509 (2022). arXiv:2203.04167 [astro-ph.CO]

G.B. Zhao, M. Raveri, L. Pogosian, Y. Wang, R.G. Crittenden, W.J. Handley, W.J. Percival, F. Beutler, J. Brinkmann, C.H. Chuang et al., Nat. Astron. 1(9), 627–632 (2017). arXiv:1701.08165 [astro-ph.CO]

S. Capozziello, Ruchika, A.A. Sen, Mon. Not. R. Astron. Soc. 484, 4484 (2019). arXiv:1806.03943 [astro-ph.CO]

E. Di Valentino, E.V. Linder, A. Melchiorri, Phys. Rev. D 97(4), 043528 (2018). arXiv:1710.02153 [astro-ph.CO]

A. Banihashemi, N. Khosravi, A.H. Shirazi, Phys. Rev. D 101(12), 123521 (2020). arXiv:1808.02472 [astro-ph.CO]

A. Banihashemi, N. Khosravi, A.H. Shirazi, Phys. Rev. D 99(8), 083509 (2019). arXiv:1810.11007 [astro-ph.CO]

Ö. Akarsu, J.D. Barrow, C.V.R. Board, N.M. Uzun, J.A. Vazquez, Eur. Phys. J. C 79(10), 846 (2019). arXiv:1903.11519 [gr-qc]

A. Perez, D. Sudarsky, E. Wilson-Ewing, Gen. Relat. Gravit. 53(1), 7 (2021). arXiv:2001.07536 [astro-ph.CO]

Ö. Akarsu, N. Katırcı, S. Kumar, R.C. Nunes, B. Öztürk, S. Sharma, Eur. Phys. J. C 80(11), 1050 (2020). arXiv:2004.04074 [astro-ph.CO]

R. Calderón, R. Gannouji, B. L’Huillier, D. Polarski, Phys. Rev. D 103(2), 023526 (2021). arXiv:2008.10237 [astro-ph.CO]

G. Acquaviva, Ö. Akarsu, N. Katirci, J.A. Vazquez, Phys. Rev. D 104(2), 023505 (2021). arXiv:2104.02623 [astro-ph.CO]

F.X. Linares Cedeño, N. Roy, L.A. Ureña-López, Phys. Rev. D 104(12), 123502 (2021). arXiv:2105.07103 [astro-ph.CO]

O. Akarsu, E. Ó Colgáin, E. Özulker, S. Thakur, L. Yin, arXiv:2207.10609 [astro-ph.CO]

H. Moshafi, H. Firouzjahi, A. Talebian, Astrophys. J. 940(2), 121 (2022). arXiv:2208.05583 [astro-ph.CO]

Ö. Akarsu, J.D. Barrow, L.A. Escamilla, J.A. Vazquez, Phys. Rev. D 101(6), 063528 (2020). arXiv:1912.08751 [astro-ph.CO]

Ö. Akarsu, S. Kumar, E. Özülker, J.A. Vazquez, Phys. Rev. D 104(12), 123512 (2021). arXiv:2108.09239 [astro-ph.CO]

O. Akarsu, S. Kumar, E. Özülker, J.A. Vazquez, A. Yadav, arXiv:2211.05742 [astro-ph.CO]

S. Di Gennaro, Y.C. Ong, Universe 8(10), 541 (2022). arXiv:2205.09311 [gr-qc]

Y.C. Ong, arXiv:2212.04429 [gr-qc]

R. Neveux, E. Burtin, A. de Mattia, A. Smith, A.J. Ross, J. Hou, J. Bautista, J. Brinkmann, C.H. Chuang, K.S. Dawson et al., Mon. Not. R. Astron. Soc. 499(1), 210–229 (2020)

L. Perivolaropoulos, F. Skara, arXiv:2301.01024 [astro-ph.CO]

D. Foreman-Mackey, D.W. Hogg, D. Lang, J. Goodman, Publ. Astron. Soc. Pac. 125, 306–312 (2013). arXiv:1202.3665 [astro-ph.IM]

A. Lewis, arXiv:1910.13970 [astro-ph.IM]

H. Akaike, IEEE Trans. Autom. Control 19(6), 716–723 (1974)

R. Keeley, A. Shafieloo, B. L’Huillier, arXiv:2212.07917 [astro-ph.CO]

A. Gómez-Valent, Phys. Rev. D 106(6), 063506 (2022). arXiv:2203.16285 [astro-ph.CO]

L. Herold, E.G.M. Ferreira, E. Komatsu, Astrophys. J. Lett. 929(1), L16 (2022). arXiv:2112.12140 [astro-ph.CO]

D. Rubin, G. Aldering, M. Betoule, A. Fruchter, X. Huang, A.G. Kim, C. Lidman, E. Linder, S. Perlmutter, P. Ruiz-Lapuente, et al., arXiv:2311.12098 [astro-ph.CO]

T.M.C. Abbott et al. [DES], arXiv:2401.02929 [astro-ph.CO]

M. Boylan-Kolchin, arXiv:2208.01611 [astro-ph.CO]

C.C. Lovell, I. Harrison, Y. Harikane, S. Tacchella, S.M. Wilkins, Mon. Not. R. Astron. Soc. 518(2), 2511–2520 (2022). arXiv:2208.10479 [astro-ph.GA]

N. Menci, M. Castellano, P. Santini, E. Merlin, A. Fontana, F. Shankar, Astrophys. J. Lett. 938(1), L5 (2022). arXiv:2208.11471 [astro-ph.CO]

P. Wang, B.Y. Su, L. Zu, Y. Yang, L. Feng, arXiv:2307.11374 [astro-ph.CO]

S.A. Adil, U. Mukhopadhyay, A.A. Sen, S. Vagnozzi, arXiv:2307.12763 [astro-ph.CO]

N. Suzuki et al. [Supernova Cosmology Project], Astrophys. J. 746, 85 (2012). arXiv:1105.3470 [astro-ph.CO]

A.G. Riess, S.A. Rodney, D.M. Scolnic, D.L. Shafer, L.G. Strolger, H.C. Ferguson, M. Postman, O. Graur, D. Maoz, S.W. Jha et al., Astrophys. J. 853(2), 126 (2018). arXiv:1710.00844 [astro-ph.CO]

A.G. Riess et al. [Supernova Search Team], Astrophys. J. 607, 665–687 (2004). arXiv:astro-ph/0402512 [astro-ph]

A.G. Riess, L.G. Strolger, S. Casertano, H.C. Ferguson, B. Mobasher, B. Gold, P.J. Challis, A.V. Filippenko, S. Jha, W. Li, et al. Astrophys. J. 659, 98–121 (2007). arXiv:astro-ph/0611572

D. Scolnic, S. Perlmutter, G. Aldering, D. Brout, T. Davis, A. Filippenko, R. Foley, R. Hložek, R. Hounsell, S. Jha,et al., arXiv:1903.05128 [astro-ph.CO]

S. Weinberg, Rev. Mod. Phys. 61, 1–23 (1989)

C. Krishnan, R. Mohayaee, E.Ó. Colgáin, M.M. Sheikh-Jabbari, L. Yin, Class. Quantum Gravity 38(18), 184001 (2021). arXiv:2105.09790 [astro-ph.CO]

V. Poulin, T.L. Smith, T. Karwal, M. Kamionkowski, Phys. Rev. Lett. 122(22), 221301 (2019). arXiv:1811.04083 [astro-ph.CO]

Acknowledgements

We thank Leandros Perivolaropoulos for discussion, and Özgur Akarsu and Anjan Sen for comments on a late draft. MMShJ and SP acknowledge SarAmadan grant No. ISEF/M/401332. SP also acknowledges the hospitality of Bu-Ali Sina University.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Code availability

This manuscript has no associated code/software. [Authors’ comment: Code/Software supporting the analysis is relatively straightforward, but is available from the authors upon request. The use of publicly available software is acknowledged.]

Appendix A: Robustness of least squares fitting

Appendix A: Robustness of least squares fitting

There is concern that least squares fitting may occasionally find local false minima. This issue can be addressed by starting the \(\chi ^2\) minimisation algorithm from different initial guesses (or priors) in parameter space. Here we show differences that arise in such an exercise are insignificant. Alternatively, as highlighted in the text, one could run an MCMC chain and identify the point in parameter space corresponding to the minimum of the \(\chi ^2\). If the resulting cosmological parameters agree well with \(\chi ^2\) minimisation, then this provides an additional check.

We adopt uniform bounds or priors, \(0< H_0 < 150\) and \(0< \Omega _m < 5\), which are chosen large enough so that they never impact the best fits. As a result, the four corners of our parameter space are \((H_0, \Omega _m) = (\epsilon , \epsilon )\), \((H_0, \Omega _m) = (\epsilon ,5-\epsilon )\), \((H_0, \Omega _m) = (150-\epsilon ,\epsilon )\) and \((H_0, \Omega _m) = (150-\epsilon ,5-\epsilon )\), where we adopt \(\epsilon = 0.001\). In Table 4 we show the differences in best fit \(H_0\), \(\Omega _m\), and \(\chi ^2\) values for 77+1599 SNe in the low redshift range \(0.00122 \le z \le 1\) when minimising the likelihood (8). We truncate the resulting numbers where differences become transparent. As explained in the text, the parameter M decouples, but throughout we start it from its best fit location \(M = - 19.249\). From Table 4 it is clear that the best fit \(H_0\), \(\Omega _m\) and the corresponding \(\chi ^2\) for the best fit, begin to differ at the \(5^{\text {th}}\), \(7^{\text {th}}\) and \(11^{\text {th}}\) decimal place, respectively.

In Table 5 we repeat the analysis for 77 SNe in Cepheid hosts and 25 SNe in the redshift range \(1 < z \le 2.26137\), where we see that differences begin at the \(3^{\text {rd}}\), \(4^{\text {th}}\) and \(9^{\text {th}}\) decimal place, respectively. We have not changed the tolerance in the minimisation algorithm, but we see that the best fits in the smaller sample, where one expects less guidance for fits, are less robust. However, any difference is still small.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

Funded by SCOAP3.

About this article

Cite this article

Malekjani, M., Mc Conville, R., Ó Colgáin, E. et al. On redshift evolution and negative dark energy density in Pantheon + Supernovae. Eur. Phys. J. C 84, 317 (2024). https://doi.org/10.1140/epjc/s10052-024-12667-z

Received:

Accepted:

Published:

DOI: https://doi.org/10.1140/epjc/s10052-024-12667-z