Abstract

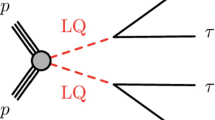

Leptoquarks (\(\textrm{LQ}\)s) are hypothetical particles that appear in various extensions of the Standard Model (SM) and that can explain observed differences between SM theory predictions and experimental results. The production of these particles has been widely studied at various experiments, most recently at the Large Hadron Collider (LHC), and stringent bounds have been placed on their masses and couplings, assuming the simplest beyond-SM (BSM) hypotheses. However, the limits are significantly weaker for \(\textrm{LQ}\) models with family non-universal couplings containing enhanced couplings to third-generation fermions. We present a new study on the production of a \(\textrm{LQ}\) at the LHC, with preferential couplings to third-generation fermions, considering proton-proton collisions at \(\sqrt{s} = 13 \, \textrm{TeV}\) and \(\sqrt{s} = 13.6 \, \textrm{TeV}\). Such a hypothesis is well motivated theoretically and can explain the recent anomalies in the precision measurements of \(\textrm{B}\)-meson decay rates, specifically the \(R_{D^{(*)}}\) ratios. Under a simplified model where the \(\textrm{LQ}\) masses and couplings are free parameters, we focus on cases where the \(\textrm{LQ}\) decays to a \(\tau \) lepton and a \(\textrm{b}\) quark, and study how the results are affected by different assumptions about chiral currents and interference effects with other BSM processes with the same final states, such as diagrams with a heavy vector boson, \(\textrm{Z}^{\prime }\). The analysis is performed using machine learning techniques, resulting in an increased discovery reach at the LHC and allowing us to probe the new physics phase space that addresses the \(\textrm{B}\)-meson anomalies, for \(\textrm{LQ}\) masses up to \(5.00\, \textrm{TeV}\) in the high-luminosity LHC scenario.

1 Introduction

After more than ten years of collecting data, the LHC has confirmed that the Standard Model (SM) is indeed the correct theory describing particle physics for energies below the \(\, \textrm{TeV}\) scale. Nevertheless, there exist reasons to expect the SM to be a low-energy effective realization of a more complete theory. On the theoretical side, we do not know if gravity should be quantized, or if the gauge interactions should be unified, and if so, we do not know how to solve the associated hierarchy problems of the Higgs mass. Moreover, we have no explanation for fermion family replication, nor for the lack of CP violation in the strong sector. This expectation for physics beyond the SM (BSM) is reinforced experimentally, since the observed neutrino masses, dark matter, and baryon asymmetry of the Universe cannot be explained by the SM.

Leptoquarks (\(\textrm{LQ}\)s) are hypothetical bosons carrying both baryon and lepton number, thus interacting jointly with a lepton and a quark. They are a common ingredient in SM extensions where quarks and leptons share the same multiplet. Typical examples of these can be found in the Pati-Salam [1] and SU(5) GUT [2] models. In addition, they can also be found in theories with strong interactions, such as compositeness [3]. Due to their exotic coupling which allows quark-lepton transitions, they have a diverse phenomenology, which naturally leads to several constraints. An important one comes from proton decay, which forces the \(\textrm{LQ}\) mass to values close to the Planck scale, unless baryon and lepton numbers are not violated. Furthermore, in models where the latter are conserved, the \(\textrm{LQ}\) can still be subject to a wide variety of bounds [4,5,6,7,8,9]. Examples of these come from meson mixing, electric and magnetic dipole moments, atomic parity violation tests, rare decays, and direct searches. Nevertheless, the significance of each bound is a model dependent question.

In recent years, an increased interest in low scale \(\textrm{LQ}\)s has emerged due to the anomalies in the precision measurements of the \(\textrm{B}\)-meson decay rates. As is well known, these corresponded mainly to deviations in the \(R_{K^{(*)}}\) [10,11,12,13] and \(R_{D^{(*)}}\) [14,15,16,17,18,19,20,21,22,23,24,25] ratios, which measure the violation of lepton flavour universality (LFU). What followed was a very intense theoretical development, aiming to explain the anomalies by \(\, \textrm{TeV}\) scale \(\textrm{LQ}\) exchange at tree level [26,27,28,29,30,31,32,33,34,35,36,37,38,39,40,41]. Before the end of 2022, it was generally agreed that, within proposed single \(\textrm{LQ}\) solutions, the only candidate capable of addressing all \(\textrm{B}\)-meson anomalies simultaneously and surviving all other constraints was a vector \(\textrm{LQ}\) (\(U_1\)), transforming as \((\textbf{3},\,\textbf{1},\,2/3)\), and coupling mainly to third-generation fermions via \(\textrm{b}\,\tau \) and \(\textrm{t}\,\nu _\tau \) vertices [36, 39]. In spite of a recent re-analysis of \(R_{K^{(*)}}\) data showing this ratio to be compatible with the SM prediction [42,43,44,45], the solution to the \(R_{D^{(*)}}\) anomaly is still an open question and remains a valid motivation for the study of scenarios where new particles have preferential couplings to third-generation fermions. Thus, it is still of interest to continue exploring the possibility of observing the \(U_1\) \(\textrm{LQ}\) at the LHC [41].

As expected, the theoretical community has extensively participated in probing \(\textrm{LQ}\) models by scrutinizing search strategies, recasting LHC results, and predicting the reach in the parameter space via different searches involving third-generation fermions (see for instance [46,47,48,49,50,51,52,53,54,55]). In addition, several \(13 \, \textrm{TeV}\) searches for \(\textrm{LQ}\)s decaying into \(\textrm{t}/\textrm{b}\) and \(\tau /\nu \) final states have been performed by the CMS [56,57,58,59,60,61,62,63,64] and ATLAS [65,66,67,68,69,70,71] collaborations.

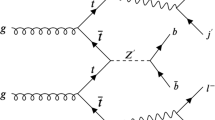

Representative Feynman diagrams of single (a), pair (b), and non-resonant (c) leptoquark production in proton-proton collision experiments. In single and pair production, the diagrams shown involve t-channel LQ exchange, dominant for lower LQ mass. However, for larger mass there exist s-channel diagrams featuring a virtual bottom quark and gluon, respectively

Of the searches above, we find [62] particularly interesting. Here, the CMS collaboration explores signals corresponding to \(\textrm{t}\,\nu \,\textrm{b}\,\tau \) and \(\textrm{t}\,\nu \,\tau \) final states, with \(137 \, \textrm{fb}^{-1}\) of proton-proton (\(\textrm{p}\,\textrm{p}\)) collision data. The former is motivated by \(\textrm{LQ}\) pair production, with one \(\textrm{LQ}\) decaying into \(\textrm{t}\,\nu \) and the other into \(\textrm{b}\,\tau \), while the latter arises from a single \(\textrm{LQ}\) produced in association with a \(\tau \), with a subsequent \(\textrm{LQ}\) decay into \(\textrm{t}\,\nu \) (see Fig. 1 for the corresponding diagrams). From the combination of both production channels, the search excludes \(U_1\) masses under \(1.3-1.7 \, \textrm{TeV}\), with this range depending on the \(U_1\) coupling to gluons and on its coupling \(g_U\) in the \(\textrm{b}_L\,\tau _L\) vertex.

What makes this search particularly attractive is that, for the first time, an LHC collaboration directly places (mass dependent) bounds on \(g_U\). This is important, since having information on this parameter is crucial in order to understand if the \(U_1\) is really responsible for the \(R_{D^{(*)}}\) anomaly. The inclusion of the single-\(\textrm{LQ}\) production mode is important, since its cross-section is directly proportional to \(g^2_U\). However, as can be seen in Fig. 6 of [62], the current constraints are dominated by pair production, with single-\(\textrm{LQ}\) production playing a subleading role. While this is expected [49], it still leads us to ponder the possibility of improving the sensitivity of LHC searches to single-\(\textrm{LQ}\) production, and thus of achieving better constraints on \(g_U\). Other searches similar and complementary to [62] were carried out by both ATLAS [70] and CMS [64].

It is also well known, though, that searches for an excess in the high-\(p_\mathrm{{T}}\) tails of \(\tau \) lepton distributions can strongly probe \(g_U\), up to very large \(\textrm{LQ}\) masses. Indeed, as shown in [41, 72], the new physics effective operators contributing to \(R_{D^{(*)}}\) also contribute to an enhancement in the \(\textrm{p}\,\textrm{p}\rightarrow \tau \tau \) production rates. This has motivated a large number of recasts [39, 41, 49, 51, 73,74,75,76,77], as well as a CMS search explicitly providing constraints in terms of \(U_1\) [63]. Nevertheless, it is important to note that for these \(\textrm{p}\,\textrm{p}\rightarrow \tau \tau \) processes, the \(\textrm{LQ}\) participates non-resonantly, so contributions to the \(\textrm{p}\,\textrm{p}\rightarrow \tau \tau \) rates and kinematic distributions from non-LQ BSM diagrams containing possible virtual particles, such as a heavy neutral vector boson \(\textrm{Z}'\), could spoil a straightforward interpretation of any possible excess [51]. Thus, it is also necessary to understand how the presence of other virtual particles can affect the sensitivity of an analysis probing \(g_U\).

In this work we study the projected \(\textrm{LQ}\) sensitivity at the LHC, considering already available \(\textrm{p}\,\textrm{p}\) data as well as the expected amount of data to be acquired during the High-Luminosity LHC (HL-LHC) runs. We explore a proposed analysis strategy which utilizes a combination of single-, double-, and non-resonant-LQ production, targeting final states with varying \(\tau \)-lepton and b-jet multiplicities. The studies are performed considering various benchmark scenarios for different \(\textrm{LQ}\) masses and couplings, also taking into account distinct chiralities for the third-generation fermions in the \(\textrm{LQ}\) vertex. We also assess the impact of a companion \(\textrm{Z}'\), which is typical of gauge models, in non-resonant \(\textrm{LQ}\) probes, and find that interference effects can have a significant effect on the discovery reach. We consider this effect to be of high interest, given that non-resonant \(\textrm{LQ}\) production can have the largest cross-section, and thus could be an important channel in terms of discovery potential.

An important aspect of this work is that the analysis strategy is developed using a machine learning (ML) algorithm based on Boosted Decision Trees (BDT) [78]. The output of the event classifier is used to perform a profile-binned likelihood test to extract the overall signal significance for each model considered in the analysis. The advantage of using BDTs and other ML algorithms has been demonstrated in several experimental and phenomenological studies [50, 79,80,81,82,83,84]. In our studies, we find that the BDT algorithm gives a sizeable improvement in signal significance.

This paper is organized as follows. In Sect. 2 we present our simplified model and review the model parameters which are relevant for solving the \(\textrm{B}\)-meson anomalies. Section 3 describes the details associated with the analysis strategy and the simulation of signal and background samples. Section 4 contains the results of the study, including the projected sensitivity for different benchmark scenarios considered. Finally, in Sect. 5 we discuss the implication of our results and prospects for future studies.

2 A simplified model for the \(U_1\) leptoquark

Extending the SM with a massive \(U_1\) vector \(\textrm{LQ}\) is not straightforward, as one has to ensure the renormalizability of the model. Most of the theoretical community has focused on extensions of the Pati-Salam (PS) models which avoid proton decay, such as the scenario found in [85]. Other examples include PS models with vector-like fermions [86,87,88], the so-called 4321 models [89,90,91], the twin PS\(^2\) model [92, 93], the three-site PS\(^3\) model [94,95,96], as well as composite PS models [97,98,99].

In what follows, we shall restrict ourselves to a simplified non-renormalizable Lagrangian, understood to be embedded into a more complete model. The SM is thus extended by adding the following terms featuring the \(U_1\) \(\textrm{LQ}\):

where \(U_{\mu \nu }\equiv \mathcal {D}_\mu U_{1\nu }-\mathcal {D}_\nu U_{1\mu }\), and \(\mathcal {D}_\mu \equiv \partial _\mu +ig_s T^a G_\mu ^a+i\tfrac{2}{3}g'B_\mu \). As evidenced by the second line above, we assume that the \(\textrm{LQ}\) has a gauge origin.Footnote 1

The third and fourth lines in Eq. (1) show the \(\textrm{LQ}\) interactions with SM fermions, with coupling \(g_U\), which we have chosen as preferring the third generation.Footnote 2 These are particularly relevant for the \(\textrm{LQ}\) decay probabilities, as well as for the single-\(\textrm{LQ}\) production cross-section. The \(\beta _L^{s\tau }\) parameter, which is the \(\textrm{LQ}\rightarrow s\tau \) coupling in the \(\beta _L\) matrix (see footnote), is chosen to be equal to 0.2, following the fit done in [75], in order to simultaneously solve the \(R_{D^{(*)}}\) anomaly and satisfy the \(\textrm{p}\,\textrm{p}\rightarrow \tau ^+\tau ^-\) constraints. Although \(\beta _L^{s\tau }\) technically alters the single-\(\textrm{LQ}\) production cross-section and \(\textrm{LQ}\) branching fractions, we have confirmed that a value of \(\beta _L^{s\tau } = 0.2\) has a negligible impact on our collider results, so it is ignored in our subsequent studies.

The \(\textrm{LQ}\) right-handed coupling is modulated with respect to the left-handed one by the \(\beta _R\) parameter. The choice of \(\beta _R\) is important phenomenologically, as it affects the \(\textrm{LQ}\) branching ratios,Footnote 3 as well as the single-\(\textrm{LQ}\) production cross-section. To illustrate the former, Fig. 2 (top) shows the \(\textrm{LQ}\rightarrow \text {b}\tau \) and \(\textrm{LQ}\rightarrow \text {t}\nu \) branching ratios as functions of the \(\textrm{LQ}\) mass, for two values of \(\beta _R\). For large \(\textrm{LQ}\) masses, we confirm that \(\beta _R = 0\) gives \(\textrm{BR}(\textrm{LQ}\rightarrow \textrm{b}\,\tau ) \approx \textrm{BR}(\textrm{LQ}\rightarrow \textrm{t}\,\nu )\approx \tfrac{1}{2}\). However, for \(\beta _R = -1\), as was chosen in [38], the additional coupling adds a new term to the total amplitude, leading to \(\textrm{BR}(\textrm{LQ}\rightarrow \textrm{b}\,\tau ) \approx \tfrac{2}{3}\). The increase in this branching ratio can thus weaken bounds from \(\textrm{LQ}\) searches targeting decays into \(\textrm{t}\,\nu \) final states, which motivates exploring the sensitivity in b\(\tau \) final states exclusively. Note that although a \(\textrm{BR}(\textrm{LQ}\rightarrow \textrm{b}\,\tau ) \approx 1\) scenario is possible by having the \(\textrm{LQ}\) couple exclusively to right-handed currents (i.e., \(g_U\rightarrow 0\), but \(g_U\beta _R\not =0\)), it does not solve the observed anomalies in the \(R_{D^{(*)}}\) ratios. Therefore, although some LHC searches assume \(\textrm{BR}(\textrm{LQ}\rightarrow \textrm{b}\,\tau ) = 1\), we stress that in our studies we assume values of the model parameters and branching ratios that solve the \(R_{D^{(*)}}\) anomaly.
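The limiting branching ratios quoted above can be reproduced with a simple counting argument. The sketch below assumes massless final-state fermions (valid only for \(M_U \gg m_t\)) and partial widths proportional to the squared couplings, so that \(\Gamma (\textrm{b}\,\tau ) \propto g_U^2(1+\beta _R^2)\) and \(\Gamma (\textrm{t}\,\nu ) \propto g_U^2\). The function name is illustrative, and this is not the full width calculation behind Fig. 2.

```python
def br_b_tau(beta_R: float) -> float:
    """BR(LQ -> b tau) in the heavy-LQ limit, assuming massless fermions.

    Widths are expressed in units of the common factor proportional to g_U^2,
    which cancels in the ratio.
    """
    width_b_tau = 1.0 + beta_R**2  # left-handed plus right-handed b-tau couplings
    width_t_nu = 1.0               # left-handed t-nu coupling only
    return width_b_tau / (width_b_tau + width_t_nu)


for beta_R in (0.0, -1.0):
    print(f"beta_R = {beta_R:+.0f}: BR(b tau) = {br_b_tau(beta_R):.3f}")
```

Under these assumptions the result depends only on \(\beta _R^2\), so the sign of \(\beta _R\) does not affect the branching ratio.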

Top: The \(\textrm{LQ}\rightarrow \text {b}\tau \) and \(\textrm{LQ}\rightarrow \text {t}\nu \) branching ratios for \(\beta _{R} = 0\) (solid lines) and \(\beta _{R} = -1\) (dashed lines). Bottom: Signal cross-section as a function of the \(\textrm{LQ}\) mass, for \(\sqrt{ s}=13 \, \textrm{TeV}\), with \(g_U=1.8\). We show single, pair, and non-resonant production, for \(\beta _R=-1,\,0\) in solid and dashed lines, respectively

To further understand the role of \(\beta _R\) at colliders, Fig. 2 (bottom) shows the cross-section for single-\(\textrm{LQ}\) (s\(\textrm{LQ}\)), double-\(\textrm{LQ}\) (d\(\textrm{LQ}\)), and non-resonant (non-res) production, as a function of mass and for a fixed coupling \(g_{U} = 1.8\), assuming \(\textrm{p}\,\textrm{p}\) collisions at \(\sqrt{s} = 13\) \(\, \textrm{TeV}\). We note that this benchmark scenario with \(g_{U}=1.8\) results in a \(\textrm{LQ}\rightarrow \text {b}\tau \) decay width that is below 5% of the \(\textrm{LQ}\) mass, for mass values from 250 \(\, \textrm{GeV}\) to 2.5 \(\, \textrm{TeV}\). In the figure, we observe that, since d\(\textrm{LQ}\) production is mediated mainly by quantum chromodynamic processes, the choice of \(\beta _R\) does not affect its cross-section. However, a non-zero value for \(\beta _R\) increases the s\(\textrm{LQ}\) production cross-section by about a factor of 2, and the non-res production cross-section by almost one order of magnitude. These results shown in Fig. 2 are easily understood by considering the diagrams shown in Fig. 1. The \(\textrm{LQ}\) mass value where the s\(\textrm{LQ}\) production cross-section exceeds the d\(\textrm{LQ}\) cross-section depends on the choice of \(g_U\).

We also note that to solve the \(R_{D^{(*)}}\) anomaly, the authors of [75] point out that the Wilson coefficient \(C_U\equiv g^2_U\,v^2_{SM}/(4\,M^2_U)\) is constrained to a specific range of values, and this range depends on the value of the \(\beta _{R}\) parameter. Therefore, the allowed values of the coupling \(g_{U}\) depend on \(M_{U}\) and \(\beta _{R}\), and thus our studies are performed in this multi-dimensional phase space.
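To make the dependence of the allowed \(g_U\) on \(M_U\) concrete, the relation \(C_U = g^2_U\,v^2_{SM}/(4\,M^2_U)\) can be evaluated and inverted numerically. The following is a minimal sketch; the value \(v_{SM} \approx 246.22\) GeV and the function names are assumptions made for illustration, and no specific preferred range of \(C_U\) is implied.

```python
import math

V_SM_GEV = 246.22  # assumed Higgs vacuum expectation value, in GeV


def wilson_c_u(g_u: float, m_u_gev: float, v_gev: float = V_SM_GEV) -> float:
    """C_U = g_U^2 v^2 / (4 M_U^2), as defined in the text."""
    return g_u**2 * v_gev**2 / (4.0 * m_u_gev**2)


def coupling_for_c_u(c_u: float, m_u_gev: float, v_gev: float = V_SM_GEV) -> float:
    """Invert the definition: g_U = 2 M_U sqrt(C_U) / v."""
    return 2.0 * m_u_gev * math.sqrt(c_u) / v_gev


# For a fixed C_U, the required coupling grows linearly with the LQ mass.
c_ref = wilson_c_u(g_u=1.0, m_u_gev=1000.0)
for m_u in (1000.0, 2000.0, 4000.0):
    print(f"M_U = {m_u:6.0f} GeV -> g_U = {coupling_for_c_u(c_ref, m_u):.2f}")
```

Because \(C_U\) scales as \(g_U^2/M_U^2\), a fixed target value of \(C_U\) corresponds to a straight line through the origin in the \(\{ M_{U},g_{U} \}\) plane.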

As noted in Sect. 1, we study the role of a \(\textrm{Z}'\) boson in \(\textrm{p}\,\textrm{p}\rightarrow \tau \tau \) production. The presence of a \(\textrm{Z}'\) boson in \(\textrm{LQ}\) models has been justified in various papers, for example, in [51]. The argument is that minimal extensions of the SM which include a massive gauge \(U_1\) LQ use the gauge group \(SU(4)\times SU(3)^{\prime }\times SU(2)_L \times U(1)_{T_R^3}\). Such an extension implies the presence of an additional massive boson, \(\textrm{Z}^{\prime }\), and a color-octet vector, \(G'\), arising from the spontaneous symmetry breaking into the SM.Footnote 4 The \(\textrm{Z}'\) in particular can play an important role in the projected \(\textrm{LQ}\) discovery reach, as it can participate in \(\textrm{p}\,\textrm{p}\rightarrow \tau \tau \) production by s-channel exchange, both resonantly and as a virtual mediator. To study the effect of a \(\textrm{Z}'\) on the \(\textrm{p}\,\textrm{p}\rightarrow \tau \tau \) production cross-sections and kinematics, we extend our benchmark Lagrangian in Eq. (1) with further non-renormalizable terms involving the \(\textrm{Z}'\). Accordingly, we assume the \(\textrm{Z}'\) only couples to third-generation fermions. Our simplified model is thus extended by:

where the constants \(M_{\textrm{Z}^{\prime }}\), \(g_{Z^{\prime }}\), \(\zeta _q \), \(\zeta _t \), \(\zeta _b\), \(\zeta _{\ell }\), \(\zeta _\tau \), are model dependent.

We study two extreme cases for the \(\textrm{Z}'\) mass, following [100], namely \(M_{\textrm{Z}'} = \sqrt{\tfrac{1}{2}}M_U<M_U\) and \(M_{\textrm{Z}'} = \sqrt{\tfrac{3}{2}}M_U>M_U\). We also assume the \(\textrm{LQ}\) and \(\textrm{Z}'\) are uniquely coupled to left-handed currents, i.e. \(\zeta _q=\zeta _\ell = 1\) and \(\zeta _t=\zeta _b=\zeta _\tau =0\). With these definitions, Fig. 3 shows the effect of the \(\textrm{Z}'\) on the \(\tau \tau \) production cross-section, considering \(g_U = 1\), \(\beta _R=0\), and different \(g_{\textrm{Z}^{\prime }}\) couplings. On the top panel, the cross-sections corresponding to the cases where \(M_{\textrm{Z}'} = \sqrt{\tfrac{1}{2}}M_U\) are shown. As expected, the \(\tau \tau \) production cross-section for the inclusive case (i.e., \(g_{\textrm{Z}'} \ne 0\)) is larger than that for the \(\textrm{LQ}\)-only non-res process (\(g_{\textrm{Z}'} = 0\), depicted in blue). This effect increases with \(g_{\textrm{Z}'}\) and, within the evaluated values, can exceed the \(\textrm{LQ}\)-only cross-section by up to two orders of magnitude. In contrast, a more intricate behaviour can be seen in the bottom panel of Fig. 3, which corresponds to \(M_{\textrm{Z}'} = \sqrt{\tfrac{3}{2}}M_U\). Here, for low values of \(M_U\), a similar increase in the cross-section is observed. However, for higher values of \(M_U\), the inclusive \(\textrm{p}\,\textrm{p}\rightarrow \tau \tau \) cross-section is smaller than the \(\textrm{LQ}\)-only \(\tau \tau \) cross-section. This behaviour suggests the presence of a dominant destructive interference at high masses, leaving its imprint on the results.

\(\tau \tau \) cross-section as a function of the \(\textrm{LQ}\) mass for different values of \(g_U\) and \(g_{\textrm{Z}^{\prime }}\). The estimates are performed at \(\sqrt{s}=13 \, \textrm{TeV}\), \(\beta _R=0\), \(M_{\textrm{Z}^{\prime }} = \sqrt{1/2} M_{U}\) (top), and \(M_{\textrm{Z}^{\prime }} = \sqrt{3/2} M_{U}\) (bottom)

The relative kinematic interference (RKI), as a function of the reconstructed mass of two taus, for different \(\textrm{LQ}\) masses. The studies are performed assuming \(\sqrt{s}=13 \, \textrm{TeV}\), \(\beta _R=0\), \(g_U = 1.0\), \(g_{\textrm{Z}^{\prime }} =1.0\), \(M_{\textrm{Z}^{\prime }} = \sqrt{1/2} M_{U}\) (top), and \(M_{\textrm{Z}^{\prime }} = \sqrt{3/2} M_{U}\) (bottom)

In order to further illustrate the effect, Fig. 4 shows the relative kinematic interference (\(\textrm{RKI}\)) as a function of the reconstructed invariant mass \(m_{\tau \tau }\), for \(g_{\textrm{Z}^{\prime }} = 1\) and varying values of \(M_U\). The RKI parameter is defined as

where \(\sigma _{X}\) is the production cross-section arising due to contributions from X particles. For example, \(\sigma _{\textrm{LQ}+\textrm{Z}'}\) represents the inclusive cross-section where both virtual \(\textrm{LQ}\) and s-channel \(\textrm{Z}'\) exchange contribute. For both cases, we can observe the presence of deep valleys in the RKI curves when \(m_{\tau \tau }\rightarrow 0\), indicating destructive interference between the \(\textrm{LQ}\) and the \(\textrm{Z}'\) contributions. This interference generates a suppression of the differential cross-section for lower values of \(m_{\tau \tau }\) and, therefore, in the integrated cross-section.
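A bin-by-bin interference measure of this kind can be computed directly from histogrammed differential cross-sections. The sketch below assumes, purely for illustration, that the measure is the inclusive differential cross-section minus the incoherent sum of the \(\textrm{LQ}\)-only and \(\textrm{Z}'\)-only ones, normalised to the inclusive integrated cross-section. This normalisation, the function name, and the toy numbers are assumptions rather than values taken from the analysis.

```python
def relative_interference(dsig_incl, dsig_lq, dsig_zp, bin_widths):
    """Per-bin interference term, normalised to the inclusive cross-section.

    All inputs are equal-length lists: differential cross-sections per bin of
    m_tautau for the LQ+Z' (inclusive), LQ-only and Z'-only samples, and the
    corresponding bin widths. Negative values signal destructive interference.
    """
    sigma_incl = sum(d * w for d, w in zip(dsig_incl, bin_widths))
    return [
        (d_incl - (d_lq + d_zp)) / sigma_incl
        for d_incl, d_lq, d_zp in zip(dsig_incl, dsig_lq, dsig_zp)
    ]


# Toy, made-up spectra in arbitrary units, only to exercise the function.
widths = [1.0, 1.0]
inclusive = [1.0, 2.0]
lq_only = [1.0, 1.0]
zp_only = [0.5, 0.5]
print(relative_interference(inclusive, lq_only, zp_only, widths))
```

With this sign convention, a valley below zero in a given \(m_{\tau \tau }\) bin means the inclusive rate is smaller than the sum of the separate contributions there.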

The observed interference effects are consistent with detailed studies on resonant and non-res \(\textrm{p}\,\textrm{p}\rightarrow \textrm{t}{\bar{\textrm{t}}}\) production, performed in reference [101].

3 \(\textrm{LQ}\) search strategy and simulation

Our proposed analysis strategy utilizes single-\(\textrm{LQ}\) (i.e. \(\textrm{p}\,\textrm{p}\rightarrow \tau \,\textrm{LQ}\)), double-\(\textrm{LQ}\) (i.e. \(\textrm{p}\,\textrm{p}\rightarrow \textrm{LQ}\,\textrm{LQ}\)), and non-resonant \(\textrm{LQ}\) production (i.e. \(\textrm{p}\,\textrm{p}\rightarrow \tau \tau \)) as shown in Fig. 1. At leading order in \(\alpha _s\), since we focus on \(U_1\rightarrow \textrm{b}\,\tau \) decays, the s\(\textrm{LQ}\) process results in the \(\text {b}\tau \tau \) mode, the d\(\textrm{LQ}\) process results in the \(\text {bb}\tau \tau \) mode, and the non-res process results in the \(\tau \tau \) mode. Therefore, in all cases we obtain two \(\tau \) leptons, with either 0, 1, or 2 b jets. The \(\tau \) leptons decay to hadrons (\(\tau _{\text {h}}\)) or semi-leptonically to electrons or muons (\(\tau _{\ell }\), \(\ell = \text {e}\) or \(\mu \)). To this end, we study six final states: \(\tau _{\mathrm h} \tau _{\textrm{h}/\ell }\), \(\textrm{b}\,\tau _{\mathrm h} \tau _{\textrm{h}/\ell }\), and \(\textrm{bb}\,\tau _{\mathrm h} \tau _{\textrm{h}/\ell }\), which can be naively associated to non-res, s\(\textrm{LQ}\) and d\(\textrm{LQ}\) production, respectively. Nevertheless, experimentally it is possible for \(\textrm{b}\) jets to not be properly identified or reconstructed, leading, for instance, to a fraction of d\(\textrm{LQ}\) signal events falling into the \(\textrm{b}\,\tau _{\mathrm h} \tau _{\textrm{h}/\ell }\) and \(\tau _{\mathrm h}\tau _{\textrm{h}/\ell }\) categories. Similarly, soft jets can fake \(\textrm{b}\) jets, such that non-res processes can contribute to the \(\textrm{b}\,\tau _{\mathrm h} \tau _{\textrm{h}/\ell }\) and \(\textrm{bb}\,\tau _{\mathrm h} \tau _{\textrm{h}/\ell }\) final states. This kind of signal loss and mixing is taken into account in our analysis.Footnote 5

The contributions of signal and background events are estimated using Monte Carlo (MC) simulations. We implemented the \(U_1\) model from [51], adjusted to describe the Lagrangian in Eqs. (1) and (2), using FeynRules (v2.3.43) [102, 103]. The branching ratios and cross-sections have been calculated using MadGraph5_aMC (v3.1.0) [104, 105], the latter at leading order in \(\alpha _s\). The corresponding samples are generated considering \(\textrm{p}\,\textrm{p}\) collisions at \(\sqrt{s}=13 \, \textrm{TeV}\) and \(\sqrt{s}=13.6 \, \textrm{TeV}\). All samples are generated using the NNPDF3.0 NLO [106] set for parton distribution functions (PDFs) and using the full amplitude square SDE strategy for the phase-space optimization due to strong interference effects with the \(\textrm{Z}'\) boson. Parton level events are then interfaced with the PYTHIA (v8.2.44) [107] package to include parton fragmentation and hadronization processes, while DELPHES (v3.4.2) [108] is used to simulate detector effects, using the input card for the CMS detector geometric configurations, and for the performance of particle reconstruction and identification.

At parton level, jets and leptons are required to have a minimum transverse momentum (\(p_\mathrm{{T}}\)) of \(20 \, \textrm{GeV}\), while \(\textrm{b}\) jets are required to have a minimum \(p_\mathrm{{T}}\) of \(30 \, \textrm{GeV}\). Additionally, we constrain the pseudorapidity (\(\eta \)) to \(|\eta | < 2.5\) for \(\textrm{b}\) jets and leptons, and \(|\eta | < 5.0\) for jets. The production cross-sections shown in the bottom panel of Figs. 2 and 3 are obtained with the aforementioned selection criteria.

Table 1 shows the preliminary event selection criteria for each channel at analysis level. The channels are divided based on the multiplicity of \(\textrm{b}\) jets, \(N(\textrm{b})\), number of light leptons, \(N(\ell )\), number of hadronic tau leptons, \(N(\tau _{\mathrm h})\), and kinematic criteria based on \(\eta \), \(p_\mathrm{{T}}\) and spatial separation of particles in the detector volume \((\Delta R = \sqrt{(\Delta \eta )^{2} + (\Delta \phi )^{2}})\). The minimum \(p_\mathrm{{T}}\) thresholds for leptons are chosen following references [62, 63, 67], based on experimental constraints associated with trigger performance. Following reference [109], we use a flat identification efficiency for \(\textrm{b}\) jets of 70% across the entire \(p_\mathrm{{T}}\) spectrum, with a mis-identification rate of 1%. These values correspond to the “medium working point” of the CMS algorithm to identify \(\textrm{b}\) jets, known as DeepCSV. We also explored the “Loose” (“Tight”) working point using an efficiency of 85% (45%) and mis-identification rate of 10% (0.1%). The “medium working point” was selected as it gives the best signal significance for the analysis.

For the performance of \(\tau _{\text {h}}\) identification in DELPHES, we consider the latest technique described in [110], which is based on a deep neural network (i.e. DeepTau) that combines variables related to isolation and \(\tau \)-lepton lifetime as input to identify different \(\tau _{\text {h}}\) decay modes. Following [110], we consider three possible DeepTau “working points”: (i) the “Medium” working point of the algorithm, which gives a 70% \(\tau _{\text {h}}\)-tagging efficiency and 0.5% light-quark and gluon jet mis-identification rate; (ii) the “Tight” working point, which gives a 60% \(\tau _{\text {h}}\)-tagging efficiency and 0.2% light-quark and gluon jet mis-identification rate; and (iii) the “VTight” working point, which gives a 50% \(\tau _{\text {h}}\)-tagging efficiency and 0.1% light-quark and gluon jet mis-identification rate. Similar to the choice of \(\text {b}\)-tagging working point, the choice of \(\tau _{\text {h}}\)-tagging working point is determined through an optimization process which maximizes discovery reach. The “Medium” working point was ultimately shown to provide the best sensitivity and therefore chosen for this study. For muons (electrons), the assumed identification efficiency is 95% (85%), with a 0.3% (0.6%) mis-identification rate [111,112,113].

After applying the preliminary selection criteria, the primary sources of background are production of top quark pairs (\(\textrm{t}{\bar{\textrm{t}}}\)), and single-top quark processes (single \(\textrm{t}\)), followed by production of vector bosons with associated jets from initial or final state radiation (V+jets), and pair production of vector bosons (VV). The number of simulated MC events used for each sample is shown in Table 2.

We use two different sets of signal samples. The first set includes various \(\{ M_{U},g_{U} \}\) scenarios, for two different values of \(\beta _R\in \{ 0,-1 \}\). We generate signal samples for \(M_{U}\) values between 250 and 5000 GeV, in steps of 250 GeV. The considered \(g_{U}\) coupling values are between 0.25 and 3.5, in steps of 0.25. Although the signal cross-sections depend on both \(M_{U}\) and \(g_{U}\), the efficiencies of our selections depend only on \(M_{U}\) (for all practical purposes), since the decay widths are small relative to the \(\textrm{LQ}\) mass (\(\frac{\Gamma _{U}}{M_{U}} < 5\)%) and the reconstructed distributions are therefore more sensitive to experimental resolution than to the width. In total there are 280 \(\{ M_{U},g_{U},\beta _{R} \}\) scenarios simulated for this first set of signal samples, and for each of these scenarios two subsets of samples are generated, which are used separately for the training and testing of the machine learning algorithm. The second set of signal samples is used to evaluate interference effects between \(\textrm{LQ}\)s and the \(\textrm{Z}^{\prime }\) bosons in non-res production. Using benchmark values \(g_U=1.8\) and \(\beta _R=0\), we consider various \(\{ M_{U},g_{\textrm{Z}^{\prime }} \}\) scenarios for two different \(\textrm{Z}^{\prime }\) mass hypotheses, \(\left( M_{\textrm{Z}'}/M_U\right) ^2 \in \left\{ \tfrac{1}{2},\tfrac{3}{2} \right\} \). The \(M_{U}\) values vary between 500 and 5000 GeV, in steps of 250 GeV. The \(g_{\textrm{Z}^{\prime }}\) coupling values are between 0.25 and 3.5, in steps of 0.25. Therefore, in total there are 280 \(\{ M_{U},g_{\textrm{Z}^{\prime }},\left( M_{\textrm{Z}'}/M_U\right) ^2 \}\) scenarios simulated for this second set of signal samples, and for each of these scenarios a total of \(6.0 \times 10^{5}\) MC events are generated.

As noted previously, the simulated signal and background events are initially filtered using selections which are motivated by experimental constraints, such as the geometric constraints of the CMS detector, the typical kinematic thresholds for reconstruction of particle objects, and the available triggers. The events remaining after the preliminary event selection criteria are used to train and execute a BDT algorithm for each signal point in the \(\{ M_{U},g_{U} \}\) space, in order to maximize the probability to detect signal amongst background events. The BDT algorithm is implemented using the scikit-learn [114] and xgboost (XGB) [115] python libraries. We use the XGBClassifier class from the xgboost library, together with a 10-fold cross-validation using the scikit-learn method (GridCVFootnote 6) over a hyperparameter grid with 75, 125, 250, and 500 estimators; maximum depths of 3, 5, 7, and 9; and learning rates of 0.01, 0.1, 1, and 10. For the cost function, we utilize the default mean square error (MSE). Additionally, we use the tree method based on the approximate greedy algorithm (histogram-optimized), referred to as hist, with a uniform sample method. These choices allow us to maximize the detection capability of the BDT algorithm by carefully tuning the hyperparameters, selecting an appropriate cost function, and utilizing an optimized tree construction method.
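The size of this hyperparameter scan can be made explicit by enumerating the grid. The sketch below uses only the Python standard library; the dictionary keys follow the XGBClassifier keyword arguments `n_estimators`, `max_depth`, and `learning_rate`, and mapping the quantities in the text onto those names is an assumption. A dictionary of this shape is the kind of object scikit-learn's `GridSearchCV` accepts as its `param_grid` argument; the helper `expand_grid` is hypothetical and only mimics the enumeration.

```python
from itertools import product

# Grid values as quoted in the text; key names are assumed to match the
# XGBClassifier keyword arguments.
param_grid = {
    "n_estimators": [75, 125, 250, 500],
    "max_depth": [3, 5, 7, 9],
    "learning_rate": [0.01, 0.1, 1, 10],
}


def expand_grid(grid):
    """Return every combination of the grid as a list of keyword dictionaries."""
    keys = list(grid)
    return [dict(zip(keys, values)) for values in product(*(grid[k] for k in keys))]


combinations = expand_grid(param_grid)
n_folds = 10  # 10-fold cross-validation, as in the text

print(len(combinations), "hyperparameter combinations")
print(len(combinations) * n_folds, "cross-validation fits per scan")
```

Since one such scan is run per signal point and analysis channel, the number of individual fits grows quickly, which is one practical reason to prefer a histogram-based tree construction method.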

For each of the six analysis channels and \(\{ M_{U},g_{U} \}\) signal point, the binary XGB classifier was trained (tested) with 20% (80%) of the simulated events, for each signal and background MC sample. Over forty kinematic and topological variables were studied as input for the XGB. These included the momenta of b jets and \(\tau _{\text {h},\ell }\) candidates; both invariant and transverse masses of pairs of \(\tau \) objects and of \(\text {b}\,\tau \) combinations; angular differences between b jets, between \(\tau \) objects, and between the \(\tau _{\text {h},\ell }\) and b jets; and additional variables derived from the missing momentum in the events. After studying correlations between variables and their impact on the performance of the BDT, we found that only eight variables were necessary and responsible for the majority of the sensitivity of the analysis. The variable that provides the best signal to background separation is the scalar sum of the \(p_\mathrm{{T}}\) of the final state objects (\(\tau _{\mathrm h}\), \(\tau _{h/\ell }\), and \(\textrm{b}\) jets) and the missing transverse momentum, referred to as \(S_{\textrm{T}}^{MET}\):

\[S_{\textrm{T}}^{MET} = |\vec {p}_T^{\,miss}| + \sum _{i \in \{ \tau _{\mathrm h},\, \tau _{\text {h}/\ell },\, \textrm{b} \}} p_\mathrm{{T}}^{\,i}\]

The \(S_{\textrm{T}}^{MET}\) variable has been successfully used in \(\textrm{LQ}\) searches at the LHC, since it probes the mass scale of resonant particles involved in the production processes. Other relevant variables include the magnitude of the vectorial difference in \(p_\mathrm{{T}}\) between the two lepton candidates (\(|\Delta \vec {p}_T |_{\tau _{\text {h}} \tau _{\text {h}/\ell }}\)), the \(\Delta R_{\tau _{\text {h}} \tau _{\text {h}/\ell }}\) separation between them, the reconstructed dilepton mass \(m_{\tau _{\text {h}}\tau _{\text {h}/\ell }}\), and the product of their electric charges (\(Q_{\tau _{\text {h}}} \times Q_{\tau _{\text {h}/\ell }}\)). We also use the \(|\Delta \vec {p}_T|\) between the \(\tau _{\mathrm h}\) candidate and \(\vec {p}_T^{\,miss}\), and (if applicable) the \(|\Delta \vec {p}_T|\) between the \(\tau _{\text {h}}\) candidate and the leading \(\textrm{b}\) jet. For the final states including two \(\tau _{\text {h}}\) candidates, the one with the highest \(p_\mathrm{{T}}\) is used.
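
The variables listed above can be computed from the transverse momentum, pseudorapidity, and azimuthal angle of each reconstructed object. The following is a minimal sketch under that assumption. The `Obj` container, the function names, and the numerical values in the usage lines are hypothetical and chosen only for illustration, and the objects are treated as massless when computing the invariant mass.

```python
import math
from typing import NamedTuple, Sequence


class Obj(NamedTuple):
    """Hypothetical container for a reconstructed object (GeV, radians)."""
    pt: float
    eta: float
    phi: float


def delta_phi(a: Obj, b: Obj) -> float:
    """Azimuthal separation wrapped into [-pi, pi]."""
    d = a.phi - b.phi
    return math.atan2(math.sin(d), math.cos(d))


def delta_r(a: Obj, b: Obj) -> float:
    """Angular separation sqrt(d_eta^2 + d_phi^2)."""
    return math.hypot(a.eta - b.eta, delta_phi(a, b))


def delta_pt_vec(a: Obj, b: Obj) -> float:
    """Magnitude of the vector difference of the transverse momenta."""
    dx = a.pt * math.cos(a.phi) - b.pt * math.cos(b.phi)
    dy = a.pt * math.sin(a.phi) - b.pt * math.sin(b.phi)
    return math.hypot(dx, dy)


def inv_mass(a: Obj, b: Obj) -> float:
    """Invariant mass of two objects, both treated as massless."""
    m2 = 2.0 * a.pt * b.pt * (math.cosh(a.eta - b.eta) - math.cos(delta_phi(a, b)))
    return math.sqrt(max(m2, 0.0))


def st_met(objects: Sequence[Obj], met: float) -> float:
    """Scalar sum of the object pT values plus the missing transverse momentum."""
    return sum(o.pt for o in objects) + met


# Illustrative event: back-to-back tau candidates, one b jet, 100 GeV of MET.
tau_h = Obj(300.0, 0.0, 0.0)
tau_l = Obj(200.0, 0.0, math.pi)
b_jet = Obj(400.0, 0.5, 1.0)

print(st_met([tau_h, tau_l, b_jet], met=100.0))  # scalar sum, 1000.0 GeV here
print(delta_r(tau_h, tau_l))                     # pi for back-to-back objects
print(delta_pt_vec(tau_h, b_jet))                # |Delta pT| between tau_h and b jet
print(inv_mass(tau_h, tau_l))                    # 2 * sqrt(300 * 200) GeV
```

For the back-to-back pair in this example the invariant mass is close to the sum of the two transverse momenta, in line with the scaling of the ditau mass discussed for the signal.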

\(S_{\textrm{T}}^{MET}\), \(m_{\tau _{\mathrm h} \tau _{\ell }}\), \(\Delta R_{\tau _{\mathrm h}\tau _{\ell }}\), \(|\Delta \vec {p}_T|_{b \tau _{\mathrm h}}\) signal and background distributions for the \(b\tau _h\tau _\ell \) channel. The signal distributions are generated for a benchmark sample with \(\textrm{LQ}\) mass of \(1.5 \, \textrm{TeV}\) maximally coupled to right-handed currents. The combined distribution (shown as a stacked histogram) is the sum of the distributions, correctly weighted according to their respective cross-sections, assuming a coupling \(g_U = 1.8\)

Figure 5 shows some relevant topological distributions, including \(S_{\textrm{T}}^{MET}\) on the top, for the \(\textrm{b}\, \tau _{\mathrm h} \tau _{\ell }\) category. In the Figure we include all signal production modes to this channel, with each component weighted with respect to its contribution to the combined signal. The combined signal distribution is normalised to unity. We also show all background processes contributing to this channel, each of them individually normalised to unity. We find that the combined signal is dominated by s\(\textrm{LQ}\) production for large values of \(S_{\textrm{T}}^{MET}\), while non-res production dominates for small \(S_{\textrm{T}}^{MET}\). Interestingly, the backgrounds also sit at low \(S_{\textrm{T}}^{MET}\) values, since \(S_{\textrm{T}}^{MET}\) is driven by the mass scale of the SM particles being produced, in this case top quarks and Z/W bosons. This suggests that the s\(\textrm{LQ}\) and d\(\textrm{LQ}\) signals can indeed be separated from the SM background. As expected, the \(S_{\textrm{T}}^{MET}\) s\(\textrm{LQ}\) and d\(\textrm{LQ}\) signal distributions have a mean near \(M_U\), representative of resonant production, and a broad width as expected for large mass \(M_{U}\) hypotheses when information about the z-components of the momenta of objects is not utilised in the \(S_{\textrm{T}}^{MET}\) calculation.

Figure 5 (second from the top) shows the reconstructed mass of the ditau system, for the \(\textrm{b}\tau _{\text {h}}\tau _{\ell }\) search channel. Since the two \(\tau \) candidates in signal events arise from different production vertices (e.g., each \(\tau \) candidate in d\(\textrm{LQ}\) production comes from a different \(\textrm{LQ}\) decay chain), the ditau mass distribution for signal scales as \(m_{\tau _{\text {h}}\tau _{\ell }} \sim p_\mathrm{{T}}(\tau _{\text {h}}) + p_\mathrm{{T}}(\tau _{\ell })\), and thus has a tail which depends on \(M_{U}\) and sits above the expected SM spectrum. On the other hand, the SM \(m_{\tau _{\text {h}}\tau _{\ell }}\) distributions sit near \(m_{\text {Z/W}}\) since the \(\tau \) candidates in SM events arise from \(\textrm{Z}/\textrm{W}\) decays.

Figure 5 (third from the top) shows the \(\Delta R_{\tau _{\text {h}}\tau _{\ell }}\) distribution for the \(\text {b}\tau _{\text {h}}\tau _{\ell }\) channel. In the case of the \(\textrm{p}\,\textrm{p}\rightarrow \tau \tau \) non-res signal distribution, the two \(\tau \) leptons must be back-to-back to conserve momentum. Therefore, the visible \(\tau \) candidates, \(\tau _{\text {h}}\) and \(\tau _{\ell }\), give rise to a \(\Delta R_{\tau _{\text {h}}\tau _{\ell }}\) distribution that peaks near \(\pi \) radians. In the case of s\(\textrm{LQ}\) production, although the \(\textrm{LQ}\) and associated \(\tau \) candidate must be back-to-back, the second \(\tau \) candidate arising directly from the decay of the \(\textrm{LQ}\) does not necessarily move along the direction of the \(\textrm{LQ}\) (since the \(\textrm{LQ}\) also decays to a b quark). As a result, the \(\Delta R_{\tau _{\text {h}}\tau _{\ell }}\) distribution for the s\(\textrm{LQ}\) signal process is broader and has a mean below \(\pi \) radians. On the other hand, the \(\tau _{\text {h}}\) candidate in \(\textrm{t}{\bar{\textrm{t}}}\) events is often a jet being misidentified as a genuine \(\tau _{\text {h}}\). When this occurs, the fake \(\tau _{\text {h}}\) candidate can arise from the same top quark decay chain as the \(\tau _{\ell }\) candidate, thus giving rise to small \(\Delta R_{\tau _{\text {h}}\tau _{\ell }}\) values. This difference between the signal and background distributions gives the ML algorithm a useful handle for separating signal from background processes.

As noted above, the \(|\Delta \vec {p}_{T}|\) distribution between the visible \(\tau \) candidates and the b-quark jets is an important variable to help the BDT distinguish between signal and background processes. The discriminating power can be seen in Fig. 5 (bottom), which shows the \(|\Delta \vec {p}_{T}|\) between the \(\tau _{\text {h}}\) and b-jet candidate of the \(\text {b}\tau _{\text {h}}\tau _{\ell }\) channel. In the case of d\(\textrm{LQ}\) production, the b quarks and \(\tau \) leptons from the \(\textrm{LQ}\rightarrow \text {b}\tau \) decay acquire transverse momentum of \(p_{T} \sim M_{U}/2\). However, when the \(\tau \) lepton decays hadronically (i.e. \(\tau \rightarrow \tau _{\text {h}}\nu \)), a large fraction of the momentum is lost to the neutrino. Therefore, the \(|\Delta \vec {p}_{T}|_{\text {b}\tau _{\text {h}}}\) distribution for the d\(\textrm{LQ}\) (and s\(\textrm{LQ}\)) process peaks below \(M_{U}\)/2. On the other hand, for a background process such as V+jets, the b jet arises due to initial state radiation, and thus must balance the momentum of the associated vector boson (i.e. \(p_{T}(\text {b}) \sim p_{T}(\text {V}) \sim m_{\text {V}}\)). Since the visible \(\tau \) candidate is typically produced from the V boson decay chain, its momentum (on average) is approximately \(p_{T}(\tau _{\text {h}}) \sim p_{T}(\text {V})/4 \sim m_{\text {V}}/4\). Therefore, to first order, the \(|\Delta \vec {p}_{T}|\) distribution for the V+jets background is expected to peak below \(m_{\text {V}}\).

Let us turn to the results of the \(\textrm{b}\tau _{\mathrm h}\tau _\ell \) BDT classifier, whose output is shown in Fig. 6 for the different signal production modes and backgrounds. Similar to Fig. 5, the distribution for each individual signal production mode is weighted with respect to its contribution to the combined signal. The background distributions and combined signal distribution are normalized to an area under the curve of unity. Figure 6 shows the XGB distributions for a signal benchmark point with \(M_{U} = 1.5\) TeV, \(g_{U} = 1.8\), and \(\beta _{R} = -1\). The XGB output is a value between 0 and 1, which quantifies the likelihood that an event is either signal-like (XGB output near 1) or background-like (XGB output near 0). We see that the presence of the s\(\textrm{LQ}\) and d\(\textrm{LQ}\) production modes is observed as an enhancement near an XGB output of unity, while the backgrounds dominate over the low end of the XGB output spectrum, especially near zero. In fact, over eighty percent of the sLQ and dLQ distributions reside in the last two bins, XGB output greater than 0.96, while more than sixty percent of the backgrounds fall in the first two bins, XGB output less than 0.04. It is also interesting to note that in comparison to the sLQ and dLQ distributions in Fig. 6, the non-res distribution is broader and not as narrowly peaked near an XGB output of 1, which is expected due to the differences in kinematics described above. Overall, if we focus on the last bin in this distribution, we find approximately 0.2% of the background, in contrast to 22% of the non-res, 78% of the sLQ, and 91% of the dLQ signal distributions. These numbers highlight the effectiveness of the XGB output in reducing the background in the region where the signal is expected.

The output signal and background distributions of the XGB classifier, normalised to their cross section times pre-selection efficiency times luminosity, are used to perform a profile binned likelihood statistical test in order to determine the expected signal significance. The estimation is performed using the RooFit [116] package, following the same methodology as in Refs. [117,118,119,120,121,122,123,124,125,126,127,128,129,130,131,132]. The value of the significance (\(Z_{sig}\)) is measured using the probability to obtain the same outcome from the test statistic in the background-only hypothesis, with respect to the signal plus background hypothesis. This allows for the determination of the local p-value and thus the calculation of the signal significance, which corresponds to the point where the integral of a Gaussian distribution between \(Z_{sig}\) and \(\infty \) results in a value equal to the local p-value.
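
The last step, the conversion between a local p-value and a Gaussian significance, can be illustrated with the Python standard library. This is a minimal sketch of the one-sided Gaussian tail convention described above; the function names are chosen here for illustration, and the sketch does not reproduce the profile likelihood fit itself, which is performed with RooFit.

```python
import math
from statistics import NormalDist

_STD_NORMAL = NormalDist()  # zero mean, unit width


def p_value_from_z(z: float) -> float:
    """One-sided Gaussian tail probability above z."""
    return 0.5 * math.erfc(z / math.sqrt(2.0))


def z_from_p_value(p: float) -> float:
    """Significance Z such that the Gaussian tail above Z equals p."""
    return _STD_NORMAL.inv_cdf(1.0 - p)


# Round trip for the conventional evidence and discovery thresholds.
for z in (3.0, 5.0):
    p = p_value_from_z(z)
    print(f"Z = {z:.1f} -> p = {p:.3e} -> Z = {z_from_p_value(p):.3f}")
```

The complementary error function is used for the tail probability because it stays accurate for the very small p-values associated with large significances.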

Systematic uncertainties are incorporated as nuisance parameters, considering log-normal priors for normalization and Gaussian priors for shape uncertainties. Our consideration of systematic uncertainties includes both experimental and theoretical effects, focusing on the dominant sources of uncertainty. Following [133], we consider a 3% systematic uncertainty on the measurement of the integrated luminosity at the LHC. A 5% uncertainty arises due to the choice of the parton distribution function used for the MC production, following the PDF4LHC prescription [134]. The chosen PDF set only has an effect on the overall expected signal and background yields, but the effect on the shape of the XGB output distribution is negligible. Reference [110] reports a systematic uncertainty of 2–5%, depending on the \(p_{\text {T}}\) and \(\eta \) of the \(\tau _{\text {h}}\) candidate. Therefore, we utilize a conservative 5% uncertainty per \(\tau _{\text {h}}\) candidate, independent of \(p_{\text {T}}\) and \(\eta \), which is correlated between signal and background processes with genuine \(\tau _{\text {h}}\) candidates, and correlated across XGB bins for each process. We assume a 5% \(\tau _{\text {h}}\) energy scale uncertainty, independent of \(p_{\text {T}}\) and \(\eta \), following the CMS measurements described in [110]. Finally, we assume a conservative 3% uncertainty per b-jet candidate, following reference [135], and an additional 10% uncertainty in all the background predictions to account for possible mismodeling by the simulated samples. The uncertainties on the background estimates are typically derived from collision data using dedicated control samples that have negligible signal contamination and are enriched with events from the specific targeted background. The systematic uncertainties on the background estimates are treated as uncorrelated between background processes.
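
To make the role of the normalization nuisance parameters concrete, the sketch below applies them to a single yield. It assumes the widely used parameterization in which a relative uncertainty \(\sigma \) rescales the yield by \((1+\sigma )^{\theta }\), with \(\theta \) a unit-Gaussian nuisance parameter; this convention, the dictionary keys, and the nominal yield are assumptions made for illustration and are not taken from the analysis.

```python
def lognormal_factor(rel_unc: float, theta: float) -> float:
    """Multiplicative yield factor kappa**theta with kappa = 1 + rel_unc."""
    return (1.0 + rel_unc) ** theta


# Normalization uncertainties quoted in the text for a background event with
# one tau_h candidate and one b jet. The dictionary keys are illustrative.
background_unc = {
    "luminosity": 0.03,
    "pdf": 0.05,
    "tau_h_id": 0.05,
    "b_jet": 0.03,
    "bkg_modeling": 0.10,
}


def scaled_yield(nominal: float, unc: dict, thetas: dict) -> float:
    """Apply every nuisance parameter to a nominal yield.

    Nuisance parameters missing from `thetas` stay at their nominal value 0.
    """
    result = nominal
    for name, rel_unc in unc.items():
        result *= lognormal_factor(rel_unc, thetas.get(name, 0.0))
    return result


nominal = 100.0  # hypothetical background yield in one XGB bin
print(scaled_yield(nominal, background_unc, {}))                     # no shift
print(scaled_yield(nominal, background_unc, {"bkg_modeling": 1.0}))  # +1 sigma on one source
print(scaled_yield(nominal, background_unc, {"luminosity": -1.0}))   # -1 sigma on another
```

A useful property of this form is that the yield stays positive for any value of \(\theta \), which is the usual motivation for log-normal rather than Gaussian constraints on normalizations.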

Signal significance for different coupling scenarios and \(\textrm{LQ}\) masses, without right-handed currents, using the combination of all search channels. The results pertaining to s\(\textrm{LQ}\), d\(\textrm{LQ}\) and non-res production are displayed respectively from the top. These results are for \(\sqrt{s} = 13 \, \textrm{TeV}\) and \(137 \, \textrm{fb}^{-1}\)

4 Results

The expected signal significance for s\(\textrm{LQ}\), d\(\textrm{LQ}\) and non-res production, and their combination, is presented in Fig. 7. Here, the significance is shown as a heat map in a two dimensional plane of \(g_U\) versus \(M_U\), considering exclusive couplings to left-handed currents, i.e. \(\textrm{BR}(\textrm{LQ}\rightarrow \textrm{b}\,\tau )=\tfrac{1}{2}\). The dashed lines show the contours of constant signal significance. The \(1.69 \sigma \) contour represents exclusion at 95% confidence level, and the 3-5\(\sigma \) contours represent potential discovery. The grey band defines the set of \(\{ M_{U},g_{U} \}\) values that can explain the \(\textrm{B}\)-meson anomalies, \(C_U\sim 0.01\) for this scenario. The estimates are performed under the conditions for the second run, RUN-II, of the LHC (\(\sqrt{s} = 13 \, \textrm{TeV}\) and \(L = 137 \, \textrm{fb}^{-1}\)). We find that the d\(\textrm{LQ}\) interpretation plot (Fig. 7 second from the top) does not depend on \(g_{U}\), which is expected due to d\(\textrm{LQ}\) production arising exclusively from interactions with gluons. For this reason, the d\(\textrm{LQ}\) production process provides the best mode for discovery when \(g_{U}\) is small. On the other hand, the non-res channel is more sensitive to changes in the coupling parameter \(g_U\), as its production cross-section depends on \(g_{U}^{4}\). Therefore, the non-res production process provides the best mode for discovery when \(g_{U}\) is large. These results confirm the expectations from previous analyses (see for instance [49]), in the sense that the d\(\textrm{LQ}\) and non-res processes complement each other nicely at low and high \(g_{U}\) scenarios. 
The s\(\textrm{LQ}\) channel combines features from both the d\(\textrm{LQ}\) and non-res channels, in principle making it an interesting option to explore different scenarios and gain a better understanding of \(\textrm{LQ}\) properties, but the evolution of the signal significance in the full phase space is more complicated as it involves resonant \(\textrm{LQ}\) production with a cross-section that depends non-trivially on \(M_{U}\), \(g_{U}\), and the \(\textrm{LQ}\) coupling to gluons. However, Fig. 7 shows that the s\(\textrm{LQ}\) production process can provide complementary and competitive sensitivity to the non-res and d\(\textrm{LQ}\) processes, in certain parts of the phase space.
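
The coupling dependence described above can be made explicit with a simple scaling relation. This is a schematic sketch that keeps only the leading dependence stated in the text and neglects any interference with SM processes; \(g_0\) denotes an arbitrary reference coupling introduced here for illustration.

\[\sigma _{\text {non-res}}(g_U) \simeq \sigma _{\text {non-res}}(g_0) \left( \frac{g_U}{g_0} \right) ^{4}, \qquad \sigma _{\text {dLQ}}(g_U) \simeq \sigma _{\text {dLQ}}(g_0)\]

For instance, doubling \(g_U\) at fixed \(M_U\) increases the non-res rate by a factor of \(2^4 = 16\) while leaving the d\(\textrm{LQ}\) rate unchanged, which is why the two modes dominate at opposite ends of the coupling range.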

The top (bottom) panel shows the signal significance comparison with the ATLAS [70] (CMS and ATLAS [64, 71]) background-only hypothesis, for the combination of all channels, with coupling uniquely to left-handed (right-handed) currents. The estimates are performed at \(\sqrt{s} = 13 \, \textrm{TeV}\) and \(137 \, \textrm{fb}^{-1}\)

The top panel of Fig. 8 presents the sensitivity of all signal production processes combined, and compares our expected exclusion region with the latest one from the ATLAS Collaboration [70]. The comparison suggests that our proposed analysis strategy provides better sensitivity than current methods being carried out at ATLAS, especially at large values of \(g_U\). In particular, we find that with the \(\text {pp}\) data already available from RUN-II, our expected exclusion curves begin to probe solutions to the B-anomalies for \(\textrm{LQ}\) masses up to \(2.25\, \textrm{TeV}\).

The bottom panel of Fig. 8 shows the expected signal significance considering \(\textrm{BR}(\textrm{LQ}\rightarrow \textrm{b}\,\tau ) = 1\), in order to compare our analysis with the corresponding results from the CMS [64] and ATLAS [71] Collaborations. Let us emphasize again that \(\textrm{BR}(\textrm{LQ}\rightarrow \textrm{b}\,\tau )\) depends on \(\beta _R\), as illustrated in the top panel of Fig. 2. Thus, although the \(\textrm{BR}(\textrm{LQ}\rightarrow \textrm{b}\,\tau ) = 1\) scenario is a possible physical case, it does not solve the observed anomalies in the \(R_{D^{(*)}}\) ratios, as it corresponds to the case where LQs couple exclusively to right-handed currents.

With this in mind, the scenario studied by CMS in [64] considers couplings only to left-handed currents, artificially setting the condition \(\textrm{BR}(\textrm{LQ}\rightarrow \textrm{b}\,\tau ) = 1\). In order to compare, we scale the efficiency\(\times \)acceptance of our selection criteria for \(\beta _R=0\), by a factor of 2.0 for s\(\textrm{LQ}\) and 4.0 for d\(\textrm{LQ}\). According to Fig. 8, our ML approach again improves the separation of signal and background, with the potential to extend the exclusion regions (1.69 \(\sigma \)) beyond those of CMS. In the bottom panel of the figure we have also included a similar exclusion by ATLAS [71]. However, since ATLAS only considers d\(\textrm{LQ}\) production in the analysis, the results are not entirely comparable, and are included only for reference.
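
The factors of 2.0 and 4.0 are consistent with a simple counting of \(\textrm{LQ}\rightarrow \textrm{b}\,\tau \) decays per event. As a sketch, assuming that the selected s\(\textrm{LQ}\) (d\(\textrm{LQ}\)) yield \(N\) scales with one (two) powers of the branching ratio, since one (two) \(\textrm{LQ}\) decays are involved:

\[\frac{N_{\text {sLQ}}(\textrm{BR}=1)}{N_{\text {sLQ}}(\textrm{BR}=\tfrac{1}{2})} = \frac{1}{1/2} = 2, \qquad \frac{N_{\text {dLQ}}(\textrm{BR}=1)}{N_{\text {dLQ}}(\textrm{BR}=\tfrac{1}{2})} = \left( \frac{1}{1/2} \right) ^{2} = 4\]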

Signal significance for different coupling scenarios and \(\textrm{LQ}\) masses for all channels. This plot summarizes our results with \(\beta _{R} = 0\) (without right-handed currents) and \(\beta _{R} = -1\) (maximally coupled to right-handed currents). The estimates are performed at \(\sqrt{s} = 13 \, \textrm{TeV}\) and \(137 \, \textrm{fb}^{-1}\)

We now turn to the role of \(\beta _R\), and our capacity to probe the regions solving the B-meson anomalies. Figure 9 shows the maximum significance contours, under LHC RUN-II conditions, for the different \(\textrm{LQ}\) production mechanisms and their combination, considering scenarios with only left-handed currents (\(\beta _R=0\), top) and with maximal right-handed currents (\(\beta _R=-1\), bottom). We find a noticeable improvement in signal significance in all channels when taking \(\beta _R=-1\), as is expected from the increase in \(\textrm{BR}(\textrm{LQ}\rightarrow \textrm{b}\,\tau )\) and in the production cross-sections (see Fig. 2). However, the region solving the B-meson anomalies also changes, preferring lower values of \(g_U\), such that in both cases we find ourselves just starting to probe this region at large \(M_U\).

Signal significance for different coupling scenarios and \(\textrm{LQ}\) masses, considering the case without coupling to right-handed currents \(\textrm{BR}(\textrm{LQ}\rightarrow \textrm{b}\,\tau ) = \tfrac{1}{2}\), the case maximally coupled to right- and left-handed currents \(\textrm{BR}(\textrm{LQ}\rightarrow b\,\tau ) = \tfrac{2}{3}\), and the case uniquely coupled to right-handed currents \(\textrm{BR}(\textrm{LQ}\rightarrow \textrm{b}\,\tau ) = 1\). The estimates are performed at \(\sqrt{s} = 13 \, \textrm{TeV}\) and \(137 \, \textrm{fb}^{-1}\)

The combined significance contours for the different \(\textrm{BR}\) scenarios that have been considered are presented in Fig. 10. These contours illustrate the regions of exclusion for the three cases of interest, namely exclusive left-handed currents (\(\textrm{BR}(\textrm{LQ}\rightarrow \textrm{b}\,\tau ) = \tfrac{1}{2}\), \(\beta _R=0\)), maximal left and right couplings (\(\textrm{BR}(\textrm{LQ}\rightarrow b\,\tau ) = \tfrac{2}{3}\), \(\beta _R=-1\)), and exclusive right-handed currents (\(\textrm{BR}(\textrm{LQ}\rightarrow \textrm{b}\,\tau ) = 1\), \(g_U\rightarrow 0,\,g_U\beta _R=1\)). For small \(g_U\), we find that the exclusive right-handed scenario is most sensitive, while the exclusive left-handed case is the worst. The reason for this is that this region is excluded principally by d\(\textrm{LQ}\) production, such that having the largest branching ratio is crucial in order to have a large number of events. For larger couplings, both exclusive scenarios end up having similar exclusion regions, with the \(\beta _R=-1\) case being significantly more sensitive. The reason in this case is that the exclusion is dominated by non-res, which has a much larger production cross-section if both currents are turned on.

In order to finalise our analysis of the LQ-only model, we show in Fig. 11 the expected combined significance in the relatively near future. For this, considering \(\sqrt{s} = 13.6 \, \textrm{TeV}\), we show contours for the sensitivity corresponding to integrated luminosities of \(137 \, \textrm{fb}^{-1}\), \(300 \, \textrm{fb}^{-1}\), and \(3000 \, \textrm{fb}^{-1}\), for scenarios with only left-handed currents (top) and with maximal coupling to right-handed currents (bottom). Note that for \(\beta _R = 0\) (\(\beta _R = -1\)), couplings \(g_U\) close to 3.18 (1.85) and \(M_U = 5.0 \, \textrm{TeV}\) can be excluded with \(1.69 \sigma \) significance for the high luminosity LHC era, allowing us to probe practically the entirety of the B-meson anomaly favored region. Note that the background yields for the high luminosity LHC might be larger due to pileup effects. Nevertheless, as mentioned in Sect. 3, we have included a conservative 10% systematic uncertainty associated with possible fluctuations in the background estimations. Although effects from larger pileup might be significant, they can be mitigated by improvements in the algorithms for particle reconstruction and identification, and in the data-analysis techniques.

As noted in the Introduction, non-res production can be significantly affected by the presence of a companion \(\textrm{Z}'\), which provides additional s-channel diagrams that add to the total cross-section and can interfere destructively with the \(\textrm{LQ}\) t-channel process (see Figs. 3 and 4). From our previous results, we see that non-res is always important in determining the exclusion region, particularly at large \(M_U\) and \(g_U\), meaning it is crucial to understand how this role is affected by the presence of a \(\textrm{Z}'\) with similar mass.

Change in the non-res signal significance for different \(Z^{\prime }\) coupling scenarios and \(\textrm{LQ}\) masses. The estimates are performed at \(\sqrt{s}=13.0 \) TeV, \(\beta _R=0\), \(g_U = 1.8\), \(M_{\textrm{Z}^{\prime }} = \sqrt{1/2} M_{U}\) (top), and \(M_{\textrm{Z}^{\prime }} = \sqrt{3/2} M_{U}\) (bottom)

The change in the non-res signal significance due to this interference effect with the \(\textrm{Z}^{\prime }\) boson is shown in Fig. 12. We consider two opposite cases for the \(\textrm{Z}'\) mass: \(M^2_{\textrm{Z}'} = M^2_U/2\) (top) and \(M^2_{\textrm{Z}'} = 3\,M^2_U/2\) (bottom). Our results are shown on the \(g_{\textrm{Z}'}\) - \(M_U\) plane, for a fixed \(g_U=1.8\) and \(\beta _R=0\). For the \(M^2_{\textrm{Z}'} = M^2_U/2\) scenario, there is an overall increase in the total cross-section, with a larger \(g_{\textrm{Z}'}\) implying a larger sensitivity. This means that our ability to probe smaller values of \(g_U\) could be enhanced, as a given observation would be reproduced with both a specific \(g_U\) and vanishing \(g_{\textrm{Z}'}\), or a smaller \(g_U\) with large \(g_{\textrm{Z}'}\). Thus, for a large enough \(g_{\textrm{Z}'}\), it could be possible to enhance non-res to the point that the entire region favoured by \(\textrm{B}\)-anomalies could be ruled out. In contrast, for \(M^2_{\textrm{Z}'} = 3\,M^2_U/2\) the cross-section is strongly affected by the large destructive interference, such that a larger \(g_{\textrm{Z}'}\) does not necessarily imply an increase in sensitivity. In fact, as can be seen in the bottom panel, for large \(M_U\) the significance is reduced as \(g_{\textrm{Z}'}\) increases, leading to the opposite conclusion, namely, that a large \(g_{\textrm{Z}'}\) could reduce the effectiveness of non-res.
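
The two behaviours can be understood schematically from the structure of the squared amplitude. The expression below is a generic sketch, with \(\mathcal {A}_{\textrm{LQ}}\) and \(\mathcal {A}_{\textrm{Z}'}\) introduced here as shorthand for the t-channel \(\textrm{LQ}\) and s-channel \(\textrm{Z}'\) amplitudes; it omits the SM contribution and is not the full expression used in the simulation.

\[|\mathcal {A}_{\textrm{LQ}} + \mathcal {A}_{\textrm{Z}'}|^2 = |\mathcal {A}_{\textrm{LQ}}|^2 + |\mathcal {A}_{\textrm{Z}'}|^2 + 2\, \textrm{Re}\left( \mathcal {A}_{\textrm{LQ}}\, \mathcal {A}_{\textrm{Z}'}^{*} \right) \]

Assuming both vertices of each diagram carry the same coupling, the three terms scale as \(g_U^4\), \(g_{\textrm{Z}'}^4\), and \(g_U^2\, g_{\textrm{Z}'}^2\), respectively. A negative interference term can therefore offset the gain from the pure \(\textrm{Z}'\) term over part of the parameter space, so that increasing \(g_{\textrm{Z}'}\) does not always increase the rate.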

The impact of the above can be seen in Fig. 13, which shows our previous sensitivity curves on the \(M_U-g_U\) plane, but this time with a \(\textrm{Z}'\) contribution to non-res. We use the same values of \(M_{\textrm{Z}'}\) as before, but fix \(g_{\textrm{Z}'}=3.5\). For smaller \(M_{\textrm{Z}'}\) (top), the non-res contribution is enhanced so much that both s\(\textrm{LQ}\) and d\(\textrm{LQ}\) play no role whatsoever in determining the exclusion region. We find that, for small \(g_U\), the sensitivity is dominated by \(\textrm{Z}'\) production such that, since \(M_U\) is related to \(M_{\textrm{Z}'}\), \(\textrm{LQ}\) masses up to \(\sim 3\, \textrm{TeV}\) are excluded. This bound is slightly relaxed for larger values of \(g_U\), which is attributed to destructive interference effects due to an increased \(\textrm{LQ}\) contribution.

Signal significance for different coupling scenarios and \(\textrm{LQ}\) masses, for all channels, with an additional \(\textrm{Z}'\) contribution to non-res production. We set \(\beta _{R} = 0\) and \(g_{\textrm{Z}'}=3.5\), taking \(M^2_{\textrm{Z}'}\) equal to \(M_U^2/2\) (\(3M_U^2/2\)) on the top (bottom) panel

The bottom panel of Fig. 13 shows the case where \(M_{\textrm{Z}'}\) is larger than \(M_U\). As expected from our previous discussion, the behaviour and impact of non-res is modified. For small \(g_U\), we again have the pure \(\textrm{Z}'\) production dominating the non-res cross-section, leading to a null sensitivity on \(g_U\), similar to what happens in dLQ. In contrast, for very large \(g_U\), we find that the pure \(\textrm{LQ}\) non-res production is the one that dominates, and we recover sensitivity regions with a slope similar to those shown in Figs. 7, 8, 9, 10 and 11, shifted towards larger values of \(g_U\). For intermediate values of this coupling, the destructive interference again has an important effect, twisting the exclusion region slightly towards the left. Still, even in this case, we find that s\(\textrm{LQ}\) plays a marginal role in defining the combined exclusion region, and that the final result again depends primarily on d\(\textrm{LQ}\) and non-res production.

5 Discussion and conclusions

Experimental searches for \(\textrm{LQ}\)s with preferential couplings to third generation fermions are currently of great interest due to their potential to explain observed tensions in the \(R_{D}\) and \(R_{D^{*}}\) decay ratios of \(\textrm{B}\) mesons with respect to the SM predictions. Although the LHC has a broad physics program on searches for \(\textrm{LQ}\)s, it is very important to consider the impact of each search within a wide range of different theoretical assumptions within a specific model. In addition, in order to improve the sensitivity to detect possible signs of physics beyond the SM, it is also important to strongly consider new computational techniques based on machine learning (ML). Therefore, we have studied the production of \(U_1\) \(\textrm{LQ}\)s with preferential couplings to third generation fermions, considering different couplings, masses and chiral currents. These studies have been performed considering \(\textrm{p}\,\textrm{p}\) collisions at \(\sqrt{s} = 13\, \textrm{TeV}\) and \(13.6\, \textrm{TeV}\) and different luminosity scenarios, including projections for the high luminosity LHC. A ML algorithm based on boosted decision trees is used to maximize the signal significance. The signal to background discrimination output of the algorithm is taken as input to a profile binned-likelihood test to extract the expected signal significance.

The expected signal significance for s\(\textrm{LQ}\), d\(\textrm{LQ}\) and non-res production, and their combination, is presented as contours on a two dimensional plane of \(g_U\) versus \(M_U\). We present results for the case of exclusive couplings to left-handed, mixed, and exclusive right-handed currents. For the first two, the region of the phase space that could explain the \(\textrm{B}\) meson anomalies is also presented. We confirm the findings of previous works that the largest production cross-section and best overall significance come from the combination of d\(\textrm{LQ}\) and non-res production channels. We also find that the sensitivity to probe the parameter space of the model is highly dependent on the chirality of the couplings. Nevertheless, the region solving the \(\textrm{B}\)-meson anomalies also changes with each choice, such that in all evaluated cases we find ourselves just starting to probe this region at large \(M_U\).

Our studies compare our exclusion regions with respect to the latest reported results from the ATLAS and CMS Collaborations. The comparison suggests that our ML approach has a better sensitivity than the standard cut-based analyses, especially at large values of \(g_U\). In addition, our projections for the HL-LHC cover the whole region solving the B-anomalies, for masses up to \(5.00\, \textrm{TeV}\).

Finally, we consider the effects of a companion \(\textrm{Z}^{\prime }\) boson on non-res production. We find that such a contribution can have a considerable impact on the LQ sensitivity regions, depending on the specific masses and couplings. In spite of this, we still consider non-res production as an essential channel for probing LQs in the future.

Data availability statement

This manuscript has no associated data or the data will not be deposited. [Authors’ comment: This is a theoretical study and no experimental data].

Notes

The couplings in the second line of Eq. (1) can be found in the literature as \(g_s\rightarrow g_s(1-\kappa _U)\) and \(g'\rightarrow g'(1-{\tilde{\kappa }}_U)\), in order to take into account the possibility of an underlying strong interaction.

Having \(\beta _L^{s\tau }\) different from zero also opens new decay channels. These, however, are suppressed either by \(\beta _L^{s\tau }\) or by powers of \(\lambda _\textrm{CKM}\). In any case, this effect would decrease \(\textrm{BR}(\textrm{LQ}\rightarrow \textrm{b}\,\tau )\) and \(\textrm{BR}(\textrm{LQ}\rightarrow \textrm{t}\,\nu )\) by less than \(3\%\).

Naively, the LQs are associated to the breaking of \(SU(4)\rightarrow SU(3)_{[4]}\times U(1)_{B-L}\), the \(G'\) arises from \(SU(3)_{[4]}\times SU(3)'\rightarrow SU(3)_c\), and the \(Z'\) comes from the breaking of \(U(1)_{B-L}\times U(1)_{T_R^3}\rightarrow U(1)_Y\). Notice that the specific pattern of breaking, and the relations between the masses and couplings, are connected to the specific scalar potential used.

Note that further signal mixing can also occur at the event generation level by including terms at larger order in \(\alpha _s\). For example, in the non-res diagram in Fig. 1, one of the initial \(\textrm{b}\) could come from a \(g\rightarrow \textrm{b}{\bar{\textrm{b}}}\) splitting, leading to non resonant production of \(\textrm{b}\,\tau _{\mathrm h} \tau _{\textrm{h}/\ell }\). Simulating and studying the role of such NLO contributions is outside the scope of this work.

GridCV is a method for finding the best combination of hyperparameter values for the model, a choice that is crucial to achieving optimal performance.

References

J.C. Pati, A. Salam, Lepton number as the fourth color. Phys. Rev. D 10, 275–289 (1974). (Erratum: Phys. Rev. D 11, 703-703 (1975))

H. Georgi, S.L. Glashow, Unity of all elementary particle forces. Phys. Rev. Lett. 32, 438–441 (1974)

B. Schrempp, F. Schrempp, Light leptoquarks. Phys. Lett. B 153, 101–107 (1985)

M. Leurer, A comprehensive study of leptoquark bounds. Phys. Rev. D 49, 333–342 (1994)

S. Davidson, D.C. Bailey, B.A. Campbell, Model independent constraints on leptoquarks from rare processes. Z. Phys. C 61, 613–644 (1994)

M. Leurer, Bounds on vector leptoquarks. Phys. Rev. D 50, 536–541 (1994)

J.L. Hewett, T.G. Rizzo, Much ado about leptoquarks: a comprehensive analysis. Phys. Rev. D 56, 5709–5724 (1997)

F.S. Queiroz, K. Sinha, A. Strumia, Leptoquarks, dark matter, and anomalous LHC events. Phys. Rev. D 91(3), 035006 (2015)

I. Doršner, S. Fajfer, A. Greljo, J.F. Kamenik, N. Košnik, Physics of leptoquarks in precision experiments and at particle colliders. Phys. Rep. 641, 1–68 (2016)

LHCb Collaboration, Test of lepton universality using \(B^{+}\rightarrow K^{+}\ell ^{+}\ell ^{-}\) decays. Phys. Rev. Lett. 113 (2014)

LHCb Collaboration, Test of lepton universality with \(B^{0} \rightarrow K^{*0}\ell ^{+}\ell ^{-}\) decays. JHEP 08, 055 (2017)

LHCb Collaboration, Search for lepton-universality violation in \(B^+\rightarrow K^+\ell ^+\ell ^-\) decays. Phys. Rev. Lett. 122(19), 191801 (2019)

LHCb Collaboration, Test of lepton universality in beauty-quark decays. Nat. Phys. 18(3), 277–282 (2022)

BaBar Collaboration, Evidence for an excess of \(\bar{B} \rightarrow D^{(*)} \tau ^-\bar{\nu }_\tau \) decays. Phys. Rev. Lett. 109, 101802 (2012)

BaBar Collaboration, Measurement of an excess of \(\bar{B} \rightarrow D^{(*)}\tau ^- \bar{\nu }_\tau \) decays and implications for charged Higgs bosons. Phys. Rev. D 88(7), 072012 (2013)

Belle Collaboration, Measurement of \(\cal{R}(D)\) and \(\cal{R}(D^{\ast })\) with a semileptonic tagging method (2019)

Belle Collaboration, Measurement of the \(\tau \) lepton polarization and \(R(D^{*})\) in the decay \(\bar{B} \rightarrow D^* \tau ^- \bar{\nu }_\tau \) with one-prong hadronic \(\tau \) decays at Belle. Phys. Rev. D 97, 012004 (2018)

Belle Collaboration, Measurement of the branching ratio of \(\bar{B}^0 \rightarrow D^{*+} \tau ^- \bar{\nu }_{\tau }\) relative to \(\bar{B}^0 \rightarrow D^{*+} \ell ^- \bar{\nu }_{\ell }\) decays with a semileptonic tagging method. Phys. Rev. D 94, 072007 (2016)

Belle Collaboration, Measurement of the \(\tau \) lepton polarization and \(R(D^{*})\) in the decay \(\bar{B} \rightarrow D^* \tau ^- \bar{\nu }_\tau \). Phys. Rev. Lett. 118, 211801 (2017)

Belle Collaboration, Measurement of the branching ratio of \(\bar{B} \rightarrow D^{(\ast )} \tau ^- \bar{\nu }_\tau \) relative to \(\bar{B} \rightarrow D^{(\ast )} \ell ^- \bar{\nu }_\ell \) decays with hadronic tagging at Belle. Phys. Rev. D 92, 072014 (2015)

LHCb Collaboration, Measurement of the ratio of branching fractions \(\cal{B}(\bar{B}^0 \rightarrow D^{*+}\tau ^{-}\bar{\nu }_{\tau })/\cal{B}(\bar{B}^0 \rightarrow D^{*+}\mu ^{-}\bar{\nu }_{\mu })\). Phys. Rev. Lett. 115(11), 111803 (2015). [Erratum: Phys. Rev. Lett. 115, 159901 (2015)]

LHCb Collaboration, Measurement of the ratio of branching fractions \(\cal{B}(\bar{B}^0 \rightarrow D^{*+}\tau ^{-}\bar{\nu }_{\tau })/\cal{B}(\bar{B}^0 \rightarrow D^{*+}\mu ^{-}\bar{\nu }_{\mu })\). Phys. Rev. Lett. 115, 111803 (2015). [Erratum: Phys. Rev. Lett. 115, 159901 (2015)]

LHCb Collaboration, Measurement of the ratio of the \(B^{0} \rightarrow D^{*-} \tau ^{+} \nu _{\tau }\) and \(B^0 \rightarrow D^{*-} \mu ^{+} \nu _{\mu }\) branching fractions using three-prong \(\tau \)-lepton decays. Phys. Rev. Lett. 120, 171802 (2018)

LHCb Collaboration, Test of lepton flavor universality by the measurement of the \(B^0 \rightarrow D^{*-} \tau ^+ \nu _{\tau }\) branching fraction using three-prong \(\tau \) decays. Phys. Rev. D 97(7), 072013 (2018)

LHCb Collaboration, Measurement of the ratios of branching fractions \(\cal{R}(D^{*})\) and \({\cal{R}}(D^{0})\) (2023)

G. Hiller, M. Schmaltz, \(R_K\) and future \(b \rightarrow s \ell \ell \) physics beyond the standard model opportunities. Phys. Rev. D 90, 054014 (2014)

B. Gripaios, M. Nardecchia, S.A. Renner, Composite leptoquarks and anomalies in \(B\)-meson decays. JHEP 05, 006 (2015)

R. Alonso, B. Grinstein, J.M. Camalich, Lepton universality violation and lepton flavor conservation in \(B\)-meson decays. JHEP 10, 184 (2015)

L. Calibbi, A. Crivellin, T. Ota, Effective field theory approach to \(b\rightarrow s\ell \ell ^{(^{\prime })}\), \(B\rightarrow K^{(*)}\nu \overline{\nu }\) and \(B\rightarrow D^{(*)}\tau \nu \) with third generation couplings. Phys. Rev. Lett. 115, 181801 (2015)

S. Fajfer, N. Košnik, Vector leptoquark resolution of \(R_K\) and \(R_{D^{(*)}}\) puzzles. Phys. Lett. B 755, 270–274 (2016)

M. Bauer, M. Neubert, Minimal leptoquark explanation for the \(R_{D^{(*)}}\), \(R_K\), and \((g-2)_\mu \) anomalies. Phys. Rev. Lett. 116(14), 141802 (2016)

D. Bečirević, N. Košnik, O. Sumensari, R. Zukanovich-Funchal, Palatable leptoquark scenarios for lepton flavor violation in exclusive \(b\rightarrow s\ell _1\ell _2\) modes. JHEP 11, 035 (2016)

A. Crivellin, D. Müller, T. Ota, Simultaneous explanation of \(R(D^{(*)})\) and \(b \rightarrow s\mu ^{+} \mu ^{-}\): the last scalar leptoquark standing? JHEP 09, 040 (2017)

G. D’Amico, M. Nardecchia, P. Panci, F. Sannino, A. Strumia, R. Torre, A. Urbano, Flavour anomalies after the \(R_{K^*}\) measurement. JHEP 09, 010 (2017)

G. Hiller, I. Nisandzic, \(R_K\) and \(R_{K^{\ast }}\) beyond the standard model. Phys. Rev. D 96(3), 035003 (2017)

D. Buttazzo, A. Greljo, G. Isidori, D. Marzocca, B-physics anomalies: a guide to combined explanations. JHEP 11, 044 (2017)

D. Bečirević, I. Doršner, S. Fajfer, N. Košnik, D.A. Faroughy, O. Sumensari, Scalar leptoquarks from grand unified theories to accommodate the \(B\)-physics anomalies. Phys. Rev. D 98(5), 055003 (2018)

C. Cornella, J. Fuentes-Martín, G. Isidori, Revisiting the vector leptoquark explanation of the B-physics anomalies. JHEP 07, 168 (2019)

A. Angelescu, D. Bečirević, D.A. Faroughy, F. Jaffredo, O. Sumensari, Single leptoquark solutions to the B-physics anomalies. Phys. Rev. D 104(5), 055017 (2021)

G. Belanger et al., Leptoquark manoeuvres in the dark: a simultaneous solution of the dark matter problem and the \( {R}_{D^{\left(\ast \right)}} \) anomalies. JHEP 02, 042 (2022)

J. Aebischer, G. Isidori, M. Pesut, B.A. Stefanek, F. Wilsch, Confronting the vector leptoquark hypothesis with new low- and high-energy data. Eur. Phys. J. C 83(2), 153 (2023)

LHCb Collaboration, Test of lepton universality in \(b \rightarrow s \ell ^{+} \ell ^{-}\) decays (2022)

LHCb Collaboration, Measurement of lepton universality parameters in \(B^+\rightarrow K^+\ell ^+\ell ^-\) and \(B^0\rightarrow K^{*0}\ell ^+\ell ^-\) decays (2022)

A. Greljo, J. Salko, A. Smolkovič, P. Stangl, Rare \(b\) decays meet high-mass Drell-Yan. JHEP 5, 2023 (2023)

M. Ciuchini, M. Fedele, E. Franco, A. Paul, L. Silvestrini, M. Valli, Constraints on lepton universality violation from rare \(b\) decays. Phys. Rev. D 107, 055036 (2023)

B. Diaz, M. Schmaltz, Y. Zhong, The leptoquark Hunter’s guide: Pair production. JHEP 10, 097 (2017)

I. Doršner, A. Greljo, Leptoquark toolbox for precision collider studies. JHEP 05, 126 (2018)

N. Vignaroli, Seeking leptoquarks in the \(t\overline{t}\) plus missing energy channel at the high-luminosity LHC. Phys. Rev. D 99, 035021 (2019)

M. Schmaltz, Y. Zhong, The leptoquark Hunter’s guide: large coupling. JHEP 01, 132 (2019)

A. Biswas, D. Kumar-Ghosh, N. Ghosh, A. Shaw, A.K. Swain, Collider signature of \(U_1\) leptoquark and constraints from \(b \rightarrow c\) observables. J. Phys. G 47(4), 045005 (2020)

M.J. Baker, J. Fuentes-Martín, G. Isidori, M. König, High-\(p_T\) signatures in vector–leptoquark models. Eur. Phys. J. C 79(4), 334 (2019)

U. Haisch, G. Polesello, Resonant third-generation leptoquark signatures at the Large Hadron Collider. JHEP 05, 057 (2021)

A. Bhaskar, T. Mandal, S. Mitra, M. Sharma, Improving third-generation leptoquark searches with combined signals and boosted top quarks. Phys. Rev. D 104(7), 075037 (2021)

J. Bernigaud, M. Blanke, I.M. Varzielas, J. Talbert, J. Zurita, LHC signatures of \(\tau \)-flavoured vector leptoquarks. JHEP 08, 127 (2022)

R. Leonardi, O. Panella, F. Romeo, A. Gurrola, H. Sun, S. Xue, Phenomenology at the LHC of composite particles from strongly interacting Standard Model fermions via four-fermion operators of NJL type. Eur. Phys. J. C 80, 309 (2020)

CMS Collaboration, Search for heavy neutrinos or third-generation leptoquarks in final states with two hadronically decaying \(\tau \) leptons and two jets in proton-proton collisions at \( \sqrt{s}=13 \) TeV. JHEP 03, 077 (2017)

CMS Collaboration, Search for third-generation scalar leptoquarks and heavy right-handed neutrinos in final states with two tau leptons and two jets in proton-proton collisions at \( \sqrt{s}=13 \) TeV. JHEP 07, 121 (2017)

CMS Collaboration, Search for third-generation scalar leptoquarks decaying to a top quark and a \(\tau \) lepton at \(\sqrt{s}=\) 13 TeV. Eur. Phys. J. C 78, 707 (2018)

CMS Collaboration, Constraints on models of scalar and vector leptoquarks decaying to a quark and a neutrino at \(\sqrt{s}=\) 13 TeV. Phys. Rev. D 98(3), 032005 (2018)

CMS Collaboration, Search for a singly produced third-generation scalar leptoquark decaying to a \(\tau \) lepton and a bottom quark in proton-proton collisions at \(\sqrt{s} =\) 13 TeV. JHEP 07, 115 (2018)

CMS Collaboration, Search for heavy neutrinos and third-generation leptoquarks in hadronic states of two \(\tau \) leptons and two jets in proton-proton collisions at \(\sqrt{s} =\) 13 TeV. JHEP 03, 170 (2019)

CMS Collaboration, Search for singly and pair-produced leptoquarks coupling to third-generation fermions in proton-proton collisions at \(\sqrt{s}=13\) TeV. Phys. Lett. B 819, 136446 (2021)

CMS Collaboration, Searches for additional Higgs bosons and for vector leptoquarks in \(\tau \tau \) final states in proton-proton collisions at \(\sqrt{s}\) = 13 TeV (2022)

CMS Collaboration, The search for a third-generation leptoquark coupling to a \(\tau \) lepton and a b quark through single, pair and nonresonant production at \(\sqrt{s}=13~{{\rm TeV}}\) (2022)

ATLAS Collaboration, Searches for third-generation scalar leptoquarks in \(\sqrt{s} = 13~{{\rm TeV}}\) pp collisions with the ATLAS detector. JHEP 06, 144 (2019)

ATLAS Collaboration, Search for a scalar partner of the top quark in the all-hadronic \(t{\bar{t}}\) plus missing transverse momentum final state at \(\sqrt{s}=13\) TeV with the ATLAS detector. Eur. Phys. J. C 80(8), 737 (2020)

ATLAS Collaboration, Search for pair production of third-generation scalar leptoquarks decaying into a top quark and a \(\tau \)-lepton in \(pp\) collisions at \( \sqrt{s} \) = 13 TeV with the ATLAS detector. JHEP 06, 179 (2021)

ATLAS Collaboration, Search for new phenomena in final states with \(b\)-jets and missing transverse momentum in \(\sqrt{s}=13\) TeV \(pp\) collisions with the ATLAS detector. JHEP 05, 093 (2021)

ATLAS Collaboration, Search for new phenomena in \(pp\) collisions in final states with tau leptons, b-jets, and missing transverse momentum with the ATLAS detector. Phys. Rev. D 104(11), 112005 (2021)

ATLAS Collaboration, Search for leptoquarks decaying into the b\(\tau \) final state in \(pp\) collisions at \(\sqrt{s}=13\) TeV with the ATLAS detector (2023)

ATLAS Collaboration, Search for pair production of third-generation leptoquarks decaying into a bottom quark and a \(\tau \)-lepton with the ATLAS detector (2023)

D.A. Faroughy, A. Greljo, J.F. Kamenik, Confronting lepton flavor universality violation in B decays with high-\(p_T\) tau lepton searches at LHC. Phys. Lett. B 764, 126–134 (2017)

A. Angelescu, D. Bečirević, D.A. Faroughy, O. Sumensari, Closing the window on single leptoquark solutions to the \(B\)-physics anomalies. JHEP 10, 183 (2018)

A. Bhaskar, D. Das, T. Mandal, S. Mitra, C. Neeraj, Precise limits on the charge-2/3 U1 vector leptoquark. Phys. Rev. D 104(3), 035016 (2021)

C. Cornella, D.A. Faroughy, J. Fuentes-Martín, G. Isidori, M. Neubert, Reading the footprints of the B-meson flavor anomalies. JHEP 08, 050 (2021)

L. Allwicher, D.A. Faroughy, F. Jaffredo, O. Sumensari, F. Wilsch, Drell-Yan tails beyond the standard model. JHEP 3, 2023 (2023)

U. Haisch, L. Schnell, S. Schulte, Drell-Yan production in third-generation gauge vector leptoquark models at NLO+PS in QCD. JHEP 2, 2023 (2023)

J.H. Friedman, Greedy function approximation: a gradient boosting machine. Ann. Stat. 29(5), 1189–1232 (2001)

X. Ai, S.C. Hsu, K. Li, C.T. Lu, Probing highly collimated photon-jets with deep learning. J. Phys. Conf. Ser. 2438(1), 012114 (2023)

ATLAS Collaboration, Search for the standard model Higgs boson produced in association with top quarks and decaying into a \(b\bar{b}\) pair in \(pp\) collisions at \(\sqrt{s}\) = 13 TeV with the ATLAS detector. Phys. Rev. D 97(7), 072016 (2018)

S. Chigusa, S. Li, Y. Nakai, W. Zhang, Y. Zhang, J. Zheng, Deeply learned preselection of Higgs dijet decays at future lepton colliders. Phys. Lett. B 833, 137301 (2022)

Y.L. Chung, S.C. Hsu, B. Nachman, Disentangling boosted Higgs boson production modes with machine learning. JINST 16, P07002 (2021)

J. Feng, M. Li, Q.S. Yan, Y.P. Zeng, H.H. Zhang, Y. Zhang, Z. Zhao, Improving heavy Dirac neutrino prospects at future hadron colliders using machine learning. JHEP 9, 2022 (2022)

D. Barbosa, F. Díaz, L. Quintero, A. Flórez, M. Sanchez, A. Gurrola, E. Sheridan, F. Romeo, Probing a Z\(^{\prime }\) with non-universal fermion couplings through top quark fusion, decays to bottom quarks, and machine learning techniques. Eur. Phys. J. C 83, 413 (2023)

N. Assad, B. Fornal, B. Grinstein, Baryon number and lepton universality violation in leptoquark and diquark models. Phys. Lett. B 777, 324–331 (2018)

L. Calibbi, A. Crivellin, T. Li, Model of vector leptoquarks in view of the \(B\)-physics anomalies. Phys. Rev. D 98(11), 115002 (2018)

M. Blanke, A. Crivellin, \(B\) Meson anomalies in a Pati-Salam model within the Randall-Sundrum background. Phys. Rev. Lett. 121(1), 011801 (2018)

S. Iguro, J. Kawamura, S. Okawa, Y. Omura, TeV-scale vector leptoquark from Pati-Salam unification with vectorlike families. Phys. Rev. D 104(7), 075008 (2021)

L. Di Luzio, A. Greljo, M. Nardecchia, Gauge leptoquark as the origin of B-physics anomalies. Phys. Rev. D 96(11), 115011 (2017)

A. Greljo, B.A. Stefanek, Third family quark–lepton unification at the TeV scale. Phys. Lett. B 782, 131–138 (2018)

L. Di Luzio, J. Fuentes-Martín, A. Greljo, M. Nardecchia, S. Renner, Maximal flavour violation: a Cabibbo mechanism for leptoquarks. JHEP 11, 081 (2018)

S.F. King, Twin Pati-Salam theory of flavour with a TeV scale vector leptoquark. JHEP 11, 161 (2021)