Abstract

A muon collider would enable the big jump ahead in energy reach that is needed for a fruitful exploration of fundamental interactions. The challenges of producing muon collisions at high luminosity and 10 TeV centre of mass energy are being investigated by the recently formed International Muon Collider Collaboration. This Review summarises the status of, and recent advances in, muon collider design, physics and detector studies. The aim is to provide a global perspective of the field and to outline directions for future work.

1 Introduction

Colliders are microscopes that explore the structure and the interactions of particles at the shortest possible length scale. Their goal is not to chase discoveries that are inevitable or perceived as such based on current knowledge. On the contrary, their mission is to explore the unknown in order to acquire radically novel knowledge.

The current experimental and theoretical situation of particle physics is particularly favourable to collider exploration. No inevitable discovery diverts our attention from pure exploration, and we can focus on the basic questions that best illustrate our ignorance. Why is electroweak symmetry broken and what sets the scale? Is it really broken by the Standard Model Higgs or by a richer Higgs sector? Is the Higgs an elementary or a composite particle? Is the top quark, in light of its large Yukawa coupling, a portal towards the explanation of the observed pattern of flavour? Is the Higgs or the electroweak sector connected with dark matter? Is it connected with the origin of the asymmetry between baryons and anti-baryons in the Universe?

The next collider should deepen our understanding of the questions above, and offer broad and varied opportunities for exploration to enable radically unexpected discoveries. A comprehensive exploration must exploit the complementarity between energy and precision. Precise measurements allow us to study the dynamics of the particles we already know, looking for the indirect manifestation of yet unknown new physics. With a very high energy collider we can access the new physics particles directly. These two exploration strategies are normally associated with two distinct machines, either colliding electrons/positrons (ee) or protons (pp).

With muons instead, both strategies can be effectively pursued at a single collider that combines the advantages of ee and of pp machines. Moreover, the simultaneous availability of energy and precision offers unique perspectives of indirect sensitivity to very heavy new physics, as well as unique perspectives for the characterisation of new heavy particles discovered at the muon collider itself.

This is the picture that emerges from the investigations of the muon collider physics potential performed so far, which are reviewed in this document in Sects. 2 and 5. These studies identify a Muon Collider (MuC), with 10 TeV energy or more in the centre of mass and sufficient luminosity, as an ideal tool for a substantial and ambitious jump ahead in the exploration of fundamental particles and interactions. Assessing its technological feasibility is thus a priority for the future of particle physics.

Muon collider concept

Initial ideas for muon colliders were proposed long ago [1,2,3,4,5,6]. Subsequent studies culminated in the Muon Accelerator Program (MAP) in the US (see [7,8,9,10] and [11, 12] for an overview). The MAP concept for the muon collider facility is displayed in Fig. 1. The proton complex produces a short, high-intensity proton pulse that hits the target and produces pions. The decay channel guides the pions and collects the muons from their decay into a bunching and phase rotator system to form a muon beam. Several cooling stages then reduce the longitudinal and transverse emittance of the beam using a sequence of absorbers and radiofrequency (RF) cavities in a high magnetic field. A system of a linear accelerator (linac) and two recirculating linacs accelerates the beams to 60 GeV. They are followed by one or more rings that accelerate the beams to higher energy, for instance one to 300 GeV and one to 1.5 TeV, in the case of a 3 TeV centre of mass energy MuC. In the 10 TeV collider an additional ring from 1.5 to 5 TeV follows. These rings can be either fast-pulsed synchrotrons or Fixed-Field Alternating gradient (FFA) accelerators. Finally, the beams are injected at full energy into the collider ring. Here, they circulate and produce luminosity until they have decayed. Alternatively, they can be extracted once the muon beam current is strongly reduced by decay. There are wide margins for the optimisation of the exact energy stages of the acceleration system, taking also into account the possible exploitation of the intermediate-energy muon beams for muon colliders of lower centre of mass energy.

The concept developed by MAP provides the baseline for present and planned work on muon colliders, reviewed in Sect. 3. Three main reasons sparked this renewed interest in muon colliders. The first is the focus on high collision energy and luminosity, where the muon collider is particularly promising and offers the prospect of revolutionising particle physics. The second is the advance in technology and in muon collider design. The third is the difficulty of envisaging a radical jump ahead in high-energy exploration with ee or pp colliders.

In fact, the required increase of energy and luminosity in future high-energy frontier colliders poses severe challenges [13, 14]. Without breakthroughs in concept and in technologies, the cost and use of land as well as the power consumption are prone to increase to unsustainable levels.

The muon collider promises to overcome these limitations and to push the energy frontier substantially further. Circular electron-positron colliders are limited in energy by the emission of synchrotron radiation, which increases strongly with energy. Linear colliders overcome this limitation, but they require the beam to be accelerated to full energy in a single pass through the main linac and they use each beam in a single collision only [15]. The high mass of the muon mitigates synchrotron radiation emission, so that muons can be accelerated in many passes through a ring and made to collide repeatedly in another ring. This results in cost effectiveness and compactness, combined with a luminosity per beam power that increases roughly linearly with energy. Protons can also be accelerated in rings and made to collide at very high energy. However, protons are composite particles and therefore only a small fraction of their collision energy is available to probe short-distance physics through the collisions of their fundamental constituents. The effective energy reach of a muon collider thus corresponds to that of a proton collider of much higher centre of mass energy. This concept is illustrated more quantitatively in Sect. 2.1.
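The mass dependence behind this statement can be made explicit with the standard textbook scaling of synchrotron radiation, which is not spelled out in this Review and is quoted here only as an illustration. A particle of energy \(E\) and mass \(m\) on a circular orbit of bending radius \(\rho \) loses per turn an energy \(\varDelta E\propto E^4/(m^4\rho )\). At equal energy and bending radius, and taking the rounded mass ratio \(m_\mu /m_e\simeq 207\), the loss of a muon relative to that of an electron is

\[
\frac{\varDelta E_\mu }{\varDelta E_e}=\left( \frac{m_e}{m_\mu }\right) ^4\simeq \left( \frac{1}{207}\right) ^4\simeq 5\cdot 10^{-10}\,.
\]

The suppression by roughly nine orders of magnitude is what makes multi-pass acceleration and repeated collisions in rings viable for muons at energies where they are impractical for electrons.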

Currently, the limit of the energy reach for muon colliders has not been identified. Ongoing studies focus on a 10 TeV design with an integrated luminosity goal of \(10~{\textrm{ab}}^{-1}\). This goal is expected to provide a good balance between an excellent physics case and affordable cost, power consumption and risk. Once a robust design has been established at 10 TeV – including an estimate of the cost, power consumption and technical risk – other, higher energies will be explored.

The 2020 Update of the European Strategy for Particle Physics (ESPPU) recommended, for the first time in Europe, an R&D programme on muon collider design and technology. This led to the formation of the International Muon Collider Collaboration (IMCC) [16] with the goal of initiating this programme and informing the next ESPPU process on the muon collider feasibility perspectives. This will enable the next ESPPU and other strategy processes to judge the scientific justification of a full Conceptual Design Report (CDR) and demonstration programme.

The European Roadmap for Accelerator R&D [17], published in 2021, includes the muon collider. The report is based on consultations of the community at large, combined with the expertise of a dedicated Muon Beams Panel. It also benefited from significant input from the MAP design studies and US experts. The report assessed the challenges of the muon collider and did not identify any insurmountable obstacle. However, the muon collider technologies and concepts are less mature than those of electron-positron colliders. Circular and linear electron-positron colliders have already been constructed and operated, whereas the muon collider would be the first of its kind. The limited muon lifetime gives rise to several specific challenges, including the need for rapid production and acceleration of the beam. These challenges and the solutions under investigation are detailed in Sect. 3.
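To give a feeling for the time scales set by the muon lifetime, the following minimal sketch evaluates the time-dilated lifetime and the mean decay length at a few beam energies. The muon mass, rest-frame lifetime and speed of light are standard rounded values rather than numbers from this Review, the function names are purely illustrative, and the energies (60 GeV, 1.5 TeV, 5 TeV) are the acceleration stages quoted above.

```python
import math

# Standard rounded constants (assumptions, not taken from this Review).
MUON_MASS_GEV = 0.1056584      # muon rest mass [GeV]
MUON_LIFETIME_S = 2.197e-6     # muon lifetime at rest [s]
SPEED_OF_LIGHT_M_S = 2.998e8   # speed of light [m/s]


def lorentz_gamma(beam_energy_gev: float) -> float:
    """Lorentz factor of a muon with the given total energy."""
    return beam_energy_gev / MUON_MASS_GEV


def lab_lifetime_s(beam_energy_gev: float) -> float:
    """Mean lifetime in the laboratory frame (time dilation)."""
    return lorentz_gamma(beam_energy_gev) * MUON_LIFETIME_S


def mean_decay_length_m(beam_energy_gev: float) -> float:
    """Mean path length travelled before decay: beta * gamma * c * tau."""
    gamma = lorentz_gamma(beam_energy_gev)
    beta = math.sqrt(1.0 - 1.0 / gamma**2)
    return beta * gamma * SPEED_OF_LIGHT_M_S * MUON_LIFETIME_S


if __name__ == "__main__":
    for energy_gev in (60.0, 1500.0, 5000.0):
        print(
            f"E = {energy_gev:7.1f} GeV: "
            f"lifetime = {lab_lifetime_s(energy_gev):.3e} s, "
            f"decay length = {mean_decay_length_m(energy_gev):.3e} m"
        )
```

Even at 5 TeV the laboratory lifetime is only about a tenth of a second, which illustrates why production, cooling and acceleration must all be fast.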

The Roadmap describes the R&D programme required to develop the maturity of the key technologies and the concepts in the coming few years. This will allow the assessment of realistic luminosity targets, detector backgrounds, power consumption and cost scale, as well as whether one can consider implementing a MuC at CERN or elsewhere. Mitigation strategies for the key technical risks and a demonstration programme for the CDR phase will also be addressed. The use of existing infrastructure, such as existing proton facilities and the LHC tunnel, will also be considered. This will allow the next strategy process to make an informed choice on the future strategy. Based on the conclusions of the next strategy processes in the different regions, a CDR phase could then develop the technologies and the baseline design to demonstrate that the community can execute a successful MuC project.

Important progress in the past gives confidence that this goal can be achieved and that the programme will be successful. In particular, superconducting magnet technology has progressed enormously and high-temperature superconductors have become a practical technology used in industry. Similarly, RF technology has progressed in general, and experiments have demonstrated a solution to a specific muon collider challenge – operating RF cavities in very high magnetic fields – that had previously been considered a showstopper. Component designs have been developed that can cool the initially diffuse beam and accelerate it to multi-TeV energy on a time scale compatible with the muon lifetime. However, a fully integrated design has yet to be developed, and further development and demonstration of technology are required.

The technological feasibility of the facility is one vital component of the muon collider programme, but the planning of the facility exploitation is equally important. This includes the assessment of the muon collider potential to address physics questions, as well as the design of novel detectors and reconstruction techniques to perform experiments with colliding muons.

The path to a new generation of experiments

The main challenge to operating a detector at a muon collider is the fact that muons are unstable particles. As such, it is impossible to study the muon interactions without being exposed to decays of the muons forming the colliding beams. From the moment the collider is turned on and the muon bunches start to circulate in the accelerator complex, the products of the in-flight decays of the muon beams and the results of their interactions with beam line material, or the detectors themselves, will reach the experiments and pollute the otherwise clean collision environment. The ensemble of all these particles is usually known as “Beam Induced Backgrounds”, or BIB. The composition, flux, and energy spectra of the BIB entering a detector are closely intertwined with the design of the experimental apparatus, such as the beam optics that integrate the detectors in the accelerator complex or the presence of shielding elements, and with the collision energy. However, two general features broadly characterise the BIB: it is composed of low-energy particles, and these particles have a broad distribution of arrival times in the detector.

The design of an optimised muon collider detector is still in its infancy, but the work has begun and is reviewed in Sect. 4. It is already clear that the physics goals will require a fully hermetic detector able to resolve the trajectories of the outgoing particles and their energies. While the final design might look similar to those of the experiments taking data at the LHC, the technologies at the heart of the detector will have to be new. The large flux of BIB particles requires the detector to withstand radiation over long periods of time, and the need to disentangle the products of the beam collisions from the particles entering the sensitive regions from uncommon directions calls for high-granularity measurements in space, time and energy. The development of these new detectors will profit from the consolidation of the successful solutions that were pioneered, for example, in the High Luminosity LHC upgrades, as well as from brand new ideas. New solutions are being developed for use in the muon collider environment, spanning from tracking detectors and calorimeter systems to dedicated muon systems. The whole effort is part of the push for the next generation of high-energy physics detectors, and new concepts targeted to the muon collider environment might end up revolutionising other proposed future collider facilities as well.

Together with a vibrant detector development programme, new techniques and ideas need to be developed for the interpretation of the energy depositions recorded by the instrumentation. The contributions from the BIB add an incoherent source of backgrounds that affect different detector systems in different ways and that are unprecedented at other collider facilities. The extreme multiplicity of energy depositions in the tracking detectors creates a complex combinatorial problem that challenges the traditional algorithms for reconstructing the trajectories of the charged particles, as these were designed for collisions where sprays of particles propagate outwards from the centre of the detector. At the same time, the potentially groundbreaking reach into the high-energy frontier will lead to strongly collimated jets of particles that need to be resolved by the calorimeter systems, while the background contributions are subtracted with precision. The challenging environment of the muon collider offers fertile ground for the development of new techniques, from traditional algorithms to applications of artificial intelligence and machine learning, to brand new computing technologies such as quantum computers.

Muon collider plans

The ongoing reassessment of the muon collider design and the plans for R&D allow us to envisage a possible path towards the realisation of the muon collider and a tentative technically-limited timeline, displayed in Fig. 12.

The goal [11, 12] is a muon collider with a centre of mass energy of 10 TeV or more (a \(10^+\) TeV MuC). Passing this energy threshold enables, among other things, a vast jump ahead in the search for new heavy particles relative to the LHC. The target integrated luminosity is obtained by considering the cross-section of typical \(2\rightarrow 2\) scattering processes mediated by the electroweak interactions, \(\sigma \sim 1~{\textrm{fb}}\cdot (10~{\textrm{TeV}})^2/E_{{\textrm{cm}}}^2\). In order to measure such cross-sections with good (percent-level) precision and to exploit them as powerful probes of short distance physics, around ten thousand events are needed. The corresponding integrated luminosity is

\[
{\mathcal {L}}_{{\textrm{int}}}=10~{\textrm{ab}}^{-1}\left( \frac{E_{{\textrm{cm}}}}{10~{\textrm{TeV}}}\right) ^2. \qquad (1)
\]

The luminosity requirement grows quadratically with the energy in order to compensate for the cross-section decrease. We will see in Sect. 3 that achieving this scaling is indeed possible at muon colliders.

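Assuming a muon collider operation time of \(10^7\) seconds per year, one interaction point and a five-year run, Eq. (1) corresponds to an instantaneous luminosity

\[
{\mathcal {L}}=\frac{{\mathcal {L}}_{{\textrm{int}}}}{5~{\textrm{years}}}=\left( \frac{E_{{\textrm{cm}}}}{10~{\textrm{TeV}}}\right) ^2 2\cdot 10^{35}~{\textrm{cm}}^{-2}\,{\textrm{s}}^{-1}. \qquad (2)
\]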

The current design target parameters (see Table 1) make it possible to collect the required integrated luminosity in a 5-year run, ensuring an appealingly compact temporal extension of the muon collider project even in its data taking phase. Furthermore, this ambitious target leaves room to increase the integrated luminosity by running longer or by foreseeing a second interaction point. One could similarly compensate for a possible instantaneous luminosity reduction in the final design.
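The luminosity estimate of this section can be reproduced numerically. The sketch below uses only numbers quoted in the text (a typical cross-section of 1 fb at 10 TeV scaling as \(1/E_{{\textrm{cm}}}^2\), ten thousand events, \(10^7\) seconds of operation per year and a five-year run); the function and constant names are illustrative choices, not part of any official software.

```python
# Inputs quoted in the text.
TARGET_EVENTS = 1.0e4          # "around ten thousand events"
SECONDS_PER_YEAR = 1.0e7       # assumed operation time per year
RUN_YEARS = 5.0                # duration of the data-taking run
REFERENCE_ENERGY_TEV = 10.0    # energy at which sigma ~ 1 fb

# Unit conversions.
FB_TO_CM2 = 1.0e-39            # 1 fb = 1e-39 cm^2
INV_FB_PER_INV_AB = 1000.0     # 1 ab^-1 = 1000 fb^-1


def typical_cross_section_fb(e_cm_tev: float) -> float:
    """Typical electroweak 2 -> 2 cross-section, sigma ~ 1 fb (10 TeV / E)^2."""
    return 1.0 * (REFERENCE_ENERGY_TEV / e_cm_tev) ** 2


def integrated_luminosity_inv_ab(e_cm_tev: float) -> float:
    """Integrated luminosity [ab^-1] that yields TARGET_EVENTS events."""
    inv_fb = TARGET_EVENTS / typical_cross_section_fb(e_cm_tev)
    return inv_fb / INV_FB_PER_INV_AB


def instantaneous_luminosity_cm2_s(e_cm_tev: float) -> float:
    """Average luminosity [cm^-2 s^-1] needed to collect it in RUN_YEARS."""
    inv_fb = integrated_luminosity_inv_ab(e_cm_tev) * INV_FB_PER_INV_AB
    inv_cm2 = inv_fb / FB_TO_CM2
    return inv_cm2 / (RUN_YEARS * SECONDS_PER_YEAR)


if __name__ == "__main__":
    for e_cm in (3.0, 10.0):
        print(
            f"E_cm = {e_cm:4.1f} TeV: "
            f"L_int = {integrated_luminosity_inv_ab(e_cm):.2f} ab^-1, "
            f"L = {instantaneous_luminosity_cm2_s(e_cm):.2e} cm^-2 s^-1"
        )
```

At 10 TeV this gives 10 ab\(^{-1}\) and \(2\cdot 10^{35}\) cm\(^{-2}\) s\(^{-1}\); at 3 TeV the same inputs give 0.9 ab\(^{-1}\).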

Muon collider stages

In order to design the path towards a \(10^+\) TeV MuC, one could exploit the possibility of building it in stages. In fact, the design of many elements of the facility is simply independent of the collider energy. Once built and exploited for a lower \(E_{{\textrm{cm}}}\) MuC, they can thus be reused for a higher energy collider. This applies to the muon source and cooling, and to the accelerator complex as well because energy staging is anyway required for fast acceleration. Only the final collision ring of the lower \(E_{{\textrm{cm}}}\) collider could not be reused. However because of its limited size it is a minor addition to the total cost.

A staged approach has several advantages. First, it spreads the total cost over a longer time period and reduces the initial investment. This could enable faster financing of the first energy stage and accelerate the whole project. Furthermore, the reduced energy of the first stage makes it possible, if needed, to compromise on technologies that might not yet be fully developed, avoiding potential delays. In particular, completing construction in 2045 as foreseen in Fig. 12 could be optimistic for a \(10^+\) TeV MuC, but realistic for a first lower-energy collider at a few TeV. A centre of mass energy \(E_{{\textrm{cm}}}=3\) TeV is being tentatively considered for the first stage. This matches, with a much more compact design, the maximal \(e^+e^-\) energy that could be achieved by the last stage of the CLIC linear collider [15].

The 3 TeV stage of the muon collider offers major opportunities for progress with respect to the LHC and its high-luminosity successor (HL-LHC) [18]. These opportunities include a determination of the Higgs trilinear coupling and extended sensitivity to Higgs and top quark compositeness and to extended Higgs sectors. A selected summary of available studies is reported in Sect. 5. On the other hand, the physics potential of the \(10^+\) TeV collider is much superior to that of the 3 TeV collider. The higher energy stage will radically advance the knowledge acquired with the first stage operation. Additionally, the energy upgrade would make it possible to investigate new physics discoveries or tensions with the SM that might emerge at the first stage.

The 3 TeV stage, following Eq. (2), would collect \(0.9\simeq 1\) ab\(^{-1}\) luminosity (with one detector) in five years of full luminosity, after an initial ramp-up phase of two to three years. In the most optimistic scenario the construction of the first stage will proceed rapidly. The first stage will terminate after seven years to leave space to the second stage with radically improved physics performances. If the second stage is instead delayed, the one at 3 TeV could run longer. The optimistic and pessimistic scenarios thus foresee 1 and 2 \(\hbox {ab}^{-1}\) at 3 TeV, respectively.

Other muon colliders

The tentative staging scenario detailed above should serve as the baseline for future investigations of alternative plans. In particular, one could consider the possibility of a first stage of much lower energy than 3 TeV, to be possibly built on an even shorter time scale. However, it is worth remarking in this context that the quadratic luminosity scaling with energy in Eqs. (1) and (2) is not only the aspirational target, but also the natural scaling of the luminosity at muon colliders. By following the scaling down to low \(E_{{\textrm{cm}}}\) one immediately realises that the luminosity of a muon collider at order 100 GeV energy cannot be competitive with that of an \(e^+e^-\) circular or linear collider. For instance, while there is evidently a compelling physics case for a leptonic “Higgs factory” at around 250 GeV energy, a muon collider would probably be unable to collect the high luminosity needed for a successful programme of Higgs coupling measurements, which is instead possible for \(e^+e^-\) colliders. In general, the luminosity scaling suggests that a physics-motivated first stage of the muon collider should either exploit some peculiarity of the muons that makes \(\mu ^+\mu ^-\) collisions more useful than \(e^+e^-\) collisions, or target the TeV energy that is hard to reach with \(e^+e^-\).
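As a rough illustration of this scaling, one can extrapolate the 10 TeV target naively down to 250 GeV. The reference value follows from the numbers quoted in this Review (10 ab\(^{-1}\), that is \(10^{43}\) cm\(^{-2}\), collected in five years of \(10^7\) seconds each, which gives \(2\cdot 10^{35}\) cm\(^{-2}\) s\(^{-1}\)); the extrapolation itself is only a sketch and ignores any dedicated low-energy optimisation of the machine:

\[
{\mathcal {L}}(250~{\textrm{GeV}})\simeq 2\cdot 10^{35}~{\textrm{cm}}^{-2}\,{\textrm{s}}^{-1}\left( \frac{0.25~{\textrm{TeV}}}{10~{\textrm{TeV}}}\right) ^2\simeq 1.3\cdot 10^{32}~{\textrm{cm}}^{-2}\,{\textrm{s}}^{-1}\,.
\]

Over the same five years this corresponds to only about 6 fb\(^{-1}\) of integrated luminosity.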

The possibility of operating a first muon collider at the Higgs pole \(E_{{\textrm{cm}}}=m_H=125\) GeV has been discussed extensively in the literature. The idea here is to exploit the large Yukawa coupling of the muon, much larger than that of the electron, in order to produce the Higgs boson in the s-channel and study its lineshape. The physics potential of such a Higgs-pole muon collider will be described in Sect. 5. The major results would be a rather precise and direct determination of the Higgs width and an astonishingly accurate measurement of the Higgs mass. However, the Higgs is a rather narrow particle, with a width over mass ratio \(\varGamma _H/m_H\) as small as \(3\cdot 10^{-5}\). The muon beams would thus need a comparably small energy spread \(\varDelta E/E=3\cdot 10^{-5}\) for the programme to succeed. Engineering such a tiny energy spread might perhaps be possible. However, it poses a challenge for the facility design that is peculiar to the Higgs-pole collider and of no relevance for higher energies, where a much larger spread is perfectly adequate for physics. For this reason, the Higgs-pole muon collider is not currently considered in the staging plan and further study is needed.
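In absolute terms, the numbers quoted above translate into the following scales. This is simple arithmetic on the values given in the text, with the beam energy taken as \(E_{{\textrm{beam}}}=m_H/2\):

\[
\varGamma _H\simeq 3\cdot 10^{-5}\times 125~{\textrm{GeV}}\simeq 4~{\textrm{MeV}},\qquad \varDelta E_{{\textrm{beam}}}\simeq 3\cdot 10^{-5}\times 62.5~{\textrm{GeV}}\simeq 2~{\textrm{MeV}}\,.
\]

Each beam would therefore have to be controlled at the level of a few MeV, to be compared with the permille-level spread mentioned below for the top threshold scan.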

Further work is also needed to assess the possible relevance of a muon collider at the \(t{{\overline{t}}}\) production threshold \(E_{{\textrm{cm}}}\simeq 343\) GeV, aimed at measuring the top quark mass with precision. The top threshold could also be reached with \(e^+e^-\) colliders. However, the \(e^+e^-\) Higgs factory at 250 GeV, to be possibly built before the muon collider, might not be easily and quickly upgradable to 343 GeV. Moreover, the naturally small (permille-level) beam energy spread and the reduction of initial state radiation effects give an advantage to muons over electrons for the threshold scan. Such a “first muon collider” was proposed long ago [19, 20]. Its modern relevance stems from the need for an improved top mass determination to establish the possible instability of the SM Higgs potential [21]. We will not discuss this option further and we refer the reader to the literature.

This review

A muon collider could be a sustainable innovative device for a big ambitious jump ahead in fundamental physics exploration. It is a long-term project, but with a tight schedule and a narrow temporal window of opportunity. The initiated work must continue in the next decade, fostered by a positive recommendation of the 2023 US Particle Physics Prioritization Panel (P5) and the next Update of the European Strategy for Particle Physics foreseen in 2026/2027. Progress must be made by then on the perspectives for a muon collider to be built and operated, for the outcome of its collisions to be recorded, interpreted and exploited to advance physics knowledge. This offers stimulating challenges for accelerator, experimental and theoretical physics.

Muon colliders require innovative research in each of these three directions. The novelty of the theme and the lack of established solutions enable a high rate of progress, but it also requires that the three directions advance simultaneously because progress in one motivates and supports work in the others. Furthermore, exploiting synergies between accelerator, experimental and theoretical physics is of utmost importance at this initial stage of the muon collider project design.

In this spirit, the present Review summarises the state of the art and the recent progress in all these three areas, and outlines directions for future work. The aim is to provide a global perspective on muon colliders.

This Review is organised as follows. Section 2 summarises the key exploration opportunities offered by very high energy muon colliders and illustrates the potential for progress on selected physics questions. We also outline the challenges for the theoretical predictions needed to exploit this potential. These challenges constitute in fact a tremendous opportunity to advance knowledge of SM physics in a regime where the electroweak bosons are relatively light particles, entailing the emergence of the novel phenomenon of electroweak radiation. Section 3 describes the challenges and the opportunities of muon colliders for accelerator physics. It reviews the basic principles for the design of the muon production, cooling and fast acceleration systems. The required R&D, and a tentative staging plan and timeline, are also outlined. Section 4 describes the experimental conditions that are expected at the muon collider and the ongoing work on the design of the detector and of the event reconstruction software. We devote Sect. 5 to selected muon collider sensitivity projection studies. The \(10^+\) TeV MuC is the main focus, but some opportunities of the 3 TeV stage are also described, as well as those of the Higgs-pole collider. A summary of the perspectives and opportunities for future work on muon colliders is reported in Sect. 6.

2 Physics opportunities

2.1 Why muons?

Muons, like protons, can be made to collide with a centre of mass energy of 10 TeV or more in a relatively compact ring, without fundamental limitations from synchrotron radiation. However, being point-like particles, unlike protons, their nominal centre of mass collision energy \(E_{{\textrm{cm}}}\) is entirely available to produce high-energy reactions that probe length scales as short as \(1/E_{{\textrm{cm}}}\). The relevant energy for proton colliders is instead the centre of mass energy of the collisions between the partons that constitute the protons. The partonic collision energy is distributed statistically, and approaches a significant fraction of the proton collider nominal energy with very low probability. A muon collider with a given nominal energy and luminosity is thus far more effective than a proton collider with comparable energy and luminosity.

This concept is made quantitative in Fig. 2. The figure displays the centre of mass energy \({\sqrt{s\,}}_{\hspace{-2pt}p}\) that a proton collider must possess to be “equivalent” to a muon collider of a given energy \(E_{{\textrm{cm}}}=\sqrt{s\,}_{\hspace{-2pt}\mu }\). Equivalence is defined [11, 22, 23] in terms of the pair production cross-section for heavy particles, with mass close to the muon collider kinematical threshold of \(\sqrt{s\,}_{\hspace{-2pt}\mu }/2\). The equivalent \({\sqrt{s\,}}_{\hspace{-2pt}p}\) is the proton collider centre of mass energy for which the cross-sections at the two colliders are equal.

The estimate of the equivalent \({\sqrt{s\,}}_{\hspace{-2pt}p}\) depends on the relative strength \(\beta \) of the heavy particle interaction with the partons and with the muons. If the heavy particle only possesses electroweak quantum numbers, \(\beta =1\) is a reasonable estimate because the particles are produced by the same interaction at the two colliders. If instead it also carries QCD colour, the proton collider can exploit the QCD interaction to produce the particle, and a ratio of \(\beta =10\) should be considered owing to the large QCD coupling and colour factors. The orange line on the left panel of Fig. 2, obtained with \(\beta =1\), is thus representative of purely electroweak particles. The blue line, with \(\beta =10\), is instead a valid estimate for particles that also possess QCD interactions, as can be verified in concrete examples.

The general lesson we learn from the left panel of Fig. 2 (orange line) is that at a proton collider with around 100 TeV energy the cross-section for processes with an energy threshold of around 10 TeV is considerably smaller than that of a muon collider (MuC) operating at \(E_{{\textrm{cm}}}={\sqrt{s\,}}_{\hspace{-2pt}\mu }\sim 10\) TeV. The gap can be compensated only if the process dynamics is different and more favourable at the proton collider, as in the case of QCD production. The general lesson has been illustrated for the production of new heavy particles, where the threshold is provided by the particle mass. But it also holds for the production of light SM particles with energies as high as \(E_{{\textrm{cm}}}\), which are very sensitive indirect probes of new physics. This makes exploration by high energy measurements more effective at muon than at proton colliders, as we will see in Sect. 2.4. Moreover, the large luminosity for high energy muon collisions produces the copious emission of effective vector bosons. In turn, they are responsible at once for the tremendous direct sensitivity of muon colliders to “Higgs portal” type new physics and for their excellent perspectives to measure single and double Higgs couplings precisely as we will see in Sects. 2.2 and 2.3, respectively.

On the other hand, no quantitative conclusion can be drawn from Fig. 2 on the comparison between the discovery reach of muon and proton colliders for the heavy particles. That assessment will be performed in the following section based on available proton collider projections.

2.2 Direct reach

The left panel of Fig. 3 displays the number of expected events, at a 10 TeV MuC with 10 \(\hbox {ab}^{-1}\) integrated luminosity, for the pair production due to electroweak interactions of Beyond the Standard Model (BSM) particles with variable mass M. The particles are named with a standard BSM terminology, however the results do not depend on the detailed BSM model (such as Supersymmetry or Composite Higgs) in which these particles emerge, but only on their Lorentz and gauge quantum numbers. The dominant production mechanism at high mass is the direct \(\mu ^+\mu ^-\) annihilation, whose cross-section flattens out below the kinematical threshold at \({\textrm{M}}=5\) TeV. The cross-section increase at low mass is due to the production from effective vector boson annihilation.

Fig. 3 Left panel: the number of expected events (from Ref. [24]) at a 10 TeV MuC, with 10 ab\(^{-1}\) luminosity, for several BSM particles. Right panel: \(95\%\) CL mass reach, from Ref. [25], at the HL-LHC (solid bars) and at the FCC-hh (shaded bars). The tentative discovery reach of a 10, 14 and 30 TeV MuC is reported as horizontal lines

Fig. 4 Left panel: exclusion and discovery mass reach on Higgsino and Wino dark matter candidates at muon colliders from disappearing tracks, and at other facilities. The plot is adapted from Ref. [47]. Right panel: exclusion contour [23] for a scalar singlet of mass \(m_\phi \) mixed with the Higgs boson with strength \(\sin \gamma \). More details in Sect. 5.1

The figure shows that with the target luminosity of 10 \(\hbox {ab}^{-1}\) a 10 TeV MuC can produce the BSM particles abundantly. If they decay to energetic and detectable SM final states, the new particles can definitely be discovered up to the kinematical threshold. Taking into account that the entire target integrated luminosity will be collected in 5 years, a run of a few months could be sufficient for a discovery. Afterwards, the large production rate will allow us to observe the new particles decaying in multiple final states and to measure kinematical distributions. We will thus be in a position to characterise the properties of the newly discovered states precisely. Similar considerations hold for muon colliders with higher \(E_{{\textrm{cm}}}\), except that the kinematical mass threshold grows to \(E_{{\textrm{cm}}}/2\). Notice however that the production cross-section decreases as \(1/E_{{\textrm{cm}}}^2\) (see Footnote 1). Therefore, we obtain as many events as in the left panel of Fig. 3 only if the integrated luminosity grows quadratically with the energy as in Eq. (1). A luminosity that is lower than this by a factor of around 10 would not affect the discovery reach, but it might reduce the potential for characterising the discoveries.
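For orientation, the quadratic scaling can be evaluated at the two higher energies considered in Fig. 3. Starting from the 10 \(\hbox {ab}^{-1}\) target at 10 TeV, the integrated luminosity that keeps the event yield of a \(1/E_{{\textrm{cm}}}^2\) cross-section unchanged is, as a simple arithmetic sketch,

\[
{\mathcal {L}}_{{\textrm{int}}}(14~{\textrm{TeV}})\simeq 10~{\textrm{ab}}^{-1}\times (1.4)^2\simeq 20~{\textrm{ab}}^{-1},\qquad {\mathcal {L}}_{{\textrm{int}}}(30~{\textrm{TeV}})\simeq 10~{\textrm{ab}}^{-1}\times 3^2=90~{\textrm{ab}}^{-1}\,.
\]

A luminosity ten times lower than these values would reduce the yields tenfold, which is the scenario referred to in the last sentence above.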

The direct reach of muon colliders vastly and generically exceeds the sensitivity of the High-Luminosity LHC (HL-LHC). This is illustrated by the solid bars on the right panel of Fig. 3, where we report the projected HL-LHC mass reach [25] on several BSM states. The \(95\%\) CL exclusion is reported, instead of the discovery, as a quantification of the physics reach. Specifically, we consider Composite Higgs fermionic top-partners T (e.g., the \(X_{5/3}\) and the \(T_{2/3}\)) and supersymmetric particles such as stops \({{\widetilde{t}}}\), charginos \({{\widetilde{\chi }}}_1^\pm \), stau leptons \({{\widetilde{\tau }}}\) and squarks \({{\widetilde{q}}}\). For each particle we report the highest possible mass reach, as obtained in the configuration for the BSM particle couplings and decay chains that maximises the hadron collider sensitivity. The reach of a 100 TeV proton-proton collider (FCC-hh) is shown as shaded bars on the same plot. The muon collider reach, displayed as horizontal lines for \(E_{{\textrm{cm}}}=10\), 14 and 30 TeV, exceeds that of the FCC-hh for several BSM candidates and in particular, as expected, for purely electroweak charged states. It should be noted that detailed muon collider sensitivity projections for the BSM candidates in Fig. 3 have not been performed yet. In general, a relatively limited literature exists on direct new physics searches at the MuC [26,27,28,29,30,31,32,33,34,35,36,37,38,39,40,41,42,43,44]. More studies would be desirable, also to offer targets to the design of the detector.

Several interesting BSM particles do not decay to easily detectable final states, and an assessment of their observability requires dedicated studies. A clear case is the one of minimal WIMP Dark Matter (DM) candidates. The charged state in the DM electroweak multiplet is copiously produced, but it decays to the invisible DM plus a soft undetectable pion, owing to the small mass-splitting. WIMP DM can be studied at muon colliders in several channels (such as mono-photon) without directly observing the charged state [45, 46]. Alternatively, one can instead exploit the disappearing tracks produced by the charged particle [47]. The result is displayed on the left panel of Fig. 4 for the simplest candidates, known as Higgsino and Wino. A 10 TeV muon collider reaches the “thermal” mass, marked with a dashed line, for which the observed relic abundance is obtained by thermal freeze out. Other minimal WIMP candidates become kinematically accessible at higher muon collider energies [45, 46]. Muon colliders could actually even probe some of these candidates when they are above the kinematical threshold, by studying their indirect effects on high-energy SM processes [48, 49]. A more extensive overview of the muon collider potential to probe WIMP DM is provided in Sect. 5.2.

Left panel: schematic representation of vector boson fusion or scattering processes. The collinear V bosons emitted from the muons participate in a process with hardness \(\sqrt{{\hat{s}}}\ll E_{{\textrm{cm}}}\). Right panel: number of expected events for selected SM processes at a muon collider with variable \(E_{{\textrm{cm}}}\) and luminosity scaling as in Eq. (1)

New physics particles are not necessarily coupled to the SM by gauge interaction. One setup that is relevant in several BSM scenarios (including models of baryogenesis, dark matter, and neutral naturalness; see Sect. 5.1) is the “Higgs portal” one, where the BSM particles interact most strongly with the Higgs field. By the Goldstone Boson Equivalence Theorem, Higgs field couplings are interactions with the longitudinal polarisations of the SM massive vector bosons W and Z, which enable Vector Boson Fusion (VBF) production of the new particles. A muon collider is extraordinarily sensitive to VBF production, owing to the large luminosity for effective vector bosons. This is illustrated on the right panel of Fig. 4, in the context of a benchmark model [23, 26] (see also [27, 28]) where the only new particle is a real scalar singlet with Higgs portal coupling. The coupling strength is traded for the strength of the mixing with the Higgs particle, \(\sin \gamma \), that the interaction induces. The scalar singlet is the simplest extension of the Higgs sector. Extensions with richer structure, such as involving a second Higgs doublet, are a priori easier to detect as one can exploit the electroweak production of the new charged Higgs bosons, as well as their VBF production. See Refs. [50,51,52,53,54] for dedicated studies, and Sect. 5.1 for a review.

In several cases the muon collider direct reach compares favourably to that of the most ambitious future proton collider project. This is not a universal statement: in particular, at a muon collider it is obviously difficult to access heavy particles that carry only QCD interactions. One might also expect a muon collider of 10 TeV to be generically less effective than a 100 TeV proton collider for the detection of particles that can be produced singly, for instance additional massive \(Z'\) vector bosons, which can be probed at the FCC-hh well above the 10 TeV mass scale. We will see in Sect. 2.4 that the situation is slightly more complex and that, in the case of \(Z'\)s, a 10 TeV MuC sensitivity actually exceeds that of the FCC-hh in most of the parameter space (see the right panel of Fig. 7).

2.3 A vector bosons collider

When two electroweak charged particles like muons collide at an energy much above the electroweak scale \(m_{{{\textsc {w}}}}\sim 100~\)GeV, they have a high probability to emit electroweak (EW) radiation. There are multiple types of EW radiation effects that can be observed at a muon collider and play a major role in muon collider physics. Actually we will argue in Sect. 2.5 that the experimental observation and the theoretical description of these phenomena emerge as a self-standing reason of interest in muon colliders.

Here we focus on the practical implications [11, 22,23,24, 55,56,57] of the collinear emission of nearly on-shell massive vector bosons, which is the analog in the EW context of the Weizsäcker–Williams emission of photons. The vector bosons V participate, as depicted in Fig. 5, in a scattering process with a hard scale \(\sqrt{{\hat{s}}}\) that is much lower than the muon collision energy \(E_{{\textrm{cm}}}\). The typical cross-section for VV annihilation processes is thus enhanced by \(E_{{\textrm{cm}}}^2/{{\hat{s}}}\), relative to the typical cross-section for \(\mu ^+\mu ^-\) annihilation, whose hard scale is instead \(E_{{\textrm{cm}}}\). The emission of the V bosons from the muons is suppressed by the EW coupling, but the suppression is mitigated or compensated by logarithms of the separation between the EW scale and \(E_{{\textrm{cm}}}\) (see [22, 23, 55] for a pedagogical overview). The net result is a very large cross-section for VBF processes that occur at \(\sqrt{{\hat{s}}}\sim m_{{{\textsc {w}}}}\), with a tail in \(\sqrt{{\hat{s}}}\) up to the TeV scale.
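The size of the parametric enhancement \(E_{{\textrm{cm}}}^2/{{\hat{s}}}\) is easy to evaluate. The following sketch does so for a few illustrative values of \(\sqrt{{\hat{s}}}\), chosen here only as examples. It deliberately ignores the EW coupling suppression and the logarithms mentioned in the text, so it is an order-of-magnitude guide and not a cross-section prediction.

```python
def vbf_enhancement(e_cm_tev, sqrt_s_hat_tev):
    """Parametric factor E_cm^2 / s_hat by which a VV process at hard scale
    sqrt(s_hat) is enhanced relative to direct annihilation at E_cm.
    Coupling and logarithmic factors are NOT included."""
    return (e_cm_tev / sqrt_s_hat_tev) ** 2


E_CM_TEV = 10.0
for sqrt_s_hat in (0.1, 0.5, 1.0):  # illustrative hard scales in TeV
    factor = vbf_enhancement(E_CM_TEV, sqrt_s_hat)
    print(f"sqrt(s_hat) = {sqrt_s_hat:3.1f} TeV -> enhancement ~ {factor:.0f}")
```

At \(\sqrt{{\hat{s}}}\) of order \(m_{{{\textsc {w}}}}\) the factor is of order \(10^4\) for a 10 TeV collider, and it drops to order 100 for a hard scale of 1 TeV.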

Left panel: \(1\sigma \) sensitivities (in %) from a 10-parameter fit in the \(\kappa \)-framework at a 10 TeV MuC with 10 ab\(^{-1}\), compared with HL-LHC. The effect of measurements from a 250 GeV \(e^+e^-\) Higgs factory is also reported. Right panel: sensitivity to \(\delta \kappa _\lambda \) for different \(E_{{\textrm{cm}}}\). The luminosity is as in Eq. (1) for all energies, apart from \(E_{{\textrm{cm}}}\hspace{-2pt}=\hspace{-2pt}3\) TeV, where doubled luminosity (of 2 ab\(^{-1}\)) is assumed. More details in Sect. 5.1

We already emphasised (see Fig. 3) the importance of VBF for the direct production of new physics particles. The relevance of VBF for probing new physics indirectly simply stems from the huge rate of VBF SM processes, summarised on the right panel of Fig. 5. In particular we see that a 10 TeV muon collider produces ten million Higgs bosons, which is around 10 times more than future \(e^+e^-\) Higgs factories. Since the Higgs bosons are produced in a relatively clean environment, without large physics backgrounds from QCD, a 10 TeV muon collider (over-)qualifies as a Higgs factory [23, 56,57,58,59]. Unlike \(e^+e^-\) Higgs factories, a muon collider also produces Higgs pairs copiously, enabling accurate and direct measurements of the Higgs trilinear coupling [22, 24, 56] and possibly also of the quadrilinear coupling [60].

The opportunities for Higgs physics at a muon collider are summarised extensively in Sect. 5.1. In Fig. 6 we report for illustration the results of a 10-parameter fit to the Higgs couplings in the \(\kappa \)-framework at a 10 TeV MuC, and the sensitivity projections on the anomalous Higgs trilinear coupling \(\delta \kappa _\lambda \). The table shows that a 10 TeV MuC will improve significantly and broadly our knowledge of the properties of the Higgs boson. The combination with the measurements performed at an \(e^+e^-\) Higgs factory, reported in the third column, does not affect the sensitivity to several couplings appreciably, showing the good precision that a muon collider alone can attain. However, it also shows complementarity with an \(e^+e^-\) Higgs factory program.

On the right panel of the figure we see that the performance of muon colliders in the measurement of \(\delta \kappa _\lambda \) is similar or much superior to that of the other future colliders where this measurement could be performed. In particular, CLIC measures \(\delta \kappa _\lambda \) at the \(10\%\) level [61], and the FCC-hh sensitivity ranges from 3.5 to \(8\%\) depending on detector assumptions [62]. A determination of \(\delta \kappa _\lambda \) that is far more accurate than the HL-LHC projections is possible already at a low energy stage of a muon collider with \(E_{{\textrm{cm}}}=3\) TeV, as discussed in Sect. 5.1.

The potential of a muon collider as a vector boson collider has not been explored fully. In particular a systematic investigation of vector boson scattering processes, such as \(WW\hspace{-3pt}\rightarrow \hspace{-3pt} WW\), has not been performed. The key role played by the Higgs boson to eliminate the energy growth of the corresponding Feynman amplitudes could be directly verified at a muon collider by means of differential measurements that extend well above 1 TeV for the invariant mass of the scattered vector bosons. Along similar lines, differential measurements of the \(WW\hspace{-3pt}\rightarrow \hspace{-3pt} HH\) process have been studied in [24, 56] (see also [22]) as an effective probe of the composite nature of the Higgs boson, with a reach that is comparable or superior to that of Higgs coupling measurements. A similar investigation was performed in [22, 23] (see also [22]) for \(WW\hspace{-3pt}\rightarrow \hspace{-3pt} t{{\overline{t}}}\), aimed at probing Higgs-top interactions.

2.4 High-energy measurements

Direct \(\mu ^+\mu ^-\) annihilation, such as HZ and \(t{{\overline{t}}}\) production, displays a number of expected events of the order of several thousands, reported in Fig. 5. These are far fewer than the events where a Higgs or a \(t{{\overline{t}}}\) pair is produced from VBF, but they are sharply different and easily distinguishable. The invariant mass of the particles produced by direct annihilation is indeed sharply peaked at the collider energy \(E_{{\textrm{cm}}}\), while the invariant mass rarely exceeds one tenth of \(E_{{\textrm{cm}}}\) in the VBF production mode.

Left panel: \(95\%\) reach on the Composite Higgs scenario from high-energy measurements in di-boson and di-fermion final states [63]. The green contour displays the sensitivity from “Universal” effects related with the composite nature of the Higgs boson and not of the top quark. The red contour includes the effects of top compositeness. Right panel: sensitivity to a minimal \(Z'\) [63]. Discovery contours at \(5\sigma \) are also reported in both panels

The good statistics and the limited or absent background thus enable few-percent level measurements of SM cross sections for hard scattering processes of energy \(E_{{\textrm{cm}}}=10\) TeV at the 10 TeV MuC. An incomplete list of the many possible measurements is provided in Ref. [63], including the resummed effects of EW radiation on the cross section predictions. It is worth emphasising that charged final states such as WH or \(\ell \nu \) are also copiously produced at a muon collider. The electric charge mismatch with the neutral \(\mu ^+\mu ^-\) initial state is compensated by the emission of soft and collinear W bosons, which occurs with high probability because of the large energy.

High energy scattering processes are as unique theoretically as they are experimentally [11, 24, 63]. They give direct access to the interactions among SM particles with 10 TeV energy, which in turn provide indirect sensitivity to new particles at the 100 TeV scale of mass. In fact, the effects on high-energy cross sections of new physics at energy \(\varLambda \gg E_{{\textrm{cm}}}\) generically scale as \((E_{{\textrm{cm}}}/\varLambda )^2\) relative to the SM. Percent-level measurements thus give access to \(\varLambda \sim 100\) TeV. This is an unprecedented reach for new physics theories endowed with a reasonable flavor structure. Notice in passing that high-energy measurements are also useful to investigate flavor non-universal phenomena, as we will see in Sect. 5.3.
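The quadratic scaling can be turned into a quick estimate of the new physics scale that a given measurement precision probes. The sketch below assumes that the relative deviation is exactly \((E/\varLambda )^2\), that is, with an order-one coefficient set to 1, which is a simplification made here for illustration. The function names are hypothetical.

```python
import math


def relative_effect(energy_tev, lambda_tev):
    """Relative deviation from the SM, assumed equal to (E / Lambda)^2."""
    return (energy_tev / lambda_tev) ** 2


def scale_reach_tev(energy_tev, precision):
    """New physics scale probed by a measurement of given relative precision."""
    return energy_tev / math.sqrt(precision)


# Percent-level measurement at 10 TeV
print(f"Reach at 10 TeV with 1% precision: {scale_reach_tev(10.0, 0.01):.0f} TeV")

# Same new physics scale seen in a process at 100 GeV (0.1 TeV)
print(f"Effect of Lambda = 100 TeV at 100 GeV: {relative_effect(0.1, 100.0):.1e}")
```

With these assumptions, the same \(\varLambda \) that produces a percent-level effect at 10 TeV produces an effect four orders of magnitude smaller at 100 GeV, which is why energy is such an effective lever.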

This mechanism is not novel. Major progress in particle physics always came from raising the available collision energy, producing either direct or indirect discoveries. Among the most relevant discoveries that did not proceed through the resonant production of new particles, there is the one of the inner structure of nucleons. This discovery could be achieved [64] only when the transferred energy in electron scattering could reach a significant fraction of the proton compositeness scale \(\varLambda _{{{\textsc {qcd}}}}=1/r_{p}=300\) MeV. Proton-compositeness effects became sizeable enough to be detected at that energy, precisely because of the quadratic enhancement mechanism we described above.

Figure 7 illustrates the tremendous reach on new physics of a 10 TeV MuC with 10 ab\(^{-1}\) integrated luminosity. The left panel (green contour) is the sensitivity to a scenario that explains the microscopic origin of the Higgs particle and of the scale of EW symmetry breaking by the fact that the Higgs is a composite particle. In the same scenario the top quark is likely to be composite as well, which in turn explains its large mass and suggests a “partial compositeness” origin of the SM flavour structure. Top quark compositeness produces additional signatures that extend the muon collider sensitivity up to the red contour. The sensitivity is reported in the plane formed by the typical coupling \(g_*\) and the typical mass \(m_*\) of the composite sector that delivers the Higgs. The scale \(m_*\) physically corresponds to the inverse of the geometric size of the Higgs particle. The coupling \(g_*\) is limited from around 1 to \(4\pi \), as in the figure. In the worst case scenario of intermediate \(g_*\), a 10 TeV MuC can thus probe the Higgs radius up to the inverse of 50 TeV, or discover that the Higgs is as tiny as (35 TeV\()^{-1}\). The sensitivity improves in proportion to the centre of mass energy of the muon collider.
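To give a feeling for the length scales involved, an inverse energy can be converted to a length with the standard factor \(\hbar c\simeq 197.327\) MeV fm. The short sketch below performs this conversion for the two values quoted above; the function name is hypothetical.

```python
HBAR_C_MEV_FM = 197.327  # hbar * c in MeV * fm
FM_TO_M = 1.0e-15        # one femtometre in metres
TEV_TO_MEV = 1.0e6


def inverse_energy_to_metres(energy_tev):
    """Length corresponding to 1/E in natural units, via hbar*c / E."""
    return HBAR_C_MEV_FM / (energy_tev * TEV_TO_MEV) * FM_TO_M


for m_star_tev in (50.0, 35.0):
    size_m = inverse_energy_to_metres(m_star_tev)
    print(f"(%.0f TeV)^-1 corresponds to about %.1e m" % (m_star_tev, size_m))
```

Both values correspond to a few times \(10^{-21}\) m, roughly five orders of magnitude below the femtometre scale of the proton.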

The figure also reports, as blue dash-dotted lines denoted as “Others”, the envelope of the \(95\%\) CL sensitivity projections of all the future collider projects that have been considered for the 2020 update of the European Strategy for Particle Physics, summarised in Ref. [25]. These lines include in particular the sensitivity of very accurate measurements at the EW scale performed at possible future \(e^+e^-\) Higgs, electroweak and Top factories. These measurements are not competitive because new physics at \(\varLambda \sim 100\) TeV produces unobservable one part per million effects on 100 GeV energy processes. High-energy measurements at a 100 TeV proton collider are also included in the dash-dotted lines. They are not competitive either, because the effective parton luminosity at high energy is much lower than that of a 10 TeV MuC, as explained in Sect. 2.1. For example the cross-section for the production of an \(e^+e^-\) pair with more than 9 TeV invariant mass at the FCC-hh is only 40 ab, while it is 900 ab at a 10 TeV muon collider. Even with a somewhat higher integrated luminosity, the FCC-hh just does not have enough statistics to compete with a 10 TeV MuC.
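The statistics argument can be made explicit by converting the two quoted cross-sections into event counts. In the sketch below the MuC luminosity is the 10 ab\(^{-1}\) target used throughout, while the FCC-hh value of 30 ab\(^{-1}\) is an assumption introduced here to represent a "somewhat higher" luminosity; it is not taken from the text. Backgrounds and efficiencies are ignored.

```python
import math

# Cross-sections (in ab) quoted in the text for e+e- pairs above 9 TeV.
XSEC_AB = {"FCC-hh": 40.0, "10 TeV MuC": 900.0}

# Integrated luminosities in ab^-1. The MuC value is the target quoted in the
# text; the FCC-hh value is an ASSUMED placeholder, not a number from the text.
LUMI_AB_INV = {"FCC-hh": 30.0, "10 TeV MuC": 10.0}


def event_count(collider):
    """Expected events = cross-section times integrated luminosity."""
    return XSEC_AB[collider] * LUMI_AB_INV[collider]


def stat_precision(collider):
    """Relative statistical uncertainty, approximated as 1/sqrt(N)."""
    return 1.0 / math.sqrt(event_count(collider))


for name in XSEC_AB:
    print(f"{name:>10}: {event_count(name):6.0f} events, "
          f"stat. precision ~ {100.0 * stat_precision(name):.1f}%")
```

Even with three times the luminosity, the assumed FCC-hh sample is several times smaller, so the purely statistical precision is correspondingly worse.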

The right panel of Fig. 7 considers a simpler new physics scenario, where the only BSM state is a heavy \(Z'\) spin-one particle. The “Others” line also includes the sensitivity of the FCC-hh from direct \(Z'\) production. The line exceeds the 10 TeV MuC sensitivity contour (in green) only in a tiny region with \(M_{Z'}\) around 20 TeV and small \(Z'\) coupling. This result substantiates our claim in Sect. 2.2 that a reach comparison based on the \(2\rightarrow 1\) single production of the new states is simplistic. Single \(2\rightarrow 1\) production couplings can produce indirect effects in \(2\rightarrow 2\) scattering by the virtual exchange of the new particle, and the muon collider is extraordinarily sensitive to these effects. Which collider wins is model-dependent. In the simple benchmark \(Z'\) scenario, and in the motivated framework of Higgs compositeness that future colliders are urged to explore, the muon collider is just a superior device.

We have seen that high energy measurements at a muon collider enable the indirect discovery of new physics at a scale in the ballpark of 100 TeV. However the muon collider also offers amazing opportunities for direct discoveries at a mass of several TeV, and unique opportunities to characterise the properties of the discovered particles, as emphasised in Sect. 2.2. High energy measurements will enable us to take one step further in the discovery characterisation, by probing the interactions of the new particles well above their mass. For instance in the Composite Higgs scenario one could first discover Top Partner particles of a few TeV mass, and next study their dynamics and their indirect effects on SM processes. This might be sufficient to pin down the detailed theoretical description of the newly discovered sector, which would thus be both discovered and theoretically characterised at the same collider. Higgs coupling determinations and other precise measurements that exploit the enormous luminosity for vector boson collisions, described in Sect. 2.3, will also play a major role in this endeavour.

We can dream of such a glorious outcome of the project, where an entire new sector is discovered and characterised in detail at the same machine, only because energy and precision are simultaneously available at a muon collider.

2.5 Electroweak radiation

The novel experimental setup offered by lepton collisions at 10 TeV energy or more outlines possibilities for theoretical exploration that are at once novel and speculative, yet robustly anchored to reality and to phenomenological applications.

The muon collider will probe for the first time a new regime of EW interactions, where the scale \(m_{{{\textsc {w}}}}\hspace{-2pt}\sim \hspace{-2pt}100~\)GeV of EW symmetry breaking plays the role of a small IR scale, relative to the much larger collision energy. This large scale separation triggers a number of novel phenomena that we collectively denote as “EW radiation” effects. Since they are prominent at muon collider energies, the comprehension of these phenomena is of utmost importance not only for developing a correct physical picture but also to achieve the needed accuracy of the theoretical predictions.

The EW radiation effects that the muon collider will observe, which will play a crucial role in the assessment of its sensitivity to new physics, can be broadly divided in two classes.

The first class includes the emission of low-virtuality vector bosons from the initial muons. It effectively makes the muon collider a high-luminosity vector boson collider, on top of a very high-energy lepton-lepton machine. The compelling associated physics studies described in Sect. 2.3 pose challenges for fixed-order theoretical predictions and Monte Carlo event generation even at tree-level, owing to the sharp features of the Monte Carlo integrand induced by the large scale separation and the need to correctly handle QED and weak radiation at the same time, respecting EW gauge invariance. Strategies to address these challenges are available in WHIZARD [65]; they have recently been implemented in MadGraph5_aMC@NLO [22, 66] and applied to several phenomenological studies in the muon collider context. The dominance of such initial-state collinear radiation will eventually require a systematic theoretical modelling in terms of EW Parton Distribution Functions, where multiple collinear radiation effects are resummed. First studies show that EW resummation effects can be significant at a 10 TeV MuC [55].

The second class of effects are the virtual and real emissions of soft and soft-collinear EW radiation. They affect most strongly the measurements performed at the highest energy, described in Sect. 2.4, and impact the corresponding cross-section predictions at order one [63]. They also give rise to novel processes such as the copious production of charged hard final states out of the neutral \(\mu ^+\mu ^-\) initial state, and to new opportunities to detect new short distance physics by studying, for one given hard final state, different patterns of radiation emission [63]. The deep connection with the sensitivity to new physics makes the study of EW radiation an inherently multidisciplinary enterprise that overcomes the traditional separation between “SM background” and “BSM signal” studies.

At very high energies EW radiation displays similarities with QCD and QED radiation, but also remarkable differences that pose profound theoretical challenges.

First, since EW symmetry is broken at low energy, different particles in the same EW multiplet – i.e., with different “EW colour” like the W and the Z – are distinguishable. In particular the beam particles (e.g., charged left-handed leptons) carry definite colour, thus violating the KLN theorem assumptions. Therefore, no cancellation takes place between virtual and real radiation contributions, regardless of the final state observable inclusiveness [67, 68]. Furthermore, the EW colour of the final state particles can be measured, and it must be measured for a sufficiently accurate exploration of the SM and BSM dynamics. For instance, distinguishing the top from the bottom quark or the W from the Z boson (or photon) is necessary to probe accurately and comprehensively new short-distance physical laws that can affect the dynamics of the different particles differently. When dealing with QCD and QED radiation only, it is sufficient instead to consider “safe” observables where QCD/QED radiation effects can be systematically accounted for and organised in well-behaved (small) corrections. The relevant observables for EW physics at high energy are on the contrary dramatically affected by EW radiation effects.

Second, in analogy with QCD and unlike QED, for EW radiation the IR scale is physical. However, at variance with QCD, the theory is weakly-coupled at the IR scale, and the EW “partons” do not “hadronise”. EW showering therefore always ends at virtualities of order 100 GeV, where heavy EW states (t, W, Z, H) coexist with light SM ones, and then decay. Having a complete and consistent description of the evolution from high virtualities where EW symmetry is restored, to the weak scale where it is broken, to GeV scales, including also leading QED/QCD effects in all regimes is a new challenge [69].

Such a strong phenomenological motivation, and the peculiarities of the problem, stimulate work and offer a new perspective on resummation and showering techniques, or more in general trigger theoretical progress on IR physics. Fixed-order calculations will also play an important role. Indeed while the resummation of the leading logarithmic effects of radiation is mandatory at muon collider energies [63, 70], subleading logarithms could perhaps be included at fixed order. Furthermore one should eventually develop a description where resummation is merged with fixed-order calculations in an exclusive way, providing the most accurate predictions in the corresponding regions of the phase space, as currently done for QCD computations.

A significant literature on EW radiation exists, starting from the earliest works on double-logarithm resummations based on Asymptotic Dynamics [67, 68] or on the IR evolution equation [71, 72]. The factorisation of virtual massive vector boson emissions, leading to the notion of effective vector boson, has also long been known [73,74,75,76]. More recent progress includes resummation at the next to leading log in the Soft-Collinear Effective Theory framework [77,78,79,80,81], the operatorial definition of the distribution functions for EW partons [70, 82, 83] and the calculation of the corresponding evolution, as well as the calculation of the EW splitting functions [84] for EW showering and the proof of collinear EW emission factorisation [85,86,87]. Additionally, fixed-order virtual EW logarithms are known for generic processes at the 1-loop order [88, 89] and are implemented in Sherpa [90] and MadGraph5_aMC@NLO [91]. Exact EW corrections at NLO are available in an automatic form for arbitrary processes in the SM, for example in the MadGraph5_aMC@NLO [92] and in the Sherpa+Recola [93] packages or using WHIZARD+Recola [94]. Implementations of EW showering are also available through a limited set of splittings in Pythia 8 [95, 96] and in a complete way in Vincia [97].

While we are still far from an accurate systematic understanding of EW radiation, the present-day knowledge is sufficient to enable rapid progress in the next few years. The outcome will be an indispensable toolkit for muon collider predictions. Moreover, while we do expect that EW radiation phenomena can in principle be described by the Standard Model, they still qualify as “new phenomena” until we are able to control the accuracy of the predictions and verify them experimentally. Such investigation is a self-standing reason of scientific interest in the muon collider project.

2.6 Muon-specific opportunities

In the quest for generic exploration, engineering collisions between muons and anti-muons is in itself a unique opportunity. The concept can be made concrete by considering scenarios where the sensitivity to new physics stems from colliding muons, rather than electrons or other particles. An overview of such “muon-specific” opportunities is provided in Sect. 5.3 based on the available literature [23, 29,30,31,32,33,34,35,36,37,38, 51, 98,99,100,101,102,103,104,105,106,107,108,109,110,111,112]. A brief discussion is reported below.

It is worth emphasising in this context that lepton flavour universality is not a fundamental property of Nature. Therefore new physics could exist, coupled to muons, that we could not yet discover using electrons. In fact, it is not only conceivable, but even expected that new physics could couple more strongly to muons than to electrons. Even in the SM lepton flavour universality is violated maximally by the Yukawa interaction with the Higgs field, which is larger for muons than for electrons. New physics associated to the Higgs or to flavour could follow the same pattern, offering a competitive advantage to muon over electron collisions at similar energies. The comparison with proton colliders is less straightforward. By the same type of considerations one expects larger couplings with quarks, especially with the ones of the second and third generation. This expectation should be folded in with the much lower luminosity for heavier quarks at proton colliders than for muons at a muon collider. The perspectives of muon versus proton colliders are model-dependent and of course strongly dependent on the energy of the muon and of the proton collider.

Recently, experimental anomalies in g-2 and in B-meson physics measurements triggered numerous studies of muon-philic new physics. These results provide interesting quantitative illustrations of the generic added value for exploration of a collider that employs second-generation particles. They show the muon collider potential to probe new physics that is presently untested because it couples mostly to muons. These models, and others with the same property, will still exist – though in a slightly different region of their parameter space – even if the anomalies are eventually explained by SM physics, as the most recent LHCb results suggest for the B-meson anomalies [113, 114].

Illustrative results are reported in Fig. 8, displaying the minimal muon collider energy that is needed to probe different types of new physics potentially responsible for the g-2 anomaly. The solid lines correspond to limits on contact interaction operators due to unspecified new physics, that contribute at the same time to the muon g-2 and to high-energy scattering processes. Semi-leptonic muon-charm (muon-top) interactions that can account for the g-2 discrepancy can be probed by di-jets at a 3 TeV (10 TeV) MuC, whereas a 30 TeV collider could even probe a tree-level contribution to the muon electromagnetic dipole operator directly through \(\mu \mu \rightarrow h\gamma \). These sensitivity estimates are agnostic on the specific new physics model responsible for the anomaly. Explicit models typically predict light particles that can be directly discovered at the muon collider, and correlated deviations in additional observables. We will see in Sect. 5.3 that a complete coverage of several models that accommodate the current discrepancy is possible already at a 3 TeV MuC, and a collider of tens of TeV could provide a full-fledged no-lose theorem.

Summary, from Sect. 5.3, of the muon collider perspectives to probe the muon g-2 anomaly

3 Facility

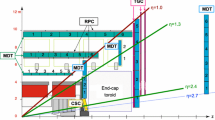

The Muon Accelerator Programme (MAP) has developed a concept for the muon collider, shown in Fig. 9. This concept serves as the starting point for the baseline concept and a seed for the tentative parameters in the design studies initiated by the International Muon Collider Collaboration (IMCC) [16]. The tentative target parameters are reported in Table 1.

This section describes the physical principles that motivate the present baseline design and outlines promising avenues that may yield improved performances or efficiency. Technical issues are also highlighted. Section 3.1 provides an overview of the overall design concept. An approximate expression for luminosity is derived in some detail, as this motivates many of the design choices. Considerations of attainable energy, facility scale and power requirements are described. Possible upgrade schemes and timescales are outlined. The detailed description of design concepts and technical issues surrounding each subsystem are reported in Sects. 3.2–3.7, describing the proton source, target, front end, muon cooling system, acceleration and collider in turn. The concepts and technologies developed for the muon collider will require technical demonstration, to be achieved by a number of demonstrator facilities described in Sect. 3.8. Section 3.10 is devoted to the synergies between the R&D programme for the muon collider and that of other muon-beam facilities. We summarise our conclusions in Sect. 3.11.

3.1 Design overview

Most muon collider designs foresee that muons are created as a product of a high power proton beam incident on a target. Most muons are produced by decay of pions created in the target. In order to capture a large number of negatively and positively charged secondary particles over a broad range of momenta, a solenoid focusing system is used rather than the more conventional horn-type focusing. Following the target the beam is cleaned and most pions decay leaving a beam composed mostly of muons. The muons are captured longitudinally in a sequence of RF cavities arranged to manipulate the single short bunch with large energy spread into a series of bunches each with a much smaller energy spread.

Following creation of a bunch train, the beam is split into the different charge species and each charge species is cooled separately by a 6D ionisation cooling system. Ionisation cooling increases the beam brightness and hence luminosity. An initial cooling line reduces the muon phase space volume sufficiently that each bunch train can be remerged into a single bunch. Further cooling then reduces the longitudinal and transverse beam size. Final cooling systems for each charge species result in a beam suitable for acceleration and collision.

Ionisation cooling is chosen as it operates on a time scale that is competitive with the muon lifetime. Despite the short time scale, a significant number of muons are lost due to muon decay as well as transmission losses. Nonetheless the increased beam brightness provided by the cooling system yields a significant increase in luminosity.

The beam is then accelerated. Rapid acceleration is required in order to maintain an acceleration time that is much shorter than the muon lifetime in the laboratory frame. Satisfactory yields may be achieved by exploiting the increase in muon lifetime during acceleration, due to Lorentz time dilation, to maximise the acceleration efficiency \(\eta _\tau \). The number of muons N changes with time t according to

\[ \frac{dN}{dt} = -\frac{N}{\gamma \tau _\mu } = -\frac{m_\mu c^2}{E\,\tau _\mu }\,N \]

where \(m_\mu \), E and \(\tau _\mu \) are the muon mass, energy and lifetime respectively. Assuming the muons travel at close to the speed of light c through a mean field gradient \({\bar{V}}\), their energy changes as \(dE/dt=e{\bar{V}}c\), where e is the muon charge. We thus find

\[ \frac{dN}{dE} = -\frac{1}{\delta _\tau }\,\frac{N}{E} \]

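where \(\delta _\tau = e{\bar{V}} \tau _\mu /m_\mu c\) is the mean change in energy in one muon lifetime, normalised to the muon rest energy. Integrating yields the acceleration efficiency

\[ \eta _\tau = \frac{N_\text {final}}{N_\text {initial}} = \prod _i \left( \frac{E_{i,\text {out}}}{E_{i,\text {in}}} \right) ^{-1/\delta _{\tau ,i}} \]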

where the product is taken over all accelerator subsystems. Mean gradients \({\bar{V}} \sim O(1{-}10)\) MV/m are possible, with higher gradients available in the early parts of the accelerator chain, yielding \(\delta _\tau \sim O(10)\gg 1\). Muons can thus be accelerated faster than they decay, entailing a limited loss of muons during the acceleration process.
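As a rough numerical illustration of this estimate, the following Python sketch evaluates \(\delta _\tau \) and the decay survival of a chain of stages, assuming the relation \(dN/dE = -N/(\delta _\tau E)\) implied by the definitions above. The stage energies and gradients are hypothetical values chosen only for illustration; they are not parameters of the baseline design.

```python
import math

MUON_REST_ENERGY_MEV = 105.658   # m_mu c^2
MUON_LIFETIME_S = 2.197e-6       # tau_mu in the muon rest frame
SPEED_OF_LIGHT_M_S = 2.998e8

def delta_tau(mean_gradient_mv_per_m):
    """Energy gained in one muon lifetime, divided by the muon rest energy."""
    gain_mev = mean_gradient_mv_per_m * SPEED_OF_LIGHT_M_S * MUON_LIFETIME_S
    return gain_mev / MUON_REST_ENERGY_MEV

def stage_survival(e_in_gev, e_out_gev, mean_gradient_mv_per_m):
    """Fraction of muons surviving decay in one stage: (E_out/E_in)^(-1/delta_tau)."""
    return (e_out_gev / e_in_gev) ** (-1.0 / delta_tau(mean_gradient_mv_per_m))

# Hypothetical chain (assumption): (E_in [GeV], E_out [GeV], mean gradient [MV/m]).
stages = [(0.25, 5.0, 10.0), (5.0, 60.0, 5.0), (60.0, 1500.0, 2.0)]

# The overall efficiency is the product of the per-stage survival fractions.
eta_tau = math.prod(stage_survival(*stage) for stage in stages)

print(f"delta_tau at 1 MV/m: {delta_tau(1.0):.2f}")
print(f"overall decay survival: {eta_tau:.2f}")
```

Because the survival depends only on the ratio of extraction to injection energy and on \(\delta _\tau \), the stages with the lowest mean gradient dominate the decay losses in such a chain. Transmission losses are not included.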

At low energy rapid acceleration is achieved using a linear accelerator, in order to maximise the average accelerating gradient in this relatively short section. At higher energies recirculation may be used to improve the system efficiency, for example in a dogbone recirculator. Finally, a sequence of pulsed synchrotrons brings the beam up to final energy. Synchrotrons that employ a combination of fixed high-field superconducting dipoles and lower-field pulsed dipoles are under study. The muons are eventually transferred into a low-circumference collider ring where collisions occur.

Luminosity

The muon collider benefits from significant luminosity even at high energies. Many of the design parameters for a muon collider are driven by the need to achieve high luminosity. An approximate expression for luminosity may be derived to inform design choices and highlight the critical parameters for optimisation. In particular, proton sources are a relatively well-known technology, with examples such as SNS and J-PARC in a similar class to the proton driver required for the muon collider. Muon beam facilities comparable to the muon collider have instead never been constructed. In order to quantify the required performance for a muon collider facility, it is convenient to express the luminosity in terms of the proton source parameters and muon facility performance indicators, for example the final muon energy, muon collection efficiency and muon beam quality.

In each beam crossing in a collider the integrated luminosity increases by [115]

\[ \Delta {\mathcal {L}}_j = \frac{N_{+,j}\,N_{-,j}}{4\pi \sigma _\perp ^2} \]

where \(N_{\pm ,j}\) are the number of muons in each positively and negatively charged bunch on the \(j^{th}\) crossing and \(\sigma _\perp \) is the geometric mean of the horizontal (x) and vertical (y) RMS beam sizes, assumed to be the same for both charge species.

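The number of particles in each beam on the \(j^{th}\) crossing decreases due to muon decay as

\[ N_{\pm ,j} = N_\pm \exp \left( -\frac{2\pi R\,j}{c\gamma \tau _\mu }\right) \]

where \(N_\pm \) is the number of muons per bunch injected into the collider, R is the collider radius and \(\gamma \) the Lorentz factor of the muons. If the facility has a repetition rate of \(f_r\) acceleration cycles per second and \(n_b\) bunches circulate in the collider, the luminosity will be

\[ {\mathcal {L}} = f_r\,n_b \sum _{j=0}^{j_\text {max}} \frac{N_+ N_-}{4\pi \sigma _\perp ^2} \exp \left( -\frac{4\pi R\,j}{c\gamma \tau _\mu }\right) \]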

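For the designs discussed here the muon passes around the collider ring many times (\(j_\text {max}\rightarrow \infty \)) so we can sum the geometric series. Furthermore, \(2 \pi R/(c\gamma \tau _\mu )\ll 1\), therefore to a good approximation

\[ {\mathcal {L}} \approx f_r\,n_b\,\frac{N_+ N_-}{4\pi \sigma _\perp ^2}\,\frac{c\gamma \tau _\mu }{4\pi R} \]

The average collider radius R, in terms of the average bending field \({\bar{B}}\), is \(R = p/(e{\bar{B}}) \approx \gamma m_\mu c / (e{\bar{B}})\) and

\[ {\mathcal {L}} \approx f_r\,n_b\,\frac{N_+ N_-}{4\pi \sigma _\perp ^2}\,\frac{e{\bar{B}}\tau _\mu }{4\pi m_\mu } \]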

The transverse beam size \(\sigma _\perp \) may be expressed in terms of the beam quality (emittance) and the focusing provided by the magnets. \(\varepsilon _\perp \) and \(\varepsilon _l\) are the normalised emittances in transverse and longitudinal coordinates; a small \(\varepsilon \) indicates a beam occupying a small region in position and momentum phase space. To a good approximation \(\varepsilon \) is conserved during acceleration. The degree to which the beam is focused is denoted by the lattice Twiss parameter \(\beta ^*_\perp \). For a short bunch

\[ \sigma _\perp = \sqrt{\frac{\varepsilon _\perp \beta ^*_\perp }{\gamma }} \tag{11} \]

Stronger lenses create a tighter focus and make the beam size smaller at the interaction point, reducing \(\beta ^*_\perp \). The minimum beam size is practically limited by the “hourglass effect”; when the focal length of the lensing system is much shorter than the length of the beam itself, the average beam size at the crossing is dominated by particles that are not at the focus [116]. For example, when the RMS bunch length is not zero, but \(\sigma _z = \beta ^*_\perp \), Eq. (11) is replaced by

\[ \sigma _\perp = \sqrt{\frac{\varepsilon _\perp \beta ^*_\perp }{\gamma \,f_{hg}}} \]

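with an hourglass factor \(f_{hg} \approx 0.76\). The RMS longitudinal emittance is \(\varepsilon _l = \gamma m_\mu c^2 \sigma _\delta \sigma _z\) where \(\sigma _\delta \) is the fractional RMS energy spread, so the luminosity may be expressed as

\[ {\mathcal {L}} \approx \frac{e\tau _\mu }{16\pi ^2 m_\mu ^2 c^2}\, \frac{f_{hg}\,\sigma _\delta \,{\bar{B}}\,E_\mu ^2}{\varepsilon _\perp \varepsilon _l}\, f_r\,n_b\,N_+ N_- \]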

where \(E_\mu =\gamma m_\mu c^2\) is the energy of the colliding muons.
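The quoted hourglass factor can be checked numerically. The sketch below uses the standard closed-form luminosity reduction factor for round Gaussian beams colliding head-on with equal \(\beta ^*\), \(H(u) = \sqrt{\pi }\,u\,e^{u^2}\,\mathrm {erfc}(u)\) with \(u = \beta ^*_\perp /\sigma _z\). This formula is an assumption brought in for illustration and is not taken from the text above.

```python
import math

def hourglass_factor(beta_star, sigma_z):
    """Luminosity reduction for round Gaussian beams, head-on, equal beta*.

    Assumed closed form: sqrt(pi) * u * exp(u^2) * erfc(u), u = beta*/sigma_z.
    The direct evaluation overflows for u above roughly 26; that range is not
    needed here, since the factor is already close to 1 for u of a few.
    """
    u = beta_star / sigma_z
    return math.sqrt(math.pi) * u * math.exp(u * u) * math.erfc(u)

# Case discussed in the text: bunch length equal to beta*.
f_hg = hourglass_factor(1.0, 1.0)
print(f"f_hg for sigma_z = beta*: {f_hg:.3f}")
```

Under this assumption the factor for \(\sigma _z = \beta ^*_\perp \) agrees with the value of about 0.76 quoted above, and it approaches 1 as the bunch becomes short compared with \(\beta ^*_\perp \).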

Naively, the number of muons reaching the accelerator may be obtained from the number and energy of protons, i.e. from the proton beam power. This assumes that the proton energy is fully converted to pions and that the capture and beam cooling systems have no losses. In reality pion production is more complicated; practical constraints such as pion reabsorption, other particle production processes and geometrical constraints in the target have a significant effect. Decay and transmission losses in the ionisation cooling system also significantly degrade the efficiency.

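The final number of muons per bunch in the collider, \(N_\pm \), can be related to the proton beam power on target \(P_p\) and the conversion efficiency per proton per unit energy \(\eta _\pm \) by

\[ N_\pm = \frac{\eta _\pm P_p}{f_r\,n_b} \]

Overall the luminosity may be expressed as

\[ {\mathcal {L}} \approx K_L\, \frac{f_{hg}\,\sigma _\delta \,{\bar{B}}\,P_+ P_-}{\varepsilon _\perp \varepsilon _l\,f_r\,n_b}, \qquad P_\pm = \eta _\pm P_p E_\mu \]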

where \(K_L = 4.38 \times 10^{36} \mathrm { \, MeV \, MW^{-2} \, T^{-1} \, s^{-2}} \) and \(P_\pm \) is the muon beam power per species.
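The value of \(K_L\) can be cross-checked from physical constants. The sketch below assumes that \(K_L = e\tau _\mu /(16\pi ^2 m_\mu ^2 c^2\)), which is what the derivation in this section gives when the luminosity is written as \(K_L f_{hg} \sigma _\delta {\bar{B}} P_+ P_- /(\varepsilon _\perp \varepsilon _l f_r n_b)\), with \(\varepsilon _\perp \) in metres and \(\varepsilon _l\) in MeV m. This identification is inferred from the quoted units rather than quoted from the source.

```python
import math

E_CHARGE_C = 1.602177e-19
MUON_LIFETIME_S = 2.19698e-6
MUON_REST_ENERGY_J = 105.6584e6 * E_CHARGE_C   # m_mu c^2 converted from eV to J
SPEED_OF_LIGHT_M_S = 2.997925e8

def k_l_si():
    """Assumed K_L = e tau / (16 pi^2 (m c)^2), evaluated in SI units."""
    m_c_squared = (MUON_REST_ENERGY_J / SPEED_OF_LIGHT_M_S) ** 2   # (m_mu c)^2
    return E_CHARGE_C * MUON_LIFETIME_S / (16.0 * math.pi ** 2 * m_c_squared)

def k_l_quoted_units():
    """Convert from J W^-2 T^-1 s^-2 to MeV MW^-2 T^-1 s^-2."""
    joule_per_mev = 1.0e6 * E_CHARGE_C
    # W^-2 -> MW^-2 multiplies the number by 1e12; J -> MeV divides by J/MeV.
    return k_l_si() * 1.0e12 / joule_per_mev

print(f"K_L = {k_l_quoted_units():.3e} MeV MW^-2 T^-1 s^-2")
```

Under this assumption the result agrees with the quoted \(K_L\) to within about one percent, which suggests that the hourglass factor is not absorbed into \(K_L\).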

This luminosity dependence has a number of consequences. The luminosity improves approximately with the square of energy at fixed average bending field. We thus find the desired scaling in Eq. (1) that entails, as discussed in the previous section, a constant rate for pair production of very massive particles, as well as a growing VBF rate for precision measurements. The quadratic scaling of the luminosity with energy is peculiar to muon colliders and is not present, for example, in a linear collider. This is because the beam can be recirculated many times through the interaction point and beamstrahlung has a negligible effect on the focusing that may be achieved at the interaction point of the muon collider. This yields an improvement in power efficiency with energy.

The luminosity is highest for collider rings having strong dipole fields (large \({\bar{B}}\)), so that the circumference is smaller and muons can pass through the interaction region many times before decaying. For this reason a separate collider ring with the highest available dipole fields is proposed after the final acceleration stage, as in Fig. 9.
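The dependence on \({\bar{B}}\) can be made concrete by counting turns. With \(R \approx \gamma m_\mu c/(e{\bar{B}})\), the number of turns completed in one time-dilated lifetime is \(c\gamma \tau _\mu /(2\pi R) = e{\bar{B}}\tau _\mu /(2\pi m_\mu )\), which is independent of energy. The sketch below evaluates this expression; the field values are arbitrary examples, not design parameters.

```python
import math

E_CHARGE_C = 1.602177e-19
MUON_LIFETIME_S = 2.19698e-6
MUON_MASS_KG = 1.883532e-28

def turns_per_lifetime(mean_field_tesla):
    """Turns completed in one dilated muon lifetime: e B tau / (2 pi m)."""
    return E_CHARGE_C * mean_field_tesla * MUON_LIFETIME_S / (2.0 * math.pi * MUON_MASS_KG)

# Example average bending fields in tesla (illustrative assumptions).
for field in (5.0, 10.0, 16.0):
    print(f"B = {field:4.1f} T -> about {turns_per_lifetime(field):.0f} turns per lifetime")
```

This gives roughly 300 turns per tesla of average bending field. Since both beams decay, the number of turns that effectively contribute to the luminosity is half of this.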

The luminosity is highest for a small number of very high intensity bunches. The MAP design demanded a single muon bunch of each charge, which yields the highest luminosity per detector. Such a design would enable detectors to be installed at two interaction points.

The luminosity is inversely proportional to the facility repetition rate, assuming a fixed proton beam power. For the baseline design, a low repetition rate has been chosen relative to equivalent pulsed proton sources.

The luminosity decreases with the product of the transverse and longitudinal emittance. It is important to achieve a low beam emittance in order to deliver satisfactory luminosity, while maintaining the highest possible efficiency \(\eta _\pm \) of converting protons to muons.

Based on these considerations, an approximate guide to the luminosity normalised to beam power is shown in Fig. 10 and compared with that of CLIC.

Facility size

The geometric dimensions of the MuC depend on future technology and design choices, but some indication of the dimensions can already be given. The facility scale is expected to be driven by the pulsed synchrotrons in the acceleration system.

The rapid pulsing required in the synchrotrons precludes the use of high-field ramped superconducting magnets such as those used in the LHC. Static high-field superconducting dipoles are proposed combined with rapidly pulsed low-field dipoles. As the beam accelerates the pulsed dipoles are ramped, enabling variation of the mean dipole field. The static dipoles provide a relatively compact and efficient bend.

Preliminary estimates indicate that acceleration up to 3 TeV centre-of-mass energy, assuming 10 T static dipoles and pulsed dipoles with a field swing of ±1.8 T, would require a ring of circumference around 10 km. Around 60% of the ring is estimated to be required for pulsed and static bending dipoles. Acceleration up to 10 TeV centre-of-mass energy would require 16 T static dipoles, which are only expected to become available later in the century, and an approximately 70% dipole packing fraction. A 10 TeV facility could be implemented as an upgrade to the 3 TeV facility, as discussed below. Estimates indicate that a ring circumference of up to 35 km may be required. Tuning to fit the rings into existing infrastructure such as the 26.7 km circumference LHC tunnel is possible, for example by changing the energy swing in each ring so that more or less space is required for pulsed dipoles. Options that have a fixed dipole field that varies radially in the same superconducting magnet are under study, which may enable an increased average dipole field to be considered.
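The quoted circumferences can be checked against the magnetic rigidity. For a beam of momentum p the dipoles must supply an integrated field of \(2\pi p/e\) around the ring, which is about \(2\pi \times 3.3356\) T m per GeV/c. The sketch below computes the mean field over the dipole length implied by the circumferences and packing fractions quoted above, taking the beam energy to be half the centre-of-mass energy. It is only a consistency check under these simplifying assumptions.

```python
import math

RIGIDITY_TM_PER_GEV = 3.33564   # B*rho in T m per GeV/c of momentum

def mean_dipole_field(beam_energy_gev, circumference_km, packing_fraction):
    """Mean field over the dipole length needed to close the ring at top energy."""
    # Ultra-relativistic muons: momentum in GeV/c is taken equal to energy in GeV.
    integrated_field_tm = 2.0 * math.pi * RIGIDITY_TM_PER_GEV * beam_energy_gev
    dipole_length_m = circumference_km * 1.0e3 * packing_fraction
    return integrated_field_tm / dipole_length_m

# Numbers quoted in the text: 3 TeV -> 10 km, 60%; 10 TeV -> up to 35 km, 70%.
field_3tev = mean_dipole_field(1500.0, 10.0, 0.60)
field_10tev = mean_dipole_field(5000.0, 35.0, 0.70)
print(f"3 TeV stage:  mean dipole field of about {field_3tev:.1f} T")
print(f"10 TeV stage: mean dipole field of about {field_10tev:.1f} T")
```

Both values lie between the peak field of the pulsed dipoles and that of the static dipoles, as expected for a lattice that interleaves the two magnet types.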

Wall-plug power requirements

The power usage of future accelerator facilities, often referred to as the ‘wall-plug power’, is of great concern and in future may be a stronger practical constraint than the financial cost. The goal is to keep the wall-plug power consumption of the 10 TeV MuC well below the level estimated for CLIC at 3 TeV (550 MW) or FCC-hh (560 MW). This seems readily achievable; the facility is considerably shorter than other proposed colliders. Reuse of magnets and RF should make the facility more efficient than linear colliders, while the lower energy requirement should result in lower power requirements than hh colliders. The design must advance further before the scale of the power consumption can be assessed robustly.

Muon beam production imposes a fixed power consumption requirement. In particular, the muon cooling system requires several GeV of acceleration in normal conducting cavities at high gradient. Additional power consumption arises from the proton source and cooling plant for the target and other cryogenic systems.

A number of key components drive the power consumption as one extends to high energy:

- The power loss in the fast-ramping magnets of the pulsed synchrotron and their power converter.

- The cryogenics system that cools the superconducting magnets in the collider ring. This depends on the efficiency of shielding the magnets from the muon decay-induced heating.

- The cryogenics power to cool the superconducting magnets and RF cavities in the pulsed synchrotrons.

- The power to provide the RF for accelerating cavities in the pulsed synchrotrons.

The first contribution requires particular study as it depends on unprecedented large-scale fast ramping systems. The second and third contributions require optimisation of the volume reserved for shielding as compared to magnetised volume. The contributions can be estimated reliably following a suitable design and optimisation of the relevant equipment.

In summary, the power consumption follows the relation

\[ P_{tot} = P_{src} + P_{linac} + P_{rf} + P_{rcs} + P_{coll} \]

where \(P_{src}\) is the power needed for the muon source, which is constant with energy; \(P_{linac}\) is the power requirement for the linac, which is fixed by the transition energy between the linacs and the Rapid Cycling Synchrotrons (RCS); \(P_{rf}\) is the power requirement for the RCS and collider RF cavities, which is approximately proportional to the beam energy; \(P_{rcs}\) is the power requirement for the RCS magnets, which is likely to rise slowly with energy owing to the lower losses associated with slower ramping at high energy; and \(P_{coll}\) is the power requirement for the collider ring, which is approximately proportional to the collider length, i.e. to the beam energy. Overall, the power requirement is expected to rise slightly less than linearly with energy and hence the wall-plug power is expected to be approximately proportional to the beam power.

Upgrade scheme

The muon collider can be implemented as a staged concept providing a road toward higher energies. One such staging scenario is shown in Fig. 11. The accelerator chain can be expanded by an additional accelerator ring for each energy stage. A new collider ring is required for each energy stage. It may be possible to reuse the magnets and other equipment of the previous collider ring, for example in the new accelerator ring.