Abstract

We present a novel approach for the search of dark matter in the DarkSide-50 experiment, relying on Bayesian Networks. This method incorporates the detector response model into the likelihood function, explicitly maintaining the connection with the quantity of interest. No assumptions about the linearity of the problem or the shape of the probability distribution functions are required, and there is no need to morph signal and background spectra as a function of nuisance parameters. By expressing the problem in terms of Bayesian Networks, we have developed an inference algorithm based on a Markov Chain Monte Carlo to calculate the posterior probability. A clever description of the detector response model in terms of parametric matrices allows us to study the impact of systematic variations of any parameter on the final results. Our approach not only provides the desired information on the parameter of interest, but also potential constraints on the response model. Our results are consistent with recent published analyses and further refine the parameters of the detector response model.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

The frontiers of physics are often explored conducting experiments at the limit of the detector sensitivity. In such a circumstance it is extremely important to control and correctly evaluate the effect of systematic uncertainties. This is particularly relevant when hints of a new signal are sought in event samples contaminated by a significant background that might amplify the impact of systematic effects and thus dilute the relevance of the observation.

The search for dark matter candidates with direct detection experiments is one of such cases where a very rare and feeble signal is searched for in a huge background of events, mostly induced by cosmic-rays and natural radioactivity. In this scenario, robust results in terms of projected experimental sensitivities, as well as exclusion limits, or hints of new physics are only achieved after a deep understanding of all relevant experimental effects.

In the past decade, a number of experiments have been searching for dark matter with masses in the range of a few GeV/c\(^2\) and below, pushing the sensitivity to their experimental limit in terms of total exposure and energy threshold. The current status of the art is led by experiments exploiting scintillation light and ionization charge [1,2,3,4,5,6] or heat [7, 8]. In particular, the DarkSide-50 (DS-50) experiment [5, 6], a large volume dual phase argon Time Projection Chamber, has set the strongest constraint on the spin-independent cross section for dark matter masses below 3.6 GeV/c\(^2\).

In the search for very rare phenomena, the optimal strategy is to identify and reject all background events while retaining a significant fraction of signal events. In this ideal case, often referred to as zero-background search, a handful of candidate events is enough to claim a discovery. More commonly, it is not possible to efficiently separate signal from background events and a different strategy is needed. A refined data analysis has to be put in place to compute the probability that the observed candidate events are due to new physics. Such analyses are based on the so called likelihood function that provides a parametric model for the probability to make an observation assuming certain hypotheses. A detailed and sound description of detector effects and background sources has to go in the likelihood before possible discrepancies observed in the data can be ascribed to new phenomena.

The likelihood function typically depends on a number of parameters, some of which, called parameters of interest, represent the physics quantities relevant for the measurements, some others, called nuisance parameters, encode the detector response and the background properties. Although the likelihood function is at the basis of the inferential processes both in the Bayesian and Frequentist approaches, the impact of the nuisance parameters on the parameters of interest is treated very differently.

In the Frequentist case, the likelihood function is maximised and an approximated dependence of the parameter of interest on the nuisance parameters is obtained through the so called profiling procedureFootnote 1 [9]. However, the dependence of the parameter of interest on these parameters is in general non-linear, and very complicated. For these reasons, analysis approaches based on the profiling of the likelihood of the experiment, valid under linearity, symmetry, or “Gaussianity” assumptions [10], might not be able to reproduce in an accurate way the propagated uncertainties on the parameter of interest.

In the Bayesian approach, a joint posterior probability density function (pdf) for all parameters is constructed from the likelihood function and the prior pdf. The impact of systematic uncertainties on the parameters of interest is obtained by integrating (marginalising) the posterior pdf over the nuisance parameters. This procedure is straightforward and well motivated by probability theory. The main criticism to the Bayesian method related to the intrinsic ‘subjective’ nature of these prior pdfs is not relevant in this case, since the pdfs for the nuisance parameters are normally determined by ancillary measurements.

Independently of the inferential approach, the construction of the likelihood function tailored on the specificity of the measurement is a challenging task. Given the complexity of the detector response and the diverse origin of the background sources, the likelihood function is often sampled with Monte Carlo (MC) simulations. Expected differential event rates are constructed as a function of some relevant observables in the form of histograms of events. These histograms, known as templates, are an approximation of the pdfs for the observables given some specific set of parameters describing the experiment. Different configurations are explored by computing new templates from MC simulations run with different sets of parameters. Given the typical size of millions of events needed to produce accurate templates, this procedure is very time-consuming. To overcome this limitation, a few points in the parameter space are chosen as representative of the systematic variations with respect to the best model. New templates are derived from the computed ones by linear interpolation or more advanced morphing techniques [11].

This work shows that, under certain circumstances, the likelihood function can be expressed as an analytical or semi-analytical function of the relevant parameters, making it unnecessary to run large MC simulations to account for systematic effects. A similar approach, not cast in the Bayesian inference language, has been presented in Refs. [12,13,14]. Alternative analyses incorporating machine learning techniques have been showcased in Refs. [15, 16]. In addition, our paper describes a new technique based on probabilistic graphical models, also known as Bayesian Networks (BN) [17,18,19,20], to take into account systematic uncertainties. In a BN the probabilistic relations between the physical quantities and the observations are evident, and the dependence on detector effects and background contributions is made explicit, allowing for reasoning about causes and effects within the model [21,22,23,24,25,26,27]. To our knowledge, this approach has first been proposed for parametric inference in presence of data points affected by systematic uncertainties in Ref. [28], and later used to describe the Bayesian unfolding in Ref. [29].

This technique has the advantage over the widely used profiling methods to be exact in terms of uncertainty propagation [30], to not rely on template morphing, and to properly take into account cross correlations between parameters and phase space regions. In addition, if the physical parameters describing the detector response model and constrained by calibrations are retained as parameters inside the likelihood function, this method gives the possibility of verifying the goodness of the calibrations and, a posteriori, further constrains the detector response model.

The rest of this article proceeds as follow. In Sect. 2 we describe the BN method and its implementation for an oversimplified DM direct detection experiment. The method is applied to the low mass DM analysis of the DarkSide-50 (DS-50) experiment. The description of the experimental setup and the data-set employed in this study is contained in Sect. 3.

Section 4 describes the detector response model and the implementation via the BN method. In Sect. 5 we show the DS-50 likelihood, while in Sect. 6 we describe the technical implementation. Finally, in Sect. 7, we illustrate the results of this method in terms of sensitivity and exclusion limits for the low mass DM analysis, exploiting both the nuclear recoil (NR) and Migdal effect (ME) [31] signals. The ME signal has been recently studied in Refs. [32,33,34,35,36,37,38,39,40,41,42,43,44,45], and exploited in the search for light DM in Refs. [6, 8, 46,47,48,49,50]. Being these signals a combination of NR and electronic recoil (ER) energy releases, we also test the simultaneously handling of the detector calibrations of the two channels. We obtain comparable sensitivity with respect to the recent published analysis [6]. We also show that making the likelihood explicitly dependent on the detector parameters gives us a much better control over the systematic effects and allows improving the knowledge on the detector response parameters exploiting the new data. In Sect. 8 we draw our conclusions.

2 Concept and method

2.1 Graphical method for Bayesian inference

According to the Bayesian Networks method, the probability density function of a collection of random variables can be represented by a network made of arrows and nodes where:

-

1.

The nodes represent the variables;

-

2.

A solid arrow between two nodes represents a probabilistic link between the two variables;

-

3.

A dashed arrow between two nodes represents a deterministic link between the two variables;

-

4.

A gray node indicates the corresponding variable has been observed.

To briefly illustrate the method, let us take as an example the search for a rare process in presence of a background contribution [51, 52]. This is typically described by a Poisson likelihood, defined as

where x is the observed datum, \(\lambda \) is the intensity of the Poisson process, \(\lambda _B\) regulates the intensity of the background contribution, and \(\lambda _S\) represents the signal strength parameter we want to determine. Figure 1 shows the BN for this simple measurement. We are interested in inferring \(p(\lambda _S|x)\), namely the pdf of \(\lambda _S\), conditioned to the observation of the node x. In other words, looking at the BN of Fig. 1, we want to determine how the information obtained with the observation of the node x propagates backwards in the network to the parameter of interest \(\lambda _S\). In this sense, the Bayesian inferential process can be regarded as an information flow from the observed nodes to the parameters of interest. Once we draw the network, we determine the relations represented by each of the links, and we fix the observed nodes, the rules of probability allow determining the pdf of the unobserved nodes conditioned to the measured data \(p(\lambda _S, \lambda _B, \lambda | x)\), also called the posterior pdf, or simply the posterior. If we are only interested in \(\lambda _S\), we can obtain the posterior \(p(\lambda _S|x)\) integrating over the nuisance parameters’ space.

Bayesian network for a Poisson process with intensity \(\lambda \) and observed number of events x. The intensity \(\lambda \) is in turn the sum of a signal contribution \(\lambda _S\) and a background contribution \(\lambda _B\), and thus the links connecting \(\lambda _S\) and \(\lambda _B\) to \(\lambda \) are deterministic. The dots denote a possible additional portion of the network representing previous measurements, e.g. during calibration, of \(\lambda _B\). The inferential process is an information flow (gray dotted arrow) from the observed node x, denoted in gray, to the parameter of interest \(\lambda _S\)

2.2 Implementation for dark matter direct detection experiments

For this work, we consider the dual phase noble liquid TPC approach to search for DM exploiting the ionization channel only [53,54,55,56]. Simplifying as much as possible, the experiment consists in counting the number of detected events in the active volume as a function of the measured amount of detectable quanta produced during the primary event. The number of detectable quanta is in turn a measurement of the energy of the recoiling target particle. Finally, a histogram of the observed spectrum is obtained. In order to put constraints on the possible DM signal contribution to the observed spectrum, two main ingredients are needed:

-

1.

a detector response model describing how the kinetic energy transferred to the target particles is translated into the number of detectable quanta measured by the experimental apparatus. According to the type of experiment, the target material, and the readout system, this response model can also be highly non-linear, and the parameters regulating it are usually constrained by a set of preliminary calibration measurements.

-

2.

a background model describing the relevant background contributing to the final observed spectrum.

These two models allow computing the expected spectrum starting from the theoretical background and signal spectra.

The common way to implement these two models for the analysis is via a toy MC approach, in which the final expected spectrum and its \(\pm \sigma \) (standard deviation) variations are computed before the fit. During the fit, a morphing procedure is used to take into account possible variations of the spectral shape. According to this approach, a new set of nuisance parameters is used; the number of these newly added parameters and their correlations are chosen on the basis of ad hoc and case dependent prescriptions, while their physical interpretations in terms of physical systematic parameters is often lost. In other words, due to the re-parameterization of the likelihood, the post fit results can not easily be interpreted in terms of the original physical parameters describing the systematic effects.

We developed a method to compute the final expected spectrum as an analytical function of the theoretical spectrum and the experimental parameters. Indeed, the probability to measure a certain number \(N_q\) of detectable quanta given a certain kinetic recoil energy E and a certain set of experimental parameters \(\varvec{\theta }\), can be decomposed as

where \(N_q^{(0)}\) is the originally produced number of detectable quanta, namely the number of detectable quanta before applying other detector related effects such as response non linearity, efficiency, or resolution. Since we have the possibility of computing these two pdfs analytically and since the spectra we are dealing with can be treated as vectors, we can express the final expected spectrum \(S_i^{fin}\) as the following linear combination of the theoretical spectrum \(S_k^{th}\):

where

These two matrices could depend on the background source or on the readout channel. The ability to compute the entries of \(\mathcal {M}^1\) and \(\mathcal {M}^2\), called the smearing matrices, allows us to obtain the final expected spectrum via Eq. (3) and to perform the fit on the observed one by means of linear algebra operations.

Left: Bayesian network for a prototypical dual phase TPC DM direct detection experiment. The node \(x_i\) is the observed number of events in the i-th bin; \(\lambda _i\) is the intensity of the Poisson process in the i-th bin; \(\lambda _S\) and \(\lambda _B\) regulate the strength of the signal and the background contributions, respectively; the boxes in different gray intensities represent the different contributions from all the relevant background or signal sources; \(\varvec{\theta }\) are the parameters describing the detector response model; the dots indicate possible preliminary calibration measurements of \(\varvec{\theta }\); \(\mathcal {M}^1\) and \(\mathcal {M}^2\) are defined in Eq. (4). Right: a possible background expected spectrum (\(S_i^{fin}\)) for different configurations of the \(\varvec{\theta }\) parameters (gray lines). The green curve corresponds to the best calibration fit values of \(\varvec{\theta }\)

Figure 2 (top) shows the Bayesian network describing all the process. In particular, \(S_j^{(0)}\) is the intermediate spectrum after applying only the \(\mathcal {M}^1\) matrix; the dots indicate possible preliminary calibration measurements constraining \(\varvec{\theta }\); the boxes in different gray intensities represent the fact that there are many copies of that portion of the network, one for each background or signal component; \(x_i\) indicates the number of observed events in the i-th bin, while \(\lambda _i\) is the Poisson expected value in the i-th bin; \(\lambda _S\) and \(\lambda _B\) are normalization parameters regulating the intensity of the signal and the background contributions, respectively. Once the BN is constructed, one can use it in a twofold way. Top-down, going from the parameters of the model to the observation, it can be used to simulate events by sampling for example with a Markov Chain Monte Carlo (MCMC); or bottom-up, going from the observation to the parameters of the model, it can be used to perform the inference and measure the parameter of interest. As an example of this method, Fig. 2 (bottom) shows graphically an intermediate node of the BN during the sampling phase, namely a possible background expected spectrum (\(S_i^{fin}\)) for different configurations of the \(\varvec{\theta }\) parameters (gray lines). In addition, the green curve corresponds to the best calibration values of \(\varvec{\theta }\) obtained a posteriori after a fit to a background only observation. From this figure, it is clear that each set of calibration parameters produces a background template that cannot be parametrized with simply a normalization and shape variation without losing the correlation among bins.

3 The DarkSide-50 experiment

The DS-50 experiment exploits a dual-phase liquid argon (LAr) time projection chamber (TPC), operated in Italy at the INFN’s Laboratori Nazionali del Gran Sasso (LNGS). Two measurable interactions can be observed in the 46.4±0.7 kg active target: light from scintillation in the liquid (S1) and ionization electrons. A 200 V/cm electric field in the LAr volume drifts the ionization electrons up to the gas pocket, where they are extracted by an electric field of 2.8 kV/cm into the gas phase, producing a secondary pulse of light (S2) by electroluminescence. The ultraviolet photons from the S1 and S2 signals are converted by a tetraphenyl butadiene wavelength shifter that covers the internal surface of the TPC into visible radiation. Two arrays of 19 3-in photomultipliers (PMTs), located above the anode and below the cathode, detect these photons. The TPC is enclosed in a stainless steel cryostat and lies inside a 30 t boron-loaded liquid scintillator veto equipped with 110 8-in PMTs, in order to actively reject neutrons. This is surrounded by a 1 kt ultra-pure water Cherenkov veto with 80 PMTs that acts as a cosmic muon veto and as a passive shield against external backgrounds. Further details on the detector can be found in Refs. [53, 57,58,59,60].

3.1 Event selection

This analysis uses the full DS-50 exposure of \(\mathcal {E}_\textrm{DS} = 653.1\) live-days, from December 12, 2015 to February 24, 2018. The event selection is exactly the same as the one used for the recent DS-50 publications on the search for low-mass DM [5, 6] and it is described in details in Ref. [5]. We define the region of interest (ROI) as where the ionization response is calibrated [61] and backgrounds are well-understood [5]. This corresponds to the NR(ER) energy range [0.06, 21] keV\(_{\text {er}}\) ([0.64, 393] keV\(_{\text {nr}}\)), matching to the number of electron range \(N_e\) within [4, 170]. Below \(4e^-\), a non-negligible contribution to the background model of spurious electrons captured by impurities along and re-emitted with a delay is present. This analysis considers only single scatter events with a single S2 pulse, except for ‘echoes’ (pulses induced by one or more \(e^-\) extracted from the cathode from S1 or S2 photons via photoelectric effect). Several quality cuts based on S2 / S1 and on the topological distribution and the time profile of the S2 signal are implemented. These cuts reject events with overlapping pulses [5]. Additional cuts are applied in order to remove events with an anomalous start time, associated to random coincidences between very low S1 and S2 pulses from the anode, alpha particles emitted near the TPC walls, whose S1 photons induce S2 pulses by extracting electrons from the cathode, and spurious S2 pulses, induced by electrons captured by impurities. After the quality and selection cuts, the final data-set results in an exposure of \(12,202\pm 180\) kg d.

3.2 Background model

The ROI is dominated by internal backgrounds, such as the \(^{85}\)Kr and \(^{39}\)Ar decays occurring in the LAr fiducial volume, and external backgrounds, including radiation from contaminants in the PMTs and stainless-steel cryostat. Additional negligible backgrounds originate from radiogenic and cosmogenic neutrons, and coherent elastic neutrino-nucleus scattering from solar and atmospheric neutrinos. The spectral shapes of the internal backgrounds take into account recent calculations of atomic exchange and screening effects, validated on measured \({^{63}\textrm{Ni}}\) and \({^{241}\textrm{Pu}}\) spectra down to 200 eV [62, 63]. From 0 to 200 eV a linear uncertainty on such corrections ranging from 25 to \(0\%\) is assumed. Other systematics on the spectral shape come from the ionization response, modeled via Monte Carlo simulations [61], and subdominantly from the uncertainty on the Q-value [64]. The external background model is based on simulations of each material component as measured during the material screening campaign. The input for the simulation are given by these measurements and the associated uncertainties, after the correction because of the decrease activity due to the elapsed time at the dataset date. A more detailed description on the background models can be found in Ref. [5].

3.3 DarkSide-50 response model

The detector calibration and its response has been performed in Ref. [61] for both ER and NR energy deposits.

The ionization yield of an ER or a NR, denoted by the symbol \(Q_y\), is defined as the average number of ion–electron pairs surviving recombination per unit of energy. The two models describing the ionization yields, validated and constrained by the calibration measurements [61], are a modified version of the Thomas–Imel box model for the ER [65] and the Bezrukov model for the NR [66]. The ER ionization yield is given by

where \(E_{er}\) is the energy of the ER, and \(a_0 = 2.41 \pm 0.58\), \(a_1 = 21.0\pm 2.2\), \(a_2 = 0.11\pm 0.03\), and \(a_3 =1.71\pm 0.08\) are the parameters of the model constrained during the calibration measurements [61].Footnote 2 The NR ionization yield is given by

where \(E_{nr}\) is the NR energy, and \(f(C_{box}^{NR}, E_{nr})\) and \(N_i(f_B, E_{nr})\) are two analytical functions of the energy and of \(C_{box}^{NR} = (8.05 \pm 0.15)\,\mathrm{V/cm}\) and \(f_B = (0.67 \pm 0.02)\) [61], which are in turn the two parameters of the model constrained during the calibration measurements.

The ionization response to ER (NR) is measured down to 180 eV\(_{er}\) (500 eV\(_{nr}\)), corresponding to \(\sim 9\) (3) ionization electrons, respectively. The ionization response to both ER and NR is the lowest threshold ever reached in liquid argon. These results are obtained by fitting the specific ER and NR ionization models to \({^{241}\textrm{Am}^{9}\textrm{Be}}\) and \({^{241}\textrm{Am}^{13}\textrm{C}}\) neutron sources data, \(\beta \)-decay data of \(^{39}\)Ar, and electron captures of \(^{37}\)Ar obtained during the DS-50 calibration campaign, and by external datasets from the SCENE [67] and ARIS [68] experiments.

4 The Bayesian Network method for DarkSide-50

In this section we discuss the implementation of the BN method for the DS-50 response model. In particular, we describe how to keep the dependence on the calibration parameters up to the final \(N_{e^-}\) spectrum, where \(N_{e^-}\) is the number of reconstructed primary electrons.

The detector response can be schematized in the following consecutive steps:

-

1.

conversion of the deposited energy to a certain number of detectable quanta (e.g. number of primary electrons) produced during the interaction;

-

2.

detection efficiency or non linearity effects that depend on the position of the events inside the TPC;

-

3.

resolution effects of the photodetectors (e.g. PMTs);

-

4.

effects induced by trigger and analysis event selection.

All these steps contribute to distorting the original theoretical energy spectrum into a different observed one.

4.1 From energy deposit to reconstructed electrons after recombination

The incoming particles interacting with the active volume inside the TPC can produce an ER or a NR. In terms of detector response, the difference in the deposited energy between the two cases lies in the production mechanisms of detectable quanta. This dependence is described by the ionization yield of an ER or a NR. The average number of primary ionization electrons produced is given by

where k denotes the ER or NR process, \(E_{k}\) is the energy deposited in the process k, \(Q_y^{k}\) is the k ionization yield per unit of energy and \(\varvec{\theta _{cal}}=\{a_0,\,a_1,\,a_2,\,a_3,\,C_{box}^{NR},\,f_B\}\) is the complete list of calibration parameters that regulates the ER or NR yield functions. The production of an ion–electron pair surviving recombination is a stochastic process, and it can be represented by a binomial process. The maximum number of electrons that can be produced at a given energy \(E_{NR}\) can be estimated as

where \(w = (19.5\pm 1.0)\,\textrm{eV}\) is the liquid argon average work function [69]. It follows that the probability that a certain amount of energy is released in the TPC in the form of an ion–electron pair can be estimated, using Eq. (7), as

As a result, the probability of having produced a certain number \(N_{e^-}\) of ion–electron pairs is given by the binomial distribution

where

The probability of having a certain number \(N_{e^-}\) of primary ionization electrons can be represented as

where \(\mathcal {N}\) is a normal distribution with \(\mu =\left\langle N_{e^-}^{ER} \right\rangle \), \(\sigma ~=~\sqrt{F \left\langle N_{e^-}^{ER} \right\rangle }\) and F is the Fano factor [70]. In this way the statistical fluctuations of the number of produced detectable quanta for ERs are implemented as normal fluctuations.

The expected spectrum in \(N_{e^-}\) after recombination can be computed from the probabilities in Eqs. (10) and (12). Defining \(x_j^{th}\) as the energy of the j-th bin and \(y_j^{th}\) the content of the j-th bin of the histogram of the theoretical ER or NR spectrum, we introduce the probability matrix \(\mathcal {M}^1\) as

where \(N_{e^-}^i\) is the number of primary ionization electrons in the expected spectrum. As a consequence, the expected spectrum \(S_i^{N_{e^-}}\) can be computed as the product of the \(\mathcal {M}^1\) matrix and the theoretical spectrum

where we have omitted the ER and NR indices to simplify the notation.

The \(\mathcal {M}^1\) probability matrix has some important properties. It is not a square matrix, and, since the total probability is one, the sum of the columns of the matrix is equal to unity

What is remarkable is that the \(\mathcal {M}^1\) matrix as defined in Eq. (13) explicitly depends on the calibration parameters, and thus their uncertainties play the role of the systematic uncertainties of our experiment. Taking into account these parameters directly inside the likelihood, would allow treating the systematic uncertainties in a very clean and straightforward way, without using multi-templates methods to propagate them. This will be clearer and more explicit in the next sections, where the analysis will be described in detail.

A different way to proceed must be considered for the Migdal effect because electrons both in the NR and ER channels are produced. Therefore, the total number of electrons produced in a single event is the sum of the electrons released by the NR and those released by the ER. The probability of releasing \(N_{e^-} = N_{e^-}^{NR}+N_{e^-}^{ER}\) primary ionization electrons, given a NR with energy \(E_{nr}\) and a Migdal emission depositing an energy \(E_{er}\), is given by

where we did not report the dependence on \(\varvec{\theta }_{cal}\) for shortness of notation. In the above equation, which is valid assuming no interference between the two energy releases during recombination, we can identify \(p(N_{e^-}^{NR} = N_{e^-}^k| E_{nr} = x_j^{th})\) with \(\mathcal {M}^{1,NR}_{k,j}\), see Eq. (13). Furthermore, \(p(N_{e^-}^{ER} = N_{e^-}^i - N_{e^-}^k| E_{er} = x_l^{th})\) is equal to \(\mathcal {M}^{1,ER}_{i-k,l}\), see Eq. (13), and \(p(E_{nr} = x_j^{th}, E_{er} = x_l^{th})\) is the double differential Migdal rate normalized to unity. Making the indices explicit, we obtain

where \(\tilde{R}\) indicates the normalized double differential Migdal rate, satisfying the condition

The Migdal expected spectrum \(S_i^{N_{e^-}, Mig}\) after recombination is simply obtained by substituting the normalized double differential Migdal rate in Eq. (17) with the original one.

4.2 Detector effects: efficiency, radial corrections, and PMT response

The two main effects that play a sizable role in the reduction of the S2 signal yield are the so-called radial correction and electron lifetime efficiency [61]. The former effect can be parameterized as an efficiency factor depending only on the radial position of the event. The latter is instead due to the electron lifetime inside the TPC, namely the probability that an ionization electron is captured by electro-negative impurities in the liquid chamber. This is a small effect that depends mainly on the distance between the event and the liquid–gas interface, and therefore on the event depth inside the TPC.

Since we are dealing with energy spectra where the information on the position of the events has been integrated out, we simulate the theoretical spectra retaining the energy and 3D position information. As a consequence, we have access to the position and the related total correction factor of each event. We use this information to numerically compute the pdf to have a certain correction \(\epsilon _{src}^{ch}\) in a given event. This pdf depends on the PMT channel and on the background/signal source spatial features. The result of this procedure is a set of numerical functions (one per source and PMT channel) over which we integrate to get the full convoluted effect. These pdfs depend only on the theoretical spectra, and do not depend on the calibration parameters. They can be therefore computed once before the final MCMC integration, and used as an input for the analysis.

An additional effect due to the PMT channel corrections has to be taken into account. In fact, the contributions from the various PMT channels are not simply summed up in the data spectrum. The PMT response has been equalized to the central PMT. As a consequence, we need to apply the same correction when implementing the detector response model to be compared with data. This correction is a simple multiplicative factor \(r_{ch}\) in the PMT energy response that depends on the PMT channel.

Finally, the PMT resolution effect can be treated as a Gaussian smearing effect, similar to the ER Fano fluctuations of Eq. (12). We can therefore express the probability that an event from the source src in the PMT channel ch is detected to have \(N_{e^-}^f = i\) extracted electrons having originally produced \(N_{e^-}^{(0)} = j\) electrons surviving recombination as

where \(\mu = \epsilon _{src}^{ch} r_{ch} j\), \(\sigma = S \sqrt{\epsilon _{src}^{ ch} r_{ch} j}\), \(b_w\) is the width of the bins of the final observed \(N_{e^-}\) histogram, \(\epsilon _{src}^{ch}\) is the overall correction factor and \(p(\epsilon _{src}^{ch})\) is its pdf as computed by means of the Monte Carlo. Furthermore, \(\mathcal {N}(x|\mu , \sigma )\) is the normal pdf with mean \(\mu \) and variance \(\sigma \), \(r_{ch}\) is the channel correction factor, and \(S = 0.27\) is a width factor that has been determined during the calibration. We then define a set of matrices

such that we can compute the final expected \(N_{e^-}\) spectrum \(S^f_{ch, src}\) in the PMT channel ch induced by the source src as

The \(\mathcal {M}^2\) matrix depends only on the channel corrections, on the width factor S and, by means of the pdf, on the theoretical spectra. These quantities do not depend on the calibration parameters \(\varvec{\theta }_{cal}\). They can be computed once before the final MCMC integration, and used as an input for the analysis. This gives great benefits in terms of performance, because it means that, unlike the two \(\mathcal {M}^1\) matrices, \(\mathcal {M}^2\) does not need to be computed at each step of the MCMC algorithm.

In conclusion, once we have \(S^f_{ch, src}\), we can obtain the final expected spectrum \(S^f_{ER, NR}\) by summing over all the PMT channels and over all the sources

We validated the new NR and ER response model on the implementation of code used for the calibration, for the published DS-50 and for the Migdal analyses [5, 6, 61], and based on a toy MC simulation of the detector response.

5 Likelihood and parameters

Bayesian network for the DS-50 low-mass analysis: the node \(x_i\) is the observed number of events in the i-th bin; \(\lambda _i\) is the intensity of the Poisson process in the i-th bin; the gray box contains the nodes and connections that are repeated over the total number of bins \(N_{bins}\); the dots represent the portion of the network implementing the detector response model in the form of the two smearing matrices \(\mathcal {M}^1\) and \(\mathcal {M}^2\); besides \(\sigma _\textrm{DM}\), which is directly related to \(r_S\) in our case, all the nodes outside the gray box are the free parameters of the likelihood, as described in Sect. 5

In the following, we assume a bin-by-bin Poisson likelihood defined as

where \(x_i\) denotes the number of events in the data spectrum in the i-th bin, \(\varvec{\theta }\) indicates generically all the parameters of the fit – i.e. the calibrations, the signal rate, and the background rates. In the case under consideration, \(\lambda _i(\varvec{\theta })\) is given by

This parametrization of \(\lambda \) is an extension to the one exploited in Ref. [39] to include the detector response model. Here \(S_i^{src}\) represent the expected background and signal spectra for the total exposure \(\mathcal {E_\textrm{DS}}\) as a result of the detector response, see Eq. (22). The variables \(r_{B, src}\) are proportional to the rate of the internal and external background components. Since, for simplicity, the background spectra are normalized to the DS-50 exposure, they are normalized such that \(r_{B, src} = 1\) corresponds to the case in which the exposure is equal to the DS-50 exposure.

The parameter \(r_{S}\) is proportional to the signal rate with a flat prior. The signal spectra are normalized to the DS-50 exposure and computed for \(\sigma _{SI}^{DM} = 10^{-38}\,\textrm{cm}^2\). Therefore, \(r_S = 1\) corresponds to \(\sigma _{SI}^{DM} = 10^{-38}\,\textrm{cm}^2\). The total exposure is denoted by \(\mathcal {E}\) and \(\mathcal {E}_\textrm{DS}\) is the DS-50 exposure of the analyzed dataset. The uncertainty on the exposure is implemented with a normal prior with a \(1.5\%\) standard deviation [5]. The various \(S_i^{src}\) spectra are in turn functions of a possibly different subsets of parameters encoding various aspects of the detector response and background model, and spectrum-specific uncertainties.

The complete likelihood is represented as a Bayesian Network in Fig. 3. As we discussed in Sect. 2, this representation makes clear the structure of Eqs. (23)–(24) and the dependence of the spectra on the various nuisance parameters. In the following, we give a detailed description of its implementation.

5.1 Signal spectra

The signal spectra are explicitly described with two contributions: one due to NR interactions \((S^{\textrm{NR}})\), and another one describing the Migdal effect \((S^{\textrm{Mig}})\). The nuclear recoil contribution is derived assuming the Standard Halo Model with a DM escape velocity \(v_{esc}=544\) km/s, the local standard at rest velocity \(v_0=238\) km/s, \(v_{Earth}=232\) km/s, and the DM density \(\rho _{DM}=0.3\) GeV/c\(^2\)/cm\(^3\) [71]. The Migdal effect contribution to the signal spectra has been computed as in Ref. [6] exploiting the Migdal probabilities computed in Ref. [33].

Figure 4 shows the signal spectra for DM masses of \(4.5\,\textrm{GeV}/c^2\) (blue), \(1.1\,\textrm{GeV}/c^2\) (orange) and \(0.42\,\textrm{GeV}/c^2\) (green), for \(\sigma _{\textrm{DM}}^{\textrm{SI}} = 10^{-38}\,\textrm{cm}^2\). The solid curves show only the NR contribution, while the dashed ones only the Migdal effect.

We are not considering any theoretical systematic uncertainty on the signal spectra, nevertheless they could be easily implemented as additional nuisance parameters.

5.2 Background spectra

The background contributions described in Sect. 3.2 and shown in Fig. 5 are separated in four main spectra, two internal induced by \(^{39}\)Ar and \(^{85}\)Kr, and two external produced by the materials of the PMT and the cryostat. They are denoted respectively with \(S^\textrm{Ar}\), \(S^\textrm{Kr}\), \(S^\textrm{PMT}\), and \(S^\textrm{cryo}\). The relative normalizations have been determined by dedicated measurements and Monte Carlo simulations, as described in Ref. [5]. Their systematic uncertainties are implemented as a normal prior centered in 1 and with a \(14\%\) (\({^{39}\textrm{Ar}}\)), \(4.7\%\) (\(^{85}\textrm{Kr}\)), \(12.6\%\) (PMT) and \(6.6\%\) (cryostat) standard deviation.

The so-called Asimov dataset, namely the expected background (blue line) in the \(N_{e^-} = \left[ 4, 170\right] \) range, assuming the DS-50 exposure. The different colored lines represent the expected contributions from the different background sources. The spectra have a 0.25 \(N_{e^-}\) binning for \(N_{e^-} < 20\) and a 1 \(N_{e^-}\) elsewhere, see text

The \(\mathrm{^{39}\!Ar}\) and the \(\mathrm{^{85}\!Kr}\) background spectra are affected by screening effects and Q-value uncertainties parametrized with \(\sigma _{scr}^\textrm{Ar, Kr}\) and \(\sigma _{Q}^\textrm{Ar, Kr}\), respectively. The Q-value uncertainties are implemented as two Gaussian parameters regulating the \({^{39}\textrm{Ar}}\) and \({^{85}\textrm{Kr}}\) spectra, with the intensity dependent on the energy. Specifically, the energy spectrum, for \(k= {^{39}\textrm{Ar}},\,{^{85}\textrm{Kr}}\), is given by:

where \(y_{th}^{k, (0)}(E_{er})\) is the central theoretical spectrum, \(r(E_{er})\) is the relative uncertainty due to the uncertainty on the Q-value, \(\varTheta \) is the Heaviside function, and \(\sigma _{scr}^k\) and \(\sigma _{Q}^k\) are both controlled by a standardized normal pdf \(\mathcal {N}(0,1)\) as prior function.

5.3 Spectra dependence on the detector response parameters

Both the signal and the background spectra depend on \(\varvec{\theta }_{cal}\) which represents the calibration parameters. The result of the calibration measurements [61] are implemented as a multivariate normal prior.

Equation (22) allows us to compute for any specific detector response configuration (identified by a choice of the parameters \(\varvec{\theta }_{cal}\)) the associated background and signal spectra. In this section, we give some examples on how the spectra change as a function of \(\varvec{\theta }_{cal}\) and show how our implementation is substantially different from constructing average spectra with the corresponding \(\pm \sigma \) variations.

(Left) Signal (left) \({^{39}\textrm{Ar}}\) background (right) spectra obtained by randomly selecting 30 points from the nuisance parameters pdf, the bottom plots show the relative difference with the spectrum obtained with the nominal parameter values (black solid line) and the average (pink solid curve) \(\pm \sigma \) envelopes (pink dashed lines) calculated bin by bin. (Right) Four different signal (left) and \({^{39}\textrm{Ar}}\) background spectra (right) showing significant shape differences with respect to the nominal spectrum, which is also shown; the bottom plot shows the nuisance parameters pulls for each of the spectra selected above

Figure 6 shows the effect of sampling from the prior pdf of the nuisance parameters onto the signal and the \({^{39}\textrm{Ar}}\) spectra. We chose the \({^{39}\textrm{Ar}}\) as a representative background. We show only 30 different randomly selected spectra to keep the plot readable. We stress that these spectra are not chosen on the basis of their shapes. The bottom part of each sub-figure shows the relative difference with respect to the spectrum obtained keeping all nuisance parameters to the central value of their prior (nominal spectrum). From these figures, it is visible how the nuisance parameters have a non-trivial effect on the spectra: (1) overall as well as in specific bins there is a sizable difference with respect to the reference spectrum; (2) more importantly, there is a significant shape difference, possibly with more than one crossing.

At any given value of \(N_e\) we can construct the pdf of the expected number of events and compute the expected value and the \(\pm \sigma \) intervals. From these values we can construct the average spectrum (solid pink curve), and the \(\pm \sigma \) (dash-dotted pink curve). Given the non-linearity of the problem, in general the envelope spectra are different from those computed with the corresponding choice of the \(\varvec{\theta }_{cal}\) parameters. Sizable differences are clearly visible both in total event yield and in shape, especially for the signal spectrum. In addition, the envelope spectrum loses part of the bin-bin correlations, and it may represent a spectrum which does not correspond to any specific configuration \(\tilde{\varvec{\theta }}_{cal}\) of the detector response. The method presented here naturally takes into account the correlations between the \(\varvec{\theta }_{cal}\) parameters,Footnote 3 and the correlation between different spectra induced by the \(\varvec{\theta }_{cal}\) parameters.

The right plots of Fig. 6 show four different signal and \({^{39}\textrm{Ar}}\) spectra that manifest large differences with respect to the nominal spectrum (black curve). For each of these spectra, the bottom plot shows the nuisance parameters pulls with respect to their prior expected values. Although these configurations are not very likely, \(2\,\sigma \) variations in the parameters’ value have a substantial impact on the spectrum, inducing effects as large as doubling the event yield in certain bins.

The implementation in the signal and background models of the systematic variation of the detector response described in this section is the key element for the correct uncertainty propagation in the fit results.

6 Fitting procedure and code implementation

The fit samples from the space of possible detector response models, computes the corresponding spectra both for the signal and backgrounds, tries to fit the observed spectrum, and finally weights the result by the probability of the specific response model sampled. In this way the average model and the corresponding uncertainty are computed from the pdf of the model that fits the observed spectra.

The posterior of all the parameters is sampled by means of the Metropolis-Hastings MCMC algorithm, as implemented in BAT [72, 73]. The BAT package is a set of C++ libraries implementing statistical tools for Bayesian analyses, that has been largely used in experimental and phenomenological analyses.

Since the mathematics behind each single step of the chain – i.e. how the calibration parameters are connected to the final spectra – is mostly linear algebra, this part of the code has been implemented on GPUs by means of the CUDA libraries [74]. A generic implementation of this method is provided in a public GitHub repository [75].

In all the following analyses, we used the standard BAT tools to guarantee that the Markov chains are stable and accurate. In particular, the convergence is obtained by means of the BAT pre-run phase that assures by tuning the Metropolis-Hastings MCMC parameters that all the parallel chains converge to the same region of the parameters’ phase space with an optimal Metropolis-Hastings MC rejection rate. In order to achieve the required statistical accuracy, the sampling is performed by means of 12 parallel MCMC chains, for a total number of steps equal to \(1.2 \times 10^6\).

From the implementation point of view, the background model or background-plus-signal model are implemented as an extension of the BAT BCModel class. The likelihood and the priors are customized following the prescriptions of the previous sections, and the linear algebra implemented in CUDA. The inputs of the fit, i.e. the theoretical spectra and the \(\mathcal {M}^2\) smearing matrices, are read at the constructor level. The GPU preliminary operations are also performed once inside the constructor, to optimize the sampling procedure. A complete fit, including also the prerun phase, takes \(4.8\,\textrm{h}\) (\(40\,\textrm{min}\) for prerun) with 12 treads on an Intel Xeon Silver 4216 CPU (\(2.1\,\textrm{GHz}\)) and a Nvidia GeForce RTX 3090 GPU. This roughly corresponds to a sampling rate of \(6.8\,\textrm{Hz}\) per chain.

7 Results

We compute the upper bound for the DM signal as the 90% Credible Interval (C.I.). This is defined as the value of \(\sigma _{SI}^{DM}(m_{DM})\) corresponding to the 90% quantile of the marginalized posterior pdf for \(r_S\).

In order to avoid possible biases coming from using data, we performed a blind analysis based on a pseudo-dataset. This dataset has been generated from the expected background template, the so-called Asimov dataset, which is plotted in Fig. 5.

If not specified otherwise, all the fits are performed in the full \(N_{e^-} = \left[ 4, 170\right] \) range. Below \(N_{e^-} = 4\), the data are indeed dominated by correlated events, mostly due to the contamination of spurious electrons [5]. On the other hand, the upper threshold is chosen as the maximum point at which the detector response calibrations have been validated, namely \(N_{e^-} = 170\) [61].

The binning of the observed spectrum is denser (0.25 \(N_{e^-}\) binning) in the \(N_{e^-}<20\) region with respect to the higher \(N_{e^-}\) region (1 \(N_{e^-}\) binning). Since the DM signal is exponentially falling, having a denser binning in the low \(N_{e^-}\) region has a beneficial 5–10% impact on the sensitivity in the whole mass region explored by our analysis.

7.1 Impact of the systematic uncertainties on the limit

As a first study, we investigate the impact of the systematic uncertainties on the final limit. For simplicity, we focus on a single DM mass value \(m_{DM} = 4.5\,\textrm{GeV}/c^2\), in which the Migdal effect contribution to the signal spectrum is negligible. We arranged the nuisance parameters in the following 3 groups

-

1.

group CAL: \(a_0\), \(a_1\), \(a_2\), \(a_3\), \(C_{box}^{NR}\), \(f_B\), which represent the calibration parameters;

-

2.

group TH: \(\sigma _{scr}^\textrm{Ar, Kr}\), \(\sigma _Q^\textrm{Ar, Kr}\), which represent systematic uncertainties on the theoretical background spectra;

-

3.

group NORM: E, \(r_{B, src}\), which represent the systematic uncertainties on the normalization of the background spectra.

We therefore performed 6 fits, with the following assumptions:

-

1.

All the systematic parameters are free;

-

2.

The group CAL is free, while all the other parameters are fixed to their expected values;

-

3.

The group NORM+TH are free, while all the other parameters are fixed to their expected values;

-

4.

The group TH is free, while all the other parameters are fixed to their expected values;

-

5.

The group NORM is free, while all the other parameters are fixed to their expected values;

-

6.

All the systematic parameters are fixed to their expected values. This corresponds to a statistic-only fit, with \(r_S\) as the only active parameter.

The results in terms of the sensitivity to the DM cross-section are given in Table 1.

There are two relevant differences between our innovative implementation and the published one. First of all the published approach computes the sensitivity as the frequentist 90% C.L., while here we perform the analysis in the Bayesian approach, and we quote the \(90\%\) quantile of the posterior pdf of the signal strength parameter \(r_S\) [10]. Even if the two quantities aim at expressing the experimental sensitivity, they are conceptually different, and are defined in totally different ways. However, when operating with the same inputs, they should give numerically comparable results. In addition, when we look at the posterior of \(r_S\), we are integrating over all the nuisance parameter space, while the published approach is based on the profiling of the likelihood. If the likelihood is Gaussian the marginalization and the profiling give the same result. On the other hand, in the general case in which the Gaussian assumption is not valid, the profiling procedure typically returns underestimated propagated uncertainties, and as a consequence stronger limits [76].

Posterior pdfs obtained by fitting in different energy ranges a pseudo-dataset with the full background plus signal model assuming a DM mass \(m_{DM} = 4.5\,\textrm{GeV}/c^2\). The blue shaded histograms correspond to the \(N_{e^-} = \left[ 4,30\right] \) window, the orange shaded histograms correspond to the \(N_{e^-} = \left[ 4,170\right] \) window, and the gray dashed line are the prior pdf of each parameter

We find that the impact of the systematic uncertainties on the calibration parameters has the same size as the impact of all the other systematic parameters, including the parameters regulating the normalization of the background and the theoretical uncertainties on the input spectra, and therefore the correct propagation of their uncertainty is relevant in the final result. For completeness, we report in Fig. 10 in Appendix the full multidimensional posterior pdfs of the complete fit, in which all the systematic parameters are not fixed.

As a further crosscheck we also studied the dependence of the parameters’ mean and standard uncertainty of the posterior pdfs as a function of the DM mass \(m_{DM}\): none of the parameters shows a strong correlation with the DM mass, demonstrating the stability of the fitting procedure.

7.2 Impact of the high \(N_{e^-}\) data points on the limit and the calibration parameters

In this subsection we show the impact of choosing different \(N_{e^-}\) windows on the calibration parameters’ posterior pdfs, and, as a consequence, on the upper limit on the DM cross-section. Since the priors on the calibration parameters correspond to the constraints from the calibration, the differences between priors and posteriors tell us that the data give additional information with respect to the calibration measurements. A better knowledge on the calibration parameters allows obtaining a limit less affected by the systematic uncertainties – see the “None” result of Table 1. In order to investigate this effect, we decided to perform a fit to the expected pseudo-dataset in the regions \(N_{e^-} = \left[ 4,30\right] \) and \(N_{e^-} = \left[ 4,170\right] \). The long high \(N_{e^-}\) tail provides better constraints on the calibration parameters.

In Fig. 7 we show the results of two background plus signal fits on the pseudo-dataset performed in the regions \(N_{e^-} = \left[ 4,30\right] \) and \(N_{e^-} = \left[ 4,170\right] \) (orange and blue shaded histograms, respectively) with respect to the prior pdf (gray dashed line), assuming a DM mass \(m_{{DM}} = 4.5\,\textrm{GeV}/c^2\). The figure shows how the width of the calibration parameters’ posteriors are smaller in the \(N_{e^-} = \left[ 4,170\right] \) case rather than both in the prior and the \(N_{e^-} = \left[ 4,30\right] \) cases. In particular, the posterior pdfs of the ER group are more constrained than the prior pdfs. This behavior does not depend on possible features in the data, since this study is performed on Asimov pseudo-dataset. On the other hand, the posterior pdfs of the NR group are similar to their priors. This is expected since in the pseudo-dataset there is no injected signal and the NR signal is relevant only in the first few bins. As a consequence, the larger data-set does not give additional information on the calibration parameters with respect to the calibration measurements. For this reason we obtain the best sensitivity in the \(N_{e^-} = \left[ 4,170\right] \) case – i.e. \(\sigma _{SI}^{DM} \left[ 90\% \,\mathrm{C.I.}\right] = 2.1\times 10^{-43}\,\mathrm{cm^2}\) – while in the \(N_{e^-} = \left[ 4,30\right] \) the sensitivity is weaker – i.e. \(\sigma _{SI}^{DM} \left[ 90\% \,\mathrm{C.I.}\right] = 2.5\times 10^{-43}\,\mathrm{cm^2}\). The other parameters, not shown in the figure, do not exhibit a significant dependence on the range of the fit.

In summary, in this new implementation of the Bayesian approach we complement the calibration results by further constraining some of its parameters, thus reducing their uncertainties by a factor of \(\sim 2\). We can directly benefit from this improvement by having a stronger bound on the signal and being able to justify it in terms of a better knowledge of the calibration parameters.

7.3 Background-only fit on data

We perform a background-only fit on the data: the best fit (top) and the correspondent normalized residuals (bottom) are reported in Fig. 8. The figure shows that the background model describes the observed data without noticeable deviations or excesses. The behavior of the residual is satisfactory and their distribution over all the data points is consistent with the expectation of a standard normal pdf.

In terms of posterior pdf on the fit parameters, no significant deviation from the prior distribution (the results of the calibration measurements) is observed. In Fig. 12 in 1 we report the full multidimensional posterior of the fit.

7.4 Expected and observed limit of the DarkSide-50 experiment

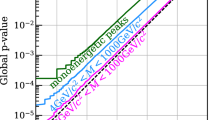

Figure 9 shows the \(90\%\,\mathrm{C.I.}\) expected sensitivity on the DM cross-section \(\sigma _{SI}^{DM}\) as a function of the DM mass (pink dashed line), using the Asimov (background only) dataset of Fig. 5.

The observed limit is shown as a red solid line in Fig. 9. It is computed as the \(90\%\,\mathrm{C.I.}\) bound on the DM cross-section \(\sigma _{SI}^{DM}\) as a function of the DM mass using the full DS-50 dataset.

This line is compared with the most competitive exclusion limits, defined as frequentist 90% C.L.s, in the GeV and sub-GeV DM mass region, i.e. the XENON1T Migdal search [2] (blue line), the XENON1T NR search [3] (blue dashed-dot line), the CRESST-III result [77] (green line), the PandaX-4T result [4] (orange line), and the CDEX result [8, 78] (black line).

No relevant deviations with respect to the expected sensitivity are observed. We performed the study of the behavior of the posterior pdf of the nuisance parameters as a function of the mass of the DM candidate, obtaining similar results, reported in Appendix.

The \(90\%\,\mathrm{C.I.}\) observed limit on the DM cross-section \(\sigma _{SI}^{DM}\) as a function of the DM mass (red solid line) with the inclusion of the Migdal effect for the final DS-50 exposure of \(12202\pm 180\) kg d. The blue line is the frequentist 90% C.L. from the XENON1T Migdal search [2], the blue dashed-dot line is the one from the XENON1T NR search [3], the green line is the one by the CRESST-III experiment [77], the orange line is the one by the PandaX-4T experiment [4], the black line is the one by the CDEX experiment [8, 78], and the purple line is the one from the 2018 DS-50 pure NR analysis [1]. The pink dashed line is the expected sensitivity

8 Conclusions

In this work, we illustrated a novel technique, based on Bayesian Networks, to explicitly include the detector response model in the likelihood function of a measurement. This approach has the advantage with respect to the more conventional profile likelihood methods to not assume “Gaussianity” of the pdfs nor linearity of the problem, to not rely on any signal or background spectrum morphing, and to keep the dependence of the parameter of interest on the response model parameters. In this way, the result of the analysis is not only the measurement of the parameter of interest, but also a possible constraint on the response model. We deployed this method to the search for low mass dark matter with the DS-50 experiment and showed that we obtain consistent results with the recently published analysis [5], and we further constrain the parameters of the detector response model.

In the first sections of this work we introduced the Bayesian Networks approach to the description of a multidimensional pdfs. Such a method gives a clear picture of the connections between the different variables involved in the problem, allowing to identify groups of variable that decouple for the rest the problem. Figure 3 describes the entire likelihood of the measurement and shows the role of the various parameters and the information flow from one another. The description of the problem in terms of BN allowed us to develop an inference algorithm based on a Markov Chain Monte Carlo (MCMC) to compute the posterior probability. This probability is constrained on the observed data, while retaining the dependence on each single parameter. A clever description of the detector response model in terms of parametric matrices allows the exploration of the impact of systematic variations of any parameter onto the final results. The proposed treatment significantly improves the understanding of the interplay between calibrations and spectra, as we elaborated in Sect. 4.2 and as it is visible in Fig. 6. In addition, we provide a further insight on the systematic uncertainties induced by the detector model, and, using the additional constraining power of the analyzed data, reduce the uncertainty on several parameters of the detector model.

Graphical representation of the joint posterior pdf of the fit on the expected pseudo-dataset assuming a DM mass \(m_\chi = 4.5\,\textrm{GeV}/c^2\). The plots on the diagonal of the figure are the uni-dimensional pdf of each single parameter obtained by marginalizing on all the others. The bi-dimensional pdfs in the bottom-left corner of the figure give the joint pdfs of each pair of parameters obtained by marginalizing on the others. The plots show also the credible regions at \(68\%,\,95\%,\,99.7\%\) probability as solid contour lines. The correlation coefficients are given in the upper-right corner of the figure

Evolution of the posterior pdfs as a function of the DM mass. The blue points represent the mean of the posterior pdf and their uncertainty bar is the correspondent standard deviation. In each plot, the gray solid line is the mean of the prior pdf, while the two gray dashed lines correspond to the standard deviation of the prior pdf

Graphical representation of the joint posterior pdf of the background only fit on the DS-50 observed dataset. The plots on the diagonal of the figure are the uni-dimensional pdf of each single parameter obtained by marginalizing on all the others. The bi-dimensional pdfs in the bottom-left corner of the figure give the joint pdfs of each pair of parameters obtained by marginalizing on the others. The plots show also the credible regions at \(68\%,\,95\%,\,99.7\%\) probability as solid contour lines. The correlation coefficients are given in the upper-right corner of the figure

Data Availability Statement

This manuscript has no associated data or the data will not be deposited. [Authors’ comment: The data relevant to this publication are reported in form of published plots. No public database or data repository is associated with the article, yet. However, these data will be soon made available on the collaboration website https://darkside.lngs.infn.it.]

Notes

This procedure represents the most common approach in Frequentistic analyses to take systematic uncertainties into account.

We use the following parameterization: \(a_0 = \gamma \rho \,\textrm{keV}\), \(a_1 = 1/\gamma \), \(a_2 = p_0\), and \(a_3 = p_1\), where \(\gamma \), \(\rho \), \(p_0\) and \(p_1\) are the parameters of the extended Thomas–Imel box model defined in Ref. [61].

No assumption on \(\varvec{\theta }_{cal}\) is needed to produce the \(\pm \sigma \) spectra because their joint pdf are included in the method.

References

DarkSide Collaboration, P. Agnes et al., Low-mass dark matter search with the DarkSide-50 experiment. Phys. Rev. Lett. 121(8), 081307 (2018). arXiv:1802.06994

XENON Collaboration, E. Aprile et al., Search for light dark matter interactions enhanced by the Migdal effect or Bremsstrahlung in XENON1T. Phys. Rev. Lett. 123(24), 241803 (2019). arXiv:1907.12771

XENON Collaboration, E. Aprile et al., Light dark matter search with ionization signals in XENON1T. Phys. Rev. Lett. 123(25), 251801 (2019). arXiv:1907.11485

PandaX-4T Collaboration, Y. Meng et al., Dark matter search results from the PandaX-4T commissioning run. Phys. Rev. Lett. 127(26), 261802 (2021). arXiv:2107.13438

DarkSide-50 Collaboration, P. Agnes et al., Search for low-mass dark matter WIMPs with 12 ton-day exposure of DarkSide-50. arXiv:2207.11966

DarkSide Collaboration, P. Agnes et al., Search for dark matter–nucleon interactions via Migdal effect with DarkSide-50. arXiv:2207.11967

CRESST Collaboration, A.H. Abdelhameed et al., First results from the CRESST-III low-mass dark matter program. Phys. Rev. D 100(10), 102002 (2019). arXiv:1904.00498

CDEX Collaboration, Z.Z. Liu et al., Studies of the Earth shielding effect to direct dark matter searches at the China Jinping Underground Laboratory. Phys. Rev. D 105(5), 052005 (2022). arXiv:2111.11243

G. Cowan, K. Cranmer, E. Gross, O. Vitells, Asymptotic formulae for likelihood-based tests of new physics. Eur. Phys. J. C 71, 1554 (2011). arXiv:1007.1727 [Erratum: Eur. Phys. J. C 73, 2501 (2013)]

Particle Data Group Collaboration, P.A. Zyla et al., Review of particle physics. PTEP 2020(8), 083C01 (2020)

M. Baak, S. Gadatsch, R. Harrington, W. Verkerke, Interpolation between multi-dimensional histograms using a new non-linear moment morphing method. Nucl. Instrum. Meth. A 771, 39–48 (2015). arXiv:1410.7388

J. Aalbers, B. Pelssers, V.C. Antochi, P.L. Tan, J. Conrad, Finding dark matter faster with explicit profile likelihoods. Phys. Rev. D 102(7), 072010 (2020). arXiv:2003.12483

LUX Collaboration, D.S. Akerib et al., Fast and flexible analysis of direct dark matter search data with machine. arXiv:2201.05734

R.S. James, J. Palmer, A. Kaboth, C. Ghag, J. Aalbers, FlameNEST: explicit profile likelihoods with the Noble Element Simulation Technique. JINST 17(08), P08012 (2022). arXiv:2204.13621

I. Coarasa et al., Improving ANAIS-112 sensitivity to DAMA/LIBRA signal with machine learning techniques. JCAP 11, 048 (2022). arXiv:2209.14113

J. Herrero-Garcia, R. Patrick, A. Scaffidi, A semi-supervised approach to dark matter searches in direct detection data with machine learning. JCAP 02(02), 039 (2022). arXiv:2110.12248

J. Pearl, Probabilistic Reasoning in Intelligent Systems: Networks of Plausible Inference (Morgan-Kaufmann, Burlington, 1988)

F.V. Jensen, An Introduction to Bayesian Networks (UCL Press, London, 1996)

G. D’Agostini, Bayesian Reasoning in Data Analysis: A Critical Introduction (2003)

D. Koller, N. Friedman, Probabilistic Graphical Models: Principles and Techniques (MIT Press, Cambridge, 2009)

R.A. Howard, J.E. Matheson, Readings on the Principles and Applications of Decision Analysis (Strategic Decisions Group, 1989)

J. Smith, The Annals of Statistics (1989)

T. Verma, J. Pearl, in 4th Workshop on Uncertainty in Artificial Intelligence (1988)

D. Geiger, J. Pearl, in Proceedings of the Fourth Conference on Uncertainty in Artificial Intelligence (1988)

D. Geiger, T. Verma, J. Pearl, Machine Intelligence and Pattern Recognition, vol. 10 (Elsevier, Amsterdam, 1990)

D. Geiger, T. Verma, J. Pearl, Networks 20, 507 (1990)

C. Peters, A. Higuera, S. Liang, V. Roy, W.U. Bajwa, H. Shatkay, C.D. Tunnell, A Method for Quantifying Position Reconstruction Uncertainty in Astroparticle Physics using Bayesian Networks. arXiv:2205.10305

G. D’Agostini, Fits, and especially linear fits, with errors on both axes, extra variance of the data points and other complications. arXiv:physics/0511182

G. D’Agostini, Improved iterative Bayesian unfolding. arXiv e-prints (2010). arXiv:1010.0632

G. D’Agostini, Asymmetric uncertainties: sources, treatment and potential dangers. arXiv:physics/0403086

A. Migdal, Ionization of atoms accompanying \(\alpha \)- and \(\beta \)-decay. J. Phys. USSR 4, 449 (1941)

R. Bernabei et al., On electromagnetic contributions in WIMP quests. Int. J. Mod. Phys. A 22, 3155–3168 (2007). arXiv:0706.1421

M. Ibe, W. Nakano, Y. Shoji, K. Suzuki, Migdal effect in dark matter direct detection experiments. JHEP 03, 194 (2018). arXiv:1707.07258

M.J. Dolan, F. Kahlhoefer, C. McCabe, Directly detecting sub-GeV dark matter with electrons from nuclear scattering. Phys. Rev. Lett. 121(10), 101801 (2018). arXiv:1711.09906

N.F. Bell, J.B. Dent, J.L. Newstead, S. Sabharwal, T.J. Weiler, Migdal effect and photon bremsstrahlung in effective field theories of dark matter direct detection and coherent elastic neutrino-nucleus scattering. Phys. Rev. D 101(1), 015012 (2020). arXiv:1905.00046

D. Baxter, Y. Kahn, G. Krnjaic, Electron ionization via dark matter-electron scattering and the Migdal effect. Phys. Rev. D 101(7), 076014 (2020). arXiv:1908.00012

R. Essig, J. Pradler, M. Sholapurkar, T.-T. Yu, Relation between the Migdal effect and dark matter-electron scattering in isolated atoms and semiconductors. Phys. Rev. Lett. 124(2), 021801 (2020). arXiv:1908.10881

Z.-L. Liang, L. Zhang, F. Zheng, P. Zhang, Describing Migdal effects in diamond crystal with atom-centered localized Wannier functions. Phys. Rev. D 102(4), 043007 (2020). arXiv:1912.13484

G. Grilli di Cortona, A. Messina, S. Piacentini, Migdal effect and photon Bremsstrahlung: improving the sensitivity to light dark matter of liquid argon experiments. JHEP 11, 034 (2020). arXiv:2006.02453

C.P. Liu, C.-P. Wu, H.-C. Chi, J.-W. Chen, Model-independent determination of the Migdal effect via photoabsorption. Phys. Rev. D 102(12), 121303 (2020). arXiv:2007.10965

U.K. Dey, T.N. Maity, T.S. Ray, Prospects of Migdal effect in the explanation of XENON1T electron recoil excess. Phys. Lett. B 811, 135900 (2020). arXiv:2006.12529

S. Knapen, J. Kozaczuk, T. Lin, Migdal effect in semiconductors. Phys. Rev. Lett. 127(8), 081805 (2021). arXiv:2011.09496

N.F. Bell, J.B. Dent, B. Dutta, S. Ghosh, J. Kumar, J.L. Newstead, Low-mass inelastic dark matter direct detection via the Migdal effect. Phys. Rev. D 104(7), 076013 (2021). arXiv:2103.05890

J.F. Acevedo, J. Bramante, A. Goodman, Accelerating composite dark matter discovery with nuclear recoils and the Migdal effect. Phys. Rev. D 105(2), 023012 (2022). arXiv:2108.10889

W. Wang, K.-Y. Wu, L. Wu, B. Zhu, Direct Detection of Spin-Dependent Sub-GeV Dark Matter via Migdal Effect. arXiv:2112.06492

LUX Collaboration, D.S. Akerib et al., Results of a search for sub-GeV dark matter using 2013 LUX data. Phys. Rev. Lett. 122(13), 131301 (2019). arXiv:1811.11241

EDELWEISS Collaboration, E. Armengaud et al., Searching for low-mass dark matter particles with a massive Ge bolometer operated above-ground. Phys. Rev. D 99(8), 082003 (2019). arXiv:1901.03588

CDEX Collaboration, Z.Z. Liu et al., Constraints on spin-independent nucleus scattering with sub-GeV weakly interacting massive particle dark matter from the CDEX-1B experiment at the China Jinping Underground Laboratory. Phys. Rev. Lett. 123(16), 161301 (2019). arXiv:1905.00354

XENON Collaboration, E. Aprile et al., Search for light dark matter interactions enhanced by the Migdal effect or Bremsstrahlung in XENON1T. Phys. Rev. Lett. 123(24), 241803 (2019). arXiv:1907.12771

COSINE-100 Collaboration, G. Adhikari et al., Searching for low-mass dark matter via Migdal effect in COSINE-100. arXiv:2110.05806

P. Astone, G. D’Agostini, Inferring the intensity of Poisson processes at the limit of the detector sensitivity (with a case study on gravitational wave burst search). arXiv:hep-ex/9909047

G. D’Agostini, About probabilistic inference and forecasting by playing with multivariate normal distributions. arXiv:1504.02065

DarkSide Collaboration, P. Agnes et al., First results from the DarkSide-50 dark matter experiment at Laboratori Nazionali del Gran Sasso. Phys. Lett. B 743, 456–466 (2015). arXiv:1410.0653

XENON Collaboration, E. Aprile et al., Dark matter search results from a one ton-year exposure of XENON1T. Phys. Rev. Lett. 121(11), 111302 (2018). arXiv:1805.12562

LUX Collaboration, D.S. Akerib et al., Results from a search for dark matter in the complete LUX exposure. Phys. Rev. Lett. 118(2), 021303 (2017). arXiv:1608.07648

PandaX Collaboration, X. Cao et al., PandaX: a liquid xenon dark matter experiment at CJPL. Sci. China Phys. Mech. Astron. 57, 1476–1494 (2014). arXiv:1405.2882

DarkSide Collaboration, P. Agnes et al., Results from the first use of low radioactivity argon in a dark matter search. Phys. Rev. D 93(8), 081101 (2016). arXiv:1510.00702 [Addendum: Phys. Rev. D 95, 069901 (2017)]

DarkSide Collaboration, P. Agnes et al., Low-mass dark matter search with the DarkSide-50 experiment. Phys. Rev. Lett. 121(8), 081307 (2018). arXiv:1802.06994

DarkSide Collaboration, P. Agnes et al., DarkSide-50 532-day dark matter search with low-radioactivity argon. Phys. Rev. D 98(10), 102006 (2018). arXiv:1802.07198

DarkSide Collaboration, P. Agnes et al., Constraints on sub-GeV dark-matter–electron scattering from the DarkSide-50 experiment. Phys. Rev. Lett. 121(11), 111303 (2018). arXiv:1802.06998

DarkSide Collaboration, P. Agnes et al., Calibration of the liquid argon ionization response to low energy electronic and nuclear recoils with DarkSide-50. Phys. Rev. D 104(8), 082005 (2021). arXiv:2107.08087

X. Mougeot, C. Bisch, Consistent calculation of the screening and exchange effects in allowed \({\beta }^{-}\) transitions. Phys. Rev. A 90, 012501 (2014)

S.J. Haselschwardt, J. Kostensalo, X. Mougeot, J. Suhonen, Improved calculations of beta decay backgrounds to new physics in liquid xenon detectors. Phys. Rev. C 102, 065501 (2020). arXiv:2007.13686

M. Wang, W.J. Huang, F.G. Kondev, G. Audi, S. Naimi, The AME 2020 atomic mass evaluation (II). Tables, graphs and references. Chin. Phys. C 45(3), 030003 (2021)

J. Thomas, D.A. Imel, Recombination of electron–ion pairs in liquid argon and liquid xenon. Phys. Rev. A 36, 614–616 (1987)

F. Bezrukov, F. Kahlhoefer, M. Lindner, F. Kahlhoefer, M. Lindner, Interplay between scintillation and ionization in liquid xenon Dark Matter searches. Astropart. Phys. 35, 119–127 (2011). arXiv:1011.3990

SCENE Collaboration, H. Cao et al., Measurement of scintillation and ionization yield and scintillation pulse shape from nuclear recoils in liquid argon. Phys. Rev. D 91, 092007 (2015). arXiv:1406.4825

P. Agnes et al., Measurement of the liquid argon energy response to nuclear and electronic recoils. Phys. Rev. D 97(11), 112005 (2018). arXiv:1801.06653

T. Doke, A. Hitachi, J. Kikuchi, K. Masuda, H. Okada, E. Shibamura, Absolute scintillation yields in liquid argon and xenon for various particles. Jpn. J. Appl. Phys. 41, 1538–1545 (2002)

U. Fano, Ionization yield of radiations. 2. The fluctuations of the number of ions. Phys. Rev. 72, 26–29 (1947)

D. Baxter et al., Recommended conventions for reporting results from direct dark matter searches. Eur. Phys. J. C 81(10), 907 (2021). arXiv:2105.00599

A. Caldwell, D. Kollár, K. Kröninger, BAT—the Bayesian analysis toolkit. Comput. Phys. Commun. 180, 2197–2209 (2009). arXiv:0808.2552

F. Beaujean, A. Caldwell, D. Greenwald, D. Kollar, K. Kröninger, O. Schulz, Bayesian Analysis Toolkit: BAT. https://github.com/bat/bat

NVIDIA, P. Vingelmann, F.H. Fitzek, CUDA, release: 10.2.89 (2020)

S. Piacentini, “lowmass-bat.” https://github.com/piacent/darkside50_BN (2022)

T.J. Loredo, Accounting for source uncertainties in analyses of astronomical survey data. AIP Conf. Proc. 735(1), 195–206 (2004). arXiv:astro-ph/0409387

CRESST Collaboration, A. Abdelhameed et al., First results from the CRESST-III low-mass dark matter program. Phys. Rev. D 100(10), 102002 (2019). arXiv:1904.00498

CDEX Collaboration, H. Jiang et al., Limits on light weakly interacting massive particles from the first 102.8 kg \({\times }\) day data of the CDEX-10 experiment. Phys. Rev. Lett. 120(24), 241301 (2018). arXiv:1802.09016

Acknowledgements

The DarkSide Collaboration offers its profound gratitude to the LNGS and its staff for their invaluable technical and logistical support. We also thank the Fermilab Particle Physics, Scientific, and Core Computing Divisions. Construction and operation of the DarkSide-50 detector was supported by the U.S. National Science Foundation (NSF) (Grants No. PHY-0919363, No. PHY-1004072, No. PHY-1004054, No. PHY-1242585, No. PHY-1314483, No. PHY-1314501, No. PHY-1314507, No. PHY-1352795, No. PHY-1622415, and associated collaborative grants No. PHY-1211308 and No. PHY-1455351), the Italian Istituto Nazionale di Fisica Nucleare, the U.S. Department of Energy (Contracts No. DE-FG02-91ER40671, No. DEAC02-07CH11359, and No. DE-AC05-76RL01830), the Polish NCN (Grant No. UMO-2019/33/B/ST2/02884) and the Polish Ministry for Education and Science (Grant No. 6811/IA/SP/2018). We also acknowledge financial support from the French Institut National de Physique Nucléaire et de Physique des Particules (IN2P3), the IN2P3-COPIN consortium (Grant No. 20-152), and the UnivEarthS LabEx program (Grants No. ANR-10-LABX-0023 and No. ANR-18-IDEX-0001), from the São Paulo Research Foundation (FAPESP) (Grant No. 2016/09084-0), from the Interdisciplinary Scientific and Educational School of Moscow University “Fundamental and Applied Space Research”, from the Program of the Ministry of Education and Science of the Russian Federation for higher education establishments, project No. FZWG-2020-0032 (2019-1569), from IRAP AstroCeNT funded by FNP from ERDF, and from the Science and Technology Facilities Council, United Kingdom. I. Albuquerque is partially supported by the Brazilian Research Council (CNPq). This project has received funding from the European Union’s Horizon 2020 research and innovation program under grant agreement No 952480. Isotopes used in this research were supplied by the United States Department of Energy Office of Science by the Isotope Program in the Office of Nuclear Physics.

Author information

Authors and Affiliations

Consortia

Corresponding author

Appendices

A Expected joint posterior pdf

In Fig. 10 we report the joint posterior pdf of the fit on the expected pseudo-dataset assuming a DM mass \(m_\chi = 4.5\,\textrm{GeV}/c^2\), and the evolution of the posterior pdfs as a function of the mass is reported in Fig. 11.

B Background only joint posterior pdf

In Fig. 12 we report joint posterior pdf of the background only fit on the DS-50 observed dataset.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

Funded by SCOAP3. SCOAP3 supports the goals of the International Year of Basic Sciences for Sustainable Development.

About this article

Cite this article

Agnes, P., Albuquerque, I.F.M., Alexander, T. et al. Search for low mass dark matter in DarkSide-50: the bayesian network approach. Eur. Phys. J. C 83, 322 (2023). https://doi.org/10.1140/epjc/s10052-023-11410-4

Received:

Accepted:

Published:

DOI: https://doi.org/10.1140/epjc/s10052-023-11410-4