Abstract

Parameter estimation via unbinned maximum likelihood fits is central for many analyses performed in high energy physics. Unbinned maximum likelihood fits using event weights, for example to statistically subtract background contributions via the sPlot formalism, or to correct for acceptance effects, have recently seen increasing use in the community. However, it is well known that the naive approach to the estimation of parameter uncertainties via the second derivative of the logarithmic likelihood does not yield confidence intervals with the correct coverage in the presence of event weights. This paper derives the asymptotically correct expressions and compares them with several commonly used approaches for the determination of parameter uncertainties, some of which are shown to not generally be asymptotically correct. In addition, the effect of uncertainties on event weights is discussed, including uncertainties that can arise from the presence of nuisance parameters in the determination of sWeights.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Unbinned maximum likelihood fits are an essential tool for parameter estimation in high energy physics, due to the desirable features of the maximum likelihood estimator. In the asymptotic limit the maximum likelihood estimator is normally distributed around the true parameter value and its variance is equal to the minimum variance bound [1, 2]. Furthermore, in the unbinned approach no information is lost due to binning.

The inclusion of weights into the maximum likelihood formalism is desirable in many applications. Examples are the statistical subtraction of background events in the sPlot formalism [3] through the use of per-event weights, and the correction of acceptance effects via weighting by the inverse efficiency. However, with the inclusion of per-event weights the confidence intervals determined by the inverse second derivative of the negative logarithmic likelihood (in the multidimensional case the inverse of the Hessian matrix of the negative logarithmic likelihood) are no longer asymptotically correct.Footnote 1 There are several approaches that are commonly used to determine confidence intervals in the presence of event weights. However, as will be shown below, not all of these techniques are guaranteed to give asymptotically correct coverage. In this paper, the asymptotically correct expressions for the determination of parameter uncertainties will be derived and then compared with these approaches.

This paper is structured as follows: In Sect. 2 the unbinned maximum likelihood formalism is briefly summarised and the inclusion of event weights is discussed. The asymptotically correct expression for static event weights is derived in Sect. 2.1 and compared with several commonly used approaches to determine uncertainties in weighted maximum likelihood fits in Sect. 2.2. Section 3.1 details the correction of acceptance effects through event weights and discusses the impact of weight uncertainties in this context. Section 3.2 derives the asymptotically correct expressions for parameter uncertainties from fits of sWeighted data, and also details the impact of potential nuisance parameters present in the determination of sWeights. Different approaches to the determination of parameter uncertainties are compared and contrasted using two specific examples in Sect. 4, an angular fit correcting for an acceptance effect (Sect. 4.1) and the determination of a lifetime when statistically subtracting background events using sWeights (Sect. 4.2). Finally, conclusions are given in Sect. 5.

2 Unbinned maximum likelihood fits and event weights

The maximum likelihood estimator for a set of \(N_P\) parameters \(\varvec{\lambda }=\left\{ \lambda _{1},\ldots ,\lambda _{N_P}\right\} \), given N independent and identically distributed measurements \(\varvec{x}=\left\{ x_1,\ldots ,x_N\right\} \), is determined by solving (typically numerically using a software package like Minuit [5]) the maximum likelihood condition

where \({{\mathcal {P}}}(x_e;\varvec{\lambda })\) denotes the probability density function evaluated for the event \(x_e\) and parameters \(\varvec{\lambda }\). Maximising the logarithmic likelihood \(\ln {{\mathcal {L}}}\) finds the parameters \(\hat{\varvec{\lambda }}\) for which the measured data \(\varvec{x}\) becomes the most likely. The covariance matrix \(\varvec{C}\) for the parameters in the absence of event weights can be calculated from the inverse matrix of second derivatives (the Hessian matrix) of the negative logarithmic likelihood

evaluated at \(\varvec{\lambda }=\hat{\varvec{\lambda }}\). When including event weights \(w_{e=1,\ldots ,N}\) to give each measurement a specific weight the maximum likelihood condition becomesFootnote 2

Depending on the application, the weight \(w_e\) can be a function of \(x_e\) and other event quantities \(y_e\) that \({{\mathcal {P}}}(x_e;\varvec{\lambda })\) does not depend on directly. For efficiency corrections the weights are given by \(w_e(x_e,y_e)=1/\epsilon (x_e,y_e)\) as detailed in Sect. 3.1, for sWeights the weights \(w_e(y_e)\) depend on the discriminating variable y as described in Sect. 3.2. It should be noted, that the weighted inverse Hessian matrix

will generally not give asymptotically correct confidence intervals. This can be most easily seen when assuming constant weights \(w_e=w\) which will result in an over-estimation (\(w>1\)) or under-estimation (\(w<1\)) of the statistical power of the sample and confidence intervals that thus under- or overcover.

2.1 Asymptotically correct uncertainties in the presence of per-event weights

To derive the parameter variance in the presence of event weights, which for now are considered to be static, the simple case of a single parameter \(\lambda \) is discussed first. In this case, the estimator \({\hat{\lambda }}\) is defined implicitly by the condition

which is referred to as an estimating equation in the statistical literature. A central prerequisite for the following derivation is the propertyFootnote 3

which is shown for event weights to correct an acceptance effect in Sect. 3.1 (Eq. 29). The more complex case of sWeights will be discussed in Sect. 3.2 (see Eqs. 42–44). Using the fact that \(E( w\partial ^2 \ln {{\mathcal {P}}}/\partial \lambda ^2|_{\lambda })<0\) (see Eq. 30) it can be shown that the estimator \({\hat{\lambda }}\) defined by Eq. 5 is consistent [6]. We can then Taylor-expand Eq. 5 to first order around the (unknown) true value \(\lambda _0\), to which \({\hat{\lambda }}\) converges in the asymptotic limit of large N:

This equation can be rewritten as

giving the deviation of the estimator \({\hat{\lambda }}\) from the true value \(\lambda _0\). Here, we used that the sum in the denominator goes to

in the asymptotic limit due to the law of large numbers. Due to the central limit theorem, the numerator converges to a Gaussian distribution with mean zero (according to Eq. 6) and variance

Using Eqs. 9 and 10, the variance in the asymptotic limit at leading order is thus given by

The right-hand side of Eq. 11 is the inverse Godambe information [7, 8], which is central to the theory of estimating equations. As the estimator is consistent, we replace \(\lambda _0\) with \({\hat{\lambda }}\) in the asymptotic limit, and further estimate the expectation values through the sample, resulting in

This expression is also known as the sandwich estimator. In the case where event weights are absent (\(w_e=1\)), the numerator in Eq. 11 cancels with one of the inverse Hessian matrices as in this case

For \(w_e=1\) the Godambe information thus simplifies to the well known Fisher information.

For the multidimensional case we analogously Taylor-expand Eq. 3 to first order, resulting in

which can be written as a matrix equation

Matrix inversion yields an expression for the deviation of the estimator \({\hat{\lambda }}_i\) from the true value \(\lambda _{0i}\)

The covariance matrix \(\varvec{C}\) is then given by

which can be compactly written as

The above expressions are familiar from the derivation of Eq. 2 (in the absence of event weights) in standard textbooks (e.g. Ref. [9]). Equation 18 has been previously discussed in Ref. [4] in the context of event weights for efficiency correction. However, it does not seem to be commonly used and often one of the approaches detailed below in Sect. 2.2 is employed instead.

2.2 Commonly used approaches to uncertainties in weighted fits

Instead of using the asymptotically correct approach for static event weights given by Eq. 18, often other techniques are used to determine parameter uncertainties in weighted unbinned maximum likelihood fits which are presented below. We stress that of the techniques (a)–(c) listed below only the bootstrapping approach (c) will result in generally asymptotically correct uncertainties.

-

(a)

A simple approach used sometimes (e.g. in Ref. [10]) is to rescale the weights \(w_e\) according to

$$\begin{aligned} w_e^\prime&= w_e\frac{\sum _{e=1}^N w_e}{\sum _{e=1}^N w_e^2} \end{aligned}$$(19)and to use Eq. 4 with the weights \(w_e^\prime \). This will rescale the weights such that their sum corresponds to Kish’s effective sample size [11], however, this approach will not generally reproduce the result in Eq. 18.

-

(b)

A method proposed in Refs. [1, 2] is to correct the covariance matrix according to

$$\begin{aligned} {C}_{ij}^\prime&= \sum _{k,l=1}^{N_P} \left. {H}_{ik}^{-1} {W}_{kl} {H}_{lj}^{-1}\right| _{\hat{\varvec{\lambda }}}, \end{aligned}$$(20)where \(\varvec{H}\) is the weighted Hessian matrix defined in Eq. 15 and \(\varvec{W}\) is the Hessian matrix determined using squared weights \(w_e^2\) according to

$$\begin{aligned} {W}_{kl}&= -\sum _{e=1}^N w_e^2\frac{\partial ^2\ln {{\mathcal {P}}}(x_e;\varvec{\lambda })}{\partial \lambda _k\partial \lambda _l}. \end{aligned}$$(21)This method is the nominal method used in the Roofit software package when using weighted events [12] and is thus widely used in particle physics. It corresponds to the result in Eq. 18 only if

$$\begin{aligned}&E\biggl ( \sum _{e=1}^N w_e^2 \left. \frac{\partial \ln {{\mathcal {P}}}(x_e;\varvec{\lambda })}{\partial \lambda _k}\right| _{\hat{\varvec{\lambda }}} \left. \frac{\partial \ln {{\mathcal {P}}}(x_e;\varvec{\lambda })}{\partial \lambda _l}\right| _{\hat{\varvec{\lambda }}} \biggr ) \nonumber \\&\quad = -E\biggl ( \sum _{e=1}^N w_e^2 \left. \frac{\partial ^2\ln {{\mathcal {P}}}(x_e;\varvec{\lambda })}{\partial \lambda _k\partial \lambda _l}\right| _{\hat{\varvec{\lambda }}}\biggr ). \end{aligned}$$(22)This is however not generally the case. This becomes more clear when rewriting the left- and right-hand side of Eq. 22 according to

$$\begin{aligned}&\sum _{e=1}^N w_e^2 \left. \frac{\partial \ln {{\mathcal {P}}}(x_e;\varvec{\lambda })}{\partial \lambda _k}\right| _{\hat{\varvec{\lambda }}} \left. \frac{\partial \ln {{\mathcal {P}}}(x_e;\varvec{\lambda })}{\partial \lambda _l}\right| _{\hat{\varvec{\lambda }}}\nonumber \\&= \sum _{e=1}^N \frac{w_e^2}{{{\mathcal {P}}}^2(x_e;\varvec{\lambda })} \left. \frac{\partial {{\mathcal {P}}}(x_e;\varvec{\lambda })}{\partial \lambda _k}\right| _{\hat{\varvec{\lambda }}} \left. \frac{\partial {{\mathcal {P}}}(x_e;\varvec{\lambda })}{\partial \lambda _l}\right| _{\hat{\varvec{\lambda }}}\quad \mathrm{and} \end{aligned}$$(23)$$\begin{aligned}&\qquad -\sum _{e=1}^N w_e^2 \left. \frac{\partial ^2\ln {{\mathcal {P}}}(x_e;\varvec{\lambda })}{\partial \lambda _k\partial \lambda _l}\right| _{\hat{\varvec{\lambda }}}\nonumber \\&\quad = \sum _{e=1}^N \frac{w_e^2}{{{\mathcal {P}}}^2(x_e;\varvec{\lambda })} \left. \frac{\partial {{\mathcal {P}}}(x_e;\varvec{\lambda })}{\partial \lambda _k}\right| _{\hat{\varvec{\lambda }}} \left. \frac{\partial {{\mathcal {P}}}(x_e;\varvec{\lambda })}{\partial \lambda _l}\right| _{\hat{\varvec{\lambda }}}\nonumber \\&\qquad -\sum _{e=1}^N\frac{w_e^2}{{{\mathcal {P}}}(x_e;\varvec{\lambda })} \left. \frac{\partial ^2 {{\mathcal {P}}}(x_e;\varvec{\lambda })}{\partial \lambda _k\partial \lambda _l}\right| _{\hat{\varvec{\lambda }}}. \end{aligned}$$(24)The expectation value of the second part on the right-hand side of Eq. 24 is not generally zero. While Refs. [1, 2] correctly derive that the expectation value

$$\begin{aligned} E\biggl (\frac{w}{{{\mathcal {P}}}(x;\varvec{\lambda })}\frac{\partial ^2{{\mathcal {P}}}(x;\varvec{\lambda })}{\partial \lambda _k\partial \lambda _l}\biggr )&= 0 \end{aligned}$$(25)for an efficiency correction \(\epsilon _e=1/w_e\), this is not generally the case for the expression with squared weights

$$\begin{aligned} E\biggl (\frac{w^2}{{{\mathcal {P}}}(x;\varvec{\lambda })}\frac{\partial ^2{{\mathcal {P}}}(x;\varvec{\lambda })}{\partial \lambda _k\partial \lambda _l}\biggr ) \end{aligned}$$(26)resulting in confidence intervals that are not generally asymptotically correct when using this approach. This will be detailed for efficiency corrections in Sect. 3.1. For the specific example discussed in Sect. 4.1, the corresponding expectation values are calculated explicitly in Appendix A.

-

(c)

A general approach for the determination of parameter uncertainties is to bootstrap the data [13]. Repeatedly resampling the data set with replacement allows new samples to be generated that can in turn be used to estimate the parameters \(\varvec{\lambda }\) using the maximum likelihood method. The width of the distribution of estimated parameter values can then be used as estimator for the parameter uncertainty. This approach is generally valid, however repeatedly (typically \({{\mathcal {O}}}(10^3)\) times) solving Eq. 3 numerically can be computationally expensive and thus this approach is often unfeasible.

3 Event weights and inclusion of weight uncertainties

3.1 Acceptance corrections

Following the notation in Refs. [1, 2], this section details the correction of acceptance effects using event weights. Acceptance of events with a certain probability \(\epsilon \), depending on the measurements \(x_e\) and \(y_e\), can be accounted for in unbinned maximum likelihood fits by using event weights \(w_e=1/\epsilon (x_e,y_e)\) in Eq. 3. The efficiency \(\epsilon (x,y)\) should be positive over the full phasespace considered, regions of phasespace where the efficiency is zero should be excluded from the analysis.Footnote 4 Here, we differentiate between the quantities x that the probability density function \({{\mathcal {P}}}(x;\varvec{\lambda })\) in Eq. 3 depends on directly, and potential additional quantities y that can depend on x. Using event weights can be advantageous when it is difficult or computationally expensive to determine the norm of the probability density function when including the efficiency as an explicit additional multiplicative factor \(\epsilon (x,y)\). The covariance in this case can be estimated using Eq. 18 as previously suggested in Ref. [4].

To determine expectation values it is necessary to include the acceptance effect in the probability density function. The probability density function \({{\mathcal {P}}}(x,y;\varvec{\lambda })\) gives the probability to find the measurements x and y depending on the parameters \(\varvec{\lambda }\) with

and the proper normalisation \(\int {{\mathcal {P}}}(x;\varvec{\lambda }){\text {d}}x=1\) and \(\int {{\mathcal {Q}}}(y;x){\text {d}}y=1\). The resulting total probability density function including the acceptance effect is then given by

with normalisation \({{\mathcal {N}}}\). This is the probability density function that needs to be used when determining expectation values. For the likelihood condition we find

confirming the central property of Eq. 6. Further, we obtain

where also the expectation value in Eq. 25 is shown, and

However, the equality derived above is not generally fulfilled for squared weights. In this case, we find

and

where the term in the last line of Eq. 33, which corresponds to the expectation value in Eq. 26, is not generally zero, as the integral in the numerator can retain a dependence on \(\varvec{\lambda }\). For the example discussed in Sect. 4.1 this is explicitly calculated in Appendix A. This shows that parameter uncertainties determined using Eq. 20 are not generally asymptotically correct when performing weighted fits to account for acceptance corrections.

3.1.1 Weight uncertainties

If the weights to correct for an acceptance effect are only known to a certain precision, i.e. they are not fixed as assumed in Sect. 2.1, this induces an additional variance that is not included in Eq. 18 and that needs to be accounted for. This additional covariance can be estimated using standard error propagation, starting from Eq. 16. For weights depending on the \(N_T\) parameters \(p_m\) with covariance matrix \(\varvec{M}\), this results in

where \(d_j\) and \({H}_{ij}\) are defined in Eq. 15. Due to the likelihood condition \(d_j\bigr |_{\hat{\varvec{\lambda }}}=0\), the second term in Eq. 35 behaves as \({{\mathcal {O}}}(1/\sqrt{N})\) and only the first term needs to be considered.

For the case of an efficiency histogram, where the efficiency is given in \(N_B\) bins with weight uncertainty \(\sigma _{m=1,\ldots ,N_B}\), weights inside a bin are fully correlated, but typically uncorrelated with other bins. In this case, the additional covariance matrix that needs to be added to account for the weight uncertainties is given by

If the efficiency is modelled analytically, for example by a parameterisation that is fit to simulated samples, the impact of the uncertainty of the parameters \(\varvec{p}\) on the event weights \(w_e(\varvec{p})=1/\epsilon _e(\varvec{p})\) needs to be accounted for. For \(N_T\) parameters with covariance \(\varvec{M}\) the additional covariance matrix that needs to be added to Eq. 18 is given by

Identical results are obtained when using the systematic approach to error propagation that is employed for sWeights in the next section, which is based on combining the estimating equations for the parameters \(\varvec{p}\) and \(\varvec{\lambda }\) in a single vector.

3.2 The sPlot formalism

The sPlot formalism was introduced in Ref. [3] to statistically separate different event species in a data sample using per-event weights, the so-called sWeights, that are determined using a discriminating variable (in the following denoted by y). The sWeights allow to reconstruct the distribution of the different species in a control variable (in the following denoted by x), assuming the control and discriminating variables are statistically independent for each species. In this section, only a brief recap of the sPlot formalism is given, it is described in more detail in Ref. [3].

The sWeights are determined using an extended unbinned maximum likelihood fit of the discriminating variable y where the \(N_S\) different event species are well separated. An example of a discriminating variable (which will be discussed in more detail in Sect. 4.2) would be the reconstructed mass of a particle which is flat for the background components and peaks clearly for the signal component. For the typical use case of a signal component of interest and a single background component, the sWeight for event e is given by

where \(\hat{N}_{\mathrm {s}}=\hat{V}_{ss}+\hat{V}_{sb}\) and \(\hat{N}_{\mathrm {b}}=\hat{V}_{bb}+\hat{V}_{sb}\) is used [3]. Retaining only the dependency on the inverse covariance matrix elements \(V_{ij}^{-1}\) simplifies the following derivations. The estimates for the inverse covariance matrix elements are given by

Using the sWeights in a weighted unbinned maximum likelihood fit allows to statistically subtract events originating from species not of interest [14], by including them as event weights in Eq. 3, resulting in the estimating equations

The sWeights depend on estimates for the inverse covariance matrix elements \(V_{ij}^{-1}\) (Eq. 38), which in turn depend on estimates for the signal and background yields (Eq. 39) determined from the same sample.Footnote 5 To account for this effect, i.e. in order to systematically perform error propagation, it is useful to combine the estimating equations for the yields \(N_{\mathrm {s}}\) and \(N_{\mathrm {b}}\), the inverse covariance matrix elements \(V_{ij}^{-1}\), and the parameters of interest \(\varvec{\lambda }\) in a single vector

where \(\varvec{\theta }\) denotes the vector of parameters \(\varvec{\theta }=\{N_{\mathrm {s}},N_{\mathrm {b}},V_{ss}^{-1},V_{sb}^{-1},V_{bb}^{-1},\varvec{\lambda }\}\). It should be noted that solving \(\varvec{g}(\varvec{x},\varvec{y};\varvec{\theta })|_{\hat{\varvec{\theta }}}=0\) is equivalent to solving the estimating equations for the yields, the inverse covariance matrix elements, and the parameters of interest \(\varvec{\lambda }\) sequentially. It can be shown that \(E\bigl (\varvec{g}(\varvec{x},\varvec{y};\varvec{\theta })|_{\varvec{\theta }_0}\bigr )=0\), i.e. the estimating equations are unbiased,Footnote 6 as

and

The covariance matrix for the full system in the asymptotic limit is given byFootnote 7 [6, 18]

The covariance can be estimated from the sample by replacing the expectation values \(E\bigl (\partial g_i(\varvec{x},\varvec{y};\varvec{\theta })/\partial \theta _j\bigr )\) and \(E\bigl (g_i(\varvec{x},\varvec{y};\varvec{\theta })g_j(\varvec{x},\varvec{y};\varvec{\theta })\bigr )\) by their sample estimates, which are given in Appendices B.1 and B.2, respectively.

For the case of classic sWeights, where the shapes of all probability density functions are known, the above expression can be simplified further. The detailed calculation is given in Appendix B. For the covariance of the parameters of interest \(\varvec{\lambda }\) it results in

Note that using only the first term in Eq. 46 (which corresponds to Eq. 18) would not generally be asymptotically correct but instead conservative for the parameter variances, as the matrix \(\varvec{C}^\prime \) is positive definite.

The same technique used for the calculation of Eq. 46 can also be used to determine the (co)variance of the sum of sWeights in non-overlapping bins in the control variable x, which is needed to perform \(\chi ^2\) fits of binned sWeighted data. The detailed calculation for the covariance of \(\varvec{S}\) (with \(S_i=\sum _{e\,\in \,\text {bin}\,i}w_{\mathrm {s}}(y_e)\) for bin i) is given in Appendix C and results in

As is apparent, using only the first term in Eq. 47, i.e. using \(\sum _{e\,\in \,{\mathrm {bin}}\,i}w_{\mathrm {s}}^2(y_e)\) as estimate for the variance of the content of bin i, is not generally asymptotically correct but conservative, as \(\varvec{C}^\prime \) is positive definite. The second term in Eq. 47 also induces correlations between bins which should be accounted for in a binned \(\chi ^2\) fit.

3.2.1 sWeights and nuisance parameters

Additional nuisance parameters \(\varvec{\alpha }\) present in the extended maximum likelihood fit of the event yields (e.g. shape parameters of \(\mathcal{P}_{\mathrm {s}}\) or \(\mathcal{P}_{\mathrm {b}}\)) can be easily included in the formalism used in the previous section. The estimating equations \(\varphi _i(\varvec{y};\varvec{\alpha },N_{\mathrm {s}},N_{\mathrm {b}})\) for the parameters \(\varvec{\alpha }\) need to be added to the vector \(\varvec{g}\) defined in Eq. 41, resulting in a modified

where the vector of parameters is \(\varvec{\theta }^\prime =\{N_{\mathrm {s}},N_{\mathrm {b}},\varvec{\alpha },V_{ss}^{-1},V_{sb}^{-1},V_{bb}^{-1},\varvec{\lambda }\}\). The covariance matrix in the asymptotic limit is then given by

The covariance can again be estimated from the sample by replacing the expectation values \(E\bigl (\partial g_i^\prime (\varvec{x},\varvec{y};\varvec{\theta }^\prime )/\partial \theta _j^\prime \bigr )\) and \(E\bigl (g_i^\prime (\varvec{x},\varvec{y};\varvec{\theta }^\prime )g_j^\prime (\varvec{x},\varvec{y};\varvec{\theta }^\prime )\bigr )\) by their sample estimates. It should be noted that the nuisance parameters \(\varvec{\alpha }\) in this case will induce additional covariance terms beyond Eq. 46.Footnote 8

4 Examples

4.1 Correcting for an acceptance effect with event weights

The first example discussed in this paper is the fit of an angular distribution to determine angular coefficients, using event weights to correct for an acceptance effect. The probability density function used to generate and fit the pseudoexperiments is a simple second order polynomial in the angle \(\cos \theta \):

In the generation, the values \(c_0^{\mathrm{gen}}=0\) and \(c_1^{\mathrm{gen}}=0\) are used. Events are generated using a \(\cos \theta \)-dependent efficiency \(\epsilon (\cos \theta )\). Two efficiencies shapes are studied, given by

-

(a)

\(\epsilon (\cos \theta ) = 1.0-0.7\cos ^2\theta \) and

-

(b)

\(\epsilon (\cos \theta ) = 0.3+0.7\cos ^2\theta \).

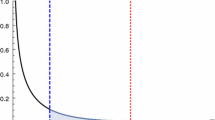

For simplicity, no uncertainty is assumed on the description of the acceptance effect by \(\epsilon (\cos \theta )\), otherwise the effect of uncertainties on event weights would need to be included as described in Sect. 3.1. Figure 1 shows the generated data (including the acceptance effect) in black and the efficiency corrected distributions, weighted by \(w_e=1/\epsilon (\cos \theta _e)\) in red.

The parameters \(c_0\) and \(c_1\) are determined using a weighted unbinned maximum likelihood fit, solving Eq. 3. The uncertainties on the parameters \(c_0\) and \(c_1\) are determined using the approaches to determine parameter uncertainties that are discussed in Sect. 2. The following methods are studied:

-

(a)

The method of using the uncertainties determined according to Eq. 4 without any correction, denoted as wFit in this section.

-

(b)

Scaling the weights according to Eq. 19. This approach is referred to as scaled weights.

-

(c)

Determining the covariance matrix using Eq. 20. This method is referred to as squared correction in the following.

-

(d)

Bootstrapping the data (using 1000 bootstraps) with replacement, denoted as bootstrapping.

-

(e)

The method to determine the covariance according to Eq. 18 as discussed in Sect. 2.1, referred to as asymptotic method.

-

(f)

A conventional fit (cFit) modelling the efficiency correction effect in the probability density function (and its normalisation) instead of using event weights.

The performance of the methods is compared using pseudoexperiments, with each study consisting of 10 000 toy data samples. The same data samples are used for every method. The distribution of the pull, defined as \(p_i(c_0)=(c_{0,i}-c_{0}^{\mathrm{gen}})/\sigma _i(c_0)\) (and analogously for parameter \(c_1\)), is used to test the different methods for uncertainty estimation. Here, the fitted value for parameter \(c_0\) in pseudoexperiment i is denoted as \(c_{0,i}\), the corresponding uncertainty is denoted as \(\sigma _{i}(c_0)\) and the generated value as \(c_0^{\mathrm{gen}}\). If the fit is unbiased and the uncertainties are determined correctly, the pull distribution is expected to be a Gaussian distribution with a mean compatible with zero and a width compatible with one. Different event yields per data sample (\(N=500\), 1000, 2000, 5000, \(10\,000\), \(20\,000\), \(50\,000\)) are studied to investigate the influence of statistics.

The pull distributions for the parameters \(c_0\) and \(c_1\) for 2000 events are shown in Figs. 2 and 3. The pull means and widths depending on statistics are given in Figs. 4 and 5. Numerical values are given in Tables 2 and 3 in Appendix D. A few remarks are in order.

Pull distributions from 10 000 pseudoexperiments for the different approaches to the uncertainty estimation for a total yield of 2000 events in each pseudoexperiment. The different figures shown correspond to the different background models as specified in Sect. 4

Pull distributions from 10 000 pseudoexperiments for the different approaches to the uncertainty estimation for a total yield of 2000 events in each pseudoexperiment. The different figures shown correspond to the different background models as specified in Sect. 4

The wFit method is unbiased but shows significant undercoverage for \(c_0\) and \(c_1\) for both acceptance corrections tested. The scaled weights approach shows significant undercoverage for both \(c_0\) and \(c_1\) for the acceptance (a) and overcoverage for acceptance (b). In both cases, the coverage remains incorrect even for high statistics. Both the use of the wFit as well as the scaled weights methods are therefore strongly disfavoured to determine the parameter uncertainties in this example for a simple efficiency correction. The squared method shows good behaviour for parameter \(c_0\) but incorrect coverage for parameter \(c_1\). For parameter \(c_1\) the method shows overcoverage for acceptance (a) and very significant undercoverage (more severe than even the wFit) for acceptance (b). The reason for this behaviour is the different expectation value of Eq. 26 with respect to the second derivatives to \(c_0\) and \(c_1\), as detailed in Appendix A. This illustrates that the squared correction method, which is widely used in particle physics, in general does not provide asymptotically correct confidence intervals when using event weights to correct for acceptance effects. Bootstrapping the data sample or using the asymptotic approach results in pull distributions with correct coverage for both \(c_0\) and \(c_1\) and both acceptance effects. No bias is observed for parameter \(c_0\) and only a small bias is found for \(c_1\) at low statistics. This paper therefore advocates for the use of the asymptotic method (or alternatively bootstrapping) when using event weights to account for acceptance corrections. The pull distributions for the cFit also show, as expected, good behaviour. As there is no loss of information for the cFit, it can result in better sensitivity, as shown by the relative efficiencies given in Tables 2 and 3, and its use should be strongly considered, where feasible.

4.2 Background subtraction using sWeights

As second specific example for the determination of confidence intervals in the presence of event weights, the determination of the lifetime \(\tau \) of an exponential decay in the presence of background is discussed. The sPlot method [3] is used to statistically subtract the background component. As discriminating variable, the reconstructed mass is used. In this example, the signal is distributed according to a Gaussian in the reconstructed mass, and the background is described by a single Exponential function with slope \(\alpha _{\mathrm{bkg}}\). Figure 6 shows the mass distribution for signal and background components. The parameters used in the generation of the pseudoexperiments are listed in Table 1a. The configuration is purposefully chosen such that there is a significant correlation between the yields and the slope of the background exponential, to illustrate the effect of fixing nuisance parameters in the sPlot formalism, as discussed in Sect. 3.2. The resulting mean correlation matrix for the mass fit is shown in Table 1b. The simpler case, where no significant correlation between \(\alpha _{\mathrm{bkg}}\) and the event yields is present, due to a different choice of mass range, is discussed in Appendix F.

The decay time distribution (the control variable) that is used to determine the lifetime is a single Exponential for the signal. For the background component, several different shapes were tested: (a) A single Exponential with long lifetime, (b) a Gaussian distribution, (c) a triangular distribution, and (d) a flat distribution in the decay time. Figure 7 shows the decay time distribution for the different options. The decay time distributions for signal and background components shown are obtained using the sPlot formalism [3] described in Sect. 3.2.

The parameter \(\tau \) is determined using a weighted unbinned maximum likelihood fit solving the maximum likelihood condition Eq. 3 numerically. Its uncertainty \(\sigma (\tau )\) is determined using the different methods for weighted unbinned maximum likelihood fits discussed in Sect. 2. The following approaches are studied:

-

(a)

A weighted fit determining the uncertainties according to Eq. 4 without any correction. This method is denoted as sFit in the following.

-

(b)

Scaling the weights according to Eq. 19. The approach is denoted as scaled weights.

-

(c)

Determining the covariance matrix using Eq. 20. This method is referred to as squared correction.

-

(d)

Bootstrapping the data (using 1000 bootstraps) with replacement, without rederiving the sWeights (i.e. keeping the original sWeights for each event). Denoted as bootstrapping in the following.

-

(e)

Bootstrapping the data (again using 1000 bootstraps) and rederiving the sWeights for every bootstrapped sample, in the following denoted as full bootstrapping.

-

(f)

The asymptotic method to determine the covariance according to Eq. 46 as discussed in Sect. 3.2, but not accounting for the impact of nuisance parameters in the determination of the sWeights. This approach is referred to as asymptotic method.

-

(g)

The method to determine the covariance according to Eq. 49, which includes the effect of nuisance parameters in the sWeight determination. This method is denoted as full asymptotic.

-

(h)

A conventional fit (cFit) modelling both signal and background components in two dimensions (mass and decay time) for comparison. As the main point of using sWeights is to remove the need to model the background contribution in the fit, this method is given purely for comparison.

The performance of the different methods is evaluated using pseudoexperiments. Every study consists of 10 000 data samples generated and then fit for an initial determination of the sWeights. For every method, the same data samples are used.

The performance of the different methods is compared using the distribution of the pull, defined as \(p_i(\tau )=(\tau _i-\tau ^{\mathrm{gen}}_{\mathrm{sig}})/\sigma _i(\tau )\). Here, \(\tau _i\) is the central value determined by the weighted maximum likelihood fit and \(\sigma _i(\tau )\) the uncertainty determined by the above methods. The lifetime used in the generation is denoted as \(\tau ^{\mathrm{gen}}_{\mathrm{sig}}\). To study the influence of statistics, pseudoexperiments are performed for different numbers of events. The total yields \(N_{\mathrm{tot}}=N_{\mathrm{sig}}+N_{\mathrm{bkg}}\) generated correspond to 400, 1000, 2000, 4000, 10 000 and 20 000 events. The signal fraction used in the generation is \(f_{\mathrm{sig}}=N_{\mathrm{sig}}/(N_{\mathrm{sig}}+N_{\mathrm{bkg}})=0.5\).

The pull distributions from 10 000 pseudoexperiments, each with a total yield of 2000 events, are shown in Figs. 8 and 9. The pull means and widths are shown in Figs. 10 and 11. Numerical values for the different configurations are given in Tables 4 and 5 in Appendix E.

As expected, both the sFit as well as the approach using scaled weights perform quite poorly, as they show large undercoverage, both for low statistics as well as for high statistics. Furthermore, they exhibit significant bias at low statistics (which reduces at large statistics) due to a strong correlation of the uncertainty with the parameter \(\tau \). This strongly disfavours the use of these methods for these sWeighted examples. The squared correction method shows better performance, nevertheless also exhibits significant bias (which reduces for higher statistics) and undercoverage. It should be stressed that significant undercoverage is still present at large statistics. This shows that the squared correction method in general does not provide asymptotically correct confidence intervals. Both bootstrapping as well as the asymptotic methods perform better for the examples studied here. However, both methods show some remaining undercoverage even at high statistics. It is instructive that bootstrapping the data without redetermining the sWeights performs identically to the asymptotic method without accounting for the uncertainty due to nuisance parameters. However, when performing a full bootstrapping including rederiving the sWeights for the bootstrapped samples or when using the full asymptotic method the confidence intervals generally cover correctly and no significant biases are observed. Only at low statistics some slight overcoverage can be observed. This paper therefore advocates the use of the full asymptotic method, or alternatively, if computationally possible, the full bootstrapping approach for the determination of uncertainties in unbinned maximum likelihood fits using sWeights. If nuisance parameters have no large impact on the sWeights, the asymptotic method can also be appropriate, as shown in Appendix F.

The conventional fit describing the background component in the decay time explicitly instead of using sWeights also shows good behaviour, as expected. When the background distribution is known, a conventional fit is generally advantageous as it has improved sensitivity due to the additional available information. For this example, where the background pollution and parameter correlations are large, the parameter sensitivity is significantly improved when using the conventional (unweighted) fit, as shown by the relative efficiencies given in Tables 4 and 5.

5 Conclusions

This paper derives the asymptotically correct method to determine parameter uncertainties in the presence of event weights for acceptance corrections, which was previously discussed in Ref. [4] but does not currently see widespread use in high energy particle physics. The performance of this approach is validated on pseudoexperiments and compared with several commonly used methods. The asymptotically correct approach performs well, while several of the commonly used methods are shown to not generally result in correct coverage, even for large statistics. In addition, the effect of weight uncertainties for acceptance corrections is discussed. The paper furthermore derives asymptotically correct expressions for parameter uncertainties in fits that use event weights to statistically subtract background events using the sPlot formalism [3]. The asymptotically correct expression accounting for the presence of nuisance parameters in the determination of sWeights is also given. On pseudoexperiments the asymptotically correct methods perform well, whereas several commonly used methods show incorrect coverage also for this application. Finally, the (co)variance for the sum of sWeights in bins of the control variable is calculated, which is a prerequisite for binned \(\chi ^2\) fits of sWeighted data. If statistics are sufficiently large this paper advocates the use of the asymptotically correct expressions in weighted unbinned maximum likelihood fits, in particular over the current nominal method used in the Roofit [12] fitting framework, which was proposed in Refs. [1, 2], and is shown to not generally result in asymptotically correct uncertainties. If computationally feasible, the bootstrapping approach [13] can be a useful alternative. A patch for Roofit to allow the determination of the covariance matrix according to Eq. 18 has been provided by the author and is available starting from Root v6.20.

Data Availability

This manuscript has no associated data or the data will not be deposited. [Authors’ comment: The pseudodata used to illustrate the different approaches to the estimation of uncertainties can be easily reproduced following the procedure described in the text.]

Notes

It should further be noted, that the inclusion of event weights involves some loss of information [4].

It should be noted that the left-hand side of Eq. 3 strictly speaking is no longer a standard logarithmic likelihood, however that does not preclude its use in parameter estimation as an estimating function.

Estimating functions fulfilling Eq. 6 are referred to as unbiased estimating equations in the statistical literature (see e.g. Ref. [6]). It should be noted that the existence of an unbiased estimating equation does not imply that the corresponding estimator itself is unbiased. In particular, it is well known that maximum likelihood estimators are biased for finite samples.

To exclude pathological cases we furthermore require \(\sum _{e=1}^N w_e=\sum _{e=1}^N 1/\epsilon _e\) to be of \({{\mathcal {O}}}(N)\).

Note that the expectation values are evaluated at the true parameter values \(\varvec{\theta }_0\) which simplifies their calculation significantly.

Here and in the following the superscript \({}^{-T}\) refers to the transposed inverted matrix.

For the binned case nuisance parameters \(\varvec{\alpha }\) induce additional covariance terms beyond Eq. 47 as well.

In the following, the arguments of the estimating functions \(\varvec{\varphi }\), \(\varvec{\psi }\) and \(\varvec{\xi }\) will be omitted for brevity.

Reference [3] gives this variance as \(\sum _{e\in \,\text {bin}\,i}w_{\mathrm {s}}^2(y_e)\) in Eq. 22.

References

W.T. Eadie, D. Drijard, F.E. James, M. Roos, B. Sadoulet, Statistical Methods in Experimental Physics (North-Holland, Amsterdam, 1971)

F. James, Statistical Methods in Experimental Physics (World Scientific, Hackensack, 2006)

M. Pivk, F.R. Le Diberder, SPlot: a statistical tool to unfold data distributions. Nucl. Instrum. Methods A 555, 356 (2005). https://doi.org/10.1016/j.nima.2005.08.106. arXiv:physics/0402083

F.T. Solmitz, Analysis of experiments in particle physics. Annu. Rev. Nucl. Sci. 14, 375 (1964). https://doi.org/10.1146/annurev.ns.14.120164.002111

F. James, M. Roos, Minuit: a system for function minimization and analysis of the parameter errors and correlations. Comput. Phys. Commun. 10, 343 (1975). https://doi.org/10.1016/0010-4655(75)90039-9

A.C. Davison, Statistical Models. Cambridge Series in Statistical and Probabilistic Mathematics (Cambridge University Press, Cambridge, 2003). https://doi.org/10.1017/CBO9780511815850

V.P. Godambe, An optimum property of regular maximum likelihood estimation. Ann. Math. Stat. 31, 1208 (1960). https://doi.org/10.1214/aoms/1177705693

V. Godambe, M. Thompson, Some aspects of the theory of estimating equations. J. Stat. Plan. Inference 2, 95 (1978). https://doi.org/10.1016/0378-3758(78)90026-5

R.J. Barlow, Statistics: A Guide to the Use of Statistical Methods in the Physical Sciences. Manchester Physics Series (Wiley, Chichester, 1989)

LHCb Collaboration, Measurement of CP violation and the \(B^0_s\) meson decay width difference with \(B^0_s\rightarrow J/\psi K^+K^-\) and \(B^0_s\rightarrow J/\psi \pi ^+\pi ^-\) decays. Phys. Rev. D 87, 112010 (2013). https://doi.org/10.1103/PhysRevD.87.112010. arXiv:1304.2600

L. Kish, Survey Sampling. Wiley Classics Library (Wiley, New York, 1965)

W. Verkerke, D.P. Kirkby, The RooFit toolkit for data modeling. eConf C0303241, MOLT007 (2003). arXiv:physics/0306116

B. Efron, Bootstrap methods: another look at the jackknife. Ann. Stat. 7, 1 (1979)

Y. Xie, sFit: a method for background subtraction in maximum likelihood fit (2009). arXiv e-prints. arXiv:0905.0724

J. Wooldridge, Econometric Analysis of Cross Section and Panel Data, Econometric Analysis of Cross Section and Panel Data (MIT Press, Cambridge, 2010)

W.K. Newey, D. McFadden, Chapter 36 Large Sample Estimation and Hypothesis Testing. Handbook of Econometrics, vol. 4 (Elsevier, Amsterdam, 1994), pp. 2111–2245. https://doi.org/10.1016/S1573-4412(05)80005-4

K.M. Murphy, R.H. Topel, Estimation and inference in two-step econometric models. J. Bus. Econ. Stat. 3, 370 (1985)

A. van der Vaart, Asymptotic Statistics (Cambridge University Press, Cambridge, 2000)

Acknowledgements

C. L. gratefully acknowledges support by the Emmy Noether programme of the Deutsche Forschungsgemeinschaft (DFG), grant identifier LA 3937/1-1. Furthermore, C. L. would like to thank Roger Barlow for helpful comments and questions on an early version of this paper. Finally, C. L. would like to acknowledge useful communication from Michael Schmelling, Hans Dembinski and Matt Kenzie.

Author information

Authors and Affiliations

Corresponding author

Appendices

Appendix A: Expectation value Eq. 26 for examples correcting for acceptance effects

As mentioned in Sect. 2.2, Eq. 22 is not generally asymptotically valid. To demonstrate this with an example, the expectation value in Eq. 26 is explicitly calculated below for the angular fit in Sect. 4.1. Using the probability density function

and the efficiency correction (b)

we derive the expectation value in Eq. 26 according to (showing the asymptotic behaviour for the double partial derivative to \(c_1\) is sufficient):

Using computer algebra, these integrals can be easily evaluated analytically. For the denominator we obtain

The expression for the numerator is slightly more complicated, it results in

We thus find

This shows clearly, that Eq. 22 is not generally asymptotically correct. The result in Eq. 56 has been crosschecked using the pseudoexperiments described in Sect. 4.1 and indeed the additional term fluctuates around \(N\times 0.2742\) as derived above.

For acceptance (a) we find similarly

which is also confirmed using the pseudoexperiments. The fact that the expectation value is negative for acceptance (a) and positive for acceptance (b) indicates that, as observed using the pseudoexperiments, the squared correction method overcovers for acceptance (a) and undercovers for acceptance (b).

It is instructive to note that for the double partial derivative to \(c_0\), \(E( \epsilon ^{-2} {{\mathcal {P}}}^{-1}\partial ^2/\partial c_0^2 {{\mathcal {P}}})\), we find an expectation value of zero, as the integration of the numerator removes the \(c_0\) dependence in that case. This is the reason why the squared correction method results in compatible results with the asymptotic method for \(c_0\), but shows incorrect coverage for \(c_1\).

Appendix B: Covariance determination for unbinned sWeighted fits

The asymptotic covariance for sWeighted unbinned maximum likelihood fits is given by Eq. 45 [6, 18] which is reproduced below for convenience.

Here, the vector of estimating equations \(\varvec{g}\) is given by Eq. 41, which is also repeated below

The elements of the denominatorFootnote 9\(E\bigl (\partial \varvec{g}(\varvec{x},\varvec{y};\varvec{\theta })/\partial \varvec{\theta }^T\bigr )\) given by

are determined in Appendix B.1 and the elements of the (symmetric) numerator

are determined in Appendix B.2. For both numerator and denominator the sample estimates are derived first and their expectation values are given afterwards. The resulting covariance is derived in Appendix B.3.

1.1 B.1 Determination of the denominator

We first determine the expectations \(E(\partial \varvec{g}/\partial \varvec{\theta }^T)\), the denominator in Eq. 58, which would be corresponding to the Hessian matrix if we were doing purely maximum likelihood estimation. We find for the two \(\varvec{\varphi }\) components

for the \(\varvec{\psi }\) components

and finally for the \(\varvec{\xi }\) components

where \(\kappa _i=\int N_{\mathrm {b}}\frac{\mathcal{P}_{\mathrm {b}}(x)}{\mathcal{P}_{\mathrm {s}}(x;\varvec{\lambda })}\frac{\partial \mathcal{P}_{\mathrm {s}}(x;\varvec{\lambda })}{\partial \lambda _i}\mathrm {d}x\) was defined in Eq. 44. In summary, the matrix in the denominator is

As a reminder, the matrix \(\varvec{A}\) is the \(2\times 2\) matrix for the signal and background yields defined by Eq. 62, the matrix \(\varvec{B}\) is a \(3\times 2\) matrix defined by Eq. 65, \(\varvec{E}\) is the \(\dim (\varvec{\lambda })\times 3\) matrix defined by Eqs. 69–71, and \(\varvec{H}\) the \(\dim (\varvec{\lambda })\times \dim (\varvec{\lambda })\) matrix given by Eq. 72. The upper right corner of Eq. 73 is filled with zero matrices, as the estimation of the yields does not depend on the parameters \(V_{ij}^{-1}\), and the estimators for the \(V_{ij}^{-1}\) and the yields do not depend on \({\varvec{\lambda }}\). This simplifies the inversion of the matrix in Eq. 73, which results in

This matrix can be further simplified, as

and

We therefore find \(\varvec{E}\varvec{B}=\varvec{0}\) and the inverse of Eq. 73 is given by

1.2 B.2 Determination of numerator

We now have to determine the elements of the numerator \(E\bigl (\varvec{g}(\varvec{x},\varvec{y};\varvec{\theta })\varvec{g}(\varvec{x},\varvec{y};\varvec{\theta })^T\bigr )\) in Eq. 58. For completeness we determine all sub-matrices, but it will be shown that we only need the components \(E\bigl (\psi _{ij}\psi _{kl}\bigr )\equiv C_{(ij)(kl)}^\prime \), \(E\bigl (\psi _{ij}\xi _l\bigr )\equiv E_{l(ij)}^\prime \) and \(E\bigl (\xi _k\xi _l\bigr )\equiv H^\prime _{kl}\) to determine the covariance for the parameters of interest \(\varvec{\lambda }\). We find

and

The matrix in the numerator is therefore given by

with the matrix elements defined in Eqs. 78–83.

1.3 B.3 Resulting covariance

The full covariance matrix is then given by the following matrix multiplication

We are interested in the bottom right elements for the covariance matrix for the parameters of interest \(\varvec{\lambda }\). The matrix multiplication yields

The additional covariance beyond the first term can be further simplified. To this end we use our definitions

and

and furthermore replace the \(V_{ij}^{-1}\) to only retain dependencies on \({C}_{ij}^\prime \) and the yields

We can then calculate (easiest to verify using computer algebra)

Equation 86 therefore simplifies to

The additional variance for the parameters of interest is therefore \(\le 0\), as the matrix \(\varvec{C}^\prime \) (and also \(\varvec{E}\varvec{C}^\prime \varvec{E}^T\)) is positive definite. Using only the first term of Eq. 91 (the naive sandwich estimate) is therefore conservative for sWeights, but can overestimate the variances. To guarantee asymptotically correct coverage, Eq. 91 should be used.

Appendix C: Covariance determination for binned sWeighted quantities

To determine the variance for the sum of sWeights in a bin i in the control variable,Footnote 10\(\sum _{e\in \,\text {bin}\,i}w_{\mathrm {s}}(y_e)\), we use the same technique of systematic error propagation as detailed above in Appendix B. We have as estimating equations

where \(S_i\) denotes the expected signal yield in bin i. We now want to determine the variance for the expected signal yield in bin i, and, in addition, its covariance with a different, non-overlapping bin j with expected signal yield \(S_j\).

1.1 C.1 Determination of the denominator

The elements of the denominator \(E(\partial \varvec{g}/\partial \varvec{\theta }^T)\) are calculated in full analogy to the unbinned case. We have

for the \(\varvec{\varphi }\) components. For the \(\varvec{\psi }\) components we find

and finally, using the shorthand

we find

and

and finally

The denominator matrix is then given by

with the inverse

for which we again needed to show \(\varvec{E}\varvec{B}=\varvec{0}\), i.e.

1.2 C.2 Determination of the numerator

The elements of the numerator \(E\bigl (\varvec{g}(\varvec{x},\varvec{y};\varvec{\theta })\varvec{g}(\varvec{x},\varvec{y};\varvec{\theta })^T\bigr )\) are given by

For the combinations including the terms \(\varvec{\xi }\) we find

and furthermore

i.e.

and finally

As before, the matrix in the numerator is given by

1.3 C.3 Resulting covariance

The full covariance matrix is given by the product

The covariance matrix for the parameters of interest, in this instance the sWeighted bin contents, is given by

Equation 120 is shown by first realising

and

The first term in Eq. 120 can be estimated by \(\sum _{e\in {\mathrm {bin}}\,k}w_{\mathrm {s}}^2(y_e)\) from the sample. As the matrix \(\varvec{C}^\prime \) is positive definite, using only the first term can overestimate the variances and is therefore conservative. To guarantee asymptotically correct coverage for a \(\chi ^2\) fit the full expression in Eq. 120 should be used, which also accounts for correlations between bins.

Appendix D: Results from pseudoexperiments correcting acceptance effects

Appendix E: Results from pseudoexperiments using sWeights

Appendix F: sWeights with negligible nuisance parameter correlations

In Sect. 4.2 the mass range which is used to determine the sWeights is chosen such that the background slope \(\alpha _{\mathrm{bkg}}\) is significantly correlated with the event yields. This results in an additional uncertainty on the sWeights that needs to be accounted for using Eq. 49. For completeness, in this section the different methods are studied in the absence of any significant impact of nuisance parameters on the sWeights. To this end, the mass range \([5\,267,5\,467]{\mathrm {\,Me}\mathrm {V\!/}c^2} \) is chosen, which is a symmetrical mass window around the peak at \(5\,367{\mathrm {\,Me}\mathrm {V\!/}c^2} \), as shown in Fig. 12. This choice results in negligible correlation of \(\alpha _{\mathrm{bkg}}\) with \(N_{\mathrm{sig}}\) and \(N_{\mathrm{bkg}}\). All other settings are kept as in Sect. 4.2.

The means and widths of the resulting pull distributions are given in Tables 6 and 7. As for the case discussed in Sect. 4.2, the sFit, the scaled weights method and the squared weights method show significant undercoverage. All other methods, namely the (full) bootstrapping, the (full) asymptotic method and the cFit show correct coverage.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

Funded by SCOAP3

About this article

Cite this article

Langenbruch, C. Parameter uncertainties in weighted unbinned maximum likelihood fits. Eur. Phys. J. C 82, 393 (2022). https://doi.org/10.1140/epjc/s10052-022-10254-8

Received:

Accepted:

Published:

DOI: https://doi.org/10.1140/epjc/s10052-022-10254-8