Abstract

The Pythia event generator is used in several contexts to study hadron and lepton interactions, notably \(\mathrm{p}\mathrm{p}\) and \(\mathrm{p}{\bar{\mathrm{p}}}\) collisions. In this article we extend the hadronic modelling to encompass the collision of a wide range of hadrons h with either a proton or a neutron, or with a simplified model of nuclear matter. To this end we model \(h\mathrm{p}\) total and partial cross sections as a function of energy, and introduce new parton distribution functions for a wide range of hadrons, as required for a proper modelling of multiparton interactions. The potential usefulness of the framework is illustrated by a simple study of the evolution of cosmic rays in the atmosphere, and by an even simpler one of shower evolution in a solid detector material. The new code will be made available for future applications.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Throughout the history of high energy particle physics, one of the most studied processes is proton–proton collisions. Originally the Pythia (+ Jetset) event generator [1, 2] was designed to simulate \(\mathrm{e}^+\mathrm{e}^-/\mathrm{p}\mathrm{p}\)/\(\mathrm{p}{\bar{\mathrm{p}}}\) collisions. Later it was extended partly to \(\mathrm{e}\mathrm{p}\) and some photon physics, while the coverage of other hadron and lepton collision types has remained limited. For QCD studies, as well as other Standard-Model and Beyond-the-Standard-Model ones, \(\mathrm{e}^+\mathrm{e}^-/\mathrm{p}\mathrm{p}\)/\(\mathrm{p}{\bar{\mathrm{p}}}/\mathrm{e}\mathrm{p}\) has provided the bulk of data, and so there has been little incentive to consider other beam combinations.

In recent years, however, there has been an increasing interest to extend the repertoire of beams. Prompted by the ongoing heavy-ion experiments at RHIC and the LHC, the most significant addition to Pythia is the Angantyr framework for heavy-ion interactions [3, 4], which implements \(\mathrm{p}\mathrm{A}\) and \(\mathrm{A}\mathrm{A}\) collisions building on Pythia ’s existing framework for nucleon–nucleon interactions.

A second new addition is low-energy interactions, which was developed as part of a framework for hadronic rescattering in Pythia [5, 6]. In this framework, common collisions (ie. mainly those involving nucleons or pions) are modelled in detail, including low-energy versions of standard high-energy processes like diffractive and non-diffractive interactions, as well as low-energy-only non-perturbative processes like resonance formation and baryon number annihilation. Less common collisions (involving eg. excited baryons or charm/ bottom hadrons) use simplified descriptions, the most general being the Additive Quark Model (AQM) [7, 8], which gives a cross section that depends only on the quark content of the involved hadrons. This way, the low-energy framework supports interactions for all possible hadron–hadron combinations.

These non-perturbative models are accurate only for low energies, however, up until CM energies around \(E_\mathrm {CM} = 10\) GeV. This means that, at perturbative energies, still mainly nucleon–nucleon interactions are supported. While other hadron species seldom are used directly as beams in experiments, their collisions still have relevance, in particular for hadronic cascades in a medium. One such example is cosmic rays entering the atmosphere, with collision center-of-mass (CM) energies that stretch to and above LHC energies, and thus give copious particle production. Secondary hadrons can be of rare species, and may interact with the atmosphere at perturbative energies. The objective of this article is to implement general perturbative hadron–nucleon interactions in Pythia, using cosmic rays as a test case for the resulting framework.

Two significant extensions are introduced to this end. One is a modelling of total, elastic, diffractive and nondiffractive cross sections for the various beam combinations, as needed to describe collision rates also at energies above \(E_\mathrm {CM} = 10\) GeV. The other is parton distribution functions (PDFs) for a wide selection of mesons and baryons, as needed to describe the particle production in high-energy collisions. Important is also a recent technical improvement, namely the support for selecting beam energies on an event-by-event basis for the main QCD processes, made possible by initializing relevant quantities on an interpolation grid of CM energies. At the time of writing, this is supported for hadron–hadron beams, but not yet for heavy-ion collisions in Angantyr, which will prompt us to introduce a simplified handling of nuclear effects in hadron–nucleus collisions. Nucleus–nucleus ones, such as iron hitting the atmosphere, is not yet considered.

Key to the understanding of atmospheric cascades is the model for hadronic interactions. Several different ones are used in the community, such as SIBYLL [9,10,11,12,13], QGSJET [14,15,16,17,18], DPMJET [19,20,21], VENUS/NEXUS/EPOS [22,23,24,25,26], and HDPM (described in Ref. [27]). It is in this category that Pythia could offer an alternative model, constructed completely independently of either of the other ones, and therefore with the possibility to offer interesting cross-checks. In some respects it is likely to be more sophisticated than some of the models above, eg. by being able to handle a large range of beam particles almost from the threshold to the highest possible energies, with semi-perturbative interactions tailored to the incoming hadron type. In other respects it is not yet as developed, like a limited handling of nuclear effects and a lack of tuning to relevant data.

Neither of these programs can describe the important electromagnetic cascades, which instead typically are delegated to EGS [28]. At low energies GHEISHA [29] is often used for nuclear effects, with ISOBAR (described in Ref. [27]), UrQMD [30, 31] and FLUKA [32] as alternatives. Generally a typical full simulation requires many components to be combined, under the control of a framework that does the propagation of particles through the atmosphere, taking into account eg. the atmospheric density variation and the bending of charged particles by the earth magnetic field. Two well-known examples of such codes are CORSIKA [27] and AIRES [33, 34]. Interestingly for us, the new CORSIKA 8 [35, 36] framework is written in C++, like Pythia 8 but unlike the other hadronic interaction models, and Pythia 8 is already interfaced to handle particle decays, so a further integration is a possibility.

One should also mention that an alternative to Monte Carlo simulation of cascades is to construct a numerical simulation from the cascade evolution equations, one example being MCeq [28]. Other examples include hybrid approaches, where the high-energy part is tracked explicitly but the low-energy part is handled by evolution equations, eg. in SENECA [28] and CONEX [37, 38]. Also in these cases the hadronic interaction models provide necessary input. Angantyr has in fact already been used to this end, to describe \(\mathrm{p}/\pi /\mathrm{K}\) interactions with nuclei [39].

Another application of hadronic cascades is in detector simulations with programs such as FLUKA [32] and GEANT [40,41,42,43], which have also been used for cascades in the atmosphere, see eg. [44,45,46]. GEANT4 depends on external frameworks for simulating collisions, like CORSIKA 8, and has been explicitly designed with an object-oriented architecture that allows users to insert their own physics implementations, one of the current possibilities being Pythia 6 [1]. One central difference is that the medium is much denser in a detector, so particles propagate shorter distances before interacting. Hence, some particles that are too short-lived to interact in the atmosphere can do so in detector simulations, eg. \(\mathrm{D}\), \(\mathrm{B}\), \(\Lambda _\mathrm{c}^+\) and \(\Lambda _\mathrm{b}^0\). For the rest of this article we will focus on the atmospheric case, but we still implement all hadronic interactions relevant for either medium.

To describe hadron–hadron interactions, we need to set up the relevant cross sections and event characteristics. In particular, the latter includes modelling the parton distribution functions for the incoming hadrons. These aspects are developed in Sect. 2. Some simple resulting event properties for \(h\mathrm{p}\) collisions are shown in Sect. 3. In practice, mediums consist of nuclei such as nitrogen or lead, rather than of free nucleons. Since Angantyr does not yet efficiently support nuclear collisions with variable energy, we also introduce and test a simplified handling of nuclear effects in Sect. 3. The main intended application of this framework is to cascades in a medium, so we implement a simple atmospheric model in Sect. 4, and give some examples of resulting distributions. There is also a quick look at passage through a denser medium. Either setup is much simplified relative to CORSIKA or GEANT4, so has no scientific value except to to test and explore features of our new hadronic interactions. The atmospheric toy-model code will be included in a future release of Pythia as an example of how to interface a cascade simulation with Pythia. Finally we present some conclusions and an outlook in Sect. 5.

2 Cross sections and parton distributions

The first step in modelling the evolution of a cascade in a medium is to have access to the total cross sections for all relevant collisions. Crucially, this relates to how far a particle can travel before it interacts. Once an interaction occurs, the second step is to split the total cross section into partial ones, each with a somewhat different character of the resulting events. Each event class therefore needs to be described separately. At high energies a crucial component in shaping event properties is multiparton interactions (MPIs). Pythia models MPIs in terms of parton distribution functions (PDFs), and these have to be made available for all relevant hadrons. Special attention also has to be given to particles produced in the forward direction, that take most of the incoming energy and therefore will produce the most energetic subsequent interactions. These topics will be discussed in the following.

2.1 New total cross sections

The description of cross sections depends on the collision energy. At low energies various kinds of threshold phenomena and resonance contributions play a key role, and these can differ appreciably depending on the incoming hadron species. At high energies a more smooth behaviour is expected, where the dominating mechanism of pomeron exchange should give common traits in all hadronic cross sections [47,48,49,50].

In a recent article [5] we implemented low-energy cross sections for most relevant hadron–hadron collisions, both total and partial ones, all the way down to the energy threshold. Input came from a variety of sources. The main ones were mostly based on data or well-established models, while others involved larger measures of uncertainty. Extensions were also introduced to the traditional string fragmentation framework, to better deal with constrained kinematics at low energy.

In cases where no solid input existed, the Additive Quark Model (AQM) [7, 8] was applied to rescale other better-known cross sections. In the AQM, the total cross section is assumed to be proportional to the product of the number of valence quarks in the respective hadron, so that eg. a meson–meson cross section is 4/9 that of a baryon–baryon one. The contribution of a heavy quark is scaled down relative to that of a \(\mathrm{u}/\mathrm{d}\) quarks, however, presumably by mass effects giving a narrower spatial wave function. Assuming that quarks contribute inversely proportionally to their constituent masses, we define an effective number of valence quarks in a hadron to be approximately

This expression will also be used as a guide for high-energy cross sections, as we shall see.

The emphasis of the low-energy cross sections lies on the description of collisions below \(E_\mathrm {CM} = 5\) GeV, say, but the models used should be valid up to 10 GeV. Many processes also have a sensible behaviour above that, others gradually less so.

At the other extreme then lies models intended to describe high-energy cross sections. Here \(\mathrm{p}\mathrm{p}/\mathrm{p}{\bar{\mathrm{p}}}\) collisions are central, given the access to data over a wide energy range, and the need to interpret this data. A few such models have been implemented in Pythia [51], giving the possibility of comparisons. Fewer models are available for diffractive topologies than for the total and elastic cross sections.

For the purposes of this study we will concentrate on the SaS/DL option, not necessarily because it is the best one for \(\mathrm{p}\mathrm{p}/\mathrm{p}{\bar{\mathrm{p}}}\) but because we have the tools to extend it to the necessary range of collision processes in a reasonably consistent manner. The starting point is the Donnachie–Landshoff modelling of the total cross section [52]. In it, a common ansatz

is used for the collisions between any pair of hadrons A and B. Here s is the squared CM energy, divided by 1 \(\hbox {GeV}^2\) to make it dimensionless. The terms \(s^{\epsilon }\) and \(s^{-\eta }\) are assumed to arise from pomeron and reggeon exchange, respectively, with tuned universal values \(\epsilon = 0.0808\) and \(\eta = 0.4525\). The \(X^{AB}\) and \(Y^{AB}\), finally, are process-specific. \(X^{{\overline{A}}B} = X^{AB}\) since the pomeron is charge-even, whereas generally \(Y^{{\overline{A}}B} \ne Y^{AB}\), which can be viewed as a consequence of having one charge-even and one charge-odd reggeon. Recent experimental studies [53, 54] have shown that the high-energy picture should be complemented by a charge-odd odderon [55] contribution, but as of yet there is no evidence that such effects have a major impact on total cross sections.

In the context of \(\gamma \mathrm{p}\) and \(\gamma \gamma \) studies, the set of possible beam hadrons was extended by Schuler and Sjöstrand (SaS) to cover vector meson collisions [56, 57]. Now we have further extended it to cover a range of additional processes on a \(\mathrm{p}/\mathrm{n}\) target, Table 1. The extensions have been based on simple considerations, notably the AQM, as outlined in the table. They have to be taken as educated guesses, where the seeming accuracy of numbers is not to be taken literally. For simplicity, collisions with protons and with neutrons are assumed to give the same cross sections, which is consistent with data, so only the former are shown. The reggeon term for \(\phi ^0\mathrm{p}\) is essentially vanishing, consistent with the OZI rule [58,59,60], and we assume that this suppression of couplings between light \(\mathrm{u}/\mathrm{d}\) quarks and \(\mathrm{s}\) quarks extends to \(\mathrm{c}\) and \(\mathrm{b}\). Thus, for baryons, the reggeon \(Y^{AB}\) values are assumed proportional to the number of light quarks only, while the AQM of Eq. (2) is still used for the pomeron term. Another simplification is that \(\mathrm{D}/\mathrm{B}\) and \(\bar{\mathrm{D}}/{\bar{\mathrm{B}}}\) mesons are assigned the same cross section. Baryons with the same flavour content, or only differing by the relative composition of \(\mathrm{u}\) and \(\mathrm{d}\) quarks, are taken to be equivalent, ie. \(\Lambda \mathrm{p}= \Sigma ^+\mathrm{p}= \Sigma ^0\mathrm{p}= \Sigma ^-\mathrm{p}\).

The DL parametrizations work well down to 6 GeV, where testable. Thus there is an overlap region where either the low-energy or the high-energy cross sections could make sense to use. Therefore we have chosen to mix the two in this region, to give a smooth transition. More precisely, the transition is linear in the range between

and

where \(E_{\mathrm {min}}\) is 6 GeV and \(\Delta E\) is 8 GeV by default.

2.2 New partial cross sections

The total cross section can be split into different components

Here ND is short for nondiffractive, el for elastic, SD(XB) and SD(AX) for single diffraction where either beam is excited, DD for double diffraction, CD for central diffraction, exc for excitation, ann for annihilation and res for resonant. Again slightly different approaches are applied at low and at high energies, where the former often are based on measurements or models for exclusive processes, whereas the latter assume smoother and more inclusive distributions. The last three subprocesses in Eq. (5) are only used at low energies. In the transition region between low and high energies, the two descriptions are mixed the same way as the total cross section.

High-energy elastic cross sections are modelled using the optical theorem. Assuming a simple exponential fall-off \(\mathrm{d}\sigma _{\mathrm {el}}/\mathrm{d}t \propto \exp (B_{\mathrm {el}}t)\) and a vanishing real contribution to the forward scattering amplitude (\(\rho = 0\))

(with \(c = \hbar = 1\)). The slope is given by

where \(\alpha ' \approx 0.25\) \(\hbox {GeV}^{-2}\) is the slope of the pomeron trajectory and \(s_0 = 1/\alpha '\). In the final expression the SaS replacement is made to ensure that \(\sigma _{\mathrm {el}}/\sigma _{\mathrm {tot}}\) goes to a constant below unity at large energies, while offering a reasonable approximation to the logarithmic expression at low energies. The hadronic form factors \(b_{A,B}\) are taken to be 1.4 for mesons and 2.3 for baryons, except that mesons made only out of \(\mathrm{c}\) and \(\mathrm{b}\) quarks are assumed to be more tightly bound and thus have lower values. As a final comment, note that a simple exponential in t is only a reasonable approximation at small |t|, but this is where the bulk of the elastic cross section is. For \(\mathrm{p}\mathrm{p}\) and \(\mathrm{p}{\bar{\mathrm{p}}}\) more sophisticated larger-|t| descriptions are available [51].

Also diffractive cross sections are calculated using the SaS ansatz [57, 61]. The differential formulae are integrated numerically for each relevant collision process and the result suitably parametrized, including a special threshold-region ansatz [5]. Of note is that, if the hadronic form factor from pomeron-driven interactions is written as \(\beta _{A{\mathbb {P}}}(t) = \beta _{A{\mathbb {P}}}(0) \, \exp (b_A t)\) then, with suitable normalization, \(X^{AB} = \beta _{A{\mathbb {P}}}(0) \, \beta _{B{\mathbb {P}}}(0)\) in Eq. (2). Thus we can define \(\beta _{\mathrm{p}{\mathbb {P}}}(0) = \sqrt{X^{\mathrm{p}\mathrm{p}}}\) and other \(\beta _{A{\mathbb {P}}}(0) = X^{A\mathrm{p}}/\beta _{\mathrm{p}{\mathbb {P}}}(0)\). These numbers enter in the prefactor of single diffractive cross sections, eg. \(\sigma _{AB \rightarrow AX} \propto \beta _{A{\mathbb {P}}}^2(0) \, \beta _{B{\mathbb {P}}}(0) = X^{AB} \, \beta _{A{\mathbb {P}}}(0)\). This relation comes about since the A side scatters (semi)elastically while the B side description is an inclusive one, cf. the optical theorem. In double diffraction \(AB \rightarrow X_1X_2\) neither side is elastic and the rate is directly proportional to \(X^{AB}\).

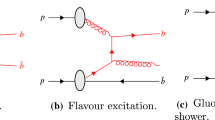

Total, nondiffractive (ND), elastic (el) and diffractive/excitation (D/E) cross sections for some common collision processes, a \(\mathrm{p}\mathrm{p}\), b \(\pi ^+\mathrm{p}\), c \(\pi ^-\mathrm{p}\) and d \(\mathrm{K}^+\mathrm{p}\). Full lines show the cross sections actually used, while dashed show the low-energy (LE) and dash-dotted the high-energy (HE) separate inputs. The LE/HE curves are shown also outside of their regions of intended validity, so should be viewed as illustrative only

In addition to the approximate \(\mathrm{d}M_X^2/ M_X^2\) mass spectrum of diffractive systems, by default there is also a smooth low-mass enhancement, as a simple smeared representation of exclusive resonance states. In the low-energy description of nucleon–nucleon collisions this is replaced by a set of explicit low-mass resonances (eg. \(AB \rightarrow AR\)) [5]. The low-energy description also includes single-resonance (\(AB \rightarrow R\)) and baryon–antibaryon annihilation contributions that are absent in the high-energy one.

The nondiffractive cross section, which is the largest fraction at high energies, is defined as what remains when the contributions above have been subtracted from the total cross section.

Some examples of total and partial cross sections are shown in Fig. 1.

2.3 Hadronic collisions

At low energies the character of an event is driven entirely by nonperturbative processes. In a nondiffractive topology, this can be represented by the exchange of a single gluon, so soft that the momentum transfer can be neglected. The colour exchange leads to two colour octet hadron remnants, however. Each can be split into a colour triplet and a colour antitriplet part, \(\mathrm{q}\)–\(\bar{\mathrm{q}}\) for a meson and \(\mathrm{q}\)–\(\mathrm{q}\mathrm{q}\) for a baryon. This leads to two (Lund [62]) strings being pulled out, each between the colour of one hadron and the anticolour of the other. In diffraction either a quark or a gluon is kicked out from the diffracted hadron, giving either a straight string or one with a kink at the gluon. Other processes have their own descriptions [5].

At high energies, on the other hand, perturbative processes play a key role. A suitable framework is that of multiparton interactions, MPIs [63, 64]. In it, it is assumed that the composite nature of the hadrons leads to several separate parton–parton interactions, each dressed up with associated parton showers. At first glance the interactions occur independently, but at closer look they are connected by energy–momentum-flavour-colour conservation. Especially the last is nontrivial to model, and requires a special colour reconnection step. There the total string length is reduced relative to a first assignment where the MPIs are largely decoupled from each other.

The probability to offer a perturbative description of a nondiffractive event is assumed to be

when \(E_{\mathrm {CM}} > E_{\mathrm {min}}\), and else vanishing. Here \(E_{\mathrm {CM}}\) is the collision energy in the rest frame, and

while \(E_{\mathrm {min,0}}\) and \(E_{\mathrm {wid}}\) are two free (within reason) parameters, both 10 GeV by default. The same transition can be used for the handling of diffraction, with \(E_{\mathrm {CM}}\) replaced by the mass of the diffractive system. Note that it is separate from the transition from low- to high-energy cross section expressions.

In perturbative events the parton–parton collision rate (neglecting quark masses) is given by

differentially in transverse momentum \(p_{\perp }\). Here the PDF \(f^A_i(x, Q^2)\) represents the probability to find a parton i in a hadron A with momentum fraction x if the hadron is probed at a scale \(Q^2 \approx p_{\perp }^2\). Different subprocesses are possible, labelled by k, but the dominant one is t-channel gluon exchange. It is convenient to order MPIs in falling order of \(p_{\perp }\), like in a parton shower.

A problem is that the perturbative QCD cross section in Eq. (10) is divergent in the \(p_{\perp } \rightarrow 0\) limit. This can be addressed by multiplying it with a factor

which is finite in the limit \(p_{\perp } \rightarrow 0\). Such a modification can be viewed as a consequence of colour screening: in the \(p_{\perp } \rightarrow 0\) limit a hypothetical exchanged gluon would not resolve individual partons but only (attempt to) couple to the vanishing net colour charge of the hadron. The damping could be associated only with the PDFs or only with the \(\mathrm{d}{\hat{\sigma }}/\mathrm{d}{\hat{t}}\) factor, according to taste, but we remain agnostic on this count. The new \(p_{\perp 0}\) parameter is assumed to be varying with the collision energy, with current default

which can be related to the increase of PDFs at low x, leading to an increasing screening with energy.

Most of the MPIs occur in the nondiffractive event class. The average number is given by

MPIs can also occur in high-mass diffraction, and is simulated in Pythia [65], but this is a smaller fraction.

The amount of MPIs in a collision directly impacts the event activity, eg. the average charged multiplicity. MPIs have almost exclusively been studied in \(\mathrm{p}\mathrm{p}\) and \(\mathrm{p}{\bar{\mathrm{p}}}\) collisions, however, so we have no data to go on when we now want to extend it to all the different collision types listed in Table 1. As a guiding principle we assume that \(\langle n_{\mathrm {MPI}} \rangle \) should remain roughly constant, ie. plausibly hadronic collisions at a given (large) energy have a comparable event activity, irrespective of the hadron types. But we already assumed that total cross sections are lower for mesons than for baryons, and falling for hadrons with an increasing amount of strange, charm or bottom quarks, so naively then Eq. (13) would suggest a correspondingly rising \(\langle n_{\mathrm {MPI}} \rangle \). There are (at least) two ways to reconcile this.

One is to increase the \(p_{\perp 0}\) scale to make the MPI cross section decrease. It is a not unreasonable point of view that a lower cross section for a hadron is related to a smaller physical size, and that this implies a larger screening. But it is only then interactions at small \(p_{\perp }\) scales that are reduced, while the ones at larger scales remain.

The alternative is to modify the PDFs and to let heavier quarks take a larger fraction of the respective total hadron momentum, such that there are fewer gluons and sea quarks at small x values and therefore a reduced collision rate. (A high-momentum quark will have an enhanced high-\(p_{\perp }\) collision rate, but that is only one parton among many.) This is actually a well-established “folklore”, that all long-lived constituents of a hadron must travel at approximately the same velocity for the hadron to stick together. It is a crucial aspect of the “intrinsic charm” hypothesis [66], where a long-lived \(\mathrm{c}\bar{\mathrm{c}}\) fluctuation in a proton takes a major fraction of the total momentum. In the inverse direction it has also been used to motivate heavy-flavour hadronization [67, 68]. This is the approach we will pursue in the following.

2.4 New parton distribution functions

Most PDF studies have concerned and still concern the proton, not least given the massive influx of HERA and LHC data. Several groups regularly produce steadily improved PDF sets [69,70,71]. The emphasis of these sets are on physics at high \(Q^2\) and (reasonably) high x to NLO or NNLO precision. In our study the emphasis instead is on inclusive events, dominated by MPIs at scales around \(p_{\perp 0}\), ie. a few GeV, and stretching down to low x values. These are regions where NLO/NNLO calculations are notoriously unstable, and LO descriptions are better suited.

Moving away from protons, data is considerably more scarce. There is some for the pion, eg. [72,73,74,75], a very small amount for the Kaon [76], and nothing beyond that. There has also been some theoretical PDF analyses, based on data and/or model input, like [77,78,79,80,81,82,83,84,85,86,87,88,89,90,91,92] for the pion and [79, 82, 93,94,95,96,97] for the Kaon. But again nothing for hadrons beyond that, to the best of our knowledge, which prompts our own work on the topic.

In order to be internally consistent, we have chosen to take the work of Glück, Reya and coworkers as a starting point. The basic idea of their “dynamically generated” distributions is to start the evolution at a very low \(Q_0\) scale, where originally the input was assumed purely valence-quark-like [98]. Over the years both gluon and \(\mathrm{u}/\mathrm{d}\) sea distributions have been introduced to allow reasonable fits to more precise data [99,100,101,102], but still with ansätze for the PDF shapes at \(Q_0\) that involve a more manageable number of free parameters than modern high-precision (N)NLO ones do. Their LO fits also work well with the Pythia MPI framework. To be specific, we will use the GRS99 pion [80] as starting point for meson PDFs, and the GJR07 proton one [102] similarly for baryons. Also the GR97 Kaon one [79] will play some role.

In the LO GRS99 \(\pi ^+\) PDF the up/down valence, sea, and gluon distributions are all parameterized on the form

at the starting scale \(Q_0^2 = 0.26\) \(\hbox {GeV}^2\). The sea is taken symmetric \(\mathrm{u}_{\mathrm {sea}} = \bar{\mathrm{u}}= \mathrm{d}= \bar{\mathrm{d}}_{\mathrm {sea}}\), while \(\mathrm{s}= \mathrm{c}= \mathrm{b}= 0\) at \(Q_0\). The GRS97 \(\mathrm{K}^+\) PDFs are described by assuming the total valence distribution to be the same as for \(\pi ^+\) (as specified in the same article), but the \(\mathrm{u}\) PDF is made slightly softer by multiplying it by a factor \((1-x)^{0.17}\). That is, the \(\mathrm{K}^+\) valence PDFs are given in terms of the \(\pi ^+\) PDFs as

The coefficient \(N_{\mathrm{K}/\pi }\) is a normalization constant determined by the flavour sum relation

Gluon and sea (both \(\mathrm{u}/\mathrm{d}\) and \(\mathrm{s}\)) distributions are taken to be the same as for the pion.

a Pion PDFs, comparing our simplified form to GRV92 and GRS99, and to data. b \(\bar{\mathrm{u}}_v^{\mathrm{K}^-} / \bar{\mathrm{u}}_v^{\pi ^-}\) ratio, comparing our simplified parameterization on the form given in Eq. (14) to the slightly more detailed description of GRS97, and comparing to data. Note that both cases use our simplified \(\bar{\mathrm{u}}_v^{\pi ^-}\) shown in a, and only differ in \(\bar{\mathrm{u}}_v^{\mathrm{K}^-}\)

In our work, we make the ansatz that hadron PDFs can be parameterized on the form given in Eq. (14) at the initial scale \(Q_0\), but with \(A = B = 0\) since there are no data or guiding principles to fix them in the generic case. The a and b parameters are allowed to vary with the particular parton and hadron in question, while N is fixed by Eq. (16) for valence quarks. The deviations introduced by the \(A = B = 0\) assumption are illustrated in Fig. 2. In Fig. 2a E615 data [75] are compared with the \(\pi \) PDFs as given by GRV92 [77], by GRS99 [80], and by our simplified description (labeled SU21) where a has been adjusted to give the same \(\langle x \rangle \) as for GRS99. In Fig. 2b the \(\bar{\mathrm{u}}_v^{\mathrm{K}^-} / \bar{\mathrm{u}}_v^{\pi ^-}\) ratio is compared between data [76], GRS97 [79] and our simplified model. In both cases the model differences are comparable with the uncertainty in data.

To further illustrate the changes introduced by setting \(A = B = 0\), Fig. 3 shows the number and transverse momentum of MPIs for different (a) proton and (b) pion PDFs, with average values as in Table 2. In both cases, our simplified SU21 ansatz leads to a shift that is comparable to the difference between the two standard PDFs. Thus we feel confident that our simplified ansatz is sufficient also for other hadrons, where there are neither data nor detailed theory calculations available. Nevertheless, for accuracy, we use the NNPDF 2.3 QCD+QED LO distribution function for protons and GRS99 LO for pions in our studies, and the SU21 ansatz only for hadrons beyond that.

Given that there is no solid theory for heavy hadron PDFs, the specific choices of a and b necessarily are heuristic. Our guiding principle is that all quarks should have roughly the same velocity, as already mentioned, and thus heavier quarks must have a larger average momentum fraction \(\langle x \rangle \), and a smaller b, while gluons and sea \(\mathrm{u}\)/\(\mathrm{d}\) must be softer. The \(\langle x \rangle \) choices do not exactly agree with the assumed mass ratios in our AQM ansatz, Eq. (1), but are somewhat less uneven than that. This is supported by the Kaon data [76], and also by some modelling [66].

Except for some fine print to come later, our procedure to determine PDFs at the \(Q_0\) starting scale is as follows:

-

1.

Give the valence quark distributions by \(N x^a (1-x)^b\), ie. put \(A = B = 0\).

-

2.

Choose sensible b and \(\langle x \rangle \) values for each valence quark, based on the principles above.

-

3.

Derive a from \(\langle x \rangle = \frac{\int _0^1 dx\,x\,f(x)}{\int _0^1 dx\,f(x)} = (a + 1) / (a + b + 2)\).

-

4.

Derive N to satisfy Eq. (16).

-

5.

For sea and gluon distributions, pick a d and set \(f(x) \propto x^d f^\pi (x)\) (here with A and B values as for the pion).

-

6.

Rescale the gluon and \(\mathrm{u}/\mathrm{d}\) sea distributions by a common factor to satisfy the momentum sum relation

$$\begin{aligned} \int _0^1 dx~\sum \limits _q x f_q(x) = 1. \end{aligned}$$ -

7.

The \(\mathrm{s}\), \(\mathrm{c}\) and \(\mathrm{b}\) contents are zero at the starting scale.

Our choices of b, \(\langle x \rangle \) and d are given in Table 3. Excited particles use the same PDFs as their unexcited counterparts. Some PDFs at the initial scale are shown in Fig. 4 for (a) mesons and (b) baryons, which clearly show how heavier quarks are made harder. The baryons are normalized to two \(\mathrm{u}\) valence quarks, and still the \(\mathrm{c}\)/\(\mathrm{b}\) peaks in \(\Sigma _\mathrm{c}^{++}\)/\(\Sigma _\mathrm{b}^+\) stand out in the comparison.

Once the initial state has been set up, the DGLAP equations [104,105,106] describe the evolution towards higher \(Q^2\) scales. Any number of implementations of these equations exist, both private and public, such as QCDNUM [107], HERAFitter/xFitter [108] and APFEL [109], that in principle should be equivalent. We choose to use QCDNUM since we find it well documented and well suited for our purposes. Nevertheless there are some limitations that we had to circumvent.

One such is that the framework is not set up to handle \(\mathrm{c}\) and \(\mathrm{b}\) quarks below the respective thresholds \(Q_\mathrm{c}^2\) and \(Q_\mathrm{b}^2\). To handle their presence, we map some flavours onto others during evolution. Consider eg. a \(\mathrm{B}^+ = \mathrm{u}\bar{\mathrm{b}}\) meson, where we wish to evolve the bottom valence \(v_{\bar{\mathrm{b}}}\) by \(\bar{\mathrm{b}}\rightarrow \bar{\mathrm{b}}\mathrm{g}\) branchings starting from \(Q_0^2\), but allow \(\mathrm{g}\rightarrow \mathrm{b}\bar{\mathrm{b}}\) only above \(Q_\mathrm{b}^2\). To handle this, we can redefine the initial \(\bar{\mathrm{b}}\) valence as a contribution eg. to the \(\bar{\mathrm{d}}\) content, ie. set \({\tilde{f}}_{\bar{\mathrm{d}}}(x, Q_0^2) = f_{\mathrm {sea}}(x, Q_0^2) + v_{\bar{\mathrm{b}}}(x, Q_0^2)\). Since evolution is linear, this relation also holds for \(Q_0^2 \rightarrow Q^2 > Q_0^2\), while \(f_\mathrm{d}(x, Q^2) = f_{\mathrm {sea}}(x, Q^2)\). For \(Q^2 > Q_\mathrm{b}^2\) there will also be a “sea” bottom content \({\tilde{f}}_\mathrm{b}(x, Q^2)\) from \(g \rightarrow \mathrm{b}\bar{\mathrm{b}}\) splittings. Then the correct \(\bar{\mathrm{d}}\) and \(\bar{\mathrm{b}}\) contents are reconstructed as

For doubly heavy flavoured mesons, like \(\mathrm{B}_\mathrm{c}\), we place one valence content in \(\bar{\mathrm{d}}\) and the other in \(\mathrm{u}\), then use the same procedure. The same trick can be modified to work for flavour-diagonal mesons, like \(\phi \), \(\mathrm {J}/\psi \) and \(\Upsilon \), eg. by adding the valence content to \(\mathrm{d}\) and \(\bar{\mathrm{d}}\). Afterward the \(\mathrm{u}= \bar{\mathrm{u}}= \mathrm{d}= \bar{\mathrm{d}}\) symmetry of the unmodified sea can be used to shift the heavy flavour content back where it belongs.

Different valence PDFs at the initial scale \(Q_0^2 = 0.26\) \(\hbox {GeV}^2\), for (a) \(\pi \), \(\mathrm{K}\), \(\mathrm{D}\) and \(\mathrm{B}\) mesons, showing the flavoured valence and the q (\(=\mathrm{d}/\mathrm{u}\)) valence contents; and for (b) \(\mathrm{u}\mathrm{u}q\) baryons for \(q = \mathrm{d}\) (proton), \(\mathrm{s}\) (\(\Sigma ^+\)), \(\mathrm{c}\) (\(\Sigma _\mathrm{c}^{++}\)) and \(\mathrm{b}\) (\(\Sigma _\mathrm{b}^+\))

There is a further complication for \(\eta \) and \(\eta '\), which fluctuate between \(\mathrm{u}\bar{\mathrm{u}}/\mathrm{d}\bar{\mathrm{d}}/\mathrm{s}\bar{\mathrm{s}}\) valence states. We handle this by treating them as a \(\mathrm{u}\bar{\mathrm{u}}\) state during evolution. After a specific quark content is chosen during event generation, the valence part of the evolved \(\mathrm{u}\bar{\mathrm{u}}\) is shifted to the corresponding distribution. For simplicity both \(\eta \) and \(\eta '\) are assumed to have the same valence \(\langle x \rangle \) values, intermediate between \(\pi \) and \(\phi \), whether in a \(\mathrm{s}\bar{\mathrm{s}}\) state or in a \(\mathrm{u}\bar{\mathrm{u}}/\mathrm{d}\bar{\mathrm{d}}\) one.

Baryons are treated similarly to mesons, with the obvious exception that they have three valence distributions. In this case the starting point is the GJR07 proton. Despite the known asymmetry of the proton, that the \(\mathrm{u}\) valence is harder than the \(\mathrm{d}\) one, we take \(\mathrm{u}\) and \(\mathrm{d}\) distributions, where present, to be equal for all other baryons. For heavy-flavoured baryons, the heavy valence is shifted into the \(\mathrm{d}\) valence in analogy with the meson case. We have not implemented doubly- or triply heavy-flavoured baryons, since these should be produced at a negligible rate, and thus there are no further complications.

After having studied the PDFs resulting from this procedure, we make one additional ad hoc adjustment for the \(\mathrm {J}/\psi \), \(\mathrm{B}_\mathrm{c}\) and \(\Upsilon \) mesons, ie. the ones that have exclusively \(\mathrm{c}\) and \(\mathrm{b}\) valence content. Using only the procedure outlined so far, the average number of MPIs in interactions involving these particles is much higher than for other hadrons. This comes as no surprise in view of Eq. (13); the absence of light quarks makes for a small total and nondiffractive cross section, while the normal evolution allows a non-negligible gluon and sea to evolve right from the low \(Q_0\) scale. But in real life one should expect heavy quarks to have a reduced emission rate of gluons below their mass scale. To compensate for this, increased \(Q_0^2\) scales of 0.6 \(\hbox {GeV}^2\), 0.75 \(\hbox {GeV}^2\) and 1.75 \(\hbox {GeV}^2\) are used for \(\mathrm {J}/\psi \), \(\mathrm{B}_\mathrm{c}\) and \(\Upsilon \), respectively. One could argue that similar shifts should be made for all hadrons containing a \(\mathrm{c}\) or \(\mathrm{b}\) quark, but if there are also light valence quarks then there should be some evolution already from small scales. Any mismatch in the emission can then more easily be absorbed in the overall uncertainty of the setup at the \(Q_0\) starting scale.

2.5 The forward region

The fastest particles in the projectile region play a central role for the shower evolution in the atmosphere, so the modelling of this region is a topic of special interest. Traditionally Pythia is more aimed towards the modelling of the central region, and there are known issues in the forward region [110,111,112]. Briefly put, proton/neutron spectra are softer and pion spectra harder than data. In Sibyll, which normally uses Lund string fragmentation, this has required a separate dedicated handling of leading-baryon formation [13].

We are not here able to report a final resolution of these issues, but a beginning has been made with two new options, both which modify the fragmentation of a diquark in the beam remnant. The first is to disallow popcorn handling [113], ie. the mechanism \(\mathrm{q}_1\mathrm{q}_2 \rightarrow \mathrm{q}_1 \bar{\mathrm{q}}_3 + \mathrm{q}_2\mathrm{q}_3\) whereby a meson can become the leading particle of a jet. (If two valence quarks are kicked out from the proton, which can happen in separate MPIs, the resulting junction topology is unaffected by the popcorn handling.) The second is to set the a and b parameters of the Lund symmetric fragmentation function \(f(z) = (1/z) \, (1-z)^a \, \exp (-b m_{\perp }^2 / z)\) separately from those in normal hadronization. There is some support for such a deviation in a few Lund studies [114,115,116], where it is argued that a drifting-apart of the two quarks of an original dipole indirectly leads to a hardening of the baryon spectrum.

Some first results are shown in Fig. 5. For the nucleon production it can be noted that both steps are about equally important, where \(a = 0\) and \(b = 2\) \(\hbox {GeV}^{-2}\) in the second step. Obviously other a and b values could have given a smaller or larger hardening of the nucleon spectrum. The composition is roughly 65% \(\mathrm{p}\) and 35% \(\mathrm{n}\), over the full phase space. The diffractive peak of protons near \(x_{\mathrm {F}} = 1\) has here been removed; in diffraction only the diffracted side of the event is studied. Additional baryon–antibaryon pair production becomes important in the central region, which is why only \(x_{\mathrm {F}} > 0.1\) is shown. For pions the major effect comes from removing remnant-diquark popcorn production, while the baryon fragmentation parameters here have a lesser effect.

Further modifications are likely to be necessary, and tuning studies are in progress [117].

2.6 Technical details

The new “SU21” PDFs will be included in an upcoming release of Pythia as LHAPDF-compatible [118] files, using the lhagrid1 format, as a central grid. The grids go down to \(x = 10^{-9}\) and up to \(Q = 10^4\) GeV.

It is already possible to have a variable energy for the collisions, if switched on at initialization. Then the MPI machinery is initialized at a set of energies up to the maximal one, and later on it is possible to interpolate in tables to obtain relevant MPI values at the current energy. This rather new feature is similar to what has existed a long time for MPIs in diffractive systems, where the diffractive mass varies from event to event even for fixed total energy. Note that it is only implemented for the inclusive processes in the MPI framework, and not for rare processes.

In a future release, it will also be possible to switch between different beam particles on an event-by-event basis. It is assumed that \(h\mathrm{p}\) and \(h\mathrm{n}\) cross sections are the same, and that \(\mathrm{p}\) and \(\mathrm{n}\) PDFs are related by isospin symmetry. (The latter is not quite true when QED effects are included, but it is close enough for our purposes.) Going one step further, it is also possible to initialize MPIs for the average behaviour of processes that have the same pomeron coefficient \(X^{AB}\) in the total cross section, where the PDFs are related by strong isospin, such that the high-energy behaviour should converge. The main example is \(\pi ^+\mathrm{p}\), \(\pi ^-\mathrm{p}\) and \(\pi ^0\mathrm{p}\). In detail, the MPI initialization is then based on the average behaviour of \(\sigma _{\mathrm {ND}}\) and \(\mathrm{d}\sigma / \mathrm{d}p_{\perp }\) eg. in Eq. (13), but the total cross section for a collision to occur is still by individual particle combination.

There are other beam combinations that have different total cross sections and PDFs that are not easily related to each other, cf. Tables 1 and 3. In a study where the user wishes to switch between such beam combinations during the run, the PDFs and MPI data grids must be initialized for each individual case. This may take tens of seconds per species, and multiplied by twenty this may be annoyingly long for simple test runs. The future release therefore introduces an option where the MPI initialization data of an instance can be stored on file and reused in a later run. This puts some responsibility on the user, since the new run must then be under the same condition as the original one: same (or only a subset of) allowed processes, same PDFs, same \(p_{\perp 0}\), same (or lower) maximum energy, and so on. These features are disabled by default and will only be available if explicitly turned on by the user during initialization.

3 Event properties and nuclear effects

With the tools developed in Sect. 2 it is now possible to generate a single hadron-nucleon collision for a wide selection of hadrons and at an almost arbitrary energy. Some comparisons between these hadron-beam options are first presented. But for a realistic simulation of a full cascade, eg. in the atmosphere, we need to consider nuclear effects. Here the Angantyr model provides some reference results for fixed topologies. Currently it is not flexible enough for cascade simulation, however, so instead we introduce a simplified approach.

3.1 Hadronic interaction properties

One of the assumptions made above was that the changes in total cross sections and in PDFs would match to some approximation, such that event properties would be comparable over the range of colliding hadrons. In this section we will briefly investigate how this works out by studying non-diffractive hadron–proton collisions at 6 TeV. There is no deep reason for this choice of CM energy, except that any potential proton–oxygen (or proton–nitrogen) run at the LHC is likely to be for a nucleon–nucleon energy in that neighbourhood. The incoming “projectile” hadron will be moving in the \(+z\) direction and the “target” proton in the \(-z\) ditto.

The a, b number and c, d transverse momentum spectrum of MPIs, for a selection of a, c meson–proton and b, d baryon–proton collisions at 6 TeV. Labels denote the respective hadron beam. Note that PDFs are taken to be constant below \(Q^2 = 0.5\) GeV\({}^2\) for protons and below \(Q^2 = 0.25\) GeV\({}^2\) for the other particles, which is the reason for the strange behaviours at low \(p_\perp \)

Charged-hadron a, b multiplicity distributions, c, d \(p_{\perp }\) spectra and e, f rapidity distributions for a selection of a, c, e meson–proton and b, d, f baryon–proton collisions. All results at a 6 TeV collision energy, and for nondiffractive events only. Labels denote the respective hadron beam

Distributions for the number and transverse momentum of MPIs are shown in Fig. 6 for a few different hadron types, while Fig. 7 shows charged hadronic multiplicity, \(p_\perp \) spectra, and rapidity spectra. We note that, by and large, the various distributions follow suit quite well, and notably the charged multiplicities are comparable. A few key numbers are shown for a larger class of collisions in Table 4, cementing the general picture. This indicates that the joint handling of total cross sections and PDFs are as consistent as can be expected.

Exceptional cases are \(\Upsilon \), \(\mathrm{B}_{\mathrm{c}}^+\) and \(\mathrm {J}/\psi \) where, even after adjusting the initial \(Q_0^2\) scale for the PDF evolution to reduce the number of MPIs, these particles give a higher activity than others. The technical reason is that, even if the MPI cross section is reduced to approximately match the smaller total cross, a non-negligible fraction of the remaining MPIs now come from the heavy valence quarks at large x values. These thereby more easily can produce higher-\(\mathrm{p}_{\perp }\) collisions (see \(\left<p_{\perp ,\mathrm {MPI}}\right>\) in Table 4), which means more event activity in general.

a, b Jet \(p_\perp \) and c, d rapidity differential cross sections (in units of \(\mu \)b), with \(p_{\perp ,\mathrm {min}} = 50\) GeV. Average values are shown in Table 4. Labels denote the respective hadron beam

Studying the rapidity spectra closer, we find asymmetries around zero, depending on how different the PDFs of the projectile are from those of the target proton. That is, a projectile with harder PDFs should give a spectrum shifted towards positive rapidities, and vice versa. Harder valence quarks tend to be counteracted by softer gluons and sea quarks, however. Possible effects also are partly masked by strings being stretched out to the beam remnants, no matter the rapidity of the perturbative subcollision. To better probe larger x values, we also study jet distributions, using the anti-\(k_{\perp }\) algorithm [119, 120] with \(R = 0.7\) and \(p_{\perp \mathrm {jet}} > 50\) GeV. Some distributions are shown in Fig. 8, with average values again given in Table 4. Indeed asymmetries now are quite visible. We also note that the high-\(p_{\perp }\) jet rate, normalized relative to the total nondiffractive cross section, is enhanced in the cases with harder PDFs, even if this is barely noticeable in the average \(p_{\perp }\) of all hadrons.

3.2 Nuclear collisions with Angantyr

Pythia comes with a built-in model for heavy-ion collisions, Angantyr [4], which describes much \(p\mathrm{A}\) and \(\mathrm{A}\mathrm{A}\) data quite well. It contains a model for the selection of nuclear geometry and impact parameter of collisions. In the Glauber formalism [121] the nucleons are assumed to travel along straight lines, and a binary nucleon–nucleon subcollision can result anytime two such lines pass close to each other. Any nucleon that undergoes at least one collision is called “wounded” [122]. In our case the projectile is a single hadron, so the number of subcollisions equals the number of wounded target nucleons.

In principle all of the components of the total cross section can contribute for each subcollision, but special consideration must be given to diffractive topologies. Notably diffractive excitation on the target side gives rapidity distributions tilted towards that side, a concept used already in the older Fritiof model [123] that partly has served as an inspiration for Angantyr. Alternatively, one can view such topologies as a consequence of the Pythia MPI machinery, wherein not all colour strings from several target nucleons are stretched all the way out to the projectile beam remnant, but some tend to get “short-circuited”. If such colour connections occur flat in rapidity, then this is equivalent to a \(\mathrm{d}M^2/M^2\) diffractive mass spectrum. To first approximation, an Angantyr hadron–nucleus collision can be viewed as one “normal” subcollision plus a variable number of diffractive events on the target side.

In its basic form, Angantyr event generation is quite fast, about as fast as ordinary Pythia hadron–hadron collisions per hadron produced. That is, the overhead from nuclear geometry considerations and energy–momentum sharing between partly overlapping nucleon–nucleon collisions is negligible. The program becomes much slower if the more sophisticated features are switched on, such as ropes [124, 125], shove [126, 127], and hadronic rescattering [6]. These contribute aspects that only become apparent in a more detailed scrutiny of events, beyond what is needed for our purposes. With minor modifications to the Angantyr code itself, ie. on top of the Pythia-generic ones we have already introduced in this article, it is also possible to allow any hadron to collide with a nucleus.

There are two severe limitations, however. Firstly, a time-consuming recalculation of hadronic geometry parameters is required anytime the collision energy or incoming hadron species is to be changed in Angantyr. Potentially this could be fixed in the future, eg. by interpolation in a grid of initializations at different energies, but it appears to be less simple than what we have introduced for the MPI framework. And secondly, Angantyr is only intended to be valid for nucleon–nucleon collision energies above roughly 100 GeV.

A less severe limitation is that the handling of nuclear remnants is very primitive. All non-wounded nucleons are lumped into a new nucleus, without any possibility for it to break up into smaller fragments. For a fixed target the new nucleus is essentially at rest, however, which means that it does not contribute to the continued evolution of the hadronic cascade. Therefore even the simple approach is good enough. In a cascade initiated by a primary nucleus with a fixed energy, it would have been conceivable to handle at least the primary collision using the full Angantyr, if it was not for this last limitation.

Even given the limitations, Angantyr offers a useful reference when next we come up with a simplified framework. Firstly, the number of subcollisions in \(\mathrm{p}\mathrm{N}\) collision roughly follows a geometric series, Fig. 9a. This is largely a consequence of geometry, where peripheral collisions are common and usually only give one subcollision, while central ones are rare but give more activity. To reach the highest multiplicities one also relies on rare chance alignments of target nucleons along the projectile trajectory. The deviations from an approximate geometric series are larger if one instead considers \(\mathrm{p}\mathrm{Pb}\) collisions, Fig. 9b, but not unreasonably so.

An approximate geometric behaviour is also observed for other \(h\mathrm{N}\) and \(h\mathrm{Pb}\) collisions. The average number of subcollisions depends on the hadron species and the collision energy, but mainly via the total cross section, as can be seen from Fig. 9c,d. Here the results are shown for seven different incoming hadrons (\(\mathrm{p}\), \(\pi ^+\), \(\mathrm{K}^+\), \(\phi ^0\), \(\Lambda ^0\), \(\Xi ^0\), \(\Omega ^-\)) at five different energies (10, 100, 1000, 10,000, 100,000 GeV). In the limit of \(\sigma _{\mathrm {total}} \rightarrow 0\) one would never expect more than one subcollision. Given this constraint, a reasonable overall description is obtained as

with \(\sigma _{\mathrm {tot}}\) in mb, as plotted in the figures.

Charged rapidity distributions \(\mathrm{d}n_{\mathrm {ch}}/\mathrm{d}y\) for a nucleon–nucleon collision energy of 6 TeV. a Inclusive Angantyr \(\mathrm{p}\mathrm{N}\) events relative to Pythia events scaled up with the average number of subcollisions in Angantyr. b Angantyr for different number of subcollisions, relative to results for one less subcollision. c Pythia inclusive, the nondiffractive (ND) component only, and the target-side single diffractive one (SD (AX)) in \(\mathrm{p}\mathrm{p}\) collisions. d The Pythia emulation of \(\mathrm{p}\mathrm{N}\). e As d, but for the charged multiplicity distribution

Secondly, Angantyr may also be used as a reference for expected final-state properties, such as the charged rapidity distribution, \(\mathrm{d}n_{\mathrm {ch}}/\mathrm{d}y\). As a starting point, Fig. 10a compares the Angantyr \(\mathrm{p}\mathrm{N}\) distribution with the Pythia \(\mathrm{p}\mathrm{p}\) one at a 6 TeV collision energy. The Pythia curve has beeen scaled up by a factor of 2.05, which corresponds to the average number of subcollisions in \(\mathrm{p}\mathrm{N}\). While the total charged multiplicities are comparable, there are three differences of note. (1) The Angantyr distribution is shifted into the target region, while the Pythia one by construction is symmetric around \(y = 0\). (2) Pythia has a large peak at around \(y \approx 8.7\) from elastic scattering, and some single diffraction, that is much smaller in Angantyr. (3) At \(y \approx -8.7\) instead Angantyr has a narrow peak from the not wounded nucleons that together create a new nucleus.

The description of nuclear effects on hadronization is nontrivial. At Angantyr initialization the relative composition of different subprocesses is changed. This means eg. that the elastic rate in \(\mathrm{p}\mathrm{N}\) with one single subcollision is reduced relative to Pythia \(\mathrm{p}\mathrm{p}\), Fig. 10b,c. Each further subcollision in Angantyr involves the addition of a diffractive-like system on the target side, but the step from 1 to 2 also includes eg. a drop in the elastic fraction, Fig. 10b, and the correlations arising from nucleons being assumed to have fluctuating sizes event-to-event. The diffractive systems have a higher activity and are more symmetric than corresponding Pythia ones, Fig. 10c. As already mentioned the Angantyr mechanism is not quite equivalent with that of ordinary diffraction, which is reflected in the choice of PDF for the “pomeron”, as used for the MPI activity inside the diffractive system. In Angantyr a rescaled proton PDF is used, while by default the H1 2006 Fit B LO [128] pomeron PDF is used in Pythia. If MPIs (and parton showers) are switched off, the diffractive systems become quite similar, and have a marked triangular shape, as expected for a \(\mathrm{d}M^2 / M^2\) diffractive mass distribution. That is, without MPIs the asymmetry of Angantyr events is dramatically larger. It is possible to use the rescaled-proton approach also in the Pythia simulation to obtain better agreement, but this is not quite good enough, so next we will present a slightly different solution.

3.3 Simplified nuclear collisions

In this section we introduce a simple framework to handle the interactions of a hadron h impinging on an \(\mathrm{N}\) target at rest. Angantyr is used as a reference, but does not allow the rapid switching of beam particle and energy needed to trace the evolution of a cascade, which instead is the area where the framework developed in this article excels. The new algorithm works as follows.

-

1.

Calculate the invariant mass for the \(h\mathrm{p}\), which is the same as the one for \(h\mathrm{n}\) to first approximation.

-

2.

Evaluate the \(h\mathrm{p}\) total cross section \(\sigma ^{h\mathrm{p}}_{\mathrm {tot}}\) as already described; again assumed equal to the \(h\mathrm{n}\) one.

-

3.

Evaluate \(\langle n_{\mathrm {sub}}^{h\mathrm{N}} \rangle \) from Eq. (18).

-

4.

Define \(r = 1 - 1 / \langle n_{\mathrm {sub}}^{h\mathrm{N}} \rangle \), such that the geometric distribution \(P_n = r^{n-1} / \langle n_{\mathrm {sub}}^{h\mathrm{N}} \rangle \), \(n\ge 1\) has \(\sum P_n = 1\) and \(\langle n \rangle = \langle n_{\mathrm {sub}}^{h\mathrm{N}} \rangle \).

-

5.

Decide with equal probability that a proton or a neutron in the target is wounded, and correspondingly generate an inclusive \(h\mathrm{p}\) or \(h\mathrm{n}\) event. Do not do any decays, however.

-

6.

Continue the generation with probability r. If not go to point 10. Also go there if there are no more nucleons that can be wounded, or if another user-set upper limit has been reached.

-

7.

Find the newly produced hadron that has the largest longitudinal momentum along the direction of the mother hadron h. Redefine h to be this newfound hadron.

-

8.

Pick a new target proton or neutron among the remaining ones, and generate a corresponding \(h\mathrm{p}\) or \(h\mathrm{n}\) event. Below 10 GeV, all low energy processes are allowed. Above 10 GeV, only allow a mix between nondiffractive and target single diffractive topologies, the former with probability \(P_{\mathrm {ND}} = 0.7\). Again omit decays at this stage.

-

9.

Loop back to point 6.

-

10.

At the end allow all unstable hadrons to decay.

The procedure is to be viewed as a technical trick, not as a physical description. Obviously there is no time for hadronization during the passage of the original h through the \(\mathrm{N}\). Rather the idea is that the new h in each step represents the original one, only with some loss of momentum. This is not too dissimilar from how a \(\mathrm{p}\) projectile in Angantyr has to give up some of its momentum for each subcollision it is involved in, even if that particular loss is calculated before hadronization. The new procedure also leads to the central rapidity of each further subcollision being shifted towards the target region.

The specific value of \(P_\mathrm {ND}\) in step 8 has no deep physical meaning, but is “tuned” such that this mix of the relevant curves in Fig. 10c, combined with the rapidity shift procedure just explained, gives the same average behaviour as the consecutive steps in Fig. 10b. Notably a high \(P_{\mathrm {ND}}\) is needed to reproduce the high activity and low \(\langle y \rangle \) shift in Angantyr “diffractive” systems.

A simplified test is shown in Fig. 10d, where the new hadron h and the target nucleon is always assumed to be a proton, such that only one collision kind is needed for the simulation at this stage. We have checked that an almost equally good description is obtained also for a lead target, and for a range of collision energies. A warning is in place, however, that the picture is a bit more impressive than warranted. Specifically, the charged multiplicity distributions show non-negligible discrepancies, Fig. 10e, where the Pythia machinery gives somewhat more low-multiplicity events, reflected in the forward elastic peak region, which then is compensated elsewhere to give the same average. It should be good enough to get some reasonable understanding of nuclear effects on cascade evolution, however. Later on, for such studies, we will use the full framework.

The Angantyr model is not intended to be applied at very low energies, so there we have no explicit guidance. The same approach with successive subcollisions is still used, with the hardest hadron allowed to go on to the next interaction, meaning that the CM frame is gradually shifted in the target direction. But there are two modifications. Firstly, below 10 GeV in the CM frame, all allowed (low-energy) processes are mixed in their normal fractions. And secondly, no further subcollisions are considered once the kinetic energy of the hardest hadron falls below \(E_{\mathrm {kin,min}}\).

As a final comment, if each initial \(h\mathrm{p}\) or \(h\mathrm{n}\) collision results in an average of \(\langle n_{\mathrm {sub}}^{h\mathrm{N}} \rangle \) subcollisions, then the effective \(\sigma _{\mathrm {tot}}^{h\mathrm{p}}\) cross section must be reduced by the same factor. That is, relative to a gas of free \(\mathrm{p}/\mathrm{n}\), the incoming hadron will travel longer in a same-nucleon-density \(\mathrm{N}\) gas before interacting, but produce more subcollisions each time it interacts. More generally, for a nucleus \(\mathrm{A}\) with atomic number A, the ansatz is that \(\sigma _{\mathrm {tot}}^{h\mathrm{A}} \, \langle n_{\mathrm {sub}}^{h\mathrm{A}} \rangle = A \, \sigma _{\mathrm {tot}}^{h\mathrm{p}} \). Nontrivial nuclear effects, such as the fluctuating nuclear sizes or shadowing, could modify this. For the 28 cases studied in Fig. 9 the ratio \(\sigma _{\mathrm {tot}}^{h\mathrm{A}} \, \langle n_{\mathrm {sub}}^{h\mathrm{A}} \rangle / (A \, \sigma _{\mathrm {tot}}^{h\mathrm{p}})\) lands in the range \(1 - 1.2\) for N and \(1.2 - 1.4\) for Pb, with no obvious pattern. For now such a possible correction factor is left aside.

4 Modelling hadronic cascades

In the previous sections we have developed and tested the tools needed to described hadron–nucleon interactions, and in an approximate manner hadron–nucleus ones, over a wide range of energies. In this section we will present some simple studies making use of the resulting framework. To this end we introduce a toy model of the atmosphere and study the evolution of a cascade. At the end we also study a cascade in a slab of lead, to go from a dilute to a dense medium, and from a light to a heavy nucleus. These examples are intended to point to possibilities rather than to give any definitive answers.

4.1 Medium density

The simplest possible medium density distribution is a uniform density \(\rho \). While not accurate for the atmosphere, it may be applicable eg. for simulating solid particle detectors. In such a medium, the mean free path of a particle is

where \(\sigma \) is the cross section for an interaction between the beam particle and a medium particle. The distance traveled before an interaction then follows an exponential distribution with mean value \(l_0\). In Pythia, lengths are given in units of mm and cross sections in units of mb. For particle densities, we use the common standard g/\(\hbox {cm}^3\). The conversion reads

where m is the target nucleus mass.

If decays of long-lived particles can be neglected, the evolution of the cascade is only a function of the g/cm\(^2\) interaction depth traversed, and we will use this as standard horizontal axis along which to present several results. When decays are to be included, however, it becomes important to model density variations. Let the atmospheric density \(\rho (z)\) depend on height z above the surface. The incoming particle enters the medium at \(z = z_0\) with a zenith angle \(\theta \), and the earth curvature is neglected. Then the naive probability for an interaction at height z is given by

But we are only interested in the first time this particle interacts, and this gives the conventional “radioactive decay” exponential damping, that the particle must not have interacted between \(z_0\) and z:

If f(z) has an invertible primitive function F(z) then the Monte Carlo solution for the selection of z is

where \({\mathcal {R}}\) is a random number uniformly distributed between 0 and 1. If not, then the veto algorithm [1] can be used. Once the first interaction has been picked at a height \(z_1\), then the same algorithm can be used for each of the particles produced in it, with \(z_0\) replaced by \(z_1\) as starting point, and each particle having a separate \(\sigma \) and \(\theta \). This is iterated as long as needed. For unstable particles also a decay vertex is selected, and the decay wins if it happens before the interaction. A particle reaches the ground if the selected \(z < 0\).

In our simple study, we model the atmospheric density at altitude h starting from the ideal gas law, \(\rho (h) = p M / RT\), where T and p are temperature and pressure at h, M is the molar mass of dry air, and R is the ideal gas constant. Assuming a linear drop-off for temperature (as is the case for the troposphere [129]), we have \(T = T_0 - Lh\), where L is the temperature lapse rate. From the hydrostatic equation, \(dp/dh = -g\rho \), the pressure is

Then

where the approximation holds for \(Lh \ll T_0\), and

Using International Standard Atmosphere values for the atmospheric parameters (ISO 2533:1975 [130]) gives \(\rho _0 = 1.225\) g/m\(^3\) and \(H = 10.4\) km. This approximation is good up until around \(L = 18\) km (near the equator), but in our simplified framework, we assume the entire atmosphere follows this shape. In this case, it is possible to sample z according to Eq. (24), specifically,

In our model, we make the additional assumption that the particle will never interact above \(z_0 = 100\) km. This is a good approximation since, applying the exponential approximation in Eq. (26) to infinity, the probability of such an interaction is of order \(10^{-5}\). Dedicated programs use a more detailed description, eg. CORSIKA has an atmosphere with five different layers.

4.2 Some atmospheric studies

In this section we study the cascade initiated by a proton hitting the model atmosphere above. In the future it would be useful to study also eg. an incoming iron nucleus, but here it is still not clear how to handle the nuclear remnants. The simulation includes hadronic cascades and decays, plus muon decays. But there is no simulation of electromagnetic cascades, nor electromagnetic energy loss of charged particles, nor bending in the earth magnetic field, nor a multitude of other effects. Photoproduction of hadronic states could be added, either for primary cosmic-ray photons or for secondary ones, but would require some more work, and is not considered important enough for now. The atmosphere is assumed to consist solely of nitrogen, which is a reasonable first approximation, given that eg. oxygen has almost the same atomic number.

Four atmospheric scenarios will be compared:

-

1.

A constant-density atmosphere, like on earth surface, consisting of free protons and neutrons. It starts 10.4 km up, so has the same total interaction depth as the normal atmosphere at vanishing zenith angle.

-

2.

Also a constant-density atmosphere of same height, but using the already described emulation of collisions with nitrogen.

-

3.

An exponentially attenuated “nitrogen” atmosphere, with vanishing zenith angle. Upper cutoff at 100 km.

-

4.

An exponentially attenuated “nitrogen” atmosphere, with 45\(^{\circ }\) zenith angle. This means a factor \(\sqrt{2}\) larger interaction depth than the other three scenarios. Again a 100 km upper cutoff.

The first atmosphere is included as a reference, such that the second one can gauge the impact of nuclear effects on the cascade evolution, as follows. In a \(\mathrm{p}/\mathrm{n}\) atmosphere, or between the separate \(\mathrm{N}\) nuclei in an \(\mathrm{N}\) one, production is multiplicative: each hadron formed in a first collision can go on and produce new particles in a separate second collision. But inside a nucleus new hadrons do not have time to form until after all the \(h\mathrm{p}/h\mathrm{n}\) subcollisions have occured. Then the hadronic multiplicity is approximately proportional to the number of nucleons wounded by the original incoming hadron, ie. is additive. Therefore one expects a slower start of the cascade evolution in an \(\mathrm{N}\) atmosphere than in an equivalent-nucleon-density \(\mathrm{p}/\mathrm{n}\) one. In reality differences can be reduced by energy–momentum conservation effects, and by a smaller ability for low-energy particles to produce further hadrons. As a toy example how large effects are possible, we have studied the case where each of the products of a primary 6 TeV \(\mathrm{p}\mathrm{p}\) event, represented by a 19,200 TeV beam on a fixed target, can interact with one further \(\mathrm{p}\) in the target, Fig. 11. For this toy study the cascade stops after the second step, and hadrons are only allowed to interact if the kinetic energy of the collision is larger than 0.2 GeV.

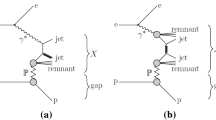

Toy study on the effect of clustering nucleons into nuclei, as reflected in the charged multiplicity distribution after an initial proton has passed through two “layers” of target nucleons. In one scenario these layers are closely spaced, as inside a (nitrogen) nucleus, so that no hadrons from the first collision have time to form before the second one. This is approximated by the sum of two independent pp collisions. In the other scenario the two “layers” are well separated, as they would be in a hydrogen atmosphere. Then all of the hadrons of the first pp collision have time to form and spread out, and each such hadron can undergo a second independent collision on a target proton. In one suboption (“All”) all such hadrons are forced to undergo a second collision. In another, more realistic (“Some”), each interacts with a probability given by the ratio of the individual hadron-proton cross section to the original pp one. See further explanations in the text

What is expected to differ between the second and the later atmospheres is the competition between decays and secondary interactions. An early interaction high up in an attenuated atmosphere gives the produced hadrons more time to decay before they can interact.

For simplicity we assume an incoming proton energy of \(10^8\) GeV. This corresponds to a \(\mathrm{p}\mathrm{p}\) collision CM energy of 13.7 TeV, ie. just at around the maximal LHC energy. Only hadrons with a kinetic energy above \(E_{\mathrm {kin,min}} = 0.2\) GeV are allowed to interact. Below that scale they can still decay, but those that do not are assumed to have dissipated and will not be counted in our studies below.

Evolution of a cascade initiated by a \(10^8\) GeV proton travelling through four different simple models of the atmosphere, as described in the text, as a function of atmospheric depth in g/cm\(^2\). The models stop when reaching the surface. Shown is the number of a interactions, b hadrons produced (full) and decayed (dashed), and c hadrons remaining. Hadrons that fall below the \(E_{\mathrm {kin,min}}\) threshold are removed from the numbers in c, but have not been counted as decays in b

Evolution of muons and neutrinos for the same cascades as in Fig. 12. Shown is the number of a muons produced (full) and decayed (dashed), b muons remaining, c muon neutrinos remaining and d electron neutrinos remaining

Kinetic energy spectra (in a, b) and transverse spread (in c, d) of the particles that reach the surface for the same cascades as in Fig. 12. Shown are the spectra of a, c hadrons and b, d muons and neutrinos

The evolution of the cascade is shown in Fig. 12 and Fig. 13, and the energy spectra and transverse spread of particles reaching the surface in Fig. 14.

Considering Fig. 12a, the hadron–nucleon interaction rate as a function of atmospheric depth, we note that interactions begin earlier in the \(\mathrm{p}/\mathrm{n}\) atmosphere than in the \(\mathrm{N}\) one, but then also peters out earlier, when most hadrons have low energies. Moving on to the exponential atmosphere, more hadrons (notably pions) decay before they can interact, which reduces the interaction rate. Even more so in the case of a 45\(^{\circ }\) zenith angle, where more of the early evolution takes place in a thin atmosphere. The production rate of hadrons, Fig. 12b, correlates rather well with the interaction rate, although the number of hadrons produced per collision would gradually decrease as each hadron gets to have a lower energy. The early hadron production is higher for the free \(\mathrm{p}/\mathrm{n}\) atmosphere, consistent with expectations but not quite as dramatic as Fig. 11 might have led one to expect. Most of the hadrons decay reasonably rapidly, leaving mainly protons and neutrons to carry on. Fig. 12c shows how the number of such undecayed hadrons increases, following the pattern of the previous plots. Specifically, the exponential atmospheres give a reduced number of final hadrons.

The long lifetime of muons, \(c\tau = 659\) m, means that muon decays lag behind production, Fig. 13a. The number of muons reaches a plateau, where production and decay roughly balance, Fig. 13b. Recall that electromagnetic cascades, largely initiated by the \(\pi ^0 \rightarrow \gamma \gamma \) decays, are not studied here, and thus their potential contribution to muon production is not included. The total number of muons follows the same pattern between the four atmospheric scenarios as noted for hadrons. The production of muon and electron neutrinos, Fig. 13c, d, is dominated by pion and muon decays, but also receives contributions from other weak decays. Neutrino oscillations are not considered here.

The bulk of hadrons that apparently reach the ground have very low kinetic energies, even given the cut \(E_{\mathrm {kin}} = E - m > E_{\mathrm {kin,min}}\), Fig. 14a. In reality most of these would be stopped by ionization energy losses or bent away by the earth magnetic field, and those that still reach the ground would do it with reduced energies, so the figure should be viewed as a study of the consequences of hadronic cascades on their own. The uniform and exponential nitrogen atmospheres have comparable rates of higher-energy hadrons. These hadrons are dominated by \(\mathrm{p}\) and \(\mathrm{n}\), which are not affected by decays. That the higher-energy hadron rate is reduced for a non-vanishing zenith angle is to be expected. Also the \(\mathrm{p}/\mathrm{n}\) atmosphere gives a lower rate, presumably as a consequence of the faster split of the original energy into several lower-energy collision chains. The kinetic energy spectra of muons and neutrinos, Fig. 14b, again are peaked at lower energies, though not quite as dramatically. The four atmospheric models also come closer to each other for leptons, though the \(\mathrm{p}/\mathrm{n}\) one remains an outlier.

The cascades disperse particles in quite different directions, implying large footprints on the earth surface. In Fig. 14c, d we show the distributions of hadrons or muons/ neutrinos as a function of the distance \(r_{\perp }\) away from the point where the original proton would have hit if it had not interacted. Recall that the relevant area element is \(\mathrm{d}^2 r_{\perp } = 2\pi \, r_{\perp } \, \mathrm{d}r_{\perp }\), while Fig. 14c, d plots \(\mathrm{d}n / \mathrm{d}r_{\perp }\), so the number of particles per area is strongly peaked around \(r_{\perp } = 0\). The area argument is also the reason why two of the curves can turn upwards at large \(r_{\perp }\). Not unexpectedly a non-vanishing zenith angle increases the spread, both by having interactions further away and by the elongation of a fictitious shower cone hitting the surface at a tilt. Conversely, the uniform nitrogen gives less spread, by virtue of cascades starting closer to the surface. It should be mentioned that kinetic-energy-weighted distributions (not shown) are appreciably more peaked close to \(r_{\perp } = 0\), as could be expected. Occasionally an event can have a large energy spike close to but a bit displaced from the origin. We have not studied this phenomenon closer, but assume it relates to an early branching where a high-energy particle is produced with a non-negligible transverse kick relative to the event axis.

Evolution of a cascade initiated by a 1000 GeV proton, \(\pi ^+\), \(\mathrm{K}^+\) or \(\Lambda ^0\) passing through a 3.5 m thick slab of lead. Shown is the number of a interactions, b hadrons produced (full) and decayed (dashed), c hadrons remaining and d muons and neutrinos remaining. Hadrons that fall below the \(E_{\mathrm {kin,min}}\) threshold are removed from the numbers in c, but have not been counted as decays in b

4.3 A lead study

The new code can also be used to track a cascade through a solid material. We have taken lead as an example of a heavy element that is used in some detectors, with rather different properties than the light elements and low density of air. Here the decays of longer-lived particles, such as \(\pi ^{\pm }\), \(\mathrm{K}^{\pm }\), \(\mathrm{K}_{\mathrm {L}}\) and \(\mu ^{\pm }\), do not play as significant a role as in the atmosphere, given the shorter distances a particle travels through a detector. The maximal primary hadronic energy is also lower than for cosmic rays. Taking LHC as example, the 7 TeV maximum translates into collision CM energies below 115 GeV. When we now study the cascades in lead, only hadronic interactions are considered, as before, ie. leptons and photons are free-streaming. Some illustrative results are shown in Fig. 15, for a \(p_z = 1\) TeV initial hadron of different kinds. The density of lead is \(\rho = 11.35\) g/cm\(^3\), so an interaction depth of 4000 g/cm\(^2\) corresponds to 3.5 m. Hadrons below \(E_{\mathrm {kin,min}} = 0.2\) GeV are assumed to stop in the matter and not interact any further. Thus the number of hadrons vanishes after som depth.