Abstract

This document presents the physics case and ancillary studies for the proposed CODEX-b long-lived particle (LLP) detector, as well as for a smaller proof-of-concept demonstrator detector, CODEX-\(\beta \), to be operated during Run 3 of the LHC. Our development of the CODEX-b physics case synthesizes ‘top-down’ and ‘bottom-up’ theoretical approaches, providing a detailed survey of both minimal and complete models featuring LLPs. Several of these models have not been studied previously, and for some others we amend studies from previous literature, in particular for gluon- and fermion-coupled axion-like particles. We moreover present updated simulations of expected backgrounds in CODEX-b’s actively shielded environment, including the effects of shielding propagation uncertainties, high-energy tails and variation in the shielding design. Initial results are also included from a background measurement and calibration campaign. A design overview is presented for the CODEX-\(\beta \) demonstrator detector, which will enable background calibration and detector design studies. Finally, we lay out brief studies of various design drivers of the CODEX-b experiment and potential extensions of the baseline design, including the physics case for a calorimeter element, precision timing, event tagging within LHCb, and precision low-momentum tracking.


1 Executive summary

The Large Hadron Collider (LHC) provides unprecedented sensitivity to short-distance physics. Primary achievements of the experimental program include the discovery of the Higgs boson [1, 2], the ongoing investigation of its interactions [3], and remarkable precision Standard Model (SM) measurements. Furthermore, a multitude of searches for physics beyond the Standard Model (BSM) have been conducted over a tremendous array of channels. These have resulted in greatly improved BSM limits, with no new particles or force carriers having been found.

The primary LHC experiments (ATLAS, CMS, LHCb, ALICE) have proven to be remarkably versatile and complementary in their BSM reach. As these experiments are scheduled for upgrades and data collection over at least another 15 years, it is natural to consider whether they can be further complemented by one or more detectors specialized for well-motivated but currently hard-to-detect BSM signatures. A compelling category of such signatures is long-lived particles (LLPs), which generally appear in any theory containing a hierarchy of scales or small parameters, and are therefore ubiquitous in BSM scenarios.

The central challenge in detecting LLPs is that not only their masses but also their lifetimes may span many orders of magnitude. This makes it impossible from first principles to construct a single detector which would have the ultimate sensitivity to all possible LLP signatures; multiple complementary experiments are necessary, as summarized in Fig. 1.

In this expression of interest we advocate for CODEX-b (“COmpact Detector for EXotics at LHCb”), an LLP detector that would be installed in the DELPHI/UXA cavern next to LHCb’s interaction point (IP8). The approximate proposed timeline is given in Fig. 2, where “CODEX-\(\beta \)” refers to a smaller proof-of-concept detector with otherwise the same basic geometry and technology as CODEX-b.

The central advantages of CODEX-b are:

- Very competitive sensitivity to a wide range of LLP models, either exceeding or complementary to the sensitivity of other existing or proposed detectors;
- An achievable zero background environment, as well as an accessible experimental location in the DELPHI/UXA cavern with all necessary services already in place;
- Straightforward integration into LHCb’s trigger-less readout and the ability to tag events of interest with the LHCb detector;
- A compact size and consequently modest cost, with the realistic possibility to extend detector capabilities for neutral particles in the final state.

We survey a wide range of BSM scenarios giving rise to LLPs and demonstrate how these advantages translate into competitive and complementary reach with respect to other proposals. We furthermore detail the experimental and simulation studies carried out so far, showing that CODEX-b can be built as planned and operate as a zero background experiment. We also discuss possible technology options that may further enhance the reach of CODEX-b. Finally, we discuss the timetable for the construction and data taking of CODEX-\(\beta \), and show that it may also achieve new reach for certain BSM scenarios.

2 Introduction

2.1 Motivation

New Physics (NP) searches at the LHC and other experiments have primarily been motivated by the predictions of various extensions of the SM, designed to address long-standing open questions. These include e.g. the origin and nature of dark matter, the detailed dynamics of the weak scale, and the mechanism of baryogenesis, among many others. However, in the absence of clear experimental NP hints, the solutions to these puzzles remain largely mysterious. Combined with increasing tensions from current collider data on the most popular BSM extensions, it has become increasingly imperative to consider whether the quest for NP requires new and innovative strategies: a means to diversify LHC search programs with a minimum of theoretical prejudice, and to seek signatures for which the current experiments are trigger and/or background limited.

A central component of this program will be the ability to probe ‘dark’ or ‘hidden’ sectors, composed of degrees of freedom that are ‘sterile’ under the SM gauge interactions. Hidden sectors are ubiquitous in many BSM scenarios, and typically may feature either suppressed renormalizable couplings, heavy mediator exchanges with SM states, or both (Footnote 1). The sheer breadth of possibilities for these hidden sectors mandates search strategies that are as model-independent as possible.

Suppressed dark–SM couplings or heavy dark–SM mediators may in turn give rise to relatively long lifetimes for light degrees of freedom in the hidden spectrum, by inducing suppressions of their total widths via either small couplings, the mediator mass, loops and/or phase space. This scenario is very common in many models featuring e.g. Dark Matter (Sects. 2.4.4, 2.4.5, 2.4.6, 2.4.7 and 2.4.8), Baryogenesis (Sect. 2.4.9), Supersymmetry (Sect. 2.4.1) or Neutral Naturalness (Sect. 2.4.3). The canonical examples in the SM are the long lifetimes of the \(K^0_L\), \(\pi ^\pm \), neutron and muon, whose widths are suppressed by the weak interaction scale required for flavor changing processes, as well as phase space. Vestiges of hidden sectors may then manifest in the form of striking morphologies within LHC collisions, in particular the displaced decay-in-flight of these metastable, light particles in the hidden sector, commonly referred to as ‘long-lived particles’ (LLPs). Surveying a wide range of benchmark scenarios, we demonstrate in this document that by searching for such LLP decays, CODEX-b would permit substantial improvements in reach for many well-motivated NP scenarios, well beyond what could be gained by an increase in luminosity at the existing detectors.

2.2 Experimental requirements

In any given NP scenario, the decay width of an LLP may exhibit strong power-law dependencies on a priori unknown ratios of various physical scales. As a consequence, theoretical priors for the LLP lifetime are broad, such that LLPs may occupy a wide phenomenological parameter space. In the context of the LHC, LLP masses from several MeV up to \(\mathcal {O}(1)\) TeV may be contemplated, and proper lifetimes of \(\lesssim 0.1\) seconds may be permitted before tensions with Big Bang Nucleosynthesis arise [4,5,6].

Broadly speaking, the ability of any given experiment to probe a particular point in this space of LLP mass and lifetimes will depend strongly not only on the center-of-mass energy available to the experiment, but also on its fiducial detector volume, distance from the interaction point (IP), triggering limitations, and the size of irreducible backgrounds in the detector environment [7]. The latter is large for light LLP searches, requiring a shielded, background-free detector. Further, LLP production channels involving the decay of a heavy parent state – e.g. a Higgs decay – require sufficient partonic center-of-mass energy, \(\sqrt{\hat{s}}\), to produce an abundant sample of heavy parents. Such channels are thus probed most effectively transverse to an LHC interaction point. Taken together, these varying requirements prevent any single experimental approach from attaining comprehensive coverage over the full parameter space.
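The interplay between lifetime, boost and detector distance described above can be illustrated with the standard exponential decay law. The following is a minimal sketch, not the acceptance calculation used for the reach projections: it assumes a single straight flight path that enters the detector at 25 m and leaves it at 35 m, and the boost value `BOOST` and the function name `decay_probability` are hypothetical choices made only for illustration.

```python
import math

C_LIGHT_M_PER_S = 299_792_458.0  # speed of light


def decay_probability(ctau_m, boost, d_near_m, d_far_m):
    """Probability to decay between d_near_m and d_far_m along a straight path.

    ctau_m : proper decay length c*tau in metres
    boost  : beta*gamma of the particle (hypothetical input)
    """
    lab_decay_length = boost * ctau_m
    return math.exp(-d_near_m / lab_decay_length) - math.exp(-d_far_m / lab_decay_length)


# Illustrative shell: 25 m to the detector, 10 m of depth (assumed straight path).
D_NEAR_M, D_FAR_M = 25.0, 35.0
BOOST = 2.0  # hypothetical beta*gamma, not a value taken from the text

for ctau_m in (0.1, 1.0, 10.0, 1.0e3, 1.0e7):
    p = decay_probability(ctau_m, BOOST, D_NEAR_M, D_FAR_M)
    print(f"c*tau = {ctau_m:9.1e} m  ->  P(decay in shell) = {p:.3e}")

# Proper decay length corresponding to the ~0.1 s lifetime bound quoted above.
ctau_bbn_m = C_LIGHT_M_PER_S * 0.1
print(f"c*tau for tau = 0.1 s: {ctau_bbn_m:.2e} m")
```

In this simplified picture the probability is exponentially suppressed when the lab-frame decay length is much shorter than the distance to the detector, and falls off only linearly, as the detector depth divided by the lab-frame decay length, at very long lifetimes.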

Experimental coverage of LLP searches is also determined by the morphology of LLP decays. The simplest scenario contemplates a large branching ratio for 2-body LLP decays to two charged SM particles – for instance \(\ell ^+\ell ^-\), \(\pi ^+\pi ^-\) or \(K^+K^-\). In many well-motivated benchmark scenarios (see Sect. 2), however, the LLP may decay to various final states involving missing energy, photons, or high multiplicity, softer final states. In any experimental environment, these more complex decay morphologies can be much more challenging to detect or reconstruct: Reconstructing missing energy final states requires the ability to measure track momenta; detecting photons requires a calorimeter element or preshower component; identifying high multiplicity final states requires the suppression of soft hadronic backgrounds. The CODEX-b baseline concept, as described below in Sect. 1.3, is well-suited to reconstruct several of these morphologies, in addition to the simple 2-body decays. Extensions of the baseline design may permit some calorimetry or pre-shower capabilities, which would enable the reconstruction of photons and other neutral hadrons.

2.3 Baseline detector concept

The proposed CODEX-b location is in the UX85 cavern, roughly 25 meters from interaction point 8 (IP8), with a nominal fiducial volume of \(10\text { m}\times 10\text { m} \times 10\) m (see Fig. 3a) [8]. Specifically, the fiducial volume is defined by \(26\text { m}<x<36\text { m}\), \(-7\text { m}<y<3\text { m}\) and \(5\text { m}<z<15\text { m}\), where the z direction is aligned along the beam line and the origin of the coordinate system is taken to be the interaction point. This location roughly corresponds to the pseudorapidity range \(0.13<\eta <0.54\). Passive shielding is partially provided by the existing UXA wall, while the remainder is achieved by a combination of active vetoes and passive shielding located nearer to the IP. A detailed description of the backgrounds and the required amount of shielding can be found in Sect. 3.
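As a cross-check of the quoted pseudorapidity range, the sketch below evaluates \(\eta = \sinh ^{-1}(z/\rho )\), with \(\rho = \sqrt{x^2+y^2}\), at the edges of the fiducial box defined above. It assumes only the stated coordinate convention (z along the beam line, origin at the IP); the helper name `pseudorapidity` is introduced here for illustration.

```python
import itertools
import math

# Fiducial volume boundaries in metres, as quoted in the text.
X_RANGE = (26.0, 36.0)
Y_RANGE = (-7.0, 3.0)
Z_RANGE = (5.0, 15.0)


def pseudorapidity(x, y, z):
    """eta = -ln tan(theta/2) = asinh(z / rho), with rho the distance from the beam line."""
    rho = math.hypot(x, y)
    return math.asinh(z / rho)


# Extremes in the horizontal plane through the beam line (y = 0).
eta_low = pseudorapidity(X_RANGE[1], 0.0, Z_RANGE[0])   # far in x, small z
eta_high = pseudorapidity(X_RANGE[0], 0.0, Z_RANGE[1])  # near in x, large z
print(f"in-plane eta range: {eta_low:.3f} to {eta_high:.3f}")

# Pseudorapidity at the eight corners of the box.
corner_etas = [
    pseudorapidity(x, y, z)
    for x, y, z in itertools.product(X_RANGE, Y_RANGE, Z_RANGE)
]
print(f"corner eta range:   {min(corner_etas):.3f} to {max(corner_etas):.3f}")
```

Both ranges come out close to the rounded interval \(0.13<\eta <0.54\) quoted above.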

The actual reach of any LLP detector will be tempered by various efficiencies, including efficiencies for tracking and vertex reconstruction. In particular, no magnetic field will be available in the CODEX-b fiducial volume. To design an LLP detection program, rather than only an exclusionary one, it is therefore important to be able to confirm the presence of exotic physics and reject possibly mis-modeled backgrounds. This requires capabilities for particle identification, mass reconstruction and/or event reconstruction.

To address these considerations, several detector concepts are being considered. The baseline CODEX-b conceptual design makes use of Resistive Plate Chamber (RPC) tracking stations with \(\mathcal {O}(100)\) ps timing resolution. A hermetic detector, with respect to the LLP decay vertex, is needed to achieve good signal efficiency and background rejection. In the baseline design, this is achieved by placing six RPC layers on each surface of the detector. To ensure good vertex resolution, five additional triplets of RPC layers are placed equally spaced along the depth of the detector, as shown in Fig. 3b. Other, more ambitious options are also being considered, which combine RPCs with large scale calorimeter technologies such as liquid [10] or plastic scintillators, used in accelerator neutrino experiments such as NO\(\nu \)A [11], the T2K upgrade [12] or DUNE [13]. If deemed feasible, implementing one of these options would permit measurement of decay modes involving neutral final states, improved particle identification and more efficient background rejection techniques.

Because the baseline CODEX-b concept makes use of proven and well-understood technologies for tracking and precision timing resolution, any estimation or simulation of the net reconstruction efficiencies is expected to be reliable. These estimates must ultimately be validated by data-driven determinations from a demonstrator detector, which we call CODEX-\(\beta \) (see Sect. 4). Taken together, the baseline tracking and timing capabilities will permit mass reconstruction and particle identification for some benchmark scenarios.

The transverse location of the detector permits reliable background simulations based on well-measured SM transverse production cross-sections. The SM particle propagation through matter – necessary to simulate the response of the UXA radiation wall and the additional passive and active shielding – is also well understood for the typical particle energies generated in that pseudorapidity range. The proposed location behind the UXA radiation wall will also permit regular maintenance of the experiment, e.g. during technical or other stops. In addition to background simulations, the active veto and the ability to vary the amount of shielding over the detector acceptance permit LLP measurements or exclusions to be determined with respect to data-driven baseline measurements or calibrations of relevant backgrounds (see Sect. 3).

2.4 Search power, complementarities and unique features

Although ATLAS, CMS and LHCb were not explicitly designed with LLP searches in mind, they have been remarkably effective at probing a large region of the LLP parameter space (see [7, 14] for recent reviews). The main variables which provide the necessary discrimination for triggering and off-line background rejection are often the amount of energy deposited and/or the number of tracks connected to the displaced vertex. In most searches, the signal efficiency therefore drops dramatically for low mass LLPs, especially when they are not highly energetic (e.g. from Higgs decays). For instance, the penetration of hadrons into the ATLAS or CMS muon systems, combined with a reduced trigger efficiency, attenuates the LHC reach for light LLPs, \(m_{\text {LLP}} \lesssim 10\) GeV, decaying in the muon systems.

Beam dump experiments such as SHiP [15,16,17], NA62 [18] in beam-dump mode, as well as forward experiments like FASER [19,20,21] evade this problem by employing passive and/or active shielding to fully attenuate the SM backgrounds. The LLPs are moreover boosted in a relatively narrow cone, and very high beam luminosities can be attained. This results in excellent reach for light LLPs that are predominantly produced at relatively low center-of-mass energy, such as a kinetically mixed dark photon. The main trade-off in this approach is, however, the limited partonic center-of-mass energy, which severely limits their sensitivity to heavier LLPs or LLPs primarily produced through heavy portals (e.g. Higgs decays).

Finally, proposals in pursuit of shielded, transverse, background-free detectors such as MATHUSLA [22, 23], CODEX-b [8] and AL3X [24] aim to operate at relatively low pseudorapidity \(\eta \), but with far greater shielding compared to the ATLAS and CMS muon systems. This removes the background rejection and triggering challenges even for low mass LLPs, \(m_{\text {LLP}} \lesssim 10\) GeV, though at the expense of a reduced geometric acceptance and/or reduced luminosity. Because of their location transverse from the beamline, they can access processes for which a high parton center-of-mass energy is needed, such as Higgs and Z production.

In this light, the regimes for which existing and proposed experiments have the most effective coverage can be roughly summarized as follows:

1. ATLAS and CMS: Heavy LLPs (\(m_{\text {LLP}} \gtrsim 10\) GeV) for all lifetimes (\(c\tau \lesssim 10^7\) m).
2. LHCb: Short to medium lifetimes (\(c\tau \lesssim 1\) m) for light LLPs (\(0.1\,\text {GeV}\lesssim m_{\text {LLP}} \lesssim 10\) GeV).
3. Forward/beam dump detectors (FASER, NA62, SHiP): Medium to long lifetime regime (\(0.1 \lesssim c\tau \lesssim 10^7\) m) for light LLPs (\(m_{\text {LLP}} \lesssim \) few GeV), for low \(\sqrt{\hat{s}}\) production channels.
4. Shielded, transversely displaced detectors (MATHUSLA, CODEX-b, AL3X): Relatively light LLPs (Footnote 2) (\(m_{\text {LLP}} \lesssim 10\)–100 GeV) in the long lifetime regime (\(1 \lesssim c\tau \lesssim 10^7\) m), and high \(\sqrt{\hat{s}}\) production channels.

In Fig. 4 we provide a visual schematic summarizing these LLP coverages, showing slices in the space of LLP mass, lifetime, and \(\sqrt{\hat{s}}\), that provide a sketch of the complementarity and unique features of various LLP search strategies and proposals. Relative to the existing LHC detectors, CODEX-b will be able to probe unique regimes of parameter space over a large range of well motivated models and portals, explored further in the Physics Case in Sect. 2. A more extensive discussion and evaluation of the landscape of LLP experimental proposals can be found in Refs. [7] and [25].

While the ambitiously sized ‘transverse’ detector proposals such as MATHUSLA and AL3X would explore even larger ranges of the parameter space, the more manageable and modest size of CODEX-b provides a substantially lower cost alternative with good LLP sensitivity. It also allows for the possibility of additional detector subsystems, such as precision tracking and calorimetry. Furthermore, the proximity of CODEX-b to the LHCb interaction point (IP8) and LHCb’s trigger-less readout (based on standardized and readily available technologies) makes it straightforward to integrate the detector into the LHCb readout for triggering and/or partial event reconstruction. This capability is not available to any other proposed LLP experiment at the LHC interaction points, and may prove crucial to authenticate any signals seen by CODEX-b. For a further discussion of the experimental design drivers and preliminary case studies of how different detector capabilities can affect the sensitivity for different models, we refer to Sect. 5.

2.5 Timeline

The CODEX-\(\beta \) demonstrator detector is proposed for Run 3 and is therefore complementary in time to the other funded proposals such as FASER. In contrast, the full version of CODEX-b, as well as FASER2, SHiP, MATHUSLA, and AL3X are all proposed to operate in Runs 4 or 5 during the HL-LHC. We show the nominal timeline for CODEX-\(\beta \) and CODEX-b in Fig. 5. Results as well as design and construction lessons from CODEX-\(\beta \) are expected to inform the final design choices for the full detector, and may also inform the evolution of the schedule shown in Fig. 5. The modest size of CODEX-b, the accessibility of the DELPHI cavern, and the use of proven technologies in the baseline design are expected to imply not only lower construction and maintenance costs but also a relatively short construction timescale. It should be emphasized that CODEX-b may provide complementary data both in reach and in time, at relatively low cost, to potential discoveries in other more ambitious proposals, should they be built, as well as to existing LHC experiments.

3 Physics case

3.1 Theory survey strategies

Long-lived particles occur generically in theories with a hierarchy of mass scales and/or couplings (see Sect. 1.1), such as the Standard Model and many of its possible extensions. This raises the question of how best to survey the reach of any new or existing experiment in the theory landscape. Given the vast range of possibilities, injecting some amount of “theory prejudice” cannot be avoided. We therefore consider two complementary strategies to survey the theory space: (i) studying minimal models or “portals”, where one extends the Standard Model with a single new particle that is inert under all SM gauge interactions. The set of minimal models satisfying this criterion is both predictive and relatively small – we restrict ourselves to the set of minimal models generating operators of dimension 4 or lower, as well as the well-motivated dimension 5 operators for axion-like particles. It is important to keep in mind, however, that minimal models are merely simplified models, meant to parametrize different classes of phenomenological features that may arise in more complete models. To mitigate this deficiency to some extent, we then also consider: (ii) studying a number of complete models, which are more elaborate but aim to address one or more of the outstanding problems of the Standard Model, such as the gauge hierarchy problem, the mechanism of baryogenesis, or the nature of dark matter. These complete models feature LLPs as a consequence of the mechanisms introduced to solve these problems.

3.2 Novel studies

While many of the models surveyed below have been studied elsewhere and are recapitulated here, several of the studies in this section contain novel results, either correcting previous literature, recasting previous studies for the case of CODEX-b, or introducing new models not studied before. Specifically, we draw the reader’s attention to:

1. The axion-like particles (ALPs) minimal model (Sect. 2.3.3), which includes new contributions to ALP production from parton fragmentation. This can be very important in LHC collisions, significantly enhancing production estimates and consequent reaches, but was overlooked in previous literature.
2. The heavy neutral leptons (HNLs) minimal model (Sect. 2.3.4), which includes modest corrections to the HNL lifetime and \(\tau \) branching ratios, compared to prior treatments.
3. The neutral naturalness complete model (Sect. 2.4.3), which is recast for CODEX-b from prior studies.
4. The coscattering dark matter complete model (Sect. 2.4.5), which contains LLPs produced through an exotic Z decay, and has not been studied previously.

3.3 Minimal models

The underlying philosophy of the minimal model approach is that the symmetries of the SM already strongly restrict the portals through which a new, neutral state can interact with our sector. The minimal models can then be classified according to whether the new particle is a scalar (S), a pseudo-scalar (a), a fermion (N) or a vector (\(A'\)). In each case there are only a few operators of dimension 4 or lower (dimension 5 for the pseudo-scalar) which are allowed by gauge invariance. The most common nomenclature of the minimal models and their corresponding operators are

\[
\begin{aligned}
&\text{Abelian hidden sector:} && F_{\mu \nu }F'^{\mu \nu },\quad h A'_{\mu }A'^{\mu } && \text{(1a)}\\
&\text{Scalar-Higgs portal:} && S H^\dagger H,\quad S^2 H^\dagger H && \text{(1b)}\\
&\text{Heavy neutral leptons:} && {\tilde{H}} {\bar{L}} N && \text{(1c)}\\
&\text{Axion-like particles:} && \partial ^\mu a\, {\bar{\psi }}\gamma _\mu \gamma _5 \psi ,\quad a W_{\mu \nu }{\tilde{W}}^{\mu \nu },\quad a B_{\mu \nu }{\tilde{B}}^{\mu \nu },\quad a G_{\mu \nu }{\tilde{G}}^{\mu \nu } && \text{(1d)}
\end{aligned}
\]

where \(F'^{\mu \nu }\) is the field strength operator corresponding to a U(1) gauge field \(A'\), H is the SM Higgs doublet, and h the physical, SM Higgs boson (Footnote 3). Where applicable, we consider cases in which a different operator is responsible for the production and decay of the LLP, as summarized in Fig. 6. Note that the \(h A'_{\mu }A'^{\mu }\) and \(S^2 H^\dagger H\) operators respect a \({\mathbb {Z}}_2\) symmetry for the new fields and will not induce a decay for the LLP on their own.

For the axion portal, the ALP can couple independently to the SU(2) and U(1) gauge bosons. In the infrared, only the linear combination corresponding to \(a F{\tilde{F}}\) survives, though the coupling to the massive electroweak bosons can contribute to certain production modes. Moreover, the gauge operators mix into the fermionic operators through renormalization group running. Classifying the models according to production and decay portals obscures this key point (Footnote 4), and we have therefore chosen to present the model space for the ALPs in Fig. 6 in terms of UV operators. Once the UV boundary condition at a scale \(\Lambda \) is given, such a choice fully specifies both the ALP production and the decay modes, which often proceed via a combination of the listed operators.

Fig. 6 Minimal model tabular space formed from either: production (green) and decay (blue) portals, where well-defined by symmetries or suppressions; or, UV operators (orange), where either the production and decay portal may involve linear combinations of operators under RG evolution or field redefinitions. Each table cell corresponds to a minimal model: cells for which the CODEX-b reach is known refer to the relevant figure in this document; cells denoted ‘pending’ indicate a model that may be probed by CODEX-b, but no reach projection is presently available

3.3.1 Abelian hidden sector

The Abelian hidden sector model [26,27,28] is a simple extension of the Standard Model, consisting of an additional, massive U(1) gauge boson (\(A'\)) and its corresponding Higgs boson (\(H'\)) (see e.g. [29,30,31,32,33,34,35] for an incomplete list of references containing other models with similar phenomenology). The \(A'\) and the \(H'\) can mix with the SM photon [36, 37] and Higgs boson, respectively, each of which provides a portal into this new sector. In the limit where the \(H'\) is heavier than the SM Higgs, it effectively decouples from the phenomenology, such that only the operators in (1a) remain in the low energy effective theory.

The mixing of the \(A'\) with the photon through the \(F_{\mu \nu }F'^{\mu \nu }\) operator can be rewritten as a (millicharged) coupling of the \(A'\) to all charged SM fermions. In the limit that the \(h A'_{\mu }A'^{\mu }\) coupling is negligible (along with higher dimension operators, such as \(h F'_{\mu \nu }F'^{\mu \nu }\)), the mixing with the photon alone can induce both the production and decay of the \(A'\) in a correlated manner, which has been studied in great detail (see e.g. [25] and references therein). CODEX-b has no sensitivity to this scenario, because the large couplings required for sufficient production cross-sections imply an \(A'\) lifetime that is too short for any \(A'\)s to reach the detector. However, the LHCb VELO and various forward detectors are already expected to greatly improve the reach for this scenario [19, 38,39,40,41,42,43,44,45].

Fig. 7 Reach for \(h\rightarrow A'A'\), as computed in Ref. [8]. Shaded bands refer to the optimistic and conservative estimates of the ATLAS sensitivity [46, 47] for \(3\,\text {ab}^{-1}\), as explained in the text. The horizontal dashed line represents the estimated HL-LHC limit on the invisible branching fraction of the Higgs [48]. The MATHUSLA reach is shown for its 200 m \(\times \) 200 m configuration with 3 \(\hbox {ab}^{-1}\); for AL3X \(100\text { fb}^{-1}\) of integrated luminosity was assumed

The \(h A'_{\mu }A'^{\mu }\) operator, by contrast, is controlled by the mixing of the \(H'\) with the SM Higgs. This can arise from the kinetic term \(\left| D_\mu H'\right| ^2\), with \(\left\langle H'\right\rangle \ne 0\) and \(H-H'\) mixing. This induces the exotic Higgs decay \(h\rightarrow A'A'\). In the limit where the mixing with the photon is small, this becomes the dominant production mode for the \(A'\), which then decays through the kinetic mixing portal to SM states. CODEX-b would have good sensitivity to this mixing due to its transverse location, with high \(\sqrt{\hat{s}}\). Importantly, the coupling to the Higgs and the mixing with the photon are independent parameters, so that the lifetime of the \(A'\) and the \(h\rightarrow A'A'\) branching ratio are themselves independent, and therefore convenient variables to parameterize the model. Figure 7 shows the reach of CODEX-b for two different values of the \(A'\) mass, as done in Ref. [8] (see commentary therein), as well as the reach of AL3X [24] and MATHUSLA [22].

For ATLAS and CMS, the muon spectrometers have the largest fiducial acceptance as well as the most shielding, thanks to the hadronic calorimeters. The projected ATLAS reach for 3 \(\text {ab}^{-1}\) was taken from Ref. [46] for the low mass benchmark. In Ref. [47] searches for one displaced vertex (1DV) and two displaced vertices (2DV) were performed with \(36.1\,\text {fb}^{-1}\) of 13 TeV data. We use these results to extrapolate the reach of ATLAS for the high mass benchmark to the full HL-LHC dataset, where the width of each band corresponds to the range between a ‘conservative’ and an ‘optimistic’ extrapolation for each of the 1DV and 2DV searches. Concretely, the 1DV search in Ref. [47] is currently background limited, with comparable systematic and statistical uncertainties. For our optimistic 1DV extrapolation we assume that the background scales linearly with the luminosity and that the systematic uncertainties can be made negligible with further analysis improvements. This corresponds to a rescaling of the current expected limit by a factor of \(\sqrt{36.1\, \text {fb}^{-1}/3000\,\text {fb}^{-1}}\). For our conservative 1DV extrapolation we assume the systematic uncertainties remain the same, with negligible statistical uncertainties. This corresponds to an improvement of the current expected limit by roughly a factor of 2. The 2DV search in Ref. [47] currently has an expected background of 0.027 events, which implies \(\sim 3\) expected background events, if the background is assumed to scale linearly with the luminosity. For our optimistic 2DV extrapolation we assume the search remains background free, which corresponds to a rescaling of the current expected limits by a factor of \({36.1\, \text {fb}^{-1}/3000\,\text {fb}^{-1}}\). For the conservative 2DV extrapolation we assume 10 expected and observed background events, leading to a slightly weaker limit than with the background free assumption.
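The luminosity rescalings quoted above follow from simple counting arguments: in a background-limited search the limit on a branching ratio improves as \(1/\sqrt{\mathcal {L}}\), whereas in a background-free search it improves as \(1/\mathcal {L}\). The sketch below evaluates these factors for the luminosities given in the text; the function names are hypothetical, and the naive linear background scaling shown here does not attempt to reproduce any further inputs that may enter the quoted \(\sim 3\) events.

```python
import math

LUMI_CURRENT_FB = 36.1    # dataset of the existing search, in fb^-1
LUMI_HL_LHC_FB = 3000.0   # full HL-LHC dataset, in fb^-1


def limit_scale_background_limited(lumi_old, lumi_new):
    """Limit ~ sqrt(B)/L with B proportional to L, so it scales as sqrt(L_old/L_new)."""
    return math.sqrt(lumi_old / lumi_new)


def limit_scale_background_free(lumi_old, lumi_new):
    """Limit ~ const/L when no background is expected, so it scales as L_old/L_new."""
    return lumi_old / lumi_new


def scaled_background(n_bkg_old, lumi_old, lumi_new):
    """Expected background if it grows linearly with integrated luminosity."""
    return n_bkg_old * lumi_new / lumi_old


opt_1dv = limit_scale_background_limited(LUMI_CURRENT_FB, LUMI_HL_LHC_FB)
opt_2dv = limit_scale_background_free(LUMI_CURRENT_FB, LUMI_HL_LHC_FB)
bkg_2dv = scaled_background(0.027, LUMI_CURRENT_FB, LUMI_HL_LHC_FB)

print(f"optimistic 1DV limit rescaling: {opt_1dv:.3f}")
print(f"optimistic 2DV limit rescaling: {opt_2dv:.4f}")
print(f"2DV background, linear scaling: {bkg_2dv:.1f} events")
```

The optimistic 1DV and 2DV factors differ by roughly an order of magnitude, which is why the assumption of a background-free search matters so much for the extrapolated reach.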

Upon rescaling \(c\tau \) to account for the difference in boost distributions, the maximum CODEX-b reach is largely insensitive to the mass of the \(A'\), modulo minor differences in reconstruction efficiency for highly boosted particles (see Sect. 5.1). This is not the case for ATLAS and CMS, where higher masses generate more activity in the muon spectrometer, which helps greatly with reducing the SM backgrounds.

3.3.2 Scalar-Higgs portal

The most minimal extension of the SM consists of adding a single, real scalar degree of freedom (S). Gauge invariance restricts the Lagrangian to

where the ellipsis denotes higher dimensional operators, assumed to be suppressed. This minimal model is often referred to as simply the “Higgs portal” in the literature, though the precise meaning of the latter can vary depending on the context. LHCb has already been shown to have sensitivity to this model [49, 50], and CODEX-b would greatly extend its sensitivity into the small coupling/long lifetime regime.

The parameter \(A_S\) can be exchanged for the mixing angle, \(\sin \theta \), of the S with the physical Higgs boson eigenstate. In the mass eigenbasis, the new light scalar therefore inherits all the couplings of the SM Higgs: Mass hierarchical couplings with all the SM fermions, as well as couplings to photons and gluons at one loop. All such couplings are suppressed by the small parameter \(\sin \theta \). The couplings induced by Higgs mixing are responsible not only for the decay of S [51,52,53,54,55], but also contribute to its production cross-section. Concretely, for \(m_K< m_S < m_B\), the dominant production mode is via the \(b \rightarrow s\) penguin in Fig. 8a [56,57,58], because S couples most strongly to the virtual top quark in the loop. If the quartic coupling \(\lambda \) is non-zero, the rate is supplemented by a penguin with an off-shell Higgs boson, shown in Fig. 8b [59], as well as direct Higgs decays, shown in Fig. 8c.

In Fig. 9 we show the reach of CODEX-b taking two choices of \(\lambda \), following [25]: (i) \(\lambda =0\), corresponding to the most conservative scenario, in which the production rate is smallest; (ii) \(\lambda =1.6\times 10^{-3}\), chosen such that \(\text {Br}[h\rightarrow SS]=0.01\) (Footnote 5). The latter roughly corresponds to the future reach for the branching ratio of the Higgs to invisible states. In this sense it is the most optimistic scenario that would not be probed already by ATLAS and CMS. The reach for other choices of \(\lambda \) therefore interpolates between Fig. 9a and Fig. 9b. Also shown are the limits from LHCb [49, 50] and CHARM [60], and projections for MATHUSLA [61], FASER2 [62], SHiP [63], AL3X [24] and LHCb, where for the latter we extrapolated the limits from [49, 50], assuming (optimistically) that the large lifetime signal region remains background free with the HL-LHC dataset.

The scalar-Higgs portal is, by virtue of its minimality, very constraining as a model. When studying LLPs produced in B decays, it is therefore worthwhile to relax its assumptions, in particular relaxing the full correlation between the lifetime and the production rate – the \(b \rightarrow s S\) branching ratio – as is the case in a number of non-Minimally Flavor Violating (MFV) models (see e.g. [64,65,66,67]). Fig. 10 shows the CODEX-b reach in the \(b \rightarrow s S\) branching ratio for a number of benchmark LLP mass points, as done in Ref. [8]. The LHCb reach and exclusions are taken and extrapolated from Refs. [49, 50], assuming 30% (10%) branching ratio of \(S\rightarrow \mu \mu \) for the 0.5 GeV (1 GeV) benchmark (see Ref. [8]). Also shown are the current and projected limits for \(B\rightarrow K^{(*)}\nu \nu \) [68, 69]. A crucial difference compared to LHCb is that the CODEX-b reach depends only on the total branching ratio to charged tracks, rather than on the branching ratio to muons.

Interestingly, the CODEX-\(\beta \) detector proposed for Run 3 (see Sect. 4) may already have novel sensitivity to the \(b \rightarrow s S\) branching ratio, as shown in Fig. 29. This reach is estimated under the requirement that the number of tracks in the final state is at least four, in order to control relevant backgrounds (see Sect. 3). A more detailed discussion of this reach is reserved for Sect. 4.

3.3.3 Axion-like particles

Axion-like particles (ALPs) are pseudoscalar particles coupled to the SM through dimension-5 operators. They arise in a variety of BSM models and, when associated with the breaking of approximate Peccei–Quinn-like symmetries, they tend to be light. Furthermore, their (highly) suppressed dimension-5 couplings naturally render them excellent candidates for LLP searches. The Lagrangian for an ALP, a, can be parameterized as [70]

where \(\tilde{G}_{\mu \nu }=1/2\,\epsilon _{\mu \nu \rho \sigma }G^{\rho \sigma }\). The couplings to fermions do not have to be aligned in flavor space with the SM Yukawas, leading to interesting flavor violating effects. The gauge operators mix into the fermionic ones at 1-loop, and therefore in choosing a benchmark model one needs to specify the values of these couplings as a UV boundary condition at a scale \(\Lambda \). In the following we will focus on the same benchmark models chosen in the Physics Beyond Colliders (PBC) community study [25] based on the ALP coupling to photons (“BC9”, defined as \(c_W+c_B\ne 0\)), universally to quarks and leptons (“BC10”, \(c_q^{ij}=c\, \delta ^{ij}\), \(c_\ell ^{ij}=c\, \delta ^{ij}\), \(c\ne 0\)) and to gluons (“BC11”, \(c_G\ne 0\)). Another interesting benchmark to consider is the so-called photophobic ALP [71], in which the ALP only couples to the \(SU(2)\times U(1)\) gauge bosons such that it is decoupled from the photons in the UV and has highly suppressed photon couplings in the IR.

Reach of CODEX-b for LLPs produced in B-meson decays, in a non-minimal model. Also shown is the current (shaded) and projected (dashed) reach for: LHCb via \(B \rightarrow K^{(*)}(S \rightarrow \mu \mu )\), for \(m_S = 0.5\) GeV (green) and \(m_S = 1\) GeV (blue), assuming a muon branching ratio of 30% and 10%, respectively (see Ref. [8]); and for \(B \rightarrow K^{(*)}+\text {inv.}\) (gray)

CODEX-b is expected to have a potentially interesting reach for all these cases. This is true with the nominal design provided the ALP has a sizable branching fraction into visible final states, while for ALPs decaying to photons one would require a calorimeter element, as discussed below in Sect. 5.3. In this section, we will present the updated reach plots for BC10 and BC11 and leave the ALP with photon couplings (BC9) and the photophobic case for future study.

ALPs coupled to quarks and gluons can be copiously produced at the LHC even though their couplings are suppressed enough to induce macroscopic decay lengths. They therefore provide an excellent target for LLP experiments such as CODEX-b. Based on the fragmentation of partons to hadrons in LHC collisions, we can divide the ALP production into four different mechanisms:

1. radiation during partonic shower evolution (using the direct ALP couplings to quarks and/or gluons),

2. production during hadronization of quarks and gluons via mixing with \((J^{PC} =) 0^{-+}\) \({\bar{q}} q\) operators (dominated at low ALP masses via mixing with \(\pi ^0,\eta ,\eta '\)),

3. production in hadron decays via mixing with neutral pseudoscalar mesons, and

4. production in flavor-changing neutral current bottom and strange hadron decays, via loop-induced flavor-violating penguins.

The last mechanism has already been considered extensively in the literature. The ALP production probability scales parametrically as \((m_t/\Lambda )^2\) and is proportional to the number of strange or b-hadrons produced. In general, the population of ALPs produced by this mechanism is not very boosted at low pseudorapidities. For the PBC study, it was the only production mechanism considered for BC10, and it was included in BC11.

The second and third mechanisms are related, as they both incorporate how the ALP couples to low energy QCD degrees of freedom. Conventionally the problem is rephrased in terms of ALP mixing with neutral pseudoscalar mesons. This production is parametrically suppressed by \((f_\pi /\Lambda )^2\) and it quickly dies off for ALP masses much above 1 GeV. The population of ALPs produced by these mechanisms is not very boosted at low pseudorapidities, while the forward experiments will have access to very energetic ALPs. Compared to the PBC study, we treat separately the two cases of hadronization and hadron decays as they give rise to populations of ALPs with different energy distributions, and include them both in BC10 and BC11.

Finally, the first mechanism listed above has been so far overlooked in the literature. However, emission in the parton shower can be the most important production mechanism at transverse LHC experiments such as CODEX-b. Emission of (pseudo)scalars is expected to exhibit neither collinear nor soft enhancements, such that ALPs emitted in the shower may then carry an \(\mathcal {O}(1)\) fraction of the parent energy and can be emitted at large angles.

For the case of quark-coupled ALPs (BC10), emission in the parton shower is suppressed by the quark mass – a consequence of the soft pion theorem – i.e. by \(m_q^2/\Lambda ^2\) (or by loop factors to the induced gluon coupling, as below). The shower contribution may nevertheless still dominate at high ALP masses, where the other production mechanisms are forbidden by phase space or kinematically suppressed. For gluon-coupled ALPs (BC11), however, no such suppression arises in the shower. In a parton shower approximation, the ALP emission is attributed to a single parton with a given probability: While interference terms between ALP emissions from adjacent legs – e.g. in \(g\rightarrow g g a\) – cannot be neglected, such an approximation still captures the bulk of the production, even when the ALP is not emitted in the soft limit.

The parton shower approximation greatly simplifies the description of ALP emission, allowing the implementation in existing Monte Carlo tools. In this approximation, the probability for a parton to fragment into an ALP scales parametrically as \(Q^2/\Lambda ^2\), with Q of the order of the virtuality of the parent parton. For example, the \(g \rightarrow g a\) splitting function

where \(t = Q^2\). While the population of partons with large energies is much smaller than the final number of hadrons, the production rate is enhanced by a large \(\mathcal {O}(Q^2/f_\pi ^2)\) factor, compared to the second and third mechanisms. In LHC collisions this is sufficient to produce a large population of energetic ALPs at low pseudorapidities, with boosts exceeding \(10^3\) for ALP masses in the 0.1–1 GeV range. The CODEX-b reach can therefore be extended to higher ALP masses and larger couplings compared to previous estimates if very collimated LLP decays can be detected.
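The practical consequence of such large boosts can be illustrated with a small kinematic sketch. It relies on the common rule of thumb that a two-body decay to light daughters has an opening angle of order \(2/\gamma \); this rule of thumb, the function names and the 1 m flight path are illustrative assumptions, not quantities taken from the CODEX-b simulation.

```python
def lab_decay_length_m(ctau_m: float, beta_gamma: float) -> float:
    """Mean lab-frame decay length, beta*gamma*c*tau, in metres."""
    return beta_gamma * ctau_m


def opening_angle_rad(gamma: float) -> float:
    """Rule-of-thumb opening angle ~ 2/gamma for a two-body decay
    into daughters much lighter than the parent (assumption)."""
    if gamma <= 1.0:
        raise ValueError("rule of thumb only meaningful for gamma > 1")
    return 2.0 / gamma


def track_separation_mm(gamma: float, path_m: float) -> float:
    """Transverse separation of the two daughters after each has
    travelled `path_m` metres from the decay vertex (small-angle limit)."""
    return opening_angle_rad(gamma) * path_m * 1.0e3


if __name__ == "__main__":
    # Hypothetical 1 m lever arm between decay vertex and tracking layer.
    for gamma in (10.0, 100.0, 1000.0):
        angle = opening_angle_rad(gamma)
        sep = track_separation_mm(gamma, path_m=1.0)
        print(f"gamma={gamma:7.0f}  angle={angle:.4f} rad  separation={sep:.1f} mm")
```

In this approximation a boost of \(10^3\) gives a separation of order millimetres per metre of flight, which is why the detection of very collimated decays becomes the limiting factor.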

We estimate these production mechanisms using Pythia 8, with the code modified to account for the production of ALPs during hadronization. We include ALP production in decays by extending its decay table in such a way that for each decay mode containing a \(\pi ^0,\eta ,\eta '\) meson in the final state, we add another entry with the meson substituted by the ALP. The branching ratio is rescaled by the ALP mixing factor and phase space differences.

The ALP production from the shower is computed by navigating through the generated QCD shower history and for each applicable parton an ALP is generated by re-decaying that parton with a weight: The ratio of the ALP branching (integrated) probability over the total (SM+ALP) (integrated) probabilities. This is correct for time-like showers in the limit that the ALP branching probability is small, because in this limit the branching scale is still controlled by the SM Sudakov factor. This procedure is not applicable to space-like showers, without also incorporating information from parton distribution functions. Such space-like showers, however, provide only a sub-leading contribution to transverse production, i.e. at the low pseudorapidities for the CODEX-b acceptance, and we therefore neglect them. For forward experiments at the LHC, such as FASER2, we do not include any shower contribution in the reach estimates, since a more complete treatment is required in order to fully estimate ALP production at high pseudorapidities, and the effect is expected to be at most \(\mathcal {O}(1)\). For the case of a fermion-coupled ALP we include both the emission from heavy quark lines, proportional to \((m_q c_q/\Lambda )^2\), and from loop-induced coupling to gluons, taking \(c_G= N_f c_q/32\pi ^2\) in Eq. (4) above [70], where \(N_f\) is the number of flavors. Further details will be given in upcoming work.
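The reweighting step described above can be sketched as follows. This is only a schematic of the weight formula, under the assumption that the integrated SM and ALP branching probabilities of each parton are already available; the function names and the input format are hypothetical and this is not the modified Pythia 8 code itself.

```python
from typing import Iterable, Tuple


def alp_emission_weight(p_alp: float, p_sm: float) -> float:
    """Weight for re-decaying a parton into an ALP: the integrated ALP
    branching probability over the total (SM + ALP) probability."""
    if p_alp < 0.0 or p_sm < 0.0:
        raise ValueError("probabilities must be non-negative")
    total = p_alp + p_sm
    return 0.0 if total == 0.0 else p_alp / total


def expected_alps_per_event(partons: Iterable[Tuple[float, float]]) -> float:
    """Sum of ALP weights over the time-like branchings of one shower
    history; each entry is a (p_alp, p_sm) pair for one parton."""
    return sum(alp_emission_weight(p_alp, p_sm) for p_alp, p_sm in partons)


if __name__ == "__main__":
    # Hypothetical shower history with three branching partons.
    history = [(1e-8, 0.9), (5e-9, 0.7), (2e-8, 1.0)]
    print(expected_alps_per_event(history))
```

When the ALP probability is much smaller than the SM one, the weight reduces to the ratio of the two, consistent with the statement that the branching scale remains controlled by the SM Sudakov factor.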

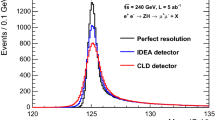

The reach predictions are shown in Fig. 11 for a fermion-coupled ALP (BC10) and in Fig. 12 for an ALP coupled to gluons (BC11), for the case of the nominal – i.e. tracker-only – CODEX-b design. In this case, an ALP decaying only to neutral particles such as photons is invisible, and highly boosted ALPs may decay to merged tracks, such that the signature resembles more closely a single appearing track inside the detector volume. For such a signature, the CODEX-b baseline design is not background-free; we use the background estimates presented in Table 3 (see Sect. 3 below), corresponding to 50 events of background in the entire detector in 300 \(\hbox {fb}^{-1}\). The CODEX-b reach with a calorimeter option (shown here as a dashed line) is further discussed in Sect. 5.3.

The MATHUSLA estimates and the CHARM exclusion in Fig. 12 have been recomputed, while all the other curves have been taken from [25], after rescaling them to the appropriate lifetime and branching ratio expressions used in our plots. For MATHUSLA we used the \(200\,\mathrm{m} \times 200\,\mathrm{m}\) configuration and assumed that a floor veto for upward going muons entering the decay volume is available with a rejection power of \(10^5\). Based on the estimates of \(10^7\) upward going muons [23, 72], we therefore used 100 events as the background for unresolved highly boosted ALPs.

Reach of CODEX-b for fermion-coupled ALPs. The vertical axis on the left corresponds to the couplings defined in Eq. (3), while the one on the right to the normalization used in the PBC study [25]. The baseline (tracker only) CODEX-b design is shown as solid, while the gain by a calorimeter option is shown as dashed. All the curves for the other experiments except MATHUSLA are taken from [25], after rescaling to the different lifetime/branching ratio calculation used here. The MATHUSLA reach is based on our estimates, see text for details

Reach of CODEX-b for gluon-coupled ALPs. The vertical axis on the left corresponds to the couplings defined in Eq. (3), while the one on the right to the normalization used in the PBC study [25]. The baseline (tracker only) CODEX-b design is shown as solid, while the gain by a calorimeter option is shown as dashed. See Fig. 34 for further information about how the CODEX-b reach changes with different detector designs. FASER2 and REDTop curves are taken from [25], after rescaling to the different lifetime/branching ratio calculation used here. The CHARM curve has been recomputed with the same assumptions used for the CODEX-b curve. The MATHUSLA reach is based on our estimates, see text for details

For the case of the fermion-coupled ALP we have further improved the lifetime and branching ratio calculations compared to those used in Refs. [25, 73], by including the partial widths of the ALP into light QCD degrees of freedom, using the same procedure as in Ref. [74]. The result is shown in Fig. 13. In particular, in the \(1\lesssim m_a \lesssim 3\) GeV range, for a given coupling the ALP lifetime is a factor of \(\mathcal {O}(10)\) smaller than previously assumed, and the decays are mostly to hadrons instead of muon pairs.

3.3.4 Heavy neutral leptons

Heavy neutral leptons (HNLs) may generically interact with the SM sector via the lepton Yukawa portal, mediated by the marginal operator \(\bar{L}_i \tilde{H} N\), or may feature in a range of simplified NP models coupled to the SM via various higher-dimensional operators. In the \(m_{N} \sim 0.1\)–10 GeV regime that we consider below, these models can be motivated e.g. by explanations for the neutrino masses [75], the \(\nu \)MSM [76, 77], dark matter theories [78], or by models designed to address various recent semileptonic anomalies [79,80,81].

Lifetime (left) and branching ratios (right) for an ALP coupled to fermions, used in Fig. 11. For comparison we plot as dashed lines the corresponding values used for BC10 in the PBC document

UV completions of SM–HNL operators typically imply an active-sterile mixing \(\nu _\ell = U_{\ell j} \nu _j + U_{\ell N} N\), where \(\nu _j\) and N are mass eigenstates, and U is an extension of the PMNS neutrino mixing matrix to incorporate the active-sterile mixings \(U_{\ell N}\). If \(|U_{\ell N}|\) are the dominant couplings of N to the SM and N has negligible partial width to any hidden sector, then the N decay width is electroweak suppressed, scaling as \(\Gamma \sim G_{F}^2 |U_{\ell N}|^2 m_N^5\). Because the mixing \(|U_{\ell N}|\) can be very small, N can then become long-lived. We assume hereafter for the sake of simplicity that N couples predominantly to only a single active neutrino flavor, i.e.

and refer to \(\ell \) as the ‘valence’ lepton.
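To give a feeling for the scaling \(\Gamma \sim G_{F}^2 |U_{\ell N}|^2 m_N^5\) quoted above, the following sketch converts it into a proper decay length. The overall prefactor is an assumption: it is borrowed from the muon decay width, \(1/(192\pi ^3)\), purely to fix an order of magnitude, and does not include the sum over open channels, so only the parametric dependence on \(m_N\) and \(|U_{\ell N}|^2\) should be read off.

```python
import math

G_F = 1.1663787e-5          # Fermi constant in GeV^-2
HBAR_C_M = 1.973269804e-16  # hbar*c in GeV*m


def hnl_width_scaling_gev(m_n_gev: float, u_sq: float,
                          prefactor: float = 1.0 / (192.0 * math.pi ** 3)) -> float:
    """Parametric width prefactor * G_F^2 * |U|^2 * m_N^5 in GeV.
    The default prefactor is the muon-decay one (illustrative assumption)."""
    return prefactor * G_F ** 2 * u_sq * m_n_gev ** 5


def ctau_m(width_gev: float) -> float:
    """Proper decay length c*tau = hbar*c / Gamma in metres."""
    return HBAR_C_M / width_gev


if __name__ == "__main__":
    # Hypothetical benchmark points (mass in GeV, |U|^2).
    for m_n, u_sq in ((0.5, 1e-6), (1.0, 1e-6), (2.0, 1e-8)):
        width = hnl_width_scaling_gev(m_n, u_sq)
        print(f"m_N={m_n} GeV, |U|^2={u_sq:g}: ctau ~ {ctau_m(width):.3g} m")
```

Doubling \(m_N\) shortens the decay length by a factor of 32, while it scales inversely with \(|U_{\ell N}|^2\); this steep mass dependence is what makes the small mixing regime naturally long-lived.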

The width of the HNL can be expressed as

where \(s = 1\) (\(s=2\)) for a Dirac (Majorana) HNL and the final state M corresponds to a single kinematically allowed (ground-state) meson. Specifically, M includes: charged pseudoscalars, \(\pi ^\pm \), \(K^\pm \); neutral pseudoscalars \(\pi ^0\), \(\eta \), \(\eta '\); charged vectors, \(\rho ^\pm \), \(K^{*\pm }\); and neutral vectors, \(\rho ^0\), \(\omega \), \(\phi \). For \(m_N > 1.5\) GeV, we switch from the exclusive meson final states to the inclusive decay widths \(\Gamma _{\ell _i qq'}\) and \(\Gamma _{\nu _i qq}\), which are disabled below 1.5 GeV. Expressions for each of the partial widths may be found in Ref. [82]; each is mediated by either the W or Z, generating long lifetimes for N once one requires \(U_{\ell N} \ll 1\). Apart from the \(3\nu \), and some fraction of the \(\nu M\) and \(\nu qq\) (e.g. \(\nu \pi ^0\pi ^0\)) decay modes, all the N decays involve two or more tracks, so that the decay vertex will be reconstructible in CODEX-b, up to \(\mathcal {O}(1)\) reconstruction efficiencies. We model the branching ratio to multiple tracks by considering the decay products of the particles produced. Below 1.5 GeV, we consider the decay modes of the meson M to determine the frequency of having two or more charged tracks; above 1.5 GeV, where \(\nu qq\) production is considered instead of exclusive single meson modes, we conservatively approximate the frequency of having two or more charged tracks as 2/3.

HNLs may be abundantly produced by leveraging the large \(b\bar{b}\) and \(c\bar{c}\) production cross-section times branching ratios into semileptonic final states. In particular, for \(0.1\,\text {GeV} \lesssim m_N \lesssim 3\,\text {GeV}\), the dominant production modes are the typically fully inclusive \(c \rightarrow s \ell N\) and \(b \rightarrow c \ell N\). In order to capture mass threshold effects, production from these heavy flavor semileptonic decays is estimated by considering a sum of exclusive modes. The hadronic form factors are treated as constants: An acceptable estimate for these purposes, as corrections are expected to be small, \(\sim \Lambda _{\text {qcd}}/m_{c,b}\). In certain kinematic regimes, the on-shell (Drell–Yan) \(W^{(*)} \rightarrow \ell N\) or \(Z^{(*)} \rightarrow \nu N\) channels can become important, as can the two-body \(D_s \rightarrow \ell N\) and \(B_c \rightarrow \ell N\) decays (a prior study in Ref. [83] for HNLs at CODEX-b neglected the latter contributions).

In our reach projections, we assume the production cross-section \(\sigma (b\bar{b}) \simeq 500\,\mu \text {b}\) and \(\sigma (c\bar{c})\) is taken to be 20 times larger, based on FONLL estimates [84, 85]. The EW production cross-sections used are \(\sigma (W \rightarrow \ell \nu ) \simeq 20\) nb and \(\sum _j\sigma (Z \rightarrow \nu _j \nu _j) \simeq 12\) nb [86]. The \(\sigma (D_s)/\sigma (D)\) production fraction is taken to be \(10\%\) [87, 88], and we assume a production fraction \(\sigma (B_c)/\sigma (B) \simeq 2\times 10^{-3}\) [89, 90].
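As a quick cross-check of the scale of these inputs, the sketch below converts the quoted cross-sections into the number of produced events for an integrated luminosity of 300 \(\hbox {fb}^{-1}\), the value quoted for the background estimate in this document. The function and dictionary names are illustrative, and the numbers refer to total production at the interaction point, before any CODEX-b acceptance or branching ratio is applied.

```python
FB_PER_BARN = 1.0e15  # 1 barn = 1e15 fb

# Cross-sections quoted in the text, converted to barns.
CROSS_SECTIONS_BARN = {
    "b bbar": 500e-6,                      # 500 microbarn
    "c cbar": 20 * 500e-6,                 # taken to be 20 times sigma(b bbar)
    "W -> l nu": 20e-9,                    # 20 nb
    "Z -> nu nu (summed over flavors)": 12e-9,  # 12 nb
}


def produced_events(sigma_barn: float, int_lumi_fb_inv: float) -> float:
    """Number of produced events N = sigma * integrated luminosity."""
    return sigma_barn * FB_PER_BARN * int_lumi_fb_inv


if __name__ == "__main__":
    lumi = 300.0  # fb^-1
    for name, sigma in CROSS_SECTIONS_BARN.items():
        print(f"{name:35s} {produced_events(sigma, lumi):.2e}")
```

This gives roughly \(1.5\times 10^{14}\) \(b\bar{b}\) pairs and \(3\times 10^{15}\) \(c\bar{c}\) pairs, compared to \(6\times 10^{9}\) \(W \rightarrow \ell \nu \) and \(3.6\times 10^{9}\) \(Z \rightarrow \nu \nu \) events, which illustrates why heavy flavor decays dominate HNL production wherever they are kinematically open.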

In the case of the \(\tau \) valence lepton, with \(m_N < m_\tau \), the HNL may be produced not only in association with the \(\tau \), but also as its daughter. For example, both \(b \rightarrow c\tau N\) and \(b \rightarrow c (\tau \rightarrow N e\nu _e) \nu \) are comparable production channels. When kinematically allowed, we approximate this effect by including for the valence \(\tau \) case an additional factor of \(1+\)BR\((\tau \rightarrow N+X)/\left| U_\tau \right| ^2\), where BR\((\tau \rightarrow N+X)\) is the HNL mass dependent BR of the tau into a valence \(\tau \) HNL plus anything [82]. HNL production from Drell–Yan \(\tau \)’s is also included, but typically sub-leading: The relevant production cross-section is estimated with MadGraph [91] to be \(\sigma (\tau _{\text {DY}}) \simeq 37\) nb.

The projected sensitivity of CODEX-b to HNLs in the single flavor mixing regime is shown in Fig. 14. The breakdown in terms of the individual production modes is shown in the left panels, while the right panels compare CODEX-b sensitivity versus constraints from prior experiments, including BEBC [92], PS191 [93], CHARM [94,95,96], JINR [97], NuTeV [98], DELPHI [99], and ATLAS [100] (shown collectively by gray regions). Also included are projected reaches for other current or proposed experiments, including NA62 [101], DUNE [102], SHiP [17], FASER [103], and MATHUSLA [83]. We adopt the Dirac convention in all our reach projections; the corresponding reach for the Majorana case is typically almost identical, though relevant exclusions may change.Footnote 6

Projected sensitivity of CODEX-b to Dirac heavy neutral leptons. Left: Contributions from the individual decay channels with the net result (orange). Right: Comparison with current constraints (gray) and other proposed experiments, including NA62 [101], DUNE [102], SHiP [17], FASER2 [103], and MATHUSLA [83], which is shown for its 200 m \(\times \) 200 m configuration. ATLAS [100] and CMS [104] constraints on prompt Majorana HNL decays are not shown as the sensitivity is currently subdominant to the DELPHI exclusions, and they moreover use a lepton number violating final state only accessible to Majorana HNLs – \(\mu ^{\pm }\mu ^\pm e^\mp \) or \(e^{\pm }e^\pm \mu ^\mp \) – to place limits

3.4 Complete models

The LLP search program at the LHC is extensive and rich. In the context of complete models, it has been driven so far primarily by searches for weak scale supersymmetry, along with searches for dark matter, mechanisms of baryogenesis, and hidden valley models. In this section, we review the part of the theory space relevant for CODEX-b, which is typically the most difficult to access with the existing experiments. A comprehensive overview of all known possible signatures is neither feasible nor necessary, the latter thanks to the inclusive setup of CODEX-b. Instead we restrict ourselves to a few recent and representative examples. For a more comprehensive overview of the theory space we refer to Ref. [105].

3.4.1 R-parity violating supersymmetry

The LHC has placed strong limits on supersymmetric particles in a plethora of different scenarios. The limits are especially strong if the colored superpartners are within the kinematic range of the collider. If this is not the case, the limits on the lightest neutralino (\({\tilde{\chi }}_1^0\)) are remarkably mild, especially if the lightest neutralino is mostly bino-like. In this case \(\tilde{\chi }_1^0\) can still reside in the \(\sim \) GeV mass range, and be arbitrarily separated from the lightest chargino. Such a light neutralino must be unstable to prevent it from overclosing the universe, which is possible if R-parity is violated [106]. The \({\tilde{\chi }}^0_1\) then decays through an off-shell sfermion coupling to SM particles through a potentially small R-parity violating coupling. The combination of these effects typically provides a macroscopic \({\tilde{\chi }}^0_1\) proper lifetime.

The sensitivity of CODEX-b to this scenario was recently studied for \({\tilde{\chi }}^0_1\) production through exotic B and D decays [107], as well as from exotic \(Z^0\) decays [83]. Dercks et al. [107] studied the interaction

and considered five benchmarks, corresponding to different choices for the matrix \(\lambda '_{ijk}\), each with a different phenomenology. We reproduce here their results for their benchmarks 1 and 4, and refer the reader to Ref. [107] for the remainder. The parameter choices, production modes and main decay modes are summarized in Table 1. The reach of CODEX-b is shown in Fig. 15. In both benchmarks, CODEX-b would probe more than 2 orders of magnitude in the coupling constants. For benchmark 4 the reach would be substantially increased if the detector is capable of detecting neutral final states by means of some calorimetry.

Projected sensitivity of CODEX-b for light neutralinos with R-parity violating coupling produced in D and B meson decays, reproduced from [107] with permission of the authors. The light blue, blue and dark blue regions enclosed by the solid black lines correspond to \(\gtrsim 3\), \(3 \times 10^3\) and \(3 \times 10^6\) events respectively. The dashed curve represents the extended sensitivity if one assumes CODEX-b could also detect the neutral decays of the neutralino. The hashed solid lines indicate the single RPV coupling limit for different values of the sfermion masses. See Ref. [107] for details

The above results assume the wino and higgsino multiplets are heavy enough to be decoupled from the phenomenology. This need not be the case. For instance, the current LHC bounds allow for a higgsino as light as \(\sim 150\) GeV [108], as long as the wino is kinematically inaccessible and the bino decays predominantly outside the detector. In this case, the mixing of the bino-like \( {\tilde{\chi }}^0_1\) can be large enough to induce a substantial branching ratio for the \(Z\rightarrow {\tilde{\chi }}^0_1 {\tilde{\chi }}^0_1\) process. Helo et al. [83] showed that the reach of CODEX-b would exceed the \(Z\rightarrow \) invisible bound for \(0.1 \,\mathrm {GeV}< m_{{\tilde{\chi }}^0_1}<m_Z/2\) and \(10^{-1}\,\mathrm {m}< \;c\tau \; <\; 10^6\, \mathrm {m}\), as shown in Fig. 16. The reach is independent of the flavor structure of the RPV coupling(s), so long as the branching ratio to final states with at least two charged tracks is unsuppressed. It should be noted that the ATLAS searches in the muon chamber [46, 47] are expected to have sensitivity to this scenario, although no recast estimate is currently available. As with exotic Higgs decays in Sect. 2.3.1, the expectation is, however, that CODEX-b would substantially improve upon the ATLAS reach for low \(m_{{\tilde{\chi }}_0}\).

Projected sensitivity of CODEX-b for light neutralinos with R-parity violating coupling, as produced in Z decays, reproduced from Ref. [83] with permission of the authors. Also shown are projections for the 200 m \(\times \) 200 m MATHUSLA configuration and \(\hbox {FASER}^R\), the 1 m radius configuration (referred to as FASER2 elsewhere in this document)

3.4.2 Relaxion models

Relaxion models rely on the cosmological evolution of a scalar field – the relaxion – to dynamically drive the weak scale towards an otherwise unnaturally low value [109]. The relaxion sector therefore must be in contact with the SM electroweak sector, and the implications of relaxion-Higgs mixing have been studied extensively [109,110,111,112,113]. The phenomenological constraints were mapped out in detail in Refs. [114, 115] (see [52] for similar phenomenology in a model where the light scalar is identified with the inflaton). Following the discussion in Ref. [105], the phenomenologically relevant physics of the relaxion, \(\phi \), is contained in the term

in which h is the real component of the SM Higgs field that obtains a vacuum expectation value v, \(\Lambda \) is the cut-off scale of the effective theory, \(\Lambda _N\) is the scale of a confining hidden sector, f is the scale at which a UV U(1) symmetry is broken spontaneously, and finally, C and \(\delta \) are real constants. After \(\phi \) settles into its vacuum expectation value, \(\phi _0\), Eq. (8) can be expanded in large \(\phi _0/f\), such that

with \(\lambda '=C \Lambda _N^3/v^2 \Lambda \). The model in Eq. (9) now directly maps onto the scalar-Higgs portal in Eq. (2) of Sect. 2.3.2. CODEX-b and other intensity and/or lifetime frontier experiments can then probe the model in the regime \(\lambda '\sim 1\) and \(f\sim \mathrm {TeV}\). The angle \(\phi _0/f+\delta \) controls whether the mixing or quartic term is most important: On the one hand, if it is small, the lifetime of \(\phi \) increases but the quartic in Eq. (9) can be sizable, enhancing the \(h\rightarrow \phi \phi \) branching ratio (Fig. 9b). On the other hand, for \(\phi _0/f+\delta \simeq \pi /2\) the quartic is negligible and the phenomenology is simply that of a scalar field mixing with the Higgs (Fig. 9a).

3.4.3 Neutral naturalness

The Abelian hidden sector model in Sect. 2.3.1 has enough free parameters to set the mass (\(m_{A'}\)), the Higgs branching ratio (\(\text {Br}(h\rightarrow A'A')\)) and the width (\(\Gamma _{A'}\)) independently. It therefore allows for a very general parametrization of the reach for exotic Higgs decays in terms of the lifetime, mass and production rate of the LLP. The downside of this generality is that the model has too many independent parameters to be very predictive. In many models, however, the lifetime has a very strong dependence on the mass, favoring long lifetimes for low mass states. We therefore provide a second, more constrained example where the lifetime is not a free parameter.

The example we choose is the fraternal twin Higgs [116], a recent incarnation of the Twin Higgs paradigm [117, 118], which is designed to address the little hierarchy problem. It is itself an example of a hidden valley [119, 120]. The model consists of a dark or “twin” sector with an \(SU(2)\times SU(3)\) gauge symmetry, the counterpart of the SM weak and color gauge groups. It further contains a dark b-quark and a number of heavier states which are phenomenologically less relevant. The most relevant interactions are

with H the SM Higgs doublet and \(H'\) the dark sector Higgs doublet. The “twin quarks” \(q'_L\), \(b'_R\) and \(t'_R\) are dark sector copies of the 3rd generation quarks.

The Higgs potential of this model has an accidental SU(4) symmetry, which protects the Higgs mass at one loop provided that \(y'_t \approx y_t\), with \(y_{t}\) the SM top Yukawa coupling. The corresponding top partner – the “twin top” – carries color charge under the twin sector’s SU(3) rather than SM color, and is therefore not subject to existing collider constraints from searches for colored top partners. The accidental symmetry exchanging \(H\leftrightarrow H'\) may further be softly broken, such that \(\langle H\rangle = v\) and \(\langle H'\rangle \approx f\). The parameter f is typically expressed in terms of the mass of the twin top quark, \(m_T\), through the relation \(m_T= y_t f/\sqrt{2}\). The existing constraints on the branching ratio of the SM Higgs already demand \(m_T/m_t \gtrsim 3\) [121].

Left: Lifetime of the \(0^{++}\) glueball as a function of its mass. Right: Projected reach of CODEX-b, MATHUSLA (200 m \(\times \) 200 m) and ATLAS at the full luminosity of the HL-LHC. The solid (dashed) ATLAS contours refer to the optimistic (conservative) extrapolations of the ATLAS reach, as discussed in Sect. 2.3.1. The horizontal dashed line indicates the reach of precision Higgs coupling measurements at the LHC [48]

We consider the scenario in which the \(b'\) mass is heavier than the dark SU(3) confinement scale, \(\Lambda '\), such that the lightest state in the hadronic spectrum is the \(0^{++}\) glueball [122, 123], with a mass \(m_0\approx 6.8 \Lambda '\). The \(0^{++}\) glueball mixes with the SM Higgs boson through the operator

where h is the physical Higgs boson and \(\alpha '_3\) the twin QCD gauge coupling. After mapping the gluon operator to the low energy glueball field, this leads to a very suppressed decay width of the \(0^{++}\) state, even for moderate values of \(m_t/m_T\). In particular, the lifetime is a very strong function of the mass, and can be roughly parametrized as

This is naturally in the range where displaced detectors like CODEX-b, AL3X and MATHUSLA are sensitive. The full lifetime curve is shown in the left hand panel of Fig. 17, where we have accounted for the running of \(\alpha '_3\), as in Refs. [116, 124].

For simplicity we assume that the second Higgs is too heavy to be produced in large numbers at the LHC, as is typical in composite UV completions. However, even in this pessimistic scenario the SM Higgs has a substantial branching ratio to the twin sector. Specifically, this Higgs has a branching ratio of roughly \(m_t^2/m_T^2\) for the \(h\rightarrow b'b'\) channel. The \(b'\) quarks subsequently form dark quarkonium states, which in turn can decay to the lightest hadronic states in the hidden sector. While this branching ratio is large, the phenomenology of the dark quarkonium depends on the detailed spectrum of twin quarks (see e.g. Ref. [124]). There is however a smaller but more model-independent branching ratio of the SM Higgs directly to twin gluons, given by [125]

with \(\text {Br}[h\rightarrow gg]=0.086\). Here \(\alpha _s(m_h)\) and \(\alpha '_s(m_h)\) are the strong couplings in the SM and twin sectors, respectively, evaluated at \(m_h\). The hidden glueball hadronization dynamics is not known from first principles, and we have assumed that Higgs decays to the twin sector produce, on average, two \(0^{++}\) glueballs. Especially at the rather low \(m_0\) of interest for CODEX-b, this is likely a conservative approximation.

The projected reach of CODEX-b, MATHUSLA and ATLAS is shown in the right hand panel of Fig. 17. The projections for ATLAS were obtained as in Sect. 2.3.1. The high mass, short lifetime regime may be covered with new tagging algorithms for the identification of merged jets at LHCb [126, 127]. We find that CODEX-b would significantly extend the reach of ATLAS for models of neutral naturalness. For hidden glueballs, the factor of \(\sim 30\) larger geometric acceptance times luminosity for MATHUSLA only results in a factor of \(\sim 2\) more reach in \(m_0\) for a fixed \(m_T\), because of the scaling in Eq. (12). For higher glueball masses, CODEX-b outperforms MATHUSLA due to its shorter baseline. However, this region will likely be covered by ATLAS.
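The weak dependence of the mass reach on the acceptance can be made explicit with a short sketch. It assumes the long-lifetime regime, where the expected signal scales as (acceptance \(\times \) luminosity)\(/c\tau \), and a lifetime that falls as a power law, \(c\tau \propto m_0^{-n}\). The exponent n is left as a free, illustrative parameter here rather than being read off from Eq. (12), and the function name is hypothetical.

```python
def mass_reach_gain(rate_gain: float, lifetime_power: float) -> float:
    """Factor by which the lowest accessible mass improves when the
    product (acceptance x luminosity) grows by `rate_gain`.

    Assumes the long-lifetime limit, N_signal ~ rate / ctau, and
    ctau ~ m^(-n) with n = `lifetime_power`, so that N_signal ~ rate * m^n.
    Holding N_signal fixed then gives a mass gain of rate_gain**(1/n).
    """
    if rate_gain <= 0.0 or lifetime_power <= 0.0:
        raise ValueError("rate_gain and lifetime_power must be positive")
    return rate_gain ** (1.0 / lifetime_power)


if __name__ == "__main__":
    # Illustrative exponents only; the actual scaling is set by Eq. (12).
    for n in (5.0, 7.0, 9.0):
        print(f"n={n:.0f}: a factor 30 in rate buys a factor "
              f"{mass_reach_gain(30.0, n):.2f} in mass reach")
```

For any steep power law the gain is a modest factor between one and two, in line with the statement above that a factor of \(\sim 30\) in acceptance times luminosity translates into only a factor of \(\sim 2\) in \(m_0\).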

In summary, this hidden glueball model serves to illustrate an important point: The lifetime of a light hidden sector state often scales as a steep power law of its mass, as illustrated by Fig. 17. For ATLAS and CMS, this means that the standard background rejection strategy of requiring two vertices becomes extremely inefficient for such light hidden states. Instead, displaced detectors like CODEX-b, MATHUSLA and FASER are needed to cover the low mass part of the parameter space.

3.4.4 Inelastic dark matter

Berlin and Kling [128] have studied the reach for various (proposed) LLP experiments in the context of a simple model for inelastic dark matter [129, 130]. The ingredients are two Weyl spinors with opposite charges under a dark, higgsed U(1) gauge interaction. In the low energy limit, the model reduces to

where the second term indicates the mixing of the dark gauge boson with the SM photon. The ellipsis represents sub-leading terms which do not significantly contribute to the phenomenology. The pseudo-Dirac fermions \(\chi _1\) and \(\chi _2\) are naturally close in mass, which leads to a phase space suppression of the width of \(\chi _2\). The fractional mass difference is parameterized by \(\Delta \equiv (m_2 - m_1)/m_1\ll 1\).

Fig. 18 Sensitivity estimates for the inelastic dark matter benchmark, reproduced from Ref. [128] with permission of the authors. The black line indicates where the model predicts the correct dark matter relic density. Darker/lighter shades correspond to larger/smaller minimum energy thresholds for the decay products of \(\chi _2\), with CODEX-b shown in orange shades. For CODEX-b and MATHUSLA (200 m \(\times \) 200 m), the minimum energy is taken to be 1200, 600, or 300 MeV per track. For FASER2 (1 m radius), the total visible energy deposition is taken to be greater than 200, 100, or 50 GeV. For a displaced muon-jet search at ATLAS/CMS and a timing analysis at CMS with a conventional monojet trigger, the minimum required transverse lepton momentum is 10, 5, or 2.5 GeV and 6, 3, or 1.5 GeV, respectively.

At the LHC, the production occurs through \(q{\bar{q}} \rightarrow A' \rightarrow \chi _2 \chi _1\), which is controlled by the mixing parameter \(\epsilon \). The decay width of \(\chi _2\) is given by

where \(\alpha _D =e_D^2/4\pi \) is the dark gauge coupling. CODEX-b, MATHUSLA, FASER and the existing LHC experiments can search for the pair of soft, displaced fermions from the \(\chi _2\) decay. The expected sensitivity of the various experiments is shown in Fig. 18 for an example slice of the parameter space. In particular, CODEX-b will be able to probe a large fraction of the parameter space that produces the observed dark matter relic density, as indicated by the black line in Fig. 18. It is worth noting that for this model, the minimum energy threshold per track is an important parameter in determining the reach, which should inform the design of the detector. For more benchmark points and details regarding the cosmology, we refer to Ref. [128].

3.4.5 Dark matter coscattering

The process of coscattering [131, 132] has been studied as a way to generate the correct relic DM abundance. Coscattering has a similar framework to coannihilating dark matter models: Both models contain at least one dark matter particle \(\chi \), a second state charged under the \({\mathbf {Z}}_2\) of the dark sector, \(\psi \), and a third particle X that allows the two particles to transition into one another via an interaction such as a Yukawa, \(y X \chi \psi \). In many coannihilation scenarios \(\psi \psi \leftrightarrow XX\) (or SM) is an efficient annihilation mechanism, while \(\chi \chi , \chi \psi \leftrightarrow XX\) (or SM) is not. Throughout the coannihilation, the “coscattering” process \(\psi X \leftrightarrow \chi X\) (or similar) remains efficient and allows the \(\chi \) and \(\psi \) species to interchange, without changing the dark particle number. Eventually, \(\psi \psi \leftrightarrow XX\) freezes out, and the total dark particle number is fixed.

By contrast, one may consider coscattering DM [131], in which the \(\psi X \leftrightarrow \chi X\) coscattering process drops out of equilibrium before the \(\psi \psi \leftrightarrow XX\) coannihilation process. This requires three ingredients: \(m_X \sim m_\psi \sim m_\chi \); a large \(\psi \psi \leftrightarrow XX\) cross-section; and a small \(\psi X \leftrightarrow \chi X\) cross-section. As \(\chi \) does not have any sizable interactions other than with \(\psi \) by assumption, there are no interactions beyond \(\psi X \leftrightarrow \chi X\) that allow for \(\chi \) to maintain a thermal distribution while it is in the process of decoupling from the thermal bath. This results in important non-thermal corrections that require tracking the full phase space density, rather than just the particle number \(n_\chi \), in order to correctly evaluate the relic abundance [131].

The vector portal model we consider throughout the rest of this subsection is similar to the one in Sect. 2.4.4. Here we introduce a new U(1)\(_D\) gauge group with fairly strong couplings, a scalar charged under the U(1)\(_D\) that obtains a VEV, a Dirac spinor \(\chi _2\) charged under the gauge group, and a second Dirac spinor \(\chi _1\) that is not. The Lagrangian for the model is

The scalar VEV \(\left\langle \phi \right\rangle \) gives a mass to the dark vector and generates a small mixing between the U(1)\(_D\) active \(\chi _2\) and sterile \(\chi _1\). For simplicity, we set \(y\equiv y_{12}=y_{21}\). When \(\Delta m \equiv m_2-m_1 \gg y \left\langle \phi \right\rangle \), a small mixing angle \(\theta \approx y \left\langle \phi \right\rangle / \Delta m\) is generated. We assume that \(m_\phi \gtrsim m_{Z_D}\), so that when \(y\ll g_D\) the phenomenology is insensitive to the presence of the scalar.
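The small-angle approximation for \(\theta \) can be checked numerically. The sketch below assumes that the \(\chi _1\)–\(\chi _2\) mass terms can be written as a real symmetric \(2\times 2\) matrix with diagonal entries \(m_1\), \(m_2\) and off-diagonal entry \(y\left\langle \phi \right\rangle \); the overall normalization of the off-diagonal term, the function names and the numerical values are illustrative assumptions, not taken from the model definition.

```python
import math


def mixing_angle_exact(m1: float, m2: float, y_vev: float) -> float:
    """Rotation angle that diagonalizes [[m1, y_vev], [y_vev, m2]].

    For a real symmetric 2x2 matrix, tan(2*theta) = 2*y_vev / (m2 - m1).
    """
    return 0.5 * math.atan2(2.0 * y_vev, m2 - m1)


def mixing_angle_approx(m1: float, m2: float, y_vev: float) -> float:
    """Small-mixing limit theta ~ y<phi> / Delta m, valid for Delta m >> y<phi>."""
    return y_vev / (m2 - m1)


# Hypothetical values in GeV: Delta m = 1 GeV, y<phi> = 0.01 GeV.
m1, m2, y_vev = 10.0, 11.0, 0.01
theta_exact = mixing_angle_exact(m1, m2, y_vev)
theta_approx = mixing_angle_approx(m1, m2, y_vev)
rel_diff = abs(theta_exact - theta_approx) / theta_approx
print(f"exact = {theta_exact:.6f}, approx = {theta_approx:.6f}, rel. diff = {rel_diff:.1e}")
```

For \(y\left\langle \phi \right\rangle /\Delta m = 10^{-2}\) the two expressions agree to better than one part in a thousand, and the agreement degrades as the ratio approaches one.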

Fig. 19 Projected sensitivity to the coscattering dark matter benchmark for \(\alpha _D=1\) (green) and \(\alpha _D=4\pi \) (red). The shaded region represents the reach for CODEX-b with 300 \(\hbox {fb}^{-1}\). The dashed line is the reach for MATHUSLA in the 200 m \(\times \) 200 m configuration with 3 \(\hbox {ab}^{-1}\). To the left of the dark hatched lines, the coannihilation process \(\chi _2 {\bar{\chi }}_1 \rightarrow Z_D Z_D\) remains active long enough to deplete the relic abundance of \(\chi _1\) below the observed amount, so that the model is inconsistent in this region.

The mixing of \(Z_D\) with the Z boson allows for \(Z\rightarrow \chi _2\bar{\chi }_2\) with a branching ratio of

The daughter \(\chi _2\) particles from the Z decay can propagate several meters before decaying to \(\chi _1\) through an off-shell dark photon, i.e. \(\chi _2 \rightarrow \chi _1ff\). Here \(ff\) denotes a pair of SM fermions, which CODEX-b can detect. The decay rate is dictated by the splitting between the two states. For example, the partial width to electrons, neglecting the electron mass, is

From this expression we can approximate the lifetime as

where \(\text {BR}(Z_D\rightarrow ee; \Delta m)\) is the branching ratio for a kinetically mixed dark vector of mass \(\Delta m\) into ee. This is done to approximate the inclusion of additional accessible final states, as splittings in this model are commonly \(\mathcal {O}\left( \text {GeV}\right) \). While a more thorough treatment would integrate over phase space for each massive channel separately, this approximation captures the leading effect to well within the precision desired here. Additionally, \(\chi _2\) pairs can be directly produced through an off-shell \(Z_D\). Because the \(Z_D\) is off-shell, this does not generate a large contribution unless \(m_{\chi _2} \lesssim 10\) GeV. This model provides a scenario containing an exotic Z decay into long-lived particles.
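To illustrate how such a lifetime translates into a signal in a displaced detector, the following sketch evaluates the probability that a \(\chi _2\) from a \(Z\) decay decays within a given radial window. It is a simplified estimate under stated assumptions: the \(Z\) is taken to be at rest, geometric (solid angle) acceptance and detection efficiencies are ignored, and the detector window of 25 m to 35 m as well as the mass and lifetime values are hypothetical inputs chosen only for illustration.

```python
import math


def boost_from_z_decay(m_chi2_gev: float, m_z_gev: float = 91.19) -> float:
    """beta*gamma of each chi_2 in Z -> chi_2 chi_2bar, assuming a Z at rest."""
    energy = m_z_gev / 2.0
    momentum = math.sqrt(energy**2 - m_chi2_gev**2)
    return momentum / m_chi2_gev


def decay_probability(ctau_m: float, boost: float, d_near_m: float, d_far_m: float) -> float:
    """Probability to decay between d_near and d_far along the flight direction.

    Uses the exponential decay law with lab-frame decay length boost * ctau.
    """
    lab_length = boost * ctau_m
    return math.exp(-d_near_m / lab_length) - math.exp(-d_far_m / lab_length)


# Hypothetical benchmark: m_chi2 = 10 GeV, c*tau = 5 m, detector spanning 25-35 m.
boost = boost_from_z_decay(10.0)
prob = decay_probability(ctau_m=5.0, boost=boost, d_near_m=25.0, d_far_m=35.0)
print(f"beta*gamma = {boost:.2f}, decay probability in window = {prob:.3f}")
```

In the long-lifetime limit the probability reduces to the window depth divided by the lab-frame decay length, which is why the signal yield falls inversely with \(c\tau \) for very long-lived states.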

In Fig. 19 we show the projected sensitivity for CODEX-b (shaded) and MATHUSLA (dashed) [105] to the model, setting \(\epsilon = 10^{-3}\), \(m_{Z_D} = 0.6 m_{\chi _1}\), and for two choices of \(\alpha _D\), namely 1 and \(4\pi \) (green and red, respectively). With these parameters fixed, the choice of \(\sin \theta \) fixes the mass splitting through the DM relic abundance criterion. At small masses, the \({\bar{\chi }}_2\chi _1 \leftrightarrow Z_DZ_D\) coannihilation process remains in equilibrium long enough to deplete the \(\chi _1\) number density below the relic abundance today. This region is illustrated by the dark hatched lines.

3.4.6 Dark matter from sterile coannihilation

D’Agnolo et al. [133] have explored the mechanism of sterile coannihilation, for which the number density in the dark sector is set by the annihilation of states that are heavier than the dark matter. In this scenario the dark matter remains in chemical equilibrium with these heavy states until after their annihilation process freezes out, which naturally allows for much lighter dark matter than in standard thermal freeze-out models.

Concretely, the example model that is considered in Ref. [133] is given by

where the parameter \(\delta m \ll m_\psi , m_\chi \) generates a small mixing between \(\psi \) and \(\chi \). For the choice \(m_\psi \gtrsim m_\chi > m_\phi \), the relic density of \(\chi \) is effectively set by \(\psi \psi \rightarrow \phi \phi \) annihilations. Finally, \(\phi \) is assumed to mix with the SM Higgs, and it is this coupling which keeps the dark sector in thermal equilibrium with the SM sector. For a summary of the direct detection and cosmological probes of this model, we refer to Ref. [133]. From a collider point of view, the most promising way to probe the model is to search for the scalar \(\phi \) through its mixing with the Higgs. This scenario is identical to the scalar-Higgs portal model with \(\lambda =0\), which is discussed in Sect. 2.3.2. Fig. 20 shows the projected reach for CODEX-b, overlaid with the relevant constraints and projections from dark matter direct detection and CMB measurements.

Fig. 20 Projected sensitivity to the coannihilation dark matter benchmark, reproduced from Ref. [133] with permission of the authors. The projections are effectively the same as those in Fig. 9a. At every point of the plot, \(\Delta \) is fixed to reproduce the observed relic density. Left: The remaining parameters are set as \(y = e^{i\pi /4}\), \(m_\phi = m_\chi /4\) and \(\delta = 5 \times 10^{-3}e^{i\pi /4}\), with \(\delta \equiv \delta m/m_\chi \). Right: The remaining parameters are set instead as \(y = e^{i\pi /4}\), \(m_\phi = m_\chi /2\) and \(\delta =10^{-4}e^{i\pi /4}\). MATHUSLA sensitivity is shown for the 200 m \(\times \) 200 m configuration, while FASER sensitivity is shown for the 1 m radius configuration, now referred to as FASER2.

3.4.7 Asymmetric dark matter

In many asymmetric dark matter models, the DM abundance is directly tied to the matter-antimatter asymmetry in the SM sector [134,135,136]. The generic expectation is therefore for the DM to carry \(B-L\) quantum numbers and to have a mass of order a GeV. For this mechanism to operate, the DM sector interactions with the SM should be suppressed, and both sectors communicate in the early universe through operators of the form

where \(\mathcal {O}_X\) and \(\mathcal {O}_{SM}\) are operators consisting of dark sector and SM fields respectively, with \(\Delta _{X}\) and \(\Delta _{SM}\) their respective operator dimensions. In supersymmetric models of asymmetric dark matter [134], the simplest operators in the superpotential are of the form

with \(X\equiv {\tilde{x}} +\theta x\) the chiral superfield containing the DM, denoted by x. The phenomenology of this scenario is very similar to that of RPV supersymmetry, with decay chains such as \({\tilde{\chi }}^{0}\rightarrow {\tilde{x}} u^c d^c d^c\) (see Sect. 2.4.1). To accommodate the correct cosmology, macroscopic lifetimes \(c\tau \sim 10\) m are typically required [135, 137]. Moreover, \({\tilde{x}}\) itself may or may not be stable, depending on the model.

More generally, if the dark sector has additional symmetries and multiple states in the GeV mass range, as occurs naturally in hidden valley models with asymmetric dark matter (see e.g. Refs. [119, 138]), these excited states often must decay to the DM plus some SM states. Such decays must necessarily occur through higher dimensional operators, and macroscopic lifetimes are therefore generic. As for previous portals, LLP searches in the GeV mass range are best suited to displaced, background-free detectors such as CODEX-b.
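The GeV-scale mass expectation quoted at the start of this subsection can be reproduced with simple arithmetic. The sketch below assumes that the DM and baryon number densities are related by a model-dependent ratio of order one, so that the ratio of energy densities fixes the DM mass. The density parameters are approximate values from CMB measurements, and the function name and the default number-density ratio are illustrative assumptions.

```python
PROTON_MASS_GEV = 0.938   # approximate proton mass
OMEGA_DM_H2 = 0.120       # approximate cold dark matter density parameter
OMEGA_B_H2 = 0.0224       # approximate baryon density parameter


def asymmetric_dm_mass(n_dm_over_n_b: float = 1.0) -> float:
    """DM mass in GeV implied by Omega_DM / Omega_b = (m_DM * n_DM) / (m_p * n_B).

    n_dm_over_n_b is the model-dependent ratio of DM to baryon number
    densities; a value of 1 is an illustrative assumption.
    """
    return (OMEGA_DM_H2 / OMEGA_B_H2) * PROTON_MASS_GEV / n_dm_over_n_b


m_dm = asymmetric_dm_mass()
print(f"m_DM ~ {m_dm:.1f} GeV for equal number densities")
```

For equal number densities this gives a mass of about 5 GeV; different charge assignments in the transfer operators change the number-density ratio by factors of order one, which keeps the mass in the GeV range.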

3.4.8 Other dark matter models

There are many other dark matter models that could provide signals observable with CODEX-b. Presenting projections for all possibilities is beyond the scope of this work, but here we briefly summarize many of the existing scenarios that can provide long-lived particles. Below we detail: SIMPs, ELDERs, co-decaying DM, dynamical DM, and freeze-in DM.