Abstract

We consider the problem of quantifying the uncertainty on theoretical predictions based on perturbation theory due to missing higher orders. The most widely used approach, scale variation, is largely arbitrary and it has no probabilistic foundation, making it not suitable for robust data analysis. In 2011, Cacciari and Houdeau proposed a model based on a Bayesian approach to provide a probabilistic definition of the theory uncertainty from missing higher orders. In this work, we propose an improved version of the Cacciari–Houdeau model, that overcomes some limitations. In particular, it performs much better in case of perturbative expansions with large high-order contributions (as it often happens in QCD). In addition, we propose an alternative model based on the same idea of scale variation, which overcomes some of the shortcomings of the canonical approach, on top of providing a probabilistically-sound result. Moreover, we address the problem of the dependence of theoretical predictions on unphysical scales (such as the renormalization scale), and propose a solution to obtain a scale-independent result within the probabilistic framework. We validate these methods on expansions with known sums, and apply them to a number of physical observables in particle physics. We also investigate some variations, improvements and combinations of the models. We believe that these methods provide a powerful tool to reliably estimate theory uncertainty from missing higher orders that can be used in any physics analysis. The results of this work are easily accessible through a public code named THunc.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Computing exactly physical observables in a generic quantum field theory (QFT) is still an open challenge. The closest approximation to such a computation is achieved through numerical simulations of the theory on a discretized space-time (lattice). This approach, however, cannot be used universally. For instance, for some observables (e.g. involving large momenta) the required computing power would be out of reach. A complementary approach, applicable when the coupling of the theory is sufficiently small, is the so-called perturbative approach, or perturbation theory. Namely, physical observables are expressed as a power series in the coupling. Computing the coefficients of this power series can be done systematically, e.g. using Feynman diagram techniques, but the complication of the calculation grows considerably with the perturbative order. Therefore, in practice, only a limited number of coefficients is achievable for a given observable.

This poses an important question: how far is the approximate perturbative result from the unknown exact one? The answer to this question can be cast in an uncertainty, usually called theory uncertainty from missing higher orders. Since the exact result is unknown, one can only quantify this uncertainty in terms of a probability distribution. How to determine such a distribution is the subject of this paper.

The most standard and widespread approach to estimate this uncertainty is the so-called scale variation method. This approach is based on the observation that physical observables do not depend on unphysical scales (such as the renormalization scale) appearing in QFTs. However, this independence is strictly valid only for the exact result. Any approximate result computed in perturbation theory will in fact depend on unphysical scales, the dependence being formally of higher order. The idea is thus to estimate the theory uncertainty by varying the scale, as the result will accordingly change by an amount that is formally of the same order as what this uncertainty wants to quantify. While this idea is certainly valid and powerful, the canonical method used to exploit it has various caveats. Indeed, the canonical recipe consists in varying the unphysical scale \(\mu \) by a factor of two about a central value \(\mu _0\) of choice, and then using the maximal variation of the observable with respect to the value at central scale as a measure of the uncertainty. This is depicted in Fig. 1. In formulae, the canonical way of writing a result based on a perturbative computation of a physical observable \(\Sigma \) isFootnote 1

where \(\Sigma _{\mathrm{pert}}(\mu )\) is the scale dependent perturbative result, \(\Sigma _{\mathrm{pert}}(\mu _0)\) represents the “central value” of the prediction, and the scale variation is appended as a theory “error”. The caveats of this approach are apparent:

-

it is largely arbitrary, in the choice of the central scale \(\mu _0\) and of the interval of variation (factor of two);

-

in the vicinity of stationary points the uncertainty can become accidentally small;

-

the uncertainty has no probabilistic interpretation.

The latter point can be overcome by assigning an interpretation (for instance, the “error” could be interpreted as the standard deviation of a gaussian distribution), but any choice would be totally arbitrary. In addition to all this, it is well known that the scale variation uncertainty often underestimates the size of higher order contributions.Footnote 2

Schematic representation of the canonical method to estimate theory uncertainty, namely the scale variation approach. Since the left and right variations of the scale generally lead to different sizes of variation of the perturbative result (shown by the two double-headed arrows), one may either choose to keep the uncertainty asymmetric, or to select the largest and symmetrize it

In 2011, Cacciari and Houdeau [3] proposed a completely different approach to estimate the uncertainty from missing higher orders. In their groundbreaking work they constructed a probabilistic model to define this uncertainty, based on some assumptions on the progression of the perturbative expansion. Roughly speaking, the model assumes that the coefficients of the power expansion in the coupling are bounded by an unknown (hidden) parameter. The knowledge of the first few orders of the perturbative expansion allow to perform (Bayesian) inference on the hidden parameter, whose improved knowledge can in turn be used to make inference on the unknown subsequent perturbative coefficients. Once the likelihood of the perturbative coefficients given the hidden parameter and the prior distribution of the hidden parameter are defined, the model produces probability distributions for the unknown coefficients, and thus for the physical observable itself.

This approach to theory uncertainties is unquestionably superior and more elegant than canonical scale variation, mainly because it is probabilistically founded. However, it also has limitations. For instance, while it performs well for QCD observables at \(e^+e^-\) colliders [3], it leads to less reliable results in the case of proton-proton collider observables [4, 5], that are usually affected by larger perturbative corrections. Perhaps for this reason, in conjunction with the simplicity and the deep-rooted attitude of using the scale variation method, the Cacciari–Houdeau (CH) approach is not very popular in the high-energy physics community, with only few applications [5,6,7,8,9,10,11,12,13,14,15,16,17,18], most of which in the context of effective theories. Moreover, the CH approach does not deal with the unphysical scale dependence of the result – the CH prediction is computed for a given choice of the scale, so the final probability distributions is de facto scale dependent.

In this paper, we use a Bayesian approach to build new probabilistic models, similar to the CH model, that overcome the limitations of the previous approach. The inference structure of any model that we will consider is depicted in full generality in Fig. 2. Starting from the known orders, under some model assumptions one can make inference on the (hidden) parameters characterizing the model, to be used in turn to infer the probability distribution of the unknown higher orders. In formulae, we have, schematically,

where P(A|B) is the conditional probability distribution of A given B. Eq. (1.2) is given in terms of the posterior distribution of the hidden parameters

which depends on the prior distribution \(P_0(\text {pars})\) of the hidden parameters and on the model assumptions through the likelihood \(P(\text {orders}|\text {pars})\), that appears also explicitly in Eq. (1.2). These two model-dependent ingredients are sufficient to let Bayesian inference work, and can be used to eventually construct a probability distribution for the observable, that contains all the information on the uncertainty from missing higher orders.

In this work, we propose two main models: one is an improved version of the CH model that efficiently describes perturbative expansions with large perturbative corrections; the other is a model inspired by the scale variation method, but constructed in such a way to be reliable and probabilistically sound. Both methods outperform the current approaches in terms of reliability. They are also sufficiently general to be used for perturbative expansion that are not necessarily fixed-order expansions in powers of the coupling, but can be for instance resummed expansions or other generalized expansions. Moreover, the first model can be applied also beyond quantum field theory, for instance to quantum mechanics or in general to any expansion that behaves perturbatively. We also explore variants of the methods and combinations of them.

These methods are still applied to the perturbative expansion at a given fixed value of the unphysical scale. An important and innovative development proposed in this work is a way to “remove” the scale dependence of the result. This is achieved by dealing with the scale dependence within the probabilistic framework, and leads to a result that is to a large extent scale independent. As a byproduct of this procedure, the central value of the prediction (identified with the mean of the probability distribution for the observable) does not necessarily correspond to the canonical perturbative result at a given “central” scale. Thanks to this feature our method improves not only the reliability of the uncertainty, but also that of the central prediction for the observable.

To facilitate the adoption of the results of this work, a computer code named THunc is publicly released. The code is very easy to use: the user provides the perturbative expansion, and the code outputs the probability distribution of the result, together with a number of statistical estimators (mean, mode, median, standard deviation, degree-of-belief intervals). It is also fast and efficient, and flexible as it allows the user to define customized models.

The structure of this paper is the following. In Sect. 2 we give some preliminary information on the perturbative expansions and their scale dependence, we provide some basic concepts on the probabilistic definition of the theory uncertainty as well as a brief recap of the CH method, and we define a working example to be used in the subsequent sections. In Sect. 3 we start defining some notations and describe general features common to all models we will later consider. We then move on presenting our two main models (at fixed scale) in Sects. 4 and 5. We propose a way to construct scale-independent results and uncertainties in Sect. 6. We then validate our methods in Sect. 7, where we also consider some realistic applications. In Sect. 8, we discuss the issue of defining correlations between theory uncertainties. After concluding in Sect. 9, we collect details on numerical implementations in Appendix A and propose a number of variants and possible improvements in Appendix B.

2 Preliminaries

2.1 Basic concepts and assumptions on perturbative expansions

We consider a (renormalizable) quantum field theory (QFT) depending on a single coupling \(\alpha \). We will often refer to practical examples in quantum chromodynamics (QCD), since its coupling is not very small and thus perturbation theory produces somewhat large high-order corrections, which is the case where reliably estimating theory uncertainties is both important and challenging. We focus on a generic physical observable \(\Sigma \). The theory predicts a unique well-defined value for this observable, that we call \(\Sigma _{\mathrm{true}}\). This is the value we aim to obtain. However, we usually cannot compute it exactly, and we thus use a perturbative approach to approximate it.

According to the perturbative hypothesis, namely that the dimensionless coupling \(\alpha \) of the theory is sufficiently small, it is possible to compute the observable \(\Sigma \) as a power series in the coupling itself. We then write

where \(c_k\) are the coefficients of the perturbative expansion, and n is some order at which we stop the expansion. Eq. (2.1) is not an equality. One may be tempted to think that if \(n\rightarrow \infty \), then it would become an equality. This limit, however, does not exist, as perturbative expansions are divergent [19, 20]. The divergence of the series is related to the fact that \(\Sigma _{\mathrm{true}}\) is a non-analytic function of the coupling \(\alpha \) in \(\alpha =0\). One may try to treat the divergent series using e.g. the Borel summation method. For some known (to all orders) divergent contributions, such as those due to renormalons (see e.g. Ref. [21]), one can obtain a finite result through Borel summation.Footnote 3 However, it is not guaranteed that the Borel-sum of the series captures the full result: there may be intrinsically non-perturbative contributions that cannot be reconstructed from the perturbative expansion.Footnote 4 Moreover, in order to use the Borel summation method, the series should be known to all orders, or at least its asymptotic behaviour should be known.Footnote 5 However, this is usually not the case, so in practice the only information we have about an observable is its (truncated) perturbative expansion, Eq. (2.1).

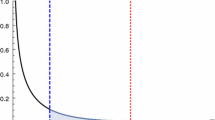

The asymptotic expansion of the function Eq. (2.2) truncated at various orders from 0 to 15 (orange dots), normalized to the value of the function itself (blue line). The lower panel shows the absolute difference between the truncated and exact results. The left plot corresponds to \(\alpha =0.13\), the right plot corresponds to \(\alpha =0.2\)

The fact that the series is divergent and that summation methods cannot be used may suggest that the perturbative result is useless. However, this is in constrast with the well known fact that perturbation theory works, namely it predicts results in decent (or even good) agreement with data, at least when the coupling is sufficiently small (for instance, in QED it works very well). The explanation of this fact relies on the assumption that perturbative series are asymptotic expansions of the exact result. To our knowledge, there is no general proof of this statement, but it seems very reasonable and we take it as valid. The asymptotic nature of the perturbative expansion implies that up to some order \(k_{\mathrm{asympt}}\) adding terms to the expansion improves the accuracy of the prediction, but beyond \(k_{\mathrm{asympt}}\) the divergent contributions to the series dominate and the sum explodes. A visual example of this fact is shown in Fig. 3, where the following non-analytic function of \(\alpha \) is compared with its asymptotic expansion at small \(\alpha \):

From the figure one sees that for a sufficiently small value of \(\alpha =0.13\) (left plot) the first few (approximately 7) orders give a good approximation of the result, showing an apparently converging behaviour. However, adding extra orders deteriorates the prediction, because the factorial growth of the series wins over the power suppression. The best prediction one can make using the asymptotic expansion is thus obtained truncating it to \(k_{\mathrm{asympt}}\sim 7\). This prediction, however, has an irreducible uncertainty due to the truncation itself,Footnote 6 of the size of the last term included in the truncated expansion. The right plot, that is obtained with a larger coupling \(\alpha =0.2\), shows that this irreducible uncertainty grows with the value of the coupling, and the value of \(k_{\mathrm{asympt}}\) decreases accordingly.Footnote 7

From these considerations we can conclude that a physical observable can be expressed as the sum

where the first term is perturbative expansion is truncated at \(k_{\mathrm{asympt}}\), which is the best prediction we can make using perturbation theory, while \(\Delta _{\mathrm{asympt}}\) represents the irreducible difference between the truncated expansion and its all-order sum. The \(\Delta _\text {non-pert}\) term, instead, represents possible intrinsically non-perturbative contributions that cannot be captured by perturbation theory. The general expectation on the size of these contribution is

at least when the coupling is sufficiently small. This expectation is again not a proof, but a consequence of the goodness of perturbation theory, together with the fact that both \(\Delta \) terms are known to be exponentially suppressed by \(\exp (-a/\alpha )\), \(a>0\), in some established cases (namely, when \(\Delta _\text {non-pert}\) contains instanton contributions and when \(\Delta _{\mathrm{asympt}}\) is dominated by factorially divergent contributions like renormalons [21]).

Unfortunately, since we do not generally know the asymptotic behaviour of the expansion, we cannot know a priori the value of \(k_{\mathrm{asympt}}\), and we thus cannot truncate the expansion at the optimal value. This is not a real issue, as in most cases we know just a rather small number of orders, typically two or three, corresponding to next-to-leading order (NLO) and next-to-next-to-leading order (NNLO) computations. Only in very few cases we know physical quantities at \(\hbox {N}^3\hbox {LO}\) (four terms in the expansion) or beyond. We thus expect (and assume) the number n of known orders to be smaller than \(k_{\mathrm{asympt}}\).Footnote 8 Therefore, we can rewrite Eq. (2.3) as

having defined the contribution from missing higher orders

where n is the highest known perturbative order. Assuming that n is sufficiently smaller than \(k_{\mathrm{asympt}}\), using similar considerations to those that led to Eq. (2.4) we can conclude that in most cases the contribution from missing higher orders is larger than the asymptotic and non-perturbative contributions,

This implies that our knowledge of the observable \(\Sigma \) is determined by the perturbative expansion truncated at order n with an uncertainty that is dominated by the missing higher order term \(\Delta _{\mathrm{MHO}}^{(n)}\). Quantifying this term, or better determining its probability distribution, is the main task of the rest of this paper.

2.2 Constructing a probability distribution for a physical observable

Defining the theoretical uncertainty from missing higher orders in a probabilistic way may sound impossible or completely arbitrary to many. This would certainly be true in the context of the so-called frequentist approach to probability, in which the definition of a probability requires the existence of a repeatable event, which is typically the case for an experiment but clearly not the case for a theoretical prediction. However, the frequentist approach to probability is not the only one – actually, the frequentist formulation is mathematically inconsistent (see e.g. [28]), and thus certainly not the best one.

The only mathematically correct formulation of a probability theory is the so-called Bayesian approach, where the probability is defined as the “degree of belief” of an event, which is then intrinsically subjective. Initially, when no information is available, the probability of an event is given by a prior distribution, which encodes our subjective and arbitrary prejudices. Acquiring information on the event changes the degree of belief through statistical inference (Bayes theorem). Therefore, any probability will depend on subjective assumptions through the prior distribution, but adding more and more information updates the probability making it less and less dependent on the prior.

In case of repeatable events, one can acquire information on the process by repeating them (and, in the limit of large number of repetitions, one recovers the frequentist result). However, repetion is not the only way of acquiring information, and thus one can use the Bayesian approach also in cases (like ours) when the event is not repeatable. Here “event” means something that can happen in different ways with different likelihoods, which we want to describe through a probability distribution. In our case, the event is “the observable takes the value \(\Sigma \)”, and its probability distribution will be a function of \(\Sigma \) ranging over all possible values. The information on this event that we want to use is the perturbative expansion of the observable. How this will be used in practice depends on the model and will be discussed at length in the rest of this paper. Thanks to this information, we can then use probabilistic inference to improve the knowledge on the observable, namely to update the distribution of \(\Sigma \).

The goal of this work is thus the construction of a probability distribution of the observable \(\Sigma \) given the perturbative expansion up to order n, namely

where we have indicated with H any assumption (hypothesis), including both prior distributions and the model we want to use (we will come back to this point later). P(A|B) indicates the probability distribution of A given the information B. In our case, the information is given by the first \(n+1\) coefficients \(c_0,\ldots ,c_n\), and H. This distribution contains all the information we desire about our knowledge of the observable. For instance, we can compute the best estimate of the observable \(\Sigma \) as its expectation value according to such distribution,

and its uncertainty either as the standard deviation or using degree-of-belief (DoB) intervals. Most importantly, the probability distribution can be used directly in physical analyses, when comparing theory predictions with data.

In the limit of “infinite information”, namely when we know the exact result \(\Sigma _{\mathrm{true}}\), the probability Eq. (2.8) should become

which represents the certainty (not probability) that \(\Sigma =\Sigma _{\mathrm{true}}\). In this limit any a priori assumption H does not matter. Eq. (2.10) cannot be seen as the all-order limit of Eq. (2.8), due to the divergent nature of the series and to the non-perturbative contributions discussed in Sect. 2.1. However, it suggests that when adding information (i.e. when increasing the number n of known orders, up to \(k_{\mathrm{asympt}}\)) the probability distribution should become narrower and more localised. We shall consider this behaviour as a property that a good model for theory uncertainty must satisfy.

Note that knowing the probability distribution for the missing higher order term \(\Delta _{\mathrm{MHO}}^{(n)}\) is practically the same as knowing the distribution for \(\Sigma \). Indeed, in the limit Eq. (2.7) where we neglect the asymptotic and non-perturbative contributions, the distributions for \(\Delta _{\mathrm{MHO}}^{(n)}\) and \(\Sigma \) are the same up to a trivial shift given by the perturbative result (Eq. 2.5). In the following, we will always deal directly with the distribution of \(\Sigma \) (Eq. 2.8), but we will compute it by estimating the missing higher orders \(\Delta _{\mathrm{MHO}}^{(n)}\).

2.3 The role of unphysical scales

A general feature of renormalizable QFTs is the appearance of an unphysical scale \(\mu \) as a consequence of the regularization procedure needed to deal with ultraviolet divergences. This is known as the renormalization scale. Physical observables do not depend on it, as this scale is an artefact of the scheme adopted to renormalize the theory. However, in practical computations using perturbation theory, a scale dependence is present in each order, in a way that it is compensated order by order. Any finite-order truncation of the perturbative series will thus have a residual scale dependence, which is formally of higher order. As discussed in the introduction, this observation is at the core of the canonical scale variation method to estimate theory uncertainties.

Because of renormalization scale dependence, perturbative expansions do not uniquely determine a series, but rather a family of series parametrized by the renormalization scale \(\mu \). We shall thus rewrite Eq. (2.5) as

where the left-hand side, the exact result, is scale independent:

Therefore, whenever we want to estimate the value of an observable using perturbation theory, we need to face with the fact that the perturbative result is scale dependent, and also the missing higher orders are.

An immediate consequence of this fact is that also the probability distribution Eq. (2.8) will unavoidably depend on such the choice of scale \(\mu \). We can express this by writing the probability Eq. (2.8) as

where we have emphasised that each coefficient depends on the scale \(\mu \),Footnote 9 or equivalently as

where the coefficients \(c_k\) are intended as functions, to be computed at the value \(\mu \) passed as an extra parameter. This dependence on the scale is clearly undesired, because this probability distribution, which depends on \(\mu \), is for the true observable, which is independent of \(\mu \). In the limit of infinite knowledge (Eq. 2.10), the distribution should tend to \(\delta (\Sigma -\Sigma _{\mathrm{true}})\) irrespectively of the value of \(\mu \). This implies that increasing the order, the probability distributions at different values of \(\mu \) should become more and more similar. While this feature is certainly nice, having an infinite number of different results for the same object is obviously not ideal. Rather, one would like to obtain a probability distribution for \(\Sigma \) that does not depend on the choice of scale. This can be achieved in two ways: either having a criterion for selecting an “optimal value” of the scale, or combining in some way the results at different scales.

The first way is obviously simpler, provided such a criterion exists. In the literature there are various approaches that aim at selecting an optimal scale, e.g. the Brodsky-Lepage-Mackenzie (BLM) method [29,30,31], the principle of minimal sensitivity (PMS) [32, 33], the principle of maximal conformality (PMC) [34,35,36,37] and the recent principle of observable effective matching (POEM) [38]. The PMC is probably the most widespread approach. It provides a way to select, order by order, an optimal scale that removes non-conformal \(\beta \)-function contributions from the perturbative expansion. This is believed to remove the renormalons from the perturbative expansion, thereby leading to a possibly convergent series (or at least to a less divergent one). For our purposes, this approach could provide a way to select, among the infinitely many probability distributions for \(\Sigma \), a specific one.Footnote 10 Note, however, that the PMC fixes the scale at each known order except the last one, which is free and thus arbitrary. This implies that a residual scale dependence is present also in the PMC approach, even though it is claimed to be much milder than the canonical scale dependence. However, it has been pointed out that a proper study of all the ambiguities in the approach leads to larger uncertainties, comparable to the canonical scale uncertainty [40,41,42,43]. We conclude that while the PMC approach is certainly intersting, it cannot provide the full solution to our problem.

The second way to obtain a scale-independent probability distribution for \(\Sigma \) is what we pursue in this work. The treatment of the renormalization scale is addressed within the methodology for computing the probability distribution Eq. (2.8). The actual procedure to combine the probability distributions at different scales, which represents one of the most innovative proposals of this work, will be presented in Sect. 6.

Before moving further, another aspect of scale dependence must be discussed. So far, we have described scale dependence as an obstacle to obtain a unique probability distribution for the observable. In fact, scale dependence can be also considered as a tool. This relies on the fact, already discussed in the introduction, that the \(\mu \)-dependence of the finite-order truncation of the perturbative series is of higher order, namely

This fact provides additional information on the expansion, which can be very useful as in most cases of interest in particle physics the available information is very limited (typically \(n=2\) or 3). In practice, let us assume for simplicity that the \(\mu \) dependence at a given order k can be translated in a single number, \(r_k\). It can for instance be the canonical scale uncertainty error, or the slope of the cross section as a function of \(\mu \), or something similar (we will provide a precise definition later in Sect. 3.2). We can then generalize the probability distribution as

where we have made explicit that also the \(r_k\) numbers generally depend on the choice of \(\mu \) about which the scale dependence is computed. In other words, also in this case we get a family of distributions depending on the value of \(\mu \) at which the perturbative expansion is computed, however this time we also include in each member of the family some information on the scale dependence. Since these parameters double the previous informationFootnote 11 they are clearly very precious.

Note that the parameters \(r_k\) represent the kind of information used in the construction of the canonical scale uncertainty. More precisely, the canonical scale uncertainty is based only on the last one, \(r_n\) (assuming \(r_n\) is defined according to Eq. (1.1)), and it does not provide a probabilistic interpretation. In Sect. 5 we will instead make use of the scale variation information \(r_k\) in a fully fledged probabilistic model, thereby providing a method that is, in some sense, a more reliable and statistically sound version of canonical scale variation.

We finally stress that the renormalization scale is not the only scale appearing in perturbative computations. For instance, in QCD processes involving hadrons in the initial or final states, the factorization of collinear singularities introduces a (perturbative) dependence on another unphysical scale, the so-called factorization scale. Also, in effective field theories widely used in collider phenomenology (e.g. heavy quark effective theory or soft-collinear effective theory), other unphysical scales may appear. The way to deal with these scales strictly depends on the scale itself. For instance, the dependence on the factorization scale cancels between the perturbatively computable coefficients and the non-perturbative parton distribution functions (PDFs). Therefore, if we wish to obtain factorization scale independent probability distribution for a physical observable, we may try to extend the procedure that we propose in Sect. 6 to this scale, with proper caveats due to the fact that PDFs are non-perturbative objects. Instead, if we wish to include our definition of theory uncertainties in the fits used to determine PDFs from data, the situation is completely different, as the PDFs are not physical observables and they are thus scheme and scale dependent. Addressing this issue is beyond the scope of this paper and it is left to future work. Here, we only focus on the renormalization scale dependence, which is universal.

2.4 The Cacciari–Houdeau method

Before moving to our new proposals, we now present the Cacciari–Houdeau (CH) method for estimating theory uncertainties [3]. Let us forget about the scale dependence (which is not dealt with in the original paper) and consider the perturbative expansion

We know that this series is divergent, but for the moment we ignore this fact. The basic assumption made in the CH model is that all the coefficients \(c_k\) are bounded in absolute value by a common number \({\bar{c}}\), namely

The coefficient \({\bar{c}}\) is a parameter of the model, and specifically a hidden parameter, which will disappear (through marginalization) in the final results. Moreover, they assume that all \(c_k\) are independent from each other, with the exception for the common bound, which implies that

The conditional probability \(P(c_k|{\bar{c}})\), that we shall call the likelihood, encodes in a probabilistic way the assumption Eq. (2.18). In the CH approach it is given by

namely the condition Eq. (2.18) must be strictly satisfied (the probability that the condition is violated is zero), and within the allowed range all values are equally likely (flat distribution). Finally, they provide a prior distribution for the hidden parameter,

which corresponds to a flat distribution in the logarithm of \({\bar{c}}\), to encode the idea that the order of magnitude of \({\bar{c}}\) is a priori unknown. Note that this prior distribution is not normalizeable, and thus it requires a regularization procedure to be used.

The ingredients above are sufficient to define the model, and using standard Bayesian inference they allow to compute the sought probability distribution for the observable. In practice, since the starting point is the perturbative expansion Eq. (2.17), to obtain a probability distribution for the full sum it is sufficient to have a probability distribution for the unknown \(c_k\) coefficients given the knowledge of the first \(n+1\) coefficients \(c_0,\ldots ,c_n\). The key ingredient is thus \(P(c_k|c_0,\ldots ,c_n)\), with \(k>n\), which can be computed as

where we have used the relation between the joint probability and the conditional probability \(P(A,B)=P(A|B)P(B)\) in the first step, introduced the hidden parameter in the second step, used again the definition of the conditional probability in the third step and finally used Eq. (2.19). The last line is written in terms of known functions (the likelihood and the prior), and can thus be easily computed. This result can be easily generalized to the joint probability of more than one unknown coefficient,

At this point one can also compute, at least formally, the probability distribution for the full sum, which is given by

Since this is an infinite-dimensional integration, it is impossible to perform it numerically and too hard to compute it analytically. Therefore, one can approximate the full sum with a truncated sum at some finite order \(n+j\) to get

which can be easily handled, at least numerically. The easiest approximation is obtained with \(j=1\), where only the first missing higher order is used to approximate the distribution, and it leads to a simple analytical expression [3].

The CH approach is a breakthrough in the context of estimating the theory uncertainty from missing higher orders, as it provides for the first time a probabilistic way to determine the uncertainty of an observable computed in perturbation theory. Note that this approach only considers the behaviour of the expansion, without using any information from the scale dependence. This is exactly the opposite of the canonical scale variation method, which is based on the scale dependence and does not use any information on the behaviour of the expansion.

Despite the nice properties of the CH approach, there are some caveats that need to be considered. The most obvious one is the assumption Eq. (2.18), that implies that the perturbative expansion is bounded by a convergent (geometric) series,

where we have assumed \(\alpha <1\) (which is consistent with the perturbative hypothesis). This is in contrast with the known fact that perturbative expansions are divergent. In a subsequent paper, Ref. [4], the CH approach has been modified to account for the divergence of the series, by modifying the condition Eq. (2.18) into

with \({\bar{b}}\) being the new hidden parameter, with the same prior as \({\bar{c}}\). This condition on the coefficients is much less stringent and compatible with the assumption that the divergence of the series is dominated by a factorial growth such as those due to renormalons. However, with this choice it is no longer possible to use Eq. (2.24) to compute the full sum, as it does not exist. Therefore only the approximation Eq. (2.25) can be considered, and typically with a low value of j otherwise the probability distribution becomes large due to the factorial growth. In Ref. [4] only \(j=1\) is considered.

The second issue is related to the fact that Eq. (2.18) does not account for a possible power growth of the coefficients. In other words, each term of the perturbative expansion is assumed to have a power scaling given just by \(\alpha \). This limitation was stressed already in the first CH paper [3], where they propose to solve it by rescaling \(\alpha \),

to obtain new coefficients \(c_k'\) that satisfy Eq. (2.18), or Eq. (2.27) in the factorial divergent hypothesis of Ref. [4]. The trouble is how to find such a rescaling factor \(\eta \). In Ref. [4], a global survey over a quite large number of observables is proposed to determine an optimal value of \(\eta \). In this survey they compare the uncertainty computed at the next-to-last known order with the actual (known) next order, to quantify how reliable the uncertainty is for each given value of the rescaling factor. Apart from the details (for which we refer the Reader to Ref. [4]), we stress that this approach assumes that the rescaling factor is the same for all the observables.Footnote 12 However, this is hardly the case, as different processes and observables are characterized by different dominant perturbative corrections.Footnote 13 Therefore, it is more appropriate to assume that the rescaling factor \(\eta \) is process and observable dependent. A different way to obtain it has been proposed in Ref. [9], where a fitting procedure is suggested to find an optimal value of \(\eta \) such that the known \(c_k\) are all of the same order. This method is observable dependent and uses only the information on the perturbative expansion to obtain the optimal rescaling. However, a fitting procedure to determine the rescaling factor clashes with the probabilistic nature of the rest of the procedure.

Finally, the CH approach or its modified versions do not deal with scale dependence. The CH machinery is applied to the perturbative expansion at a given value of the scale, and if one changes the scale the result changes accordingly. The difference in the final probability distribution at different values of the scales can be sizeable, see e.g. Ref. [5].

2.5 A working example: Higgs production at the LHC

In Sect. 7 we will consider various examples of perturbative expansions, and apply our methods to each of them. Nevertheless, in order to be clearer when discussing our new proposals, we think it is instructive to have a working example to immediately visualize how the various methods work.

The observable we choose is the inclusive cross-section for Higgs production in gluon fusion at the LHC for this purpose. This process has a number of advantages:

-

it is known up to \(\hbox {N}^3\hbox {LO}\) [44,45,46,47] (four orders in the perturbative expansion) in the so-called large top mass effective theory (this is a rarity in QCD processes at LHC, most of which are only known to NLO or NNLO);

-

it is characterized by large perturbative corrections;

-

canonical scale variation underestimates the impact of (large) higher orders;

-

its factorization scale dependence is very mild, so the whole scale dependence is basically fully captured by its renormalization scale dependence;

-

because the process starts at \({\mathcal {O}}(\alpha _s^2)\), the LO is scale dependent;

-

it is a real process and not a toy example.

On top of these reasons, the process is interesting also from a phenomenological point of view (see e.g. Ref. [48]), and indeed it was subject of several investigations of theory uncertainties from missing higher orders [4, 5, 8, 46].

Specifically, we consider LHC at \(\sqrt{s}=13\) TeV and set the Higgs mass to \(m_{H}=125\) GeV. We fix the factorization scale to \(\mu _{\scriptscriptstyle \mathrm F}=m_{H}/2\) (which is a standard choice [48]), even though changing this value has a negligible effect on the cross section, in particular at high orders. The “raw” result of the computation, which is the cross section as a function of the renormalization scale \(\mu \), is plotted in Fig. 4. Note that this process depends on a single hard scale, the Higgs mass \(m_{H}\). Therefore, it is natural to choose \(\mu \) of the order of \(m_{H}\), in order to avoid the presence of large unresummed logarithms of \(\mu /m_{H}\) in the perturbative coefficients. Nevertheless, we believe it is instructive to visualize the scale dependence for wide range of scales: the plot covers almost four decades in \(\mu /m_{H}\).

We see that for large values of the scale, where the QCD coupling is smaller, the expansion is characterized by all positive contributions and it progresses very slowly, with large perturbative corrections. Conversely, at small scales where the strong coupling blows up the expansion is highly unstable. In a “central” region, where \(\mu \sim m_{H}\), the expansion behaves in a reasonably perturbative way, even though the perturbative corrections are rather large and it is not at all clear what could possibly be the true cross section. Note also the presence of a stationary point at NNLO and of two stationary points at \(\hbox {N}^3\)LO, which could corrupt an estimate of the uncertainty based on canonical scale variation.

We stress that the full plot of Fig. 4 can be constructed from just the sequence of partial sums of the observable at the various orders at a given scale, and the knowledge of the value of the coupling at that scale. In this example, we have at \(\mu =m_{H}\) (and \(\mu _{\scriptscriptstyle \mathrm F}=m_{H}/2\))Footnote 14

where the values in curly brackets correspond to the cross section at LO, NLO, NNLO and \(\hbox {N}^3\hbox {LO}\) respectively. The way to reconstruct the cross section at any scale from these ingredients is discussed in Sect. A.1.

3 Model-independent features of the inference approach at fixed scale

In this section we start introducing our notation and present some general features of the construction of the models. For the time being we consider only the models at a fixed scale. How to obtain scale-independent probability distributions will be discussed in Sect. 6.

3.1 Basic notations

Let us denote with \(\Sigma _n(\mu )\) the partial sum of the perturbative series up to order n depending on the scale \(\mu \). If we are considering a standard perturbative expansion in powers of \(\alpha \) this is given by

where we have explicitly introduced an offset \(k_0\) for observables starting at \({\mathcal {O}}\left( \alpha ^{k_0}\right) \). This notational change with respect to the previous section allows us to be sure that the first order, \(k=0\), namely the leading order (LO), is non-zero. Note that the information contained in the coefficients \(c_k\) is fully contained in the sequence of partial sums

once the value of \(\alpha \) and of \(k_0\) are specified. From now on, we shall consider the partial sums as the basic objects, and forget about the coefficients \(c_k\) and even of \(\alpha \). In this way, the “perturbative expansion” is more general, as it does no longer necessarily need to be a strict expansion in powers of \(\alpha \). For instance, it can be a logarithmic-ordered expansion of a resummed result, or a (non-)linear transformation of the perturbative expansion. In what follows we simply assume that \(\Sigma _n\) represents the partial sum of a non-specified expansion that behaves perturbatively, defined such that the “LO” \(\Sigma _0\) is non-zero.

For a number of reasons that will become clear later, it is convenient to introduce a normalized version of the expansion, where the LO is factored out,

where we have defined the dimensionless coefficientsFootnote 15

According to this definition, \(\delta _0=1\) always, so we can also write

The coefficients \(\delta _k\) contain the information on the perturbative orders. The fact that \(\delta _0=1\) sets a common size for all perturbative expansions (useful when defining the model), and also tells us that the LO does not contain any useful information on the behaviour of the expansion and thus on the uncertainty due to missing higher orders (which is obvious, as with just the LO one cannot know how large the perturbative corrections will be). This is true even when the LO is scale dependent, because without another order to compare with one cannot know how to reliably translate the scale dependence into an information on the size of missing higher orders. Only when at least two orders, namely \(\delta _0\) and \(\delta _1\), are known one can start making inference. The only role of the LO is to set the dimension and the size of the observable through the prefactor \(\Sigma _0(\mu )\).

3.2 Definition of the scale-dependence numbers

Because the expansion is scale dependent, as discussed in Sect. 2.3 one can construct numbers \(r_k(\mu )\) that encode this dependence at each order. The importance of these numbers stems from the observation that scale dependence of a physical observable is formally of higher order, Eq. (2.15), namelyFootnote 16

The actual construction of these numbers must be such that this equation is satisfied. The simplest measure of the scale dependence is the slope of \(\Sigma _k(\mu )\) as a function of \(\mu \), or more precisely its derivative with respect to \(\log \mu \) (the logarithm is natural because the dependence on the scale is logarithmic). In order to directly compare these numbers with the \(\delta _k\) coefficients, it is convenient to normalise the derivative to the observable itself to make them dimensionless. The two most natural ways to do this are

where in the first case we have normalized to the LO \(\Sigma _0\), while in the second case we have normalized to the observable at the same order at which the scale dependence is computed. If the observable does not change much at different orders, the two options are equivalent. However, in presence of large perturbative corrections there can be a substantial difference between the two. None of them is better in an absolute sense. However, we argue that the second option has a nicer perturbative behaviour, with higher order \(r_k\) being typically smaller than lower order ones, as one would expect from Eq. (3.6).

The absolute value of the normalized slopes defined in Eq. (3.7), respectively shown in the left and right plots, for the Higgs production process

To prove this, we consider the example of Higgs production introduced in Sect. 2.5, and show in Fig. 5 the (absolute value of) the two options in Eq. (3.7). From the left plot, corresponding to the left option in Eq. (3.7), we see that at NLO (green curve) the slope is always larger than the LO one (black curve), and at the next orders there is a large variability without a precise hierarchy. Conversely, in the right plot, corresponding to the right option in Eq. (3.7), the higher order curves are smaller than the lower order ones over a wide range of scales, which is the expected perturbative behaviour. So the definition of the \(r_k\) coefficients that we propose is morally given by

where the absolute value is introduced to keep only the information on the size of the dependence but not the sign. Note that at small scales, where the perturbative expansion is unstable (Fig. 4), the expected hierarchy is violated. This is the case also in proximity of the stationary points. To avoid problems arising from stationary points, we propose to define the \(r_k\) numbers in a slightly different way. Namely, we replace the derivative with a finite difference, and look for the largest finite difference in a range around the point \(\mu \). In formulae, we haveFootnote 17

that selects the largest finite difference in the range of scales between \(\mu /f\) and \(f\mu \), where \(f>1\) is a factor to be fixed. This definition is more robust than the one based on the derivative.

The coefficients \(r_k(\mu )\) (Eq. 3.9), for \(f=2\) (left) and \(f=4\) (right), for the Higgs production process

We show this in Fig. 6, for \(f=2\) (left plot) and \(f=4\) (right plot). Increasing f allows to be less sensitive to stationary points, and indeed in the right plot the expected hierarchy is preserved over a wide range of scales. At low scales, the value of \(r_k\) at NNLO and \(\hbox {N}^3\hbox {LO}\) blows up because the finite difference probes the low-scale region where the perturbative result is unstable (Fig. 4). For larger values of f, the blow-up region obviously moves to larger scales. Therefore, the robustness gained increasing f has to be balanced with the stability loss. We believe that \(f=4\) can be considered as a reasonable compromise.Footnote 18

We stress that for an efficient computation of the \(r_k(\mu )\) coefficients a fast evaluation of the scale dependence of the observable is needed. Since the renormalization scale dependence is universal and governed by the \(\beta \)-function of the theory, it can be constructed automatically from the knowledge of the observables at the various orders at a single value of the scale. The details are reported in Appendix A.1.

We finally note that if the LO is scale independent, then \(r_0=0\). Given that we aim at using the \(r_k\) numbers to estimate the higher orders, a zero value would be inappropriate. More precisely, \(r_0=0\) means that the LO cannot be used to probe higher orders through scale variation. We can fix this by assuming that, when the LO is scale independent, we can only say that the NLO corrections may be of the order of the LO itself. Given the definition of the \(r_k\) as normalized quantities, this corresponds to assuming \(r_0={\mathcal {O}}(1)\). For definiteness, we arbitrarily set \(r_0(\mu )=1/2\) in these cases. We shall see later that this assumption is rather conservative.

3.3 General features of the models

Before discussing the actual models that we propose, we want to give some general features that are common to all of them. Let us recall that the goal of this work is to construct a probability distribution for \(\Sigma \) given the first known \(n+1\) orders \(\Sigma _0,\Sigma _1,\ldots ,\Sigma _n\), or equivalently \(\Sigma _0,\delta _1,\ldots ,\delta _n\) (using our new notation). This was given in Eq. (2.8), or, when working at fixed scale, in Eq. (2.14).

According to our assumption Eq. (2.7) that the uncertainty of a theoretical prediction based on perturbation theory is dominated by the missing higher orders, we shall compute the distribution for \(\Sigma \) through these missing higher orders, ignoring the other contributions from the asymptotic expansion truncation and the non-perturbative part. In other words, we shall approximate the observable \(\Sigma \) as

where the best approximation is obtained using \(k=k_{\mathrm{asympt}}\), namely including all the missing higher orders up to the point in which the asymptotic expansion starts to grow. Since we typically do not know a priori the value of \(k_{\mathrm{asympt}}\), the best we can do is to include a few extra orders beyond the known ones, without exaggerating in order not to risk to go beyond \(k_{\mathrm{asympt}}\).

The inference on the observable \(\Sigma \) can be obtained in terms of the more fundamental inference of the higher orders from the known ones. This was already done in Sect. 2.4, and we repeat that derivation here in more generality and with our new notation. Assuming we know the coefficients of the expansion up to order n and we approximate the observable with its expansion up to order \(n+j\) with \(j>0\), \(n+j\le k_{\mathrm{asympt}}\), the probability distribution is given by (suppressing for ease of notation the implicit dependence on the hypothesis H)

where we have used in the last step the \((n+j)\)-th order approximation

which is a direct consequence of Eq. (3.10), and the definition of \(\Sigma _n(\mu )\), Eq. (3.5).Footnote 19 If \(\Sigma \) is a positive definite observable, like a cross section, one could also impose a positivity constraint through a factor \(\theta (\Sigma )\), which however requires the computation of a normalization factor as in general the distribution is no longer normalized. The result Eq. (3.11) is written in terms of \(P(\delta _{n+j},\ldots ,\delta _{n+1}|\delta _n,\ldots ,\delta _1,\Sigma _0,\mu )\), which represents the probability of the higher orders given the known ones, and depends on the model under consideration. Note that we have also included \(\Sigma _0\) among the known information, even though it is just a prefactor: indeed \(\Sigma _0\) is required to compute the \(r_k\) numbers introduced in Sect. 3.2 that are needed in models that use information on the scale dependence.

The delta function in Eq. (3.11) can be used to perform the integral over one of the higher orders, say \(\delta _{n+j}\), which gives

The other integrations are more complicated, and should be performed numerically (unless the model is particularly simple). The simplest result is obtained when considering \(j=1\), namely when approximating the observable using just the first unknown order,

In practice, since the order \(n+j\) that approximates best the observable is not known a priori, a convenient approach to choose properly j is the following. We start considering the simplest approximation, \(j=1\) (Eq. 3.14). Then, we include the next order, namely we use \(j=2\). If the distribution changes visibly, then we further increase j by a unity, and so on until the distribution changes only mildly, up to a tolerance decided by the user.

We now turn our attention to the probability \(P(\delta _{n+j},\ldots ,\delta _{n+1}|\delta _n,\ldots ,\delta _1,\Sigma _0,\mu )\). As we said, this is model dependent. However, we can further write it in terms of more fundamental probabilities, using the relation

The numerator and the denominator are the same object, simply with a different number of \(\delta _k\) terms. In the numerator only some of them are known while the others are unknown, but mathematically this does not make any difference. The joint distribution \(P(\delta _m,\ldots ,\delta _1,\Sigma _0|\mu )\) at fixed scale is the basic object of the model that is needed to make inference on the higher orders. In all the models we will consider, we will assume that there is a number of hidden parameters characterizing the model. Denoting with \(\vec p\) the vector of such parameters, the joint distribution can be written as

where in the second line we have written explicitly the prior of these parameters, as they are hidden and thus can never be known exactly.

The most general Bayesian network of models of inference of missing higher orders (left), and a more specific one, using explicitly the scale variation numbers \(r_k\), that covers all the cases considered in this work (except the one of Sect. B.2)

To compute the joint distribution, it is sometimes useful to visualize the relation between the different objects using a Bayesian network. The most general network for any model of theory uncertainties is rather simple, and it is depicted in Fig. 7 (left) showing only the first four orders (the generalization to more than four orders is obvious). The various orders depend in general on all previous orders (but not on future ones, otherwise the model cannot be predictive), and on the hidden parameters \(\vec p\). Since \(\Sigma _0\) is the first order and it only sets the size of the observable, it does not depend on anything. In addition to this general structure, we want to consider more explicitly the role of the scale dependence numbers \(r_k\). Since these are functions of the \(\delta _k\) coefficients and \(\Sigma _0\), there is no need to specify them in the network. However, it may be useful to introduce them explicitly in order to better appreciate their role. Therefore, in the same Fig. 7 (right) we also show explicitly a network depending on these \(r_k\). The dashed arrows represent deterministic links, namely analytic relations rather than probabilistic ones, and mean that the \(r_k\) numbers are computable analytically from the various orders. Note that this network is not as general as the previous one. Indeed now we have made the assumption that each \(\delta _k\) depend only on the previous \(\delta _{k-1}\) and \(r_{k-1}\) (and on \(\vec p\)), but not on all the previous orders. This simplification is not necessary, but it will be adopted in all our models (except the one of Sect. B.2).

4 Model 1: geometric behaviour model

We now present our first model, that uses only information on the behaviour of the expansion. As such, this model is very general, and not restricted to a QFT application.

4.1 The hypothesis of the model

The first model that we consider is a generalization of the Cacciari–Houdeau model introduced in Sect. 2.4. The main difference is that our model accounts for a possible power growth of the coefficients of the expansion within the probabilistic approach. In the CH model each term of the expansion is bounded by

which is Eq. (2.18) in which we have emphasised that the power behaviour of the full \({\mathcal {O}}(\alpha ^k)\) term is entirely described by \(\alpha ^k\). As we have discussed in Sect. 2.4, this hypothesis is hardly satisfied, and a variant of the CH method in which the expansion parameter \(\alpha \) is rescaled by a factor \(\eta \) is advisable. So far, this has never been done within the context of the probabilistic model.

Now, in our new notation (Eq. 3.5), we have lost information on the coupling \(\alpha \), as the whole information is contained in the \(\delta _k\) coefficients Eq. (3.4). This was done on purpose and is to be considered as an advantage, as the expansion Eq. (3.5) is more general than a strict expansion in powers of \(\alpha \). If we want to translate the CH condition Eq. (4.1) in the new language, we are forced to introduce back an expansion parameter. Since from our point of view this is a new parameter (as we have lost information about \(\alpha \)), it is natural to consider it as a parameter of the model, rather than an external one. As such, it is not fixed to be \(\alpha \) or a fraction of it. The condition that we consider is thus

where both c and a are positive hidden parameters of the model. This condition implies that the expansion is bounded by a geometric expansion, and we thus call this model a geometric behaviour model. We have specified that this bound can only be valid for orders k smaller than \(k_{\mathrm{asympt}}\), otherwise it is certainly violated. This is anyway the only region which we are interested in, according to the discussion in Sect. 2.1.

Equation (4.2), though very similar to the CH condition Eq. (4.1), differs from it in a number of very important aspects, that we now list.

-

The fact that a is a parameter makes not only the model more general than CH, but it also allows to find, through inference, the most appropriate values (in a probabilistic sense) of the expansion parameter a compatible with the behaviour of the expansion. In other words, a can be interpreted as the rescaled expansion parameter \(\alpha /\eta \) introduced in Sect. 2.4, but with the rescaling factor \(\eta \) being determined through inference from the perturbative expansion itself, as opposed to the approaches of Refs. [4, 9].

-

The parameter c is dimensionless, as opposed to \({\bar{c}}\) which has the dimension of the observable. This is useful as we can legitimately use a universal prior for c without knowing anything about the observable.Footnote 20

-

Since the condition Eq. (4.2) is limited to \(k< k_{\mathrm{asympt}}\), the fact that the perturbative expansion is typically factorially divergent does not imply that the geometric bound is unacceptable. Of course one cannot say that the entire series is bounded by a geometric series, but a small portion of it may well be. Therefore, the condition Eq. (4.2) limited to the first few orders can be considered as perfectly acceptable.Footnote 21

The CH assumption Eq. (2.18) can be recovered from this new approach by fixing \(a=\alpha \) (or \(a=\alpha /\eta \) in the rescaled variant) and rewriting \(c={\bar{c}}/\Sigma _0(\mu )\). Note that the hidden parameters c, a depend on the scale \(\mu \). This has not been written explicitly, because in a statistical language this information is expressed by saying that c and a are correlated with \(\mu \).

In order to construct a probabilistic model to estimate theory uncertainties, we need to translate the condition Eq. (4.2) into a likelihood function. We assume the simple conditional probability

which is the straightforward extension of the CH choice, Eq. (2.20). We have considered the idea of allowing violation of the bound, by adding tails to the likelihood. However, the fact of having two hidden parameters already makes the model much more flexible than the original CH model, so adding a violation of the bound would not lead to any substantial improvement to the model stability.

In similarity with the CH model, we assume that all \(\delta _k\) are independent of each other at fixed c, a and \(\mu \), namely

which generalizes to any set of \(\delta _k\) coefficients. These conditions, together with the prior distributions for the hidden parameters that we are going to discuss, are sufficient to fully define the model. The Bayesian network of this model is a simplified version of the general one introduced in Sect. 3.3, and is depicted in Fig. 8.

Bayesian network for the geometric behaviour model. Each \(\delta _k\) depends only on the hidden parameters (and \(\mu \)) through the likelihood Eq. (4.3)

4.2 The choice of priors

The two hidden parameters c, a need a prior distribution. We assume that a priori the two variables are uncorrelated

where we have emphasised that in principle the prior can depend on the scale \(\mu \), considered to be externally given for the moment (this will change in Sect. 6).

Before proposing a functional form for the prior, let us comment on the first step of the inference. The information from the LO is encoded in \(\Sigma _0\), that appears as a prefactor in our normalized expansion (Eq. 3.5). Since the likelihood Eq. (4.3) looks at the \(\delta _k\) and not at \(\Sigma _0\), the knowledge of the LO does not change our prior: \(P(c,a|\Sigma _0,\mu )=P_0(c,a|\mu )\). While this is strictly speaking correct according to our notation, it misses a conceptual point. Indeed, it exists also a \(\delta _k\) for the LO, which is \(\delta _0\). The fact that \(\delta _0=1\) makes it a trivial variable, in the sense that it carries no information, which is the reason why it does not appear in our functions. However, from a mathematical viewpoint, it would play a role if we assume that the likelihood Eq. (4.3) is also valid for the LO. Indeed, at order zero, the likelihood becomes

This equation implies that the distribution for a is unmodified by the knowledge of the LO, while the distribution for c changes as

This result shows that the requirement that the likelihood Eq. (4.3) applies also at LO implies the constraint \(c\ge 1\). Since \(\delta _0\) is not explicitly part of our parameters, we will not perform the inference in Eq. (4.7) in our model. In other words, our prior Eq. (4.5) is to be considered as the posterior after the (trivial and universal) knowledge of \(\delta _0=1\). We will keep track of this result by constructing the prior such that the condition \(c\ge 1\) is satisfied.

At this point we are free to choose the functional form of our prior. Note that it is convenient to use simple functional forms, such that analytic computations can be performed. Let us start with the prior for c. Since we do not have any a priori knowledge on the expected size of c, only a monotonic prior is acceptable. We find it reasonable to assume a power law function

where we have included the \(\theta (c-1)\) for the reason explained above. Note that we do not include any dependence on the scale, namely for any value of \(\mu \) we use the same prior distribution. The parameter \(\epsilon \) is an arbitrary parameter, and can be chosen at will (we will discuss our favourite choices later in Sect. 4.4). We note that for \(\epsilon =1\) we obtain the form \(\theta (c-1)/c^2\), which results from a “pre-prior” (without knowledge of \(\delta _0\)) proportional to \(\theta (c)/c\), as obvious from Eq. (4.7). This is the prior used for \({\bar{c}}\) in the CH model, Eq. (2.21). Since \(\theta (c)/c\) is an improper distribution, a regularization procedure is needed in CH to perform practical computation. Rather, when including the trivial information \(\delta _0=1\) within the model, the prior is a proper distribution, and the computations are simplified. This is one advantage of using from the start the universal information \(\delta _0=1\), which is in turn a consequence of using the normalized version of the expansion (Eq. 3.5).

We stress that the choice \(\theta (c)/c\) for the “pre-prior” corresponds to a flat (and thus non-informative) distribution for the variable \(\log c\), justified in the CH work by the argument that the order of magnitude of the hidden parameter is unknown. The other natural non-informative “pre-prior” is given simply by \(\theta (c)\), namely a flat distribution in the hidden parameter c, which is again improper. This choice corresponds to the value \(\epsilon =0\) in Eq. (4.8). With \(\epsilon =0\) also the prior Eq. (4.8) is improper, and indeed in that equation we have assumed \(\epsilon \) to be strictly greater than zero. In fact, computations can be easily performed also in the \(\epsilon \rightarrow 0\) limit with just a little care. We find however that this complication is not necessary: if we wish to mimic the effect of a flat “pre-prior” in c, we can just use a very small positive value for \(\epsilon \). This will indeed be our favourite choice, see Sect. 4.4. Variations of this parameter will be explored in Sect. 4.6.

As far as the parameter a is concerned, we have to make an initial choice about the expected behaviour of the expansion. Indeed, the geometric bound is convergent (namely, at finite order, decreasing with the order) only for \(a<1\). In principle, we could allow \(a\ge 1\), which would describe a (power) divergent behaviour of the expansion.Footnote 22 However, allowing \(a\ge 1\) is in contrast with the asymptotic nature of the expansion that we are assuming, see Sect. 2.1. Therefore, we suggest to limit our interest to the region \(a<1\). We thus propose the functional form

Once again, we assume that this prior is independent of the scale \(\mu \). For \(\omega =0\), we obtain a flat distribution in the allowed region \(0\le a\le 1\) (in this case, the extreme value \(a=1\) is included), while for \(\omega >0\) we suppress the region \(a\rightarrow 1\) to favour small values of a. The actual value of \(\omega \) that we recommend will be discussed in Sect. 4.4, and its variations will be considered in Sect. 4.6.

4.3 Inference on the unknown higher orders

We have now all the ingredients to perform the inference in this model. The basic probability that we need is the conditional probability of unknown higher orders given the first n known non-trivial orders \(\delta _1,\ldots ,\delta _n\). Note that because of our assumption Eq. (4.2), only a limited number of higher orders, up to order \(k_{\mathrm{asympt}}\), can be predicted within this model. Therefore, the most generic probability distribution we need to consider, according to Eq. (3.15), is

namely the probability of the unknown orders \(n+1,\ldots ,n+j\) given the known orders \(1,\ldots ,n\). In contrast with Eq. (3.15), we have removed here the explicit dependence on \(\Sigma _0\), as it does not appear in the likelihood and thus it does not play any role in the inference procedure.

The numerator and the denominator are the same object, therefore we can focus on the distribution (at fixed scale) for a generic number m of consecutive coefficients, which is given by

which corresponds to Eq. (3.16) specialized to our case. In the first line we have introduced the hidden parameters, in the second line we have used the independence of the coefficients, Eq. (4.4), and in the third line we have explicitly written the likelihood Eq. (4.3) and used the prior independence of the hidden parameters Eq. (4.5). The integral in Eq. (4.11) can be computed analytically for our choice of priors Eq. (4.8) and Eq. (4.9). The inner integral is given by

Depending on the value of a, the max function selects a different term with a different a dependence. In order to compute the a integral analytically, it is therefore convenient to partition the integration region \(0\le a<\infty \) into a finite number of intervals, in each of which the max function returns one of its arguments. Since the arguments of the max function contain powers of a that grow with k, the intervals are ordered with k. More precisely, smaller values of a will select larger powers k, and viceversa. We can thus introduce consecutive decreasing numbers \(a_k\), representing the boundaries of these consecutive intervals, defined such that

and assuming \(a_0\equiv \infty \) and \(a_{m+1}\equiv 0\). An algorithm for extracting the various \(a_k\)’s from the knowledge of the \(\delta _k\)’s is described in Appendix A.2. The a integral is then given by

where in the last line we have used the explicit form of the prior for a (Eq. 4.9), that further restricts the integration region to \(a\le 1\). The general result of this integral can be written in terms of the incomplete Beta function. However, a simpler form is obtained if \(\omega \) is an integer. Indeed in this case

The advantage of having such a simple analytic form is that the numerical evaluation is very fast. However, nothing prevents one from making more complicated choices for the prior distributions, paying the price that the numerical integration will typically slow down the computation of the distribution.

Equation (4.15) can be used directly in Eq. (4.10) to obtain the probability distribution of the unknown higher orders. Following the derivation of Sect. 3.3 we can then construct the distribution for the observable \(\Sigma \) (Eq. 3.13). A useful property of the result is that the tails of such distributions are dominated by the first missing higher order (this is a consequence of the hierarchy in the arguments of the max function, Eq. (4.13)). Therefore, the asymptotic behaviour of the distribution is given by

4.4 The posterior of the hidden parameters

Even if the hidden parameters of the model are never part of the final distribution, it is instructive to understand how their distribution changes with the knowledge of the first few orders. The posterior distribution of c and a can be easily computed as

where the denominator is given in Eq. (4.15), and also corresponds to the integral over c and a of the numerator. It’s clear that even if in our prior c and a were uncorrelated, correlations arise from the model after inference takes place. Using the explicit form of our likelihood (Eq. 4.3), the posterior becomes

where in the second line we have also used the explicit form of our prior (Eqs. 4.8 and 4.9). The theta function cuts out the region of small c and small a, with a boundary given by a sequence of contours identified by \(ca^k=\left| \delta _k \right| \) in the region \(a_{k+1}<a<a_k\) selected by the max function, see Eq. (4.13). On the other hand, the growing negative power of both c and a tend to favour small values of c, a, thus close to this boundary. Since the power of a grows quadratically with n while that of c only linearly, inference tends to favour smaller a at the price of having somewhat larger c. A visual example of this behaviour is given in Fig. 9.

The plots show the probability distribution of the parameters c and a. The first plot (upper left) is the prior, the second (upper right) is the posterior after the knowledge of \(\delta _1\), the third (bottom left) adds the knowledge of \(\delta _2\) and the last (bottom right) the one of \(\delta _3\). The observable under consideration is the inclusive Higgs cross section, Sect. 2.5, for fixed scale \(\mu =m_{H}/2\). Each line represents the contour \(ca^k=\left| \delta _k \right| \), for increasing values of k from 1 to 3, to clarify the role of the theta function. Note that the c axis is shown in log scale, but the probability distribution is for c and not \(\log c\). For this plot, we used \(\omega =1\) and \(\epsilon =0.1\) for the prior parameters

This preference is a nice outcome of the model: inference favours small values of the parameter a that lead to a better behaviour of the expansion, with smaller higher orders. The prediction of the observable will then be more precise, namely subject to a smaller uncertainty. Of course one also (and more importantly) wants the prediction to be accurate, namely with a reliable uncertainty that does not underestimate the missing higher orders. Judging whether the outcome of the model is reliable is not immediate, and requires explicit examples to verify it. We will come back later to this point in Sects. 4.5 and 7.

Note that all the considerations so far are independent of the prior. The prior has the only role of changing the “starting point” of the inference procedure. With sufficiently many known orders, our choice of the prior will not matter. However, since the number of known orders is typically limited, choosing wisely is important. Since we like the “direction” selected by the inference procedure (it’s better to have larger c and smaller a than the opposite) it seems convenient to choose a prior distribution that already favours the same region of parameter space. This is achieved in our Eqs. (4.8) and (4.9) choosing a small value for \(\epsilon \) and a large value for \(\omega \). Note however that a large \(\omega \) suppresses the region \(a\sim 1\), while in the inference there is no such suppression, simply the small a region is enhanced by a negative power of a. Therefore, using a large value of \(\omega \) may introduce a significant bias. A good compromise, that we advocate as the best choice, is \(\omega =1\). In this way there is a preference for smaller a with only a mild suppression for \(a\sim 1\). On the other hand, for \(\epsilon \) we can choose an arbitrarily small (positive) value, for instance \(\epsilon =0.1\) or \(\epsilon =0.01\). The difference between either choice is relevant only at very low orders: in Fig. 9 only the first plot (the prior) would change visibly. We thus use \(\epsilon =0.1\) in the rest of this work, with the exception of Sect. 4.6 where we will consider variations of the prior parameters.

4.5 Representative results

Before moving further, we now present some representative results of this method. We use our working example of Higgs production to examine the distribution for the cross section. We fix the renormalization scale \(\mu =m_{H}/2\), which is the most widely used choice for this process. Of course the result of this model depends on the choice of scale made, and in addition it does not know anything about the scale dependence. So the input for this model are just 4 numbers, the values of the cross section at the chosen scale at LO, NLO, NNLO and \(\hbox {N}^3\)LO. How to deal with scale dependence in this model will be discussed in Sect. 6.

In Fig. 10 we plot the distribution for the observable \(\Sigma \) (the Higgs cross section), Eq. (3.13), using only the first missing higher order (solid lines) or the first two missing higher orders (dashed lines). The four colors correspond to different knowledge: LO (black), NLO (green), NNLO (blue) and \(\hbox {N}^3\)LO (red).

We immediately notice that the solid and dashed curves are basically identical. This implies that, for any given status of knowledge, the uncertainty in this model is dominated by the first missing higher order. This is a consequence of having \(a<1\), that implies that higher and higher orders are smaller and smaller in this model. Moreover, given that the distribution of c and a favours small values of a (Fig. 9), the impact of the next higher orders is significantly smaller than the first missing higher order. The reason why the curves are almost identical also depends on the fact that the tails of these distributions behave as a negative power (Eq. 4.16), and are thus very “long”: therefore, the effect by the next higher orders of “enlarging” the distribution is almost invisible as the tails already cover large deviations of the observable. We thus conclude that for this model it is sufficient to consider only the first missing higher order, so we can directly use Eq. (3.14) for all practical applications. This makes the implementation of this model very fast, as no numerical integration is needed.

Let us now comment on the predictions of this model. Of course, every distribution is centered on the cross section at the known order, and it is symmetric, because in our assumption Eq. (4.2) the sign of the missing higher order is treated agnostically (we will consider a possible way of taking the sign into account in Sect. B.5). We consider the four states of knowledge in turn.

-

When only the LO is known, the shape of the curves is fully determined by the prior (no inference took place yet), so the black curve is not particularly useful. Indeed, for our choice of prior, the distribution is very broad, so it is basically not predictive. Note also that the distribution is barely normalizable, because it asymptotically behaves as \(\left| \Sigma -\Sigma _0 \right| ^{-1-\epsilon }\), Eq. (4.16), with \(\epsilon \) small. Another consequence of this behaviour is that the variance of this distribution is infinite. Also, the distribution allows with a high probability (\(\sim 40\%\)) unphysical negative values of the cross section. These can be avoided imposing a positivity constraint on \(\Sigma \), but it is instructive to see how much information is needed to obtain a distribution sufficiently narrow to have negligible probability of negative cross section.

-