Abstract

While the low-energy excess observed at MiniBooNE remains unchallenged, it has become increasingly difficult to reconcile it with the results from other sterile neutrino searches and cosmology. Recently, it has been shown that non-minimal models with new particles in a hidden sector could provide a better fit to the data. As their main ingredients they require a GeV-scale \(Z'\), kinetically mixed with the photon, and an unstable heavy neutrino with a mass in the 150 MeV range that mixes with the light neutrinos. In this letter we point out that atmospheric neutrino experiments (and, in particular, IceCube/DeepCore) could probe a significant fraction of the parameter space of such models by looking for an excess of “double-bang” events at low energies, as proposed in our previous work (Coloma et al., Phys Rev Lett 119(20):201804, https://doi.org/10.1103/PhysRevLett.119.20180, 2017). Such a search would probe exactly the same production and decay mechanisms required to explain the anomaly.

1 Introduction

Since the discovery of neutrino oscillations, a tremendous effort has been made in the neutrino sector to measure the leptonic mixing parameters precisely and to test the three-neutrino oscillation paradigm. While most experimental results are perfectly consistent with oscillations among three families, several long-standing anomalies have sparked the interest of the neutrino community in light sterile neutrinos over the past two decades.

The evidence favoring the existence of an eV sterile neutrino was first reported by LSND [2], an experiment designed to measure the oscillation probability in the appearance channel \({\bar{\nu }}_\mu \rightarrow {\bar{\nu }}_e\). At the very small values of L / E considered in LSND (L being the distance to the detector, and E the neutrino energy), standard neutrino oscillations have not yet started to develop; therefore, a positive signal could indicate the existence of an extra sterile neutrino with a mass at the eV scale (oscillations become visible when \(\Delta m^2 L/E \sim 1\), so that \(L/E \sim 1\) m/MeV points to \(\Delta m^2 \sim 1~\mathrm{eV}^2\)). The LSND result was later confirmed by the MiniBooNE experiment [3]. MiniBooNE used a higher-energy neutrino beam and a longer baseline than LSND, keeping the same value of L / E in order to probe the same sterile neutrino mass scale. The collaboration reported excesses in both the \(\nu _\mu \rightarrow \nu _e\) and \({\bar{\nu }}_\mu \rightarrow {\bar{\nu }}_e\) channels, with a significance that now stands at \(4.7\sigma \) [4].

However, in spite of this impressive statistical significance, the interpretation of the data in a \(3+1\) scenario (that is, adding a sterile neutrino to the three neutrinos in the Standard Model) suffers from tension on several fronts when confronted with the results from other experiments. First, the excess observed at MiniBooNE takes place at lower energies than expected from the LSND result. Moreover, a positive result in the appearance channels should be accompanied by a signal in the disappearance channels (\(\nu _e \rightarrow \nu _e\), \({\bar{\nu }}_e \rightarrow {\bar{\nu }}_e\), and \(\nu _\mu \rightarrow \nu _\mu \), \({\bar{\nu }}_\mu \rightarrow {\bar{\nu }}_\mu \)) as well, since in a minimal sterile neutrino scenario the probabilities in the appearance and disappearance channels are related (at leading order, \(\sin ^2 2\theta _{\mu e} \simeq \tfrac{1}{4}\sin ^2 2\theta _{ee}\sin ^2 2\theta _{\mu \mu }\)). While reactor and radioactive source experiments seem to observe a \(\sim 3\sigma \) deficit of events with respect to theoretical predictions, all searches in the \(\nu _\mu \rightarrow \nu _\mu \) disappearance channel have been negative so far (for recent global fits to neutrino data see, e.g., Refs. [5,6,7,8,9]). This effectively rejects the minimal \(3+1\) sterile neutrino hypothesis at high confidence level [5, 6]. Finally, additional tensions arise from cosmological observables, both from measurements of the number of effective degrees of freedom at the time of Big-Bang Nucleosynthesis and from measurements of the sum of neutrino masses from the Cosmic Microwave Background and structure formation data [10, 11].

In view of the difficulties that the vanilla \(3+1\) hypothesis faces in explaining the MiniBooNE low-energy excess (LEE), it is worth exploring non-minimal explanations. It should be noted that, while the MiniBooNE excess appears in electromagnetic showers, the detector cannot distinguish whether these are produced by photons or by electrons. Therefore, an interesting possibility (to be tested by the MicroBooNE experiment at Fermilab [12]) is that the excess comes from photons instead. Within the Standard Model (SM), an excess of photons could arise from cross section uncertainties or an underestimated background. While a promising candidate would be single-photon production in NC interactions [13,14,15,16], it has been shown that this contribution is insufficient to fit the observed excess [17, 18]. Conversely, if the LEE is due to new physics (NP), it could be explained by the production of a heavy neutrino that decays promptly, emitting a photon [19,20,21]. Such scenarios evade the constraints from disappearance experiments, as the heavy neutrino would not be produced in oscillations but in the up-scattering of light neutrinos inside the detector. Models of this type, where the neutrino has a non-standard transition magnetic moment, fit the energy distribution observed at MiniBooNE better than a \(3+1\) hypothesis with an eV-scale sterile neutrino. However, if the heavy state is produced through photon exchange, the resulting angular distribution of events is too forward-peaked to reproduce the measurements reported by the collaboration. In addition, it is unclear whether the required value of the transition magnetic moment is allowed by other experimental constraints, see Refs. [22, 23].

More recently, variations of the models presented in Refs. [19,20,21] have been put forward, in which the heavy neutrino interacts with a new massive gauge boson (\(Z'\)) associated with an extra \(U(1)'\) symmetry [24, 25]. The new particles introduced in this case interact with the SM fermions only via mixing: the \(Z'\) is kinetically mixed with the photon, while the heavy neutrino mixes with the active neutrinos. The heavy neutrino would again be produced in the up-scattering of light neutrinos, but now the interaction would be mediated by the \(Z'\). Once produced, it would travel a macroscopic distance and decay via the new interaction, leading to an \(e^+ e^-\) pair in the final state: if the two Cherenkov rings overlap, the signal would also be misidentified as a single electron-like event at MiniBooNE. Thanks to the new massive gauge boson, the angular distributions obtained are less forward-peaked, allowing the model to fit both the energy and angular distributions observed at MiniBooNE. Moreover, besides explaining the LEE, these scenarios are also theoretically well motivated: a minimal modification of the phenomenological models proposed in Refs. [24, 26] with two hidden states may be able to generate the SM neutrino masses [26, 27] and could even accommodate a dark matter candidate [28, 29].

Given that the interactions with the SM fermions are heavily suppressed by mixing, the parameter space where dark neutrino models can explain the MiniBooNE anomaly is difficult to probe experimentally. Modifications to the \(\nu _\mu \) neutral-current (NC) scattering cross section on nucleons would take place only at the percent level, well below current experimental uncertainties [30] (for reviews, see e.g. Refs. [31, 32]). However, it has recently been pointed out that relevant constraints can be derived from \(\nu -e\) scattering data, given the electron-like nature of the signal produced in the decay of the heavy neutrino [33]. In fact, the authors of Ref. [33] have reanalyzed CHARM-II and MINERvA data, and their results disfavor the model proposed in Ref. [26] in the region of parameter space where both the MiniBooNE energy and angular distributions are successfully reproduced. However, while for the model in Ref. [26] the heavy neutrino production cross section would be coherent, for the model of Ref. [24] the incoherent contribution would dominate (due to the higher \(Z'\) mass considered), and a separate analysis would be required to derive a constraint.

In this Letter we point out that atmospheric neutrino experiments could probe a significant fraction of the allowed parameter space of this class of models and, in particular, for the model proposed in Ref. [24]. For large values of the heavy neutrino mixing we find that the model would lead to a significant excess of NC-like events, since the NP cross section would be comparable to the SM NC cross section. In addition, the heavy neutrino would be relatively long-lived and could propagate over macroscopic distances in the detector after being produced. As it decays, it may lead to a separate second shower inside Icecube/DeepCore. Thus, for small values of the active-sterile neutrino mixing a search for “double-bang” (DB) events at low energies, as proposed in our previous work [1], would lead to impressive sensitivities. Such a search would probe exactly the same production and decay mechanisms needed to explain the anomaly.

2 Model details

The model proposed in Refs. [24, 25] extends the SM gauge group with an additional \(U(1)'\) symmetry, which is broken at low energies. A priori, none of the SM fermions are assumed to be charged under the new symmetry. However, unless explicitly forbidden, the new gauge boson \(X_\mu \) associated with the hidden \(U(1)'\) symmetry will kinetically mix with the SM hypercharge boson through a term of the form [34] \(B_{\mu \nu }X^{\mu \nu }\), where B and X stand for the hypercharge and \(U(1)'\) field strength tensors. This induces couplings to the SM fermions that are suppressed by the kinetic mixing parameter \(\chi \). At first order in \(\chi \), the \(Z'\) interacts with the SM fermions through the following term in the Lagrangian:

\[ \mathcal {L} \supset e\, \chi \, c_W\, Z'_\mu \sum _f q_f\, {\bar{f}}\gamma ^\mu f, \qquad (1) \]

where \(Z'_\mu \) is the mass state associated with \(X_\mu \), \(c_W\) is the cosine of the weak mixing angle, e is the electron charge and \(q_f\) is the electric charge of the fermion f in units of e. The model also requires the addition of a fourth massive neutrino, which does not couple directly to any of the SM gauge bosons but couples directly to the \(Z'\). The neutrino flavor and mass bases are related by the usual unitary transformation \(\nu _\alpha = \sum _i U_{\alpha i} \nu _i\), where \(i =1,2,3,4\) and \(\alpha =e,\mu ,\tau ,s\) refer to the mass and flavor indices, respectively. As a result, the active neutrinos also inherit interactions with the \(Z'\), suppressed by their mixing with the sterile state:

\[ \mathcal {L} \supset g'\, Z'_\mu \, {\bar{\nu }}_s \gamma ^\mu \nu _s = g'\, Z'_\mu \sum _{i,j=1}^{4} U^*_{si} U_{sj}\, {\bar{\nu }}_i \gamma ^\mu \nu _j, \qquad (2) \]

where \(g'\) is the coupling constant between the fourth neutrino and the \(Z'\). It should be stressed that the mixing between active neutrinos and sterile neutrinos in the MeV-GeV range is tightly constrained in the \(\mu \) and e sectors by precision measurements of \(\beta \)- and meson decays in the laboratory. In the \(\tau \) sector, on the other hand, laboratory constraints are much weaker, due to the intrinsic difficulty of producing mesons with large branching ratios into \(\nu _\tau \). In the region of interest (\(m_4 \sim 150\) MeV), the most relevant constraints come from CHARM [35] and NOMAD [36] data (see e.g. Refs. [37, 38] for a compilation of bounds). However, since in the model considered here the heavy neutrino decays promptly (so that most heavy neutrinos would decay before reaching the detectors of these experiments), these bounds would be considerably relaxed, and values as large as \(|U_{\tau 4}|^2 \sim 10^{-3}\) are still allowed [24]. Finally, while astrophysical constraints may in principle be relevant for this type of model (see e.g. Ref. [39]), they are avoided in the region of parameter space considered in Ref. [24].

At MiniBooNE an intense neutrino flux is produced from meson decays, resulting in a neutrino energy distribution that peaks at around 500 MeV. The beam composition is primarily \(\nu _\mu \) in neutrino mode, and \({\bar{\nu }}_\mu \) in anti-neutrino mode. The requirements that the model should satisfy in order to fit the MiniBooNE LEE are summarized as follows (we refer the interested reader to Ref. [24] for further details):

1. The NP should induce an up-scattering cross section for \(\nu _\mu \) into the heavy state of \( \sigma _{\mu }^{Z'} \sim 0.01 \sigma _{SM}\) (in the QE regime), where \(\sigma _{SM}\) is the SM NC cross section. This effectively constrains the combination \(g'^2 \chi ^2 |U_{\mu 4}|^2/m_{Z'}^4\), and favors low \(Z'\) masses (at the GeV scale) in order to reach large enough cross sections.

2. The masses of the new particles introduced in the model control the energy and angular distributions of the events. In particular, the heavy neutrino mass should lie between 100 and 200 MeV in order to fit the observed energy distribution. The angular distribution, on the other hand, is sensitive to the \(Z'\) mass. For the model considered in Ref. [24] (where \(m_{Z'} > m_4\)), \(Z'\) masses below a GeV are disfavored by the fit.

3. The heavy neutrino should decay inside the MiniBooNE detector, which requires a decay length in the lab frame \(L_{lab}^{mboone}\lesssim {\mathcal {O}}(1)~\mathrm {m}\). For a heavy neutrino with mass \(m_4\sim 150~\mathrm {MeV}\) and energies around 500 MeV, this implies a lifetime \(c\tau \sim 0.3\) m. This is achieved by setting \(|U_{\tau 4}|^2 \sim 8\times 10^{-4}\).

4. In addition, the decay \(\nu _4 \rightarrow \nu _\alpha e^+ e^-\) should be the dominant channel. Since \( |U_{\tau 4}|^2 \gg |U_{\mu 4}|^2, |U_{e4}|^2\), the decay takes place predominantly into \(\nu _\tau e^+ e^-\).
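As a quick numerical check of item 3, the lab-frame decay length follows from the rest-frame lifetime through the boost, \(L_{lab} = c\tau \,\beta \gamma = c\tau \,|p|/m_4\). The Python sketch below (the helper names `lab_decay_length` and `required_ctau` are our own and purely illustrative) evaluates it for the MiniBooNE-like values quoted above:

```python
import math


def lab_decay_length(ctau_m: float, mass_gev: float, energy_gev: float) -> float:
    """Boosted decay length L_lab = c*tau * (beta*gamma) = c*tau * |p| / m, in metres."""
    if energy_gev <= mass_gev:
        raise ValueError("energy must exceed the rest mass")
    momentum_gev = math.sqrt(energy_gev**2 - mass_gev**2)
    return ctau_m * momentum_gev / mass_gev


def required_ctau(target_length_m: float, mass_gev: float, energy_gev: float) -> float:
    """Rest-frame c*tau (metres) giving a chosen lab-frame decay length."""
    return target_length_m / lab_decay_length(1.0, mass_gev, energy_gev)


# MiniBooNE-like kinematics from item 3: m_4 ~ 150 MeV, E ~ 500 MeV
print(f"L_lab for c*tau = 0.3 m: {lab_decay_length(0.3, 0.150, 0.500):.2f} m")
print(f"c*tau for L_lab = 1 m:   {required_ctau(1.0, 0.150, 0.500):.2f} m")
```

With \(c\tau = 0.3\) m this gives \(L_{lab}\approx 0.95\) m, consistent with the \({\mathcal {O}}(1)\) m requirement.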

3 Heavy neutrino production in atmospheric neutrino experiments

Atmospheric neutrinos are produced in the decays of mesons generated when cosmic rays hit the upper layers of the atmosphere. While the resulting flux is composed primarily of \(\nu _\mu \) and \({\bar{\nu }}_\mu \) (from pion and kaon decays), standard neutrino oscillations in the \(\nu _\mu \rightarrow \nu _\tau \) channel provide a sizable \(\nu _\tau \) contribution to the flux for neutrino trajectories crossing the Earth.
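To see roughly where the oscillated \(\nu _\tau \) flux peaks, one can use the two-flavour vacuum approximation \(P_{\mu \tau } \simeq \sin ^2 2\theta _{23}\sin ^2(1.267\,\Delta m^2_{31} L/E)\), with L in km, E in GeV and \(\Delta m^2_{31}\) in eV\(^2\). The sketch below assumes \(\Delta m^2_{31} = 2.5\times 10^{-3}\) eV\(^2\), maximal mixing and a baseline equal to the Earth's diameter (\(\cos \theta = -1\)), and ignores matter effects; these are illustrative inputs, not the full three-flavour calculation with the PREM profile used later in the text:

```python
import math

# Illustrative inputs (assumptions, not taken from the text):
DM2_31_EV2 = 2.5e-3          # |Delta m^2_31| in eV^2
SIN2_2THETA23 = 1.0          # maximal atmospheric mixing
EARTH_DIAMETER_KM = 12742.0  # baseline for cos(theta) = -1


def p_mu_tau(energy_gev: float, baseline_km: float = EARTH_DIAMETER_KM) -> float:
    """Two-flavour vacuum nu_mu -> nu_tau probability (no matter effects)."""
    phase = 1.267 * DM2_31_EV2 * baseline_km / energy_gev
    return SIN2_2THETA23 * math.sin(phase) ** 2


def first_maximum_energy(baseline_km: float = EARTH_DIAMETER_KM) -> float:
    """Highest energy at which the oscillation phase equals pi/2."""
    return 1.267 * DM2_31_EV2 * baseline_km / (math.pi / 2)


e_peak = first_maximum_energy()
print(f"first maximum at E ~ {e_peak:.1f} GeV, P_mu_tau = {p_mu_tau(e_peak):.3f}")
```

For these inputs the first oscillation maximum for core-crossing trajectories sits at roughly 25 GeV.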

To estimate the expected number of heavy neutrinos produced in atmospheric neutrino detectors, it is useful to compare the expected size of the NP cross section with the SM NC cross section. Within the parton model, the double differential cross section for the up-scattering in a neutrino-nucleon interaction reads:

where M is the proton mass, \(E_\nu \) is the initial neutrino energy, x is the fraction of the nucleon momentum carried by the parton and \(y\equiv \nu /E_\nu \), with \(\nu =E_\nu - E_N\) being the energy transferred in the interaction and \(E_N\) the energy of the heavy neutrino in the final state. Here, \({\mathcal {F}}(x) = x\sum _{q}(f_q(x) + f_{{{\bar{q}}}}(x))\) contains the dependence on the parton distribution functions (PDFs) of the proton (\(f_{q,{{\bar{q}}}}\)). In deriving Eq. (3), any effects due to the mass of the heavy neutrino in the final state have been neglected (since we are mainly interested in the mass range below 200 MeV), and an isoscalar target nucleus has been assumed. Moreover, we have introduced an effective coupling constant \(G_\alpha '\) (analogous to the Fermi constant in the SM, \(G_F\)) which depends on the flavor of the incident light neutrino, \(\alpha \):

As seen from Eq. (3), the comparison to the SM cross section cannot be performed in a straightforward manner due to the different chirality of the currents involved (left-handed in the SM, as opposed to vector currents in the \(Z'\) case). However, considering that the final event sample contains contributions from both neutrino and antineutrino fluxes (with similar intensities) interacting both on protons and neutrons (for an isoscalar target), the number of heavy neutrinos produced \(N_{\alpha }^{Z'}\) due to the up-scattering process \(\nu _\alpha {\mathcal {N}} \rightarrow \nu _4 {\mathcal {X}}\) (where \({\mathcal {N}}\) is a nucleon and \({\mathcal {X}}\) is the final state hadron(s)) can be taken as approximately proportional to the number of \(\nu _\alpha \)-nucleon NC events in the SM, \(N_{\alpha }^{Z'} \simeq \epsilon _\alpha N^{Z}_{\alpha }\). The proportionality constant reads

with \(g_{L,f} = T_{3,f} - q_f\sin ^2\theta _w\). Here, \(T_{3,f}\) is the weak isospin of fermion f, \(\langle Q^2 \rangle \) should be taken as the typical value of the squared momentum transfer involved in the interaction, and we have neglected \(g_{R,q}\) (since \(g_{L,q}^2 \gg g_{R,q}^2 \)). Note that the energy transfer \(\nu \) and the squared invariant mass of the hadronic system \(W^2\) are related to \(Q^2\) through \( Q^2 = 2M\nu + M^2 - W^2\).

An estimate of the number of events can be obtained for the benchmark values given in Ref. [24] that provide a best fit to the MiniBooNE anomaly: \(|U_{\tau 4}|^2 = 7.8\times 10^{-4}\), \(|U_{\mu 4}|^2 =1.5\times 10^{-6}\), \(\chi ^2=5\times 10^{-6}\), \(g'=1\) and \( m_{Z'}=1.25\) GeV. For DIS, assuming \(\langle Q^2 \rangle \sim {\mathcal {O}}(5)\,\mathrm {GeV}^2\), this leads to \(\epsilon _\tau \sim 0.5\) and \(\epsilon _\mu \sim 10^{-3}\) (or, more generally, \(\epsilon _\alpha \sim 6\times 10^{2} |U_{\alpha 4}|^2 \)). From this naive estimate, it is easy to see that a large number of events \(N_{\tau }^{Z'}\), indistinguishable from SM NC events, would be expected at atmospheric neutrino experiments, due to the relatively large value of \(|U_{\tau 4}|\) required to fit the LEE. A search for an excess of cascade events was proposed in Ref. [40] to test the model from Ref. [21], and a similar search could also be performed in this case. However, while in Ref. [40] the excess would take place for down-going events, for the model considered in this work the excess would appear in the up-going sample, where the \(\nu _\tau \) flux generated by oscillations along trajectories crossing the Earth is sizable. A search of this sort naively presents several advantages with respect to a DB search. First, for the benchmark parameters considered the signal may be much larger than for DB events. Second, while observing and identifying two separate showers may be experimentally challenging, the observation of NC-like events is an easier task for most experiments. However, this signal would be subject to much larger backgrounds, coming not only from standard neutrino NC events but also from \(\nu _e\) and \(\nu _\tau \) CC interactions in the detector.
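The scaling quoted above can be evaluated directly. The sketch below uses only the coefficient \(6\times 10^{2}\) given in the text; the optional `epsilon_rescaled` helper is our own assumption that, at fixed \(\langle Q^2\rangle \), \(\epsilon _\alpha \) scales as \(g'^2\chi ^2|U_{\alpha 4}|^2/(\langle Q^2\rangle + m_{Z'}^2)^2\) (the square of a massive-propagator amplitude), and is not taken from Eq. (5):

```python
# Coefficient quoted in the text for DIS with <Q^2> ~ 5 GeV^2 and the benchmark
# g' = 1, chi^2 = 5e-6, m_Z' = 1.25 GeV:  epsilon_alpha ~ 6e2 * |U_alpha4|^2
EPS_COEFF = 6e2
REF = {"g": 1.0, "chi2": 5e-6, "m_zp": 1.25, "q2": 5.0}


def epsilon(u_alpha4_sq: float) -> float:
    """Naive ratio of Z'-mediated up-scattering to SM NC events (benchmark couplings)."""
    return EPS_COEFF * u_alpha4_sq


def epsilon_rescaled(u_alpha4_sq, g=1.0, chi2=5e-6, m_zp=1.25, q2=5.0):
    """ASSUMPTION: eps proportional to g'^2 chi^2 |U|^2 / (<Q^2> + m_Z'^2)^2."""
    def strength(g_, chi2_, m_, q2_):
        return g_**2 * chi2_ / (q2_ + m_**2) ** 2

    ratio = strength(g, chi2, m_zp, q2) / strength(
        REF["g"], REF["chi2"], REF["m_zp"], REF["q2"]
    )
    return epsilon(u_alpha4_sq) * ratio


for flavour, u2 in {"tau": 7.8e-4, "mu": 1.5e-6}.items():
    print(flavour, f"{epsilon(u2):.1e}")
```

This reproduces \(\epsilon _\tau \approx 0.47\) and \(\epsilon _\mu \approx 9\times 10^{-4}\) for the benchmark.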

Similarly, a search for an excess of NC-like events at the T2K experiment may also be able to constrain the minimal realization of the model from Ref. [24], since by the time the neutrino beam reaches the far detector most of the muon neutrinos have already oscillated into tau neutrinos. One way to avoid this would be to considerably reduce \(|U_{\alpha 4}|^2\), in order to bring the number of NP events below experimental uncertainties (see footnote 1). In this case, a DB search at Icecube/DeepCore would still yield impressive sensitivities, as shown in the next sections.

4 Icecube/DeepCore Double-Bangs to test the anomaly

The IceCube South Pole neutrino telescope contains over 5000 Digital Optical Modules (DOMs) deployed between 1450 and 2450 m below the ice surface. When high-energy charged particles travel through the ice, they emit Cherenkov radiation that is then detected by the DOMs. At Icecube, two event topologies are distinguished: track events, produced by muons, and cascades (or showers), produced by other charged particles such as electrons or hadrons. The inner core of Icecube, approximately 2100 m below the surface cap, is called DeepCore. With a DOM density roughly five times higher than that of the standard IceCube array, DeepCore can observe showers with much lower energies than Icecube (down to \(E \sim 5.6\) GeV).

At Icecube, DB events are a standard signature of \(\tau \) leptons at ultra-high energies, where the boosted decay length of the \(\tau \) is long enough to resolve the two showers from its production and decay vertices. In this work, however, we consider much lower energies, as proposed in our previous work [1]. In this context, a DB event is defined as an event that satisfies the following conditions: (a) it should lead to two distinct showers separated by a macroscopic distance of at least 20 m; (b) each of the showers should have a minimum energy of 5.6 GeV in order to be observed at the DOMs; and (c) the first shower should be observed by a minimum of three (or four) DOMs if it takes place inside (outside) DeepCore, in order to trigger the detector. Allowing events to trigger the detector outside the DeepCore volume may lead to additional backgrounds from atmospheric muons. We expect a sufficient reduction of this background by requiring that the two showers lie on a straight line and that the event is up-going, which should be achievable given the very good timing resolution of the DOMs [41]. At low energies and in the up-going direction, the most important background that could mimic a DB would be coincident showers from atmospheric neutrino events (estimated at 0.05 events/yr, see Ref. [1] for details). However, a careful computation of the background levels for this search should be carried out within the experimental collaboration.
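The DB selection (a) to (c) amounts to a simple per-event predicate. A minimal Python sketch, assuming hypothetical reconstructed event fields (shower separation, shower energies, number of DOMs hit, and whether the first shower is inside DeepCore); it does not implement the straight-line and up-going requirements, nor any detector response:

```python
from dataclasses import dataclass

MIN_SEPARATION_M = 20.0      # condition (a): shower separation
MIN_SHOWER_ENERGY_GEV = 5.6  # condition (b): each shower must be visible


@dataclass
class CandidateEvent:
    """Hypothetical reconstructed quantities for a two-shower candidate."""
    separation_m: float
    first_shower_gev: float
    second_shower_gev: float
    doms_hit_first: int
    first_in_deepcore: bool


def passes_double_bang(ev: CandidateEvent) -> bool:
    """Apply conditions (a)-(c); alignment and direction cuts are not included."""
    min_doms = 3 if ev.first_in_deepcore else 4  # condition (c): trigger
    return (
        ev.separation_m >= MIN_SEPARATION_M
        and ev.first_shower_gev >= MIN_SHOWER_ENERGY_GEV
        and ev.second_shower_gev >= MIN_SHOWER_ENERGY_GEV
        and ev.doms_hit_first >= min_doms
    )


# Example: 45 m apart, both showers above threshold, 3 DOMs hit inside DeepCore
print(passes_double_bang(CandidateEvent(45.0, 8.0, 12.0, 3, True)))  # True
```

The same event would fail if its first shower were outside DeepCore, since condition (c) then asks for four DOMs.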

From a model-independent perspective, the DB event rate at Icecube depends solely on two physics observables: the value of the production cross section inside the detector, which determines the number of heavy neutrinos produced in the interactions of atmospheric neutrinos; and the decay length of the heavy neutrino in the lab frame, \(L_{lab}\), which determines whether the decay will occur inside the detector. Of course, \(L_{lab}\) ultimately depends on the heavy neutrino lifetime \(c\tau \), its mass \(m_N\) and its energy \(E_N\), as \(L_{lab} = c\tau \sqrt{E_N^2 - m_N^2}/m_N\). The total number of DB events per unit time, where the production vertex takes place in the up-scattering of \(\nu _\alpha \), can be expressed as:

where \(\rho _{n}\) is the average nucleon density in the ice, \(c_\theta \) is the cosine of the zenith angle \(\theta \), \(\phi _\mu \) is the atmospheric \(\nu _\mu \) (or \({\bar{\nu }}_\mu \)) flux, \(\sigma _{\alpha }^{Z'}\) is the cross section for the production of the heavy (anti)neutrino in \(\nu _\alpha \) (\({\bar{\nu }}_\alpha \))-nucleon interactions, \(P_{\mu \alpha }\) is the standard oscillation probability in the \(\nu _\mu \rightarrow \nu _\alpha \) (\({\bar{\nu }}_\mu \rightarrow {\bar{\nu }}_\alpha \)) channel, and \(V_{det}\) is the effective volume of the detector, which depends on the heavy neutrino decay length in the lab frame and on the zenith angle.
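For orientation, one schematic way to assemble these ingredients is written out below. This is our own sketch, not necessarily identical to Eq. (6): it assumes an azimuthally symmetric flux given per unit solid angle (hence the factor \(2\pi \)), up-going trajectories only, a visible branching ratio \(\mathrm {BR}_{vis}\) for the second shower, and a separate but analogous term for antineutrinos:

```latex
\frac{dN_\alpha^{DB}}{dt} \simeq 2\pi\, \rho_n\, \mathrm{BR}_{vis}
  \int_{-1}^{0} dc_\theta \int dE_\nu\, \phi_\mu(E_\nu, c_\theta)\, P_{\mu\alpha}(E_\nu, c_\theta)
  \int dE_N\, \frac{d\sigma_\alpha^{Z'}}{dE_N}(E_\nu, E_N)\,
  V_{det}\big(L_{lab}(E_N), c_\theta\big),
\qquad
L_{lab}(E_N) = c\tau\, \frac{\sqrt{E_N^2 - m_N^2}}{m_N}
```

With \(\rho _n\) in cm\(^{-3}\), \(\phi _\mu \) in cm\(^{-2}\) s\(^{-1}\) sr\(^{-1}\) GeV\(^{-1}\), \(\sigma \) in cm\(^2\) and \(V_{det}\) in cm\(^3\), the result is a rate in s\(^{-1}\).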

The effective volume of the detector was computed in Ref. [1] via Monte Carlo integration, where it was found to be maximal for decay lengths in the lab frame of around \(L_{lab} \sim 100\) m. For smaller decay lengths, the effective volume decreases since the density of the DOM grid is too low to be able to distinguish the two showers. For much longer decay lengths, on the other hand, it decreases roughly as \(1/L_{lab}\) as the neutrinos will typically exit the detector before decaying. The effective volume also depends on the zenith angle, as expected from geometrical arguments, and is maximized for trajectories crossing the Earth’s core (\(\cos \theta \simeq -1\)), where we expect the transition probability \(\nu _\mu \rightarrow \nu _\tau \) to be maximal for energies around \(E_\nu \sim 25~\,\text {GeV}\). Coincidentally, at these energies, for \(m_4=150\) MeV and \(c\tau \sim 0.3~\mathrm {m}\) we find a boosted decay length of 50 m, precisely in the region where we expect the effective volume of the detector to be close to maximal. As a reference value, for \(L_{lab}\sim 50~\mathrm {m}\) and \(\cos \theta =-1\) we find \(V_{det}\simeq 0.05~\mathrm {km}^3\). This is larger than the DeepCore volume, since we allow events to trigger the detector outside the DeepCore volume if at least four DOMs are hit simultaneously. We have checked that if we additionally require that the first event takes place inside DeepCore we recover its volume, as expected.
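The qualitative shape of the effective volume (suppressed at small \(L_{lab}\), roughly \(\propto 1/L_{lab}\) at large \(L_{lab}\)) can be reproduced with a deliberately crude toy model: a cube of side 1 km crossed by vertical tracks, a minimum shower separation of 20 m, and an exponentially distributed decay distance. Everything about this geometry is an assumption for illustration; it ignores the DOM grid and trigger conditions, so it does not reproduce the \(0.05~\mathrm {km}^3\) quoted above. The analytic result is cross-checked with a Monte Carlo estimate:

```python
import math
import random


def toy_veff(decay_length_m, side_m=1000.0, min_sep_m=20.0):
    """Analytic effective volume (m^3) of a toy cube for vertical tracks.

    Production points are uniform in the cube; the decay distance d is
    exponential with mean L_lab. A DB is kept if d >= min_sep_m and the
    decay point stays inside the cube.
    """
    L, a, s = decay_length_m, side_m, min_sep_m
    per_unit_area = (a - s) * math.exp(-s / L) - L * (math.exp(-s / L) - math.exp(-a / L))
    return a * a * per_unit_area


def toy_veff_mc(decay_length_m, side_m=1000.0, min_sep_m=20.0, n=100_000, seed=1):
    """Monte Carlo estimate of the same quantity, as a cross-check."""
    rng = random.Random(seed)
    accepted = 0
    for _ in range(n):
        z = rng.uniform(0.0, side_m)  # production point along the track
        d = rng.expovariate(1.0 / decay_length_m)  # decay distance, mean L_lab
        if d >= min_sep_m and z + d <= side_m:
            accepted += 1
    return side_m**3 * accepted / n


for L in (10, 50, 130, 500, 2000):
    print(f"L_lab = {L:5d} m  ->  V_eff ~ {toy_veff(L) / 1e9:.3f} km^3")
```

In this toy the maximum falls at \(L_{lab}\) of order 100 m, and the large-\(L_{lab}\) tail approaches \(a^2(a-s)^2/(2L_{lab})\), where \(a\) is the cube side and \(s\) the minimum separation.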

5 Numerical results

Our exact numerical calculation agrees reasonably well with the naive estimate outlined above. The number of DB events has been computed following Eq. (6). The cross section has been computed as outlined in Eq. (3), with the CTEQ6.6 set of parton distribution functions [42, 43], using the Parton package [44] for their numerical evaluation in Python3. For simplicity, only the contributions from \(u, {{\bar{u}}}, d, {{\bar{d}}}\) quarks have been considered, which is expected to be a good approximation within the range of momentum transfer considered. In order to ensure that both showers are above 5.6 GeV and that the interaction falls in the DIS regime, the following cuts are imposed: \(5.6~\text {GeV} \le \nu \le E_\nu - 5.6~\text {GeV}\), \(Q^2 \ge 4~\hbox {GeV}^2\), and \(W^2 > 1.5~\hbox {GeV}^2\). Neutrino oscillation probabilities are computed using the GLoBES package [45, 46], dividing the Earth matter density profile into 20 layers, each with a constant density according to the PREM model [47, 48]. The oscillation parameters are set to their best-fit values from a global analysis of neutrino oscillation data [49, 50]. The atmospheric neutrino and antineutrino fluxes have been computed for zenith angles in the range \(-1 \le \cos \theta \le 0\) using the MCEq module [51, 52] for Python2, with the SIBYLL-2.3 hadronic interaction model [53], the Hillas-Gaisser cosmic-ray model [54] and the NRLMSISE-00 atmospheric model [55]. Finally, the effective volume of the detector is computed using Monte Carlo integration, as in Ref. [1].
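The kinematic cuts listed above can be expressed as a predicate on \((E_\nu , x, y)\). The sketch below assumes leading-order kinematics in which the parton momentum fraction coincides with Bjorken \(x = Q^2/(2M\nu )\), and uses the relation \(Q^2 = 2M\nu + M^2 - W^2\) from Sect. 3; in a numerical integration of Eq. (3) it would act as a step function multiplying the integrand. The function names are illustrative:

```python
PROTON_MASS_GEV = 0.938272  # M, the proton mass
E_SHOWER_MIN_GEV = 5.6      # minimum visible shower energy


def dis_kinematics(e_nu, x, y, m=PROTON_MASS_GEV):
    """Return (nu, Q^2, W^2) for neutrino energy e_nu, Bjorken x and inelasticity y."""
    nu = y * e_nu                   # energy transfer, nu = E_nu - E_N
    q2 = 2.0 * m * x * nu           # Q^2 = 2 M x nu
    w2 = m * m + 2.0 * m * nu - q2  # from Q^2 = 2 M nu + M^2 - W^2
    return nu, q2, w2


def passes_cuts(e_nu, x, y):
    """Apply the cuts quoted in the text to one (E_nu, x, y) point."""
    nu, q2, w2 = dis_kinematics(e_nu, x, y)
    return (E_SHOWER_MIN_GEV <= nu <= e_nu - E_SHOWER_MIN_GEV) and q2 >= 4.0 and w2 > 1.5


print(passes_cuts(25.0, 0.2, 0.5))  # True: nu = 12.5 GeV, Q^2 ~ 4.7 GeV^2
```

Points with too little energy transfer (\(\nu < 5.6\) GeV), too little left for the heavy neutrino, small \(Q^2\), or \(W^2\) near the resonance region are rejected.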

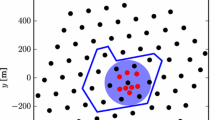

Fig. 1 Expected number of DB events per year at Icecube/DeepCore. The left panel shows the result for \(\nu _\tau \) up-scattering into the heavy neutrino, while the right panel considers \(\nu _\mu \) up-scattering. The black star indicates a representative point in the parameter space for the model of Ref. [24], corresponding to \(m_4=140\) MeV and \(c\tau \sim 0.3~\mathrm {m}\). Note that the benchmark values required to fit the MiniBooNE LEE imply \(\epsilon _\tau \sim 0.5\) and \(\epsilon _\mu \sim 10^{-3}\) (assuming \(\langle Q^2\rangle \sim 5\,\mathrm {GeV}^2\), see Eq. (5)).

Our main result is given in Fig. 1, where the colored bands indicate the regions of parameter space where the expected number of signal events per year would exceed a certain number, as indicated by the legend. While the number of events has been computed exactly following the procedure described above, our results are given as a function of \(\epsilon _\alpha \) and the branching ratio of the heavy neutrino decay into visible particles, \(\mathrm {BR}_{vis}\). This way, our results can easily be adapted to non-minimal versions of this model by simply multiplying by the corresponding value of the product \(\epsilon _\alpha \mathrm {BR}_{vis}\). Note that, while at MiniBooNE the up-scattering would take place exclusively in \(\nu _\mu \) interactions, at Icecube/DeepCore both \(\nu _\mu \) and \(\nu _\tau \) fluxes are available and may up-scatter into \(\nu _4\). However, since the expected \(\nu _\mu \) and \(\nu _\tau \) fluxes will be very different due to standard neutrino oscillations, our results are provided for the two cases separately.

Given the low background level expected in the SM for this type of signal [1], even a handful of events may be enough to reject the background-only hypothesis at high confidence. Therefore, if the heavy neutrino is mixed with \(\nu _\tau \), we find that a DB search may be sensitive to models with values as low as \(\epsilon _\alpha \sim {\mathcal {O}}(10^{-3})\), as long as the lifetime and mass of the heavy neutrino fall inside the region \(5 \lesssim \frac{c\tau }{\mathrm {m}}\frac{\,\text {GeV}}{m_4} \lesssim 10\). It should be noted that \(\nu _\tau \) up-scattering takes place at \( E_\nu \sim 25\) GeV (where the oscillation probability \(\nu _\mu \rightarrow \nu _\tau \) is close to 1), while for \(\nu _\mu \) up-scattering the heavy neutrino production typically takes place at energies above 30 GeV (where the flux does not oscillate). Therefore, the higher neutrino energies in the \(\nu _\mu \) case lead to maximal sensitivity at lower values of \(c\tau \) in the right panel, since the larger boost compensates for the shorter lifetime in \(L_{lab}\). Finally, in Fig. 1 the black star indicates the benchmark point from Ref. [24], which provides a best fit to the MiniBooNE LEE. Assuming \(\mathrm {BR}_{vis}\sim {\mathcal {O}}(1)\), we obtain for these benchmark values approximately \(10^3\) DB events/yr for \(\nu _\tau \) up-scattering and 6 DB events/yr for \(\nu _\mu \) up-scattering.
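Because the results in Fig. 1 scale linearly with \(\epsilon _\alpha \mathrm {BR}_{vis}\) at fixed \(m_4\) and \(c\tau \), the two benchmark rates quoted above can be turned into a rough rescaling rule. The sketch below is valid only at the benchmark \((m_4, c\tau )\) point, since the rate per unit \(\epsilon _\alpha \mathrm {BR}_{vis}\) changes across the plane through \(V_{det}\); elsewhere the normalization has to be read off Fig. 1. The helper names are hypothetical:

```python
# Benchmark DB rates quoted in the text (BR_vis ~ 1), at the benchmark m_4 and c*tau:
#   nu_tau up-scattering: ~1e3 events/yr at eps_tau ~ 0.5
#   nu_mu  up-scattering: ~6 events/yr   at eps_mu  ~ 1e-3
BENCHMARK = {"tau": (1.0e3, 0.5), "mu": (6.0, 1.0e-3)}


def rate_per_unit_eps(flavour):
    """DB events/yr per unit of eps_alpha * BR_vis at the benchmark (m_4, c*tau)."""
    events, eps = BENCHMARK[flavour]
    return events / eps


def expected_events(flavour, eps, br_vis=1.0, years=1.0):
    """Linear rescaling: N proportional to eps_alpha * BR_vis at fixed m_4 and c*tau."""
    return rate_per_unit_eps(flavour) * eps * br_vis * years


def min_eps(flavour, n_target, br_vis=1.0, years=1.0):
    """Smallest eps_alpha giving n_target DB events for the given exposure."""
    return n_target / (rate_per_unit_eps(flavour) * br_vis * years)


print(f"{min_eps('tau', n_target=3):.1e}")  # eps_tau needed for ~3 events in one year
```

For instance, about three \(\nu _\tau \)-induced events per year would require \(\epsilon _\tau \mathrm {BR}_{vis} \approx 1.5\times 10^{-3}\) at this point, in line with the \({\mathcal {O}}(10^{-3})\) reach quoted above.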

6 Conclusions

In this Letter we have shown that the minimal model of Ref. [24], proposed to explain the MiniBooNE anomaly, would lead to a cross section for \(\nu _\tau \) up-scattering into the heavy state comparable to the SM NC cross section. Consequently, this would yield a significant excess of NC-like events with respect to the SM prediction, observable in the up-going sample at atmospheric neutrino experiments. Modified versions of this model, however, may be able to avoid such large values of \(U_{\tau 4}\). We have shown that in this case Icecube/DeepCore could search for an excess of DB events [1], for heavy neutrino production cross sections as low as the per-mille level of the SM NC cross section. However, while the assumptions made in our computation of the DIS cross section have generally been conservative, a careful study of the actual sensitivity to this signal is needed. This should eventually be carried out by the experimental collaboration, in order to include detection efficiencies and a proper Monte Carlo simulation of the expected background rates.

As a final remark it is also worth pointing out that, even though this work is mainly motivated by the model proposed in Ref. [24], our result is more general. In particular, further modifications of the model could avoid the correlation between production and decay processes (either completely or partially), since this eventually depends on its particle content and on the mixing between the heavy and SM neutrinos. Following a completely model-independent approach, in our numerical analysis we have precisely assumed that the production and decay mechanisms may be completely decoupled. Of course, once a particular model is specified, the available region of parameter space will be limited to a subset of the full region shown in Fig. 1, depending on how \(\sigma \), \(m_4\) and \(c\tau \) relate to one another.

Data Availability Statement

This manuscript has no associated data or the data will not be deposited. [Authors’ comment: Data sharing is not applicable to this article, as no datasets were generated or analysed during this study.]

Notes

It should be stressed that, in the minimal model considered in Ref. [24], this would lead to a value of \(c\tau \) too large to explain the MiniBooNE LEE. Nevertheless, it might be possible to work around this in non-minimal variations of the model.

References

P. Coloma, P.A.N. Machado, I. Martinez-Soler, I.M. Shoemaker, Phys. Rev. Lett. 119(20), 201804 (2017). https://doi.org/10.1103/PhysRevLett.119.201804

A. Aguilar-Arevalo et al., Phys. Rev. D 64, 112007 (2001). https://doi.org/10.1103/PhysRevD.64.112007

A.A. Aguilar-Arevalo et al., Phys. Rev. Lett. 105, 181801 (2010). https://doi.org/10.1103/PhysRevLett.105.181801

A.A. Aguilar-Arevalo et al., Phys. Rev. Lett. 121(22), 221801 (2018). https://doi.org/10.1103/PhysRevLett.121.221801

C. Giunti, T. Lasserre, Annu. Rev. Nucl. Part. Sci. 69, (2019). https://doi.org/10.1146/annurev-nucl-101918-023755

M. Dentler, A. Hernandez-Cabezudo, J. Kopp, P.A.N. Machado, M. Maltoni, I. Martinez-Soler, T. Schwetz, JHEP 08, 010 (2018). https://doi.org/10.1007/JHEP08(2018)010

M. Dentler, A. Hernandez-Cabezudo, J. Kopp, M. Maltoni, T. Schwetz, JHEP 11, 099 (2017). https://doi.org/10.1007/JHEP11(2017)099

G.H. Collin, C.A. Argüelles, J.M. Conrad, M.H. Shaevitz, Nucl. Phys. B 908, 354 (2016). https://doi.org/10.1016/j.nuclphysb.2016.02.024

A. Diaz, C.A. Argüelles, G.H. Collin, J.M. Conrad, M.H. Shaevitz, (2019). arXiv:1906.00045 [hep-ex]

P.A.R. Ade et al., Astron. Astrophys. 594, A13 (2016). https://doi.org/10.1051/0004-6361/201525830

R.H. Cyburt, B.D. Fields, K.A. Olive, T.H. Yeh, Rev. Mod. Phys. 88, 015004 (2016). https://doi.org/10.1103/RevModPhys.88.015004

R. Acciarri et al., JINST 12(02), P02017 (2017). https://doi.org/10.1088/1748-0221/12/02/P02017

R.J. Hill, Phys. Rev. D 81, 013008 (2010). https://doi.org/10.1103/PhysRevD.81.013008

R.J. Hill, Phys. Rev. D 84, 017501 (2011). https://doi.org/10.1103/PhysRevD.84.017501

X. Zhang, B.D. Serot, Phys. Rev. C 86, 035504 (2012). https://doi.org/10.1103/PhysRevC.86.035504

E. Wang, L. Alvarez-Ruso, J. Nieves, Phys. Rev. C 89(1), 015503 (2014). https://doi.org/10.1103/PhysRevC.89.015503

E. Wang, L. Alvarez-Ruso, J. Nieves, Phys. Lett. B 740, 16 (2015). https://doi.org/10.1016/j.physletb.2014.11.025

J.L. Rosner, Phys. Rev. D 91(9), 093001 (2015). https://doi.org/10.1103/PhysRevD.91.093001

S.N. Gninenko, Phys. Rev. Lett. 103, 241802 (2009). https://doi.org/10.1103/PhysRevLett.103.241802

S.N. Gninenko, Phys. Rev. D 83, 015015 (2011). https://doi.org/10.1103/PhysRevD.83.015015

M. Masip, P. Masjuan, D. Meloni, JHEP 01, 106 (2013). https://doi.org/10.1007/JHEP01(2013)106

D. McKeen, M. Pospelov, Phys. Rev. D 82, 113018 (2010). https://doi.org/10.1103/PhysRevD.82.113018

S.N. Gninenko, Phys. Lett. B 710, 86 (2012). https://doi.org/10.1016/j.physletb.2012.02.071

P. Ballett, S. Pascoli, M. Ross-Lonergan, Phys. Rev. D 99, 071701 (2019). https://doi.org/10.1103/PhysRevD.99.071701

E. Bertuzzo, S. Jana, P.A.N. Machado, R. Zukanovich Funchal, Phys. Rev. Lett. 121(24), 241801 (2018). https://doi.org/10.1103/PhysRevLett.121.241801

E. Bertuzzo, S. Jana, P.A.N. Machado, R. Zukanovich Funchal, Phys. Lett. B 791, 210 (2019). https://doi.org/10.1016/j.physletb.2019.02.023

P. Ballett, M. Hostert, S. Pascoli, Phys. Rev. D 99(9), 091701 (2019). https://doi.org/10.1103/PhysRevD.99.091701

P. Ballett, M. Hostert, S. Pascoli, (2019). arXiv:1903.07589 [hep-ph]

M. Blennow, E. Fernandez-Martinez, A.O.D. Campo, S. Pascoli, S. Rosauro-Alcaraz, A.V. Titov, Eur. Phys. J. C 79, 555 (2019). https://doi.org/10.1140/epjc/s10052-019-7060-5

M.A. Acero et al., (2019). arXiv:1902.00558 [hep-ex]

J.A. Formaggio, G.P. Zeller, Rev. Mod. Phys. 84, 1307 (2012). https://doi.org/10.1103/RevModPhys.84.1307

L. Alvarez-Ruso et al., Prog. Part. Nucl. Phys. 100, 1 (2018). https://doi.org/10.1016/j.ppnp.2018.01.006

C.A. Argüelles, M. Hostert, Y.D. Tsai, (2018). arXiv:1812.08768 [hep-ph]

B. Holdom, Phys. Lett. 166B, 196 (1986). https://doi.org/10.1016/0370-2693(86)91377-8

J. Orloff, A.N. Rozanov, C. Santoni, Phys. Lett. B 550, 8 (2002). https://doi.org/10.1016/S0370-2693(02)02769-7

P. Astier et al., Phys. Lett. B 506, 27 (2001). https://doi.org/10.1016/S0370-2693(01)00362-8

A. Atre, T. Han, S. Pascoli, B. Zhang, JHEP 05, 030 (2009). https://doi.org/10.1088/1126-6708/2009/05/030

M. Drewes, B. Garbrecht, Nucl. Phys. B 921, 250 (2017). https://doi.org/10.1016/j.nuclphysb.2017.05.001

T. Rembiasz, M. Obergaulinger, M. Masip, M.A. Perez-Garcia, M.A. Aloy, C. Albertus, Phys. Rev. D 98(10), 103010 (2018). https://doi.org/10.1103/PhysRevD.98.103010

M. Masip, AIP Conf. Proc. 1606(1), 59 (2015). https://doi.org/10.1063/1.4891116

M.G. Aartsen et al., Phys. Rev. D 93(2), 022001 (2016). https://doi.org/10.1103/PhysRevD.93.022001

P.M. Nadolsky, H.L. Lai, Q.H. Cao, J. Huston, J. Pumplin, D. Stump, W.K. Tung, C.P. Yuan, Phys. Rev. D 78, 013004 (2008). https://doi.org/10.1103/PhysRevD.78.013004

A. Buckley, J. Ferrando, S. Lloyd, K. Nordström, B. Page, M. Rüfenacht, M. Schönherr, G. Watt, Eur. Phys. J. C 75, 132 (2015). https://doi.org/10.1140/epjc/s10052-015-3318-8

D.M. Straub, Parton—A Python package for parton densities and parton luminosities. https://pypi.org/project/parton/. Accessed 1 May 2019

P. Huber, J. Kopp, M. Lindner, M. Rolinec, W. Winter, Comput. Phys. Commun. 177, 432 (2007). https://doi.org/10.1016/j.cpc.2007.05.004

P. Huber, M. Lindner, W. Winter, Comput. Phys. Commun. 167, 195 (2005). https://doi.org/10.1016/j.cpc.2005.01.003

A.M. Dziewonski, D.L. Anderson, Phys. Earth Planet. Inter. 25, 297 (1981). https://doi.org/10.1016/0031-9201(81)90046-7

F. Stacey, Physics of the Earth (Wiley, New York, 1977)

I. Esteban, M.C. Gonzalez-Garcia, A. Hernandez-Cabezudo, M. Maltoni, T. Schwetz, JHEP 01, 106 (2019). https://doi.org/10.1007/JHEP01(2019)106

NuFIT 4.0, (2018). www.nu-fit.org. Accessed 1 May 2019

A. Fedynitch, R. Engel, T.K. Gaisser, F. Riehn, T. Stanev, EPJ Web Conf. 99, 08001 (2015). https://doi.org/10.1051/epjconf/20159908001

A. Fedynitch, J. Becker Tjus, P. Desiati, Phys. Rev. D 86, 114024 (2012). https://doi.org/10.1103/PhysRevD.86.114024

A. Fedynitch, F. Riehn, R. Engel, T.K. Gaisser, T. Stanev, (2018). arXiv:1806.04140 [hep-ph]

T.K. Gaisser, Astropart. Phys. 35, 801 (2012). https://doi.org/10.1016/j.astropartphys.2012.02.010

J.M. Picone, A.E. Hedin, D.P. Drob, A.C. Aikin, J. Geophys. Res. Space Phys. 107(A12), SIA-15 (2002). https://doi.org/10.1029/2002JA009430

Acknowledgements

The author is especially grateful to Pilar Hernandez for illuminating discussions, to Matheus Hostert for pointing out a mistake in the estimate of the number of events, and to Enrique Fernandez-Martinez and Ian Shoemaker for a careful reading of the manuscript. She also warmly thanks Carlos Argüelles, Andrea Donini, Jacobo Lopez-Pavon, Ivan Martinez-Soler and Carlos Pena for useful discussions. This work has been supported by the Spanish MINECO Grants FPA2017-85985-P and SEV-2014-0398, and by the European Union’s Horizon 2020 research and innovation program under the Marie Sklodowska-Curie grant agreements No. 690575 and 674896.

Additional information

Preprint number: IFIC/19-27.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

Funded by SCOAP3

About this article

Cite this article

Coloma, P. Icecube/DeepCore tests for novel explanations of the MiniBooNE anomaly. Eur. Phys. J. C 79, 748 (2019). https://doi.org/10.1140/epjc/s10052-019-7256-8

DOI: https://doi.org/10.1140/epjc/s10052-019-7256-8