Abstract

We present NNFF1.0, a new determination of the fragmentation functions (FFs) of charged pions, charged kaons, and protons/antiprotons from an analysis of single-inclusive hadron production data in electron–positron annihilation. This determination, performed at leading, next-to-leading, and next-to-next-to-leading order in perturbative QCD, is based on the NNPDF methodology, a fitting framework designed to provide a statistically sound representation of FF uncertainties and to minimise any procedural bias. We discuss novel aspects of the methodology used in this analysis, namely an optimised parametrisation of FFs and a more efficient \(\chi ^2\) minimisation strategy, and validate the FF fitting procedure by means of closure tests. We then present the NNFF1.0 sets, and discuss their fit quality, their perturbative convergence, and their stability upon variations of the kinematic cuts and the fitted dataset. We find that the systematic inclusion of higher-order QCD corrections significantly improves the description of the data, especially in the small-z region. We compare the NNFF1.0 sets to other recent sets of FFs, finding in general a reasonable agreement, but also important differences. Together with existing sets of unpolarised and polarised parton distribution functions (PDFs), FFs and PDFs are now available from a common fitting framework for the first time.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

In the framework of Quantum Chromodynamics (QCD), Fragmentation Functions (FFs) [1] encode the long-distance dynamics of the interactions among quarks and gluons which lead to their hadronisation in a hard-scattering process [2, 3]. In order to obtain theoretical predictions for the observables involving identified hadrons in the final state, FFs have to be convoluted [4] with partonic cross-sections encoding instead the short-distance dynamics of the interaction. If the hard-scattering process is initiated by nucleons, additional convolutions with the Parton Distribution Functions (PDFs) [5,6,7], the space-like counterparts of FFs, are required.

The knowledge of FFs is an important ingredient in our understanding of non-perturbative QCD dynamics, as well as an essential tool in the description of a number of processes used to examine the internal structure of nucleons. For example, processes probing nucleon momentum, spin, flavour and spatial distributions [8], as well as the dynamics of cold [9] and hot [10] nuclear matter. While partonic cross-sections can be computed perturbatively in QCD, FFs cannot, although their dependence on the factorisation scale results in the perturbatively computable DGLAP evolution equations [11,12,13,14]. In this respect, FFs and PDFs are on the same footing. Therefore, as PDFs, FFs need to be determined from a global analysis of a suitable set of experimental measurements, possibly from a variety of hard-scattering processes (see e.g. Refs. [15, 16] for a review).

These processes include hadron production in electron–positron Single-Inclusive Annihilation (SIA), in lepton–nucleon Semi-Inclusive Deep-Inelastic Scattering (SIDIS), and in proton–proton (pp) collisions. Information from SIDIS multiplicities and from pp collisions is particularly useful in order to achieve a complete flavour decomposition into quark and antiquark FFs alongside a direct determination of the gluon FF. However, SIA remains the theoretically cleanest process among the three, since its interpretation does not require the simultaneous knowledge of PDFs.

Recent progress in the determination of FFs has been focussed on charged pions and kaons, for which data are more abundant as they dominate the identified hadron yields. In the last few years, at least three groups have determined sets of FFs with uncertainties for these two hadronic species: DEHSS [17, 18], HKKS [19], and JAM [20]. All these determinations were performed at next-to-leading order (NLO) accuracy in perturbative QCD. Their primary focus was put on quantifying the effects of the inclusion of new measurements, although in the HKKS and JAM fits these were limited to SIA. These FF analyses also introduced some methodological and theoretical improvements over previous determinations. Specifically, in order to achieve a more reliable estimate of the uncertainties of FFs, various techniques widely used in PDF determinations have been adopted. For example, the iterative Hessian approach developed in Refs. [21, 22] has been used in the DEHSS analyses, while the iterative Monte Carlo procedure developed in Ref. [23] has been used in the JAM analysis. Separately, theoretical investigations of the effect of next-to-next-to-leading order (NNLO) QCD corrections [24], of a general treatment of heavy-quark mass effects [25], and of all-order small-z resummation [26] were performed in the framework of the DEHSS analyses, although only for the SIA production of charged pions.

Despite this progress, available determinations of FFs are still potentially affected by some sources of procedural bias, the size and effect of which are difficult to quantify. First, the parametrisation of FFs in terms of a simple functional form, customary in current analyses, may not encapsulate all the possible functional behaviours of the FFs. Second, the Hessian method supplemented with a tolerance parameter, used to determine FF uncertainties for instance in the HKKS analysis, lacks a robust statistical interpretation. Third, if a global analysis of FFs including PDF-dependent processes is carried out, PDFs and FFs should be determined within a consistent methodology. This is not the case in current global analyses like DEHSS.

This work represents a first step in overcoming some of these limitations. Building on the preliminary results of Refs. [27, 28], here we present NNFF1.0, a new determination of the FFs of charged pions, charged kaons, and protons/antiprotons from a comprehensive set of SIA measurements. This analysis is performed at leading order (LO), NLO, and NNLO accuracy in perturbative QCD. This analysis is based on the NNDPF methodology, a fitting framework designed to provide a statistically sound representation of FF uncertainties and to reduce potential procedural biases as much as possible. This is achieved by representing FFs as a Monte Carlo sample, from which central values and uncertainties can be computed, respectively, as a mean and a standard deviation, and by parametrising FFs with a flexible function provided by a neural network.

The NNPDF methodology for the determination of PDFs was originally applied to the analysis of inclusive Deep-Inelastic Scattering (DIS) structure functions [29, 30], and then extended to a determination of the PDFs of the proton, first from DIS data only [31,32,33] and then from a wider dataset including hadron collider data [34,35,36]. Several developments have been achieved since then. These include determinations of PDFs with LHC data [37,38,39], of PDFs with effects of threshold resummation [40], of PDFs with QED corrections [41], of a fitted charm PDF [42], and of longitudinally polarised PDFs [43, 44]. Applying the NNPDF framework to a determination of FFs is therefore a natural extension of the NNPDF fits. It is also a first step towards a simultaneous determination of polarised and unpolarised PDFs and FFs, as recently attempted by the JAM Collaboration [45].

This paper is organised as follows. In Sect. 2 we present the dataset used in our analysis, along with the corresponding observables and kinematic cuts. In Sect. 3 we discuss the theoretical details of the NNFF1.0 determination, including the computation of the observables, the evolution of FFs, and our choice of physical parameters. In Sect. 4 we revisit the NNPDF fitting methodology and its application to our current determination of FFs. Specifically, we focus on the aspects of the parametrisation and minimisation strategy which are introduced here for the first time. We validate the fitting methodology by means of closure tests. In Sect. 5 we present the NNFF1.0 sets, their fit quality, their perturbative convergence, and their comparison with other available FF sets. In Sect. 6 we study their stability upon variations in the kinematic cuts and the fitted dataset. Finally, in Sect. 7 we conclude and outline possible future developments. The delivery of the NNFF1.0 sets is discussed in Appendix A.

2 Experimental data

The determination of FFs presented in this work is based on a comprehensive dataset from SIA, i.e. electron–positron annihilation into a single identified hadron h, inclusive over the rest of the final state X,

This process is the time-like counterpart of inclusive DIS, to which it is related by crossing symmetry. Similarly to the Bjorken-x variable in DIS, one usually defines the scaling variable

with \(P_h\) the four-momentum of the outgoing identified hadron, \(q=k_1+k_2\) the four-momentum of the exchanged virtual gauge boson, \(\sqrt{q^2}=Q\). The center-of-mass energy of the electron–positron collision is given by \(\sqrt{s}=Q\). In this section we provide the details of the dataset included in this analysis. We describe first the experiments considered, then the corresponding physical observables and finally the kinematic cuts that we apply to the data.

2.1 The NNFF1.0 dataset

The dataset entering the NNFF1.0 analysis is based on electron–positron SIA cross-sections for the sum of charged pion, charged kaon, and proton/antiproton production, i.e. \(h=\pi ^++\pi ^-,K^++K^-,p+\bar{p}\) in Eq. (2.1). These cross-sections are differential with respect to either the scaling variable z, Eq. (2.2), or a closely related quantity (see Sect. 2.2). We include measurements performed by experiments at CERN (ALEPH [46], DELPHI [47], and OPAL [48]), DESY (TASSO [49,50,51]), KEK (BELLE [52, 53] and TOPAZ [54]), and SLAC (BABAR [55], TPC [56], and SLD [57]).

In addition to inclusive measurements, we also include flavour-tagged measurements from TPC [58], DELPHI [47] and SLD [57] experiments. The tagged quark flavour refers to the primary quark–antiquark pair produced in the \(Z/\gamma ^*\) decay. For these measurements, differential cross-sections corresponding to either the sum of light quarks (u, d, s) or to individual charm and bottom quarks (c, b) are provided, with the former obtained by subtracting the latter from the inclusive untagged cross-sections. Unlike inclusive untagged data, heavy-flavour tagged data cannot be measured directly, but are instead unfolded from flavour enriched samples based on Monte Carlo simulations. These are therefore affected by additional model uncertainties. The OPAL experiment has also measured fully separated flavour-tagged probabilities for a quark flavour to produce a jet containing the hadron h [59]. We do not include these data because they do not allow for an unambiguous interpretation in perturbative QCD beyond LO.

We now discuss specific features of some of the datasets included in the NNFF1.0 analysis. In the case of the BABAR experiment, two sets of data are available, based on prompt and conventional yields, respectively. The former includes primary hadrons or decay products from particles with lifetime shorter than \(\tau =10^{-11}\) s. The latter includes all decay products with lifetime up to \(3\times 10^{-1}\) s. The conventional cross-sections are about 5–15% larger than the prompt ones for charged pions and about 10–30% for protons/antiprotons. They are almost the same for charged kaons. Although the conventional dataset was derived by means of an analysis closer to that adopted by other experiments, we include the prompt dataset in our baseline fit of charged pions and proton/antiproton FFs. The motivation for this choice is that the prompt measurements are more consistent with other SIA data than the conventional measurements. A similar choice based on similar considerations was adopted in previous analyses of charged pion FFs [17, 20].

In the case of the BELLE experiment, various sets of data are also available. In a first analysis [52], based on an integrated luminosity \(\mathcal {L}=68\) fb\(^{-1}\), differential cross-sections were extracted only for charged pions and charged kaons. A second analysis [53], based on an increased luminosity \(\mathcal {L}=159\) fb\(^{-1}\), was focussed instead on the determination of the proton/antiproton cross-sections. In this study charged pion and kaon measurements were updated, although they were not intended to be publicly released [60]. The second analysis differs from the first in a less dense z binning (particularly in the large-z region), a moderately improved coverage at small z (\(z\sim 0.1\) instead of \(z\sim 0.2\)), smaller systematic uncertainties, and a slightly larger center-of-mass energy (\(\sqrt{s}=10.58\) GeV instead of \(\sqrt{s}=10.52\) GeV). Here we include the data from Ref. [52] for charged pions and kaons, and the data from Ref. [53] for protons/antiprotons.

In the case of the OPAL experiment, we have excluded the proton/antiproton measurements because we experienced difficulties in providing a satisfactory description of the data. This approach was also adopted in a previous FF analysis [61], where the proton/antiproton OPAL data were shown to be in tension with other SIA data at the same center-of-mass energy, \(\sqrt{s}=M_Z\) (see also Ref. [62]).

The dataset included in the NNFF1.0 analysis is summarised in Table 1, where experiments are ordered by increasing center-of-mass energy. In Table 1, we specify the name of the experiment, the corresponding publication reference, the measured observable, the relative normalisation uncertainty (r.n.u.), and the number of data points included in the fit for each hadronic species. Available datasets that are not included (n.i.) in the NNFF1.0 analysis, for the reasons explained above, are also indicated. The kinematic coverage of the dataset is illustrated in Fig. 1.

The kinematic coverage in the \((z,\sqrt{s})\) plane of the NNFF1.0 dataset (see Table 1). Data are from DESY (black), KEK (green) and CERN (red)

As one can see from Table 1 and Fig. 1, the bulk of the NNFF1.0 dataset is composed of the LEP and SLD measurements, taken at \(\sqrt{s}=M_Z\), and of the B-factory measurements, taken at the lower scale \(\sqrt{s}\simeq 10\) GeV. They collectively account for about two thirds of the total dataset and feature relative uncertainties at the level of a few percent. The rest of the dataset corresponds to measurements taken at intermediate energy scales that are typically affected by larger uncertainties. From Fig. 1 one also observes that the coverage in z is limited roughly to the region \(0.006\lesssim z \lesssim 0.95\). As expected from kinematic considerations, experiments at higher center-of-mass energies provide data at smaller values of z, while experiments at lower center-of-mass energies provide data at larger values of z.

The quantity and the quality of the available data varies depending on the hadronic species (see also Figs. 4, 5, 6, 7). Measurements for charged pions, for which the yield is the largest, are the most abundant and precise. In comparison, measurements for charged kaons are slightly less abundant and precise, while protons/antiprotons measurements are the most sparse and the most uncertain among the three hadronic species. As a consequence, charged pion FFs are better constrained than charged kaon and proton/antiproton FFs (see Sect. 5.2).

We now briefly discuss the differences between the NNFF1.0 dataset and the dataset included in some of the most recent determinations of FFs.

In comparison to JAM [20], we do not consider the ARGUS inclusive [63], the HRS inclusive [64], and the OPAL fully flavour-tagged data. Note that these differences are restricted to the charged pion and charged kaon FFs, as proton/antiproton FFs were not determined in the JAM analysis.

In comparison to HKKS [19], we include the TPC tagged data for charged pions and remove the HRS data for charged pions and kaons. A determination of the proton/antiproton FFs was not performed in Ref. [19], but in an earlier analysis based on a similar framework [62]. In comparison to this, here we exclude the OPAL inclusive data and include the BELLE and BABAR data, which were not available when the analysis in Ref. [62] was performed.

In comparison to DEHSS [17, 18], we include the older TASSO (at \(\sqrt{s}=12,14,22,30\) GeV) and TOPAZ measurements. These datasets are affected by rather large experimental uncertainties. Their effect in the DEHSS fits was deemed negligible and hence they were removed. Note that the DEHSS determinations also include the OPAL fully flavour-tagged data, not considered here, as well as additional measurements of hadroproduction in SIDIS and pp collisions. Similar considerations also apply to their earlier analysis for proton/antiproton FFs [61], in comparison to which we also include the BELLE and BABAR data. In the case of proton/antiproton FFs, the NNFF1.0 analysis is the first to include the B-factory measurements.

We take into account all the available information on statistical and systematic uncertainties, including their correlations. The full breakdown of bin-by-bin correlated systematics is provided only by the BABAR experiment. No information on correlations among various sources of systematics is provided for all the other experiments. In these cases we sum in quadrature statistical and systematic uncertainties. Normalisation uncertainties are assumed to be fully correlated across all data bins in each experiment. The asymmetric uncertainties quoted by BELLE are symmetrised as described in Ref. [30].

Systematic uncertainties, with the exception of normalisation uncertainties, are treated as additive. Because the naive inclusion of multiplicative normalisation uncertainties in the covariance matrix would lead to a biased result [65], we treat them according to the \(t_0\) method [66, 67]. This method is based on constructing a modified version of the covariance matrix where the contribution from multiplicative uncertainties is determined from theory predictions rather than from the experimental central values for each measurement. This procedure is iterative, with the results of a fit being used for the subsequent one until convergence is reached.

The available information on statistical, systematic, and normalisation uncertainties is used to construct the covariance matrix associated to each experiment. Following the NNPDF methodology, this covariance matrix is used to generate a Monte Carlo sampling of the probability distribution determined by the data. The statistical sample used in the NNFF1.0 fits is obtained by generating \(N_\mathrm{rep}=100\) pseudo-data replicas according to a multi-Gaussian distribution around the data central values and with the covariance of the original data [32].

2.2 Physical observables

The SIA differential cross-section involving a hadron h in the final state can be expressed as

where \(\alpha \) is the Quantum Electrodynamics (QED) running coupling and \(F_2^h\) is the fragmentation (structure) function, defined in analogy with the structure function \(F_2\) in DIS. While in the literature \(F_2^h\) is often called fragmentation function, we will denote it as fragmentation structure function in order to avoid any confusion with the partonic FFs.

The SIA cross-sections used in this analysis are summarised in the third column of Table 1. For some experiments, they are presented as multiplicities, i.e. they are normalised to \(\sigma _\mathrm{tot}\), the total cross-section for the inclusive electron–positron annihilation into hadrons. In addition to the normalisation to \(\sigma _\mathrm{tot}\), the format of the experimental data can differ among the various experiments due to the choice of scaling variable and/or an additional overall rescaling factor. These differences are indicated in Table 1, where the following notation is used: \(z=E_h/E_b=2E_h/\sqrt{s}\) is the energy \(E_h\) of the observed hadron h scaled to the beam energy \(E_b\); \(x_p=|\mathbf {p}_h|/|\mathbf {p}_b|=2|\mathbf {p}_h|/\sqrt{s}\) is the hadron three-momentum \(|\mathbf {p}_h|\) scaled to the beam three-momentum \(|\mathbf {p}_b|\); \(\xi =\ln (1/x_p)\); and \(\beta =|\mathbf {p}_h|/E_h\) is the velocity of the observed hadron h.

Starting from the measured observables defined in Table 1, the corresponding data points have been rescaled by the inverse of \(s/\beta \) or \(1/\beta \) whenever needed to match Eq. (2.3), modulo the normalisation to \(\sigma _\mathrm{tot}\). Corrections depending on the hadron mass \(m_h\) are retained according to the procedure described in Ref. [68]. This implies that the distributions differential in \(x_p\), \(p_h\) or \(\xi \) are modified by a multiplicative Jacobian factor determined by the following relations between the scaling variables:

The typical size of these hadron-mass corrections is illustrated in the left plot of Fig. 2, where we show the ratio \(x_p/z\) as a function of z, at three representative values of \(\sqrt{s}\), for pions, kaons, and protons. Hadron-mass corrections become larger when z and/or \(\sqrt{s}\) decrease, as well as when \(m_h\) is increased. These corrections can become significant in the kinematic region covered by the data. For instance, at \(z=0.1\) and \(Q=M_Z\) hadron-mass corrections are less than \(10\%\) for all hadronic species, while at \(z=0.1\) and \(Q=10\) GeV they rise up to \(20\%\) (\(70\%\) or more) for pions (kaons and protons/antiprotons). For protons/antiprotons, these corrections are already larger than \(30\%\) around \(z=0.4\) at the center-of-mass energy of the B-factory data. Therefore, the inclusion of hadron-mass corrections should improve the description of the data.

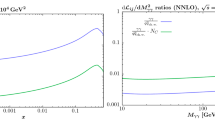

Left the scaling variable ratio \(x_p/z\) as a function of z, at three representative values of \(\sqrt{s}\), for pions, kaons and protons. Right the SIA K-factor, defined as the ratio \(F_2^h(\mathrm{N}^\mathrm{m}\mathrm{LO})/F_2^h(\mathrm{N}^\mathrm{m-1}\mathrm{LO})\) for \(m=1,2\), at the same three values of \(\sqrt{s}\). The K-factors have been computed with fixed NLO charged pion FFs from the DEHSS determination [17]

In the case of the BELLE experiment we multiply all data points by a factor 1 / c, with \(c=0.65\) for charged pions and kaons [69] and with c a function of z for protons/antiprotons [53]. This correction is required in order to treat the BELLE data consistently with all the other SIA measurements included in NNFF1.0. The reason is that a kinematic cut on radiative photon events was applied to the BELLE data sample in the original analysis instead of unfolding the radiative QED effects. Specifically, the energy scales in the measured events were kept within \(0.5\%\) of the nominal fragmentation scale Q / 2; a Monte Carlo simulation was then performed to estimate the fraction of events with initial-state (ISR) or final-state radiation (FSR) photon energies below \(0.5\%\times Q/2\). For each bin, the measured yields are then reduced by these fractions in order to exclude events with large ISR or FSR contributions.

Finally, note that the B-factory measurements correspond to samples where the effect of bottom-quark production is not included because they were taken at a center-of-mass energy below the threshold to produce a B-meson pair. The corresponding theoretical predictions should therefore be computed without the bottom-quark contribution, as explained in Sect. 3.1.

2.3 Kinematic cuts

Our baseline determination of FFs is based on a subset of all the available data points described above. Specifically, we impose two kinematic cuts at small and large values of z, \(z_\mathrm{min}\) and \(z_\mathrm{max}\), and retain only the data points with z in the interval \([z_\mathrm{min}, z_\mathrm{max}]\). These cuts are needed to exclude the kinematic regions where effects beyond fixed-order perturbation theory should be taken into account for an acceptable description of the data. For instance, soft-gluon logarithmic terms proportional to \(\ln z\) and threshold logarithmic terms proportional to \(\ln (1-z)\) can significantly affect the time-like splitting functions and the SIA coefficient functions below certain values of \(z_\mathrm{min}\) and above certain values of \(z_\mathrm{max}\). As a consequence, the convergence of the fixed-order expansion can be spoiled.

While all-order resummation techniques have been developed both at small [70,71,72,73] and large z [74,75,76,77,78], their inclusion is beyond the scope of the present work. However, we note that the impact of small- and large-z unresummed logarithms is alleviated when higher-order corrections are included in the perturbative expansion of splitting and coefficient functions. To illustrate the perturbative convergence of the SIA structure function in Eq. (2.3), we show in Fig. 2 the SIA K-factors at three representative values of \(\sqrt{s}\). They are defined as the ratios \(F_2^h(\mathrm{N}^\mathrm{m}\mathrm{LO})/F_2^h(\mathrm{N}^\mathrm{m-1}\mathrm{LO})\), for \(m=1,2\), and have been computed with fixed NLO charged pion FFs taken from the DEHSS determination [17]. The NNLO/NLO K-factors are significantly smaller than the NLO/LO ones for most of the kinematic range, except at small z and \(\sqrt{s}\), where they become comparable. For most of the values of z in the kinematic range of the data, the NNLO corrections are at the level of about \(10\%\) or less, except below \(z\sim 0.02\) (\(z\sim 0.07\)) and above \(z\sim 0.9\) at \(Q=M_Z\) (\(Q=10\) GeV), where they become larger. This suggests that in these regions large logarithms can spoil the convergence of the truncated perturbative series even at NNLO, indicating the need of resumming them.

In general the values of \(z_\mathrm{min}\) and \(z_\mathrm{max}\) can vary with the center-of-mass energy \(\sqrt{s}\). Based on the considerations above, here we choose the following values of \(z_\mathrm{min}\) and \(z_\mathrm{max}\): \(z_\mathrm{min}=0.02\) for experiments at \(\sqrt{s}=M_Z\); \(z_\mathrm{min}=0.075\) for all other experiments; and \(z_\mathrm{max}=0.9\) for all experiments. The same kinematic cuts are applied to the three hadronic species. The number of data points before applying these kinematic cuts is reported in parentheses in Table 1. Further motivation for our choice of \(z_\mathrm{min}\) is provided by studying the deterioration of the fit quality upon its variation, as we will discuss in detail in Sect. 6.1. Specifically, we find that our choice of \(z_\mathrm{min}\) leads to the smallest total \(\chi ^2\). The value of \(z_\mathrm{max}\) used here also minimises possible tensions between different datasets in the large-z region.

3 From fragmentation functions to physical observables

In this section we review the collinear factorisation of the fragmentation structure function and the time-like DGLAP evolution of FFs. We also provide the details of the numerical computation of the SIA cross-sections, including our choice of the theoretical settings and the physical parameters.

3.1 Factorisation and evolution

The factorised expression of the inclusive fragmentation structure function \(F_2^h(z,Q)\) in Eq. (2.3) is given as a convolution between coefficient functions and FFs,

where both factorisation and renormalisation scales are set equal to the center-of-mass energy of the collision, \(\mu _F=\mu _R=\sqrt{s}=Q\). In Eq. (3.1) \(\otimes \) denotes the usual convolution integral with respect to z,

and

is the average of the effective quark electroweak charges \(\hat{e}_q\) (see e.g. Appendix A of Ref. [79] for their definition) over the \(n_f\) active flavours at the scale Q; \(\alpha _s\) is the QCD running coupling; and \(C_{2,q}^\mathrm{S}\), \(C_{2,q}^\mathrm{NS}\), \(C_{2,g}^\mathrm{S}\) are the coefficient functions corresponding, respectively, to the singlet and nonsinglet combinations of FFs,

and to the gluon FF, \(D_g^{h}\). The notation \(D_{q^+}^h \equiv D_q^h+D_{\bar{q}}^h\) has been used.

The total cross-section for \(e^+e^-\) annihilation into hadrons \(\sigma _\mathrm{tot}\), required to normalise the differential cross-section in Eq. (2.3) in the case of multiplicities, is

The coefficients \(K_\mathrm{QCD}^{(i)}\) indicate the QCD perturbative corrections to the LO result and are currently known up to \(\mathcal {O}(\alpha _s^3)\) [80].

The evolution of FFs with the energy scale Q is governed by the DGLAP equations [11,12,13,14]

where \(P_{ji}\) are the time-like splitting functions. The choice of FF combinations defined in Eq. (3.4) allows one to rewrite Eq. (3.6) as a decoupled evolution equation

for the nonsinglet combination of FFs, and a system of two coupled equations

for the singlet combination of quark FFs and the gluon FF. In comparison to the space-like case, the off-diagonal splitting functions are interchanged and multiplied by an extra colour factor.

Both the coefficient functions in Eq. (3.1) and the splitting functions in Eqs. (3.7)–(3.8) allow for a perturbative expansion in powers of the QCD coupling

where \(i,j=q,g\) and \(a_s \equiv \alpha _s/(4\pi )\). The SIA coefficient functions have been computed up to \(\mathcal {O}\left( a_s^2\right) \) (\(k=2\)) [81,82,83,84,85], and the time-like splitting functions up to \(\mathcal {O}\left( a_s^3\right) \) (\(k=2\)) [86,87,88], both in the \(\overline{\mathrm{MS}}\) scheme. A residual theoretical uncertainty on the exact form of \(P^{qg,(2)}\) still remains, though this is unlikely to have any phenomenological relevance [87]. Note that space- and time-like splitting functions are identical at LO, while they differ at NLO and beyond.

Expressing the SIA fragmentation structure function \(F_2^h\) in Eq. (3.1) in terms of the quark flavour singlet and non-singlet combinations of FFs defined in Eq. (3.4) allows one to identify some of the limitations that affect a determination of FFs based exclusively on SIA data. These include the following.

-

Quark and antiquark FFs always appear through the combinations \(D_{q^+}^h\) in Eqs. (3.1) and (3.4). Therefore, SIA measurements are not sensitive to the separation between quark and antiquark FFs.

-

The leading contribution to the gluon coefficient function \(C_{2,g}^\mathrm{S}\) in Eq. (3.1) is \(\mathcal {O}(a_s)\), hence the gluon FF directly enters the fragmentation structure function starting at NLO.

-

The separation between different light quark flavour FFs is probed indirectly via the dependence of the electroweak charges \(\hat{e}_q\) on the energy scale Q. For instance, at the scale of the LEP/SLC data, \(Q=M_Z\), the fragmentation structure function in Eq. (3.1) receives its leading contribution from a Z-boson exchange, which couples almost equally to up- and down-type quarks. At this scale, the term \(\hat{e}_q^2/\left\langle \hat{e}^2 \right\rangle \), which appears in Eq. (3.4) is close to one, therefore the quark nonsinglet contribution to the structure function is suppressed. Conversely, at the typical scale of the B-factory measurements, \(Q\sim 10\) GeV, SIA largely proceeds via a photon exchange, which couples differently to up- and down-type quarks. Therefore the term \(\hat{e}_q^2/\left\langle \hat{e}^2 \right\rangle \) is significantly different from one, and the relative contribution of the quark nonsinglet combination to \(F_2^h\) is sizeable.

-

A direct handle on the separation between light- and heavy-quark flavour FFs is provided by the heavy-flavour tagged data from the LEP, SLC, and TPC experiments.

3.2 Computation of SIA cross-sections

The computation of the SIA cross-sections and the DGLAP evolution of the FFs are performed in the \(\overline{\mathrm{MS}}\) factorisation scheme using the z-space public code APFEL [89]. The numerical solution of the time-like evolution equations, Eqs. (3.7)–(3.8), has been extensively benchmarked up to NNLO QCD in APFEL; see Ref. [90]. The FastKernel method, introduced in Refs. [34, 37] and revisited in Ref. [38], is used to ensure a fast evaluation of the theoretical predictions. We include QED running coupling effects in these calculations.

Concerning the treatment of heavy-quark mass effects, here we adopt the zero-mass variable-flavour-number (ZM-VFN) scheme in which they are neglected. Taking into account such effects would require the use of a general-mass variable-flavour-number (GM-VFN) scheme, as customarily done in the case of unpolarised PDF fits [91,92,93]. Their inclusion into a fit of FFs could improve the description of some of the SIA datasets, particularly the BELLE and BABAR measurements whose center-of-mass energy is not far above the bottom-quark mass [25]. We leave a possible extension of our analysis along the lines of Ref. [25] for a future work.

Whenever multiplicities should be computed, the differential cross-section in Eq. (2.3) is normalised to the total cross-section for electron–positron annihilation \(\sigma _\mathrm{tot}\) defined in Eq. (3.5). In the calculation of \(\sigma _\mathrm{tot}\), perturbative corrections are consistently included up to \(\mathcal {O}(1)\), \(\mathcal {O}(a_s)\), and \(\mathcal {O}(a_s^2)\) in the LO, NLO and NNLO fits, respectively.

As mentioned in Sect. 2.1, the BELLE and BABAR measurements correspond to an observable for which the contribution from bottom quarks is explicitly excluded. This is taken into account in the corresponding theoretical calculation by setting to zero the bottom-quark electroweak charge appearing in Eq. (3.1). Analogously, only the contributions proportional to the electroweak charges of the relevant flavours are retained in Eq. (3.1) for the computation of the light- and heavy-quark tagged cross-sections.

The values of the physical parameters used in the computation of the SIA cross-sections and in the evolution of FFs are the same as those used in the NNPDF3.1 global analysis of unpolarised PDFs [39], supplemented with the PDG averages [94] for those parameter values not specified there. Specifically, we use: \(M_Z=91.1876\) GeV for the Z-boson mass, \(\alpha _s(M_Z)=0.118\) and \(\alpha (M_Z)=1/128\) as reference values for the QCD and QED couplings, and \(m_c=1.51\) GeV and \(m_b=4.92\) GeV for the charm- and bottom-quark pole masses. For the Weinberg mixing angle and Z-boson decay width, entering the definition of the electroweak charges \(\hat{e}_q\), we use \(\sin ^2\theta _W=0.231\) and \(\Gamma _Z=2.495\) GeV. Finally, the values of the hadron masses used to correct the scaling variables in Eq. (2.4) are \(m_\pi =0.140\) GeV, \(m_K=0.494\) GeV, and \(m_p=0.938\) GeV.

4 Fitting methodology

The NNPDF fitting methodology has been described at length in previous publications [29,30,31,32,33,34,35,36]. In this section, we present its aspects specific to the NNFF1.0 analysis, some of which are introduced here for the first time. We first discuss the parametrisation of FFs in terms of neural networks, then the minimisation strategy to optimise their parameters, and finally a comprehensive validation of the whole methodology by means of closure tests.

4.1 Neural network parametrisation

As discussed in Sect. 3.1, inclusive SIA data allow for the determination of only three independent combinations of FFs, namely the singlet and nonsinglet combinations in Eq. (3.4) and the gluon FF. In addition, charm- and bottom-quark tagged data make possible to constrain two additional nonsinglet combinations involving heavy-quark FFs, adding up to a total of five independent combinations of FFs.

We adopt the parametrisation basis

where the light-flavour combinations of quark FFs in Eq. (3.4) have been separated according to the values of the corresponding electroweak charges. In Eq. (4.1) we have used the shorthand notation \(D^h_{d^++s^+}=D^h_{d^+}+D^h_{s^+}\). This parametrisation basis is used for all hadronic species. The superscript h in Eq. (4.1) denotes in turn the sum of positive and negative pions, \(h=\pi ^\pm =\pi ^++\pi ^-\), of positive and negative kaons, \(h=K^\pm =K^++K^-\), or of protons and antiprotons, \(h=p/\bar{p}=p+\bar{p}\). The choice of the parametrisation basis is of course not unique. Any linear combination of the FFs in Eq. (4.1) could be used.

Each FF in the basis defined in Eq. (4.1) is parametrised at the initial scale \(Q_0\) as

where \(\mathrm{NN}_i\) is a neural network, specifically a multi-layer feed-forward perceptron [29, 31]. It consists of a set of nodes organised into sequential layers, in which the output \(\xi _i^{(l)}\) of the ith node of the lth layer is

where the function g is a given activation function. The parameters \(\{\omega _{ij}^{(l)},\theta _i^{(l)}\}\), known as weights and thresholds, respectively, are determined during the minimisation procedure.

As in previous NNPDF analyses, here we use the same neural network architecture for all the fitted FFs, namely 2–5–3–1 (that is, four layers with 2, 5, 3 and 1 nodes each). This corresponds to 37 free parameters for each FF, and to a total of 185 free parameters for each hadronic species. This is to be compared to about 15–30 free parameters per hadronic species typically used in other determinations of FFs. The 2–5–3–1 architecture is sufficiently flexible to avoid any parametrisation bias, as we will demonstrate by means of closure tests in Sect. 4.3.

In contrast with previous NNPDF analyses, here we do not supplement the neural network parametrisation with a preprocessing function of the form \(z^\alpha (1-z)^\beta \). Such a function is used in previous NNPDF fits in order to speed up the minimisation process. By absorbing in this prefactor the bulk of the behaviour in the extrapolation regions, the neural network only has to fit deviations from it. In order to minimise potential biases in the final result, the values of the exponents \(\alpha \) and \(\beta \) are chosen for each replica at random within a suitable range determined iteratively [38, 43].

A typical fit of FFs in this analysis is by far computationally less expensive than a typical NNPDF fit of PDFs. This is because the quantity, the quality, and the variety of the data are much more limited in the former case than in the latter. Therefore, removing the preprocessing function from the FF parametrisation does not significantly affect the efficiency of the fitting procedure. Moreover, it avoids the need for the iterative determination of the preprocessing exponents. However, the absence of the preprocessing function in Eq. (4.2) affects the behaviour of the FFs in the extrapolation regions at small and large values of z, and requires further modifications of the parametrisation.

At large z, it is sufficient to explicitly enforce the constraint that \(D_i^h(z,Q_0)\) vanishes in the limit \(z\rightarrow 1\) by subtracting the term \(\mathrm{NN}_i(1)\) in Eq. (4.2). Indeed, in the case of FFs, the large-z extrapolation region is significantly reduced as compared to the PDF case. Data from the B-factory and LEP/SLD experiments ensure a kinematic coverage up to the large-z kinematic cut \(z_\mathrm{max}=0.9\) for all hadronic species (see Fig. 1).

At small z instead, the available experimental information becomes more sparse as the lower kinematic cut \(z_\mathrm{min}=0.02\) (\(z_\mathrm{min}=0.075\)) at \(\sqrt{s}=M_Z\) (\(\sqrt{s}<M_Z\)) is approached. Therefore, without the preprocessing function, the behaviour of the FFs in the small-z extrapolation region will exhibit a significant dependence on the choice of the activation function g in Eq. (4.3). For instance, if g is chosen to be the sigmoid function, \(g(x)=\left[ 1+\exp (-x)\right] ^{-1}\), as usual in NNPDF fits, all FF replicas freeze out to a constant in a region close and below \(z_\mathrm{min}\). Such a behaviour is clearly unphysical, thus it should be avoided.

In order to overcome this issue, an activation function that preserves the features of the sigmoid in the data region and avoids its limitations in the extrapolation region should be adopted. Specifically, we choose g as

Like the sigmoid, the function in Eq. (4.4) exhibits two different regimes: it is linear for values of a close to zero and becomes nonlinear for values of a far from zero. In contrast with the sigmoid, the function in Eq. (4.4) does not saturate asymptotically to zero (one) for large negative (positive) values of a. This feature prevents FFs from freezing out to a constant for values of z close and below the low-z cut \(z_\mathrm{min}\). We have explicitly verified that the choice of activation function does not affect the behaviour of the fitted FFs in the kinematic region covered by data.

An important theoretical requirement on FFs is that they must satisfy positivity, i.e. any physical cross-section computed from them must be positive. At LO, this simply implies that FFs are positive definite. Beyond LO FFs do not need to be positive definite, and positivity could be imposed for instance by requiring a set of at least as many independent observables as the parametrised FFs to be positive. However, for simplicity, here we impose positivity by requiring that FFs are positive definite at all orders, as it is customarily assumed in all other analyses of FFs. This is achieved by squaring the output of Eq. (4.2). We have explicitly checked that this choice does not bias our determination, in that the differences with a fit in which positivity is not imposed at all are negligible. This suggests that the quality of the dataset included in our analysis is good enough to ensure the positivity of FFs for most of the relevant kinematics.

Finally, we should mention that the initial parametrisation scale in Eq. (4.2) is \(Q_0=5\) GeV. This value is both above the bottom-quark threshold (\(m_b=4.92\) GeV) and below the lowest center-of-mass energy of the data included in the fits (\(\sqrt{s}=10.52\) GeV). This choice is advantageous for two reasons. First, it implies that no heavy-quark thresholds have to be crossed in the evolution between the initial scale and the scale of the data. Therefore, the number of active flavours is always \(n_f=5\) and no matching is required. This is advantageous because time-like matching conditions are currently known only up to NLO [95]. Second, in a VFN scheme, our choice implies that charm- and bottom-quark FFs are parametrised on the same footing as the light-quark and gluon FFs. This is beneficial because heavy-quark FFs receive large non-perturbative contributions. Indeed, perturbatively generated heavy-quark FFs lead to a poor description of the data, in particular of heavy-quark tagged data.

4.2 Optimisation of neural network parameters

The determination of neural network parameters in a fit of FFs to experimental data is a fairly involved optimisation problem. In our analysis, it requires the minimisation of the \(\chi ^2\) estimator

where i and j run over the number of experimental data points \(N_\mathrm{dat}\), \(D_i\) are their measured central values, \(T_i\) are the corresponding theoretical predictions computed with a given set of FFs f, and \(C_{ij}^{t_0}\) is the \(t_0\) covariance matrix discussed in Sect. 2.1.

In most situations where neural networks are applied, optimisation is performed by means of some variation of simple gradient descent. In order to optimise the model parameters in this way, it is necessary to be able to straightforwardly compute the gradient of \(\chi ^2\) with respect to model parameters,

Computing these gradients in the case of PDF or FF fits is complicated by the non-trivial relationship between PDFs/FFs and physical observables.

In previous NNPDF analyses of PDFs, minimisation was performed by means of a simple example of a genetic algorithm. At each iteration of the fit, variations (or mutants) of PDFs are generated by random adjustment of the previous best-fit neural-network parameters. The mutant PDF parameters with the lowest \(\chi ^2\) to data are then selected as the best-fit for the next iteration. Such a procedure is blind to the higher-order structure of the problem in parameter space and does not require the computation of the gradients in Eq. (4.6). The most prominent drawback of such a procedure is that it is considerably less efficient than standard gradient descent. Furthermore, while this basic procedure is adequate in the case of PDF fits to a global dataset, it can be sensitive to the noise in the \(\chi ^2\) driven by noisy experimental data.

In the present fit of FFs, the dataset is much more limited than in typical global PDF fits. It is therefore worth considering alternative minimisation strategies that may be less sensitive to such effects. There are a great deal of strategies available in the literature for the optimisation of problems where standard gradient descent methods are difficult or impossible to apply. One such strategy, the Covariance Matrix Adaption-Evolutionary Strategy (CMA-ES) family of algorithms [96, 97], finds regular application in this context.

In this analysis we apply a standard variant of the CMA-ES procedure for the minimisation of the neural network parameters. While we leave the details and the specification of algorithm parameters to Ref. [97], we will outline the procedure schematically here. We denote the set of fit parameters \(\{\omega _{ij}^{(l)},\theta _i^{(l)}\}\) as a single vector \(\mathbf {a}^{(i)}\). In all relevant quantities described here, the superscript i indicates the values at the \(i^{th}\) iteration of the algorithm. The fit parameters are initialised at the start of the fit according to a multi-Gaussian distribution \(\mathcal {N}\) with zero mean and unit covariance

where we use \(\sim \) to denote the distribution of the random vector. This vector is then used as the centre of a search distribution in parameter space. At every iteration of the algorithm, \(\lambda =80\) mutants \(\mathbf {x}_1, \ldots , \mathbf {x}_\lambda \) of the parameters are generated as

that is, mutants are generated around the search centre according to a multi-Gaussian \(\mathcal {N}\) with covariance \(\mathbf {C}^{(i)}\) and according to a step-size \(\sigma ^{(i)}\) initialised as \(\sigma ^{(0)}=0.1\). The mutants are then sorted according to their fitness such that \(\chi ^2(\mathbf {x}_k) < \chi ^2(\mathbf {x}_{k+1})\) and the new search centre is computed as a weighted average over the \(\mu =\lambda /2\) best mutants

where the weights \(\{w_k\}\) are internal parameters of the CMA-ES algorithm.

A key feature of the CMA-ES algorithms is that both the step size \(\sigma ^{(i)}\) and the search distribution covariance matrix \(\mathbf {C}^{(i)}\) are optimised by the fit procedure. To this purpose, the information present in the ensemble of mutants is used to learn preferred directions in parameter space without the need for the explicit computation of gradients. This adaptive behaviour improves the efficiency of the minimisation procedure in comparison to the genetic algorithm adopted in all previous NNPDF fits. Also, we implement a non-elitist version of the CMA-ES, whereby each iteration’s best fit is computed by means of weighted average over some number of mutants. In contrast an elitist selection would take only the best mutant from each iteration. In this way the effect of the noise induced in the \(\chi ^2\) by a relatively small dataset should be reduced.

The procedure outlined in Eqs. (4.8)–(4.9) is iterated until the optimal fit is achieved. As in previous NNPDF analyses, the stopping point is determined by means of a cross-validation method [32], based on the separation of the whole dataset into two subsets: a training set and a validation set. Equal training and validation data fractions are chosen for each experimental dataset, except for those datasets with less than 10 data points. In this case, 80% of the data are included in the training set and the remaining 20% in the validation set. The \(\chi ^2\) of the training set is then minimised while the \(\chi ^2\) of the validation set is monitored. The best-fit configuration is determined according to the look-back criterion [38], according to which the stopping point is identified as the absolute minimum of the validation \(\chi ^2\) within a maximum number of generations, \(N_\mathrm{gen}^\mathrm{max}\). Here we take \(N_\mathrm{gen}^\mathrm{max}=4\times 10^4\). This value is large enough to guarantee that the best-fit FFs do not depend on it.

Finally, as in the NNPDF3.0 [38] and NNPDF3.1 [39] PDF fits, an a posteriori selection on the resulting sample of Monte Carlo replicas is performed for each fit. Specifically, replicas whose \(\chi ^2\) is more than four-sigma away from its average value are discarded and replaced by other replicas which instead satisfy this condition. This ensures that outliers, which might be present in the Monte Carlo ensemble due to residual inefficiencies of the minimisation procedure, are removed.

4.3 Closure testing fragmentation functions

The determination of FFs through a fit to experimental data is a procedure that necessarily implies a number of assumptions, mostly concerning their parametrisation and the propagation of the experimental uncertainties into them. Therefore it is crucial to systematically validate the fitting methodology in order to avoid any procedural bias that could limit the reliability of the fitted quantities.

As discussed in Ref. [38], the robustness of the fitting procedure used in a global QCD analysis can be assessed by means of closure tests. The basic idea of a closure test is to perform a fit of FFs to a set of pseudo-data generated using theoretical predictions obtained with a pre-existing set of FFs as an input. In such a scenario, the underlying physical behaviour of the FFs is known by construction. Therefore, it is possible to assess the reliability of the fitting methodology by comparing the distributions obtained from the fit to those used as an input. We refer the reader to Ref. [38] for a thorough description of the various levels of closure tests and of the statistical estimators designed to validate different aspects of the fitting methodology. Here we focus on two types of closure tests.

-

Level 0 (L0). Pseudo-data in one-to-one correspondence with the data discussed in Sect. 2.1 are generated using the theoretical predictions obtained with a given set of FFs; no random noise is added at this level. Then \(N_\mathrm{rep}\) fits, each to exactly the same set of pseudo-data, are performed. In order to take into account correlations, the error function that is minimised (i.e. the \(\chi ^2\) evaluated for each replica) is still computed using the covariance matrix of the real data, even though the pseudo-data have zero uncertainty.

In a L0 closure test, provided a sufficiently flexible parametrisation and a sufficiently efficient minimisation algorithm, the fit quality can become arbitrarily good. The \(\chi ^2\) should decrease to arbitrarily small values, and the resulting FFs should coincide with the input ones in the kinematic region covered by the pseudo-data.

-

Level 2 (L2). Exactly as in the case of the fits to real data, \(N_\mathrm{rep}\) Monte Carlo replicas of the data are generated applying the standard NNPDF procedure. The only difference is that the central values of the single measurements are replaced by the respective theoretical predictions obtained using the input FFs. Then a set of FFs is fitted to each replica.

In a L2 closure test, the final \(\chi ^2\) should be close to unity, provided that the fitted procedure correctly propagates the fluctuations of the pseudo-data, due to experimental statistical, systematic, and normalisation uncertainties, into the FFs. In the kinematic region covered by the data, the input FFs should fall inside the one-\(\sigma \) band of their fitted counterparts with a probability of around 68%.

In summary, the goal of a L0 closure test is to assess whether the fitting methodology, including the parametrisation form and the minimisation algorithm, is such to avoid any procedural bias. The goal of a L2 closure test, instead, is to verify whether the fitting methodology allows for a faithful propagation of the data uncertainties into the FFs.

Comparison between the central value of the charged pion HKNS07 FFs [62] (red dashed line) and the corresponding FFs obtained from the L0 (blue bands) and L2 (green bands) closure tests. The five panels show the \(D_g^{\pi ^\pm }\), \(D_{u^+}^{\pi ^\pm }\), \(D_{d^++s^+}^{\pi ^\pm }\), \(D_{c^+}^{\pi ^\pm }\), and \(D_{b^+}^{\pi ^\pm }\) FFs at \(Q=5\) GeV, in the z range which roughly matches the kinematic coverage of the fitted data. Shaded bands indicate their one-\(\sigma \) uncertainties. The central (lower) inset show the ratio of the L0 (L2) FFs to the HKNS07 FFs

Here we present results for the L0 and L2 closure tests applied to the determination of the charged pion FFs at NLO. We use as input FFs the central distributions of the HKNS07 set [62]. We have also verified that closure tests are successful for all the hadronic species considered in the NNFF1.0 analysis, when either the HKNS07 or the DSS07 sets [61, 98] at LO or NLO are used as an input.

The value of the total \(\chi ^2/N_\mathrm{dat}\) resulting from the L0 and L2 closure tests is displayed in Table 2. In Fig. 3 we compare the input FFs from the HKNS07 set and the corresponding fitted FFs from the L0 and L2 closure tests. The comparison is shown for the five combinations of FFs parametrised in our analysis, see Eq. (4.1), at the input scale \(Q = 5\) GeV, and in a range of z which roughly corresponds to the kinematic coverage of the data included in the fits. The upper panel of the plots in Fig. 3 displays the absolute distributions, while the central and the lower panels show the ratio of the L0 and L2 FFs to the input HKNS07 FFs, respectively. The shaded bands for the L0 and L2 distributions indicate the one-\(\sigma \) FF uncertainty.

From Table 2, as expected, the \(\chi ^2/N_\mathrm{dat}\) is close to zero for the L0 closure test and close to one for the L2 closure test. These results indicate that our fitting methodology is adequate to reproduce the input FFs without introducing any significant procedural bias.

From Fig. 3, it is evident that the FFs of the L0 closure test are almost identical to the input HKNS07 FFs all over the range in z, hence the \(\chi ^2\) close to zero. However, we also observe a spread of the uncertainty bands in the very large-z region. This is due to the upper kinematic cut (\(z_\mathrm{max} = 0.9\)) imposed on the fitted dataset, such that distributions at large values of z remain unconstrained. This effect is more enhanced for the gluon, due to the reduced sensitivity of the data included in the fit to this distribution and to the smallness of its input FF in that region. Analogously, in the small-z region, where the data included in the fit are rather sparse, the fitted FFs display an increase of the uncertainties. This confirms that the fitting methodology used here can faithfully reproduce the input FFs in the region where the data are sufficiently constraining.

From Fig. 3, it is also apparent that the fitted FFs in the L2 closure test are in good agreement with the input FFs within their uncertainties, hence the \(\chi ^2\) close to one. This indicates that our fitting methodology correctly propagates the experimental uncertainty of the data into the uncertainties of the fitted FFs. As in the case of the L0 closure test, we note that the uncertainty bands of the FFs in the large- and small-z regions inflate.

In the light of the results of the L0 and L2 closure tests, we conclude that the fitting methodology adopted here for the determination of FFs is suitable to ensure a negligible procedural bias and a faithful representation of their uncertainties.

5 The NNFF1.0 fragmentation functions

In this section we present the main results of this work, namely the NNFF1.0 sets of FFs for charged pions, charged kaons, and protons/antiprotons at LO, NLO, and NNLO. First we discuss the quality of the fits and compare the NNFF1.0 predictions to the fitted dataset. Then we show the resulting FFs and their uncertainties, focusing on their perturbative convergence upon inclusion of higher-order QCD corrections, on a comparison of the NLO pion and kaon FFs with their counterparts in the DEHSS and JAM analyses, and on the momentum sum rule.

5.1 Fit quality and data/theory comparison

In Table 3 we report the values of the \(\chi ^2\) per data point, \(\chi ^2/N_\mathrm{dat}\), for both the individual and the total datasets included in the NNFF1.0 analysis. The values are shown at LO, NLO, and NNLO for all the hadronic species.

Concerning the fit quality of the total dataset, the most noticeable feature is the sizeable improvement upon inclusion of higher-order corrections. The improvement of the total \(\chi ^2/N_\mathrm{dat}\) is particularly pronounced when going from LO to NLO, and more moderate, but still significant, when going from NLO to NNLO. This demonstrates that the inclusion of the NNLO corrections improves the description of the data. This effect is particularly evident for the charged pion fits, which are based on the most abundant and accurate dataset.

Comparison between the dataset for charged pions, charged kaons, and protons/antiprotons from the BELLE and BABAR experiments and the corresponding NNLO theoretical predictions using our best-fit NNLO FFs. We show both the absolute distributions (left and central panel) and the data/theory ratios (right panel). Shaded areas indicate the kinematic regions excluded by our cuts, and the bands correspond to one-\(\sigma \) FF uncertainties

Concerning the fit quality of the individual experiments, the general trend of the \(\chi ^2/N_\mathrm{dat}\) is the same as that of the total \(\chi ^2/N_\mathrm{dat}\), with two main exceptions. First, the \(\chi ^2/N_\mathrm{dat}\) value for charged kaons and protons/antiprotons data in the BELLE experiment, despite remaining good, increases as higher-order QCD corrections are included. Such an increase is accompanied by a decrease of the \(\chi ^2/N_\mathrm{dat}\) value in the BABAR experiment. Since the kinematic coverage of these two experiments largely overlaps, and given the precision of the corresponding measurements, the opposite trend of the \(\chi ^2/N_\mathrm{dat}\) suggests a possible tension between the two. Such a tension was already reported in Ref. [19], although its origin is still not completely understood. Second, the \(\chi ^2/N_\mathrm{dat}\) value for inclusive and light-tagged charged pion data in the DELPHI experiment deteriorates as higher-order QCD corrections are included. This behaviour indicates a possible tension between the DELPHI measurements and the other datasets at the same scale (\(\sqrt{s}=M_Z\)), whose description instead significantly improves when going from LO to NNLO. The origin of such a tension arises mostly from the large-z region, where the DELPHI inclusive and light-tagged measurements for charged pions are undershot by the theoretical predictions.

From Table 3 we also observe that in all our fits the \(\chi ^2/N_\mathrm{dat}\) value for the BELLE experiment is anomalously small. This result was already observed in previous analyses [17,18,19,20] and is likely to be due to an overestimate of the uncorrelated systematic uncertainty.

We also notice that for some datasets the \(\chi ^2/N_\mathrm{dat}\) is poor even at NNLO: this happens specifically for the TASSO14, TASSO22, TASSO44 and OPAL experiments in the case of charged pions, for the TASSO14, TASSO22, and OPAL experiments in the case of charged kaons, and for the BABAR experiment in the case of protons/antiprotons. As we will show below, the experimental data points for the TASSO datasets fluctuate around the theoretical predictions by an amount that is typically larger than their uncertainties. This explains the poor \(\chi ^2/N_\mathrm{dat}\) values reported in Table 3 at all perturbative orders. For charged kaons and pions, the large \(\chi ^2/N_\mathrm{dat}\) associated to the OPAL data comes from the large-z region, where theoretical predictions overshoot the data. For charged protons/antiprotons, the large \(\chi ^2/N_\mathrm{dat}\) of the BABAR experiment is driven by a genuine tension between BABAR and TPC/TASSO34 data below \(z=0.2\). Indeed, if the TPC and TASSO34 data are removed from the fits, the value of the \(\chi ^2/N_\mathrm{dat}\) for the BABAR experiment improves significantly (see Sect. 6.2).

In order to give further weight to these considerations, we present a comparison of the dataset used in this analysis to the corresponding NNLO theoretical predictions obtained using the NNLO FFs from our fits. In Fig. 4 such a comparison is displayed for the BELLE and BABAR data for charged pions, charged kaons, and protons/antiprotons. The plots on the r.h.s. of Fig. 4 display the corresponding data/theory ratios. The uncertainty bands indicate the one-\(\sigma \) FF uncertainty, while the shaded areas represent the regions excluded by kinematic cuts (see Sect. 2.2). In Fig. 5 we show the same comparison as in Fig. 4 for all the inclusive measurements at \(\sqrt{s}=M_Z\). To complete the picture, we display the data/theory ratios for all the remaining datasets: in Fig. 6 for charged pions, in Fig. 7 for charged kaons, and in Fig. 8 for protons/antiprotons.

Same as Fig. 4 for the ALEPH, DELPHI, OPAL and SLD inclusive measurements

The data/theory ratio for the charged pion data included in the NNFF1.0 fit and not accounted for in Figs. 4 and 5. As before, theoretical predictions are computed at NNLO with our best-fit NNLO FFs, shaded areas indicate the regions excluded by kinematic cuts, and the bands correspond to one-\(\sigma \) FF uncertainties

Same as Fig. 6 but for charged kaons

Same as Fig. 6 but for protons/antiprotons, \(p/\bar{p}\)

In general, an overall good agreement between data and theoretical predictions is achieved for all experiments, consistently with the \(\chi ^2/N_\mathrm{dat}\) values reported in Table 3. Remarkably, theoretical predictions and data are in reasonable agreement also in the small- and large-z extrapolation regions excluded by kinematic cuts, although the uncertainties of the predictions inflate in these regions.

A few remarks concerning the individual datasets are in order. A significant deviation from the theoretical predictions is observed for the low-z proton/antiproton measurements from the BABAR experiment. This is the origin of the large \(\chi ^2\) reported in Table 3. As already mentioned and as we will further demonstrate in Sect. 6.2, this is a consequence of the tension between the BABAR and TPC/TASSO34 measurements. We have explicitly verified that the low-z BABAR data can be satisfactorily described if the TPC and TASSO34 datasets are removed from the fit. However, we have chosen to keep these two experiments in our baseline dataset because FFs turn out to be very stable irrespective of their inclusion (see Sect. 6.2).

The BABAR measurements for pions and kaons tend to be overshot by the NNLO theoretical predictions at large z. This is not the case for the BELLE data that cover a similar large-z region and are fairly described by the predictions. This points to a tension between the BELLE and BABAR measurements in that region. We also note that, as compared to pions and kaons, the BELLE and BABAR proton/antiproton measurements are affected by larger experimental uncertainties, especially at \(z\gtrsim 0.6\). This consistently propagates into larger uncertainty bands for the corresponding predictions.

In the case of the DELPHI experiment, theoretical predictions undershoot the data for charged pions at \(z\gtrsim 0.3\). This is the reason of the large \(\chi ^2/N_\mathrm{dat}\) value reported in Table 3 for this experiment. The tension between DELPHI and the other experiments at the same value of \(\sqrt{s}\) (ALEPH, OPAL, and SLD), which are instead well described by our FFs, is apparent from Fig. 5.

Some of the observations made in this section on possible tensions between different experiments in certain kinematic regions will be quantified in Sect. 6.2, where a thorough study of the stability of our fits upon variations of the dataset will be presented.

5.2 Fragmentation functions

We now turn to discuss the NNFF1.0 sets. In order to study the perturbative convergence of the FFs upon inclusion of higher-order QCD corrections, we first compare our LO, NLO, and NNLO determinations among each other. Then we compare our best-fit NLO pion and kaon FFs to their counterparts in the DEHSS and JAM analyses. Finally, we conclude with a comment on the momentum sum rule.

5.2.1 Perturbative stability

We display the five FF combinations parametrised in our fits, Eq. (4.1), and their one-\(\sigma \) uncertainties in Figs. 9, 10, and 11 for charged pions, charged kaons and protons/antiprotons, respectively. For each hadronic species, the FFs are shown at LO, NLO, and NNLO as functions of z at \(Q=10\) GeV. The upper panel of each plot displays the absolute distributions, while the central and the lower panels display the NLO/LO and NNLO/NLO ratios.

Comparison among the LO, NLO, and NNLO NNFF1.0 charged pion FFs, together with their one-\(\sigma \) uncertainties, in the parametrisation basis of Eq. (4.1) at \(Q=10\) GeV. The corresponding NLO/LO and NNLO/NLO ratios are displayed in the two insets below each FF

Same as Fig. 9 but for the sum of charged kaons, \(K^\pm \)

Same as Fig. 9 but for the sum of protons and antiprotons, \(p/\bar{p}\)

A remarkable feature of the distributions shown in Figs. 9, 10 and 11 is their perturbative convergence. While LO and NLO distributions can in some cases differ by more than one standard deviation (see for example \(D_{u^+}^h\) for the three hadronic species in the medium-/large-z region), the differences between NLO and NNLO FFs are small. This is consistent with the perturbative convergence of the global \(\chi ^2\) discussed in Sect. 5.1.

Comparison among the NLO NNFF1.0, DEHSS and JAM FF sets for the sum of charged pions, \(\pi ^\pm \). The FFs in the parametrisation basis, Eq. (4.1), are shown at \(Q=10\) GeV as a function of z, together with their corresponding one-\(\sigma \) uncertainties. The ratios of NNFF1.0 to DEHSS and JAM are displayed, respectively, in the two insets below each FF

Same as Fig. 12, but for the sum of charged kaons, \(K^\pm \)

A further noticeable aspect of the comparison in Figs. 9, 10 and 11 is related to the size of the FF uncertainties. While the NLO and NNLO uncertainties are similar in size, the LO uncertainty bands are in general visibly larger, particularly those of the gluon FFs. This was expected because LO predictions for SIA data are only indirectly sensitive to the gluon FF through DGLAP evolution. This entails a broadening of the uncertainties of all FFs due to the cross-talk induced by DGLAP evolution.

5.2.2 Comparison with other FF sets

We now compare our FFs to the most recent determinations available in the literature, namely the DEHSS [17, 18] and the JAM [20] sets. The HKKS sets [19] mentioned in Sect. 2.1 were also recently presented but were not intended to be publicly released [99]. Since these analyses were performed only for pions and kaons at NLO, we limit the comparison to these hadronic species and this perturbative order. Such a comparison is shown in Figs. 12 and 13 at \(Q=10\) GeV in the basis of Eq. (4.1). The upper panel of each plot shows the absolute distributions, while the central and the lower insets show the ratio to NNFF1.0 of DEHSS and JAM, respectively. Note that the JAM FFs are not extrapolated below the lowest kinematic cut used in their fits (\(z=0.05\)), hence the truncated curves in Figs. 12 and 13.

Concerning the shapes of the FFs, a number of interesting differences between the three sets can be seen from the comparisons in Figs. 12 and 13.

For the charged pion FFs, the NNFF1.0 and DEHSS results are in fairly good agreement, despite differences in the dataset (see Sect. 2.1). Moderate differences are observed only for \(D_{u^+}^{\pi ^\pm }\) at \(0.2\lesssim z \lesssim 0.5\), for \(D_{u^+}^{\pi ^\pm }\) and \(D_{c^+}^{\pi ^\pm }\) below \(z\sim 0.1\), and for all quark combinations of FFs above \(z\sim 0.7\). A more pronounced difference in shape is observed for the gluon FF, \(D_g^{\pi ^\pm }\), for which the NNFF1.0 distribution is more suppressed at large z. However, the two sets still agree at the one-\(\sigma \) level. The NNFF1.0 and JAM results are also in fair agreement, except for \(D_{d^++s^+}^{\pi ^\pm }\) above \(z\sim 0.1\) and for \(D_g^{\pi ^\pm }\) around \(z\sim 0.2\), where the discrepancy exceeds two standard deviations. Again, the gluon FF from NNFF1.0 is more suppressed at large z than that from JAM. Differences are also seen for all quark FF combinations at large z, although they are always compatible within uncertainties.

For charged kaons, the differences in shape among the three FF sets are more marked than in the case of charged pions. A fair agreement is observed only in the case of \(D_{b^+}^{K^\pm }\). The discrepancies are typically within a couple of standard deviations for the \(D_{d^++s^+}^{K^\pm }\) and \(D_g^{K^\pm }\) and more than three/four standard deviations for \(D_{u^+}^{K^\pm }\) and \(D_{c^+}^{K^\pm }\).

The origin of the differences among the three sets, at low z for most of the quark FFs and over the whole z range for the gluon FF, is likely to be mostly due to the hadron-mass corrections. These are included in NNFF1.0 but not in the other two sets. Using a more conservative small-z cut in the NNFF1.0 analysis, similar to that adopted in the DEHSS and JAM analyses, can exclude the region where hadron-mass corrections are sizeable (see Fig. 2). If a fit is performed with such a conservative cut, most of the differences among the three FF sets are reconciled, as we will explicitly show in Sect. 6.1. The differences at large z might arise from our choice of the kinematic cut too. Indeed, we exclude all data above \(z=0.9\), which are instead retained in the DEHSS and JAM analyses.

Concerning the FF uncertainties, we observe that for the quark distributions the three FF sets are in good agreement in the region covered by the common data, roughly \(0.1\lesssim z \lesssim 0.7\). Conversely, in the regions where a different amount of experimental information is included or where such information is more sparse, differences are more significant. Typically, the uncertainties of the NNFF1.0 FFs are larger than those of their DEHSS and JAM counterparts at small and large values of z, where data are less abundant or even absent. Exceptions to this trend are the uncertainties of the \(D_{c^+}^{K^\pm }\) and \(D_{b^+}^{K^\pm }\) DEHSS distributions below \(z\sim 0.1\). They are larger than the NNFF1.0 ones, again because of their more conservative small-z cuts.

The uncertainty of the gluon FFs, for both pions and kaons, deserves a separate comment. As already mentioned, SIA cross-sections are directly sensitive to the gluon FF only beyond LO. As a consequence, one would expect that the gluon FF is determined with larger uncertainties than the quark FFs. This is clearly shown in Figs. 12 and 13 for the NNFF1.0 sets. The gluon FFs of the DEHSS and JAM sets, instead, have uncertainties comparable to those of the quark FFs. While the smaller uncertainties of the DEHSS gluon FFs may be due to the larger dataset used in their analysis (which also includes pp measurements sensitive to the gluon FF already at LO), this is not the case for the JAM sets, whose dataset mostly coincides with that of NNFF1.0 (see Sect. 2.1). We ascribe this difference to the more restrictive functional form used in the JAM analysis to parametrise their FFs. An underestimate of the gluon FF uncertainty due to the functional form might also affect the DEHSS determinations.

5.2.3 The momentum sum rule

We conclude this section with a brief discussion of the momentum sum rule

which must be satisfied by FFs irrespective of the value of Q. Note that the sum in Eq. (5.1) runs over all possible hadrons h produced in the fragmentation of the parton i, not only over those determined in the present analysis. The physical interpretation of Eq. (5.1) is very simple: it ensures that the momentum carried by all hadrons produced in the fragmentation of a given parton i is the same as that carried by the parton itself. If Eq. (5.1) is true at some scale Q, it must remain true at all scales. This is guaranteed by DGLAP evolution as a direct consequence of the energy conservation.

In principle Eq. (5.1) could be used in a fit to constrain simultaneously the behaviour of the FFs for different hadrons, especially in the small-z region where no experimental information is available. In practice we determine the FFs of pions, kaons, and protons/antiprotons separately and we do not impose the momentum sum rule. The momentum sum rule cannot be enforced in our fits for two reasons. First, it requires the knowledge of the FFs of all hadronic species h, while we consider only a subset of them. Second, it requires one to integrate FFs down to \(z=0\), while our FFs are determined only down to \(z=10^{-2}\).

This said, Eq. (5.1) can still be used as an a posteriori check of the consistency of the fitted FFs. In particular, one expects that

with \(z_\mathrm{min}=10^{-2}\). Since the lower bound of the integral in Eq. (5.2) is not zero, the quantity \(M_i\) depends on Q. We have computed the central value and the uncertainty of \(M_i\) for the gluon FFs (\(i=g\)) using the NNLO NNFF1.0 FF sets for three different values of Q, obtaining

The uncertainties from each hadronic species have been added in quadrature. Remarkably, we find that Eq. (5.2) is fulfilled in the range of energies covered by the data included in the fits.

We cannot unambiguously check Eq. (5.2) for the individual quark and antiquark FFs, unless some ad hoc assumptions are imposed to separate them from the fitted FF combinations in Eq. (4.1). Nevertheless, we can check a modified, less restrictive, version of Eq. (5.2)

where \(N=2\) for \(i=u^+,c^+,b^+\) and \(N=4\) for \(i=d^++s^+\). We have verified that also Eq. (5.4) is satisfied by the NNFF1.0 FFs. For instance, at \(Q=5\) GeV we find

in agreement with the expectations.

6 Fit stability

In this section we study the stability of the results presented in Sects. 5.1 and 5.2 upon variations of the small-z kinematic cuts and of the dataset defined in Sect. 2.1.

6.1 Dependence on the small-z kinematic cuts

We first study the dependence of our results upon the small-z kinematic cuts applied to the data included in the NNFF1.0 fits. Our aim is to assess the interplay between higher-order QCD corrections and the description of small-z data, in order to motivate the choice of kinematic cuts made in Sect. 2.2. To this purpose, we perform some additional fits, all to the same baseline dataset described in Sect. 2.1 but with different small-z cuts. For each value of the kinematic cut, the additional fits are performed at LO, NLO, and NNLO.

The various small-z kinematic cuts considered here are summarised in Table 4, together with our baseline choice (denoted as BL henceforth). For each hadronic species we consider the two limiting cases in which the small-z kinematic cuts are either completely removed or set to conservative values. The latter are defined in such a way that only data in the kinematic region where both hadron-mass and NNLO QCD corrections are expected to be negligible are included in the fit. Conservative cuts are similar to those adopted in other analyses of FFs. Additional choices of small-z kinematic cuts between the two cases above are also investigated. As the data for charged pions extend down to smaller values of z than data for charged kaons and protons/antiprotons, a more dense scanning is adopted in the first case.

The values of \(\chi ^2/N_\mathrm{dat}\) for each fit to \(\pi ^\pm \) data with the choices of small-z kinematic cuts summarised in Table 4, at LO, NLO, and NNLO

Same as Fig. 14, but for \(K^\pm \)

Same as Fig. 14, but for \(p/\bar{p}\)

In Figs. 14, 15 and 16 we show the values of \(\chi ^2/N_\mathrm{dat}\) for the LO, NLO, and NNLO fits of charged pions, charged kaons, and protons/antiprotons FFs performed with the kinematic cuts in Table 4. Inspection of the \(\chi ^2/N_\mathrm{dat}\) values for the total dataset in Figs. 14, 15 and 16 allows us to draw three remarks.

First, there is clear evidence of perturbative convergence: irrespective of the specific choice of the small-z cuts, the \(\chi ^2/N_\mathrm{dat}\) values at NNLO are always lower than at NLO, which are in turn always lower than at LO.

Second, the spread of the \(\chi ^2/N_\mathrm{dat}\) values for different cuts at a fixed perturbative order is reduced as the perturbative order is increased. The value of the \(\chi ^2/N_\mathrm{dat}\) for the less restrictive cuts moves closer to the corresponding value for the conservative cuts. This confirms that the inclusion of higher-order QCD corrections significantly improves the description of the data at small z and that the results become accordingly less dependent on the choice of small-z cuts. These results are consistent with what was reported in Ref. [26] where, at least for charged pions, it was found that a fixed-order NNLO fit is able to describe data down to \(z_\mathrm{min}=0.02\) with the same accuracy as a small-z resummed NNLO+NNLL fit.

Third, at any perturbative order, the \(\chi ^2/N_\mathrm{dat}\) of the fit with baseline kinematic cuts is always very close to the lowest \(\chi ^2/N_\mathrm{dat}\), usually associated to the fit with conservative cuts. The only exception is the proton/antiproton case, where the fit with the baseline cuts has a \(\chi ^2/N_\mathrm{dat}\) significantly larger than the fit with conservative cuts. This behaviour is mostly driven by the high value of the \(\chi ^2/N_\mathrm{dat}\) for the BABAR data. However, as already mentioned in Sect. 5.1, this is due to a genuine tension between the BABAR and TPC/TASSO34 data below \(z=0.2\). This will be explicitly demonstrated in Sect. 6.2 by studying fits to reduced datasets.

Similar conclusions as those for the total dataset can be drawn for the individual datasets, based on Figs. 14, 15 and 16. However, the baseline cuts do not always minimise the \(\chi ^2/N_\mathrm{dat}\) of the individual experiments, especially of those with a limited number of data points. This is a feature of any global analysis, where the total \(\chi ^2\) always represents a compromise among the different pulls from individual experiments.